We have structured the following case studies as a deliberate progression of ascending complexity. The first case establishes a foundational scenario, and each subsequent study builds on the previous one by introducing additional dynamics, so the amount of uncertainty to be managed increases systematically from one case to the next. Each case study integrates methodology and results: it begins with a description of the methodology, followed directly by an analysis of the resulting data, so that the procedures used in each case are clearly associated with the findings they produced.

3.1. Ideal Storage Devices with Convex Cost Functions

To initiate our comparative analysis, we first address the classical problem of managing an ideal grid-connected energy storage device. This scenario, extensively explored in prior literature using traditional optimal control methods (for instance, in [15,17]), serves as a crucial baseline. In this study, we develop a systematic approach to this problem using reinforcement learning (RL) methods, which operate with incomplete information about the system’s model dynamics. A key objective here is to demonstrate and quantify the effect of this missing knowledge on the operational results, in contrast with traditional optimal control methods that assume complete, deterministic knowledge of both the system model and all relevant signals, such as load and generation profiles. This initial, simplified case is fundamental for understanding the performance degradation that may occur when relying on data-driven approaches under ideal conditions, where optimal control is expected to excel.

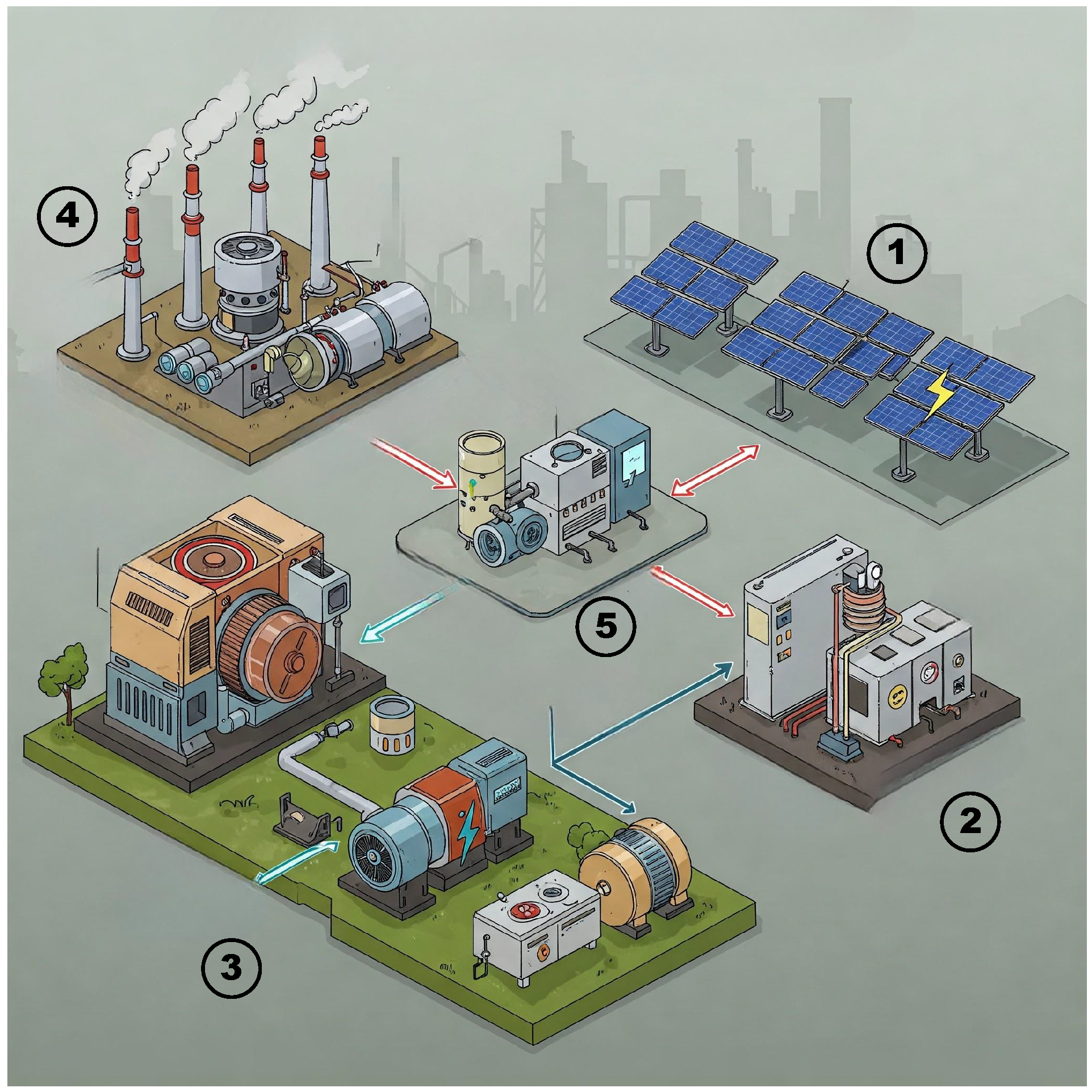

In this context, we consider a system comprising a grid-connected storage device and a photovoltaic (PV) panel, as illustrated in Figure 2. In this configuration, the storage device is charged from both the main electrical grid and the local PV generation system. The stored energy, together with direct generation, then supplies an aggregated electrical load characterized by its active power consumption. The active power demand of this load, denoted as $P_L(t)$, is modeled as a continuous positive function over a finite and known time interval $[0, T]$. The renewable power generated by the PV system is represented by $P_{PV}(t)$, a piece-wise continuous and non-negative function reflecting the available solar power. The net power consumption of the load is then defined as $P_N(t) = P_L(t) - P_{PV}(t)$. The charging or discharging power of the storage unit, $P_S(t)$, is determined by the balance between the power drawn from the grid, $P_G(t)$, and the net load demand, such that $P_S(t) = P_G(t) - P_N(t)$. Correspondingly, $E_G(t)$, $E_L(t)$, and $E_S(t)$ represent the cumulative energy generated by the grid, the cumulative (net) energy consumed by the load, and the energy stored in the battery, respectively. These energy quantities are obtained by integrating their respective power functions over time, formally, $E_G(t) = \int_0^t P_G(\tau)\,d\tau$, $E_L(t) = \int_0^t P_N(\tau)\,d\tau$, and $E_S(t) = \int_0^t P_S(\tau)\,d\tau = E_G(t) - E_L(t)$, with the storage initially empty. For this foundational scenario, complexities such as internal storage losses or transmission line losses are intentionally omitted to establish an unambiguous performance benchmark for an ideal system.

The power drawn from or supplied to the grid, $P_G(t)$, is the primary controllable variable in this system. Its usage is characterized by a fuel consumption function, $F(P_G)$, which quantifies the cost (for example, fuel consumed or monetary cost) associated with generating power $P_G$ at any time $t$. This cost function can also be interpreted more broadly to represent other objectives, such as minimizing carbon emissions or other environmental impacts linked to power generation. A critical assumption, following [15], is that $F$ is twice differentiable and strictly convex, with $F''(P_G) > 0$. Convexity matters because any local minimum of a convex problem is also a global minimum, which simplifies the optimization. The overall objective is to minimize the total fuel consumption cost over the entire period, $\int_0^T F(P_G(t))\,dt$. This leads to the following optimization problem:

$$
\begin{aligned}
\min_{P_G(\cdot)} \quad & \int_0^T F\big(P_G(t)\big)\,dt \\
\text{s.t.} \quad & E_S(t) = E_G(t) - E_L(t), \quad t \in [0, T], \\
& 0 \le E_S(t) \le E_M, \quad t \in [0, T], \\
& E_S(0) = E_S(T) = 0.
\end{aligned}
$$

These constraints ensure energy balance, respect the storage capacity limits ($0 \le E_S(t) \le E_M$, where $E_M$ is the storage capacity), and define the operational cycle of the storage.

An optimal solution to this problem, under the assumption of complete knowledge, can be efficiently found using the Shortest-Path (SP) method introduced in [15]. This method posits that the optimal trajectory of generated energy, $E_G^*(t)$, corresponds to a minimal-cost path constrained to lie between the cumulative energy demand $E_L(t)$ and the maximum possible stored energy level $E_L(t) + E_M$. This path is identified by applying Dijkstra’s algorithm to a specially constructed graph that represents the feasible energy states and transitions over time. To construct this graph, the continuous time horizon $[0, T]$ is discretized into $N$ uniform intervals of duration $\Delta t = T/N$, such that $t_k = k\,\Delta t$ for $k = 0, 1, \dots, N$. At each discrete time step $t_k$, the permissible energy levels are bounded by $E_L(t_k) \le E_G(t_k) \le E_L(t_k) + E_M$. The search graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ is then defined such that each vertex $v_{k,i} \in \mathcal{V}$ represents a discretized energy level $e_i$ of $E_G$ at time $t_k$, and each edge $(v_{k,i}, v_{k+1,j}) \in \mathcal{E}$ represents a feasible transition from energy state $e_i$ at time $t_k$ to state $e_j$ at the subsequent time step $t_{k+1}$. These transitions are governed by the feasible charging/discharging amounts, $\Delta E_S = (e_j - e_i) - \big(E_L(t_{k+1}) - E_L(t_k)\big)$, which must lie within the range $[-E_M, E_M]$ (assuming $E_M$ also represents the maximum charge/discharge capability within a $\Delta t$ interval). The power drawn from the generator for such a transition is $P_G = (e_j - e_i)/\Delta t$. The weight assigned to each edge, $w = F(P_G)\,\Delta t$, is determined by the cost function $F$, leveraging its strict convexity. Because $E_G$ is continuous, a uniform discretization with resolution $\Delta E$ is applied in the energy space, yielding a finite set of allowable energy levels at each time step. The result is a layered graph in which layers correspond to time steps $t_k$ and nodes within each layer represent feasible energy states at that step. Dijkstra’s algorithm then systematically explores paths starting from the initial node $(t_0, E_G = 0)$, expanding paths of incrementally increasing cumulative cost until it reaches a terminal node at $t_N = T$ that satisfies the end condition $E_G(T) = E_L(T)$. The path with the overall minimum cumulative cost dictates the optimal energy dispatch trajectory for the storage system. This method’s efficacy is fundamentally tied to the availability of complete prior knowledge of the system dynamics and load signals.

In contrast, the reinforcement learning formulation replaces the assumption of a deterministic and fully known load function with a generative or learned model of the environment. The environment is modeled as a Markov decision process (MDP) with a continuous state space $\mathcal{S}$ and a continuous action space $\mathcal{A}$. At each discrete time step $k$, the state of the system is the tuple $s_k = (E_{S,k}, h_k, P_{N,k})$, where $E_{S,k}$ is the state of charge of the battery, $h_k$ indicates the current hour of the day, providing temporal context, and $P_{N,k}$ is the net load demand at time $t_k$. The action $a_k$, taken by the RL agent, determines the amount of energy charged into or discharged from the battery. It is constrained by $0 \le a_k \le \Delta E_{\max}$ for charging, with similar bounds for discharging, where $\Delta E_{\max}$ represents the energy transfer limit, and also by the battery’s maximum capacity, $0 \le E_{S,k} + a_k \le E_M$. Following an action $a_k$, the system transitions deterministically (in this ideal, lossless model) to a new state $s_{k+1}$ with $E_{S,k+1} = E_{S,k} + a_k$, and the agent receives a scalar reward $r_k$. This reward is the negative of the generation cost, $r_k = -F(P_{G,k})$, where $P_{G,k} = P_{N,k} + a_k/\Delta t$. This interaction unfolds over the specified time horizon until the terminal time $T$ is reached, yielding a complete trajectory of states, actions, and rewards and, thus, a cumulative reward (or cost). The core objective of the RL agent is to learn a policy $\pi^*$ that minimizes the expected cumulative operational cost, equivalently maximizing the expected cumulative reward. This learning occurs through trial and error, without explicit programming of the optimal strategy, which suits model-free approaches in which the agent optimizes its policy directly from its interactions with the environment.
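To make this MDP concrete, the following is a dependency-free sketch of the ideal environment with a gym-style `reset`/`step` interface. The class name, the quadratic cost, the clipping order (transfer limit first, then capacity), the empty initial storage, and the 1 h default step are illustrative assumptions, not the environment in the authors’ repository [20].

```python
def quadratic_cost(p_g):
    """Hypothetical strictly convex generation cost F(P_G) = P_G**2."""
    return p_g * p_g


class IdealStorageEnv:
    """Hypothetical lossless storage MDP with a gym-style reset/step interface.

    State s_k = (E_S,k, hour h_k, net load P_N,k); action a_k = energy moved
    into (+) or out of (-) the battery during one step of length dt (h).
    """

    def __init__(self, p_net, e_max=1.0, de_max=0.5, dt=1.0, cost=quadratic_cost):
        self.p_net, self.e_max, self.de_max = list(p_net), e_max, de_max
        self.dt, self.cost = dt, cost
        self.reset()

    def _obs(self):
        hour = (self.k * self.dt) % 24.0
        load = self.p_net[self.k] if self.k < len(self.p_net) else 0.0
        return (self.e_s, hour, load)

    def reset(self):
        self.k, self.e_s = 0, 0.0  # storage starts empty at hour 0
        return self._obs()

    def step(self, action):
        # Enforce the transfer limit, then the capacity limit 0 <= E_S + a <= E_M
        a = max(-self.de_max, min(self.de_max, float(action)))
        a = max(-self.e_s, min(self.e_max - self.e_s, a))
        p_g = self.p_net[self.k] + a / self.dt  # grid power P_G = P_N + P_S
        reward = -self.cost(p_g) * self.dt       # negative generation cost
        self.e_s += a                            # lossless, deterministic transition
        self.k += 1
        done = self.k >= len(self.p_net)         # terminal time T reached
        return self._obs(), reward, done
```

Wrapped as a `gymnasium.Env` with `Box` observation and action spaces, an environment of this kind could be passed to the `PPO`, `SAC`, or `TD3` classes of Stable-Baselines3; that wrapper is omitted here to keep the sketch self-contained.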

To empirically evaluate and compare these methodologies, the optimal control policy derived from the Shortest-Path method was implemented in MATLAB R2024a, while three model-free RL algorithms, Soft Actor–Critic (SAC), Proximal Policy Optimization (PPO), and Twin Delayed DDPG (TD3), were implemented in Python 3.9 using the Stable-Baselines library, version 2.7.1a3. The corresponding code files are available in a public git repository [20]. The operational task is to determine a policy that specifies the generation actions, and consequently the charging or discharging of the storage unit, over a 24 h interval, i.e., $T = 24$ h for this study. These model-free RL approaches were selected because they are widely used in control applications, particularly for energy storage, owing to their general applicability and the relative ease of adapting them to new or poorly defined domains where the system dynamics are not explicitly modeled.

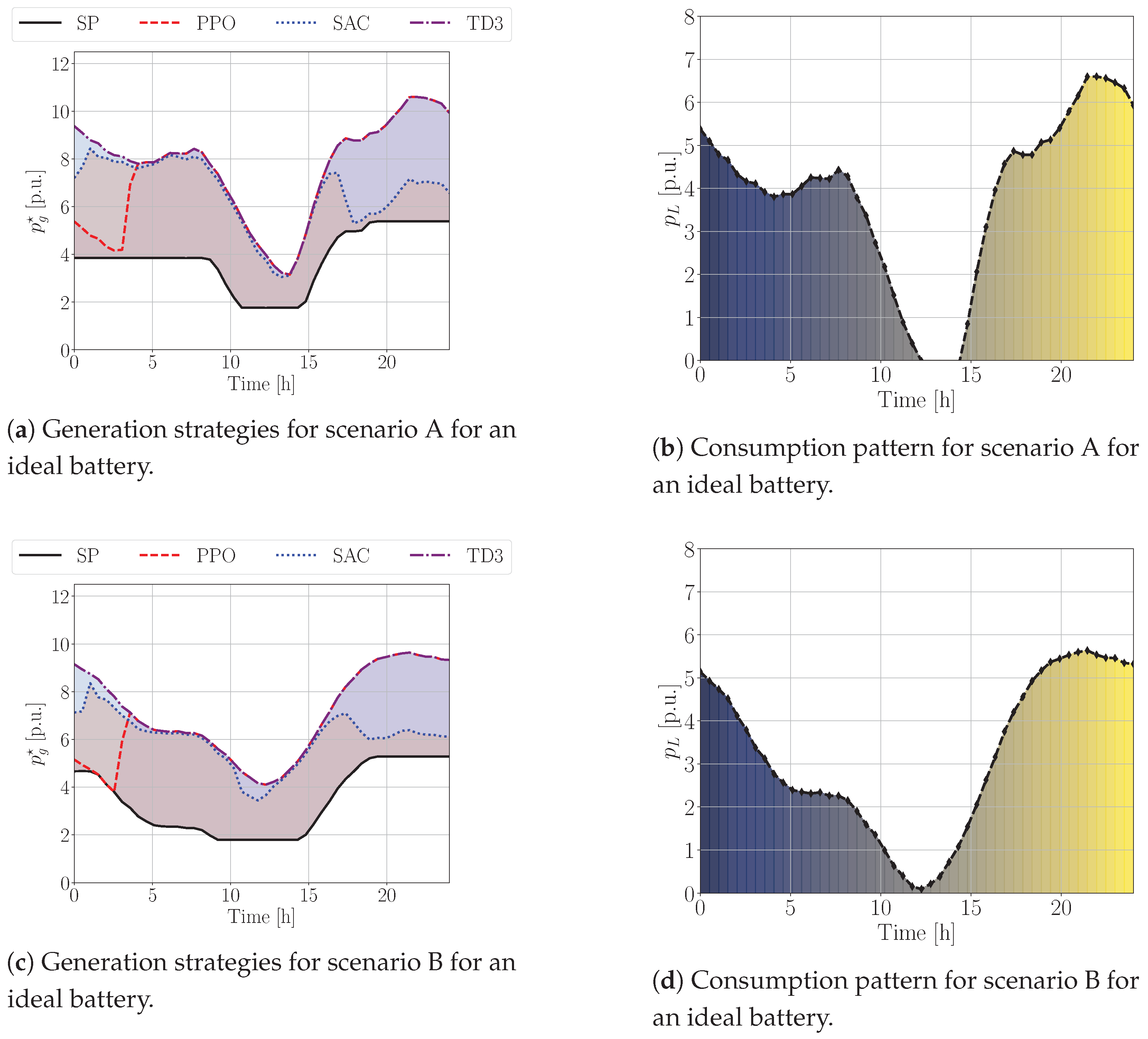

Results: The results from this first scenario, presented in

Figure 3, are interesting, and back up the initial assumptions. None of the examined RL methods succeeded in learning an effective operational policy when benchmarked against the optimal path determined by the classical Shortest-Path algorithm. An intriguing secondary observation was the similarity in the policies learned by both the PPO and TD3 methods. This is noteworthy because PPO employs a stochastic policy search, whereas TD3 relies on a deterministic policy. This convergence to similar policies, despite algorithmic differences, offers several insights into the underlying structure of this specific control problem.

Firstly, if two different days exhibit similar patterns of energy demand and PV production, and if their initial storage conditions are close, they are likely to result in similar operational policies. This consistency can be attributed to the fact that the value function, which the RL agent seeks to optimize, is Cauchy bounded, implying that small changes in input conditions lead to correspondingly small changes in output values or actions. Secondly, the inherent dynamics governing the interactions among the system’s components, the storage device, PV generation, grid power, and load demand are assumed to be time invariant in this idealized model. This means that the fundamental relationships and operational characteristics of the system do not change over the simulation period, providing a stable learning environment. Thirdly, the reward function, derived from the strictly convex generation cost function

, is itself convex. A convex reward landscape is bereft of local minima, which means that different optimization algorithms, even if they explore the solution space differently, are more likely to be guided towards the same global optimum or a similar region of high performance. Finally, the reward function may exhibit symmetries concerning certain actions or states, thereby guiding different algorithms towards similar policies. For instance, consider two distinct scenarios: one (state

) where high PV production fully meets the current demand, but high future demand is predicted, prompting a decision to charge the storage with

[p.u.]. Another scenario (state

) might involve low PV production and an empty storage unit, necessitating the purchase of 1 [p.u.] from the grid merely to satisfy the current demand. Despite these being entirely different operational situations, they could potentially yield the same immediate reward (cost). If such reward equivalences are common, RL algorithms might develop policies that are relatively insensitive to the nuanced differences between these states, causing disparate algorithms like PPO and TD3 to converge towards similar, possibly generalized, strategies. This insensitivity, if it leads to overlooking critical state distinctions, could also contribute to the observed suboptimal performance of the RL agents compared to the fully informed optimal control solution. The daily costs over a 100-day test set, shown in

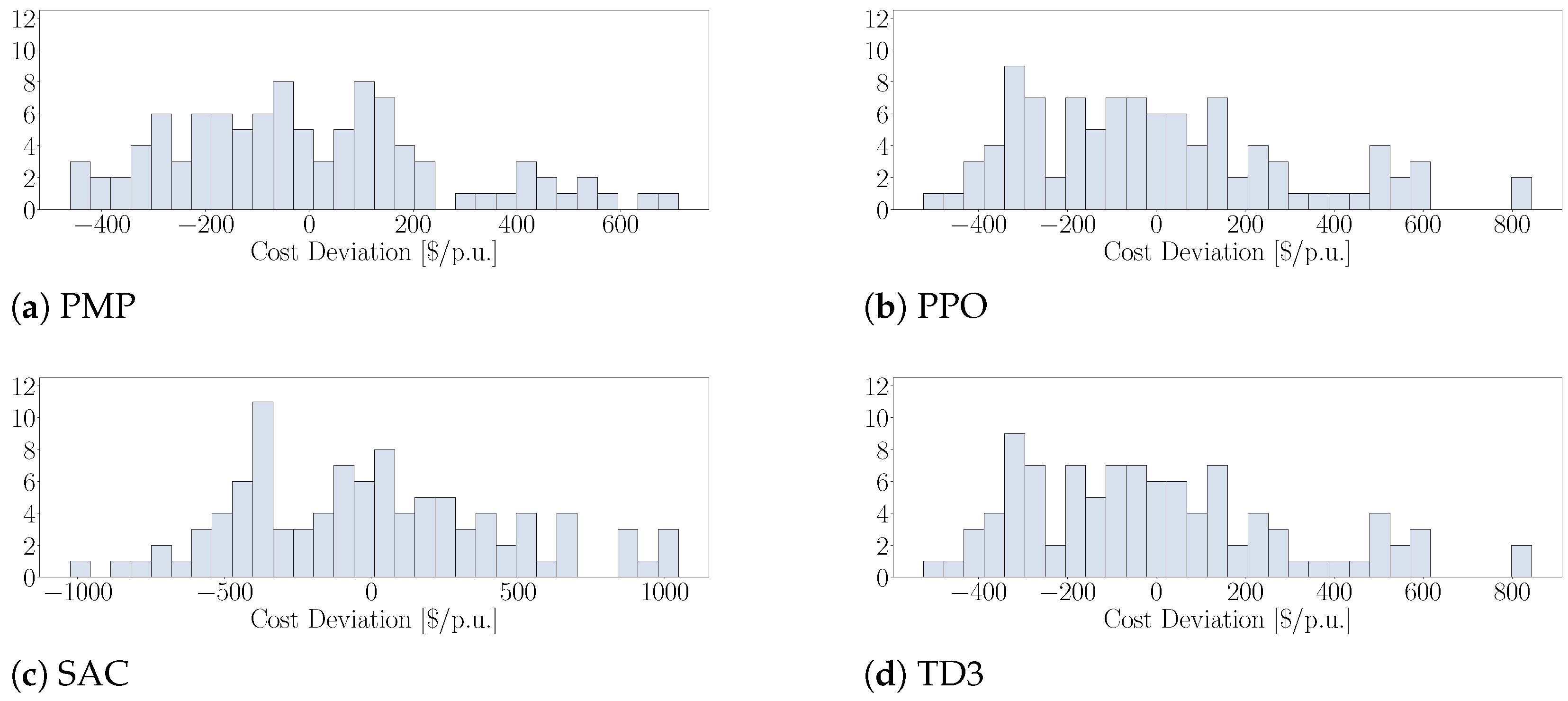

Figure 4 and

Table 1, further quantify this performance gap, with RL methods incurring substantially higher mean costs. Specifically, the PPO algorithm resulted in

USD/p.u., the SAC algorithm resulted in

USD/p.u., and the TD3 resulted in

USD/p.u., in comparison to the optimal control approach, using the SP algorithm, that resulted in

USD/p.u. Moreover, the model-free RL approaches exhibited greater variance than the SP method. The cost deviations depicted in Figure 5 highlight the tighter concentration of costs around the mean for SP, mostly within

USD/p.u., compared with a wider spread for the RL algorithms, as evidenced by the PPO and TD3 results, which exceed

USD/p.u.

3.2. Grid Connected Lossy Storage Devices

To continue the comparative analysis under more realistic operating conditions, we now introduce a model with more intricate dynamics by incorporating a lossy storage device, as detailed in [16]. This progression from an ideal to a lossy model increases the complexity and the amount of uncertainty that both traditional optimal control and reinforcement learning algorithms must handle. Energy losses during charging and discharging, together with self-decay, add a new layer of challenge to optimizing the storage operation for minimal cost.

To formally incorporate these battery losses, we define an efficiency function $\eta(P_S)$ as a piece-wise continuous function. This function characterizes the efficiency of the storage device and depends on the power flowing into or out of it, $P_S(t)$. It is defined as

Here, $\eta_c$ represents the charging efficiency, $\eta_d$ is the discharging efficiency, and $\eta_{sd}$ accounts for inherent energy decay or self-discharge over time. Furthermore, we adopt a generalized non-affine dynamic model for the battery’s state of charge, $E_S(t)$, defined by the following differential equation:

This dynamic model directly links the rate of change of stored energy to the power flow and the state-dependent efficiency. This formulation leads to the following optimization problem:

where $F(P_G)$ is the primary cost function, accounting for the generation cost; the power flowing bidirectionally from the battery, $P_S$, influences this cost through its effect on $P_G$. The function $\psi(E_S)$ introduces a penalty for violating the storage capacity constraints. This penalty function is defined as

with $K$ being a large penalty coefficient. By setting $K$ to a sufficiently large value, we implicitly enforce that the stored energy $E_S(t)$ remains within the operational bounds $0 \le E_S(t) \le E_M$, as deviations would incur extremely high costs. Solving for the optimal energy storage trajectory $E_S^*(t)$ and the corresponding power flow $P_S^*(t)$ allows the grid power $P_G^*(t)$ and the generated energy $E_G^*(t)$ to be determined through the system’s power balance equations. For this scenario, Pontryagin’s minimum principle (PMP) is employed as the classical optimal control method to characterize the solution analytically.

Consequently, the explicit analytical solution derived using PMP is described by the dynamics of the optimal state $E_S^*(t)$ and an associated Lagrange multiplier, the costate variable $\lambda(t)$. The optimal rate of change of stored energy follows the state equation evaluated along the optimal trajectory, with boundary conditions imposed on $E_S$ at $t = 0$ and $t = T$. The costate variable evolves according to the adjoint equation, which is driven by $\psi'(E_S)$, the derivative of the penalty function. The optimal storage power $P_S^*(t)$ is then determined from $\lambda(t)$, the net load $P_N(t)$, and the charging and discharging efficiencies, $\eta_c$ and $\eta_d$:

This solution provides the benchmark optimal control policy under the assumption of known loss characteristics.

The RL formulation for this lossy storage case closely mirrors that of the ideal storage scenario, with the crucial difference lying in the MDP’s state transition function. To accurately reflect the system’s behavior, the transition function must now explicitly account for the energy losses inherent in the storage device. When the agent takes an action $a_k$, representing the energy intended to be charged or discharged before losses, the state of charge $E_{k+1}$ of the resulting next state $s_{k+1}$ is computed from $E_k$ and $a_k$ using an effective efficiency $\eta_k$ specific to that transition, under the assumption that the transition respects the battery’s physical capacity limits, $0 \le E_{k+1} \le E_M$. If $a_k \neq 0$, meaning active charging or discharging occurs, $\eta_k$ incorporates both the relevant charge or discharge efficiency, $\eta_c$ or $\eta_d$, respectively, and the self-decay component $\eta_{sd}$. If $a_k = 0$, meaning no active charging or discharging, $\eta_k$ simply reflects the self-decay over the time step $\Delta t$. This modification ensures that the RL agent learns to operate the storage device under realistic energy loss dynamics.
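Because the exact transition formula is not reproduced here, the sketch below assumes one common lossy model purely for illustration: self-decay scales the stored energy by $\eta_{sd}$ on every step, charging stores $\eta_c a_k$, and delivering $|a_k|$ while discharging removes $|a_k|/\eta_d$ from the battery. The function name, the action-shrinking rule used to respect capacity, and the default efficiencies are hypothetical.

```python
def lossy_transition(e_k, a_k, e_max, eta_c=0.95, eta_d=0.95, eta_sd=0.999):
    """Hypothetical lossy storage transition for the RL environment.

    a_k > 0: energy sent toward the battery (before losses)
    a_k < 0: energy requested from the battery (before losses)
    Returns (E_{k+1}, applied action) with 0 <= E_{k+1} <= e_max.
    """
    kept = eta_sd * e_k  # self-decay applies on every step, even when a_k == 0
    if a_k >= 0:
        # Only eta_c * a stays in the battery; shrink a so the battery cannot overflow
        a = min(a_k, (e_max - kept) / eta_c)
        return kept + eta_c * a, a
    # Delivering |a| removes |a| / eta_d; shrink |a| so the battery cannot go negative
    a = max(a_k, -kept * eta_d)
    return kept + a / eta_d, a
```

Returning the applied action alongside the new state lets an environment compute the grid power, and hence the reward, from the energy that was actually exchanged rather than from the agent’s unconstrained request.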

Results: The experimental results for this scenario, presented in Figure 6, reveal a notable shift in comparative performance. While the classical PMP algorithm still achieved the lowest operational costs, the performance gap between the optimal control algorithm and some of the RL methods narrowed considerably. The policies learned by PPO and TD3 were again quite similar, and their mean daily operational costs were significantly closer to the PMP benchmark than in the ideal storage case. As shown in Table 2, the PMP method achieved a mean daily cost of

USD/p.u. Remarkably, both PPO and TD3 achieved mean costs of

USD/p.u., which is only approximately

higher than the PMP optimum. This suggests that when the uncertainty, in the form of power loss, is confined primarily to the internal dynamics of the storage device, these RL algorithms can learn the storage behavior quite effectively and substantially improve their relative cost performance, even without explicit prior knowledge of the loss model. The SAC algorithm, however, did not perform as well, with a mean cost of

USD/p.u., possibly indicating sensitivity to this specific problem configuration or to hyperparameter tuning.

An interesting observation from Table 2 is that the mean and variance of the classical PMP algorithm’s costs were lower than those of the SP algorithm in the ideal case, with

[USD/p.u.] in comparison to

[USD/p.u.] achieved by the SP algorithm. This might suggest that PMP, which is well suited for continuous-time optimal control problems, handles the continuous dynamics of the lossy storage model more adeptly than the Dijkstra-based SP method would if applied to a discretized version of this more complex problem. Furthermore, the mean costs for PPO and TD3 also decreased compared to their performance in the ideal case, with

USD/p.u. in comparison to

USD/p.u. for PPO and

USD/p.u. for TD3. This may be attributed to their ability to learn within large and complex state spaces, which may have allowed them to capture some of the nuances of the lossy environment more effectively than in the simpler ideal environment, where their learning appeared less focused. Note, however, that introducing model complexity in the form of losses increased the variance of the daily costs for all RL algorithms.

Figure 7, which presents the daily costs, and Figure 8, which presents the cost deviations, visually confirm these trends: PPO and TD3 track much closer to the PMP optimum than in the first scenario, while SAC remains significantly higher. The cost deviations for PMP remained tightly centered, mostly within

USD/p.u., whereas SAC exhibited the largest spread, indicating higher variability.

3.3. Storage Devices Within a Transmission Grid with Losses

In this section, we advance our comparative analysis by removing an additional simplifying assumption. We now introduce and analyze a model that incorporates power losses on the transmission lines between the power generation source and the consumer block, which includes the energy storage system. This is the most complex scenario in our study, aiming to capture a more comprehensive set of the uncertainties that real-world systems face. The system under consideration consists of a primary power source, modeled as a synchronous generator, and a non-linear aggregated load, similar to the illustration in Figure 2. This non-linear load block comprises the photovoltaic generation unit, the energy storage device with capacity $E_M$, and the end-consumer load that consumes active power $P_L(t)$. A key modeling assumption is that the components within this non-linear load block (PV, storage, and consumer load) are located in close physical proximity, rendering the energy transmission between them effectively lossless. The transmission from the main synchronous generator to the aggregated load block, however, is subject to losses.

To perform this analysis, we adopt a distributed circuit model perspective, specifically considering a short-length transmission line. The power generated at the source, $P_G$, and the power received at the aggregated load and storage blocks are related through a loss model. The received power is $P_S + P_L$, with $P_S$ being the power flowing bidirectionally from the storage and $P_L$ being the consumer load demand. The power generated by the source, $P_G$, must be greater than $P_S + P_L$ to compensate for the losses. We approximate the required generated power as

$$
P_G = P_S + P_L + \alpha\,(P_S + P_L)^2, \qquad \alpha = \frac{R}{|V|^2}.
$$

Here, $P_S$ is the power flowing into or from the storage device. The term $\alpha\,(P_S + P_L)^2$ represents the quadratic transmission losses, where $\alpha$ is a constant determined by the transmission line resistance $R$ and the square of the magnitude of the line voltage, $|V|^2$: for a near-unity power factor, the line current is approximately $(P_S + P_L)/|V|$, so the ohmic loss $|I|^2 R$ takes this quadratic form. Such a quadratic relationship for transmission losses is a well-established approximation in the power systems literature, as discussed, for example, in [23]. This formulation leads to the following optimization problem:

The function $F(\cdot)$ is the cost of generation at the source, now applied to the total power, including losses. The term $\eta(P_S)$ represents the efficiency of the storage device itself, as in the previous lossy storage scenario, potentially including charge, discharge, and decay effects. For this complex problem, dynamic programming (DP) is chosen as the classical optimal control method.

To apply DP, the problem is discretized. We define a time resolution $\Delta t$, with discrete time steps $t_i = i\,\Delta t$ for $i = 0, 1, \dots, N$. Accordingly, the energy stored at time $t_i$ is $E_i = E_S(t_i)$, and the power values are approximated as constant over each interval: $P_S(t) \approx P_{S,i}$, $P_L(t) \approx P_{L,i}$, and $P_G(t) \approx P_{G,i}$ for $t \in [t_i, t_{i+1})$. The discrete version of the optimization problem is given by

Here, $\eta_i$ represents the aggregated energy loss efficiency of the storage device for the $i$-th interval.
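A forward dynamic-programming sketch of the discretized problem follows. For illustration only, it assumes a hypothetical storage model (self-decay $\eta_{sd}$, charging efficiency $\eta_c$, discharging efficiency $\eta_d$), the quadratic line loss $\alpha (P_S + P_L)^2$ with a given $\alpha$, a uniform grid of `n_levels` storage-energy values, an initially empty storage, and a free terminal level; `dp_dispatch` is a hypothetical name, not the authors’ implementation.

```python
import math


def dp_dispatch(p_load, dt, e_max, n_levels, cost, alpha,
                eta_c=1.0, eta_d=1.0, eta_sd=1.0):
    """Hypothetical forward DP over a uniform storage-energy grid.

    p_load: consumer load P_L,i per interval (p.u.); dt: interval length (h)
    e_max: capacity E_M; n_levels: number of grid points in [0, E_M]
    cost: generation cost F(P_G); alpha: line-loss constant R/|V|^2
    eta_c, eta_d, eta_sd: charging, discharging and self-decay efficiencies
    Returns (minimal total cost, storage trajectory E_0..E_N); E_0 = 0, E_N free.
    """
    levels = [e_max * j / (n_levels - 1) for j in range(n_levels)]
    best = [0.0] + [math.inf] * (n_levels - 1)  # cost-to-arrive; storage starts empty
    parents = []
    for p_l in p_load:
        new = [math.inf] * n_levels
        arg = [None] * n_levels
        for i, e_i in enumerate(levels):
            if best[i] == math.inf:
                continue  # level i unreachable at this step
            kept = eta_sd * e_i  # self-decay over the interval
            for j, e_j in enumerate(levels):
                d = e_j - kept  # change of stored energy beyond decay
                # Bus-side storage power P_S (positive = charging)
                p_s = d / (eta_c * dt) if d >= 0 else d * eta_d / dt
                p_r = p_s + p_l               # power received by the load block
                p_g = p_r + alpha * p_r ** 2  # add quadratic transmission loss
                c = best[i] + cost(p_g) * dt
                if c < new[j]:
                    new[j], arg[j] = c, i
        best = new
        parents.append(arg)
    # Backtrack from the cheapest terminal level
    j = min(range(n_levels), key=best.__getitem__)
    total, path = best[j], [levels[j]]
    for arg in reversed(parents):
        j = arg[j]
        path.append(levels[j])
    return total, path[::-1]
```

With $\alpha = 0$ and unit efficiencies, a load of 1 p.u. and then 3 p.u. yields a cost of 8 and the storage path $[0, 1, 0]$, matching flat 2 p.u. generation; any positive $\alpha$ or efficiency below one raises the minimal cost. Because every reachable level is expanded at every step, the cost grows with the square of `n_levels` per interval, which is the usual trade-off between grid resolution and run time in DP.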

For the reinforcement learning solution in this scenario, the model must account for transmission line losses in addition to the storage’s internal losses. Consequently, the state transition function is further modified. When an action $a_k$ is taken from a state $s_k$ with storage energy $E_k$, the storage energy $E_{k+1}$ of the resulting next state $s_{k+1}$ is determined by a two-stage loss application. First, the energy $a_k$ is subject to transmission line losses, represented by an efficiency factor $\eta_T$, which determines the energy that effectively reaches or leaves the storage block. This amount is then subject to the storage’s internal charge or discharge and decay efficiencies, collectively denoted by $\eta_k$ as in the previous case study. Thus, the new state of charge is obtained by applying both efficiency factors in sequence. This compounded loss model is expected to make the environment significantly more challenging for the RL agent to learn and control optimally.
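The two-stage transition can be sketched as a composition of a line-efficiency stage and a storage-efficiency stage. The direction convention (charging receives $\eta_T a_k$, while discharging draws $|a_k|/\eta_T$ from the block), the storage model with $\eta_c$, $\eta_d$, and $\eta_{sd}$, and the final clipping of the state to $[0, E_M]$ are assumptions made for this sketch; the function name is hypothetical.

```python
def two_stage_transition(e_k, a_k, e_max, eta_t, eta_c, eta_d, eta_sd=1.0):
    """Hypothetical two-stage lossy transition E_{k+1} = f(E_k, a_k).

    a_k > 0: energy sent from the source toward the storage block
    a_k < 0: energy to be delivered back at the source side
    """
    # Stage 1: transmission line efficiency eta_t
    if a_k >= 0:
        a_block = eta_t * a_k  # only part of the energy reaches the block
    else:
        a_block = a_k / eta_t  # more must leave the block to cover line losses
    # Stage 2: storage-internal efficiencies
    kept = eta_sd * e_k        # self-decay over the step
    if a_block >= 0:
        e_next = kept + eta_c * a_block
    else:
        e_next = kept + a_block / eta_d
    # Enforce physical capacity 0 <= E_{k+1} <= E_M (simplified: clip the state)
    return min(max(e_next, 0.0), e_max)
```

Clipping the state keeps the sketch short but silently discards the energy accounting of infeasible actions; a fuller environment would instead shrink the action before applying the losses.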

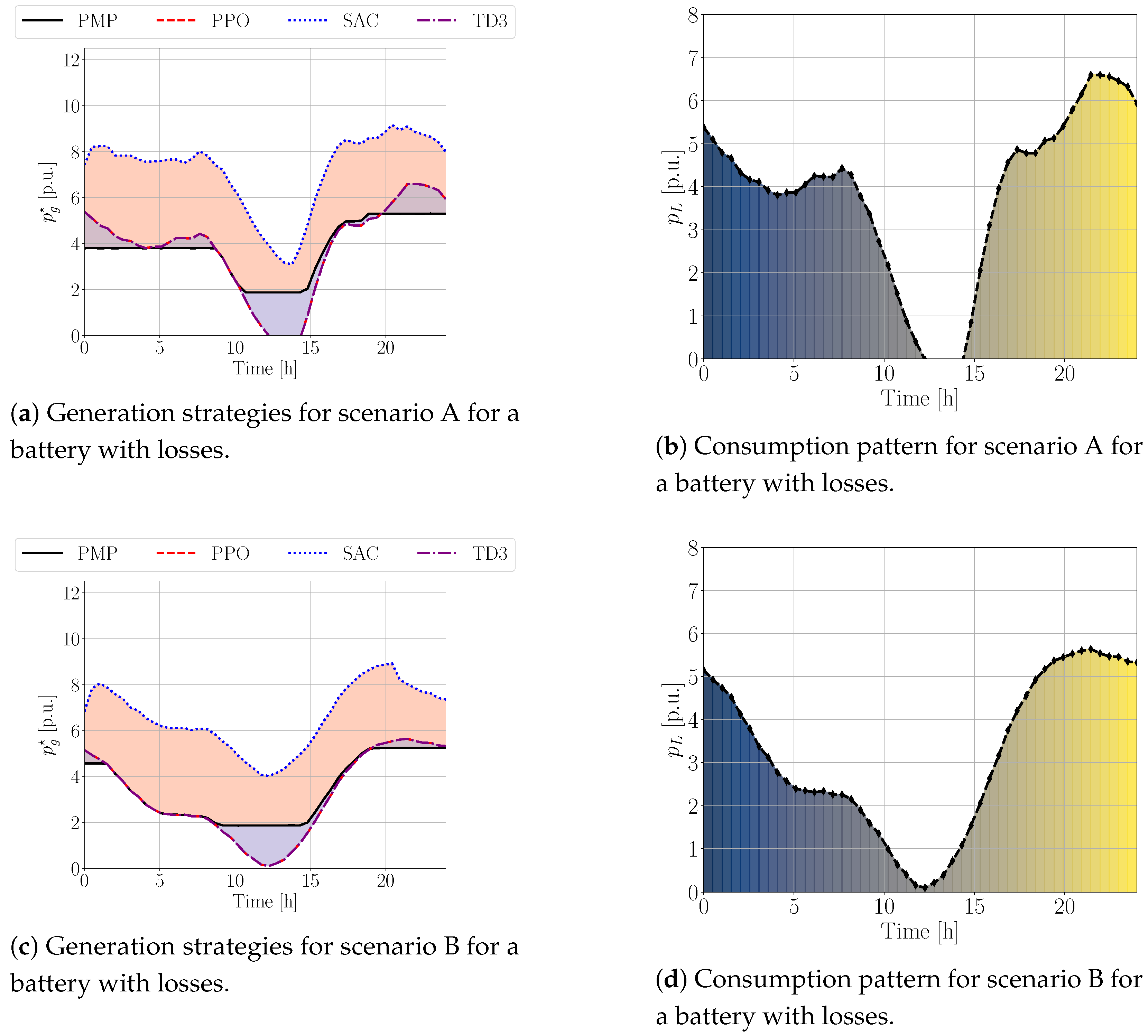

Results: The experimental results for this third scenario, presented in Figure 9, show a further degradation in the performance of the RL algorithms relative to the DP optimal solution. As detailed in Table 3, the DP method achieved a mean daily cost of

USD/p.u. In contrast, the PPO algorithm resulted in a mean cost of

USD/p.u., a

increase over DP. SAC incurred

USD/p.u., resulting in a

increase, and TD3 performed notably worse with a mean cost of

USD/p.u., showing a

increase, i.e., more than 3.5 times the DP cost. These results underscore the difficulty RL methods face when dealing with external, system-level uncertainties such as transmission line losses, which are not part of the storage device’s internal dynamics that the agent might more easily learn through interaction. The mean and variance of the classical DP algorithm also increased in this scenario compared with PMP in the lossy-storage-only case: the mean increased to

in comparison to

when applying PMP, and the value of the variance increased to

in comparison to

when applying PMP. This reflects the added difficulty posed by the transmission losses, even for the optimal control approach. For the RL algorithms, performance generally improved relative to their own baselines in the ideal case, but PPO and SAC degraded compared with the lossy-storage case, and TD3 remained poor. This suggests that while RL can handle some forms of uncertainty, its effectiveness depends significantly on the nature and location of that uncertainty, that is, whether it is internal to the storage dynamics captured by the MDP or external to them.

A particularly interesting observation in this scenario was that the PPO and TD3 algorithms no longer produced similar policies, unlike in the previous two cases. The policy generated by the PPO algorithm, which employs a stochastic policy search, was smoother and more effective than that of the TD3 algorithm, which relies on a deterministic policy. This divergence and the superior performance of PPO further emphasize the potential benefits of stochastic policies in environments with highly unpredictable or complex dynamics, such as those introduced by combined storage and transmission losses. The deterministic policy of TD3 appeared more vulnerable to these compounded uncertainties, leading to significantly higher operational costs. The daily cost comparisons in Figure 10 and the cost deviation histograms in Figure 11 visually corroborate these findings, showing PPO and SAC performing relatively better than TD3, but all RL methods trailing the DP benchmark by a wider margin than in the previous scenario. The cost deviations for PPO and SAC remained largely within

USD/p.u. of their mean, while TD3 exhibited a very wide spread, up to

USD/p.u., indicating that its deterministic policy struggled significantly with the unpredictable dynamics of this scenario.

Figure 10 presents the daily cost of energy production for each algorithm over a test set of 100 days, and Table 3 lists the mean and variance for each algorithm.

The results in the table show a few trends. (a) The mean and variance of the classical algorithm have increased, reflecting the additional uncertainty in the dynamic model. (b) Relative to the ideal case, all RL algorithms show improved performance, which highlights their effectiveness when operating in highly uncertain environments with stochastic transitions. (c) The PPO algorithm achieves better results than TD3, which further emphasizes the effectiveness of stochastic policies for such unpredictable model dynamics. The deviation of the daily costs from their mean for each algorithm over the 100 test days is depicted in Figure 11.