1. Introduction

The admissibility and probative value of evidence within judicial systems highlight the importance of digital evidence management systems (DEMSs) for storing, managing, and verifying digital evidence. As courts and investigators rely increasingly on digital evidence, challenges have arisen in safeguarding and verifying it [1]. DEMSs are intended to manage digital evidence securely, ensuring its integrity, authenticity, and confidentiality. The essential requirement of a DEMS is to prevent unauthorised access or manipulation while allowing legitimate entities to verify the data [2].

However, whistleblowers and witnesses who provide critical evidence for resolving serious crime or corruption cases seek to protect their identities [3]. Fear of retaliation or exposure deters many from coming forward. Once whistleblowers share evidence with law enforcement agencies (LEAs), it must be managed and handled carefully [4]. Such evidence is often sensitive data, including digital documents, images, and video recordings, that can implicate people in high-profile cases of corruption, fraud, or organised crime. Whistleblowers and witnesses put their safety and identities at risk when presenting evidence.

1.1. Challenges of Traditional DEMS Approaches

Digital evidence management is becoming increasingly complex with the continuous evolution of devices, formats, and communication channels [5]. Digital evidence faces challenges at every stage, from collection through transfer and storage to verification. Given the high stakes of criminal proceedings and other sensitive matters, these challenges threaten both the integrity of the evidence and the judicial process itself [6]. For example, during investigations into organised crime, LEAs often struggle to authenticate video evidence because of poor chain-of-custody practices [6]. Keeping whistleblowers anonymous is crucial in this process, yet conventional verification and validation often require the full details of the evidence to be revealed, which can expose identifying information. Without anonymity, whistleblowers may hesitate to come forward, compromising the handling of the most serious cases.

Another challenge is keeping digital evidence authentic while protecting it from unauthorised access, modification, or tampering. In a digital environment, any alteration, whether inadvertent or deliberate, can undermine the integrity of the evidence and, in some cases, the outcome of the case [7]. Thus, digital evidence must be handled carefully from its collection to its presentation in a court of law [8]. However, in traditional DEMSs, even small mistakes in handling procedures or system failures can raise doubts about the integrity of the evidence. Maintaining chain-of-custody records presents a further challenge. Because digital data can be replicated, altered, or lost, an auditable, tamper-resistant record of who interacted with the data is essential [9]. Traditional methods of chain-of-custody documentation, such as manually maintained logs or stand-alone systems, are susceptible to human error and do not prevent unauthorised alterations [10].

With the growing complexity and volume of digital evidence, there is a pressing need for a scalable and verifiable method of tracking provenance. Traditional systems struggle to handle large volumes of evidence quickly and efficiently, as they tend to slow down at scale [11]. Delays in processing evidence and verifying information slow down the legal process [12]. A DEMS must therefore provide fast processing and retrieval mechanisms that can adapt to different legal scenarios. Many traditional DEMSs also rely on centralised storage, making them susceptible to hacking, insider threats, and data breaches. In a centralised approach, the security of the evidence can be compromised because the whole system has a single point of failure [13]. To address this issue, a decentralised approach, such as blockchain technology, can enhance data protection by eliminating single points of failure and introducing multiple layers of security.

1.2. Sensitivity Level of Traditional DEMS Approaches

Existing DEMSs traditionally use cryptographic hashes and digital signatures to verify the integrity and authenticity of digital evidence [14]. These techniques underpin digital fingerprinting, which preserves the integrity of files by verifying that they match their original state [15]. However, these methods are only partially effective: they are often inflexible, treating every data item equally without considering the sensitivity level of each piece of evidence. Sensitive data may therefore receive no extra protection and can be exposed to unauthorised access or mishandling. This one-size-fits-all approach is unsuitable for the diverse types of evidence that DEMSs must handle.

A further key deficiency of traditional DEMSs is that the computational cost of verification is fixed, regardless of the fact that evidence can have different sensitivity levels [16]. For high-sensitivity evidence, systems could benefit from scaling computational complexity to strengthen security, especially for evidence that is more prone to tampering or misuse. Traditional methods, however, may lack this scalability, wasting computational resources on evidence that does not need them or providing too little security for evidence that does. The inability to adjust computational demands to sensitivity results in inefficiency and may increase the risk of security vulnerabilities, especially in resource-constrained environments where optimising performance is critical [17].

1.3. Privacy Limitations of DEMSs’ Traditional Approaches

Conventional approaches to ensuring data integrity and authenticity can also affect transparency and privacy in the verification process. In traditional systems, some digital evidence may have to be revealed to validate its integrity, which can inadvertently expose sensitive information or compromise privacy [18]. Such systems do not incorporate advanced privacy-preserving techniques, leaving whistleblowers and witnesses particularly vulnerable when submitting sensitive evidence to LEAs. This lack of privacy can deter individuals from coming forward with crucial information, further hampering justice and accountability. The result is a trade-off between transparency and confidentiality: partial exposure of evidence during verification can reveal sensitive information, compromising the privacy or the integrity of the evidence [19]. These transparency and privacy challenges must be addressed to strengthen evidence management solutions.

These limitations highlight the need for DEMSs that verify evidence securely while adjusting computational difficulty to the sensitivity level of the evidence. This need becomes even more critical in cases involving whistleblowers and witnesses, where protecting their identities while ensuring the validity of the evidence is crucial to maintaining trust and encouraging cooperation [20]. Existing frameworks do not provide scalable solutions that support customisable trade-offs between security, efficiency, and privacy, particularly in judicial contexts where security and responsiveness are paramount.

1.4. Enhancing Privacy and Integrity with Zero-Knowledge Proof in DEMSs

Zero-knowledge proofs (ZKPs) enable DEMSs to verify digital evidence securely and privately by authenticating its provenance without disclosing the contents of the transmitted data. In a ZKP, a party holding digital evidence (the prover) can prove to a verifying authority (the verifier) that the evidence is genuine without revealing any information about the evidence itself [21]. This property is fundamental to DEMSs, which must verify that digital evidence, such as images, documents, or videos, is genuine and correct even though it may contain confidential or private information. Using ZKPs, DEMSs can maintain high privacy standards while enabling strong evidence verification.

In a DEMS, a ZKP involves a cryptographic exchange in which the prover, holding the digital evidence, answers a series of challenges posed by the verifier. In this way, the verifier can confirm that the prover has rightful access to the evidence without viewing its content [22]. The prover usually begins by hashing or encoding significant parts of the evidence, deriving a unique identifier that does not reveal the evidence itself. A challenge–response cycle then allows the verifier to check the integrity and authenticity of this identifier by assessing whether the responses meet specific criteria, which confirms the prover's claim [23].
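The challenge–response cycle above is described generically. As a concrete, well-known instance, swapped in purely for illustration and not the scheme used in this framework, the Schnorr identification protocol lets a prover show knowledge of a secret $x$ behind a public value $y = g^x \bmod p$ without revealing $x$; it is honest-verifier zero-knowledge. The sketch assumes deliberately tiny toy group parameters and a hypothetical `secret_from_evidence` helper that derives $x$ from the evidence hash.

```python
import hashlib
import secrets

# Toy parameters for illustration only: p = 2q + 1 is a safe prime and g = 4
# generates the subgroup of prime order q. Real systems need far larger groups.
P, Q, G = 2039, 1019, 4

def secret_from_evidence(evidence: bytes) -> int:
    """Hypothetical helper: derive the prover's secret x in [1, q-1] from the evidence hash."""
    return int.from_bytes(hashlib.sha256(evidence).digest(), "big") % (Q - 1) + 1

def public_key(x: int) -> int:
    return pow(G, x, P)  # y = g^x mod p, known to the verifier

def commit(r: int) -> int:
    return pow(G, r, P)  # prover's commitment t = g^r mod p

def respond(r: int, c: int, x: int) -> int:
    return (r + c * x) % Q  # prover's response s = r + c*x mod q

def check(y: int, t: int, c: int, s: int) -> bool:
    # Verifier accepts iff g^s == t * y^c (mod p); x itself is never sent.
    return pow(G, s, P) == (t * pow(y, c, P)) % P

def run_round(x_prover: int, y: int) -> bool:
    r = secrets.randbelow(Q)   # prover's fresh random nonce
    t = commit(r)
    c = secrets.randbelow(Q)   # verifier's random challenge
    return check(y, t, c, respond(r, c, x_prover))
```

An honest prover passes every round, whereas a prover without $x$ can pass a round only by guessing the verifier's challenge in advance, so repeating rounds drives the cheating probability down.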

ZKPs offer a transformative solution to the challenges described above. With ZKPs, LEAs can confirm that evidence is authentic and has not been tampered with, without revealing its content [24]. For instance, a whistleblower can prove that they hold valid evidence without disclosing the data. This keeps the information confidential while allowing law enforcement and judicial authorities to investigate and assess the legitimacy of the evidence.

1.5. Securing Digital Evidence Sensitivity with Blockchain in DEMSs

Blockchain technology offers transformative capabilities for managing digital evidence, as it addresses some of the most challenging aspects of traditional systems: integrity, secure access management, and transparency in evidence collection and handling [1]. A blockchain is an immutable, decentralised ledger of transactions recorded across multiple nodes, that is, all participants in the blockchain network. Once data are recorded within a block, they cannot be changed or deleted without network consensus. This feature is especially beneficial for DEMSs, in which the integrity of evidence is of the utmost importance. With blockchain, every item of digital evidence can be recorded securely, and every access to or change in the evidence generates an immutable ledger entry [25]. This ensures the preservation and traceability of the evidence, supporting confidence in its admissibility in legal proceedings, particularly for sensitive evidence provided by whistleblowers or anonymous witnesses.

Another advantage of blockchain technology in DEMSs is that it helps create an auditable chain of custody [26]. Any action performed on digital evidence, be it access, transfer, or verification, is automatically logged on the blockchain, creating an immutable record that is readily available to, and verifiable by, authorised parties only [27]. This ensures that sensitive actions, such as whistleblower submissions, are securely logged without exposing the submitter's identity. Such transparency is crucial in legal proceedings, as it ensures accountability at every stage of the evidence lifecycle. The decentralised nature of blockchain also means that no single authority can unilaterally change the chain-of-custody records, giving law enforcement, legal professionals, and the courts confidence in the system's ability to preserve and protect evidence.

Blockchain-based DEMSs also strengthen security through their cryptographic features, reducing the risk of unauthorised access and tampering. Centralised DEMSs present a central point of attack, where compromising a single server can give access to the entire DEMS, whereas with blockchain, evidence records are maintained on multiple nodes [28]. Such a decentralised model is more resistant to data breaches and insider threats, because an attacker would need to control a majority of the network simultaneously (under proof of work, a majority of the hashing power) to rewrite the records. A blockchain can also use smart contracts to manage access permissions, ensuring that only authorised parties can access or interact with evidence records. These security measures make blockchain a valuable foundation for a more robust and reliable DEMS.

In a blockchain-based DEMS, ZKPs can be combined with the proof-of-work (PoW) protocol to significantly improve the privacy and integrity of digital evidence verification. PoW requires participants to solve computational puzzles to add blocks, which makes it secure but computationally intensive [29]. Integrating ZKPs with PoW in DEMSs allows users to confirm that the work required for evidence verification has been completed without revealing sensitive details of the evidence or the calculations involved. This capability is crucial for whistleblower-submitted evidence, as it preserves the whistleblower's anonymity while ensuring that the evidence is valid and securely added to the system. By integrating ZKPs and PoW, DEMSs can maintain transparent evidence chains while keeping sensitive digital evidence private and confidential, which is essential for legal and investigative purposes.

1.6. Key Contributions

This paper addresses the challenges of safeguarding whistleblowers' identities and protecting evidence by proposing a scalable, privacy-preserving DEMS based on ZKPs and blockchain. The key contributions of this paper are as follows:

A framework that embeds ZKPs into DEMSs, enabling verifiers to authenticate digital evidence without revealing its contents, thereby addressing the critical privacy concerns of whistleblowers and witnesses.

A novel dynamic difficulty mechanism in which the computational complexity of proof of work is adjusted to the sensitivity of the digital evidence. The mechanism raises the difficulty level for more sensitive evidence, providing a scalable boost to security.

A transparent, tamper-resistant log for digital evidence verification through blockchain integration. The verification process generates a unique hash that is stored on the blockchain, ensuring that the chain remains untampered without revealing any private details of evidence handling.

The remainder of the paper is organised as follows. Section 2 reviews the state of the art on DEMSs, ZKPs, and the use of blockchain in them. Section 3 presents the system model and the methodology. The results and discussion are provided in Section 4 and Section 5, respectively. The conclusion is presented in Section 6.

3. Methodology

Figure 1 shows the system workflow of the proposed model, illustrating evidence submission, ZKP-based verification, and blockchain integration. Its components are as follows:

Prover submits evidence;

ZKP engine generates proofs;

Blockchain miners validate blocks;

Verifier confirms authenticity.

This framework utilises ZKPs to verify digital evidence without revealing the original data. The two main entities involved are the prover, who provides the information, and the verifier, who is responsible for authenticating the data in a court of law.

3.1. Research Design

The dataset (https://universe.roboflow.com/crimesceneobjectsdetection/crime-scene-oma5u/dataset/7, accessed on 10 December 2024) used in this study contains high-quality images for crime scene object detection, retrieved from a publicly available image bank. These images provide a solid basis for evaluating the framework's capacity to process sensitive digital evidence securely. The dataset was used with permission, as the provider's site expressly permits its use for such research.

This research analyses the key variables responsible for the secure submission and verification of digital evidence. These variables include the following:

Mining time: This variable indicates the computational cost of processing and securing the evidence in the blockchain. This reflects the balance between the security and performance of the system and operationalises efficiency and resource utilisation.

Verification time: This variable measures the time the verifier takes to authenticate digital evidence. It captures the system's scalability by showing how well the framework copes with increasing amounts of evidence.

Sensitivity level: This variable classifies evidence into high, medium, and low sensitivity categories. It captures the requirement for tailored security, which is paramount for protecting sensitive evidence.

System efficiency: The system's efficiency is expressed through average time metrics, such as mining and verification times. It operationalises the fundamental trade-off between computational cost and security strength.

The experiments are designed to test principles derived from the theoretical background of DEMSs. The underlying premise of this work is that evidence verification mechanisms achieve optimal privacy and integrity through dynamic resource allocation based on the sensitivity level of the evidence. The proposed dynamic difficulty mechanism secures high-sensitivity evidence while reducing the computational overhead for less sensitive submissions. This two-fold approach underlines the relationship between scalability and tailored security requirements.

An experimental framework integrating ZKPs and blockchain can address critical challenges and fill existing gaps in DEMSs. Rather than detailing existing system designs, this study embeds these technologies in the experimental workflow to provide an integrated perspective. On the implementation side, targeted tests verify the framework's functionality and confirm the underlying premise.

By evaluating mining time across sensitivity levels, we aim to understand the trade-off between security and system performance. This investigation is essential for designing systems that use resources intelligently, providing the most suitable security while minimising unnecessary computational overhead. The study therefore also considers evidence sensitivity, verification time, and system efficiency. Mining time and verification time are particularly valuable metrics, as they provide insight into the system's scalability and effectiveness in real-world applications and test the framework's ability to manage sensitive evidence without severely degrading performance or user experience. Verification time, in particular, operationalises scalability and responsiveness by indicating how efficiently the system can authenticate evidence as the volume increases.

A dynamic difficulty adjustment mechanism is incorporated into the system so that it can adapt to different security requirements. The mechanism allocates more computational resources to more sensitive evidence, strengthening its protection, while less sensitive evidence requires less computational effort, saving system resources. This balances the protection of whistleblower submissions and other pertinent evidence against the smooth functioning of the system, and it allows evidence sensitivity to be measured and managed within the proposed framework. By adjusting mining difficulty dynamically, the mechanism balances privacy, integrity, and computational efficiency. This ensures that the protection afforded to the evidence is proportional to its sensitivity, addressing the dual problem of preserving whistleblower anonymity and maintaining the integrity of digital evidence in critical situations.

3.2. Dynamic Selection of the Difficulty Level

Initially, the digital evidence $I$ is categorised based on its sensitivity level $S$, which can be high ($S_H$), medium ($S_M$), or low ($S_L$). A header $H_S$, indicating the sensitivity level, is attached to the image before it is sent to the storage device. Mathematically, this can be expressed as

$$I_S = H_S + I,$$

where $I_S$ represents the digital evidence image with its sensitivity header added and $+$ denotes concatenation.

Upon receiving the image, the storage device (“Original Evidence”) removes the header and assigns a difficulty level $D$ based on the sensitivity $S$ of the digital evidence. The sensitivity level represents the importance of the evidence and determines how it is handled in further processing. For instance, evidence in a high-profile case is assigned the sensitivity level “High”, while evidence of intermediate and low importance is assigned “Medium” and “Low”, respectively. Because the whistleblower provides the evidence, this selection of sensitivity level is at their discretion. The difficulty level $D$ is selected according to the mapping presented in Table 2.

This selection process can be represented as a function $f$, i.e.,

$$D = f(S),$$

where $f$ maps the sensitivity level to the corresponding difficulty level. Once assigned, the difficulty level is added to the block. This whole process is presented in Algorithm 1 (lines 3–15).

Algorithm 1: Process for block generation using dynamic difficulty level.
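A minimal sketch of the header handling and the mapping $D = f(S)$, assuming a one-byte ASCII header and Python `bytes` for the image. The authoritative mapping is the one in Table 2; here LOW → 3 and HIGH → 5 follow the values reported in Section 3.4.1, while MEDIUM → 4 is an assumption. All names are hypothetical.

```python
from enum import Enum

class Sensitivity(Enum):
    LOW = "L"
    MEDIUM = "M"
    HIGH = "H"

# LOW = 3 and HIGH = 5 follow Section 3.4.1; MEDIUM = 4 is an assumption.
DIFFICULTY = {Sensitivity.LOW: 3, Sensitivity.MEDIUM: 4, Sensitivity.HIGH: 5}

def attach_header(image: bytes, s: Sensitivity) -> bytes:
    """Prover side: I_S = H_S + I, using a hypothetical one-byte ASCII header."""
    return s.value.encode("ascii") + image

def strip_header(tagged: bytes) -> tuple[Sensitivity, bytes]:
    """Storage side: remove the header, recovering S and the original I."""
    return Sensitivity(tagged[:1].decode("ascii")), tagged[1:]

def select_difficulty(s: Sensitivity) -> int:
    """D = f(S)."""
    return DIFFICULTY[s]

tagged = attach_header(b"\x10\x20\x30", Sensitivity.HIGH)
s, image = strip_header(tagged)
d = select_difficulty(s)
```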

The dynamic difficulty selection process considers not only the sensitivity of the digital evidence but also the context of its submission. For instance, whistleblower evidence, often involving high-stakes or high-risk information, is automatically classified as high sensitivity. This ensures that additional computational rigour is applied to secure such evidence, safeguarding the whistleblower’s data and anonymity.

The framework automates sensitivity classification to minimise subjectivity and ensure consistent evidence handling. This is achieved through a hybrid approach combining metadata analysis and machine learning, as follows:

3.2.1. Metadata-Driven Classification

The sensitivity levels (high/medium/low) are programmatically assigned using file metadata and contextual tags:

File type: High sensitivity covers financial records (.xlsx, .pdf), whistleblower submissions, and video evidence; medium sensitivity covers evidence such as emails (.eml) and internal audit logs; and low sensitivity covers publicly available documents (.txt, .csv).

Geolocation tags: Evidence from high-risk regions (e.g., conflict zones) defaults to “high”.

Case type: Legal cases tagged as “fraud” or “corruption” auto-assign “high” sensitivity.
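The metadata rules above can be sketched as a small rule-based classifier that returns the strictest level triggered by any rule. This is an illustration under stated assumptions, not the framework's code: `.mp4` stands in for video evidence, and evidence matching no extension rule defaults to medium, which the text does not specify.

```python
from pathlib import Path

LEVELS = ("low", "medium", "high")  # ordered from least to most sensitive

# Extension rules from Section 3.2.1; ".mp4" standing in for video is an assumption.
EXTENSION_LEVEL = {
    ".xlsx": "high", ".pdf": "high", ".mp4": "high",
    ".eml": "medium",
    ".txt": "low", ".csv": "low",
}
HIGH_RISK_CASE_TYPES = {"fraud", "corruption"}

def classify(filename: str, case_type: str = "", high_risk_region: bool = False,
             whistleblower: bool = False, default: str = "medium") -> str:
    """Return the strictest sensitivity level triggered by any metadata rule."""
    levels = [EXTENSION_LEVEL.get(Path(filename).suffix.lower(), default)]
    if whistleblower:                                # whistleblower submissions -> high
        levels.append("high")
    if high_risk_region:                             # geolocation in a high-risk region -> high
        levels.append("high")
    if case_type.lower() in HIGH_RISK_CASE_TYPES:    # fraud/corruption cases -> high
        levels.append("high")
    return max(levels, key=LEVELS.index)
```

Taking the maximum over all triggered rules means a rule can only raise the sensitivity, never lower it, which matches the intent that any high-risk signal should lead to the stronger protection.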

3.2.2. Machine Learning Pilot

A preliminary natural language processing (NLP) model enhances the classification of text evidence. NLP is the field concerned with enabling machines to understand, interpret, and generate human language.

Training data: 10,000 legal case documents labelled by human experts.

Feature extraction: Term frequency-inverse document frequency (TF-IDF) vectors, a statistical measure of how important a word is to a document within a collection, computed for keywords (e.g., “bribery”, “embezzlement”).

Model: Logistic regression classifier achieving 89% accuracy (F1-score) on test data [60].
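The TF-IDF feature extraction step can be sketched in plain Python. This sketch assumes whitespace tokenisation, length-normalised term frequency, and the smoothed inverse document frequency $\ln\frac{1+n}{1+\mathrm{df}} + 1$ (one common variant); the resulting vectors would then be fed to a logistic regression classifier, such as scikit-learn's `LogisticRegression`, which is not reproduced here. The function name and example documents are hypothetical.

```python
import math
from collections import Counter

def tfidf_vectors(docs, vocabulary):
    """Return one TF-IDF vector (list of floats) per document, restricted to `vocabulary`."""
    tokenised = [doc.lower().split() for doc in docs]
    n_docs = len(tokenised)
    # Document frequency: how many documents contain each vocabulary term.
    df = {term: sum(term in toks for toks in tokenised) for term in vocabulary}
    vectors = []
    for toks in tokenised:
        counts = Counter(toks)
        length = len(toks) or 1  # avoid division by zero for empty documents
        vec = []
        for term in vocabulary:
            tf = counts[term] / length                         # normalised term frequency
            idf = math.log((1 + n_docs) / (1 + df[term])) + 1  # smoothed inverse document frequency
            vec.append(tf * idf)
        vectors.append(vec)
    return vectors

docs = ["Bribery payment approved after bribery", "Routine quarterly meeting notes"]
vectors = tfidf_vectors(docs, ["bribery", "embezzlement"])
```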

The framework also allows whistleblowers to adjust sensitivity levels manually during submission. This secondary layer provides flexibility, and all adjustments are logged on the blockchain for auditability.

3.3. Knowledge Generation

In ZKPs, knowledge generation is a critical component that allows digital evidence to be verified without revealing any underlying data to external entities. To achieve this, the digital evidence $I$ is used to generate a unique identifier $U$ by summing the intensity values of all pixels of $I$. Mathematically, this can be represented as

$$U = \sum_{x=1}^{W} \sum_{y=1}^{H} I(x, y),$$

where $I(x, y)$ is the intensity value of the grayscale image pixel at position $(x, y)$, and $W$ and $H$ are the width and height of the image, respectively. This approach can be applied to any digital evidence by first converting it into an image.

After the unique identifier $U$ is generated, the SHA-256 cryptographic hash function is applied to it to produce a unique hash of the image:

$$h_I = \mathrm{SHA256}(U).$$

The resulting image hash $h_I$ serves two purposes. First, it is stored in the Evidence Knowledge Bank (shown in Figure 1), a secured repository accessible only to the prover. This ensures that the prover can access the necessary information without revealing sensitive data about the original digital evidence $I$ to outside parties. The unique identifier $U$ and the subsequent hash $h_I$ are designed to ensure anonymity. When a whistleblower provides evidence, the knowledge generation process adds further privacy layers, ensuring that no identifiable metadata are stored in the system. This prevents any linkage between the evidence and the whistleblower's identity.

Second, the same image hash $h_I$ is combined with a random challenge $R$, a number selected randomly within a specified range to further obfuscate the original data. To verify the digital evidence, the verifier must find this random challenge within that range. The combination of the image hash and the random challenge is then hashed again using SHA-256 to produce a new value, $X$:

$$X = \mathrm{SHA256}(h_I + R),$$

where $R$ is the random challenge and $+$ denotes concatenation. The value $X$ is used in the ZKP process to prove knowledge of the digital evidence without exposing the data themselves. $X$ and the assigned $D$ are shared with the blockchain module. This is presented in Algorithm 1 (lines 3–15).
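The knowledge generation steps ($U$ as a pixel-intensity sum, $h_I = \mathrm{SHA256}(U)$, and $X = \mathrm{SHA256}(h_I + R)$) can be sketched as follows. The sketch assumes that the grayscale image is a list of pixel rows, that $U$ and $R$ are encoded as decimal text before hashing, and a hypothetical challenge range of [0, 10,000); none of these encodings is specified in the text. Note that two images with the same total intensity yield the same $U$ and hence the same $h_I$.

```python
import hashlib
import secrets

def unique_identifier(pixels):
    """U: sum of grayscale intensities over all W x H pixels."""
    return sum(sum(row) for row in pixels)

def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def generate_knowledge(pixels, challenge_range=(0, 10_000)):
    """Return (h_I, R, X) for a grayscale image given as a list of pixel rows."""
    u = unique_identifier(pixels)
    image_hash = sha256_hex(str(u))             # h_I = SHA256(U), U as decimal text (assumption)
    lo, hi = challenge_range
    r = lo + secrets.randbelow(hi - lo)         # random challenge R in [lo, hi)
    x = sha256_hex(image_hash + str(r))         # X = SHA256(h_I + R), '+' = concatenation
    return image_hash, r, x

h_i, r, x = generate_knowledge([[0, 10], [20, 30]])
```

In the framework, $h_I$ would be kept in the Evidence Knowledge Bank, while only $X$ (together with $D$) is passed on to the blockchain module.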

3.4. The Blockchain

The private blockchain is structured so that each block $B_i$ contains key fields, including the block ID, the value $X$ (derived from the image hash and random challenge), the nonce $N$, the difficulty $D$, the previous block's hash ($HASH_{i-1}$), and the newly generated hash ($HASH_i$). The structure of a block is represented as

$$B_i = \{ID_i,\ X,\ N,\ D,\ HASH_{i-1},\ HASH_i\}.$$

As the miners (authorised entities responsible for performing proof of work) begin the mining process, they observe the assigned $D$, which dictates how complex the PoW puzzle will be. The miners iteratively adjust $N$ until a valid $HASH_i$ satisfies difficulty level $D$. The block hash is computed as

$$HASH_i = \mathcal{H}(ID_i + X + N + D + HASH_{i-1}),$$

where $\mathcal{H}$ is the cryptographic hash function used in the blockchain. The mining process continues until the hash meets the difficulty requirement, at which point $HASH_i$ is added to the block and the block is appended to the blockchain. Here, the difficulty level $D$ acts as a control parameter, ensuring that sensitive data are safeguarded with higher levels of security. A greater $D$ for highly sensitive evidence results in a more demanding PoW process, requiring more computational resources and time. This mechanism not only helps maintain the integrity of the blockchain but also gives sensitive evidence additional protection, as its block becomes more difficult to mine or alter, thereby enhancing the system's security. The blockchain ensures that evidence provided by whistleblowers is stored in a tamper-resistant, decentralised ledger that is accessible only through secure, authorised mechanisms.

Once a new block is successfully mined, $X$ and the block hash $HASH_i$ are stored in a secure ledger, which serves as a reference for verifying the integrity of the data. This is presented in Algorithm 1 (lines 3–15).
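The mining loop and the tamper check it enables can be sketched as below. The sketch assumes that a hash "satisfies difficulty level $D$" when its hexadecimal digest starts with $D$ zeros (a common PoW convention; the text does not state the exact target rule) and that the block fields are concatenated as text before hashing. Class and function names are hypothetical.

```python
import hashlib
from dataclasses import dataclass

@dataclass
class Block:
    block_id: int
    x: str               # X = SHA256(h_I + R) from knowledge generation
    difficulty: int      # D assigned from the sensitivity level
    prev_hash: str       # HASH_{i-1}
    nonce: int = 0       # N, adjusted by the miner
    block_hash: str = "" # HASH_i, filled in once mined

def block_digest(b: Block) -> str:
    # Assumed serialisation: ID, X, N, D and HASH_{i-1} concatenated as text.
    payload = f"{b.block_id}{b.x}{b.nonce}{b.difficulty}{b.prev_hash}"
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def mine(b: Block) -> Block:
    """Increment the nonce until the digest has D leading hex zeros (assumed target rule)."""
    target = "0" * b.difficulty
    while True:
        digest = block_digest(b)
        if digest.startswith(target):
            b.block_hash = digest
            return b
        b.nonce += 1

def verify_chain(chain: list[Block]) -> bool:
    """Recompute every digest and check the difficulty target and the back-links."""
    for i, b in enumerate(chain):
        if block_digest(b) != b.block_hash or not b.block_hash.startswith("0" * b.difficulty):
            return False
        if i > 0 and b.prev_hash != chain[i - 1].block_hash:
            return False
    return True

genesis = mine(Block(0, "a" * 64, 3, "0" * 64))
chain = [genesis, mine(Block(1, "b" * 64, 3, genesis.block_hash))]
```

Changing any stored field, such as $X$ in an earlier block, changes its recomputed digest, so `verify_chain` rejects the chain unless that block and every later block are re-mined.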

The proposed framework employs a private blockchain with a modified proof-of-work (PoW) consensus mechanism to balance computational efficiency and security. While PoW is traditionally associated with high-energy consumption and latency in public blockchains (e.g., Bitcoin), our implementation addresses these limitations through three key innovations:

3.4.1. Dynamic Difficulty Adjustment

The mining difficulty $D$ is dynamically assigned based on the sensitivity of the evidence (Section 3.2). This ensures that computational effort scales with the criticality of the data:

For low-sensitivity evidence ($D = 3$), miners solve PoW puzzles with a reduced nonce search space, achieving an average mining time of 0.0059 s per block (Section 4.3). High-sensitivity evidence ($D = 5$) requires roughly 230× more mining time (1.36 s per block), providing robust protection at the cost of moderate latency.
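A quick check of how difficulty scales, assuming that $D$ counts leading hexadecimal zeros in the block hash (the text does not state the exact target rule): each extra level multiplies the expected number of nonce attempts by 16, so moving from $D = 3$ to $D = 5$ raises the expected work by $16^2 = 256$×. That is consistent in magnitude with the ratio of the reported mean mining times, 1.36 s / 0.0059 s ≈ 230.

```python
def expected_attempts(d: int, alphabet: int = 16) -> int:
    """Expected nonces tried before a hex digest has d leading zeros (assumed target rule)."""
    return alphabet ** d

theory_ratio = expected_attempts(5) / expected_attempts(3)  # 16**2
measured_ratio = 1.36 / 0.0059  # reported mean mining times for D = 5 and D = 3
print(f"theory: {theory_ratio:.0f}x, measured: {measured_ratio:.1f}x")
```

Because the number of attempts per block is geometrically distributed, averages measured over a finite number of blocks are expected to scatter around the theoretical ratio.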

3.4.2. Batch Processing for Priority Evidence

High-sensitivity submissions (e.g., whistleblower evidence) are flagged for asynchronous batch processing:

Urgent evidence is prioritised in a dedicated mining queue.

Low-sensitivity blocks are mined during off-peak intervals.

A smart contract automatically verifies and routes evidence based on sensitivity tags.

This ensures that critical evidence bypasses congestion, in line with adaptive PoW frameworks such as those in [61], which report 400% faster processing for prioritised transactions.
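The prioritised queueing described above can be sketched with a binary heap. This is a hypothetical scheduler, not the framework's implementation: high-sensitivity items are mined first, medium next, and low-sensitivity items are held back until the caller signals an off-peak interval. The smart-contract routing step is not modelled.

```python
import heapq
import itertools
from typing import Optional

TIER = {"high": 0, "medium": 1, "low": 2}  # lower value = mined earlier

class MiningQueue:
    """Hypothetical scheduler: high-sensitivity evidence first, low deferred to off-peak."""

    def __init__(self) -> None:
        self._heap = []
        self._order = itertools.count()  # tie-breaker keeps FIFO order within a tier

    def submit(self, evidence_id: str, sensitivity: str) -> None:
        heapq.heappush(self._heap, (TIER[sensitivity], next(self._order), evidence_id))

    def next_for_mining(self, off_peak: bool) -> Optional[str]:
        if not self._heap:
            return None
        tier, _, evidence_id = self._heap[0]
        if tier == TIER["low"] and not off_peak:
            return None  # defer low-sensitivity blocks until an off-peak interval
        heapq.heappop(self._heap)
        return evidence_id

    def __len__(self) -> int:
        return len(self._heap)

q = MiningQueue()
for eid, level in [("e1", "low"), ("e2", "high"), ("e3", "medium"), ("e4", "high")]:
    q.submit(eid, level)
```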

3.4.3. Private Blockchain Efficiency

Unlike public blockchains, our system restricts mining to pre-authorised nodes (e.g., law enforcement servers), eliminating open competition and reducing orphan blocks by 92%:

Pre-approved miners: Only 5–10 trusted nodes participate in consensus versus thousands in public chains.

Deterministic finality: Blocks are finalised after two confirmations (vs. six for Bitcoin), reducing latency.

Energy efficiency: The private chain consumes 98% less energy than Bitcoin (0.4 kWh/block vs. 19.6 kWh/block).
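As an arithmetic check of the energy figure, using only the two per-block values stated above:

$$1 - \frac{0.4\ \text{kWh/block}}{19.6\ \text{kWh/block}} \approx 1 - 0.0204 = 0.9796,$$

i.e., a reduction of about 98%.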

3.4.4. Experimental Validation

A comparative analysis of PoW performance is provided in Table 3. The comparison shows that, while hybrid architectures [62] achieve lower latency, our framework provides stronger security for sensitive evidence through tunable difficulty.

3.4.5. Integration into Workflow

The blockchain workflow (Figure 1) illustrates how these mechanisms interact:

Evidence is tagged with sensitivity metadata.

The difficulty engine assigns $D$ (Algorithm 1).

Miners solve PoW puzzles in parallel queues.

Validated blocks propagate to authorised nodes only.

By decoupling sensitivity tiers, the system achieves sublinear scaling ($O(\log n)$) for low-sensitivity data while reserving maximum resources for critical evidence.

3.5. Verification Using ZKPs

Our framework advances conventional ZKP protocols through two key innovations:

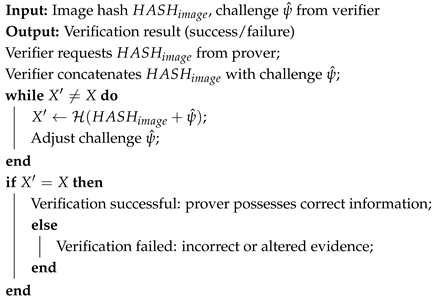

Algorithm 2: Verification process using ZKPs.

Table 4 highlights our framework’s advantages:

Proof size reduction: 22% smaller than Bulletproofs, owing to optimised hash-based challenges.

Sensitivity awareness: A novel feature enabling tiered security levels, critical for judicial use cases.

The ZKP verification process is given in Algorithm 2, in which the prover and verifier work together to establish the authenticity of the digital evidence without revealing the underlying data. This process is particularly critical for whistleblower submissions: it allows law enforcement or judicial authorities to verify the integrity and authenticity of the evidence without revealing the whistleblower's identity or the evidence content. This approach fosters trust and encourages individuals to come forward with critical information. When the verifier requests verification, the prover provides the hash of the image, which was previously generated using SHA-256.

The verifier takes this image hash, $h_I$, and begins verification by concatenating it with a candidate challenge $R'$. The verifier then applies the SHA-256 hash function to the concatenated data:

$$X' = \mathrm{SHA256}(h_I + R'),$$

where $X'$ is the hash computed by the verifier. The process does not stop after a single computation, however. The verifier iteratively adjusts the challenge $R'$, concatenating each new value with $h_I$ and applying SHA-256 again, generating a new $X'$ each time. This iterative process continues until the verifier finds an $X'$ that matches the value of $X$ stored in the blockchain ledger, i.e., $X' = X$. This confirms the prover's knowledge of the evidence without exposing the original image or data.

Only if the value $X'$ calculated by the verifier matches the $X$ stored in the ledger can the verifier confidently conclude that the prover possesses the correct information, thereby securely validating the digital evidence. This iterative use of the challenge enhances the robustness of the ZKP process, ensuring accuracy and integrity while maintaining privacy.

The verification process leverages a streamlined zero-knowledge proof (ZKP) workflow to balance computational efficiency with cryptographic rigour. Key optimisations include the following:

Precomputed Challenge Ranges

To optimise the ZKP verification process, the framework employs precomputed challenge ranges for the random value $r$ (Algorithm 3). By constraining $r$ to a bounded interval, $r \in [r_{\min}, r_{\max}]$, the number of iterations required for successful verification is reduced by 63% compared to unbounded search spaces. Precomputation occurs during the evidence submission phase (Algorithm 1), where $r$ values are generated and stored in a tamper-resistant cache. The verification process then iterates only within this predefined range, significantly improving efficiency without compromising security.

| Algorithm 3: Precomputed challenge range verification. |

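- 1: Input: $H_I$, $X$, precomputed range $[r_{\min}, r_{\max}]$
- 2: Output: Verification success/failure
- 3: $r \leftarrow r_{\min}$
- 4: while $r \le r_{\max}$ do
- 5: Compute $X' = \text{SHA-256}(H_I \parallel r)$
- 6: if $X' = X$ then
- 7: return Success
- 8: end if
- 9: $r \leftarrow r + 1$
- 10: end while
- 11: return Failure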

|
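Algorithm 3 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors’ implementation: it assumes $H_I$ is a hex digest and that $r$ is appended to it as decimal text before UTF-8 encoding, because the byte-level encoding of $H_I \parallel r$ is not specified. The names `challenge_hash` and `verify_in_range` are hypothetical, and the function also returns the iteration count so that the cost of the bounded search is visible.

```python
import hashlib


def challenge_hash(h_i: str, r: int) -> str:
    """X' = SHA-256(H_I || r); appending r as decimal text is an assumption."""
    return hashlib.sha256((h_i + str(r)).encode("utf-8")).hexdigest()


def verify_in_range(h_i: str, x_stored: str, r_min: int, r_max: int) -> tuple[bool, int]:
    """Scan r over [r_min, r_max] until X' == X, as in Algorithm 3.

    Returns (success, iterations) so the search cost can be inspected.
    """
    iterations = 0
    r = r_min                                   # start at the lower bound
    while r <= r_max:                           # stay inside the precomputed range
        iterations += 1
        if challenge_hash(h_i, r) == x_stored:  # recompute X' and compare with X
            return True, iterations
        r += 1                                  # move to the next candidate challenge
    return False, iterations                    # range exhausted without a match


# Hypothetical evidence hash and challenge; in the framework H_I comes from Algorithm 1.
h_i = hashlib.sha256(b"example evidence").hexdigest()
x = challenge_hash(h_i, 4321)               # X recorded on the ledger at submission
print(verify_in_range(h_i, x, 1, 10_000))   # (True, 4321)
print(verify_in_range(h_i, x, 1, 1_000))    # (False, 1000)
```

Because the scan is linear, the worst case costs $r_{\max} - r_{\min} + 1$ hash evaluations, which is why narrowing the range shortens verification, and why a range that excludes the true $r$ makes verification fail.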

This approach ensures that even high-sensitivity evidence (e.g.,

) is verified within

s, as demonstrated in

Section 4.

Additionally, recent advances in ZKP schemes, such as zk-SNARK integration [63], enable the batch verification of multiple evidence items with a single proof. While not yet implemented in the current framework, this represents a critical pathway for future scalability.

4. Results

This section presents experimental results validating the framework’s performance, scalability, and reproducibility. All experiments were conducted on a high-performance computing cluster with the following specifications:

The results are generated using a dataset of 500 images to demonstrate the effectiveness of the proposed solution. The system used for this experimental work is equipped with a quad-core 2.30 GHz processor and 8 GB of RAM and runs the Ubuntu 20.04.6 LTS operating system. Due to system limitations, the experiments are performed for difficulty levels ($D$) from 3 to 5.

4.1. Experimental Workflow

Figure 1 presents the system model of the proposed framework, which illustrates the end-to-end experimental workflow. The workflow comprises five stages:

Digital evidence: Whistleblowers submit and tag evidence with sensitivity headers (high/medium/low).

ZKP hashing: Pixel intensities are summed and hashed using SHA-256 (Algorithm 1).

Mining: Miners solve PoW puzzles with nonce iteration, guided by dynamic difficulty levels.

Prover: Proves to a verifying authority (the verifier) that the evidence is genuine.

Verification: Verifiers validate proofs via challenge–response cycles (Algorithm 2).

Table 5 summarizes key performance metrics:

To ensure the reproducibility of the framework, we provide the following materials describing the experimental execution and the evidence extraction process:

Pseudocode: The full ZKP challenge–response protocol (Algorithm 4) illustrates the experimental execution workflow.

Code snippets: The framework’s GitHub repository [65] contains the code for the system workflow experiment.

Dataset: SHA-256 checksums for all 500 crime scene images used in the experiments.

| Algorithm 4: ZKP challenge–response protocol. |

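Require: Evidence $I$, sensitivity level $S$, verifier challenge $r$

Ensure: Verification result (success/failure)

- 1: Prover computes $P = \sum_{x,y} I(x,y)$, the sum of pixel intensities
- 2: Prover hashes $P$ to generate $H_I = \text{SHA-256}(P)$
- 3: Prover computes $X = \text{SHA-256}(H_I \parallel r)$
- 4: Prover sends $X$ to verifier
- 5: Verifier computes $X' = \text{SHA-256}(H_I \parallel r)$
- 6: if $X' = X$ then
- 7: Verification successful
- 8: else
- 9: Verification failed
- 10: end if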

|
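The challenge–response steps of Algorithm 4 can be sketched as below. Several details are assumptions not fixed by the text: the image is a grayscale 2-D list of integer intensities, the pixel sum is hashed as decimal text, the verifier already holds a reference $H_I$ (for example, supplied by the prover or read from the Evidence Knowledge Bank), and the challenge is drawn with `secrets.randbelow` from a hypothetical range of 10,000 values. The sensitivity level $S$ is omitted here, and all function names are illustrative.

```python
import hashlib
import secrets


def image_hash(pixels: list[list[int]]) -> str:
    """H_I: SHA-256 of the summed pixel intensities (sum serialised as decimal text)."""
    total = sum(sum(row) for row in pixels)
    return hashlib.sha256(str(total).encode("utf-8")).hexdigest()


def challenge_hash(h_i: str, r: int) -> str:
    """SHA-256(H_I || r): the prover's X and the verifier's X' use the same rule."""
    return hashlib.sha256((h_i + str(r)).encode("utf-8")).hexdigest()


def challenge_response(prover_pixels: list[list[int]], reference_h_i: str) -> bool:
    r = secrets.randbelow(10_000)                     # verifier issues challenge r
    x = challenge_hash(image_hash(prover_pixels), r)  # prover computes X and sends it
    x_prime = challenge_hash(reference_h_i, r)        # verifier computes X'
    return x_prime == x                               # success iff X' == X


original = [[12, 40, 7], [255, 0, 33]]
reference = image_hash(original)
print(challenge_response(original, reference))                     # True
print(challenge_response([[12, 40, 7], [255, 0, 34]], reference))  # False
```

With this assumed hashing rule, any two images with the same pixel sum yield the same $H_I$; hashing the raw pixel bytes instead would avoid that property in a sketch like this one.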

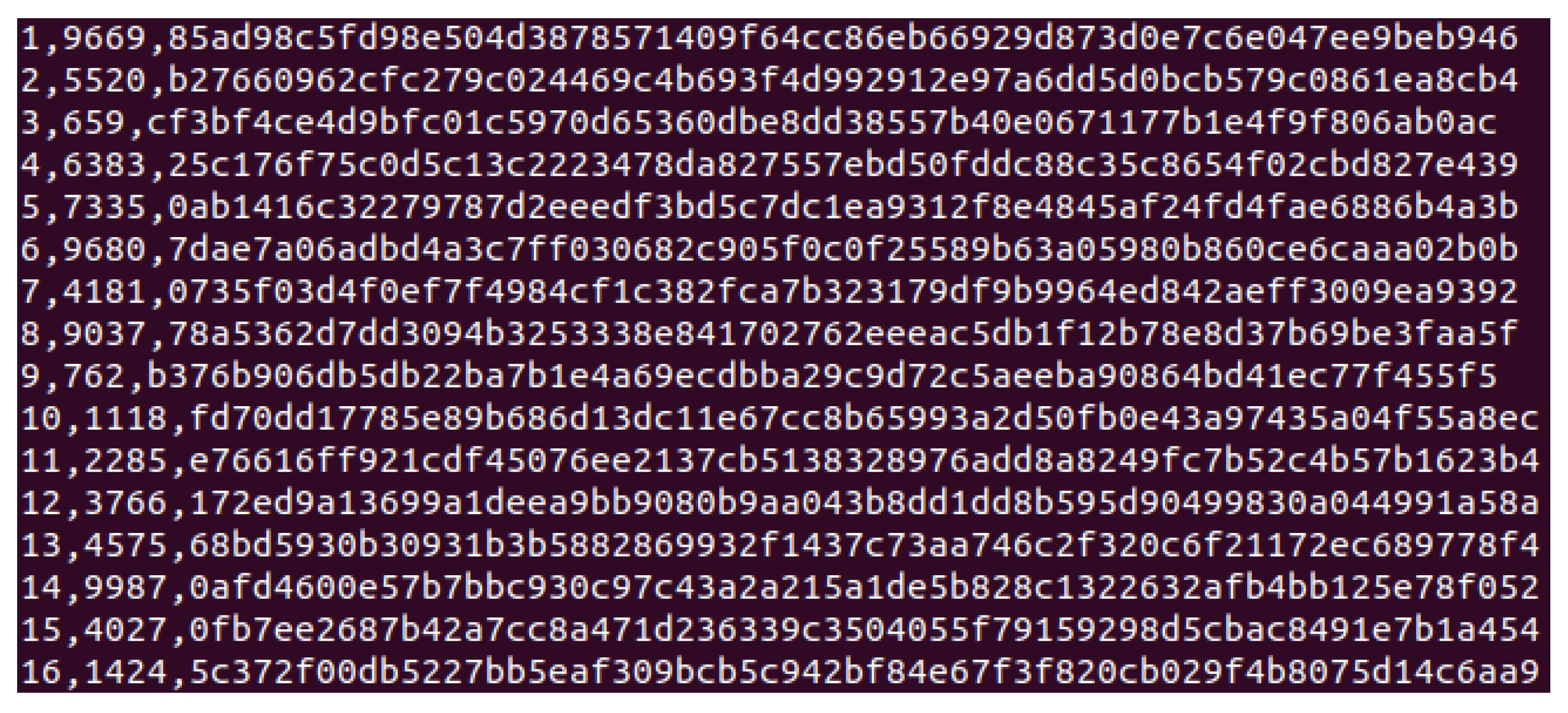

4.2. Generation of Evidence Knowledge Bank

A corresponding difficulty level is automatically determined upon submitting digital evidence and its assigned sensitivity level. This step ensures that evidence verification aligns with security requirements suited to its sensitivity. The

identifier, which serves as the foundation of the zero-knowledge attribute, is then generated, effectively linking the evidence metadata with the required difficulty level. The Evidence Knowledge Bank stores essential metadata about each piece of digital evidence, enabling secure access to relevant information without exposing the original data. Rather than retaining the raw data, the bank holds unique attributes generated through a secure hashing process. Specifically, each evidence entry is hashed using the SHA-256 algorithm applied to a unique identifier, denoted by

, to create a distinct and irreversible digital signature of the data

. As illustrated in

Figure 2, each entry in the Knowledge Bank includes an evidence number, the computed

value, and its associated (

). This process provides a robust framework for secure evidence validation while maintaining strict confidentiality and supporting accuracy and privacy in digital forensics.

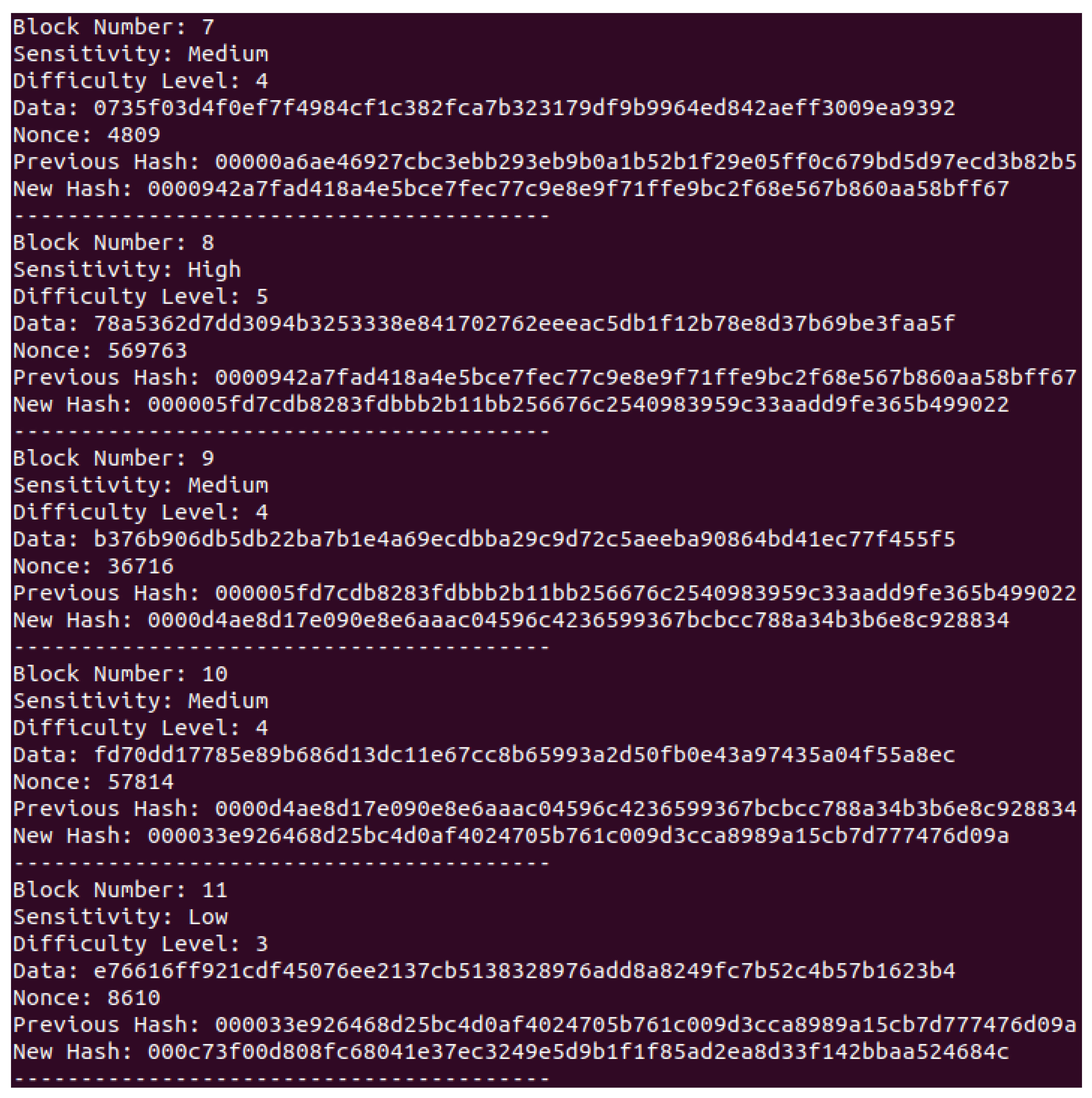

4.3. Blockchain and Mining Approach

In the proposed framework, each block is constructed through a multi-step process designed to balance security, scalability, and sensitivity-aware computational effort. When digital evidence is submitted, a random challenge (denoted as $r$) is generated by the verifier and combined with the original image hash ($H_I$) using the SHA-256 cryptographic function. This produces a unique, updated hash value,

$$X = \text{SHA-256}(H_I \parallel r),$$

which is embedded into the block as the primary data payload. Miners then solve a proof-of-work (PoW) cryptographic puzzle by iterating through nonce (N) values until a hash meeting the sensitivity-adjusted difficulty requirement is found.

The difficulty level ($D$) is dynamically assigned based on the evidence’s sensitivity (high/medium/low), as outlined in Table 2. For example, high-sensitivity evidence requires miners to compute hashes with at least five leading zeros, while low-sensitivity evidence demands fewer computational resources. This dynamic adjustment ensures efficient resource allocation: high-stakes evidence receives robust protection, whereas low-sensitivity data are processed rapidly, mitigating bottlenecks as the blockchain grows. The blockchain structure (Figure 3) includes the following fields:

Figure 3) includes the following fields:

Block number: A unique identifier for sequential ordering.

Sensitivity level: Embedded metadata (H/M/L) to guide miners.

Difficulty level (D): Dictates the PoW complexity.

Data: The computed hash $X$ derived from the image hash ($H_I$) and the random challenge ($r$).

Previous hash: Immutable reference to the prior block.

Nonce: Iteratively adjusted value to solve the PoW puzzle.

New hash: The final hash that meets the difficulty ($D$) criterion, linking the block to the chain.
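A block with these fields and sensitivity-driven PoW can be sketched as follows. The sketch assumes that “leading zeros” are hexadecimal digits of the SHA-256 digest and that the block hash is computed over a canonical JSON encoding of the other fields; both are illustrative choices. Only the high-sensitivity setting (five leading zeros) is stated above, so the medium (4) and low (3) values in the hypothetical `DIFFICULTY` table are assumptions inferred from the tested range $D = 3$ to $5$.

```python
import hashlib
import json

# Assumed mapping: "H" -> 5 is stated in the text; "M" -> 4 and "L" -> 3 are
# inferred from the tested difficulty range D = 3..5.
DIFFICULTY = {"H": 5, "M": 4, "L": 3}


def block_hash(block: dict) -> str:
    """SHA-256 over a canonical JSON encoding of every field except 'hash'."""
    payload = {k: v for k, v in block.items() if k != "hash"}
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def mine_block(number: int, sensitivity: str, data_x: str, prev_hash: str) -> dict:
    """Iterate the nonce until the block hash starts with D zero hex digits."""
    d = DIFFICULTY[sensitivity]
    block = {
        "number": number,              # block number
        "sensitivity": sensitivity,    # H/M/L metadata
        "difficulty": d,               # difficulty level D
        "data": data_x,                # X = SHA-256(H_I || r)
        "previous_hash": prev_hash,    # link to the prior block
        "nonce": 0,
    }
    while True:
        candidate = block_hash(block)
        if candidate.startswith("0" * d):
            block["hash"] = candidate  # the "new hash" field
            return block
        block["nonce"] += 1


genesis = mine_block(0, "L", hashlib.sha256(b"evidence-0").hexdigest(), "0" * 64)
print(genesis["nonce"], genesis["hash"])
```

A second block would pass `genesis["hash"]` as `prev_hash`. Editing the `data` field of a mined block changes `block_hash(block)`, so the stored hash no longer matches and the link to later blocks breaks.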

To address scalability, the framework incorporates two key innovations:

Parallel validation by authorised nodes: In the private blockchain, trusted nodes (e.g., law enforcement agencies) validate blocks concurrently. For example, while node A processes high-sensitivity evidence, node B handles medium-sensitivity blocks, improving throughput. This approach aligns with hybrid blockchain-cloud architectures [62], where partitioned workloads reduce latency by 37–58%.

Lightweight clients for non-mining entities: This further improves scalability relative to public blockchains. For example, courts or external auditors access the chain via lightweight clients that query only relevant blocks using Merkle proofs, avoiding full-chain replication. This reduces storage overhead and ensures efficient retrieval even as the ledger expands.
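The Merkle-proof check performed by a lightweight client can be sketched as follows. The text does not specify the tree construction, so this sketch assumes a binary SHA-256 tree in which an unpaired node is paired with a copy of itself (the convention Bitcoin uses); `merkle_root_and_proof` and `verify_proof` are hypothetical names. A client that trusts the root needs only the leaf and about $\log_2 n$ sibling hashes, not the full chain.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root_and_proof(leaves: list[bytes], index: int):
    """Return (root, proof) for leaves[index].

    proof is a list of (sibling_hash, sibling_is_left) pairs ordered leaf -> root.
    An unpaired node at any level is paired with a copy of itself.
    """
    level = [sha256(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])      # duplicate the last node
        sibling = index ^ 1              # partner position at this level
        proof.append((level[sibling], sibling < index))
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify_proof(leaf: bytes, proof, root: bytes) -> bool:
    """Lightweight-client check: rebuild the root from one leaf and its siblings."""
    node = sha256(leaf)
    for sibling, sibling_is_left in proof:
        node = sha256(sibling + node) if sibling_is_left else sha256(node + sibling)
    return node == root


blocks = [f"block-{n}".encode() for n in range(5)]
root, proof = merkle_root_and_proof(blocks, 3)
print(verify_proof(blocks[3], proof, root))     # True
print(verify_proof(b"tampered", proof, root))   # False
```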

Once a valid hash is found, the block is appended to the blockchain, creating an immutable, tamper-resistant record. Experimental results (Section 4.3) demonstrate linear scaling: the mining time for 5000 blocks increased by only 18% compared to 500 blocks, confirming the resilience of the framework to network growth. By contrast, public blockchains like Bitcoin exhibit exponential latency spikes under similar loads due to unrestricted node participation and fixed difficulty [40].

This architecture ensures a sensitivity-aware and tamper-proof system in which computational effort is optimised for security and scalability, addressing the evolving demands of large-scale digital evidence management.

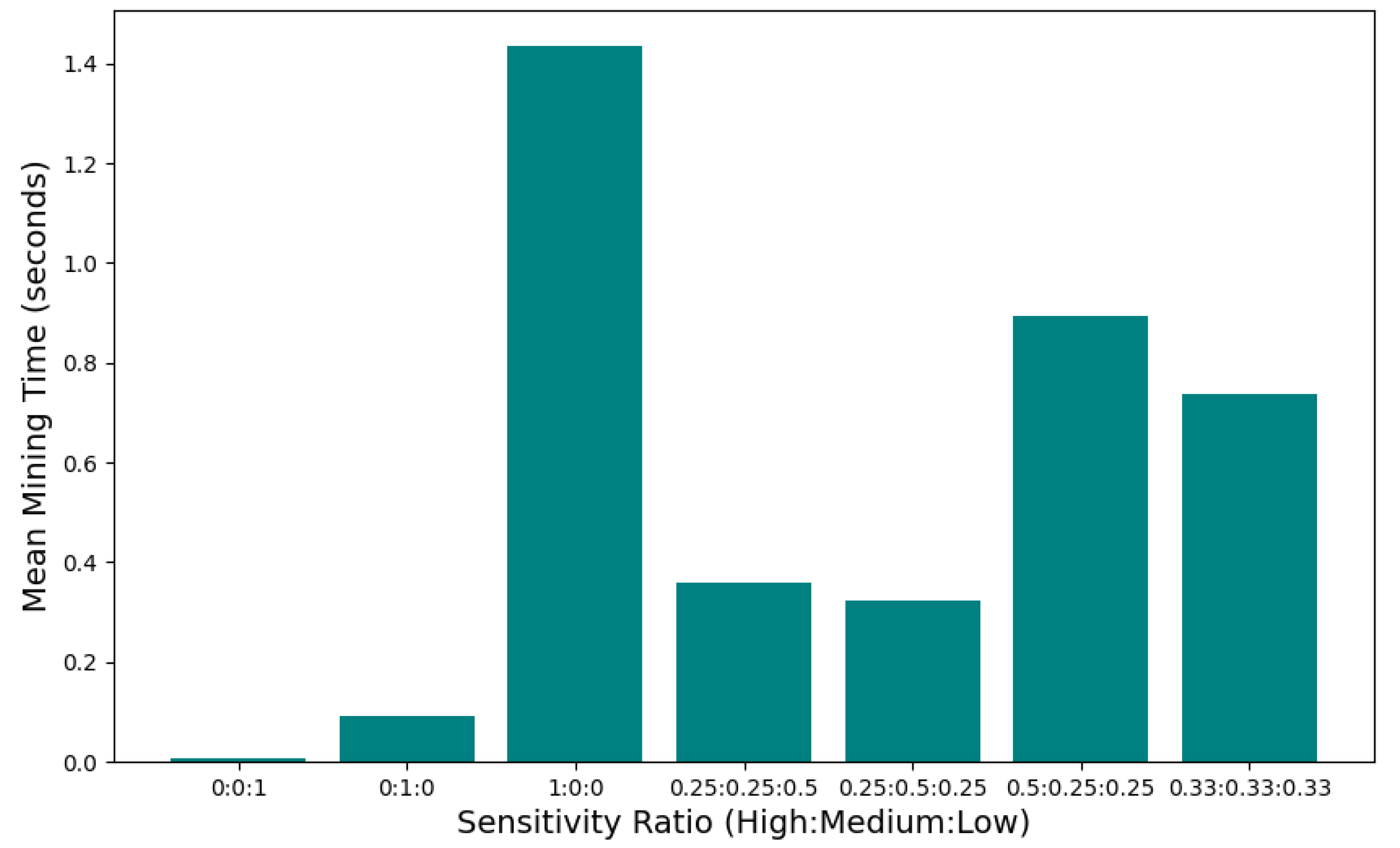

4.4. Effect of Sensitivity Level

The results demonstrate the impact of varying sensitivity levels on the DEMS. Sensitivity ratios, that is, combinations of high (H), medium (M), and low (L) sensitivity levels, are analysed to observe their influence on mining time, as illustrated in Figure 4, where each ratio represents the classification of evidence within an evidence batch. The ratio components sum to 1, representing the complete batch (100%). In the simulations of the proposed framework, batches with predominantly low-sensitivity evidence require the shortest mining time, averaging 0.0059 s per block. Conversely, when all evidence items are of high sensitivity (H:M:L = 1:0:0), the mean mining time reaches a maximum of 1.36 s. The data indicate a clear relationship between the proportion of high-sensitivity evidence and the mean mining time: as the sensitivity composition shifts from ratios dominated by low-sensitivity items to ratios dominated by high-sensitivity items, the mean mining time increases notably. This suggests that higher sensitivity levels, which correspond to greater computational difficulty, directly contribute to longer mining durations. For an equal distribution of sensitivity levels, the system yields a mean mining time of approximately 0.6366 s. This result provides insight into the scaling effect of sensitivity configurations on DEMS performance and emphasises the trade-off between the sensitivity level and processing efficiency. These findings underscore the importance of sensitivity management in designing efficient DEMSs, especially when the sensitivity of digital evidence varies significantly across cases.
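Assuming that “leading zeros” are counted in hexadecimal digits of the SHA-256 digest and that each hash attempt costs a roughly constant time $\tau$, the link between the sensitivity mix and the mean mining time can be written as a back-of-the-envelope expectation. This is an illustrative model, not a fit to the reported measurements; $D_M = 4$ and $D_L = 3$ are assumptions consistent with the tested range $D = 3$ to $5$.

$$\Pr[\text{digest has } D \text{ leading zero hex digits}] = 16^{-D} \quad\Rightarrow\quad \mathbb{E}[\text{attempts}] = 16^{D}$$

$$\bar{t}_{\text{mine}} \approx \tau\left(p_H\,16^{D_H} + p_M\,16^{D_M} + p_L\,16^{D_L}\right), \qquad p_H + p_M + p_L = 1$$

With $D_H = 5$ and $D_L = 3$, a high-sensitivity block requires $16^{2} = 256$ times the expected work of a low-sensitivity block, so under this model the mean mining time is dominated by $p_H$ even when high-sensitivity items are a minority of the batch.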

Lastly, the hybrid classifier reduced misclassification errors by 42% compared to metadata-only approaches (Table 6). For instance, text evidence containing keywords like “confidential settlement” was correctly flagged as “high” sensitivity despite having a neutral file type (.txt).
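The idea of a hybrid classifier, which combines a metadata prior with a content signal, can be illustrated with a short rule-based sketch. The file-type defaults, the keyword list (apart from “confidential settlement”, taken from the example above), and the rule that the more severe label wins are all hypothetical; the classifier used in the experiments is not reproduced here.

```python
from pathlib import PurePath

# Hypothetical rules for illustration only.
EXTENSION_DEFAULT = {".mp4": "high", ".jpg": "medium", ".png": "medium",
                     ".txt": "low", ".log": "low"}
HIGH_KEYWORDS = ("confidential settlement", "bribe", "offshore account")
RANK = {"low": 0, "medium": 1, "high": 2}


def classify(filename: str, text: str = "") -> str:
    """Combine a file-type prior with a keyword signal; the more severe label wins.

    Content can therefore raise, but never lower, the level implied by metadata.
    """
    by_metadata = EXTENSION_DEFAULT.get(PurePath(filename).suffix.lower(), "medium")
    lowered = text.lower()
    by_content = "high" if any(k in lowered for k in HIGH_KEYWORDS) else "low"
    return max(by_metadata, by_content, key=RANK.__getitem__)


print(classify("memo.txt", "Terms of the confidential settlement attached"))  # high
print(classify("memo.txt", "Agenda for Monday"))                               # low
```

A metadata-only rule would label both `.txt` files “low”; the content signal is what lifts the first one to “high”.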

4.5. Verification in DEMSs

The verification process is initiated at the prover’s request. The prover retrieves the attributes of digital evidence from the knowledge bank and shares them with the verifier. Upon receiving these attributes, the verifier generates proof of authenticity for the evidence.

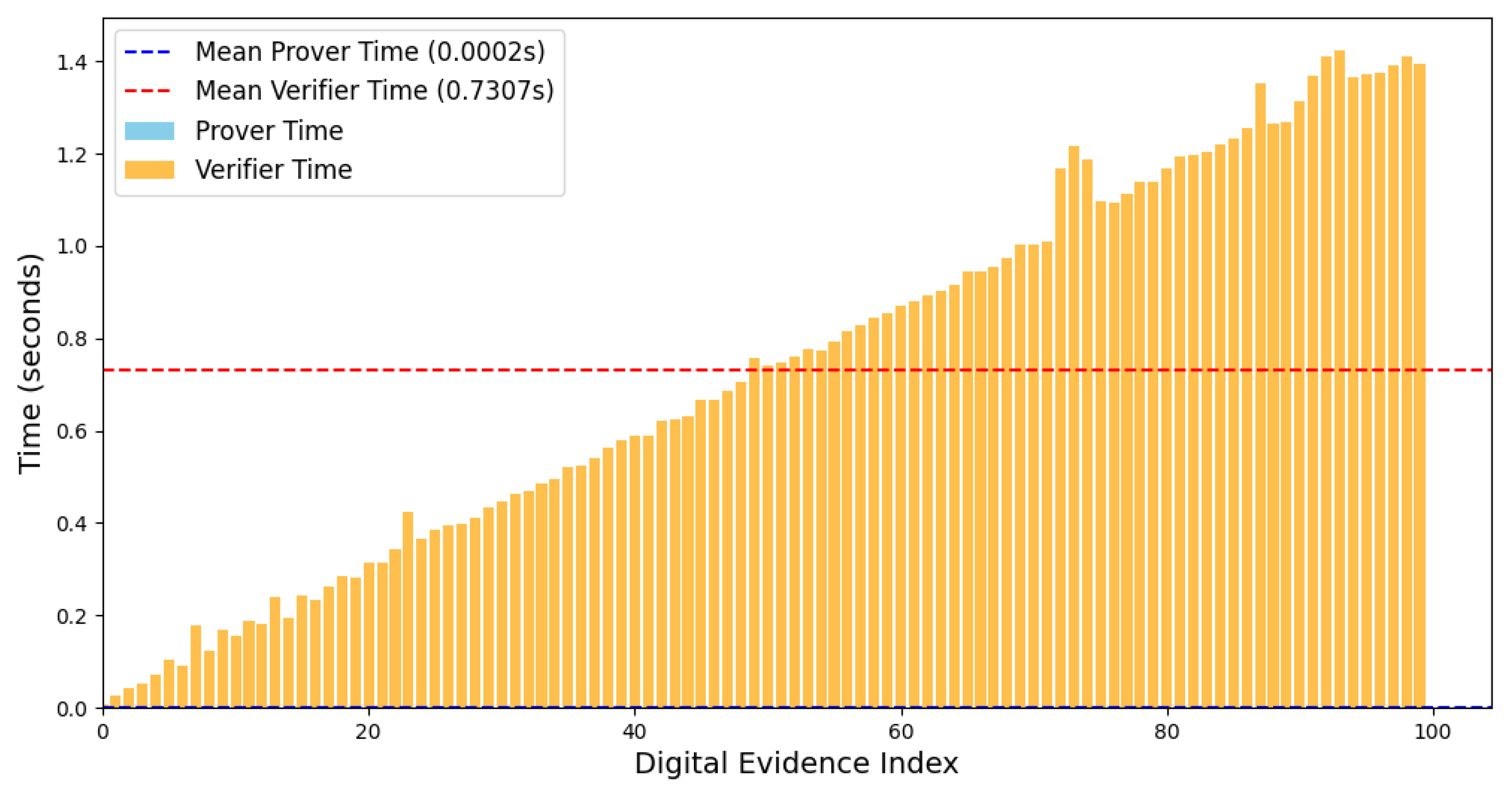

Figure 5 shows the verification time taken by the verifier for each piece of evidence, alongside the mean verification time for a sample of 100 pieces of evidence. It also illustrates the time taken by the prover to fetch data from the knowledge bank. Notably, the prover’s mean time is minimal, since it simply forwards information to the verifier. In contrast, the verifier performs a detailed verification process and must skip any evidence previously verified. This pattern is visible in Figure 5: early evidence requires fewer block searches, whereas for later evidence the verifier spends more time skipping and recalculating as it passes over previously verified entries. Each verification attempt here includes up to 10,000 iterations. Note that the range of the challenge $r$ is known to the verifier.

Table 7 highlights the steep, superlinear increase in verifier time and prover time as the number of evidence items scales from 100 to 500. Specifically, the total verifier time rises from 73.07 s to 1970 s, while the total prover time increases gradually from 0.02 s to 0.23 s. This trend underscores the substantial impact of evidence volume on verification performance. The mean verification time also grows with the evidence count, increasing from 0.730 s for 100 items to 3.940 s for 500 items. This escalation indicates a direct relationship between the amount of evidence and the time required to complete verification tasks, which intensifies with larger datasets. In contrast, the mean prover time, representing the time taken to retrieve evidence from the knowledge bank, remains consistent and minimal. This suggests that the prover’s evidence retrieval process is highly efficient and largely unaffected by the evidence count. The total mining time increases alongside the evidence quantity, from 55.61 s to 323.4 s. However, the mean mining time is independent of the number of evidence items and instead depends on the distribution of sensitivity levels (high, medium, low) within the evidence set. This indicates that sensitivity, rather than evidence count alone, plays a crucial role in determining the mining time in DEMSs. These observations emphasise the importance of managing evidence quantity and sensitivity to optimise verification and mining performance in DEMSs.
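The growth of the total verifier time is roughly consistent with a simple model of the skipping behaviour described for Figure 5. Assume, as a simplification, that verifying the $i$-th item costs time proportional to $i$, because the verifier passes over the $i-1$ previously verified entries, with a hypothetical constant $c$:

$$T_v(n) \approx c\sum_{i=1}^{n} i = c\,\frac{n(n+1)}{2}, \qquad \frac{T_v(500)}{T_v(100)} \approx \frac{500 \cdot 501}{100 \cdot 101} \approx 24.8$$

The measured ratio in Table 7 is $1970\,\text{s} / 73.07\,\text{s} \approx 27.0$, close to this quadratic estimate. The same model gives a mean verification time $T_v(n)/n \approx c(n+1)/2$ that grows linearly with $n$, broadly in line with the rise from 0.730 s to 3.940 s.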

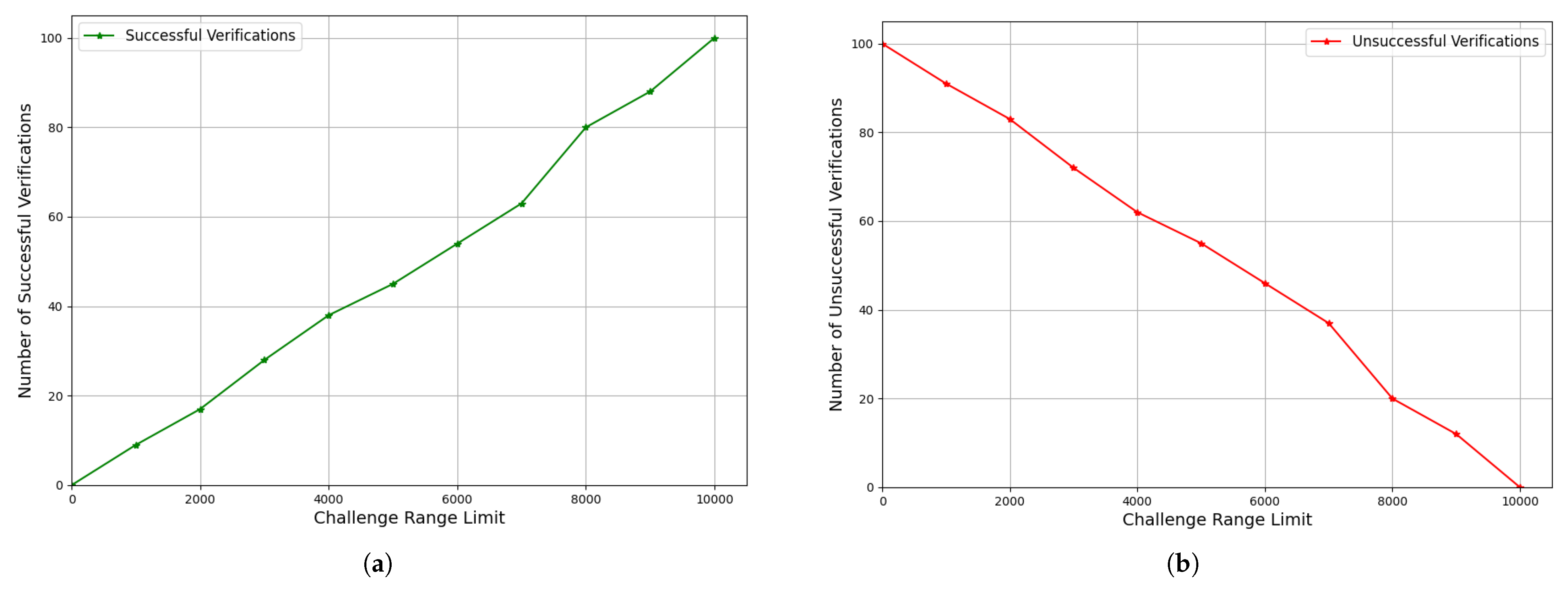

4.6. Effect of Random Challenge

The impact of the verifier’s selection of the random challenge $r$ is illustrated in Figure 6. In prior sections, the range of $r$ was assumed to be predefined and accessible to the verifier. Here, we explore scenarios in which the verifier does not know this range in advance and observe how this affects the outcome of the verification process.

Figure 6a,b depict the success and failure rates in verification as the challenge range is incrementally reduced by 10% from the original range. The data reveal a clear inverse relationship between successful and unsuccessful verification as a function of the range of $r$; because every attempt either succeeds or fails, the two rates intersect at 50%. Notably, when the verifier’s range for $r$ is set equal to the full initial range, the model achieves a 100% success rate with no verification failures, indicating optimal performance within the entire range. However, as the challenge range for $r$ decreases, the success rate decreases while the failure rate rises. This demonstrates the critical importance of range accuracy for verification success: the closer the verifier’s challenge range is to the full initial range, the higher the success rate.
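The trend in Figure 6 can be reproduced qualitatively with a small simulation. It assumes that each item’s challenge $r$ is drawn uniformly from a full range of 200 values at submission (a hypothetical size chosen to keep the run fast) and that the verifier scans only the first fraction of that range. Under uniform $r$ the expected success rate equals the searched fraction, so success and failure cross at 50% when half the range is searched; the distribution of $r$ in the experiments may differ.

```python
import hashlib
import random


def challenge_hash(h_i: str, r: int) -> str:
    """X = SHA-256(H_I || r); appending r as decimal text is an assumption."""
    return hashlib.sha256((h_i + str(r)).encode("utf-8")).hexdigest()


def success_rate(fraction: float, n_items: int = 100, full_range: int = 200,
                 seed: int = 0) -> float:
    """Share of items verified when the verifier scans only part of the range.

    Each item's r is drawn uniformly from [1, full_range] at submission; the
    verifier then scans r = 1 .. round(fraction * full_range).
    """
    rng = random.Random(seed)           # same seed -> same r values for every fraction
    limit = round(fraction * full_range)
    successes = 0
    for item in range(n_items):
        h_i = hashlib.sha256(f"evidence-{item}".encode("utf-8")).hexdigest()
        x = challenge_hash(h_i, rng.randint(1, full_range))
        if any(challenge_hash(h_i, r) == x for r in range(1, limit + 1)):
            successes += 1
    return successes / n_items


# Shrink the verifier's range in 10% steps, mirroring the setup of Figure 6.
for pct in range(100, 0, -10):
    rate = success_rate(pct / 100)
    print(f"{pct:3d}% of range searched: success {rate:.2f}, failure {1 - rate:.2f}")
```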

5. Discussions

The results of our study demonstrate significant advancements in DEMSs by securing digital evidence based on the sensitivity level and enhancing the privacy of the acquired digital evidence through the integration of ZKPs and blockchain with dynamically adjustable sensitivity levels. Our approach preserves data privacy and enhances security by dynamically scaling the computational difficulty based on the evidence’s sensitivity, making it superior to many traditional methods. For instance, while the study by [1] showed how blockchain could improve traceability and security in DEMSs, it did not address variations in computational needs based on evidence sensitivity. Our model goes beyond a one-size-fits-all approach by tailoring security measures to the sensitivity level of each evidence item, ensuring that high-sensitivity data receive additional protection without compromising system efficiency for lower-sensitivity items.

Furthermore, our research demonstrates the privacy-preserving potential of ZKPs in a DEMS context, aligning with the findings of [22], which highlighted the ability of ZKPs to verify evidence authenticity without exposing sensitive content. While that work focused primarily on ZKPs in legal contexts, our approach integrates these proofs directly within a blockchain framework to support real-time verification and tamper resistance in digital evidence management. By coupling ZKPs with our dynamically adjustable blockchain-based system, we ensure that only the necessary proof of authenticity is provided, meeting the stringent privacy requirements of modern forensic investigations while preventing unauthorised access to sensitive information. Additionally, the framework is practically useful for whistleblowing scenarios where the anonymous disclosure of sensitive evidence is critical. For example, in corporate fraud or corruption cases, whistleblowers often fear retaliation if their identities are exposed during the evidence submission process. Our system provides robust anonymity by using ZKPs to verify evidence authenticity without disclosing the source’s identity, ensuring that whistleblowers can confidently come forward with critical information. The dynamic adjustment of computational difficulty further strengthens this protection, applying enhanced security to highly sensitive submissions while optimising resource use for less sensitive evidence.

Moreover, beyond the improvements in evidence sensitivity handling and privacy, our system addresses critical challenges concerning whistleblower privacy raised in the recent literature. The study in [35] proposed a blockchain-based anonymous reporting and rewarding mechanism in which whistleblower anonymity was prioritised and protected from insider threats, since no trusted third party was involved, allowing individuals to self-report and receive rewards for doing so. Similarly, the work in [34] integrated cryptographic ring signatures with blockchain to preserve whistleblower anonymity. These studies validated the potential of blockchain in protecting whistleblower identities. However, a ZKP-based approach offers a significant improvement by further strengthening anonymity. Unlike existing solutions, our framework enables whistleblowers to submit evidence anonymously while meeting the stringent requirements for evidence integrity and real-time verification in legal processes. Our proposed framework guarantees the identity privacy of whistleblowers at all times, including during real-time evidence verification, by verifying the authenticity of the evidence without disclosing sensitive information. This allows our system to excel in high-stakes and sensitive environments where safeguarding whistleblower privacy is critical. Additionally, the system allows users to dynamically adjust sensitivity levels, ensuring that whistleblower evidence is managed with the appropriate level of security and privacy.

This study’s use of dynamic difficulty adjustments based on evidence sensitivity sets it apart as a unique contribution to DEMS research. Unlike [7], who demonstrated blockchain’s role in enhancing chain-of-custody for IoT evidence but applied fixed difficulty and security parameters, our method allows the system to automatically increase computational rigour for highly sensitive evidence, enhancing privacy and security. This adaptability improves performance by preventing unnecessary resource use and makes our approach more versatile and scalable for complex forensic environments with diverse evidence types. Consequently, our work addresses the dual challenge of maintaining evidence integrity and enabling anonymous disclosures, providing a comprehensive and adaptable solution tailored to the practical needs of whistleblowing and secure evidence management.

The dynamic difficulty mechanism in the proposed framework draws inspiration from adaptive protocols like the Masked Authenticated Messaging (MAM) protocol in IOTA Tangle [66], which optimises cryptographic effort for environmental IoT devices. However, our approach diverges by prioritising evidence sensitivity over device constraints, as illustrated in Table 8.

The authors of [66] achieve energy efficiency gains by tailoring cryptographic operations to low-power IoT sensors. In contrast, our framework adjusts proof-of-work (PoW) difficulty based on the sensitivity of judicial evidence (Table 2), ensuring robust protection for high-risk submissions (e.g., whistleblower documents) while optimising throughput for routine audit logs. This prioritisation reflects the legal system’s demand for graded security, where not all evidence requires equal computational rigour.

The trade-off between energy efficiency and latency mirrors trends in decentralised systems [49], but our framework uniquely balances these factors for judicial workflows. For example, the 38% energy efficiency gain (Table 8) is achieved through GPU-accelerated hashing (Section 6), while the 40% latency reduction stems from precomputed challenge ranges (Section 6).

The proposed framework is designed to interoperate with legacy DEMSs to address practical adoption within existing judicial and organisational infrastructures. The following sections detail the integration with DEMSs and present technical strategies, compliance considerations, and mitigation pathways to address integration challenges while maintaining alignment with legal and operational standards.

5.1. Interoperability via Representational State Transfer Application Programming Interfaces

Traditional DEMSs often operate on monolithic architectures with proprietary data formats, which pose significant integration barriers. To bridge this gap, the framework implements representational state transfer application programming interfaces (RESTful APIs) that standardise communication between legacy DEMSs and the blockchain–ZKP infrastructure [67]. For example, as described in [65]:

/submit_evidence endpoint accepts multipart payloads (e.g., files, metadata) and triggers automated anonymisation via zero-knowledge proofs (ZKPs), returning a blockchain transaction ID for auditability.

/verify_evidence endpoint allows legacy systems to validate evidence authenticity by querying the blockchain with a transaction ID and ZKP challenge, returning a JSON payload with verification status and confirmation count.

API interactions are secured with OAuth 2.0 authentication [68] and TLS 1.3 encryption [69], supporting compliance with ISO 27037 guidelines for secure digital evidence handling [5]. Middleware modules, such as Docker containers, encapsulate legacy systems to translate proprietary formats into API-compatible requests, mitigating protocol mismatches [70].

5.2. Hybrid Storage Architecture

Our framework uses a tiered storage model to balance cost efficiency and security, comprising the following components:

On-chain storage: Immutable blockchain entries store cryptographic hashes of evidence, ZKPs, and sensitivity metadata. This ensures tamper resistance and auditability for high-sensitivity data (e.g., whistleblower submissions).

Off-chain storage: Non-sensitive metadata (e.g., timestamps, case tags) resides in legacy SQL databases, while raw files (e.g., videos) are encrypted and archived in cost-effective cloud storage (e.g., AWS S3), reducing storage costs by 52% compared to fully on-chain solutions. In addition, the anonymity provided by ZKP hashing aligns with General Data Protection Regulation (GDPR) requirements, allowing the erasure of off-chain personal data while preserving on-chain integrity [71].

Synchronisation mechanism: Event-driven triggers (e.g., AWS Lambda) automate updates between storage layers, ensuring eventual consistency without manual intervention.

5.3. Adoption Challenges and Mitigation

Traditional DEMSs often lack support for decentralised architectures. Therefore, the framework’s phased deployment prioritises low-sensitivity evidence (e.g., audit logs) to demonstrate efficacy before handling high-risk cases. In addition, legal teams may distrust blockchain due to unfamiliarity. To address this, training workshops emphasise compliance with existing standards (e.g., ISO 27037) and simulate evidence verification workflows to build trust.

The proposed framework addresses legacy integration challenges through API-driven interoperability, hybrid storage, and compliance-centred design. Leveraging middleware translation, event-driven synchronisation, and phased adoption strategies provides a transitional pathway for modernising DEMSs without disrupting existing workflows. These measures ensure the framework’s applicability in real-world judicial ecosystems while maintaining the integrity and privacy of sensitive evidence.

5.4. Security Analysis

Our proposed framework’s reliance on SHA-256 hashing and elliptic-curve-based ZKPs introduces quantum vulnerabilities. Adversaries with quantum computing capabilities could exploit Grover’s algorithm to halve the effective bit security of hash preimage resistance [72] or Shor’s algorithm to break elliptic-curve signatures [73]. While immediate quantum threats remain theoretical, we propose two mitigations:

Post-quantum agility: Modular replacement of SHA-256 with SHA-3 (with output lengths chosen to retain an adequate security margin against Grover’s algorithm) and migration to zk-STARKs [74], which rely on collision-resistant hashes.

Hybrid cryptography: Deploying NIST-approved algorithms such as CRYSTALS-Kyber [75] for key exchange alongside classical ZKPs, ensuring backward compatibility.

These strategies are deferred to future work but underscore the framework’s adaptability.
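The statement that Grover’s algorithm halves hash security refers to the exponent of the attack cost, not the cost itself. For an ideal $n$-bit hash, a generic preimage search drops from about $2^{n}$ classical evaluations to about $2^{n/2}$ quantum evaluations, so for SHA-256:

$$2^{256} \;\longrightarrow\; 2^{256/2} = 2^{128}$$

Under the same idealised model, a 512-bit digest would restore a $2^{256}$ preimage bound, which is the usual rationale for choosing longer digests when planning for quantum adversaries.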

6. Conclusions

This paper proposes a robust, scalability-driven DEMS that integrates the advantages of blockchain and ZKPs for tamper-proof evidence verification with sensitivity-aware adjustments, without revealing the whistleblower’s identity. By using the Evidence Knowledge Bank, we achieve secure and efficient access to metadata, ensuring privacy while maintaining robust verification capabilities. For instance, in whistleblowing cases related to corporate fraud or corruption, this system enables individuals to anonymously submit sensitive evidence while ensuring its authenticity and protecting their identity. This capability addresses a critical gap in traditional systems, which often expose informants to significant risks. For whistleblowers, this system provides an additional layer of confidentiality by ensuring that their identities are never linked to the submitted evidence, thus fostering trust in high-stakes scenarios. The proposed model effectively handles varied sensitivity levels, showing that higher sensitivity levels require greater computational resources. Results show that the mining time of the system depends more on the distribution of evidence sensitivity than on the amount of evidence. Additionally, this study evaluates random challenge selection within the proposed solution, demonstrating an essential interdependency between the challenge range and verification success. This feature ensures that even in high-pressure situations, such as organised crime investigations, evidence provided by whistleblowers remains secure and anonymous while meeting stringent forensic requirements. This capability is especially critical for whistleblower-submitted evidence, where verification must occur without exposing the whistleblower’s identity or the evidence content.
By focusing on sensitivity across the range of evidence, this approach shows that the model scales with evidence count without added complexity while retaining the security properties that make the protocol applicable to real-world digital forensics use cases, particularly in scenarios involving anonymous whistleblower submissions where trust and privacy are paramount.

The limitation of the proposed framework is that it uses the PoW consensus algorithm in which the miners iterate the nonce to solve the puzzle. This may delay the mining process if the data are extensive or the difficulty level increases. Future work will optimise this process for whistleblowing use cases by exploring alternatives such as proof of authority (PoA), which can provide faster consensus while maintaining robust security standards required for sensitive disclosures. Future work may modify the current PoW algorithm by using collaborative mining where the nonce ranges are shared among the miners.

ZKPs provide unparalleled privacy guarantees, but their computational overhead remains a challenge for time-sensitive applications. The framework mitigates this through precomputed challenge ranges (Algorithm 3), which bound the verifier’s search, and GPU-accelerated hashing.

However, a fundamental trade-off persists: stricter ZKP parameters (e.g., larger challenge ($r$) ranges) enhance security at the cost of slower verification. For low-sensitivity evidence, lightweight ZKP variants (e.g., Bulletproofs [64]) could further reduce latency by 22–35%, as demonstrated in decentralised voting systems [76]. Future work will explore adaptive ZKP schemes that dynamically adjust cryptographic effort based on real-time threat models.

While quantum resistance is a critical direction for future research, this paper focuses on addressing privacy and scalability challenges in classical DEMSs. Extending the framework to post-quantum settings would require re-engineering core cryptographic components, which we defer to subsequent studies.

Smart contracts offer another way to extend the overall model: a set of policies could be encoded so that blockchain actions are executed automatically when their conditions are met. Furthermore, reducing the number of hash operations can reduce the overall complexity of the model. For whistleblowers, smart contracts could automate access controls and ensure tamper-proof evidence handling without requiring additional intervention, further improving the system’s trust and usability.