1. Introduction

In the digital era, the adoption of IoT technologies has catalyzed a transformative shift in various sectors, most notably in healthcare. The burgeoning network of interconnected devices, collectively known as the Internet of Medical Things (IoMT), has fundamentally enhanced the capabilities of smart healthcare systems (SHSs) by enabling sophisticated data analytics and real-time patient monitoring [1,2,3]. With an estimated 30 billion IoT devices projected by 2030 [4,5], the healthcare sector is on the brink of a data revolution, poised to significantly improve medical diagnostics and patient care through Artificial Intelligence (AI) and machine learning (ML) [6].

However, the exponential growth of data within SHSs introduces complex privacy and security challenges [7]. The highly sensitive nature of personal health information requires robust mechanisms to protect data against unauthorized access and breaches, in accordance with strict regulations such as the General Data Protection Regulation (GDPR) [8] and the Health Insurance Portability and Accountability Act (HIPAA) [9]. In response, Federated Learning (FL) [10] has emerged as an innovative solution that enables decentralized model training across diverse devices without sharing their sensitive raw data. Although FL embraces privacy by design, relying solely on its basic privacy features is insufficient to ensure comprehensive privacy in smart healthcare [11]. According to [12,13], FL is vulnerable to attacks such as membership inference [14,15], model reconstruction [8,16], and model inversion [8,17], which can lead to significant privacy risks. In addition, introducing FL into SHSs raises privacy concerns of its own [18]. To strengthen its privacy-preserving capabilities, augmenting FL with privacy-enhancing technologies (PETs) such as Differential Privacy (DP), Homomorphic Encryption (HE), and Secure Multi-Party Computation (SMPC), under the umbrella of Privacy-Preserving Federated Learning (PPFL), is being actively explored [19]. PPFL has thus become a compelling paradigm for enhancing both privacy and security in SHSs [7,20]. Blockchain [21,22,23] is another promising option for enhancing privacy and security in the FL context. Incorporating blockchain into FL strengthens privacy and trust throughout the process, especially in sensitive operations involving health data [24]. Specifically, blockchain augments FL by providing an immutable, transparent ledger for gradient uploads, aggregation processes, and model storage, thereby enhancing trust and privacy assurance throughout the FL lifecycle.
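The tamper-evidence that a blockchain ledger brings to FL rests on hash chaining: each entry commits to the hash of the previous one, so altering any recorded gradient upload or model record breaks every later link. The sketch below is a minimal, single-node illustration using Python's standard `hashlib`; the record fields (`round`, `client`, `update_digest`) and the `Ledger` class are hypothetical, and it deliberately omits consensus, digital signatures, and networking, all of which a real blockchain requires.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Ledger:
    """Append-only, hash-chained log of FL events (single node, no consensus)."""
    blocks: list = field(default_factory=list)

    @staticmethod
    def _hash(payload: dict, prev_hash: str) -> str:
        # Canonical JSON so identical content always produces the same hash.
        data = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(data.encode("utf-8")).hexdigest()

    def append(self, payload: dict) -> str:
        prev_hash = self.blocks[-1]["hash"] if self.blocks else "0" * 64
        block_hash = self._hash(payload, prev_hash)
        self.blocks.append({"payload": payload, "prev": prev_hash, "hash": block_hash})
        return block_hash

    def verify(self) -> bool:
        """Recompute every link; an edited payload or hash breaks the chain."""
        prev_hash = "0" * 64
        for block in self.blocks:
            if block["prev"] != prev_hash:
                return False
            if self._hash(block["payload"], prev_hash) != block["hash"]:
                return False
            prev_hash = block["hash"]
        return True


def digest(update: list) -> str:
    """Record only a digest of a model update, never the update itself."""
    return hashlib.sha256(json.dumps(update).encode("utf-8")).hexdigest()


ledger = Ledger()
ledger.append({"round": 1, "client": "hospital-A", "update_digest": digest([0.1, -0.2])})
ledger.append({"round": 1, "client": "hospital-B", "update_digest": digest([0.3, 0.0])})
print(ledger.verify())  # True

# Tampering with an earlier record is detected.
ledger.blocks[0]["payload"]["client"] = "attacker"
print(ledger.verify())  # False
```

Storing digests rather than raw updates keeps the ledger auditable without placing model parameters, which can leak information, on a shared record.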

Although the potential advantages of integrating FL with PETs and blockchain for privacy protection in SHSs are considerable, significant challenges remain. On the one hand, privacy threats in SHSs are diverse, and new threats are emerging rapidly. For instance, FL-enabled SHSs face numerous privacy threats arising from both technical vulnerabilities and malicious users. On the other hand, seamlessly integrating these technologies into SHSs for privacy protection involves considerable technical intricacy. In particular, it is crucial to identify the best combination of these technologies to mitigate the effects of threats on user privacy, considering factors such as compatibility, efficiency, and communication overhead. It is therefore important to explore how PETs and blockchain mechanisms can empower FL for privacy protection, particularly in the burgeoning landscape of smart healthcare. Addressing these challenges requires not only technological innovation but also a reevaluation of regulatory frameworks to facilitate the effective adoption of these advanced solutions [25].

Motivated by the promising features and recent developments of FL, researchers have conducted many studies surveying the potential of integrating PETs and blockchain to enhance privacy protection in FL-based systems, including FL-based smart healthcare. For example, the work in [26] reviewed the applications of FL in healthcare, highlighting its effectiveness across several domains such as mammogram analysis, COVID-19 classification, and wearable health monitoring. However, it does not explore how integrating PETs and blockchain with FL could enhance its privacy capacity in SHSs. Similarly, the works in [27,28] explored the potential of combining FL with PETs for privacy enhancement, but they do not address applications in SHSs. In addition, ref. [29] comprehensively surveyed the application of FL in SHSs, highlighting its ability to enhance privacy in remote health monitoring, medical imaging, COVID-19 detection, and electronic health records. However, it does not emphasize the potential of integrating FL with PETs and blockchain, which could significantly enhance data privacy and security in SHSs. Ref. [12] provides a systematic review of privacy-preserving methods that integrate blockchain and FL in telemedicine, highlighting their potential to enhance data security and trustworthiness in remote healthcare systems. However, the study does not adequately explore the implications of combining PETs with these frameworks, which could further strengthen privacy measures and address existing vulnerabilities in SHSs. A summary of the comparison with other surveys is given in Table 1.

Given the aforementioned limitations of existing surveys and the rapid development of FL, we find it necessary to conduct a comprehensive survey that reviews the most recent findings, identifies gaps, and suggests future research directions related to PPFL in SHSs. This survey therefore uniquely examines the technical integration of PETs and blockchain in FL frameworks, providing a comprehensive analysis of how these technologies can collectively enhance privacy and security in SHSs. We first review the privacy concerns in SHSs and propose a classification of privacy threats. Then, we conduct an extensive review of PPFL and its integration into SHSs. Finally, we examine the potential of integrating blockchain and PETs with FL to enhance privacy in SHSs. We focus on specific smart healthcare applications such as health data management, remote health management, medical imaging, and health finance management.

Through a systematic exploration of current studies and emerging technologies, this work aims to spur further research into robust, efficient, and scalable privacy-preserving frameworks, contributing to the evolution of smart healthcare into a domain where cutting-edge technology and stringent privacy protection coexist harmoniously.

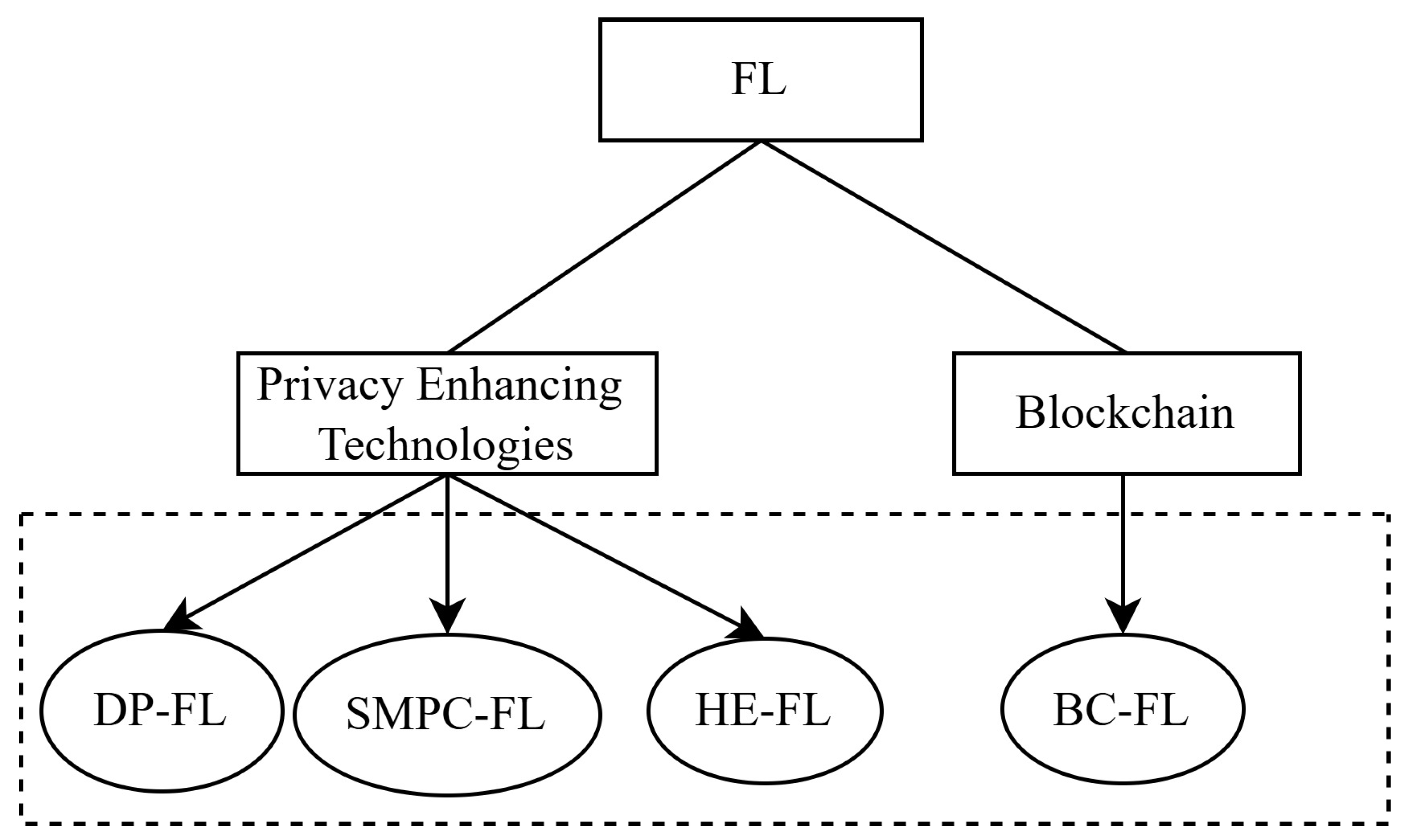

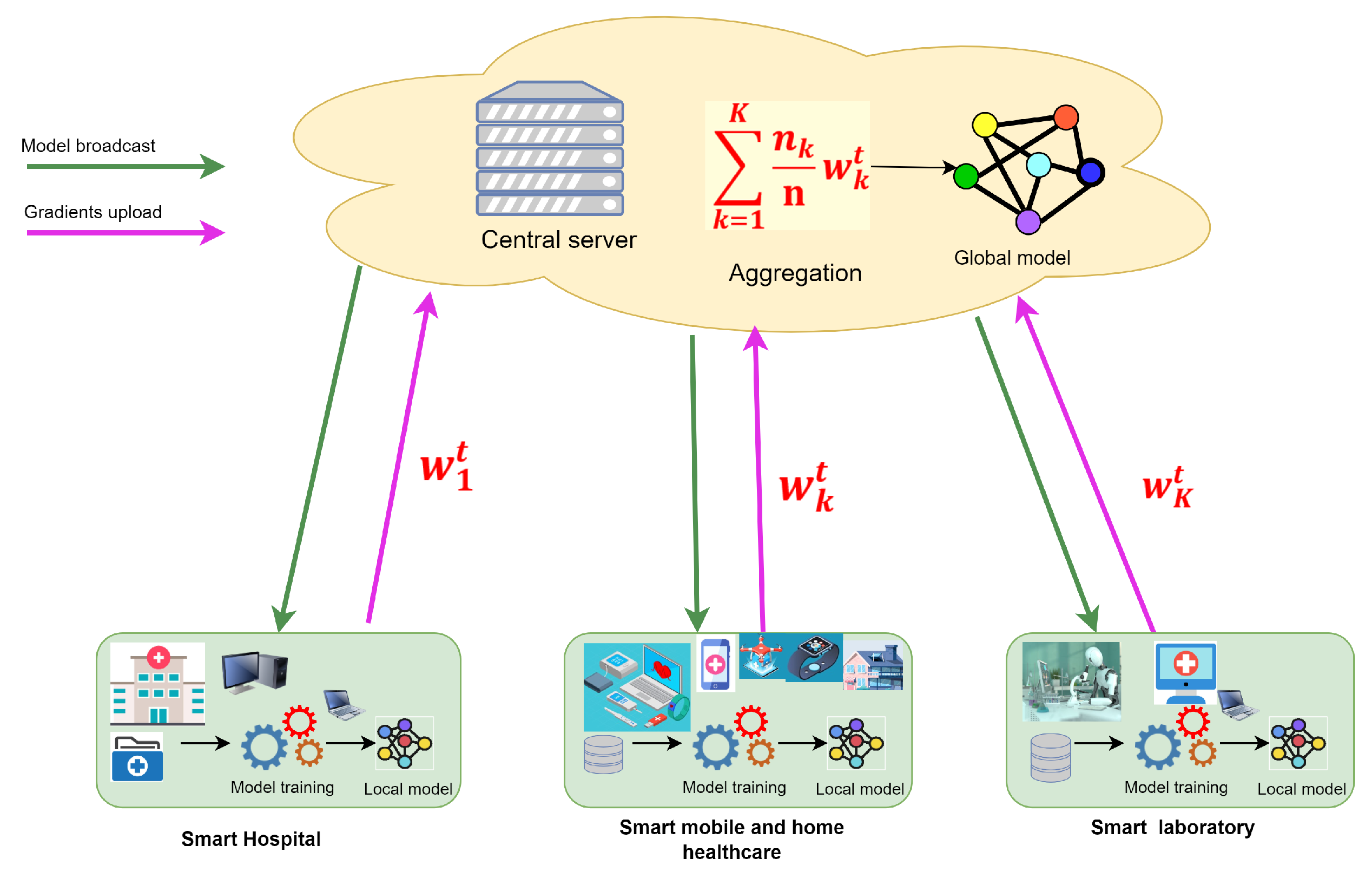

This survey is built on two main dimensions, as shown in Figure 1: the augmentation of FL with PETs for enhancing privacy protection, and its integration with blockchain to further secure and decentralize healthcare data processes.

This work’s contributions are fourfold:

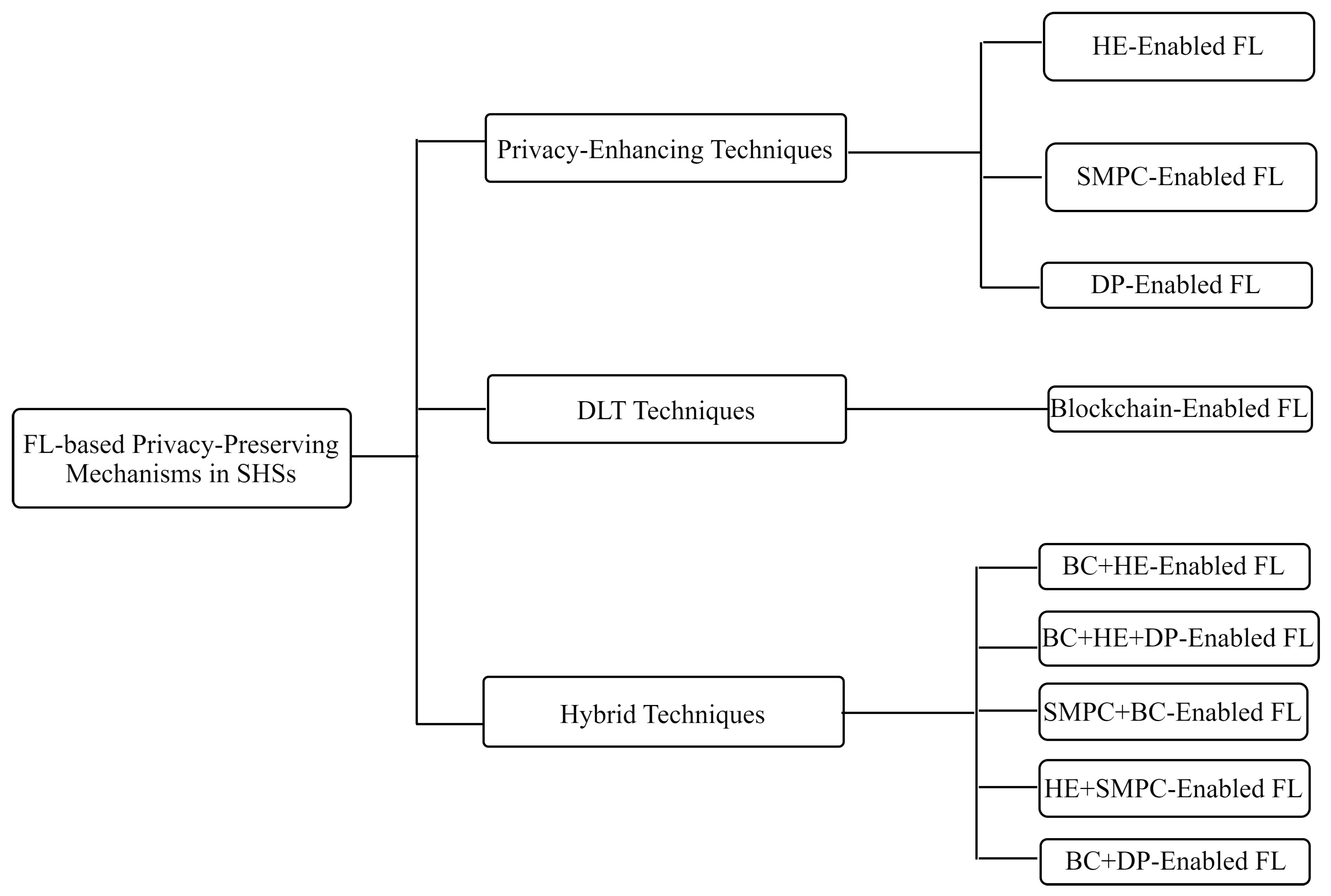

We investigate the main privacy threats in current SHSs, elaborate on the main FL-based privacy mechanisms in SHSs, and propose a taxonomy of the FL-based privacy mechanisms developed in recent studies.

This survey meticulously explores the intersection of FL with DP, HE, and SMPC within SHSs. It outlines key contributions and performs a comparative analysis that focuses on privacy, scalability, computational efficiency, and the pros and cons of each technology, aiming to build robust and reliable SHSs.

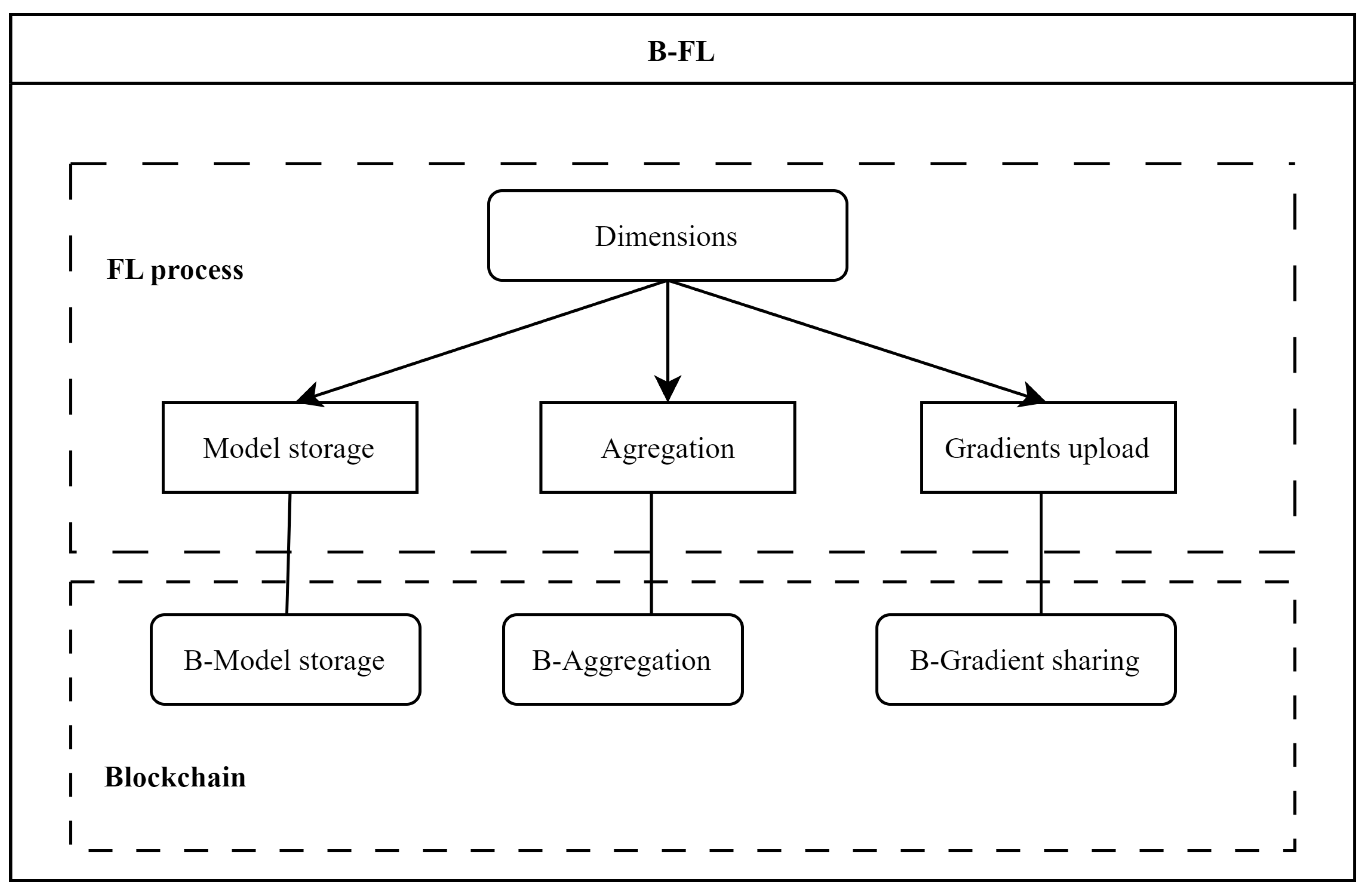

The survey analyzes the cutting-edge developments in integrating blockchain technology with PPFL within SHSs. We use three key technical dimensions: model storage, gradient upload, and aggregation, to succinctly summarize contemporary advancements and organize the discussion. In addition, we compare recent significant studies on the integration of blockchain technology in PPFL, evaluating each based on its respective advantages and limitations.

Finally, we identify current deficiencies of PPFL and propose potential avenues for future research.

To ensure a comprehensive understanding of the intersection between FL, PETs, and blockchain in SHSs, our survey is based on literature published between 2018 and 2024. We consulted databases such as ACM Digital Library, IEEE Xplore, Scopus, and PubMed using keywords related to our core topics. Studies were included based on criteria such as relevance to the SHS context, focus on privacy and security aspects, and use of FL, PETs, or blockchain. Data extraction focused on the methods used, findings on the effectiveness of technology implementations, and identified challenges. This approach allowed us to systematically analyze trends and gaps in the current research landscape.

Table 2 presents the key acronyms used in this survey.

The remainder of this survey is organized as follows. Section 2 discusses privacy challenges in SHSs, followed by an overview of FL and its integration into SHSs in Section 3. Section 4 then examines the major privacy-enhancing technologies integrated with FL to strengthen privacy preservation in smart healthcare. Section 5 studies existing works on the integration of blockchain in PPFL along three technical dimensions. Discussions and future work are given in Section 6, and Section 7 concludes this survey.

2. Privacy Concerns in Smart Healthcare

In this section, we review the key concepts of privacy and address the privacy threats in SHSs. We start by clearly defining the concept of privacy and classifying privacy in SHSs. We then discuss the privacy threats in SHSs and provide a relevant classification.

Modern technologies have profoundly transformed healthcare systems, enhancing their efficiency, improving patient care, and accelerating medical research. Wearable technology, electronic health records (EHRs) [3], and IoT devices are among the technologies on which smart healthcare is built. These technologies generate a wealth of data that can be used for personalized treatment recommendations, illness diagnosis, remote health monitoring, elderly care, and predictive analytics [30,31]. These innovations, underpinned by IoT sensor technology [21,32], play a pivotal role in enabling smart hospital services and remote health monitoring, fundamentally redefining healthcare delivery [33,34,35].

These advancements underpin the smart healthcare model, a patient-centric, interconnected ecosystem bolstered by AI and IoT for optimized health management and decision-making [36,37,38]. This ecosystem establishes a cohesive network for the secure transmission of health data, enhancing the integration of various healthcare platforms and community resources [39,40]. With the integration of AI and IoT in SHSs, vast amounts of data are produced, analyzed, stored, and shared [41], which makes protecting users’ privacy essential throughout this process.

Privacy, a multifaceted concept, has been defined in increasingly nuanced ways over time, reflecting the dynamic interplay between societal evolution and technological advancement. One of the earliest definitions, originating from a seminal law review article, characterizes privacy as the fundamental right to “be let alone” [42]. This foundational definition has been echoed and elaborated upon in various domains, notably in the Information Technology (IT) sector, where privacy is construed as the capacity to protect sensitive information from unauthorized access and use [43]. The National Institute of Standards and Technology (NIST) further refines this conception, defining privacy as the assurance of protecting the confidentiality of, and controlling access to, information pertaining to individuals or organizations [44].

Moreover, privacy is increasingly perceived as the prerogative of individuals to manage the dissemination of their personal data [45,46]. A recent study by Singh et al. [47] posits privacy as the fundamental mechanism for safeguarding sensitive information. In the AI and FL field, ref. [48] characterizes privacy as the structured preservation of individualized data entities. Across these varied conceptualizations, a common thread emerges: privacy is of paramount importance in the Information and Communication Technology (ICT) landscape and requires specialized attention.

Notably, emerging technologies including blockchain and AI are leveraged for protecting privacy, employing diverse techniques to enhance the efficacy of safeguarding sensitive information. These advancements underscore the ongoing efforts to fortify privacy frameworks amidst the evolving technological landscape.

2.1. Types of Privacy

In [

49], Ding et al. proposed five types of privacy in SHSs, including identity, location, query, owner, and footprint privacy. Zhu et al. [

21] also described five main types of privacy associated with IoT systems, including identity, location, trajectory, query, and report privacy. In the above works, location, query, and identity privacy are commonly discussed by researchers. Moreover, Chen et al. [

50] classified privacy in three main categories, namely identity-based privacy, data-based privacy, as well as location privacy. While identity privacy refers to protecting personal identifiers, location privacy consists of protecting the user’s location data such as geographic position and its parameters [

21] from disclosure. As well, data privacy entails the protection of the user’s personal data. Most recently, Wang et al. [

51] proposed two main types of privacy in SHSs, identity privacy and data privacy. Data privacy consists of a user or patient’s physiological information, and identity privacy involves information about the identification of the participating clients. In the present study, we explore two distinct categories of privacy as shown in

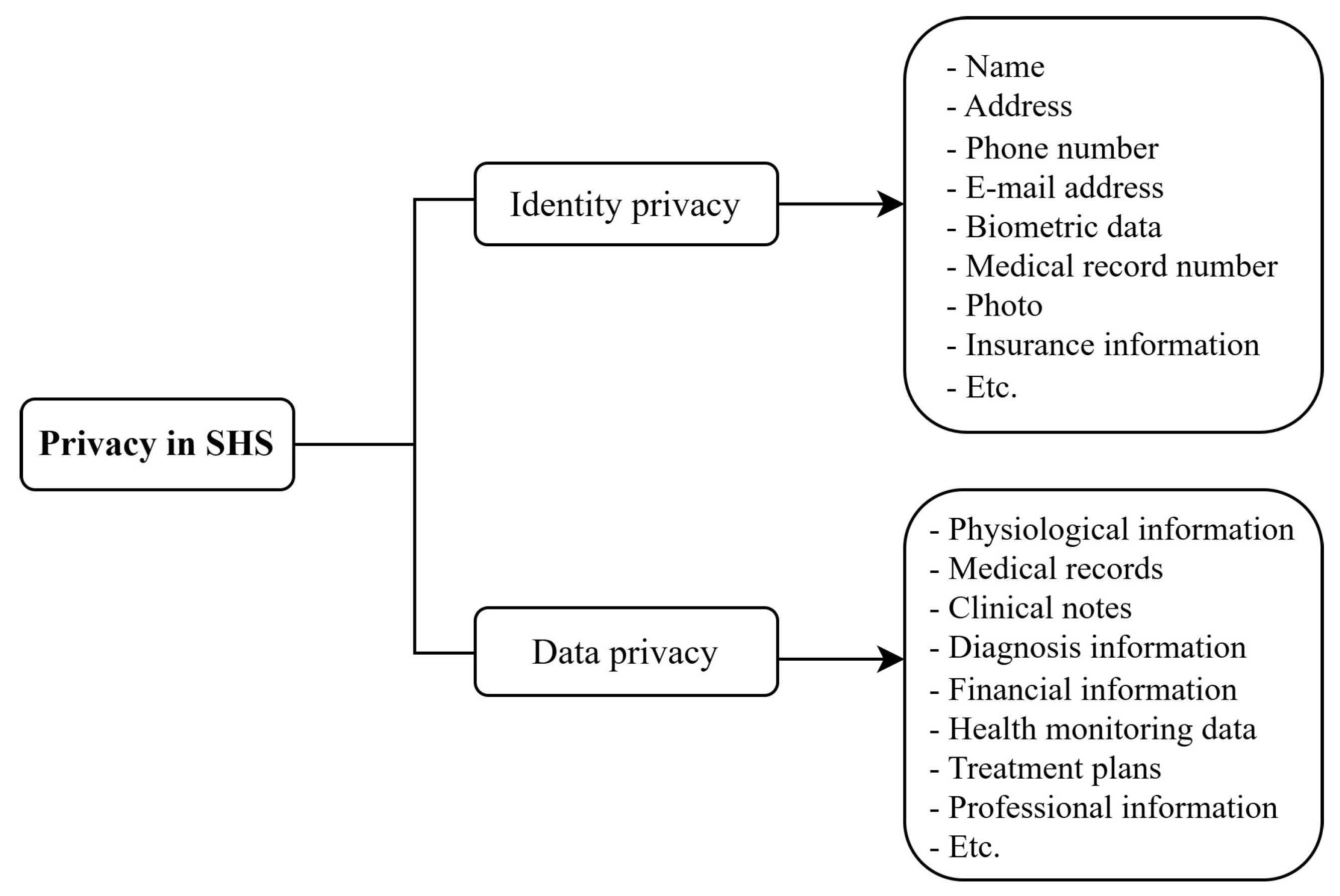

Figure 2, namely identity privacy and data privacy.

Identity privacy refers to safeguarding users’ identifying information, that is, data elements that allow an entity to be unequivocally identified, including but not limited to name, date of birth, address, biometric details, and photographs. To mitigate identity privacy concerns in smart healthcare environments, Ali et al. [18] advocated applying anonymization techniques to users’ personally identifiable information (PII). Furthermore, they proposed three distinct strategies for strengthening user access to the system: login-based authentication, the use of pseudonyms, and anonymity protocols. The use of pseudonyms to address identity privacy concerns is also commonly advocated in [21,49,50].
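Pseudonymization of PII is often implemented with a keyed hash: the same patient always maps to the same pseudonym, so records remain linkable for analysis, while the mapping cannot be recomputed without the secret key. The sketch below uses HMAC-SHA256 from Python's standard library; the field names, the in-code key, and the 16-character truncation are hypothetical choices, and in practice the key would be held in a key-management service. Pseudonymized data generally still count as personal data under the GDPR, because whoever holds the key can re-identify patients.

```python
import hashlib
import hmac

# Hypothetical secret; in a real SHS this would come from a key-management service.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

# Which fields count as direct identifiers is an assumption for this example.
IDENTIFYING_FIELDS = ("name", "date_of_birth", "address")


def pseudonym(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Deterministic keyed pseudonym: same input and key give the same output."""
    mac = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256)
    return mac.hexdigest()[:16]  # truncated for readability


def pseudonymize(record: dict) -> dict:
    """Drop direct identifiers and add a stable pseudonymous patient ID."""
    linking_key = "|".join(str(record.get(f, "")) for f in IDENTIFYING_FIELDS)
    cleaned = {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}
    cleaned["patient_pid"] = pseudonym(linking_key)
    return cleaned


record = {"name": "Jane Doe", "date_of_birth": "1980-01-01",
          "address": "1 Main St", "heart_rate": 72}
print(pseudonymize(record))  # identifiers removed, 'patient_pid' added
```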

In contrast, data privacy refers to the protection of physiological data and other personal information, such as medical records, clinical notes, diagnostic data, location data, professional details, and financial details. Various strategies, most prominently data encryption, have been deployed to uphold data privacy [50]. Safeguarding data privacy is of paramount importance in the healthcare sector, particularly in the context of FL and AI applications [52]. As underscored in [52], the importance of data privacy in healthcare is multifaceted, encompassing patient trust and confidence, ethical obligations, regulatory compliance, and the deterrence of illicit data access.

2.2. Privacy Threats in Smart Healthcare

Privacy is a paramount concern in SHSs owing to the highly sensitive nature of the data they manage. While some privacy concerns are shared with IoT systems in general, others are specific to smart healthcare. Over the past decade, numerous scholars have examined privacy preservation in SHSs, yielding diverse insights. Notably, Stojkov et al. [53] proposed a taxonomy of privacy concerns in the healthcare domain, structured around the three-layer architecture of IoT. The concerns they cataloged are summarized in Table 3.

Ranjith et al. [54] carried out a comprehensive examination of the privacy challenges inherent in SHSs, identifying a spectrum of security and privacy obstacles. These include RFID security vulnerabilities, Distributed Denial of Service (DDoS) attacks, man-in-the-middle attacks, intra-device authentication complexities, and difficulties with secure communication protocols and key management. Additionally, Ali et al. [18] outlined a series of potential privacy concerns and challenges specific to SHSs that leverage the IoMT. These concerns revolve principally around the integrity of end-user identities and their associated information. Data leakage may occur during communication between healthcare practitioners and IoMT devices, or between healthcare providers and patients’ personal devices. Prominent among the identified threats are eavesdropping attacks, dissemination of falsified data to healthcare providers, and data flow analysis vulnerabilities [18].

The integration of advanced technologies and connectivity to improve medical services in SHSs also raises significant privacy concerns due to the sensitivity of the health-related data they handle. In this survey, as shown in Figure 3, we classify privacy threats in SHSs into five key types: data breaches and unauthorized access, insider threats and social engineering, technical vulnerabilities, user privacy threats, and regulatory compliance and data misuse.

Data breaches and unauthorized access: In the SHS context, this category covers incidents in which sensitive medical information is exposed to or accessed by unauthorized individuals. The consequences are severe: such incidents compromise patient privacy, may lead to identity theft, financial fraud, and violations of patient confidentiality, and can erode trust in healthcare providers. They can occur in various ways, including hacking, phishing, exploitation of software vulnerabilities, cyberattacks, and insider threats [55]. These threats can expose patient identities, medical records, and other confidential data to malicious actors through various medical devices, such as invasive, non-invasive, and active therapeutic devices, as well as sensors (physiological, biological, and environmental) [56]. Despite sophisticated security measures, the prevalence of data breaches in healthcare facilities highlights the ongoing challenges in safeguarding sensitive information. To mitigate this type of threat, it is critical to implement strong access controls, encrypt data at rest and in transit, and perform frequent security audits.

Insider threats and social engineering: Insider threats [57] in healthcare refer to risks posed by individuals within a healthcare organization, such as employees, who have authorized access to the SHS and its data. Insiders can include current and former employees, business partners, and consultants [58]. They may intentionally or unintentionally compromise security, for example by accessing patient records without permission or by contributing to data breaches. According to [57], several human factors, such as awareness, selfishness, devotion, access, leadership, and caring, are associated with insider threats. Social engineering threats [59], on the other hand, involve manipulative techniques used by cybercriminals to trick users into revealing important personal information such as passwords and other sensitive data. This can be achieved through methods such as phishing emails, in which attackers impersonate trustworthy sources to lure victims into clicking malicious links or downloading harmful files. Both insider threats and social engineering attacks are significant cybersecurity concerns in the healthcare sector, requiring robust security measures and employee awareness. Implementing strict access controls and monitoring, conducting background checks, and training employees to recognize and resist social engineering tactics are crucial.

Technical vulnerabilities: SHSs rely on various devices and software to provide healthcare services [56]. Technical vulnerabilities are weaknesses in the software, hardware, or network components of SHSs that can be exploited to gain unauthorized access or disrupt healthcare services [60]. They may arise from unpatched software, outdated systems, or inadequately configured networks [57], and can lead to data corruption, system downtime, or unauthorized data access, affecting patient care and data integrity. Mitigating these threats requires a robust system architecture, regular updates and patches, and comprehensive vulnerability assessments.

User privacy threats: This category concerns the transparency of data practices and users’ autonomy over their data. Concerns often arise about how data are collected, used, shared, and stored within SHSs. Inadequate handling of these concerns may lead patients to distrust healthcare providers, make them reluctant to share data, and result in non-compliance with privacy regulations. Implementing clear privacy policies, ensuring informed consent, and providing patients with easy access to their data and control over its use are crucial steps.

Regulatory compliance and data misuse: Adherence to regulatory and legal frameworks is essential for protecting privacy in SHSs. This category covers non-compliance and the misuse of data for purposes other than those the patient consented to. Regulatory violations may result in legal penalties, loss of licenses, and damage to an organization’s reputation, while data misuse can infringe on patient rights and erode trust. Healthcare providers must ensure that all SHS activities comply with applicable laws such as HIPAA in the US, GDPR in Europe, and other local data protection regulations [8,9]. Regular compliance audits and ethical reviews of data usage practices are also recommended.

4. Federated Learning Meets Privacy-Enhancing Technologies

In this section, we explore privacy-enhancing technologies (PETs) that enhance FL for privacy protection. The discussed combinations include Differential Privacy-enabled FL, Secure Multi-Party Computation-enabled FL, and Homomorphic Encryption-enabled FL. Each subsection elaborates on the mechanisms, applications, and limitations of these PETs, with a focus on their relevance to smart healthcare data protection. We conclude with a comparison between the above PETs.

4.1. Differential Privacy-Enabled Federated Learning

DP [100] is a technique that adds calibrated noise to data or computation outputs to mask individual contributions. It aims to provide robust guarantees about the privacy of individuals whose data are used for analysis or computation [101]. Specifically, given two adjacent datasets $D$ and $D'$ that differ in only one data point, applying DP to perturb the original values makes the outputs computed on these datasets indistinguishable, up to a bound controlled by the privacy budget. Formally, DP can be defined as follows.

Definition 1 (ϵ-Differential Privacy [100]).

A randomized mechanism $M$ satisfies ϵ-Differential Privacy (ϵ-DP) if, for any pair of neighboring datasets $D$ and $D'$ and for any set of possible outputs $S \subseteq \mathrm{Range}(M)$,

$$\Pr[M(D) \in S] \le e^{\epsilon} \, \Pr[M(D') \in S],$$

where the parameter ϵ denotes the privacy budget, which quantifies the maximum possible privacy loss; smaller values of ϵ give stronger privacy.

DP can be classified into two main types: Global Differential Privacy (GDP) and Local Differential Privacy (LDP) [28]. GDP adds noise centrally, at a trusted aggregator, before results are shared, whereas LDP perturbs data locally, allowing each client to protect its information independently without trusting the aggregator.

Privacy preservation using DP in the FL context has been the focus of several research works. Abadi et al. [102] proposed a way of combining DP with deep learning training and reported important results in terms of both accuracy and privacy. Since then, various approaches tailored to the FL context have been proposed. In Table 4, we compare DP-based PPFL methods based on key metrics such as privacy level, key technologies, datasets, accuracy, and limitations.

Zheng et al. [83] proposed an approach to enhance privacy in FL that injects local DP noise into the model updates prior to transmission. They further indicated that LDP benefits primarily from a large user population and requires fewer CPU and battery resources on portable devices while ensuring a robust level of privacy protection [83]. Although the proposed solution yielded valuable insights, the authors called for further in-depth studies and experiments to improve the accuracy of the scheme.

Li et al. [84] proposed ADDETECTOR, a privacy-preserving smart healthcare scheme designed for the early detection of Alzheimer’s disease (AD) in an easy-to-use and cost-effective manner. The system addresses the challenges of remote AD detection with a solution that uses IoT appliances and security protocols to ensure privacy. By employing FL and DP mechanisms, ADDETECTOR achieves high accuracy and low time overhead in AD detection trials, demonstrating its effectiveness and efficiency. However, the ability of the approach to withstand potential security threats and privacy breaches in real-world scenarios remains a critical open challenge.
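To make Definition 1 and client-side perturbation in the spirit of Zheng et al. [83] concrete, the sketch below clips a client's model update to an L1-norm bound C and adds Laplace noise with scale 2C/ϵ to every coordinate before transmission. Because any two clipped updates differ by at most 2C in L1 norm, this is an instance of the Laplace mechanism and satisfies ϵ-LDP for a single release of the update. The clipping bound, the function names, and the choice of the Laplace mechanism are illustrative assumptions, not the exact mechanism of [83]; only the Python standard library is used.

```python
import random


def clip_l1(update, bound):
    """Scale the update so that its L1 norm is at most `bound`."""
    norm = sum(abs(x) for x in update)
    if norm <= bound:
        return list(update)
    return [x * bound / norm for x in update]


def laplace(scale, rng):
    """Laplace(0, scale) sample: difference of two Exponential(mean=scale) draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def ldp_perturb(update, epsilon, bound, rng=None):
    """epsilon-LDP release of one model update via the Laplace mechanism.

    Any two clipped updates differ by at most 2 * bound in L1 norm, so the
    per-coordinate noise scale is sensitivity / epsilon = 2 * bound / epsilon.
    """
    rng = rng or random.Random()
    clipped = clip_l1(update, bound)
    scale = 2.0 * bound / epsilon
    return [x + laplace(scale, rng) for x in clipped]


# A client perturbs its update locally; only the noisy version leaves the device.
update = [0.8, -0.5, 0.3]
noisy = ldp_perturb(update, epsilon=1.0, bound=1.0, rng=random.Random(0))
print(noisy)
```

A smaller ϵ yields a larger noise scale and stronger privacy, which is the accuracy cost that motivates the call for further accuracy improvements in [83].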

Yang et al. [103] proposed another approach, named PLU-FedOA, that optimizes FL with personalized local DP in mixed privacy-preserving settings. Their algorithm consists of two components: PLU, which lets clients transmit local updates under DP with an individually chosen privacy degree, and FedOA, which lets the server aggregate local parameters with optimized weights in mixed privacy-preserving scenarios. Compared with existing FL solutions such as FedAvg, GDP-FL, and LDP-FL, PLU-FedOA showed superior performance in a mixed privacy-preserving setting [103]. Although this solution is promising, its effectiveness on other datasets and in real-world applications remains to be demonstrated.
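The optimized weights of FedOA are not specified here, so the sketch below uses a plausible stand-in, inverse-variance weighting, to show why weights should depend on each client's privacy level in a mixed setting. If client k perturbs each coordinate with Laplace noise of scale b_k = Δ/ϵ_k, its noise variance is 2b_k², so weighting clients by the inverse of that variance (equivalently, in proportion to ϵ_k²) gives less influence to clients that chose stronger privacy. This weighting rule and the function names are assumptions for illustration, not the aggregation rule of [103].

```python
def inverse_variance_weights(epsilons, sensitivity):
    """Weight each client by 1 / Var(noise) under the Laplace mechanism.

    Laplace noise with scale b = sensitivity / epsilon has variance 2 * b**2,
    so clients with a larger epsilon (less noise) receive more weight.
    """
    inv_vars = [1.0 / (2.0 * (sensitivity / eps) ** 2) for eps in epsilons]
    total = sum(inv_vars)
    return [v / total for v in inv_vars]


def aggregate(updates, weights):
    """Coordinate-wise weighted average of client updates."""
    dim = len(updates[0])
    return [sum(w * u[i] for u, w in zip(updates, weights)) for i in range(dim)]


# Three clients with individually chosen privacy budgets (mixed setting).
epsilons = [0.5, 1.0, 2.0]
weights = inverse_variance_weights(epsilons, sensitivity=2.0)
print([round(w, 3) for w in weights])  # [0.048, 0.19, 0.762]
```

Inverse-variance weighting minimizes the noise variance of the averaged update when client updates are otherwise comparable; a real scheme would also account for differences in local data.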

Li et al. [104] presented a novel FL scheme called PGC-FedSGD that integrates personalized LDP with the Federated Stochastic Gradient Descent (FedSGD) algorithm. In this solution, clients use PGC-LDP to ensure local DP of their gradients, the server uses FedSGD for aggregation, and users can choose their own privacy levels. Experiments on the MNIST and CIFAR-10 datasets showed good results, and PGC-FedSGD offers a simple architecture and algorithm design with a strong privacy assurance. However, the approach models clients as selecting their privacy levels uniformly within an empirical range, which appears unrealistic because most participants tend to seek the strongest privacy guarantees available.
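A single FedSGD round with client-chosen privacy levels can be sketched as follows: each client computes a gradient on its local data, clips it, perturbs it under its own ϵ, and the server averages the noisy gradients and takes one gradient step. The linear least-squares model, clipping bound, and learning rate are hypothetical, and the Laplace mechanism stands in for PGC-LDP, whose internals are not described here.

```python
import random


def local_gradient(w, data):
    """Gradient of mean squared error for a linear model on one client's data."""
    grad = [0.0] * len(w)
    for x, y in data:
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        for i, xi in enumerate(x):
            grad[i] += 2.0 * err * xi / len(data)
    return grad


def privatize(grad, epsilon, bound, rng):
    """Clip to L1 norm `bound`, then add Laplace(2 * bound / epsilon) noise."""
    norm = sum(abs(g) for g in grad)
    if norm > bound:
        grad = [g * bound / norm for g in grad]
    scale = 2.0 * bound / epsilon
    return [g + rng.expovariate(1 / scale) - rng.expovariate(1 / scale) for g in grad]


def fedsgd_round(w, clients, lr=0.1, bound=1.0, rng=None):
    """One FedSGD step: average the privatized client gradients, then update w."""
    rng = rng or random.Random()
    noisy = [privatize(local_gradient(w, c["data"]), c["epsilon"], bound, rng)
             for c in clients]
    avg = [sum(g[i] for g in noisy) / len(noisy) for i in range(len(w))]
    return [wi - lr * gi for wi, gi in zip(w, avg)]


# Each client picks its own privacy level (personalized LDP).
clients = [
    {"data": [([1.0, 0.0], 1.0)], "epsilon": 0.5},
    {"data": [([0.0, 1.0], 2.0)], "epsilon": 4.0},
]
w = fedsgd_round([0.0, 0.0], clients, rng=random.Random(42))
print(w)
```

The server never sees an unperturbed gradient, but the client with ϵ = 0.5 contributes far noisier information than the one with ϵ = 4.0, which is the heterogeneity that personalized schemes must cope with.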

Unlike the approaches in [103,104], where DP is applied locally on the client side, Weng et al. [101] proposed a scheme in which both the server and the clients apply DP to obtain stronger privacy protection. Their scheme also uses sparse gradients and momentum gradient descent (MGD) to improve accuracy and reduce communication overhead. The main findings include outperforming other DP-based FL schemes in model accuracy and providing a more robust privacy assurance [101]. The scheme is reported to achieve optimal accuracy while reducing communication costs by up to 90%. However, the potential degradation of accuracy due to the injected noise, and the need to choose the noise scale carefully to balance privacy protection against model performance, are limitations of this approach.
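The two communication and accuracy ingredients named above, sparse gradients and momentum gradient descent, can be illustrated in their generic textbook form, which is not specific to [101]: the client transmits only the k largest-magnitude gradient coordinates as index-value pairs, and the receiver rebuilds a dense vector and applies the momentum update v ← βv + g, w ← w − ηv. The values of k, β, and η are hypothetical, and the DP noise of [101] is omitted so that the sparsification step stays in focus.

```python
def top_k_sparsify(grad, k):
    """Keep the k largest-magnitude coordinates as {index: value}."""
    ranked = sorted(range(len(grad)), key=lambda i: abs(grad[i]), reverse=True)
    return {i: grad[i] for i in ranked[:k]}


def densify(sparse, dim):
    """Rebuild a dense vector on the receiving side (missing entries are zero)."""
    dense = [0.0] * dim
    for i, v in sparse.items():
        dense[i] = v
    return dense


def momentum_step(w, v, grad, lr=0.1, beta=0.9):
    """Momentum gradient descent: v <- beta * v + grad; w <- w - lr * v."""
    v = [beta * vi + gi for vi, gi in zip(v, grad)]
    w = [wi - lr * vi for wi, vi in zip(w, v)]
    return w, v


grad = [0.05, -0.9, 0.01, 0.4, -0.02]
sparse = top_k_sparsify(grad, k=2)  # only 2 of 5 values are transmitted
print(sparse)                       # {1: -0.9, 3: 0.4}
w, v = momentum_step([0.0] * 5, [0.0] * 5, densify(sparse, 5))
print(w)
```

The momentum term carries information from earlier rounds forward, which helps offset the coordinates dropped by sparsification.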

Khanna et al. [105] proposed an FL algorithm that implements DP for ML model training on distributed healthcare data. The framework was tested on predicting breast cancer status from gene expression data and achieved accuracy and precision comparable to those of a non-private model, demonstrating its effectiveness. However, the proposal still faces challenges, including privacy concerns when models are trained on data from multiple institutions and hospitals, and the need for an expert user to set the privacy parameter to mitigate potential privacy breaches.

Gu et al. [25] introduced a PPFL framework using DP for artificial IoT systems. Their approach combines two techniques, gradient perturbation and gradient permutation (shuffling), to safeguard both data privacy and client identity throughout the FL process. Gradient perturbation adds exponential noise to the gradient computed on the client side to protect data privacy, while gradient shuffling ensures that the server cannot determine which gradient belongs to which client, preserving client identity [25].
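The identity-protection idea behind gradient shuffling can be illustrated as follows: already-perturbed gradients are stripped of client identifiers and randomly permuted before they reach the server, so the server can still compute the average but cannot link any gradient to its sender. The sketch assumes a trusted shuffler between clients and server, a hypothetical role that [25] may realize differently, and it does not reproduce the exponential-noise perturbation step.

```python
import random


def shuffle_updates(tagged_updates, rng=None):
    """Drop client identifiers and randomly permute the (already noisy) gradients.

    `tagged_updates` is a list of (client_id, gradient) pairs; the output is an
    anonymous list, so a gradient's position no longer reveals its sender.
    """
    rng = rng or random.Random()
    anonymous = [list(grad) for _, grad in tagged_updates]
    rng.shuffle(anonymous)
    return anonymous


def server_average(gradients):
    """The server's aggregate does not depend on the order of the gradients."""
    n = len(gradients)
    return [sum(col) / n for col in zip(*gradients)]


tagged = [("clinic-1", [0.2, -0.1]), ("clinic-2", [0.4, 0.3]), ("clinic-3", [-0.3, 0.1])]
received = shuffle_updates(tagged, rng=random.Random(7))
print(server_average(received))
```

Because averaging is permutation-invariant, shuffling costs no accuracy; it protects identity only, which is why it is paired with perturbation for data privacy.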

Maria et al. [106] introduced an Optimized DP (ODP) approach that safeguards the privacy of individual data points while still allowing useful insights to be extracted. Their scheme is evaluated on the MNIST dataset with the FedAvg aggregator. The main findings include leveraging DP within FL to bolster privacy, experimenting with diverse DP parameters to optimize outcomes, and reporting quantitative results on the accuracy of the trained models alongside their corresponding privacy guarantees. The study also shows that keeping ϵ constant while varying the noise level and δ, the additional parameter of the (ϵ, δ) relaxation of DP that tolerates a small failure probability, leads to stronger privacy protection.
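The interplay between ϵ, δ, and the noise level can be made concrete with the classical Gaussian mechanism, a standard way to obtain (ϵ, δ)-DP; whether [106] uses exactly this calibration is an assumption. For a query with L2 sensitivity Δ₂ and 0 < ϵ < 1, adding Gaussian noise with standard deviation

$$\sigma = \frac{\Delta_2 \sqrt{2 \ln(1.25/\delta)}}{\epsilon}$$

suffices. The sketch below computes σ for a fixed ϵ and several values of δ; the function name and parameter values are illustrative.

```python
import math


def gaussian_sigma(epsilon, delta, l2_sensitivity=1.0):
    """Noise std for the classical Gaussian mechanism; valid for 0 < epsilon < 1."""
    if not 0 < epsilon < 1:
        raise ValueError("this calibration assumes 0 < epsilon < 1")
    if not 0 < delta < 1:
        raise ValueError("delta must lie in (0, 1)")
    return l2_sensitivity * math.sqrt(2 * math.log(1.25 / delta)) / epsilon


# Fixed epsilon, varying delta: a smaller delta requires more noise.
for delta in (1e-3, 1e-5, 1e-7):
    print(f"delta={delta:g}: sigma={gaussian_sigma(0.5, delta):.3f}")
```

This calibration is conservative; tighter accounting methods exist, but it shows directly how, at a fixed ϵ, tightening δ raises the required noise level.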

Nevertheless, noise-based strategies require the noise generation to be carefully tuned to preserve model performance, including accuracy; failing to do so can significantly impair performance.

4.2. SMPC-Enabled Federated Learning

SMPC is an advanced cryptographic method that allows decentralized participants to collaboratively compute an objective function over their private inputs without disclosing or sharing their individual raw data [28]. To enable such confidential computation, SMPC relies on cryptographic protocols such as secret sharing, garbled circuits, and HE.
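Secret sharing, the first building block listed above, can be illustrated with additive secret sharing over a prime field: a value is split into n random shares that sum to it modulo p, so any n − 1 shares reveal nothing about it, yet parties can add their shares locally to obtain shares of a sum. The modulus and function names below are illustrative choices; the `secrets` module from the Python standard library supplies the randomness.

```python
import secrets

P = 2**61 - 1  # a Mersenne prime used as the field modulus (illustrative choice)


def share(value, n):
    """Split an integer into n additive shares that sum to value mod P."""
    shares = [secrets.randbelow(P) for _ in range(n - 1)]
    shares.append((value - sum(shares)) % P)
    return shares


def reconstruct(shares):
    """Only the sum of all shares reveals the secret."""
    return sum(shares) % P


def add_shared(a_shares, b_shares):
    """Each party adds its own two shares locally; no communication is needed."""
    return [(a + b) % P for a, b in zip(a_shares, b_shares)]


a, b = share(42, 3), share(58, 3)
print(reconstruct(add_shared(a, b)))  # 100
```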

While the literature on integrating SMPC with FL for privacy preservation is limited, some authors have explored this area. For instance, Kanagavelu et al. [78] introduced a two-phase mechanism using Multi-Party Computation (MPC) to enhance privacy in FL. Their key finding is the successful integration of MPC into FL model aggregation, enabling companies to collectively train models while preserving privacy. However, the study’s limitations include high communication overhead and scalability issues with MPC-enabled model aggregation, the complexity introduced by the need for a small committee, and a focus on neural network models that limits generalizability to other machine learning models.
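MPC-based model aggregation through a small committee, as in Kanagavelu et al. [78], can be sketched generically: each client secret-shares its fixed-point encoded model update among the committee members, each member sums the shares it receives, and only the members' sums are combined, so no single member ever sees an individual update. The committee size, fixed-point scale, and function names are hypothetical, and the sketch does not reproduce the exact two-phase protocol of [78].

```python
import secrets

P = 2**61 - 1   # field modulus (illustrative)
SCALE = 10**6   # fixed-point scale for real-valued weights (illustrative)


def encode(x):
    return round(x * SCALE) % P


def decode(v):
    # Field elements above P // 2 represent negative numbers.
    return (v - P if v > P // 2 else v) / SCALE


def share_vector(vec, n_members):
    """Additively share each encoded coordinate among n committee members."""
    per_member = [[] for _ in range(n_members)]
    for x in vec:
        parts = [secrets.randbelow(P) for _ in range(n_members - 1)]
        parts.append((encode(x) - sum(parts)) % P)
        for m, part in enumerate(parts):
            per_member[m].append(part)
    return per_member


def secure_average(client_updates, n_members=3):
    dim = len(client_updates[0])
    # Step 1: clients send shares; each member keeps a running sum of its shares.
    member_sums = [[0] * dim for _ in range(n_members)]
    for update in client_updates:
        for m, shares in enumerate(share_vector(update, n_members)):
            member_sums[m] = [(s + x) % P for s, x in zip(member_sums[m], shares)]
    # Step 2: members combine their sums; only the aggregate is revealed.
    totals = [sum(col) % P for col in zip(*member_sums)]
    return [decode(t) / len(client_updates) for t in totals]


updates = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.1]]
print(secure_average(updates))  # approximately [0.1, 0.1]
```

Every client sends one share per committee member per coordinate, which illustrates the communication overhead and scalability limits noted for MPC-enabled aggregation.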

In addition, Tran et al. [79] proposed ComEnc-FL, a PPFL framework that leverages SMPC and parameter encryption to protect privacy while reducing communication and computational costs. It outperforms typical SMPC systems in training time and data transfer, performs on par with the basic FL framework, and outperforms DP-based frameworks. However, ComEnc-FL may still be susceptible to collusion between clients and the server, potentially compromising model confidentiality. Overall, SMPC schemes prevent curious or untrustworthy aggregators from inspecting private models without impacting accuracy [79]. However, challenges such as communication overhead, scalability, and susceptibility to collusion underscore the need for further research to overcome these limitations and ensure robust privacy-preserving mechanisms within FL frameworks.

4.3. Homomorphic Encryption-Enabled Federated Learning

HE is a cryptographic mechanism that permits arithmetic operations on encrypted data without first decrypting it [11]. Its defining property is that decrypting the result of an operation performed on ciphertexts yields the same output as performing that operation directly on the plaintexts. This property allows complex mathematical operations to be executed on encrypted data while keeping the raw data secure.
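Stated formally, let (pk, sk) be a key pair, ⊙ the operation performed on ciphertexts, and ∘ the corresponding operation on plaintexts. The homomorphic property then reads:

```latex
\mathrm{Dec}_{sk}\big(\mathrm{Enc}_{pk}(m_1) \odot \mathrm{Enc}_{pk}(m_2)\big) = m_1 \circ m_2
```

For example, in the Paillier cryptosystem ⊙ is multiplication modulo n² and ∘ is addition modulo n. In textbook (unpadded) RSA, both ⊙ and ∘ are multiplication modulo n.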

HE encompasses a variety of encryption schemes that support different computations on encrypted data. These schemes are commonly divided into partially homomorphic, somewhat homomorphic, and fully homomorphic encryption [11,107,108].

Partially HE (PHE) supports only a single type of operation, such as addition or multiplication. PHE incurs lower computational costs than other forms of HE, but its applicability is limited [109].
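As a concrete PHE example, the Paillier cryptosystem is additively homomorphic. Multiplying two ciphertexts yields an encryption of the sum of their plaintexts, which is the property many HE-based FL aggregation schemes rely on. The Python sketch below (Python 3.9+ for `math.lcm`) uses deliberately tiny primes and the common simplification g = n + 1. It is insecure and only illustrates the arithmetic; real deployments use primes of well over a thousand bits.

```python
import math
import secrets


def keygen(p: int, q: int):
    """Paillier keys from two distinct primes, using the common choice g = n + 1."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # with g = n + 1, L(g^lam mod n^2) = lam mod n
    return (n, n + 1), (lam, mu, n)


def encrypt(pk, m: int) -> int:
    """Enc(m) = g^m * r^n mod n^2 with fresh randomness r coprime to n."""
    n, g = pk
    n2 = n * n
    r = secrets.randbelow(n - 1) + 1
    while math.gcd(r, n) != 1:
        r = secrets.randbelow(n - 1) + 1
    return pow(g, m, n2) * pow(r, n, n2) % n2


def decrypt(sk, c: int) -> int:
    """Dec(c) = L(c^lam mod n^2) * mu mod n, where L(x) = (x - 1) // n."""
    lam, mu, n = sk
    x = pow(c, lam, n * n)
    return (x - 1) // n * mu % n


def add_encrypted(pk, c1: int, c2: int) -> int:
    """Multiplying ciphertexts mod n^2 adds the underlying plaintexts mod n."""
    n, _ = pk
    return c1 * c2 % (n * n)
```

For instance, with `pk, sk = keygen(293, 433)`, decrypting `add_encrypted(pk, encrypt(pk, 20), encrypt(pk, 22))` gives 42. The party that combines the ciphertexts never sees 20 or 22. Because only addition is supported, however, the scheme cannot evaluate arbitrary functions on encrypted data.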

Somewhat HE (SWHE) supports both addition and multiplication, but only up to a limited number of operations [11,110]. SWHE is more computationally costly than PHE while providing greater functionality [109].
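A common reason SWHE limits the number of operations is noise growth in lattice-based schemes. Every ciphertext carries a small error term, homomorphic operations enlarge it, and decryption fails once it crosses a threshold. The Python toy below demonstrates this for additions alone. It is a symmetric LWE-style bit encryption with assumed parameters (q = 4096, dimension 16) and a fixed per-ciphertext noise of 100, chosen to make the worst case deterministic. In real schemes the noise is random, and multiplications grow it far faster. The code is insecure and purely illustrative.

```python
import random

Q = 2**12       # ciphertext modulus (toy size)
DIM = 16        # secret dimension (toy size)
HALF = Q // 2   # bit 1 is encoded as Q/2


def dot(a, s):
    return sum(x * y for x, y in zip(a, s)) % Q


def centered(v):
    """Map v mod Q into the interval (-Q/2, Q/2]."""
    v %= Q
    return v - Q if v > Q // 2 else v


def encrypt(bit, s, noise, rng):
    """Ciphertext (a, <a, s> + noise + bit * Q/2 mod Q) with uniformly random a."""
    a = [rng.randrange(Q) for _ in range(DIM)]
    return a, (dot(a, s) + noise + bit * HALF) % Q


def decrypt(ct, s):
    """Correct only while the accumulated noise stays strictly below Q/4 in absolute value."""
    a, b = ct
    v = centered(b - dot(a, s))
    return 1 if abs(v) > Q // 4 else 0


def add(ct1, ct2):
    """Homomorphic XOR: adds the bits mod 2, and also adds the two noise terms."""
    (a1, b1), (a2, b2) = ct1, ct2
    return [(x + y) % Q for x, y in zip(a1, a2)], (b1 + b2) % Q


def accumulate_ones(k, noise=100, seed=1):
    """Add k fresh encryptions of 1; return (expected bit, decrypted bit)."""
    rng = random.Random(seed)
    s = [rng.randrange(Q) for _ in range(DIM)]
    acc = encrypt(1, s, noise, rng)
    for _ in range(k - 1):
        acc = add(acc, encrypt(1, s, noise, rng))
    return k % 2, decrypt(acc, s)
```

With these parameters the accumulated noise is 100·k after k additions. Decryption is therefore guaranteed correct only while 100·k < q/4 = 1024, that is, for k ≤ 10, and at k = 11 `accumulate_ones` already returns the wrong bit.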

Fully HE (FHE) allows an unlimited number of additions and multiplications on ciphertexts [68,110]. It therefore supports unrestricted computations on encrypted data, including conditional operations, branching, and iterative processes [110,111,112].

This ability to compute on data while keeping it private makes HE an invaluable tool for scientific research and applications. In FL, encryption ensures that neither raw data nor plaintext model parameters are exposed when clients and the server exchange information during training. This makes it very difficult for a third party to access users' sensitive information. Several studies have proposed HE-based privacy-preserving solutions in the FL context.

Table 5 compares HE-based PPFL methods based on key metrics such as privacy level, key technologies, datasets, accuracy, and limitations.

For instance, Park et al. [75] introduced a system that supports homomorphic operations under different encryption keys. Its system model comprises a cloud server and multiple clients for secure model aggregation and averaging. They presented a secure aggregation algorithm in which the server updates the global model parameters using noise-masked local model parameters, and the noise can be removed only through the collaboration of participants. Their model involves a trusted key generation center, a cloud server, a computation provider, and multiple clients, and it ensures data privacy through encryption and decryption processes [75]. The scheme in [75] addresses the need for additional operations to enhance data privacy in FL-based frameworks. Its limitations concern the trade-off between computational overhead and security level, which becomes more pronounced as key sizes increase.
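One common way to realize masks that only the participants can jointly remove is pairwise additive masking, familiar from secure-aggregation protocols. The Python sketch below illustrates that general idea under stated assumptions; it is not the specific construction of [75]. It assumes that updates are already quantized to integers modulo 2^32. Each pair of clients shares a seed, supplied here by a hypothetical `seed_for_pair` function that stands in for key agreement, and `random.Random` stands in for a cryptographic PRG. One client of each pair adds the mask and the other subtracts it, so the masks cancel in the server's sum.

```python
import random

MODULUS = 2**32  # assumed ring for quantized updates


def pairwise_masks(client_ids, dim, seed_for_pair):
    """For each pair i < j, client i adds a shared mask vector and client j subtracts it."""
    masks = {c: [0] * dim for c in client_ids}
    for i in client_ids:
        for j in client_ids:
            if i < j:
                # Hypothetical seed source; random.Random is NOT a cryptographically secure PRG.
                rng = random.Random(seed_for_pair(i, j))
                mask = [rng.randrange(MODULUS) for _ in range(dim)]
                masks[i] = [(x + m) % MODULUS for x, m in zip(masks[i], mask)]
                masks[j] = [(x - m) % MODULUS for x, m in zip(masks[j], mask)]
    return masks


def masked_upload(update, mask):
    """What a client sends: its quantized update hidden under its combined mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def server_aggregate(uploads):
    """Summing all masked uploads cancels every pairwise mask, leaving the sum of updates."""
    return [sum(col) % MODULUS for col in zip(*uploads)]
```

This sketch omits dropout handling. If a client disappears after the others have applied their masks, the masks no longer cancel, which is one reason practical schemes add recovery mechanisms.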

Shi et al. [76] introduced a method that combines HE and secret sharing to ensure the confidentiality of local parameters, withstand collusion threats, and simplify aggregation without sharing keys. However, the scheme still faces several challenges. These include collusion among clients or with the server, communication issues caused by network disruptions, and the complexity of implementing its encryption techniques in practical applications. Moreover, Wang et al. [77] proposed a scheme that uses HE to secure model parameters in healthcare data applications, addressing both privacy concerns and communication efficiency. The scheme introduces client authentication and access control mechanisms to prevent attacks, preserving data privacy and model performance while reducing communication overhead. However, it has some limitations. These include potential communication overhead due to users dropping out during training, hardware quality issues, and network delays. The scheme also needs an Acknowledgment (ACK) mechanism to handle unresponsive users, which may increase waiting delays and slow overall training progress.

In addition, Walskaar et al. [113] proposed an approach enhanced with Ring Learning With Errors (RLWE)-based multi-key HE. It uses the xMK-CKKS scheme, a multi-key HE scheme based on CKKS, to ensure data confidentiality during training in untrusted environments, while also addressing the shortcomings and trade-offs of privacy preservation in medical data analysis. The method in [113] is comprehensive and detailed: it addresses privacy concerns in ML for healthcare institutions by integrating multi-key HE into the FL framework. Nevertheless, the approach has some limitations. The computational overhead and data expansion associated with HE can reduce system performance and require additional storage and communication resources. In addition, noise accumulation in HE poses a significant challenge, because it can eventually render ciphertexts undecryptable, which necessitates noise management techniques to mitigate the issue.

Zhang et al. [114] developed a new masking scheme that integrates HE and SMPC for FL. It considers data quality during model aggregation and provides a solution that tolerates participant dropout and resists collusion among participants. The authors also implemented an FL prototype system for medical data and performed comprehensive experiments on real skin cancer datasets to validate both the privacy preservation and the effectiveness of their approach. However, the approach has certain limitations. These include the computational overhead introduced by HE, the need for further tuning in heterogeneous environments, and the lack of consideration for malicious server attacks and tampering with the aggregated model.

Shen et al. [99] introduced a privacy-preserving and efficient online diagnosis method for e-healthcare systems based on FL. The scheme effectively protects patients' privacy, achieves high accuracy in clinical diagnosis, and is practical for real-world SHSs. However, it has several limitations. The use of DP can distort the data and reduce model accuracy, and the complex HE algorithm increases computational complexity. Retrieval of diagnosis results is also inefficient, and the diagnosis model obtained with the SVM algorithm has relatively low accuracy.

Kumar et al. [70] proposed a scheme that integrates blockchain and HE with FL to address the challenges of privacy-preserving collaborative model aggregation for medical image analysis, particularly COVID-19 detection and classification. The framework offers a novel approach to data sharing and collaborative training across multiple healthcare institutions, laying the groundwork for enhanced privacy, security, and accuracy in medical image analysis. However, the approach may face latency and scalability challenges owing to the decentralized nature of the blockchain network and the need for continuous updates to address new variants of the COVID-19 virus.

Recently, Liu et al. [115] introduced a framework in which users encrypt their data under a joint public key determined by the server over three rounds of interaction. The scheme has several advantages. It accommodates dynamic user participation and produces compact ciphertexts whose size is independent of the number of participants. It also reduces the number of interactions per round from three to two, thereby mitigating concerns about user dropout during computation [115]. However, its security may be compromised if all users collude with the server.
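The idea of encrypting under a joint public key can be illustrated with exponential ElGamal in a toy group. The Python sketch below is an assumption-laden illustration rather than the construction of [115]. Each user publishes h_i = g^(sk_i), and the joint key is the product of these values. Ciphertexts are additively homomorphic, and decryption needs a partial decryption from every user. This also shows why collusion of all users with the server breaks confidentiality: together they hold every secret share. The group (p = 2039, subgroup order 1019, generator 4) is far too small for real use. Plaintexts must also stay small, because decryption ends with a brute-force discrete logarithm.

```python
import secrets

P = 2039      # toy safe prime, P = 2 * Q_ORD + 1 (insecure size)
Q_ORD = 1019  # prime order of the subgroup of quadratic residues
G = 4         # 4 = 2^2 is a quadratic residue != 1, so it generates that subgroup


def user_keygen() -> tuple[int, int]:
    """Each user picks a secret share sk_i and publishes h_i = G^sk_i."""
    sk = secrets.randbelow(Q_ORD - 1) + 1
    return sk, pow(G, sk, P)


def joint_public_key(public_shares: list[int]) -> int:
    """h = prod h_i = G^(sum sk_i); no single party knows the combined secret."""
    h = 1
    for h_i in public_shares:
        h = h * h_i % P
    return h


def encrypt(h: int, m: int) -> tuple[int, int]:
    """Exponential ElGamal: (G^r, G^m * h^r)."""
    r = secrets.randbelow(Q_ORD - 1) + 1
    return pow(G, r, P), pow(G, m, P) * pow(h, r, P) % P


def add(c1: tuple[int, int], c2: tuple[int, int]) -> tuple[int, int]:
    """The component-wise product of two ciphertexts encrypts the sum of their plaintexts."""
    return c1[0] * c2[0] % P, c1[1] * c2[1] % P


def partial_decrypt(c: tuple[int, int], sk_i: int) -> int:
    """User i's contribution c1^sk_i; all contributions are needed to decrypt."""
    return pow(c[0], sk_i, P)


def combine(c: tuple[int, int], partials: list[int], max_m: int = 1000) -> int:
    """Divide out prod(c1^sk_i) = h^r, then brute-force the small exponent m."""
    mask = 1
    for d in partials:
        mask = mask * d % P
    gm = c[1] * pow(mask, -1, P) % P
    for m in range(max_m + 1):
        if pow(G, m, P) == gm:
            return m
    raise ValueError("plaintext outside search range")
```

For example, three users holding 3, 5, and 9 can encrypt under the joint key, and the server can add the ciphertexts. The total, 17, is recovered only once all three users contribute their partial decryptions.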

HE is a pivotal technique for privacy preservation in FL systems in the healthcare domain. Because it enables computation on encrypted data without decryption, HE offers a crucial opportunity to safeguard sensitive medical information while still enabling collaborative model training. However, the different types of HE trade computational cost against capability, which calls for careful consideration in system design. Despite promising HE-based proposals for addressing privacy concerns, noise accumulation, computational overhead, and security vulnerabilities remain open challenges. Further research and refinement are needed to overcome these obstacles and fully realize the potential of HE in FL-based smart healthcare applications.

4.4. Comparison of Key Privacy-Enhancing Technologies in FL

To compare the effectiveness of the aforementioned privacy-preserving techniques in FL, a comprehensive comparison is presented in Table 6. It highlights key aspects of the different methods, such as privacy guarantees, computational overhead, scalability, key features, and limitations.

Existing privacy protection frameworks for FL all have limitations to varying degrees, so no single scheme resolves every challenge. In Table 6, we compare these technologies with a focus on aspects crucial to smart healthcare. SMPC offers a good privacy level with moderate computational overhead and enables joint computation without exposing private inputs, albeit at the cost of high communication overhead. DP provides strong privacy but risks reducing data utility. Data utility is the extent to which data remain usable and accurate after a privacy protection mechanism has been applied, and to which the insights derived from them remain valid and reliable [117]. In FL, utility measures the system's ability to maintain model performance. HE allows computation on encrypted data, ensuring high privacy but incurring high computational costs. Fully HE extends this capability with greater flexibility for model training, but it increases system complexity and still carries considerable communication overhead. These insights are essential for selecting the appropriate technology for a reliable smart healthcare system that balances privacy, efficiency, and practical constraints.

4.5. Privacy-Enhancing Technologies Meet FL-Based Smart Healthcare

In this section, we investigate the integration of PETs in FL-based SHSs, especially in health data management, remote health monitoring, medical imaging, and health finance management.

4.5.1. Application in Health Data Management

In FL-based smart healthcare, PETs such as DP, HE, and SMPC are often combined with FL to enhance data privacy while training ML models. For example, the work in [118] presented a secure framework integrating SMPC and blockchain with FL, enabling heterogeneous models to learn collaboratively from healthcare institutions' data while protecting users' privacy. In [84], the authors proposed a privacy-preserving method that combines FL and DP for Alzheimer's disease detection based on patients' audio data collected by IoT devices. The authors of [6] developed a disease prediction framework that combines FL, DP, and SMPC to protect users' data privacy during training. Similarly, ref. [119] employed DP-enabled FL for disease diagnosis in the IoMT. The studies in [77,114] proposed HE-based FL schemes to protect the privacy of healthcare data in SHSs.

4.5.2. Application in Remote Health Monitoring

The rapid development of IoT into the Internet of Medical Things (IoMT) has boosted the expansion of remote health monitoring [120]. Remote health monitoring delivers health services through the Internet, using IoT devices and sensors to remotely track blood sugar levels, vital signs, heart rate, and other relevant health metrics. AI and FL have been introduced into this SHS application in several ways. Although FL inherently provides some privacy protection in this context, further privacy enhancement is still needed, and several PET-based approaches have been proposed. Shen et al. [99] introduced a privacy-preserving approach for remote disease diagnosis that integrates HE with FL, using HE to encrypt patients' physiological data during training. This application of smart healthcare is still evolving, as researchers continue to propose privacy-preserving FL methods for remotely monitoring patients' health.

4.5.3. Application in Medical Imaging

Nowadays, AI-assisted medical imaging is widely used in SHSs for purposes including disease prediction, disease diagnosis, and tumor classification. To enhance privacy during ML model training on medical images, researchers have proposed several schemes that integrate PETs with FL. For instance, ref. [121] proposed an adaptive DP-based FL method for COVID-19 detection from chest X-ray images. Similarly, ref. [122] introduced a DP-based FL approach for COVID-19 detection using a generative adversarial network and CT scan images. In [11], the authors developed a framework integrating HE and FL for CNN-based COVID-19 detection.

4.5.4. Application in Health Finance Management

The management of medical finance is an important topic in SHSs, since healthcare service providers are still seeking more modern, reliable, and efficient systems. AI has been introduced into this SHS application to improve financial transactions and their efficiency. To the best of our knowledge, no privacy-preserving FL method has yet been specifically tailored to financial transaction management in SHSs. Given the rapidly growing interest in AI-enabled healthcare, this field deserves further attention from scholars.

6. Discussions and Future Work

In this section, a few findings and challenges are presented in Section 6.1, along with related opportunities in Section 6.2.

6.1. Discussions

Privacy-preserving FL holds substantial promise for revolutionizing healthcare systems by addressing the critical need for maintaining patient privacy while leveraging the collective intelligence of distributed data sources. Through our survey, several key insights emerged that shed light on the current landscape, opportunities, and challenges in this burgeoning field.

We classified privacy threats in SHSs into five main types: data breaches, insider threats, technical vulnerabilities, user privacy concerns, and regulatory compliance issues. These threats pose significant risks to patient privacy, data integrity, and trust in SHSs. We highlighted the complexities and challenges inherent in protecting sensitive health information and underscored the need for robust privacy-preserving mechanisms. Our discussion of FL principles and their potential benefits in SHSs showed that FL is a suitable solution to privacy challenges in SHSs, as it facilitates collaborative model training while ensuring data integrity and confidentiality. However, because FL alone cannot ensure strong privacy protection, we discussed a new paradigm known as PPFL and proposed a classification of FL-based privacy-enhancing mechanisms in SHSs. The proposed taxonomy comprises three main groups of techniques, namely PETs, DLT, and hybrid techniques. It also covers the existing approaches and applications of each group in SHSs.

On one hand, we identified DP, HE, and SMPC as the leading PETs that, when integrated with FL, significantly enhance privacy guarantees. Each technique presents unique strengths and challenges: DP is highly scalable but may suffer from utility loss; HE ensures robust security but incurs substantial computational overhead; SMPC provides strong security guarantees with moderate overhead. On the other hand, blockchain emerges as an efficient technology for addressing several inherent challenges in FL, particularly in decentralized and trustless environments. Blockchain enhances the integrity and transparency of FL processes, ensuring that model updates are tamper-proof and auditable. However, the integration of blockchain with FL also introduces challenges such as increased latency and computational requirements, which necessitate further optimization.

Furthermore, to comprehensively discuss blockchain applications in FL for privacy protection, we focused on three key dimensions, namely model storage, aggregation, and gradient upload. For each, we highlighted how blockchain's features can specifically address and mitigate privacy challenges in FL systems. Combining blockchain with FL not only secures data transactions and model updates but also fosters a cooperative and reliable environment for participants across various sectors, particularly in SHSs, where data sensitivity is paramount. Although the reviewed schemes share common benefits in enhancing privacy and security, they face distinct challenges such as performance limitations, communication overhead, and computational intensity.
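The tamper-evidence that blockchain brings to model storage and gradient upload rests on hash chaining. The minimal Python sketch below records a SHA-256 digest of each client's update in a block that also commits to the previous block's hash. Altering any stored update or block therefore invalidates verification. The sketch deliberately omits consensus, networking, and signatures, which a real blockchain-based FL system would need, and its record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def digest(obj) -> str:
    """SHA-256 over a canonical JSON encoding."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode("utf-8")).hexdigest()


def append_block(chain: list[dict], round_id: int, client_id: str, update: list[float]) -> None:
    """Record the digest of a client's update, linked to the previous block's hash."""
    prev = chain[-1]["hash"] if chain else GENESIS
    body = {"round": round_id, "client": client_id, "update_digest": digest(update), "prev": prev}
    chain.append({**body, "hash": digest(body)})


def verify(chain: list[dict], updates: list[list[float]]) -> bool:
    """Check every link and every stored digest; any tampering breaks verification."""
    if len(chain) != len(updates):
        return False
    prev = GENESIS
    for block, update in zip(chain, updates):
        body = {k: block[k] for k in ("round", "client", "update_digest", "prev")}
        if block["prev"] != prev or block["hash"] != digest(body):
            return False
        if block["update_digest"] != digest(update):
            return False
        prev = block["hash"]
    return True
```

Storing only digests on the chain, rather than full models or gradients, is one way to ease the storage burden of blockchain-enabled PPFL. The full updates are then kept off-chain and checked against the recorded digests.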

Another notable finding is the growing interest and adoption of PPFL methods within smart healthcare, especially for health data management, remote health monitoring, medical imaging, and health finance management. Researchers and practitioners alike are increasingly recognizing the significance of privacy-preserving techniques in safeguarding sensitive medical data, especially in light of strict regulatory requirements such as HIPAA and GDPR. The proliferation of PPFL frameworks specifically tailored for healthcare applications underscores the urgency and importance of addressing privacy concerns in this context.

Despite the promising advancements in FL-based privacy-preserving frameworks, several challenges remain. Chief among these is the inherent trade-off between privacy and utility in FL settings. While PPFL schemes strive to protect patient privacy, they must also ensure that the resulting models retain sufficient accuracy and generalizability for clinical use. Balancing these competing objectives remains a complex and ongoing research endeavor, requiring innovative solutions at the intersection of ML, cryptography, and healthcare domain knowledge.

Another critical consideration is the diversity of healthcare stakeholders and the varying levels of trust among participants. Building secure and resilient PPFL systems requires the establishment of robust governance structures, transparent communication channels, and mechanisms to verify the integrity of the participants.

Moreover, blockchain-enabled privacy-preserving FL presents several challenges, such as latency, energy consumption, interoperability, and data storage costs. The consensus mechanisms introduced by blockchain, such as Proof of Work (PoW) and Practical Byzantine Fault Tolerance (PBFT), can cause delays in large-scale FL-based SHS frameworks. Such delays can significantly affect the efficiency of SHSs, which require real-time operation. In addition, blockchain protocols are known to be high energy consumers, which may conflict with the sustainability goals of SHSs. Data storage is another challenge for blockchain-enabled PPFL, because storing models and gradients on a blockchain requires substantial storage capacity. This becomes even more demanding in SHSs, which manage high-dimensional healthcare data. Finally, integrating blockchain into PPFL within SHSs requires seamless interoperability, which remains an open challenge.

Joint efforts between researchers, healthcare providers, policymakers, and technology providers are required to foster trust and cooperation in FL-based smart healthcare ecosystems.

6.2. Future Works

This survey explored FL, PETs, and blockchain, as well as their integration into SHSs. It highlighted their potential to address the critical challenge of privacy protection and their combined capability to significantly enhance privacy and security in data handling. Each technology contributes uniquely to PPFL: FL by training models on decentralized data [160], PETs by securing data at rest and in transit [106], and blockchain by ensuring data integrity and traceability [62]. As demonstrated, while each technology has its merits, their combined application can significantly raise the privacy and security standards of data processing in the healthcare sector.

While FL enhances model utility without compromising data privacy [84], PETs add a further layer of data protection, and blockchain provides a secure, immutable ledger for transparent and traceable transactions [90]. Our work highlights several critical findings and implications that can motivate future advancements in this domain. Blockchain-based frameworks have heavy computational demands, and smart healthcare devices are resource-constrained. Further research could therefore focus on lightweight blockchain solutions that enable efficient and secure computing for various SHS applications.

In addition, the scalability and efficiency of PPFL frameworks need further investigation. As healthcare datasets continue to grow in size and complexity, the computational and communication overhead associated with FL presents significant challenges. Optimizing and streamlining PPFL protocols while maintaining robust privacy guarantees is imperative to reap the full benefits of FL in healthcare. Despite the promising synergies identified, integrating these technologies into existing healthcare infrastructures presents notable challenges, including technical complexity and scalability concerns [25]. Addressing these challenges will require both changes in regulatory frameworks and ongoing technological refinement to support innovative solutions.

Future research should therefore focus on three directions. The first is advancing the seamless integration of these technologies. The second is developing methods that balance privacy against utility and computational demands. The third is crafting adaptive frameworks that respond to the dynamic nature of healthcare data and privacy standards. By continuing to innovate and rigorously evaluate PETs, the field of smart healthcare can progress toward a future in which data-driven insights and patient privacy are not at odds. Instead, they can be complementary facets of a harmonious and highly effective healthcare system.