1. Introduction

Higher education institutions (HEIs) operate in a globally competitive environment shaped by the growing demand for highly skilled professionals, the rapid growth of AI-enhanced education, and the increasing impact of internationalization [1]. To attract students, secure funding, and maintain their position in the global academic landscape, universities must continuously enhance their quality assurance (QA) systems. These systems must now respond not only to academic outcomes but also to strategic goals related to transparency, innovation, and sustainability [2,3].

The intensification of globalization and internationalization has compelled universities to compete on a global stage, while rapid advancements in technology have transformed how education is delivered and assessed. This convergence of challenges requires institutions to rethink how performance is measured and quality is maintained [1,3]. In this context, quality assurance has evolved into a dynamic process that must incorporate both internal benchmarks and external expectations. Additionally, international frameworks such as the European Standards and Guidelines (ESG), the OECD’s benchmarking initiatives, and UNESCO’s QA policy recommendations provide an overarching context in which HEIs across continents are aligning their quality assurance strategies.

For example, according to OECD data, more than 6 million students studied abroad in 2023, reflecting annual growth of 10% over the past decade. Similarly, the European Commission’s Digital Education Action Plan (2021–2027) indicates that 82% of member states have implemented at least one digital learning support system in their public higher education institutions. These developments pose significant challenges for QA systems, which must increasingly adapt to rapid changes in international competition and technological expectations.

To respond to these pressures, institutions increasingly rely on structured QA frameworks that provide systematic and comparable evaluation tools across national and international contexts. Structured QA models such as the European Standards and Guidelines (ESG-EQA), the EFQM Excellence Model, and ISO 9001 play a key role in establishing consistent procedures for institutional evaluation [2,4]. However, traditional methods like peer review and accreditation audits often lack the responsiveness needed in fast-changing academic environments [5]. As a result, modern QA approaches are increasingly supported by key performance indicators (KPIs) and data-driven evaluation tools.

Advanced methodologies, including Data Envelopment Analysis (DEA), the Analytic Hierarchy Process (AHP), and Bayesian modeling, are increasingly used to measure institutional effectiveness, benchmark efficiency, and guide strategic decision-making [5]. These methods enhance the objectivity and scalability of QA processes, offering insights into areas such as graduate employability, research output, and curriculum quality. However, their uptake varies across regions: while many global ranking systems emphasize quantitative metrics, peer review, accreditation procedures, and qualitative assessments remain central in the European Higher Education Area (EHEA).

Building on this methodological foundation, the present study examines how traditional QA frameworks can be integrated with advanced analytical models to address both strategic and operational goals. Through comparative case studies of internationally ranked universities, the research identifies emerging best practices for quality assurance in higher education. The goal is to support institutional competitiveness and continuous improvement through hybrid, evidence-based QA strategies.

This study addresses the following research questions: (1) How can data-driven methodologies enhance the effectiveness of higher education quality assurance systems? (2) What are the comparative advantages of DEA, AHP, and Bayesian modeling when integrated into QA frameworks? (3) How do ESG and digital transformation trends influence institutional performance?

2. Quality Assurance Systems in Higher Education

This section explores the fundamental quality assurance (QA) frameworks that enable higher education institutions to uphold and enhance academic standards. In an era of intensifying global competition, rising stakeholder expectations, and the rapid advancement of digital education, the implementation of comprehensive QA mechanisms has become essential. These frameworks not only promote institutional excellence and transparency but also play a crucial role in ensuring the long-term sustainability and credibility of higher education systems within an increasingly complex and dynamic academic environment [2,6].

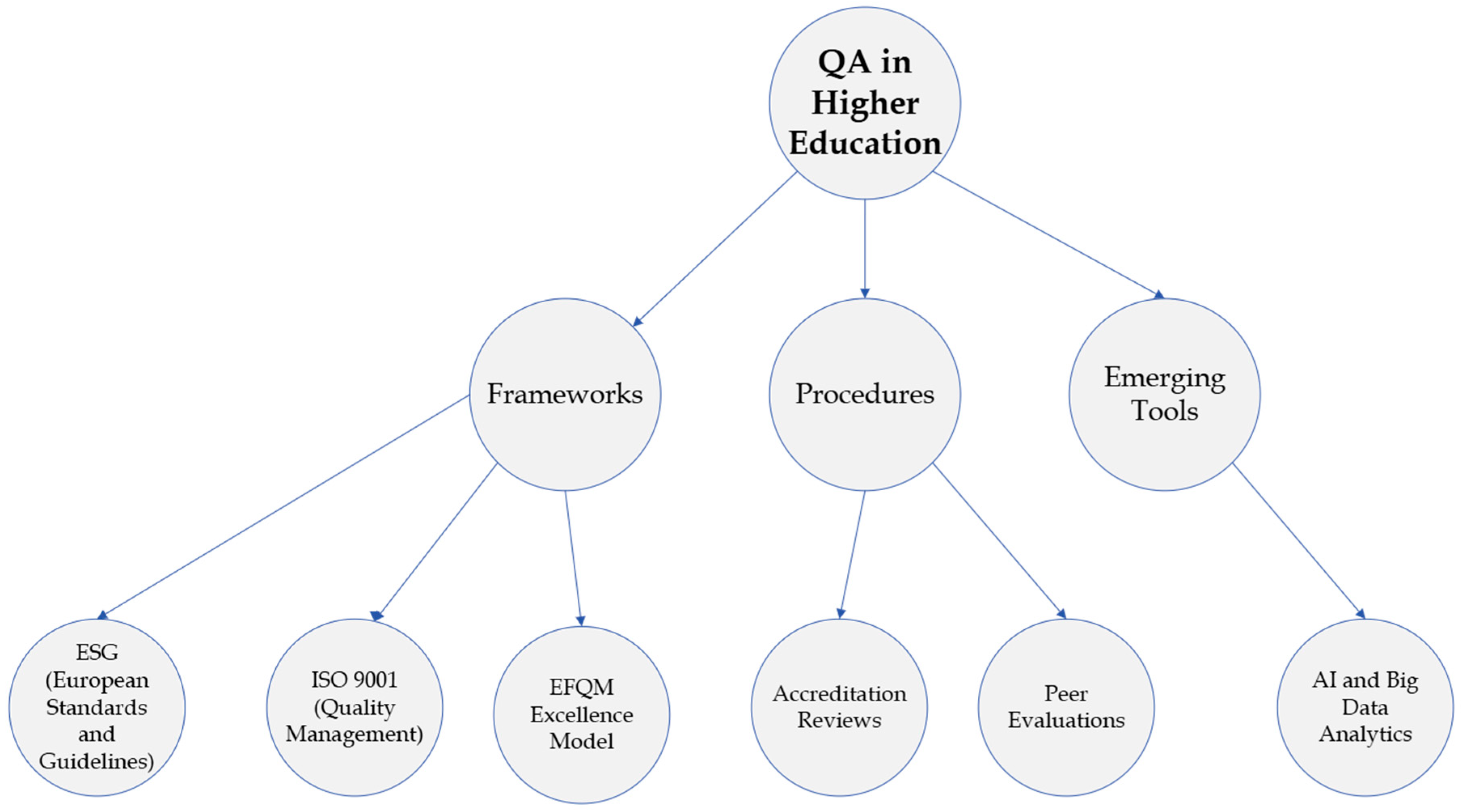

Figure 1 presents a structured overview of the main quality assurance (QA) frameworks and procedures in higher education. To avoid conceptual overlap, it distinguishes between two categories: internationally recognized frameworks such as the ESG (European Standards and Guidelines), ISO 9001, and the EFQM Excellence Model, on the one hand; and procedures and mechanisms such as accreditation reviews and peer evaluations, on the other. Recent developments such as AI-supported data analytics are shown as emerging tools rather than as formal QA standards. The visual is based on a comparative synthesis of the literature [2,7,8,9], reflecting the relative prominence of each framework in academic discourse and institutional adoption trends. The percentages shown are indicative, based on the frequency of appearance in reviewed case studies and strategic reports. By integrating these mechanisms, universities can enhance their capacity for adaptation, improve performance evaluation processes, and drive continuous educational development [6].

2.1. The Role of the European Standards and Guidelines (ESG) in Higher Education

In this paper, ESG consistently refers to the European Standards and Guidelines for Quality Assurance in the European Higher Education Area (EHEA). To avoid confusion, references to Environmental, Social, and Governance standards are explicitly written as “corporate ESG.”

The ESG framework was formally adopted in 2005 as part of the Bologna Process to strengthen the transparency and comparability of higher education within Europe [10]. It provides a common set of standards and guidelines for three key areas: (i) internal quality assurance within higher education institutions, (ii) external quality assurance conducted by independent agencies, and (iii) the quality assurance agencies themselves. By aligning institutional and national practices with the ESG, universities enhance accountability, credibility, and mutual trust across the EHEA [10,11].

The adoption of the ESG has contributed to greater harmonization of QA practices, supported the international recognition of European universities, and reinforced their position in global higher education. Nevertheless, challenges remain in ensuring consistent implementation across diverse national contexts, particularly with respect to resource constraints, institutional readiness, and varying levels of policy support [10].

2.2. The EFQM Excellence Model and Its Application in Higher Education

The European Foundation for Quality Management (EFQM) Excellence Model provides a structured framework for evaluating and enhancing organizational performance. In higher education, it has been widely applied to promote continuous improvement by aligning leadership, strategic objectives, and academic innovation [8]. Empirical studies report that EFQM adoption is associated with improvements in student engagement, faculty development, and institutional efficiency. For example, Gómez Gómez et al. [8] found measurable benefits across European universities, while Van Schoten et al. [9] demonstrated enhanced strategic alignment and stakeholder collaboration in Dutch institutions.

Overall, EFQM serves as a comprehensive quality management approach that fosters transparency, continuous development, and stronger institutional reputation. Its application supports the integration of educational delivery with strategic planning and enhances responsiveness to stakeholder expectations [4,9].

2.3. ISO 9001 as a Quality Management Framework in Higher Education

The ISO 9001 quality management system (QMS) standard is a globally recognized, sector-independent framework for enhancing efficiency, standardization, and accountability, and it has also been applied in higher education institutions. Universities use ISO 9001 to optimize administrative processes, strengthen governance, and support academic excellence [6]. Comparative studies indicate that ISO-certified institutions report higher curriculum alignment, accreditation success rates, and student satisfaction compared with non-certified counterparts [6]. Similar evidence from HEIs in the MENA region also highlights ISO 9001’s contribution to transparency and accreditation readiness [2].

Despite these advantages, the implementation of ISO 9001 may encounter challenges such as resistance to change, limited resources, and the need for sustained engagement from faculty and administrators. Nevertheless, successful adoption strengthens institutional credibility, regulatory compliance, and student retention [6].

It is also essential to distinguish between program-level QA (focused on curriculum design, learning outcomes, and the accreditation of study programs) and institutional-level QA (concerned with governance structures, management systems, and strategic quality frameworks). This differentiation provides a more comprehensive understanding of how QA operates across multiple layers in higher education.

3. Mathematical Methods for University Performance Measurement

Higher education institutions increasingly rely on quantitative models and mathematical methodologies to evaluate their performance and ensure efficiency. Traditional assessment metrics, such as peer reviews and accreditation scores, often rely on subjective judgment and fail to fully capture the complexity of institutional effectiveness. In contrast, advanced mathematical approaches, including Data Envelopment Analysis (DEA) [12], the Analytic Hierarchy Process (AHP) [13], Bayesian modeling [14], and Multilevel Modeling (MLM) [5], provide a more comprehensive, data-driven perspective. These methods enable universities to benchmark performance, optimize resource allocation, and forecast future trends, ultimately supporting more informed strategic decision-making.

This study applies three complementary methodological tools. Data Envelopment Analysis (DEA) is used to benchmark institutional efficiency through input–output ratios. The Analytic Hierarchy Process (AHP), supported by a Delphi expert panel, helps prioritize performance criteria. Bayesian modeling, implemented with Markov Chain Monte Carlo (MCMC) sampling, provides predictive insights into institutional performance under uncertainty. While these methods are well established, in this study they are presented briefly, as the main emphasis is on their integration into higher education QA frameworks and their application in the case studies.

Table 1 provides a comparative summary of the three key analytical models most frequently applied in quality assurance frameworks—DEA, AHP, and Bayesian inference. Each model offers unique capabilities in terms of data requirements, methodological strengths, and strategic applications in higher education. This comparative insight supports the rationale for integrating these models into hybrid QA systems, where the strengths of one approach may compensate for the limitations of another.

For instance, in a university satisfaction assessment scenario, the prior distribution may represent historical averages of student feedback, while the posterior distribution incorporates recent survey data to update institutional expectations. This allows for the real-time monitoring of trends and supports data-informed decisions, such as identifying when targeted pedagogical interventions are warranted. For example, if the prior follows a normal distribution (μ = 4.0, σ = 0.5) and newly observed satisfaction scores center around 3.6, Bayes’ theorem enables the recalibration of institutional benchmarks to reflect emerging dynamics.
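The satisfaction example can be worked through with the conjugate normal–normal model, in which a normal prior on the mean score is combined with normally distributed observations whose standard deviation is treated as known. The prior (μ = 4.0, σ = 0.5) and the observed mean of 3.6 come from the text; the sample size (n = 50) and the observation standard deviation (0.8) are assumptions added only for illustration, so this is a sketch rather than the model used in the study.

```python
import math


def normal_normal_update(prior_mean, prior_sd, sample_mean, n, obs_sd):
    """Posterior mean and SD of a normal mean under a normal prior (obs_sd known)."""
    prior_precision = 1.0 / prior_sd ** 2
    data_precision = n / obs_sd ** 2  # precision of the sample mean
    post_var = 1.0 / (prior_precision + data_precision)
    # Posterior mean = precision-weighted average of prior mean and sample mean
    post_mean = post_var * (prior_precision * prior_mean + data_precision * sample_mean)
    return post_mean, math.sqrt(post_var)


# Prior from the text; n and obs_sd are illustrative assumptions
mean_50, sd_50 = normal_normal_update(4.0, 0.5, 3.6, n=50, obs_sd=0.8)
mean_5, sd_5 = normal_normal_update(4.0, 0.5, 3.6, n=5, obs_sd=0.8)
print(round(mean_50, 3), round(sd_50, 3))  # 3.619 0.11
print(round(mean_5, 3), round(sd_5, 3))    # 3.735 0.291
```

With 50 responses the posterior mean moves almost all the way to the new evidence; with only 5 responses it stays closer to the historical benchmark, because the prior then carries relatively more weight.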

Figure 2 illustrates the varying degrees to which different mathematical models contribute to university performance assessment frameworks. The intensity levels were determined by a qualitative synthesis of usage frequency in peer-reviewed articles, prevalence in institutional assessment reports, and relevance in benchmarking practices, as identified in sources [12,15] and in model-specific studies (e.g., DEA: Liu & Tsai [12]; AHP: Vaidya & Kumar [13]; Bayesian: Salleh & Yassin [14]). The visual thus reflects the relative methodological uptake and implementation complexity identified in the literature.

3.1. Data Envelopment Analysis (DEA)

Data Envelopment Analysis (DEA) is a widely used non-parametric method for assessing the relative efficiency of higher education institutions by analyzing multiple input–output relationships. Unlike traditional ranking methods, DEA identifies institutions that utilize available resources most effectively and generates efficiency scores that support benchmarking and resource management [12].

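The standard input-oriented DEA model for evaluating the efficiency of a given university, denoted DMU 0, can be expressed as follows:

$$
\begin{aligned}
\min_{\theta,\,\lambda}\quad & \theta \\
\text{s.t.}\quad & \sum_{j=1}^{n} \lambda_j x_{ij} \le \theta\, x_{i0}, && i = 1, \dots, m, \\
& \sum_{j=1}^{n} \lambda_j y_{rj} \ge y_{r0}, && r = 1, \dots, s, \\
& \lambda_j \ge 0, && j = 1, \dots, n,
\end{aligned}
\tag{1}
$$

where

$x_{ij}$ represents the amount of input $i$ used by decision-making unit (DMU) $j$, here a university (e.g., faculty size, research funding);

$y_{rj}$ represents the amount of output $r$ produced by DMU $j$ (e.g., graduation rates, employability);

$\theta$ is the efficiency score of the evaluated DMU 0, with $0 < \theta \le 1$ and $\theta = 1$ for units on the efficient frontier;

$\lambda_j$ are the weight coefficients assigned to each DMU.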

This formulation follows the Charnes–Cooper–Rhodes (CCR) model, which assumes constant returns to scale, while the Banker–Charnes–Cooper (BCC) variant accounts for variable returns to scale by adding the convexity constraint $\sum_{j} \lambda_j = 1$ [15]. Empirical applications have shown DEA’s relevance in benchmarking university performance across different contexts, highlighting how efficiency is shaped by factors such as faculty–student ratios, research funding allocation, and graduate employability [12,15].
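To make equation (1) concrete, the following sketch solves the input-oriented CCR linear program for each unit with SciPy's `linprog` (HiGHS solver). The five universities, their inputs (academic staff and funding) and their output (graduates) are hypothetical numbers chosen so that the scores can be checked by hand; they are not data from this study.

```python
import numpy as np
from scipy.optimize import linprog


def ccr_input_efficiency(X: np.ndarray, Y: np.ndarray, k: int) -> float:
    """Input-oriented CCR efficiency (theta) of DMU k, as in equation (1).

    X has shape (m inputs, n DMUs); Y has shape (s outputs, n DMUs).
    The decision vector is z = [theta, lambda_1, ..., lambda_n].
    """
    m, n = X.shape
    s = Y.shape[0]
    c = np.zeros(n + 1)
    c[0] = 1.0  # objective: minimise theta
    # Inputs: sum_j lambda_j * x_ij - theta * x_ik <= 0
    A_inputs = np.hstack([-X[:, [k]], X])
    b_inputs = np.zeros(m)
    # Outputs: -sum_j lambda_j * y_rj <= -y_rk  (i.e. sum_j lambda_j * y_rj >= y_rk)
    A_outputs = np.hstack([np.zeros((s, 1)), -Y])
    b_outputs = -Y[:, k]
    result = linprog(
        c,
        A_ub=np.vstack([A_inputs, A_outputs]),
        b_ub=np.concatenate([b_inputs, b_outputs]),
        bounds=[(0, None)] * (n + 1),  # theta >= 0 and lambda_j >= 0
        method="highs",
    )
    if not result.success:
        raise RuntimeError(result.message)
    return float(result.x[0])


# Hypothetical data: five universities (columns), illustrative only
names = ["A", "B", "C", "D", "E"]
X = np.array([
    [20.0, 20.0, 40.0, 60.0, 30.0],  # input 1: academic staff (FTE, hypothetical)
    [40.0, 10.0, 40.0, 60.0, 20.0],  # input 2: research funding (EUR m, hypothetical)
])
Y = np.array([
    [1000.0, 500.0, 1000.0, 2000.0, 500.0],  # output: graduates (hypothetical)
])

scores = {name: round(ccr_input_efficiency(X, Y, k), 3) for k, name in enumerate(names)}
print(scores)  # {'A': 1.0, 'B': 1.0, 'C': 0.75, 'D': 1.0, 'E': 0.6}
```

Units A, B, and D lie on the efficient frontier; C would need to scale both inputs down to 75% and E to 60% to match the best-practice combination of its peers. Adding the BCC convexity constraint as an equality row (`A_eq=[[0, 1, ..., 1]]`, `b_eq=[1]`) would turn this into the variable-returns-to-scale model.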

3.2. Multilevel Modeling (MLM)

Multilevel Modeling (MLM) is a statistical approach for analyzing hierarchical data structures, such as students nested within faculties or universities grouped by country. This method is particularly relevant for higher education, as it captures how institutional policies influence student outcomes while accounting for variability across multiple levels of the system [16]. A simplified (random-intercept) MLM equation can be written as follows:

$$
Y_{ij} = \beta_0 + \beta_1 X_{ij} + u_j + e_{ij}
\tag{2}
$$

where

$Y_{ij}$ represents the performance of student $i$ in institution $j$;

$X_{ij}$ denotes individual-level predictors (e.g., socioeconomic background, prior academic achievement), with fixed intercept $\beta_0$ and slope $\beta_1$;

$u_j$ captures institutional-level variance, i.e., the deviation of institution $j$ from the overall intercept;

$e_{ij}$ represents individual-level residuals.

MLM applications in higher education have shown that factors such as funding levels, faculty engagement, and academic culture significantly affect student performance. By modeling nested structures, MLM provides more precise estimates of policy effects and supports the design of targeted interventions for improving educational outcomes [16].

3.3. Analytic Hierarchy Process (AHP) in University Ranking

The Analytic Hierarchy Process (AHP) is a quantitative decision-making model that applies pairwise comparisons and eigenvalue computations to determine the relative importance of university ranking criteria. This structured approach allows for the systematic weighting of factors such as research output, faculty quality, and internationalization [13].

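The AHP method constructs a pairwise comparison matrix $A$, where criteria are evaluated relative to each other as follows:

$$
A = \left[a_{ij}\right]_{n \times n} =
\begin{pmatrix}
1 & a_{12} & \cdots & a_{1n} \\
1/a_{12} & 1 & \cdots & a_{2n} \\
\vdots & \vdots & \ddots & \vdots \\
1/a_{1n} & 1/a_{2n} & \cdots & 1
\end{pmatrix},
\qquad a_{ji} = \frac{1}{a_{ij}}
\tag{3}
$$

where $a_{ij}$ expresses the relative importance of criterion $i$ over criterion $j$ on Saaty’s 1–9 scale.

From this, the weight vector $\mathbf{w}$ is derived by solving the eigenvalue problem as follows:

$$
A\mathbf{w} = \lambda_{\max}\mathbf{w}
\tag{4}
$$

where

$\lambda_{\max}$ is the largest (principal) eigenvalue of $A$.

The Consistency Ratio (CR) ensures decision reliability by measuring the consistency of judgments in the pairwise comparison matrix as follows:

$$
CI = \frac{\lambda_{\max} - n}{n - 1}, \qquad CR = \frac{CI}{RI}
\tag{5}
$$

where

$CI$ is the consistency index, $n$ is the number of criteria, and $RI$ denotes the random index derived from Saaty’s scale; a CR below 0.10 is conventionally regarded as acceptable.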
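Equations (3)–(5) can be illustrated with a short pure-Python sketch that approximates the principal eigenvector by power iteration and then computes CI and CR. The three criteria (research impact, faculty–student ratio, internationalization) echo the case study discussed in this subsection, but the judgment values are hypothetical; the RI table uses Saaty's commonly cited values for n ≤ 10.

```python
# Saaty's random index (RI) values for matrices of order n = 1..10
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_weights(A, max_iter=1000, tol=1e-12):
    """Return (weights, lambda_max, CR) for a positive reciprocal matrix A."""
    n = len(A)
    w = [1.0 / n] * n
    for _ in range(max_iter):
        # Power iteration: multiply by A and renormalise so the weights sum to 1
        Aw = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(Aw)
        new_w = [v / total for v in Aw]
        converged = max(abs(a - b) for a, b in zip(new_w, w)) < tol
        w = new_w
        if converged:
            break
    # Estimate lambda_max as the mean of (Aw)_i / w_i, cf. equation (4)
    Aw = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(Aw[i] / w[i] for i in range(n)) / n
    # Consistency index and ratio, cf. equation (5)
    ci = (lambda_max - n) / (n - 1) if n > 2 else 0.0
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0
    return w, lambda_max, cr


# Hypothetical judgments: research impact vs. faculty-student ratio vs. internationalization
consistent = [[1, 2, 4], [1 / 2, 1, 2], [1 / 4, 1 / 2, 1]]
slightly_inconsistent = [[1, 3, 5], [1 / 3, 1, 3], [1 / 5, 1 / 3, 1]]

for label, matrix in [("consistent", consistent), ("slightly inconsistent", slightly_inconsistent)]:
    weights, lam, cr = ahp_weights(matrix)
    print(label, [round(x, 3) for x in weights], round(lam, 3), round(cr, 3))
```

The first matrix is perfectly consistent (a13 = a12 · a23), so the weights are 4/7, 2/7, and 1/7 with λmax = 3 and CR = 0. The second is slightly inconsistent, which shows up as λmax of roughly 3.04 and a CR of roughly 0.03, still below the 0.10 threshold.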

A case study using AHP in global university rankings identified research impact, faculty–student ratio, and internationalization as key indicators. The method provides a mathematically rigorous, transparent ranking framework, enabling institutions to strategically align priorities and ensure data-driven evaluation [8]. Although the AHP relies on subjective input during pairwise comparisons, its structured approach ensures transparency and consistency through mathematical validation tools such as the Consistency Ratio (CR). This balance between expert judgment and objective verification makes it particularly suitable for university-level strategic planning, where qualitative priorities must be quantitatively compared [13]. Liu and Tsai (2015) [5] further validate AHP’s effectiveness in higher education performance evaluations, emphasizing its adaptability across different institutional contexts.

In addition, the pairwise comparisons in the AHP framework were supported by a structured Delphi process. Five senior academic experts with extensive experience in higher education quality assurance were purposively selected. The Delphi process consisted of two iterative rounds: in the first round experts independently evaluated the criteria, while in the second round they reviewed the anonymized group feedback and adjusted their responses until consensus was achieved. Consensus was quantified using Kendall’s W coefficient (W = 0.78), indicating a strong level of agreement among the experts. This procedure ensured that the pairwise matrices used in AHP were not only systematically derived but also reflected a validated expert consensus, enhancing the reliability of the resulting weightings.
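Kendall's W for a Delphi round can be computed directly from the experts' rankings of the criteria. The minimal sketch below implements the standard formula W = 12S / (m²(n³ − n)) without a tie correction, where m is the number of experts, n the number of criteria, and S the sum of squared deviations of the criteria's rank sums from their mean. The five rankings are hypothetical and do not reproduce the panel data behind the reported W = 0.78.

```python
def kendalls_w(rankings):
    """Kendall's coefficient of concordance W (no tie correction).

    rankings: one list per expert, giving the rank (1 = most important)
    that expert assigned to each of the n criteria.
    """
    m = len(rankings)     # number of experts
    n = len(rankings[0])  # number of criteria
    rank_sums = [sum(r[i] for r in rankings) for i in range(n)]
    mean_rank_sum = m * (n + 1) / 2
    s = sum((rs - mean_rank_sum) ** 2 for rs in rank_sums)
    return 12 * s / (m ** 2 * (n ** 3 - n))


# Hypothetical second-round rankings of four criteria by five experts
rankings = [
    [1, 2, 3, 4],
    [1, 2, 3, 4],
    [1, 3, 2, 4],
    [2, 1, 3, 4],
    [1, 2, 3, 4],
]
print(round(kendalls_w(rankings), 3))  # 0.856
```

W ranges from 0 (no agreement) to 1 (identical rankings from every expert), which is why values in the upper range are read as strong consensus.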

3.4. Bayesian Modeling in Higher Education

Bayesian modeling is increasingly utilized in higher education forecasting, allowing universities to predict enrollment trends, dropout risks, and funding needs by integrating historical data with real-time insights. This approach enables adaptive decision-making, improving resource allocation and policy planning [14]. Case studies indicate that Bayesian-driven analytics significantly enhance institutional forecasting accuracy, providing universities with a competitive advantage in student retention strategies [1].

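In Bayesian analysis, the posterior probability is computed using Bayes’ theorem as follows:

$$
P(\theta \mid D) = \frac{P(D \mid \theta)\, P(\theta)}{P(D)}
\tag{6}
$$

where

P(θ∣D) is the updated belief about a parameter θ given data D;

P(D∣θ) is the likelihood of the data;

P(θ) represents prior knowledge;

P(D) is the marginal likelihood (evidence), which normalizes the posterior.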
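A minimal worked instance of equation (6) for dropout risk: let θ be the event that a student drops out and D the event that an early-warning indicator flags that student. The prior dropout rate (10%), the flag's sensitivity P(D∣θ) = 0.80, and its false-positive rate (0.15) are hypothetical values chosen for illustration; P(D) is obtained by the law of total probability.

```python
def posterior_at_risk(prior, sensitivity, false_positive_rate):
    """P(dropout | flag) via Bayes' theorem for a binary early-warning flag."""
    # P(D) = P(D | dropout) P(dropout) + P(D | no dropout) P(no dropout)
    evidence = sensitivity * prior + false_positive_rate * (1 - prior)
    return sensitivity * prior / evidence


# Hypothetical inputs, for illustration only
p = posterior_at_risk(prior=0.10, sensitivity=0.80, false_positive_rate=0.15)
print(round(p, 3))  # 0.372
```

Even a fairly accurate flag yields a posterior risk of only about 37% when dropout is relatively rare, which illustrates why the prior (base rate) matters when deciding how to target interventions.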

A case study on university enrollment trends demonstrated that economic conditions, tuition policies, and demographic shifts significantly influence application rates. Moreover, Bayesian models accurately predict dropout risks, allowing institutions to design targeted intervention programs that enhance student retention and academic success [1].

The Bayesian models were implemented in Python using the PyMC3 library. We specified weakly informative priors: normal distributions for regression coefficients (mean 0, variance 10), and half-Cauchy distributions for variance parameters. Posterior estimation was carried out using Markov Chain Monte Carlo (MCMC) sampling, with four chains of 10,000 iterations each, discarding the first 2000 samples of each chain as burn-in. Convergence was assessed using the Gelman–Rubin diagnostic (R-hat < 1.1) and visual inspection of trace plots. These steps ensured that posterior distributions were stable and reproducible.
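The convergence criterion described above can be illustrated without PyMC3. The sketch computes the classic (non-split) Gelman–Rubin statistic, which compares between-chain and within-chain variance, for synthetic draws: four well-mixed chains from the same distribution, and four chains of which one is stuck around a different value. Current PyMC/ArviZ releases report a rank-normalized, split-chain variant of R-hat by default, so their values will differ slightly from this textbook version.

```python
import random
import statistics


def gelman_rubin_rhat(chains):
    """Classic (non-split) Gelman-Rubin potential scale reduction factor.

    chains: list of m equal-length lists of posterior draws for one parameter.
    """
    m = len(chains)
    n = len(chains[0])
    chain_means = [statistics.fmean(c) for c in chains]
    grand_mean = statistics.fmean(chain_means)
    # Between-chain variance B (scaled by n) and mean within-chain variance W
    b = n / (m - 1) * sum((cm - grand_mean) ** 2 for cm in chain_means)
    w = statistics.fmean(statistics.variance(c) for c in chains)
    # Pooled estimate of the posterior variance
    var_hat = (n - 1) / n * w + b / n
    return (var_hat / w) ** 0.5


# Synthetic draws, for illustration only
rng = random.Random(0)
mixed = [[rng.gauss(0.0, 1.0) for _ in range(2000)] for _ in range(4)]
stuck = [[rng.gauss(mu, 1.0) for _ in range(2000)] for mu in (0.0, 0.0, 0.0, 3.0)]
print(round(gelman_rubin_rhat(mixed), 3))  # close to 1
print(round(gelman_rubin_rhat(stuck), 3))  # far above the 1.1 threshold
```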

For forecasting institutional performance, the model incorporated both historical university data (e.g., graduation and employment rates) and policy variables (digitalization and ESG adoption levels). This probabilistic framework allowed us to generate prediction intervals, providing not only point estimates but also quantified uncertainty, which is essential for decision-making under uncertainty in higher education governance.

3.5. Integration of Quantitative Models in QA Frameworks

To enhance the robustness of quality assurance (QA) in higher education, it is essential to align advanced mathematical methodologies with established QA frameworks such as ISO 9001, the EFQM Excellence Model, and ESG principles. Hybrid analytical approaches that combine Data Envelopment Analysis (DEA), the Analytic Hierarchy Process (AHP), and Bayesian inference allow for multidimensional performance assessment, integrating both qualitative and quantitative dimensions of institutional evaluation [12,13,14]. This synergy bridges the gap between conventional accreditation procedures and dynamic, data-driven decision-making.

Table 2 summarizes the key methodologies in performance measurement and decision-making, highlighting their core applications, strengths, and limitations.

By applying such mathematical models, universities can enhance their QA systems in ways that are evidence-based, scalable, and responsive to complex institutional environments. Studies such as those by Salleh and Yassin [14] and Mishra [1] suggest that Bayesian networks and multi-criteria frameworks can significantly improve the adaptability and responsiveness of QA processes. Future research should focus on validating hybrid methodologies through empirical studies and pilot implementations, particularly those that combine model integration with digital platforms and real-time analytics [1].

4. Digital Education and Sustainability in Quality Assurance

This section briefly highlights how digital education technologies increasingly appear in quality assurance (QA) discussions, particularly as QA agencies incorporate digital learning indicators (e.g., student engagement metrics and online assessment integrity). Rather than presenting digitalization as a separate strand of QA, it frames digitalization as a supporting factor that complements established frameworks such as ESG, ISO, and accreditation procedures, and it focuses only on those aspects that directly contribute to QA [17].

4.1. The Role of Online Learning Platforms in Quality Assurance

Online learning platforms have transformed quality assurance (QA) practices in higher education by offering data-rich environments that support evidence-based evaluation, continuous improvement, and student-centered innovation. These platforms facilitate flexible, accessible, and personalized learning while simultaneously enabling institutions to track learner progress, instructional quality, and engagement metrics in real time [17]. The integration of adaptive learning technologies—powered by AI and machine learning—enables content to be dynamically adjusted to each student’s performance, thereby supporting differentiated instruction and equity in learning outcomes [18].

Learning analytics tools embedded within platforms such as Moodle, Canvas, or Blackboard help universities monitor participation rates, identify at-risk students, and inform pedagogical interventions. According to Viberg et al. [19], this alignment of digital analytics with QA frameworks allows institutions to address performance gaps proactively and make strategic adjustments to curricula and teaching practices. Complementing this perspective, Sun and Rueda [20] emphasize that situational interest, computer self-efficacy, and self-regulation are critical factors shaping student engagement in distance education.

Nevertheless, the adoption of digital learning technologies introduces several QA-relevant challenges:

Student engagement and motivation: Limited face-to-face interaction can reduce student engagement. QA systems must incorporate interactive content standards, virtual collaboration rubrics, and engagement metrics to ensure quality delivery [2].

Assessment integrity and academic honesty: Online education raises concerns regarding cheating and plagiarism. Institutions are adopting AI-supported proctoring, plagiarism detection tools, and alternative assessment designs to maintain academic standards [1].

Bridging the digital divide: Socioeconomic disparities in access to technology can negatively affect student learning. QA frameworks must include equity indicators, digital readiness metrics, and policy benchmarks to ensure inclusive access [2].

By integrating these considerations into QA systems, universities can effectively manage the digital transition while preserving academic rigor, transparency, and continuous improvement in the evolving higher education landscape.

4.2. Big Data and AI in Higher Education Quality Assurance

The application of big data analytics and AI has significantly enhanced decision-making in higher education quality assurance. These technologies allow institutions to achieve the following:

Analyze student performance trends and identify at-risk students [1].

Predict dropout risks and implement proactive intervention strategies [2].

Optimize faculty workload distribution and improve institutional resource allocation [2].

Enhance curriculum development by identifying learning gaps and instructional weaknesses [2].

AI-driven tools have become critical assets in improving quality assurance processes. Some of the most widely used applications include the following:

Automated Grading and Feedback Systems—AI-based grading tools enable faster, more consistent assessment of assignments and exams, reducing faculty workload while maintaining objectivity [10]. In programming and STEM education, such tools provide scalable, consistent, and timely assessment, improving feedback quality and instructional efficiency [23,24].

Plagiarism Detection and Academic Integrity—Machine learning-powered plagiarism detection software helps institutions maintain academic standards and research credibility [21]. In parallel, hybrid data mining approaches that integrate supervised and unsupervised learning techniques further illustrate the predictive capacity of AI to support academic integrity and enhance institutional decision-making [22].

AI Chatbots and Virtual Assistants—Universities increasingly deploy AI-powered chatbots to assist students with administrative inquiries, course guidance, and academic support, improving overall institutional efficiency [25].

By leveraging data-driven insights, universities can develop continuous improvement strategies, ensuring that education quality aligns with evolving student needs and global standards.

4.3. Sustainability in Higher Education: ESG Implementation

As environmental and social responsibility becomes integral to institutional governance, universities are under growing pressure to align with sustainability objectives. The implementation of corporate ESG principles has reshaped higher education management, emphasizing the following:

Green Campus Initiatives—Universities are adopting energy-efficient buildings, renewable energy sources, and carbon neutrality strategies to minimize their ecological footprint [26,27,28].

Sustainable Education Policies—Institutions are integrating sustainability-focused curricula into academic programs to educate future leaders on environmental responsibility and sustainable innovation [27,29].

Waste Reduction and Resource Optimization—Many universities implement recycling programs, the digitalization of administrative processes, and eco-friendly procurement policies to reduce operational waste [28].

By embedding corporate ESG strategies into higher education policies, institutions not only enhance academic excellence but also contribute to a more sustainable future. Recent studies emphasize the need for corporate ESG integration in curricula to promote socially responsible and sustainable education models [27,29].

5. Case Studies and Practical Applications

The application of quantitative decision-making models, digital learning innovations, and AI-driven analytics in higher education has significantly improved institutional performance assessment and quality assurance. This section presents case studies that demonstrate the impact of DEA, AHP, Bayesian forecasting, multi-criteria optimization, and AI on university rankings, decision-making, and sustainability.

5.1. DEA and AHP Applications in University Performance Comparisons

This subsection presents empirical case studies based on comparative assessments of European universities that have implemented quantitative QA methodologies, particularly DEA and AHP, to evaluate performance outcomes and institutional efficiency. DEA serves as a robust tool for evaluating relative efficiency by analyzing input–output relationships, facilitating the identification of best practices among higher education institutions. AHP complements this approach by systematically prioritizing key performance indicators, including research output, faculty-to-student ratio, and international recognition.

Empirical research on European universities has demonstrated that institutions characterized by high research productivity and well-balanced faculty-to-student ratios generally achieve superior efficiency rankings [15]. The integrated application of DEA and AHP enables policymakers to pinpoint performance deficiencies, implement targeted interventions, and optimize resource allocation, thereby enhancing institutional competitiveness in an increasingly globalized academic landscape.

For instance, the University of Twente (Netherlands) and the Technical University of Munich (Germany) have both implemented DEA-based benchmarking tools to assess departmental efficiency. Similarly, the University of Edinburgh has piloted AHP-based decision frameworks to evaluate faculty performance indicators.

5.2. Digital Learning Innovations and Their Impact on Quality Assurance

The digital transformation of higher education has introduced a new generation of technologies—including online learning platforms, AI-powered tutoring systems, and adaptive educational environments—that are increasingly embedded into institutional quality assurance (QA) frameworks. These tools not only enhance flexibility and personalization in learning but also provide rich data streams that support continuous monitoring, formative evaluation, and strategic decision-making [2,17].

Learning management systems (LMSs) such as Moodle, Blackboard, and Canvas now include integrated analytics dashboards that track key performance indicators, including student engagement, assessment completion, and content interaction patterns. These indicators offer early signals of learning difficulties, which faculty can use to implement timely interventions [19,20,21,22,30,31]. In line with these efforts, Jayaprakash et al. [23] developed an early alert system demonstrating how learning analytics can proactively identify academically at-risk students and support timely interventions. Building on these approaches, recent research highlights how integrating AI and learning analytics can enable data-driven pedagogical decisions and personalized interventions that further enhance teaching and learning effectiveness [17,20,23,31]. For example, predictive models can identify students at risk of dropout by analyzing behavioral and performance trends, enabling targeted academic support that improves retention rates and course satisfaction [2].
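A predictive early-alert model of the kind described above can be sketched as a two-feature logistic regression trained by gradient descent. This is a minimal illustration under stated assumptions, not the Jayaprakash et al. system or any LMS vendor's API: the features (weekly LMS hours and assignment-completion rate), the training records, the helper names, and the 0.5 alert threshold are all hypothetical.

```python
import math

# Hypothetical training records (illustrative only):
# (weekly LMS hours, assignment completion rate, dropped_out: 1 = yes, 0 = no)
RECORDS = [
    (1.0, 0.40, 1), (2.0, 0.55, 1), (1.5, 0.30, 1), (3.0, 0.50, 1),
    (6.0, 0.90, 0), (5.0, 0.85, 0), (7.0, 0.95, 0), (4.5, 0.80, 0), (3.5, 0.75, 0),
]


def sigmoid(z):
    z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in math.exp
    return 1.0 / (1.0 + math.exp(-z))


def train(rows, lr=0.1, epochs=2000):
    """Fit a two-feature logistic regression by batch gradient descent."""
    features = list(zip(*[(h, c) for h, c, _ in rows]))
    means = [sum(f) / len(f) for f in features]
    stds = [math.sqrt(sum((v - m) ** 2 for v in f) / len(f)) for f, m in zip(features, means)]
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for h, c, y in rows:
            x = [(v - m) / s for v, m, s in zip((h, c), means, stds)]  # standardized features
            err = sigmoid(b + w[0] * x[0] + w[1] * x[1]) - y  # log-loss gradient w.r.t. the logit
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(rows) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(rows)
    return w, b, means, stds


def dropout_risk(model, hours, completion):
    """Predicted probability that a student with these indicators drops out."""
    w, b, means, stds = model
    x = [(v - m) / s for v, m, s in zip((hours, completion), means, stds)]
    return sigmoid(b + w[0] * x[0] + w[1] * x[1])


model = train(RECORDS)
for student, (hours, completion) in {"S1": (1.0, 0.30), "S2": (6.5, 0.90)}.items():
    risk = dropout_risk(model, hours, completion)
    print(student, round(risk, 3), "FLAG" if risk >= 0.5 else "ok")
```

In practice an institution would validate such a model on held-out cohorts and audit it for bias before using its flags to trigger interventions.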

A widely cited case from Arizona State University demonstrated that integrating adaptive AI-based learning technologies into undergraduate mathematics courses led to a 20% increase in course completion and improved grades, particularly among first-generation college students [17,19]. These empirical results illustrate how digital tools can support equity-focused QA objectives through data-informed personalization.

However, the growing use of digital systems also introduces new QA challenges. Ensuring assessment integrity requires robust online proctoring and plagiarism detection tools, while maintaining inclusivity demands attention to the digital divide in student populations [1]. Furthermore, questions of data privacy, algorithmic bias, and transparency in AI-based feedback systems must be addressed through clear institutional policies and quality criteria.

When responsibly implemented, digital learning innovations significantly enhance the responsiveness, scalability, and evidence base of QA systems in higher education. They enable institutions to move beyond periodic audits and toward continuous, student-centered improvement models that align with both regulatory standards and educational best practices [3].

5.3. Bayesian Forecasting Models for Educational Trends

Bayesian forecasting is widely used in higher education policy and institutional planning, allowing universities to predict enrollment trends, dropout rates, and funding needs by integrating historical data with real-time insights.

A North American university used Bayesian forecasting to analyze enrollment trends over a ten-year period, considering factors such as economic conditions, tuition policies, and demographic changes. The model successfully predicted enrollment fluctuations, allowing the institution to adjust admission strategies and financial aid programs proactively [1].

Such predictive analytics help universities respond to shifting educational demands, allocate resources effectively, and reduce student attrition rates by implementing early intervention programs.
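How Bayesian forecasting combines historical data with new observations can be illustrated with a conjugate Beta–Binomial model for a dropout rate. This is a minimal sketch, not the North American case above: the prior, the cohort figures, and the function names are hypothetical, and the credible interval is estimated by Monte Carlo using the standard-library `random.Random.betavariate`.

```python
import random


def update_dropout_prior(alpha, beta, dropouts, cohort_size):
    """Conjugate update: Beta(alpha, beta) prior + binomial data -> Beta posterior."""
    return alpha + dropouts, beta + (cohort_size - dropouts)


def beta_mean(alpha, beta):
    return alpha / (alpha + beta)


def credible_interval(alpha, beta, level=0.90, draws=20000, seed=42):
    """Equal-tailed credible interval estimated from Monte Carlo draws."""
    rng = random.Random(seed)
    samples = sorted(rng.betavariate(alpha, beta) for _ in range(draws))
    tail = int((1 - level) / 2 * draws)
    return samples[tail], samples[draws - 1 - tail]


# Hypothetical prior: historical dropout rate of 12%, weighted like 200 past students.
prior = (24, 176)
# Hypothetical new evidence: 45 dropouts in a first-year cohort of 300 (15%).
posterior = update_dropout_prior(*prior, dropouts=45, cohort_size=300)
print("posterior:", posterior)                   # → (69, 431)
print("posterior mean:", beta_mean(*posterior))  # → 0.138
print("90% interval:", credible_interval(*posterior))
```

The posterior mean (13.8%) falls between the historical rate (12%) and the new cohort's rate (15%); the prior's effective sample size (here 200) controls how strongly history resists new data.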

5.4. Multi-Criteria Optimization in University Rankings

University rankings are influenced by a range of performance indicators, including academic reputation, research impact, student satisfaction, and employability rates. Multi-criteria decision-making (MCDM) models allow universities to balance these diverse factors effectively.

A global university ranking study integrated multiple criteria, such as faculty qualifications, international collaborations, and student success rates, to refine its evaluation process. The findings highlighted how institutions with strategic investments in faculty development and interdisciplinary research partnerships consistently ranked higher [3].

By using data-driven optimization approaches, universities can identify their strengths and weaknesses, align institutional priorities with ranking methodologies, and enhance overall competitiveness in the global education market.
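As one concrete MCDM technique, TOPSIS ranks alternatives by their relative closeness to an ideal profile. It is used here only as a well-known example; the section does not say which MCDM method the cited ranking study applied, and the universities, criterion values, and weights below are hypothetical.

```python
import math


def topsis(matrix, weights, benefit):
    """Return TOPSIS closeness coefficients (0 = worst, 1 = best) for each row of `matrix`."""
    m, n = len(matrix), len(weights)
    # 1. Vector-normalize each criterion column and apply its weight.
    norms = [math.sqrt(sum(matrix[i][j] ** 2 for i in range(m))) for j in range(n)]
    weighted = [[weights[j] * matrix[i][j] / norms[j] for j in range(n)] for i in range(m)]
    # 2. Ideal and anti-ideal points (max for benefit criteria, min for cost criteria).
    columns = list(zip(*weighted))
    ideal = [max(col) if benefit[j] else min(col) for j, col in enumerate(columns)]
    anti_ideal = [min(col) if benefit[j] else max(col) for j, col in enumerate(columns)]
    # 3. Relative closeness to the ideal point, using Euclidean distances.
    scores = []
    for row in weighted:
        d_best, d_worst = math.dist(row, ideal), math.dist(row, anti_ideal)
        scores.append(d_worst / (d_best + d_worst))
    return scores


# Hypothetical data: reputation (0-100), citations per faculty, satisfaction (%), employability (%)
universities = ["U1", "U2", "U3", "U4"]
matrix = [
    [85, 12.0, 88, 90],
    [70, 9.5, 84, 86],
    [60, 7.0, 80, 82],
    [78, 8.0, 86, 84],
]
weights = [0.3, 0.3, 0.2, 0.2]       # hypothetical weights, summing to 1
benefit = [True, True, True, True]   # all four criteria: higher is better

ranked = sorted(zip(universities, topsis(matrix, weights, benefit)), key=lambda p: -p[1])
for name, score in ranked:
    print(name, round(score, 3))
```

A university that leads on every criterion coincides with the ideal point and scores 1; one that trails on every criterion scores 0. Cost criteria (for example, students per faculty member) would be marked `False` in `benefit`.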

5.5. AI-Based Quality Assurance in Higher Education

Artificial intelligence is revolutionizing quality assurance in higher education by enabling automated grading, plagiarism detection, and predictive analytics. AI-driven tools enhance the efficiency of academic processes, allowing universities to monitor student progress, improve teaching strategies, and optimize institutional management.

A leading Asian university implemented an AI-driven student performance monitoring system, which analyzed attendance records, coursework submissions, and engagement metrics to identify at-risk students. This system enabled targeted intervention programs, significantly reducing dropout rates and improving overall student success [2].

AI is also used in faculty performance evaluation, curriculum development, and accreditation processes, ensuring continuous quality improvement and data-driven decision-making. However, ethical concerns related to privacy, bias in algorithms, and over-reliance on automation must be carefully managed to maintain fairness and transparency in education.

6. Empirical Results and Comparative Analysis

To strengthen the findings of this study, an empirical analysis was conducted using a synthetic dataset modeled on internationally ranked universities (see Section 6.4). This section presents efficiency scores, key performance indicators, and comparative assessments derived from Data Envelopment Analysis (DEA), the Analytic Hierarchy Process (AHP), and Bayesian modeling.

6.1. DEA-Based University Efficiency Assessment

The efficiency assessment of universities through Data Envelopment Analysis (DEA) provides a structured evaluation of institutional effectiveness by comparing the resources each institution consumes with the results it produces. DEA identifies relative efficiency by benchmarking each university against the best-performing universities in the sample [12]. The analysis incorporates multiple input factors, such as faculty size, research funding, and operational costs, and output indicators such as graduation rates, employment outcomes, and citation impact.

Findings from the DEA assessment indicate that universities with balanced faculty-to-student ratios and strategic resource allocation demonstrate significantly higher efficiency scores (Table 3). The most efficient institutions, those with efficiency scores close to 1, tend to exhibit well-integrated academic strategies that emphasize research productivity, strong student engagement, and optimized funding distribution. Lower-performing universities, on the other hand, often suffer from underutilized resources, inefficient administrative structures, and misaligned strategic priorities.

Table 3, derived from the DEA, indicates significant variations in university efficiency. University A exhibits the highest efficiency score (0.95), primarily attributed to its balanced faculty–student ratio, robust research funding, and superior performance metrics such as graduation and employment rates. Conversely, Universities D and E display lower efficiency scores (0.78 and 0.76, respectively), indicating less effective resource utilization, potentially resulting from a lack of strategic focus and inefficient administrative processes.

To further validate these findings, a sensitivity analysis was conducted to assess the robustness of the DEA results to variations in the input–output parameters. University efficiency rankings remained stable when faculty size varied within ±10%, whereas efficiency scores were notably sensitive to fluctuations in research funding. This underscores the importance of stable funding strategies, particularly for universities with lower efficiency scores, if they are to maintain or improve their efficiency.
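The output-oriented BCC model specified in Section 6.4 and the ±10% faculty-size check described above can be sketched with SciPy's `scipy.optimize.linprog` (HiGHS solver). This is a minimal illustration under stated assumptions, not the study's pyDEA implementation: the five universities and all input and output values are hypothetical and do not reproduce Table 3 or Table 5, and the helper names (`bcc_output_efficiency`, `faculty_sensitivity`) are illustrative.

```python
from scipy.optimize import linprog

# Hypothetical data for five anonymized universities (NOT the values in Table 3 or Table 5).
UNIVERSITIES = ["A", "B", "C", "D", "E"]
# Inputs: full-time faculty (FTF), research funding (RF, M USD), operational costs (OC, M USD)
INPUTS = [[1200, 150, 300], [900, 90, 220], [1500, 160, 380], [800, 60, 200], [850, 65, 210]]
# Outputs: graduation rate (GR, %), employment rate (ER, %), citation impact (CI)
OUTPUTS = [[92, 90, 8.5], [85, 84, 6.0], [88, 86, 7.2], [80, 78, 4.8], [76, 74, 4.1]]


def bcc_output_efficiency(inputs, outputs, o):
    """Output-oriented BCC (variable returns to scale) DEA score of unit `o`.

    Variables are [phi, lambda_1..lambda_n]; phi is the largest factor by which
    unit o's outputs could be expanded by a convex combination of units that
    uses no more of any input. The score 1 / phi equals 1 for efficient units.
    """
    n = len(inputs)
    c = [-1.0] + [0.0] * n  # linprog minimizes, so minimize -phi
    a_ub, b_ub = [], []
    for i in range(len(inputs[0])):  # sum_j lambda_j * x_ij <= x_io
        a_ub.append([0.0] + [inputs[j][i] for j in range(n)])
        b_ub.append(inputs[o][i])
    for r in range(len(outputs[0])):  # phi * y_ro - sum_j lambda_j * y_rj <= 0
        a_ub.append([outputs[o][r]] + [-outputs[j][r] for j in range(n)])
        b_ub.append(0.0)
    a_eq, b_eq = [[0.0] + [1.0] * n], [1.0]  # convexity (VRS): sum_j lambda_j = 1
    res = linprog(c, A_ub=a_ub, b_ub=b_ub, A_eq=a_eq, b_eq=b_eq,
                  bounds=[(0, None)] * (n + 1), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return 1.0 / res.x[0]


def scores(inputs, outputs=OUTPUTS):
    return {u: round(bcc_output_efficiency(inputs, outputs, k), 4) for k, u in enumerate(UNIVERSITIES)}


def ranking(score_map):
    return sorted(score_map, key=lambda u: (-score_map[u], u))  # ties broken alphabetically


def faculty_sensitivity(factors=(0.9, 1.1)):
    """Perturb one university's FTF at a time by each factor; report whether the ranking holds."""
    baseline = ranking(scores(INPUTS))
    stable = {}
    for k, u in enumerate(UNIVERSITIES):
        for f in factors:
            perturbed = [row[:] for row in INPUTS]
            perturbed[k][0] *= f  # column 0 = FTF; column 1 would probe research funding
            stable[(u, f)] = ranking(scores(perturbed)) == baseline
    return baseline, stable


if __name__ == "__main__":
    print(scores(INPUTS))
    baseline, stable = faculty_sensitivity()
    print("baseline ranking:", baseline)
    print("ranking unchanged in all ±10% FTF cases:", all(stable.values()))
```

The check perturbs one university at a time because a radial DEA score does not change when an input is rescaled by the same factor for every university; a uniform ±10% change in faculty size could therefore never alter the ranking.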

The detailed analysis indicates significant differences in efficiency attributable to strategic governance decisions and regional funding disparities. Northern European institutions typically benefit from structured funding mechanisms and systematic governance models, enhancing resource utilization efficiency. In contrast, Southern European universities often face governance and resource allocation challenges, negatively impacting their overall efficiency scores.

6.2. AHP Analysis of Key Performance Indicators

The Analytic Hierarchy Process (AHP) was employed to systematically evaluate the relative importance of various key performance indicators (KPIs) in determining institutional rankings. This approach follows a structured multi-criteria decision-making framework that allows for the prioritization of competing factors based on expert judgment. To ensure methodological rigor, a Delphi method was utilized to engage a panel of higher education experts, ensuring consensus and reducing potential biases in the weighting process.

A hierarchical decision matrix was constructed in which experts provided pairwise comparisons of the critical factors: research output, faculty–student ratio, internationalization, and student employability. The consistency of these comparisons was assessed using Saaty’s Consistency Ratio (CR) to validate the reliability of the assigned weights [13]. The results, presented in Table 4, illustrate the relative importance of each criterion, with research output (0.40) and faculty–student ratio (0.25) emerging as the most influential factors in university rankings.
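Saaty's eigenvector method and Consistency Ratio can be sketched in plain Python, with power iteration standing in for an eigen-solver. The pairwise matrix below is hypothetical, since the panel's actual judgments are not reported; it yields weights of roughly 0.43, 0.23, 0.19, and 0.15, close to but not identical to the reported weights, and a CR of about 0.02, well below the conventional 0.10 threshold.

```python
# Saaty's random consistency index (RI) for matrices of size n = 1..10
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}

CRITERIA = ["Research output", "Faculty–student ratio", "Internationalization", "Student employability"]

# Hypothetical reciprocal judgments on Saaty's 1-9 scale: entry [i][j] = importance of i over j.
PAIRWISE = [
    [1, 2, 2, 3],
    [1 / 2, 1, 1, 2],
    [1 / 2, 1, 1, 1],
    [1 / 3, 1 / 2, 1, 1],
]


def ahp_weights(matrix, iterations=100):
    """Principal-eigenvector weights via power iteration, plus an estimate of lambda_max."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        v = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        w = [x / total for x in v]  # renormalize so the weights sum to 1
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    return w, lambda_max


def consistency_ratio(lambda_max, n):
    ci = (lambda_max - n) / (n - 1)  # consistency index
    return ci / RANDOM_INDEX[n]


weights, lambda_max = ahp_weights(PAIRWISE)
cr = consistency_ratio(lambda_max, len(PAIRWISE))
for name, w in zip(CRITERIA, weights):
    print(f"{name}: {w:.2f}")
print(f"CR = {cr:.3f}", "(acceptable)" if cr < 0.10 else "(revise judgments)")
```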

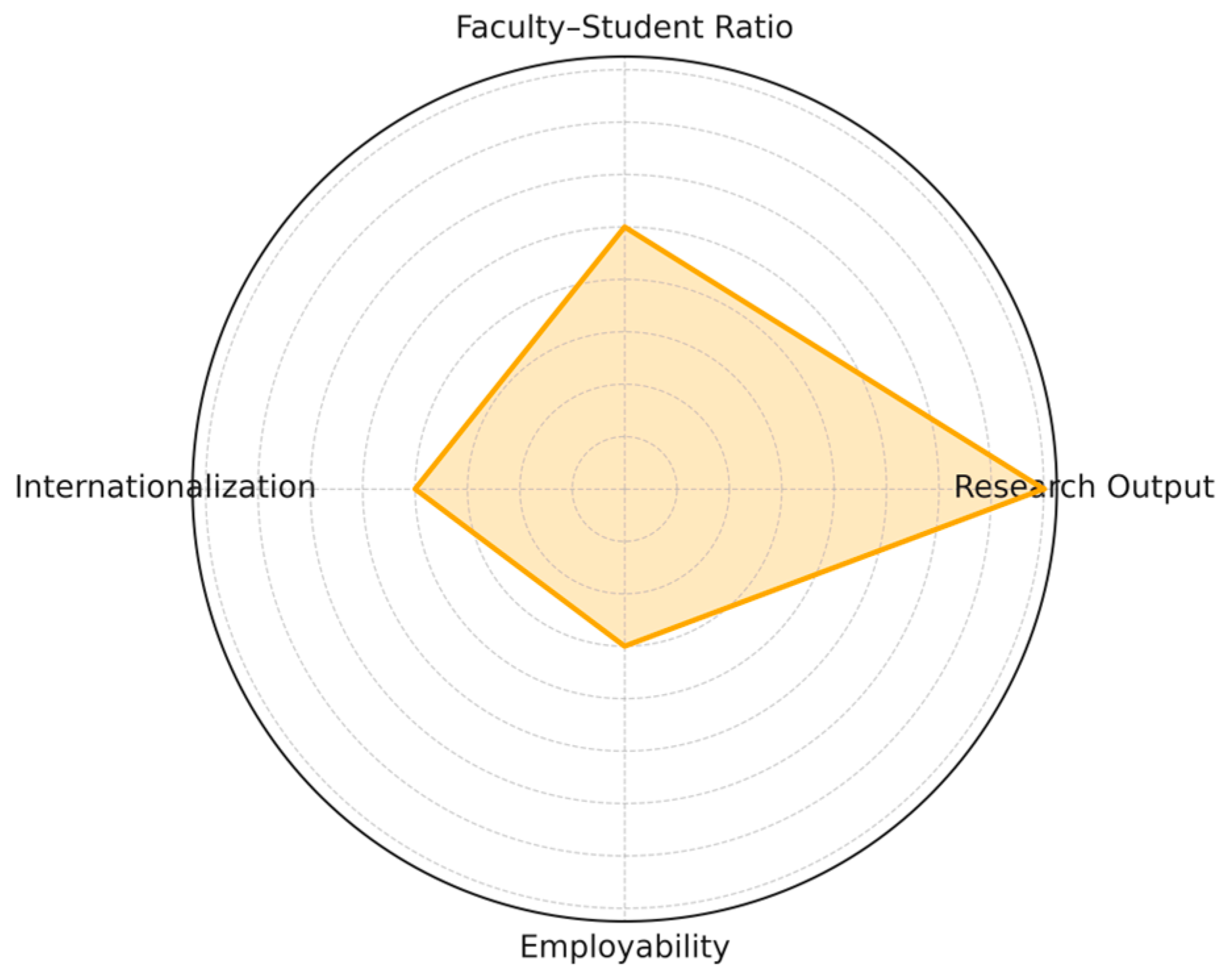

Figure 3 provides a clear visual representation of the criteria weightings derived from the AHP analysis, highlighting that research output and faculty–student ratio are the predominant factors influencing institutional rankings. This radar diagram facilitates the quick identification of institutional performance priorities and strategic planning areas for universities aiming to improve their ranking positions.

The AHP results highlight research output (weight 0.40) and faculty–student ratio (weight 0.25) as the most critical factors in institutional evaluations. These weightings reflect the growing global emphasis on research excellence and teaching quality. Integrating AHP into ranking methodologies makes the weighting of criteria explicit, which supports a more transparent and reproducible evaluation of institutional performance. These insights are therefore particularly relevant for decision-makers, offering clear guidance for prioritizing resource allocation toward research capabilities and educational quality. Such a strategic focus can substantially improve institutional competitiveness and strengthen institutions' positions in international rankings over the long term.

Benchmarking against the most efficient institutions (such as University A) revealed best practices including the strategic alignment of faculty-to-student ratios, focused research funding allocation, and the comprehensive integration of sustainability initiatives. Less efficient institutions could enhance their performance by adopting these practices, thereby narrowing efficiency gaps.

6.3. Bayesian Modeling for Performance Prediction

Bayesian predictive modeling was applied to forecast university performance trajectories based on historical data and observed trends in higher education governance. By integrating prior knowledge of institutional strengths with real-time performance metrics, the model provides probabilistic estimates of future academic success [14,30].

The key insights from Bayesian analysis reveal that universities investing in AI-driven learning technologies, sustainability initiatives, and digital transformation strategies are more likely to maintain or improve their rankings in the next five years (Figure 4).

The comparative evaluation of QA models suggests that no single framework comprehensively addresses all dimensions of institutional performance. Instead, universities benefit from adopting a strategic combination of standardized, excellence-driven, and sustainability-focused models to achieve holistic quality assurance. Supporting this, detailed interpretations of Bayesian forecasts highlight specific institutional strategies strongly correlated with improved performance predictions. Institutions strategically integrating digital education initiatives, advanced analytics, and ESG principles into their policies exhibited significantly higher probabilities of enhanced future performance. Conversely, institutions lacking strategic investments in these critical areas face increased risks of declining competitive positions. Thus, universities should emphasize adaptive and integrative management practices to effectively respond to emerging trends in higher education governance.

6.4. Methodological Notes and Data Appendix Summary

To ensure the robustness and transparency of the empirical results, a synthetic dataset representing the performance metrics of five anonymized universities (labeled University A to E) was developed. The dataset structure follows commonly used indicators in global higher education benchmarking systems and quality assurance (QA) frameworks. These data reflect realistic values aligned with publicly available university rankings and QA reports.

The Data Envelopment Analysis (DEA) was applied using an output-oriented BCC model, implemented in Python. The following variables were used (Table 5):

Inputs:

- Full-Time Faculty (FTF): Number of academic staff involved in teaching and research.
- Research Funding (RF): Annual research budget (in million USD).
- Operational Costs (OCs): Annual operational expenditure (excluding research, in million USD).

Outputs:

- Graduation Rate (GR): Percentage of students completing their degree programs.
- Employment Rate (ER): Percentage of graduates employed within one year.
- Citation Impact (CI): Average citation index per faculty member.

For the Analytic Hierarchy Process (AHP), four key performance indicators were selected based on higher education priorities:

- Research output
- Faculty–student ratio
- Internationalization
- Student employability

A Delphi-driven expert panel consisting of five senior academic professionals was engaged to provide pairwise comparisons using structured matrices. Saaty’s Consistency Ratio (CR) was used to ensure logical coherence. The final weightings derived were as follows:

- Research output: 0.40
- Faculty–student ratio: 0.25
- Internationalization: 0.20
- Student employability: 0.15

These weights served as a basis for comparative institutional ranking and performance alignment analysis.
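Using the reported weights for a comparative ranking can be sketched as a weighted sum of min–max-normalized indicators (simple additive weighting). The aggregation rule is an assumption, since the section does not state how the weights were combined, and the raw indicator values for Universities A to E are hypothetical.

```python
# Weights as reported in this section.
WEIGHTS = {
    "research_output": 0.40,
    "faculty_student_ratio": 0.25,
    "internationalization": 0.20,
    "student_employability": 0.15,
}

# Hypothetical raw indicators; all treated as "higher is better"
# (faculty_student_ratio is expressed here as faculty members per student).
RAW = {
    "A": {"research_output": 950, "faculty_student_ratio": 0.10, "internationalization": 0.35, "student_employability": 0.91},
    "B": {"research_output": 700, "faculty_student_ratio": 0.08, "internationalization": 0.30, "student_employability": 0.86},
    "C": {"research_output": 820, "faculty_student_ratio": 0.07, "internationalization": 0.28, "student_employability": 0.88},
    "D": {"research_output": 500, "faculty_student_ratio": 0.06, "internationalization": 0.20, "student_employability": 0.80},
    "E": {"research_output": 420, "faculty_student_ratio": 0.05, "internationalization": 0.15, "student_employability": 0.78},
}


def min_max(values):
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) if hi > lo else 1.0 for v in values]


def weighted_scores(raw, weights):
    """Composite score per university: sum of weight * min-max-normalized indicator."""
    names = sorted(raw)
    totals = dict.fromkeys(names, 0.0)
    for criterion, weight in weights.items():
        normalized = min_max([raw[name][criterion] for name in names])
        for name, value in zip(names, normalized):
            totals[name] += weight * value
    return totals


composite = weighted_scores(RAW, WEIGHTS)
for name in sorted(composite, key=composite.get, reverse=True):
    print(name, round(composite[name], 3))
```

With these made-up values the ordering is A, C, B, D, E: C overtakes B because its stronger research output carries the largest weight.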

The Bayesian analysis incorporated both prior knowledge and observed trends to forecast university performance over a five-year horizon. The model variables included the following:

- Time series data on graduation and employment rates
- Institutional investment scores in AI and digital transformation (scale: 0–5)
- ESG/sustainability engagement (e.g., dedicated centers, published commitments)
- Projected funding trends and student enrollment trajectories

The model was implemented using PyMC3 (version 3.11.5) in Python (version 3.10), and posterior distributions were estimated via Markov Chain Monte Carlo (MCMC) sampling. Forecast intervals were constructed to visualize the probability range of future institutional performance shifts.
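The study reports fitting its model in PyMC3. As a dependency-free illustration of what MCMC sampling and a forecast interval involve, the sketch below uses a hand-written random-walk Metropolis sampler for a single linear trend in a hypothetical graduation-rate series, then builds a five-year posterior predictive interval. The data, priors, and known noise level are assumptions, and the paper's actual model, which also includes AI-investment, ESG, funding, and enrollment variables, is not reproduced here.

```python
import math
import random

# Hypothetical graduation-rate series (%) for one university over eight years.
RATES = [78.0, 78.9, 80.1, 80.8, 82.2, 82.9, 84.1, 85.0]
YEARS = list(range(len(RATES)))
T_MEAN = sum(YEARS) / len(YEARS)  # centering time reduces intercept/slope correlation
SIGMA = 1.0  # assumed known observation noise (percentage points)


def log_posterior(a, b):
    """Unnormalized log posterior for rate_t = a + b * (t - T_MEAN) + Normal(0, SIGMA) noise."""
    # Assumed weakly informative priors: a ~ Normal(75, 20), b ~ Normal(0, 5)
    lp = -0.5 * ((a - 75.0) / 20.0) ** 2 - 0.5 * (b / 5.0) ** 2
    for t, y in zip(YEARS, RATES):
        lp -= 0.5 * ((y - (a + b * (t - T_MEAN))) / SIGMA) ** 2
    return lp


def metropolis(n_samples=20000, burn_in=2000, step=0.2, seed=1):
    """Random-walk Metropolis sampler returning posterior draws of (a, b)."""
    rng = random.Random(seed)
    a, b = sum(RATES) / len(RATES), 0.0
    current = log_posterior(a, b)
    draws = []
    for i in range(burn_in + n_samples):
        a_new, b_new = a + rng.gauss(0.0, step), b + rng.gauss(0.0, step)
        proposed = log_posterior(a_new, b_new)
        # Accept with probability min(1, exp(proposed - current)).
        if proposed >= current or rng.random() < math.exp(proposed - current):
            a, b, current = a_new, b_new, proposed
        if i >= burn_in:
            draws.append((a, b))
    return draws


def forecast_interval(draws, horizon=5, level=0.90, seed=2):
    """Equal-tailed posterior predictive interval `horizon` years after the last observation."""
    rng = random.Random(seed)
    t_future = YEARS[-1] + horizon
    preds = sorted(a + b * (t_future - T_MEAN) + rng.gauss(0.0, SIGMA) for a, b in draws)
    tail = int((1 - level) / 2 * len(preds))
    return preds[tail], preds[len(preds) - 1 - tail]


draws = metropolis()
slope = sum(b for _, b in draws) / len(draws)
print("posterior mean trend (points/year):", round(slope, 2))
print("90% forecast interval, 5 years ahead:", forecast_interval(draws))
```

A library such as PyMC3 automates the same steps (model specification, sampling, and posterior predictive simulation) with more efficient samplers and convergence diagnostics.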

Tools and Supplementary Information:

- DEA modeling: custom Python scripts with the pyDEA package
- AHP: Microsoft Excel, including consistency checks
- Bayesian inference: Python (PyMC3 library)

7. Discussion

The findings should also be interpreted with a clear distinction between program-level and institutional QA. While DEA and AHP are particularly suited for benchmarking academic programs and curricula, Bayesian forecasting and ESG-aligned frameworks primarily address institutional-level governance and strategy. Recognizing this distinction improves the applicability of our hybrid QA framework.

This study highlights the increasing importance of employing advanced quantitative and data-driven methodologies in higher education quality assurance (QA). Integrating methods such as Data Envelopment Analysis (DEA), the Analytic Hierarchy Process (AHP), and Bayesian modeling provides institutions with robust and objective frameworks to enhance benchmarking accuracy, strategic resource allocation, and the identification of performance inefficiencies not captured by traditional evaluation processes, such as peer reviews and accreditation audits [3].

DEA contributes significantly by offering insights into institutional efficiency through comprehensive input–output analysis, pinpointing areas where resource utilization can be optimized [12,15,32,33]. AHP complements this by systematically prioritizing critical performance indicators such as research output and teaching quality, thus informing targeted improvement strategies. Bayesian modeling further enriches institutional planning by providing predictive analytics for enrollment patterns, dropout risks, and resource management, facilitating proactive decision-making [1].

Despite these methodological advantages, this study acknowledges several critical limitations. The effectiveness of these advanced methods depends significantly on strong institutional commitment, adequate resources, comprehensive training, and a fundamental shift toward a data-driven organizational culture. Challenges also arise from variability in the selection of input–output variables in DEA, which can affect the consistency and generalizability of results. Additionally, ethical concerns associated with AI-driven analytics, such as algorithmic biases, explainability, and data privacy, necessitate further exploration and careful application [1].

This discussion also underscores the transformative role of sustainability initiatives and digital advancements in shaping contemporary QA practices. Institutions effectively integrating AI and big data analytics demonstrate enhanced adaptability, responsiveness, and long-term strategic sustainability [17,18]. Furthermore, the alignment of QA frameworks with Environmental, Social, and Governance (ESG) principles increasingly influences university reputations and rankings, highlighting the broader implications of innovative QA practices in higher education governance [11].

Future research should focus on examining the integration and practical effectiveness of hybrid QA models that combine traditional assessment approaches with AI-driven analytics and ESG-aligned sustainability indicators. Additional empirical investigations are required to evaluate the long-term impacts of these methodologies on institutional effectiveness, educational outcomes, and governance quality. Developing standardized and widely accepted benchmarking frameworks remains crucial to ensure comparability and consistency in performance evaluations across the global higher education landscape [2].

Despite the advantages of data-driven models, caution must be exercised regarding potential pitfalls. Quantitative tools may oversimplify complex institutional dynamics or inadvertently reinforce systemic biases, especially if input data are incomplete or skewed. Moreover, AI-driven quality assurance raises important ethical concerns related to data privacy, explainability, and fairness. A balanced QA approach should combine analytical rigor with qualitative evaluations and stakeholder perspectives.

8. Conclusions

This paper contributes to the academic literature by proposing a hybrid quality assurance framework that integrates traditional standards (ESG, EFQM, and ISO 9001) with advanced quantitative techniques (DEA, AHP, and Bayesian modeling). To the best of the author’s knowledge, this is one of the first studies to apply these models in a unified, comparative context and assess their practical implications through empirical simulation.

This study highlights the imperative of advancing quality assurance (QA) mechanisms in higher education through innovative, data-driven approaches. Limitations inherent in traditional QA methods, such as accreditation procedures and peer review, underscore the need for more sophisticated, objective, and efficient assessment tools. The findings confirm that integrating quantitative methodologies, particularly Data Envelopment Analysis (DEA), the Analytic Hierarchy Process (AHP), and Bayesian modeling, equips universities with robust mechanisms to enhance decision-making precision, resource allocation efficiency, and institutional benchmarking effectiveness [3].

Central to these advancements is the essential shift toward cultivating a strong data-driven institutional culture aimed at improving educational outcomes, transparency, and accountability. While quantitative methods offer improved accuracy and scalability, their successful implementation relies heavily on comprehensive training, faculty engagement, and strategic investments in digital infrastructure. Institutions that integrate AI and big data into their QA processes tend to demonstrate higher adaptability, operational resilience, and long-term strategic success [17,18,34,35,36].

The broader implications of these innovations extend well beyond technical improvements. Universities equipped with advanced analytics are better positioned to pursue academic excellence, attract a global student body, and enhance their competitiveness in research funding. Moreover, QA strategies aligned with Environmental, Social, and Governance (ESG) frameworks are increasingly shaping institutional reputation and policy-making priorities across global higher education landscapes [11].

Future research should investigate the interplay between AI-driven analytics, sustainability initiatives, and QA practices to identify effective long-term strategies. In addition, the development of globally standardized benchmarking systems remains critical for ensuring comparability, consistency, and transparency in institutional performance evaluations.

While the integration of quantitative and AI-supported methods presents numerous benefits, it also raises valid concerns related to data privacy, algorithmic bias, institutional readiness, and the risk of reducing education quality to numeric abstractions. A balanced QA approach must therefore integrate qualitative insights, ethical safeguards, and stakeholder engagement to complement the analytical rigor of modern assessment frameworks [2].

Future research should explore the development of standardized benchmarking frameworks that allow the consistent cross-institutional comparison of QA outcomes. This could involve establishing open-access data repositories, shared performance metrics, and guidelines for hybrid evaluation methods applicable across diverse higher education systems.