Methods and Target Values Used to Evaluate Teaching Concepts, with a Particular Emphasis on the Incorporation of Digital Elements in Higher Education: A Systematic Review

Abstract

1. Introduction

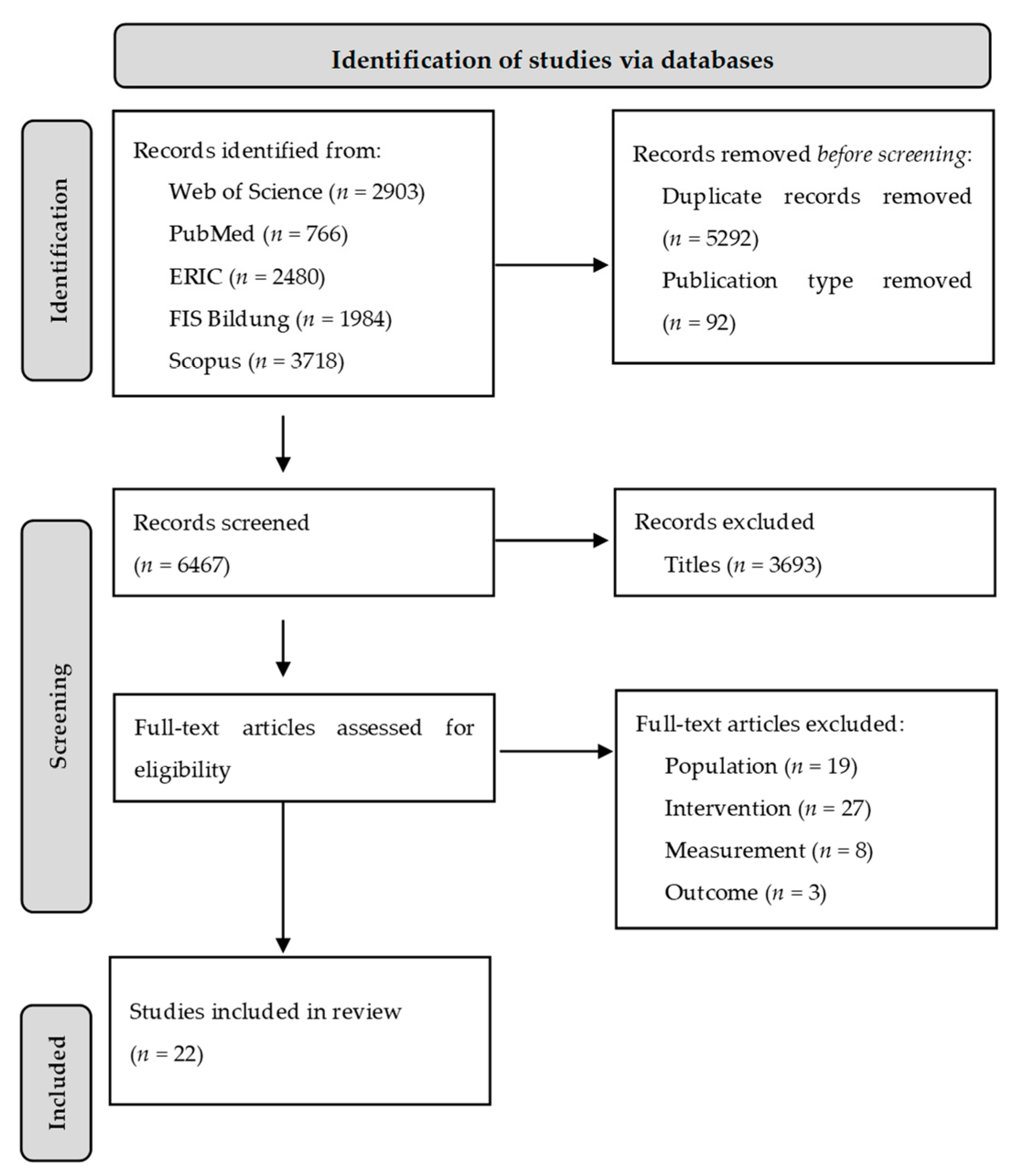

2. Methods and Material

- Participants had to be students enrolled in universities or universities of applied sciences, taking courses related to sports science, such as anatomy, exercise science, biology, biomechanics, health sciences, management, medicine, physiology, psychology, physical education, or statistics. Programs unrelated to sports studies, such as art or music, were excluded.

- The intervention had to be a comprehensive teaching concept implemented for at least one semester that integrated digital components (e.g., gaming or quiz formats, videos, podcasts) and explained how they were used, for example in a blended learning context.

- The study’s outcomes were required to include precise target values and the instruments used to measure them, as described in the scholarly publication. Outcomes related to ‘digital competency’ or ‘digital literacy’ were excluded from the scope of this analysis, as they have been extensively examined in previously published systematic reviews [11,12].

- The research design could be quantitative or qualitative, including cross-sectional, longitudinal, and pilot studies.

- Studies had to be accessible as full-text articles published in scientific journals, in either German or English.

- digital elements: online based, e-learning, online/digital learning/teaching/tool/education/method, e-teaching, technolog* tool, technology enhanced learning

- setting: university, higher education

- teaching concept: concept/s, approach/es

- evaluation: evaluat*, measur*, assessment, analysis, intervention, effectiveness, survey, test, exploration, impact, effect, investigation
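The four term groups above can be combined into a single Boolean search string. The following is a minimal sketch, assuming that terms within a group are joined with OR and that the groups are joined with AND. This combination, the expansion of the slash-separated shorthand into individual phrases, and the names `TERM_GROUPS`, `quote`, and `build_query` are illustrative assumptions, not the exact syntax submitted to the databases.

```python
from itertools import product

# Term groups copied from the list above. The slash shorthand
# ("online/digital learning/teaching/...") is expanded into every
# two-word combination, which is an assumed reading of the list.
TERM_GROUPS = {
    "digital elements": (
        ["online based", "e-learning"]
        + [f"{a} {b}" for a, b in product(
            ("online", "digital"),
            ("learning", "teaching", "tool", "education", "method"),
        )]
        + ["e-teaching", "technolog* tool", "technology enhanced learning"]
    ),
    "setting": ["university", "higher education"],
    "teaching concept": ["concept", "concepts", "approach", "approaches"],
    "evaluation": [
        "evaluat*", "measur*", "assessment", "analysis", "intervention",
        "effectiveness", "survey", "test", "exploration", "impact",
        "effect", "investigation",
    ],
}


def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes; leave single words bare."""
    return f'"{term}"' if " " in term else term


def build_query(groups: dict[str, list[str]]) -> str:
    """Join terms with OR inside each group and groups with AND (assumed logic)."""
    blocks = [
        "(" + " OR ".join(quote(term) for term in terms) + ")"
        for terms in groups.values()
    ]
    return " AND ".join(blocks)


if __name__ == "__main__":
    print(build_query(TERM_GROUPS))
```

Wildcard handling inside quoted phrases (for example `"technolog* tool"`) differs between databases, so the generated string would need to be adapted to each search interface.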

3. Results

3.1. Quantitative Methods

3.1.1. Evidence Level (A)

3.1.2. Evidence Level (B)

3.1.3. Evidence Level (C)

3.2. Qualitative Methods

4. Discussion

Strengths and Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jakoet-Salie, A.; Ramalobe, K. The Digitalization of Learning and Teaching Practices in Higher Education Institutions during the COVID-19 Pandemic. Teach. Public Adm. 2023, 41, 59–71.

- Rosak-Szyrocka, J.; Zywiolek, J.; Zaborski, A.; Chowdhury, S.; Hu, Y.-C. Digitalization of Higher Education around the Globe during COVID-19. IEEE Access 2022, 10, 59782–59791.

- Ødegaard, N.B.; Myrhaug, H.T.; Dahl-Michelsen, T.; Røe, Y. Digital Learning Designs in Physiotherapy Education: A Systematic Review and Meta-Analysis. BMC Med. Educ. 2021, 21, 48.

- Fuchs, K. The Difference between Emergency Remote Teaching and Online Learning. New Dir. Teach. Learn. 2020, 78, 37–47.

- Donkin, R.; Rasmussen, R. Student Perception and the Effectiveness of Kahoot!: A Scoping Review in Histology, Anatomy, and Medical Education. Anat. Sci. Educ. 2021, 14, 572–585.

- Noetel, M.; Griffith, S.; Delaney, O.; Sanders, T.; Parker, P.; Cruz, B.d.P.; Lonsdale, C. Video Improves Learning in Higher Education: A Systematic Review. Rev. Educ. Res. 2021, 91, 204–236.

- Tan, C.; Yue, W.-G.; Fu, Y. Effectiveness of Flipped Classrooms in Nursing Education: Systematic Review and Meta-Analysis. Chin. Nurs. Res. 2017, 4, 192–200.

- Braun, E.; Gusy, B.; Leidner, B.; Hannover, B. Das Berliner Evaluationsinstrument für Selbsteingeschätzte, studentische Kompetenzen (BEvaKomp) [The Berlin Evaluation Instrument for Self-Assessed Student Competences]. Diagnostica 2008, 54, 30–42.

- Zumbach, J.; Spinath, B.; Schahn, J.; Friedrich, M.; Koegel, M. Entwicklung Einer Kurzskala zur Lehrevaluation [Development of a Short Scale for Teaching Evaluation]. In Psychodidaktik und Evaluation, 6th ed.; Kraemer, M., Preiser, S., Brusdeylins, K., Eds.; V & R Unipress: Goettingen, Germany, 2007; Volume 6, pp. 317–325.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, 71.

- Esteve-Mon, F.M.; Llopis-Nebot, M.A.; Adell-Segura, J. Digital Teaching Competence of University Teachers: A Systematic Review of the Literature. Rev. Iberoam. Tecnol. Aprendiz. 2020, 15, 399–406.

- Sillat, L.H.; Tammets, K.; Laanpere, M. Digital Competence Assessment Methods in Higher Education: A Systematic Literature Review. Educ. Sci. 2021, 11, 402.

- Ouzzani, M.; Hammady, H.; Fedorowicz, Z.; Elmagarmid, A. Rayyan—A Web and Mobile App for Systematic Reviews. Syst. Rev. 2016, 5, 210.

- Alabdulkarim, L. University Health Sciences Students Rating for a Blended Learning Course Framework: University Health Sciences Students Rating. Saudi J. Biol. Sci. 2021, 28, 5379–5385.

- Back, D.; Haberstroh, N.; Hoff, E.; Plener, J.; Haas, N.; Perka, C.; Schmidmaier, G. Implementierung Des ELearning-Projekts NESTOR: Ein Netzwerk für Studierende der Traumatologie und Orthopädie [Implementation of the ELearning Project NESTOR: A Network for Students in Traumatology and Orthopedics]. Chirurg 2012, 83, 45–53.

- Bradley, L.J.; Meyer, K.E.; Robertson, T.C.; Kerr, M.S.; Maddux, S.D.; Heck, A.J.; Reeves, R.E.; Handler, E.K. A Mixed Method Analysis of Student Satisfaction with Active Learning Techniques in an Online Graduate Anatomy Course: Consideration of Demographics and Previous Course Enrollment. Anat. Sci. Educ. 2023, 16, 907–925.

- Campillo-Ferrer, J.-M.; Miralles-Martínez, P. Impact of an Inquiry-Oriented Proposal for Promoting Technology-Enhanced Learning in a Post-Pandemic Context. Front. Educ. 2023, 8, 1204539.

- Chao, H.-W.; Wu, C.-C.; Tsai, C.-W. Do Socio-Cultural Differences Matter? A Study of the Learning Effects and Satisfaction with Physical Activity from Digital Learning Assimilated into a University Dance Course. Comput. Educ. 2021, 165, 104150.

- Dantas, A.M.; Kemm, R.E. A Blended Approach to Active Learning in a Physiology Laboratory-Based Subject Facilitated by an e-Learning Component. Adv. Physiol. Educ. 2008, 32, 65–75.

- Dlouhá, J.; Burandt, S. Design and Evaluation of Learning Processes in an International Sustainability Oriented Study Programme. In Search of a New Educational Quality and Assessment Method. J. Clean. Prod. 2015, 106, 247–258.

- Drozdikova-Zaripova, A.R.; Sabirova, E.G. Usage of Digital Educational Resources in Teaching Students with Application of ‘Flipped Classroom’ Technology. Contemp. Educ. Technol. 2020, 12, ep278.

- Fatima, S.S.; Arain, F.M.; Enam, S.A. Flipped Classroom Instructional Approach in Undergraduate Medical Education. Pak. J. Med. Sci. 2017, 33, 1424–1428.

- Gazibara, T.; Marusic, V.; Maric, G.; Zaric, M.; Vujcic, I.; Kisic-Tepavcevic, D.; Maksimovic, J.; Maksimovic, N.; Denic, L.M.; Grujicic, S.S.; et al. Introducing E-Learning in Epidemiology Course for Undergraduate Medical Students at the Faculty of Medicine, University of Belgrade: A Pilot Study. J. Med. Syst. 2015, 39, 121.

- Graf, D.; Yaman, M. Blended-Learning in der Biologielehrerausbildung: Ein Kooperationsprojekt zwischen der TU Dortmund und der Hacettepe Universitaet Ankara [Blended learning in biology teacher training: A collaborative project between TU Dortmund university and Hacettepe un]. J. Hochschuldidaktik 2011, 22, 949–2429.

- Liu, X.-Y.; Lu, C.; Zhu, H.; Wang, X.; Jia, S.; Zhang, Y.; Wen, H.; Wang, Y.-F. Assessment of the Effectiveness of BOPPPS-Based Hybrid Teaching Model in Physiology Education. BMC Med. Educ. 2022, 22, 217.

- Lu, C.; Xu, J.; Cao, Y.; Zhang, Y.; Liu, X.; Wen, H.; Yan, Y.; Wang, J.; Cai, M.; Zhu, H. Examining the Effects of Student-Centered Flipped Classroom in Physiology Education. BMC Med. Educ. 2023, 23, 233.

- Ma, L.; Lee, C.S. Evaluating the Effectiveness of Blended Learning Using the ARCS Model. J. Comput. Assist. Learn. 2021, 37, 1397–1408.

- Melton, B.F.; Bland, H.; Chopak-Foss, J. Achievement and Satisfaction in Blended Learning versus Traditional General Health Course Designs. Int. J. Scholarsh. Teach. Learn. 2009, 3, 26.

- Ornellas, A.; Carril, P.C.M. A Methodological Approach to Support Collaborative Media Creation in an E-Learning Higher Education Context. Open Learn. 2014, 29, 59–71.

- Ouchaouka, L.; Laouina, Z.; Moussetad, M.; Talbi, M.; El Amrani, N.; ElKouali, M. Effectiveness of a Learner-Centered Pedagogical Approach with Flipped Pedagogy and Digital Learning Environment in Higher Education Feedback on a Cell Biology Course. Int. J. Emerg. Technol. Learn. 2021, 16, 4–15.

- Pieter, A.; Schiefner, M.; Strittmatter, P. Konzeption, Implementation und Evaluation von Online-Seminaren in der universitaeren, erziehungswissenschaftlichen Ausbildung [Conception, implementation, and evaluation of online seminars in university educational science education]. Medien. Z. Theor. Prax. Medien. 2004, 17, 1–21.

- Surov, A.; March, C.; Pech, M. Curricular Teaching during the COVID-19-Pandemic: Evaluation of an Online-Based Teaching Concept. Radiologe 2021, 61, 300–306.

- Wilson, L.; Greig, M. Students’ Experience of the Use of an Online Learning Channel in Teaching and Learning: A Sports Therapy Perspective. Int. J. Ther. Rehabil. 2017, 24, 289–296.

- Wong, M.S.; Lemaire, E.D.; Leung, A.K.L.; Chan, M.F. Enhancement of Prosthetics and Orthotics Learning and Teaching through E-Learning Technology and Methodology. Prosthet. Orthot. Int. 2004, 28, 55–59.

- Zarcone, D.; Saverino, D. Online Lessons of Human Anatomy: Experiences during the COVID-19 Pandemic. Clin. Anat. 2022, 35, 121–128.

- Martin, F.; Sun, T.; Westine, C.D. A Systematic Review of Research on Online Teaching and Learning from 2009 to 2018. Comput. Educ. 2020, 159, 104009.

- Fang, M.; Choi, M.; Kim, K.; Chan, S. Student Engagement and Satisfaction with Online Learning: Comparative Eastern and Western Perspectives. J. Univ. Teach. Learn. Pract. 2023, 20, 20. Available online: https://ro.uow.edu.au/jutlp/vol20/iss5/17 (accessed on 13 June 2024).

- Al-Samarraie, H.; Shamsuddin, A.; Alzahrani, A.I. A Flipped Classroom Model in Higher Education: A Review of the Evidence across Disciplines. Educ. Technol. Res. Dev. 2020, 68, 1017–1051.

- Schoenbaechler, M.T. Ergaenzende Perspektive: Anpassungsnotwendigkeit der Evaluation von Hochschullehre [Supplementary Perspective: Adapting the Evaluation of University Teaching]. Beitraege Lehrerinnen-Lehrerbildung 2021, 39, 392–395.

- Kärchner, H.; Gehle, M.; Schwinger, M. Entwicklung und Validierung des Modularen Fragebogens zur Evaluation Digitaler Lehr-Lern-Szenarien (MOFEDILLS) [Development and Validation of a Modular Questionnaire for Evaluating Digital Teaching and Learning Scenarios]. ZeHf–Z. Empirische Hochschulforschung 2023, 6, 62–84.

- Yu, H. The Application and Challenges of ChatGPT in Educational Transformation: New Demands for Teachers’ Roles. Heliyon 2024, 10, e24289.

- Zeb, A.; Ullah, R.; Karim, R. Exploring the Role of ChatGPT in Higher Education: Opportunities, Challenges and Ethical Considerations. Int. J. Inf. Learn. Technol. 2024, 41, 99–111.

| Authors (Year), Country [Reference Number] | Subject of Teaching, Population (N = Number of Students), Measurement Points (Mp), Study Design | Groups, Teaching Format, Didactical Integration, Digital Elements | Outcomes |

|---|---|---|---|

| Alabdulkarim (2021), Saudi Arabia [14] | Subject: health sciences education and research methods N = 118 Mp: post + biweekly survey Design: mixed methods | Groups: 1 Teaching format: BL Didactical integration: N/A Digital elements: smart board tools, virtual classrooms, course website, Blackboard (quizzes, students’ online feedback, forums, course tools), Google Docs, LMS | Target values: students’ attitude and satisfaction Students’ evaluation (quantitative, post): 23 questions towards BL elements and tools: team-based learning, online students’ writing feedback, f2f lectures, Blackboard, software and digital resources, Google Docs, joining senior faculty research project, assessment; 5-point Likert scale (strongly disagree, disagree, undecided, agree, strongly agree). Evidence Level: B Target value: students’ performance Exam: final exam (N/A) Evidence Level: C Target value: students’ satisfaction Students’ feedback (qualitative, biweekly): self-reported biweekly semi-structured feedback about virtual classrooms, team-based learning and f2f lectures. |

| Back et al. (2012), Germany [15] | Subject: orthopedics and trauma surgery N = 108 Mp: post Design: quantitative | Groups: 1 Teaching format: BL Didactical integration: media didactic and technical aspects, curriculum integration Digital elements: LMS, videos, podcasts, interactive image editing with Blackboard software, virtual patient cases, knowledge tests, 3D animations | Target values: students’ acceptance and satisfaction Students’ evaluation: 12 self-generated questions on the acceptance and satisfaction of the learning program; 5-point Likert scale (strongly disagree, disagree, neutral, agree, strongly agree). Two open-ended questions about particular likes and potential for improvement. Evidence Level: C |

| Bradley et al. (2023), USA [16] | Subject: medical science structural anatomy course N = 170 Mp: pre + 5 interim surveys + post Design: mixed methods | Groups: 4 active learning groups, one control group; each n = 31–36 Teaching format: online-learning Didactical integration: Jigsaw, Team-Learning Module (adaptation of Team-Based Learning model), Concept Mapping, Question Constructing Digital elements: Canvas Learning Management System, student-recorded PowerPoint slides; discussion board; Canvas quizzes | Target value: students’ satisfaction Students’ evaluation (quantitative): 5 items: preparedness, usefulness, productiveness, appropriateness, likelihood; 5-point Likert scale in a combined score ranging from 5 to 25 (5 = least satisfied, 25 = most satisfied). The post-course survey asked students to rank all the active learning techniques from best to worst based on preparedness, usefulness, productiveness, appropriateness, and likelihood of using the techniques in the future. Evidence Level: C Target value: course evaluation Students’ evaluation (qualitative): one open-ended question: ‘Is there anything else you’d like to add?’ Analyzed by categories (active learning technique helped in the learning process, was time-consuming or was not the students’ learning style; enjoyed or didn’t enjoy active learning technique). |

| Campillo-Ferrer & Miralles-Martínez (2023), Spain [17] | Subject: social studies course in primary education N = 73 Mp: pre + post Design: quantitative | Groups: 1 Teaching format: local teaching Didactical integration: N/A Digital elements: digital platform, WebQuests | Target value: evaluation of the training program Questionnaire: evaluation of the training program based on gamification and flipped classroom. Three subscales: motivation (9 items), learning acquired through the tools (7 items), promotion of democratic education and active citizenship through the platform (12 items); 5-point Likert scale (1 strongly disagree to 5 strongly agree). Evidence Level: A |

| Chao et al. (2021), Taiwan [18] | Subject: dance course N = 290 Mp: pre + post Design: mixed methods | Groups: in each case Taiwanese and foreign students: 1. BL (n = 96; 28), 2. FC (n = 68; 21), 3. CG (n = 58; 19) Teaching format: 1./2. BL; 3. local teaching Didactical integration: FC Digital elements: Tron Class teaching platform, Videos | Target value: students’ physical activity class satisfaction Questionnaire (quantitative): Physical Activity Class Satisfaction Questionnaire (PACSQ); 32 items for nine factors: teaching, normative success, cognitive development, mastery experiences, fun and enjoyment, improvement of health and fitness, diversionary experiences, relaxation, and interaction with others. 7-point Likert scale (1 strongly disagree to 7 strongly agree). Evidence Level: A Target value: students’ dance skills learning effectiveness Practical exam video: the students’ performances for the exams were recorded and uploaded for assessing the skills and learning effects Evidence Level: C Target value: students’ learning experiences Focus group interviews (qualitative): four questions about original and changed habits, influence on the convenience of learning, difficulties and problem solving |

| Dantas & Kemm (2008), Australia [19] | Subject: science physiology laboratory-based course N = 78 Mp: post Design: mixed methods | Groups: 1 Teaching format: BL Didactical integration: N/A Digital elements: e-learning website; query tools; digital interactive assignments | Target values: quality of teaching, students’ usability of the e-learning website, students’ use of e-learning, students’ learning outcomes Students’ evaluation (quantitative): university-wide quality of teaching survey (10 items); usability of the e-learning website (5 items); students’ use of and learning outcomes from e-learning (8 items). 5-point Likert scale (5 strongly agree; 4 agree; 3 neither agree nor disagree; 2 disagree; 1 strongly disagree). Open-ended questions (N/A). Exam: final examination Evidence Level: C Target value: students’ experience with online learning Interviews (qualitative): questions about students’ past experience with online learning, details of students’ views on the clarity and navigation of the website, any problems, the learning outcomes that they thought had been achieved by e-learning, and the students’ feelings toward the group work in the assignment and the performance of their group. |

| Dlouhá & Burandt (2015), Germany/Czech Republic [20] | Subject: transdisciplinary teaching of sustainability issues in an international teaching program N = 38 Mp: pre + post Design: mixed methods | Groups: 1 Teaching format: BL Didactical integration: N/A Digital elements: Moodle Wiki | Target values: students’ learning experience, (online) communication, learning approach Questionnaires (quantitative): Experiences of Teaching and Learning Questionnaire (ETLQ); Revised Approaches to Learning and Studying Inventory Questionnaire (RASI); both: 3-point Likert scale (1 strongly disagree; 3 don’t agree or disagree; 5 strongly agree). Evidence Level: B Target value: students’ competence development Students’ evaluation (quantitative): skill description (e.g., computer skills, problem solving skills, work with other students); 12 items; 3-point Likert scale (1 strongly disagree; 3 don’t agree or disagree; 5 strongly agree). Evidence Level: C Target value: students’ learning context Qualitative feedback and focus groups: information about the context of the learning process, e.g., prior knowledge of the course topic, detailed feedback on e-learning tools, course content, discussion topics, workload, and satisfaction with own performances. |

| Drozdikova-Zaripova & Sabirova (2020), Russia [21] | Subject: methodology and method of organizing in psychological and pedagogical education N = 65 Mp: pre + post Design: quantitative | Groups: 1. undergraduate students (n = 30), 2. baccalaureate (n = 35, separate analysis) Teaching format: BL Didactical integration: FC Digital elements: video-lecture, closed group in social network, virtual auditorium; digital tests | Target value: teaching quality Students’ evaluation: teaching arrangement level (5 items), qualification and responsiveness of teacher (5 items), content of digital education resource (5 items). 7-point Likert scale (1 completely unsatisfactory, 7 totally satisfactory). Evidence Level: C Target values: students’ self-organizing particularities, students’ learning motivation Questionnaire: Diagnostic of self-organizing particularities-39 (DSP-3), methodic for diagnostic of students’ learning motivation: motives of learning activity (communicative motives; avoiding motives; prestige motives; professional motives; creative self-realization motives; educative-cognitive motive; social motives). Scales N/A Evidence Level: B |

| Fatima et al. (2017), Pakistan [22] | Subject: neuroscience module in medicine N = 98 Mp: post Design: mixed methods | Groups: 1 Teaching format: BL Didactical integration: FC Digital elements: video lectures; quiz; Padlet; Kahoot | Target value: students’ perceptions of learning experience Questionnaire (quantitative): four factors: students’ perception of pre-session instructions/preparation (6 items), perception towards student-teacher interaction (2 items), students’ perception towards active learning and engagement (5 items), utility of flipped classroom (4 items). 5-point Likert scale (1 strong negative association, 3 neutral, 5 strong positive association). Evidence Level: A Target value: students’ experience of using FC Students’ evaluation (qualitative): One open-ended question about the experience of using FC. Analyzed by categories (content delivery; video and reading material; session scheduling; unmotivated students; interaction driven learning; formative assessment for learning). |

| Gazibara et al. (2015), Serbia [23] | Subject: epidemiology in medicine N = 185 Mp: pre + post Design: quantitative | Groups: 1. e-seminar (n = 36), 2. CG (classroom, n = 149) Teaching format: 1. online-learning, 2. local teaching Didactical integration: N/A Digital elements: practical workbook, Moodle | Target values: students’ motives for enrollment (pre), students’ satisfaction (post) Students’ evaluation: 5 items for several motives for enrollment and 3 items for their initial expectations; 5-point Likert scale (1 = strongly disagree, 5 = strongly agree). 6 items for overall satisfaction and impressions from the course; 5-point Likert scale (1 I strongly disagree to 5 I strongly agree), marks 4–5 were considered as positive attitude for the given statement. Evidence Level: C Target value: students’ performance (post) Exam: oral exam accounts for 70% of the total grade and 3% received for the oral presentation of seminar topic. MSCQ: 27% of the total grade consists of points received in the written quiz in mid-semester. Evidence Level: C |

| Graf & Yaman (2011), Germany/Turkey [24] | Subject: biology education N = 99 Mp: pre + post Design: quantitative | Groups: 1 Teaching format: BL Didactical integration: N/A Digital elements: e-learning platform; cooperative task processing, communication in the teams and with the instructors; online materials, videos | Target values: online materials, students’ satisfaction, online communication, communication and group activities, overall concept Students’ evaluation: evaluation of online materials: usefulness (3 items; 5 very useful to 1 not useful at all) and technical issues (3 items; 5 completely agree to 1 strongly disagree). Satisfaction with various activities (6 items). Evaluation of online communication tools (7 items for usefulness, learning effects and success), communication and group activities (12 items), and of the overall concept (8 items). 5-point Likert scale (N/A). Evidence Level: B |

| Liu et al. (2022), China [25] | Subject: physiology course in clinical medicine N = 1576 Mp: pre + post Design: quantitative | Groups: 1. HBOPPPS model, year 2018 (n = 526) and year 2019 (n = 518), 2. control group, BOPPPS model, year 2017 (n = 532) Teaching format: BL Didactical integration: HBOPPPS (hybrid development of BOPPPS: Bridge-in, Objective/Outcomes, Pre-assessment, Participatory learning, Post-assessment, Summary) Digital elements: Mobile App (Xuexi Tong): micro-lecture videos, single/multiple-choice questions, science stories and clinical cases; posting questions, private chat with teachers, discussions, notes, mind maps | Target value: students’ initiatives of self-learning Mobile App Score: automatically generated by the system of the mobile app; micro-lecture video watching (30 points), homework for each chapter (40 points), test (10 points), attendance (20 points). Max. 100 points. Evidence Level: C Target value: students’ performance Final examination: final examination of the physiology course was worth 100 points, consisting of 50 MSCQ (1 point/question) and 50 points of subjective questions, i.e., 6 short (5 points each) and 2 long (10 points each) essays. Max. 100 points. Evidence Level: C Target values: students’ learning ability, students’ satisfaction, advantages, disadvantages, teaching methods Students’ evaluation: overall 6 items: Do you like Physiology? Are you satisfied with the Physiology course? 4-point Likert scale (very much, like, fair, no). Does the online course improve your learning ability? 4-point Likert scale (strongly improved, improved, fair, no change). What do you think are the advantages of the Physiology course? 4 and 7 multiple-choice answer options. What do you think are the disadvantages of the Physiology course? Which method of teaching modality do you prefer? 3 answer options (online teaching, offline teaching, hybrid teaching). Evidence Level: C |

| Lu et al. (2023), China [26] | Subject: physiology course in clinical medicine N = 131 Mp: post Design: quantitative | Groups: 1. FC (n = 62), 2. traditional teaching (n = 69) Teaching format: BL Didactical integration: FC Digital elements: Chaoxing Group and Xueyin Online (platforms); micro videos, virtual simulation experiment teaching videos, sets of chapter test questions | Target value: students’ performance Online Platform Score: automatically collected by “Xueyin Online” platform, according to students’ performance in video watching (20 points), discussion (10 points), homework (20 points), quizzes (20 points), attendance (10 points), and classroom activities (20 points). Accounting for 40% of the final grades. Final exam: single-choice questions, multiple-choice questions, short answer questions, and essay questions, with a total of 100 points, accounting for 60% of the final grades. A score of 90–100 points is excellent, 80–89 points is good, 70–79 points is average, 60–69 points is fair, and lower than 60 points is failure. Evidence Level: C Target values: students’ knowledge acquisition, students’ learning ability, potential contributors of FC teaching in promoting learning effectiveness in physiology, advantages of FC teaching Students’ evaluation (only FC group): overall four items: students’ knowledge acquisition (strongly improved, improved, fair, no change). Learning ability (multiple-choice scale: self-study ability, cooperation ability, ability to analyze and solve problems, communication and presentation skills, clinical thinking). Potential contributors of FC teaching in promoting learning effectiveness in physiology, multiple-choice scale (group collaborative learning, diverse teaching methods, rich teaching resources, active classroom environment, timely answering questions). Advantages of FC teaching, multiple-choice scale (teaching design and teaching environment, teaching arrangement, level of students’ engagement, presentation of group learning, classroom quiz). Evidence Level: C |

| Ma & Lee (2021), China [27] | Subject: statistics course N = 133 Mp: post Design: mixed methods | Groups: 1. BL (n = 37), 2. e-learning (n = 45), 3. CG (face-to-face seminar, n = 51) Teaching format: 1. BL 2. e-learning, 3. local teaching Didactical integration: ARCS model (Keller, 1999) Digital elements: online videos, instructional videos | Target value: students’ learning effectiveness (attention, relevance, confidence, satisfaction) Questionnaire (quantitative): four factors: attention (4 items), relevance (3 items), confidence (3 items), satisfaction (8 items). 5-point Likert scale (1 strongly disagree to 5 strongly agree). Evidence Level: A Target value: students’ perception on attention, relevance, confidence, and satisfaction Group interview (qualitative): four group interviews in the BL and e-learning group. |

| Melton et al. (2009), USA [28] | Subject: health course N = 251 Mp: pre + post Design: quantitative | Groups: 1. BL seminar (n = 98), 2. CG (traditional, n = 153) Teaching format: 1. BL, 2. local teaching Didactical integration: N/A Digital elements: PowerPoint, quizzes, WebCT Vista course management system | Target value: students’ course achievement (pre, post) MSCQ: 50 multiple-choice questions to assess course content of the course materials. Exam: four written exams for the final course grade (midterm, post) Evidence Level: C Target values: educational quality, students’ satisfaction, teacher/course evaluation (post) Questionnaire: modified Students’ Evaluation of Educational Quality (SEEQ). 15 items, 5-point Likert scale (5 strongly agree, 4 agree, 3 neutral, 2 disagree, 1 strongly disagree). Points are summed up: 51–60 highly satisfied, 41–50 moderately satisfied, 31–40 satisfied, 21–30 dissatisfied, 11–20 moderately dissatisfied, 1–10 highly dissatisfied. Students’ evaluation: university-wide teacher and course evaluation, 14 items, e.g., organization, instructors’ helpfulness, course materials. 5-point Likert scale (5 very good, 4 good, 3 satisfactory, 2 poor, 1 very poor). Evidence Level: B |

| Ornellas & Muñoz Carril (2014), Spain [29] | Subject: use and application of ICT in the academic and professional environments N = 82 Mp: post Design: mixed methods | Groups: 1 Teaching format: e-learning Didactical integration: N/A Digital elements: social bookmarking, remote collaboration tools, e-calendars, blog, video platform, video channels, various ICT tools; audio-visual projects | Target values: students’ satisfaction, level of skills acquired, teaching performance, teaching and learning resources and tools, evaluation of continuous assessment practices Students’ evaluation (quantitative): university-wide course evaluation with four dimensions: overall satisfaction, teaching performance, teaching and learning resources, system of assessment. Scale (N/A). Additional self-generated questions about the level of skills acquired (8 items), the resources and tools and how to use them, the continuous assessment practices (4 assessment practices with 5 items). 3-point Likert scale (low, medium, high). Evidence Level: C Target values: teaching performance, strengths and weaknesses of the course Students’ evaluation (qualitative): Two open-ended questions: ‘Did the teacher facilitate your learning in the subject?’ and ‘Did the evaluation and feedback by the teacher during the continuous assessment help you to carry out the final project?’. Analyzed by categories (teachers’ speed of response to their doubts and queries, guidance and clarifications given in the assessment activities, monitoring of work carried out, clarity and usefulness of the teachers’ guidance). One open question for assessing the strengths of the course (collaborative work, personalized attention by the teacher) and weaknesses of the course (workload, technical problems with some of the tools used). |

| Ouchaouka et al. (2021), Morocco [30] | Subject: cell biology in life and earth sciences N = 292 Mp: post Design: quantitative | Groups: 1 Teaching format: BL Didactical integration: individual progress through the serious game Digital elements: serious game, Moodle, quizzes, Unity 3D software, LMS | Target values: students’ relevance and attractiveness Students’ evaluation: six factors: academic information (8 items), 8-point Likert scale (N/A). Teaching context (3 items), learning barriers (3 items), digital learning environments (3 items), 3-point Likert scale (N/A). Communication modalities (4 items), pedagogical innovation and integration of ICT (5 items), 4-point Likert scale (N/A). Evidence Level: B |

| Pieter et al. (2004), Germany [31] | Subject: education sciences N = 23 Mp: pre + post Design: mixed methods | Groups: 1 Teaching format: BL Didactical integration: instructional design (Gagné et al., 2004), ARCS model (Keller, 1999), basic principles according to Bourdeau & Bates (1997) Digital elements: video-, audio-, and text-based learning units (online versions or CD-ROM) | Target values: students’ usage behavior (pre), product-oriented criteria, process-oriented criteria, Internet interest and EDP knowledge, control and visual design, quality of technical implementation, importance of individual program aspects, acceptance (post) Students’ evaluation (quantitative): frequency of use of various Internet services (6 items), scale (1 I don’t know; 2 never; 3 rarely; 4 sometimes; 5 often; 6 very often). Product-oriented criteria (motivation, curriculum, usability, design), process-oriented criteria (study program integration, transferability, quality assurance, didactical aspect). Scale (grades 1 very good to 6 unsatisfactory). EDP knowledge and interest of the students (2 items; very good; rather good; average; rather bad; very bad), simplicity of control and clarity of appearance (2 items), assessment of individual components (5 items), scale (very good; good; average; poor; very poor; not specified). Importance of individual program aspects (5 items; very important; important; unimportant; not specified), agreement with individual statements (10 items; fully agree; rather agree; don’t know; rather disagree; completely disagree; not specified). Evidence Level: C Target values: students’ motivation to participate, benefits, interests, time spent on course processing Students’ evaluation (qualitative): five open-ended questions about the motivation for and benefits of participating, interest in online learning, time spent working through the course, and particular likes. |

| Surov et al. (2021), Germany [32] | Subject: imaging techniques in human medicine N = 110 Mp: post Design: mixed methods | Groups: 1 Teaching format: e-learning Didactical integration: N/A Digital elements: videos; video presentations; Zoom (online seminar, chat function, screen sharing) | Target value: course evaluation (time effort, retrievability of video presentations, design of video conferences, general structure of the digital teaching concept) Students’ evaluation (quantitative): 11 self-generated questions: time required, video length, video duration, benefit from the teaching concept, retention of the teaching concept, retrievability of the videos, design of video conferences, knowledge transfer, interest, encouragement of self-study, flexible learning, general structure of the digital teaching concept; 5-point Likert scale (fully agree; agree; undecided; disagree; do not agree at all). Optimal duration of a video segment; 5-point Likert scale (<10 min, 10–20 min, 21–40 min, 41–60 min, >60 min). Evidence Level: C Target value: course evaluation Students’ evaluation (qualitative): One open-ended question for comments. Analyzed by categories (good implementation of teaching, positive assessment of the new teaching concept or the video presentations provided, retention or increased use of assignments or case studies to prepare for the online seminars). |

| Wilson & Greig (2017), Scotland, UK [33] | Subject: sports therapy N = 164 Mp: post Design: qualitative | Groups: level of difficulty in the online channel: level four (n = 67), level five (n = 51), level six (n = 46) Teaching format: BL Didactical integration: N/A Digital elements: instructional videos; Blackboard virtual learning environment | Target value: students’ experience Students’ evaluation (qualitative): seven open-ended questions to investigate students’ experience of an online video-based learning channel across modules in levels four, five, and six. |

| Wong et al. (2004), China [34] | Subject: health education for prosthetics and orthotics N = 1613 Mp: post Design: quantitative | Groups: 1. e-learning (n = 20), 2. CG (standard curricula, n = 61), 3. CG (entire university, n = 1532) Teaching format: 1. e-learning, 2. N/A, 3. N/A Didactical integration: N/A Digital elements: multimedia components (video, PPP, text); WebCT (forum); slide shows, handouts, tutorials, electronic exercises, assignments, quizzes; online discussion forum | Target values: students’ career relevance, learning outcomes, critical thinking, creative thinking, ability to pursue lifelong learning, communication skills, interpersonal skills, problem solving, adaptability, workload (reversed) Students’ evaluation: 10 factors (same as target values). 5-point Likert scale (strongly disagree to strongly agree). Evidence Level: C |

| Zarcone & Saverino (2022), Italy [35] | Subject: human anatomy in medicine N = 444 Mp: post Design: quantitative | Groups: 1. e-learning (n = 236), 2. CG (f2f teaching, n = 208) Teaching format: 1. e-learning, 2. local teaching Didactical integration: N/A Digital elements: PowerPoint; Microsoft Teams; audiovisuals (interaction on slides; chat features); Visible Body 3D models; EdiErmes dissection clips | Target value: students’ perceived quality of the didactics Students’ evaluation: 12 questions: clarity of presentation, availability of the teacher for further explanations, need for basic preliminary knowledge, perceived learning weight. 4-point Likert scale (4 strongly agree, 3 somewhat agree, 2 somewhat disagree, and 1 strongly disagree). Answers were grouped into “negative score” and “positive score”. Evidence Level: B Target value: students’ performance Exam: final examination consisting of microscopic recognition of an anatomical structure and an oral examination on a topographical and visceral anatomy topic. Evidence Level: C |
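
The summed-score banding reported for the modified SEEQ in Melton et al. [28] can be illustrated with a minimal Python sketch. The band limits are copied from the table entry; the function name `seeq_band` and the example responses are hypothetical and not taken from the study. Because the reported bands end at 60, any total outside the range 1–60 is returned without a label rather than assigned to a band.

```python
# Satisfaction bands as reported for the modified SEEQ (Melton et al. [28]).
# Each tuple is (lowest total, highest total, label).
SEEQ_BANDS = [
    (51, 60, "highly satisfied"),
    (41, 50, "moderately satisfied"),
    (31, 40, "satisfied"),
    (21, 30, "dissatisfied"),
    (11, 20, "moderately dissatisfied"),
    (1, 10, "highly dissatisfied"),
]


def seeq_band(item_scores):
    """Sum Likert item scores and look up the reported satisfaction band.

    Hypothetical helper for illustration only. Returns (total, label);
    label is None when the total lies outside the reported bands.
    """
    total = sum(item_scores)
    for low, high, label in SEEQ_BANDS:
        if low <= total <= high:
            return total, label
    return total, None


# Hypothetical respondent who answered "neutral" (3) on all 15 items.
print(seeq_band([3] * 15))  # (45, 'moderately satisfied')
```

A lookup table keeps the band limits in one place, so they can be checked against the source questionnaire without touching the scoring logic.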

| Category | Subcategory | Number of Studies [n] | References |
|---|---|---|---|
| Study design | Post-intervention measurements | 12 | [14,15,19,22,26,27,29,30,32,33,34,35] |
| | Pre-post design | 9 | [17,18,20,21,23,24,25,28,31] |
| | Interim assessments | 2 | [14,16] |
| Measurement methods | Quantitative surveys | 11 | [15,17,21,23,24,25,26,28,30,34,35] |
| | Qualitative surveys | 1 | [33] |
| | Combined quantitative and qualitative surveys | 10 | [14,16,18,19,20,22,27,29,31,32] |
| Didactic integration | Flipped/inverted classroom | 4 | [18,21,22,26] |
| | Didactic theories (e.g., ARCS model) | 2 | [27,31] |
| | Instructional design principles | 1 | [31] |
| | HBOPPPS model | 1 | [25] |
| | WebQuest method | 1 | [17] |
| | Other didactic aspects | 4 | [15,16,30,31] |
| Comparative analyses | E-teaching vs. traditional methods | 5 | [23,25,26,28,35] |
| | University students vs. high school graduates | 1 | [21] |
| | Two e-teaching formats vs. control group | 3 | [18,27,34] |
| | Comparison of difficulty levels in serious games | 1 | [33] |
| | Active learning groups vs. control group | 1 | [16] |
| Instructional delivery format | Blended learning (BL) | 15 | [14,15,18,19,20,21,22,24,25,26,27,28,30,31,33] |
| | E-learning | 7 | [16,23,27,29,32,34,35] |
| | Traditional face-to-face (local teaching) | 6 | [17,18,23,27,28,35] |

| Evaluation Method | Number of Studies [n] | References |
|---|---|---|
| Self-generated questionnaires | 10 | [15,16,20,21,23,25,26,31,32,34] |
| Written and oral examinations | 6 | [14,23,25,26,28,35] |
| Practical examination videos | 1 | [18] |
| Multiple-choice questionnaires | 2 | [23,28] |
| University-wide surveys | 2 | [19,29] |
| Quantitative measurement of engagement/proficiency scores | 2 | [25,26] |

| Evaluation Method | Number of Studies [n] | References |
|---|---|---|
| Open-ended questions in questionnaires | 6 | [16,22,29,31,32,33] |
| Focus groups and interviews | 4 | [18,19,20,27] |
| Semi-structured feedback via Blackboard method | 1 | [14] |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Morat, T.; Hollinger, A. Methods and Target Values Used to Evaluate Teaching Concepts, with a Particular Emphasis on the Incorporation of Digital Elements in Higher Education: A Systematic Review. Trends High. Educ. 2024, 3, 734-756. https://doi.org/10.3390/higheredu3030042
