Use-Case-Driven Architectures for Data Platforms in Manufacturing

Abstract

1. Introduction

2. Materials and Methods

2.1. Key Terms and Concepts

2.2. Systematic Literature Review

3. Archetypes for Data Management Platforms in Manufacturing

3.1. Requirements of Archetypes

3.1.1. Latency

3.1.2. Data Volume (and Heterogeneity)

3.1.3. Scalability

3.1.4. Application Environment

3.1.5. Data Integrity

3.1.6. Model-Driven Approach

3.2. Use Cases

3.2.1. Factory Management and Coordination

3.2.2. Process Management and Monitoring

3.2.3. Process Control of Robots and Machines

3.2.4. Management of Auxiliaries

3.3. Clustering of Archetypes

3.3.1. Archetypes Without Model-Driven Approaches

3.3.2. Archetypes with Model-Driven Approaches

3.4. Archetypes in Detail

3.4.1. Gateway

3.4.2. Low Energy, Wide Range

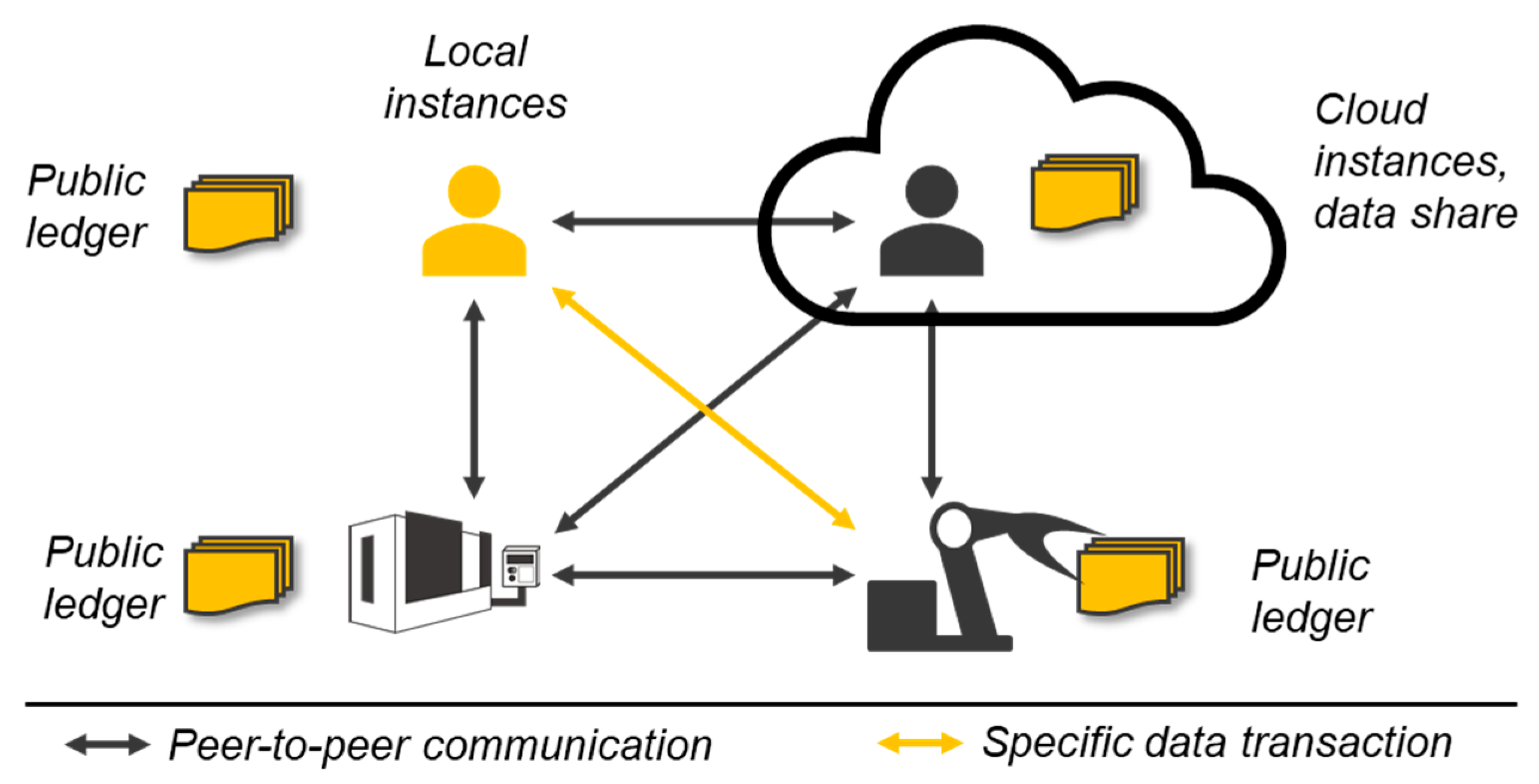

3.4.3. Blockchain

3.4.4. Streaming

3.4.5. Management and Monitoring

3.4.6. Resource Orchestration

3.4.7. Process Control

3.4.8. Large-Scale, Time-Critical Control

4. Example Cases

4.1. Gateway for Computerized Numerical Control Data

4.2. Low Energy, Wide Range for Building Resource Monitoring

5. Discussion

6. Conclusions

- A clear distinction between latency-critical architectures (e.g., process control) and data-sharing-oriented architectures (e.g., blockchain, gateway);

- A three- and two-dimensional framework for organizing archetypes according to requirements such as data integrity, scalability, latency, and mobility;

- The identification of trade-offs between architectural properties, illustrating that hybrid or modular solutions may be necessary in real-world settings.
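The idea of organizing archetypes by requirement profiles, and of detecting when no single archetype fits, can be sketched in a few lines of Python. This is only an illustrative sketch: the `Level` scale, the `Profile` fields, the `candidates` helper, and above all the ratings in `ARCHETYPES` are hypothetical placeholders chosen for demonstration and are not values taken from the paper.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Coarse ordinal rating for a requirement (hypothetical scale)."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class Profile:
    """Requirement dimensions named in the conclusions."""
    latency_criticality: Level
    data_integrity: Level
    scalability: Level
    mobility: Level


# Placeholder ratings for illustration only; NOT the paper's assessment.
ARCHETYPES = {
    "Process Control": Profile(Level.HIGH, Level.MEDIUM, Level.LOW, Level.LOW),
    "Blockchain": Profile(Level.LOW, Level.HIGH, Level.MEDIUM, Level.LOW),
    "Gateway": Profile(Level.LOW, Level.MEDIUM, Level.MEDIUM, Level.LOW),
    "Low Energy, Wide Range": Profile(Level.LOW, Level.LOW, Level.MEDIUM, Level.HIGH),
}

FIELDS = ("latency_criticality", "data_integrity", "scalability", "mobility")


def candidates(required: Profile) -> list[str]:
    """Return archetypes whose rating meets or exceeds every required level."""
    return [
        name
        for name, offered in ARCHETYPES.items()
        if all(getattr(offered, f) >= getattr(required, f) for f in FIELDS)
    ]


# A latency-critical use case with modest integrity needs.
print(candidates(Profile(Level.HIGH, Level.LOW, Level.LOW, Level.LOW)))

# A use case demanding both high latency criticality and high data integrity.
print(candidates(Profile(Level.HIGH, Level.HIGH, Level.LOW, Level.LOW)))
```

Under these placeholder ratings, the second query returns an empty list, which mirrors the trade-off noted above: when requirements conflict, a hybrid or modular combination of archetypes may be needed rather than a single one.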

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| AAS | Asset Administration Shell |
| AGV | Automated Guided Vehicle |
| API | Application Programming Interface |
| BLE | Bluetooth Low Energy |
| CNC | Computerized Numerical Control |
| CPPS | Cyber-Physical Production Systems |
| e.g. | For Example |
| GPS | Global Positioning System |
| HTTPS | Hypertext Transfer Protocol Secure |
| IoT | Internet of Things |
| KPI | Key Performance Indicator |
| LoRaWAN | Long Range Wide Area Network |
| MQTT | Message Queuing Telemetry Transport |
| NoSQL | Not only Structured Query Language |
| OEE | Overall Equipment Effectiveness |
| OPC UA | Open Platform Communications Unified Architecture |
| PLC | Programmable Logic Controller |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| REST | Representational State Transfer |
| RFID | Radio-Frequency Identification |
| SPAR-4-SLR | Scientific Procedures and Rationales for Systematic Literature Reviews |
| SQL | Structured Query Language |
| UWB | Ultra-Wideband |
| WiFi | Wireless Fidelity |
| WORM | Write Once Read Many |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Permin, E.; Wohlgemuth, C.; Keller, T. Use-Case-Driven Architectures for Data Platforms in Manufacturing. Platforms 2025, 3, 15. https://doi.org/10.3390/platforms3030015
