1. Introduction

Since its inception in 2015 [1], deep reinforcement learning (DRL) has found many applications in a wide set of domains [2]. In recent years, its use in trading has become quite popular, at least in academia (for an overview, see [3] or [4]).

The most interest lies within the portfolio optimization problem (see, for example, references [5,6,7] for some modern approaches), which can leverage multiple assets both in uptrends and downtrends. In single-asset trading, there is too much risk involved, as argued, for example, in ref. [8]: if the asset goes down, then the best option is to hold. Additionally, if the market is in a strong bull trend, it is hard to beat it; see, for example, a recent confirmation of this by [9]. The market (in this case, the single asset) often changes regimes regarding volatility, trend, smoothness, etc. There have been many works showing and modeling this; see, for example, works mentioning trading and market regime switches (e.g., [10,11]).

The applied methodology pursued by many (if not all) researchers working in DRL for trading is to evaluate the agent for a short period (test mode) and then retrain it to deal with another test period. Moreover, the training must occur before the testing so the agent can catch the current market dynamics.

This workflow is cumbersome and introduces additional constraints and computational costs. Moreover, the uncertainty about the change in the market regime and timing still needs to be addressed in real-time. We thus propose an alternative method, where a hierarchical system decides which of the regimes the market is in and assigns the most appropriate behavior by selecting a low-level agent to act in the actual market, i.e., to buy and sell the asset. We delve more into the architecture in subsequent sections. We now proceed to the RL problem formulation.

The reinforcement learning problem is usually formulated as a Markov decision process (MDP) [12], which is a discrete stochastic control process for decision-making in environments with uncertainty (there are also continuous MDPs [13]). Formally, a discrete MDP is given by:

$\mathcal{S}$, a set of discrete states that the agent can be in, often called the state space.

$\mathcal{A}$, a set of discrete actions the agent can take in the environment, also referred to as the action space.

$p(s' \mid s, a)$, which is the probability of being in state s at timestep t, taking an action a and then being in $s'$ at timestep $t+1$. This formulation is also known as the transition function.

$r(s, a)$, which is the reward function, similarly dependent on a, the current action, and s, the current state, and is given by the environment when in the next state $s'$. Usually, the policy of selecting an action a when in state s is denoted by $\pi(a \mid s)$ and is often probabilistic.

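In dynamic programming, which is somewhat the precursor of RL, where the transition function and reward function are given or known, two equations are employed alternately:

To update a value function $V(s)$, which encodes how good it is to be in state s;

To update the policy, which reflects the value function and is taken to be the action that maximizes this value function estimate, known as the greedy policy for V.

Formally:

$$V(s) \leftarrow \sum_{s'} p(s' \mid s, \pi(s)) \left[ r(s, \pi(s)) + \gamma V(s') \right]$$

$$\pi(s) \leftarrow \arg\max_{a} \sum_{s'} p(s' \mid s, a) \left[ r(s, a) + \gamma V(s') \right]$$

where $\gamma$ is called the discount factor and $\gamma \in [0, 1]$. A commonly used RL alternative is to use a state-action value function, where we now have two identifiers, s and a, where p and r are unknown:

$$Q(s, a) = \sum_{s'} p(s' \mid s, a) \left[ r(s, a) + \gamma V(s') \right]$$

with the model-free version, the iterative version given by (which is, in a sense, an approximation of the above equation with the greedy policy selection step included):

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$$

where $\alpha$ is a learning rate and $s'$ is the state observed after taking action a in state s.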
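As a minimal illustration of the model-free, iterative update of the state-action value function, the sketch below runs tabular Q-learning on a hypothetical two-state environment. The environment `toy_step`, the learning rate `alpha`, the purely random exploration, and all function names are assumptions made for illustration; they are not part of the trading system described in this work.

```python
import random
from collections import defaultdict


def q_learning_update(Q, s, a, r, s_next, actions, alpha=0.1, gamma=0.9):
    """Move Q(s, a) toward the target r + gamma * max_a' Q(s', a')."""
    best_next = max(Q[(s_next, a2)] for a2 in actions)
    Q[(s, a)] += alpha * (r + gamma * best_next - Q[(s, a)])


def toy_step(s, a):
    """Hypothetical environment: action 1 in state 0 pays 1 and moves to state 1."""
    if s == 0 and a == 1:
        return 1, 1.0
    return 0, 0.0


def train(steps=2000, seed=0):
    rng = random.Random(seed)
    actions = [0, 1]
    Q = defaultdict(float)  # unseen (state, action) pairs start at 0
    s = 0
    for _ in range(steps):
        a = rng.choice(actions)  # random exploration, for simplicity
        s_next, r = toy_step(s, a)
        q_learning_update(Q, s, a, r, s_next, actions)
        s = s_next
    return Q


def greedy_action(Q, s, actions=(0, 1)):
    """Greedy policy: pick the action with the largest estimated value."""
    return max(actions, key=lambda a: Q[(s, a)])
```

Note that the update never touches the transition function or the reward function directly; it only uses sampled transitions, which is what makes it model-free.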

There are two classes of algorithms for RL: value function algorithms, which try to accurately estimate the state-value function V or the state-action value function Q (Q-learning), and policy algorithms, which look directly at optimizing the policy $\pi$ (policy gradient), bypassing the value function estimation (others use both methods, e.g., actor-critic).

On a different set of axes, there are model-based RL agents that model and learn the transition function $p$, and there are model-free agents that do not explicitly model the transition function. Model-based RL implies the existence of a simulation of the environment with which the agent can interact without incurring the cost associated with or benefiting from the precision of the real environment. If a model can be learned accurately and efficiently, then the advantages of having a model are apparent; knowing the possible outcomes of some actions without actually taking them enables long-term planning and significantly enhances the agent’s capabilities.

Instead of using the summation over all possible following states to obtain a value for Q, we concatenate the prediction of the next state(s) to the current state, effectively adding another identifier to the Q function, which is the predicted future. This approach is more efficient but less accurate, yet it still uses the information from the prediction. Moreover, it can be applied to any reinforcement learning algorithm with minimal overhead. This methodology has been used previously for the same purpose; see, for example, [14]. Following [15], this technique could be classified as implicit model-based RL, where we do not explicitly model the transition function and the planning procedure, but we use the prediction as part of the RL agent representation.

The purpose of this work was to leverage the newest technologies in the DRL domain to obtain better performance on highly volatile trading markets. The work described herein brings a number of novel contributions and overcomes some of the limitations of previous systems, such as reduced performance on testing sets that are very different and the need for training sets and retraining. Some benefits of using such a system are: lower computational cost, higher flexibility, incorporating sound domain knowledge into a complex high-performance automatic system, and balancing risk and reward using powerful deep networks.

2. Related Work

The idea of having an environment model has been used in the past quite effectively, both with analytical gradients [16] in the linear regime and even neural networks [17] in the nonlinear regime. As long as a model can be learned to some degree of accuracy and is not too computationally intensive to learn, the use of a model generally improves performance and sample efficiency. Moreover, it gives the ability to generate or roll out future trajectories.

This ability is instrumental in trading, where accurate predictions could greatly enhance profits, especially if they are multi-step. A couple of recent works combine DRL and model-based (MB) methods for trading [14,18]. In [18], the authors use a sequence-to-sequence RNN-MDN (mixture density network [19]) to model the transition model based on latent states given by a convolutional autoencoder. They show that the performance of an agent trained on the transition model is very similar to that of one trained on the actual data, with the ability to explore the transition model and exploit it in the real environment. In the second work [14], using model-based prediction with DRL for trading, the authors use a nonlinear DyBM (dynamic Boltzmann machine) to make predictions and embed these into the state of the agent; they call it an infused prediction module.

On the other hand, hierarchical RL enables the partitioning of the state space in some meaningful way (usually performed automatically) and the solving of different problems individually with so-called skills or options, sub-policies directly interacting with the environment. A higher-level agent chooses between these sub-policies according to a decided criterion and stops them according to a decided termination function, simultaneously giving goals and rewards to the low-level agents. Some new approaches to HRL using deep networks can be found in [20,21,22]; however, the concepts are much older; see [23,24]. For a more detailed overview, see [25]. The goal is to learn specific behaviors well while combining the learned solutions adaptively. Hierarchical RL methods hold promise for general artificial intelligence. In trading, they have been used successfully, for example, in portfolio management [26,27], but not in the single asset case.

The association of hierarchy with model-based RL seems fruitful; however, only a few works exist that combine the two. It has been mentioned as promising for the future in [28], but more from a philosophical or cognitive point of view than from a practical application to a real-world problem. More recently, the association has been performed in [29], where the authors use inductive logic programming to devise a transition model. This combines symbolic representations by employing an additional symbolic MDP, which augments the state with boolean predicates, which can be goals, the relationship of objects, events, or properties in the environment. The rewards for the low-level agent are augmented with a positive number if the agent reaches the subgoal set by the high-level agent (using the symbolic transition model). The high-level agent uses as a reward the accumulated environmental rewards that result when the low-level agent reaches the subgoal, with small penalizing factors for setting immature or unlearnable tasks as subgoals. The high-level agent alternates interacting with the environment and the simulated transition model, which results in increased sample efficiency. The overall agent is tested in two environments that are hard for flat agents (those not using hierarchy), showing good performance. It is unclear whether this holds for both environments, but for one of them (involving a robot reaching several circles in a specific order), the HRL uses tabular Q-learning for the high-level agent and a PPO (Proximal Policy Optimization [30]) agent for the low level. Even though it is partly a symbolic approach and thus significantly engineered, it shows the potential of combining hierarchy and explicitly learning a transition model. A somewhat related work is [31], where even though the market is different (energy), the authors use multi-scale deep reinforcement learning with great results. An even more interesting and similar work, which is also on the energy market, is [32], where the authors use multiple DRL agents on different levels.

Our architecture is similar to the options framework [33], where an option is defined by:

$\mathcal{I}_o$, a set of initial states from which an option o can be started, with $\mathcal{I}_o \subseteq \mathcal{S}$;

$\pi_o$, a policy that is followed for the duration of the option;

$\beta_o$, a termination function that governs the termination of the policy when certain conditions are met.

We describe our architecture and how the above points correspond to it.

3. Overview and Methods

The idea is to leverage heterogeneous behaviors in a flexible manner using hierarchy. The assumption is that the market can go through multiple different dynamics, and thus hierarchy seems the most appropriate to tackle this issue in a feasible and efficient way. This assumption has been widely studied in the literature and was verified experimentally by the author as well. Even though hierarchy is well suited for multi-asset problems, we show that it can also be used in a single-asset case successfully.

In the work that follows, we combine the model-based approach with a hierarchical structure, such that we use a high-level agent to select among a set of pretrained low-level agents, which then act out several steps in the environment. These steps are governed by a certain termination function $\beta$, which in the simplest case is given by:

$$\beta(s_t) = \begin{cases} 1, & \text{if } t \,\%\, k = 0 \\ 0, & \text{otherwise} \end{cases}$$

where % is the modulo operator, s is the current state, t is the current timestep in the environment, and k is a value significantly larger than 1; this is the resolution at which the high-level agent sees the world, the subsampling factor. We set $k = 30$ (we test for $k \in \{10, 30, 300\}$). The options’ policies are the actual low-level policies pretrained beforehand or simple strategies governed by some technical indicators (such as moving average crossover or mean reversion strategy). We use five PPO agents and two simple strategies. The initiation sets are all the same for all low-level agents and are constituted by this reduced set $\mathcal{S}_k$, which comprises every kth element of $\mathcal{S}$, with $\mathcal{S}_k \subset \mathcal{S}$.
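The simplest termination rule described above can be sketched in a few lines. The function names are hypothetical; the default value of 30 for `k` and the 1500-step high-level episode are taken from the text.

```python
def termination(t, k=30):
    """Return 1 when the running option ends (t is a multiple of k), else 0."""
    return 1 if t % k == 0 else 0


def decision_points(num_steps, k=30):
    """Timesteps at which the high-level agent selects a new low-level agent."""
    return [t for t in range(num_steps) if termination(t, k) == 1]
```

For a 1500-step episode and a subsampling factor of 30, `decision_points(1500)` contains 50 timesteps, which matches the 50 high-level decisions per episode reported in the data section.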

The high-level agent is endowed with a classification mechanism $c$, which clusters incoming series into different classes and feeds different agents with samples from these different clusters. This mechanism is analogous to the subgoal discovery problem [34,35], where the high-level agents find attractive states for the low-level agents to reach. Because the environment is different in our case, reaching the subgoal does not have any real meaning. We use k-means [36] from the Python library tslearn (https://github.com/tslearn-team/tslearn/, accessed on 19 January 2023) for clustering the time series, with an episode length of 50 and 5 clusters. We tested more clustering algorithms, but they all gave similar results, so we chose the simplest one. We also tested more values for the number of clusters, but we chose a small one that showed the significant trends that one can see in a market.
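To make the clustering step concrete, the following is a plain-Python stand-in for k-means on fixed-length windows. It is not the tslearn implementation used in this work: the Euclidean distance, the z-normalization of each window, and the deterministic farthest-point initialization are assumptions chosen to keep the sketch short and reproducible.

```python
import math


def z_normalize(window):
    """Rescale a window to zero mean and unit variance (assumed preprocessing)."""
    m = sum(window) / len(window)
    sd = math.sqrt(sum((x - m) ** 2 for x in window) / len(window))
    return [(x - m) / sd if sd > 0 else 0.0 for x in window]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(window, centers):
    """Index of the nearest cluster center."""
    return min(range(len(centers)), key=lambda i: sq_dist(window, centers[i]))


def init_centers(windows, n_clusters):
    """Start from the first window, then repeatedly add the farthest window."""
    centers = [list(windows[0])]
    while len(centers) < n_clusters:
        far = max(windows, key=lambda w: min(sq_dist(w, c) for c in centers))
        centers.append(list(far))
    return centers


def kmeans(windows, n_clusters, n_iter=20):
    centers = init_centers(windows, n_clusters)
    for _ in range(n_iter):
        groups = [[] for _ in range(n_clusters)]
        for w in windows:
            groups[classify(w, centers)].append(w)
        for i, group in enumerate(groups):
            if group:  # an empty cluster keeps its previous center
                centers[i] = [sum(col) / len(group) for col in zip(*group)]
    return centers
```

Once the centers are fitted on the training windows, `classify` can be applied both to an observed window and to a predicted one, which is how the high-level representation uses the clusters.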

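The prediction function f is applied to the recent past to produce some probable future (same for the low level and function g):

$$\hat{x}_{t+1:t+m} = f(x_{t-n+1:t})$$

where $x_{t-n+1:t}$ denotes the last n observed values and $\hat{x}_{t+1:t+m}$ the m predicted ones.

To use the classification part to its full power, we classify the past as well as the future. Classification induces a smoothness in the representation (since neighboring points will be very similar and thus the classification will be the same), which is very desirable since we do not want the policy to change too often. The classification is given by:

$$y_t = c(x_{t-n+1:t}), \qquad \hat{y}_{t+m} = c(\hat{x}_{t+1:t+m})$$

where $c$ is the classification mechanism, $y_t$ is the class of the observed window, and $\hat{y}_{t+m}$ is the class of the predicted window.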

We already see how model-based prediction can help. To assign an agent to act for the next window, we have to classify that window as being in one of the clusters, which means that we first have to predict it and then classify this prediction to match the agent. We denote by f the prediction function for the high level and by g the prediction function for the low level. For f, we use a deep autoregressive model using recurrent neural networks [37] from the Python library gluonts (https://ts.gluon.ai/, accessed on 19 January 2023), which is a probabilistic time-series library focused on deep learning models. For g, we use a dynamic Boltzmann machine (https://github.com/ibm-research-tokyo/dybm, accessed on 19 January 2023), which is generally good for one-step prediction and also has a constant training time.

For the RL part, we use RLlib (https://github.com/ray-project/ray/tree/master/rllib, accessed on 19 January 2023), which is a Python RL library that scales very well on multiple CPU cores and machines. It also has the latest state-of-the-art algorithms and RL tricks implemented and ready to use.

3.1. Data

We sample data at 1 h frequencies and use 4000 data points for training the low level, 8500 points for training the high level (including the previous 4000), and 10,000 points for testing (BTCUSDT taken from Binance (www.binance.com, accessed on 19 January 2023), 2019-10-23 07:00:00–2022-01-30 21:00:00). For details on the data splits, see Figure A2. For training the low level, we sample episodes at a length of 50. For the high level, we use 1500 (which amounts to 50 decision points for the high-level agent since it acts every 30 steps). For each performance measurement, we take 30 random samples.

Here, we deviate from the general literature, where the test sets are much smaller than the training sets, and often each testing set has to be preceded by a training set due to what is considered a requirement of the nature of the market and its different regimes. It is assumed that for an agent to perform well on some data, it needs to be trained on the period right before. Because we deal with the different regimes in another way (by employing heterogeneous agents), we can train systems that perform well on an extensive testing set and do not need to be trained multiple times for each testing period. Our agents are general enough to deal well with any market regime.

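For the low level, the data are concatenated as $s_t = [o_{t-n+1:t}, h_{t-n+1:t}, l_{t-n+1:t}, c_{t-n+1:t}, v_{t-n+1:t}]$, where each of $o$, $h$, $l$, and $c$ corresponds to the last n steps of the respective price return (open, high, low, and close). By price return, we mean that $x_t = (p_t - p_{t-1}) / p_{t-1}$, with $p_t$ the raw price at timestep t. $v_t$ is the volume associated with timestep t, and is also a normalized difference: $v_t = (u_t - u_{t-1}) / u_{t-1}$, with $u_t$ the raw volume.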
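A small sketch of how such a low-level state could be assembled from raw candles is given below. The input layout (a dictionary of lists keyed by `open`, `high`, `low`, `close`, and `volume`) and the function names are assumptions for illustration, and the return is taken to be the simple relative difference between consecutive values.

```python
def normalized_diff(series):
    """(x_t - x_{t-1}) / x_{t-1} for every t >= 1."""
    return [(cur - prev) / prev for prev, cur in zip(series, series[1:])]


def low_level_state(candles, n):
    """Concatenate the last n normalized differences of each OHLCV series.

    `candles` maps 'open', 'high', 'low', 'close', 'volume' to lists of raw
    values (an assumed layout, not the format used by the authors).
    """
    state = []
    for key in ("open", "high", "low", "close", "volume"):
        state.extend(normalized_diff(candles[key])[-n:])
    return state
```

With five series and a history of n steps, the resulting state vector has 5n entries.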

3.2. Architecture

After we tried running multiple types of agents on the various parts of the dataset and obtained poor performance, we noticed that the performance was correlated with the training data; thus, we concluded that partitioning the data in some meaningful way might be fruitful. We describe this next.

The preprocessing for the high-level agent is performed by the clustering mechanism, where we classify each running episode in one of the existing clusters and feed as state the last n such classifications. To select the low-level agent to act, we consider this representation more appropriate since the classes represent the expertise of each agent in some sense. Moreover, neighboring episodes will have the same class, thus inducing smoothness in the representation and thus action selection, which is in line with our goals. We show the overall architecture of our learning system in Figure 1. The gray rectangles depict the logical agents, and the arrows represent the data flow, with dashed arrows representing the actions. We see that both levels incorporate a prediction model.

The low-level prediction improved the performance of the low-level agents only marginally, if at all (the results were inconclusive); thus, we do not include it in the final results. For the high-level state, we tried multiple approaches:

Using raw returns, as in the low-level agent;

Using the recent performance of each individual agent in its own simulated environment (similar to [

7]) and concatenating these for all agents;

Using concatenated samples from the prediction model instead of median or mean;

Using the result of the classification of the recent past and the prediction, applying the classification mechanism to both.

The best version used both classification and prediction (see

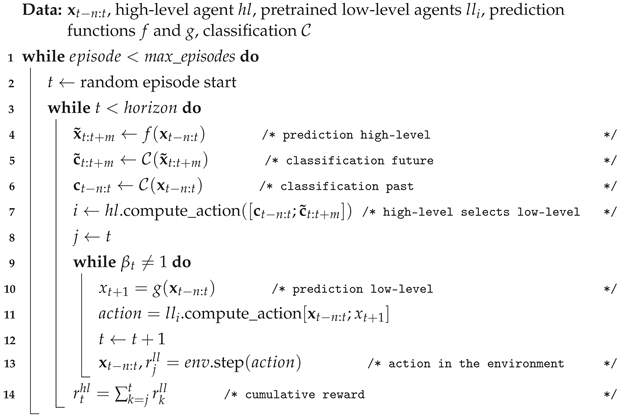

Section 4). In general, in our experience, larger state spaces induce more variability, which is also what happened when using raw samples from the prediction model. We show the full algorithm in Algorithm 1.

| Algorithm 1: Hierarchical model-based deep reinforcement learning for trading in evaluation mode. |

![Analytics 02 00031 i001 Analytics 02 00031 i001]() |

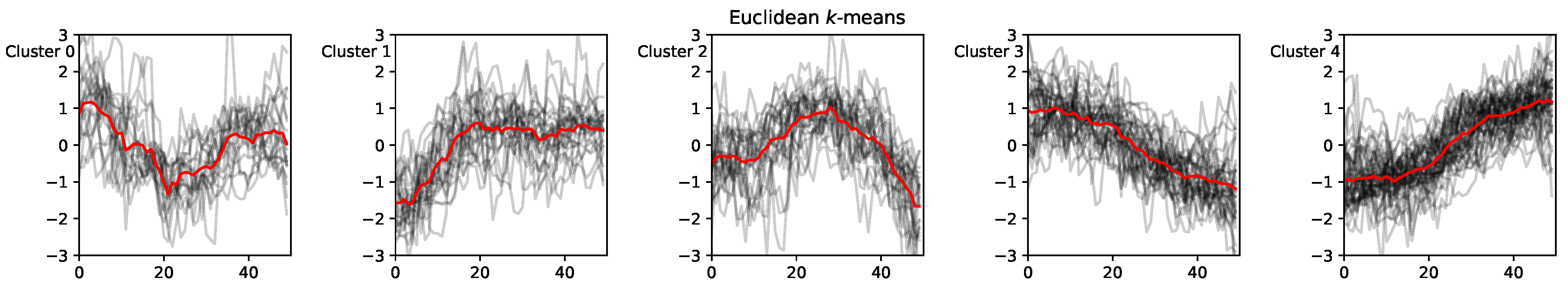
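The evaluation procedure described in the prose can be sketched as the loop below. This is one reading of the text under stated assumptions, not a transcription of Algorithm 1: the callables `select_agent`, `classify`, and `predict` are hypothetical stand-ins for the trained high-level policy, the clustering mechanism, and the prediction model, and the window passed to them is taken to be the last k prices for simplicity.

```python
def run_hierarchy(prices, low_level_agents, select_agent, classify, predict,
                  k=30, n=3):
    """Every k steps the high level picks which low-level agent trades next."""
    class_history = []  # classes of the windows observed so far
    chosen, actions = [], []
    active = None
    for t in range(k, len(prices)):
        if t % k == 0:  # termination fires: the high level decides again
            past = prices[t - k:t]
            future = predict(past)  # model-based step
            class_history.append(classify(past))
            # State: last n observed classes plus the class of the prediction
            state = class_history[-n:] + [classify(future)]
            active = select_agent(state)  # agent index, or None to hold
            chosen.append(active)
        if active is None:
            actions.append("hold")
        else:
            actions.append(low_level_agents[active](prices[:t + 1]))
    return chosen, actions
```

The high level therefore acts on a subsampled timeline, while the selected low-level agent acts at every step until the next decision point.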

3.2.1. Clustering as Preprocessing for the High-Level Agent

We endow the high-level agent with a preprocessing module that assigns the incoming time series to a specific cluster (one of five discovered beforehand when clustering the training set, see Figure 2).

We denote by

the state of the high-level agent. The state at timestep

t is given by

, where

is a vector of the last

n classifications, where each point

with

denotes the classification of the episode that ends at

.

is the prediction of the following episodes’ classes by the classification, which is fed with the prediction of the next timesteps. Thus, each point

with

is the classification of the (predicted) episode ending at

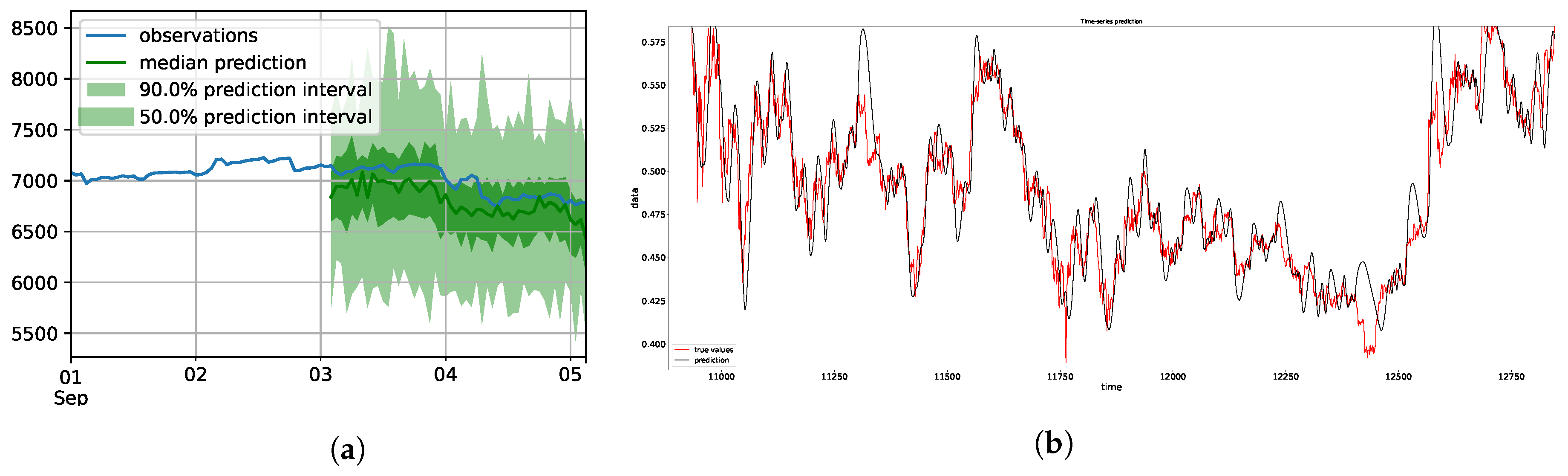

. Because the high-level agent takes decisions at a coarser data granularity, having such a multiple-step prediction model makes sense. We use a DeepAR model for our multiple-step prediction, which generally keeps the shape of the future trend but lacks minute precision, which is what we needed. We show the example predictions in

Figure 3a.

3.2.2. Pretraining the Low-Level Agents

We pretrain the low-level agents on specific data clusters, so each one specializes in one type of trend. We show the clusters and their means in Figure 2. We see that the five clusters capture some of the main trends in the market.

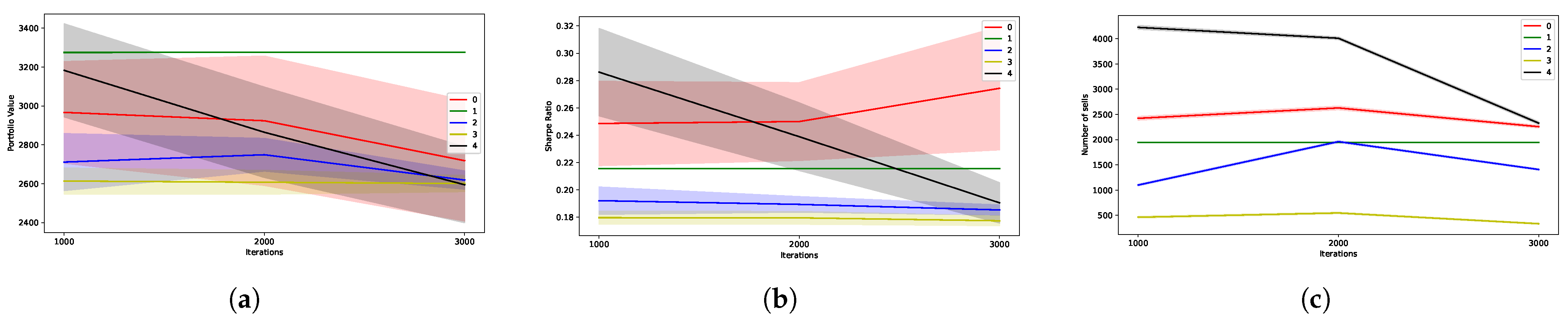

In Figure 4, we show the best performance of individual agents on the test set consisting of 15,000 points (21 months). We select the best one (among 24 trials) for each class for comparison. We perform 30 repetitions for each and depict the mean and standard deviation. We can conclude from these results a number of things:

Performance and trade dynamics vary widely between agents trained with different types of data;

More iterations do not mean better performance; we should be careful not to overfit;

More data does not mean better performance;

We should be watchful for possible pathologies (e.g., a small number of sells).

3.3. Prediction

In the context of RL, prediction assumes the existence of a model of the environment with which the agent can interact without incurring the losses associated with the real environment. This complete model comprises state and reward models, predicting the following states and rewards. We are only interested in the state model, as the reward model is completely dependent on the state model, in the sense that if we know the next state (price), we can easily compute the rewards, given the action taken.

For the low level, we tested with the true values instead of the predictions to see if the performance improved. We noticed a significant improvement. We repeated the value of the future step 10 times and then concatenated this to the state vector for the low level as a way of biasing the agent, such that this future value has a more considerable influence on the decision (having a single value did not seem to work too well). So, it is clear that accurate predictions can significantly improve performance and training time. We thus add a one-step prediction for each of the OHLCV time series, thus having five different prediction models for each agent. As we mentioned, this does not improve performance conclusively.
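The biasing trick described above, repeating the future value and concatenating it to the state, can be sketched as follows. The function name is hypothetical; the default of 10 repetitions comes from the text, and passing one value per OHLCV series mirrors the five one-step prediction models.

```python
def augment_state(state, next_values, repeats=10):
    """Append each predicted (or true) next value `repeats` times to the state."""
    augmented = list(state)
    for value in next_values:
        augmented.extend([value] * repeats)
    return augmented
```

Repeating a value does not add information; it only increases the share of the input vector that carries the prediction, which is the biasing effect the text refers to.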

For the high-level agent, the representation is in terms of classes (0 to 4), and thus the prediction should be the same. We also note that the history window size has now doubled compared to the low level since every point is now defined by an episode ending at that point. So, we predict multiple steps in the future and look at more steps in the past. We show an example in Figure 5.

3.4. Rewards

The rewards for the low-level agent are given by the usual profit and loss (PnL). We also standardize the rewards, subtracting the running mean and dividing by the running standard deviation of the current episode at each step. We perform this operation for all types of rewards used and all agents.
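A running standardization of this kind can be sketched with Welford's online update, as below. Whether the current reward is included in the running statistics before it is standardized, and the small constant `eps` guarding against a zero standard deviation, are assumptions of this sketch; the class name is hypothetical.

```python
import math


class RunningStandardizer:
    """Standardize rewards with the running mean and std of the current episode."""

    def __init__(self, eps=1e-8):
        self.eps = eps  # avoids division by zero on the first step
        self.reset()

    def reset(self):
        """Call at the start of every episode."""
        self.count, self.mean, self.m2 = 0, 0.0, 0.0

    def __call__(self, reward):
        # Welford's online update of the mean and the sum of squared deviations
        self.count += 1
        delta = reward - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (reward - self.mean)
        std = math.sqrt(self.m2 / self.count)
        return (reward - self.mean) / (std + self.eps)
```

The same object can be reused across reward types as long as `reset` is called between episodes.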

For the high-level agent, we feed the cumulative profit and loss for each low-level episode, while for the low-level agent, we feed the realized PnL (profit and loss), computed with respect to the first open buy price (see Appendix A.1).

3.5. Termination Function and Diversification

By looking at the low-level agents’ pretraining performance, we can draw some conclusions:

If a large loss has been incurred, there are chances that this is a period of losses for this agent (not only this agent; we see that some periods of losses coincide for all agents). Thus, we add a hold action for the high-level agent.

Recent agent performance could be relevant for the immediate future.

Agents are not complementary, but combining more of them can improve performance.

Improving diversification in the low-level dynamics might improve overall performance.

We hypothesized that the termination function would be necessary for trading and that the overall strategy could be improved by learning when to terminate, but it seems not. We tested with multiple values (10, 30, and 300), and the difference is small, especially among lower values. We settled on 30 as the value, which seemed more robust.

4. Experiments and Results

First, we note the usual RL instability in training and emphasize that the best hyperparameters were different in each selection (for the pretrained agents and the hierarchical agent), so running multiple trials with different configurations of hyperparameters at each experimental stage is critical for obtaining reasonable solutions. We evaluate 30 samples for each curve after an initial run of 24 trials and select the best hyperparameter configuration (see Appendix A.2). A significant improvement was the addition of the ability to hold (or do nothing) for the high-level agent, thus allowing for a more extended period (the terminating value k), which makes sense because, as we saw from the low-level pretraining, there are extended periods where it is better to do nothing as it is almost impossible to be profitable.

Performance gains were hard to obtain with this setup due to the fact that agents were not very dissimilar when making profits; thus, we wanted to add more agents but with different trading dynamics. We proceed to describe these steps.

4.1. Hierarchy with Two Simple Strategies

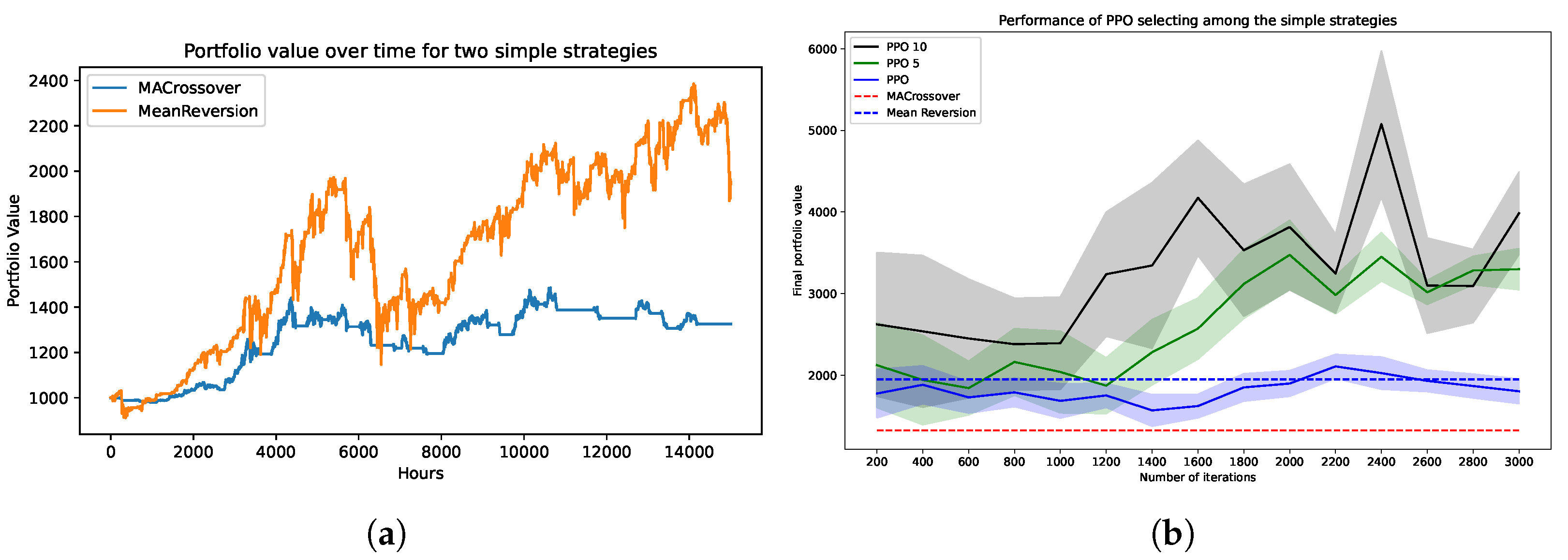

We test two basic strategies, a mean reversion and a moving average crossover, which can be considered a momentum strategy. Figure 6a shows the performance of the two basic strategies. We then check to see if using a DRL agent to select between the two strategies is of any help. We also add the hold option, so the agent chooses among three discrete actions. We show the performance of the DRL agent (we use a PPO agent as before) in

Figure 6b. We see that performance is indeed better than in each of the two individual strategies. We use a minimal

network with a

activation function. We try three decision intervals for the PPO: every step, once every five steps (PPO 5), and once every ten steps (PPO 10).

The mean reversion strategy uses two indicators: a relative strength index (RSI) indicator with an exponential moving window working over a period of 6 h and a moving average over a period of 20 h. The strategy buys if the RSI value is less than a certain threshold (40 in our case) and the price is below the lower Bollinger band, and it sells if the RSI value is higher than a certain threshold (60 in our case) and the current price is above the upper Bollinger band. The upper and lower Bollinger bands are placed symmetrically around the moving average, at a multiple of the rolling standard deviation of the time series (over the same period as the mean: 20 h). The strategy holds or does nothing if none of the above conditions are met.

The moving average crossover strategy is even more straightforward. We have two different moving averages (in our case, one works on a 100 h window and the other on a 400 h window), and when the shorter one surpasses the longer one, it is a buy signal, and when the reverse is the case, it is a sell signal.
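The two rule-based strategies can be sketched as follows. The thresholds (40 and 60), the periods (6 h, 20 h, 100 h, 400 h), and the buy/sell/hold logic come from the text; the band multiplier of 2, the smoothing factor used for the exponential averages in the RSI, the use of the population standard deviation, and the function names are assumptions.

```python
from statistics import mean, pstdev


def rsi(prices, period=6):
    """RSI from exponentially weighted average gains and losses."""
    alpha = 2.0 / (period + 1)  # assumed EMA smoothing factor
    avg_gain = avg_loss = 0.0
    for prev, cur in zip(prices, prices[1:]):
        change = cur - prev
        avg_gain = alpha * max(change, 0.0) + (1 - alpha) * avg_gain
        avg_loss = alpha * max(-change, 0.0) + (1 - alpha) * avg_loss
    if avg_gain == 0.0 and avg_loss == 0.0:
        return 50.0  # flat series: neutral reading
    if avg_loss == 0.0:
        return 100.0
    return 100.0 - 100.0 / (1.0 + avg_gain / avg_loss)


def mean_reversion_signal(prices, rsi_period=6, band_period=20,
                          band_mult=2.0, low=40.0, high=60.0):
    """Buy below the lower band with a low RSI, sell above the upper band."""
    window = prices[-band_period:]
    mid, sd = mean(window), pstdev(window)
    value = rsi(prices, rsi_period)
    if value < low and prices[-1] < mid - band_mult * sd:
        return "buy"
    if value > high and prices[-1] > mid + band_mult * sd:
        return "sell"
    return "hold"


def crossover_signal(prices, short=100, long=400):
    """Buy when the short average crosses above the long one, sell on the reverse."""
    def averages(series):
        return mean(series[-short:]), mean(series[-long:])

    prev_short, prev_long = averages(prices[:-1])
    cur_short, cur_long = averages(prices)
    if prev_short <= prev_long and cur_short > cur_long:
        return "buy"
    if prev_short >= prev_long and cur_short < cur_long:
        return "sell"
    return "hold"
```

Both functions return one of three labels, which makes them interchangeable with the pretrained agents from the point of view of the high-level agent.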

We see that these models can be leveraged to perform better when combined through an additional layer of decision-making. Thus, we include these two simple models in our final set of low-level agents. The results in

Figure 7 and

Figure 8 were obtained with this setup. We can see in

Figure 7 the performance of various models tested throughout, with the best one being the one that uses both classification and prediction. In

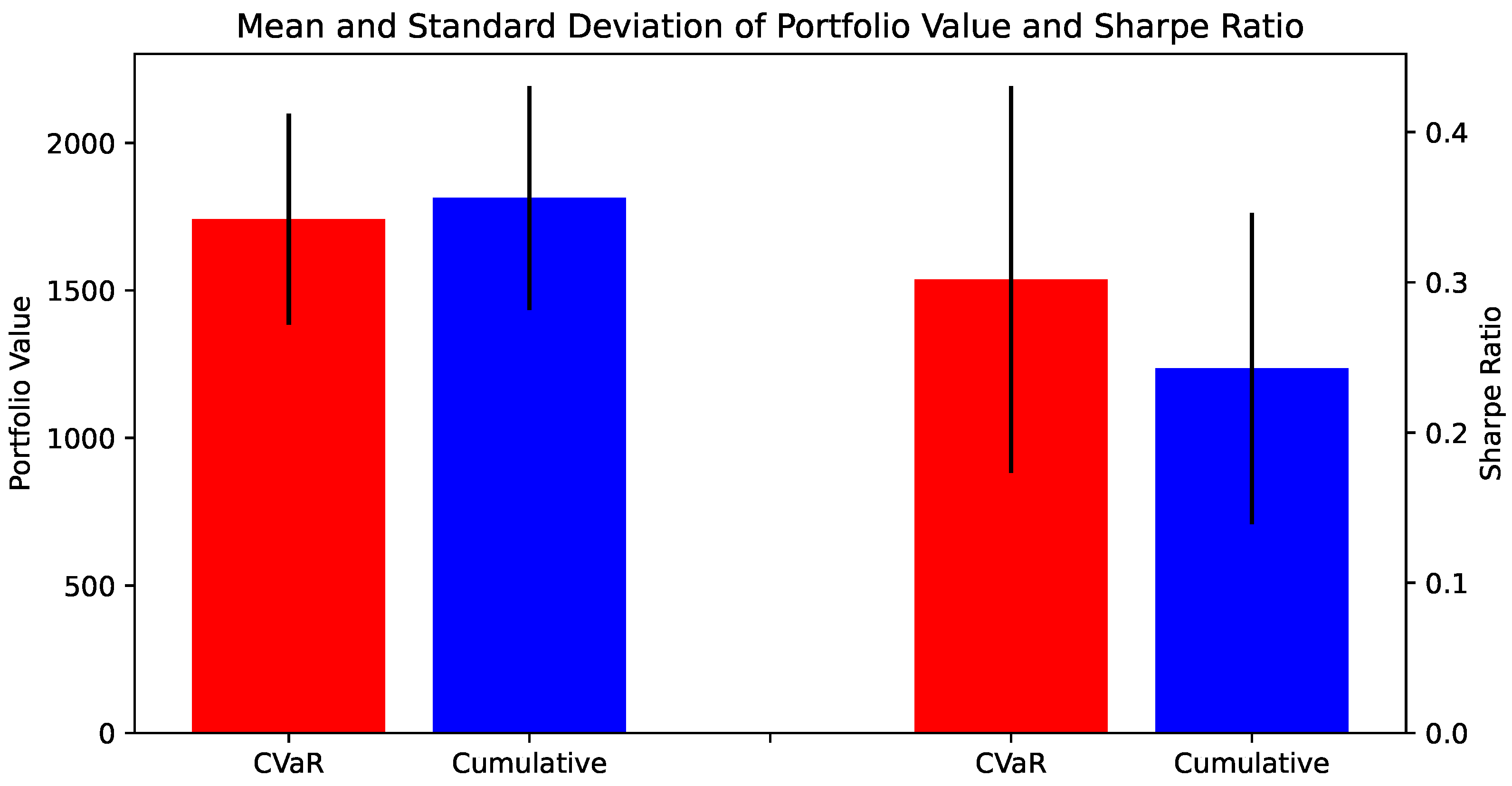

Figure 8, we see a significant rise in the Sharpe ratio and a small drop in performance for the agent using risk-return rewards, which looks very promising for a first set of experiments.

4.2. Risk-Return Optimization

The Markowitz model implies that one wants to minimize risk and maximize returns, and a hierarchical approach naturally lends itself to such a dual optimization. We thus test this approach as well, with the high-level agent optimizing for risk. The high level is rewarded based on the conditional value at risk (CVaR). To define the CVaR, we first need to define the value at risk (VaR).

VaR: Value at risk is a measure describing how much value is at risk during a specific period and its probability of occurrence. Many have criticized this measure as being uninformative or giving a false sense of certainty and security where there should be none. One of the main arguments against using VaR is that it is a measure for describing rare events that are hard to describe.

Formally, let X be a random variable (rv) describing profits (positive) and losses (negative), associated with a certain distribution. The VaR at level $\alpha \in (0, 1)$ gives the smallest number y, such that the probability that $X$ is not larger than y is at least $\alpha$. We denote this as $\mathrm{VaR}_\alpha(X)$, and the most general definition is given by:

$$\mathrm{VaR}_\alpha(X) = \inf \{ y \in \mathbb{R} : F_X(y) \geq \alpha \}$$

The function $F_X$ is the cumulative distribution function (cdf) of the random variable X (for any rv, its cdf is well defined). There are multiple ways of computing the VaR:

Either by looking at past returns, assuming that future outcomes will be similar to past ones;

By assuming a parametric distribution of the returns, such as a Gaussian distribution;

By using Monte Carlo simulations of predicted returns.

We use the first method as it is the simplest and quite efficient. We use .

CVaR: Conditional value at risk is a measure related to VaR but more comprehensive as it looks at the expected value beyond the VaR threshold:

$$\mathrm{CVaR}_\alpha(X) = -\frac{1}{\alpha} \int_0^\alpha \mathrm{VaR}_u(X) \, du$$

Because losses are negative, then their expectations would also be negative, and we want a positive risk measure (since it should represent the magnitude of losses). We thus add a minus sign to ensure that the final value is positive. If we assume that the distribution is continuous, then this is equivalent to the conditional tail expectation given by:

$$\mathrm{CVaR}_\alpha(X) = -\mathbb{E}\left[ X \mid X \leq \mathrm{VaR}_\alpha(X) \right]$$

CVaR is also known as the expected shortfall and can be seen as describing the average loss case when the losses are severe (they only occur a fraction $\alpha$ of the time).
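A minimal sketch of the historical (empirical) computation of VaR and CVaR from past returns is shown below. It treats VaR as the lower quantile of the profit-and-loss values and CVaR as minus the mean of the values at or below that quantile. The default level of 0.05 is an assumption made for illustration, and the function names are hypothetical.

```python
import math


def historical_var(returns, alpha=0.05):
    """Empirical lower alpha-quantile: smallest y with F(y) >= alpha."""
    ordered = sorted(returns)
    k = max(1, math.ceil(alpha * len(ordered)))
    return ordered[k - 1]


def historical_cvar(returns, alpha=0.05):
    """Minus the mean of the returns at or below the VaR threshold."""
    var = historical_var(returns, alpha)
    tail = [r for r in returns if r <= var]
    return -sum(tail) / len(tail)
```

For example, with the eight returns `[-0.10, -0.08, 0.01, 0.02, 0.03, 0.01, 0.04, 0.02]` and `alpha=0.25`, the threshold is the second-smallest value, -0.08, and the CVaR is the average magnitude of the two worst returns, approximately 0.09.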

When comparing the original version, using a cumulative return for the high level, versus the risk-return version, using CVaR as a reward, we obtain a significant difference with a larger Sharpe ratio for the CVaR agent (running a t-test between the two populations gave a p-value of 0.02, so the null hypothesis can be rejected, i.e., the two populations’ means are not equal).

To evaluate the performance of the risk-return model, we compute the monthly Sharpe ratio, which will be independent of the frequency of trading or data used. Otherwise, we risk sampling bias, with the Sharpe ratio dependent on the period length used for calculation. The monthly Sharpe ratio is computed as follows:

$$S = \frac{\bar{R}}{\sigma_R}, \qquad \bar{R} = \frac{1}{n} \sum_{i=1}^{n} (R_i - R_f)$$

where $\bar{R}$ is the average monthly excess return, $\sigma_R$ is the standard deviation of the monthly excess returns, $R_i$ is the return of the portfolio in month i, $R_f$ is the risk-free return (which can be taken to vary each month but which we keep constant for simplicity), and

n is the number of months. The risk-free return is generally quite low (initially taken as the return of US bonds); we take it as

.
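The monthly Sharpe ratio can be computed from a list of monthly portfolio returns as in the sketch below. The constant risk-free return is passed as a parameter whose default of 0.0 is an assumption for illustration, and the sample standard deviation (dividing by n - 1) is likewise an assumption of this sketch.

```python
from statistics import mean, stdev


def monthly_sharpe(monthly_returns, risk_free=0.0):
    """Mean monthly excess return divided by its sample standard deviation."""
    excess = [r - risk_free for r in monthly_returns]
    return mean(excess) / stdev(excess)
```

Because the ratio is computed on returns aggregated per month, it does not depend on how many trades were made within each month.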

5. Discussion

Our findings confirm the utility of both hierarchy and prediction in enhancing performance. In the hierarchical reinforcement learning framework, our results indicate that low-level one-step prediction did not yield significant performance improvements. However, an interesting avenue that emerges from this finding is the potential inadequacy of the current prediction model at this level. Therefore, further research is needed to investigate more efficient prediction models that could yield better performance at the low level.

For the high level, using predictions in conjunction with incremental classifications of these predictions significantly improved performance. The introduction of a risk-based reward measure such as CVaR altered the problem dynamic to a risk-return optimization, with the low level being incentivized for greater returns and the high level being rewarded for risk reduction. This balance of risk and reward is a crucial aspect of any market setting, and the inclusion of domain knowledge through clustering further sets our model apart from traditional hierarchical reinforcement learning approaches.

By leveraging hierarchy and prediction for trading a single asset, we were able to significantly improve performance in terms of overall returns and the Sharpe ratio. The large testing set used in this study also minimizes sampling bias, reinforcing the robustness of our findings.

Finally, the possibility of mapping the natural hierarchical structures seen in traditional portfolio management to this hierarchical system could provide a consistent and intuitive framework for managing heterogeneous assets. This integration could be the next frontier in the development of more effective and adaptable trading systems.

6. Conclusions

Both hierarchy and prediction can significantly improve performance. Even though we have not seen any performance benefit from adding one-step prediction at the low level, we have tested to see if good prediction does improve performance, and indeed it is so; using true values instead of predictions does provide positive results. This means that the prediction model used for the low level is insufficient.

For the high level, we saw that prediction used in conjunction with incrementally classifying predictions does offer significant performance improvements, even though neither of the two methods used separately offered any improvement. Moreover, we saw that changing the reward of the high level to a risk-based measure such as CVaR transforms the whole problem into a risk-return optimization, where the low level is rewarded for increased returns and the high level receives rewards for lowering the risk (or the risk measure).

Some aspects in which our work differs from traditional hierarchical reinforcement learning are:

Easy use of prediction at both levels (this can be used by any RL algorithm);

Incorporation of domain knowledge (the clustering part);

Balancing of risk and reward between the two sets of agents (very desirable in any market setting).

7. Future Directions

The current work can be extended by considering specific goals that the high-level agents might give the low-level ones, such as, for example, a desired (approximate) number of trades or a desired amount of risk to seek for the period (Sharpe ratio or Sortino ratio). The closer to the goal, the bigger the reward for the low-level agent. In this way, the behavior of the low-level agents can be constructed around specific desiderata.

One interesting behavior that could be enacted in this type of architecture is for one agent to control the learning of another (meta-learning), where, for example, the high level controls a parameter that enters the reward of the low level.

Other ways that the hierarchical decision-making setup can be further leveraged are to devise complementary low-level agents, where the trading dynamics are as different as possible while still retaining reasonable performance individually. Moreover, training one level of agents with a certain risk measure and the other with the returns has not been explored fully in this article. For example, one could try using the risk measure in the low level and the returns for the high level. Or, alternatively, train them at the same time without pretraining the low level. However, this is usually computationally more intensive and more unstable, adding to the usual instability of RL training.

In this article, we showed how leveraging hierarchy and prediction for trading a single asset can significantly improve performance, both in terms of overall returns and the Sharpe ratio. We used a much larger testing set to avoid the sampling bias usually encountered in such scenarios (cherry picking) and showed that even simple strategies, such as the mean reversion or moving average crossover, can be leveraged with the help of an additional decision-making layer.

Furthermore, natural hierarchical structures seen in the usual portfolio management literature (e.g., asset classes based on industry type or country) could be mapped to such a hierarchical system in a straightforward manner, providing a consistent way of dealing with heterogeneous assets.