Anxiety among Medical Students Regarding Generative Artificial Intelligence Models: A Pilot Descriptive Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design and Ethical Permission

2.2. Survey Instrument Development

2.3. Final Survey Used

2.4. Sample Size Calculation

2.5. Statistical and Data Analysis

3. Results

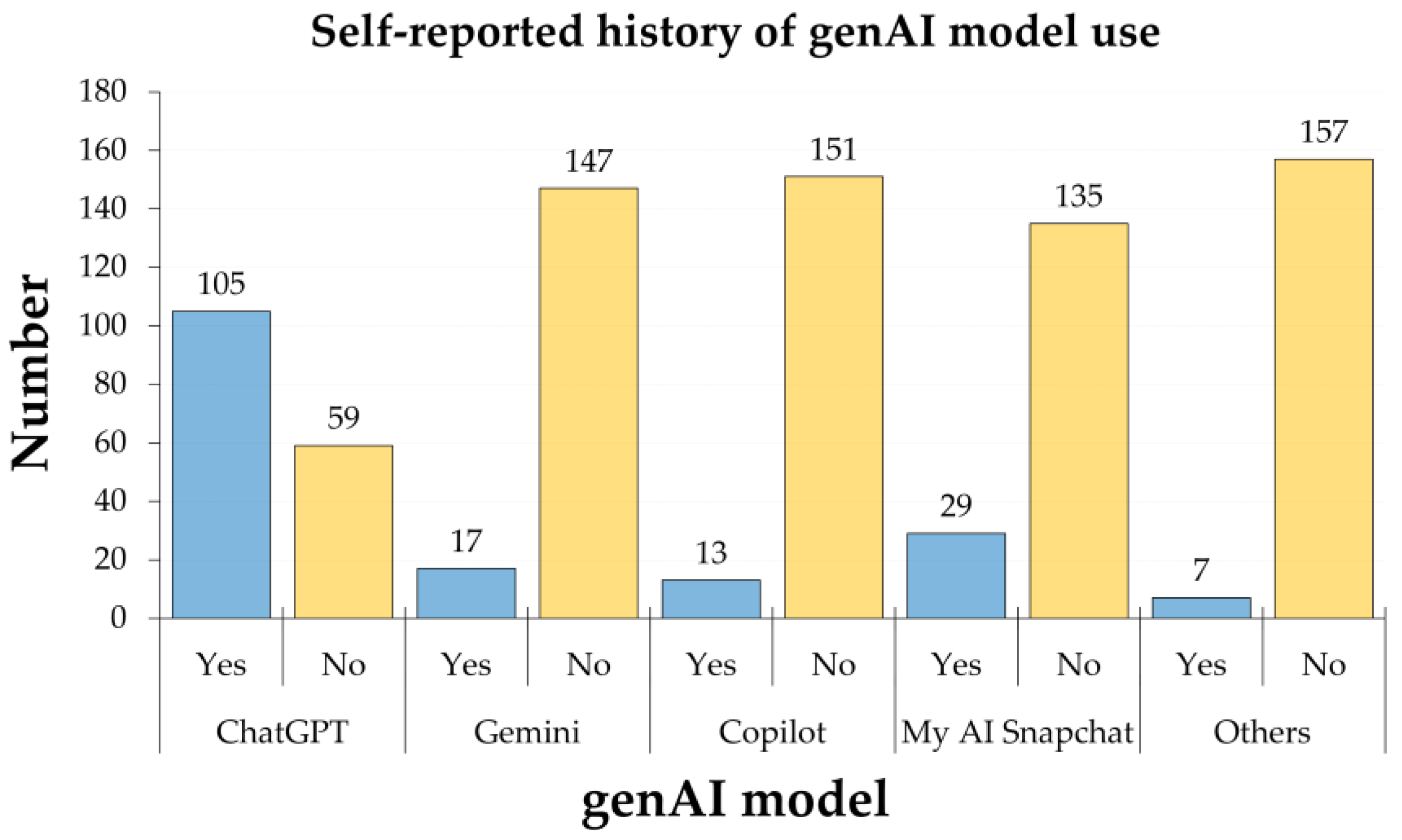

3.1. General Features of Participating Medical Students

3.2. The Level of Anxiety Toward genAI and Its Associated Determinants

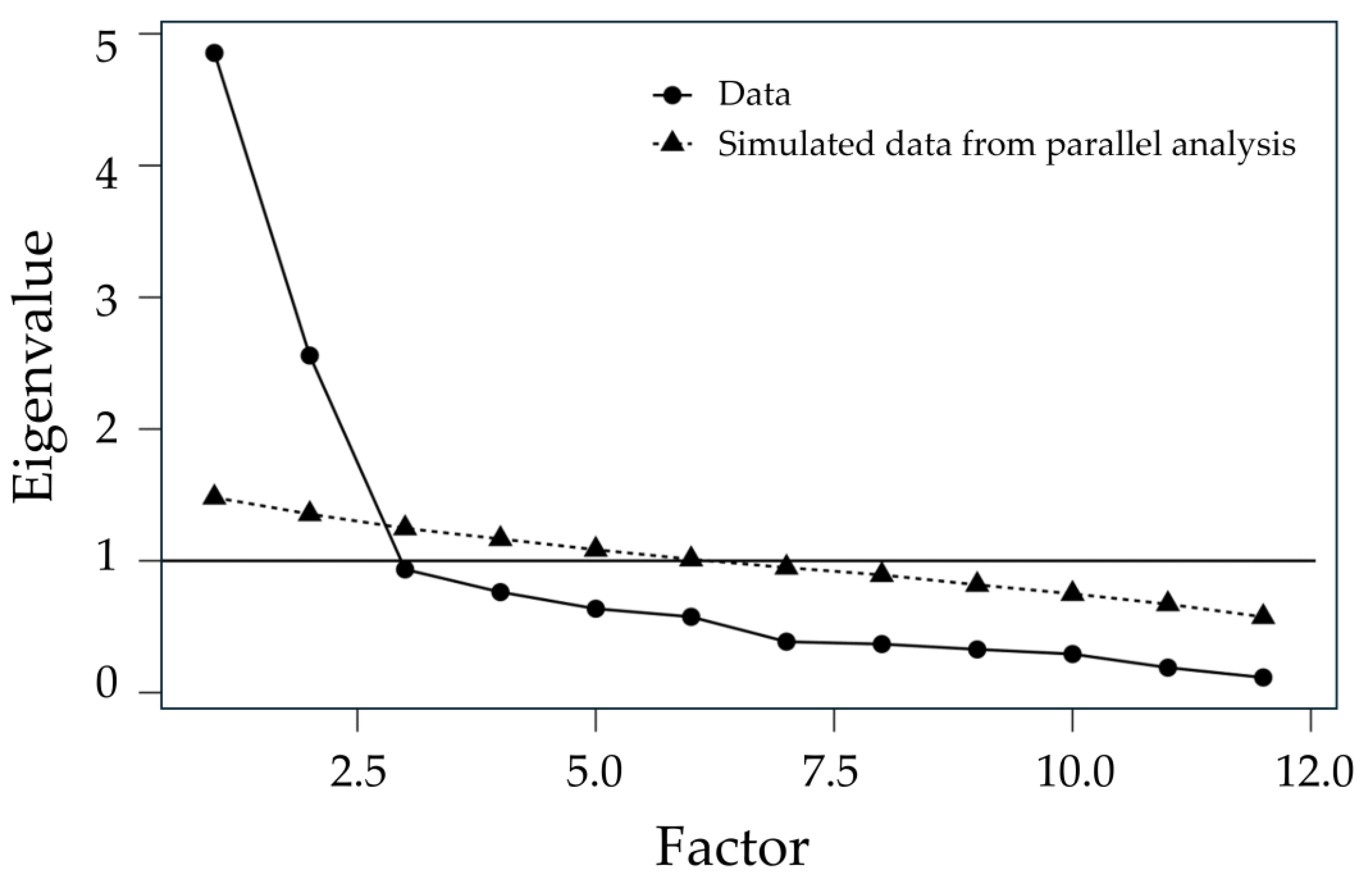

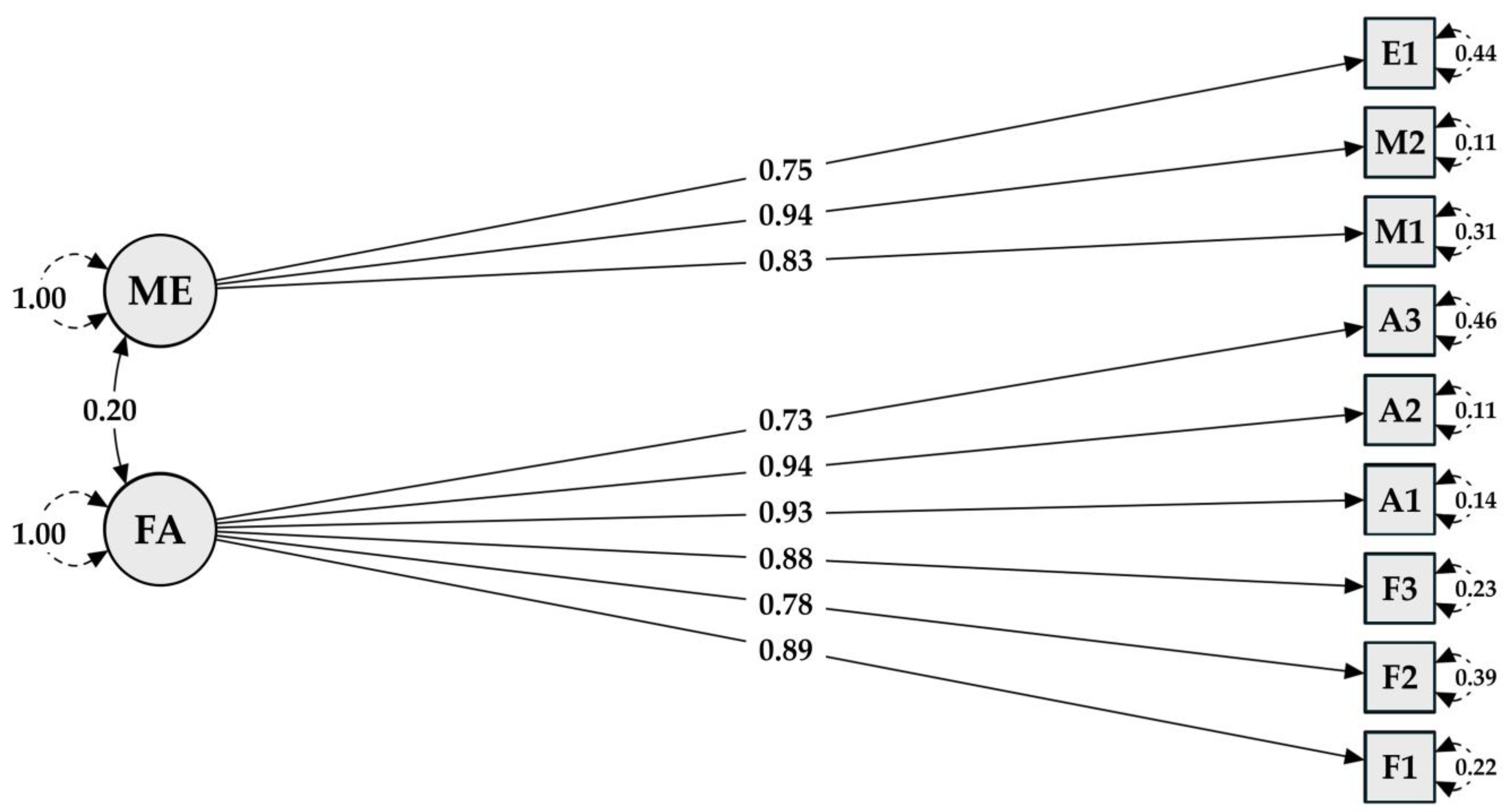

3.3. FAME Construct’s Reliability

3.4. FAME Constructs Scores

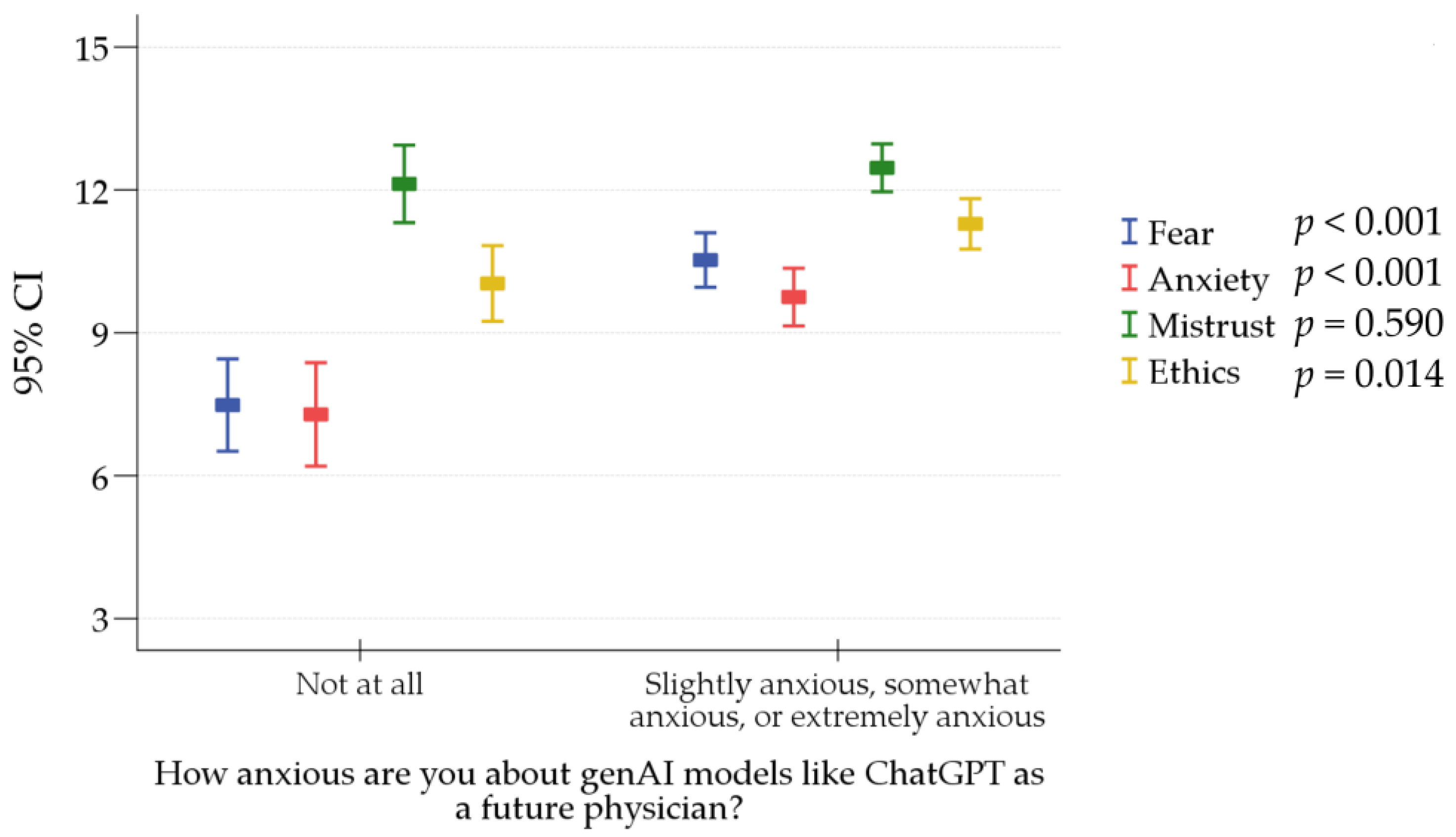

3.5. Determinants of Anxiety about genAI among Participating Medical Students

4. Discussion

4.1. Recommendations Based on This Study’s Findings

4.2. Study Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CI | Confidence Interval |
| FAME | Fear, Anxiety, Mistrust, and Ethics |
| genAI | Generative Artificial Intelligence |
| GFI | Goodness of Fit Index |
| GPA | Grade Point Average |
| ICC | Intraclass Correlation Coefficient |
| KMO | Kaiser–Meyer–Olkin Measure |
| RMSEA | Root Mean Square Error of Approximation |
| SD | Standard Deviation |
| SRMR | Standardized Root Mean Square Residual |
| TAM | Technology Acceptance Model |
| UAE | United Arab Emirates |

| Variable | Category | Count | Percentage |
|---|---|---|---|
| Sex | Male | 88 | 53.7% |
| | Female | 76 | 46.3% |
| Academic year | First year | 25 | 15.2% |
| | Second year | 52 | 31.7% |
| | Third year | 36 | 22.0% |
| | Fourth year | 20 | 12.2% |
| | Fifth year | 19 | 11.6% |
| | Sixth year | 12 | 7.3% |
| GPA ¹ | Unsatisfactory | 8 | 4.9% |
| | Satisfactory | 15 | 9.1% |
| | Good | 57 | 34.8% |
| | Very good | 65 | 39.6% |
| | Excellent | 19 | 11.6% |
| Desired specialty classification based on the risk of job loss due to genAI ² | Low risk ³ | 68 | 51.5% |
| | Middle risk ⁴ | 48 | 36.4% |
| | High risk ⁵ | 16 | 12.1% |
| How anxious are you about genAI models, like ChatGPT as a future physician? | Not at all | 56 | 34.1% |
| | Slightly anxious | 68 | 41.5% |
| | Somewhat anxious | 36 | 22.0% |
| | Extremely anxious | 4 | 2.4% |
| Number of genAI models used | 0 | 45 | 27.4% |
| | 1 | 77 | 47.0% |
| | 2 | 33 | 20.1% |
| | 3 | 5 | 3.0% |
| | 4 | 4 | 2.4% |
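The percentage column can be checked directly from the counts. The sketch below is illustrative only: the helper name `percent` and the dictionary layout are assumptions made for this example, not part of the study's analysis. It shows that the sex percentages use all 164 respondents as the denominator, whereas the desired-specialty percentages use the 132 respondents who appear in those rows (68 + 48 + 16).

```python
def percent(count, total):
    """Share of `count` in `total`, as a percentage rounded to one decimal."""
    return round(100 * count / total, 1)

# Counts copied from the table above; the grouping into dicts is illustrative.
sex = {"Male": 88, "Female": 76}
specialty = {"Low risk": 68, "Middle risk": 48, "High risk": 16}

for name, group in (("Sex", sex), ("Desired specialty", specialty)):
    total = sum(group.values())  # denominator is the sum of that variable's rows
    shares = {category: percent(n, total) for category, n in group.items()}
    print(name, total, shares)
```

Each variable's denominator is the sum of its own rows, so a variable with fewer classified responses is reported against a smaller total.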

Anxiety item: "How anxious are you about genAI models, like ChatGPT as a future physician?"

| Variable | Category | Not at all, count (%) | Slightly anxious, somewhat anxious, or extremely anxious, count (%) | p value |
|---|---|---|---|---|
| Age | Mean ± SD ² | 21.66 ± 3.12 | 20.8 ± 1.61 | 0.186 |
| Sex | Male | 29 (33.0) | 59 (67.0) | 0.729 |
| | Female | 27 (35.5) | 49 (64.5) | |
| Level | Basic | 36 (31.9) | 77 (68.1) | 0.358 |
| | Clinical | 20 (39.2) | 31 (60.8) | |
| GPA ¹ | Unsatisfactory, satisfactory, good | 26 (32.5) | 54 (67.5) | 0.664 |
| | Very good, excellent | 30 (35.7) | 54 (64.3) | |
| Desired specialty | Low risk ³ | 19 (27.9) | 49 (72.1) | 0.504 |
| | Middle risk ⁴ | 18 (37.5) | 30 (62.5) | |
| | High risk ⁵ | 6 (37.5) | 10 (62.5) | |
| Number of genAI models used | 0 | 14 (31.1) | 31 (68.9) | 0.895 |
| | 1 | 28 (36.4) | 49 (63.6) | |
| | 2 | 10 (30.3) | 23 (69.7) | |
| | 3 | 2 (40.0) | 3 (60.0) | |
| | 4 | 2 (50.0) | 2 (50.0) | |

| Variable | Category | Fear, mean ± SD ⁶ | Fear, p value | Anxiety, mean ± SD | Anxiety, p value | Mistrust, mean ± SD | Mistrust, p value | Ethics, mean ± SD | Ethics, p value |
|---|---|---|---|---|---|---|---|---|---|
| Sex | Male | 9.55 ± 3.31 | 0.972 | 9.00 ± 3.61 | 0.739 | 12.3 ± 2.75 | 0.728 | 10.86 ± 3.10 | 0.983 |
| | Female | 9.42 ± 3.77 | | 8.80 ± 3.78 | | 12.41 ± 2.82 | | 10.86 ± 2.66 | |
| Level | Basic | 9.57 ± 3.54 | 0.683 | 8.85 ± 3.62 | 0.781 | 12.33 ± 2.70 | 0.596 | 10.65 ± 2.86 | 0.108 |
| | Clinical | 9.31 ± 3.52 | | 9.04 ± 3.84 | | 12.39 ± 2.97 | | 11.31 ± 2.96 | |
| GPA ¹ | Unsatisfactory, satisfactory, good | 9.51 ± 3.53 | 0.803 | 9.36 ± 3.81 | 0.082 | 12.14 ± 2.87 | 0.277 | 10.86 ± 2.88 | 0.987 |
| | Very good, excellent | 9.46 ± 3.54 | | 8.48 ± 3.52 | | 12.55 ± 2.69 | | 10.86 ± 2.93 | |
| Desired specialty | Low risk ³ | 9.85 ± 3.43 | 0.504 | 9.09 ± 3.62 | 0.796 | 12.35 ± 2.87 | 0.953 | 11.18 ± 2.88 | 0.812 |
| | Middle risk ⁴ | 9.10 ± 3.58 | | 8.79 ± 3.92 | | 12.44 ± 2.74 | | 11.19 ± 2.71 | |
| | High risk ⁵ | 8.94 ± 3.57 | | 9.63 ± 3.58 | | 12.94 ± 1.88 | | 10.75 ± 2.98 | |
| Number of genAI ² models used | 0 | 10.31 ± 3.38 | 0.362 | 9.47 ± 3.49 | 0.581 | 12.27 ± 2.59 | 0.106 | 10.87 ± 2.52 | 0.496 |
| | 1 | 9.06 ± 3.56 | | 8.51 ± 3.61 | | 12.57 ± 2.91 | | 10.78 ± 3.05 | |
| | 2 | 9.39 ± 3.60 | | 8.88 ± 3.85 | | 12.64 ± 2.19 | | 11.18 ± 2.78 | |
| | 3 | 9.80 ± 4.21 | | 10.40 ± 5.90 | | 9.80 ± 4.44 | | 11.40 ± 4.93 | |
| | 4 | 8.75 ± 3.20 | | 8.75 ± 3.20 | | 9.75 ± 2.63 | | 9.00 ± 2.58 | |
| How anxious are you about genAI models like ChatGPT as a future physician? | Not at all | 7.48 ± 3.62 | <0.001 | 7.29 ± 4.06 | <0.001 | 12.13 ± 3.04 | 0.590 | 10.04 ± 2.97 | 0.014 |
| | Slightly anxious, somewhat anxious, or extremely anxious | 10.53 ± 3.00 | | 9.75 ± 3.17 | | 12.46 ± 2.64 | | 11.29 ± 2.78 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Published by MDPI on behalf of the Academic Society for International Medical Education. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sallam, M.; Al-Mahzoum, K.; Almutairi, Y.M.; Alaqeel, O.; Abu Salami, A.; Almutairi, Z.E.; Alsarraf, A.N.; Barakat, M. Anxiety among Medical Students Regarding Generative Artificial Intelligence Models: A Pilot Descriptive Study. Int. Med. Educ. 2024, 3, 406-425. https://doi.org/10.3390/ime3040031