Benchmarking Foundation Models for Time-Series Forecasting: Zero-Shot, Few-Shot, and Full-Shot Evaluations †

Abstract

1. Introduction

2. Materials and Methods

2.1. Datasets

2.2. Models

2.3. Metrics

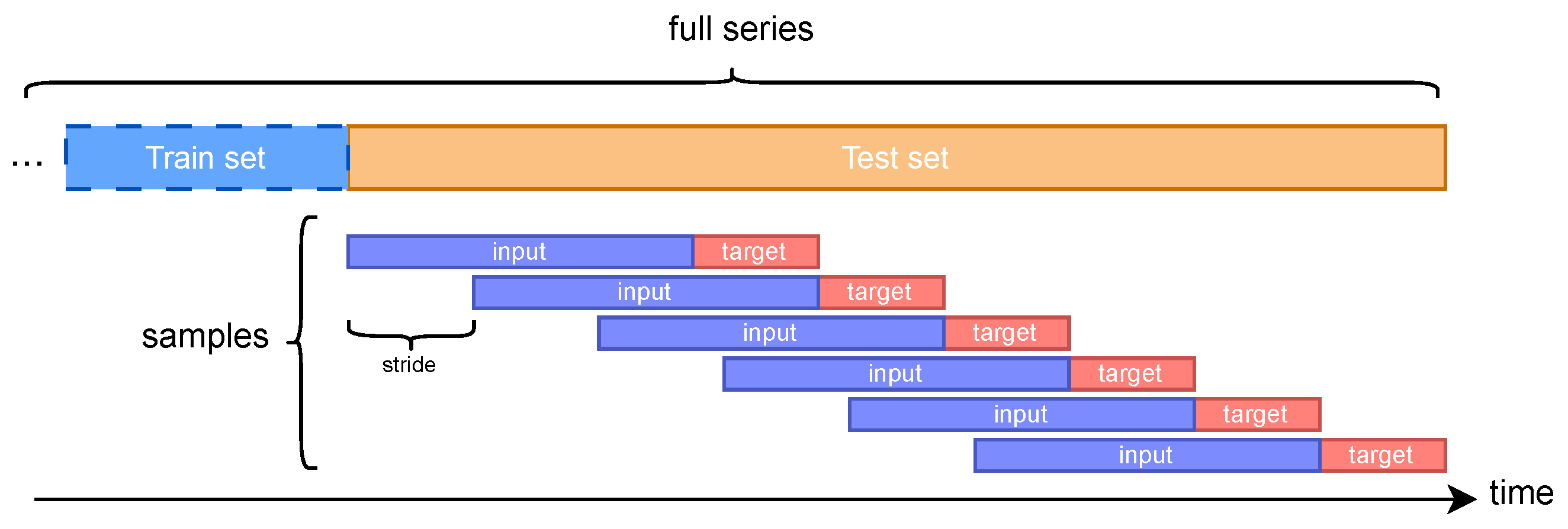
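The results tables in this article report two metrics, sMAPE and NMAE. The sketch below shows one common way to compute them. It is not necessarily the exact formulation used by the authors. The function names `smape` and `nmae`, the `eps` guard, and the choice to normalise the MAE by the mean absolute actual value are all assumptions made for illustration.

```python
import numpy as np


def smape(y_true, y_pred, eps=1e-8):
    """Symmetric MAPE in percent: 100 * mean(2|y - yhat| / (|y| + |yhat|))."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    denom = np.abs(y_true) + np.abs(y_pred)
    # eps avoids a 0/0 division when actual and forecast are both zero
    return 100.0 * np.mean(2.0 * np.abs(y_true - y_pred) / np.maximum(denom, eps))


def nmae(y_true, y_pred, eps=1e-8):
    """MAE divided by the mean absolute actual value (assumed normalisation)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    mae = np.mean(np.abs(y_true - y_pred))
    # eps avoids division by zero for an all-zero target series
    return mae / max(np.mean(np.abs(y_true)), eps)


actual = [100.0, 200.0, 300.0]
forecast = [110.0, 190.0, 330.0]
print(f"sMAPE = {smape(actual, forecast):.3f} %")
print(f"NMAE  = {nmae(actual, forecast):.4f}")
```

Under this assumed normalisation, a target series whose values are close to zero makes the NMAE denominator small, so the score can become very large even when absolute errors are moderate.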

2.4. Scenarios

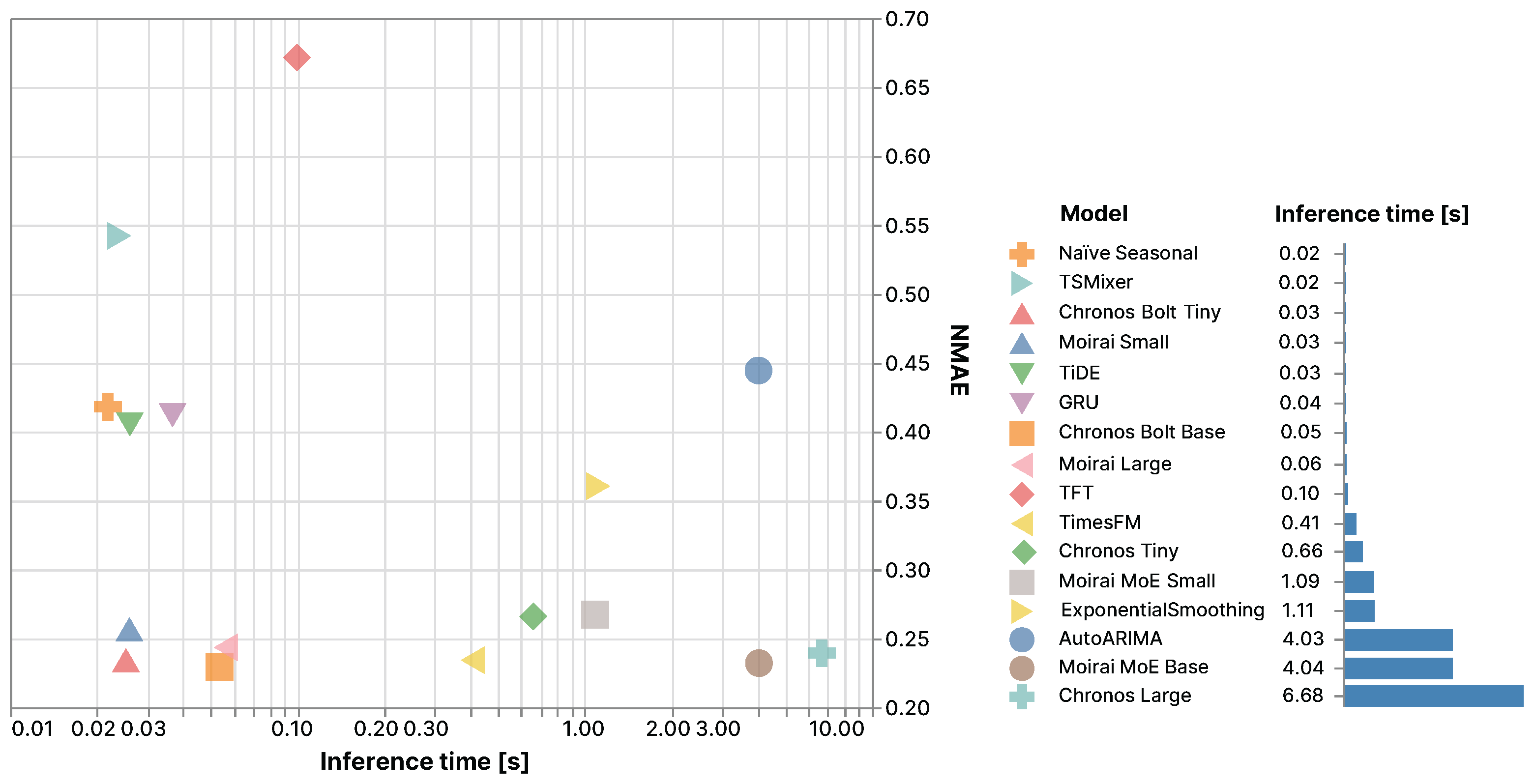

2.5. Evaluation Framework and Infrastructure

3. Results

3.1. Predictions Across Models

3.2. Prediction Horizons

3.3. Few-Shot Learning with Various Data Proportions

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Ansari, A.F.; Stella, L.; Turkmen, C.; Zhang, X.; Mercado, P.; Shen, H.; Shchur, O.; Rangapuram, S.S.; Pineda Arango, S.; Kapoor, S.; et al. Chronos: Learning the Language of Time Series. arXiv 2024, arXiv:2403.07815.

2. Liu, X.; Liu, J.; Woo, G.; Aksu, T.; Liang, Y.; Zimmermann, R.; Liu, C.; Savarese, S.; Xiong, C.; Sahoo, D. Moirai-MoE: Empowering Time Series Foundation Models with Sparse Mixture of Experts. arXiv 2024, arXiv:2410.10469.

3. Das, A.; Kong, W.; Sen, R.; Zhou, Y. A decoder-only foundation model for time-series forecasting. arXiv 2024, arXiv:2310.10688.

4. Aksu, T.; Woo, G.; Liu, J.; Liu, X.; Liu, C.; Savarese, S.; Xiong, C.; Sahoo, D. GIFT-Eval: A Benchmark For General Time Series Forecasting Model Evaluation. arXiv 2024, arXiv:2410.10393.

5. Zhang, J.; Wen, X.; Zhang, Z.; Zheng, S.; Li, J.; Bian, J. ProbTS: Benchmarking Point and Distributional Forecasting across Diverse Prediction Horizons. arXiv 2024, arXiv:2310.07446.

6. Herzen, J.; Lässig, F.; Piazzetta, S.G.; Neuer, T.; Tafti, L.; Raille, G.; Pottelbergh, T.V.; Pasieka, M.; Skrodzki, A.; Huguenin, N.; et al. Darts: User-Friendly Modern Machine Learning for Time Series. J. Mach. Learn. Res. 2022, 23, 5442–5447.

7. Energy Consumption, Generation, Prices and Weather. 2019. Available online: https://www.kaggle.com/datasets/nicholasjhana/energy-consumption-generation-prices-and-weather (accessed on 17 November 2024).

8. Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting. In Proceedings of the Thirty-Fifth AAAI Conference on Artificial Intelligence, AAAI 2021, Virtual Conference, 2–9 February 2021; AAAI Press: Washington, DC, USA, 2021; Volume 35, pp. 11106–11115.

9. Lai, G.; Chang, W.C.; Yang, Y.; Liu, H. Modeling Long- and Short-Term Temporal Patterns with Deep Neural Networks. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, SIGIR ’18, Ann Arbor, MI, USA, 8–12 July 2018; pp. 95–104.

10. Max Planck Institute for Biogeochemistry. Weather Data from the Max Planck Institute for Biogeochemistry, Jena, Germany. 2025. Available online: https://www.bgc-jena.mpg.de/wetter/ (accessed on 16 May 2025).

11. Umwelt- und Gesundheitsschutz Zürich. Stündlich aktualisierte Meteodaten, Seit 1992. 2025. Available online: https://data.stadt-zuerich.ch/dataset/ugz_meteodaten_stundenmittelwerte (accessed on 16 May 2025).

12. Elektrizitätswerk der Stadt Zürich. Viertelstundenwerte des Stromverbrauchs in den Netzebenen 5 und 7 in der Stadt Zürich, seit 2015. 2025. Available online: https://data.stadt-zuerich.ch/dataset/ewz_stromabgabe_netzebenen_stadt_zuerich (accessed on 16 May 2025).

13. MeteoSwiss. Federal Office of Meteorology and Climatology. 2024. Available online: https://www.meteoswiss.admin.ch/ (accessed on 18 November 2024).

14. Hyndman, R.J.; Khandakar, Y. Automatic Time Series Forecasting: The forecast Package for R. J. Stat. Softw. 2008, 27, 1–22.

15. Cho, K.; van Merrienboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation. arXiv 2014, arXiv:1406.1078.

16. Das, A.; Kong, W.; Leach, A.; Mathur, S.; Sen, R.; Yu, R. Long-term Forecasting with TiDE: Time-series Dense Encoder. arXiv 2024, arXiv:2304.08424.

17. Lim, B.; Arık, S.Ö.; Loeff, N.; Pfister, T. Temporal Fusion Transformers for interpretable multi-horizon time series forecasting. Int. J. Forecast. 2021, 37, 1748–1764.

18. Chen, S.A.; Li, C.L.; Yoder, N.; Arik, S.O.; Pfister, T. TSMixer: An All-MLP Architecture for Time Series Forecasting. arXiv 2023, arXiv:2303.06053.

19. Woo, G.; Liu, C.; Kumar, A.; Xiong, C.; Savarese, S.; Sahoo, D. Unified Training of Universal Time Series Forecasting Transformers. arXiv 2024, arXiv:2402.02592.

20. Akiba, T.; Sano, S.; Yanase, T.; Ohta, T.; Koyama, M. Optuna: A Next-generation Hyperparameter Optimization Framework. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, KDD ’19, Anchorage, AK, USA, 4–8 August 2019; pp. 2623–2631.

21. ontime.re. onTime: Your Library to Work with Time Series. GitHub Repository. 2024. Available online: https://github.com/ontime-re/ontime (accessed on 18 November 2024).

| Dataset | Type | # Features | Resolution | # Target Features | Size |
|---|---|---|---|---|---|
| Energy [7] | Academic | 20 | 1 h | 1 (Total load) | 35,064 |
| ETTh1 [8] | Academic | 7 | 1 h | 1 (Oil temp.) | 17,420 |
| ETTm1 [8] | Academic | 7 | 15 min | 1 (Oil temp.) | 69,680 |
| ExchangeRate [9] | Academic | 8 | 1 day | 8 (All) | 7,588 |
| Weather [10] | Academic | 21 | 10 min | 21 (All) | 52,704 |
| ZurichElectricity [11,12] | Academic | 10 | 15 min | 2 (Consumption) | 93,409 |
| HEIA1h | Industrial | 8 | 1 h | 8 (All) | 11,664 |
| MeteoSwiss [13] | Industrial | 8 | 10 min | 24 (All) | 105,264 |

| Model | Type | Prediction Type | # Parameters |
|---|---|---|---|
| NaïveSeasonal | Statistical | Univariate | Not applicable |
| AutoARIMA [14] | Statistical | Univariate | <100 |
| ExponentialSmoothing | Statistical | Univariate | Not applicable |
| GRU [15] | Deep learning | Multivariate | 3–160 K |
| TiDE [16] | Deep learning | Multivariate | 285 K–8.5 M |
| TFT [17] | Deep learning | Multivariate | 3–36 K |
| TSMixer [18] | Deep learning | Multivariate | 19–931 K |
| Chronos Tiny † [1] | Foundation | Univariate | 8 M |
| Chronos Large † [1] | Foundation | Univariate | 710 M |
| Chronos Bolt Small [1] | Foundation | Univariate | 48 M |
| Chronos Bolt Base [1] | Foundation | Univariate | 205 M |
| Moirai small † [19] | Foundation | Multivariate | 14 M |
| Moirai large † [19] | Foundation | Multivariate | 311 M |
| Moirai MoE Small [2] | Foundation | Multivariate | 117 M |
| Moirai MoE Base [2] | Foundation | Multivariate | 935 M |
| TimesFM [3] | Foundation | Univariate | 500 M |

| Dataset | Metric | GRU | TFT | TiDE | TSMixer | Naive Seasonal | AutoARIMA | ES † |
|---|---|---|---|---|---|---|---|---|
| Energy | sMAPE | 13.49 | 15.25 | 8.102 | 9.724 | 20.56 | 13.34 | 22.67 |
| Energy | NMAE | 0.1332 | 0.1507 | 0.0825 | 0.0952 | 0.1946 | 0.1318 | 0.2215 |
| ETTh1 | sMAPE | 35.00 | 47.15 | 40.11 | 38.13 | 34.49 | 33.60 | 35.80 |
| ETTh1 | NMAE | 0.4003 | 0.6310 | 0.4097 | 0.5261 | 0.3627 | 0.3526 | 0.3781 |
| ETTm1 | sMAPE | 32.11 | 41.53 | 55.02 | 26.05 | 22.27 | 23.59 | 24.55 |
| ETTm1 | NMAE | 0.3190 | 0.6568 | 1.040 | 0.2950 | 0.2325 | 0.2369 | 0.2462 |
| ExchangeRate | sMAPE | 15.55 | 18.06 | 11.45 | 15.34 | 2.336 | 2.514 | 2.537 |
| ExchangeRate | NMAE | 0.1428 | 0.1631 | 0.1049 | 0.1416 | 0.0234 | 0.0249 | 0.0252 |
| Weather | sMAPE | 79.09 | 81.48 | 62.53 | 65.67 | 54.58 | 61.77 | 66.82 |
| Weather | NMAE | 250.0 | 223.5 | 50.21 | 39.41 | 1.073 | 10.85 | 38.16 |
| ZurichElectricity | sMAPE | 14.20 | 21.87 | 5.395 | 8.260 | 18.53 | 18.49 | 23.39 |
| ZurichElectricity | NMAE | 0.1401 | 0.2160 | 0.0548 | 0.0835 | 0.1871 | 0.1858 | 0.2416 |
| HEIA | sMAPE | 42.26 | 52.47 | 29.56 | 39.48 | 39.99 | 41.65 | 31.28 |
| HEIA | NMAE | 0.5380 | 0.6706 | 0.3240 | 0.5422 | 0.4182 | 0.4445 | 0.3606 |
| MeteoSwiss | sMAPE | 75.64 | 89.08 | 64.64 | 80.11 | 68.98 | 71.96 | 72.76 |
| MeteoSwiss | NMAE | 1.641 | 2.170 | 1.085 | 1.810 | 2.152 | 1.768 | 2.664 |

Column groups: Deep Learning (GRU, TFT, TiDE, TSMixer); Statistical (Naive Seasonal, AutoARIMA, ES †).

| Dataset | Metric | Moirai Small | Moirai Large | Moirai-MoE Small | Moirai-MoE Base | Chronos Tiny | Chronos Large | Chronos Bolt Tiny | Chronos Bolt Base | TFM † | BB ‡ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Energy | sMAPE | 7.360 | 7.134 | 7.088 | 7.062 | 7.508 | 4.854 | 6.183 | 5.095 | 7.035 | 8.102 |
| Energy | NMAE | 0.0744 | 0.0718 | 0.0719 | 0.0718 | 0.0751 | 0.0491 | 0.0622 | 0.0511 | 0.0708 | 0.0825 |
| ETTh1 | sMAPE | 32.64 | 34.61 | 33.25 | 34.04 | 31.15 | 30.68 | 31.95 | 30.56 | 31.40 | 33.60 |
| ETTh1 | NMAE | 0.3382 | 0.3344 | 0.3333 | 0.3370 | 0.3242 | 0.3217 | 0.3408 | 0.3334 | 0.3231 | 0.3526 |
| ETTm1 | sMAPE | 23.66 | 24.64 | 24.78 | 23.64 | 22.95 | 21.61 | 21.58 | 22.40 | 22.45 | 22.27 |
| ETTm1 | NMAE | 0.2461 | 0.2658 | 0.2569 | 0.2488 | 0.2347 | 0.2303 | 0.2346 | 0.2329 | 0.2521 | 0.2325 |
| ExchangeRate | sMAPE | 2.505 | 2.687 | 2.470 | 2.535 | 2.714 | 2.583 | 2.412 | 2.552 | 2.565 | 2.336 |
| ExchangeRate | NMAE | 0.0251 | 0.0273 | 0.0248 | 0.0255 | 0.0270 | 0.0260 | 0.0242 | 0.0255 | 0.0256 | 0.0234 |
| Weather | sMAPE | 64.20 | 64.43 | 62.01 | 59.47 | 63.47 | 61.83 | 62.40 | 61.81 | 45.05 | 54.58 |
| Weather | NMAE | 2.663 | 9.052 | 11.05 | 5.192 | 13.03 | 0.4987 | 6.455 | 4.629 | 1.243 | 1.073 |
| ZurichElectricity | sMAPE | 17.92 | 18.06 | 17.30 | 15.63 | 8.368 | 6.119 | 5.635 | 4.177 | 7.292 | 5.395 |
| ZurichElectricity | NMAE | 0.1769 | 0.1804 | 0.1727 | 0.1540 | 0.0872 | 0.0639 | 0.0592 | 0.0440 | 0.0768 | 0.0548 |
| HEIA | sMAPE | 22.74 | 20.89 | 23.31 | 20.20 | 23.33 | 20.73 | 20.37 | 19.71 | 20.67 | 29.56 |
| HEIA | NMAE | 0.2570 | 0.2423 | 0.2670 | 0.2321 | 0.2687 | 0.2411 | 0.2343 | 0.2294 | 0.2343 | 0.3240 |
| MeteoSwiss | sMAPE | 67.72 | 66.98 | 66.14 | 66.49 | 67.99 | 62.95 | 67.58 | 65.18 | 62.74 | 64.64 |
| MeteoSwiss | NMAE | 0.8146 | 1.488 | 2.442 | 1.173 | 1.657 | 1.662 | 0.9932 | 1.377 | 0.9777 | 1.085 |

| Dataset | Horizon | Moirai Small | Moirai Large | Moirai-MoE Small | Moirai-MoE Base | Chronos Tiny | Chronos Large | Chronos Bolt Tiny | Chronos Bolt Base | TFM † |
|---|---|---|---|---|---|---|---|---|---|---|
| Energy | 24 | 0.0643 | 0.0592 | 0.0591 | 0.0528 | 0.0563 | 0.0338 | 0.0503 | 0.0385 | 0.0589 |
| Energy | 48 | 0.0719 | 0.0678 | 0.0678 | 0.0628 | 0.0678 | 0.0414 | 0.0587 | 0.0442 | 0.0676 |
| Energy | 96 | 0.0744 | 0.0718 | 0.0719 | 0.0718 | 0.0751 | 0.0491 | 0.0622 | 0.0511 | 0.0708 |
| Energy | 192 | 0.0773 | 0.0755 | 0.0746 | 0.0802 | 0.0754 | 0.0529 | 0.0646 | 0.0556 | 0.0734 |
| ETTh1 | 24 | 0.2048 | 0.2104 | 0.1970 | 0.2021 | 0.2011 | 0.2074 | 0.1999 | 0.1944 | 0.2077 |
| ETTh1 | 48 | 0.2619 | 0.2713 | 0.2503 | 0.2547 | 0.2447 | 0.2490 | 0.2495 | 0.2517 | 0.2536 |
| ETTh1 | 96 | 0.3382 | 0.3344 | 0.3333 | 0.3370 | 0.3242 | 0.3217 | 0.3408 | 0.3334 | 0.3231 |
| ETTh1 | 192 | 0.2883 | 0.2969 | 0.3063 | 0.2905 | 0.2849 | 0.2838 | 0.3070 | 0.2870 | 0.2784 |
| ETTm1 | 24 | 0.1616 | 0.1967 | 0.1752 | 0.1755 | 0.1796 | 0.1446 | 0.1479 | 0.1443 | 0.1643 |
| ETTm1 | 48 | 0.2515 | 0.2742 | 0.2574 | 0.2571 | 0.2382 | 0.2166 | 0.2397 | 0.2307 | 0.2594 |
| ETTm1 | 96 | 0.2461 | 0.2658 | 0.2569 | 0.2488 | 0.2347 | 0.2303 | 0.2346 | 0.2329 | 0.2521 |
| ETTm1 | 192 | 0.2917 | 0.3094 | 0.3055 | 0.3007 | 0.2950 | 0.2870 | 0.3008 | 0.3017 | 0.2977 |
| ExchangeRate | 24 | 0.0138 | 0.0133 | 0.0128 | 0.0130 | 0.0140 | 0.0136 | 0.0131 | 0.0136 | 0.0132 |
| ExchangeRate | 48 | 0.0177 | 0.0179 | 0.0171 | 0.0174 | 0.0186 | 0.0184 | 0.0170 | 0.0182 | 0.0180 |
| ExchangeRate | 96 | 0.0251 | 0.0273 | 0.0248 | 0.0255 | 0.0270 | 0.0260 | 0.0242 | 0.0255 | 0.0256 |
| ExchangeRate | 192 | 0.0350 | 0.0472 | 0.0394 | 0.0397 | 0.0406 | 0.0375 | 0.0340 | 0.0343 | 0.0346 |
| Weather | 24 | 0.8605 | 0.4102 | 0.5990 | 0.8083 | 2.260 | 0.3976 | 2.760 | 0.6189 | 0.6271 |
| Weather | 48 | 2.915 | 1.317 | 6.538 | 7.237 | 1.779 | 0.9095 | 6.655 | 1.919 | 1.834 |
| Weather | 96 | 2.663 | 9.052 | 11.05 | 5.192 | 13.03 | 0.4987 | 6.455 | 4.629 | 1.243 |
| Weather | 192 | 0.5276 | 0.6359 | 0.8007 | 0.7821 | 0.7816 | 0.5519 | 0.6735 | 0.5559 | 0.6298 |
| ZurichElectricity | 24 | 0.0883 | 0.0758 | 0.0721 | 0.0596 | 0.0339 | 0.0244 | 0.0292 | 0.0233 | 0.0316 |
| ZurichElectricity | 48 | 0.1576 | 0.1413 | 0.1403 | 0.1076 | 0.0501 | 0.0297 | 0.0348 | 0.0289 | 0.0441 |
| ZurichElectricity | 96 | 0.1769 | 0.1804 | 0.1727 | 0.1540 | 0.0872 | 0.0639 | 0.0592 | 0.0440 | 0.0768 |
| ZurichElectricity | 192 | 0.1757 | 0.1838 | 0.1752 | 0.1621 | 0.1056 | 0.0825 | 0.0667 | 0.0501 | 0.0926 |
| HEIA | 24 | 0.2220 | 0.1959 | 0.2131 | 0.1924 | 0.2123 | 0.1837 | 0.1998 | 0.1937 | 0.2013 |
| HEIA | 48 | 0.2459 | 0.2177 | 0.2479 | 0.2111 | 0.2322 | 0.2086 | 0.2173 | 0.2098 | 0.2206 |
| HEIA | 96 | 0.2570 | 0.2423 | 0.2670 | 0.2321 | 0.2687 | 0.2411 | 0.2343 | 0.2294 | 0.2343 |
| HEIA | 192 | 0.2687 | 0.2659 | 0.2807 | 0.2467 | 0.2762 | 0.2540 | 0.2437 | 0.2408 | 0.2507 |
| MeteoSwiss | 24 | 0.6949 | 0.8155 | 0.8457 | 0.6222 | 0.7558 | 0.7167 | 0.6576 | 0.8176 | 0.6057 |
| MeteoSwiss | 48 | 1.153 | 1.441 | 1.771 | 1.016 | 1.457 | 1.297 | 1.045 | 1.346 | 0.9871 |
| MeteoSwiss | 96 | 0.8146 | 1.488 | 2.442 | 1.173 | 1.657 | 1.662 | 0.9932 | 1.377 | 0.9777 |
| MeteoSwiss | 192 | 1.226 | 1.530 | 1.850 | 1.898 | 1.584 | 1.374 | 1.295 | 1.231 | 1.336 |

| Dataset | Proportion | Moirai Small | Moirai Large | Chronos Tiny | Chronos Large |
|---|---|---|---|---|---|
| Energy | 0% | 0.0744 | 0.0718 | 0.0751 | 0.0491 |
| Energy | 33% | 0.0727 | 0.0704 | 0.0649 | 0.0549 |
| Energy | 67% | 0.0667 | 0.0658 | 0.0594 | 0.0495 |
| Energy | 100% | 0.0687 | 0.0661 | 0.0599 | 0.0445 |
| ETTh1 | 0% | 0.3382 | 0.3344 | 0.3242 | 0.3217 |
| ETTh1 | 33% | 0.3146 | 0.3307 | 0.3315 | 0.3161 |
| ETTh1 | 67% | 0.3173 | 0.3167 | 0.3157 | 0.3272 |
| ETTh1 | 100% | 0.3127 | 0.3346 | 0.3099 | 0.3460 |
| ETTm1 | 0% | 0.2461 | 0.2658 | 0.2347 | 0.2303 |
| ETTm1 | 33% | 0.2404 | 0.3616 | 0.2459 | 0.2540 |
| ETTm1 | 67% | 0.2686 | 0.3693 | 0.2484 | 0.2754 |
| ETTm1 | 100% | 0.2437 | 0.2558 | 0.2198 | 0.2308 |
| ExchangeRate | 0% | 0.0251 | 0.0273 | 0.0270 | 0.0260 |
| ExchangeRate | 33% | 0.0282 | 0.0694 | 0.0319 | 0.0319 |
| ExchangeRate | 67% | 0.0250 | 0.0360 | 0.0285 | 0.0299 |
| ExchangeRate | 100% | 0.0285 | 0.0779 | 0.0251 | 0.0302 |
| Weather | 0% | 2.663 | 9.052 | 13.03 | 0.4987 |
| Weather | 33% | 3.793 | 6.041 | 2.053 | 0.9532 |
| Weather | 67% | 6.670 | 2.425 | 1.670 | 3.274 |
| Weather | 100% | 2.459 | 3.703 | 8.644 | 5.433 |
| ZurichElectricity | 0% | 0.1769 | 0.1804 | 0.0872 | 0.0639 |
| ZurichElectricity | 33% | 0.0482 | 0.0475 | 0.0326 | 0.0295 |
| ZurichElectricity | 67% | 0.0419 | 0.0526 | 0.0323 | 0.0277 |
| ZurichElectricity | 100% | 0.0499 | 0.0535 | 0.0333 | 0.0265 |
| HEIA | 0% | 0.2570 | 0.2423 | 0.2687 | 0.2411 |
| HEIA | 33% | 0.2631 | 0.2736 | 0.3271 | 0.2574 |
| HEIA | 67% | 0.2991 | 0.2920 | 0.2981 | 0.2488 |
| HEIA | 100% | 0.2653 | 0.2728 | 0.2494 | 0.2420 |
| MeteoSwiss | 0% | 0.8146 | 1.488 | 1.657 | 1.662 |
| MeteoSwiss | 33% | 0.5650 | 0.6690 | 0.6111 | 0.9706 |
| MeteoSwiss | 67% | 0.4954 | 0.4532 | 1.446 | 0.7739 |
| MeteoSwiss | 100% | 0.5396 | 0.5316 | 0.7382 | 0.6285 |
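The effect of fine-tuning in the table above is easier to compare across datasets as a relative change against the 0% row. The sketch below works through that arithmetic for two entries copied from the table. It assumes that the 0% row is the zero-shot result and that lower values are better. The helper name `relative_change` is hypothetical and not part of the authors' framework.

```python
def relative_change(zero_shot, fine_tuned):
    """Relative change in percent; negative means lower error after fine-tuning."""
    return 100.0 * (fine_tuned - zero_shot) / zero_shot


# Values copied from the table above: (0% row, 33% row)
examples = {
    ("ZurichElectricity", "Moirai Small"): (0.1769, 0.0482),
    ("ExchangeRate", "Moirai Large"): (0.0273, 0.0694),
}

for (dataset, model), (zero_shot, fine_tuned) in examples.items():
    change = relative_change(zero_shot, fine_tuned)
    print(f"{dataset} / {model}: {change:+.1f}%")
```

For these two entries the change is about -72.8% and +154.2% respectively, so the same fine-tuning proportion can reduce the error substantially on one dataset and increase it on another.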

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Montet, F.; Pasquier, B.; Wolf, B. Benchmarking Foundation Models for Time-Series Forecasting: Zero-Shot, Few-Shot, and Full-Shot Evaluations. Comput. Sci. Math. Forum 2025, 11, 32. https://doi.org/10.3390/cmsf2025011032
