Simplicity vs. Complexity in Time Series Forecasting: A Comparative Study of iTransformer Variants

Abstract

1. Introduction

2. Related Work

3. Methodology

- iTransformer: Prioritizes simplicity and speed, and serves as a strong baseline.

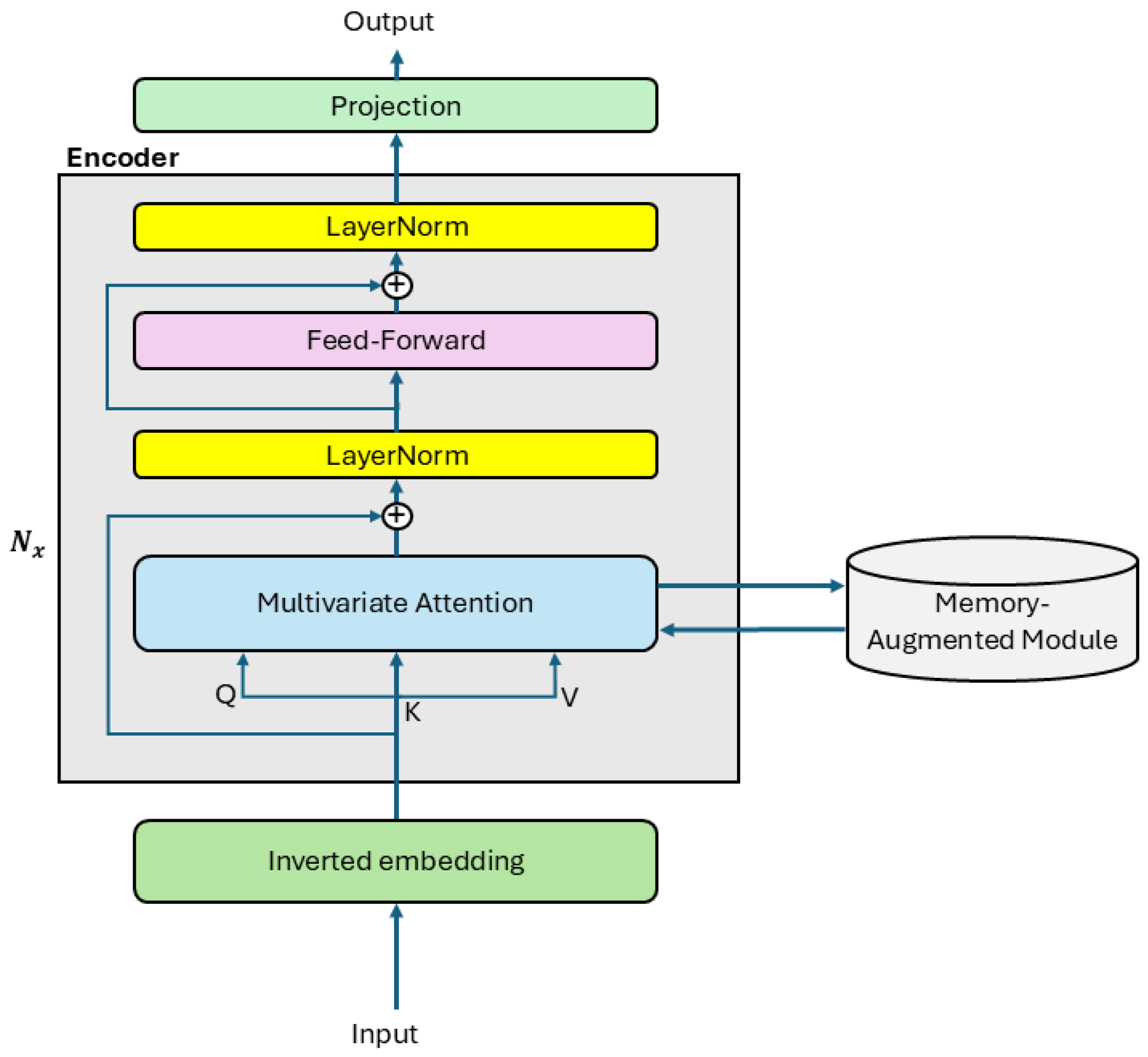

- MiTransformer: Adds memory augmentation to capture long-term temporal dependencies, performing better on datasets with recurrent patterns.

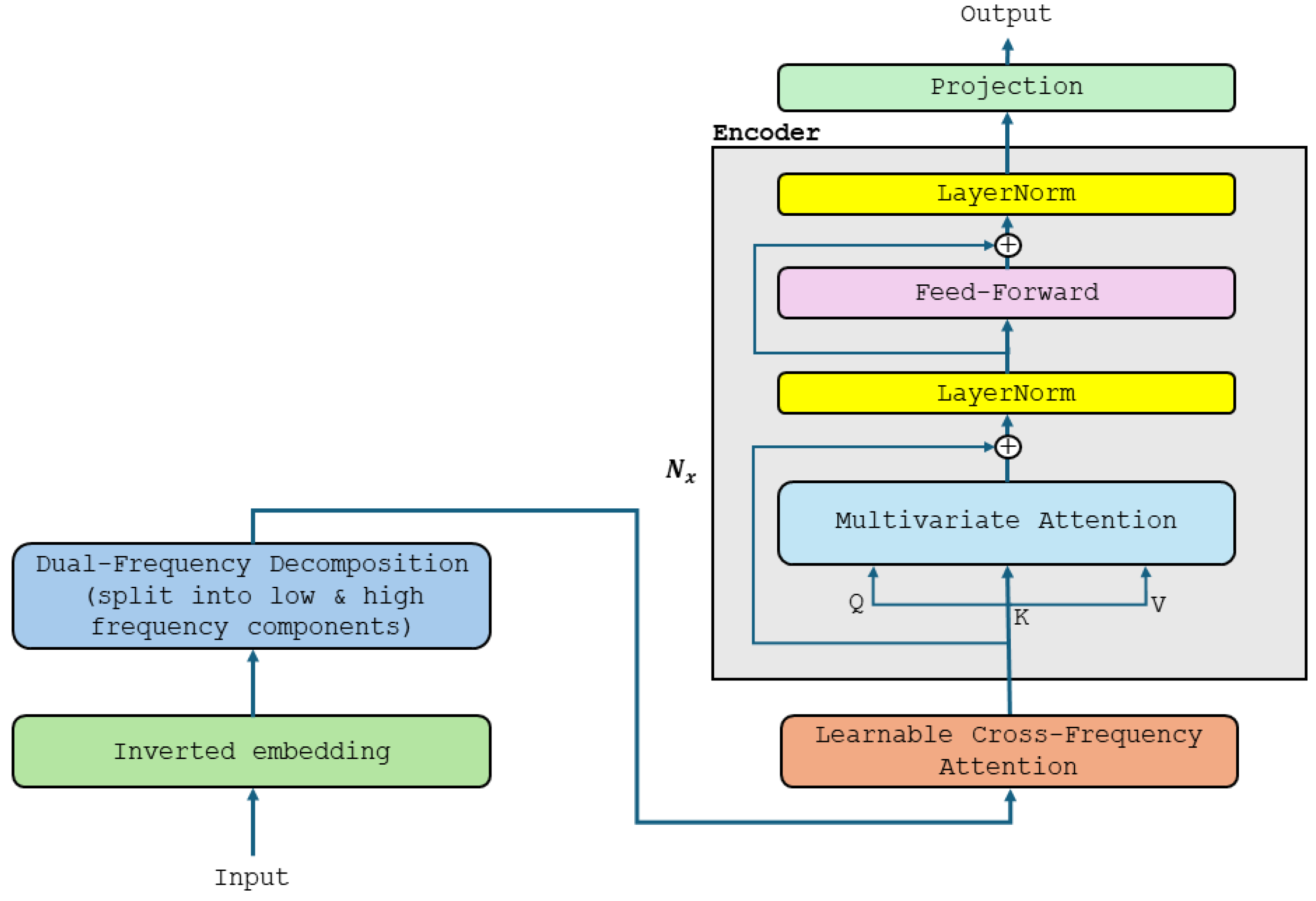

- DFiTransformer: Focuses on frequency-aware modelling and complex cross-frequency interactions; it is useful for multi-scale signals but can be computationally expensive and prone to overfitting.
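To make the "inverted" design of iTransformer concrete, the sketch below shows how one input window might be tokenized: each variate's full 96-step history becomes a single token, and self-attention then mixes variates rather than time steps. It is a minimal NumPy illustration with random, untrained weights. The variate count N = 7 and the single attention head without separate Q/K/V projections are simplifying assumptions, not the configuration used in this study.

```python
import numpy as np

rng = np.random.default_rng(0)
T, N, d_model = 96, 7, 512  # lookback and width as in Appendix A; N = 7 is a hypothetical variate count

x = rng.standard_normal((T, N))  # one input window: T time steps x N variates

# Inverted embedding: transpose so each variate's whole series is one token,
# then map the T-step series to a d_model-dimensional vector.
W_embed = rng.standard_normal((T, d_model)) / np.sqrt(T)  # random stand-in for learned weights
tokens = x.T @ W_embed  # (N, d_model): one token per variate

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

# Single-head self-attention across variate tokens (identity Q/K/V projections for brevity)
scores = tokens @ tokens.T / np.sqrt(d_model)  # (N, N): variate-to-variate similarity
weights = softmax(scores, axis=-1)
mixed = weights @ tokens  # (N, d_model): each variate token attends to all variates

print(tokens.shape, weights.shape, mixed.shape)  # (7, 512) (7, 7) (7, 512)
```

A conventional temporal Transformer would instead build T = 96 tokens of width N, so its attention matrix grows with the lookback length rather than with the number of variates.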

4. Experimental Setup

4.1. Datasets

- Weather: Meteorological data from the US, including variables like temperature, humidity, and wind speed.

- Electricity Consumption Load (ECL): Hourly electricity consumption data of 321 clients.

- Exchange: Daily exchange rates of eight different countries.

- ETTm1 and ETTm2: Electricity Transformer Temperature datasets, both sampled at 15 min intervals and recorded from two different transformers.
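The forecast horizons reported in the results table (96, 192, 336 and 720 steps) are paired with the 96-step input length listed in Appendix A. A common way to turn each dataset into samples, assumed here because the exact protocol is not restated in this section, is a stride-1 sliding window of lookback plus horizon steps. The helper name `make_windows` is hypothetical.

```python
from typing import List, Sequence, Tuple

def make_windows(series: Sequence[float], lookback: int = 96,
                 horizon: int = 96) -> List[Tuple[Sequence[float], Sequence[float]]]:
    """Slide a (lookback + horizon) window over the series with stride 1.

    Returns (input, target) pairs: the input holds `lookback` steps and the
    target holds the next `horizon` steps.
    """
    n_samples = len(series) - lookback - horizon + 1
    return [
        (series[i:i + lookback], series[i + lookback:i + lookback + horizon])
        for i in range(max(n_samples, 0))  # no windows if the series is too short
    ]

series = list(range(1000))  # toy series standing in for one variate
for horizon in (96, 192, 336, 720):
    windows = make_windows(series, lookback=96, horizon=horizon)
    print(horizon, len(windows))
```

Longer horizons leave fewer complete windows from a series of the same length.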

4.2. Models Compared

- iTransformer: The baseline inverted transformer architecture.

- MiTransformer: Enhances iTransformer with a memory-augmented module.

- DFiTransformer: Adds DFD and LCFA to iTransformer.

5. Results and Analysis

6. Discussion: Simplicity vs. Complexity in Forecasting Models

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

- Input sequence length: 96.

- Full multi-head attention (MHA) parameter count: 4 × d_model² (query, key, value and output projections).

- Feedforward layer: 2 × d_model × d_ff.

- Consistent dimensions:

  - d_model = 512;

  - d_ff = 2048;

  - #layers = 2;

  - #heads = 8 (used in the attention computation, but does not affect the parameter count).

iTransformer:

- MHA (Q + K + V + Output): 4 × 512² = 1,048,576.

- Feedforward: 2 × 512 × 2048 = 2,097,152.

- Total per layer = 1,048,576 + 2,097,152 = 3,145,728.

- Input embedding: 512 × 1 = 512.

- Transformer encoder (2 layers × 3,145,728): 6,291,456.

- Output layer: 512 × 1 = 512.

- Total → 6,291,456 + 512 + 512 = 6,292,480.
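The arithmetic above can be reproduced with a few helper functions. This sketch follows the appendix's simplified accounting exactly: weight matrices only (no biases or layer-normalisation parameters), with the input embedding and output layer each counted as 512 × 1. The function names are illustrative and not part of any released code.

```python
def mha_params(d_model: int) -> int:
    # Q, K, V and output projections, each d_model x d_model (weights only)
    return 4 * d_model ** 2

def ffn_params(d_model: int, d_ff: int) -> int:
    # Two linear maps, d_model -> d_ff and d_ff -> d_model (weights only)
    return 2 * d_model * d_ff

def itransformer_params(d_model: int = 512, d_ff: int = 2048, n_layers: int = 2) -> int:
    per_layer = mha_params(d_model) + ffn_params(d_model, d_ff)
    encoder = n_layers * per_layer
    embedding = d_model * 1  # input embedding as counted in Appendix A
    output = d_model * 1     # output layer as counted in Appendix A
    return encoder + embedding + output

print(f"{itransformer_params():,}")  # 6,292,480
```

Under these settings the feedforward blocks account for two thirds of each encoder layer (2,097,152 of 3,145,728 weights).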

MiTransformer:

- iTransformer base: 6,292,480.

- Added components:

  - Memory slots: M = 64, each slot of dimension 512 → 64 × 512 = 32,768.

  - Memory attention (same projection scheme as MHA) → 4 × 512² = 1,048,576.

- Total → 6,292,480 + 32,768 + 1,048,576 = 7,373,824.
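A memory read of this kind can be sketched as cross-attention from the variate tokens to a learnable bank of M = 64 slots, using the same four d_model × d_model projections as MHA. This is a hypothetical single-head NumPy version with random weights, chosen only so that its parameter count matches the figures above; MiTransformer's actual read and write rules may differ, and n_vars = 7 is an assumed variate count.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n_slots, n_vars = 512, 64, 7  # n_vars is a hypothetical variate count

# Learnable memory bank (random stand-in for trained values): M slots of width d_model
memory = rng.standard_normal((n_slots, d_model)) * 0.02
# Memory-attention projections, same scheme as MHA: W_q, W_k, W_v, W_o
W_q, W_k, W_v, W_o = (rng.standard_normal((d_model, d_model)) * 0.02 for _ in range(4))

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def memory_read(tokens):
    """Variate tokens (queries) attend over memory slots (keys and values)."""
    q = tokens @ W_q                            # (n_vars, d_model)
    k = memory @ W_k                            # (n_slots, d_model)
    v = memory @ W_v                            # (n_slots, d_model)
    attn = softmax(q @ k.T / np.sqrt(d_model))  # (n_vars, n_slots)
    return tokens + (attn @ v) @ W_o            # residual connection keeps the token shape

tokens = rng.standard_normal((n_vars, d_model))
out = memory_read(tokens)

extra = memory.size + sum(W.size for W in (W_q, W_k, W_v, W_o))
print(out.shape, f"{extra:,}", f"{6_292_480 + extra:,}")  # (7, 512) 1,081,344 7,373,824
```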

DFiTransformer:

- iTransformer base: 6,292,480.

- Added components:

  - Dual-frequency token projections (2 branches): 2 × 512 × 512 = 524,288.

  - Cross-frequency attention: 4 × 512² = 1,048,576.

  - Fusion layer (linear): 512 × 512 = 262,144.

- Total → 6,292,480 + 524,288 + 1,048,576 + 262,144 = 8,127,488.
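This section does not spell out how DFD separates frequency bands, so the sketch below substitutes a plain FFT low-pass split as an assumption: frequency bins below a cutoff form the low-frequency branch, and the residual forms the high-frequency branch. It then checks the total above, where the added modules amount to 7 × 512² weights (2 branch projections, 4 attention projections, 1 fusion layer). The function name `dual_frequency_split` and the cutoff of 8 bins are hypothetical.

```python
import numpy as np

def dual_frequency_split(x, cutoff):
    """Split a 1-D series into low- and high-frequency parts (hypothetical DFD stand-in)."""
    spectrum = np.fft.rfft(x)
    low_spec = spectrum.copy()
    low_spec[cutoff:] = 0                    # keep only bins 0 .. cutoff-1
    low = np.fft.irfft(low_spec, n=len(x))   # back to the time domain
    high = x - low                           # residual carries the faster components
    return low, high

T = 96
t = np.arange(T)
slow = np.sin(2 * np.pi * 2 * t / T)         # 2 cycles per window (bin 2)
fast = 0.5 * np.sin(2 * np.pi * 30 * t / T)  # 30 cycles per window (bin 30)
low, high = dual_frequency_split(slow + fast, cutoff=8)
print(np.allclose(low, slow), np.allclose(high, fast))  # True True

d = 512
extra = 2 * d * d + 4 * d * d + d * d  # branch projections + cross-frequency attention + fusion
print(f"{extra:,}", f"{6_292_480 + extra:,}")  # 1,835,008 8,127,488
```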

| Model | Parameter Count | Extra Modules | Complexity Level |
|---|---|---|---|
| iTransformer | ~6.29 M | – | (1) |
| MiTransformer | ~7.37 M | Memory slots + attention | (2) |
| DFiTransformer | ~8.13 M | DFD + LCFA | (3) |

- iTransformer is the most parameter-efficient and easiest to interpret;

- MiTransformer increases the parameter count moderately and can benefit periodic or long-range data;

- DFiTransformer adds still more complexity; it can be useful on frequency-rich datasets but shows diminishing returns on simpler tasks.
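The relative parameter overhead of each variant follows directly from the Appendix A totals; this short calculation uses only those figures.

```python
base = 6_292_480  # iTransformer total from Appendix A
totals = {"MiTransformer": 7_373_824, "DFiTransformer": 8_127_488}

for name, total in totals.items():
    extra = total - base
    print(f"{name}: +{extra:,} parameters ({100 * extra / base:.1f}% over iTransformer)")
# MiTransformer: +1,081,344 parameters (17.2% over iTransformer)
# DFiTransformer: +1,835,008 parameters (29.2% over iTransformer)
```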


| Dataset | Horizon | iTransformer MSE | iTransformer MAE | MiTransformer MSE | MiTransformer MAE | DFiTransformer MSE | DFiTransformer MAE |
|---|---|---|---|---|---|---|---|
| Weather | 96 | 0.174 | 0.214 | 0.179 | 0.218 | 0.187 | 0.232 |
| | 192 | 0.221 | 0.254 | 0.224 | 0.257 | 0.242 | 0.275 |
| | 336 | 0.278 | 0.296 | 0.285 | 0.301 | 0.299 | 0.313 |
| | 720 | 0.358 | 0.347 | 0.359 | 0.350 | 0.373 | 0.364 |
| | Macro Avg | 0.258 | 0.278 | 0.262 | 0.282 | 0.275 | 0.296 |
| ECL | 96 | 0.148 | 0.240 | 0.166 | 0.256 | 0.191 | 0.297 |
| | 192 | 0.162 | 0.253 | 0.177 | 0.267 | 0.207 | 0.310 |
| | 336 | 0.178 | 0.269 | 0.195 | 0.285 | 0.222 | 0.323 |
| | 720 | 0.225 | 0.317 | 0.234 | 0.318 | 0.249 | 0.343 |
| | Macro Avg | 0.178 | 0.270 | 0.193 | 0.282 | 0.217 | 0.318 |
| Exchange | 96 | 0.086 | 0.206 | 0.087 | 0.207 | 0.119 | 0.245 |
| | 192 | 0.177 | 0.299 | 0.176 | 0.299 | 0.252 | 0.358 |
| | 336 | 0.331 | 0.417 | 0.346 | 0.426 | 0.430 | 0.475 |
| | 720 | 0.847 | 0.691 | 0.854 | 0.700 | 0.949 | 0.738 |
| | Macro Avg | 0.360 | 0.403 | 0.366 | 0.408 | 0.438 | 0.454 |
| ETTm1 | 96 | 0.334 | 0.368 | 0.354 | 0.382 | 0.368 | 0.392 |
| | 192 | 0.377 | 0.391 | 0.383 | 0.394 | 0.411 | 0.415 |
| | 336 | 0.426 | 0.420 | 0.444 | 0.430 | 0.444 | 0.437 |
| | 720 | 0.491 | 0.459 | 0.517 | 0.470 | 0.560 | 0.493 |
| | Macro Avg | 0.407 | 0.410 | 0.425 | 0.419 | 0.446 | 0.434 |
| ETTm2 | 96 | 0.180 | 0.264 | 0.182 | 0.263 | 0.196 | 0.275 |
| | 192 | 0.250 | 0.309 | 0.250 | 0.306 | 0.256 | 0.313 |
| | 336 | 0.311 | 0.348 | 0.318 | 0.351 | 0.332 | 0.358 |
| | 720 | 0.412 | 0.407 | 0.414 | 0.405 | 0.414 | 0.404 |
| | Macro Avg | 0.288 | 0.332 | 0.291 | 0.331 | 0.300 | 0.338 |
| 1st | | 24 | 24 | 2 | 2 | 0 | 1 |
| 2nd | | 1 | 1 | 23 | 23 | 3 | 0 |
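The "1st" row can be checked mechanically. The sketch below recounts first places for the MSE columns over every horizon and macro-average row, crediting tied values to every tied model; under that tie rule it reproduces the MSE counts of 24, 2 and 0. The data layout and the function name `count_first_places` are illustrative.

```python
# MSE per dataset, ordered [96, 192, 336, 720, Macro Avg], copied from the results table
mse = {
    "iTransformer": {
        "Weather": [0.174, 0.221, 0.278, 0.358, 0.258],
        "ECL": [0.148, 0.162, 0.178, 0.225, 0.178],
        "Exchange": [0.086, 0.177, 0.331, 0.847, 0.360],
        "ETTm1": [0.334, 0.377, 0.426, 0.491, 0.407],
        "ETTm2": [0.180, 0.250, 0.311, 0.412, 0.288],
    },
    "MiTransformer": {
        "Weather": [0.179, 0.224, 0.285, 0.359, 0.262],
        "ECL": [0.166, 0.177, 0.195, 0.234, 0.193],
        "Exchange": [0.087, 0.176, 0.346, 0.854, 0.366],
        "ETTm1": [0.354, 0.383, 0.444, 0.517, 0.425],
        "ETTm2": [0.182, 0.250, 0.318, 0.414, 0.291],
    },
    "DFiTransformer": {
        "Weather": [0.187, 0.242, 0.299, 0.373, 0.275],
        "ECL": [0.191, 0.207, 0.222, 0.249, 0.217],
        "Exchange": [0.119, 0.252, 0.430, 0.949, 0.438],
        "ETTm1": [0.368, 0.411, 0.444, 0.560, 0.446],
        "ETTm2": [0.196, 0.256, 0.332, 0.414, 0.300],
    },
}

def count_first_places(scores, tol=1e-9):
    """Count rows where each model has the lowest error; ties credit every tied model."""
    models = list(scores)
    wins = {m: 0 for m in models}
    for dataset in scores[models[0]]:
        for row in range(len(scores[models[0]][dataset])):
            best = min(scores[m][dataset][row] for m in models)
            for m in models:
                if abs(scores[m][dataset][row] - best) <= tol:
                    wins[m] += 1
    return wins

print(count_first_places(mse))  # {'iTransformer': 24, 'MiTransformer': 2, 'DFiTransformer': 0}
```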

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yakoi, P.S.; Meng, X.; Suleman, D.; Idowu, A.; Odeh, V.A.; Yu, C. Simplicity vs. Complexity in Time Series Forecasting: A Comparative Study of iTransformer Variants. Comput. Sci. Math. Forum 2025, 11, 27. https://doi.org/10.3390/cmsf2025011027

Yakoi PS, Meng X, Suleman D, Idowu A, Odeh VA, Yu C. Simplicity vs. Complexity in Time Series Forecasting: A Comparative Study of iTransformer Variants. Computer Sciences & Mathematics Forum. 2025; 11(1):27. https://doi.org/10.3390/cmsf2025011027

Chicago/Turabian Style: Yakoi, Polycarp Shizawaliyi, Xiangfu Meng, Danladi Suleman, Adeleye Idowu, Victor Adeyi Odeh, and Chunlin Yu. 2025. "Simplicity vs. Complexity in Time Series Forecasting: A Comparative Study of iTransformer Variants" Computer Sciences & Mathematics Forum 11, no. 1: 27. https://doi.org/10.3390/cmsf2025011027

APA Style: Yakoi, P. S., Meng, X., Suleman, D., Idowu, A., Odeh, V. A., & Yu, C. (2025). Simplicity vs. Complexity in Time Series Forecasting: A Comparative Study of iTransformer Variants. Computer Sciences & Mathematics Forum, 11(1), 27. https://doi.org/10.3390/cmsf2025011027