Abstract

Background/Objectives: Autoimmune rheumatic diseases (AIRDs) are complex, heterogeneous, and relapsing–remitting conditions in which early diagnosis, flare prediction, and individualized therapy remain major unmet needs. This review aims to synthesize recent progress in AI-driven, biomarker-based precision medicine, integrating advances in imaging, multi-omics, and digital health to enhance diagnosis, risk stratification, and therapeutic decision-making in AIRD. Methods: A comprehensive synthesis of 2020–2025 literature was conducted across PubMed, Scopus, and preprint databases, focusing on studies applying artificial intelligence, machine learning, and multimodal biomarkers in rheumatoid arthritis, systemic lupus erythematosus, systemic sclerosis, spondyloarthritis, and related autoimmune diseases. The review emphasizes methodological rigor (TRIPOD+AI, PROBAST+AI, CONSORT-AI/SPIRIT-AI), implementation infrastructures (ACR RISE registry, federated learning), and equity frameworks to ensure generalizable, safe, and ethically governed translation into clinical practice. Results: Emerging evidence demonstrates that AI-integrated imaging enables automated quantification of synovitis, erosions, and vascular inflammation; multi-omics stratification reveals interferon- and B-cell-related molecular programs predictive of therapeutic response; and digital biomarkers from wearables and smartphones extend monitoring beyond the clinic, capturing early flare signatures. Registry-based AI pipelines and federated collaboration now allow multicenter model training without compromising patient privacy. Across diseases, predictive frameworks for biologic and Janus kinase (JAK) inhibitor response show growing discriminatory performance, though prospective and equity-aware validation remain limited. Conclusions: AI-enabled fusion of imaging, molecular, and digital biomarkers is reshaping the diagnostic and therapeutic landscape of AIRD. 
Standardized validation, interoperability, and governance frameworks are essential to transition these tools from research to real-world precision rheumatology. The convergence of registries, federated learning, and transparent reporting standards marks a pivotal step toward pragmatic, equitable, and continuously learning systems of care.

1. Background

Autoimmune rheumatic diseases (AIRDs) are clinically and biologically heterogeneous, characterized by overlapping phenotypes, fluctuating disease activity, and variable therapeutic responses [1,2]. This complexity renders early diagnosis, prognostication, and individualized treatment particularly challenging when relying on conventional single-marker heuristics. Recent reviews emphasize that artificial intelligence (AI) is uniquely positioned to address these challenges by modeling the nonlinear, multimodal structure of AIRDs—provided that data quality, transparent governance, and rigorous validation are ensured [3,4].

Among AIRDs, rheumatoid arthritis (RA) and systemic lupus erythematosus (SLE) represent prototypical yet contrasting disease archetypes. RA is characterized by chronic synovial inflammation driven by autoreactive B and T cells, pro-inflammatory cytokines such as TNF, IL-6, and IL-1, and progressive joint destruction mediated by osteoclast activation. SLE, in contrast, arises from loss of immune tolerance, immune-complex deposition, complement activation, and type I interferon overproduction that drives multi-organ involvement [5,6]. Despite advances in diagnostics—including serologic markers such as RF, ACPA, ANA, and anti-dsDNA—both disorders exhibit substantial clinical overlap, fluctuating phenotypes, and seronegative or atypical presentations that delay definitive diagnosis [7,8]. Current therapies range from conventional and biologic DMARDs to JAK inhibitors in RA and B-cell- or interferon-targeted biologics in SLE, yet variable treatment response and relapse remain major unmet needs [9,10]. These challenges underscore the necessity of multidimensional approaches capable of integrating molecular, imaging, and behavioral data to refine disease classification and personalize therapy, an area where AI and biomarker-driven strategies are beginning to demonstrate transformative potential.

Three converging developments have markedly advanced the feasibility of AI-driven precision medicine in AIRD. First, deep learning-based image analysis now enables automated, quantitative assessment of musculoskeletal ultrasound and MRI, producing standardized measures of synovitis, erosions, and joint space narrowing that improve reproducibility across centers [11]. Second, advances in multi-omics profiling have revealed interferon-driven and B cell-enriched molecular programs in SLE and related AIRDs, refining disease subtypes and predicting differential responses to targeted therapies such as anifrolumab or B cell-directed agents [8,12]. Third, continuous digital phenotyping through smartphones and wearable devices allows longitudinal tracking of mobility, sleep, and symptom trajectories, augmenting traditional clinic-based indices and enabling earlier detection of flare risk in rheumatoid arthritis and other immune-mediated inflammatory diseases [13,14].

The AI tasks with the greatest translational potential in AIRD include diagnostic support and triage, disease-activity and flare prediction, and treatment-response modeling for biologic DMARDs and JAK inhibitors. Current best practices employ interpretable ensemble learners such as gradient boosting for registry and electronic health record (EHR) data; convolutional neural networks and transformers for imaging and time-series data; and multimodal fusion frameworks for integrating -omics, imaging, and digital phenotyping streams. To ensure clinical reliability, these applications must be accompanied by robust calibration, uncertainty quantification, and external or temporal validation [15,16].
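
As one concrete reading of the calibration requirement above, the sketch below computes the Brier score, calibration-in-the-large, and a binned expected calibration error (ECE) for a binary flare-risk model using only the Python standard library. The function names, the toy inputs, and the ten-bin ECE scheme are illustrative assumptions, not metrics prescribed by the cited guidance.

```python
def brier_score(probs, labels):
    # Mean squared difference between predicted probability and observed 0/1 outcome.
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(probs)


def calibration_in_the_large(probs, labels):
    # Observed event rate minus mean predicted risk; 0 means no overall bias.
    return sum(labels) / len(labels) - sum(probs) / len(probs)


def expected_calibration_error(probs, labels, n_bins=10):
    # Size-weighted average gap between mean prediction and observed rate per bin.
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        bins[min(int(p * n_bins), n_bins - 1)].append((p, y))  # p == 1.0 -> last bin
    ece = 0.0
    for b in bins:
        if b:
            mean_pred = sum(p for p, _ in b) / len(b)
            observed = sum(y for _, y in b) / len(b)
            ece += len(b) / len(probs) * abs(mean_pred - observed)
    return ece


probs, labels = [0.1, 0.2, 0.8, 0.9], [0, 0, 1, 1]  # toy predictions and outcomes
print(round(brier_score(probs, labels), 3))  # 0.025
```

In a temporal validation, the same functions would simply be applied to predictions for a cohort treated later than the one used for model training.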

Equally critical to progress are the infrastructures that enable implementation. The ACR RISE registry, a large-scale, EHR-enabled quality registry encompassing millions of patients, has become a pivotal substrate for model development, deployment, and post-deployment monitoring. Recent analyses demonstrate that active engagement with RISE dashboards is associated with measurable improvements in clinical quality metrics, underscoring its role as an implementation backbone for precision rheumatology [17,18]. To enable generalization across institutions without centralizing sensitive patient data, federated learning (FL) approaches are increasingly adopted. These methods are supported by governance frameworks that formalize data-sharing agreements, auditability standards, and model-card reporting to ensure transparency and safety in clinical use [19,20].
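
The core aggregation step of federated learning can be illustrated with a federated-averaging (FedAvg-style) update: each site trains locally and shares only model parameters and its sample count, and a coordinator forms a sample-size-weighted average. This is a minimal sketch; the site names, parameter values, and sample sizes are hypothetical, and real deployments add secure aggregation, repeated training rounds, and the governance controls described above.

```python
from typing import Dict, List, Tuple


def federated_average(updates: Dict[str, Tuple[List[float], int]]) -> List[float]:
    """Sample-size-weighted mean of per-site parameter vectors (one FedAvg round)."""
    total = sum(n for _, n in updates.values())
    dim = len(next(iter(updates.values()))[0])
    global_params = [0.0] * dim
    for params, n in updates.values():
        if len(params) != dim:
            raise ValueError("all sites must share the same model architecture")
        for i, w in enumerate(params):
            global_params[i] += w * n / total  # larger sites contribute more
    return global_params


# Hypothetical local updates: (parameter vector, number of local patients).
site_updates = {"site_a": ([0.2, -1.0], 300), "site_b": ([0.6, -0.4], 100)}
print(federated_average(site_updates))  # approximately [0.3, -0.85]
```

Only the parameter vectors and counts cross institutional boundaries here, which is the property that lets multicenter training proceed without pooling patient records.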

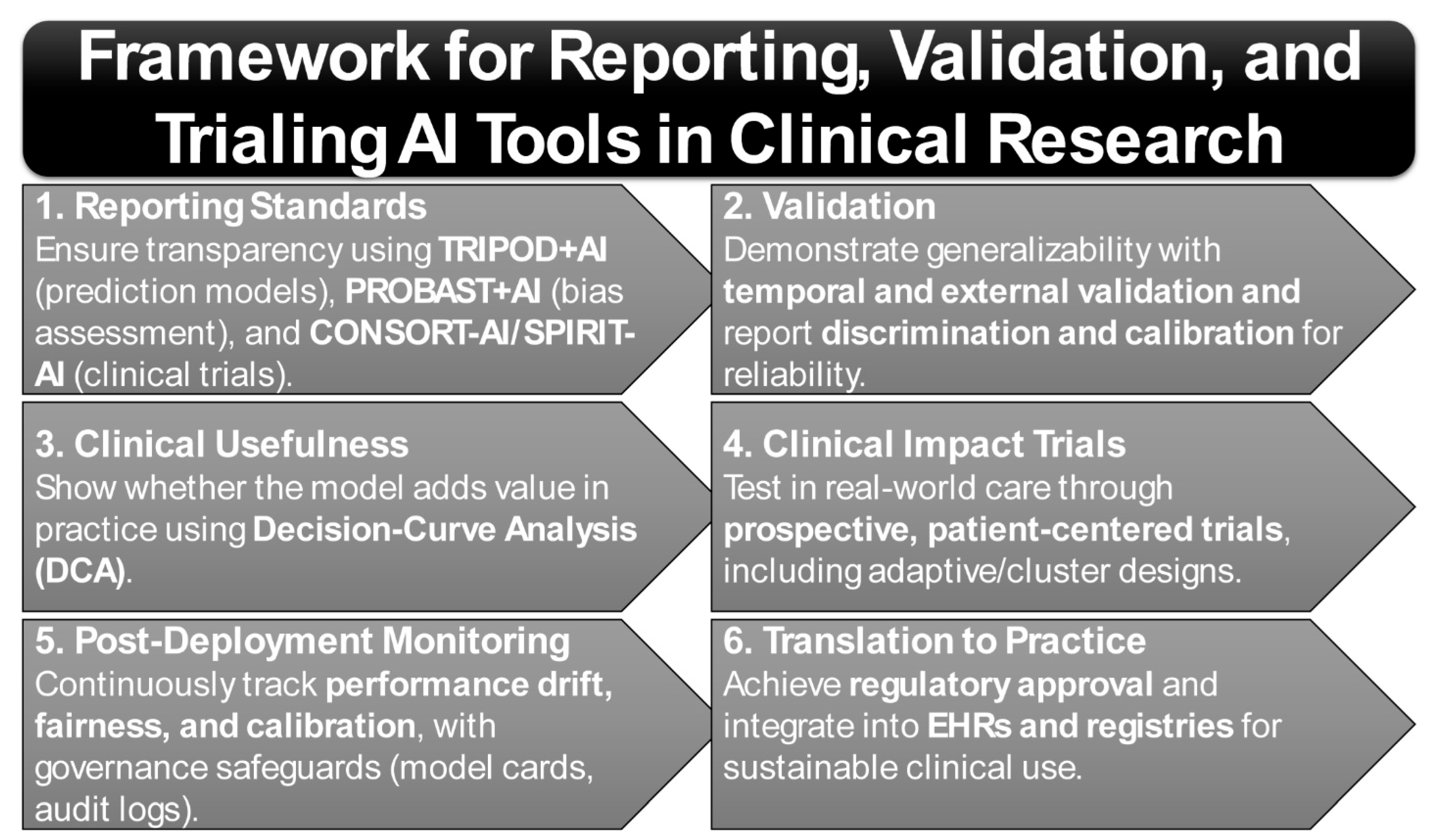

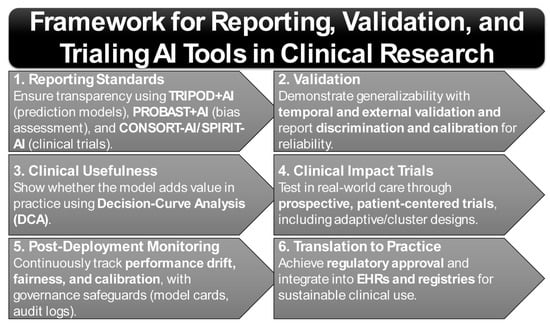

Translation of AI tools from research to practice further depends on adherence to rigorous reporting and bias-assessment frameworks. The TRIPOD+AI guideline establishes minimum requirements for transparent reporting of AI-based prediction models [21,22]. Complementarily, PROBAST+AI provides structured tools for assessing risk of bias and applicability [21], while CONSORT-AI and SPIRIT-AI extend standards for trial reporting and protocol design [23,24]. Together, these instruments form the methodological foundation for the trustworthy evaluation of precision tools in rheumatology. Importantly, given the well-documented influence of ancestry, sex, and socioeconomic context on disease biology, phenotype expression, and care access, equity and subgroup generalizability must be treated as first-order design principles. Continuous recalibration and drift monitoring within registries such as RISE are therefore essential to maintain validity across heterogeneous populations and evolving care environments [25].
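
Drift monitoring can be made concrete with the population stability index (PSI), a widely used statistic that compares the binned distribution of a model input or score between a reference period and a recent period. The sketch below is illustrative only: the CRP values are invented, the bin edges are arbitrary, and the often-quoted alert level of about 0.25 is a rule of thumb from other industries rather than a RISE requirement.

```python
import math
from bisect import bisect_right


def bin_fractions(values, edges):
    # Fraction of values in each of len(edges) + 1 bins split at the cut points.
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(reference, current, edges, eps=1e-6):
    """Population stability index between a reference and a current window."""
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(current, edges)
    # eps keeps the logarithm finite when a bin is empty in one window.
    return sum((c - r) * math.log((c + eps) / (r + eps)) for r, c in zip(ref, cur))


ref_crp = [2, 3, 5, 8, 12, 20, 4, 6]       # hypothetical CRP values (mg/L), baseline period
new_crp = [10, 15, 22, 30, 9, 18, 25, 40]  # hypothetical CRP values, recent period
print(round(psi(ref_crp, new_crp, edges=[5, 10, 20]), 2))  # well above 0.25 -> review model
```

A PSI alert would prompt investigation and, where warranted, recalibration, rather than automatic retraining.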

Bringing these strands together, the field now stands at a critical inflection point: multimodal biomarkers, advanced AI methodologies, and robust implementation infrastructures are converging to enable pragmatic precision rheumatology [26]. This review synthesizes recent advances, highlights validated exemplars, and delineates the standards and governance practices required to move AIRD care from conceptual promise to routine, clinic-embedded decision support.

This review adopts a narrative approach designed to synthesize recent translational advances in AI-driven biomarker discovery and precision medicine within autoimmune rheumatic diseases. Relevant literature was identified through PubMed and Scopus searches spanning 2015–2025, restricted to articles published in English. Search terms incorporated combinations of MeSH descriptors including “artificial intelligence,” “machine learning,” “biomarkers,” “multi-omics,” “autoimmune rheumatic diseases,” “rheumatoid arthritis,” and “systemic lupus erythematosus.” Eligible studies comprised mechanistic, translational, and clinical investigations applying AI or advanced analytical methods to diagnostic, prognostic, or therapeutic modeling in AIRD. Excluded materials encompassed anecdotal case reports and purely genetic-association studies without clinical modeling components. This narrative, rather than systematic, format allows for critical integration of heterogeneous evidence while maintaining focus on clinically actionable insights and methodological innovation.

2. Biomarker Evolution in Autoimmune Rheumatic Diseases

2.1. Classic Biomarkers—Autoantibodies and Inflammatory Markers: Limitations and Drift

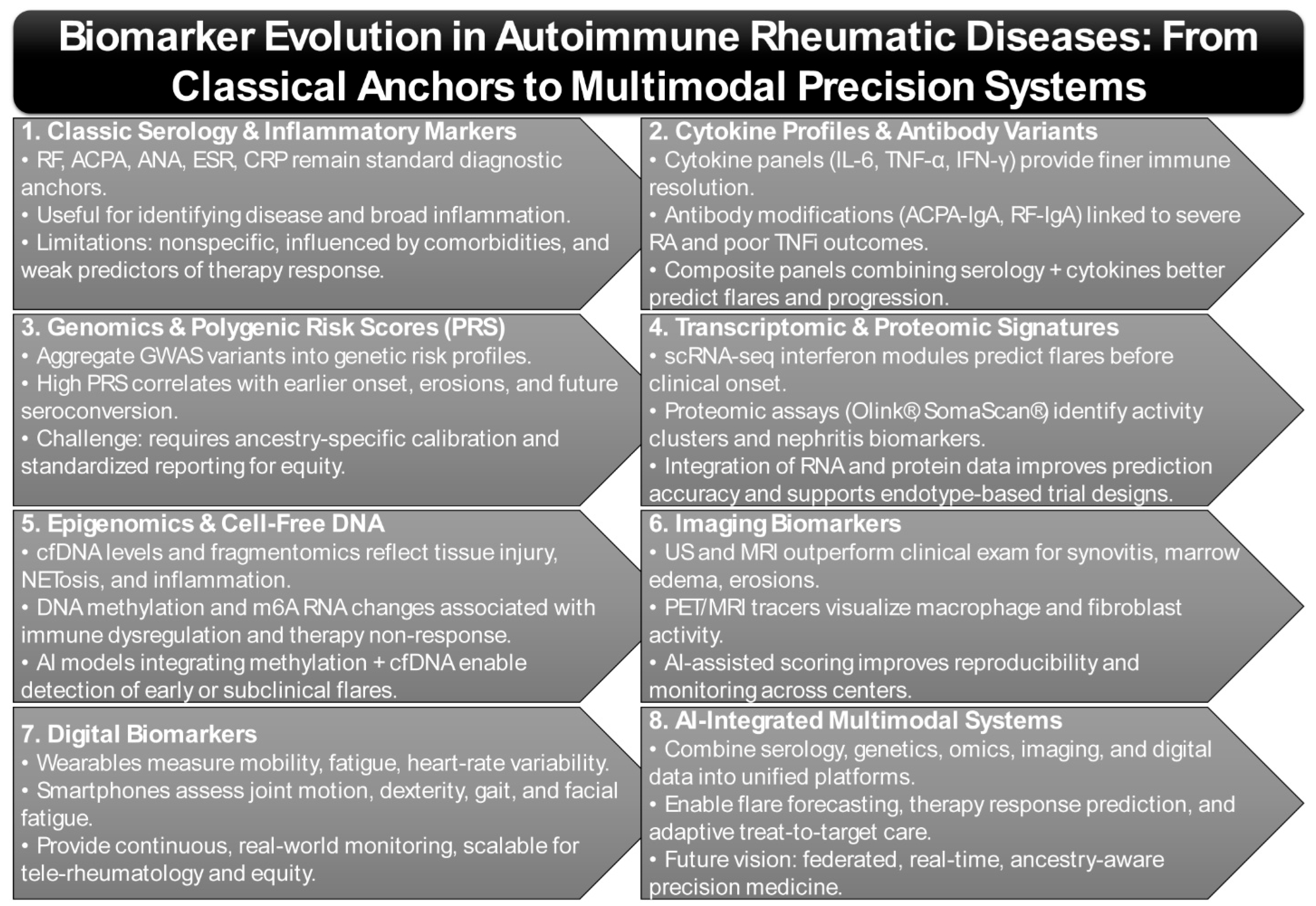

Classic serologic biomarkers, including rheumatoid factor (RF), anti-citrullinated protein antibodies (ACPA), antinuclear antibodies (ANA), and inflammatory markers such as erythrocyte sedimentation rate (ESR) and C-reactive protein (CRP), remain fundamental to AIRD diagnosis and activity monitoring (Figure 1). Elevated RF titers are consistently associated with more severe RA phenotypes, including extra-articular involvement and reduced responsiveness to TNF-inhibitor therapy, underscoring RF’s continued clinical relevance decades after its discovery [27,28]. Additional isotypes, particularly RF-IgA and ACPA-IgA, have been linked to poorer TNF-inhibitor outcomes and enhanced neutrophil extracellular trap formation, suggesting deeper involvement in RA pathogenesis [29,30]. Nevertheless, RF and ACPA alone fail to capture the full mechanistic heterogeneity of disease or to predict therapeutic response with high fidelity.

Figure 1.

Biomarker Landscape Across AIRDs: From Classical Anchors to Multimodal Precision in Autoimmune Rheumatic Diseases.

Recent studies emphasize that these classical markers, while indispensable, act as “broad-spectrum indicators” rather than precise stratifiers of disease biology. For instance, longitudinal cohort analyses demonstrate that while ACPA positivity predicts erosive progression, it cannot reliably discriminate between patients who will remain refractory and those who will achieve remission on biologic therapy. Similarly, CRP and ESR, although widely used as inflammatory proxies, are nonspecific and subject to modulation by comorbidities such as infection, obesity, and cardiovascular disease, thus limiting their interpretive precision [31,32,33].

Emerging data now support a layered biomarker strategy that combines serologic markers with molecular correlates such as cytokine signatures (e.g., IL-6, TNF-α, IFN-γ), autoantibody glycosylation patterns, and proteomic fingerprints derived from high-throughput assays (Figure 1) [34,35]. Such composite approaches have shown superior predictive performance for flare risk, radiographic progression, and biologic drug discontinuation.

Recent syntheses emphasize that composite biomarker panels, which integrate serology with immune-complex quantification and additional molecular indicators, outperform single-marker approaches for forecasting disease activity and remission [36,37]. Such multiplexed approaches are increasingly being embedded into AI-driven algorithms, where serologic markers are not discarded but rather contextualized as part of broader multimodal inputs. This shift reframes classical biomarkers not as outdated relics but as essential “anchors” that, when fused with omics and digital data, yield precision-grade stratification tools for clinical decision-making.
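
The intuition that a composite panel can outrank a single marker can be shown with a toy calculation. The sketch computes the area under the ROC curve (AUC) through its Mann-Whitney formulation and builds an unweighted composite from per-marker z-scores. The data are invented so that the composite separates the classes better; they are not drawn from the cited studies, and the simple averaging stands in for the learned models those studies use.

```python
from statistics import mean, pstdev


def auc(scores, labels):
    # Mann-Whitney form: probability a positive outscores a negative; ties count 0.5.
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def zscores(values):
    mu, sd = mean(values), pstdev(values)
    return [(v - mu) / sd for v in values]


def composite(*markers):
    # Average each patient's standardized marker values (equal weights).
    standardized = [zscores(m) for m in markers]
    return [mean(vals) for vals in zip(*standardized)]


flare = [0, 0, 0, 1, 1, 1]                     # toy outcome labels
crp = [3.0, 9.0, 4.0, 8.0, 5.0, 12.0]          # toy single marker
ifn_score = [0.1, 0.2, 0.3, 1.5, 1.8, 0.9]     # toy second marker
print(auc(crp, flare), auc(composite(crp, ifn_score), flare))
```

In real data the weights would be learned and the comparison made on held-out patients; equal weighting is used here only to keep the mechanics visible.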

2.2. Genomics & Polygenic Risk

Polygenic risk scores (PRSs) aggregate the cumulative burden of GWAS-identified variants to quantify genetic susceptibility in AIRDs. A recent multi-ancestry optimization demonstrated improved predictive capacity of RA PRS but underscored the necessity of ensuring equity and interpretability across diverse ancestry groups (Figure 1) [38]. Similarly, a Taiwanese population study showed that individuals in the highest PRS quartile were significantly more likely to be RF- and ACPA-positive, display bone erosions, and require advanced therapies, thereby directly linking PRS to disease severity and structural damage [39].
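
In its most common additive form, a PRS for a given person is the sum, over scored variants, of each variant's GWAS effect size (beta, typically a log odds ratio) multiplied by the number of effect alleles (0, 1, or 2) that person carries. A minimal sketch follows; the variant identifiers and weights are placeholders rather than a published RA score, and real pipelines also handle strand alignment, imputed dosages, and variant clumping.

```python
from typing import Dict

# Hypothetical variant -> effect-size weights (log odds ratios per effect allele).
WEIGHTS: Dict[str, float] = {"rsA": 0.35, "rsB": 0.12, "rsC": -0.08}


def polygenic_risk_score(genotype: Dict[str, int], weights: Dict[str, float] = WEIGHTS) -> float:
    """Additive PRS: sum of beta_i * (effect-allele count) over scored variants."""
    score = 0.0
    for variant, beta in weights.items():
        count = genotype.get(variant)
        if count is None:
            continue  # missing call skipped here; real pipelines usually impute instead
        if count not in (0, 1, 2):
            raise ValueError(f"invalid allele count for {variant}: {count}")
        score += beta * count
    return score


print(polygenic_risk_score({"rsA": 2, "rsB": 1, "rsC": 0}))  # approximately 0.82
```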

Beyond susceptibility, PRSs are increasingly being explored as predictors of disease course and therapeutic response. For example, recent analyses suggest that higher RA-PRS correlates with earlier disease onset, faster radiographic progression, and reduced likelihood of achieving drug-free remission, positioning PRSs as potential tools for risk stratification at the preclinical and early-disease stages [39]. Importantly, PRSs have also been associated with subclinical autoimmunity, where elevated scores predict future seroconversion of ACPA and RF in at-risk cohorts [39,40], thus offering a genetic “early warning” system for preventive interventions.

While these studies demonstrate consistent directionality and moderate effect sizes (typically explaining 8–15% of variance), most existing RA PRSs have been developed and optimized using predominantly European-ancestry GWAS datasets, with emerging validation in East Asian cohorts beginning to improve cross-population performance [41]. Predictive power and calibration, however, remain variable across ancestries, underscoring the need for broader representation in training datasets. Limited sample diversity and modest absolute risk separation currently constrain clinical translation, emphasizing that PRSs remain adjunctive rather than stand-alone tools.

Collectively, these findings highlight that although PRSs are promising, their clinical translation will require ancestry-specific calibration and integration with clinical, serologic, and environmental datasets to achieve real-world utility. Long-term implementation will also demand harmonized reporting standards, transparent benchmarking across ancestries, and embedding PRSs within federated, multi-site infrastructures to ensure both generalizability and equity.
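
One simple form of ancestry-specific calibration is to express a raw PRS relative to a reference distribution from the same ancestry group, so that a given raw value is not interpreted identically in populations whose score distributions differ. The sketch below assumes hypothetical reference panels and group labels; it illustrates the idea and is not a validated recalibration method.

```python
from statistics import mean, stdev

# Hypothetical raw-PRS reference panels for two ancestry groups.
REFERENCE_PRS = {
    "group_1": [0.40, 0.55, 0.60, 0.72, 0.83],
    "group_2": [0.10, 0.22, 0.30, 0.35, 0.48],
}


def standardized_prs(raw: float, ancestry: str) -> float:
    """Z-score a raw PRS against the reference distribution for its ancestry group."""
    ref = REFERENCE_PRS[ancestry]
    return (raw - mean(ref)) / stdev(ref)


# The same raw score sits slightly below average in one group and about two SDs above it in the other.
print(standardized_prs(0.60, "group_1"), standardized_prs(0.60, "group_2"))
```

Standardization of this kind addresses shifts in score distribution but not differences in predictive accuracy across ancestries, which still require ancestry-diverse training and validation data.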

2.3. Transcriptomic & Proteomic Signatures

Transcriptomic and proteomic profiling have generated powerful insights into disease heterogeneity and flare risk prediction, particularly in SLE. A longitudinal study in Asian SLE patients used phenome-wide causal proteomics with Mendelian randomization and machine learning to identify five key proteins—SAA1, B4GALT5, GIT2, NAA15, and RPIA—whose expression correlated strongly with one-year flare risk; a composite model integrating these proteins with clinical features achieved an AUC of 0.7 [42]. In parallel, a multi-omics screen of 121 SLE patients compared with healthy controls identified more than 90 differentially expressed proteins and 76 metabolites, including apolipoproteins and arachidonic acid derivatives, with strong correlations to disease activity and renal function; a subset of markers selected via random forest models yielded diagnostic AUCs of 0.86–0.90 [34].

Transcriptomic profiling has further delineated immune-cell-specific activation states that underpin flare dynamics. Single-cell RNA sequencing (scRNA-seq) studies have revealed aberrant type I interferon signatures in plasmacytoid dendritic cells and monocytes, alongside persistent activation of cytotoxic CD8+ T cells in patients with active SLE [43,44]. Longitudinal scRNA-seq has shown that these interferon-driven modules can precede clinical flares by weeks, highlighting their potential as predictive biomarkers. Furthermore, bulk RNA-seq studies consistently identify upregulated interferon-stimulated gene (ISG) clusters, which not only stratify patients by disease activity but also predict responsiveness to targeted IFN-blocking therapies such as anifrolumab [45].
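
An interferon signature score of the kind used to stratify patients can be sketched as follows. One common approach, assumed here, z-scores each interferon-stimulated gene (ISG) against healthy-control expression and averages the z-scores per patient. The four-gene list is an illustrative subset of frequently used ISGs, and the expression values in the example are invented; published scores differ in gene sets, normalization, and positivity thresholds.

```python
from statistics import mean, stdev

ISGS = ["IFI27", "IFI44L", "ISG15", "RSAD2"]  # illustrative ISG subset, not a validated panel


def ifn_score(patient, controls):
    """patient: gene -> expression; controls: gene -> list of healthy-control values."""
    z_scores = []
    for gene in ISGS:
        mu, sd = mean(controls[gene]), stdev(controls[gene])
        z_scores.append((patient[gene] - mu) / sd)  # distance from control mean in SD units
    return mean(z_scores)


controls = {g: [5.0, 5.5, 6.0] for g in ISGS}  # invented log2 values in healthy controls
patient = {g: 7.5 for g in ISGS}               # invented patient values
print(ifn_score(patient, controls))  # 4.0: each ISG is 4 control SDs above the control mean
```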

Proteomic investigations are increasingly complemented by high-throughput platforms such as Olink® proximity extension assays and SomaScan® aptamer-based profiling, which allow simultaneous quantification of thousands of circulating proteins at picogram sensitivity (Figure 1) [46]. Notably, proteomic signatures are proving useful in distinguishing lupus nephritis subtypes, where urinary proteomes (e.g., VCAM-1, NGAL, CD163) track intrarenal inflammation and may reduce reliance on repeat biopsies [47].

Integration of transcriptomic and proteomic layers has demonstrated synergistic value. Network-based models show that proteomic changes in the complement and coagulation cascades are tightly coupled with transcriptomic interferon signatures, pointing to shared upstream drivers of disease amplification [48]. Such integrative approaches also enable “endotype” discovery—subgrouping patients by molecular mechanism rather than clinical phenotype—which is now guiding early adaptive trial designs for precision therapeutics.

Despite high discriminatory performance, many studies remain limited by small cohort sizes (often <150 patients), lack of external validation, and variable assay reproducibility. Reported AUCs > 0.85 are encouraging but may overestimate real-world accuracy because of cross-validation bias [49], which arises, for example, when features are selected on the full dataset before cross-validation. Incorporating standardized pipelines and independent replication cohorts will be essential before these signatures can inform regulatory-grade diagnostics.
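
One frequent source of optimism in small omics studies is selecting features on the full dataset before cross-validation, which lets test-fold labels leak into the model. The sketch below contrasts leaky selection with selection performed inside each training fold, using pure-noise data. The feature-scoring rule (absolute difference in class means), the nearest-centroid classifier, and all sizes are simplifying assumptions chosen for brevity, not the methods of the cited studies.

```python
import random
from statistics import mean


def folds(n, k, seed=0):
    # Shuffle row indices once, then deal them into k disjoint folds.
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def top_features(X, y, rows, n_feats):
    # Rank features by absolute difference in class means, using `rows` only.
    def gap(f):
        pos = [X[r][f] for r in rows if y[r] == 1]
        neg = [X[r][f] for r in rows if y[r] == 0]
        return abs(mean(pos) - mean(neg))
    return sorted(range(len(X[0])), key=gap, reverse=True)[:n_feats]


def fit_centroids(X, y, train, feats):
    return {label: [mean(X[r][f] for r in train if y[r] == label) for f in feats]
            for label in (0, 1)}


def predict(centroids, x, feats):
    # Nearest-centroid rule in the space of the selected features.
    def dist(label):
        return sum((x[f] - c) ** 2 for f, c in zip(feats, centroids[label]))
    return min(centroids, key=dist)


def cv_accuracy(X, y, n_feats=5, n_folds=5, leaky=False):
    all_rows = list(range(len(X)))
    correct = 0
    for test in folds(len(X), n_folds):
        test_set = set(test)
        train = [r for r in all_rows if r not in test_set]
        # leaky=True reproduces the mistake: selection also sees test-fold labels.
        feats = top_features(X, y, all_rows if leaky else train, n_feats)
        centroids = fit_centroids(X, y, train, feats)
        correct += sum(predict(centroids, X[r], feats) == y[r] for r in test)
    return correct / len(X)


rng = random.Random(1)
X = [[rng.gauss(0, 1) for _ in range(500)] for _ in range(40)]  # 40 samples, pure noise
y = [i % 2 for i in range(40)]
print(cv_accuracy(X, y, leaky=True), cv_accuracy(X, y, leaky=False))
```

On noise-only data the leaky variant will typically report accuracy well above chance, whereas within-fold selection should hover near 0.5; that gap is the optimism described above.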

These findings suggest that integrative multi-omics approaches can outperform mono-omics strategies in classification, monitoring, and risk stratification in SLE. Going forward, embedding transcriptomic and proteomic biomarkers into federated learning frameworks, with continuous recalibration across diverse ancestries and treatment contexts, will be essential to move from discovery into clinically deployable decision-support tools.

2.4. Epigenomic Alterations and Cell-Free DNA/Fragmentomics as Emerging Biomarkers

Epigenetic dysregulation and cell-free DNA (cfDNA) signatures are rapidly emerging as minimally invasive, dynamic biomarkers in AIRDs [50]. cfDNA, derived from both nuclear and mitochondrial sources, reflects tissue injury, neutrophil extracellular trap (NET) activity, and systemic inflammation [51,52]. Studies report that elevated plasma cfDNA levels, particularly mitochondrial cfDNA, correlate with disease activity in RA and SLE and could serve as a complement to traditional inflammatory markers such as CRP and ESR for real-time disease monitoring [53,54]. Earlier foundational studies highlighted that cfDNA quantification is highly sensitive to pre-analytical variables, including sample handling, fragmentation bias, and contamination, necessitating rigorous standardization before clinical application [55,56,57].

Recent advances extend beyond absolute cfDNA concentration toward “fragmentomics”—the analysis of cfDNA fragment size distribution, genomic positioning, and nucleosomal occupancy patterns [58]. These signatures provide clues about tissue-of-origin, cell death pathways, and immune activation states. For example, RA patients demonstrate enrichment of neutrophil-derived cfDNA fragments, consistent with aberrant NETosis, while SLE cohorts exhibit cfDNA fragmentation patterns linked to lymphocyte and endothelial cell injury [59]. Emerging algorithms now integrate cfDNA methylation landscapes with fragmentomic features to improve sensitivity for detecting low-grade inflammation and organ-specific damage.
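
The fragment-size features mentioned above can be sketched from a list of cfDNA fragment lengths. The windows used here (short fragments of 100 to 150 bp, long fragments of 151 to 220 bp, and a mononucleosomal window of 160 to 180 bp around the roughly 167 bp peak) follow conventions common in the fragmentomics literature but are assumptions in this context, not thresholds validated in RA or SLE.

```python
from statistics import median


def fragment_features(lengths):
    """Summary fragment-size features from cfDNA fragment lengths in bp."""
    short = sum(1 for n in lengths if 100 <= n <= 150)
    long_ = sum(1 for n in lengths if 151 <= n <= 220)
    mono = sum(1 for n in lengths if 160 <= n <= 180)  # around the ~167 bp nucleosomal peak
    return {
        "median_bp": median(lengths),
        # Short-to-long ratio: a common fragmentomic summary of size-profile shifts.
        "short_long_ratio": short / long_ if long_ else float("inf"),
        "mononucleosomal_fraction": mono / len(lengths),
    }


print(fragment_features([120, 145, 166, 167, 168, 175, 190, 320]))  # invented lengths
```

Tissue-of-origin and methylation features would be layered on top of such size summaries; they require genomic coordinates and are beyond this sketch.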

Epigenetically, multiple studies have reported consistent DNA methylation alterations and N6-methyladenosine (m6A) modifications in RA and SLE, both of which show potential as diagnostic classifiers and mechanistic readouts of immune dysregulation [60,61,62,63]. DNA methylation changes at immune regulatory loci (e.g., TNFAIP3, STAT4, IRF5) have been linked to aberrant cytokine production and treatment non-response, while altered m6A RNA methylation patterns are increasingly implicated in dysregulated T- and B-cell differentiation [64,65]. These findings suggest that epigenomic markers are not only passive correlates of disease but may also represent causal drivers of autoimmune pathogenesis.

While fragmentomic and epigenomic approaches hold considerable promise for early disease detection and molecular stratification, their current translational readiness remains limited (technology readiness level ~3–4). Most published studies involve fewer than 100 participants and are based on retrospective or convenience sampling, which constrains reproducibility and effect-size precision [56,66]. Establishing standardized pre-analytical workflows, cross-platform benchmarking, and multi-center prospective validation will be essential prerequisites for clinical deployment.

Importantly, interpretable machine learning models are beginning to integrate such data. A multi-task deep learning system demonstrated efficacy in learning cross-disease methylation signatures that retain both predictive accuracy and biological interpretability across autoimmune phenotypes (Figure 1) [67]. Other computational pipelines now fuse cfDNA fragmentomics with methylome-wide profiles, enabling the detection of early disease transitions and subclinical flare states [68]. These approaches highlight the promise of dynamic, mechanism-aware biomarkers that could guide preemptive therapy escalation or tapering.

These advances position cfDNA fragmentomics and epigenomic readouts as central candidates for non-invasive, mechanism-aware disease stratification in AIRDs. Looking forward, standardizing analytic pipelines, embedding ancestry-aware epigenomic references, and validating models prospectively in large multi-center cohorts will be critical steps for clinical translation.

2.5. Imaging Biomarkers

Anatomical and molecular imaging have transformed the capacity to detect subclinical inflammation and structural progression in AIRDs. Among these modalities, ultrasound (US) and magnetic resonance imaging (MRI) remain indispensable for detecting and monitoring inflammatory and structural change. US offers high sensitivity for synovitis, tenosynovitis, and superficial erosions, enabling dynamic bedside assessment of disease activity. However, it cannot directly visualize bone marrow edema, which is best characterized by MRI sequences sensitive to water content, such as STIR or T2-weighted fat-suppressed imaging. Together, these modalities provide complementary information that underpins diagnosis, treat-to-target monitoring, and early evaluation of therapeutic response [69,70]. Musculoskeletal imaging studies have shown that US and MRI consistently outperform clinical examination in quantifying inflammatory burden and predicting structural outcomes in RA and psoriatic arthritis [71,72].

Simplified scoring approaches such as RAMRIS-5 have been validated for use in both early and established RA, enabling semi-quantitative assessment of inflammation and joint damage with reduced burden compared to full OMERACT RAMRIS scoring [73,74]. In clinical trials, such simplified scoring systems have accelerated feasibility while retaining high sensitivity to change, thereby supporting their integration into adaptive trial designs and pragmatic real-world monitoring frameworks [75,76]. Importantly, these simplified indices are increasingly paired with AI-assisted image interpretation, which reduces inter-reader variability and enhances reproducibility across centers.
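
Inter-reader variability on semi-quantitative scores is often summarized with a weighted kappa statistic. Because synovitis or erosion grades are ordinal, the sketch below uses quadratic weights so that a one-grade disagreement is penalized less than a three-grade one. It is a generic agreement statistic, not part of the RAMRIS definition, and the reader scores in the example are invented.

```python
def weighted_kappa(a, b, n_cat):
    """Quadratic-weighted Cohen's kappa for two raters' integer grades 0..n_cat-1."""
    n = len(a)
    observed = [[0] * n_cat for _ in range(n_cat)]
    for i, j in zip(a, b):
        observed[i][j] += 1
    row = [sum(r) for r in observed]                                         # rater A marginals
    col = [sum(observed[i][j] for i in range(n_cat)) for j in range(n_cat)]  # rater B marginals
    num = den = 0.0
    for i in range(n_cat):
        for j in range(n_cat):
            w = (i - j) ** 2 / (n_cat - 1) ** 2  # larger gaps cost more
            num += w * observed[i][j]            # observed disagreement
            den += w * row[i] * col[j] / n       # disagreement expected by chance
    return 1.0 - num / den


reader_1 = [0, 1, 2, 1, 0]  # invented synovitis grades
reader_2 = [0, 1, 2, 2, 0]
print(round(weighted_kappa(reader_1, reader_2, n_cat=3), 3))  # 0.857
```

Comparing this statistic between unaided readers and AI-assisted readers is one straightforward way to quantify the reduction in inter-reader variability described above.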

Beyond structural imaging, molecular imaging has advanced rapidly: novel radiotracers targeting activated macrophages or fibroblast-like synoviocytes in inflamed synovium now enable non-invasive visualization of disease-specific biology [69,77]. PET/MRI with tracers such as ¹⁸F-FDG or macrophage-specific ligands can complement US/MRI by providing metabolic signatures of synovitis, creating opportunities for multi-scale phenotyping and drug-response monitoring [78,79]. For instance, PET tracers binding to the folate receptor β on synovial macrophages allow distinction between inflamed and quiescent tissue, while novel fibroblast-activation protein (FAP) ligands provide unique readouts of stromal pathogenicity [80,81]. These molecular approaches are beginning to bridge the gap between static anatomic imaging and dynamic immune-pathobiology, enabling visualization of pathways directly targeted by emerging therapies.

Technological convergence is also driving next-generation imaging biomarkers. Hybrid modalities such as PET/MRI integrate high-resolution anatomic detail with metabolic and immunologic readouts in a single acquisition, while AI-enhanced US leverages automated Doppler signal quantification for real-time flare detection (Figure 1) [82]. Furthermore, machine learning models trained on imaging features—including gray-scale US, power Doppler, and MRI-derived quantitative maps—are being developed for automated disease activity scoring and longitudinal progression prediction [3].
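
Automated Doppler quantification can be reduced, in its simplest form, to the fraction of pixels in a synovial region of interest (ROI) whose power Doppler signal exceeds a noise threshold. The sketch assumes the ROI has already been segmented and that intensities are normalized to [0, 1]; the threshold value is hypothetical, and AI-enhanced systems learn far richer representations than this single number.

```python
from typing import List


def doppler_fraction(roi: List[List[float]], threshold: float = 0.2) -> float:
    """Share of ROI pixels whose normalized Doppler intensity exceeds `threshold`."""
    pixels = [v for row in roi for v in row]
    flow = sum(1 for v in pixels if v > threshold)  # pixels counted as flow signal
    return flow / len(pixels)


roi = [[0.0, 0.1, 0.5],   # invented normalized intensities
       [0.3, 0.0, 0.0],
       [0.9, 0.1, 0.0]]
print(round(doppler_fraction(roi), 3))  # 0.333: 3 of 9 pixels exceed 0.2
```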

Despite impressive technical accuracy, most imaging-AI studies remain single-center and retrospective, with heterogeneous scanner protocols and modest sample sizes (<300 cases). Reported diagnostic AUCs of 0.85–0.95 often lack external validation, and cost or infrastructure barriers may hinder scalability [83]. Prospective multicenter benchmarking and open-source reference datasets are urgently needed to confirm clinical benefit and cost-effectiveness.

Imaging biomarkers are poised to serve not only as diagnostic adjuncts but as surrogate endpoints in clinical trials, particularly as regulatory agencies begin to recognize imaging-derived metrics of synovitis or erosive change as validated outcome measures. Integration with digital biomarkers (e.g., wearables, motion capture) may further enable remote, multimodal disease activity tracking, ushering in an era of precision monitoring in AIRDs.

Across these domains, imaging biomarkers demonstrate the greatest real-world validation and regulatory readiness, followed by transcriptomic and proteomic signatures that show emerging predictive utility in SLE and RA. Epigenomic and fragmentomic markers remain largely exploratory, whereas polygenic-risk tools provide moderate yet ancestry-limited predictive power. Collectively, this hierarchy highlights that the integration of precision-medicine approaches in AIRD is progressing unevenly—imaging is nearing clinical implementation, multi-omics are in translational consolidation, and digital biomarkers represent the next frontier once standardization and equity challenges are resolved.

2.6. Digital Biomarkers (Wearables/Smartphones)

Digital phenotyping using smartphones and wearable sensors is increasingly recognized as a transformative approach to capture continuous, ecologically valid data in AIRDs (Figure 1) [4]. Digital phenotypes refer to quantifiable behavioral and physiological signatures—such as movement patterns, heart-rate variability, speech prosody, and sleep rhythms—captured through connected sensors and mobile devices [84]. These dynamic data streams mirror disease activity in real time, complementing conventional clinical and laboratory markers. Integrating Apple Watch-derived mobility, fatigue, and heart-rate metrics with smartphone-guided dexterity tasks enabled machine learning models to infer RA disease activity and severity with accuracy exceeding that of intermittent clinical assessments [13,85]. These digital endpoints provide high-frequency functional data that extends beyond clinic visits, offering unprecedented resolution for disease course monitoring. Unlike traditional biomarkers that capture “snapshots” of disease during scheduled visits, digital biomarkers generate dense time-series data that reflect real-world fluctuations in mobility, pain, and fatigue, thus uncovering patterns that may be invisible to episodic clinical assessment.
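
Dense time-series data allow each patient to serve as their own reference. The sketch below flags a day when step count falls more than a chosen number of standard deviations below the patient's mean over the preceding days. The function name, the 14-day window, the 2-SD cut-off, and the use of step count alone are illustrative assumptions rather than a validated flare definition.

```python
from statistics import mean, stdev


def flag_low_activity(daily_steps, window=14, z_cut=2.0):
    """Return (day_index, flagged) for each day that has `window` days of history."""
    flags = []
    for t in range(window, len(daily_steps)):
        history = daily_steps[t - window:t]  # personal baseline: preceding days only
        mu, sd = mean(history), stdev(history)
        z = (daily_steps[t] - mu) / sd if sd > 0 else 0.0
        flags.append((t, z < -z_cut))
    return flags


steps = [6000, 6200, 5800] * 5 + [2500]  # invented daily step counts; sharp drop on the last day
print(flag_low_activity(steps))  # [(14, False), (15, True)]
```

Using only preceding days keeps the baseline causal, so the same logic could run prospectively on a live data stream.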

To ensure clinical reliability, wearable and smartphone measures undergo standardization through multi-device calibration, test–retest reproducibility studies, and validation against established clinical indices such as DAS28, SLEDAI, or CDAI [86]. Current frameworks led by the Digital Rheumatology Network (DRN) and OMERACT recommend benchmarking algorithms for accuracy, precision, and interpretability across vendors, while cross-platform normalization corrects for differences in sensor sampling frequency, placement, and firmware [13]. Such validation enables digital phenotypes to evolve from exploratory metrics into qualified clinical biomarkers capable of regulatory acceptance.
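
Validating a digital measure against clinical indices first requires computing those indices. The sketch below implements the standard DAS28-CRP formula (CRP in mg/L, patient global health on a 0 to 100 scale) and CDAI, together with a Spearman rank correlation that could relate a digital metric to either index. Function and argument names are illustrative; a full validation study would also report agreement, test-retest reliability, and responsiveness.

```python
import math
from statistics import mean


def das28_crp(tjc28, sjc28, crp_mg_l, patient_global_0_100):
    """DAS28-CRP from 28-joint tender/swollen counts, CRP (mg/L), and patient global (0-100)."""
    if not (0 <= tjc28 <= 28 and 0 <= sjc28 <= 28):
        raise ValueError("joint counts must lie between 0 and 28")
    return (0.56 * math.sqrt(tjc28) + 0.28 * math.sqrt(sjc28)
            + 0.36 * math.log(crp_mg_l + 1) + 0.014 * patient_global_0_100 + 0.96)


def cdai(tjc28, sjc28, patient_global_0_10, evaluator_global_0_10):
    """Clinical Disease Activity Index: simple sum with no laboratory component."""
    return tjc28 + sjc28 + patient_global_0_10 + evaluator_global_0_10


def _average_ranks(values):
    # 1-based ranks; tied values receive the mean of the positions they occupy.
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the average ranks."""
    rx, ry = _average_ranks(x), _average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in rx) * sum((b - my) ** 2 for b in ry))


print(round(das28_crp(4, 1, 9, 50), 2))  # 3.89
```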

Recent validation studies of single-camera smartphone video analysis have reinforced its feasibility in rheumatology [87]. One investigation demonstrated that finger-joint mobility captured via smartphone correlated strongly with DAS28 scores and physician-assessed disease activity, underscoring its potential as a scalable, low-cost digital biomarker for tele-rheumatology [88]. Other studies have expanded this paradigm to include gait analysis, grip strength estimation, and facial expression monitoring for fatigue and pain detection, showing that even consumer-grade cameras can yield clinically actionable signals [89]. Importantly, these methods democratize access by reducing reliance on specialized imaging infrastructure, particularly in under-resourced settings.

At the systems level, the DRN is driving consensus guidelines around the validation and integration of digital tools, emphasizing explainable AI, interoperability, and patient-centric design to ensure uptake in PsA, RA, and beyond [90,91]. The DRN and related initiatives are also addressing critical challenges of data privacy, regulatory approval, and clinical workflow integration, highlighting that technical feasibility must be matched with governance and ethical oversight. Moreover, hybrid models that fuse digital biomarkers with molecular and imaging data are emerging, enabling multi-layered disease signatures that better reflect the complexity of AIRDs.

Collectively, these initiatives position digital biomarkers as key elements of next-generation precision rheumatology, complementing molecular and imaging modalities. The integration of smartphone- and wearable-derived biomarkers into adaptive trials, treat-to-target protocols, and remote patient monitoring platforms may shorten feedback loops in clinical care—moving from reactive management of flares toward proactive, personalized disease interception.

3. Harnessing AI and Machine Learning for Autoimmune Rheumatic Diseases

Artificial intelligence (AI) and machine learning (ML) are increasingly applied to AIRD, offering opportunities for earlier diagnosis, continuous monitoring, and personalized treatment optimization [92,93]. Unlike other specialties with more homogeneous data, AIRD research faces the complexity of heterogeneous clinical presentations, multimodal diagnostic pipelines (imaging, serology, -omics), and variable disease trajectories, all of which demand sophisticated modeling approaches. Methods range from traditional regularized regression and gradient boosting to advanced deep learning and multimodal fusion architectures. Importantly, new trends highlight causal inference frameworks and clinician-in-the-loop deployment, reflecting a shift from mere predictive accuracy toward actionable, safe, and interpretable tools [94]. This shift underscores the transition from “black-box prediction” to mechanism-aware, clinically integrated AI that aligns with regulatory expectations for transparency and accountability.
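
Multimodal fusion can take several forms; one of the simplest is late (decision-level) fusion, in which each modality-specific model outputs a probability and the outputs are then combined. The sketch below averages modality outputs on the log-odds scale with fixed weights and renormalizes over whichever modalities are available for a given patient. The modality names and weights are hypothetical placeholders; in practice the weights, or a stacking model, would be fit on validation data.

```python
import math


def _logit(p, eps=1e-6):
    p = min(max(p, eps), 1 - eps)  # clip so log-odds stay finite
    return math.log(p / (1 - p))


def late_fusion(probs, weights):
    """Weighted log-odds average over available modalities (None = missing)."""
    available = {m: p for m, p in probs.items() if p is not None}
    if not available:
        raise ValueError("at least one modality prediction is required")
    total_w = sum(weights[m] for m in available)  # renormalize over present modalities
    z = sum(weights[m] * _logit(p) for m, p in available.items()) / total_w
    return 1 / (1 + math.exp(-z))


WEIGHTS = {"imaging": 0.5, "serology": 0.3, "wearable": 0.2}  # hypothetical weights
print(late_fusion({"imaging": 0.8, "serology": 0.6, "wearable": None}, WEIGHTS))
```

Late fusion tolerates missing modalities gracefully, which suits routine care where not every patient has imaging, omics, and wearable data; intermediate or early fusion can capture cross-modal interactions but usually needs complete inputs.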

Recent translational advances demonstrate that AI tools are no longer confined to experimental settings but are beginning to enhance clinical decision-making across major AIRDs. In rheumatoid arthritis, transformer-based ultrasound models have achieved near-expert accuracy for detecting synovitis and quantifying power Doppler signal, effectively standardizing disease-activity scoring and reducing inter-reader variability [95]. In systemic lupus erythematosus, multimodal AI frameworks combining interferon gene signatures with clinical features have successfully predicted response to anifrolumab and other IFN-blocking biologics, guiding precision therapy selection in real-world cohorts [96]. Similarly, in systemic sclerosis, convolutional neural network-based nailfold-capillaroscopy systems (e.g., CAPI-Detect, EfficientNet-B0) have improved early diagnostic accuracy to over 90%, allowing detection of microvascular pathology before overt clinical manifestations [97,98]. These exemplars illustrate how AI is directly enhancing diagnostic reproducibility, flare prediction, and therapeutic response modeling, narrowing the gap between algorithmic innovation and bedside application.

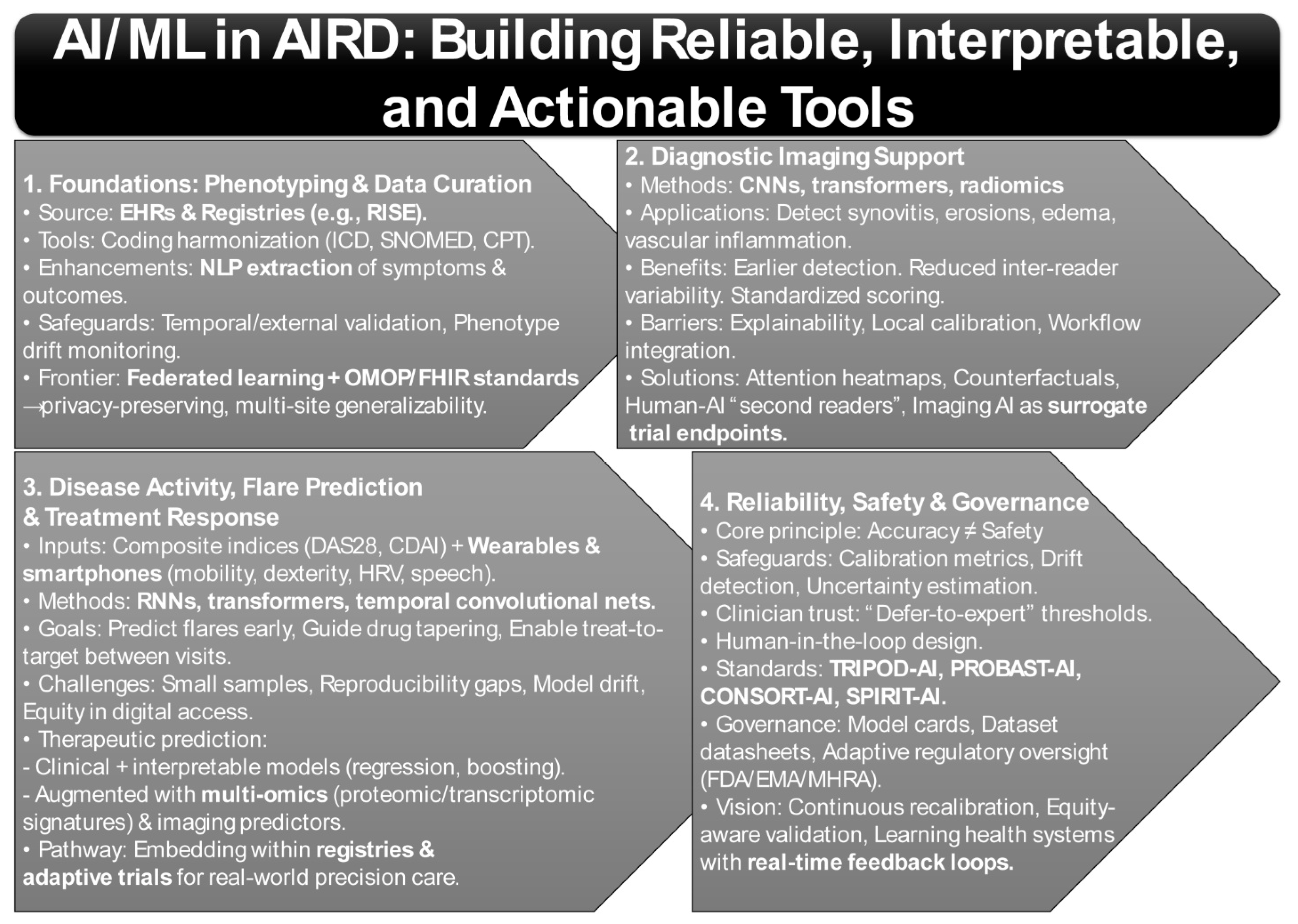

3.1. Phenotyping and EHR Curation

A fundamental challenge in AIRD is the reliable construction of phenotypes from complex EHR and registry data [99,100]. National efforts such as the American College of Rheumatology’s RISE registry now aggregate data across >1000 U.S. practices, enabling longitudinal monitoring and real-world model development [101,102,103]. However, registry-based AI pipelines require harmonization of coding systems (ICD, CPT, SNOMED), management of missingness, and governance against “phenotype drift” as disease definitions evolve (Figure 2) [104,105].
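The harmonization step can be made concrete with a minimal Python sketch of a rule-based phenotype. Codes from different vocabularies are mapped onto one harmonized concept, and a patient qualifies only with at least two dated diagnoses recorded at least 30 days apart. The concept map, the example codes, and the 2-code/30-day rule are illustrative assumptions, not a validated RA algorithm. Records with missing dates are dropped explicitly rather than imputed.

```python
from datetime import date, timedelta

# Hypothetical concept map: (vocabulary, code prefix) -> harmonized concept.
# Codes are illustrative only; a real pipeline would use curated, versioned value sets.
CONCEPT_MAP = {
    ("ICD10CM", "M05"): "RA",
    ("ICD10CM", "M06"): "RA",
    ("SNOMED", "69896004"): "RA",
}


def harmonize(vocabulary, code):
    """Return the harmonized concept for a raw code, or None if it is unmapped."""
    for (vocab, prefix), concept in CONCEPT_MAP.items():
        if vocabulary == vocab and code.startswith(prefix):
            return concept
    return None


def meets_phenotype(records, concept="RA", min_codes=2, min_gap_days=30):
    """Illustrative rule: >= min_codes dated diagnoses spanning >= min_gap_days."""
    dates = sorted(
        r["date"]
        for r in records
        if r.get("date") is not None  # missing dates are excluded, not imputed
        and harmonize(r["vocabulary"], r["code"]) == concept
    )
    if len(dates) < min_codes:
        return False
    return dates[-1] - dates[0] >= timedelta(days=min_gap_days)


if __name__ == "__main__":
    patient = [
        {"vocabulary": "ICD10CM", "code": "M05.79", "date": date(2023, 1, 10)},
        {"vocabulary": "SNOMED", "code": "69896004", "date": date(2023, 3, 2)},
    ]
    print(meets_phenotype(patient))  # True: two mapped codes, 51 days apart
```

The concept map is kept as data rather than logic. One way to audit "phenotype drift" is to version that map alongside the model.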

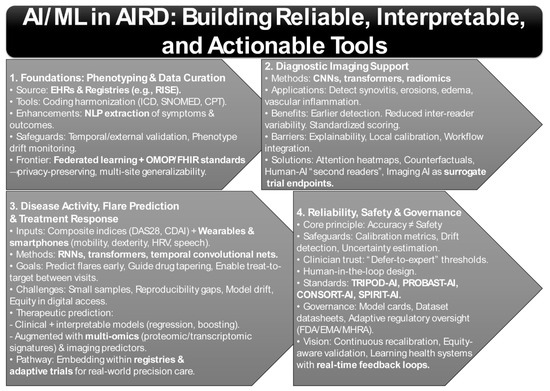

Figure 2.

AI/ML Methods That Matter in Autoimmune Rheumatic Diseases: From Phenotyping to Precision Care.

Recent work demonstrates that natural language processing (NLP) can significantly enhance data capture. For example, studies showed that NLP applied to free-text rheumatology notes extracted functional status and pulmonary outcomes with higher sensitivity than structured coding alone, reducing misclassification in registries [106,107,108]. Yet, these pipelines face generalizability challenges—site-specific documentation habits and EHR vendor differences often degrade performance when applied across institutions [108,109]. This underscores the need for temporal and external validation, as well as model governance structures to ensure reliability over time and across populations.
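Below is a deliberately simplified sketch of rule-based extraction from free text, built on hypothetical term and negation lists. It marks a pulmonary finding as affirmed or negated with a NegEx-style rule: a negation cue shortly before the mention, within the same sentence. Production NLP systems rely on curated lexicons or trained models. Site-specific phrasing is exactly where rules like these tend to break.

```python
import re

# Hypothetical lexicons; real systems use curated vocabularies or trained models.
TARGET_TERMS = {
    "interstitial lung disease": "ILD",
    "ild": "ILD",
    "pulmonary fibrosis": "ILD",
}
NEGATION_CUES = re.compile(r"\b(no|denies|without|negative for)\b")


def extract_mentions(note, window=40):
    """Return {concept: "affirmed" | "negated"} for target terms in a free-text note.

    A mention counts as negated when a negation cue occurs within `window`
    characters before it in the same sentence (a simplified NegEx-style rule).
    """
    results = {}
    for sentence in re.split(r"[.;\n]", note.lower()):
        for term, concept in TARGET_TERMS.items():
            for match in re.finditer(r"\b" + re.escape(term) + r"\b", sentence):
                preceding = sentence[max(0, match.start() - window):match.start()]
                status = "negated" if NEGATION_CUES.search(preceding) else "affirmed"
                # Any affirmed mention in the note takes precedence over negated ones.
                if results.get(concept) != "affirmed":
                    results[concept] = status
    return results
```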

Moreover, federated learning approaches are now being piloted to enable cross-institutional model training without centralizing sensitive patient data, thereby improving generalizability while maintaining privacy. Interoperability frameworks such as OMOP-CDM and FHIR are increasingly being paired with AI pipelines to standardize data representation, further reducing barriers to multicenter validation [110,111]. Together, these developments illustrate how robust phenotype construction is evolving into the foundation for downstream predictive and prognostic modeling in AIRD.
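The core idea of federated learning can be sketched with federated averaging (FedAvg) over a logistic regression model. The sketch is written in plain Python so it runs without dependencies. Each hypothetical site trains locally on its own records and shares only model weights. The coordinator then averages those weights in proportion to site sample size. This is an illustrative sketch only. It omits secure aggregation, differential privacy, and handling of heterogeneous feature sets, all of which real deployments would need.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def local_update(weights, data, lr=0.1, epochs=5):
    """Full-batch gradient descent for logistic regression on one site's records.

    `data` is a list of (features, label) pairs, with features[0] == 1.0 as the
    intercept term. Only the returned weights leave the site, never the records.
    """
    w = list(weights)
    n = len(data)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - y
            for j, xj in enumerate(x):
                grad[j] += err * xj / n
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


def federated_averaging(sites, n_features, rounds=50):
    """FedAvg: each round, average the locally updated models weighted by site size."""
    global_w = [0.0] * n_features
    total = sum(len(d) for d in sites)
    for _ in range(rounds):
        local_models = [local_update(global_w, d) for d in sites]
        global_w = [
            sum(len(d) * w[j] for d, w in zip(sites, local_models)) / total
            for j in range(n_features)
        ]
    return global_w
```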

3.2. Diagnostic Imaging Support

Imaging is perhaps the most mature application of AI in AIRD. Deep learning architectures, particularly convolutional neural networks (CNNs) and transformers, together with radiomics pipelines, have shown strong performance in identifying synovitis, erosions, bone marrow edema, and vascular inflammation across conditions such as RA, axial spondyloarthritis (axSpA), systemic sclerosis (SSc), and giant cell arteritis (GCA) (Figure 2) [83,112].

A study demonstrated that AI-enhanced radiographic scoring systems could identify subtle erosive changes in RA earlier than human assessors, with reduced inter-reader variability [113]. Similarly, studies showed that transformer-based ultrasound models accurately quantified synovial proliferation and power Doppler signal, suggesting a role in standardizing clinical scoring across sites [3,114,115]. A meta-analysis of deep learning in musculoskeletal imaging confirmed robust performance but also highlighted heterogeneity in validation and lack of calibration reporting [116].

The translational challenge lies in prospective evaluation and workflow integration. While AI can accelerate reads and standardize interpretation, radiologists and rheumatologists demand explainability, uncertainty estimation, and local calibration before adopting models into routine practice. Without these, there is a risk of automation bias, especially in borderline cases where expert oversight remains indispensable.

Emerging solutions include attention heatmaps, counterfactual visualizations, and uncertainty quantification methods that help clinicians understand why a model made a given prediction [117]. Hybrid human-AI systems are also being tested, where models act as triage or “second readers,” flagging high-risk scans while leaving ultimate decision-making with experts [118]. In parallel, early-phase trials are beginning to explore imaging AI as a surrogate endpoint for drug efficacy, raising the possibility that automated quantification of synovitis or vascular inflammation could accelerate therapeutic evaluation [119].

3.3. Disease Activity, Flare Prediction, and Treatment Response

Disease activity monitoring and treatment optimization constitute two of the most critical unmet needs in the application of AI to AIRD. Time-series ML frameworks have been increasingly applied to forecast fluctuations in disease activity by integrating longitudinal measurements of validated composite indices, including the Disease Activity Score in 28 joints (DAS28) and the Clinical Disease Activity Index (CDAI), with continuous streams of patient-generated health data from wearables and smartphones (Figure 2) [120,121]. Recent evidence indicates that accelerometer-derived mobility profiles and touchscreen-based dexterity metrics, when combined with patient-reported outcomes, can anticipate flare events several days prior to their clinical manifestation [122,123]. Such approaches highlight the potential of AI to extend treat-to-target strategies into inter-visit periods and to enable proactive adjustments in disease management [124].
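One way to frame this forecasting problem is to convert daily sensor and PRO streams into windowed examples. Only past days serve as features, and each example is labeled by whether a flare begins within the next few days. The sketch assumes three inputs: daily step counts, a daily pain score, and clinician-confirmed flare start days. The window lengths and feature choices are hypothetical. Keeping the look-back and outcome windows separate prevents label leakage.

```python
from statistics import mean


def make_windows(steps, pain, flare_days, lookback=7, horizon=3):
    """Turn daily series into (day, features, label) examples for flare forecasting.

    steps, pain: equal-length daily lists (index = study day).
    flare_days: set of day indices on which a confirmed flare began.
    Features use only days [t - lookback, t); the label is 1 if a flare
    starts in [t, t + horizon), so features never overlap the outcome window.
    """
    examples = []
    half = lookback // 2
    for t in range(lookback, len(steps) - horizon + 1):
        past_steps = steps[t - lookback:t]
        past_pain = pain[t - lookback:t]
        features = {
            "mean_steps": mean(past_steps),
            # Difference between the later and earlier part of the window;
            # a fall in mobility is one hypothetical early flare signal.
            "steps_trend": mean(past_steps[half:]) - mean(past_steps[:half]),
            "mean_pain": mean(past_pain),
            "max_pain": max(past_pain),
        }
        label = int(any(d in flare_days for d in range(t, t + horizon)))
        examples.append((t, features, label))
    return examples
```

The resulting examples can feed any classifier. For evaluation, they should be split by patient and by time rather than at random.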

Importantly, flare prediction extends beyond symptom anticipation: early detection of subclinical activity may prevent irreversible joint damage, reduce corticosteroid dependence, and optimize drug-tapering strategies [125]. Novel architectures such as recurrent neural networks (RNNs), transformers, and temporal convolutional networks are particularly well suited to capturing nonlinear disease trajectories and lagged effects of therapy, offering richer predictive insights than static regression-based models [126]. In addition, integration of multimodal features, such as wearable-derived sleep disruption, fluctuations in heart rate variability (HRV), and smartphone-based speech prosody, has begun to uncover latent signatures of systemic inflammation, pointing to a broader phenome-wide approach to flare detection.

Nonetheless, current studies remain constrained by small sample sizes, limited reproducibility, and the lack of standardized digital biomarkers, thereby restricting the generalizability of predictive models. Moreover, harmonized data standards and explicit attention to equity in digital monitoring have been emphasized as essential safeguards to ensure that the deployment of flare-prediction algorithms does not exacerbate existing disparities in access to care [127]. Equally important is the issue of model drift: flare-prediction algorithms must undergo continuous recalibration as treatment paradigms, patient behaviors, and sensor technologies evolve, necessitating governance frameworks for lifecycle monitoring [128].
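Drift monitoring can begin with something as simple as the population stability index (PSI). The PSI compares the distribution of an input, such as daily step counts, in the current period against the development cohort. This sketch assumes fixed bin edges shared by both samples. The 0.1 and 0.25 alert levels are widely used industry rules of thumb, not clinically validated thresholds, so any recalibration trigger would need local justification.

```python
import math
from bisect import bisect_right


def psi(reference, current, edges, eps=1e-6):
    """Population stability index between two samples of one feature.

    `edges` are sorted interior bin boundaries shared by both samples;
    `eps` floors empty-bin proportions so the logarithm stays finite.
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    ref, cur = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_flag(value, warn=0.1, alert=0.25):
    """Map a PSI value to an action; the cut-offs are conventions, not standards."""
    if value >= alert:
        return "recalibrate"
    if value >= warn:
        return "investigate"
    return "stable"
```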

Parallel efforts have focused on the prediction of therapeutic response to biologic disease-modifying antirheumatic drugs (bDMARDs) and JAK inhibitors, representing a major translational frontier in precision rheumatology [129,130]. Systematic reviews report wide variability in predictive performance, with areas under the receiver operating characteristic curve (AUCs) differing substantially across studies, and highlight marked methodological heterogeneity. Models constructed from routinely available baseline clinical variables using interpretable algorithms, such as penalized regression and gradient boosting, have demonstrated the most consistent external validation [131,132].
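Because these comparisons rest so heavily on reported AUCs, it helps to recall what the statistic measures. The sketch below computes it in its rank (Mann-Whitney) form. The AUC is the probability that a randomly chosen responder receives a higher predicted score than a randomly chosen non-responder, with ties counted as one half. The implementation is quadratic in sample size and intended only for illustration. AUC captures discrimination alone and says nothing about calibration.

```python
def auc(scores, labels):
    """Rank-based AUC: P(score of a random positive > score of a random negative).

    Ties count as one half. O(n_pos * n_neg), so suitable for illustration only.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```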

By contrast, models augmented with multi-omics and imaging data often achieved higher discriminatory performance but faced increased risks of overfitting, scalability challenges, and uncertain cost-effectiveness [133]. Proteomic and transcriptomic signatures—such as IFN-stimulated gene modules in SLE or baseline TNF/IL-6 pathway activity in RA—are showing promise for predicting biologic response [134], yet their translation requires harmonized assays, prospective validation, and reimbursement strategies. Imaging-based predictors, including MRI synovitis scores and Doppler ultrasound vascularity, have also correlated with treatment response but are limited by cost and accessibility [135], raising questions about their role in routine practice.

Embedding such predictive frameworks within large-scale, registry-based adaptive infrastructures, such as the Rheumatology Informatics System for Effectiveness (RISE), offers a promising pathway for iterative refinement, prospective validation, and integration into real-world care [136]. Furthermore, adaptive trial designs are beginning to leverage prediction models for dynamic treatment allocation, accelerating drug evaluation while simultaneously generating validation data for the models themselves [76]. This bidirectional integration between AI tools and trial infrastructures represents a crucial step toward mechanism-aware precision therapeutics.

Nevertheless, unresolved challenges remain regarding regulatory approval, calibration across diverse populations, and robust health-economic evaluation, all of which will ultimately determine the feasibility and sustainability of widespread clinical adoption [137]. The long-term vision is the embedding of AI-driven flare prediction and treatment-response tools within learning health systems, where continuous feedback loops between clinic, registry, and patient-generated data enable real-time precision care.

3.4. Reliability, Safety, and Governance

Reliability and safety remain the defining cornerstones of clinical AI deployment. High discriminatory performance alone offers little value if models are poorly calibrated or become unstable under conditions of data drift [138]. Recent studies have underscored the need for routine evaluation of calibration across multiple dimensions—including calibration-in-the-large, calibration slope, and subgroup-specific calibration—to mitigate the risk of systematic overtreatment or undertreatment, risks that disproportionately affect minority populations [139]. Evidence from medical imaging demonstrates that passive performance monitoring is insufficient to detect covariate or prevalence shifts, reinforcing the necessity of active drift detection strategies and periodic temporal validation [140]. This lesson is particularly salient in rheumatology, where treatment guidelines evolve and registries continue to expand, demanding that data quality audits and recalibration triggers be embedded within deployment pipelines [141].
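Calibration-in-the-large and calibration slope can both be estimated by logistic recalibration of the outcome on the logit of the predicted risk. The sketch below fits both by Newton-Raphson in plain Python. It assumes predicted probabilities strictly between 0 and 1 and data that are not perfectly separable. An intercept below 0 indicates systematic over-prediction. A slope below 1 indicates predictions that are too extreme, a typical sign of overfitting. Applying the same functions within each subgroup yields subgroup-specific calibration.

```python
import math


def logit(p):
    return math.log(p / (1.0 - p))


def expit(z):
    return 1.0 / (1.0 + math.exp(-z))


def calibration_slope(probs, outcomes, iters=50):
    """Fit y ~ a + b * logit(p) by Newton-Raphson; return (a, b). Ideal: b = 1."""
    x = [logit(p) for p in probs]
    a, b = 0.0, 1.0
    for _ in range(iters):
        q = [expit(a + b * xi) for xi in x]
        # Score vector (g) and information matrix (h) of the log-likelihood.
        g_a = sum(y - qi for y, qi in zip(outcomes, q))
        g_b = sum((y - qi) * xi for y, qi, xi in zip(outcomes, q, x))
        w = [qi * (1 - qi) for qi in q]
        h_aa = sum(w)
        h_ab = sum(wi * xi for wi, xi in zip(w, x))
        h_bb = sum(wi * xi * xi for wi, xi in zip(w, x))
        det = h_aa * h_bb - h_ab * h_ab
        # Newton step: (a, b) += H^-1 g, using the closed-form 2x2 inverse.
        a += (h_bb * g_a - h_ab * g_b) / det
        b += (h_aa * g_b - h_ab * g_a) / det
    return a, b


def calibration_in_the_large(probs, outcomes, iters=50):
    """Fit y ~ a + offset(logit(p)); return a. Ideal: a = 0."""
    x = [logit(p) for p in probs]
    a = 0.0
    for _ in range(iters):
        q = [expit(a + xi) for xi in x]
        a += sum(y - qi for y, qi in zip(outcomes, q)) / sum(qi * (1 - qi) for qi in q)
    return a
```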

Equally important is the integration of mechanisms that preserve clinical trust. Research has shown that referral triage models which incorporate uncertainty estimates and implement defer-to-expert thresholds not only enhance clinician confidence but also reduce automation bias [142]. Uncertainty quantification—through Bayesian modeling, conformal prediction, or ensemble variance estimation—is now considered essential for mitigating false reassurance and guiding safe escalation pathways [143]. By explicitly flagging ambiguous cases, AI systems can encourage collaborative decision-making rather than unilateral algorithmic recommendations. This highlights a broader principle: AI in AIRD should be designed to augment rather than replace expert judgment.
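Split conformal prediction offers one concrete way to implement a defer-to-expert rule. The sketch assumes a binary classifier that outputs a probability, plus a held-out calibration set. Each new case receives a prediction set that, under exchangeability, covers the true label with probability of at least 1 - alpha. Cases whose set contains both labels, or neither, are routed to a clinician. The function names and the binary setting are illustrative choices.

```python
import math


def conformal_threshold(cal_probs, cal_labels, alpha=0.1):
    """Split-conformal threshold from a held-out calibration set.

    cal_probs: model probability of the positive class for each calibration case.
    Nonconformity score = 1 - probability assigned to the true class.
    """
    scores = sorted(1 - p if y == 1 else p for p, y in zip(cal_probs, cal_labels))
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))  # finite-sample corrected rank
    if k > n:
        return 1.0  # too few calibration cases: every label is admitted
    return scores[k - 1]


def triage(prob_positive, qhat):
    """Return the conformal prediction set and whether to defer to an expert."""
    candidates = ((1, prob_positive), (0, 1 - prob_positive))
    prediction_set = {label for label, p in candidates if 1 - p <= qhat}
    decision = "automated" if len(prediction_set) == 1 else "defer to expert"
    return prediction_set, decision
```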

Updated reporting frameworks are beginning to institutionalize this ethos. TRIPOD+AI calls for transparent reporting of preprocessing steps, handling of missing data, validation strategies, and clinical utility analyses [22]. Complementing this, PROBAST+AI provides a structured tool for assessing risk of bias and applicability in machine-learning prediction models, addressing critical gaps in peer review and regulatory oversight [21]. The CONSORT-AI and SPIRIT-AI extensions carry these principles into the reporting and protocol design of AI-enabled clinical trials, supporting reproducibility and transparency in prospective studies [144]. Together, these frameworks are shaping a standards-based ecosystem that prioritizes interpretability, fairness, and clinical relevance alongside raw accuracy (Figure 2).

Governance infrastructures are also emerging as critical components of reliable AI deployment. Model cards and datasheets for datasets are increasingly required to document training cohorts, limitations, and intended use cases, while regulatory bodies such as the FDA, EMA, and MHRA are developing adaptive oversight frameworks for continuously learning algorithms (Figure 2) [145,146]. In rheumatology, this governance must also account for ancestry-aware polygenic risk scores (PRS), equity in digital biomarker access, and an evolving therapeutic landscape, issues that amplify the risk of model obsolescence if oversight is not dynamic [147].

Ensuring equity in AI-enabled rheumatology requires deliberate sampling across ancestry, sex, and socioeconomic strata to capture population diversity and avoid algorithmic bias [148,149]. Fairness-auditing pipelines, subgroup calibration metrics, and transparent documentation of data composition are now considered essential safeguards to prevent systemic bias and ensure model generalizability. Moreover, socioeconomic disparities in access to smartphones and wearables introduce a “digital divide” that can distort data inputs and downstream predictions. Addressing these inequities demands inclusive dataset design, equity-weighted model evaluation, and regulatory alignment with initiatives such as the FDA’s Algorithmic Bias Guidance, which encourages demographic reporting and continuous fairness monitoring across product lifecycles [139]. Embedding these safeguards within learning-health-system infrastructures will be vital to guarantee that AI advances translate equitably across all patient populations.
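A basic fairness audit can compare observed with expected events in each subgroup, for example by sex or self-reported ancestry. The sketch below reports observed-to-expected (O:E) ratios and flags subgroups that fall outside a tolerance band. The 0.2 tolerance is an arbitrary illustrative choice, not an accepted fairness standard. Small subgroups would also need confidence intervals before any conclusion is drawn.

```python
from collections import defaultdict


def subgroup_oe_ratios(probs, outcomes, groups):
    """Observed/expected event ratio per subgroup (1.0 = calibrated in the large).

    Assumes every subgroup has a positive sum of predicted probabilities.
    """
    observed, expected = defaultdict(float), defaultdict(float)
    for p, y, g in zip(probs, outcomes, groups):
        observed[g] += y
        expected[g] += p
    return {g: observed[g] / expected[g] for g in expected}


def flag_subgroups(ratios, tolerance=0.2):
    """Return subgroups whose O:E ratio deviates from 1 by more than `tolerance`."""
    # The tolerance is an illustrative margin, not an accepted fairness standard.
    return sorted(g for g, r in ratios.items() if abs(r - 1.0) > tolerance)
```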

Taken together, these methodological advances outline a pragmatic blueprint for implementation. The process begins with rigorous phenotyping of electronic health records and careful curation of registry data, which establish the foundation for reliable model development. From this base, interpretable baseline models should be benchmarked before advancing to more complex multimodal deep learning frameworks, ensuring that predictive performance is not gained at the expense of transparency. At the deployment stage, systematic incorporation of calibration metrics, decision-curve analysis, and drift-monitoring mechanisms becomes essential to maintain reliability over time and across settings. Equally important is the design of clinician-in-the-loop interfaces that safeguard trust and promote safety, transparency, and equity, so that AI systems enhance rather than disrupt existing care pathways.
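Decision-curve analysis rests on net benefit. Net benefit weighs true positives against false positives at a chosen risk threshold p_t: net benefit = TP/n - (FP/n) * p_t / (1 - p_t). The sketch computes it for a model and for the "treat all" strategy. The "treat none" strategy always has a net benefit of 0. Plotting these values across a range of clinically plausible thresholds produces the decision curve. The function names are illustrative.

```python
def net_benefit(probs, outcomes, threshold):
    """Net benefit of treating patients whose predicted risk is >= threshold."""
    if not 0 < threshold < 1:
        raise ValueError("threshold must lie strictly between 0 and 1")
    n = len(outcomes)
    tp = sum(1 for p, y in zip(probs, outcomes) if p >= threshold and y == 1)
    fp = sum(1 for p, y in zip(probs, outcomes) if p >= threshold and y == 0)
    # Each false positive is weighted by the odds of the threshold probability.
    return tp / n - fp / n * threshold / (1 - threshold)


def treat_all_net_benefit(outcomes, threshold):
    """Net benefit of treating everyone; 'treat none' is 0 at every threshold."""
    prevalence = sum(outcomes) / len(outcomes)
    return prevalence - (1 - prevalence) * threshold / (1 - threshold)
```

A model is clinically useful at a given threshold only if its net benefit exceeds both reference strategies there.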

The trajectory of the field has already moved beyond proof-of-concept demonstrations toward registry-scale feasibility. Yet the next decisive frontier lies in prospective validation, the adoption of causal framing to strengthen inference, and the seamless integration of these models into decision support systems capable of delivering demonstrable improvements in patient outcomes. Ultimately, governance in AIRD AI must balance innovation with accountability, ensuring that models are safe, equitable, and continuously aligned with evolving standards of care.

4. Redefining Autoimmune Rheumatic Disease Pathways: From Immune Signatures to AI-Enhanced Precision Medicine

4.1. Rheumatoid Arthritis (RA)

Recent randomized controlled trials have demonstrated that abatacept, a CTLA-4–Ig co-stimulation-blocking biologic, can delay the transition from autoantibody positivity with arthralgia (pre-RA) to clinically classifiable RA. The APIPPRA trial provided early evidence of preventive efficacy in seropositive individuals without overt synovitis (Table 1) [150], while the ARIAA trial extended these findings to individuals with subclinical joint inflammation detected by MRI [151]. In both studies, abatacept was associated with reduced progression to overt RA and diminished inflammatory activity, with benefits persisting beyond the treatment period. Collectively, these trials support the concept that targeted immunomodulation during the pre-clinical phase can alter the natural course of disease development.

Table 1.

Disease-Focused Advances in AI, Biomarkers, and Digital Health Across Autoimmune Rheumatic Diseases.

Together, these trials underscore a paradigm shift toward early “disease interception” in RA, whereby immune modulation in at-risk individuals may prevent or substantially delay disease onset. This represents a new frontier in rheumatology, where prevention-oriented strategies could reshape the natural history of disease. Yet critical questions remain regarding the long-term durability of benefit, potential rebound activity following treatment cessation, and the cost-effectiveness of extending biologic therapies into pre-clinical or at-risk populations. Moreover, the risk/benefit calculus of exposing asymptomatic individuals to immunosuppressive agents requires careful evaluation through adaptive, stratified trial designs [152].

Artificial intelligence (AI) and deep learning methods are increasingly applied to ultrasound and MRI for detecting and quantifying synovitis, joint erosion, and joint space narrowing. Recent systematic reviews highlight frameworks aligned with RAMRIS and OMERACT standards, employing architectures such as U-Net, convolutional neural networks, and transformer variants to improve reproducibility compared with human scoring, while also enhancing sensitivity to change [153,154,155,156]. Automated segmentation algorithms now achieve near-human accuracy in delineating synovial hypertrophy and erosions [157], while deep radiomics pipelines are beginning to uncover latent imaging features predictive of future structural progression, even before they are visually appreciable [158].

Innovative sub-pixel quantification methods have also been proposed for detecting minute changes in joint space narrowing (JSN) on radiographs, increasing sensitivity in early disease where structural progression may be subtle [159,160]. Despite progress, many imaging AI studies remain constrained by small, homogeneous datasets, lack of external validation, and inconsistent image acquisition protocols, which collectively limit clinical deployment [161]. Future efforts will require federated learning across multi-center cohorts, harmonization of imaging protocols, and incorporation of calibration metrics to ensure robustness across devices, vendors, and populations.
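The general principle behind sub-pixel measurement can be illustrated on a one-dimensional intensity profile drawn across a joint, where bright cortical bone borders a darker joint space. The sketch locates the strongest falling and rising intensity edges, then refines each with parabolic (three-point) peak interpolation. This is a generic technique, not necessarily the method used in the cited studies. It assumes a single well-defined gap away from the profile ends and a known pixel spacing in millimetres.

```python
def subpixel_peak(values, i):
    """Refine an integer peak index with a parabola through values[i-1..i+1]."""
    left, centre, right = values[i - 1], values[i], values[i + 1]
    denom = left - 2 * centre + right
    if denom == 0:
        return float(i)
    return i + 0.5 * (left - right) / denom


def joint_space_width(profile, pixel_mm):
    """Estimate joint space width (mm) from a 1-D profile crossing the joint.

    The gap is bounded by the strongest falling edge (bone -> gap) and the
    strongest rising edge (gap -> bone), each located at sub-pixel precision.
    """
    # Central-difference gradient; grad[k] corresponds to profile index k + 1.
    grad = [(profile[k + 1] - profile[k - 1]) / 2 for k in range(1, len(profile) - 1)]
    interior = range(1, len(grad) - 1)  # keep neighbours available for interpolation
    fall = min(interior, key=lambda k: grad[k])
    rise = max(interior, key=lambda k: grad[k])
    fall_pos = subpixel_peak([-g for g in grad], fall) + 1
    rise_pos = subpixel_peak(grad, rise) + 1
    return (rise_pos - fall_pos) * pixel_mm
```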

Digital health approaches are increasingly explored as scalable, objective tools for functional monitoring in RA. A study applied single-camera smartphone motion capture to assess repeated fist closures [88]. Extracted kinematic features—including range of motion, time to maximal flexion, and velocity—correlated strongly with disease activity measured by DAS28. Such approaches illustrate the feasibility of remote functional biomarkers for RA, aligning with treat-to-target strategies and expanding the potential for continuous, home-based disease monitoring. In addition, wearable-derived accelerometry, grip strength sensors, and smartphone-based joint stiffness trackers are being integrated into multimodal pipelines, opening opportunities for near real-time flare detection and longitudinal disease activity profiling [162]. Nevertheless, larger multi-center validation and clear regulatory pathways for digital biomarker adoption remain prerequisites for clinical translation.
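The kinematic features named above can be computed from a per-frame flexion-angle series, such as one produced by a pose-estimation model applied to smartphone video. The sketch assumes angles in degrees, with 0 for an open hand, and a known frame rate. The feature definitions are simple illustrative versions, not those of the cited study.

```python
def fist_closure_features(angles_deg, fps):
    """Kinematic features from one fist-closure trial.

    angles_deg: per-frame finger flexion angle in degrees (0 = open hand).
    fps: video frame rate in frames per second.
    """
    if len(angles_deg) < 2:
        raise ValueError("need at least two frames")
    peak_frame = max(range(len(angles_deg)), key=lambda i: angles_deg[i])
    # Frame-to-frame angular velocity in degrees per second.
    velocities = [(b - a) * fps for a, b in zip(angles_deg, angles_deg[1:])]
    return {
        "range_of_motion_deg": max(angles_deg) - min(angles_deg),
        "time_to_max_flexion_s": peak_frame / fps,
        "peak_flexion_velocity_deg_s": max(velocities),
    }
```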

Advances in multi-omics integration and machine learning (ML) have accelerated efforts to predict therapeutic response across biologic DMARDs (bDMARDs) and Janus kinase inhibitors (JAKi) [163,164]. A recent scoping review synthesized nearly ninety studies, the majority in RA, with smaller but growing efforts in spondyloarthritis (SpA) and psoriatic arthritis (PsA). These studies leveraged diverse inputs, including clinical biomarkers, genomic variants, proteomic patterns, and imaging-derived features. Reported performance was heterogeneous, with modest to strong discriminatory ability depending on data type and modeling approach [165].

Models that combined multi-omics data with imaging signatures generally outperformed those based on clinical or single-modality inputs. However, most remain exploratory, constrained by limited external validation and insufficient reproducibility across cohorts [165]. Emerging candidate predictors include interferon- and B-cell-related gene expression modules, autoantibody glycosylation patterns, and proteomic correlates of TNF/IL-6 pathway activity [12]. While these molecular features highlight promising biological axes for precision therapy, translation into practice is hampered by unresolved issues: the absence of harmonized assay platforms, challenges in standardizing bioinformatic pipelines, and the need for real-world cost-effectiveness evaluations to justify implementation at scale.

In parallel, a study in large European RA cohorts identified clinical and serologic predictors of response to b/tsDMARDs. Factors such as baseline disease activity, age, prior biologic exposure, inflammatory markers (CRP, ESR), and comorbidities influenced therapeutic outcomes, offering pragmatic tools for patient stratification [166]. These pragmatic predictors, while less mechanistically granular than omics-driven models, currently represent the most immediately translatable approach, particularly in health systems where resource constraints limit access to advanced biomarker profiling.

4.2. Systemic Lupus Erythematosus (SLE)

4.2.1. IFN Signature & Targeted Therapy

Type I interferon (IFN) signaling is central to SLE pathogenesis and contributes to disease activity, organ involvement, and long-term prognosis. Disease heterogeneity is reflected in variable IFN gene signature (IFNGS) levels, autoantibody profiles, and downstream pathways such as neutrophil extracellular trap (NET) formation (Table 1) [167,168]. For example, a study demonstrated associations between autoantibodies, elevated IFN signatures, NET release, and clinical phenotypes, underscoring IFN signaling as a mechanistic driver of disease expression [169].

Anifrolumab, a monoclonal antibody targeting IFNAR1, was approved for moderate-to-severe SLE following pivotal phase III trials. Recent studies confirm that anifrolumab suppresses the IFN signature, reduces cutaneous activity, and lowers flare frequency [170,171]. A study reported durable IFN signature suppression accompanied by clinical improvement [172]. Post-marketing evidence has further validated these findings, showing real-world effectiveness in reducing corticosteroid dependence and improving patient-reported fatigue scores—an especially relevant outcome given the high burden of fatigue in SLE [173].

Studies emphasized that baseline IFN signature magnitude stratifies response likelihood across IFN-targeting therapies, including anifrolumab and anti-IFNα antibodies [170,174]. Patients with a “high IFN” molecular endotype consistently demonstrate greater probability of response, suggesting that the IFNGS may serve as both a predictive and pharmacodynamic biomarker. Conversely, “low IFN” patients often fail to derive meaningful benefit [175], highlighting the necessity of molecular stratification prior to initiating IFN-targeted therapies.
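Stratifying patients into IFN-high and IFN-low endotypes requires turning gene expression into a single score. The sketch uses the four genes commonly reported for the type I IFN gene signature test in anifrolumab trials (IFI27, IFI44, IFI44L, RSAD2). The scoring rule, a mean log2 fold change versus healthy-control means, and the cut-off are illustrative assumptions, not the validated assay algorithm.

```python
from statistics import mean

# Genes commonly reported for the 4-gene type I IFN signature. The scoring rule
# and cut-off below are illustrative assumptions, not a validated assay.
IFN_GENES = ("IFI27", "IFI44", "IFI44L", "RSAD2")


def ifn_score(sample_log2, healthy_log2_means, genes=IFN_GENES):
    """Mean log2 fold change of IFN-inducible genes relative to healthy controls."""
    missing = [g for g in genes if g not in sample_log2]
    if missing:
        raise ValueError(f"missing genes: {missing}")
    return mean(sample_log2[g] - healthy_log2_means[g] for g in genes)


def ifn_endotype(score, cutoff=1.0):
    """Dichotomize the score; cutoff=1.0 (2-fold on average) is hypothetical."""
    return "IFN-high" if score >= cutoff else "IFN-low"
```

Because the same score can be recomputed on follow-up samples, this kind of construct could serve both as a baseline stratifier and as a pharmacodynamic readout.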

Emerging translational data suggest IFN signatures can serve as monitoring biomarkers. It has been shown that longitudinal changes in SIGLEC-1 expression on monocytes correlated with systemic and cutaneous responses; increased SIGLEC-1 was associated with relapse [176]. Other interferon-inducible proteins, including CXCL10 and ISG15, are also being evaluated as dynamic readouts of pathway activity, with potential to inform early therapeutic switching before clinical relapses are apparent [177].

Not all patients with high IFN signatures respond, and responses vary by organ system. In lupus nephritis, effects on proteinuria reduction are inconsistent [178]. A study demonstrated that subsets of SLE patients may exhibit uncoupled IFN pathway activation, providing one explanation for variable treatment responses [179]. This heterogeneity may reflect differential activation of type I versus type II interferon pathways, crosstalk with BAFF/TNF signaling, or organ-specific immune microenvironments that alter drug penetration and pathway dependence. Such findings point to a potential need for combination therapies, pairing IFN blockade with agents targeting B-cell activation, complement, or JAK/STAT signaling, to achieve durable remission across diverse SLE manifestations.

Long-term outcome studies are needed to determine whether sustained IFN signature suppression reduces irreversible organ damage. Key unanswered questions include whether IFN suppression modifies cardiovascular risk, mitigates neuropsychiatric SLE progression, or prevents accrual of organ damage over decades of disease. Prospective registries and adaptive platform trials will be essential to establish the durability, safety, and health-economic impact of IFN-targeted therapies in SLE.

4.2.2. Digital Measures & Flare Prediction

The OASIS study applied biosensors and patient-reported outcomes (PROs) to longitudinally track ~550 SLE patients, including 144 smartwatch users [180]. The study showed that integrating biometric data, quality-of-life metrics, and PROs into ML classifiers achieved strong flare discrimination [180]. These results demonstrate the feasibility of integrating passive physiological data streams—such as heart rate variability, step counts, and sleep duration—with subjective symptom reports, thereby creating multimodal signatures of flare risk that extend beyond clinic-based assessments.

In another investigation, the FLAME pipeline (FLAre Machine learning prediction of SLE) was developed, demonstrating that multivariable EHR data could predict flares with fair accuracy, providing proof-of-concept for real-world data integration [181]. Importantly, FLAME leveraged routinely available structured fields (labs, medications, visit patterns) to build scalable models, suggesting that pragmatic flare prediction tools can be embedded within existing health record infrastructures without requiring additional patient burden.

In lupus nephritis, a deep learning model incorporating 59 demographic, clinical, and pathological features achieved strong discrimination for predicting renal flares in time-series datasets [182]. This approach highlights the potential of multimodal fusion—linking histopathology with longitudinal clinical variables—to anticipate nephritic flares before overt proteinuria or renal dysfunction emerges, a capability that could transform monitoring strategies and guide earlier therapeutic escalation.

Another study identified patient-prioritized digital concepts of interest (COIs)—walking ability, sustained activity, rest, and sleep—and mapped them to measurable digital clinical measures (DCMs) such as daily steps and sleep efficiency [183]. Patients favored wrist-worn devices, highlighting acceptability. The prioritization of mobility and fatigue-related metrics underscores that patient-valued outcomes may differ from physician-centric disease activity indices, emphasizing the importance of patient-centered design in digital biomarker development.

A separate study used baseline plasma proteomics (data-independent acquisition) plus clinical data to predict flares over one year in an Asian cohort (AUC ≈ 0.77). Candidate biomarkers included SAA1, B4GALT5, GIT2, NAA15, and RPIA [42]. These findings illustrate the promise of integrating circulating proteomic signatures with digital and clinical data streams, potentially enabling hybrid molecular-digital biomarkers for robust flare prediction.

Current digital models are often limited by small sample sizes, reliance on internal cross-validation, and inconsistent flare definitions. External validation and regulatory qualification of DCMs remain pressing needs. Standardizing flare definitions across studies, establishing interoperability between devices, and embedding validation cohorts across ancestries and healthcare systems will be essential to achieve regulatory recognition. Ultimately, the goal is to transition digital measures from exploratory research endpoints into qualified biomarkers that can support trial enrichment, adaptive dosing, and real-world disease monitoring in SLE.

4.3. Systemic Sclerosis (SSc)

A pilot study using ResNet-34 + YOLOv3 achieved sensitivity and specificity of ~89% for distinguishing pathological vs. normal nailfold images, with ~96.5% precision for capillary density counts in systemic sclerosis (SSc) cohorts [184]. AI applications in nailfold capillaroscopy (NFC) have highlighted the utility of supervised deep learning for detecting SSc-specific abnormalities such as giant capillaries, hemorrhages, and capillary dropout. However, longitudinal validation linking microvascular metrics to clinical outcomes (e.g., digital ulcers, pulmonary hypertension) remains scarce (Table 1) [97,185].

In one study, CAPI-Detect was developed as a machine learning model trained on >1500 NFC images using 24 quantitative features. It achieved ~91% accuracy in distinguishing scleroderma-specific from nonspecific patterns and improved classification across early, active, and late SSc microvascular stages [98]. Another investigation reported that an EfficientNet-B0 cascade model, applied to NFC images from 225 patients, achieved ROC-AUC values near 1.0, with substantial gains over conventional single-transfer learning methods [97]. A separate group released a large, annotated NFC dataset (321 images, 219 videos), enabling training for both morphological and dynamic feature extraction. Their pipeline reached ~89.9% accuracy for abnormal morphology detection with sub-pixel measurement precision [185].

These advances position AI-assisted NFC as one of the most mature digital applications in SSc, offering potential for automated early diagnosis, microvascular staging, and longitudinal monitoring. Importantly, AI-driven quantification reduces inter-observer variability—a longstanding challenge in manual NFC interpretation—and provides scalable, reproducible readouts that may standardize clinical practice. Moreover, the ability to detect subtle microvascular alterations could enable earlier identification of patients at risk for vasculopathic complications, including digital ulcers and pulmonary arterial hypertension, both of which are major drivers of morbidity and mortality in SSc.

Despite progress, challenges remain. AI-assisted NFC is approaching clinical readiness, yet lack of multicenter datasets, absence of prospective prognostic validation, and variability in acquisition protocols limit immediate deployment. Most current studies are retrospective and rely on relatively small, single-center image repositories, raising concerns about generalizability across devices, geographic populations, and disease subtypes. Furthermore, few investigations have explicitly linked AI-derived NFC features to downstream organ involvement, therapeutic response, or long-term survival, which are essential for establishing clinical and regulatory value.

4.4. Spondyloarthritis/Psoriatic Arthritis (SpA/PsA)

Predictive modeling in spondyloarthritis (SpA) remains heterogeneous but is steadily advancing [186]. A scoping review encompassing 89 AI studies across inflammatory arthritis identified only 11 studies in SpA/PsA, with reported accuracies of approximately 60–70% and AUCs of 0.63–0.92. Multi-omics and imaging-augmented models generally outperformed clinical-only baselines, but methodological heterogeneity and limited external validation constrained generalizability [165]. This reflects a broader challenge in SpA research: unlike rheumatoid arthritis, where serologic biomarkers (e.g., ACPA, RF) provide mechanistic anchors, SpA and PsA lack universally validated molecular markers, necessitating greater reliance on composite clinical, imaging, and lifestyle predictors (Table 1).

Within axial SpA, a multiregistry EuroSpA cohort study analyzing secukinumab-treated patients found that clinical characteristics, patient-reported outcomes (PROs), and lifestyle factors predicted achievement of low disease activity (ASDAS-CRP, BASDAI) at 6 months and treatment persistence at 12 months [187]. This underscores the value of real-world registry data for prognostication. Interestingly, registry-based models highlight that baseline PROs—such as patient global assessment and fatigue—can be as predictive of drug persistence as traditional biomarkers, suggesting that patient-centered data streams may be critical for individualized treatment planning.
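Both outcome instruments named above are closed-form composite scores, so a prediction target such as "low disease activity" is ultimately a threshold on a formula. The sketch below uses the published ASDAS-CRP coefficients and the conventional ASDAS disease-activity cut-offs; the exact outcome definitions and thresholds used in [187] may differ, and the function names are illustrative.

```python
import math


def asdas_crp(back_pain, morning_stiffness_duration, patient_global,
              peripheral_pain_swelling, crp_mg_l):
    """ASDAS-CRP: patient-reported items on 0-10 scales, CRP in mg/L."""
    return (0.121 * back_pain
            + 0.058 * morning_stiffness_duration
            + 0.110 * patient_global
            + 0.073 * peripheral_pain_swelling
            + 0.579 * math.log(crp_mg_l + 1))


def asdas_state(score):
    """Conventional ASDAS disease-activity states."""
    if score < 1.3:
        return "inactive"
    if score < 2.1:
        return "low"
    if score <= 3.5:
        return "high"
    return "very high"


def basdai(q1, q2, q3, q4, q5, q6):
    """BASDAI from six 0-10 items; the two morning-stiffness items (Q5, Q6) are averaged first."""
    return (q1 + q2 + q3 + q4 + (q5 + q6) / 2) / 5
```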

In parallel, the ROC-SpA randomized protocol will test whether pharmacokinetic (PK) parameters (drug levels, exposure) predict clinical response at 24 weeks following anti-TNF failure, representing a shift toward therapeutic drug monitoring in SpA [188]. If validated, PK-informed personalization could establish a new treatment paradigm, aligning drug exposure with disease endotypes rather than applying uniform dosing strategies. Such approaches may also help rationalize costs by avoiding unnecessary biologic cycling in non-responders.
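The notion of "exposure" can be made concrete with the textbook one-compartment model. The sketch below is a simplification that assumes repeated intravenous bolus dosing with first-order elimination; subcutaneous biologics also involve absorption kinetics and target-mediated clearance, the parameter values are hypothetical, and none of this is taken from the ROC-SpA protocol.

```python
import math


def steady_state_trough(dose_mg, volume_l, half_life_days, interval_days):
    """Steady-state trough concentration (mg/L) for repeated IV bolus dosing
    in a one-compartment model with first-order elimination."""
    k = math.log(2) / half_life_days        # elimination rate constant (1/day)
    decay = math.exp(-k * interval_days)    # fraction remaining after one dosing interval
    return (dose_mg / volume_l) * decay / (1 - decay)


def steady_state_exposure(dose_mg, volume_l, half_life_days):
    """AUC over one dosing interval at steady state = dose / clearance (mg*day/L)."""
    k = math.log(2) / half_life_days
    clearance = k * volume_l                # L/day
    return dose_mg / clearance
```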

For psoriatic arthritis (PsA), imaging biomarkers are emerging as key translational tools. A recent prospective pilot study showed that short-interval ultrasound changes in inflammation (MIJET/2MIJET/GUIS scores) at 1–3 months predicted 6-month drug retention, with faster responses observed in JAK inhibitor-treated patients compared with TNF, IL-17, or IL-12/23 inhibitor therapy [189]. This suggests that dynamic imaging readouts may serve as early surrogate markers of therapeutic persistence, accelerating adaptive decision-making in PsA. Larger PsA cohorts confirm that baseline disease activity, prior biologic exposure, and comorbidity burden influence therapeutic response, but validated molecular predictors remain scarce [190,191]. Emerging multi-omics studies have identified candidate pathways—including IL-23/Th17 signaling, keratinocyte-derived cytokines, and metabolic dysregulation—that may differentiate responders from non-responders [192], but these remain exploratory pending replication in diverse cohorts.
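Drug retention outcomes such as those in [187] and [189] are conventionally summarized with the Kaplan-Meier estimator, which treats patients still on therapy at their last visit as censored rather than as failures. A minimal pure-Python sketch, intended for hypothetical follow-up data, follows.

```python
def kaplan_meier(times, events):
    """Kaplan-Meier estimate of drug retention.

    times: months of follow-up; events: 1 = drug discontinued, 0 = censored
    (still on drug at last visit). Returns [(time, retention probability)]
    at each time where at least one discontinuation occurred.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    retention = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        stopped = censored = 0
        while i < len(data) and data[i][0] == t:  # group tied times
            if data[i][1]:
                stopped += 1
            else:
                censored += 1
            i += 1
        if stopped:
            retention *= 1 - stopped / at_risk
            curve.append((t, retention))
        at_risk -= stopped + censored  # censored patients leave the risk set after t
    return curve


def retention_at(curve, t):
    """Retention probability at time t from the Kaplan-Meier step function."""
    value = 1.0
    for time, surv in curve:
        if time <= t:
            value = surv
    return value
```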

Key gaps remain: modest sample sizes, variable imaging protocols, and inconsistent composite outcomes across studies limit generalizability. Moreover, heterogeneity in disease domains (peripheral arthritis, axial involvement, enthesitis, dactylitis, and skin disease) complicates biomarker validation, since predictors may differ by dominant phenotype. This necessitates domain-specific models or modular prediction frameworks that can adapt to different PsA presentations. Large, multicenter studies integrating PK data with multimodal predictors (clinical, imaging, genomic, and lifestyle) are required to establish clinically deployable prediction tools. Ultimately, the integration of SpA/PsA predictive models into learning health systems and adaptive trial designs will be essential to move from exploratory research toward precision, mechanism-guided care.

4.5. Other Conditions

4.5.1. Sjögren’s Disease (SjD)

Salivary gland ultrasound (SGUS) is increasingly validated for diagnosis and risk stratification. A study comparing OMERACT vs. Hočevar scoring demonstrated that parotid ultrasound features correlated with lymphoma risk in SjD [193]. A new multicenter study confirmed that SGUS correlates with secretory function, systemic disease activity, and lymphoma risk factors, supporting its broader clinical use [194]. Importantly, updated guidelines caution against routine repeat SGUS in asymptomatic patients, highlighting the need to avoid over-screening [195].

On the biomarker front, salivary proteomics is rapidly evolving. A review emphasized proteomic pipelines for identifying candidate diagnostic and prognostic markers [196], while an integrative study combining saliva, plasma, and gland tissue proteomics identified novel biomarker candidates for SjD classification [197]. Emerging evidence also suggests that salivary exosomal microRNAs and proteoforms may offer superior sensitivity for early-stage disease compared with conventional serologic markers such as anti-Ro/SSA and anti-La/SSB (Table 1) [198]. Integration of SGUS and proteomics into multimodal diagnostic algorithms could therefore accelerate detection, stratify lymphoma risk, and refine patient selection for clinical trials.
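One simple way to realize such a multimodal algorithm is late fusion, in which separately trained SGUS and proteomic classifiers each output a probability and the two outputs are combined on the log-odds scale. The weights below are hypothetical placeholders; in practice they would be estimated on held-out data (for example with a logistic meta-model, i.e., stacking), and this scheme is not a method reported in the cited studies.

```python
import math


def _logit(p, eps=1e-6):
    p = min(max(p, eps), 1 - eps)  # clip to avoid infinite log-odds
    return math.log(p / (1 - p))


def fuse_probabilities(p_sgus, p_proteomic, w_sgus=0.5, w_proteomic=0.5, bias=0.0):
    """Late fusion of two classifier outputs on the log-odds scale (hypothetical weights)."""
    z = bias + w_sgus * _logit(p_sgus) + w_proteomic * _logit(p_proteomic)
    return 1 / (1 + math.exp(-z))
```

With equal weights of 0.5 the fused output stays between the two inputs; weights summing to more than 1 let concordant evidence from both modalities reinforce each other.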

Standardized SGUS and proteomic panels represent complementary tools for early diagnosis and lymphoma risk stratification, but longitudinal validation linking imaging/omics outputs to clinical outcomes remains essential. Future directions include embedding SGUS-proteomic fusion models within registry-based cohorts and testing their capacity to predict long-term systemic involvement, malignancy risk, and response to B-cell-targeted therapies.

4.5.2. Idiopathic Inflammatory Myopathies (IIM)

Stratification of IIM is increasingly driven by myositis-specific autoantibodies (MSAs), imaging, and ML approaches. Reviews emphasize how MSAs have redefined subtype classification and prognosis [199], while methodological papers detail ML opportunities for biomarker discovery and patient clustering [200]. For instance, anti-MDA5 positivity is strongly associated with rapidly progressive interstitial lung disease (ILD), while anti-TIF1-γ predicts malignancy risk, underscoring the prognostic utility of serologic stratification [201].

Applications of ML to muscle MRI have demonstrated feasibility in predicting antibody-defined subgroups and disease clusters via radiomics and texture features [202]. Similarly, multi-omics pipelines are being tested to improve stratification, though most remain single-center, retrospective, and exploratory. Deep learning applied to T2-weighted and STIR MRI sequences has revealed latent imaging phenotypes that correspond to distinct histopathological patterns, suggesting potential for early detection of subclinical muscle inflammation [203]. Moreover, integrative ML models combining autoantibody profiles, transcriptomics, and MRI data are beginning to uncover mechanistic endotypes that may guide immunosuppressive therapy selection (Table 1) [204,205].
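Texture features of the kind referenced in [202] are often derived from a gray-level co-occurrence matrix (GLCM), which counts how often pairs of intensity levels occur at a fixed pixel offset. The sketch below computes a symmetric, normalized GLCM for one offset on an already-quantized image, plus three classic Haralick-style features; the quantization, offsets, and feature set used in the cited work may differ, and dedicated packages such as PyRadiomics implement standardized versions.

```python
def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalised gray-level co-occurrence matrix for one pixel offset.

    image: 2-D list of integer gray levels already quantised to range(levels).
    """
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                a, b = image[r][c], image[r2][c2]
                counts[a][b] += 1
                counts[b][a] += 1  # count the pair in both directions (symmetric GLCM)
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("offset leaves no pixel pairs inside the image")
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Contrast, homogeneity (inverse difference moment), and angular second moment."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "angular_second_moment": sum(v * v for _, _, v in cells),
    }
```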

Despite proof-of-concept success, prospective multicenter validation linking ML-based stratification to treatment response and clinical outcomes (e.g., ILD progression, steroid-sparing effect) is urgently needed [206]. Next steps will require harmonization of MRI acquisition protocols, integration of patient-reported outcomes, and trial-based testing of whether biomarker-guided stratification can optimize therapeutic decisions in IIM.

4.5.3. Vasculitides

In large-vessel vasculitis (LVV), [¹⁸F]FDG-PET/CT is increasingly central to diagnosis and monitoring. Studies show PET/CT can confirm vascular involvement when biopsies are negative, and emerging radiomics/ML models distinguish active giant cell arteritis (GCA) from atherosclerosis, potentially reducing diagnostic uncertainty [207]. Hybrid imaging approaches, including PET/MRI, are further expanding the toolkit, enabling simultaneous metabolic and anatomical assessment of vessel inflammation [208]. These modalities may serve not only as diagnostic adjuncts but also as surrogate endpoints for treatment response in clinical trials.

In ANCA-associated vasculitis (AAV), renal transcriptomic signatures are advancing risk prediction. A study developed a 12-gene renal signature that outperformed clinicopathologic scores in predicting kidney failure [209]. More recent data suggest that stronger type I IFN signatures predict worse renal outcomes and distinct clinical phenotypes, underscoring immune-pathway-guided precision medicine [210]. Integration of kidney biopsy transcriptomics with digital pathology and single-cell sequencing is beginning to delineate cellular drivers of renal injury, potentially guiding therapeutic targeting of pathogenic myeloid and interferon-driven networks (Table 1).
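Interferon signature scores are frequently computed by standardizing each interferon-stimulated gene against a reference group and averaging the resulting z-scores. The sketch below follows that generic approach with hypothetical expression values; it is not the 12-gene signature of [209] or the specific scoring used in [210], and the gene symbols are merely examples of commonly used interferon-stimulated genes.

```python
from statistics import mean, stdev


def signature_score(sample, reference, genes):
    """Mean z-score of signature genes in one sample relative to a reference group.

    sample: {gene: expression}; reference: list of {gene: expression}
    (e.g., healthy controls); expression assumed already log-transformed.
    """
    z_scores = []
    for gene in genes:
        ref_values = [r[gene] for r in reference]
        mu, sd = mean(ref_values), stdev(ref_values)  # needs at least two reference samples
        z_scores.append((sample[gene] - mu) / sd)
    return mean(z_scores)


# Hypothetical reference cohort and patient
reference = [
    {"IFI27": 1.0, "ISG15": 2.0},
    {"IFI27": 2.0, "ISG15": 4.0},
    {"IFI27": 3.0, "ISG15": 6.0},
]
patient = {"IFI27": 4.0, "ISG15": 6.0}
score = signature_score(patient, reference, ["IFI27", "ISG15"])
```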

A recent review synthesized AI applications across vasculitides, highlighting progress in diagnostic imaging, biomarker discovery, and outcome prediction, while stressing the need for larger, prospective harmonized datasets [211]. Moving forward, federated AI pipelines across vasculitis consortia and international biobanks will be essential to achieve sufficient statistical power and ensure equitable performance across ancestries.
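The core aggregation step of federated averaging (FedAvg) is short: each site trains locally and shares only model parameters, which a coordinator averages with weights proportional to local sample size. A minimal sketch of that step follows; the parameter vectors and site sizes are hypothetical, and production frameworks add secure aggregation, multiple local epochs, and handling of non-identically distributed data.

```python
def federated_average(site_updates):
    """FedAvg aggregation: sample-size-weighted mean of site parameter vectors.

    site_updates: list of (n_samples, parameters) tuples; only these summaries
    leave each site, never the underlying patient records.
    """
    total = sum(n for n, _ in site_updates)
    dim = len(site_updates[0][1])
    return [sum(n * params[i] for n, params in site_updates) / total for i in range(dim)]
```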

PET-based radiomics and renal transcriptomics exemplify organ-specific precision tools; the next step is embedding these predictors into decision-impact trials to guide therapy. Ultimately, the goal is to transform these tools from retrospective predictors into real-time decision-support instruments capable of improving patient survival, organ preservation, and quality of life.

5. Artificial Intelligence in Rheumatology: From Triage to Therapy Selection

5.1. AI-Enhanced Triage and Access

Among rheumatology applications, text-based triage is the furthest along the translation curve because it addresses a clear bottleneck—waiting times—without displacing diagnostic authority. A recent multicenter study processed 8044 GP referral letters (5728 patients) from 12 clinics, training models in two centers and testing in the remaining ten. This external-site validation design reduces the risk of “center overfitting” and provides evidence of genuine generalizability across healthcare systems [212]. The system prioritized likely RA, OA, fibromyalgia, and long-term care needs, showing that machine learning can augment queue management and equity of access.
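The external-site design can be illustrated with a deliberately simple text classifier. In the sketch below, whole centers are assigned to either development or testing, so no letter from a test center influences training. Multinomial naive Bayes is a stand-in chosen for brevity and is not the model used in [212]; the class and function names, referral snippets, and labels are hypothetical.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    return text.lower().split()


class NaiveBayesTriage:
    """Multinomial naive Bayes with Laplace smoothing (illustrative stand-in)."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.totals = {c: sum(wc.values()) for c, wc in self.word_counts.items()}
        return self

    def predict(self, text):
        n, v = sum(self.class_counts.values()), len(self.vocab)
        best, best_score = None, -math.inf
        for c in self.class_counts:
            score = math.log(self.class_counts[c] / n)  # class prior
            for w in tokenize(text):
                if w in self.vocab:  # ignore words never seen in training
                    score += math.log((self.word_counts[c][w] + 1) / (self.totals[c] + v))
            if score > best_score:
                best, best_score = c, score
        return best


def split_by_center(records, train_centers):
    """External-site split: every record from a center goes to the same side."""
    train = [r for r in records if r["center"] in train_centers]
    test = [r for r in records if r["center"] not in train_centers]
    return train, test


letters = [
    {"center": "A", "text": "morning stiffness swollen hands", "label": "RA"},
    {"center": "A", "text": "knee pain crepitus older", "label": "OA"},
    {"center": "B", "text": "widespread pain fatigue sleep", "label": "FM"},
    {"center": "B", "text": "swollen hands symmetric synovitis", "label": "RA"},
    {"center": "C", "text": "swollen hands morning stiffness", "label": "RA"},
    {"center": "C", "text": "widespread pain poor sleep", "label": "FM"},
]
train, test = split_by_center(letters, train_centers={"A", "B"})
model = NaiveBayesTriage().fit([r["text"] for r in train], [r["label"] for r in train])
accuracy = sum(model.predict(r["text"]) == r["label"] for r in test) / len(test)
```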

The translational value here lies in optimizing time-to-assessment rather than automating diagnosis. Deployment, however, depends on safeguards such as calibration monitoring (slopes and intercepts), deferral rules for low-confidence predictions, and post-deployment drift audits to detect changes in case-mix or letter style. Comparable patient-facing systems, such as RhePort (digital rheumatology patient intake and referral platform), demonstrate that combining structured digital intake with NLP triage could further streamline referral pathways [212,213]. Such hybrid platforms not only shorten diagnostic delays but may also reduce inequities in access, particularly for patients in underserved regions, by providing consistent triage independent of referral letter quality (Table 2).
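Calibration slope and intercept can be estimated by logistic recalibration: the model's log-odds are used either as the single predictor (the slope, ideally 1) or as a fixed offset (the calibration-in-the-large intercept, ideally 0). The sketch below fits both by Newton-Raphson and adds a simple margin-based deferral rule. The `margin` value and the urgent/routine labels are hypothetical, and predicted probabilities are assumed to lie strictly between 0 and 1.

```python
import math


def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def calibration_slope_intercept(probs, outcomes, iters=50):
    """Return (slope, intercept) from logistic recalibration of predicted risks.

    slope:     b in logit P(y=1) = a + b * logit(p)   (ideal value 1)
    intercept: c in logit P(y=1) = c + logit(p)       (slope fixed at 1; ideal value 0)
    """
    lp = [math.log(p / (1 - p)) for p in probs]  # model log-odds
    a, b = 0.0, 1.0
    for _ in range(iters):  # two-parameter Newton-Raphson
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(lp, outcomes):
            mu = _sigmoid(a + b * x)
            w = mu * (1 - mu)
            g0 += y - mu
            g1 += (y - mu) * x
            h00 += w
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        a += (h11 * g0 - h01 * g1) / det
        b += (h00 * g1 - h01 * g0) / det
    c = 0.0
    for _ in range(iters):  # one-parameter Newton-Raphson with logit(p) as offset
        g = h = 0.0
        for x, y in zip(lp, outcomes):
            mu = _sigmoid(c + x)
            g += y - mu
            h += mu * (1 - mu)
        c += g / h
    return b, c


def triage_or_defer(prob, threshold=0.5, margin=0.15):
    """Hypothetical deferral rule: predictions close to the threshold go to a clinician."""
    if abs(prob - threshold) < margin:
        return "defer"
    return "urgent" if prob >= threshold else "routine"
```

A slope below 1 indicates over-confident predictions (risks too extreme), which is a typical signal that a deployed model needs recalibration for a new site or case mix.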

Table 2.

AI in Rheumatology—From Diagnostics to Decisions.

These findings suggest that triage augmentation, supported by transparent reporting frameworks such as TRIPOD+AI and PROBAST+AI, is the most immediate clinical application of AI in rheumatology [22].

5.2. Imaging Decision Support

Imaging AI in rheumatology is converging on a reader-assist model, standardizing quantification rather than replacing interpretation. In RA, deep learning pipelines now achieve volumetric quantification of synovitis and erosions on contrast-enhanced MRI, correlating strongly with RAMRIS scores and matching expert reproducibility [83,112]. Similarly, the ARTHUR v2.0 ultrasound platform integrates segmentation and activity grading aligned with OMERACT/EULAR definitions, supporting consistent scoring across sites [215].
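Correlation alone can mask systematic offsets between automated and expert scores, so agreement is often reported with Lin's concordance correlation coefficient (CCC), which penalizes both scatter and bias. A minimal sketch follows for hypothetical paired scores; it is not necessarily the statistic used in [83,112].

```python
from statistics import mean


def lin_ccc(x, y):
    """Lin's concordance correlation coefficient between paired scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    n = len(x)
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x) / n
    syy = sum((b - my) ** 2 for b in y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    # the squared mean difference term penalises systematic bias
    return 2 * sxy / (sxx + syy + (mx - my) ** 2)
```

For example, automated scores that are uniformly one point higher than expert scores keep a Pearson correlation of 1 but yield a CCC below 1.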

Systemic sclerosis provides an instructive parallel. An earlier study demonstrated that convolutional neural networks could fully automate nailfold capillaroscopy (NFC) interpretation [98]. More recently, the multicenter CAPI-Detect initiative refined ML-based scoring of capillary density, hemorrhages, and the scleroderma pattern, reporting reproducible accuracy across independent datasets [98,216]. Complementary work further validated automated microvascular abnormality detection, underscoring the feasibility of AI-assisted NFC for SSc [185].

The clinical takeaway is that imaging AI is most advanced in standardized scoring, workload reduction, and trial reproducibility, not in stand-alone diagnosis. Prospective workflow-embedded studies remain the key translational step before these systems can enter routine care [217]. In parallel, explainability tools such as saliency maps and uncertainty quantification are increasingly incorporated into imaging AI pipelines to address clinician trust and mitigate automation bias [218,219]. Furthermore, the incorporation of imaging AI as surrogate endpoints in drug trials may accelerate therapeutic evaluation by providing objective, reproducible readouts of disease activity.
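A common way to attach uncertainty to a prediction is to query several independently trained models (a deep ensemble) or repeated stochastic forward passes (Monte Carlo dropout) and inspect their disagreement. The sketch below summarizes such member probabilities and flags cases for human review; the `max_spread` threshold is a hypothetical setting, and [218,219] may use other uncertainty measures.

```python
import math
from statistics import mean, pstdev


def ensemble_uncertainty(member_probs):
    """Summarise predictions from ensemble members (or MC-dropout passes).

    Returns (mean probability, spread across members, binary predictive entropy in nats).
    """
    p = mean(member_probs)
    spread = pstdev(member_probs)
    entropy = 0.0
    for q in (p, 1 - p):
        if q > 0:
            entropy -= q * math.log(q)
    return p, spread, entropy


def needs_review(member_probs, max_spread=0.1):
    """Hypothetical rule: route to a human reader when ensemble members disagree."""
    return ensemble_uncertainty(member_probs)[1] > max_spread
```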

5.3. Predictive Tools for Therapy Selection

The prediction of biologic or targeted synthetic DMARD response represents a more ambitious but less mature AI application. A systematic review of 89 AI studies across inflammatory arthritis reported AUCs ranging from 0.63 to 0.92, with multi-omics and imaging features consistently improving discrimination over clinical baselines. Yet, methodological heterogeneity and limited external validation restrict current clinical use [132,165].
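The AUC values quoted throughout this section have a direct probabilistic reading: the chance that a randomly chosen responder receives a higher predicted score than a randomly chosen non-responder, with ties counted as one half. The sketch below computes it exactly from that definition (the Mann-Whitney formulation), which is adequate for small validation sets.

```python
def roc_auc(scores, labels):
    """AUC = P(score of a random responder > score of a random non-responder); ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one responder and one non-responder")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```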

Encouragingly, registry-based models using only baseline clinical features and gradient boosting have achieved clinically plausible predictive accuracy for 6- and 12-month bDMARD outcomes, illustrating the value of transparent, implementable baselines [220]. At the mechanistic layer, whole-blood RNA-seq studies are beginning to identify molecular predictors of JAK inhibitor response, though these remain at the discovery stage [221]. Early findings suggest that interferon-driven transcriptional modules and metabolic pathway activity may stratify JAK inhibitor responders, but harmonization of RNA-seq assays and prospective biomarker-guided trials will be needed before translation [222].
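To make the "transparent baseline" idea concrete, the sketch below implements a minimal gradient-boosting classifier from decision stumps: each round fits a one-split tree to the negative gradient of the log-loss (observed outcome minus current predicted probability) and adds a shrunken copy to the model. It is a simplified, hypothetical illustration; [220] does not necessarily use this variant, and libraries such as XGBoost or LightGBM use second-order leaf values, deeper trees, and regularization. Feature rows would hold baseline registry variables, which are assumed here.

```python
import math


def _best_stump(X, residuals):
    """Single-feature threshold split minimising squared error on the residuals.

    Returns (feature index, threshold, left value, right value) or None if no split exists.
    """
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[j] <= t]
            right = [r for row, r in zip(X, residuals) if row[j] > t]
            if not left or not right:
                continue
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, j, t, lm, rm)
    return None if best is None else best[1:]


def fit_boosting(X, y, n_rounds=50, learning_rate=0.1):
    """Gradient boosting for binary outcomes (log-loss) with decision stumps."""
    prevalence = sum(y) / len(y)
    if prevalence in (0, 1):
        raise ValueError("both outcome classes are required")
    f0 = math.log(prevalence / (1 - prevalence))  # start from the log-odds of the outcome
    F = [f0] * len(y)
    stumps = []
    for _ in range(n_rounds):
        # negative gradient of the log-loss = observed outcome - predicted probability
        residuals = [yi - 1 / (1 + math.exp(-fi)) for yi, fi in zip(y, F)]
        stump = _best_stump(X, residuals)
        if stump is None:
            break
        j, t, lv, rv = stump
        stumps.append(stump)
        F = [fi + learning_rate * (lv if row[j] <= t else rv) for fi, row in zip(F, X)]
    return f0, learning_rate, stumps


def predict_proba(model, row):
    f0, learning_rate, stumps = model
    f = f0 + sum(learning_rate * (lv if row[j] <= t else rv) for j, t, lv, rv in stumps)
    return 1 / (1 + math.exp(-f))
```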

Adjacent work in RA complications underscores translational potential: ML models predicting RA-associated interstitial lung disease (RA-ILD) using clinical and biomarker features such as KL-6 have shown robust performance, supporting early screening applications [214]. This illustrates that prediction models can also be extended beyond drug response toward complication forecasting, potentially enabling proactive surveillance strategies that pre-empt irreversible organ damage (Table 2).
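For screening applications built on a single biomarker such as KL-6, a decision threshold is often chosen by maximizing Youden's J (sensitivity + specificity − 1). The sketch below performs that search over the observed values. The KL-6 concentrations used with it would be hypothetical; the rule treats values at or above the cut-off as positive, and a threshold selected this way should be validated on independent data rather than reported from the same sample. This is not necessarily how the models in [214] were built.

```python
def youden_cutoff(values, has_ild):
    """Return (cutoff, sensitivity, specificity) maximising Youden's J.

    A patient is test-positive when value >= cutoff; has_ild holds 1/0 labels.
    """
    pos = sum(has_ild)
    neg = len(has_ild) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need patients with and without ILD")
    best = None
    for cutoff in sorted(set(values)):
        tp = sum(1 for v, d in zip(values, has_ild) if d and v >= cutoff)
        tn = sum(1 for v, d in zip(values, has_ild) if not d and v < cutoff)
        sens, spec = tp / pos, tn / neg
        j = sens + spec - 1
        if best is None or j > best[0]:
            best = (j, cutoff, sens, spec)
    return best[1:]
```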