2.1. Game’s Setting

A brief overview of reputation game simulations is in order. Details can be found in Enßlin et al. [1]. The game is played by a set of agents in rounds consisting of binary conversations, as shown in Figure 1. Each conversation is about the believed honesty of one of the agents. After each conversation, the agents involved update their beliefs about the topic of the conversation, the interlocutor, and themselves. In addition, they record some information about what the other agent said, what they seem to believe, or what they want the receiver to believe. Finally, if the conversation was about themselves, they decide whether the other agent should be considered a friend or an enemy.

Friends are other agents who help an agent maintain a high reputation, and thus in the reputation game, friendship is granted to those agents who speak more positively about one than the others do. Enemies, on the other hand, are agents whose statements seem to harm one’s reputation. When agents lie about a friend, they bias their statement positively, so as to benefit from having a more respected supporter. When they lie about an enemy, they bias their statement negatively, so that the enemy’s bad propaganda about them will be less well received by others. For the simulation of larger social groups, a continuous notion of friendship was developed as well [3], but this is not used in the small-group simulation runs discussed here.

Lying carries the risk for most agents of inadvertently signaling that they are lying. Such “blushing” happens to most agents in a fixed fraction of their lies and lets the recipient know with certainty that they have been lied to. Some special agents, such as aggressive and destructive ones, can lie without blushing. However, currently all agents assume the ordinary blushing frequency for any sender when trying to assess the reliability of a received statement.

The game is (usually) initialized with agents that have no specific knowledge about each other or about themselves. The honesty of others, as well as their own, needs to be determined from observations, self-observations, and the clues delivered to them by the communications. The self-reputation, called self-esteem in the following, is an important property of agents. A high self-esteem allows agents to advertise themselves without lying. The game ends after a predetermined number of rounds, and the minds of the agents are then analyzed in order to evaluate how effective the different strategies are in reaching a high reputation.

2.2. Knowledge Representation

Since agents must deal with uncertainties, probabilistic reasoning is the basis of their thinking. The quantities of interest here are the honesty $x_a \in [0, 1]$ of each agent $a$, which form an honesty vector $x = (x_a)_a$. The accumulated knowledge $I_a$ of an agent $a$ about the honesty of all agents is represented by a probability distribution $P(x|I_a)$. This is assumed to follow a certain parametric form,

$$P(x|I_a) = \prod_b \mathrm{Beta}(x_b \,|\, \mu_{ab} + 1, \lambda_{ab} + 1), \qquad (1)$$

where $I_{ab} = (\mu_{ab}, \lambda_{ab})$ denotes the parameters that agent $a$ stores about agent $b$.

The reasoning behind this is as follows. First, to mimic limited cognitive capacity, agents store only probabilistic knowledge states that are products of knowledge about individual agents. As a consequence, they do not store entanglement information and therefore cannot remove once-accepted messages from their belief system, even if they later conclude that the source was deceptive. Second, the parametric form of the beta distribution is well-suited for storing knowledge about the frequency of events. If agents recognized lies and truths with certainty and stored only their numbers, those numbers would be $\lambda_{ab}$ and $\mu_{ab}$, respectively. If agent $a$ learns from an absolutely trustworthy source that agent $b$ has recently spread an additional $\Delta\mu$ truths and $\Delta\lambda$ lies, then the updated belief state is $I'_{ab} = (\mu_{ab} + \Delta\mu, \lambda_{ab} + \Delta\lambda)$.
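The count-based update described above can be sketched in a few lines of Python. This is only an illustration: the class name `BetaBelief`, the field names `mu` and `lam`, and the convention that the belief is a beta distribution with shape parameters `mu + 1` and `lam + 1` (counts of truths and lies on top of a flat prior) are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Belief about one agent's honesty, stored as two pseudo-counts.

    Assumed convention: the belief is Beta(mu + 1, lam + 1), so that
    mu = lam = 0 corresponds to a flat (uninformed) distribution.
    """
    mu: float = 0.0   # (possibly non-integer) number of truths
    lam: float = 0.0  # (possibly non-integer) number of lies

    def mean(self) -> float:
        # Mean of Beta(mu + 1, lam + 1): the expected honesty.
        return (self.mu + 1.0) / (self.mu + self.lam + 2.0)

    def updated(self, d_mu: float, d_lam: float) -> "BetaBelief":
        # Report from a fully trusted source: the counts simply add up.
        return BetaBelief(self.mu + d_mu, self.lam + d_lam)


prior = BetaBelief()                         # no knowledge yet
posterior = prior.updated(d_mu=3, d_lam=1)   # 3 truths and 1 lie reported
print(prior.mean(), posterior.mean())
```

Because the fields are floats, the same structure can hold the non-integer values that arise once messages are only partially trusted.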

The ability to store non-integer values for $\mu_{ab}$ and $\lambda_{ab}$ becomes important once uncertain message trustworthiness must be considered. Ideally, this would allow bimodal distributions, since the knowledge state after receiving a potentially deceptive message $J$ should be a superposition of the state resulting from being updated by information from a trusted source, $P(x|J, h, I_a)$, and the state after a detected lie, the latter being the original state $P(x|I_a)$, so that the disinformation of the lie is blocked out. With $y_J$ denoting the probability that the message is honest ($h$), this superposition is

$$P(x|J, I_a) = y_J \, P(x|J, h, I_a) + (1 - y_J) \, P(x|I_a).$$

However, the parametric form of the beta distribution used for knowledge storage prevents agents from storing such bimodal distributions. Instead, agents temporarily construct such bimodal posterior distributions, and then compress them into the parametric form of the beta distribution in Equation (1) for long-term storage. The principle of optimal belief approximation is used for this compression [4]. This states that the Kullback–Leibler (KL) divergence

$$\mathrm{KL}(P \,\|\, \tilde{P}) = \int \mathrm{d}x \, P(x) \ln \frac{P(x)}{\tilde{P}(x)}$$

between the optimal belief state $P$ and the approximate belief state $\tilde{P}$ should be minimized with respect to the parameters $(\mu_{ab}, \lambda_{ab})$ of the approximation. For the chosen parametric form of the beta distribution, this implies the conservation of the moments $\langle \ln x_b \rangle$ and $\langle \ln (1 - x_b) \rangle$ in the compression step [2]. Up to sign, these are the surprises expected by agent $a$ for a truth and a lie of agent $b$, respectively. Many other details of the distribution, such as its bimodality and possible entanglements between the honesty of the subject and that of the speaker, are lost in the compression.
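The compression step can be illustrated numerically. For a beta distribution with shape parameters $\alpha$ and $\beta$, the conserved moments are $\langle \ln x \rangle = \psi(\alpha) - \psi(\alpha + \beta)$ and $\langle \ln (1 - x) \rangle = \psi(\beta) - \psi(\alpha + \beta)$, with $\psi$ the digamma function, so compressing a mixture of beta distributions amounts to solving these two equations for $(\alpha, \beta)$. The sketch below does this with a damped Newton iteration in pure Python. The function names, the choice of Newton's method, the starting point, and the example numbers are all assumptions of this illustration, not a description of the simulation code.

```python
import math


def digamma(x):
    """Digamma function for x > 0 (recurrence plus asymptotic series)."""
    result = 0.0
    while x < 6.0:              # shift the argument upwards
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252))
    return result + math.log(x) - 0.5 / x - series


def trigamma(x):
    """Derivative of the digamma function for x > 0."""
    result = 0.0
    while x < 6.0:
        result += 1.0 / (x * x)
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    series = inv * inv2 * (1.0 / 6 - inv2 * (1.0 / 30 - inv2 / 42))
    return result + inv + 0.5 * inv2 + series


def log_moments(alpha, beta):
    """Return (<ln x>, <ln(1 - x)>) for Beta(alpha, beta)."""
    total = digamma(alpha + beta)
    return digamma(alpha) - total, digamma(beta) - total


def compress(components, iterations=50):
    """Single beta distribution that conserves both log-moments.

    components: list of (weight, alpha, beta) with weights summing to 1.
    """
    t1 = sum(w * log_moments(a, b)[0] for w, a, b in components)
    t2 = sum(w * log_moments(a, b)[1] for w, a, b in components)
    # Starting point (an assumption): weighted average of the parameters.
    alpha = sum(w * a for w, a, b in components)
    beta = sum(w * b for w, a, b in components)
    for _ in range(iterations):
        m1, m2 = log_moments(alpha, beta)
        f1, f2 = m1 - t1, m2 - t2
        tab = trigamma(alpha + beta)
        j11, j22, j12 = trigamma(alpha) - tab, trigamma(beta) - tab, -tab
        det = j11 * j22 - j12 * j12
        da = (j22 * f1 - j12 * f2) / det
        db = (j11 * f2 - j12 * f1) / det
        step = 1.0
        while alpha - step * da <= 0 or beta - step * db <= 0:
            step *= 0.5         # damping keeps both parameters positive
        alpha -= step * da
        beta -= step * db
    return alpha, beta


# Message trusted with probability 0.7: updated state Beta(6, 2),
# otherwise the original state Beta(3, 2) is kept (hypothetical numbers).
mixture = [(0.7, 6.0, 2.0), (0.3, 3.0, 2.0)]
alpha, beta = compress(mixture)
print(alpha, beta)
```

The resulting parameters are in general non-integer, which is why the stored values must be allowed to be real numbers.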

2.4. Deceptive Communication

Thus, there are a number of advantages to lying, and if agents had no strategies for detecting deception, notorious and extreme lying would automatically be the most successful strategy. Therefore, for mental self-defense, every functioning agent must practice lie detection. For a lie to have even a chance of going unnoticed, its content must be adapted to the lie detection strategy of the recipient. Thus, lie detection determines how lies are optimally constructed, and so we must first turn to lie detection.

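Lie Detection: An agent $b$ that receives a message in a communication $a \overset{c}{\rightarrow} b$ (agent $a$ speaking to $b$ about agent $c$) must determine a probability for its knowledge update that the message is honest (“$h$”). This is constructed as

$$y_J := P(h|d, I_b) = \frac{\bar{x}_{ba} \prod_i P(f_i|h)}{\bar{x}_{ba} \prod_i P(f_i|h) + (1 - \bar{x}_{ba}) \prod_i P(f_i|\neg h)}. \qquad (7)$$

Here, $d$ denotes the communication data (the setup $a \overset{c}{\rightarrow} b$, the statement $J$, and whether blushing was observed), $f_i$ are various features that agent $b$ extracts from these data, and $\bar{x}_{ba}$ is the reputation of $a$ with $b$, i.e., agent $b$’s assumption about how frequently $a$ is honest.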

By using Equation (7) for message analysis, agents implicitly assume that the various data features used are statistically independent given the message’s honesty status ($h$ or $\neg h$). The features that ordinary agents use to detect lies are blushing (indicating lies), confessions (indicating truths), and the expected surprise that the message would induce in them if they changed their belief about $c$ to the assertion of the message.
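The independence assumption makes the honesty assessment a naive-Bayes computation: the sender's reputation acts as the prior, and each feature contributes one pair of likelihoods. A minimal sketch follows. The function name, the way features are passed in as likelihood pairs, and all numerical values (including `BLUSH_RATE`) are hypothetical choices for this illustration.

```python
def honesty_probability(reputation, feature_likelihoods):
    """Naive-Bayes probability that a received message is honest.

    reputation: prior probability that the sender speaks honestly.
    feature_likelihoods: one (P(feature | honest), P(feature | lie))
        pair per observed feature, assumed independent given the
        honesty status of the message.
    """
    weight_honest = reputation
    weight_lie = 1.0 - reputation
    for p_honest, p_lie in feature_likelihoods:
        weight_honest *= p_honest
        weight_lie *= p_lie
    total = weight_honest + weight_lie
    if total == 0.0:
        raise ValueError("features are impossible under both hypotheses")
    return weight_honest / total


BLUSH_RATE = 0.1  # hypothetical frequency of blushing while lying

# Blushing never accompanies honest statements, so observing it is decisive.
blush_seen = (0.0, BLUSH_RATE)
# Not blushing is slightly more likely for honest statements than for lies.
no_blush = (1.0, 1.0 - BLUSH_RATE)

p_caught = honesty_probability(0.5, [blush_seen])
p_calm = honesty_probability(0.5, [no_blush])
print(p_caught, p_calm)
```

Further features, such as a likelihood pair derived from the surprise of the message, would simply be appended to the list.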

The assumption that agents make about the surprise values of messages is that lies deviate more from their own beliefs than honest statements do, and are thus more surprising. Although not necessarily true, this assumption protects their belief system from being easily altered by extreme claims made by other agents, since messages that have the potential to greatly change their minds are simply not believed. Where the line between predominant trust and predominant distrust is drawn depends on a number of factors. First, the reputation of the sender of the message has a major impact. Second, the receiver b of a message must compare the surprise of the message with a characteristic surprise scale to judge whether or not the message deviates too much from their own belief system. However, this scale cannot be a fixed number, as it must adapt to evolving social situations, which may differ in terms of the size of lies typically used and the dispersion of honest opinions.

In the reputation game, a very simple heuristic is used for setting this scale: it is the median of the surprise scores of the last ten messages received with positive surprises. Regardless of this specific choice, however, any socially communicating intelligent system will require a similar scale to assess the reliability of news in light of its own knowledge and to protect its belief system from distortions due to exaggerated claims. It is therefore to be expected that such a scale (or several of them) is present in any social information processing system that is robust against deception and bias. The resulting social psychological phenomena should be relatively universal, and therefore found in a variety of societies, human and non-human.
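The median heuristic for the reference surprise scale is easy to state in code. In the sketch below, the class name `SurpriseScale` and the fallback value returned before any message has been received are assumptions of this illustration; the window of ten messages and the restriction to positive surprises follow the description above.

```python
from collections import deque
from statistics import median


class SurpriseScale:
    """Running reference scale: median of the most recent positive surprises."""

    def __init__(self, window=10, initial=1.0):
        self._recent = deque(maxlen=window)  # old entries drop out automatically
        self._initial = initial              # hypothetical value before any data

    def record(self, surprise):
        # Only messages with positive surprise enter the statistics.
        if surprise > 0:
            self._recent.append(surprise)

    def value(self):
        return median(self._recent) if self._recent else self._initial


scale = SurpriseScale()
for s in [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, -5, 0]:
    scale.record(s)
print(scale.value())
```

Because the scale follows the recently received messages, it rises when an agent is exposed to more surprising statements and falls again when the statements become less surprising.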

Lie Construction: Given this strategy for detecting lies, it becomes clear how a successful lie must be composed. A lie cannot simply be an arbitrary statement, as this would be too easily detected. Nor can it simply be a distorted version of the sender’s belief, as this would, on average, make it more surprising than an honest statement, which is exactly what the receiver watches for (this was tried in an initial version of the simulation and failed spectacularly). Consequently, it should ideally be a distorted version of the receiver’s own belief, so that it hides among the surprise values of the honest messages received. Additionally, the distortion should typically be smaller than the receiver’s reference surprise scale to go unnoticed.

To be successful, then, a liar must cultivate a picture of his victim’s beliefs, a rudimentary Theory of Mind. On this basis, the expected effect of the lie on the speaker can be estimated, and a lie with hopefully optimal effect can be constructed. Usually, the lie will be close enough to the recipient’s own belief to go unnoticed, yet far enough from it to move the victim’s mind sufficiently in the desired direction. To determine the size of their lies, agents use their own perceived mean surprise scale, making the implicit (and not necessarily correct) assumption that all agents experience the same social atmosphere. This has very interesting consequences.

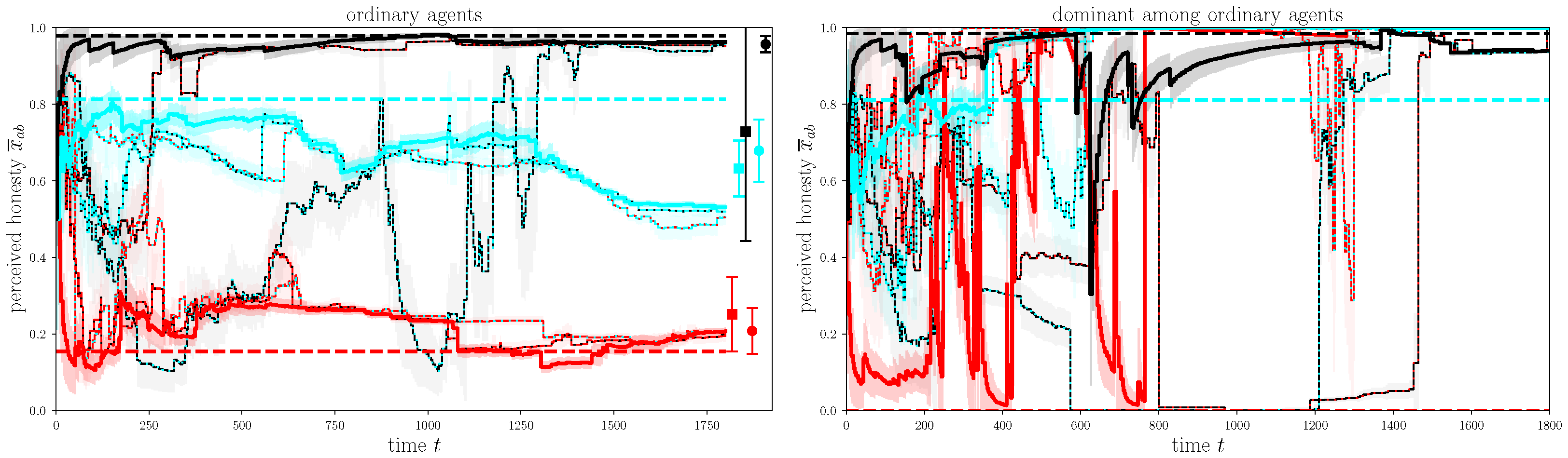

For any agent who is exposed to opinions significantly different from their own, whether because they are lied to a lot or simply have a different belief system than their social group, the reference surprise scale will grow. Such an agent’s lies will be larger, because the same surprise scale is used in detecting and constructing lies. Although these inflated lies may not be more convincing to the recipients, the recipients’ reference surprise scale will also increase. As a result, the other agents’ lies will also grow, which in turn will further increase the reference surprise scale for everyone. In this way, a runaway regime of inflating reference scales can develop, in which the social atmosphere becomes toxic, in the sense that the opinions expressed become extreme. This is clearly seen in several simulations of the reputation game, especially in the presence of agents using the dominant communication strategy, as discussed below.

In addition, improved lie detection strategies that are adapted to the lie construction strategy described above are conceivable. In the reputation game simulations, so-called smart agents use their Theory of Mind to guess whether the message is more likely to be a truth (a message derived from the sender’s belief) or a lie (a message constructed from the sender’s belief about the receiver’s belief).