Abstract

Why quantum? Why spacetime? We find that the key idea underlying both is uncertainty. In a world lacking probes of unlimited delicacy, our knowledge of quantities is necessarily accompanied by uncertainty. Consequently, physics requires a calculus of number pairs and not only scalars for quantity alone. Basic symmetries of shuffling and sequencing dictate that pairs obey ordinary component-wise addition, but they can have three different multiplication rules. We call those rules A, B and C. “A” shows that pairs behave as complex numbers, which is why quantum theory is complex. However, consistency with the ordinary scalar rules of probability shows that the fundamental object is not a particle on its Hilbert sphere but a stream represented by a Gaussian distribution. “B” is then applied to pairs of complex numbers (qubits) and produces the Pauli matrices, whose action defines the space of 4-vectors. “C” then allows integration of what can then be recognised as energy-momentum into time and space. The picture is entirely consistent. Spacetime is a construct of quantum and not a container for it.

1. Strategy

Simplicity yields generality, and generality is power. There are deep mysteries in physics, such as why space has three dimensions and why quantum formalism is complex. Inquiry at such depth demands sparse assumptions of compelling generality. We aim to leave no room for plausible doubt; thus, we allow no delicate assumptions (such as continuity, perhaps) which could plausibly be denied. We paint with a broad brush, aiming to mirror simplicity of ideas with simplicity of presentation. Our aim is to expose the inevitable language of physics in a form accessible to neophyte students as well as experienced professionals. This is only a beginning, and we do not proceed to discuss the physical laws that form the content of the language.

In attempting to understand the world, we seem forced to think of it in terms of adequately isolated parts with adequately separable properties. To obtain generality, we hypothesise symmetries such that laws applying to one part will apply to another. Otherwise, without consistent rules, we will find no generalities, and our quest will fail. Symmetries are our only hope. If the world is to be comprehended at all, this is the path we should follow. What, if anything, the symmetries apply to will only be apparent later when we try to match our intellectual constructions to sensory impressions of the world.

2. Mathematics

We first suppose a “with” operator that links two parts (“stuff”) to make compound stuff. Technically, this operator is deemed to possess closure: stuff -with- stuff = stuff. Furthermore, since we have closure, we can continue adding other stuff to produce yet more stuff. For this to be fully useful, we suppose that we can shuffle stuff around without it making any difference. Formally, shuffling invariance is associativity together with commutativity.

If our stuff has only one property of interest, it is not hard to show that the “with” operator can without loss of generality be taken to be standard arithmetical addition [1,2,3,4]. Any other representation is isomorphic. In order to model an arbitrarily large world, we suppose that combination can be conducted indefinitely without repeat: $X + Y \neq X$ unless $Y$ is null. Otherwise, we would obtain the wrap-around familiar from integers limited to finite storage. This is why stuff adds up [5].
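As a small illustration of why the no-repeat condition matters, the following Python sketch (our own, not from the source) contrasts unbounded integer addition with addition in 8-bit storage, where repeatedly adding non-null stuff eventually returns the starting value. The helper names are hypothetical.

```python
def add_unbounded(x: int, y: int) -> int:
    """Ordinary integer addition: Python ints never overflow."""
    return x + y


def add_8bit(x: int, y: int) -> int:
    """Addition in 8-bit storage: results wrap around modulo 256."""
    return (x + y) % 256


def steps_until_repeat(x: int, y: int, add, limit: int = 1000):
    """Keep adding y to x; return the step at which x reappears, or None."""
    current = x
    for n in range(1, limit + 1):
        current = add(current, y)
        if current == x:
            return n
    return None


print(steps_until_repeat(100, 1, add_unbounded))  # None: no repeat within the limit
print(steps_until_repeat(100, 1, add_8bit))       # 256: wrap-around revisits the start
```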

It is not difficult: children understand it as they learn to count. The wide utility of addition mirrors the ubiquity of symmetry under shuffling. There are many applications, but we might think of shuffling as a parallel operation which could take place in some sort of proto-space.

Now, we will want “+” to continue to work regardless of who supplies the stuff or, for that matter, who receives it; thus, we next suppose a “then” operator that transfers stuff, possibly with modification, from a supplier to a receiver. This operator is also supposed to possess closure: a chain of transfers is itself a transfer, so that suppliers can be chained, and as before we suppose that chaining can be performed indefinitely. We might think of -then- as a series operation that could take place in some sort of proto-time.

For -with- to be additive regardless of suppliers or receivers, we need -then- to be distributive over it:

$$(x + y)\cdot z = x\cdot z + y\cdot z, \qquad z\cdot(x + y) = z\cdot x + z\cdot y.$$

With only one property of interest in play, the representation is merely scalar. The only freedom allowed within the sum-rule convention is scaling; thus, the representation “·” of -then- has to be standard arithmetical multiplication, possibly scaled by some constant $C$ [3,4]:

$$x \cdot y = \frac{xy}{C},$$

which is most commonly set by convention to one. Again, this is not difficult: children learn it informally as they are taught multiplication, and again when they work with percentages.
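The scalar product rule can be checked mechanically. This minimal Python sketch uses exact rational arithmetic and assumes the scaled form $x \cdot y = xy/C$ with an arbitrarily chosen $C = 3/2$; it confirms distributivity and associativity, and shows that `max`, although associative, is not distributive over addition. The function names are illustrative only.

```python
from fractions import Fraction


def then(x, y, c=Fraction(1)):
    """Scalar product rule: ordinary multiplication scaled by a constant c."""
    return x * y / c


def is_distributive(op, x, y, z):
    """Check (x + y) op z == (x op z) + (y op z) for one triple."""
    return op(x + y, z) == op(x, z) + op(y, z)


c = Fraction(3, 2)  # arbitrary non-unit scale, for illustration only
x, y, z = Fraction(2), Fraction(5, 7), Fraction(3)

print(is_distributive(lambda a, b: then(a, b, c), x, y, z))    # True
print(then(then(x, y, c), z, c) == then(x, then(y, z, c), c))  # True: associative too
print(is_distributive(max, x, y, z))  # False: max is associative but not distributive
```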

Note that we are building arithmetic and not Boolean calculus. Our “then” describes sequencing and is distributive over “with” but not the other way around. We point out that only with standard arithmetic in place can we build the sophisticated mathematics that gives us quantified science. This is why mathematics is so effective: we have designed it to obey the fundamental symmetries which are required for our generalisations. Moreover, we need the symmetries, for denying them would remove our mathematics and render us powerless. How could we justify Hilbert spaces if we cannot even add up?

3. Physics

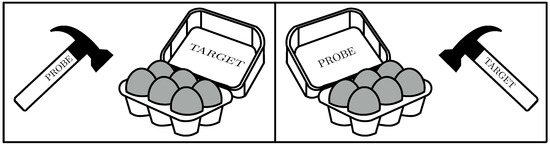

Physics is more subtle, for arbitrary precision is not available with finite probes (Figure 1, left).

Figure 1. (Left) Uncertainty: probe and fragile target. (Right) Measurement: delicate probe with target.

This means that any particular quantity is necessarily associated with an uncertainty. Hence, a minimal description (and anything more would lack rationale and be mathematically redundant) requires a pair of numbers to quantify a property. We do not mean “$x \pm \sigma$”, which is merely crude shorthand for a distribution over the available values x, in which those numbers too would be uncertain, resulting in indefinite regress. The fundamentally irreducible pair-wise connection is simpler and more intimate than that. Uncertainty becomes part of the very language of physics and is impossible to remove.

To define the connections analogous to scalar addition and multiplication, we start by imposing the same ubiquitous symmetries as before. Associative commutativity yields component-wise addition for -with-:

$$(a_1, a_2) + (b_1, b_2) = (a_1 + b_1,\; a_2 + b_2),$$

and distributivity yields bilinear multiplication for -then-:

$$(a_1, a_2)\cdot(b_1, b_2) = \Big(\sum_{j,k}\gamma_{1jk}\,a_j b_k,\; \sum_{j,k}\gamma_{2jk}\,a_j b_k\Big), \qquad j, k \in \{1, 2\},$$

but now with eight apparently arbitrary coefficients $\gamma_{ijk}$ and not only a single removable scale factor. It is true that we have four degrees of freedom in choosing coordinates, but that still leaves four of the $\gamma$'s free, so that multiplication is not yet adequately defined. This extra subtlety of physics requires us to impose associativity on the multiplication, meaning that the effect of chaining does not depend on how the factors are grouped into compounds: $(a\cdot b)\cdot c = a\cdot(b\cdot c)$.

In one dimension, associativity was automatically an emergent property of multiplication, but we need to impose it in two dimensions. Associative multiplication of pairs yields quadratic equations for the coefficients, whose multiple solutions A, B, C, D, E and F enable the rich framework of physics.

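Explicitly, the six product rules are the following [6,7,8]:

$$\begin{aligned}
&\text{A:} && (a_1, a_2)\cdot(b_1, b_2) = (a_1 b_1 - a_2 b_2,\; a_1 b_2 + a_2 b_1),\\
&\text{B:} && (a_1, a_2)\cdot(b_1, b_2) = (a_1 b_1 + a_2 b_2,\; a_1 b_2 + a_2 b_1),\\
&\text{C:} && (a_1, a_2)\cdot(b_1, b_2) = (a_1 b_1,\; a_1 b_2 + a_2 b_1),\\
&\text{D:} && (a_1, a_2)\cdot(b_1, b_2) = (a_1 b_1,\; a_1 b_2),\\
&\text{E:} && (a_1, a_2)\cdot(b_1, b_2) = (a_1 b_1,\; a_2 b_1),\\
&\text{F:} && (a_1, a_2)\cdot(b_1, b_2) = (a_1 b_1,\; 0).
\end{aligned}$$

The first three, A, B and C, are pair–pair products, the next two, D and E, are degenerate scalar–pair products, and the last, F, is ordinary scalar–scalar multiplication appearing as the doubly degenerate case.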
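Associativity of the six rules is easy to test numerically. The sketch below (Python, exact integer arithmetic) encodes rules A to F as component formulas, following our reading of [6,7,8]: A as complex multiplication, B as split-complex, C as dual-number multiplication, and D, E, F as the degenerate cases. It checks $(a\cdot b)\cdot c = a\cdot(b\cdot c)$ on random pairs, and also checks one arbitrarily chosen bilinear product, $(a_1 b_2, a_2 b_1)$, as a counterexample showing that a generic choice of the eight coefficients fails. This is a check on sampled values, not a proof, and the helper names are our own.

```python
import random

# The six product rules acting on pairs a = (a1, a2), b = (b1, b2).
RULES = {
    "A": lambda a, b: (a[0] * b[0] - a[1] * b[1], a[0] * b[1] + a[1] * b[0]),
    "B": lambda a, b: (a[0] * b[0] + a[1] * b[1], a[0] * b[1] + a[1] * b[0]),
    "C": lambda a, b: (a[0] * b[0], a[0] * b[1] + a[1] * b[0]),
    "D": lambda a, b: (a[0] * b[0], a[0] * b[1]),
    "E": lambda a, b: (a[0] * b[0], a[1] * b[0]),
    "F": lambda a, b: (a[0] * b[0], 0),
}


def bilinear(gamma):
    """General bilinear product: component i is sum_jk gamma[i][j][k] * a[j] * b[k]."""
    def mul(a, b):
        return tuple(
            sum(gamma[i][j][k] * a[j] * b[k] for j in range(2) for k in range(2))
            for i in range(2)
        )
    return mul


def is_associative(mul, trials=200, seed=0):
    """Test (a.b).c == a.(b.c) on random integer pairs (exact arithmetic)."""
    rng = random.Random(seed)
    for _ in range(trials):
        a, b, c = [(rng.randint(-9, 9), rng.randint(-9, 9)) for _ in range(3)]
        if mul(mul(a, b), c) != mul(a, mul(b, c)):
            return False
    return True


# Hypothetical product (a1*b2, a2*b1), used only as a counterexample.
NOT_ASSOCIATIVE = bilinear([[[0, 1], [0, 0]], [[0, 0], [1, 0]]])

for name, rule in RULES.items():
    print(name, is_associative(rule))                     # every rule prints True
print("counterexample", is_associative(NOT_ASSOCIATIVE))  # False
```

Rule A agrees with Python's built-in complex multiplication, e.g. `RULES["A"]((1, 2), (3, 4))` gives `(-5, 10)`, matching `(1+2j)*(3+4j)`.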

4. Product Rule F

It was inevitable that two-dimensional multiplication would include one-dimensional scalar multiplication as a special case, and what our derivation demonstrates is that there is necessary consistency between one and two dimensions. Both follow from the same basic symmetries of shuffling and sequencing. In one dimension, the scalar sum and product rules are those of probability, observed from this viewpoint as part of an intellectual structure common to both mathematics and physics.

Whether quantum or classical, physics makes predictions expressed as likelihoods, conditional on what we think we know about the setup, which is expressed as the prior. Bayesian inference then uses actual outcomes to refine the predictions (the posterior) and assess the predictive quality of our assumptions (the evidence). Probability and quantum theory (which is basic physics) share a common foundation, and quantum behaviour fits seamlessly into Bayesian analysis, no differently from anything else. There can be no quantum weirdness in this approach. It is all only ordinary inference.

Note our bottom-up strategy. Our assumptions refer to basic symmetries of only two or three items at a time. We start with items and build up from there. This is opposite to approaches of superficially greater sophistication which specify top-down from infinity. That strategy is deliberate. Infinity and the continuum involve delicate limits that might not hold in all circumstances. We think it perversely fragile to traverse delicate analysis in order to return, ultimately, to discrete predictions which never needed the delicacy in the first place.

5. Product Rule A

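Product rule A is complex multiplication, which we can write in operator form (multiplication by the pair $(a_1, a_2)$) as follows:

$$\begin{pmatrix} a_1 & -a_2 \\ a_2 & a_1 \end{pmatrix} = r\begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} \;\cong\; r\,e^{i\theta}, \qquad r = \sqrt{a_1^2 + a_2^2}.$$

The condition $r = 1$ selects operators which under repeated application cause neither divergence towards infinity ($r > 1$) nor collapse towards zero ($r < 1$). Thus, $r = 1$ defines unit quantity. Knowledge of quantity is invariant under such unit-modulus operations, which add constants to the phase of the operand while leaving its modulus unchanged. Correspondingly, our knowledge of the phase θ of an operand is invariant to offset, obeying $\Pr(\theta + \alpha) = \Pr(\theta)$ for any $\alpha$, indicating uniformity.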

Later, it will be observed that neither of the other pair–pair product rules can support a proper prior distribution, and this is why quantum theory uses complex numbers. It is the only way of enabling consistency with probability. Uncertainty being identified with phase, the modulus becomes associated with quantity, and the unit modulus represents unit quantity.

Incidentally, phase has to be a continuous variable. If it were not, any gap could be filled in by adding complex numbers from either side, one from the upper boundary and one from the lower, to obtain a sum with intermediate phase. Whilst absolute phase of any newly introduced quantity is unknown (and uniformly distributed), relative phase is the critical ingredient that distinguishes representation of quantity-with-uncertainty from representation of quantity alone. Relative phase manifests as interference.
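A short Python sketch of rule A using the built-in `complex` type: a unit-modulus operator $e^{i\theta}$ shifts phase without changing modulus, repeated application of a non-unit operator diverges or collapses, and adding two unit amplitudes yields an intermediate phase, which is the gap-filling argument for continuity. All numerical values are arbitrary illustrations.

```python
import cmath
import math


def unit_operator(theta):
    """Rule-A unit operator: multiplication by exp(i*theta), so r = 1."""
    return cmath.exp(1j * theta)


def modulus_after(r, theta, n):
    """Modulus after n repeated applications of the operator r*exp(i*theta)."""
    return abs((r * cmath.exp(1j * theta)) ** n)


def midpoint_phase(alpha, beta):
    """Phase of exp(i*alpha) + exp(i*beta); lies between alpha and beta if |alpha - beta| < pi."""
    return cmath.phase(cmath.exp(1j * alpha) + cmath.exp(1j * beta))


z = 3 * cmath.exp(0.4j)
w = unit_operator(1.1) * z
print(abs(w), cmath.phase(w))          # modulus about 3, phase moved from 0.4 to about 1.5
print(modulus_after(1.0, 0.7, 1000))   # stays about 1
print(modulus_after(1.01, 0.7, 1000))  # grows large (divergence)
print(modulus_after(0.99, 0.7, 1000))  # shrinks towards 0 (collapse)
print(midpoint_phase(0.2, 0.8))        # about 0.5, filling the gap between the phases
```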

6. Born Rule

When we combine objects, the scalar sum rule demands that we add quantity. That is the definition: quantity is what adds up. So what scalar property of complex numbers could be additive? Complex moduli do not add up, either directly or through any function $f(|z|)$ of the modulus. Phase differences interfere and appear to force an inconsistency with scalar addition. However, we do not know the phases of independent objects; thus, we do not actually know what the interference should be. Fortunately, the rules of probability instruct us to average (“marginalise”) over what we do not know in order to arrive at the reproducible behaviour that we seek. Moreover, we can attain consistency on average, $\langle f(|z_1 + z_2|)\rangle = f(|z_1|) + f(|z_2|)$, provided we use $f(|z|) = |z|^2$. That modulus-squared form,

$$\big\langle |z_1 + z_2 + \cdots + z_n|^2 \big\rangle = |z_1|^2 + |z_2|^2 + \cdots + |z_n|^2,$$

is forced upon us and yields a general additivity that holds for any number of components. The average-modulus-squared relationship is the archetype of the Born rule of quantum theory [9]. By updating our nomenclature of complex numbers from z to the more traditional ψ, we have additive scalar quantification $Q = \langle|\psi|^2\rangle$, which we interpret as the observable quantity.

This is how uncertainty manifests in the formalism. The observably additive intensity emitted by sources and observed by receivers is a modulus-squared average. Averaging can be performed artificially by picturing an ensemble of possibilities, expressed either algebraically with a formula for the distribution $\Pr(\psi)$ or arithmetically as Monte Carlo samples. In the laboratory, averaging is performed by repetition. Thus, the fundamental object of inquiry is a stream and not, as commonly assumed, a particle. A particle is less fundamental because it carries the extra information that a detector had fired. Streams add up. Particles only add up if they are independent, because otherwise a phase difference is liable to cause interference, so that for two unit particles $|\psi_1 + \psi_2|^2$ could become anything from 0 to 4.
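The marginalisation argument can be checked by simulation. This Python sketch assumes independent, uniformly distributed phases and estimates $\langle|\sum_k \psi_k|^2\rangle$ by Monte Carlo; the estimate should approach $\sum_k |\psi_k|^2$, whereas two unit amplitudes with a definite relative phase give anything from 0 to 4. Sample size and seed are arbitrary choices.

```python
import cmath
import math
import random


def average_intensity(moduli, samples=100_000, seed=1):
    """Monte Carlo average of |sum_k r_k exp(i phi_k)|^2 over independent uniform phases."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        s = sum(r * cmath.exp(1j * rng.uniform(0.0, 2.0 * math.pi)) for r in moduli)
        total += abs(s) ** 2
    return total / samples


def two_unit_intensity(phase_difference):
    """|1 + exp(i*delta)|^2 for two unit amplitudes with a definite relative phase."""
    return abs(1 + cmath.exp(1j * phase_difference)) ** 2


moduli = [1.0, 2.0, 3.0]
print(average_intensity(moduli), sum(r * r for r in moduli))  # both close to 14
print(two_unit_intensity(0.0), two_unit_intensity(math.pi))   # about 4 and about 0
```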

Observing Q yields only partial information about ψ, because the phase remains unknown and the observation is only an average. The probability distribution for ψ under this quadratic constraint Q is assigned by maximum entropy as the Gaussian

$$\Pr(\psi) = \frac{1}{\pi Q}\exp\!\left(-\frac{|\psi|^2}{Q}\right),$$

just as standard in classical inference and signal processing.
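A sketch of sampling a stream from this maximum-entropy Gaussian in Python: assuming $\Pr(\psi) = e^{-|\psi|^2/Q}/(\pi Q)$, the real and imaginary parts are independent normal variables with variance $Q/2$ each, so the sample mean of $|\psi|^2$ should approach Q while the phase shows no preferred direction. The value of Q, the sample size and the seed are arbitrary.

```python
import cmath
import math
import random


def sample_stream(Q, n, seed=2):
    """Draw n amplitudes from Pr(psi) = exp(-|psi|^2 / Q) / (pi Q).

    Real and imaginary parts are independent normals with variance Q/2,
    so <|psi|^2> = Q and the phase is uniformly distributed.
    """
    rng = random.Random(seed)
    sigma = math.sqrt(Q / 2.0)
    return [complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)) for _ in range(n)]


psis = sample_stream(Q=5.0, n=100_000)
mean_intensity = sum(abs(p) ** 2 for p in psis) / len(psis)
mean_phasor = sum(cmath.exp(1j * cmath.phase(p)) for p in psis) / len(psis)
print(mean_intensity)    # close to Q = 5
print(abs(mean_phasor))  # close to 0: no preferred phase
```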

The foundations of quantum theory begin to become apparent, but so far all we have quantified is existence. We need more. We need properties.

7. Product Rule B

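Product rule B is hyperbolic (or “split-complex”) multiplication, written in operator form (multiplication by the pair $(a_1, a_2)$, with $a_1 > |a_2|$) as follows:

$$\begin{pmatrix} a_1 & a_2 \\ a_2 & a_1 \end{pmatrix} = r\begin{pmatrix} \cosh\phi & \sinh\phi \\ \sinh\phi & \cosh\phi \end{pmatrix}, \qquad r = \sqrt{a_1^2 - a_2^2}.$$

The condition $r = 1$ selects operators which under repeated application cause neither divergence towards infinity ($r > 1$) nor collapse towards zero ($r < 1$). Thus, $r = 1$ defines unit quantity. Instead of complex phase θ, we have pseudo-phase φ. Offsets of φ leave r unchanged, but the range is unbounded ($-\infty < \phi < \infty$). Hence, as anticipated, we could not use φ to express uncertainty because the corresponding uniform prior would be improper. Rule B does not admit an alternative representation of quantity and uncertainty.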
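For rule B, this Python sketch (illustrative helper names) checks that unit operators $(\cosh\phi, \sinh\phi)$ compose by adding pseudo-phases, that they leave $r = \sqrt{a_1^2 - a_2^2}$ unchanged, and that the pseudo-phase is not confined to a finite range. It assumes $|a_1| > |a_2|$ so that r and φ are real.

```python
import math


def rule_b(a, b):
    """Rule B (split-complex) product of pairs a = (a1, a2), b = (b1, b2)."""
    return (a[0] * b[0] + a[1] * b[1], a[0] * b[1] + a[1] * b[0])


def unit_b(phi):
    """Rule-B unit operator with pseudo-phase phi."""
    return (math.cosh(phi), math.sinh(phi))


def modulus_b(a):
    """r = sqrt(a1^2 - a2^2), assuming |a1| > |a2|."""
    return math.sqrt(a[0] ** 2 - a[1] ** 2)


def pseudo_phase(a):
    """phi = artanh(a2 / a1); any real value is possible."""
    return math.atanh(a[1] / a[0])


composed = rule_b(unit_b(0.3), unit_b(1.2))
print(composed, unit_b(1.5))                                       # pseudo-phases add
print(modulus_b(rule_b(unit_b(2.0), (3.0, 1.0))), math.sqrt(8.0))  # r unchanged
print(pseudo_phase(unit_b(3.0)))  # about 3; unlike theta, phi has no finite range
```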

Instead, we upgrade our inquiry to binary this-or-that properties, each of which can exist or not and is represented by a quantity and uncertainty pair. We call the binaries

$$\psi = \begin{pmatrix} \psi_\uparrow \\ \psi_\downarrow \end{pmatrix}$$

qubits. Detection of $\psi_\uparrow$ by a ↑-detector would signify the supply of ↑, and similarly for ↓, so that $\langle|\psi_\uparrow|^2 + |\psi_\downarrow|^2\rangle$ would signify ↑-or-↓ presence of qubits with either property.

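Shuffling and sequencing are still to be obeyed, and the ABCDEF rules still apply in the complex field. So far, we are invoking rules A and B. For the sake of simplicity, we restrict attention to unit operators and write them in generator form, as the exponential of a generator G. The generator form allows us to separate the (complex) magnitude of a multiplication from its structure G. From the operator forms of rules A and B, the unit operators for A and B are as follows:

$$U_A = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} = e^{-i\theta\sigma_2}, \qquad U_B = \begin{pmatrix} \cosh\phi & \sinh\phi \\ \sinh\phi & \cosh\phi \end{pmatrix} = e^{\phi\sigma_1},$$

where we recognise the Hermitian Pauli matrices

$$\sigma_0 = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}, \quad \sigma_1 = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}, \quad \sigma_2 = \begin{pmatrix} 0 & -i \\ i & 0 \end{pmatrix}, \quad \sigma_3 = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix},$$

that mix trigonometric and hyperbolic rotations in complex context.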
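The generator forms can be checked without any linear-algebra library. This Python sketch represents 2×2 complex matrices as nested tuples, sums a truncated Taylor series for the exponential, and confirms that the Pauli matrices are Hermitian and square to the identity, and that $e^{-i\theta\sigma_2}$ and $e^{\phi\sigma_1}$ reproduce the rule-A rotation matrix and the rule-B cosh/sinh matrix. The series truncation is adequate only for modest arguments such as the ones used here.

```python
import math

I2 = ((1, 0), (0, 1))
SIGMA_1 = ((0, 1), (1, 0))
SIGMA_2 = ((0, -1j), (1j, 0))
SIGMA_3 = ((1, 0), (0, -1))


def matmul(a, b):
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)) for i in range(2))


def mat_add(a, b):
    return tuple(tuple(a[i][j] + b[i][j] for j in range(2)) for i in range(2))


def scale(c, a):
    return tuple(tuple(c * a[i][j] for j in range(2)) for i in range(2))


def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return tuple(tuple(complex(a[j][i]).conjugate() for j in range(2)) for i in range(2))


def expm(g, terms=30):
    """2x2 matrix exponential by truncated Taylor series (fine for small entries)."""
    result, term = I2, I2
    for n in range(1, terms):
        term = scale(1.0 / n, matmul(term, g))
        result = mat_add(result, term)
    return result


def close(a, b, tol=1e-12):
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))


theta, phi = 0.7, 0.4
U_A = expm(scale(-1j * theta, SIGMA_2))  # expected: rule-A rotation matrix
U_B = expm(scale(phi, SIGMA_1))          # expected: rule-B cosh/sinh matrix
print(close(U_A, ((math.cos(theta), -math.sin(theta)), (math.sin(theta), math.cos(theta)))))
print(close(U_B, ((math.cosh(phi), math.sinh(phi)), (math.sinh(phi), math.cosh(phi)))))
```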

7.1. Quantification

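For quantification, we still have the Born rule, initially as individual averages of $|\psi_\uparrow|^2$ and $|\psi_\downarrow|^2$, but quickly promoted to $\langle\psi^\dagger H\psi\rangle$ for any Hermitian $H$. Specifically, the Pauli matrices yield scalar observables

$$q_\mu = \langle\psi^\dagger\sigma_\mu\psi\rangle = \operatorname{tr}(\sigma_\mu V), \qquad V = \langle\psi\psi^\dagger\rangle, \qquad \mu = 0, 1, 2, 3.$$

Of these, $q_0 = \langle|\psi_\uparrow|^2 + |\psi_\downarrow|^2\rangle$ observes qubits as a whole. Given a covariance matrix V derived from such observations (which in practice would often include statistical uncertainty too), the maximum entropy assignment of probability in any dimension is the Gaussian

$$\Pr(\psi) = \frac{1}{\pi^n \det V}\exp\!\big(-\psi^\dagger V^{-1}\psi\big),$$

for n complex components (n = 2 for a qubit).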

This distribution of ψ can be used to predict observable quantities in the usual manner, no differently from any other application of probability. Knowing what a system is enables us to predict how it will behave, whether that is probabilistic or definitive.

It is clear from this Gaussian that the initial coordinates could be replaced by any unitary combination:

$$\psi' = U\psi, \qquad V' = UVU^\dagger, \qquad \psi'^\dagger V'^{-1}\psi' = \psi^\dagger V^{-1}\psi, \qquad \det V' = \det V.$$

Hence, there is no observational test of whether ψ or ψ′ is the fundamental representation; thus, our physics needs to be invariant under unitary transformation. This gives the complex representations of quantum theory flexibility beyond that accessible to classical scalars, for which quantity is restricted to positive values.
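A sketch of the quantification step in Python, assuming the qubit observables are computed as $q_\mu = \operatorname{tr}(\sigma_\mu V)$ from a covariance $V = \langle\psi\psi^\dagger\rangle$ (equivalent to $\langle\psi^\dagger\sigma_\mu\psi\rangle$). It applies a unitary U built from a hypothetical three-angle parametrisation, chosen only for illustration, and confirms that $q_0$, $\det V$ and the Gaussian exponent $\psi^\dagger V^{-1}\psi$ are unchanged, and that $q_0^2 - q_1^2 - q_2^2 - q_3^2 = 4\det V$. The example covariance and amplitudes are made up.

```python
import cmath
import math

# sigma_0 (identity), sigma_1, sigma_2, sigma_3
SIGMA = [((1, 0), (0, 1)), ((0, 1), (1, 0)), ((0, -1j), (1j, 0)), ((1, 0), (0, -1))]


def matmul(a, b):
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)) for i in range(2))


def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return tuple(tuple(complex(a[j][i]).conjugate() for j in range(2)) for i in range(2))


def det(a):
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]


def inverse(a):
    d = det(a)
    return ((a[1][1] / d, -a[0][1] / d), (-a[1][0] / d, a[0][0] / d))


def observables(V):
    """q_mu = tr(sigma_mu V), equal to <psi^dagger sigma_mu psi> when V = <psi psi^dagger>."""
    return [(matmul(s, V)[0][0] + matmul(s, V)[1][1]).real for s in SIGMA]


def quad_form(psi, M):
    """psi^dagger M psi: the Gaussian exponent when M = V^-1."""
    return sum(psi[i].conjugate() * M[i][j] * psi[j] for i in range(2) for j in range(2))


def example_unitary(alpha, beta, gamma):
    """Hypothetical 3-angle parametrisation of a 2x2 unitary, for illustration only."""
    g = cmath.exp(1j * alpha)
    return ((g * math.cos(beta) * cmath.exp(1j * gamma), -g * math.sin(beta)),
            (g * math.sin(beta), g * math.cos(beta) * cmath.exp(-1j * gamma)))


V = ((2.0, 0.5 - 0.3j), (0.5 + 0.3j, 1.0))  # made-up Hermitian positive-definite covariance
U = example_unitary(0.3, 1.1, -0.8)
V2 = matmul(matmul(U, V), dagger(U))        # covariance of psi' = U psi
psi = (0.4 + 1.2j, -0.7 + 0.1j)
psi2 = tuple(sum(U[i][j] * psi[j] for j in range(2)) for i in range(2))

q, q2 = observables(V), observables(V2)
print(q[0], q2[0])                                               # q_0 unchanged
print(det(V), det(V2))                                           # normaliser unchanged
print(quad_form(psi, inverse(V)), quad_form(psi2, inverse(V2)))  # exponent unchanged
print(q[0] ** 2 - sum(x * x for x in q[1:]), 4 * det(V).real)    # both about 6.64
```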

7.2. Quantisation

We become aware of quantisation when a probe initialised in a fragile metastable state is brought to the target stream (Figure 1(right)). If the target triggers effectively irreversible descent of the probe into a disordered state (cleverly engineered to be a macroscopic pointer angle or suchlike), then that becomes available as an essentially permanent macroscopic bit of information signifying that something (a quantum) had been present in the target stream at that time. It is from this point that the experimenter may in person or by proxy become aware of the digital event and incorporate it into probabilistic modelling.

Assuming that the interaction had been engineered to preserve the identities of target and probe, the something (the quantum) could then be intercepted from the ongoing stream and preserved for later use. Its total modulus would then be known (and conventionally assigned as 1), so that its “wave function” would be represented by a distribution with known modulus and unknown phase. With the wave function thereby normalised and confined to the Hilbert sphere $\psi^\dagger\psi = 1$, the covariance $\rho = \langle\psi\psi^\dagger\rangle$ is known as the density matrix. However, such confinement damages the smooth elegance of the Gaussian analysis of streams.

This is the source of entanglement, contextuality and other quintessentially quantum phenomena. It is simply Bayesian analysis in the unfamiliar context of complex numbers (as suggested by [10]) and of indirect modulus-squared observation, usually written in the Dirac bracket notation with kets $|\psi\rangle$ and bras $\langle\psi|$, which was developed for physics [11,12] before the Bayesian paradigm became pre-eminent in inference.

For example, if $q_3 = \langle\psi^\dagger\sigma_3\psi\rangle$ were observed equal to $q_0 = \langle\psi^\dagger\psi\rangle$, then the definitions of the Pauli observables would force ψ to be purely $\psi_\uparrow$, an eigenvector of $\sigma_3$ (with unknown phase) with covariance $V = \begin{pmatrix} q_0 & 0 \\ 0 & 0 \end{pmatrix}$. Subsequent prediction of $q_3$ with the Gaussian would ensure that $q_3$ remained equal to $q_0$. Measurements of $q_1$, on the other hand, would average to zero upon repetition, and restriction of an individual quantum to the Hilbert sphere would force individual outcomes to take either one of the eigenvectors of $\sigma_1$ at random, incidentally destroying the memory of the earlier $q_3$. We see that “wave-function collapse” is just the incorporation of new data into ordinary Bayesian inference on the Hilbert sphere.
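The collapse example can be simulated as repeated updating on the Hilbert sphere. This Python sketch assumes a normalised qubit starting in the $\sigma_3$ “up” eigenvector and draws Born-rule outcomes, replacing ψ by the observed eigenvector after each measurement (the standard projective update, assumed here). Repeated $\sigma_3$ observations always return +1, $\sigma_1$ observations average to zero, and a $\sigma_3$ observation made after $\sigma_1$ returns +1 only about half the time. Trial count and seed are arbitrary.

```python
import math
import random

S = 1 / math.sqrt(2)
Z_BASIS = [(1 + 0j, 0j), (0j, 1 + 0j)]          # sigma_3 eigenvectors (eigenvalues +1, -1)
X_BASIS = [(S + 0j, S + 0j), (S + 0j, -S + 0j)]  # sigma_1 eigenvectors (eigenvalues +1, -1)
EIGENVALUES = (+1, -1)


def measure(psi, basis, rng):
    """Born-rule outcome for a normalised qubit psi; returns (eigenvalue, updated state)."""
    p_first = abs(sum(basis[0][k].conjugate() * psi[k] for k in range(2))) ** 2
    i = 0 if rng.random() < p_first else 1
    return EIGENVALUES[i], basis[i]


def experiment(trials=20_000, seed=3):
    rng = random.Random(seed)
    z_repeat, x_sum, z_up_after_x = 0, 0, 0
    for _ in range(trials):
        psi = Z_BASIS[0]                     # known state: q_3 = q_0 ("up")
        z, psi = measure(psi, Z_BASIS, rng)  # repeat the sigma_3 observation
        z_repeat += z
        x, psi = measure(psi, X_BASIS, rng)  # now observe sigma_1
        x_sum += x
        z, psi = measure(psi, Z_BASIS, rng)  # sigma_3 again: memory of "up" is gone
        z_up_after_x += (z == 1)
    return z_repeat / trials, x_sum / trials, z_up_after_x / trials


print(experiment())  # roughly (1.0, 0.0, 0.5)
```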

7.3. Transformations

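The Pauli matrices generate the 6-parameter Lorentz group of unit operators,

$$U = \exp\!\Big(\tfrac{1}{2}\sum_{k=1}^{3}(\phi_k - i\theta_k)\,\sigma_k\Big).$$

On using rotations $U = e^{-i\theta\sigma_3/2}$ only, the Pauli observables $q_\mu = \langle\psi^\dagger\sigma_\mu\psi\rangle$ transform as follows:

$$q_0' = q_0, \quad q_1' = q_1\cos\theta - q_2\sin\theta, \quad q_2' = q_1\sin\theta + q_2\cos\theta, \quad q_3' = q_3,$$

while on using boosts $U = e^{\phi\sigma_3/2}$ only, they transform as follows:

$$q_0' = q_0\cosh\phi + q_3\sinh\phi, \quad q_1' = q_1, \quad q_2' = q_2, \quad q_3' = q_0\sinh\phi + q_3\cosh\phi.$$

In each case, we have the following:

$$q_0'^2 - q_1'^2 - q_2'^2 - q_3'^2 = q_0^2 - q_1^2 - q_2^2 - q_3^2 \equiv m^2,$$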

and the axis could have been in any direction. We recognise the rotation and boost behaviour of 4-momentum, but the identification would be premature because we do not yet have spacetime.
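These transformation laws can be verified numerically. The Python sketch below assumes $\psi \to U\psi$ with diagonal $U = e^{-i\theta\sigma_3/2}$ (rotation) or $U = e^{\phi\sigma_3/2}$ (boost), so that $V \to UVU^\dagger$, and reads the observables back as $q_\mu = \operatorname{tr}(\sigma_\mu V)$. The half-angle convention and the sample 4-vector are our choices; the invariant $q_0^2 - q_1^2 - q_2^2 - q_3^2$ is preserved in both cases.

```python
import cmath
import math


def covariance(q):
    """V = (q0*I + q1*s1 + q2*s2 + q3*s3) / 2, so that q_mu = tr(sigma_mu V)."""
    q0, q1, q2, q3 = q
    return ((0.5 * (q0 + q3), 0.5 * (q1 - 1j * q2)),
            (0.5 * (q1 + 1j * q2), 0.5 * (q0 - q3)))


def observables(V):
    """Read back (q0, q1, q2, q3) = tr(sigma_mu V)."""
    return ((V[0][0] + V[1][1]).real,
            (V[0][1] + V[1][0]).real,
            (1j * (V[0][1] - V[1][0])).real,
            (V[0][0] - V[1][1]).real)


def transform(q, u0, u1):
    """Apply psi -> U psi with diagonal U = diag(u0, u1), i.e. V -> U V U^dagger."""
    V, u = covariance(q), (u0, u1)
    return observables(tuple(tuple(u[i] * V[i][j] * u[j].conjugate() for j in range(2)) for i in range(2)))


def rotate(q, theta):
    """Rotation about axis 3: U = exp(-i theta sigma_3 / 2)."""
    return transform(q, cmath.exp(-0.5j * theta), cmath.exp(0.5j * theta))


def boost(q, phi):
    """Boost along axis 3: U = exp(phi sigma_3 / 2)."""
    return transform(q, complex(math.exp(0.5 * phi)), complex(math.exp(-0.5 * phi)))


def invariant(q):
    """q0^2 - q1^2 - q2^2 - q3^2."""
    return q[0] ** 2 - q[1] ** 2 - q[2] ** 2 - q[3] ** 2


q = (3.0, 1.0, 0.6, 1.0)
print(rotate(q, 0.9), invariant(rotate(q, 0.9)))
print(boost(q, 0.5), invariant(boost(q, 0.5)))
print(invariant(q))  # 6.64 up to rounding
```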

8. Product Rule C

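Product rule C, multiplication by the pair $(a_1, a_2)$, in operator form is as follows:

$$\begin{pmatrix} a_1 & 0 \\ a_2 & a_1 \end{pmatrix} = r\begin{pmatrix} 1 & 0 \\ t & 1 \end{pmatrix}, \qquad r = a_1, \quad t = a_2/a_1.$$

As before, $r = 1$ defines unit quantity. Instead of complex phase θ, we have pseudo-phase t. Offsets of t leave r unchanged, but the range is unbounded ($-\infty < t < \infty$). Hence, as anticipated, we could not use t to express uncertainty because the uniform prior would be improper. The only choice really was rule A.

Unit operations now take the following form:

$$\begin{pmatrix} 1 & 0 \\ t & 1 \end{pmatrix} = \exp\!\left(t\begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix}\right).$$

This acts as an integrator:

$$\begin{pmatrix} 1 & 0 \\ t & 1 \end{pmatrix}\begin{pmatrix} u \\ U \end{pmatrix} = \begin{pmatrix} u \\ U + t\,u \end{pmatrix},$$

so that U is the integral of u with $dU/dt = u$. Note that the generator $\begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix}$ operates in the real domain. It is not Hermitian, so it applies to scalar operands and not to complex entities such as ψ, and its coefficient t will be real. Rule C meaningfully applies to (real-valued) phase, rephasing θ to $\theta - t\,u$ (the minus sign is conventional).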
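The integrating action of rule C can be seen by chaining unit operators. In this Python sketch, each step applies $(1, dt)$ to $(u, U)$, giving $(u, U + u\,dt)$; chained over many steps this is a left Riemann sum for $\int u\,dt$. Step counts and test functions are arbitrary, and the helper names are our own.

```python
def rule_c(a, b):
    """Rule C product of pairs a = (a1, a2), b = (b1, b2)."""
    return (a[0] * b[0], a[0] * b[1] + a[1] * b[0])


def integrate(u_of_t, t_end, steps):
    """Accumulate U = integral of u dt by chaining unit rule-C operators (1, dt).

    Each step maps (u, U) -> (u, U + dt * u); evaluating u at the start of
    each step makes this a left Riemann sum.
    """
    dt = t_end / steps
    U = 0.0
    for n in range(steps):
        _, U = rule_c((1.0, dt), (u_of_t(n * dt), U))
    return U


print(rule_c((1.0, 0.25), (1.0, 0.5)))          # (1.0, 0.75): pseudo-phases add
print(integrate(lambda t: 3.0, 2.0, 10))        # about 6.0
print(integrate(lambda t: 2.0 * t, 1.0, 1000))  # about 1.0 (left-sum error 0.001)
```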

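Take a qubit with invariant m. Define τ through $\theta = -m\tau/\hbar$ by using m as a clock (ℏ is a constant discussed below). Under Pauli, we have observed that the observables $q_\mu = (q_0, q_1, q_2, q_3)$ transform as a 4-vector with invariant m, which is covariant by convention. Phase is a 2π-periodic scalar that cannot be rescaled because its 2π-periodicity represents interference, the distinguishing observational feature of quantum theory. Hence, $x^\mu = (t, x, y, z)$ must transform as a contravariant 4-vector with invariant τ:

$$\tau^2 = t^2 - x^2 - y^2 - z^2,$$

obeying

$$\theta = -\frac{q_\mu x^\mu}{\hbar} = -\frac{q_0 t - q_1 x - q_2 y - q_3 z}{\hbar},$$

where the arbitrary constant ℏ (which is best set to one) defines our units of t and (x, y, z) relative to m and $(q_1, q_2, q_3)$. With ψ being rephased by $e^{i\theta}$, the Schrödinger equations

$$i\hbar\frac{\partial\psi}{\partial t} = q_0\,\psi, \qquad -i\hbar\,\nabla\psi = (q_1, q_2, q_3)\,\psi$$

are immediate.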
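A minimal numerical check of this last step in Python, assuming one spatial dimension, ℏ = 1 and $\psi = e^{i\theta}$ with $\theta = -(q_0 t - q_1 x)/\hbar$, where $q_0$ and $q_1$ are the time and one spatial component of the Pauli 4-vector: central differences confirm $i\hbar\,\partial\psi/\partial t = q_0\psi$ and $-i\hbar\,\partial\psi/\partial x = q_1\psi$. The evaluation point and step size h are arbitrary.

```python
import cmath

HBAR = 1.0  # "best set to one"


def plane_wave(t, x, q0, q1):
    """psi = exp(i theta) with theta = -(q0 t - q1 x) / hbar (one spatial dimension)."""
    return cmath.exp(-1j * (q0 * t - q1 * x) / HBAR)


def apply_operators(q0, q1, t=0.3, x=0.7, h=1e-6):
    """Estimate (i hbar dpsi/dt) / psi and (-i hbar dpsi/dx) / psi by central differences."""
    psi = plane_wave(t, x, q0, q1)
    dpsi_dt = (plane_wave(t + h, x, q0, q1) - plane_wave(t - h, x, q0, q1)) / (2 * h)
    dpsi_dx = (plane_wave(t, x + h, q0, q1) - plane_wave(t, x - h, q0, q1)) / (2 * h)
    return 1j * HBAR * dpsi_dt / psi, -1j * HBAR * dpsi_dx / psi


print(apply_operators(2.0, 0.5))  # approximately (2+0j, 0.5+0j)
```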

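All that remains is to recognise the symbols: the Pauli observable $q_0$ as energy E, $(q_1, q_2, q_3)$ as momentum p, the invariant m as mass, t as time and (x, y, z) as position.

Moreover, we must identify the Lorentz coefficients within Minkowski spacetime.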

Viewpoint

Traditionally, energy and momentum are obtained from the Schrödinger equations as differentials with respect to time and space. With ABC, it is the other way round—time and space emerge from quantum theory as integrals of energy and momentum.

The ABC derivation dictates why space has three dimensions—and that is the right way round. Space and time are measured by theodolites and clocks, and observations are at root quantum in nature.

We have now developed a unified foundation based on the necessary incorporation of uncertainty with quantity.

Further development would include formalising qubit–qubit interactions (two Pauli matrices) by using the Dirac equation and continuing to quantum field theory. That would allow theoretical demonstration of idealised measurement probes and of how observation can be used both to predict future evolution and to retrodict past behaviour. However, since ABC recovers the standard equations, new insight would not be immediately expected.

9. Speculations

With a consistent formulation based on so few and general assumptions, it seems almost inconceivable that inconsistency could arise in future developments. Would anyone wish to deny uncertainty, or shuffling, or sequencing?

However, there is more to be done. Although spacetime has been constructed with a locally Minkowski metric, inheriting continuity from complex phase, ABC does not require the manifold to be flat. We should presumably expect that curvature will follow physical matter as general relativity indicates. Yet gravitational singularities appear to be inconsistent with the required reversibility of quantum formalism.

One may ask for the source of that reversibility, for it is at least superficially plausible that dropping an object into a black hole is not a reversible process—at least not on a timescale of interest to the experimenter. Since it appears that the fundamental object is not a particle on its Hilbert sphere but a stream represented by a Gaussian distribution, it seems that there is scope for revisiting this question from a revised viewpoint.

Traditional developments of quantum theory and of geometry have accepted the notion of a division algebra [13,14] in which every operation is presumed to have an inverse. However, that may not be true. ABC does not need to assume inverses. They might fail in extreme situations. Assuming division algebras may have been an assumption too far.

One may also speculate on the roles of the unused product rules D and E. Traditionally, these scalar-vector product rules are imposed as part of the axiomatic structure of a vector space. Users are just expected to accept them on the basis of initial plausibility, familiarity and submission to conventional authority. However, ABC did not need to assume them. In the two-parameter quantity and uncertainty space, D and E appear as additional candidate rules. Each of A, B, C, and F had critically important roles. Why would D and E not have critically important roles too?

Inquiry at this depth does not throw up results that we should casually discard. Rules D and E suggest (demand?) the existence of scalar stuff different from the pair-valued stuff of our original inquiry. We might only be able to interact with it through the curvature of space, in other words gravity. Is this dark matter? Might we have predicted this possibility ahead of time if we had thought this through 50 years ago?

Author Contributions

All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Aczél, J. Lectures on Functional Equations and Their Applications; Academic Press: New York, NY, USA, 1966.
2. Aczél, J. The associativity equation re-revisited. In Proceedings of the AIP Conference Proceedings, Jackson Hole, WY, USA, 3–8 August 2003; AIP: New York, NY, USA, 2004; Volume 707, pp. 195–203.
3. Knuth, K.H. Deriving laws from ordering relations. In Proceedings of the AIP Conference Proceedings, Jackson Hole, WY, USA, 3–8 August 2003; AIP: New York, NY, USA, 2004; Volume 707, pp. 204–235.
4. Knuth, K.H.; Skilling, J. Foundations of inference. Axioms 2012, 1, 38–73.
5. Knuth, K.H. The deeper roles of mathematics in physical laws. In Trick or Truth: The Mysterious Connection between Physics and Mathematics; Aguirre, A., Foster, B., Merali, Z., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; pp. 77–90.
6. Goyal, P.; Knuth, K.H.; Skilling, J. Origin of complex quantum amplitudes and Feynman’s rules. Phys. Rev. A 2010, 81, 022109.
7. Skilling, J.; Knuth, K.H. The symmetrical foundation of Measure, Probability and Quantum theories. Ann. Phys. 2019, 531, 1800057.
8. Skilling, J.; Knuth, K.H. The arithmetic of uncertainty unifies quantum formalism and relativistic spacetime. arXiv 2021, arXiv:2104.05395.
9. Born, M. Zur Quantenmechanik der Stoßvorgänge (Quantum mechanics of collision processes). Z. Phys. 1926, 38, 803.
10. Youssef, S. Quantum mechanics as Bayesian complex probability theory. Mod. Phys. Lett. A 1994, 9, 2571–2586.
11. Dirac, P.A.M. A new notation for quantum mechanics. In Mathematical Proceedings of the Cambridge Philosophical Society; Cambridge University Press: Cambridge, UK, 1939; Volume 35, pp. 416–418.
12. Borrelli, A. Dirac’s bra-ket notation and the notion of a quantum state. Styles of Thinking in Science and Technology. In Proceedings of the 3rd International Conference of the European Society for the History of Science, Vienna, Austria, 10–12 September 2008; pp. 361–371.
13. Hurwitz, A. Über die Komposition der quadratischen Formen von beliebig vielen Variablen. In Mathematische Werke; Springer: Berlin/Heidelberg, Germany, 1963; pp. 565–571.
14. Baez, J.C. Division algebras and quantum theory. Found. Phys. 2012, 42, 819–855.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).