Abstract

With the rising impact of climate change on agriculture, insect-borne diseases are proliferating. New vectors need to be monitored as they appear so that preventive action can be taken, reducing both the use of chemical pesticides and treatment costs. Agriculture therefore requires advanced monitoring tools for early pest and disease detection. This work presents a new concept design for a scalable, interoperable and cost-effective smart trap that digitizes daily images of crop-damaging insects and sends them to a cloud server. However, this procedure can consume approximately twenty megabytes of data per day, which increases network infrastructure costs and requires a large bandwidth. Thus, a two-stage system is also proposed to detect and count insects locally. In the first stage, a lightweight approach based on a support vector machine (SVM) model and a visual descriptor is used to detect all regions of interest (ROIs) in the images that contain insects. Instead of the full image, only the ROIs are then sent to the second stage of the pest monitoring system, where they are classified. This approach can reduce the amount of data sent to the cloud server by almost 99%. Additionally, the classifier will flag unclassified insects in each ROI, which can be sent to the cloud for further training. This approach reduces internet bandwidth usage and helps to identify unclassified insects and new threats. In addition, the classifier can be trained with supervised data in the cloud and then deployed to each smart trap. The proposed approach is a promising new method for early pest and disease detection.

1. Introduction

Agricultural production has a significant impact on society and faces serious challenges from pests and diseases. Each year, these account for up to 40 percent of global crop production losses, at a cost to the economy of around USD 220 billion [1]. Given the wide range of transmitting agents and their attack dynamics, it is difficult to define appropriate control methods, especially with conventional systems that require manual analysis. This work describes a new solution that automates the prediction process and enables the earlier detection of insect-borne diseases, so that farmers can reduce treatment costs and the impacts on humans, plants and animals.

One way to control the risk of pest propagation is to monitor the number of invasive insects in a particular region. Nowadays, this is frequently carried out with conventional chromotropic, pheromone and light traps that must be visited by specialized staff, a time-consuming, tedious and expensive task. Moreover, with multiple trap locations and a weekly inspection interval for each one, pest appearance cannot be detected efficiently. These aspects lead to poor spatial and temporal resolution in insect pest monitoring, resulting in delayed input to decision support systems. Consequently, alerts to the farmer are delayed and treatment costs increase [2].

In recent years, smart traps equipped with sensing devices have gained importance due to their ability to monitor pests and diseases automatically. These systems support agricultural production and reduce labor, transport and logistics costs. Several emerging technologies stand out for capturing, detecting, identifying and counting disease-carrying insects. Most of them are designed around a sticky paper that is used to attract the insects. Different configurations have been adopted, with an emphasis on delta and hive shapes. These solutions have been studied and modified to maximize the number of captures in the field using sex pheromones or food lures. Owing to their closed structure, they also have the advantage of protecting the sticky paper and the attractant against environmental conditions. In contrast, solutions that use color as the main attractant tend to be open and to adopt a panel configuration [3]. In an attempt to design a disruptive chromotropic trap, Hadi et al. [4] proposed a new approach using a four-sided sticky paper that takes into account the insects' flight direction and the density of captures on each side to predict pest propagation behavior. Additionally, the authors developed a closing lid that keeps the capture solution inside as long as the rain and wind values do not fall below a defined threshold. This also helps to reduce insect crowding, avoiding overlaps between insects that would have a negative impact on identification through image analysis.

When a smart trap is deployed in an environment with a large population of attracted insects, the sticky board becomes saturated quickly, requiring more frequent manual intervention [5]. To mitigate this problem, Huang et al. [6] proposed a motor-driven e-trap based on a yellow sticky trap that automatically replaces the attractants and avoids insect overlap, allowing long-term operation without manual intervention. The concept is similar to the commercial solution Trapview Self-Cleaning [7]; however, the authors show that their principles for storing and automatically replacing the attractants differ. Along the same line of cleaning mechanisms, Qing et al. [8] developed a novel monitoring system that also avoids insect crowding. After attracting the insects with a light source and killing them as they pass through an infrared heating unit, the system uses a vibration plate to disperse them onto a moving conveyor, which positions them for a photo to be taken. The conveyor then rotates to carry the insects away, dropping them into a recycling box.

Alongside the development of hardware and structures for capturing and acquiring data in monitoring systems, classification and identification methods have been studied as a means of automating the insect counting process. While classification only assigns a region of interest to a class, identification also provides the exact location in the image of the classes under study. Machine learning and deep neural network (DNN) algorithms have been integrated into these systems and have proven to be an important solution, achieving high accuracy in early pest prediction [9]. An important aspect to consider when applying these algorithms is that, even when their evaluation metrics are good, the results must be analyzed critically. In most cases, a pest alert should be sent to the farmer as soon as the first insect appears. This means that a false detection is preferable to an undetected insect; in other words, recall should be prioritized. For this reason, Segalla et al. [10] studied two DNNs, VGG16 and LeNet, and concluded that LeNet outperformed VGG16 because it achieved a better recall during training. The authors also proposed that the algorithms should run locally on the sensing device and that only the number of insects should be sent to the farmer. This reduces the network bandwidth used and makes it possible to use low-power wide-area network (LPWAN) technologies, such as LoRaWAN, to communicate data to the server.
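To make the recall-first argument concrete, the minimal sketch below (plain NumPy, not taken from any of the cited works) finds the highest decision threshold that still reaches a target recall. The function names, scores and labels are hypothetical and serve only to illustrate the trade-off.

```python
import numpy as np

def recall_at(scores, labels, threshold):
    """Fraction of true insects (label 1) whose score reaches the threshold."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    positives = labels == 1
    if not positives.any():
        return 0.0
    return float(np.mean(scores[positives] >= threshold))

def threshold_for_recall(scores, labels, target_recall):
    """Highest decision threshold whose recall still meets target_recall."""
    # Recall can only grow as the threshold drops, so scan candidates from high to low.
    for t in np.unique(scores)[::-1]:
        if recall_at(scores, labels, t) >= target_recall:
            return float(t)
    return float(np.min(scores))

# Hypothetical classifier scores for six regions: three insects, three background.
scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
labels = [1, 1, 1, 0, 0, 0]

default_recall = recall_at(scores, labels, 0.5)      # one insect missed
t_full = threshold_for_recall(scores, labels, 1.0)   # every insect detected
```

On this toy data, the default 0.5 threshold misses one insect (recall 2/3). Lowering the threshold to 0.35 detects all three at the cost of one false alarm (the background region scored 0.6), which is the trade-off argued for above.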

DNN algorithms usually require large training datasets and greater processing power. Because of these requirements, other machine learning approaches have been considered. Both Sütő [11] and Huang et al. [6] run a selective search algorithm to predict potential object locations in the captured image. They then discuss and test solutions based on non-maximum suppression (NMS) to remove overlapping regions and keep those with the highest probability. Sütő [11] concluded that soft-NMS with an exponential penalty function obtained better results, since it showed a lower loss than conventional NMS methods. In turn, Huang et al. [6] assumed the insects' positions and the illumination conditions to be invariant, which allowed them to compute the difference between consecutive frames and detect the presence of a new insect more efficiently. Zhong et al. [12] used a deep learning model, YOLO, to locate the insects in the image and then classified the species with an SVM model. This system extracts local features (shape, texture, color) and makes classification easier, since it requires far fewer training samples. In addition, the authors performed fine counting with the SVM, which corrected some of YOLO's false insect detections and increased the accuracy of the whole monitoring system.
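The difference between conventional NMS and soft-NMS can be illustrated with a short sketch. It implements soft-NMS with a Gaussian penalty, exp(-IoU²/σ), which is the usual exponential form in the soft-NMS literature. Whether this matches Sütő's exact penalty function is an assumption. Boxes are given as (x1, y1, x2, y2), and `sigma` and `score_threshold` are illustrative defaults.

```python
import numpy as np

def iou(box, boxes):
    """Intersection over union between one box and an array of boxes (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def soft_nms(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Soft-NMS: overlapping scores decay by exp(-IoU^2 / sigma) instead of being zeroed."""
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float).copy()
    remaining = list(range(len(scores)))
    keep = []
    while remaining:
        # Take the currently highest-scoring box.
        best = max(remaining, key=lambda i: scores[i])
        keep.append(best)
        remaining.remove(best)
        if not remaining:
            break
        rest = np.array(remaining)
        # Penalize the remaining boxes in proportion to their overlap with the kept one.
        scores[rest] *= np.exp(-(iou(boxes[best], boxes[rest]) ** 2) / sigma)
        # Drop boxes whose score has decayed below the threshold.
        remaining = [i for i in remaining if scores[i] >= score_threshold]
    return keep, scores

boxes = [[0, 0, 10, 10], [1, 0, 11, 10], [50, 50, 60, 60]]
keep, final_scores = soft_nms(boxes, [0.9, 0.8, 0.7])
```

In the example, the second box overlaps the first with an IoU of about 0.82. Conventional NMS with a 0.5 IoU threshold would discard it, whereas soft-NMS only lowers its score (to about 0.21). A genuinely adjacent insect can therefore still be recovered.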

We propose a new concept design for a scalable, interoperable and cost-effective smart trap that extends the pest monitoring application to a wide range of crops and species. A two-stage system is also proposed to detect and count insects locally, thus reducing the amount of data sent to the server. A lightweight approach based on a visual descriptor and a trained SVM classifier detects the ROIs in the images that contain insects. These ROIs are then sent to a second stage, where they can be classified by a DNN. Table 1 presents some of the research works that best reflect recent developments and serve as a comparison for our approach to pest monitoring.

Table 1.

Some research works related to the proposed pest monitoring approach.

2. Methods

The concept of the proposed trap was developed around six fundamental principles: (i) autonomy; (ii) energy self-sufficiency without human intervention; (iii) extensibility to a wide range of crops and species; (iv) modularity and interoperability with other devices; (v) provision of the information necessary for the early and efficient prediction of a pest, as well as alerts for the appearance of new species; and (vi) cost-effectiveness. To ensure these principles, the trap was designed from scratch, in terms of both hardware and structure, using low-cost components. Regarding the hardware, an intelligence unit was integrated into the trap using a low-power microprocessor, the Raspberry Pi Zero 2 W. This lightweight and cost-effective board was chosen to provide sufficient processing power at a lower energy cost. It also has a large set of peripherals that allow it to communicate with numerous devices, such as cameras, sensors and actuators. In addition, it has a wireless module that allows it to connect to any network or even act as an access point for other devices, facilitating their interoperability and data communication with the trap, which acts as an IoT gateway. In this way, all the data acquired on site by different client devices can be shared over the internet through a single point, reducing hardware and communication costs. Two modules were also added to reinforce communication with other devices and over the internet: (i) a LoRa transceiver, RFM98 (Seeed Technology Co., Ltd.), which provides low-data-rate communication over a long range, and (ii) an LTE module, SIM7600E (SIMCom Wireless Solutions Limited), which makes it possible to exchange data with the server at a high data rate. To prevent these components from reducing the autonomy of the system, each communication module can be switched on or off independently at any time.
Additionally, a low-power microcontroller, ATMEGA32U4-AU (Microchip Technology), is used to wake up the entire system only when needed. This wake-up period is fully configurable and can be adapted to each case. Sensors and actuators that require real-time or more frequent operation can also be added without running the whole system. The whole hardware system is powered by a 12 V, 7 Ah battery, which is recharged automatically by a monolithic step-down battery charger, the LT3652 (Analog Devices Inc.). The LT3652 employs an input voltage regulation loop to keep a 20 W solar panel at peak output power: if the input voltage falls below a programmed level, it automatically reduces the charge current. The LT3652 also has an auto-recharge feature that starts a new charging cycle if the battery voltage falls 2.5% below the programmed value. Once a charging cycle finishes, the charger enters a low-current standby mode.
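The figures above lend themselves to a quick power budget. The sketch below is a minimal illustration only. It assumes a programmed charge voltage of 14.4 V and a duty cycle of 3 W for 120 s every hour with 0.05 W in sleep. None of these values are stated in the paper, and the helper names are hypothetical.

```python
# Nominal figures from the text; the programmed voltage and duty cycle below are assumptions.
BATTERY_VOLTAGE_V = 12.0
BATTERY_CAPACITY_AH = 7.0
AUTO_RECHARGE_DROP = 0.025  # LT3652 restarts charging 2.5% below the programmed voltage

def battery_energy_wh(voltage_v=BATTERY_VOLTAGE_V, capacity_ah=BATTERY_CAPACITY_AH):
    """Nominal stored energy in watt-hours."""
    return voltage_v * capacity_ah

def recharge_threshold_v(programmed_v):
    """Battery voltage at which the charger's auto-recharge starts a new cycle."""
    return programmed_v * (1.0 - AUTO_RECHARGE_DROP)

def average_power_w(active_w, sleep_w, active_s, period_s):
    """Mean power when the microcontroller wakes the system for active_s every period_s."""
    return (active_w * active_s + sleep_w * (period_s - active_s)) / period_s

def autonomy_days(avg_power_w, energy_wh):
    """Days on battery alone (no solar input, full depth of discharge: optimistic)."""
    return energy_wh / avg_power_w / 24.0

energy = battery_energy_wh()                  # 12 V x 7 Ah = 84 Wh nominal
restart_v = recharge_threshold_v(14.4)        # assumed 14.4 V programmed voltage
avg = average_power_w(active_w=3.0, sleep_w=0.05, active_s=120, period_s=3600)  # hypothetical
days = autonomy_days(avg, energy)
```

With these hypothetical numbers, the average draw is about 0.15 W and the battery alone would last a little over three weeks. The 20 W panel then only needs to cover that average plus charging losses. A 12 V, 7 Ah pack is typically sealed lead-acid (again an assumption), and such batteries should not be deeply discharged, so usable autonomy is lower.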

In line with the principle of extending the application to a wide range of crops and species, the trap structure was designed so that its configuration can adapt to a delta shape working with sex pheromones, as well as to color attractants. This is achieved with a servo motor that switches between three positions relative to the camera: (i) standby, with the trap in delta configuration, the pheromone and a white sticky paper inside and a chromotropic sticky paper on the bottom; (ii) image acquisition of the delta trap; and (iii) image acquisition of the chromotropic trap. The three positions are illustrated in Figure 1 on the left, in the middle and on the right, respectively.

Figure 1.

Positions adopted by the smart trap in relation to the camera.

A second servo motor was placed at the front with an attached brush that covers the camera lens while the trap is in the standby position, preventing the entry of dust, insects, water and fertilizers. The camera is the Raspberry Pi Camera Module v2, whose 8 MP resolution is important for capturing small insects and making their identification possible. The 3D model of the trap helped to refine the concept and was used to generate the models for printing. The entire trap, including its built-in housing, is made of PETG, a polymer chosen for its thermal and mechanical resistance. Several prototypes have already been installed in vineyards, orchards and olive groves, as shown in Figure 2. All of them are connected to a weather station that measures air temperature and humidity, as well as the spectrum of the radiation reaching the crop.

Figure 2.

Smart traps installed in an olive grove (left), orchard (middle) and vineyard (right).

Since mobile communications proved to be quite unstable in locations with a weak signal, the option of performing insect detection and counting locally in the trap was considered. Given the low-power microprocessor used and the small amount of data acquired, a lightweight approach that can easily be retrained was adopted. The proposed algorithm therefore uses an SVM with color and texture features, obtained by concatenating the uniform Local Binary Pattern (LBP) histogram and the Hue, Saturation, Value (HSV) histogram of the image. SVM algorithms are appealing, especially for high-dimensional non-linear classification problems. To train an SVM, the dataset is divided into a training set and a test set, each consisting of data instances. Each instance contains a class label and several feature values. The SVM aims to build a predictive model that can classify the instances in the test set based solely on their features. The SVM is a non-parametric supervised classification algorithm whose configuration depends on the choice of kernel function, which defines the transformed space in which the decision surface is built. In a binary classification task, the goal is to find the optimal separating hyperplane, that is, the one that maximizes the margin between the two classes.
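A minimal scikit-learn sketch of the training step described above follows. It uses synthetic Gaussian feature vectors in place of the real LBP/HSV descriptors, and the data, sizes and variable names are illustrative assumptions. `SVC`, `train_test_split` and `predict_proba` are standard scikit-learn APIs. `probability=True` is enabled because a per-region insect probability is useful for comparing neighboring detections.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

rng = np.random.default_rng(0)

# Synthetic stand-in for real feature vectors: label 1 = insect, 0 = no insect.
n_per_class, n_features = 200, 20
X = np.vstack([
    rng.normal(0.0, 1.0, size=(n_per_class, n_features)),
    rng.normal(1.5, 1.0, size=(n_per_class, n_features)),
])
y = np.array([0] * n_per_class + [1] * n_per_class)

# Training and test sets (80:20), keeping class proportions in both.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

# RBF-kernel SVM; probability=True adds calibrated class probabilities.
clf = SVC(kernel="rbf", probability=True, random_state=0)
clf.fit(X_train, y_train)

accuracy = clf.score(X_test, y_test)
# Column 1 of predict_proba corresponds to class 1 (insect), since classes_ is [0, 1].
insect_probability = clf.predict_proba(X_test[:1])[0, 1]
```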

The LBP method is flexible and adaptable to many real-world problems; it is simple and computationally efficient for feature extraction. LBP compares the grey-level value of each central pixel with those of its neighboring pixels in a predefined grid. This comparison yields binary patterns that capture the local texture and reveal information hidden in the structure of the image.
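The LBP computation can be sketched in plain NumPy. This version assumes 8 neighbors at radius 1 and the rotation-invariant uniform mapping (10 labels), which is one common reading of "uniform LBP". The paper does not state which variant, radius or neighborhood size it uses, and the function names are hypothetical.

```python
import numpy as np

# 8 neighbors at radius 1, listed in circular (clockwise) order as (dy, dx).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_uniform(gray):
    """Rotation-invariant uniform LBP labels (0..9) for the interior pixels of a grey image."""
    g = np.asarray(gray, dtype=float)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # One binary plane per neighbor: 1 where the neighbor is >= the central pixel.
    bits = np.stack([
        (g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx] >= center).astype(int)
        for dy, dx in OFFSETS
    ])  # shape (8, h - 2, w - 2)
    # Count 0/1 transitions around the circle.
    transitions = np.sum(bits != np.roll(bits, 1, axis=0), axis=0)
    ones = bits.sum(axis=0)
    # Uniform patterns (<= 2 transitions) are labeled by their number of 1s (0..8);
    # every non-uniform pattern shares label 9.
    return np.where(transitions <= 2, ones, 9)

def lbp_histogram(gray):
    """Normalized 10-bin histogram of the uniform LBP labels."""
    codes = lbp_uniform(gray)
    hist = np.bincount(codes.ravel(), minlength=10).astype(float)
    return hist / hist.sum()
```

A flat patch maps entirely to label 8 (all neighbors equal the center), an isolated bright spot maps to label 0, and an alternating pattern around the center is non-uniform (label 9).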

The HSV color space represents perceptual color relationships and decouples color-related features (H and S) from the brightness information (V). This separation allows attention to be focused only on the perceptual properties of color, which can be highly informative for classification tasks.
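A minimal sketch of the HSV histogram follows, along with its concatenation with an LBP histogram into a single descriptor. The color conversion uses the standard-library `colorsys` module. The 8 bins per channel and the per-channel (rather than joint) histogram are assumptions, and `hsv_histogram` and `feature_vector` are hypothetical helper names.

```python
import colorsys
import numpy as np

def hsv_histogram(rgb, bins=8):
    """Concatenated H, S and V histograms of an RGB uint8 image, each normalized to sum to 1."""
    pixels = np.asarray(rgb, dtype=float).reshape(-1, 3) / 255.0
    # colorsys returns H, S and V in [0, 1].
    hsv = np.array([colorsys.rgb_to_hsv(r, g, b) for r, g, b in pixels])
    hists = []
    for channel in range(3):
        counts, _ = np.histogram(hsv[:, channel], bins=bins, range=(0.0, 1.0))
        hists.append(counts / counts.sum())
    return np.concatenate(hists)

def feature_vector(lbp_hist, hsv_hist):
    """Color-texture descriptor: LBP histogram followed by the HSV histogram."""
    return np.concatenate([np.asarray(lbp_hist, dtype=float), hsv_hist])
```

For a pure red patch, all pixels fall in the first hue bin and the last saturation and value bins. Concatenating this 24-bin vector with a 10-bin LBP histogram gives a 34-dimensional input for the SVM.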

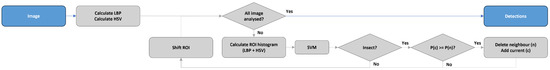

Figure 3 shows the flowchart of the main tasks of the proposed algorithm, which detects insects in the input image and outputs the detections. The algorithm starts by computing the LBP and HSV representations of the image. It then systematically scans the image using a predefined number of windows and a predefined step size. For each window that the SVM classifies as an insect, the algorithm checks the neighborhood: if the probability of the current region is greater than those of the neighboring detections, the neighboring detections are eliminated and the current detection takes precedence. This verification prevents several bounding boxes from detecting the same insect. Only the set of new detections is sent to the cloud server, which reduces the bandwidth used.

Figure 3.

Flowchart of the algorithm for detection of insects using SVM.
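The scan-and-suppress loop of Figure 3 can be sketched as follows. This is a minimal illustration, not the authors' code, and it rests on three assumptions. First, the classifier is abstracted as a hypothetical callable `insect_probability(box)`, for instance an SVM's predicted insect probability on the window's LBP and HSV features. Second, a single window size is used. Third, "neighborhood" is taken to mean any previously accepted detection that overlaps the current window.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)

def overlaps(a: Box, b: Box) -> bool:
    """True if two axis-aligned boxes share any area."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah

def sliding_window_detect(
    image_w: int,
    image_h: int,
    window: int,
    step: int,
    insect_probability: Callable[[Box], float],
    threshold: float = 0.5,
) -> List[Tuple[Box, float]]:
    """Scan the image; keep an insect window only if it beats every overlapping detection."""
    detections: List[Tuple[Box, float]] = []
    for y in range(0, image_h - window + 1, step):
        for x in range(0, image_w - window + 1, step):
            box = (x, y, window, window)
            p = insect_probability(box)
            if p < threshold:
                continue  # classified as background
            neighbors = [d for d in detections if overlaps(d[0], box)]
            if all(p > q for _, q in neighbors):
                # The current window takes precedence: drop the weaker neighbors.
                detections = [d for d in detections if not overlaps(d[0], box)]
                detections.append((box, p))
    return detections

def toy_probability(box: Box) -> float:
    """Hypothetical score that peaks for windows centered on an insect at (60, 60)."""
    x, y, w, h = box
    d = ((x + w / 2 - 60) ** 2 + (y + h / 2 - 60) ** 2) ** 0.5
    return max(0.0, 1.0 - d / 50.0)

result = sliding_window_detect(200, 200, window=40, step=20, insect_probability=toy_probability)
```

In the toy run, a weaker overlapping window found earlier in the scan is replaced when the window centered on the insect is reached, and later overlapping windows with lower scores are rejected. Only one detection survives.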

3. Results

The classification and detection methods described in Section 2 were trained and tested on four datasets acquired in the field with the developed monitoring system: two from an olive grove in Mirandela (OG1 and OG2), with X and Y insect images, respectively, and two from an orchard in Alcobaça (OC1 and OC2), with X and Y insect images, respectively. Each dataset was built from images acquired successively over a certain period without changing the sticky paper. This means that the last image in each dataset is the accumulated result of several days of capture, which led to densely populated images with possible insect overlap.

To obtain data for the SVM, random cut-outs were extracted from the final image of each dataset. Each cut-out was labeled (insect or no insect), and its feature vector, the concatenation of the LBP and HSV histograms, was computed. The resulting dataset contained 12,500 distinct images, split 80:20 into a training set (10,000) and a test set (2500). Since the SVM can use different kernels suited to different cases, several models were trained on this training set to find the SVM best suited to insect detection. Table 2 shows the classification results on the test set obtained by training the SVM with different kernels. The radial basis function (RBF) and polynomial (poly) kernels perform best on the chosen metrics for both classes, with results above 85%. The metrics used are explained in detail by Pinheiro et al. [15].

Table 2.

Classification results of the test set obtained with SVM with different kernels.

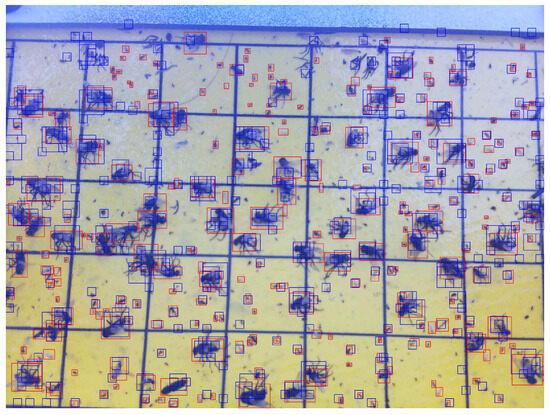
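The kernel comparison described above can be sketched with scikit-learn on a held-out 20% split. The data come from `make_classification` as a synthetic stand-in for the real 12,500 feature vectors. Per-class F1 is used as one example metric; the full metric set is described in [15].

```python
from sklearn.datasets import make_classification
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic stand-in for the insect / no-insect feature vectors (not the paper's data).
X, y = make_classification(n_samples=1000, n_features=30, n_informative=10, random_state=0)

# 80:20 split, stratified so both classes keep their proportions in the test set.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

results = {}
for kernel in ("linear", "poly", "rbf", "sigmoid"):
    clf = SVC(kernel=kernel).fit(X_train, y_train)
    # Per-class F1 scores, ordered as [class 0, class 1].
    results[kernel] = f1_score(y_test, clf.predict(X_test), average=None)

# Pick the kernel with the best mean per-class F1 on this synthetic data.
best_kernel = max(results, key=lambda k: results[k].mean())
```

On real histogram features, feature scaling (for example, `StandardScaler` in a `Pipeline`) and tuning of `C` and `gamma` would usually come before any kernel comparison.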

The RBF kernel was selected since it achieved the best metrics and is less susceptible to overfitting. Figure 4 presents the results of the detection algorithm using the trained SVM with the RBF kernel. The algorithm was tested with three different window sizes and a step size of half the window size. It identifies most of the insects captured by the trap, with some associated false positives. Some of the windows overlap; this issue was not considered when the algorithm was developed.

Figure 4.

Detection algorithm results using the trained SVM with an RBF kernel. Red bounding boxes show the ground truth; blue bounding boxes show the algorithm's predictions.
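Window size and step directly set how many SVM evaluations are needed per image, which matters on a low-power board. The sketch below gives a minimal count. It assumes the full 3280 × 2464 still resolution of the Raspberry Pi Camera Module v2 and a step of half the window, as in the tests described above. The three window sizes are hypothetical, since the paper does not list them.

```python
def window_count(image_w, image_h, window, step):
    """Number of sliding-window positions that fit entirely inside the image."""
    if window > image_w or window > image_h:
        return 0
    return ((image_w - window) // step + 1) * ((image_h - window) // step + 1)

# Raspberry Pi Camera Module v2 still resolution (8 MP).
W, H = 3280, 2464
# Hypothetical window sizes, each with a step of half the window.
counts = {w: window_count(W, H, w, w // 2) for w in (64, 100, 150)}
```

The count grows roughly with the inverse square of the window size. Going from 100 to 64 pixel windows raises it from 3072 to 7676 positions, so the smallest window dominates the processing time.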

To quantify the proposed bandwidth optimization, we compared the amount of data transmitted when sending a full image daily with the amount that would be transmitted if the proposed detection algorithm were applied locally. The analysis assumed a mean size of 10 MB per full image and 13.1 KB per insect (100 × 100 pixels). The results are presented in Table 3.

Table 3.

Bandwidth optimization with the proposed algorithm.
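The comparison reduces to simple arithmetic. The sketch below uses the mean sizes stated above and assumes decimal units (1 MB = 1000 KB) and one full image per comparison; the helper names are hypothetical.

```python
FULL_IMAGE_KB = 10_000  # 10 MB per full image (1 MB = 1000 KB assumed)
INSECT_ROI_KB = 13.1    # mean size of one 100 x 100 pixel insect crop

def bandwidth_reduction(new_insects, full_image_kb=FULL_IMAGE_KB, roi_kb=INSECT_ROI_KB):
    """Fraction of data saved by sending only the insect ROIs instead of the full image."""
    return 1.0 - (new_insects * roi_kb) / full_image_kb

def max_insects_for(target_reduction, full_image_kb=FULL_IMAGE_KB, roi_kb=INSECT_ROI_KB):
    """Largest number of ROIs per image that still meets the target reduction."""
    return int((1.0 - target_reduction) * full_image_kb // roi_kb)

saving_5 = bandwidth_reduction(5)     # five new insects in one image
limit_99 = max_insects_for(0.99)      # ROIs allowed while keeping a 99% saving
```

Under these assumptions, five new insects per image give a saving of about 99.3%. A saving of at least 99% holds as long as no more than seven insect crops are sent per full image, and with more detections the saving shrinks proportionally.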

4. Discussion and Conclusions

Automatic pest monitoring systems have evolved significantly over the last ten years. Prototypes with different configurations and attraction methods have been developed to better adapt to different crops and maximize the number of captures. In most cases, the search for the optimal configuration has been directly tied to the crop under study and, consequently, to the pest being identified. In this work, we have proposed a more versatile configuration whose application can be extended to a wide range of crops and species, since it can work with sex pheromones, chromotropic sticky papers or both simultaneously.

During continuous tests carried out in vineyards, orchards and olive groves, two main difficulties were observed: (i) the need to change the sticky paper at short intervals due to insect overlap and the movement of parts of insect bodies caused by environmental conditions; and (ii) the need to send full images to the server over mobile data in areas with a weak signal, resulting in daily losses of information that can compromise the early warning of a pest. Regarding the first difficulty, we consider it important to add a mechanism for cleaning or automatically changing the sticky paper, as suggested in the literature. Although this adds mechanical complexity and production cost to the trap, it reduces labor costs and should improve the results of the identification algorithms. Regarding the second difficulty, we decided to implement the insect identification process locally in the trap. Since we had a low-power microprocessor and small datasets, we opted for a lightweight approach based on SVM models to classify regions as insect or non-insect. Thus far, the models have been tested and continually retrained with the images sent to the server and subsequently annotated, achieving promising performance. With the SVM model integrated into an algorithm that identifies new insects in the image, we are able to send only the regions of interest, thus identifying new threats and reducing the bandwidth used by almost 99%.

A system that combines a mechanism for cleaning and automatically changing attractants with a method for accurately detecting the presence of insect-borne diseases in the crop will provide the agricultural sector with a long-term monitoring process that does not require manual operation. Most of the time currently spent travelling to and working on insect monitoring will no longer be necessary, and confidence in the early prediction of diseases will improve. In addition, it will be possible to efficiently predict the appearance of new insects that, due to climate change, move into regions where they are not yet recorded in the databases.

The proposed approach has been evaluated in this work and is expected to have an impact on real-world pest monitoring in agriculture. This impact rests on its scalability across different crops and attractants, its interoperability with other sensing devices and its cost-effectiveness. Together, these allow new insect-borne diseases to be identified rapidly and data and alerts to be delivered to the farmer with optimized bandwidth.

Author Contributions

Conceptualisation, P.M., I.P., F.T., T.P. and F.S.; investigation, P.M., I.P., F.T. and F.S.; methodology, P.M., I.P., F.T. and F.S.; validation, P.M., I.P., F.T. and F.S.; supervision, T.P. and F.S.; writing—original draft, P.M. and I.P.; writing—review and editing P.M., I.P., F.T., T.P. and F.S. All authors read and agreed to the published version of the manuscript.

Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation program under grant agreement No. 857202. Disclaimer: The sole responsibility for the content of this publication lies with the authors. It does not necessarily reflect the opinions of the European Commission (EC). The EC is not responsible for any use that may be made of the information contained therein.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- FAO. Climate Change Fans Spread of Pests and Threatens Plants and Crops; New FAO Study; FAO: Rome, Italy, 2021.

- Preti, M.; Verheggen, F.; Angeli, S. Insect pest monitoring with camera-equipped traps: Strengths and limitations. J. Pest Sci. 2021, 94, 203–217.

- Tirelli, P.; Borghese, N.; Pedersini, F.; Galassi, G.; Oberti, R. Automatic monitoring of pest insects traps by Zigbee-based wireless networking of image sensors. In Proceedings of the 2011 IEEE International Instrumentation and Measurement Technology Conference, Hangzhou, China, 10–12 May 2011; pp. 1–5.

- Hadi, M.K.; Kassim, M.S.M.; Wayayok, A. Development of an automated multidirectional pest sampling detection system using motorized sticky traps. IEEE Access 2021, 9, 67391–67404.

- Diller, Y.; Shamsian, A.; Shaked, B.; Altman, Y.; Danziger, B.C.; Manrakhan, A.; Serfontein, L.; Bali, E.; Wernicke, M.; Egartner, A.; et al. A real-time remote surveillance system for fruit flies of economic importance: Sensitivity and image analysis. J. Pest Sci. 2023, 96, 611–622.

- Huang, R.; Yao, T.; Zhan, C.; Zhang, G.; Zheng, Y. A Motor-Driven and Computer Vision-Based Intelligent E-Trap for Monitoring Citrus Flies. Agriculture 2021, 11, 460.

- Trapview. 2023. Available online: https://trapview.com/ (accessed on 24 September 2023).

- Qing, Y.; Jin, F.; Jian, T.; Xu, W.G.; Zhu, X.H.; Yang, B.J.; Jun, L.; Xie, Y.Z.; Bo, Y.; Wu, S.Z.; et al. Development of an automatic monitoring system for rice light-trap pests based on machine vision. J. Integr. Agric. 2020, 19, 2500–2513.

- Teixeira, A.C.; Ribeiro, J.; Morais, R.; Sousa, J.J.; Cunha, A. A Systematic Review on Automatic Insect Detection Using Deep Learning. Agriculture 2023, 13, 713.

- Segalla, A.; Fiacco, G.; Tramarin, L.; Nardello, M.; Brunelli, D. Neural networks for pest detection in precision agriculture. In Proceedings of the 2020 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Trento, Italy, 4–6 November 2020; pp. 7–12.

- Sütő, J. Embedded system-based sticky paper trap with deep learning-based insect-counting algorithm. Electronics 2021, 10, 1754.

- Zhong, Y.; Gao, J.; Lei, Q.; Zhou, Y. A vision-based counting and recognition system for flying insects in intelligent agriculture. Sensors 2018, 18, 1489.

- Brunelli, D.; Polonelli, T.; Benini, L. Ultra-low energy pest detection for smart agriculture. In Proceedings of the 2020 IEEE SENSORS, Rotterdam, The Netherlands, 25–28 October 2020; pp. 1–4.

- Pérez Aparicio, A.; Llorens Calveras, J.; Rosell Polo, J.R.; Martí, J.; Gemeno Marín, C. A cheap electronic sensor automated trap for monitoring the flight activity period of moths. Eur. J. Entomol. 2021, 118, 315–321.

- Pinheiro, I.; Aguiar, A.; Figueiredo, A.; Pinho, T.; Valente, A.; Santos, F. Nano Aerial Vehicles for Tree Pollination. Appl. Sci. 2023, 13, 4265.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).