Abstract

This article aims to limit the rule explosion problem affecting market basket analysis (MBA) algorithms. More specifically, it shows that, if the minimum support threshold is specified not explicitly but in terms of the number of items to consider, an upper bound for the number of generated association rules can be computed. Moreover, if the results of previous analyses (with different thresholds) are available, this information can also be taken into account, refining the upper bound and also making it possible to compute lower bounds. The support determination technique is implemented as an extension to the Apriori algorithm but may be applied to any other MBA technique. Tests are executed on benchmarks and on a real problem provided by one of the major Italian supermarket chains, regarding more than transactions. Experiments show, on these benchmarks, that the rate of growth in the number of rules between tests with increasingly more permissive thresholds ranges from to with the proposed method, while it would range from to if the traditional thresholding method were applied.

1. Introduction

Market basket analysis (MBA) denotes a group of techniques used to process a transactional dataset in order to extract association rules whose relevance and reliability exceed given minimum values.

Generally, users of any software addressing the field of MBA belong to one of the two following categories:

- Data scientists, experts in algorithms and programming, but lacking domain-specific knowledge related to the MBA application;

- Managers, experts in their own application domain, but (possibly) lacking specific competencies in designing and interpreting association rule mining algorithms.

Data scientists and managers usually cooperate [1], combining their skills for a more effective analysis. Automatically distinguishing relevant from irrelevant data is crucial for data scientists, both to reduce the computational cost of MBA algorithms, making it possible to generate useful association rules even for big datasets, and to provide managers with succinct, actionable information. Well-known MBA algorithms, such as Apriori [2,3], FP-Growth [4] and Eclat [5], filter data according to a relevance threshold: items (and itemsets) whose number of occurrences in the dataset does not reach this threshold are discarded.

Still, data scientists may have to deal with datasets whose characteristics are unknown. It is then challenging to find an effective threshold that is guaranteed to filter data as desired. Moreover, some datasets may involve a considerable number of items, and the support levels of many of them may differ only slightly (e.g., supermarket purchases). In such cases, a small change in the support threshold significantly alters the set of association rules, i.e., the algorithm is sensitive to the choice of this parameter.

To face this problem, an alternative support threshold computation technique is proposed: the number of items to consider (most frequent ones first) is specified, and the corresponding support threshold is automatically determined and applied.

It will be proven that, by employing this technique, results are more predictable, even for a user lacking domain-specific knowledge. More specifically, an upper bound for the number of generated association rules can be computed, and this bound may be refined by taking into account the results of other analyses of the same dataset performed with a different parametrization.

To validate the effectiveness of the proposed technique, tests are executed on well-known benchmarks (such as the retail dataset [6]), as well as on real data concerning 552,626 transactions, provided by a big Italian supermarket chain whose name has to be kept confidential.

The paper is organized as follows: Section 1.1 introduces market basket analysis terminology and Section 1.2 is dedicated to related work. Section 2 describes the proposed automatic support threshold computation technique and quantifies the bound that it is possible to impose on the number of generated association rules by employing it. Section 3 presents experimental results and Section 4 examines the current limitations of the approach, as well as future work. Conclusions follow.

1.1. Definitions

Denote with $I = \{x_1, \ldots, x_n\}$ the universe constituted by the items $x_1, \ldots, x_n$ (being $n \in \mathbb{N}$), and with $B \subseteq I$ an itemset. The cardinality $|B|$ of the itemset is equal to the number of items $x_i$ such that $x_i \in B$ for $i = 1, \ldots, n$. If $|B| = 1$, $B$ is defined as an item.

Let $\mathcal{D} = \{T_1, \ldots, T_m\}$ be a dataset constituted by a set of $m$ transactions (with $m \in \mathbb{N}$), such that $T_j \subseteq I$ for each $j = 1, \ldots, m$; that is, each transaction constitutes an itemset. An occurrence of the itemset $B$ in $\mathcal{D}$ takes place if, for at least one value of $j$ between 1 and $m$, $B \subseteq T_j$. The number of occurrences of $B$ in $\mathcal{D}$ is then equal to the number of different $j$ such that $B \subseteq T_j$.

An association rule $r$ is defined as

$$r: A \rightarrow C$$

where $A, C \subseteq I$ are non-empty and $A \cap C = \emptyset$. The rule $r$ identifies a relation between the occurrence of the itemset $A$ and that of $C$; usually, $A$ is called the antecedent and $C$ the consequent. A rule $r$ is then univocally associated with the itemsets $A$ and $C$ and so, necessarily, its cardinality is

$$|r| = |A \cup C| = |A| + |C| \geq 2.$$

It is important to note that each frequent itemset $B$ such that $|B| > 1$ may generate multiple association rules $r: A \rightarrow B \setminus A$, according to the choice of $A$. More specifically, up to

$$\sum_{k=1}^{|B|-1} \binom{|B|}{k} = 2^{|B|} - 2 \tag{1}$$

association rules can be generated. In fact, the antecedent of the rule can contain from one to $|B| - 1$ different items, chosen inside the itemset $B$. Hence, at most $\binom{|B|}{k}$ distinct association rules whose antecedent part has cardinality $k$ can be derived.
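To make the count in (1) concrete, the following Python sketch enumerates all the rules that a single itemset can generate. The function name `rules_from_itemset` is hypothetical and not part of any library; each non-empty proper subset of $B$ is taken once as the antecedent, and the remaining items form the consequent.

```python
from itertools import combinations


def rules_from_itemset(itemset):
    """Enumerate every rule A -> C where A and C are non-empty, disjoint,
    and their union is the whole itemset."""
    items = sorted(itemset)
    rules = []
    for k in range(1, len(items)):            # antecedent cardinality 1 .. |B| - 1
        for antecedent in combinations(items, k):
            consequent = tuple(i for i in items if i not in antecedent)
            rules.append((antecedent, consequent))
    return rules


B = {"bread", "milk", "eggs"}
for a, c in rules_from_itemset(B):
    print(a, "->", c)
print(len(rules_from_itemset(B)))             # 6 = 2**3 - 2
```

The inner loop produces exactly $\binom{|B|}{k}$ rules for each antecedent size $k$, which is the per-size count stated above.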

Three measures, defined in terms of the occurrences of $A$ and $C$, characterize the association rule $r$ as follows:

- The support $s(B)$ of an itemset $B$ is the number of occurrences of the itemset $B$ in $\mathcal{D}$; $p(B) = s(B)/m$ measures the empirical probability of the occurrence of $B$ in $\mathcal{D}$, where $m$ denotes the total number of transactions in $\mathcal{D}$. Considering that any rule $r: A \rightarrow C$ is univocally associated with the itemset $A \cup C$, its support and empirical probability of occurrence, respectively denoted as $s(r)$ and $p(r)$, are equal to $s(A \cup C)$ and $p(A \cup C)$.

- The confidence of $r$ is the ratio between $s(r)$ and the number $s(A)$ of occurrences of $A$ in $\mathcal{D}$:

$$\mathrm{conf}(r) = \frac{s(A \cup C)}{s(A)} \tag{2}$$

It is a measure of the reliability of $r$ and represents how often the consequent occurs when the antecedent is verified.

- The lift of $r$ is the ratio between the empirical probability that $r$ occurs in $\mathcal{D}$ and its expected value in case $A$ and $C$ were independent. The probability of occurrence for an itemset in $\mathcal{D}$ is expressed as the ratio between its support and the total number of transactions $m$, then:

$$\mathrm{lift}(r) = \frac{p(A \cup C)}{p(A)\,p(C)} = \frac{m\, s(A \cup C)}{s(A)\, s(C)} \tag{3}$$

where $s(C)$ denotes the number of occurrences of $C$ in $\mathcal{D}$. Lift represents a measure of correlation between the occurrences of itemsets $A$ and $C$; it is greater than 1 in case a positive correlation exists and lower than 1 for negative ones.
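A minimal sketch of the three measures, assuming transactions are given as Python sets; `support`, `confidence` and `lift` are illustrative helper names, not an existing API. Division by zero is not handled, since rules are only built from frequent itemsets, whose sub-itemsets have positive support.

```python
def support(itemset, transactions):
    """s(B): number of transactions containing every item of B."""
    b = set(itemset)
    return sum(1 for t in transactions if b <= set(t))


def confidence(antecedent, consequent, transactions):
    """conf(A -> C) = s(A u C) / s(A)."""
    both = set(antecedent) | set(consequent)
    return support(both, transactions) / support(antecedent, transactions)


def lift(antecedent, consequent, transactions):
    """lift(A -> C) = m * s(A u C) / (s(A) * s(C))."""
    m = len(transactions)
    both = set(antecedent) | set(consequent)
    return m * support(both, transactions) / (
        support(antecedent, transactions) * support(consequent, transactions)
    )


transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]
print(support({"bread", "milk"}, transactions))        # 2
print(confidence({"bread"}, {"milk"}, transactions))   # 2/3
print(lift({"bread"}, {"milk"}, transactions))         # 8/9 < 1: negative correlation
print(lift({"butter"}, {"bread"}, transactions))       # 4/3 > 1: positive correlation
```

In this toy dataset bread and milk co-occur less often than independence would predict, while every basket containing butter also contains bread.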

1.2. Related Work

The technique described in the present article has been implemented as an extension of an existing algorithm, namely Apriori [2,3], which is widely employed in the MBA field, including in recent studies [7,8].

The Apriori algorithm is constituted by a loop; at each step $k$, all the itemsets of cardinality $k$ with support at least equal to a threshold value $t \in \mathbb{N}$ are identified. The itemsets fulfilling this condition are defined as frequent itemsets, and the set composed of them is referred to as $F_k$.

The value of $k$ is initialized to 1 and increased after each iteration; the loop ends if $k$ exceeds the value $y \in \mathbb{N}$ of maximum cardinality specified by the user or if $F_k = \emptyset$.

Each iteration is composed of two steps:

- Candidate generation: the output of this step is the candidate set $C_k$. $C_1$ is composed of all the items in $I$; $C_k$ with $k > 1$ is composed of all the itemsets of cardinality $k$ such that, if any item is removed from them, the resulting itemset belongs to $F_{k-1}$.

- Pruning: the support of each itemset in $C_k$ is computed. If it is at least equal to $t$, the itemset is included in $F_k$.

Finally, all the itemsets belonging to $\bigcup_{k=2}^{y} F_k$ are examined to identify all the association rules associated with them, according to the choice of the antecedent part. All these rules, possibly filtered by a minimum confidence and/or lift threshold set by the user, constitute the output of the Apriori algorithm.
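The loop above can be sketched in Python as follows. This is a simplified, unoptimized illustration of the Apriori scheme as described here (candidate generation, pruning against $t$, rule extraction with an optional lift filter), not the authors' implementation; the names `apriori` and `generate_rules` and the `min_lift` parameter are hypothetical.

```python
from itertools import combinations


def apriori(transactions, t, y):
    """Return {k: F_k} where F_k maps each frequent k-itemset to its support."""
    transactions = [frozenset(tr) for tr in transactions]
    candidates = {frozenset([i]) for tr in transactions for i in tr}  # C_1
    frequent = {}
    k = 1
    while k <= y and candidates:
        # Pruning: keep candidates whose support is at least t
        counts = {c: sum(1 for tr in transactions if c <= tr) for c in candidates}
        f_k = {c: s for c, s in counts.items() if s >= t}
        if not f_k:
            break
        frequent[k] = f_k
        # Candidate generation for k + 1: join pairs of F_k itemsets and keep
        # those whose every k-subset belongs to F_k
        prev = set(f_k)
        joined = {a | b for a in prev for b in prev if len(a | b) == k + 1}
        candidates = {
            c for c in joined
            if all(frozenset(s) in prev for s in combinations(c, k))
        }
        k += 1
    return frequent


def generate_rules(frequent, m, min_lift=1.0):
    """Extract rules (A, C, confidence, lift) from itemsets of cardinality >= 2."""
    supp = {c: s for f_k in frequent.values() for c, s in f_k.items()}
    rules = []
    for k, f_k in frequent.items():
        if k < 2:
            continue
        for itemset, s in f_k.items():
            for r in range(1, k):
                for a in combinations(sorted(itemset), r):
                    A = frozenset(a)
                    C = itemset - A
                    rule_lift = m * s / (supp[A] * supp[C])
                    if rule_lift >= min_lift:
                        rules.append((A, C, s / supp[A], rule_lift))
    return rules


tx = [{"bread", "milk"}, {"bread", "butter"}, {"bread", "milk", "butter"}, {"milk"}]
freq = apriori(tx, t=2, y=2)
for A, C, conf, lft in generate_rules(freq, m=len(tx)):
    print(sorted(A), "->", sorted(C), round(conf, 3), round(lft, 3))
```

Subsets of a frequent itemset are themselves frequent, so the supports of $A$ and $C$ needed for (2) and (3) are always available in the `supp` lookup.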

Apriori needs a complete scan of the dataset for each pruning step of the candidate itemsets, i.e., once for each considered itemset cardinality, and can consequently be slower than the FP-Growth algorithm [4], which overcomes this constraint by means of auxiliary data structures. Nevertheless, FP-Growth is less suitable for the considered application, since the reference dataset may be composed of a huge number of transactions and items; hence, the memory overhead due to auxiliary data structures could be significant. In addition, given the practical flavor of the proposed technique, the focus will be on low-cardinality association rules (few antecedents, few consequents), which are characterized by higher support and may be applied more easily; in this setting, the computational time advantage of FP-Growth is less relevant. The authors of [9] discuss another tree-based algorithm (DFP-SEPSF) for frequent pattern mining in the case of streamwise features, while in [10], an integration between Apriori and FP-Growth for the extraction of multi-level association rules is discussed.

Eclat [5] would also offer some advantages with respect to Apriori in appropriate operational contexts: it allows an efficient parallelization of frequent itemset mining, whose output can be used for generating association rules. Still, a pre-processing phase as well as an auxiliary data structure are necessary and, in this case too, the need to consider big datasets and low-cardinality association rules dampens the advantages and emphasizes the drawbacks of the algorithm.

It is also worth noting that the proposed technique can be adopted with any association rule mining algorithm. We have chosen Apriori since it is the most consolidated one and allows us to single out the innovative aspects of the technique more clearly.

A possible support threshold determination technique is described in [11]; the authors propose generating association rules with variable accuracy, but it is necessary to work on a sampled version of the original dataset, consequently risking a loss of information.

In [12], a filtering criterion based on multiple, automatically determined support thresholds is defined. Yet, the proposed approach is not general: it may be applied only if it is possible to define a taxonomy for items, i.e., only if items are grouped in known categories and, possibly, sub-categories. More recently, the authors of [13,14] suggested faster and refined algorithms for determining multiple support thresholds but, even in these cases, an item taxonomy needs to be specified.

An automated tuning algorithm for generating different support thresholds is proposed in [15]; there, the refinement process is iterative and hence not suitable for working with big datasets.

The authors of [16] describe how to automatically determine multiple support thresholds by means of the ant colony optimization algorithm [17]. The need for an iterative execution reduces computational efficiency; furthermore, the user loses control over the thresholds, relying completely on the algorithm. Conversely, the technique proposed in this paper allows control over the result, enabling specification of the number of considered items. Similar observations apply to the suggestion, by the same authors [18], of using another evolutionary algorithm, i.e., particle swarm optimization [19], to compute the values of support thresholds.

The use of two different support thresholds is proposed in [20]: one for the most frequent items and another for rare ones. If an itemset is composed only of frequent items, it must satisfy a specified criterion in order not to be discarded; if rare items are included, another, less restrictive, criterion is applied. The notion of relative support is also introduced and used to prune the itemsets more effectively. The MBA algorithm gains flexibility but is still unpredictably sensitive to the choice of these parameters.

A drastic solution to the rule explosion problem is described in [21]: the user can specify how many itemsets (and hence association rules) they would like to generate, and the best ones are automatically chosen according to an optimization criterion. Still, the optimization step implies mining only maximal frequent itemsets, which is known to be a lossy itemset compression technique; a significant bound on rule explosion then comes at the price of an unavoidable and only partially controllable loss of information.

In [22], a general solution to the problem of automatically determining a reasonable support threshold is proposed, but in a different operational context; the aim is, in fact, to look for rare itemsets, rather than for frequent ones.

A comprehensive framework for assessing the statistical significance of the set of itemsets (and, consequently, of association rules) is developed in [23]. Using this statistical significance measurement as a reference, it is possible to compute a suitable support threshold. Still, the procedure to compute this threshold is complex and computationally intensive, while the aim of the present paper is to equip any MBA user with an intuitive tool to deal with a well-known, pervasive problem.

Also, the approach described in [24] represents an original solution to the determination of the support threshold, but the goal of the procedure proposed by the authors is not to bound rule explosion but to attenuate the effects of information uncertainty affecting input data.

Extensions to traditional market basket analysis algorithms, so that they can benefit from the improvements associated with the use of a Map-Reduce computing paradigm, have been recently discussed in [25].

Alternative approaches also encompass the idea of estimating the number of occurrences of items and itemsets rather than computing it exactly, thus gaining a computational advantage at the expense of a noisier, non-deterministic result [26].

2. Materials and Methods

Through the adoption of the technique proposed in this article, the user is still allowed to choose a maximum cardinality $y$ for the itemsets, but they are not required to provide the minimum support threshold $t$. Instead of $t$, they specify the number $f \in \mathbb{N}$ of items to monitor.

A support threshold $t \in \mathbb{N}$, which allows only the $f$ most frequent items to be considered, is then automatically computed by sorting $C_1$ in descending order of support and setting $t$ equal to the support of its $f$-th element. Ties are broken according to a suitable ordering criterion, e.g., the order of appearance in the dataset, so that $F_1$ is always composed of exactly $f$ items.
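The threshold computation can be sketched as follows, assuming transactions are given as lists (so that the order of appearance is well defined) and breaking ties by first appearance, as suggested above; `threshold_for_top_items` is a hypothetical name. The sketch returns the explicit list of kept items alongside $t$, because when several items share the support $t$, the threshold alone would admit more than $f$ of them.

```python
from collections import Counter


def threshold_for_top_items(transactions, f):
    """Return (t, top_items): t is the support of the f-th most frequent item.

    Ties are broken by order of first appearance in the dataset, so that
    exactly f items are kept even if several items share support t.
    """
    counts = Counter()
    first_seen = {}
    for tr in transactions:
        for item in dict.fromkeys(tr):            # count each item once per transaction
            counts[item] += 1
            first_seen.setdefault(item, len(first_seen))
    if not 1 <= f <= len(counts):
        raise ValueError("f must be between 1 and the number of distinct items")
    # Descending support; among equal supports, the earlier-seen item wins
    ranked = sorted(counts, key=lambda i: (-counts[i], first_seen[i]))
    top = ranked[:f]
    return counts[top[-1]], top


tx = [["bread", "milk"], ["bread", "butter"], ["bread", "milk", "butter"],
      ["milk"], ["eggs", "butter"]]
print(threshold_for_top_items(tx, 2))   # (3, ['bread', 'milk'])
```

Here bread, milk and butter all have support 3; with $f = 2$ the tie-breaking rule keeps bread and milk, which appear first.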

An estimate of the number of rules that the analysis is going to generate is crucial information for an MBA user, and adopting the described support threshold computation technique improves the predictability of the number of generated association rules. More specifically, it allows valid upper bounds to be computed given $y$ and $f$.

Denote with $e$ the number of frequent itemsets and with $d$ the number of association rules associated with them. Then, since (1) holds for each frequent itemset, the following relations can be readily formulated:

$$e = \sum_{j=1}^{y} e_j, \qquad d \le \sum_{j=2}^{y} \left(2^{j} - 2\right) e_j \tag{4}$$

where $e_j$ denotes the number of frequent itemsets of cardinality $j \in \{1, \ldots, y\}$, which is a fraction of the number of candidate itemsets generated by the $j$-th iteration of the Apriori algorithm. This quantity can be upper-bounded by the number of combinations of $j$ elements among the $f$ items satisfying the support constraint. Then, we have:

$$e_j \le \binom{f}{j}, \qquad j = 1, \ldots, y \tag{5}$$

It follows that $d$ and $e$ are upper-bounded by expressions involving only the values of $f$ and $y$:

$$e \le \sum_{j=1}^{y} \binom{f}{j} \tag{6}$$

$$d \le \sum_{j=2}^{y} \left(2^{j} - 2\right) \binom{f}{j} \tag{7}$$

The proposed technique to compute a support threshold allows the user to fix the value of $f$, instead of indirectly specifying it through the choice of a minimum support threshold. Together with the choice of $y$, this allows us to bound the value of $e$ and, consequently, of $d$. In other words, limiting the number of items considered in the rule extraction process makes it possible to define an upper bound for the number of rules that will be generated.
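The two bounds above depend only on $f$ and $y$, so they can be evaluated before running any mining. The sketch below does so in Python; the function names `itemset_upper_bound` and `rule_upper_bound` are hypothetical.

```python
from math import comb


def itemset_upper_bound(f, y):
    """Bound (6): e <= sum over j = 1..y of C(f, j)."""
    return sum(comb(f, j) for j in range(1, y + 1))


def rule_upper_bound(f, y):
    """Bound (7): d <= sum over j = 2..y of (2**j - 2) * C(f, j)."""
    return sum((2 ** j - 2) * comb(f, j) for j in range(2, y + 1))


for f in (10, 20, 50):
    for y in (2, 3):
        print(f"f={f}, y={y}: e <= {itemset_upper_bound(f, y)}, "
              f"d <= {rule_upper_bound(f, y)}")
```

For fixed $y$ both bounds are polynomials of degree $y$ in $f$ (quadratic for $y = 2$), so choosing $f$ gives the user a direct handle on the worst-case size of the output.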

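Also, let $e_{f_1}$ be the number of frequent itemsets obtained when $f_1$ items are taken into account. Then, it is possible to compute a more accurate bound for $e_{f_2}$, that is, the number of frequent itemsets obtained when considering $f_2$ items, being $f_2 > f_1$. Let us denote with $E_{f_1}$ and $E_{f_2}$ the upper bounds for $e_{f_1}$ and $e_{f_2}$ computed according to (6). We aim to show that:

$$e_{f_2} - e_{f_1} \le E_{f_2} - E_{f_1} \tag{8}$$

i.e., $e_{f_2}$ is upper-bounded as follows:

$$e_{f_2} \le e_{f_1} + E_{f_2} - E_{f_1} = e_{f_1} + \sum_{j=1}^{y} \left[ \binom{f_2}{j} - \binom{f_1}{j} \right] \tag{9}$$

Theorem 1.

If $f_2 > f_1$, inequality (8) holds.

Proof.

The set $F_1$ is defined as including the original $f_1$ items, while the set $F_2$ comprises the additional $f_2 - f_1$ ones. The value of $e_{f_2}$ is equal to:

$$e_{f_2} = e_{f_1} + e_{12} + e_{2} \tag{10}$$

where $e_{12}$ accounts for the frequent itemsets comprising items belonging to $F_1$ together with items belonging to $F_2$. Conversely, $e_2$ denotes the number of frequent itemsets involving only items in $F_2$. By substituting (10) in (8), we can reformulate the thesis of the theorem as:

$$e_{12} + e_{2} \le E_{f_2} - E_{f_1} \tag{11}$$

From (6), applied to the $f_2 - f_1$ items of $F_2$, and from the definitions of $E_{f_1}$ and $E_{f_2}$, it follows that

$$e_{2} \le \sum_{j=1}^{y} \binom{f_2 - f_1}{j} \tag{12}$$

$$E_{f_2} - E_{f_1} = \sum_{j=1}^{y} \left[ \binom{f_2}{j} - \binom{f_1}{j} \right] \tag{13}$$

Now, denote with $e_{12,j}$ the number of frequent itemsets of cardinality $j$ that contribute to $e_{12}$, so that

$$e_{12} = \sum_{j=2}^{y} e_{12,j} \tag{14}$$

We can observe that each frequent itemset contributing to $e_{12,j}$ includes $k$ items from $F_1$ and $j - k$ items from $F_2$, with $k = 1, \ldots, j - 1$. Since the number of different ways of selecting $k$ items from $F_1$ is $\binom{f_1}{k}$, whereas the other $j - k$ items from $F_2$ can be chosen in $\binom{f_2 - f_1}{j - k}$ different ways, we have:

$$e_{12,j} \le \sum_{k=1}^{j-1} \binom{f_1}{k} \binom{f_2 - f_1}{j - k} \tag{15}$$

By employing the relationship underlying the hypergeometric distribution [27] (Vandermonde's identity)

$$\sum_{k=0}^{j} \binom{f_1}{k} \binom{f_2 - f_1}{j - k} = \binom{f_2}{j}$$

and isolating the terms with $k = 0$ and $k = j$, we obtain

$$\sum_{k=1}^{j-1} \binom{f_1}{k} \binom{f_2 - f_1}{j - k} = \binom{f_2}{j} - \binom{f_1}{j} - \binom{f_2 - f_1}{j} \tag{16}$$

and by substituting (16) in (15), it follows that:

$$e_{12,j} \le \binom{f_2}{j} - \binom{f_1}{j} - \binom{f_2 - f_1}{j} \tag{17}$$

from which (see (14))

$$e_{12} \le \sum_{j=2}^{y} \left[ \binom{f_2}{j} - \binom{f_1}{j} - \binom{f_2 - f_1}{j} \right] \tag{18}$$

The quantity $e_{12} + e_2$ can then be upper-bounded by employing (18) for $e_{12}$ and (12) for $e_2$. Since $\binom{f_2 - f_1}{1} = \binom{f_2}{1} - \binom{f_1}{1}$, recalling (13) we obtain

$$e_{12} + e_{2} \le \sum_{j=2}^{y} \left[ \binom{f_2}{j} - \binom{f_1}{j} \right] + \binom{f_2 - f_1}{1} = \sum_{j=1}^{y} \left[ \binom{f_2}{j} - \binom{f_1}{j} \right] = E_{f_2} - E_{f_1} \tag{19}$$

which is (11).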

□
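As a numerical sanity check (not a substitute for the proof), the sketch below verifies the identity obtained from Vandermonde's identity, $\sum_{k=1}^{j-1}\binom{f_1}{k}\binom{f_2-f_1}{j-k} = \binom{f_2}{j}-\binom{f_1}{j}-\binom{f_2-f_1}{j}$, exhaustively for small parameters, and checks the bound $e_{f_2} \le e_{f_1} + \sum_{j=1}^{y}[\binom{f_2}{j}-\binom{f_1}{j}]$ of Theorem 1 on a synthetic random dataset. It assumes, as the decomposition used in the proof does, that $e_f$ counts the itemsets built only from the $f$ top-ranked items whose support reaches a fixed itemset threshold, here called `sigma` (a hypothetical parameter); the dataset, the seed and all function names are illustrative.

```python
import random
from itertools import combinations
from math import comb


def itemset_bound(f, y):
    """Upper bound (6) on the number of frequent itemsets over f items."""
    return sum(comb(f, j) for j in range(1, y + 1))


def check_identity(max_f=12, max_j=5):
    """Exhaustively check the identity derived from Vandermonde's identity."""
    for f2 in range(max_f + 1):
        for f1 in range(f2 + 1):
            for j in range(1, max_j + 1):
                lhs = sum(comb(f1, k) * comb(f2 - f1, j - k) for k in range(1, j))
                if lhs != comb(f2, j) - comb(f1, j) - comb(f2 - f1, j):
                    return False
    return True


def frequent_counts_by_prefix(transactions, ranking, y, sigma):
    """Return e where e[f] counts itemsets of size 1..y drawn from the f
    top-ranked items whose support is at least sigma."""
    rank = {item: r for r, item in enumerate(ranking)}
    new_at = [0] * len(ranking)   # itemsets whose lowest-ranked item has rank r
    for j in range(1, y + 1):
        for c in combinations(ranking, j):
            s = frozenset(c)
            if sum(1 for tr in transactions if s <= tr) >= sigma:
                new_at[max(rank[i] for i in c)] += 1
    e = [0]
    for count in new_at:
        e.append(e[-1] + count)
    return e


def check_theorem_1(transactions, ranking, y, sigma):
    """Check e[f2] <= e[f1] + bound(f2) - bound(f1) for every pair f1 < f2."""
    e = frequent_counts_by_prefix(transactions, ranking, y, sigma)
    n = len(ranking)
    return all(
        e[f2] <= e[f1] + itemset_bound(f2, y) - itemset_bound(f1, y)
        for f1 in range(1, n) for f2 in range(f1 + 1, n + 1)
    )


# Synthetic data (illustrative only): item i appears with probability 0.6 - 0.04 i
random.seed(0)
n_items = 12
transactions = [
    frozenset(i for i in range(n_items) if random.random() < 0.6 - 0.04 * i)
    for _ in range(300)
]
supp = {i: sum(1 for tr in transactions if i in tr) for i in range(n_items)}
ranking = sorted(range(n_items), key=lambda i: (-supp[i], i))  # ties: lower index first

print(check_identity())                                        # True
print(check_theorem_1(transactions, ranking, y=3, sigma=15))   # True
```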

3. Results

The effectiveness of the computed bound is verified for differently sized problems, addressing both well-known benchmarks and problems concerning real sales data from big companies. More specifically, five cases are considered:

- The groceries dataset [28], comprising 9835 transactions and 169 different categories of items;

- The Mondrian foodmart sales dataset (https://github.com/kijiproject/kiji-modeling/tree/master/kiji-modeling-examples/src/main/datasets/foodmart, accessed on 15 January 2020) including 7824 transactions (which are assumed to be identified by their customer id) and 1559 different items;

- The retail dataset [6], comprising 88,162 transactions, with 16,470 different items;

- The online retail dataset [29], comprising 25,900 transactions, with 4194 different item descriptions;

- Real, anonymized data gathered by one of the largest Italian supermarket chains (no detailed data will be discussed, only aggregate results); this dataset is composed of 552,626 transactions, involving 51,892 different items. The transactions refer to thousands of different customers, with an average basket size of items (and a standard deviation in the basket size of items). At least one of the 100 most frequent items is included in of the transactions.

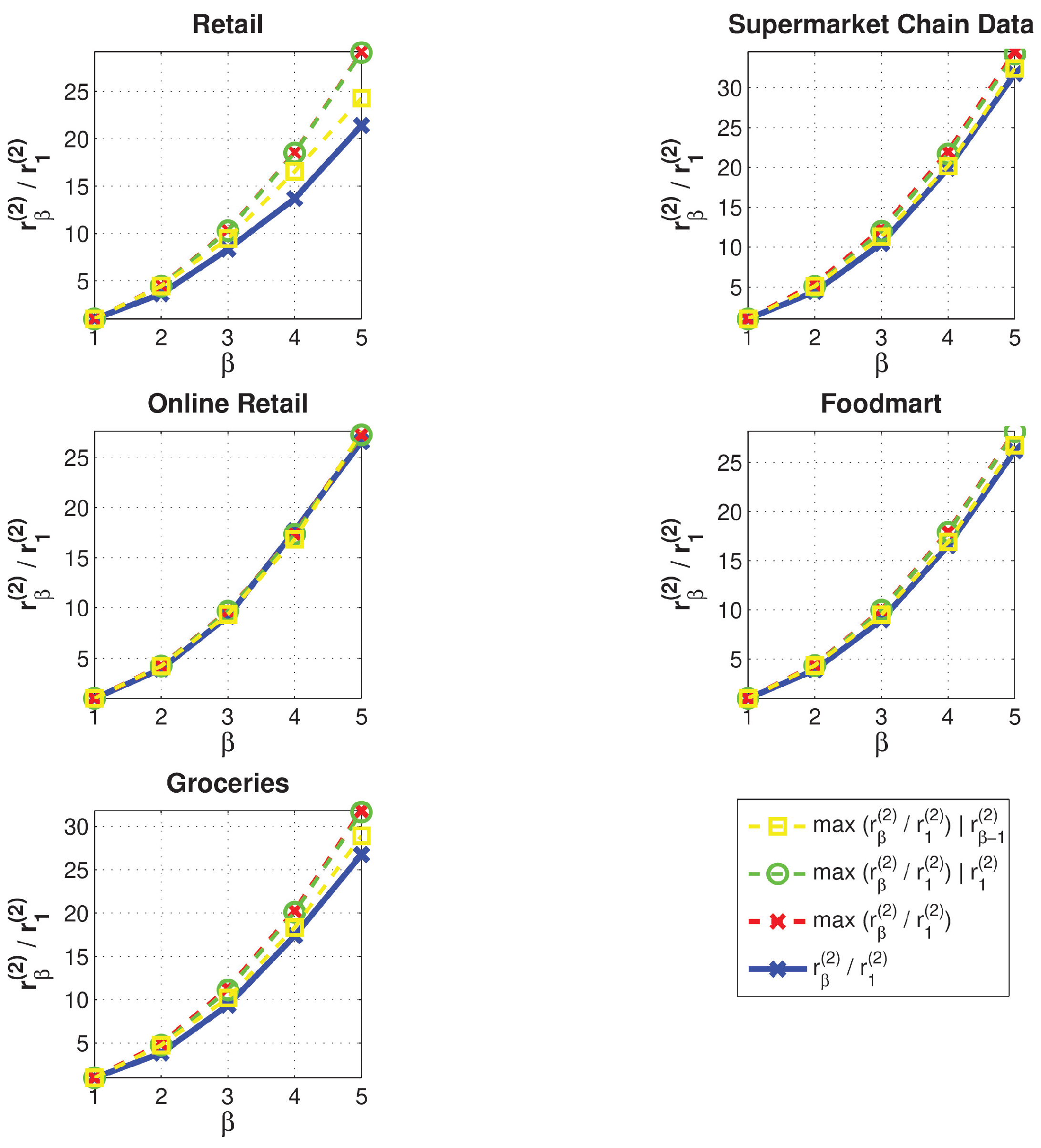

For all these datasets, we aim to verify the predictive power of the upper bound determined according to (20).

The tests in which an explicit minimum support threshold is specified are referred to as group 1, whereas group 2 includes the tests in which the number of items to consider is set by the user. Firstly, an experiment showing the better predictability of results in group 2 is presented.

Let us denote with $\bar{s} \in \mathbb{N}$ the support of the $\bar{f}$-th most frequent item in the dataset, being $\bar{f} \in \mathbb{N}$ (in experiments, $\bar{f} = 10$). Let $n \in \mathbb{N}$ represent the number of tests for each dataset, for both group 1 and group 2. Then, for each $\tau \in \mathbb{N}$ such that $1 \le \tau \le n$:

- A new test is included in group 1, setting $\bar{s}/\tau$ as minimum item support: $d^{(1)}_\tau$ rules will be generated;

- A new test is included in group 2, taking $\tau\bar{f}$ items into account: $d^{(2)}_\tau$ rules will be generated.
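The two parametrizations can be sketched as follows, assuming (as in the list above) that the $\tau$-th group 1 test uses the minimum item support $\bar{s}/\tau$ and the $\tau$-th group 2 test keeps the $\tau\bar{f}$ most frequent items. The function name `experiment_parameters` and the synthetic support lists are hypothetical; the sketch only counts how many items each group admits, which is what drives the number of candidate itemsets.

```python
def experiment_parameters(item_supports, f_bar=10, n=5):
    """For tau = 1..n return (tau, group-1 threshold, items kept by group 1,
    items kept by group 2)."""
    ranked = sorted(item_supports, reverse=True)
    s_bar = ranked[f_bar - 1]                 # support of the f_bar-th most frequent item
    rows = []
    for tau in range(1, n + 1):
        threshold = s_bar / tau               # group 1: explicit minimum item support
        kept_group1 = sum(1 for s in ranked if s >= threshold)
        kept_group2 = min(tau * f_bar, len(ranked))  # group 2: fixed number of items
        rows.append((tau, threshold, kept_group1, kept_group2))
    return rows


# Smoothly decreasing supports: both groups grow gradually
for row in experiment_parameters(list(range(100, 0, -1)), n=3):
    print(row)

# Many items with nearly the same support: group 1 jumps abruptly
flat = [60] * 5 + [59] * 200 + [20] * 50
for row in experiment_parameters(flat, n=3):
    print(row)
```

With the second, flat distribution, group 1 already admits 205 items at $\tau = 1$, whereas group 2 admits exactly 10, 20 and 30 items; this mirrors the sensitivity to the threshold discussed in the introduction.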

Let $\sigma \in \mathbb{N}$ denote the minimum itemset support threshold. Considering that the present work addresses the comparison between two different ways of computing the minimum item support, $\sigma$ has been set to the same value for both groups of tests; in particular, the least restrictive possible value, $\sigma = 1$, has been adopted. A minimum lift threshold of 1 has been chosen for both groups to select rules identifying positive correlations between antecedents and consequents (see Equation (3)). As anticipated in the introduction, the proposed technique for support threshold computation can be applied to any frequent pattern mining algorithm (Apriori has just been picked as an example). For better generality, the following analysis focuses on the number of rules generated at each step, which is independent of the choice of the algorithm and of its implementation details, rather than on computation time or memory peak, which are correlated with the total number of extracted rules but depend on the choice of the pattern mining algorithm and on its implementation.

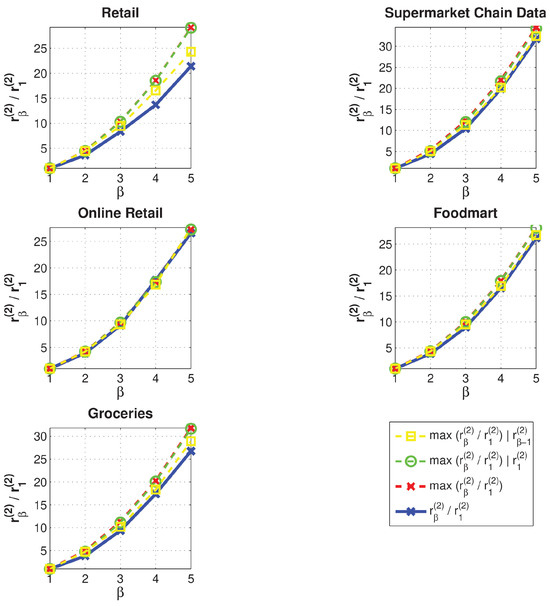

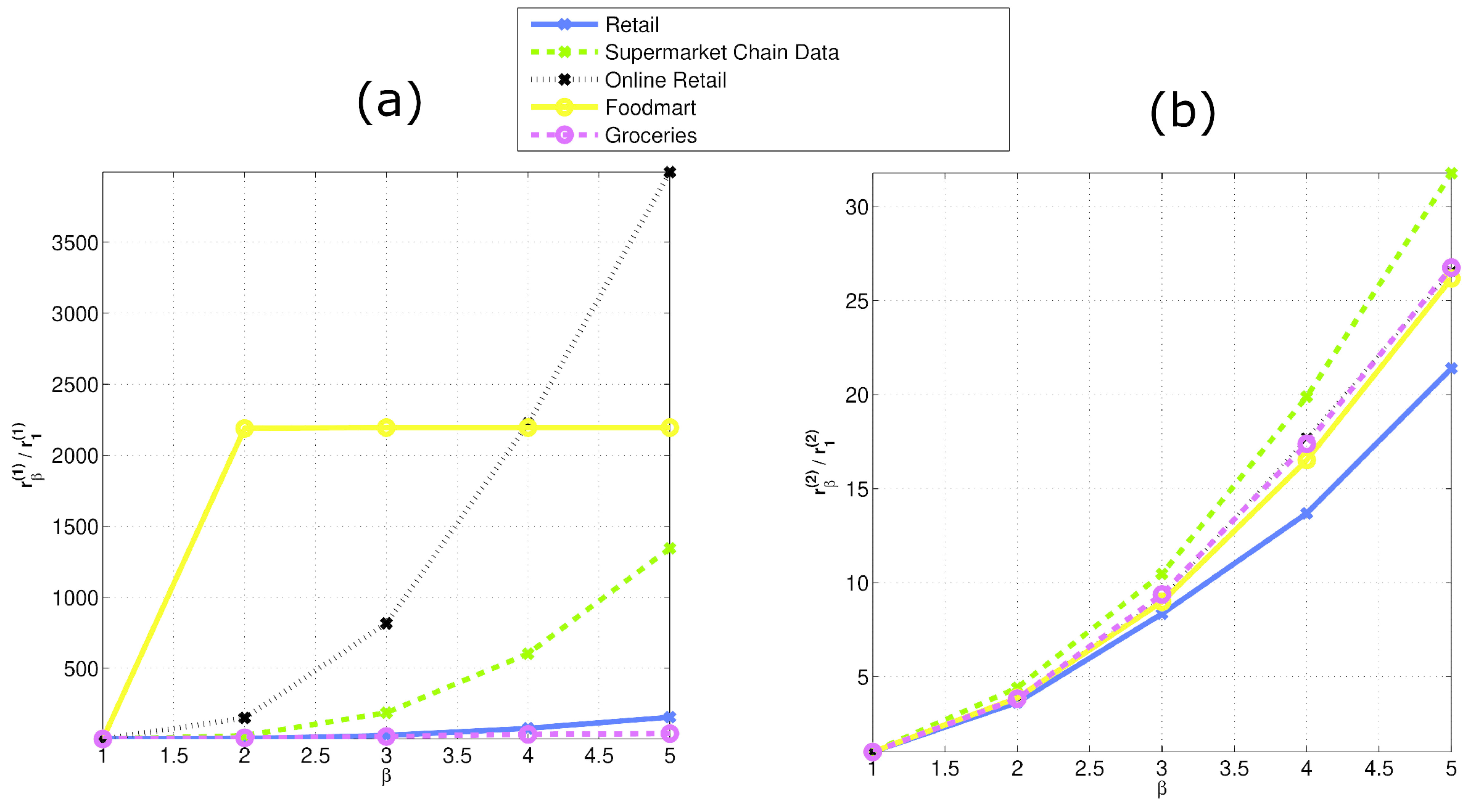

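Figure 1 shows how the ratios $d^{(1)}_\tau / d^{(1)}_1$ and $d^{(2)}_\tau / d^{(2)}_1$, i.e., the number of rules generated in the $\tau$-th test relative to the first one, vary with $\tau$ for group 1 (left plot) and group 2 (right plot), for all considered datasets.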

Figure 1.

Number of generated rules with respect to the thresholding factor $\tau$. Group 1 tests are represented in the left plot (a); group 2 results are displayed in the right one (b). The x axis shows the tested values of $\tau$; the y axis shows the ratio between the number of rules obtained with a given $\tau$ and the number of rules obtained with $\tau = 1$, for group 1 (a) and group 2 (b) tests.

The growth in the number of rules is more regular for group 2, i.e., if the approach proposed in the present paper is adopted to filter data: ranges from to , while ranges from to . The quantitative results displayed in Figure 1 are also reported in Table 1.

Table 1.

Number of rules and generated with respect to the thresholding factor, and values observed in Group 1 and Group 2 tests.

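An upper bound for the number $d^{(2)}_\tau$ of rules generated by the $\tau$-th group 2 test, which considers $\tau\bar{f}$ items, has been computed according to (7) (being $y = 2$):

$$d^{(2)}_\tau \le \left(2^{2} - 2\right) \binom{\tau\bar{f}}{2} = \tau\bar{f}\,(\tau\bar{f} - 1)$$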
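The bound above can be tabulated for the first few values of $\tau$ with the following Python sketch, assuming $\bar{f} = 10$ as in the experiments; `group2_rule_bound` is a hypothetical name. Dividing each bound by the one for $\tau = 1$ gives the worst-case counterpart of the ratios plotted in Figure 1.

```python
from math import comb


def group2_rule_bound(tau, f_bar=10):
    """Bound (7) with y = 2 for a test keeping f = tau * f_bar items:
    d <= (2**2 - 2) * C(f, 2) = f * (f - 1)."""
    f = tau * f_bar
    return (2 ** 2 - 2) * comb(f, 2)


bounds = [group2_rule_bound(tau) for tau in range(1, 6)]
print(bounds)                                        # [90, 380, 870, 1560, 2450]
print([round(b / bounds[0], 2) for b in bounds])     # growth relative to tau = 1
```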

Also, it is possible to bound $d^{(2)}_\tau$ more accurately, by taking into account the number of rules generated for $\tau = 1$: the value of the bound is then computed by substituting $y = 2$, $f_1 = \bar{f}$ and $f_2 = \tau\bar{f}$ in (20):

since $\bar{f} = 10$. The accuracy of the upper bound for the number of association rules generated by the $\tau$-th iteration can be further improved by taking into account the number of association rules generated in the $(\tau - 1)$-th iteration of the test instead of the first one (that is, the one with $\tau = 1$). This corresponds to substituting $y = 2$, $f_1 = (\tau - 1)\bar{f}$ and $f_2 = \tau\bar{f}$ in (20):

4. Discussion

Before summarizing the main contribution of the paper in the conclusions, we would like to draw attention to the aspects that are not yet captured by this initial set of experiments and, consequently, identify and suggest some possible areas for future work.

Firstly, considering that the adoption of the proposed technique is expected to facilitate the interaction between data scientists and domain-expert users, it would be valuable to gather human feedback about the use of the suggested support threshold computation technique, as well as to evaluate how users exploit the information concerning increasingly refined upper bounds for the number of rules that they will need to analyze and apply.

Also, the paper focuses on the definition, calculation, and experimental validation of bounds that indicate how many rules could be extracted. Yet, even if support itself is one of the metrics commonly associated with rule quality, a comparative analysis of the effect of the proposed technique on rule quality as a whole, from different angles (for example, according to confidence, lift, and symmetric confidence), could constitute an additional contribution stemming from the present work.

Future work will also be oriented towards providing contributions to the creation of effective item packages, allowing the management and the evaluation of item hierarchies (which may be constituted, for instance, by single items, which may or may not be included in packages, belonging to categories) by means of MBA techniques.

5. Conclusions

This paper aims to show to what extent an alternative support threshold determination, guided by the choice of the number of items to take into account, may be used to attenuate the rule explosion problem.

More specifically, experiments show, on five different datasets, that the rate of growth in the number of rules between tests with increasingly more permissive thresholds ranges from to with the proposed method, while it would range from to , if the traditional thresholding method were applied.

As shown in Section 3, addressing both well-known benchmarks and real datasets, the use of this technique induces better predictability in terms of the number of generated rules, which is particularly useful for users lacking domain-specific knowledge.

Author Contributions

Conceptualization, D.V. and M.M.; Methodology, D.V.; Investigation, D.V.; Writing—original draft, D.V.; Writing—review & editing, D.V. and M.M.; Supervision, M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Only one of the datasets described in the article is not publicly available, while the others can be downloaded from public repositories.

Conflicts of Interest

Authors Damiano Verda and Marco Muselli were employed by the company Rulex Innovation Labs. The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Kumar, P.; Manisha, K.; Nivetha, M. Market Basket Analysis for Retail Sales Optimization. In Proceedings of the 2024 Second International Conference on Emerging Trends in Information Technology and Engineering (ICETITE), Vellore, India, 22–23 February 2024; IEEE: New York, NY, USA, 2024; pp. 1–7.

- Agrawal, R.; Imieliński, T.; Swami, A. Mining association rules between sets of items in large databases. In Proceedings of the ACM SIGMOD Record, ACM, Washington, DC, USA, 26–28 May 1993; Volume 22, pp. 207–216.

- Agrawal, R.; Srikant, R. Fast algorithms for mining association rules. In Proceedings of the 20th International Conference on Very Large Data Bases, VLDB, Santiago de Chile, Chile, 12–15 September 1994; Volume 1215, pp. 487–499.

- Han, J.; Pei, J.; Yin, Y. Mining frequent patterns without candidate generation. In Proceedings of the ACM SIGMOD Record, ACM, Dallas, TX, USA, 16–18 May 2000; Volume 29, pp. 1–12.

- Zaki, M.J.; Parthasarathy, S.; Ogihara, M.; Li, W. Parallel algorithms for discovery of association rules. Data Min. Knowl. Discov. 1997, 1, 343–373.

- Brijs, T. Retail market basket data set. In Proceedings of the Workshop on Frequent Itemset Mining Implementations (FIMI’03), Melbourne, FL, USA, 19 November 2003.

- Omol, E.J.; Onyango, D.A.; Mburu, L.W.; Abuonji, P.A. Apriori algorithm and market basket analysis to uncover consumer buying patterns: Case of a Kenyan supermarket. Buana Inf. Technol. Comput. Sci. (BIT CS) 2024, 5, 51–63.

- Wahidi, N.; Ismailova, R. A market basket analysis of seven retail branches in Kyrgyzstan using an Apriori algorithm. Int. J. Bus. Intell. Data Min. 2025, 26, 236–255.

- Alavi, F.; Hashemi, S. DFP-SEPSF: A dynamic frequent pattern tree to mine strong emerging patterns in streamwise features. Eng. Appl. Artif. Intell. 2015, 37, 54–70.

- Alcan, D.; Ozdemir, K.; Ozkan, B.; Mucan, A.Y.; Ozcan, T. A comparative analysis of apriori and fp-growth algorithms for market basket analysis using multi-level association rule mining. In Global Joint Conference On Industrial Engineering And Its Application Areas; Springer: Cham, Switzerland, 2022; pp. 128–137.

- Park, J.S.; Yu, P.S.; Chen, M.S. Mining association rules with adjustable accuracy. In Proceedings of the Sixth International Conference on Information and Knowledge Management, ACM, Las Vegas, NV, USA, 10–14 November 1997; pp. 151–160.

- Tseng, M.C.; Lin, W.Y. Efficient mining of generalized association rules with non-uniform minimum support. Data Knowl. Eng. 2007, 62, 41–64.

- Vo, B.; Le, B. Fast algorithm for mining generalized association rules. Int. J. Database Theory Appl. 2009, 2, 1–12.

- Baralis, E.; Cagliero, L.; Cerquitelli, T.; D’Elia, V.; Garza, P. Support driven opportunistic aggregation for generalized itemset extraction. In Proceedings of the Intelligent Systems (IS), 2010 5th IEEE International Conference, London, UK, 7–9 July 2010; IEEE: New York, NY, USA, 2010; pp. 102–107.

- Hu, Y.H.; Chen, Y.L. Mining association rules with multiple minimum supports: A new mining algorithm and a support tuning mechanism. Decis. Support Syst. 2006, 42, 1–24.

- Kuo, R.; Shih, C. Association rule mining through the ant colony system for National Health Insurance Research Database in Taiwan. Comput. Math. Appl. 2007, 54, 1303–1318.

- Dorigo, M.; Birattari, M. Ant colony optimization. In Encyclopedia of Machine Learning; Springer: Cham, Switzerland, 2010; pp. 36–39.

- Kuo, R.J.; Chao, C.M.; Chiu, Y. Application of particle swarm optimization to association rule mining. Appl. Soft Comput. 2011, 11, 326–336.

- Kennedy, J. Particle swarm optimization. In Encyclopedia of Machine Learning; Springer: Cham, Switzerland, 2010; pp. 760–766.

- Yun, H.; Ha, D.; Hwang, B.; Ho Ryu, K. Mining association rules on significant rare data using relative support. J. Syst. Softw. 2003, 67, 181–191.

- Salam, A.; Khayal, M.S.H. Mining top-k frequent patterns without minimum support threshold. Knowl. Inf. Syst. 2012, 30, 57–86.

- Sadhasivam, K.S.; Angamuthu, T. Mining rare itemset with automated support thresholds. J. Comput. Sci. 2011, 7, 394.

- Kirsch, A.; Mitzenmacher, M.; Pietracaprina, A.; Pucci, G.; Upfal, E.; Vandin, F. An efficient rigorous approach for identifying statistically significant frequent itemsets. J. ACM (JACM) 2012, 59, 12.

- Tong, Y.; Chen, L.; Yu, P.S. Ufimt: An uncertain frequent itemset mining toolbox. In Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, ACM, Beijing, China, 12–16 August 2012; pp. 1508–1511.

- Vats, S.; Sharma, V.; Bajaj, M.; Singh, S.; Sagar, B. Advanced frequent itemset mining algorithm (AFIM). In Uncertainty in Computational Intelligence-Based Decision Making; Elsevier: Amsterdam, The Netherlands, 2025; pp. 187–201.

- Sadeequllah, M.; Rauf, A.; Rehman, S.U.; Alnazzawi, N. Probabilistic support prediction: Fast frequent itemset mining in dense data. IEEE Access 2024, 12, 39330–39350.

- Feller, W. An Introduction to Probability Theory and Its Applications; John Wiley & Sons: Hoboken, NJ, USA, 2008; Volume 2.

- Hahsler, M.; Hornik, K.; Reutterer, T. Implications of probabilistic data modeling for mining association rules. In From Data and Information Analysis to Knowledge Engineering; Springer: Cham, Switzerland, 2006; pp. 598–605.

- Chen, D.; Sain, S.L.; Guo, K. Data mining for the online retail industry: A case study of RFM model-based customer segmentation using data mining. J. Database Mark. Cust. Strategy Manag. 2012, 19, 197–208.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).