Abstract

In the modern era of informatics, where data are central, efficient data management is critical. Two of the most important data management techniques are searching and ordering (technically, sorting). Traditional sorting algorithms run in quadratic time, $O(n^2)$, and in the optimized cases they take linearithmic time, $O(n \log n)$; no existing method surpasses this lower bound for arbitrary data, i.e., ordering a list of cardinality $n$ requires $\Omega(n \log n)$ time. This research proposes Search-o-Sort, which reinterprets sorting in terms of searching, thereby offering a new framework for ordering arbitrary data. The framework is applied to classical search algorithms (Linear Search and Binary Search, or in general k-ary Search) and extended to more optimized methods such as Interpolation and Jump Search. The analysis suggests theoretical pathways to reduce the computational complexity of sorting algorithms, thus enabling algorithmic development based on the proposed viewpoint.

1. Introduction

In the digital age, the exponential growth of data calls for efficient data management, in which sorting and searching play foundational roles in organizing and retrieving information. Sorting underlies numerous computational tasks, with applications ranging from database indexing to network routing and machine learning.

1.1. General Context

The objective of a sorting algorithm is to arrange a collection of elements in a specific order (by their magnitude). Broadly speaking, sorting is a procedure that examines the set of all permutations of the collection for one in which the elements are monotonically ordered by index. Such a permutation is guaranteed to exist: for any list $\{a_1, a_2, \ldots, a_n\}$ there is a permutation $\pi$ such that $a_{\pi(1)} \le a_{\pi(2)} \le \cdots \le a_{\pi(n)}$, or $a_{\pi(1)} \ge a_{\pi(2)} \ge \cdots \ge a_{\pi(n)}$, where $n$ is the cardinality of the dataset. The classical sorting lower bound is well established through decision-tree models, where each comparison narrows the set of possible permutations. Since a correct binary decision tree must have at least $n!$ leaves, this results in a minimum of $\lceil \log_2 n! \rceil$ comparisons, which, by Stirling's approximation, gives rise to the bound $\Omega(n \log n)$ (refer to Appendix A).
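To make the decision-tree bound concrete, the following Python sketch (an illustration, not part of the original analysis; `min_comparisons` is a hypothetical helper) computes the minimum number of comparisons $\lceil \log_2 n! \rceil$ exactly, using integer arithmetic, and compares it with $n \log_2 n$.

```python
import math

def min_comparisons(n: int) -> int:
    """Decision-tree lower bound ceil(log2(n!)) for sorting n distinct keys."""
    leaves = math.factorial(n)          # a correct decision tree needs >= n! leaves
    return (leaves - 1).bit_length()    # exact ceil(log2(leaves)) for leaves >= 1

if __name__ == "__main__":
    for n in (4, 16, 64, 256, 1024):
        bound = min_comparisons(n)
        nlogn = n * math.log2(n)
        print(f"n={n:5d}  ceil(log2 n!)={bound:6d}  n*log2(n)={nlogn:9.1f}  ratio={bound / nlogn:.3f}")
```

The ratio stays below 1 and creeps upward as $n$ grows, which is the $\Omega(n \log n)$ behavior that Stirling's approximation predicts.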

Claim.

For any sorting algorithm, the minimal time that any algorithm can achieve is of the order of $n \log n$.

Proof.

Let us attempt to understand sorting in the context of searching (which is the backbone of this research). Each element being sorted ultimately seeks its correct position within the set. Suppose the first element must be moved to its correct location. To do so, one will have to look in at most $n$ places. Once it has been placed, one moves on to the next element of the list; to move that element to its correct location, one must look in at most $n-1$ locations, and the number of locations to be searched thus varies as $n, n-1, n-2, n-3, \ldots, 1$. Numerous searching algorithms are available, such as linear search, binary search, and interpolation search [1], but the quickest searching technique devised thus far without imposing any stringent restrictions on the data is binary search [2], which runs in logarithmic time, $O(\log n)$. So, the total time, as per the computational complexities, will be

$$T(n) = \log n + \log(n-1) + \log(n-2) + \cdots + \log 1,$$

which can be further delineated as

$$T(n) = \log\big(n \cdot (n-1) \cdots 1\big) = \log(n!). \tag{1}$$
Now, there exist several approximations with bounds for the general factorial, such as Stirling's approximation and Ramanujan's approximation of the Gamma function. Recent works have used these approximations to prove that the minimum computational time taken by a sorting algorithm to sort a given set of data is of the order of $n \log n$, without altering the general architecture of the processor. Drawing from that work and utilizing Srinivasa Ramanujan's approximation of the Euler Gamma function, we can conclude

$$n! = \Gamma(n+1) \approx \sqrt{\pi}\left(\frac{n}{e}\right)^{n}\sqrt[6]{8n^{3} + 4n^{2} + n + \frac{1}{30}}.$$
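Thus, from Equation (1), one gets

$$T(n) = \log(n!) \approx n\log n - n\log e + \frac{1}{6}\log\left(8n^{3} + 4n^{2} + n + \frac{1}{30}\right) + \frac{1}{2}\log \pi = O(n \log n), \tag{2}$$

since there exist positive constants $c$ and $n_0$ such that $T(n) \le c \cdot n\log n$ for all $n \ge n_0$ (refer to Appendix A.1).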
□
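Ramanujan's approximation used in this proof can be checked numerically. The sketch below (an illustration, not part of the original proof; both function names are hypothetical) compares the logarithm of the approximation with the exact $\ln(n!)$, obtained through Python's `math.lgamma`, and prints the ratio $\ln(n!)/(n \ln n)$, which approaches 1 only slowly, in line with the $O(n \log n)$ conclusion.

```python
import math

def log_factorial_exact(n: int) -> float:
    """ln(n!) computed as ln Gamma(n + 1)."""
    return math.lgamma(n + 1)

def log_factorial_ramanujan(n: int) -> float:
    """ln of sqrt(pi) * (n/e)^n * (8n^3 + 4n^2 + n + 1/30)^(1/6), for n >= 1."""
    return (0.5 * math.log(math.pi)
            + n * (math.log(n) - 1.0)
            + math.log(8 * n**3 + 4 * n**2 + n + 1 / 30) / 6.0)

if __name__ == "__main__":
    for n in (1, 10, 100, 10_000):
        exact, approx = log_factorial_exact(n), log_factorial_ramanujan(n)
        ratio = exact / (n * math.log(n)) if n > 1 else float("nan")
        print(f"n={n:6d}  |error|={abs(exact - approx):.2e}  ln(n!)/(n ln n)={ratio:.4f}")
```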

1.2. Motivation

Since a tight lower bound already exists for sorting algorithms, one may ask: “what is the point of this work?” In the reference drawn above, the binary search algorithm was employed to search for the position of each element during sorting. Over time, researchers have devised a variety of algorithms that can search for a given key in a mass of data far more efficiently than the primitive algorithms. By using these searches, one could build sorting algorithms that have an edge over the prevalent ones.

1.3. Literature Review

In the literature, there exist limited but foundational works on deterministic searching algorithms, and these search algorithms serve as the basis for Search-o-Sort. Manna and Waldinger, in their seminal work “The origin of a binary-search paradigm” [2], formalized the derivation of 2-ary Search (commonly known as binary search). Several variants of binary search have since been proposed [3,4,5], operating in $O(\log n)$ time (amortized). The “original” binary search was extended to 3-ary (ternary) Search [6] by Bajwa et al., and generalized to k-ary Search [7] by Dutta et al. In contrast to these methods, Interpolation Search [1] suggested a new perspective on search algorithms. Unlike k-ary Search, whose cost is $O(\log_k n)$, Interpolation Search runs in $O(\log \log n)$ expected time on uniformly distributed keys, as explained by Perl and Reingold in “Understanding the complexity of interpolation search” [8]. Another unconventional searching algorithm, Jump Search, was proposed by Shneiderman [9]. These algorithms serve as the basis for the analysis of the proposed Search-o-Sort theory.

1.4. Contribution and Scope

This research introduces the Search-o-Sort theory, a conceptual framework that treats sorting as a composition of iterative searching operations. In the proposed mathematical formulation, the total cost of a sorting algorithm is modeled as the summation of successive search costs. This model is applied and analyzed across multiple searching paradigms: Binary Search (in general, k-ary Search), Interpolation Search, Extrapolation Search, and Jump Search. By embedding these search algorithms into the sorting process, we derive computational costs and interpret their implications for the sorting bounds. Our analysis suggests that alternative searching techniques can offer promising directions for reducing effective sorting time, particularly when specialized assumptions or approximations are permitted (as recent advances in computer architecture increasingly allow).

1.5. Document Structure

The rest of the manuscript is organized as follows. Section 2 introduces the Search-o-Sort theory and its foundational proposition, and Section 2.2 discusses its physical realization. Section 2.3, Section 2.4, Section 2.5, and Section 2.6 explore the applications of the Search-o-Sort theory to k-ary Search, Interpolation Search, Extrapolation Search, and Jump Search, respectively. Finally, Section 3 concludes the paper with insights on the implications of the Search-o-Sort theory and the scope for future research.

2. Search-o-Sort

As indicated in the introduction, sorting can be viewed in terms of searching. To summarize, every element being ordered is, in effect, looking for its correct location in the set. Suppose the first element must be moved to its correct location. To get there, one will have to look in all $n$ places. Once it is placed, one moves to the next element of the list, and to move that element to its correct place, one will have to search at most $n-1$ places; in this way, the number of places to be searched decreases by one with each subsequent element. This raises the central question: which algorithm should be used to search for an element's position during sorting? Published works have used Binary Search (Equation (2)). Here, the Search-o-Sort proposition is first stated (see Section 2.1), and its theoretical basis is then extended by applying it with k-ary (see Section 2.3), Interpolation (see Section 2.4), Extrapolation (see Section 2.5), and Jump Search (see Section 2.6) as the intermediate searching algorithms of their respective sorting paradigms.

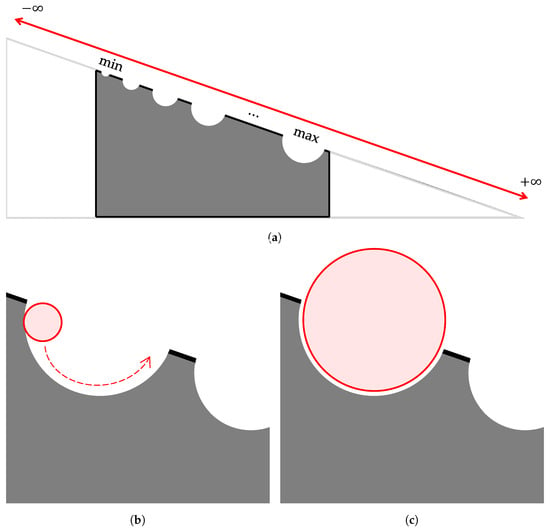

2.1. The Proposition

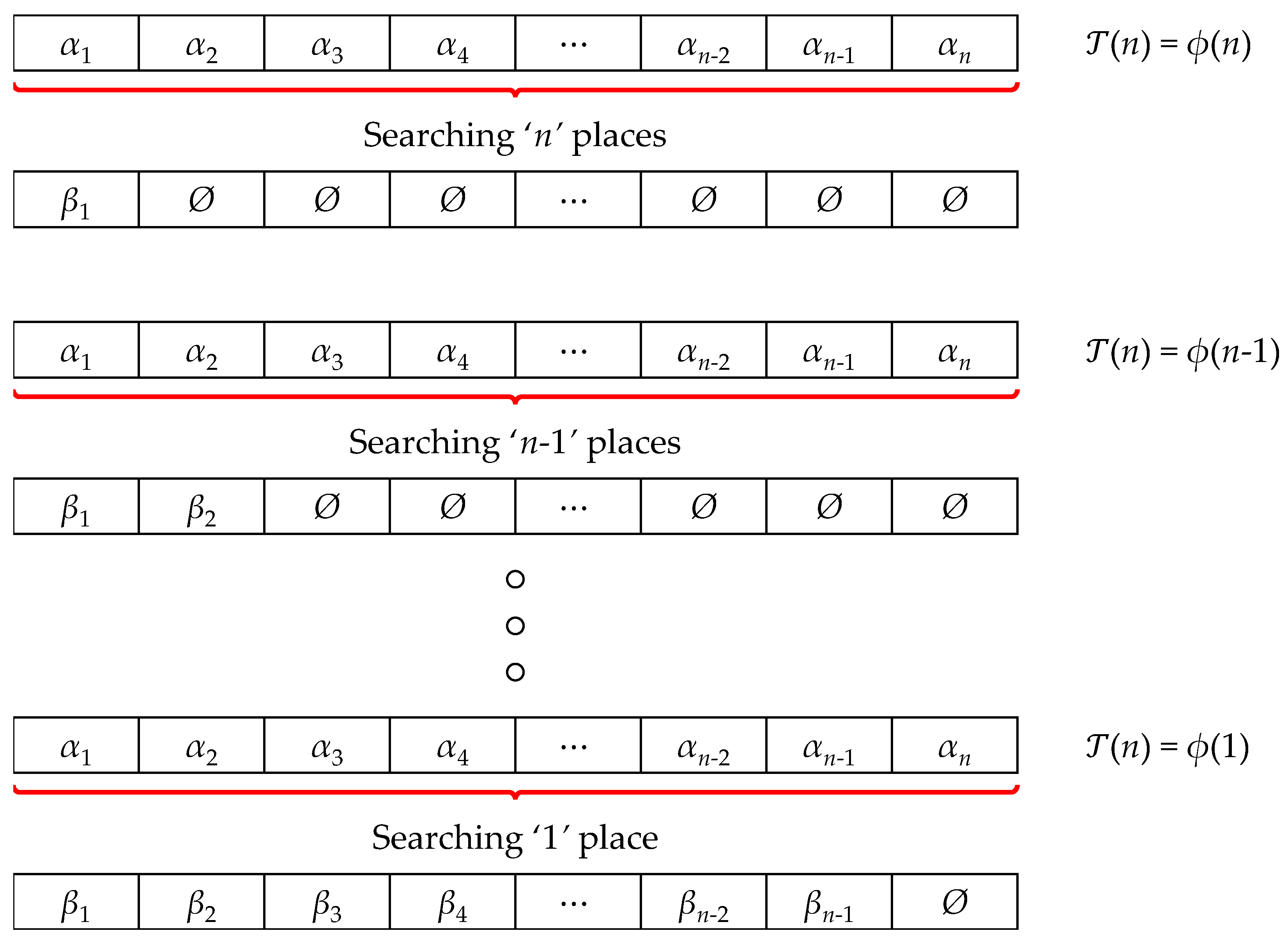

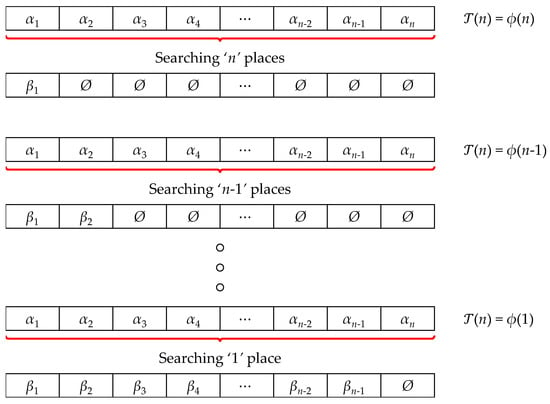

Suppose we have a set of $n$ elements, $A = \{a_1, a_2, \ldots, a_n\}$, and we aim to find a permutation of this set such that the elements in the permutation follow a monotonic order by their magnitude. Suppose the new permutation after sorting is $A' = \{a'_1, a'_2, \ldots, a'_n\}$. Each of these elements $a'_i$ is selected from the list over $n$ iterations. The selection cost depends on the cardinality of the input sequence; let this cost be $f(n)$. Once one selection from the sequence of $n$ entries is complete, $n-1$ entries are left to be selected. In the next iteration, the cost is reduced to $f(n-1)$, and over the consecutive iterations the cost finally reaches $f(1)$. Thus, the cost of finding the required permutation, with elements arranged in monotonic order by their magnitude, is the summation of these individual costs (see Figure 1). So, the computational time complexity of a sorting algorithm that incorporates a searching algorithm of computational expense $f(n)$ will be

$$T(n) = f(n) + f(n-1) + \cdots + f(1) = \sum_{i=1}^{n} f(i). \tag{3}$$
Figure 1.

Pictorial representation of the Search-o-Sort theory.
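One concrete way to read the summation in the proposition above is binary insertion: each element searches for its position among the elements already placed. The Python sketch below is an illustration under that assumption; `binary_search_position` and `search_o_sort` are hypothetical names, not the authors' code. The sorted prefix grows through sizes $0, 1, \ldots, n-1$, which sums the same kind of search costs as the shrinking $n, n-1, \ldots, 1$ view, shifted by one.

```python
from typing import List, Tuple

def binary_search_position(prefix: List[int], key: int) -> Tuple[int, int]:
    """Return (insertion index, comparisons) for `key` in the sorted list `prefix`."""
    lo, hi, comparisons = 0, len(prefix), 0
    while lo < hi:
        mid = (lo + hi) // 2
        comparisons += 1
        if key < prefix[mid]:
            hi = mid
        else:
            lo = mid + 1          # equal keys go to the right, keeping the sort stable
    return lo, comparisons

def search_o_sort(data: List[int]) -> Tuple[List[int], int]:
    """Sort by repeatedly searching for each element's position; return (sorted, comparisons)."""
    placed: List[int] = []
    total = 0
    for x in data:
        pos, c = binary_search_position(placed, x)
        placed.insert(pos, x)     # data movement is not counted, only comparisons
        total += c
    return placed, total

if __name__ == "__main__":
    print(search_o_sort([5, 2, 9, 1, 5, 6]))
```

A search over a prefix of size $s$ uses at most $\lfloor \log_2 s \rfloor + 1$ comparisons, so the total is bounded by a sum of logarithms, as in Equation (1). Note that `list.insert` shifts elements, so wall-clock time is dominated by data movement; the sketch measures only the comparison count that the proposition sums.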

Corollary 1.

For any sorting algorithm, if the intermediate searching algorithm has a computational expense of $f(n)$, the computational complexity of the sorting algorithm will be

$$T(n) = O\big(n \cdot f(n)\big). \tag{4}$$

Proof.

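The proof follows from the proposition of Search-o-Sort (Equation (3)). Since the cost $f$ of a search is non-negative and does not decrease as the search space grows, it is known that

$$T(n) = \sum_{i=1}^{n} f(i) \approx \int_{1}^{n} f(x)\,dx \le n \cdot f(n).$$

NOTE: This proof (and the subsequent derivations in the manuscript) intentionally adopts continuous summation (integrals, rather than discrete sums) for better convergence, as is permissible in an asymptotic setting.

Here, $O$ is the Big-Oh asymptotic notation (refer to Appendix A.1), and as per the properties of asymptotic notation, if one has a function $g(n)$, and if

$$\exists\, c > 0,\ n_0 > 0 \ \text{such that}\ 0 \le g(n) \le c \cdot h(n) \quad \forall\, n \ge n_0,$$

then

$$g(n) = O\big(h(n)\big).$$

Thus, $\exists\, c = 1,\ n_0 = 1$, such that

$$T(n) \le c \cdot n \cdot f(n) \implies T(n) = O\big(n \cdot f(n)\big).$$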
□
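The inequality behind Corollary 1 can be checked numerically for a few cost functions. The sketch below is illustrative (`search_o_sort_cost` and the `COSTS` table are hypothetical helpers): it evaluates $T(n) = \sum_{i=1}^{n} f(i)$ directly and compares it with $n \cdot f(n)$. The iterated-logarithm cost of Interpolation Search is left out because $\log\log i$ is undefined at $i = 1$.

```python
import math
from typing import Callable, Dict

def search_o_sort_cost(f: Callable[[int], float], n: int) -> float:
    """T(n) = f(1) + f(2) + ... + f(n), the Search-o-Sort summation."""
    return sum(f(i) for i in range(1, n + 1))

# Per-search cost functions for a few intermediate searches.
COSTS: Dict[str, Callable[[int], float]] = {
    "binary (log2 i)": lambda i: math.log2(i),
    "jump (sqrt i)": lambda i: math.sqrt(i),
    "linear (i)": lambda i: float(i),
}

if __name__ == "__main__":
    n = 10_000
    for name, f in COSTS.items():
        t = search_o_sort_cost(f, n)
        print(f"{name:16s} T(n)={t:14.1f}  n*f(n)={n * f(n):14.1f}  ratio={t / (n * f(n)):.3f}")
```

In every case the ratio $T(n) / (n f(n))$ stays at most 1, as the corollary requires; for $f(i) = \sqrt{i}$ it tends to $2/3$, the constant lost when the sum is replaced by the integral.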

In the following subsections, novel sorting paradigms that use the k-ary, Interpolation, Extrapolation, and Jump searching techniques as the intermediate search are proposed and investigated, and the aforementioned corollary (Equation (4)) is verified for each.

2.2. The Realization

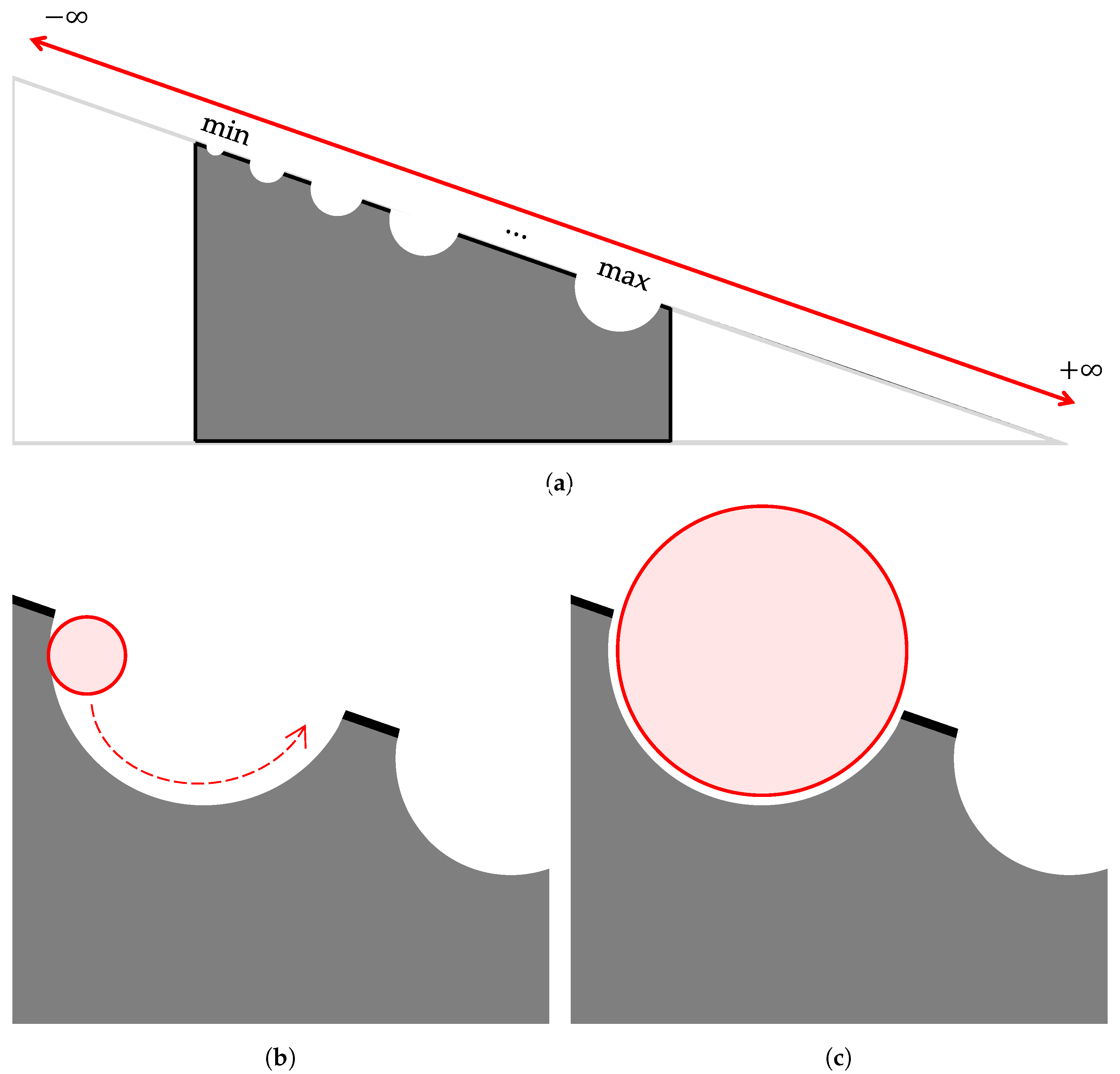

At first glance, the Search-o-Sort theory might appear circular, as it invokes binary search (which requires ordered data) as an intermediate step of sorting, but this is not the case. This section bridges the proposed theory and its realization. To this end, consider an infinitely long incline, as depicted in Figure 2, with a hole on the hypotenuse for each integer. The list to be sorted consists of a fixed set of integers, which are to be sorted by their magnitude. Consider each integer as a sphere of the respective radius and polarity; for example, the integer +7 would fit inside the hole of radius 7 units with positive polarity. If this ball passes by the −7 hole, it would be repelled because the polarities do not match, and even if it falls inside a hole of smaller radius, it would be thrown off by its inertial force (refer to Figure 2b). It will only fit inside a hole if both the polarity and the radius match (refer to Figure 2c). We would roll the ball corresponding to each integer in the list along the incline and let it roll until it finds its exact position.

Figure 2.

Infinitely long inclined plane (a), where the hypotenuse represents the ordered integral number system, $\mathbb{Z}$. If the sphere falls in a hole of smaller radius, it will be thrown off by the inertial force (b), but it will fit inside a hole of (nearly, as an exact match might result in a toppling inertial force) the same radius (c).

Since checking each hole would take infinite time, we initially run the Min-Max Algorithm (refer to Appendix B) in $O(n)$ time to obtain the maximum and minimum of the integral list to be sorted (i.e., assuming the inputs to be linearly distributed). Since $\min \le a_i \le \max$, i.e., $a_i \in [\min, \max]$ for every element, we now start rolling from the smaller index until the higher index is reached. Unrestricted rolling can be thought of as a linear search, where the ball checks each hole one by one in a sequential manner. The same unrestricted rolling can be replaced by binary search, wherein the middle hole between the min and the max is considered first, and based on the suitability of the hole, i.e., whether the radius of the hole is greater or less than that of the ball, the next hole is calculated. This is repeated for all the balls, representing all the entries of the list to be sorted, and thus sorting is visualized as repeated searching.
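The inclined-plane picture can be simulated directly, assuming integer keys. In the Python sketch below (a hypothetical illustration, not the authors' implementation; `roll_into_holes` is an invented name), the holes are the ordered integers from the minimum to the maximum, so each ball's binary search runs over that already-ordered range rather than over the unsorted input. Python's built-in `min` and `max` stand in for the Min-Max Algorithm of Appendix B.

```python
from typing import Dict, List

def roll_into_holes(values: List[int]) -> List[int]:
    """Simulate the inclined-plane realization for a list of integers.

    Holes are the ordered integers lo..hi, so the binary search runs over this
    ordered range, never over the unsorted input.
    """
    if not values:
        return []
    lo, hi = min(values), max(values)          # O(n) scan bounds the incline
    counts: Dict[int, int] = {}
    for ball in values:
        left, right = lo, hi                    # each ball searches the hole range
        while left <= right:
            hole = (left + right) // 2          # middle hole of the current range
            if hole == ball:                    # radius and polarity match: it fits
                counts[hole] = counts.get(hole, 0) + 1
                break
            if hole < ball:
                left = hole + 1                 # hole too small: the ball rolls on
            else:
                right = hole - 1                # hole too large: look lower
    # Reading the holes from lo to hi yields the sorted order.
    return [h for h in range(lo, hi + 1) for _ in range(counts.get(h, 0))]

if __name__ == "__main__":
    print(roll_into_holes([7, -7, 3, 0, 3, -2]))
```

Each ball's search costs $O(\log(\max - \min))$, but the final sweep over the holes costs time proportional to $\max - \min$, which is why the realization assumes linearly distributed inputs; a range with large gaps would let that sweep dominate.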

2.3. k-Ary Sort

In k-ary Search, the list is divided into k parts, and the key (the target value being searched for) is compared with the elements at the partition boundaries. If it is not found there, inequalities between the key and these boundary elements determine the part in which the key must lie, and the search continues within that part. This can be considered the general case of the family to which Binary Search (2-ary search) and Ternary Search (3-ary search) belong. As the generic member of all possible partition searches, k-ary Search combines the characteristics shared by all members of the family. In this generic search, the data are divided into k (approximately) equal parts, with $k-1$ indices that serve as the points of supervision for the search. The generalized equation of the $i$-th point of supervision, relative to the initial bounds, is

$$m_i = l + \left\lfloor \frac{i\,(r - l)}{k} \right\rfloor,$$

where $l$ and $r$ are the lowest and highest indices of the current search space, and $1 \le i \le k-1$.
It works on the principle of Divide and Conquer, where the whole chunk is divided into smaller sub-parts, and solved further. Algorithm 1 shows the Pseudocode for the k-ary Search Algorithm.

Algorithm 1. Pseudocode for the k-ary Search.
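A runnable counterpart to Algorithm 1 can be sketched in Python as follows. It is an illustration, not the authors' pseudocode: `kary_search`, `average_probes`, and `predicted_average` are hypothetical names, and the points of supervision are computed as $lo + \lfloor i\,(size + 1)/k \rfloor - 1$, a small variant of the $m_i$ formula above chosen (as an assumption) so that $n = k^m - 1$ keys form a complete $k$-ary search tree. One probe means one pass over the current segment's points of supervision.

```python
from fractions import Fraction
from typing import List, Tuple

def kary_search(arr: List[int], key: int, k: int = 3) -> Tuple[int, int]:
    """Return (index or -1, probes) for `key` in the sorted list `arr`."""
    if k < 2:
        raise ValueError("k must be at least 2")
    lo, hi, probes = 0, len(arr) - 1, 0
    while lo <= hi:
        probes += 1
        size = hi - lo + 1
        # Points of supervision split [lo, hi] into k (nearly) equal parts.
        points = sorted({lo + (i * (size + 1)) // k - 1 for i in range(1, k)})
        points = [p for p in points if lo <= p <= hi]
        for p in points:
            if arr[p] == key:
                return p, probes
        # Keep only the part whose bounds can still contain the key.
        for p in points:
            if key < arr[p]:
                hi = p - 1
                break
            lo = p + 1
    return -1, probes

def average_probes(n: int, k: int) -> Fraction:
    """Average probes over all n keys of the list [0, 1, ..., n - 1]."""
    arr = list(range(n))
    return Fraction(sum(kary_search(arr, x, k)[1] for x in arr), n)

def predicted_average(m: int, k: int) -> Fraction:
    """Closed form (n + 1)/n * m - 1/(k - 1) for n = k**m - 1 keys."""
    n = k ** m - 1
    return Fraction(n + 1, n) * m - Fraction(1, k - 1)

if __name__ == "__main__":
    print(kary_search([1, 3, 5, 7, 9], 7, k=3))   # -> (3, 1)
    for k in (2, 3, 4):
        print(k, average_probes(k ** 3 - 1, k), predicted_average(3, k))
```

For $n = k^m - 1$ the measured average matches the closed form $\frac{n+1}{n}\log_k(n+1) - \frac{1}{k-1}$ exactly, which is one way to sanity-check the average-case derivation that follows.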

Now, some of the important metrics regarding this k-ary Search Algorithm are as follows:

- The worst-case time complexity of the k-ary searching algorithm is $O(\log_k n)$, which occurs if the target element lies at one of the bounding indices, i.e., the starting or the ending index.

- The best-case time complexity is $O(1)$, which occurs if the target element lies at one of the points of supervision examined first.

- The average-case time complexity is $O(\log_k n)$, as derived below.

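If the key is present somewhere in the list, and the $n = k^m - 1$ elements are viewed as a complete $k$-ary search tree of height $m = \log_k(n+1)$, then, mathematically,

$$\bar{C}(n) = \frac{1}{n}\sum_{i=1}^{m} i\,N_i,$$

where $N_i$ indicates the number of elements that require a total of $i$ probes, which is equal to $N_i = (k-1)\,k^{i-1}$. Now,

$$\sum_{i=1}^{m} i\,(k-1)\,k^{i-1} = \frac{m\,k^{m+1} - (m+1)\,k^{m} + 1}{k-1} = (n+1)\,m - \frac{n}{k-1}.$$

So,

$$\bar{C}(n) = \frac{n+1}{n}\log_k(n+1) - \frac{1}{k-1} = O(\log_k n).$$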

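If sorting is performed in terms of the generic k-ary Search, the time, as per the computational complexities, will be

$$T(n) = \log_k n + \log_k (n-1) + \cdots + \log_k 1,$$

which can be further delineated as

$$T(n) = \log_k(n!) = \frac{\ln(n!)}{\ln k}.$$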
As mentioned earlier, there exist numerous approximations for the general factorial. Here, the result is obtained using the “Very Accurate Approximations for the Factorial Function” by Necdet Batir [10]. According to Batir,

Making use of the approximation from Equation (5),

which, in asymptotic notation, is of the order of

$$T(n) = O(n \log_k n).$$

This follows the Search-o-Sort theory, as $f(n) = \log_k n$ gives $O(n \cdot f(n)) = O(n \log_k n)$.
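Since $\log_k(n!) = \ln(n!)/\ln k$, the k-ary Sort cost can be evaluated without summing term by term. The sketch below (`kary_sort_probes` is a hypothetical helper) computes it with `math.lgamma` and compares it with $n \log_k n$. It counts probes, the quantity summed above; it does not account for the up to $k-1$ comparisons that each probe performs against the points of supervision.

```python
import math

def kary_sort_probes(n: int, k: int) -> float:
    """T(n) = sum_{i=1}^{n} log_k(i) = ln(n!) / ln(k), via the log-gamma function."""
    return math.lgamma(n + 1) / math.log(k)

if __name__ == "__main__":
    n = 1_000_000
    for k in (2, 3, 4, 8):
        t = kary_sort_probes(n, k)
        print(f"k={k}  log_k(n!)={t:14.1f}  n*log_k(n)={n * math.log(n, k):14.1f}")
```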

2.4. Interpolation Sort

Interpolation Search is one of the extensions of Binary Search. It resembles looking up a page in a book whose pages are distinctly numbered: one opens the book near where the page is expected to be, rather than at the middle. Algorithm 2 shows the pseudocode for the Interpolation Search algorithm.
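The probe loop of Algorithm 2 can be sketched in Python as follows, assuming a sorted list of integers and the linear interpolation rule considered by Gonnet et al. [1]. The function names and the probe counter are illustrative additions, not the authors' code.

```python
import random
from typing import List, Tuple

def interpolation_search(arr: List[int], key: int) -> Tuple[int, int]:
    """Return (index or -1, probes) for `key` in the sorted list `arr`."""
    lo, hi, probes = 0, len(arr) - 1, 0
    while lo <= hi and arr[lo] <= key <= arr[hi]:
        probes += 1
        if arr[hi] == arr[lo]:                      # flat range: avoid division by zero
            return (lo, probes) if arr[lo] == key else (-1, probes)
        # Estimate the position from the key's relative magnitude in [arr[lo], arr[hi]].
        pos = lo + (key - arr[lo]) * (hi - lo) // (arr[hi] - arr[lo])
        if arr[pos] == key:
            return pos, probes
        if arr[pos] < key:
            lo = pos + 1
        else:
            hi = pos - 1
    return -1, probes

def average_probes_uniform(n: int, seed: int = 0) -> float:
    """Average probes over all keys of n distinct integers drawn uniformly at random."""
    rng = random.Random(seed)
    arr = sorted(rng.sample(range(100 * n), n))
    total = 0
    for i, key in enumerate(arr):
        idx, probes = interpolation_search(arr, key)
        assert idx == i
        total += probes
    return total / n

if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        print(n, round(average_probes_uniform(n), 2))
```

On uniformly distributed keys the average probe count stays small and grows very slowly, consistent with the $O(\log\log n)$ expectation; on strongly skewed keys (for example, powers of two) the same loop can need a number of probes that grows linearly with the key's position.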

Now, some of the important metrics regarding this Interpolation Search Algorithm are as follows:

- The worst-case time complexity of the Interpolation Searching algorithm is $O(n)$, which occurs when the keys are highly non-uniformly distributed (e.g., growing exponentially), so that each probe eliminates only a few elements.

- The best-case time complexity is $O(1)$, which occurs when the first interpolated probe lands on the target element.

- In the average case, both cases are considered:

  - (a) If the key is present in the list.

  - (b) If it is not present in the list.

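If the key is present somewhere in the list, it is assumed that the current search space is the interval $[0, 1]$ and that the $n$ keys are independently drawn from a uniform distribution. As all the elements in the data cluster are independent of each other, the expected number of probes needed to shrink the search space from $n$ to $\sqrt{n}$ elements is bounded by a constant. Let $p$ denote the position of the key within $[0, 1]$. Each of the $n$ keys is less than or equal to the key with probability $p$, so the probability that exactly $j$ of them are less than or equal to it will be

$$\Pr[X = j] = \binom{n}{j}\,p^{j}\,(1-p)^{n-j}.$$

So, the number of keys expected to be less than or equal to the key will be

$$\mathbb{E}[X] = np,$$

and the variance of this (binomial) distribution will be

$$\operatorname{Var}[X] = np(1-p).$$

Since $0 \le p \le 1$, we have $p(1-p) \le \frac{1}{4}$, so $\operatorname{Var}[X] \le \frac{n}{4}$ and $\sigma \le \frac{\sqrt{n}}{2}$.

Using the bounding conditions of Chebyshev's Inequality [11],

$$\Pr\big[\,|X - np| \ge t\sqrt{n}\,\big] \le \frac{p(1-p)}{t^{2}} \le \frac{1}{4t^{2}}.$$

Let $\bar{C}(n)$ be the average number of probes needed to find a key in an array of size $n$, and let $C$ be the expected number of probes needed to reduce a search space of size $x$ to $\sqrt{x}$. Then

$$\bar{C}(n) \le C + \bar{C}(\sqrt{n}) \implies \bar{C}(n) \le C\log_2\log_2 n + O(1) = O(\log\log n),$$

where $C$ is the bound on the number of probes per reduction step.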

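If sorting is performed in terms of Interpolation Search, the time, as per the computational complexities, will be

$$T(n) = \log\log n + \log\log(n-1) + \cdots + \log\log 1,$$

which can be further delineated as

$$T(n) = \log\big(\log n \cdot \log(n-1) \cdots \log 1\big) = \log\left(\prod_{i=1}^{n}\log i\right).$$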
Since $\log 1 = 0$, the factor $\log 1$ present at the rear end makes the argument zero, and $\log 0$ is undefined. In practice, however, a search in data having a single element takes a negligible amount of time, say $\epsilon$, such that $0 < \epsilon \ll 1$. Indeed, $\log x \to 0$ as $x \to 1$ and $\log x \to -\infty$ as $x \to 0^{+}$, for any base greater than 1. So, it is evident from these observations that the rear-end factor must be handled separately. To normalize this, the arithmetic mean of the range is considered, and under this assumption the rear-end factor can be treated as a small positive constant. Finally, the product could be reformatted as

Coming back to sorting,

$$T(n) \approx \sum_{i=2}^{n} \log\log i \le (n-1)\log\log n,$$

which can be further delineated as

$$T(n) = O(n \log\log n).$$
A tighter bound for this method could be derived by making use of the Incomplete Gamma Function. To recall, for Interpolation Search,

Now,

In practice, $\log\log x$ for $x \le 1$ is mathematically undefined, but in real-life instances of computation such terms contribute only a negligible cost.

where

Algorithm 2. Pseudocode for the Interpolation Search.

Lemma 1.

is related to the bounds of the Exponential Integral, Ei as

where,

which is the Euler–Mascheroni constant.

Making use of Lemma 1,

where, .

So,

Now,

and

So, the maximum value of

will be

and that of

So, from Equation (7),

This follows the Search-o-Sort theory, as $T(n) = O(n \cdot f(n))$ with $f(n) = \log\log n$.

NOTE: A general interpolation paradigm probes at $pos = l + (r - l)\left(\frac{x - A[l]}{A[r] - A[l]}\right)^{\alpha}$. In the seminal work by Gonnet et al. [1], $\alpha = 1$ (linear interpolation) was considered. $\alpha < 1$ skews the probe towards the upper index, while $\alpha > 1$ skews it towards the lower index. This does not affect the asymptotic computational complexity of (non-linear) Interpolation Search, and thus the Search-o-Sort theory holds.

2.5. Extrapolation Sort

In the literature, there are no algorithmic developments that explicitly address Extrapolation Searching; for the evaluation of the proposed Search-o-Sort theory, a logical development is therefore made in Algorithm 3. It searches for the key beyond the learned range of the sorted dataset by extrapolating the (likely) position of the target.

Now, some of the important metrics regarding Extrapolation Search Algorithm are as follows:

- The worst-case time complexity of the Extrapolation Searching algorithm is $O(n)$, which occurs if the data are highly skewed, or the extrapolated estimate repeatedly fails to converge toward the target, thus degrading to a sequential or linear-like search.

- The best-case time complexity is $O(1)$, which occurs if the target element lies near the extrapolated index on the first estimation and is found without any iterative refinement.

- In the average case, both conditions are considered:

  - (a) If the key is present in the list.

  - (b) If it is not present in the list.

Algorithm 3. Pseudocode for the Extrapolation Search.

If the key is present somewhere in the list, it is assumed that the keys are uniformly distributed over the search range, i.e., the $n$ keys are independently drawn from a linear (uniform) distribution. The goal is to predict the index outside the bounds where the value might lie, using extrapolation rather than interpolation. As with Interpolation Search, an expected number of probes of $O(\log\log n)$ is obtained by similar calculations. Therefore, this follows the Search-o-Sort theory, as $f(n) = \log\log n$ gives $O(n \log\log n)$.

2.6. Jump Sort

Jump Search [9] is a searching technique that is well defined for sets whose elements have some order prevailing among them. Its course of action is simple: it checks the elements $A[m], A[2m], \ldots, A[km]$ of the list $A$, where $m$ is the block size, until an element larger than the key of supervision is found. To find the exact position of the key in the list, a 1-ary (linear) search is then performed on the sublist $A[(k-1)m], \ldots, A[km]$. The block size should be chosen optimally; $m = \sqrt{n}$ is one such choice, with $n$ being the cardinality of the list $A$. Algorithm 4 shows the pseudocode for the Jump Search algorithm.

Algorithm 4. Pseudocode for the Jump Search.
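A runnable counterpart to Algorithm 4 can be sketched in Python as follows; `jump_search` and its comparison counter are illustrative, and the default block size $m = \lfloor\sqrt{n}\rfloor$ follows the choice stated above. This sketch re-checks the block's last element during the linear pass, so it can use one comparison more than the $\frac{n}{m} + m - 1$ count given in the text.

```python
import math
from typing import List, Tuple

def jump_search(arr: List[int], key: int, m: int = 0) -> Tuple[int, int]:
    """Return (index or -1, comparisons) for `key` in the sorted list `arr`.

    `m` is the block size; 0 selects the default floor(sqrt(n)).
    """
    n = len(arr)
    if n == 0:
        return -1, 0
    m = m if m > 0 else max(1, math.isqrt(n))
    comparisons, prev, step = 0, 0, m
    # Jump block by block while the last element of the block is smaller than the key.
    while True:
        comparisons += 1
        if arr[min(step, n) - 1] >= key:
            break
        prev, step = step, step + m
        if prev >= n:
            return -1, comparisons        # key exceeds every element
    # 1-ary (linear) search inside the block arr[prev:min(step, n)].
    for i in range(prev, min(step, n)):
        comparisons += 1
        if arr[i] == key:
            return i, comparisons
        if arr[i] > key:
            break
    return -1, comparisons

if __name__ == "__main__":
    data = list(range(0, 200, 2))          # n = 100, so m = 10
    print(jump_search(data, 198), jump_search(data, 3))
```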

Now, some of the important metrics regarding this Jump Search Algorithm are as follows:

- In the worst-case scenario, one has to complete $\frac{n}{m}$ jumps ($n$ being the cardinality and $m$ the block size), and if the last checked value is greater than the element to be searched for, $m - 1$ more comparisons are performed for the linear search. Hence, the number of comparisons in the worst case is $\frac{n}{m} + m - 1$. So, with $m = \sqrt{n}$, the worst-case complexity is $O(\sqrt{n})$.

- The best achievable complexity follows from choosing the block size optimally. The number of comparisons is $g(m) = \frac{n}{m} + m - 1$, and $g'(m) = 1 - \frac{n}{m^{2}}$, so $g(m)$ is minimum when $m = \sqrt{n}$. With that choice, the complexity becomes $O(\sqrt{n})$.

- The average-case complexity for this searching technique is asymptotically equal to the worst-case complexity, $O(\sqrt{n})$.

If sorting is performed in terms of Jump Search, the time, as per the computational complexities, will be

$$T(n) = \sqrt{n} + \sqrt{n-1} + \cdots + \sqrt{1} = \sum_{i=1}^{n}\sqrt{i}.$$
Before moving further, let us consider the following result [12].

Claim.

&

Proof.

Firstly, let us consider a probability distribution $\mu$ and a function $g$ that is $\mu$-measurable. According to Jensen's Inequality [13], for any convex function $\varphi$,

$$\varphi\left(\int g \, d\mu\right) \le \int \varphi \circ g \, d\mu.$$

Now, let us consider a measure $\nu$ such that the probability distribution $\mu$ has a density with respect to $\nu$.

Let us consider , and since

Further,

Finally, it can be concluded that

□

It is known that

By making use of the inequality, , it can be said,

which can be reformatted as . Now, for high values of n, or in just terms, when, , will give us a good bound.

Now, coming back to Sorting,

which, in asymptotic notation, can be represented as of the order of $O(n\sqrt{n})$, or $O(n^{3/2})$ (Equation (9)), in accordance with the assumption mentioned above.

This follows the Search-o-Sort theory, as $f(n) = \sqrt{n}$ gives $O(n \cdot f(n)) = O(n\sqrt{n})$.
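The Jump Sort cost $T(n) = \sum_{i=1}^{n}\sqrt{i}$ can be checked numerically. The sketch below does not reproduce the Jensen-based argument above; it only verifies the elementary integral bounds for an increasing function, $\int_0^n \sqrt{x}\,dx \le T(n) \le \int_1^{n+1}\sqrt{x}\,dx$, which already give $T(n) = \Theta(n^{3/2})$. Both function names are hypothetical helpers.

```python
import math

def jump_sort_cost(n: int) -> float:
    """Search-o-Sort cost with f(i) = sqrt(i): sqrt(1) + sqrt(2) + ... + sqrt(n)."""
    return math.fsum(math.sqrt(i) for i in range(1, n + 1))

def integral_bounds(n: int) -> tuple:
    """Lower and upper integral bounds: (2/3) n^1.5 and (2/3) ((n + 1)^1.5 - 1)."""
    lower = 2.0 / 3.0 * n ** 1.5
    upper = 2.0 / 3.0 * ((n + 1) ** 1.5 - 1.0)
    return lower, upper

if __name__ == "__main__":
    for n in (10, 1_000, 100_000):
        lower, upper = integral_bounds(n)
        t = jump_sort_cost(n)
        print(f"n={n:7d}  {lower:14.1f} <= T(n)={t:14.1f} <= {upper:14.1f}  T/n^1.5={t / n**1.5:.4f}")
```

The ratio $T(n)/n^{3/2}$ settles near $2/3$, so the $O(n\sqrt{n})$ bound is tight up to that constant.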

3. Conclusions

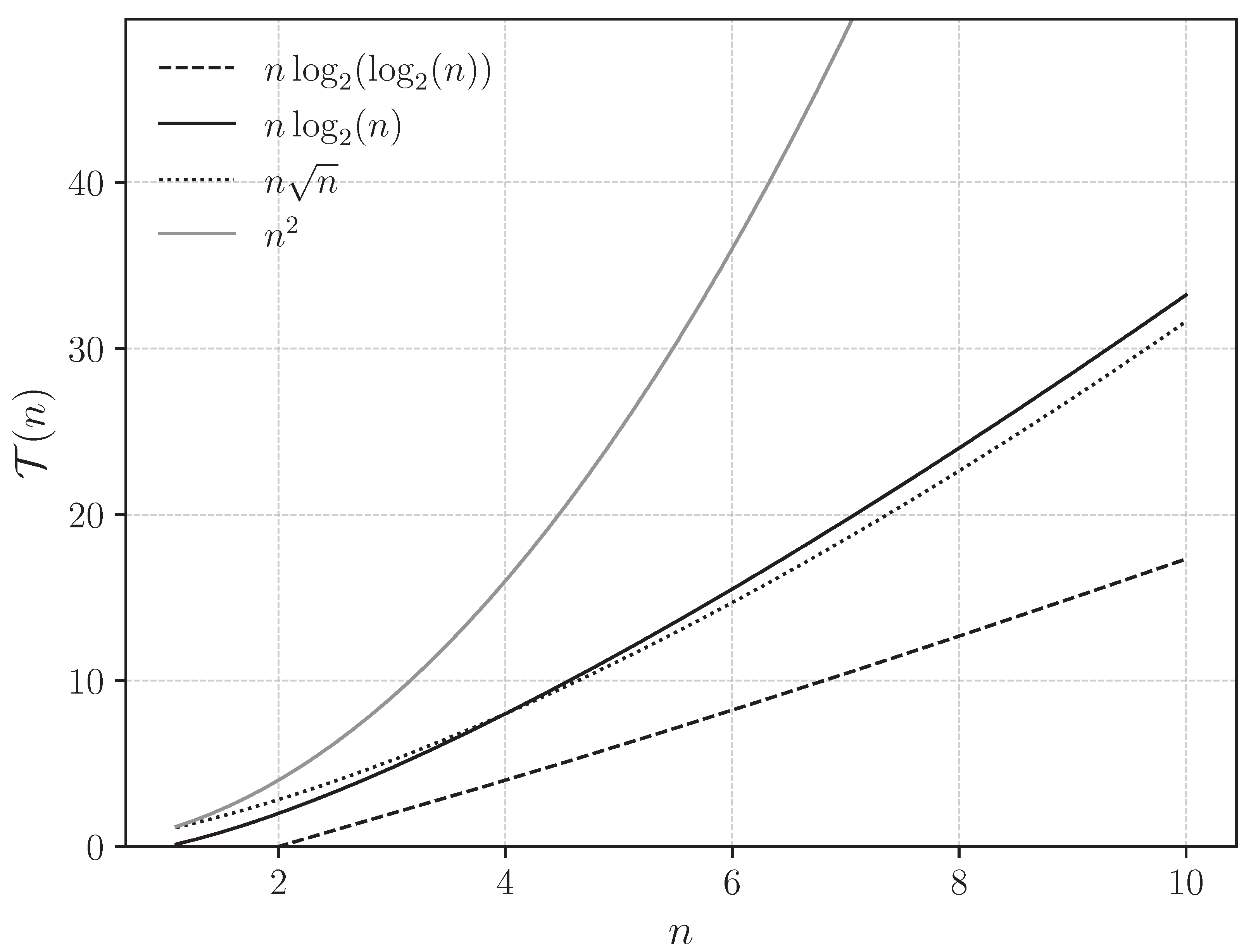

The Search-o-Sort theory is a bridge between sorting and searching algorithms that seeks to merge the two notions constructively. In previous works, the minimum time that could be achieved was $O(n\log n)$, attained by making use of Binary Search. In this research, the domain is extended to a more generic one. Sorting enhanced with k-ary search, which gives a worst-case time complexity of $O(n\log_k n)$, is introduced. Further, we made use of Interpolation Search, which comes with some requisites (uniformly distributed keys); assuming these requirements are met, it achieves a computational time of the order of $O(n\log\log n)$. The method was then refined with the notions of the Incomplete Gamma function, or digamma function, to obtain a tighter bound on the computational complexity in terms of Big-Oh asymptotic notation. Lastly, we made use of Jump Search, which has a computational complexity of the order of $O(\sqrt{n})$ and, when implemented in sorting, gives a computational complexity of $O(n\sqrt{n})$. Some scopes of further research that could be considered are as follows:

- A tighter bound, if possible, could be searched for .

- An even tighter bound, if possible, could be searched for .

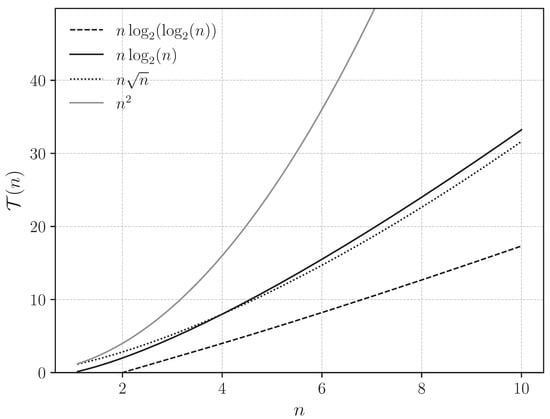

Figure 3 draws a comparative graphical study of the algorithms that are emphasized as a primary part of the research.

Figure 3.

Graphical plots for the Sorting Algorithm, with intermediate search being performed by making use of Interpolation Search, Binary Search, Jump Search, and Linear Search, respectively.

Author Contributions

Conceptualization, A.D. and S.K.; Methodology, A.D.; Software, P.K.K.; Validation, S.K., D.M. and P.K.K.; Formal Analysis, D.M.; Investigation, P.K.K.; Resources, D.M.; Data Curation, A.D.; Writing—Original Draft Preparation, A.D.; Writing—Review and Editing, P.K.K.; Visualization, S.K.; Supervision, P.K.K.; Project Administration, S.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article material. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Asymptotic Notations

Asymptotic notation is a mathematical tool used to understand the growth of a function, particularly in algorithmic analysis. It enables comparison between different algorithms in terms of time or space as the input size grows large (exceeding a threshold, say $n_0$). This section describes five common asymptotic notations: Big-Oh $O$, Big-Omega $\Omega$, Big-Theta $\Theta$, little-oh $o$, and little-omega $\omega$.

Appendix A.1. Big Oh Notation—Upper Bound

Big Oh notation gives an upper bound, not necessarily tight, on the growth of a function. A function $f(n)$ does not grow faster than $g(n)$, up to a constant factor $c$, for sufficiently large $n$. The Big Oh notation is defined as

$f(n) = O(g(n))$ if there exist constants $c > 0$ and $n_0 > 0$ such that for all $n \ge n_0$,

$$0 \le f(n) \le c \cdot g(n).$$

For example, if an algorithm takes at most a constant multiple of $n^{2}$ operations, its time complexity will be $O(n^{2})$.

Appendix A.2. Big Omega Notation—Lower Bound

Big Omega notation gives a lower bound, not necessarily tight, on the growth of a function. A function $f(n)$ grows at least as fast as $g(n)$, up to a constant factor $c$, for sufficiently large $n$. The Big Omega notation is defined as

$f(n) = \Omega(g(n))$ if there exist constants $c > 0$ and $n_0 > 0$ such that for all $n \ge n_0$,

$$0 \le c \cdot g(n) \le f(n).$$

For example, if an algorithm takes at least a constant multiple of $n \log n$ time in the worst case, its time complexity will be $\Omega(n \log n)$.

Appendix A.3. Big Theta Notation—Tight Bound

Big Theta notation gives a tight bound on the growth of a function. A function $f(n)$ grows at the same rate as $g(n)$, up to constant factors $c_1$ and $c_2$, for sufficiently large $n$. The Big Theta notation is defined as

$f(n) = \Theta(g(n))$ if there exist constants $c_1 > 0$, $c_2 > 0$, and $n_0 > 0$ such that for all $n \ge n_0$,

$$0 \le c_1 \cdot g(n) \le f(n) \le c_2 \cdot g(n).$$

For example, if an algorithm's running time is both at most and at least proportional to $n \log n$, its time complexity will be $\Theta(n \log n)$.

Appendix A.4. Little Oh Notation—Strict Upper Bound

Little Oh notation gives a strict upper bound on the growth of a function. A function $f(n)$ grows strictly slower than $g(n)$: for every constant factor $c$, $f(n)$ eventually stays below $c \cdot g(n)$. The Little Oh notation is defined as

$f(n) = o(g(n))$ if for every constant $c > 0$ there exists $n_0 > 0$ such that for all $n \ge n_0$,

$$0 \le f(n) < c \cdot g(n).$$

For example, if an algorithm's running time is linear, $n$, its time complexity can be interpreted as $o(n^{2})$.

Appendix A.5. Little Omega Notation—Strict Lower Bound

Little Omega notation gives a strict lower bound on the growth of a function. A function $f(n)$ grows strictly faster than $g(n)$: for every constant factor $c$, $f(n)$ eventually stays above $c \cdot g(n)$. The Little Omega notation is defined as

$f(n) = \omega(g(n))$ if for every constant $c > 0$ there exists $n_0 > 0$ such that for all $n \ge n_0$,

$$0 \le c \cdot g(n) < f(n).$$

For example, if an algorithm takes linear time in the worst case, its time complexity can be interpreted as $\omega(\log n)$.

Appendix B. Min-Max Algorithm

The Min-Max Algorithm (refer to Algorithm A1) finds both the minimum and the maximum of an integral list (linearly distributed) with $n$ numbers in $\lceil 3n/2 \rceil - 2$ comparisons. A precise count of the number of comparisons can be obtained as follows:

- For even $n$ (i.e., $n = 2k$), one comparison (between the first two elements) is needed to initialize both the min and the max. In pairwise processing, since the algorithm starts from the third element of the list, the remaining $n - 2$ elements are processed in pairs, and since $n$ is even, $\frac{n-2}{2}$ is an integer. For each pair $(a, b)$, three comparisons are needed: one to compare $a$ and $b$, one to compare the smaller of these with the min, and one to compare the larger of these with the max. In total, for even $n$, $1 + \frac{3(n-2)}{2} = \frac{3n}{2} - 2$ comparisons are needed.

- For odd $n$ (i.e., $n = 2k + 1$), no comparison is needed to initialize the min and the max, as both are set to the first element of the list. In pairwise processing, since the algorithm starts from the second element of the list, the remaining $n - 1$ elements are processed in pairs, and each pair again needs three comparisons. So, in total, for odd $n$, $\frac{3(n-1)}{2}$ comparisons are needed.

Algorithm A1. Pseudocode for the Min-Max algorithm.
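The pairwise procedure described above can be sketched in Python as follows. This is an illustrative reconstruction from the prose (`min_max` is a hypothetical name, not the authors' Algorithm A1); it returns the comparison count so that the $\frac{3n}{2} - 2$ and $\frac{3(n-1)}{2}$ totals can be checked directly.

```python
from typing import List, Tuple

def min_max(values: List[int]) -> Tuple[int, int, int]:
    """Return (minimum, maximum, comparisons) using pairwise processing."""
    n = len(values)
    if n == 0:
        raise ValueError("min_max() requires a non-empty list")
    comparisons = 0
    if n % 2 == 0:
        comparisons += 1                      # one comparison initializes both
        if values[0] < values[1]:
            lo, hi = values[0], values[1]
        else:
            lo, hi = values[1], values[0]
        start = 2
    else:
        lo = hi = values[0]                   # no comparison needed for odd n
        start = 1
    for i in range(start, n, 2):              # the remaining elements come in pairs
        a, b = values[i], values[i + 1]
        comparisons += 3                      # a vs b, smaller vs min, larger vs max
        small, large = (a, b) if a < b else (b, a)
        if small < lo:
            lo = small
        if large > hi:
            hi = large
    return lo, hi, comparisons

if __name__ == "__main__":
    print(min_max([3, 8, -2, 7, 5, 1]))        # even n = 6: 1 + 3 * 2 = 7 comparisons
```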

References

- Gonnet, G.H.; Rogers, L.D.; George, J.A. An algorithmic and complexity analysis of interpolation search. Acta Inform. 1980, 13, 39–52.

- Manna, Z.; Waldinger, R. The origin of a binary-search paradigm. Sci. Comput. Program. 1987, 9, 37–83.

- Chadha, A.R.; Misal, R.; Mokashi, T. Modified binary search Algorithm. arXiv 2014, arXiv:1406.1677.

- Thwe, P.P.; Kyi, L.L.W. Modified binary search Algorithm for duplicate elements. Int. J. Comput. Commun. Eng. Res. (IJCCER) 2014, 2. Available online: https://www.researchgate.net/publication/326088292_Modified_Binary_Search_Algorithm_for_Duplicate_Elements (accessed on 29 March 2025).

- Wan, Y.; Wang, M.; Ye, Z.; Lai, X. A feature selection method based on modified binary coded ant colony optimization Algorithm. Appl. Soft Comput. 2016, 49, 248–258.

- Bajwa, M.S.; Agarwal, A.P.; Manchanda, S. Ternary search Algorithm: Improvement of binary search. In Proceedings of the 2015 2nd International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 11–13 March 2015; pp. 1723–1725.

- Dutta, A.; Ray, S.; Kumar, P.K.; Ramamoorthy, A.; Pradeep, C.; Gayen, S. A Unified Vista and Juxtaposed Study on k-ary Search Algorithms. In Proceedings of the 2024 2nd International Conference on Networking, Embedded and Wireless Systems (ICNEWS), Bangalore, India, 22–23 August 2024; pp. 1–6.

- Perl, Y.; Reingold, E.M. Understanding the complexity of interpolation search. Inf. Process. Lett. 1977, 6, 219–222.

- Shneiderman, B. Jump searching: A fast sequential search technique. Commun. ACM 1978, 21, 831–834.

- Batir, N. Very accurate approximations for the factorial function. J. Math. Inequal 2010, 4, 335–344.

- Olkin, I.; Pratt, J.W. A multivariate Tchebycheff inequality. Ann. Math. Stat. 1958, 29, 226–234.

- Gesellschaft der Wissenschaften zu Göttingen; Georg-August-Universität Göttingen. Nachrichten von der Königl. Gesellschaft der Wissenschaften und der Georg-Augusts-Universität zu Göttingen: Aus dem Jahre 1884. Available online: https://gdz.sub.uni-goettingen.de/id/PPN252457072 (accessed on 29 March 2025).

- Jensen, J.L.W.V. Sur les fonctions convexes et les inégalités entre les valeurs moyennes. Acta Math. 1906, 30, 175–193.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).