Introducing the Leal Method for the Approximation of Integrals with Asymptotic Behaviour: Special Functions

Abstract

1. Introduction

2. Introduction to Leal Method

- 1.

- 2.

- If with j expansion points, then we solve (3) symbolically for variables and finally use the least squares method with the remaining variables to fit with respect to . The suggested interval to perform the fitting is the zone that exhibits the poorest accuracy for the pure asymptotic solution ().
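The last step above (fix some coefficients from the expansions, then fit the remaining ones by least squares on a chosen interval) can be sketched as follows. This is only an illustration under stated assumptions: the trial function `tanh(a*x + b*x**3)` for the error function, the fitting interval `[0.5, 2.5]`, and the helper names `trial`, `sse`, and `golden_section_min` are all hypothetical choices, not taken from the paper. Here `a` is fixed by matching the first term of the power series of erf at `x = 0`, and the single remaining coefficient `b` is obtained by minimizing the sum of squared errors.

```python
import math

# Assumed trial function (NOT the paper's expression): erf(x) ~ tanh(a*x + b*x**3).
# tanh already tends to 1 for large x, as erf does.
# 'a' is fixed by matching the leading series term erf(x) ~ 2*x/sqrt(pi) at x = 0.
A = 2.0 / math.sqrt(math.pi)


def trial(x, b, a=A):
    """Hypothetical trial function with one free coefficient b."""
    return math.tanh(a * x + b * x**3)


def sse(b, xs):
    """Sum of squared errors between the trial function and erf on the samples xs."""
    return sum((trial(x, b) - math.erf(x)) ** 2 for x in xs)


def golden_section_min(f, lo, hi, tol=1e-10):
    """Minimize a one-variable function on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    c = hi - inv_phi * (hi - lo)
    d = lo + inv_phi * (hi - lo)
    fc, fd = f(c), f(d)
    while hi - lo > tol:
        if fc < fd:
            # Minimum lies in [lo, d]; the old c becomes the new d.
            hi, d, fd = d, c, fc
            c = hi - inv_phi * (hi - lo)
            fc = f(c)
        else:
            # Minimum lies in [c, hi]; the old d becomes the new c.
            lo, c, fc = c, d, fd
            d = lo + inv_phi * (hi - lo)
            fd = f(d)
    return 0.5 * (lo + hi)


# Assumed fitting interval: the mid-range zone [0.5, 2.5].
fit_points = [0.5 + 2.0 * i / 200 for i in range(201)]
b_fit = golden_section_min(lambda b: sse(b, fit_points), 0.0, 0.5)

# Compare the maximum absolute error on [0, 4] with and without the fitted term.
check_points = [4.0 * i / 400 for i in range(401)]
max_err_fit = max(abs(trial(x, b_fit) - math.erf(x)) for x in check_points)
max_err_series_only = max(abs(trial(x, 0.0) - math.erf(x)) for x in check_points)

print("fitted b:", b_fit)
print("max error with fitted b:", max_err_fit)
print("max error with b = 0:", max_err_series_only)
```

With several free coefficients, the one-dimensional search would be replaced by a multivariate least squares solver; the one-parameter case is used here only to keep the sketch dependency-free.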

3. Least Squares Method (LSM)

4. Leal Method Applied to Approximate Special Functions

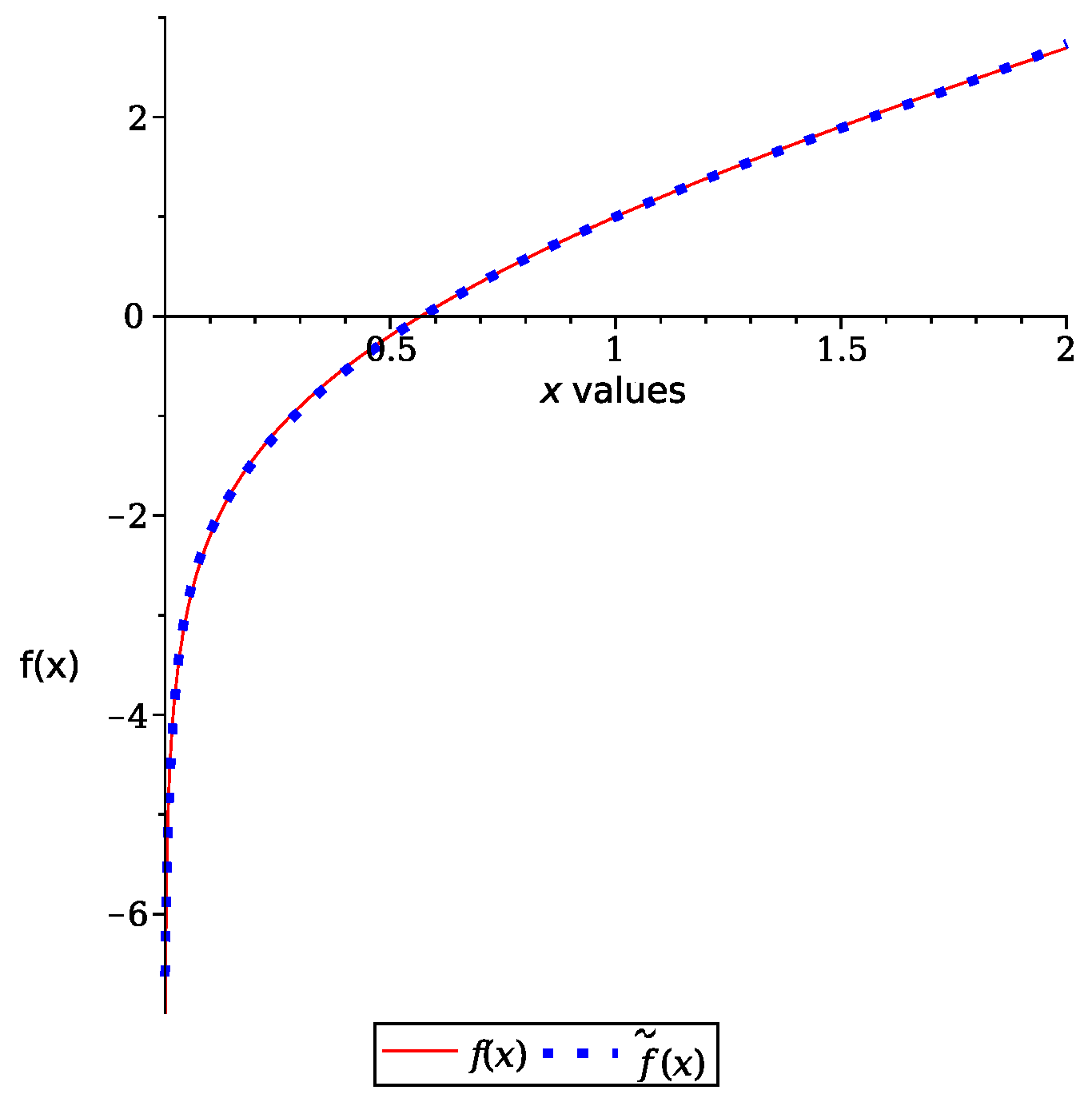

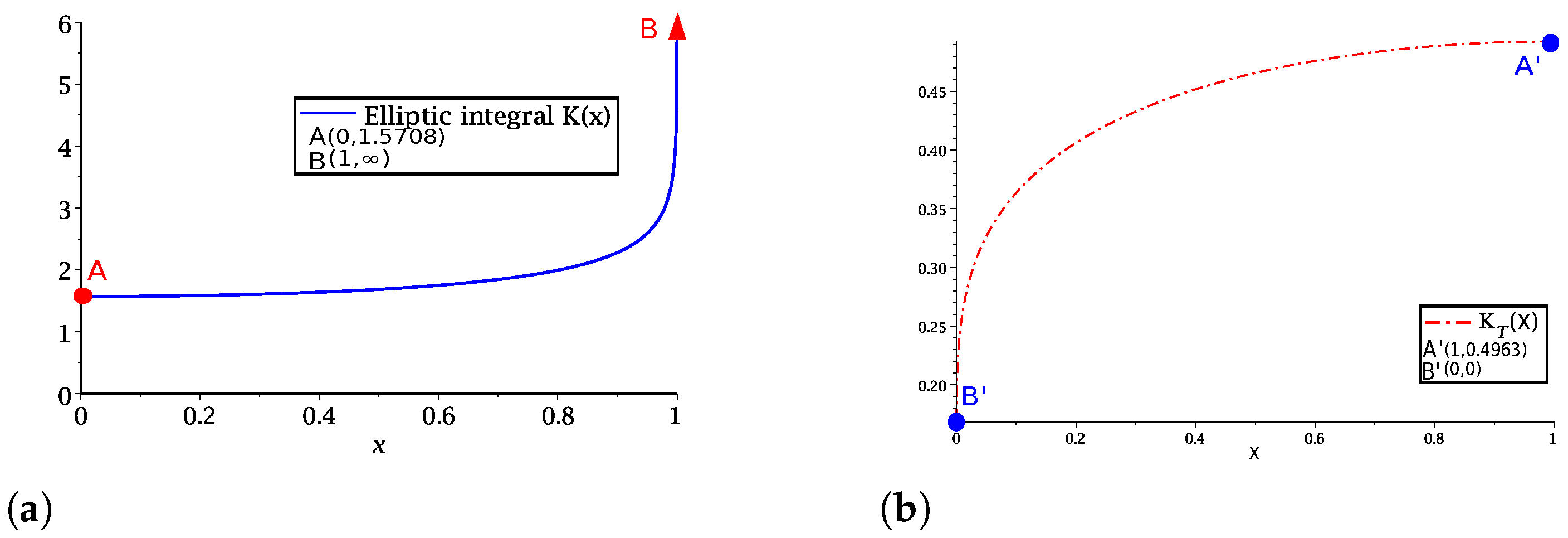

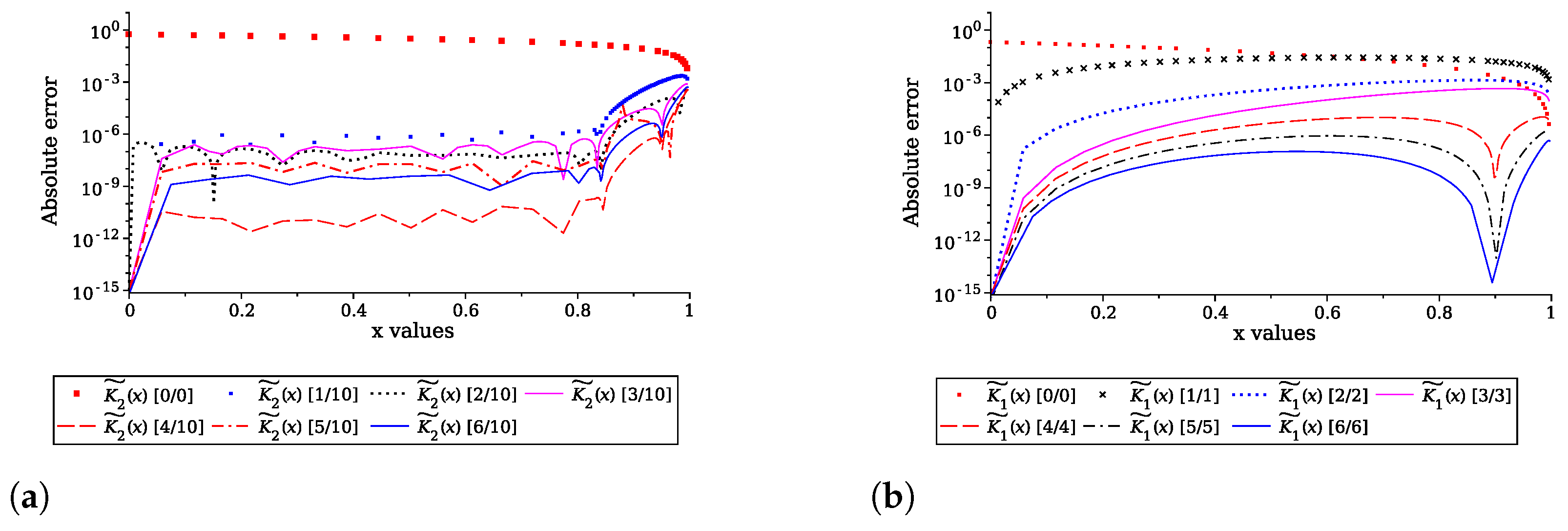

4.1. Approximation Procedure Using Variation 2 of Leal Method

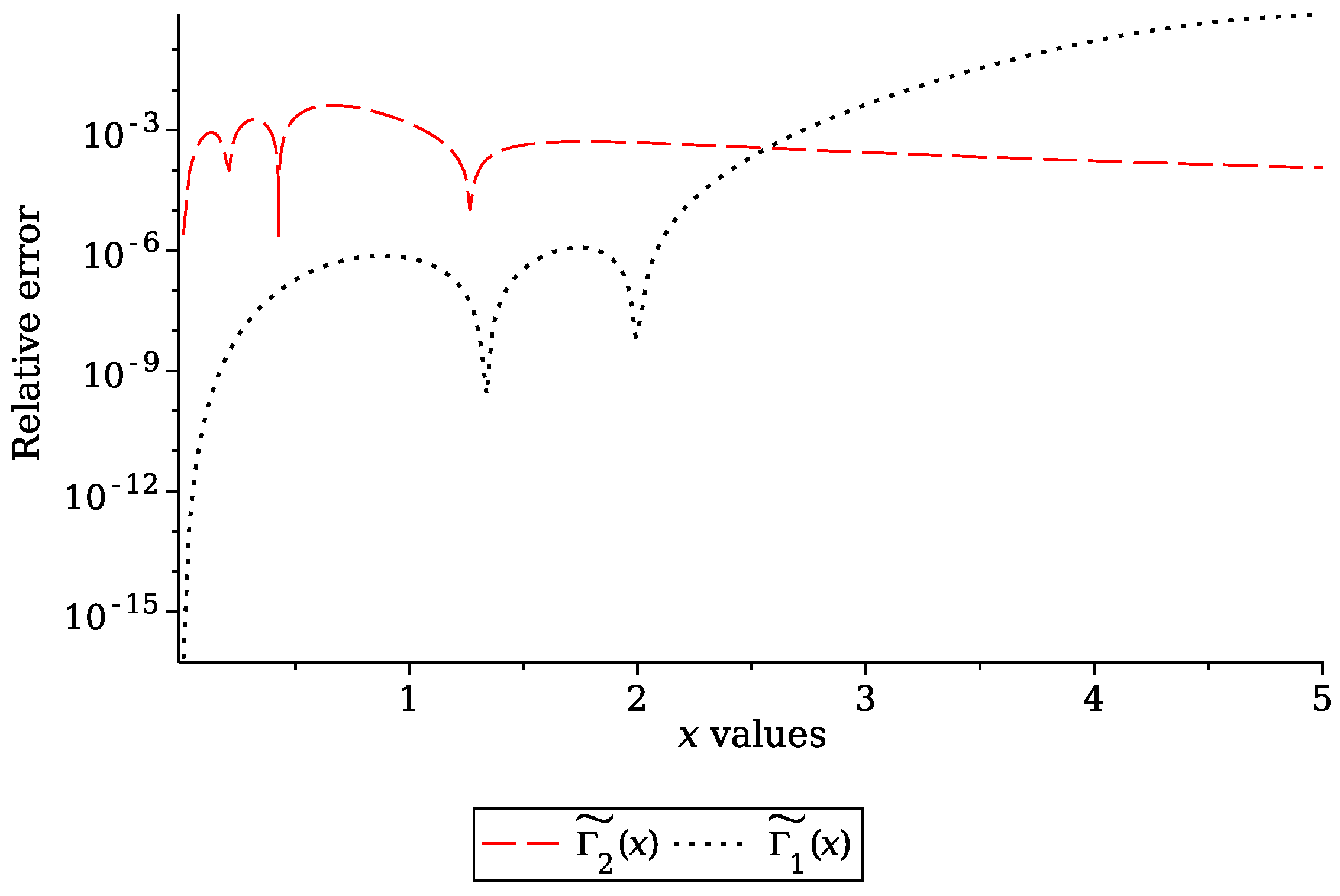

4.2. Approximation Procedure for Using Variation 1 of Leal Method

4.3. Approximation of Using Variation 2 of Leal Method

4.4. Approximation of Using Variation 1 of Leal Method

4.5. Approximation of Using Variation 2 of Leal Method

4.6. Approximation of Error Function Using Variation 2 of Leal Method

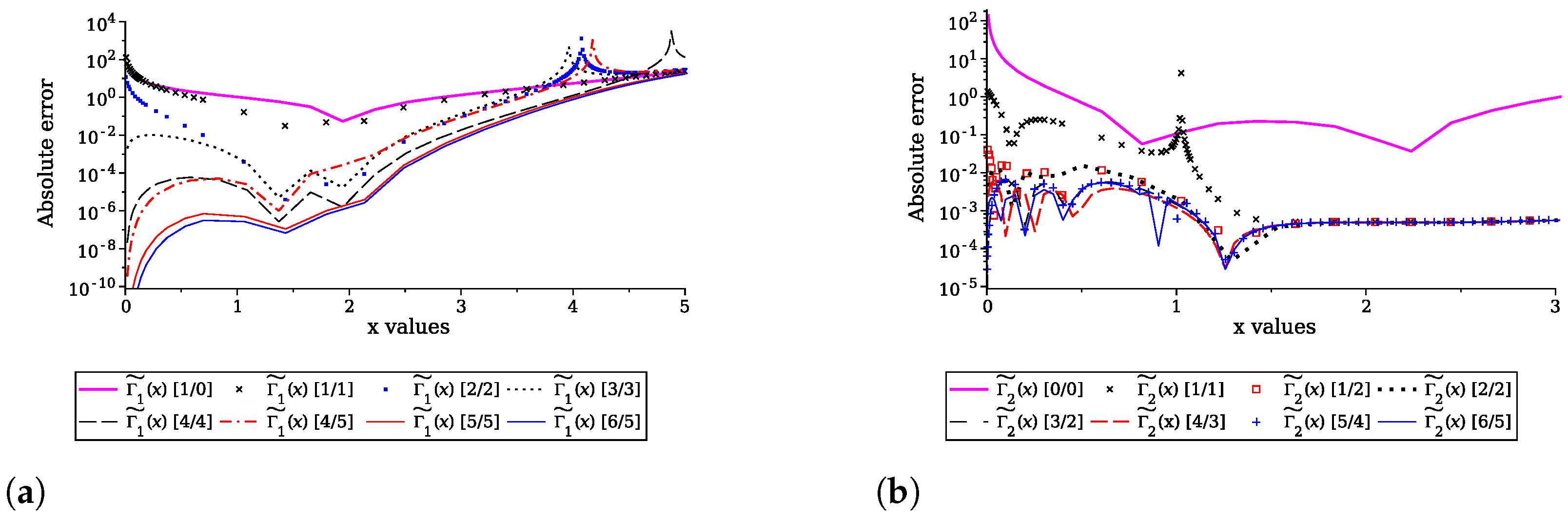

5. Computing Convergence

6. Numerical Comparison and Discussion

- The Leal method is capable of providing algebraic expressions, similar to other approximation techniques.

- The Leal method can be coupled with power series expansions and asymptotic expansions, as reported in the case studies of this work. This strategy can considerably enlarge the domain of convergence (see the case studies). Further research will explore combining the Leal method with other techniques, such as the Variational Iteration Method and the Adomian Decomposition Method.

- The Leal method can be applied without requiring the aforementioned coupling with other approximate methods (see Variation 1 of the Leal method in Section 4.2 and Section 4.4). In this case, the Leal method can be applied using basic knowledge of calculus and numerical methods; in contrast, some approximate methods are too cumbersome and require specialized knowledge. Taking these advantages into account, this method can also be applied to solve nonlinear ordinary differential equations.
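To illustrate why coupling a power series with an asymptotic expansion can enlarge the useful domain, the following sketch compares a truncated Taylor series of the error function with the leading term of its large-argument expansion. It is a generic illustration, not one of the paper's case studies; the function names `erf_taylor` and `erf_asymptotic`, the number of terms, and the sample points are assumptions made for this example.

```python
import math


def erf_taylor(x, terms=6):
    """Truncated Maclaurin series: erf(x) = 2/sqrt(pi) * sum (-1)^n x^(2n+1) / (n! (2n+1))."""
    s = sum(
        (-1) ** n * x ** (2 * n + 1) / (math.factorial(n) * (2 * n + 1))
        for n in range(terms)
    )
    return 2.0 / math.sqrt(math.pi) * s


def erf_asymptotic(x):
    """Leading large-x term: erf(x) ~ 1 - exp(-x^2) / (x * sqrt(pi)), valid for x > 0."""
    return 1.0 - math.exp(-x * x) / (x * math.sqrt(math.pi))


# The series is accurate near the origin and fails far from it;
# the asymptotic form behaves the opposite way.
for x in (0.5, 3.0):
    exact = math.erf(x)
    print(
        "x =", x,
        "| series error:", abs(erf_taylor(x) - exact),
        "| asymptotic error:", abs(erf_asymptotic(x) - exact),
    )
```

Neither expansion alone covers the whole positive axis, which is the gap that a combined approximation with fitted coefficients is meant to close.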

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Maple Code

Appendix A.2. Special Functions Equations

| Case Study | Variant | Order | Chosen Interval |
|---|---|---|---|
| 1 | 1 | | |
| | 2 | | |
| 2 | 1 | | |
| | 2 | | |
| 3 | 2 | | |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vazquez-Leal, H.; Sandoval-Hernandez, M.A.; Filobello-Nino, U.A.; Huerta-Chua, J.; Aguilar-Velazquez, R.; Dominguez-Chavez, J.A. Introducing the Leal Method for the Approximation of Integrals with Asymptotic Behaviour: Special Functions. AppliedMath 2025, 5, 28. https://doi.org/10.3390/appliedmath5010028