On One Problem of the Nonlinear Convex Optimization

Abstract

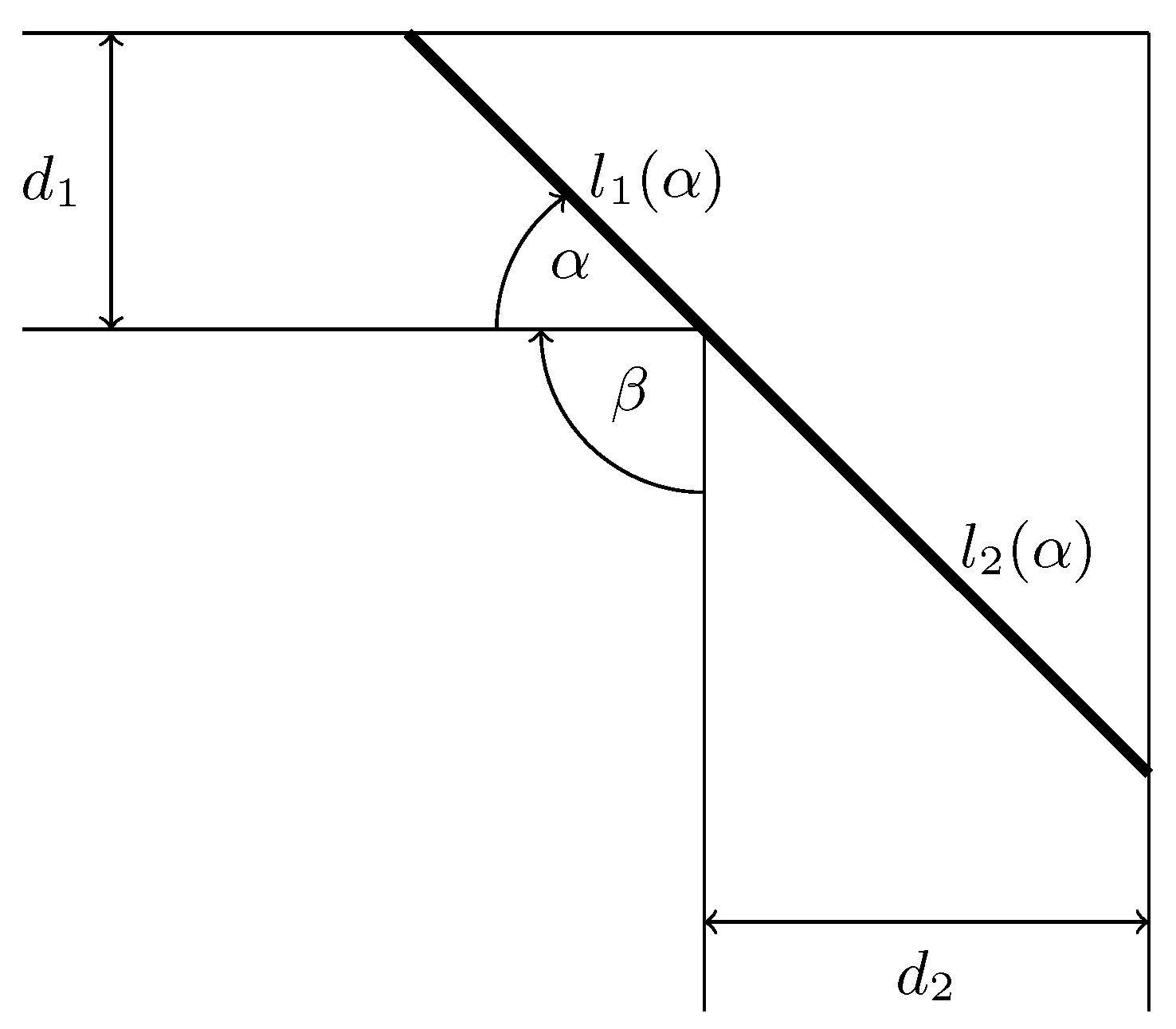

1. Formulation of the Problem

- They are close to being the broadest class of problems we know how to solve efficiently.

- They enjoy nice geometric properties (e.g., local minima are global).

- Excellent software packages are available that readily solve a large class of convex problems.

- Numerous important problems in a variety of application domains are convex.

A Brief History of Convex Optimization

2. Analytical and Numerical Solution of the Convex Optimization Problem

    syms alpha                      % only alpha stays symbolic
    d1 = 1;                         % width of the first channel
    d2 = 2;                         % width of the second channel
    beta = pi/2;                    % angle between the navigable channels
    l = (d1/sin(alpha)) + (d2/sin(alpha+beta));   % the cost function from (1)
    la = diff(l, alpha);            % derivative of the cost function with respect to alpha
    eqn = la == 0;                  % stationarity condition
    num = vpasolve(eqn, alpha, [0 pi-beta]);      % numerical solver on the interval (0, pi-beta)
    solution_max_length = simplify(subs(l, alpha, num), 'Steps', 20)   % output
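As a cross-check of the listing above, the right-angle case $\beta = \pi/2$ can be worked by hand. This is a sketch under the assumption that the cost function is exactly the expression for `l` in the listing; the symbols $\alpha^{*}$ and $S$ are introduced here for illustration only. Since $\sin(\alpha + \pi/2) = \cos\alpha$, the cost function on $(0, \pi/2)$ and its stationarity condition are

$$
l(\alpha) = \frac{d_1}{\sin\alpha} + \frac{d_2}{\cos\alpha},
\qquad
l'(\alpha) = -\frac{d_1\cos\alpha}{\sin^{2}\alpha} + \frac{d_2\sin\alpha}{\cos^{2}\alpha} = 0
\;\Longrightarrow\;
\tan^{3}\alpha^{*} = \frac{d_1}{d_2}.
$$

Writing $S = d_1^{2/3} + d_2^{2/3}$, this gives $\sin\alpha^{*} = d_1^{1/3}/\sqrt{S}$ and $\cos\alpha^{*} = d_2^{1/3}/\sqrt{S}$, so that

$$
l(\alpha^{*}) = d_1^{2/3}\sqrt{S} + d_2^{2/3}\sqrt{S} = \left(d_1^{2/3} + d_2^{2/3}\right)^{3/2}.
$$

Both $1/\sin\alpha$ and $1/\cos\alpha$ are convex on $(0, \pi/2)$, so this stationary point is the global minimum. For $d_1 = 1$, $d_2 = 2$ the formula gives $\left(1 + 2^{2/3}\right)^{3/2} \approx 4.16$, which is the value the numerical solver should reproduce if the assumption above holds.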

3. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| | | | | | | | |
|---|---|---|---|---|---|---|---|
| , | 2.00 | 2.07 | 2.16 | 2.82 | 4.00 | 5.22 | 127.32 |
| , | 4.00 | 4.10 | 4.25 | 5.40 | 7.54 | 9.80 | 237.60 |
| , | 6.00 | 6.12 | 6.29 | 7.77 | 10.69 | 13.84 | 333.35 |
| , | 9.00 | 9.22 | 9.54 | 12.01 | 16.70 | 21.69 | 524.70 |
| , | 11.00 | 11.23 | 11.58 | 14.38 | 19.84 | 25.71 | 620.27 |
| , | 13.00 | 13.24 | 13.60 | 16.70 | 22.90 | 29.61 | 712.44 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Vrabel, R. On One Problem of the Nonlinear Convex Optimization. AppliedMath 2022, 2, 512-517. https://doi.org/10.3390/appliedmath2040030
