1. Introduction

Over the last few decades, detecting influences between variables of a dynamical system from time series data has shed light on the interplay between quantities in various fields, such as between genes [1,2], between wealth and transportation [3], between marine populations [4], or within the Earth’s climate [5,6]. Such analyses can reveal the driving forces behind complex phenomena and uncover connections that would otherwise have remained hidden. For example, novel candidates for cancer-causing genes have recently been detected by systematic dependency analyses of patient data [7,8,9].

There exist various conceptually different numerical methods that aim to detect relations between variables from data. A prominent example is Convergent Cross-Mapping (CCM) [10], introduced in 2012, which builds on the delay-embedding theorem of Takens [11]. Another is Granger causality [12,13], which was first used in economics in the 1960s and is based on the intuition that if a variable X forces Y, then values of X should help to predict Y. Much simpler than these methods is the well-known Pearson correlation coefficient [14], introduced in the 19th century and one of many methods that detect linear dependencies [15]. Many more exist, such as the Mutual Information Criterion [16], the Heller–Gorfine test [17], Kendall’s τ [18], the transfer entropy method [19], the Hilbert–Schmidt independence criterion [20], or the Kernel Granger test [21].

Recently, a library summarising a broad range of statistical methods was presented in [22], together with many examples. This illustrates the large number of existing statistical techniques for detecting influences between variables of a dynamical system. However, as also pointed out in [22], such methods have different strengths and shortcomings, which make them well suited for some scenarios and ill suited for others. For example, CCM requires data coming from dynamics on an attracting manifold and suffers when the data are subject to stochastic effects. Granger causality needs an accurate model formulation for the dynamics, which can be difficult to find. Pearson’s correlation coefficient requires statistical assumptions on the data that are often not met. The authors of [22] therefore suggest applying several different methods to the same problem, instead of only a single one, in order to best find and interpret relations between variables.

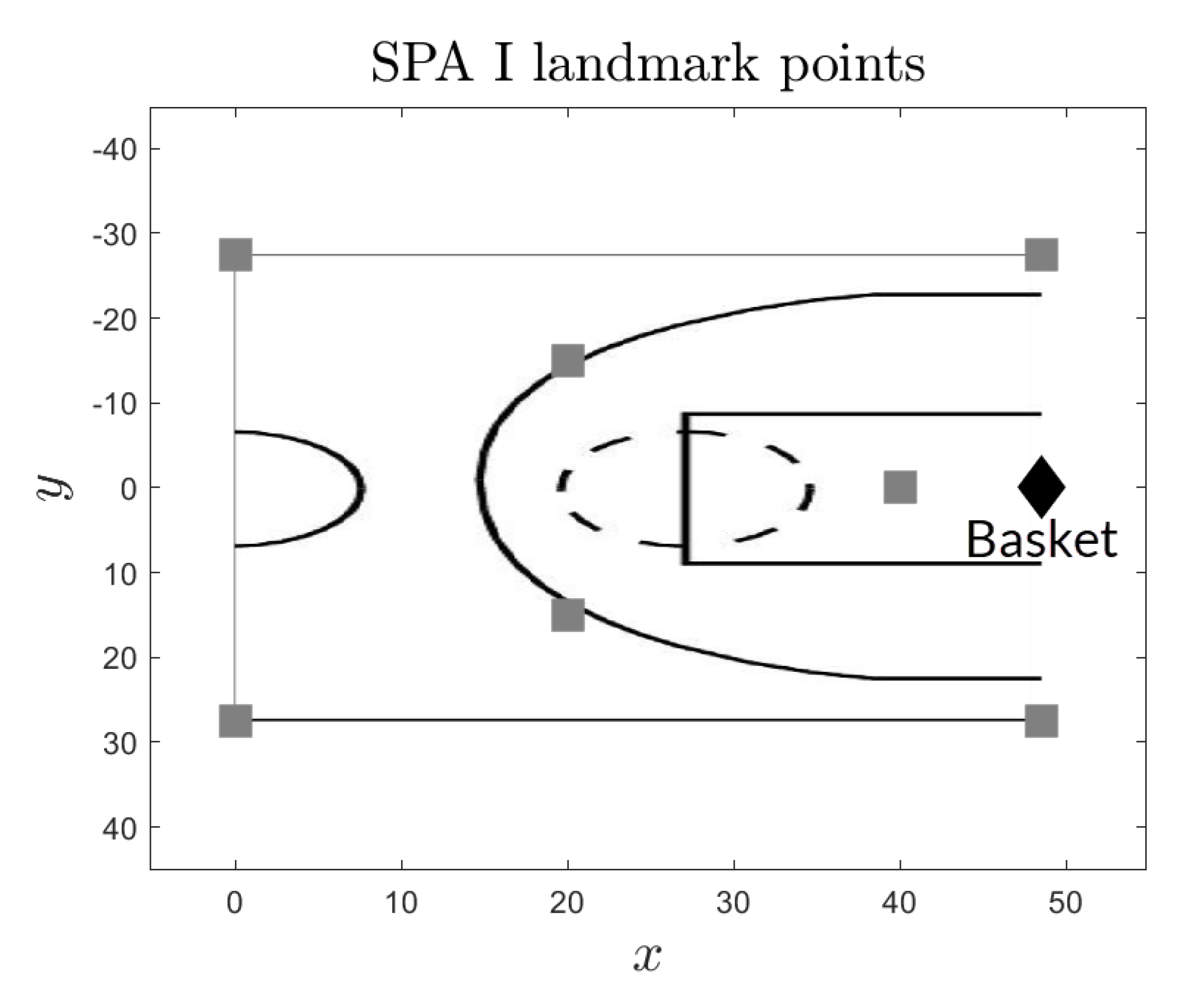

In this article, a practical method is presented that aims to complement related methods by compensating for their weaknesses and to enrich the range of existing techniques for the detection of influences. It represents the data using probabilistic, or fuzzy, affiliations with respect to landmark points and constructs a linear probabilistic model between the affiliations of different variables and forward in time. This linear mapping admits a direct and intuitive interpretation of the relationship between variables. The landmark points are selected by a data-driven algorithm, and the model formulation is quite general, so that very little intuition about the dynamics is required. For the fuzzy affiliations and the construction of the linear mapping, the recently introduced method Scalable Probabilistic Approximation (SPA) [23,24] is used, whose capacity to accurately approximate highly nonlinear functions locally was demonstrated in [23]. Then, properties of this mapping are computed, which serve as the dependency measures. The intuition, elaborated on later in the article, is as follows: if one variable has little influence on another, the columns of the linear mapping should be similar to each other, while if a variable has a strong influence, the columns should be very different. This is quantified using two measures computed from the matrix, one inspired by linear algebra, the other statistical. The former computes the sum of singular values of the matrix, with the intuition that a matrix consisting of similar columns is close to a low-rank matrix, for which many singular values are zero [25]. The latter uses the statistical variance to quantify the difference between the columns. It is shown that both measures are consistent with the above interpretation of the column-stochastic matrix, and the method is applied to several examples to demonstrate its efficacy. Three examples are of a theoretical nature, where built-in influences are reconstructed. One real-world example describes the detection of influences between basketball players during a game.

This article is structured as follows: In Section 2, the SPA method is introduced, including a mathematical formalisation of influences between variables in the context of the SPA-induced model. In Section 3, the two dependency measures that quantify the properties of the SPA model are introduced and connected with an intuitive interpretation of the SPA model. In Section 4, the dependency measures are applied to examples.

To outline the structure of the method in advance, the three main steps proposed to quantify influences between variables of a dynamical system are as follows (also see Figure 1):

Representation of points by fuzzy affiliations with respect to landmark points.

Estimation of a particular linear mapping between the fuzzy affiliations of two variables and forward in time.

Computation of the properties of this mapping.

2. Scalable Probabilistic Approximation

Scalable Probabilistic Approximation (SPA) [23] is a versatile and low-cost method that transforms points from a $D$-dimensional state space to $K$-dimensional probabilistic, or fuzzy, affiliations. If $K$ is less than $D$, SPA serves as a dimension reduction method by representing points as closely as possible. If $K > D$, SPA can be seen as a probabilistic, or fuzzy, clustering method, which assigns data points to landmark points in $D$-dimensional space depending on their closeness to them. For two different variables $X$ and $Y$, SPA can furthermore find an optimal linear mapping between the probabilistic representations.

The first step, the transformation to fuzzy affiliations, will be called SPA I, while the construction of the mapping in these coordinates will be called SPA II.

2.1. Representation of the Data in Barycentric Coordinates

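The mathematical formulation of SPA I is as follows: Let $X = [x_1, \dots, x_T] \in \mathbb{R}^{D \times T}$. Then, solve

$$
[\Sigma, \Gamma] = \operatorname*{argmin}_{\Sigma \in \mathbb{R}^{D \times K},\ \Gamma \in \mathbb{R}^{K \times T}} \|X - \Sigma \Gamma\|_F \quad \text{s.t.} \quad \Gamma_{kt} \ge 0, \quad \sum_{k=1}^{K} \Gamma_{kt} = 1 \quad \text{for all } k, t. \tag{SPA 1}
$$

It was discussed in [26] that for $K \le D$, the representation of points in this way is the orthogonal projection onto a convex polytope with vertices given by the columns of $\Sigma$. The coordinates $\gamma_t$, i.e., the columns of $\Gamma$, then specify the position of this projection with respect to the vertices of the polytope and are called Barycentric Coordinates (BCs). A high entry in such a coordinate signals that the projected point is close to the corresponding vertex.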
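As an illustration of (SPA 1), the following NumPy sketch alternates between the two subproblems described in Remark 3: the $\Sigma$-step is an unconstrained least-squares problem, and the $\Gamma$-step is solved here by projected gradient descent onto the probability simplex. The projected-gradient solver, the random initialisation from data points, and the function names `project_columns_to_simplex`, `solve_gamma` and `spa1` are assumptions of this sketch, not the implementation used in [23].

```python
import numpy as np


def project_columns_to_simplex(V):
    """Euclidean projection of every column of V onto the probability simplex.

    Sort-based projection algorithm, vectorised over the columns.
    """
    K, T = V.shape
    U = -np.sort(-V, axis=0)                        # each column sorted in descending order
    css = np.cumsum(U, axis=0) - 1.0                # cumulative sums minus one
    ks = np.arange(1, K + 1)[:, None]
    active = U - css / ks > 0                       # True on a leading block of rows
    rho = K - 1 - np.argmax(active[::-1], axis=0)   # last active row in each column
    theta = css[rho, np.arange(T)] / (rho + 1)      # per-column shift
    return np.maximum(V - theta, 0.0)


def solve_gamma(X, Sigma, Gamma0=None, n_iter=300):
    """Projected gradient for min_Gamma ||X - Sigma Gamma||_F^2 with column-stochastic Gamma."""
    K, T = Sigma.shape[1], X.shape[1]
    Gamma = np.full((K, T), 1.0 / K) if Gamma0 is None else Gamma0.copy()
    step = 1.0 / max(np.linalg.norm(Sigma, 2) ** 2, 1e-12)  # 1 / Lipschitz constant of the gradient
    for _ in range(n_iter):
        grad = Sigma.T @ (Sigma @ Gamma - X)
        Gamma = project_columns_to_simplex(Gamma - step * grad)
    return Gamma


def spa1(X, K, n_outer=50, seed=0):
    """Alternating minimisation for (SPA 1); returns Sigma, Gamma and the objective history."""
    rng = np.random.default_rng(seed)
    Sigma = X[:, rng.choice(X.shape[1], size=K, replace=False)]  # random data points as initial landmarks
    Gamma = solve_gamma(X, Sigma)
    history = [np.linalg.norm(X - Sigma @ Gamma) ** 2]
    for _ in range(n_outer):
        # Sigma-step: Sigma^T solves the least-squares problem Gamma^T Sigma^T ~ X^T
        Sigma = np.linalg.lstsq(Gamma.T, X.T, rcond=None)[0].T
        # Gamma-step: warm-started projected gradient, which does not increase the objective
        Gamma = solve_gamma(X, Sigma, Gamma0=Gamma)
        history.append(np.linalg.norm(X - Sigma @ Gamma) ** 2)
    return Sigma, Gamma, np.array(history)
```

With fixed landmarks, `solve_gamma(X, Sigma)` alone computes the BCs. For landmarks at the vertices of a triangle, a point inside the triangle recovers its usual barycentric coordinates, and a point outside is represented by its projection onto the triangle.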

Remark 1. A similar representation of points has already been introduced in PCCA+ [27]. For $K > D$, however, the interpretation of a probabilistic clustering was introduced in [23]. According to the authors, the entries of the $K$-dimensional coordinate of a point denote the probabilities of being inside a certain box around each landmark point. One can generalise this interpretation to fuzzy affiliations to these landmark points, again in the sense of closeness. A BC $\gamma_t$ then denotes the distribution of affiliations to the landmark points, and (SPA 1) can be solved without loss, i.e., so that

$$
x_t = \Sigma \gamma_t \tag{1}
$$

holds for all data points.

Figure 2 shows the representation of a point with respect to four landmark points.

Remark 2. From now on, regardless of the relation between $K$ and $D$, the term BC will mostly be used instead of “fuzzy affiliations” for the $\gamma_t$, to emphasise that they are new coordinates of the data, which can always be mapped back to the original data as long as Equation (1) is fulfilled. While in the literature the term “barycentric coordinate” commonly refers to coordinates with respect to the vertices of a simplex (a $(K-1)$-dimensional polytope with $K$ vertices, which in $\mathbb{R}^D$ requires $K \le D + 1$), various publications, e.g., [28], use the term generalised barycentric coordinates if the polytope is not a simplex. Here, the term “generalised” will be omitted, and only “BC” will be written. For $K > D$ and given landmark points, the representation with barycentric coordinates is generally not unique (the set of points that can be represented by $\Sigma$ is a convex polytope, and if $K > D + 1$, some landmark points can even lie inside this polytope). Therefore, analogously to [26], the representation of a point $x$ is defined in the following way: its BC $\gamma$ should be selected among all barycentric coordinates that represent $x$ without loss so that it is closest to a reference coordinate $\gamma_{\mathrm{ref}}$,

$$
\gamma = \operatorname*{argmin}_{\gamma^* \in \mathbb{R}^K} \|\gamma^* - \gamma_{\mathrm{ref}}\| \quad \text{s.t.} \quad x = \Sigma \gamma^*, \quad \gamma^*_k \ge 0, \quad \sum_{k=1}^{K} \gamma^*_k = 1.
$$
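The selection rule above is a small quadratic program. A minimal sketch, assuming SciPy is available and using its general-purpose SLSQP solver rather than a dedicated QP solver, could look as follows; the function name `closest_lossless_bc` is hypothetical. Three landmark points on a line ($K = 3 > D = 1$) show the non-uniqueness: the point $x = 0.5$ has infinitely many lossless BCs, and the reference coordinate selects one of them.

```python
import numpy as np
from scipy.optimize import minimize


def closest_lossless_bc(x, Sigma, gamma_ref):
    """Among all BCs gamma with Sigma @ gamma == x, return the one closest to gamma_ref.

    Solves min ||gamma - gamma_ref||^2  s.t.  Sigma gamma = x, gamma >= 0, sum(gamma) = 1.
    """
    K = Sigma.shape[1]
    constraints = [
        {"type": "eq", "fun": lambda g: Sigma @ g - x},     # lossless representation of x
        {"type": "eq", "fun": lambda g: np.sum(g) - 1.0},   # coordinates sum to one
    ]
    res = minimize(
        lambda g: np.sum((g - gamma_ref) ** 2),
        x0=np.full(K, 1.0 / K),
        jac=lambda g: 2.0 * (g - gamma_ref),
        bounds=[(0.0, 1.0)] * K,                            # non-negativity (and at most one)
        constraints=constraints,
        method="SLSQP",
    )
    if not res.success:
        raise RuntimeError(res.message)
    return res.x


Sigma = np.array([[0.0, 0.5, 1.0]])   # three landmark points on a line
x = np.array([0.5])
gamma_a = closest_lossless_bc(x, Sigma, np.array([1.0, 0.0, 0.0]))
gamma_b = closest_lossless_bc(x, Sigma, np.full(3, 1.0 / 3.0))
```

All lossless BCs of $x = 0.5$ have the form $(s, 1 - 2s, s)$ with $0 \le s \le 1/2$; the reference $(1, 0, 0)$ selects $(0.5, 0, 0.5)$, whereas the uniform reference selects $(1/3, 1/3, 1/3)$.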

Remark 3. Note that the solution for the landmark points determined by SPA I is never unique if $K > D$ [23]. In order to solve (SPA 1), its objective function is iteratively minimised by alternately solving for $\Sigma$ and $\Gamma$, starting from randomly drawn initial values. For $K > D$, an exact solution can be achieved by placing $D + 1$ landmark points so that all data points lie inside their convex hull (the set of all convex combinations of them), while the remaining landmark points can be chosen arbitrarily. As a consequence, the placement of landmark points depends strongly on the randomly chosen initial values of the optimisation process. In simple cases, e.g., when the state space of the data is one-dimensional, one could even place landmark points manually across an interval containing the data and solve only for $\Gamma$.

Remark 4. With a fixed $\Sigma$, the computations of all columns of $\Gamma$ are independent of each other, so this step can easily be parallelised, making SPA a very efficient method for long time series and justifying the term “Scalable”. Moreover, the time complexity of the full (SPA 1) problem grows linearly with the number of data points [23], which is comparable to the K-means method [29], while still giving a much more precise, or even lossless, representation of points.

Let now a dynamical system be given by

$$
x_{t+1} = F(x_t).
$$

In this case, let us select $\gamma_t$ as the reference coordinate for the point $x_{t+1}$. Using that Equation (1) holds for $x_t$, this gives

$$
\gamma_{t+1} = \operatorname*{argmin}_{\gamma \in \mathbb{R}^K} \|\gamma - \gamma_t\| \quad \text{s.t.} \quad \Sigma \gamma = F(\Sigma \gamma_t), \quad \gamma_k \ge 0, \quad \sum_{k=1}^{K} \gamma_k = 1. \tag{4}
$$

With this, $\gamma_{t+1}$ solely depends on $\gamma_t$. This establishes a time-discrete dynamical system in the barycentric coordinates. By choosing $\gamma_t$ as the reference coordinate, it is ensured that the steps taken in the BCs are as short as possible.

2.2. Model Estimation between Two Variables

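Assuming that data from two different variables $X = [x_1, \dots, x_T] \in \mathbb{R}^{D_X \times T}$ and $Y = [y_1, \dots, y_T] \in \mathbb{R}^{D_Y \times T}$ are considered and one strives to investigate the relationship between them, one can solve (SPA 1) for both of them, finding landmark points given by the columns of $\Sigma_X \in \mathbb{R}^{D_X \times K_X}$ and $\Sigma_Y \in \mathbb{R}^{D_Y \times K_Y}$ and BCs $\Gamma^X = [\gamma^X_1, \dots, \gamma^X_T]$ and $\Gamma^Y = [\gamma^Y_1, \dots, \gamma^Y_T]$, and then solve (SPA 2), given by

$$
\Lambda = \operatorname*{argmin}_{\Lambda \in \mathbb{R}^{K_Y \times K_X}} \|\Gamma^Y - \Lambda \Gamma^X\|_F \quad \text{s.t.} \quad \Lambda_{ij} \ge 0, \quad \sum_{i=1}^{K_Y} \Lambda_{ij} = 1 \quad \text{for all } i, j. \tag{SPA 2}
$$

$\Lambda$ is a column-stochastic matrix and therefore guarantees that BCs are mapped to BCs again. It is an optimal linear mapping with this property that connects $X$ to $Y$ on the level of the BCs. One can easily transform back to the original state space by

$$
y_t \approx \Sigma_Y \Lambda \gamma^X_t.
$$

Since, by construction, the prediction must lie inside the polytope again, it is a weighted linear interpolation with respect to the landmark points, similar to, e.g., Kriging interpolation [30]. Note that it was demonstrated in [23] that this linear mapping applied to the BCs can accurately approximate functions of various complexity and is not restricted to linear relationships.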
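For illustration, (SPA 2) can be solved with the same projected-gradient idea as for the BCs, now projecting each column of $\Lambda$ onto the probability simplex. The sketch below is a stand-in under stated assumptions (solver, step size, iteration count and the names `project_columns_to_simplex` and `fit_column_stochastic` are choices made here, not the solver of [23]); on synthetic BCs it recovers a known column-stochastic matrix.

```python
import numpy as np


def project_columns_to_simplex(V):
    """Euclidean projection of every column of V onto the probability simplex."""
    K, T = V.shape
    U = -np.sort(-V, axis=0)                        # columns sorted in descending order
    css = np.cumsum(U, axis=0) - 1.0
    ks = np.arange(1, K + 1)[:, None]
    active = U - css / ks > 0
    rho = K - 1 - np.argmax(active[::-1], axis=0)   # last active row per column
    theta = css[rho, np.arange(T)] / (rho + 1)
    return np.maximum(V - theta, 0.0)


def fit_column_stochastic(Gamma_in, Gamma_out, n_iter=2000):
    """Projected-gradient sketch for (SPA 2): min ||Gamma_out - Lam @ Gamma_in||_F,
    with every column of Lam non-negative and summing to one."""
    K_out, K_in = Gamma_out.shape[0], Gamma_in.shape[0]
    Lam = np.full((K_out, K_in), 1.0 / K_out)                   # feasible starting point
    step = 1.0 / max(np.linalg.norm(Gamma_in, 2) ** 2, 1e-12)   # 1 / Lipschitz constant
    for _ in range(n_iter):
        grad = (Lam @ Gamma_in - Gamma_out) @ Gamma_in.T
        Lam = project_columns_to_simplex(Lam - step * grad)
    return Lam


# Synthetic check: BCs of X drawn from the simplex and a known column-stochastic map.
rng = np.random.default_rng(0)
Gamma_X = rng.dirichlet(np.ones(3), size=500).T             # shape (3, 500)
Lam_true = np.array([[0.8, 0.1, 0.3],
                     [0.1, 0.7, 0.3],
                     [0.1, 0.2, 0.4]])
Gamma_Y = Lam_true @ Gamma_X
Lam_hat = fit_column_stochastic(Gamma_X, Gamma_Y)
```

Because every column of the estimate is projected onto the simplex in each step, the result is column-stochastic by construction, so it maps BCs to BCs as required above.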

Remark 5. In [23], a way to combine SPA I and SPA II into a single SPA I problem was shown. The landmark points are then selected so that the training error of the SPA II problem can be set to 0. In other words, optimal discretisations of the state spaces are determined, where “optimal” refers to the exactness of the ensuing (SPA 2) model.

Estimation of Dynamics

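A special case of (SPA 2) is the estimation of dynamics, i.e., solving

$$
\Lambda = \operatorname*{argmin}_{\Lambda \in \mathbb{R}^{K \times K}} \|[\gamma_2, \dots, \gamma_T] - \Lambda [\gamma_1, \dots, \gamma_{T-1}]\|_F \quad \text{s.t.} \quad \Lambda_{ij} \ge 0, \quad \sum_{i=1}^{K} \Lambda_{ij} = 1.
$$

$\Lambda$ is therefore a linear, column-stochastic approximation of the map $\gamma_t \mapsto \gamma_{t+1}$ from Equation (4). Such a matrix is typically used in so-called Markov State Models [31,32]. With $\gamma_{t+1} \approx \Lambda \gamma_t$, one can construct dynamics in the BCs with the possibility to transform back to the original state space by multiplication with $\Sigma$, since

$$
x_{t+1} = \Sigma \gamma_{t+1} \approx \Sigma \Lambda \gamma_t.
$$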
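Once a column-stochastic $\Lambda$ has been estimated, the dynamics in the BCs can be iterated and mapped back to the state space. The NumPy sketch below does exactly that; the function name `rollout`, the two landmark points $0$ and $1$, and the entries of $\Lambda$ are made up for illustration.

```python
import numpy as np


def rollout(Lam, Sigma, gamma0, n_steps):
    """Iterate gamma_{t+1} = Lam @ gamma_t and map every state back via x_t = Sigma @ gamma_t."""
    gammas = [np.asarray(gamma0, dtype=float)]
    for _ in range(n_steps):
        gammas.append(Lam @ gammas[-1])
    G = np.stack(gammas, axis=1)   # BCs, shape (K, n_steps + 1)
    return G, Sigma @ G            # BCs and reconstructed states


Lam = np.array([[0.9, 0.2],
                [0.1, 0.8]])       # hypothetical column-stochastic transition matrix
Sigma = np.array([[0.0, 1.0]])     # two landmark points on a line
G, X_rec = rollout(Lam, Sigma, [1.0, 0.0], n_steps=200)
```

Because every column of $\Lambda$ sums to one, each iterate remains a valid BC, and the reconstructed states stay inside the polytope spanned by the landmark points (here the interval $[0, 1]$). In this example, the first step gives $x_1 = 0.1$, and the states approach the fixed point $1/3$ that corresponds to the stationary BC $(2/3, 1/3)$ of $\Lambda$.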

2.3. Forward Model Estimation between Two Processes

Given two dynamical systems with states $x_t \in \mathbb{R}^{D_X}$ and $y_t \in \mathbb{R}^{D_Y}$, let us now determine a column-stochastic matrix that propagates barycentric coordinates from one variable to the other and forward in time. This mapping will be used to quantify the effect of one variable on future states of the other.

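With landmark points in $\Sigma_X \in \mathbb{R}^{D_X \times K_X}$ and $\Sigma_Y \in \mathbb{R}^{D_Y \times K_Y}$ and BCs $\gamma^X_t$ and $\gamma^Y_t$ for $t = 1, \dots, T$, let us find a column-stochastic matrix $\Lambda_{X \to Y} \in \mathbb{R}^{K_Y \times K_X}$ that fulfils

$$
\Lambda_{X \to Y} = \operatorname*{argmin}_{\Lambda \in \mathbb{R}^{K_Y \times K_X}} \|[\gamma^Y_2, \dots, \gamma^Y_T] - \Lambda [\gamma^X_1, \dots, \gamma^X_{T-1}]\|_F \quad \text{s.t.} \quad \Lambda_{ij} \ge 0, \quad \sum_{i=1}^{K_Y} \Lambda_{ij} = 1.
$$

$\Lambda_{X \to Y}$ represents a model from $\gamma^X_t$ to $\gamma^Y_{t+1}$ on the level of the BCs and tries to predict subsequent values of $Y$ using only $X$.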

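Now, let us assume that $X$ in fact has a direct influence on $Y$, meaning that there exists a function $g$ such that

$$
y_{t+1} = g(x_t, y_t). \tag{10}
$$

Then, similar to the construction of the dynamical system in the BCs in Equation (4), we can observe, using Equations (10) and (1),

$$
\gamma^Y_{t+1} = \operatorname*{argmin}_{\gamma \in \mathbb{R}^{K_Y}} \|\gamma - \gamma^Y_t\| \quad \text{s.t.} \quad \Sigma_Y \gamma = g(\Sigma_X \gamma^X_t, \Sigma_Y \gamma^Y_t), \quad \gamma_k \ge 0, \quad \sum_{k=1}^{K_Y} \gamma_k = 1. \tag{11}
$$

$\gamma^Y_{t+1}$ therefore directly depends on $\gamma^X_t$ and $\gamma^Y_t$, while $\Lambda_{X \to Y}$ attempts to predict $\gamma^Y_{t+1}$ using only $\gamma^X_t$. Assuming that the approximation

$$
\gamma^Y_{t+1} \approx \Lambda_{X \to Y} \gamma^X_t \tag{12}
$$

is close for each pair $(\gamma^X_t, \gamma^Y_{t+1})$, one can assert

$$
\gamma^Y_{t+1} \approx \Lambda_{X \to Y} \gamma^X_t = \sum_{j=1}^{K_X} (\gamma^X_t)_j \, \lambda_j, \tag{13}
$$

where $\lambda_j$ is the $j$th column of $\Lambda_{X \to Y}$. A prediction for $\gamma^Y_{t+1}$ is therefore constructed as a weighted average of the columns of $\Lambda_{X \to Y}$, with the entries of $\gamma^X_t$ as weights.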
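Equation (13) can be checked numerically: multiplying $\Lambda_{X \to Y}$ with a BC gives the same result as averaging its columns with the BC entries as weights. The matrix and coordinate below are hypothetical values chosen for illustration.

```python
import numpy as np

# Hypothetical column-stochastic Lambda_{X->Y} (each column sums to one) and BC gamma^X_t.
Lam = np.array([[0.7, 0.1, 0.2],
                [0.2, 0.8, 0.3],
                [0.1, 0.1, 0.5]])
gamma_x = np.array([0.5, 0.3, 0.2])

prediction = Lam @ gamma_x
# Weighted average of the columns lambda_j with weights (gamma^X_t)_j, as in Equation (13)
weighted_average = sum(gamma_x[j] * Lam[:, j] for j in range(Lam.shape[1]))
```

Since the weights are non-negative and sum to one, the prediction is itself a BC, here $(0.42, 0.40, 0.18)$.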

Remark 6. Note that the same argumentation starting in Equation (9) holds if one chooses a time shift of length $\tau$ and considers the information of $x_t$ about $y_{t+\tau}$. If $\tau = 1$, the resulting matrix will simply be written $\Lambda_{X \to Y}$, but otherwise $\Lambda^{\tau}_{X \to Y}$.

Remark 7. Since $\Lambda_{X \to Y}$ estimates $\gamma^Y_{t+1}$ using only $\gamma^X_t$, although $\gamma^Y_{t+1}$ additionally depends on $\gamma^Y_t$, one can interpret $\Lambda_{X \to Y} \gamma^X_t$ as an approximation to the conditional expectation of $\gamma^Y_{t+1}$ given $\gamma^X_t$, i.e., assuming that $\gamma^Y_{t+1}$ given $\gamma^X_t$ follows some conditional distribution,

$$
\Lambda_{X \to Y} \gamma^X_t \approx \mathbb{E}\left[\gamma^Y_{t+1} \mid \gamma^X_t\right]. \tag{14}
$$

In Appendix A.1, this intuition is formalised further. In the original SPA paper [23], the entries of $\gamma_t$ were interpreted as probabilities to “belong” to states $\sigma$ that describe a discretisation of the state space. $\Lambda$ then uses the law of total probability, and its $(i, j)$-entry denotes the conditional probability of being in state $\sigma^Y_i$ at time $t+1$ given state $\sigma^X_j$ at time $t$. Together with the combined solution of the (SPA 1) and (SPA 2) problems outlined in Remark 5, the authors of [23] described their method as finding an optimal discretisation of the state space to generate an exact model on the probabilities, thereby satisfying desired physical conservation laws. In this light, SPA directly competes with the aforementioned Markov State Models (MSMs), with the significant advantage that for MSMs with equidistant discretisations, i.e., grids, the number of “grid boxes” grows exponentially with the dimension for a fixed box size. This is further elaborated in Appendix A.3. While the probabilistic view is a sensible interpretation of the objects involved in SPA, one is not restricted to it, and the view taken in this article focuses on the exact point representations that the barycentric coordinates yield. However, the arguments in the following sections can also be made in a satisfying and intuitive way from the probabilistic viewpoint.

3. Quantification of Dependencies between Variables

In the following, two methods that quantify the strength of dependence of $Y$ on $X$ by directly computing properties of $\Lambda_{X \to Y}$ will be defined. The intuition can be illustrated as follows: If a variable $X$ is important for the future state of another variable $Y$, then multiplying $\Lambda_{X \to Y}$ with $\gamma^X_t$ should give very different results depending on which landmark point $x_t$ is close to, i.e., which weight in $\gamma^X_t$ is high. Since $\Lambda_{X \to Y} \gamma^X_t$ is a weighted average of the columns of $\Lambda_{X \to Y}$ by Equation (13), this means that the columns of $\Lambda_{X \to Y}$ should be very different from each other. Conversely, if $X$ has no influence on, and carries no information about, future states of $Y$, the columns of $\Lambda_{X \to Y}$ should be very similar to each other. In Appendix A.1, it is shown that if $y_{t+1}$ is independent of $x_t$, all columns of $\Lambda_{X \to Y}$ should be given by the mean of the $\gamma^Y_{t+1}$ in the data. There, this is also connected to conditional expectations and the intuition given in Equation (14).
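The limiting case can be made concrete with a small NumPy check: if every column of $\Lambda_{X \to Y}$ equals the mean of the target BCs, the prediction no longer depends on $\gamma^X_t$ at all, and the matrix has rank 1. The random target BCs below are synthetic and serve only as an illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic stand-in for the target BCs gamma^Y_{t+1} (one column per time step).
Gamma_Y_next = rng.dirichlet(np.ones(4), size=1000).T    # shape (4, 1000)
mean_gamma_y = Gamma_Y_next.mean(axis=1)                  # mean target BC

K_X = 3
Lam_indep = np.tile(mean_gamma_y[:, None], (1, K_X))      # every column equals the mean

# Any input BC (non-negative entries summing to one) yields the same prediction,
# because Lam_indep @ g = mean_gamma_y * sum(g) = mean_gamma_y.
for gamma_x in rng.dirichlet(np.ones(K_X), size=5):
    assert np.allclose(Lam_indep @ gamma_x, mean_gamma_y)
```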

In the extreme case that the columns are exactly equal to each other, $\Lambda_{X \to Y}$ is a rank-1 matrix. If the columns are not equal but similar, $\Lambda_{X \to Y}$ is at least close to a rank-1 matrix. One should therefore be able to deduce from the similarity of the columns of $\Lambda_{X \to Y}$ whether $X$ could have an influence on $Y$. This is the main idea behind the dependency measures proposed in this section. The intuition is similar to the notion of the predictability of a stochastic model introduced in [33].

Remark 8. Note that if there is an intermediate variable $Z$ that is forced by $X$ and forces $Y$ while $X$ does not directly force $Y$, then it is generally difficult to distinguish between direct and indirect influences. In Section 4.2, an example of such a case is investigated.

3.1. The Dependency Measures

Now, the two measures used for the quantification of dependencies between variables are introduced. Note that these are not “measures” in the strict mathematical sense; the term is used as a synonym for “quantifications”.

3.1.1. Schatten-1 Norm

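For the first measure, let us consider the Singular Value Decomposition (SVD) [34] of a matrix $\Lambda \in \mathbb{R}^{m \times n}$, given by $\Lambda = U S V^\top$. $S \in \mathbb{R}^{m \times n}$ is a matrix that is only nonzero in the entries $S_{ii}$ for $i = 1, \dots, \min(m, n)$, which are denoted by $\sigma_1 \ge \sigma_2 \ge \dots \ge \sigma_{\min(m,n)} \ge 0$ (the singular values). $U \in \mathbb{R}^{m \times m}$ and $V \in \mathbb{R}^{n \times n}$ are orthogonal matrices with columns $u_i$ and $v_i$, respectively. One can thus write $\Lambda$ as

$$
\Lambda = \sum_{i=1}^{\min(m,n)} \sigma_i u_i v_i^\top.
$$

A classic linear algebra result (the Eckart–Young–Mirsky theorem) asserts that

$$
\min_{\operatorname{rank}(B) \le k} \|\Lambda - B\|_2 = \sigma_{k+1},
$$

with the minimum attained by truncating the above sum after $k$ terms. As a consequence, if some of the $\sigma_i$ are close to 0, only a small perturbation is sufficient to make $\Lambda$ a matrix of lower rank. Therefore, the sum of singular values, the so-called Schatten-1 norm [35], will be used as a continuous measure of the rank and, thus, of the difference between the columns of $\Lambda$.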
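The Eckart–Young–Mirsky statement can be verified numerically. In the NumPy sketch below, the columns of a hypothetical column-stochastic matrix are similar, so its second singular value is small, and truncating the SVD after the first term changes the matrix by exactly $\sigma_2$ in the spectral norm.

```python
import numpy as np

# Hypothetical column-stochastic matrix whose columns are similar to each other.
Lam = np.array([[0.50, 0.52, 0.48],
                [0.30, 0.29, 0.31],
                [0.20, 0.19, 0.21]])

U, s, Vt = np.linalg.svd(Lam)                   # s is sorted in descending order
Lam_rank1 = s[0] * np.outer(U[:, 0], Vt[0])     # truncate the SVD sum after one term
err = np.linalg.norm(Lam - Lam_rank1, 2)        # spectral norm of the perturbation

print("singular values:", np.round(s, 4))
print("spectral error of the best rank-1 approximation:", round(err, 4))
```

The sum `s.sum()` is the Schatten-1 norm; NumPy also provides it directly as `np.linalg.norm(Lam, "nuc")`.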

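Definition 1 (Schatten-1 norm). Let the SVD of a matrix $\Lambda \in \mathbb{R}^{m \times n}$ be given by $\Lambda = U S V^\top$ with singular values $\sigma_1, \dots, \sigma_{\min(m,n)}$. Then, the Schatten-1 norm of $\Lambda$ is defined as

$$
\|\Lambda\|_{S_1} := \sum_{i=1}^{\min(m,n)} \sigma_i.
$$

3.1.2. Average Row Variance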

As the second dependency measure, the difference between the columns of a matrix is quantified directly using the mean statistical variance per row. To this end, every row is considered, and the variance between its entries is computed, thereby comparing the columns with respect to this particular row. Then, the mean of these variances is taken across all rows.

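Definition 2 (Average row variance). For a matrix $\Lambda = (\lambda_{ij}) \in \mathbb{R}^{m \times n}$, let $\bar{\lambda}_i = \frac{1}{n} \sum_{j=1}^{n} \lambda_{ij}$ denote the mean of the $i$th row of $\Lambda$. Let

$$
v_i = \frac{1}{n} \sum_{j=1}^{n} \left(\lambda_{ij} - \bar{\lambda}_i\right)^2
$$

be the statistical variance of the entries of the $i$th row. Then, the average row variance is defined as

$$
\nu(\Lambda) := \frac{1}{m} \sum_{i=1}^{m} v_i.
$$

The calculated values of both dependency measures will be stored in tables, i.e., matrices $M$ that contain one entry per ordered pair of variables. Then, for each of these matrices, the quantity $M - M^\top$ is of interest, because its entries are the differences between the dependency measures in both directions, stating how strongly $X$ depends on $Y$ compared to $Y$ depending on $X$. Let us therefore define the following: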

Definition 3 (Relative difference between dependencies). Let $M$ be one of the matrices from Equation (18). The relative difference between dependencies in both directions is defined as

Remark 9. In Appendix A.3, it is explained how this method differs from linear correlations, Granger causality, and the same approach using boxes instead of fuzzy affiliations.

When using the dependency measures to analyse which of two variables depends more strongly on the other, it is unclear at this point whether the dimension of the variables and the number of landmark points affect the outcome of the analysis. Hence, for all numerical examples in the next section, pairs of variables that have the same dimension are considered, and the same number of landmark points is used for both, i.e., $K_X = K_Y$ holds in each example, to make the comparison as fair as possible. In this light, the following theoretical results on the Schatten-1 norm and the average row variance are directly helpful.
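Both dependency measures are one-liners in NumPy. The sketch below assumes the population variance (normalisation $1/n$) for the row variances, and it stores the measures in a table whose $(i, j)$ entry belongs to the matrix estimated from variable $i$ to variable $j$; this table layout, the helper `dependency_table` and the two example matrices are hypothetical choices for illustration. The antisymmetric part $M - M^\top$ then compares both directions.

```python
import numpy as np


def schatten1(Lam):
    """Sum of the singular values (Schatten-1, or nuclear, norm)."""
    return np.linalg.svd(Lam, compute_uv=False).sum()


def average_row_variance(Lam):
    """Mean over rows of the population variance (ddof=0) of each row's entries."""
    return np.var(Lam, axis=1).mean()


def dependency_table(lams, measure):
    """Hypothetical helper: lams[(i, j)] holds the matrix estimated from variable i to variable j."""
    n = 1 + max(max(pair) for pair in lams)
    M = np.zeros((n, n))
    for (i, j), Lam in lams.items():
        M[i, j] = measure(Lam)
    return M


# Hypothetical example: variable 0 strongly drives variable 1, but not vice versa.
lams = {(0, 1): np.eye(3), (1, 0): np.full((3, 3), 1.0 / 3.0)}
M_schatten = dependency_table(lams, schatten1)
M_variance = dependency_table(lams, average_row_variance)
diff_schatten = M_schatten - M_schatten.T   # positive entry (0, 1): direction 0 -> 1 dominates
diff_variance = M_variance - M_variance.T
```

In this example, the identity matrix yields a Schatten-1 norm of 3 and an average row variance of $2/9$, while the uniform matrix yields 1 and 0, so both difference tables point in the same direction.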

3.2. Maximisers and Minimisers of the Dependency Measures

For the Schatten-1 norm and the average row variance, one can prove properties that explain why they are sensible measures of the strength of dependency between two processes. For this, let us make the following definition.

Definition 4 (Permutation matrix). A permutation matrix is a square matrix with entries in $\{0, 1\}$ in which every row and every column contains exactly one 1.

Then, one can prove the following results on the maximisers and minimisers of the Schatten-1 norm and the average row variance (half of them only for $K_X = K_Y$ or $K_Y \ge K_X$), whose proofs can be found in Appendix A.2.

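Proposition 1 (Maximal Schatten-1 norm, $K_Y \ge K_X$). Let $\Lambda \in \mathbb{R}^{K_Y \times K_X}$ be column-stochastic with $K_Y \ge K_X$. Then, the Schatten-1 norm of $\Lambda$ obtains its maximal value if deleting $K_Y - K_X$ rows of $\Lambda$ yields a permutation matrix.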

Proposition 2 (Minimal Schatten-1 norm). The Schatten-1 norm of a column-stochastic $K \times K$ matrix $A$ is minimal if and only if all entries of $A$ are equal to $1/K$, and its minimal value is equal to 1.

For the average row variance, the following results can be derived:

Proposition 3 (Maximal average row variance, $K_X = K_Y$). The average row variance of a column-stochastic $K \times K$ matrix $\Lambda$ obtains its maximal value if $\Lambda$ is a permutation matrix.

It seems likely that for $K_Y > K_X$, the maximisers of the Schatten-1 norm from Proposition 1 also maximise the average row variance.

Proposition 4 (Minimal average row variance). The average row variance of a column-stochastic $K_Y \times K_X$ matrix $\Lambda$ obtains the minimal value 0 if and only if all columns of $\Lambda$ are equal to each other.

In order to analyse the dependencies between two variables for which different numbers of landmark points are used, i.e., $K_X \ne K_Y$, it would be desirable to infer similar results for the case $K_Y < K_X$, so that valid interpretations of both $\Lambda_{X \to Y}$ and $\Lambda_{Y \to X}$ could be made. However, such results proved more difficult to establish, so only the following conjectures are made for the case $K_Y < K_X$:

Conjecture 1 (Maximal Schatten-1 norm, $K_Y < K_X$). The Schatten-1 norm of a column-stochastic $K_Y \times K_X$ matrix $\Lambda$ with $K_Y < K_X$ is maximal if and only if $\Lambda$ contains a $K_Y \times K_Y$ permutation matrix and the matrix of the remaining columns can be extended by further columns to a permutation matrix.

Conjecture 2 (Maximal average row variance, $K_Y < K_X$). The average row variance of a column-stochastic $K_Y \times K_X$ matrix $\Lambda$ with $K_Y < K_X$ is maximal if and only if $\Lambda$ contains a $K_Y \times K_Y$ permutation matrix and the matrix of the remaining columns can be extended by further columns to a permutation matrix.

In summary, the maximising and minimising matrices of the Schatten-1 norm and the average row variance are identical: for $K_X = K_Y = K$, both measures are minimised by the matrix whose entries all equal $1/K$ (the average row variance, more generally, by any matrix with identical columns), and both are maximised by permutation matrices.

These results show that the two dependency measures fulfil important intuitions: they are minimal when information about $x_t$ gives no information about $y_{t+1}$, because in this case all columns of $\Lambda_{X \to Y}$ should be identical. Maximal dependence is detected if the information about $x_t$ is maximally influential on $y_{t+1}$. This happens when $\Lambda_{X \to Y}$ is, or can be reduced or extended to, a permutation matrix. This is illustrated in Figure 3.
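The extreme cases can be checked numerically. The sketch below repeats the two measure functions so that it runs on its own, again assumes the population variance, and evaluates a $4 \times 4$ permutation matrix, the matrix with all entries $1/4$, a matrix with identical but non-uniform columns, and a random column-stochastic matrix. The permutation matrix attains the values $K$ and $(K-1)/K^2$, the uniform matrix attains 1 and 0, and identical non-uniform columns give zero average row variance but a Schatten-1 norm above 1, in line with Proposition 2 singling out the uniform matrix.

```python
import numpy as np


def schatten1(Lam):
    """Sum of the singular values."""
    return np.linalg.svd(Lam, compute_uv=False).sum()


def average_row_variance(Lam):
    """Mean over rows of the population variance of each row's entries."""
    return np.var(Lam, axis=1).mean()


K = 4
perm = np.eye(K)[[2, 0, 3, 1]]                      # rows of the identity in permuted order
uniform = np.full((K, K), 1.0 / K)                  # all entries equal to 1/K
equal_cols = np.tile(np.array([[0.7], [0.1], [0.1], [0.1]]), (1, K))  # identical, non-uniform columns
rng = np.random.default_rng(0)
random_cs = rng.dirichlet(np.ones(K), size=K).T     # columns drawn from the simplex

for name, Lam in [("permutation", perm), ("uniform", uniform),
                  ("equal columns", equal_cols), ("random", random_cs)]:
    print(f"{name:>13}: Schatten-1 = {schatten1(Lam):.4f}, "
          f"average row variance = {average_row_variance(Lam):.4f}")
```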

5. Conclusions

In this article, a data-driven method for the quantification of influences between variables of a dynamical system was presented. The method deploys the low-cost discretisation algorithm Scalable Probabilistic Approximation (SPA), which represents the data points using fuzzy affiliations, or equivalently barycentric coordinates with respect to a convex polytope, and estimates a linear mapping between these representations of two variables and forward in time. Two dependency measures were introduced that compute properties of the mapping and admit an intuitive interpretation.

Many methods with the same aim already exist. However, most of them are suited to specific scenarios and impose assumptions on the relations or the dynamics that are not always fulfilled. Hence, this method should be a helpful and directly applicable addition to the landscape of existing methods for the detection of influences.

The advantages of the method are as follows: it is purely data-driven and, due to the very general structure of the SPA model, requires almost no intuition about the relation between variables and the underlying dynamics. This is in contrast to a method such as Granger causality, which imposes a specific autoregressive model on the dynamics. Furthermore, the presented method is almost parameter-free, since only the numbers of landmark points used to represent the two variables and the time lag have to be specified. Additionally, the dependency measures, the Schatten-1 norm and the average row variance, are straightforward to compute from the linear mapping and offer a direct interpretation. The capacity of the method to reconstruct influences was demonstrated on multiple examples, including stochastic and memory-exhibiting dynamics.

In the future, it could be worthwhile to find rules for the optimal number of landmark points and their exact positions with respect to the data. It also seems important to investigate how dependencies between variables with differing numbers of landmark points can be compared using the presented dependency measures. Moreover, one could determine properties of the matrix $\Lambda_{X \to Y}$ other than the two presented ones. It should also be investigated why, in some of the presented examples, the average row variance gave a clearer reconstruction of influences than the Schatten-1 norm, and how the latter can be improved. In addition, it could be worthwhile to use the combined solution of the (SPA 1) and (SPA 2) problems, as done in [23], and observe whether this improves the performance. Furthermore, constructing a nonlinear SPA model consisting of the multiplication of a linear mapping with a nonlinear function, as done in [26], could improve the model accuracy and, therefore, yield a more reliable quantification of influences. Lastly, since in this article variables were always expressed using more landmark points than state-space dimensions, it would be interesting to investigate whether, for high-dimensional variables, projecting them to a low-dimensional representation using SPA and performing the same dependency analysis is still sensible. This could be of practical help in shedding light on the interplay between variables in high dimensions.