Abstract

The demonstrated capabilities of generative AI tools can significantly transform the way knowledge work is conducted. As more tools are built and deployed, generative AI-aided knowledge work is emerging as a new normal in which knowledge workers shift a significant portion of their workloads to the tools. This new normal raises many concerns, including workers’ mental health, employees’ confusion in production, and the potential spread of misknowledge. Given the substantial share of knowledge work in the US economy, this paper calls for more research in this important area. It synthesizes relevant economic and behavioral research findings in the AI automation field, together with the opinions of field experts, and presents a comprehensive framework, “generative AI-aided knowledge work”. This framework theoretically addresses concerns such as job replacement and the organizational and behavioral factors in using generative AI, and it provides directions for future research and guidelines for practitioners incorporating generative AI tools. This is one of the early attempts to provide a comprehensive overview of generative AI’s impacts on knowledge workers and production. It has the potential to seed future research in many areas, such as countering misknowledge and protecting employees’ mental health.

1. Introduction

Since December 2022, ChatGPT has spearheaded a new wave of generative AI. Its impressive performance in generating natural-language responses opened the door to disruptive and transformative changes, a point reemphasized in countless speeches by scholars and industry experts. For example, Peng et al. (2023) [1] find that GitHub Copilot (version of May 2022) can improve programming productivity by 55.8%. Microsoft and LinkedIn surveyed 31,000 people across 31 countries and found that 75% of knowledge workers use generative AI at work, and that 46% of users started using it less than six months ago [2]. In general, knowledge workers’ acceptance of AI and their skill in working with it are related to productivity gains [3,4]. Considering the large proportion of knowledge work in the US economy [5], it is not hard to imagine that the ubiquitous use of generative AI tools is on the horizon. Cathie Wood, in her December 2023 TED talk “Why AI Will Spark Exponential Economic Growth”, estimates that the potential productivity gains from generative AI applications could elevate the world GDP growth rate to 6 to 9 percent, up from the current 2 to 3 percent.

Despite the high expectations and hype, generative AI’s impact on productivity is far from fully understood [6,7,8]. Companies feel the need to keep investing in generative AI to maintain competitiveness, but they also need guidance and a framework to plan and implement it strategically. As Baily et al. (2023) [6] point out, there is a lack of systematic and uniform understanding of generative AI’s impacts. Without a clear framework, it is challenging for individuals and organizations to incorporate generative AI into their work. As shown in the 2024 Work Trend Index Annual Report by Microsoft and LinkedIn [2], “60% of leaders worry their organization’s leadership lacks a plan and vision to implement AI”, and “79% of leaders agree their company needs to adopt AI to stay competitive, but 59% worry about quantifying the productivity gains of AI”.

This paper provides a high-level framework to guide the incorporation of generative AI in knowledge work. The framework has the following goals: understanding generative AI, providing guidance on incorporating generative AI in knowledge work, raising awareness of the danger of misknowledge, and proposing a verification-driven approach to incorporating generative AI tools. The method used in this paper is primarily a literature review.

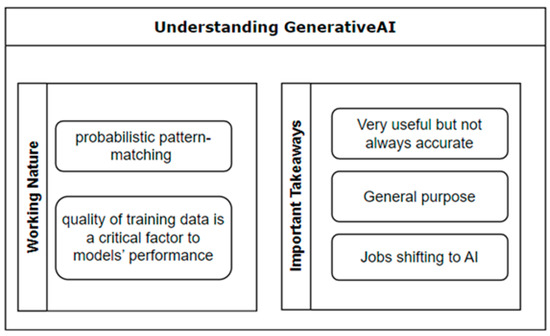

A solid understanding of generative AI’s working mechanism lays the foundation for successful incorporation. Because generative AI provides an unprecedented capability to human beings, understanding its working nature can greatly reduce the chance of misuse. Further, this paper discusses the new normal of cognitive automation, a concept introduced by Baily et al. (2023) [6]. Knowledge workers need to be ready to work in this new normal, in which machines undertake cognitive tasks, which used to be a unique ability of human beings. An important takeaway is that generative AI’s output may not always be accurate, but it is most likely useful. The terms general-purpose or general AI and generative AI summarize the new features brought by generative AI tools. General implies that it is not confined to a specific field. Any work having a language component in it can use some help from this type of tool. Generative implies that it generates/crafts responses for a prompt. This is an unprecedented capability that will drive deep changes in knowledge work. The term “language” in this paper has an extended scope that can refer to any form of human language, including pictures, voices, and videos.

Concerns about generative AI replacing jobs are prevalent [9]. In this paper, the authors compiled research findings and concluded that (1) new jobs will be created in the areas of generative AI development, services, and applications, and (2) jobs involving more automatable tasks (see Section 5.1 for details) will see reduced demand. Conversely, the more a job requires unstructured decision-making and communication, the less its demand will be reduced. Generative AI is more likely to shift workers’ efforts from language generation tasks to other tasks such as communication and research, allowing humans and AI to collaborate on quality outputs [10]. This aligns with the findings from the National Bureau of Economic Research (NBER), which indicate that customer support agents using AI tools increased productivity by 13.8%, particularly benefiting the less experienced workers with a high level of skills [11].

Having a supportive environment is critical to the successful incorporation of generative AI. The speed of generative AI development and application appears to have outpaced expectations. News like “College student put on academic probation for using Grammarly: ‘AI violation’” will keep making headlines. European countries, led by the Italian government, have taken a more conservative attitude toward adopting this powerful tool (https://www.bbc.com/news/technology-65139406, accessed on 15 January 2025). As with any technological innovation, people will naturally resist the change in the early stages of generative AI development. Creating and fostering a supportive environment for generative AI is necessary to help reduce the uncertainty and stress in the process. Organizations’ ethical codes, policies, and regulations need to be analyzed and adjusted to embrace the new normal. The “machine replaces my job” mindset needs to be addressed properly. The industrial revolution and the information era have shown that new technology tends to create jobs and boost the economy. Generative AI adoption is expected to result in more jobs being created and many existing jobs being enhanced. In addition to the “machine replaces my job” challenge, the literature also points out that workers’ attitude toward learning and their expected “return on investment (ROI)” from learning generative AI can play a role in how they view those tools [10].

When a supportive environment is established, generative AI can be incorporated into production. Knowledge work can be split into smaller tasks. All actions in a task can be analyzed to determine if they require “human in the loop”. This exercise will reveal which parts of a task can be delegated to generative AI. In this process, the role of a knowledge worker changes from primarily the creator to the selector/inspector.

The authors also want to emphasize the danger of misknowledge during generative AI adoption. Misknowledge is a new phenomenon that is different from misinformation. Examples of misinformation damage include cyber-bullying [12] and the infamous “Fake News” problem on social media [13]. In the Internet era, the misinformation problem has become far more damaging due to its rapid and widespread proliferation. Similarly, people will soon need to deal with the misknowledge problem in the generative AI era. The difference is that misknowledge can be more damaging and harder to detect. It will be harder to verify misknowledge than to verify misinformation [14]. Drawing on the Test-Driven Development (TDD) approach [15], this paper proposes a verification-driven approach in generative AI-aided knowledge work to safeguard the process and the results.

To summarize, the generative AI-aided knowledge work framework has four components: (1) understanding generative AI, (2) supportive environment, (3) generative AI production, and (4) the misknowledge problem and the verification-driven approach. The following sections elaborate on each component with relevant literature and analysis.

2. Methodology

Because the concept is at an early stage, the authors chose the literature review and analysis method, which is a common approach for establishing a framework. Because generative AI has been under development for only a short period, the literature specifically examining generative AI’s working mechanism in knowledge work is very limited. However, research on AI and productivity has a longer history, exemplified by [16,17]. Thus, the authors used this body of literature as the baseline and screened for the studies that are closely related to knowledge work production. In addition, literature investigating the behavioral impacts of AI was included because generative AI is expected to drive more automation in knowledge work and can have a great impact on behavioral changes.

The authors used “AI impact productivity, behavior” as the search query in Google Scholar and Academic Search Complete. This search yielded an initial set of published peer-reviewed articles. The authors manually reviewed the articles and kept only the ones investigating knowledge work and providing insights into how generative AI influenced behavioral changes. Those articles were further reviewed, and those examining the simple quantitative relationship between “using AI” and “productivity increase” without elaborating the theoretical construct were excluded from the set.

Table 1 below shows a summary of the literature. This paper classifies the literature based on the four components of the generative AI-aided knowledge work framework. To provide more support for each component, this paper includes additional literature when it is well suited to the category. For example, the understanding generative AI category includes Vaswani et al. (2017) [18] because its attention model is widely regarded as seminal for generative AI algorithms.

Table 1. Literature summary and category.

3. Understanding Generative AI Models

This paper focuses on understanding generative AI from an application perspective. Readers interested in the design and engineering of generative AI models should consult the transformer model [18] and scaled models [19]. From a user’s perspective, generative AI tools take language input, compute best-fit scores, and generate language output. In this paper, language can be in multiple forms and is not limited to text. According to the attention mechanism [18], generative AI encodes user input into tokens, forms an association construct, and then fits the construct with a pre-trained association algorithm, which is typically a super-large matrix of parameters. The algorithm ranks candidate tokens by their association scores to the input construct; a set of high-scoring tokens is then kept, decoded into the desired form of language, and sent back to the user. This attention mechanism is the theoretical backbone of generative AI models. In essence, generative AI’s language generation ability is the result of a large-scale simulation; as Jiang et al. and Mirzadeh et al. state, it “… is probabilistic pattern-matching rather than formal reasoning…” [20,21].
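The ranking-and-selection step described above can be illustrated with a toy sketch. It assumes a handful of made-up association scores for the token that follows “I like orange”; real models compute such scores with a trained neural network over a vocabulary of many thousands of tokens. The function names `softmax`, `top_k`, and `next_token` are illustrative and do not belong to any particular library.

```python
import math
import random


def softmax(scores):
    """Convert raw association scores into probabilities that sum to 1."""
    peak = max(scores.values())
    exps = {tok: math.exp(s - peak) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_k(scores, k):
    """Keep only the k highest-scoring candidate tokens."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return dict(ranked[:k])


def next_token(scores, k=3, rng=None):
    """Sample one token from the top-k candidates, weighted by probability."""
    rng = rng or random.Random()
    probs = softmax(top_k(scores, k))
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for the token following "I like orange".
scores = {"juice": 4.0, "peel": 2.5, "soda": 2.0, "cars": -1.0, "the": 0.5}
print(top_k(scores, 3))
print(next_token(scores, k=3, rng=random.Random(0)))
```

Because the final step is a weighted random draw, the same prompt can yield different outputs, which is consistent with the “probabilistic pattern-matching” characterization quoted above.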

A large language model (LLM) is one application of generative AI models, and arguably the most successful application considering the success of ChatGPT, Claude, Grok, and DeepSeek. LLMs are trained with super-large amounts of language data (the Internet is a major source). The quality of training data is a critical factor in generative AI models’ performance. The training process requires powerful hardware and software to handle large matrix computations (at the level of billions of parameters). The hardware’s memory capacity and computation speed are critical to the success of the training process. Trained LLMs can be deployed to heavy-duty servers or lightweight PCs, depending on the size of the model’s parameter matrix. In addition to the well-known LLMs, there are many open-source generative AI models pre-trained for various purposes that are published on open-source platforms such as https://huggingface.co (accessed on 11 July 2025).
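As a rough illustration of why parameter count decides where a model can be deployed, the sketch below estimates the memory needed just to store the weights. The model sizes and numeric precisions are assumptions chosen for illustration, and the estimate ignores activations, caches, and runtime overhead.

```python
def parameter_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory to hold the weights, in gigabytes (10^9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


# Hypothetical model sizes: 7 billion and 70 billion parameters.
for name, params in [("7B", 7e9), ("70B", 70e9)]:
    full = parameter_memory_gb(params)                            # 16-bit weights
    small = parameter_memory_gb(params, bytes_per_parameter=0.5)  # 4-bit weights
    print(f"{name}: {full:.1f} GB at 16-bit, {small:.1f} GB at 4-bit")
```

Under these assumptions, a 7-billion-parameter model needs about 14 GB at 16-bit precision and about 3.5 GB at 4-bit precision, which is the difference between server-class hardware and a well-equipped PC.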

3.1. The New Normal: AI in the Loop

As predicted by economists [5,8], generative AI tools are changing the way humans work. Human labor and intelligent output have been the dominant form of knowledge work. But with the breakthrough of generative AI, human workers are facing the new normal of partnering with AI models [22]. The new normal that human society steps into is that AI takes a significant portion of knowledge work, i.e., AI in the loop or machine in the loop [23,24,25]. Baily et al. use the term “cognitive automation” [6] for this phenomenon. Tasks requiring reasoning and human interventions can now use generative AI tools to delegate decision-making. For example, Zhang et al. (2024) [26] demonstrate a multi-agent system to automate web tasks. Their WebPilot system incorporates ChatGPT-4 and automates the sub-tasking, refining, and tuning processes in handling web tasks [26].

Despite the productivity gains, generative AI tools can cause psychological reactions among knowledge workers. For example, people can feel less important and less relevant at work. People can also feel threatened by AI. Mental health problems associated with establishing the new normal are likely to increase. Lerner finds that approximately 40% of workers in the U.S. expressed fear and anxiety about the potential of AI to make their jobs obsolete or irrelevant [9]. The American Psychological Association’s “2023 Work in America Survey” revealed that people with concerns over AI in the workplace were more likely to show signs of decreased psychological and emotional health. More research in this field is urgently needed. The existing research on AI and mental health has focused on applying AI in mental health solutions [27].

3.2. Generative

Generative AI’s output is crafted, in contrast to many existing IT tools, which retrieve, rank, and format existing data. For example, a search engine is by nature mainly an information sorting tool. It does not craft new pieces of data. If you search “I like orange juice” using Google, it will give you a list of websites on the Internet that mention the words. In contrast, if you input “I like orange juice” to ChatGPT, it will craft an essay token by token. Since users often regard generated content as novel knowledge, the likelihood of erroneous knowledge being adopted and propagated poses a serious risk. Ideally, users should apply the same level of caution to AI-generated knowledge as they would to information retrieved from the Internet. In practice, however, users can be tempted to ignore this risk in exchange for convenience and efficiency. This may result in serious consequences of AI abuse, considering the amount of knowledge work that can possibly be automated by generative AI.

Research in measuring, evaluating, and controlling the quality of generated knowledge is limited. Jiang et al. (2024) and Mirzadeh et al. (2024) examine generative AI’s reasoning ability and the quality of automated reasoning work [20,21]. With the expectation of pervasive applications of generative AI, measuring work quality has become even more critical than before. Section 6 discusses the misknowledge phenomenon and proposes a verification-driven approach.

3.3. General Purpose

AI tools before the invention of generative AI usually had a specific purpose. Medical image recognition is a well-known example. After being trained to recognize cancer cells in patients’ medical images, a deep-learning algorithm can perform as well as a professional radiologist [28]. But it only serves its predefined purpose, i.e., recognizing cancer cells in images. Generative AI, exemplified by large language models (LLMs), is a general-purpose AI tool. This feature offers the capability of being trained once and used everywhere. For example, ChatGPT’s knowledge is not confined to any specific subject area; its limits are set by the knowledge contained in its training data. When trained with proper data, generative AI tools can generate programming code, video, images, and voices.

3.4. Will Generative AI Replace Jobs?

As economic theory shows, production can be viewed as consisting of AI-automatable parts and non-automatable parts [29]. The two parts support each other to make production successful. The application of AI will speed up the automatable parts, while the non-automatable parts will remain in slow mode. This situation increases the demand for non-automatable work, which translates to new jobs being created. Logically, the more powerful the AI tool and the larger its applicable scale, the more new jobs will be created. Labor demand will decrease in the automatable parts, but because overall production increases, the new jobs are expected to outnumber the jobs lost. Jobs related to generative AI services, development, and applications will see a boost.
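The bottleneck intuition behind this argument can be illustrated with simple arithmetic. The sketch below is an illustrative simplification in the spirit of Amdahl’s law and is not the model used in [29]; the hours and speedup factors are invented for the example.

```python
def time_shares(automatable_hours, manual_hours, speedup):
    """Return (total hours per unit of output, share spent on manual work).

    Only the automatable hours shrink with the speedup; the
    non-automatable (manual) hours stay fixed.
    """
    total = automatable_hours / speedup + manual_hours
    return total, manual_hours / total


# Hypothetical unit of output: 6 automatable hours and 2 manual hours.
for speedup in (1, 2, 10):
    total, manual_share = time_shares(6.0, 2.0, speedup)
    print(f"speedup x{speedup}: {total:.1f} h per unit, "
          f"{manual_share:.0%} of the time is non-automatable work")
```

With these made-up numbers, the non-automatable share of working time rises from 25% to 40% to about 77% as the speedup goes from 1 to 2 to 10. The faster the automatable parts become, the more the remaining human work determines total output, which is the sense in which demand for non-automatable work grows.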

Brynjolfsson et al. (2023) find that using generative AI can greatly improve productivity of novices by quickly equipping them with implicit knowledge, which is possessed by experienced employees, but can now be well reproduced by generative AI [8]. In contrast, Woodruff et al. (2024) find that many knowledge workers believe that generative AI applications can work against entry-level jobs [30].

As Brynjolfsson et al. (2018) [31] stated, “Jobs are bundles of work tasks. The suitability for machine learning for work tasks varies greatly”. This idea is now reinforced by many field experts. Andrew Ng, a well-known AI scholar and developer, shared his opinion on AI’s effect on the labor force in a WSJ interview and pointed out that generative AI is “automating Tasks, Not Jobs”. He explained that a job usually requires a person to conduct multiple tasks that can be related in convoluted ways. AI is more likely to directly automate some of the tasks, and by doing so, productivity is improved. This perspective is supported by Woodruff et al. (2024) [30], who surveyed knowledge workers for their evaluation of generative AI’s impact on work. The respondents generally do not anticipate disruptive changes to their work, but are on board with more automation. In particular, they expect deskilling, dehumanization, disconnection, and disinformation to be amplified by generative AI applications [30].

Recent job market statistics provide empirical support for this view. Leading IT companies have taken quick action and made big decisions. For example, Google has been going through rounds of layoffs since December 2022. Philipp Schindler, the senior vice president of Google, addressed the layoff with the message “We’re not restructuring because AI is taking away roles that’s important here. But we see significant opportunities here with our AI-powered solution to actually deliver incredible ROI at scale, and that’s why we’re doing some of those adjustments.”

PwC’s 2024 AI Jobs Barometer [32] analyzed half a billion job postings and found that the job roles most exposed to AI are associated with 27% lower hiring growth. Microsoft’s annual report [2] shows that “66% of leaders say they wouldn’t hire someone without AI skills. 71% say they’d rather hire a less experienced candidate with AI skills than a more experienced candidate without them.” Companies like Google apparently see the opportunity and have been strategically shifting their focus to AI. Recent job market statistics show both mass layoffs and a low unemployment rate at the same time (https://www.cbsnews.com/news/layoffs-economy-2024-why-are-job-cuts-happening, accessed on 15 January 2025). Simultaneous mass layoffs and mass hiring support the view that companies are shifting their strategic goals.

Figure 1 below summarizes this section.

Figure 1. Understanding generative AI.

4. Supportive Environment for Generative AI Incorporation

To better understand user acceptance of generative AI, we use the technology acceptance model (TAM) to establish the constructs for generative AI incorporation. According to TAM2 [33], the important factors in users accepting technology at work include subjective norm, image, job relevance, output quality, and result demonstrability.

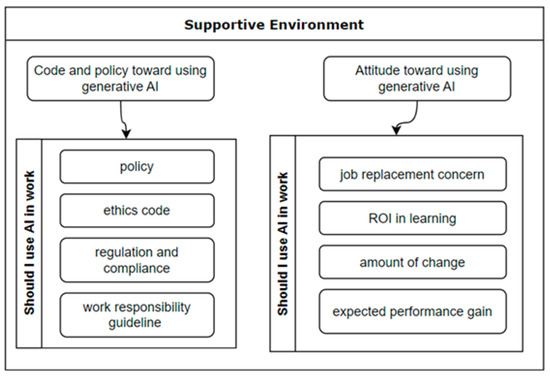

The subjective norm and image factors are rooted in the general public’s perceptions. The influence of these two factors is largely external and beyond a company’s control. Therefore, we focus on controllable factors and categorize them into two groups: the company code and policy toward using generative AI, and user attitude toward using generative AI.

4.1. Company Code and Policy

Generative AI is in its early stages, and most people have not yet fully understood it. A company’s general policies provide the initial guidance to workers. A clear policy can signal the management’s support for using generative AI at work. A generative AI policy can use the blacklist approach, which allows everything except uses known to be bad. This approach allows improvisation and tends to foster innovation [34,35]. Other regulatory means, such as codes of conduct, regulations, compliance, and guidelines, can use the same approach in order to foster a supportive environment.

There are existing frameworks for companies to adopt a generative AI policy. For example, the United Nations Educational, Scientific and Cultural Organization published the Recommendation on the Ethics of Artificial Intelligence in 2022 [36]. That publication provides a general guideline for AI policy adoption. Companies can use that as a starting point and make their own version of a generative AI policy.

4.2. User Attitudes

On the side of user attitude toward using generative AI, this paper draws on the TAM2 [33] factors: job relevance, output quality, and result demonstrability. These factors help justify the decision to use generative AI at work. We rephrase these factors with more quantifiable measures: ROI in learning generative AI, changes required, and expected performance gain. The expected performance gain can be internal and/or external. Internal gains are tied to esteem and confidence. External gains relate to peer recognition and awards.

On top of these factors, the job replacement concern is significant enough to influence workers’ attitudes. This factor is temporary and can be mitigated when the generative AI incorporation process is established.

Figure 2 summarizes this section.

Figure 2. Supportive environment for generative AI incorporation.

5. Generative AI Automation

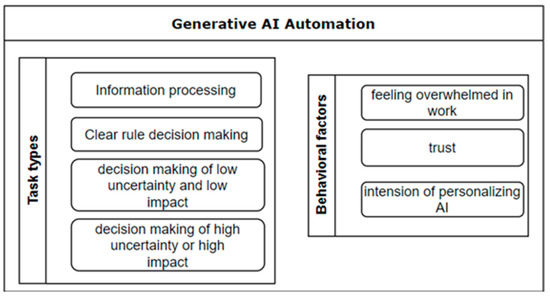

Combining and extending the views of production automatability and production tasks [29,31], we view production as a bundle of work tasks, each of which can be evaluated for its level of generative AI automatability. To implement a reliable “machine or AI in the loop” solution, we propose the following method.

5.1. Task and Automatability

To evaluate a task’s automatability, a user must begin with the “human-in-the-loop” principle, which states that humans remain actively involved in critical stages of AI decision-making [37,38]. Tasks without decision-making, such as most information-processing tasks, can be safely automated. Decision-making tasks should be further evaluated on (1) whether the decision rules are clearly defined and (2) how critical the decision is. For example, the task of conducting an interview can be fully automated because it is a no-decision-making task. The task of evaluating credit card applications can be automated because the decision-making rules are clearly defined for most cases. The task of assigning an F grade to a student should not be fully automated, even though it is technically easy. AI agents can play a big role in automatability. For automatable tasks, users sometimes must use AI agents to take actions on the environment, such as sending commands and operating machines.
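The evaluation rules above can be sketched as a small decision function. The three labels and the order in which the rules are applied are assumptions made for illustration; they are not a reproduction of Table 2.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    involves_decision: bool
    rules_clearly_defined: bool = False
    high_criticality: bool = False


def automatability(task):
    """Map a task to a hypothetical automatability label."""
    if not task.involves_decision:
        return "automate"                    # pure information processing
    if task.high_criticality:
        return "human in the loop"           # critical decisions stay with people
    if task.rules_clearly_defined:
        return "automate"                    # rule-based, lower-stakes decisions
    return "automate with human review"      # AI drafts, a person selects


examples = [
    Task("aggregate registration records", involves_decision=False),
    Task("evaluate a credit card application", True, rules_clearly_defined=True),
    Task("assign a failing grade", True, rules_clearly_defined=True,
         high_criticality=True),
]
for task in examples:
    print(f"{task.name}: {automatability(task)}")
```

Checking criticality before rule clarity reflects the failing-grade example: a decision can be technically easy to automate and still warrant a human decision maker.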

Another related concept is “machine in the loop” [39,40], which suggests viewing AI as a critical participant but not the commander in knowledge work. This view puts humans at the center of any task and treats AI as an information provider.

Table 2 below provides a summary of tasks’ generative AI automatability:

Table 2. Examples of generative AI automatability.

5.2. Behavioral Factors

Some behavioral factors can influence people’s willingness to use generative AI to automate knowledge work. A poll from the National Alliance on Mental Illness (https://www.nami.org/support-education/publications-reports/survey-reports/the-2024-nami-workplace-mental-health-poll, accessed on 15 January 2025) shows that 52% of employees reported feeling burned out in 2023 because of their job, and 37% reported feeling overwhelmed at work. According to [6,33], when feeling overwhelmed, people tend to use AI to automate tasks even when they know that humans make better decisions. Considering the large proportion of workers who felt stressed and overwhelmed at work, this factor has a big influence on the decision to automate tasks with generative AI.

Another factor is the inclination to personify AI. There are two major opinions: machinery and consciousness. The machinery group views AI as solely a machine that can imitate intelligence. The famous Turing Test was originally named the “imitation game” by Alan Turing. The consciousness group is the minority at this moment, but should not be ignored. Artificial consciousness [41] has not yet been established as a field of research, but it drives significant discussions in areas such as moral considerations [42]. The authors have seen discussions on “AI self-awareness” at academic conferences. In 2022, a Google engineer told national news media that he believed the company’s AI had developed sentience (https://www.washingtonpost.com/technology/2022/06/11/google-ai-lamda-blake-lemoine, accessed on 15 January 2025). Personifying AI can significantly influence AI usage through various levels of trust, prototyping, compassion, and bias.

Davenport et al. (2020) find that perceiving AI tools as machines is related to less trust in answering “why” questions, and more trust in “what” questions [43]. Castelo (2019) shows that people put less trust in AI when it is perceived as lacking compassion [44].

Figure 3 below summarizes this section.

Figure 3. Generative AI automation.

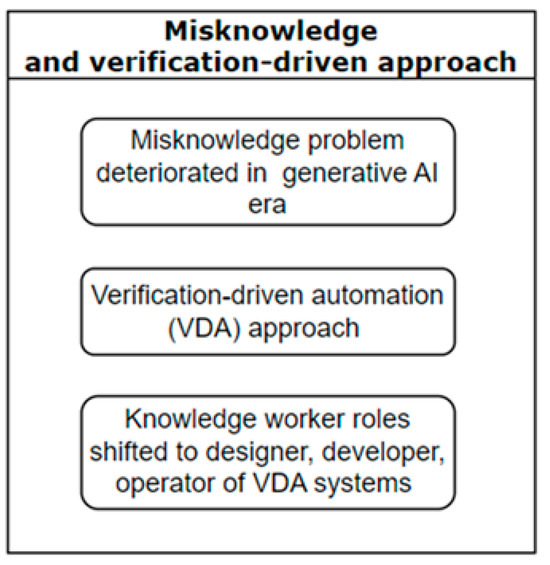

6. Misknowledge and Verification-Driven Approach (VDA)

The authors define misknowledge by comparing it with the concept of misinformation. The misinformation problem existed before the IT era [45], but it has worsened significantly as social media platforms have become widely used for communication. It now has larger-scale impacts and spreads faster. Examples include cyberbullying [46], fake news [47], and social media manipulation [48]. With the forthcoming pervasive use of generative AI, human society is expected to face a new version of this problem in the generative AI era: misknowledge.

In this paper, misknowledge is defined as systematically flawed or erroneous knowledge claims that are produced and presented in the form and structure of legitimate knowledge. Knowledge (and misknowledge) is typically composed and produced by a complicated process. But with generative AI, knowledge (and misknowledge) creation can be automated, which leads to a much higher scale and production rate. Misknowledge can mimic the appearance of valid knowledge with arguments, data, or citations. It requires professional expertise, critical analysis, or domain-specific judgment to identify and correct. Compared with misinformation, misknowledge can be more costly and more damaging once established [14].

To minimize the negative impact of misknowledge, the authors advocate for a verification-driven approach (VDA) in generative AI-automated knowledge work. This approach is derived from the Test-Driven Development (TDD) approach [15] developed by the software development community. In TDD, a testing tool is developed before the software and then used to guide the development process. Following the same idea, VDA develops the verification instruments first, before deciding on the task automation types (see task automatability in Section 5.1). As discussed earlier, tasks of high automatability tend to be highly definable for quality checks. For example, information-processing tasks should have clearly defined success criteria for their results. Low-automatability tasks require more frequent human intervention in high-stakes decision-making, and these intervention points are good places to verify AI-generated content for quality and accuracy.

Below is a hypothetical example to demonstrate how a VDA application works. In a school enrollment analysis project, the team needs to implement a model and predict the chance of a student dropping out of school. The provided panel data has students’ class registration records for each semester in a 4-year window. The team decided on the following tasks:

1. Data prep:
   a. Aggregate the data to the level of student + semester + number of courses enrolled.
   b. Extract and calculate several important factors such as “is First-Generation College Student”, “accumulative GPA”, “last semester GPA”, and “is international student”.
   c. Make the variables “isDropped2ndSemester”, “isDropped3rdSemester”, and “isDropped4thSemester”.
   d. Remove data records that have missing values.
2. Decide on the analysis methodology and model to be used.
3. Run predictive model:
   a. Estimate a logistic regression model for each DV created in step 1.c, with the IVs from step 1.b.
   b. Interpret the regression model’s results, including parameters and prediction power.
4. Write reports on this project and prepare presentations.

Task 1 contains several steps of transforming data into the desired format. According to the task automatability discussion in Section 5.1, these are information-processing tasks that are highly automatable. An analyst can either directly input the data and prep instructions to generative AI tools and obtain the result, or let AI generate program code to process the data according to the prep instructions. Either way, the analyst can make a program to compare the data before and after to determine whether the prep tasks have been performed correctly. This is essentially how TDD has been traditionally used, as a verification based on fixed outcome expectations.
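A before-and-after comparison of this kind can be sketched as follows for the aggregation step. The records, the field names, and the `aggregate` function are hypothetical stand-ins; in practice `aggregate` would be the AI-produced result or the AI-generated program, and the checks in `verify_aggregation` would be written first.

```python
from collections import Counter

# Hypothetical raw registration records: one row per student, semester, course.
raw = [
    {"student": "s1", "semester": 1, "course": "MATH101"},
    {"student": "s1", "semester": 1, "course": "ENG101"},
    {"student": "s1", "semester": 2, "course": "MATH102"},
    {"student": "s2", "semester": 1, "course": "BIO101"},
]


def aggregate(records):
    """Stand-in for the AI-produced prep step: count courses per student-semester."""
    counts = Counter((r["student"], r["semester"]) for r in records)
    return [
        {"student": s, "semester": t, "n_courses": n}
        for (s, t), n in sorted(counts.items())
    ]


def verify_aggregation(before, after):
    """Return a list of failed checks; an empty list means the prep passed."""
    failures = []
    keys = [(r["student"], r["semester"]) for r in after]
    if len(keys) != len(set(keys)):
        failures.append("duplicate student-semester rows")
    if set(keys) != {(r["student"], r["semester"]) for r in before}:
        failures.append("student-semester pairs were lost or invented")
    if sum(r["n_courses"] for r in after) != len(before):
        failures.append("course counts do not add up to the raw row count")
    if any(v is None for r in after for v in r.values()):
        failures.append("missing values remain")
    return failures


print(verify_aggregation(raw, aggregate(raw)))
```

The checks encode fixed expectations (unique keys, no lost students, totals preserved, no missing values), so they can be run unchanged against any implementation of the prep step, whoever or whatever produced it.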

Task 2 requires human decision-making and thus is considered low in generative AI automatability. For this kind of task, the decision maker may use AI-provided information, but must be vigilant and responsible for the validity of the knowledge applied. In this case, the traditional TDD context needs to be extended because a predefined outcome is not possible. The verification is based on a more complex human analysis of the results. Therefore, the authors call for a dedicated, multifaceted approach to verifying and validating AI-generated content in these cases.

Task 3 is a mix of information-processing and decision-making tasks. The analyst can automate task 3.a and validate it by running the model in two different software packages, such as R version 4.5 and SPSS version 31, and comparing the two results. Task 3.b, interpreting the results, is an example of decision-making of low uncertainty and low impact. The analyst can use AI to generate raw results, sift through them manually, and keep the desired ones.
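The two-package comparison for the model estimation step can also be partly automated. The sketch below assumes that the coefficient table from each package has already been exported and read into a Python dictionary mapping term names to estimates. The function name `compare_estimates`, the tolerance values, the term names, and all coefficient values are hypothetical placeholders, not results from this paper or output from any specific package.

```python
"""Sketch: comparing coefficient estimates exported from two packages.

The numbers below are made-up placeholders, not real model output.
"""
import math


def compare_estimates(est_a, est_b, rel_tol=1e-3, abs_tol=1e-6):
    """Compare two {term: coefficient} mappings and list any discrepancies."""
    problems = []
    for term in sorted(set(est_a) | set(est_b)):
        if term not in est_a or term not in est_b:
            # A term reported by only one package suggests different models.
            problems.append(f"{term}: present in only one output")
        elif not math.isclose(est_a[term], est_b[term],
                              rel_tol=rel_tol, abs_tol=abs_tol):
            problems.append(f"{term}: {est_a[term]} vs {est_b[term]}")
    return problems


# Hypothetical estimates from package A and package B (small rounding noise).
PACKAGE_A = {"(Intercept)": -1.2034, "last_semester_gpa": -0.8512,
             "is_first_gen": 0.4127}
PACKAGE_B = {"(Intercept)": -1.20341, "last_semester_gpa": -0.85119,
             "is_first_gen": 0.41268}

# Hypothetical output in which one coefficient has the opposite sign.
PACKAGE_C = {"(Intercept)": -1.2034, "last_semester_gpa": -0.8512,
             "is_first_gen": -0.4127}

if __name__ == "__main__":
    print(compare_estimates(PACKAGE_A, PACKAGE_B))
    print(compare_estimates(PACKAGE_A, PACKAGE_C))
```

A tolerance is used rather than exact equality because different packages may use different optimizers and rounding. Agreement between the two outputs supports the estimation step only; interpreting the coefficients remains a human task.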

Task 4 is an example of decision-making under high uncertainty or high impact. This type of task should use the human-in-the-loop approach, which puts the analyst in the driver’s seat and uses AI only for technical help or non-decision-making work such as simple proofreading.

As the case demonstrates, verification varies in how much human involvement it requires. For fully automatable tasks, verification instruments can be created and applied directly. For non-automatable tasks, human verification is critical to ensure that generative AI is used properly and that the knowledge work is performed correctly. More research is needed, especially to develop frameworks, methods, and techniques to systematically evaluate and validate generative AI’s output.

In the generative AI knowledge work era, efforts that used to be dedicated to knowledge creation will be shifted toward knowledge verification and evaluation. As such, it is predicted that the roles of designer, developer, and operator of VDA systems will be among the new jobs created in the generative AI era. These roles require analytical and critical thinking skills and do not necessarily reduce the requirements and needs for creativity.

Figure 4 below summarizes this section.

Figure 4. Misknowledge and verification-driven approach.

7. Conclusions

By proposing the generative AI-aided knowledge work framework, this paper explains generative AI’s working mechanism and major features, addresses job replacement concerns, discusses the new normal of AI in the loop in knowledge work, analyzes factors of a supportive environment, lays out a method of incorporating generative AI into knowledge production, and finally, discusses the danger of the emerging misknowledge phenomenon in the generative AI era and proposes the verification-driven approach. This framework and its components provide a structured foundation for analyzing both the opportunities and challenges of integrating generative AI into knowledge work.

The analysis highlights that while generative AI offers unprecedented capabilities to automate cognitive tasks and elevate knowledge productivity, its successful adoption depends on more than technical performance alone. A supportive environment, including policies, regulations, and worker attitudes, is critical for sustainable use.

This paper identifies the misknowledge problem as a pressing challenge of the generative AI era. Unlike misinformation, misknowledge is harder to detect and potentially more damaging due to its systematic and knowledge-like form. To address this, the paper proposes a verification-driven approach in generative AI automation. This approach repositions verification as a central element of knowledge work, creating opportunities for new roles in AI oversight and quality assurance.

Overall, the framework presented in this paper is one of the early attempts to theorize generative AI’s impact on knowledge work. It provides both scholars and practitioners with a conceptual roadmap to explore how generative AI may reshape knowledge work, organizational structures, and human well-being. Future research is urgently needed to refine verification practices, investigate behavioral impacts such as anxiety and deskilling, and develop systematic strategies to mitigate misknowledge.

This paper provides a much-needed guideline for incorporating generative AI in the work environment. It opens several promising research areas, including misknowledge, the verification-driven approach, testing system design in the generative AI era, AI delegation decision-making, AI personalization intention and decision-making, and the psychological effects of yielding workload to generative AI.

This paper is one of the early works in understanding generative AI’s impacts on workers and production. Like any theoretical and exploratory paper, it has some limitations. Empirical validation of the proposed framework is needed. Due to the rapidly evolving nature of generative AI, some findings may quickly become outdated. However, these limitations also present great opportunities for researchers to further investigate each element of the generative AI-aided knowledge work framework to provide valid guidance for the incorporation of generative AI in work.

Author Contributions

Conceptualization, Z.Y., X.G. and P.Z.; methodology, Z.Y., X.G. and P.Z.; software, Z.Y., X.G. and P.Z.; validation, Z.Y., X.G. and P.Z.; formal analysis, Z.Y., X.G. and P.Z.; investigation, Z.Y., X.G. and P.Z.; resources, Z.Y., X.G. and P.Z.; data curation, Z.Y., X.G. and P.Z.; writing—original draft preparation, Z.Y., X.G. and P.Z.; writing—review and editing, Z.Y., X.G. and P.Z.; visualization, Z.Y., X.G. and P.Z.; supervision, Z.Y., X.G. and P.Z.; project administration, Z.Y., X.G. and P.Z.; funding acquisition, Z.Y., X.G. and P.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Peng, S.; Kalliamvakou, E.; Cihon, P.; Demirer, M. The impact of AI on developer productivity: Evidence from GitHub Copilot. arXiv 2023, arXiv:2302.06590.

- Microsoft; LinkedIn. 2024 Work Trend Index Annual Report: AI at Work Is Here. Now Comes the Hard Part. 2024. Microsoft. Available online: https://www.microsoft.com/en-us/worklab/work-trend-index/ai-at-work-is-here-now-comes-the-hard-part (accessed on 8 May 2024).

- Necula, S.C.; Fotache, D.; Rieder, E. Assessing the impact of artificial intelligence tools on employee productivity: Insights from a comprehensive survey analysis. Electronics 2024, 13, 3758.

- Nurlia, N.; Daud, I.; Rosadi, M.E. AI implementation impact on workforce productivity: The role of AI training and organizational adaptation. Escalat. Econ. Bus. J. 2023, 1, 1–13.

- Eloundou, T.; Manning, S.; Mishkin, P.; Rock, D. GPTs are GPTs: An early look at the labor market impact potential of large language models. arXiv 2023, arXiv:2303.10130.

- Baily, M.; Brynjolfsson, E.; Korinek, A. Machines of Mind: The Case for an AI-Powered Productivity Boom; The Brookings Institute: Washington, DC, USA, 2023; Available online: https://www.brookings.edu/articles/machines-of-mind-the-case-for-an-ai-powered-productivity-boom/ (accessed on 22 July 2024).

- Raj, M.; Seamans, R. Artificial intelligence, labor, productivity, and the need for firm-level data. In The Economics of Artificial Intelligence: An Agenda; University of Chicago Press: Chicago, IL, USA, 2018; pp. 553–565.

- Brynjolfsson, E.; Li, D.; Raymond, L.R. Generative AI at Work; No. w31161; National Bureau of Economic Research: Cambridge, MA, USA, 2023.

- Lerner, M. Worried About AI in the Workplace? You’re Not Alone. 2024. American Psychological Association. Available online: https://www.apa.org/topics/healthy-workplaces/artificial-intelligence-workplace-worry (accessed on 15 January 2025).

- Noy, S.; Zhang, W. Experimental evidence on the productivity effects of generative artificial intelligence. Science 2023, 381, 187–192.

- NBER. Measuring the Productivity Impact of Generative AI. 2023. Available online: https://www.nber.org/papers/w31161 (accessed on 15 January 2025).

- Arnon, S.; Klomek, A.B.; Visoki, E.; Moore, T.M.; Argabright, S.T.; DiDomenico, G.E.; Benton, T.D.; Barzilay, R. Association of cyberbullying experiences and perpetration with suicidality in early adolescence. JAMA Netw. Open 2022, 5, e2218746.

- Vosoughi, S.; Roy, D.; Aral, S. The spread of true and false news online. Science 2018, 359, 1146–1151.

- Chen, C.; Shu, K. Can LLM-generated misinformation be detected? In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 7–11 May 2024; Available online: https://openreview.net/forum?id=ccxD4mtkTU (accessed on 15 January 2025).

- Beck, K. Test Driven Development: By Example; Addison-Wesley Professional: Boston, MA, USA, 2022.

- Brynjolfsson, E.; McAfee, A. The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies; WW Norton & Company: New York, NY, USA, 2014.

- Ford, M. The Rise of the Robots: Technology and the Threat of a Jobless Future; Basic Books: New York, NY, USA, 2016.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; NeurIPS Proceedings: San Diego, CA, USA, 2017; Volume 30.

- Kaplan, J.; McCandlish, S.; Henighan, T.; Brown, T.B.; Chess, B.; Child, R.; Gray, S.; Radford, A.; Wu, J.; Amodei, D. Scaling laws for neural language models. arXiv 2020, arXiv:2001.08361.

- Jiang, B.; Xie, Y.; Hao, Z.; Wang, X.; Mallick, T.; Su, W.J.; Taylor, C.J.; Roth, D. A Peek into Token Bias: Large Language Models Are Not Yet Genuine Reasoners. arXiv 2024, arXiv:2406.11050.

- Mirzadeh, I.; Alizadeh, K.; Shahrokhi, H.; Tuzel, O.; Bengio, S.; Farajtabar, M. GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models. arXiv 2024, arXiv:2410.05229.

- Cranefield, J.; Winikoff, M.; Chiu, Y.T.; Li, Y.; Doyle, C.; Richter, A. Partnering with AI: The case of digital productivity assistants. J. R. Soc. 2023, 53, 95–118.

- Christiano, P.F.; Leike, J.; Brown, T.; Martic, M.; Legg, S.; Amodei, D. Deep reinforcement learning from human preferences. In Advances in Neural Information Processing Systems (NeurIPS); NeurIPS Proceedings: San Diego, CA, USA, 2017.

- Shneiderman, B. Human-centered artificial intelligence: Reliable, safe & trustworthy. Int. J. Hum.–Comput. Interact. 2020, 36, 495–504.

- Candrian, C.; Scherer, A. Rise of the machines: Delegating decisions to autonomous AI. Comput. Hum. Behav. 2022, 134, 107308.

- Zhang, Y.; Ma, Z.; Ma, Y.; Han, Z.; Wu, Y.; Tresp, V. WebPilot: A versatile and autonomous multi-agent system for web task execution with strategic exploration. Proc. AAAI Conf. Artif. Intell. 2025, 39, 23378–23386.

- De Freitas, J.; Uğuralp, A.K.; Oğuz-Uğuralp, Z.; Puntoni, S. Chatbots and mental health: Insights into the safety of generative AI. J. Consum. Psychol. 2024, 34, 481–491.

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J. Artificial intelligence in radiology. Nat. Rev. Cancer 2018, 18, 500–510.

- Acemoglu, D.; Restrepo, P. The race between man and machine: Implications of technology for growth, factor shares, and employment. Am. Econ. Rev. 2018, 108, 1488–1542.

- Woodruff, A.; Shelby, R.; Kelley, P.G.; Rousso-Schindler, S.; Smith-Loud, J.; Wilcox, L. How knowledge workers think generative AI will (not) transform their industries. In Proceedings of the CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–26.

- Brynjolfsson, E.; Mitchell, T.; Rock, D. What Can Machines Learn, and What Does It Mean for the Occupations and Industries. In AEA Papers and Proceedings; American Economic Association: Nashville, TN, USA, 2018; pp. 43–47.

- PwC. 2024 AI Jobs Barometer. 2024. Available online: https://www.pwc.com/gx/en/issues/artificial-intelligence/ai-jobs-barometer.html (accessed on 14 October 2024).

- Legris, P.; Ingham, J.; Collerette, P. Why do people use information technology? A critical review of the technology acceptance model. Inf. Manag. 2003, 40, 191–204.

- OECD. OECD Regulatory Policy Outlook 2021; Regulatory policy 2.0.; OECD Publishing: Paris, France, 2021; Available online: https://www.oecd-ilibrary.org/governance/oecd-regulatory-policy-outlook-2021_38b0fdb1-en (accessed on 15 January 2025).

- Signé, L.; Almond, S. A Blueprint for Technology Governance in the Post-Pandemic World; Brookings Research: Washington, DC, USA, 2021; Available online: https://www.brookings.edu/articles/a-blueprint-for-technology-governance-in-the-post-pandemic-world/ (accessed on 22 July 2024).

- United Nations Educational, Scientific and Cultural Organization. UNESCO [8623]. Recommendation on the Ethics of Artificial Intelligence. 2022. Available online: https://www.unesco.org/en/articles/recommendation-ethics-artificial-intelligence (accessed on 15 September 2025).

- Endsley, M.R. Toward a theory of situation awareness in dynamic systems. Hum. Factors 1995, 37, 32–64.

- Cummings, M.M. Man versus machine or man+ machine? IEEE Intell. Syst. 2014, 29, 62–69.

- Hemmer, P.; Westphal, M.; Schemmer, M.; Vetter, S.; Vössing, M.; Satzger, G. Human-AI collaboration: The effect of AI delegation on human task performance and task satisfaction. In Proceedings of the 28th International Conference on Intelligent User Interfaces, Sydney, NSW, Australia, 27–31 March 2023; pp. 453–463.

- Köbis, N.; Rahwan, Z.; Rilla, R.; Supriyatno, B.I.; Bersch, C.; Ajaj, T.; Bonnefon, J.-F.; Rahwan, I. Delegation to artificial intelligence can increase dishonest behaviour. Nature 2025, 1–9.

- Buttazzo, G. Artificial consciousness: Utopia or real possibility? Computer 2001, 34, 24–30.

- Harris, J.; Anthis, J.R. The moral consideration of artificial entities: A literature review. Sci. Eng. Ethics 2021, 27, 53.

- Davenport, T.; Guha, A.; Grewal, D.; Bressgott, T. How artificial intelligence will change the future of marketing. J. Acad. Mark. Sci. 2020, 48, 24–42.

- Castelo, N. Blurring the Line Between Human and Machine: Marketing Artificial Intelligence; Columbia University: Manhattan, NY, USA, 2019.

- Godfrey-Smith, P. Misinformation. Can. J. Philos. 1989, 19, 533–550.

- Slonje, R.; Smith, P.K.; Frisén, A. The nature of cyberbullying, and strategies for prevention. Comput. Hum. Behav. 2013, 29, 26–32.

- Lazer, D.M.; Baum, M.A.; Benkler, Y.; Berinsky, A.J.; Greenhill, K.M.; Menczer, F.; Metzger, M.J.; Nyhan, B.; Pennycook, G.; Rothschild, D.; et al. The science of fake news. Science 2018, 359, 1094–1096.

- Aral, S.; Eckles, D. Protecting elections from social media manipulation. Science 2019, 365, 858–861.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).