A Review of Ethical Challenges in AI for Emergency Management

Abstract

1. Introduction

2. Previous Work

2.1. AI in Emergency Management

2.2. Ethical Issues Related to AI in Emergency Management

3. Applications of AI in Emergency Management

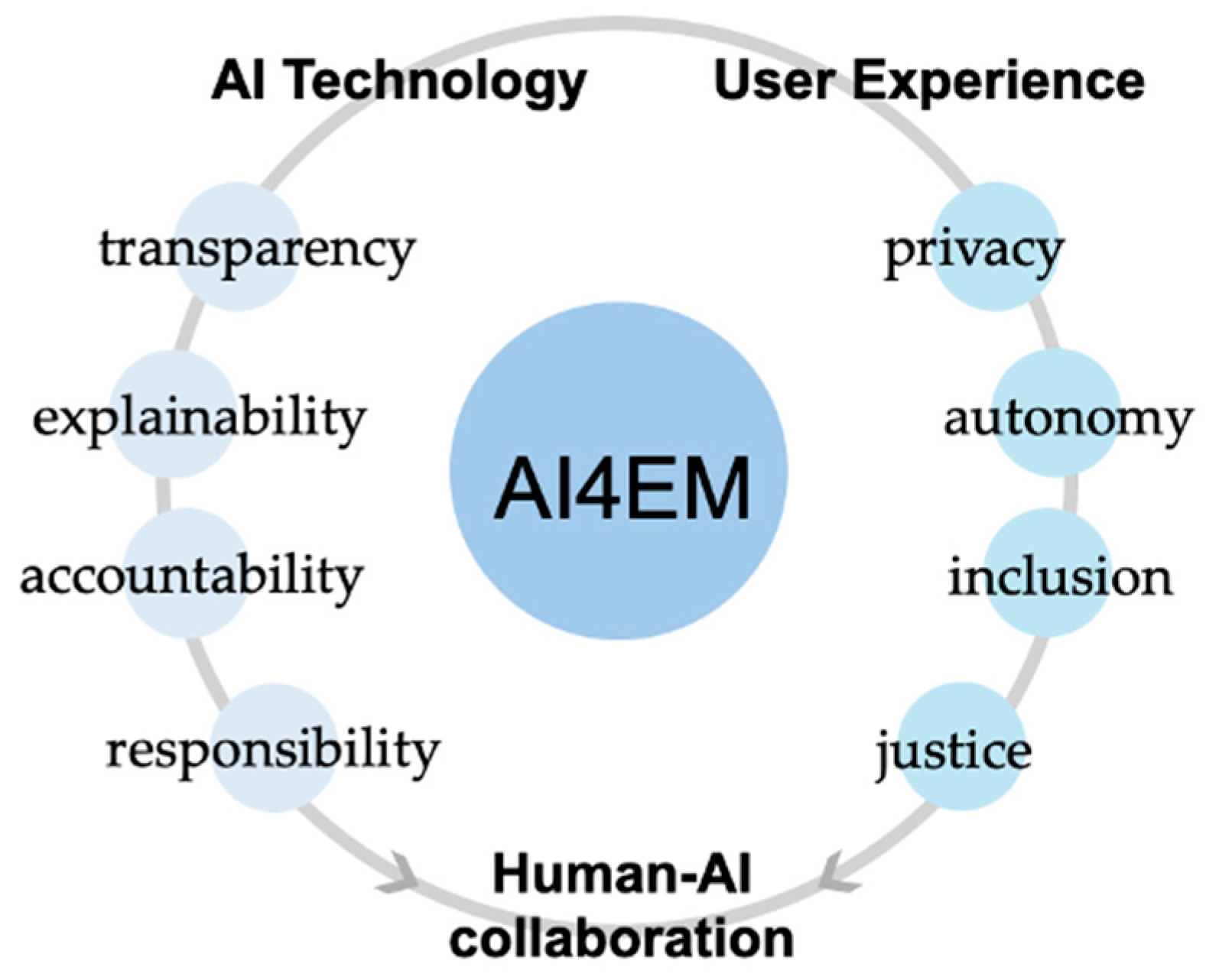

4. Current Ethical Challenges of AI in Emergency Management

4.1. AI Algorithmic Bias

4.2. AI Transparency, Privacy and Accountability

4.3. Human–AI Collaboration

5. Addressing Ethical Challenges in Emergency Management

5.1. Framework/Guidelines for AI in Emergency Management

5.2. Strategies to Mitigate Ethical Challenges

5.3. Policy-Related Ethical Challenges

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yuan, X.; Guo, Q.; Dadson, Y.A.; Goodarzi, M.; Jung, J.; Dong, Y.; Albert, N.; Bennett Gayle, D.; Sharma, P.; Ogunbayo, O.T.; Cherukuru, J. A Review of Ethical Challenges in AI for Emergency Management. Knowledge 2025, 5, 21. https://doi.org/10.3390/knowledge5030021