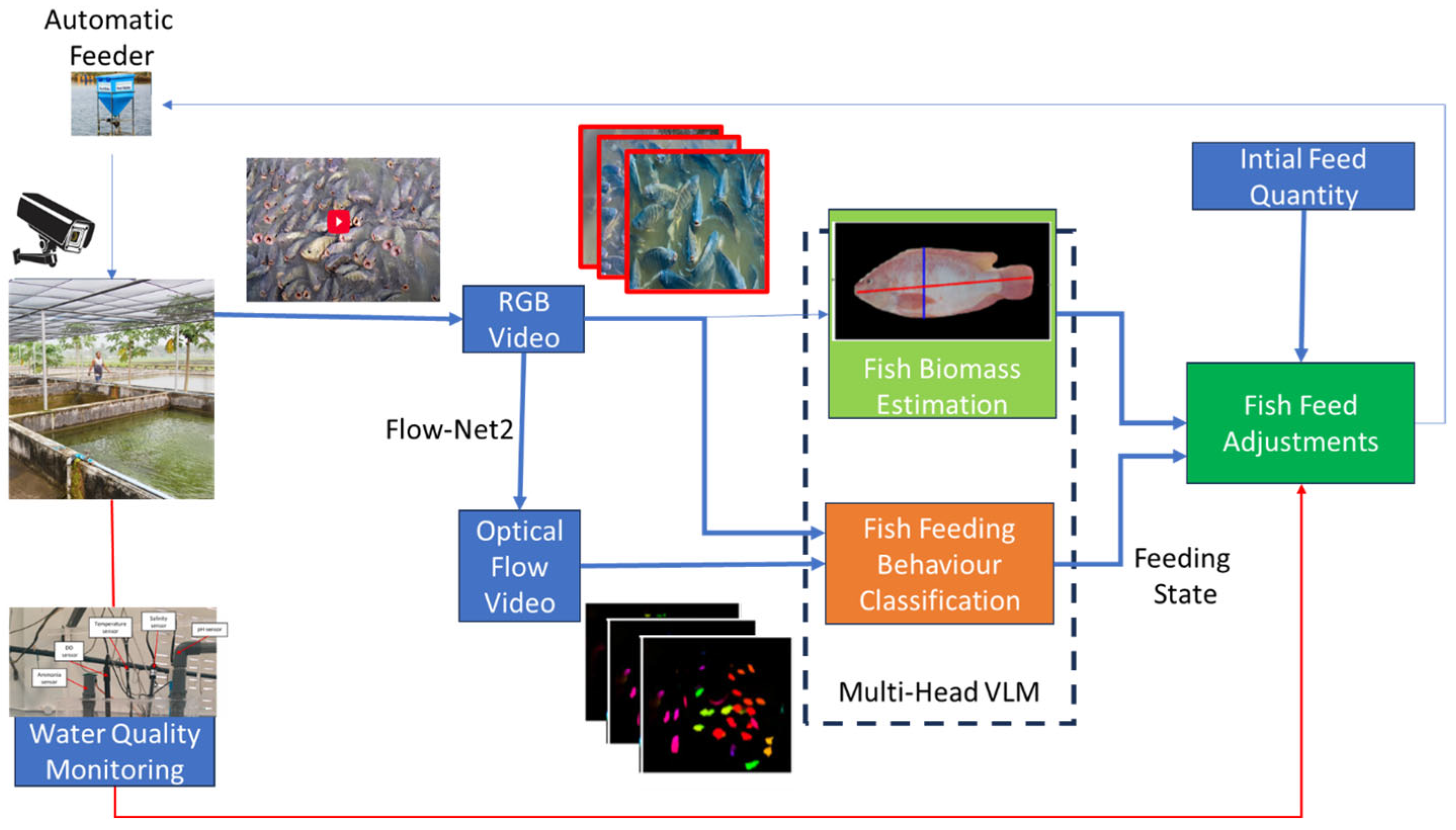

3.1. Data Collection and Pre-Processing

Accurately measuring fish biomass, keeping an eye on water quality, and identifying feeding patterns in aquaculture all depend on the data collection process.

- a. Biomass Assessment:

Detailed pictures of the fish in the tanks are taken using high-resolution cameras. The overall computation of total biomass is then made easier by applying image processing algorithms to detect individual fish and estimate their size and weight. Periodically, physical sampling is carried out to validate and calibrate the image processing system in order to guarantee accuracy in these evaluations.

- b. Water Quality Monitoring:

By integrating sensors that provide real-time data on vital parameters like temperature, pH, dissolved oxygen, ammonia, and turbidity, continuous monitoring of water quality is made possible. In order to assess its influence on fish health and feeding behavior and make well-informed management decisions, this data is methodically recorded.

- c. Feeding Behavior Recognition:

Cameras are placed above the tanks in strategic locations to record the best possible footage of fish behavior during feeding sessions. The dynamics of fish movement and feeding habits are examined using optical flow techniques. Behaviors such as aggressive feeding, peaceful feeding, and scattering are categorized using a vision-language model (VLM). The VLM is trained on a labeled dataset of fish feeding behaviors, which enables it to identify and classify activities in real time and to offer insight into fish behavior and feeding dynamics.

We employ FlowNet 2.0, a deep learning model specifically engineered to estimate optical flow between video frames with high accuracy, to generate optical flow data. By processing pairs of RGB video frames, which are standard color images that show the fish and their environment, FlowNet 2.0 calculates the optical flow and visualizes the movement of each pixel in the image from one frame to the next. With regions of active swimming displaying clear patterns, like trails or vectors indicating their paths, the resulting optical flow images visually depict fish movement. We can better comprehend fish interactions and their feeding environments thanks to this visualization.

3.2. Image Enhancement

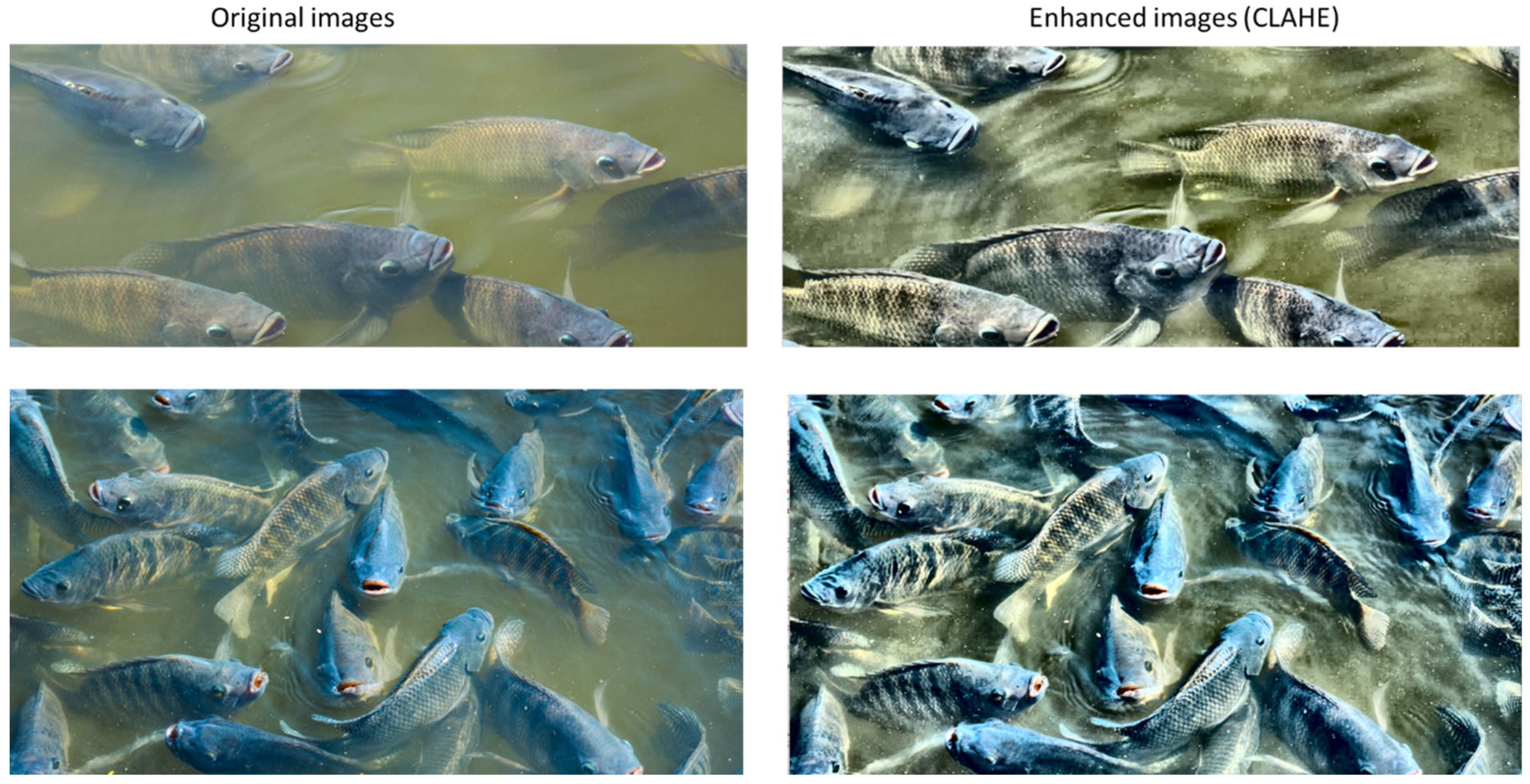

The image enhancement technique used in this work closely follows the methodology described in [23]. The strategy consists of three steps that aim to enhance image quality for more accurate analysis of fish feeding behaviors. The first step applies the Multi-Scale Retinex with Color Restoration (MSRCR) algorithm [24] as a preliminary processing stage. This method improves the overall visual quality of the images by reducing the effects of water surface reflections, which can mask visual details. By restoring colors and preserving their natural appearance, MSRCR creates a strong basis for the subsequent steps.

The Multidimensional Contrast Limited Adaptive Histogram Equalization (MDCLAHE) method [25] is then applied. This stage is essential for improving image contrast, especially in areas where contrast is initially low. By modifying local contrast levels, MDCLAHE enhances the visibility of finer details, which facilitates the identification and analysis of particular features in the images.

Lastly, to further sharpen the images, we use Unsharp Masking (UM) [26]. This method improves clarity and detail by emphasizing the edges of objects in the images, which makes them better suited for precise analysis and interpretation.
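The sharpening step can be illustrated with a minimal NumPy sketch of unsharp masking on a grayscale image. It is not the implementation from [26]: the Gaussian blur, the `sigma` and `amount` parameters, and the function names are assumptions chosen for illustration.

```python
import numpy as np

def gaussian_kernel1d(sigma: float, radius: int) -> np.ndarray:
    """Normalized 1-D Gaussian kernel of length 2 * radius + 1."""
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def gaussian_blur(img: np.ndarray, sigma: float = 2.0) -> np.ndarray:
    """Separable Gaussian blur of a 2-D image, using edge padding."""
    radius = max(1, int(3 * sigma))
    k = gaussian_kernel1d(sigma, radius)
    padded = np.pad(img.astype(np.float64), radius, mode="edge")
    # Blur along rows first, then along columns.
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def unsharp_mask(img: np.ndarray, sigma: float = 2.0, amount: float = 1.0) -> np.ndarray:
    """Sharpen by adding back the detail layer (image minus its blur)."""
    img_f = img.astype(np.float64)
    detail = img_f - gaussian_blur(img_f, sigma)
    sharpened = img_f + amount * detail
    return np.clip(np.rint(sharpened), 0, 255).astype(np.uint8)

# Hypothetical test image: a vertical step edge between two flat regions.
step = np.full((32, 32), 50, dtype=np.uint8)
step[:, 16:] = 200
sharp = unsharp_mask(step)
```

Flat regions are left unchanged because their detail layer is zero, while pixels on either side of an edge are pushed further apart, which is what makes object boundaries stand out.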

Together, these three steps (MSRCR for color restoration, MDCLAHE for contrast enhancement, and UM for sharpening) form a thorough image enhancement workflow that raises the quality of the dataset and makes fish feeding behaviors easier to identify.

3.3. Image Preprocessing for Machine Learning

Before images are passed to the CLIP model, they are standardized through several preprocessing steps. First, a RandomResizedCrop transformation crops a random region of each image and resizes it to 224 by 224 pixels. This standardizes image dimensions while exposing the model to varied image segments, which improves its capacity for generalization.

The images are then mirrored along the vertical axis using a RandomHorizontalFlip transformation. The model’s ability to identify fish feeding behaviors under various circumstances is enhanced by this augmentation technique, which adds variability to the training dataset without increasing the overall number of images.

After these augmentations, ToTensor is used to convert the images into tensors, which is required in order to make them compatible with PyTorch-based models such as CLIP. For the model to properly process the images, this conversion is necessary.

In the last step, a normalization transformation standardizes the images according to particular mean and standard deviation values. This ensures that the distribution of the input data matches the CLIP model’s pre-training conditions. Normalization is essential because it scales pixel values consistently, which helps to stabilize the training process and speed up model convergence.
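The flip, tensor conversion, and normalization steps can be sketched in plain NumPy as follows. This is an illustrative approximation rather than the authors' pipeline: it assumes the image has already been cropped to 224 by 224 pixels, the function names are hypothetical, and the mean and standard deviation are assumed to be the per-channel values distributed with OpenAI's CLIP preprocessing, since the text does not list them.

```python
import numpy as np

# Assumption: per-channel RGB statistics from OpenAI's CLIP preprocessing.
CLIP_MEAN = np.array([0.48145466, 0.4578275, 0.40821073], dtype=np.float32)
CLIP_STD = np.array([0.26862954, 0.26130258, 0.27577711], dtype=np.float32)

def random_horizontal_flip(img: np.ndarray, rng: np.random.Generator, p: float = 0.5) -> np.ndarray:
    """Mirror an H x W x C image left-to-right with probability p."""
    return img[:, ::-1, :] if rng.random() < p else img

def to_tensor(img: np.ndarray) -> np.ndarray:
    """uint8 H x W x C in [0, 255] -> float32 C x H x W in [0, 1]."""
    return np.transpose(img.astype(np.float32) / 255.0, (2, 0, 1))

def normalize(chw: np.ndarray, mean: np.ndarray = CLIP_MEAN, std: np.ndarray = CLIP_STD) -> np.ndarray:
    """Subtract the channel mean and divide by the channel standard deviation."""
    return (chw - mean[:, None, None]) / std[:, None, None]

def preprocess(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Flip -> tensor layout -> normalization, in the order described above."""
    return normalize(to_tensor(random_horizontal_flip(img, rng)))

rng = np.random.default_rng(0)
white = np.full((224, 224, 3), 255, dtype=np.uint8)  # hypothetical all-white frame
out = preprocess(white, rng)  # shape (3, 224, 224)
```

In a PyTorch project the same steps would normally be expressed with the corresponding torchvision transforms; the NumPy version only makes the arithmetic explicit.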

In addition to these steps, we use the multi-step image enhancement strategy outlined in [23]. This strategy applies methods such as noise reduction and contrast enhancement to further improve image quality before the images are fed into the model.

3.5. Method for Generating Optical Flow Data Using FlowNet 2.0

By measuring the movement of fish across video frames, optical flow analysis can be used to understand the feeding dynamics of fish. By expressing this movement as vectors that express both direction and magnitude, optical flow is able to capture the motion of objects between two images. This method is well known for its ability to track and identify moving objects, which makes it appropriate for evaluating fish behavior during feeding events.

Sparse and dense optical flow are the two main approaches to computing optical flow, and they differ in how they estimate movement. Sparse optical flow concentrates on a small number of feature points within an image. By tracking these selected points frame by frame, it estimates the flow using methods such as the Lucas-Kanade algorithm [30], and the tracked points are represented graphically as movement lines. Early attempts to use sparse optical flow targeted particular features, such as the fish’s black eye, for quantification. These attempts were unsuccessful because the feature was difficult to identify reliably when other fish parts were present in the images.

Dense optical flow, on the other hand, provides a comprehensive view of motion throughout the frame by calculating movement for each pixel in the image. The Horn-Schunck and Gunnar Farneback methods are frequently used to calculate dense optical flow [31]. Although it uses more processing power than its sparse counterpart, dense optical flow is especially useful when examining a school of fish because it captures the entire movement rather than just individual points.

To make feature extraction easier, the first step in data processing is to convert video frames to grayscale. Because dense optical flow examines every pixel, it is vulnerable to noise from multiple sources. To minimize the impact of noise, the image is divided into smaller segments, such as 16 × 16 pixel regions, and the average flow within each segment is computed.

With the OpenCV library, the video is first loaded using cv2.VideoCapture. The initial frames are captured using the read() method, and each frame is then converted to grayscale. The dense flow between the first and second frames is calculated with cv2.calcOpticalFlowFarneback, and the resulting vector data are averaged within the divided regions to create a comprehensive representation of movement.

Histograms are built from the resulting flow data to categorize fish movements. These histograms summarize the instantaneous movements seen between frames; however, continuous movements over a predetermined number of frames are more informative for classification. As a result, histograms describing vector magnitudes and angles are created for 31 consecutive frames. The frequency of each movement type is recorded, providing feature values for the machine learning algorithms that classify fish feeding behaviors.
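The region averaging and histogram construction described above can be sketched in NumPy as follows. The sketch assumes each flow field is an H × W × 2 array of per-pixel (dx, dy) displacements, which is the layout returned by cv2.calcOpticalFlowFarneback; the bin counts, the `max_mag` cutoff, and the function names are illustrative assumptions, not values from the study.

```python
import numpy as np

def block_average_flow(flow: np.ndarray, block: int = 16) -> np.ndarray:
    """Average an H x W x 2 flow field over non-overlapping block x block regions."""
    h, w, _ = flow.shape
    hb, wb = h // block, w // block
    cropped = flow[:hb * block, :wb * block]  # drop incomplete border blocks
    return cropped.reshape(hb, block, wb, block, 2).mean(axis=(1, 3))

def flow_histograms(avg_flow: np.ndarray, mag_bins: int = 8, ang_bins: int = 8,
                    max_mag: float = 10.0) -> np.ndarray:
    """Concatenate a magnitude histogram and an angle histogram."""
    dx, dy = avg_flow[..., 0], avg_flow[..., 1]
    mag = np.hypot(dx, dy)
    ang = np.arctan2(dy, dx) % (2 * np.pi)
    # Magnitudes above max_mag fall outside the range and are not counted.
    mag_hist, _ = np.histogram(mag, bins=mag_bins, range=(0.0, max_mag))
    ang_hist, _ = np.histogram(ang, bins=ang_bins, range=(0.0, 2 * np.pi))
    return np.concatenate([mag_hist, ang_hist]).astype(np.float64)

def window_features(flows: list, block: int = 16) -> np.ndarray:
    """Accumulate per-frame histograms over a window of consecutive flow fields."""
    return np.sum([flow_histograms(block_average_flow(f, block)) for f in flows], axis=0)

# In practice each flow field could come from OpenCV, for example:
#   flow = cv2.calcOpticalFlowFarneback(prev_gray, next_gray, None,
#                                       0.5, 3, 15, 3, 5, 1.2, 0)
# Here a synthetic field with uniform rightward motion stands in for real data.
flow = np.zeros((64, 64, 2))
flow[..., 0] = 1.0
features = window_features([flow] * 3)  # one 16-value vector for the window
```

Averaging before building the histograms suppresses pixel-level noise, and accumulating over a window turns instantaneous motion into a fixed-length feature vector that a classifier can consume.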

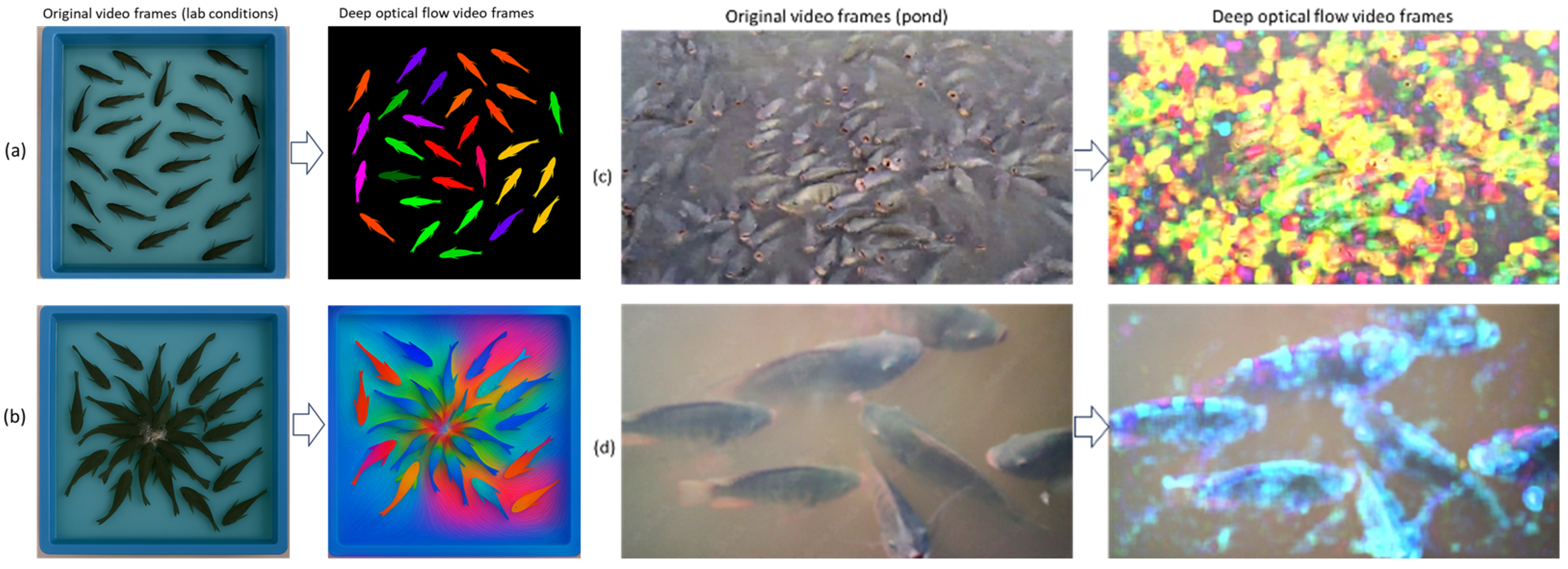

Figure 5 displays examples of optical flow data for key frames produced by FlowNet 2.0 and compares the optical flow representations obtained from two different environments. Movement speed and direction are the primary indicators of fish behavior. The images in (a) and (b) were taken in a controlled laboratory setting, as detailed in [32], and record fish feeding behaviors in an environment where factors such as lighting and water clarity can be controlled. The optical flow data generated from these images clearly depict the movement dynamics of the fish as they interact with food, displaying distinct and well-defined movement vectors (encoded as HSV colors). The controlled setting provides high visibility and distinct patterns, which facilitate the interpretation of the flow data and the analysis of the feeding behaviors.

On the other hand, photos taken in an actual pond aquaculture environment are shown in (c) and (d). The intricacies and difficulties that occur in natural environments—such as fluctuations in water turbidity, lighting, and background clutter—are reflected in these photos. These images’ optical flow data show how fish movements can be affected by their interactions with the environment, including debris and aquatic plants. In contrast to the lab conditions, the resulting flow visuals might show less clarity, illustrating the dynamic interactions of fish in a more intricate ecological setting.
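For readers unfamiliar with color-coded flow images, the sketch below shows one common way to encode a flow field as HSV, with hue representing direction and value representing speed. This convention is an assumption for illustration; the exact color coding used to render Figure 5 is not specified in the text, and the function name is hypothetical.

```python
import numpy as np

def flow_to_hsv(flow: np.ndarray) -> np.ndarray:
    """Encode an H x W x 2 flow field as HSV: hue = direction, value = relative speed."""
    dx, dy = flow[..., 0], flow[..., 1]
    mag = np.hypot(dx, dy)
    ang = np.arctan2(dy, dx) % (2 * np.pi)
    hsv = np.zeros(flow.shape[:2] + (3,), dtype=np.float64)
    hsv[..., 0] = ang / (2 * np.pi)  # hue as a fraction of a full turn
    hsv[..., 1] = 1.0                # full saturation everywhere
    peak = mag.max()
    hsv[..., 2] = mag / peak if peak > 0 else 0.0  # brightest pixel = fastest motion
    return hsv

# Hypothetical 2 x 2 flow field: rightward, downward (image coordinates), and static pixels.
demo = np.zeros((2, 2, 2))
demo[0, 0] = (1.0, 0.0)
demo[0, 1] = (0.0, 2.0)
hsv = flow_to_hsv(demo)
```

Under this encoding, static background appears dark, while regions of active swimming appear bright, with color indicating the direction of travel.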

3.6. Fish Feeding Behaviour Assessment

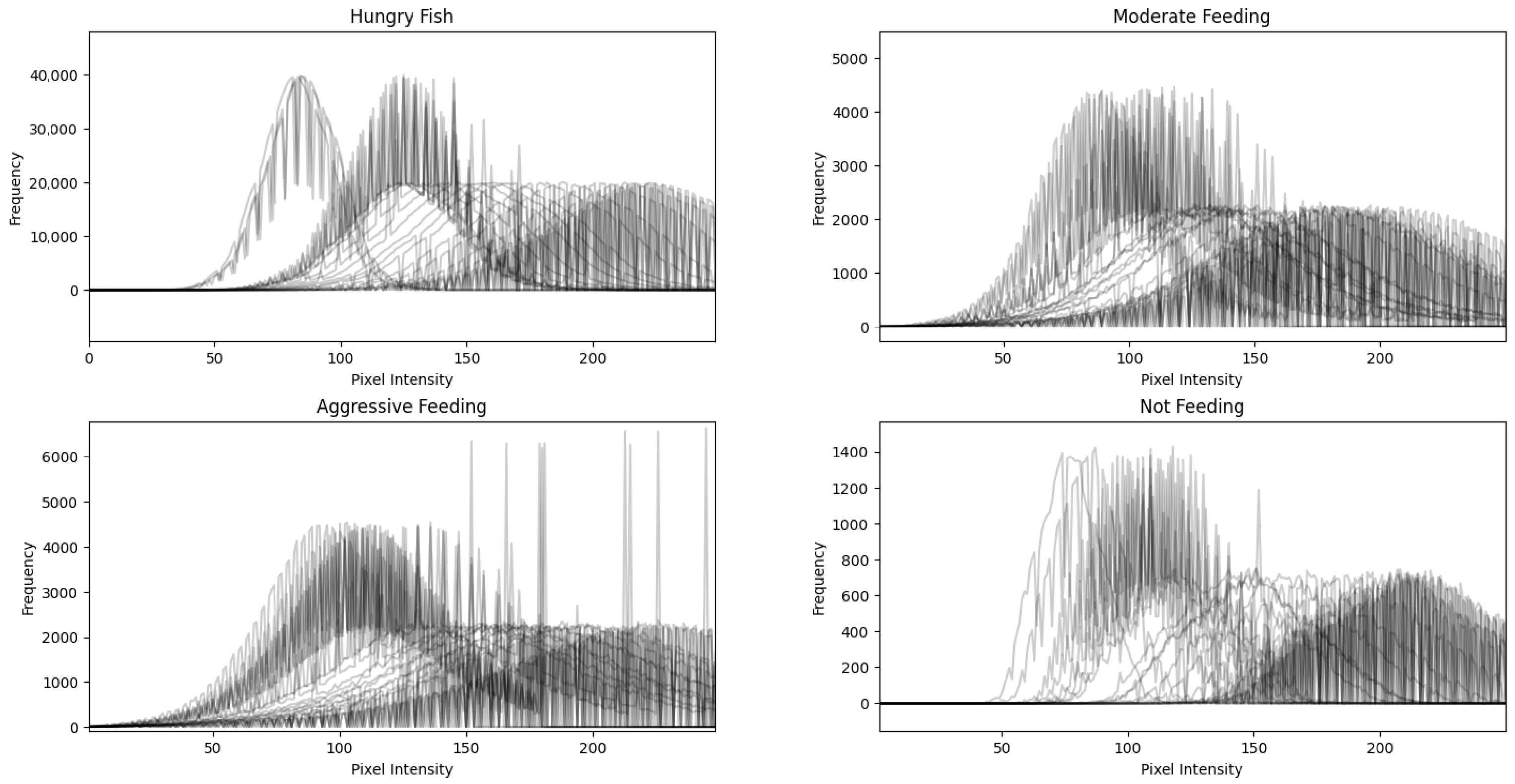

Figure 6 and

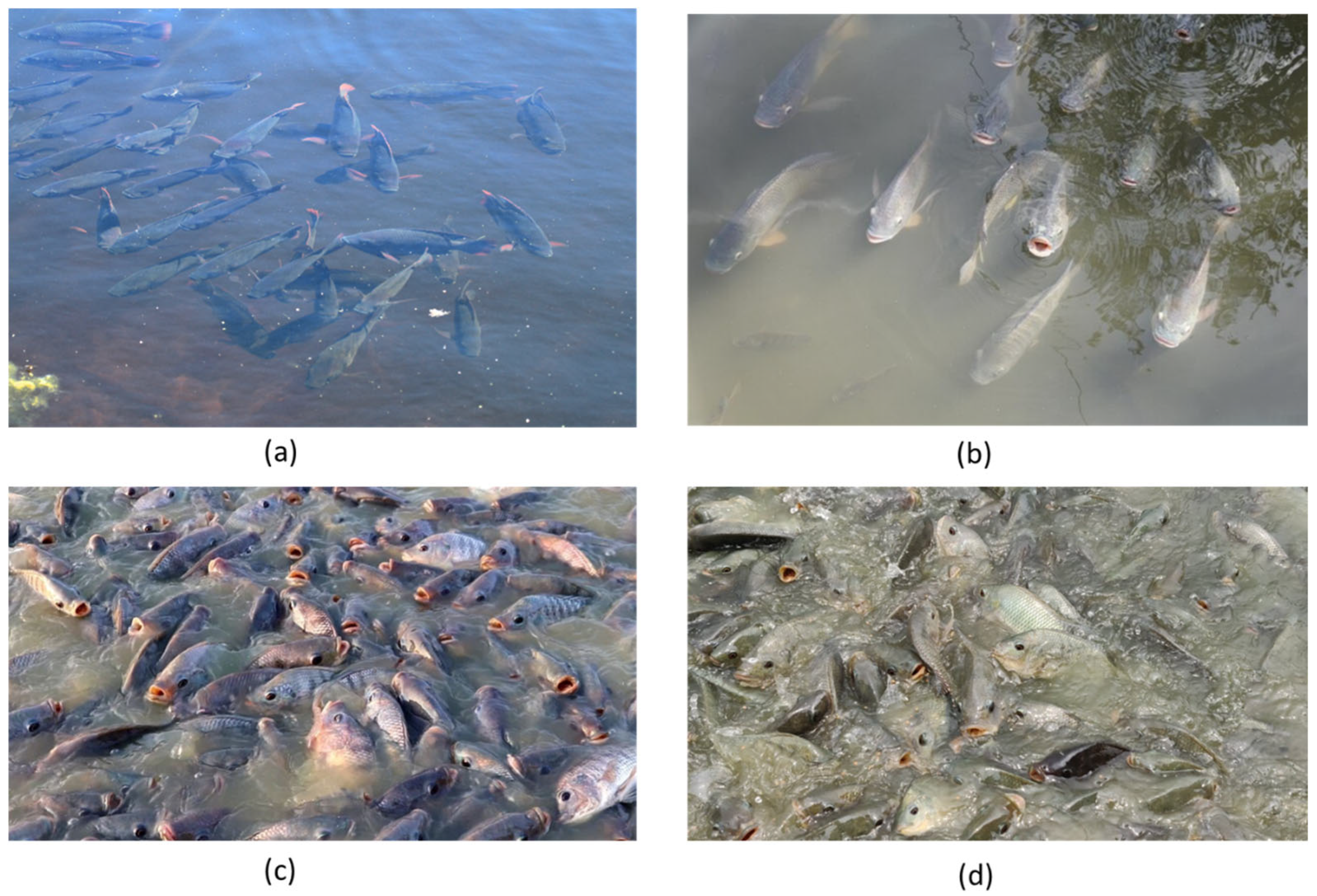

Figure 7 depict keyframes of fish feeding behaviors in complex environments and in lab settings, respectively. The keyframes in

Figure 6 show fish feeding behaviors seen in a carefully regulated laboratory environment with carefully controlled lighting, water quality, and food availability. The fish’s movements and behaviors during feeding can be clearly recorded thanks to the laboratory’s spotless, transparent tanks and excellent visibility. Conversely,

Figure 7 depicts feeding behaviors like aggressive, moderate, and hungry behavior. Behavior that is hungry, Fish are seen actively swimming close to the surface, showing signs of agitation, and darting in the direction of the feeders in the keyframe (

Figure 7b). The keyframe for “Moderate Feeding” (

Figure 7c) shows fish eating steadily, stopping occasionally to take in their environment. They exhibit this behavior when they bite and then switch back to their neutral swimming position. Fish vying for food are captured in Aggressive Feeding frames, which show noticeable accelerations and sporadic leaps as they pursue food particles. The hostile interactions between individual fish make this clear.

Figure 6 and

Figure 7 taken together give a thorough picture of how fish feeding habits can differ greatly between regulated lab settings and more intricate, natural settings. These keyframes are important sources of information for comprehending the subtleties of fish behavior, which can help improve aquaculture management techniques and lead to more efficient feeding plans.

The basic CLIP model creates separate embeddings for text and images because it processes them independently. We made some adjustments to increase accuracy for our particular requirements. Our method makes use of the pre-trained components of CLIP: a Vision Transformer (ViT) for image processing and a Transformer model or LLM (e.g., BERT) for text analysis. Because they extract rich features from both visual and textual data, these components are effective at capturing complex relationships between the two types of data. Specific adjustments were made to the network structure, including a modified classification head that outputs a tensor of size 4 through a softmax activation function. We added dropout layers before the classification head to help mitigate overfitting on our smaller dataset. Additionally, we used a learning rate lower than the typical rate used for training from scratch.

Prior to classification, we incorporated a fusion layer into our model that merges the text and image embeddings. This layer uses the interactions between the text and visual inputs to help the model better identify subtle indicators of various fish feeding behaviors. The merged embeddings are passed through multiple dense layers and then through a softmax classifier that generates probabilities for each of the four fish feeding behavior categories in our dataset.
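The forward pass of such a fusion head can be sketched in NumPy as below. Only the four-way softmax output, the dropout before the classification layer, and the use of merged image and text embeddings come from the description above; fusion by concatenation, the single hidden layer of 256 units, the ReLU activation, the dropout rate, and the class name `FusionHead` are assumptions made for illustration, and the weights are random rather than trained.

```python
import numpy as np

def softmax(z: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

class FusionHead:
    """Concatenate image and text embeddings, then dense -> dropout -> softmax."""

    def __init__(self, embed_dim: int = 512, hidden: int = 256,
                 n_classes: int = 4, p_drop: float = 0.1, seed: int = 0):
        self.rng = np.random.default_rng(seed)
        self.w1 = self.rng.normal(0.0, 0.02, (2 * embed_dim, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = self.rng.normal(0.0, 0.02, (hidden, n_classes))
        self.b2 = np.zeros(n_classes)
        self.p_drop = p_drop

    def __call__(self, img_emb: np.ndarray, txt_emb: np.ndarray, train: bool = False) -> np.ndarray:
        x = np.concatenate([img_emb, txt_emb], axis=-1)  # fusion (assumed: concatenation)
        h = np.maximum(x @ self.w1 + self.b1, 0.0)       # dense layer with ReLU
        if train:
            # Inverted dropout, applied before the classification layer.
            keep = self.rng.random(h.shape) >= self.p_drop
            h = h * keep / (1.0 - self.p_drop)
        return softmax(h @ self.w2 + self.b2)            # probabilities over 4 behaviors

head = FusionHead()
data_rng = np.random.default_rng(1)
probs = head(data_rng.normal(size=(3, 512)), data_rng.normal(size=(3, 512)))
```

Each row of `probs` is a probability distribution over the four behavior categories for one image-text pair.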

Identifying fish feeding behavior can be considered an image classification task over a dataset $\mathcal{D}^{c} = \{(I_i, C_i)\}$, in which each label $C_i$ belongs to the set $\mathcal{C} = \{1, \dots, K\}$, where $K$ denotes the total number of classes in the dataset $\mathcal{D}^{c}$. The primary objective of image classification is to accurately predict the category label associated with each given image. This is achieved by employing a visual encoder $f_v$ along with a parametric classifier $h_c$, such as a softmax classifier. For an input image $I_i$, the encoder $f_v$ converts $I_i$ into an embedding vector $v_i = f_v(I_i)$. Subsequently, the classifier $h_c$ computes the logits distribution $p_i = h_c(v_i)$ across the $K$ categories in $\mathcal{C}$. For a specific image $I_i$, the cross-entropy loss function is used to optimize $p_i$ against the true label $C_i$ and is defined as follows:

$$\mathcal{L}_{\mathrm{CE}}(I_i) = -\log \frac{\exp\left(p_{i, C_i}\right)}{\sum_{j=1}^{K} \exp\left(p_{i, j}\right)} \tag{1}$$
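As a small worked example of the softmax cross-entropy loss, the following NumPy sketch evaluates it for a single image with four classes. The logit values and the function name are illustrative only.

```python
import numpy as np

def cross_entropy(logits: np.ndarray, label: int) -> float:
    """Negative log of the softmax probability assigned to the true class."""
    shifted = logits - logits.max()                     # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return float(-log_probs[label])

# With four equally likely classes the loss equals log(4).
uniform_loss = cross_entropy(np.zeros(4), label=2)

# A confident, correct prediction gives a loss close to zero.
confident_loss = cross_entropy(np.array([10.0, 0.0, 0.0, 0.0]), label=0)

# The same confident prediction is penalized heavily if the true class differs.
wrong_loss = cross_entropy(np.array([10.0, 0.0, 0.0, 0.0]), label=1)
```

The loss therefore rewards logits that concentrate probability on the true label and grows as probability mass moves to other classes.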

The prerequisite for image-text alignment is therefore an image-text dataset. Image-text alignment involves a dataset $\mathcal{D}^{a} = \{(I_i, T_i)\}$, which consists of images $I_i$ and their accompanying captions $T_i$. The objective of this process is to minimize the distance between corresponding image-text pairs (referred to as positive pairs) while maximizing the distance between non-matching pairs (termed negative pairs) within the embedding space. This is achieved using a visual encoder $f_v$ for images and a textual encoder $f_t$ for captions. After passing through their respective feedforward neural networks and being L2 normalized, the embeddings $v_i$ and $t_i$ are generated. To optimize the cosine similarity between $v_i$ and $t_i$, we typically employ the InfoNCE [33] contrastive loss function, as expressed in Equation (2):

$$\mathcal{L}_{\mathrm{InfoNCE}}(I_i) = -\log \frac{\exp\left(\mathrm{sim}(v_i, t_i)/\tau\right)}{\sum_{j} \exp\left(\mathrm{sim}(v_i, t_j)/\tau\right)} \tag{2}$$

Here, $\mathrm{sim}(\cdot, \cdot)$ represents a similarity function such as dot product or cosine similarity, the sum runs over the captions $t_j$ in the batch, and the learnable temperature parameter $\tau$ is initially set to 0.07.
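The image-to-text InfoNCE loss can be sketched in NumPy as follows, assuming that pair i in the batch is the positive for image i and that all other captions in the batch act as negatives. The toy embeddings and function names are illustrative; the temperature is fixed at its initial value of 0.07 rather than learned.

```python
import numpy as np

def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products equal cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def info_nce(img_emb: np.ndarray, txt_emb: np.ndarray, tau: float = 0.07) -> float:
    """Mean image-to-text InfoNCE loss over a batch of aligned pairs."""
    v, t = l2_normalize(img_emb), l2_normalize(txt_emb)
    logits = (v @ t.T) / tau                            # entry (i, j) = sim(v_i, t_j) / tau
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))          # positives lie on the diagonal

# Toy batch: four mutually orthogonal embeddings.
emb = np.eye(4)
aligned_loss = info_nce(emb, emb)                       # every caption matches its image
shuffled_loss = info_nce(emb, np.roll(emb, 1, axis=0))  # every caption is mismatched
```

The aligned batch yields a loss near zero, while the shuffled batch yields a large loss, which is the behavior that pulls positive pairs together and pushes negative pairs apart during training.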

To solve the task of image classification based on image-text alignment, we frame image classification with a triplet dataset $\mathcal{D} = \{(I_i, C_i, T_i)\}$, where $T_i$ represents the corresponding text description. In traditional fish feeding behaviour classification, images are typically associated with straightforward category labels or indices $C_i$. In this case, however, the text descriptions $T_i$ serve as concept names indexed by $C_i$, allowing us to structure $\mathcal{D}$ as $\{(I_i, C_i, T_{C_i})\}$. As illustrated in Figure 8, this unification enhances the understanding of the relationship between images and text; Table 1 gives examples.

We incorporated detailed descriptions for each fish feeding behaviour and used a structured prompt template to enhance the fluency and relevance of these descriptions. As depicted in Figure 8, each class name is treated as a concept C for its respective category. The final text description for Equations (4) and (5) is formulated using the following template:

This approach allows us to generate a final text description that is not only informative but also contextual.

We introduce a vision-language model inspired by the principles of CLIP, aimed at aligning the textual and visual representations of fish feeding behaviors within a shared embedding space. Similar to the original CLIP framework, our approach processes a batch of N image-text pairs denoted as $\{(I_i, T_i)\}_{i=1}^{N}$, using independent encoders $f_v$ for the visual component and $f_t$ for the textual component.

To derive the semantic representations for each pair, the image $I_i$ is transformed into an embedding $v_i$ through the visual encoder $f_v$, and the corresponding text $T_i$ is similarly processed by the textual encoder $f_t$ to give $t_i$. Both encoders deliver output embeddings with a dimensionality of 512. The resulting embeddings are normalized for each image-text pair.

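As expressed in Equation (3), we use one-hot label vectors for the target calculations, which are essential for computing the loss components, including both image-to-text and text-to-image losses as specified in Equations (4) and (5). For the i-th pair, the label is defined as $y_i = (y_{i1}, \dots, y_{iN})$, where $y_{ij}$ equals one for the positive pair ($j = i$) and zero for negative pairs. Consequently, the overall loss for the CLIP model can be expressed as:

$$\mathcal{L} = \frac{1}{2}\left(\mathcal{L}_{i2t} + \mathcal{L}_{t2i}\right) \tag{3}$$

where:

$$\mathcal{L}_{i2t} = \frac{1}{N}\sum_{i=1}^{N} H\left(y_i, p_i^{i2t}\right), \qquad p_{ij}^{i2t} = \frac{\exp\left(\mathrm{sim}(v_i, t_j)/\tau\right)}{\sum_{k=1}^{N} \exp\left(\mathrm{sim}(v_i, t_k)/\tau\right)} \tag{4}$$

$$\mathcal{L}_{t2i} = \frac{1}{N}\sum_{i=1}^{N} H\left(y_i, p_i^{t2i}\right), \qquad p_{ij}^{t2i} = \frac{\exp\left(\mathrm{sim}(t_i, v_j)/\tau\right)}{\sum_{k=1}^{N} \exp\left(\mathrm{sim}(t_i, v_k)/\tau\right)} \tag{5}$$

In these equations, $v_i$ and $t_j$ are the normalized image and text embeddings, $\mathrm{sim}(\cdot, \cdot)$ is the similarity function, $\tau$ is the temperature, and $H(\cdot, \cdot)$ signifies the cross-entropy operation applied to the respective loss functions.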
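A compact NumPy sketch of a symmetric contrastive loss with explicit one-hot targets is given below. It takes a precomputed N × N similarity matrix as input; the equal weighting of the two directions, the fixed temperature of 0.07, and the function names are assumptions made for illustration.

```python
import numpy as np

def log_softmax(z: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=axis, keepdims=True))

def clip_loss(sim: np.ndarray, tau: float = 0.07):
    """Return (total, image-to-text, text-to-image) losses.

    sim[i, j] is the similarity between image i and text j.
    """
    n = sim.shape[0]
    y = np.eye(n)              # one-hot targets: pair (i, i) is the positive
    logits = sim / tau
    # Image-to-text: each row is a distribution over texts for one image.
    loss_i2t = -np.mean(np.sum(y * log_softmax(logits, axis=1), axis=1))
    # Text-to-image: each column is a distribution over images for one text.
    loss_t2i = -np.mean(np.sum(y * log_softmax(logits, axis=0), axis=0))
    return 0.5 * (loss_i2t + loss_t2i), loss_i2t, loss_t2i

# Uninformative similarities give log(N) in both directions.
total_flat, i2t_flat, t2i_flat = clip_loss(np.zeros((3, 3)))

# Perfectly aligned pairs give a loss close to zero.
total_aligned, _, _ = clip_loss(np.eye(3))
```

Writing the targets as an explicit identity matrix makes the role of the one-hot labels visible: only the diagonal entries, which correspond to positive pairs, contribute to each cross-entropy term.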