1. Introduction

Network automation and self-organizing networks (SONs) increasingly rely on on-device classifiers for real-time traffic identification, intrusion detection, and fault diagnosis. Because of its simplicity and low-latency footprint, cross-entropy (CE) loss remains the default optimisation objective for such edge-deployed models [1,2,3,4,5,6,7,8,9,10,11,12,13,14]. A standard CE pipeline maps features to class scores with a linear layer and then applies a softmax. Although effective, this routine only implicitly stores class information in the weight matrix and imposes no explicit geometric structure on the feature space [15,16,17]. As a result, learned representations may lack intra-class compactness and clear inter-class margins, ultimately limiting generalisation under the dynamic, noisy conditions typical of large-scale automated networks [18,19,20,21,22,23,24].

Several avenues have been explored to overcome these limitations. Margin-based losses such as ArcFace [18] and CosFace [16] explicitly enlarge geometric margins in feature space, while contrastive learning approaches like SimCLR [25] and MoCo [26] pull positive pairs together and push negatives apart. Although both lines of work improve discrimination, margin losses are highly sensitive to hyper-parameters and label noise [27,28,29,30,31,32], whereas contrastive methods often require large batches, complex hard-negative mining, or auxiliary memory banks [33,34,35,36,37,38,39]. These extra costs clash with the tight latency and memory budgets of edge devices embedded in SON infrastructures.

To retain CE’s efficiency while meeting the geometric demands of reliable classification, we propose Contrastive Geometric Cross-Entropy (CGCE). CGCE assigns each class a trainable representation vector and defines the loss via dot-product similarity between features and these vectors, simultaneously tightening intra-class clusters and widening inter-class gaps—yet without large batches, negative mining, or extra memory. At inference time, a sample is classified simply by its similarity to the learned class vectors, streamlining deployment on resource-constrained network nodes.

Because class information is explicit, CGCE can seamlessly absorb priors from powerful pretrained encoders: class vectors may be initialised with class-wise feature means or semantic embeddings derived from label text, enabling rapid convergence and resilience to class imbalance, few-shot regimes, and noisy labels—conditions frequently observed in operational networks.

Compared with vanilla CE, CGCE (i) establishes quantifiable geometric margins that sharpen decision boundaries, (ii) integrates pretrained priors for fast online model updates essential to self-optimising network functions, and (iii) keeps computation and memory on par with CE, preserving the low-latency footprint required by large-scale SON deployments. Experiments across vision, NLP, and code-analysis datasets demonstrate up to a 2% absolute accuracy gain over CE together with superior robustness, positioning CGCE as a practical loss for next-generation network-automation pipelines.

2. Related Work

Cross-entropy loss has been widely adopted in classification tasks due to its optimization stability and ease of implementation [1,2,3,4,5,6,7,40,41]. However, this loss function primarily models class information implicitly, lacking explicit constraints on intra-class compactness and inter-class separation, which may restrict a model’s discriminative capability in complex tasks [18,19,20,21,22,23,24]. To overcome these limitations, several studies have introduced improvements based on geometric margins, such as ArcFace and CosFace, that explicitly enlarge the inter-feature distance [16,18,42,43,44,45]. These methods typically require meticulous hyperparameter tuning and exhibit limited performance in the presence of label noise or class imbalance [27,28,29,30,31,32]. Contrastive learning has demonstrated significant advantages in enhancing feature discriminativeness, particularly under unsupervised settings, but it often relies on large training batches and effective sample mining strategies [25,26,33,34,36,37,38,39,46,47,48]. Although supervised contrastive learning mitigates some of these issues by leveraging label information to construct sample pairs, it still incurs high training costs [35]. In this work, we propose the CGCE method, which integrates the strengths of cross-entropy and contrastive learning. By introducing class representation vectors, our approach explicitly constructs geometric boundaries without additional computational overhead, thereby simultaneously enhancing model discrimination, training efficiency, and generalization ability.

3. Methods

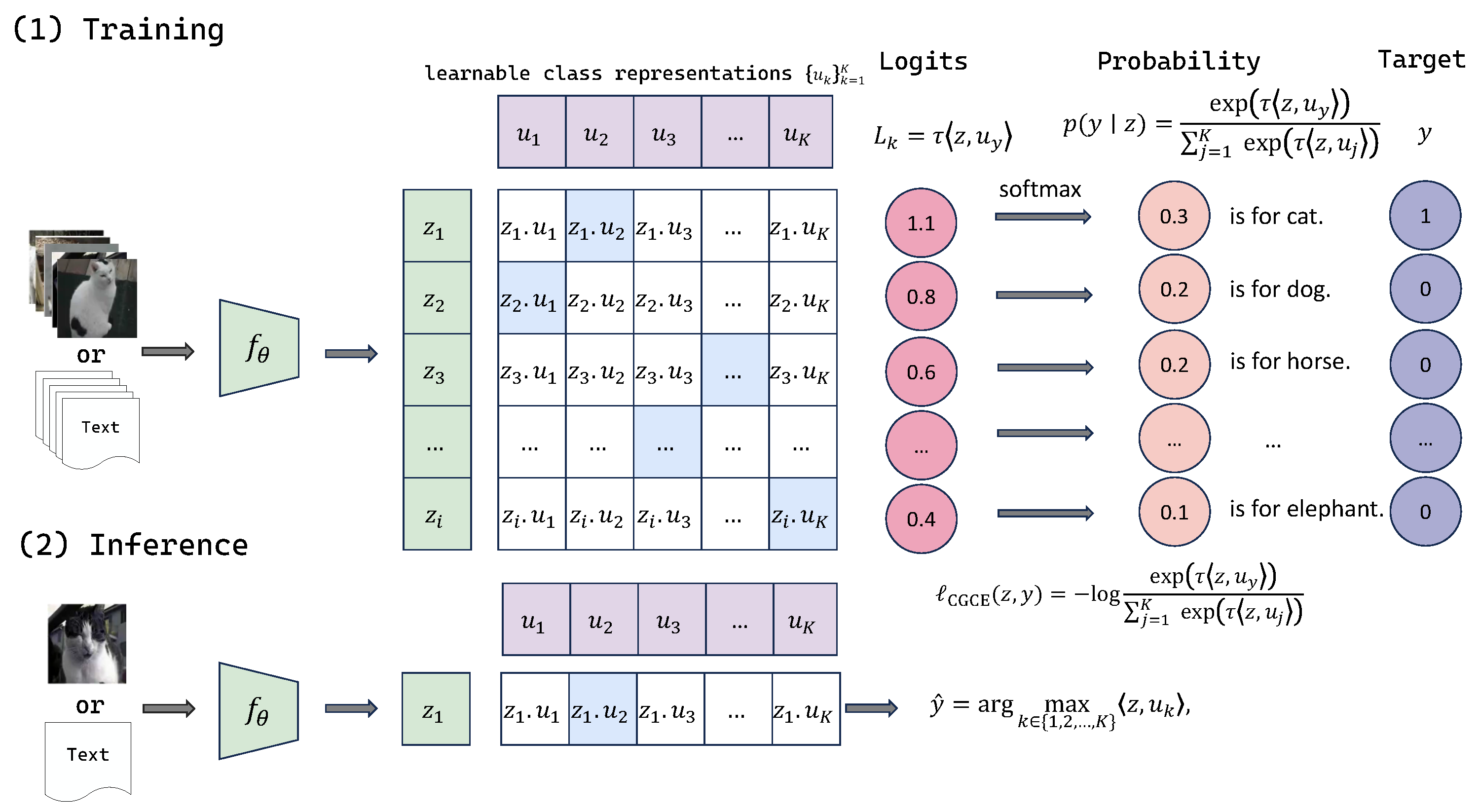

In this section, we introduce the proposed Contrastive Geometric Cross-Entropy (CGCE) framework, which enhances classification by enforcing explicit geometric constraints via learnable class representation vectors. We first define the CGCE loss and its optimization objective. Then, we provide a theoretical analysis showing that minimizing CGCE naturally induces clear geometric margins. Finally, we discuss its connections to traditional cross-entropy and supervised contrastive learning.

3.1. Problem Definition

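Consider a multi-class classification task with a given training dataset $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}$, where each sample $x_i$ has a feature representation $z_i = f_\theta(x_i) \in \mathbb{R}^d$ obtained via a feature extractor $f_\theta$, and a corresponding class label $y_i \in \{1, \dots, K\}$. In contrast to traditional CE approaches, we explicitly introduce a set of learnable class representation vectors $\{c_k\}_{k=1}^{K}$, with $c_k \in \mathbb{R}^d$, to directly characterize each class.

Specifically, for a single sample $(x_i, y_i)$, the CGCE loss is defined as follows:

$$\mathcal{L}_{\mathrm{CGCE}}(x_i, y_i) = -\log \frac{\exp(z_i \cdot c_{y_i} / \tau)}{\sum_{k=1}^{K} \exp(z_i \cdot c_k / \tau)}$$

where $\tau$ is a temperature hyperparameter and $\cdot$ denotes the dot-product operation. For the entire training dataset, the average CGCE loss is as follows:

$$\mathcal{L}_{\mathrm{CGCE}} = \frac{1}{N} \sum_{i=1}^{N} \mathcal{L}_{\mathrm{CGCE}}(x_i, y_i)$$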

This definition explicitly embodies the core idea of contrastive learning: during training, the model optimizes both the feature extractor and the class representation vectors, pulling sample features closer to their corresponding class representations and pushing them away from the representations of other classes, thereby explicitly forming a measurable geometric margin in the feature space.
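The loss described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function name `cgce_loss`, the default temperature of 0.1, and the toy orthonormal class vectors are assumptions made for the example.

```python
import numpy as np

def cgce_loss(features, class_vectors, labels, tau=0.1):
    """Mean CGCE loss for a batch.

    features:      (N, d) array of sample embeddings
    class_vectors: (K, d) array of class representation vectors
    labels:        (N,) integer array of class indices
    tau:           temperature (0.1 is a placeholder, not a value from the paper)
    """
    logits = features @ class_vectors.T / tau              # (N, K) scaled dot products
    logits = logits - logits.max(axis=1, keepdims=True)    # stabilise the softmax
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()

# Toy setup: three orthonormal class vectors in a 4-dimensional feature space.
class_vectors = np.eye(3, 4)
labels = np.array([0, 1, 2, 1])
aligned = class_vectors[labels]                # each feature equals its own class vector
misaligned = class_vectors[(labels + 1) % 3]   # each feature equals a wrong class vector

loss_aligned = cgce_loss(aligned, class_vectors, labels)
loss_misaligned = cgce_loss(misaligned, class_vectors, labels)
print(loss_aligned, loss_misaligned)
```

Features that coincide with their own class vector yield a loss close to zero, while features aligned with a wrong class vector yield a large loss, which is the pull and push behaviour the definition is meant to produce.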

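At inference time, classification decisions become straightforward: for a new sample $x$, we first compute its feature representation $z = f_\theta(x)$ with the feature extractor $f_\theta$, and then select the class whose representation vector has the highest similarity (dot product) to $z$:

$$\hat{y} = \arg\max_{k \in \{1, \dots, K\}} z \cdot c_k$$

where $c_k$ denotes the representation vector of class $k$ and $K$ is the number of classes.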

Figure 1 provides an overview of the proposed CGCE framework.
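The inference rule can be illustrated with a short NumPy sketch. The function name `cgce_predict` and the toy vectors are hypothetical; since the temperature is positive, it does not change the arg max and is omitted here.

```python
import numpy as np

def cgce_predict(features, class_vectors):
    """Return, for each feature row, the index of the most similar class vector."""
    return np.argmax(features @ class_vectors.T, axis=1)

# Toy example: three class vectors in a 2-dimensional feature space.
class_vectors = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
features = np.array([[0.9, 0.1], [0.2, 0.7], [-0.8, 0.3]])
predictions = cgce_predict(features, class_vectors)
print(predictions)  # [0 1 2]
```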

3.2. Theoretical Analysis

To demonstrate theoretically that minimizing CGCE loss naturally leads to clear geometric class boundaries, we analyze a representative case for a single training sample.

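First, we introduce the following notations: for a training sample $(x, y)$ with feature $z$, let $s_k = z \cdot c_k$ denote the similarity between $z$ and the class representation vector $c_k$.

The geometric margin for a sample $(x, y)$ is then defined as follows:

$$m = s_y - \max_{k \neq y} s_k$$

Clearly, the sample is classified correctly if and only if $m > 0$.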

We now rigorously derive a lower bound on this geometric margin. Consider the case when the CGCE loss satisfies the following:

Under this condition, we derive the following chain of inequalities:

Thus, we obtain a rigorous lower bound for the geometric margin:

This theoretical result shows that, once the CGCE loss is sufficiently small, the model naturally forms a clear, measurable decision boundary in the feature space. This property can be extended to the entire dataset: if the average CGCE loss across all training samples is low, then the model’s overall class separability is guaranteed.
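One standard way to obtain a bound of this kind is sketched below. The notation is assumed for illustration and may differ from the paper's: $s_k = z \cdot c_k$ is the similarity to class $k$, $m = s_y - \max_{k \neq y} s_k$ is the margin of a sample with label $y$, $\tau$ is the temperature, and $\varepsilon$ is a hypothetical upper bound on the per-sample loss.

```latex
% Assumed notation (not taken from the source):
%   s_k = z \cdot c_k,   m = s_y - \max_{k \neq y} s_k,   \mathcal{L} = per-sample CGCE loss
\begin{align*}
\mathcal{L}
  &= -\log \frac{e^{s_y/\tau}}{\sum_{k=1}^{K} e^{s_k/\tau}}
   = \log\Bigl(1 + \sum_{k \neq y} e^{(s_k - s_y)/\tau}\Bigr) \\
  &\ge \log\bigl(1 + e^{-m/\tau}\bigr)
   && \text{(keep only the largest competing term)} \\
\mathcal{L} \le \varepsilon
  &\;\Longrightarrow\; e^{-m/\tau} \le e^{\varepsilon} - 1
   \;\Longrightarrow\; m \ge -\tau \log\bigl(e^{\varepsilon} - 1\bigr).
\end{align*}
```

Under these assumptions the bound is positive exactly when $\varepsilon < \log 2$, so a per-sample loss below $\log 2$ already implies correct classification; for instance, $\tau = 0.1$ and $\varepsilon = 0.1$ give $m \ge 0.225$ (rounded).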

Traditional CE methods lack this explicit geometric margin guarantee because they implicitly encode class information only within the linear classification layer parameters (weights and biases), without explicitly defining or optimizing class representation vectors. Therefore, minimizing the traditional CE loss does not directly imply an explicit measurable geometric margin, but rather merely improves class probabilities indirectly. In contrast, CGCE’s explicit introduction and optimization of trainable class vectors enable this rigorous theoretical guarantee, enhancing the discriminative power of the learned feature space.

4. The Relationship and Differences Between CGCE, Cross-Entropy, and Supervised Contrastive Loss

We propose the Contrastive Geometric Cross-Entropy (CGCE) framework, which introduces learnable class representation vectors and utilizes the similarity between sample features and class vectors as the scoring metric. This approach naturally fuses classification with contrastive learning. In essence, CGCE is closely related to the traditional Cross-Entropy (CE) loss and can be viewed as a special case of Supervised Contrastive Loss (SCL) at the class level.

Traditional CE loss is computed based on the logits produced by a linear classifier. It is typically defined as follows:

$$\mathcal{L}_{\mathrm{CE}} = -\log \frac{\exp(s_{y})}{\sum_{k=1}^{K} \exp(s_k)}$$

where the logits $s_k$ are usually obtained by multiplying the weights of a linear layer with the input features, $y$ is the true class, and $K$ is the number of classes.

In contrast, CGCE explicitly defines the logits as the dot product similarity between the sample features and the class representation vectors, scaled by a temperature factor $\tau$, and then normalized via the softmax function. This modification preserves the probabilistic interpretation of CE while effectively extracting the class information from the model weights into learnable class vectors, thereby constructing explicit geometric decision boundaries in the feature space.
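The link to CE can be checked numerically. The sketch below, with arbitrary sizes and a placeholder temperature of 0.5, compares only the forward computation: CGCE probabilities coincide with those of a linear layer whose weights are the class vectors divided by the temperature and whose bias is zero. It says nothing about initialization or training dynamics, which is where the text locates the practical differences.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax with the usual max-shift for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
N, d, K, tau = 5, 8, 3, 0.5       # illustrative sizes and temperature (assumptions)
z = rng.normal(size=(N, d))       # sample features
C = rng.normal(size=(K, d))       # class representation vectors

# CGCE probabilities: temperature-scaled dot products with the class vectors.
p_cgce = softmax(z @ C.T / tau)

# Linear-classifier probabilities with weights W = C / tau and zero bias.
W, b = C / tau, np.zeros(K)
p_linear = softmax(z @ W.T + b)

print(np.allclose(p_cgce, p_linear))
```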

While SCL emphasizes constructing positive and negative sample pairs at the individual sample level—maximizing similarity among samples of the same class and minimizing similarity among samples of different classes—this method typically incurs high computational complexity due to the maintenance of numerous pairs within each batch. CGCE simplifies this contrastive mechanism to the class level: each sample is matched only with its corresponding class vector as a positive example, with all other class vectors treated as negatives. In this configuration, if the class vectors are considered as the “positive samples” in the SCL framework, the CGCE loss is equivalent to SCL under the setting of “one anchor, one positive, and the rest negatives”. This reduction not only diminishes the dependency on batch size but also enhances scalability and efficiency.

In summary, CGCE integrates the stability of CE with the discriminative advantages of contrastive learning. It inherits the classification capability of CE while incorporating the clear separation in feature space offered by SCL, all while avoiding the computational overhead associated with constructing extensive positive-negative pairs. This design, characterized by its geometric interpretability and contrastive enhancement, enables CGCE to achieve superior generalization and training efficiency.

5. Experiments

5.1. Experimental Settings

In this section, we provide a detailed description of the overall experimental plan and implementation details. To evaluate the effectiveness of CGCE relative to the traditional CE method, we conducted both image classification and text classification experiments using the CE and CGCE loss functions under identical experimental conditions. The traditional CE method is implemented using a classic linear classification layer; that is, a fully connected layer is appended after the feature extractor to map the extracted features to class scores, followed by a softmax function to compute class probabilities and cross-entropy loss for optimization. In contrast, the CGCE method explicitly introduces a set of trainable class representation vectors, directly computes the dot product between the sample embeddings and the class representation vectors, scales the dot product with a temperature parameter $\tau$, and then computes the softmax probabilities to construct the contrastive geometric cross-entropy loss. The length of the class vectors is set to match the model’s output feature dimension: for text tasks, this is the hidden state of the first token from a RoBERTa model (typically 768 dimensions), and, for image tasks, it is determined by the model (2048 dimensions for ResNet-50 or the hidden_size, e.g., 768, for Vision Transformer).

To ensure a fair and rigorous comparison between the two methods, we strictly controlled the experimental conditions. These include the selection of datasets, the choice of feature extractor models, data preprocessing methods (e.g., random cropping, random horizontal flipping, color jittering, and normalization for image tasks; tokenization and encoding for text tasks), batch size, number of training epochs, learning rate, mixed precision training, early stopping strategy, and learning rate scheduling (e.g., cosine annealing). All experiments were conducted on a uniform hardware platform using an NVIDIA RTX 4090D GPU. Moreover, each experiment was repeated with five different random seeds to ensure the stability and reliability of the results, and the average accuracy along with the corresponding confidence intervals was reported.
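The text does not state how the confidence intervals were computed. One common choice for five runs is a two-sided 95% Student-t interval, sketched below with the standard library; the function name `mean_with_ci` and the accuracy values are made up for illustration.

```python
import math
import statistics

def mean_with_ci(values, t_critical=2.776):
    """Mean and half-width of a two-sided 95% t-interval.

    t_critical=2.776 is the Student-t quantile for 4 degrees of freedom,
    so the default only matches exactly five runs.
    """
    mean = statistics.mean(values)
    half_width = t_critical * statistics.stdev(values) / math.sqrt(len(values))
    return mean, half_width

# Made-up accuracies for five seeds, used only to exercise the function.
accuracies = [0.81, 0.82, 0.80, 0.83, 0.79]
mean, half_width = mean_with_ci(accuracies)
print(f"{mean:.3f} +/- {half_width:.3f}")
```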

Specifically, for image classification tasks, we employed the CIFAR-10 [49], CIFAR-100 [49], and ImageNet [50] datasets and used ResNet-50 [51] and Vision Transformer (ViT) [52] as the feature extractors; for text classification tasks, we utilized the SST-2 [53], SST-5 [53], IMDB [54], AG News [55], Devign [56], and Big-Vul [57] datasets, with feature extractors including BERT [58], RoBERTa [59], CodeBERT [60], and UniXcoder [61]. Through these carefully designed and strictly controlled experimental conditions, we ensured that any performance differences between CGCE and the traditional CE method can be solely attributed to the design of the loss function, rather than to other external factors.

5.2. Evaluation Under Standard Conditions

In the standard setting, we primarily investigate the performance of CGCE in scenarios where data availability is ample, class distributions are balanced, and label quality is high. As shown in Table 1, we first compare the proposed CGCE with traditional CE on the CIFAR-10, CIFAR-100, and ImageNet datasets using ResNet-50 and Vision Transformer (ViT) as feature extractors. The results indicate that CGCE consistently achieves higher overall accuracy than CE. This suggests that CGCE effectively pulls sample features closer to their corresponding class representations, while simultaneously pushing them away from other classes in the feature space, thereby forming a clearer geometric boundary and enhancing both intra-class compactness and inter-class separability.

Moreover, we observe that, for relatively simple tasks (e.g., CIFAR-10 and the Top-5 accuracy metric), the improvements offered by CGCE are modest, likely because CE already performs exceptionally well under these conditions. In contrast, as dataset complexity increases (e.g., CIFAR-100 and ImageNet), the advantages of CGCE become more pronounced. A possible explanation lies in CGCE’s built-in contrastive learning mechanism: during training, positive samples are explicitly “pulled” toward the target class vector, while negative samples are “pushed” away from other class vectors. When the task grows more complex—thus increasing the number of classes and negative samples—CGCE can better exploit the abundant negative samples, refining the feature representation and constructing a more distinct geometric boundary that facilitates more accurate discrimination across classes.

The experimental results on text classification tasks, reported in Table 2, further corroborate the superior performance of CGCE compared to CE. We conjecture that CGCE capitalizes more effectively on the rich semantic information in pretrained language models: by introducing trainable class representation vectors, which can be semantically initialized via natural language label descriptions or class-average embeddings, CGCE is able to capture finer-grained semantic distinctions. During training, CGCE’s contrastive mechanism explicitly pulls each sample’s features closer to the appropriate semantic class vector, while pushing them away from irrelevant classes. This semantic contrast allows the model to more fully exploit the intrinsic semantic features of the pretrained model, thereby achieving higher accuracy and robustness in text classification scenarios.

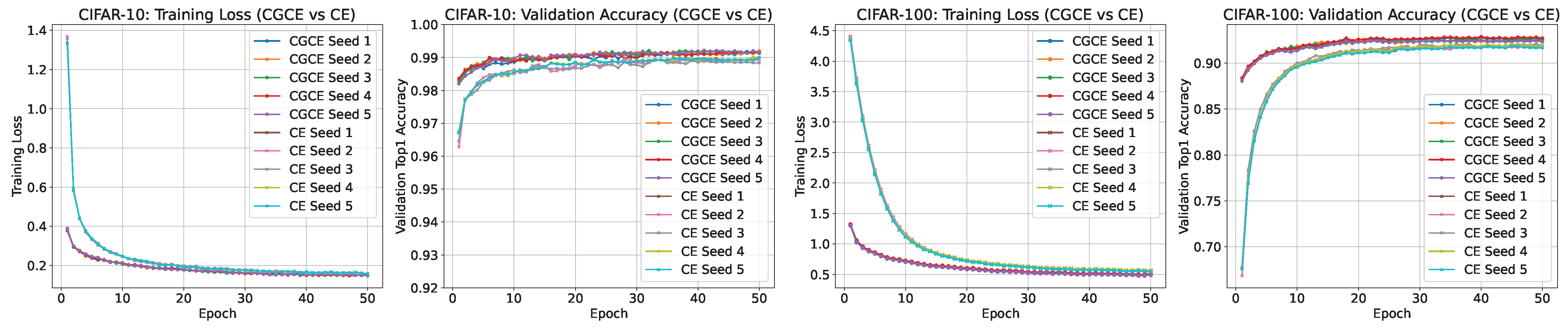

Rapid Convergence Property: One of the key practical advantages of the CGCE method is its rapid convergence capability. As shown in Figure 2, the training loss curves on the CIFAR-100 and CIFAR-10 datasets clearly indicate that, compared with the traditional cross-entropy method, the loss for CGCE decreases more rapidly in the initial training epochs. Specifically, for the same number of epochs, the CGCE method not only achieves a significantly lower training loss but also attains higher validation accuracy more quickly, approaching optimal performance within fewer epochs.

This rapid convergence property can be attributed to two main factors. First, the explicit geometric constraint mechanism in CGCE, through learnable class representation vectors, pulls the sample features closer to their corresponding class vectors while pushing them away from others, rapidly establishing clear and stable decision boundaries early in training. Second, the use of class-average features from a pretrained model as the initial values for the class representation vectors ensures that these vectors are closer to the true data distribution, further accelerating early convergence.

5.3. Performance Evaluation Under Complex Conditions

To evaluate the applicability of CGCE under more complex conditions, we designed three sets of experiments to assess its performance in scenarios involving data scarcity, class imbalance, and label noise.

Data Scarcity: In the CIFAR-10, CIFAR-100, ImageNet, and SST-2 datasets, we progressively reduced the number of samples per class (e.g., from 200 samples down to 1 sample) to evaluate the generalization capability of CGCE under limited data conditions. The results, as shown in Table 3, indicate that, even under extreme data scarcity, CGCE maintains high accuracy and effectively mitigates the risk of overfitting.
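A per-class subsampling step of this kind could look as follows. This is a sketch under assumptions: the helper name `sample_per_class`, the uniform random selection, and the fixed seed are illustrative choices, not details from the paper.

```python
import numpy as np

def sample_per_class(labels, shots, seed=0):
    """Return sorted indices that keep at most `shots` randomly chosen samples per class."""
    rng = np.random.default_rng(seed)
    keep = []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        chosen = rng.choice(idx, size=min(shots, len(idx)), replace=False)
        keep.extend(chosen.tolist())
    return np.sort(np.array(keep))

labels = np.repeat(np.arange(3), 10)     # toy labels: 3 classes, 10 samples each
subset = sample_per_class(labels, shots=2)
print(np.bincount(labels[subset]))       # [2 2 2]
```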

Class Imbalance: In this experiment, we divided all classes into two groups by designating half of the classes as majority classes (with a larger number of samples) and the other half as minority classes (with fewer samples). We then manually adjusted the sample ratio between the majority and minority classes to simulate extreme imbalance. As presented in Table 4, the results demonstrate that CGCE is better able to preserve the features of minority classes, thereby improving overall classification performance and significantly alleviating the performance degradation caused by class bias.
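The majority and minority split can be simulated along the following lines. The helper `make_imbalanced`, the choice of the second half of the class indices as minority classes, and the integer `ratio` parameter are assumptions for illustration; the paper does not specify how the split was drawn.

```python
import numpy as np

def make_imbalanced(labels, ratio, seed=0):
    """Keep every sample of the first half of the classes (majority) and
    roughly a 1/ratio fraction of each remaining (minority) class."""
    rng = np.random.default_rng(seed)
    classes = np.unique(labels)
    minority = set(classes[len(classes) // 2:].tolist())
    keep = []
    for cls in classes:
        idx = np.flatnonzero(labels == cls)
        if int(cls) in minority:
            n_keep = max(1, len(idx) // ratio)   # always keep at least one sample
            idx = rng.choice(idx, size=n_keep, replace=False)
        keep.extend(idx.tolist())
    return np.sort(np.array(keep))

labels = np.repeat(np.arange(4), 100)    # toy labels: 4 classes, 100 samples each
subset = make_imbalanced(labels, ratio=10)
print(np.bincount(labels[subset]))       # [100 100  10  10]
```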

Label Noise: In the SST-2, CIFAR-100, and ImageNet datasets, we introduced erroneous labels at rates of 20%, 30%, and 50%, respectively, to assess the robustness of CGCE against label noise. As shown in Table 5, although the presence of noise reduces overall accuracy, the performance drop in CGCE is noticeably smaller than that observed with traditional CE, confirming its stronger noise resilience.
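The paper does not say which noise model was used. A frequent choice is symmetric noise, where a fixed fraction of labels is replaced by a different class drawn uniformly; the sketch below implements that assumption, and the helper name `add_symmetric_noise` is hypothetical.

```python
import numpy as np

def add_symmetric_noise(labels, noise_rate, num_classes, seed=0):
    """Replace a `noise_rate` fraction of labels with a different class chosen uniformly."""
    rng = np.random.default_rng(seed)
    noisy = labels.copy()
    n_flip = int(round(noise_rate * len(labels)))
    flip_idx = rng.choice(len(labels), size=n_flip, replace=False)
    # An offset in [1, num_classes - 1] taken modulo num_classes always changes the label.
    offsets = rng.integers(1, num_classes, size=n_flip)
    noisy[flip_idx] = (labels[flip_idx] + offsets) % num_classes
    return noisy

labels = np.repeat(np.arange(10), 100)   # toy labels: 10 classes, 100 samples each
noisy = add_symmetric_noise(labels, noise_rate=0.2, num_classes=10)
print((noisy != labels).mean())
```

Because every selected label is moved to a different class, the realised noise rate equals the requested one exactly, up to rounding of the number of flipped samples.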

In summary, these experiments under complex scenarios thoroughly validate the stability and superiority of CGCE when addressing challenges such as data scarcity, class imbalance, and label noise, thereby demonstrating its broad applicability in practical applications.

6. Discussion

Although CGCE demonstrates excellent classification performance and generalization ability, its practical deployment is still influenced by several factors. To comprehensively evaluate its feasibility and applicability, this paper conducts an in-depth analysis of CGCE from three perspectives: computational complexity and resource overhead, the impact of temperature coefficient adjustment, and the initialization strategies of class representation vectors. This analysis aims to reveal the design trade-offs of CGCE and provide practical insights for future applications.

6.1. Computational Complexity and Memory Footprint Analysis

To evaluate the computational efficiency of the proposed CGCE method in practical applications, we performed a comprehensive analysis of its computational complexity and memory consumption, comparing it with the traditional cross-entropy method combined with a linear classifier.

In our theoretical analysis, assuming a batch size $N$, feature dimensionality $d$, and number of classes $K$, the traditional cross-entropy approach processes each sample feature $z \in \mathbb{R}^d$ with a linear classifier by computing the class scores as $s_k = w_k^\top z + b_k$ for $k = 1, \dots, K$, where $w_k \in \mathbb{R}^d$ and $b_k \in \mathbb{R}$ are used. Since each $s_k$ involves $d$ multiply-add operations, the per-sample complexity is $O(Kd)$ and the batch complexity is $O(NKd)$. The storage requirement, given by $Kd + K$ parameters, is approximately $Kd$ for large $d$.

In contrast, the CGCE method introduces trainable class vectors $c_k \in \mathbb{R}^d$ (for $k = 1, \dots, K$) and computes the similarity $z \cdot c_k / \tau$ (with temperature $\tau$) during the forward pass. This computation also takes $d$ multiply-add operations per class, resulting in per-sample and batch complexities of $O(Kd)$ and $O(NKd)$, respectively, with a memory footprint of $Kd$ parameters, i.e., $O(Kd)$.

Thus, both methods exhibit a forward computational complexity of $O(NKd)$ and similar memory requirements of $O(Kd)$ without additional overhead. We further validated these conclusions experimentally; Table 6 shows the per-epoch runtime (in seconds) and memory usage (in MB) for both methods under identical conditions. The experimental results confirm that both methods have nearly identical per-epoch runtime and memory consumption, consistent with our theoretical analysis.
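The counting argument can be made concrete with a small worked example. The numbers below assume 2048-dimensional ResNet-50 features, the 1000-class ImageNet-1k split, and a batch size of 256; the batch size and the function names are assumptions, and the counts cover only the classification head, not the backbone.

```python
def linear_head_cost(batch_size, dim, num_classes):
    """Multiply-adds per batch and parameter count for a linear layer with bias."""
    return batch_size * num_classes * dim, num_classes * dim + num_classes

def cgce_head_cost(batch_size, dim, num_classes):
    """Multiply-adds per batch and parameter count for K class vectors (no bias)."""
    return batch_size * num_classes * dim, num_classes * dim

ce_macs, ce_params = linear_head_cost(256, 2048, 1000)
cgce_macs, cgce_params = cgce_head_cost(256, 2048, 1000)
print(ce_macs, ce_params)       # 524288000 2049000
print(cgce_macs, cgce_params)   # 524288000 2048000
```

Under these assumptions the two heads perform the same number of multiply-adds, and the parameter counts differ only by the 1000 bias terms of the linear layer.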

6.2. Impact of the Temperature Coefficient on Classification Accuracy

In the CGCE framework, the temperature coefficient $\tau$ modulates the dot product between the feature embedding $z$ and the class representation vector $c_k$, thereby affecting both the contrastive loss and the softmax distribution. When $\tau$ is small, the model accentuates differences between classes, resulting in a “peaked” output distribution that clearly separates positive and negative examples in the embedding space, which is beneficial for simpler tasks (e.g., CIFAR-10); however, excessive separation may lead to training instability or overfitting. Conversely, an excessively large $\tau$ yields overly smooth class distinctions, weakening decision boundaries and degrading classification performance.
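The effect of the temperature on the output distribution is easy to see numerically. In the sketch below, the similarity values and the three temperatures are arbitrary illustrations, not settings from the experiments.

```python
import numpy as np

def softmax_with_temperature(similarities, tau):
    """Softmax over similarity scores divided by the temperature tau."""
    scaled = np.asarray(similarities, dtype=float) / tau
    scaled = scaled - scaled.max()       # shift for numerical stability
    e = np.exp(scaled)
    return e / e.sum()

similarities = [2.0, 1.0, 0.5]           # toy dot products with three class vectors
probs = {tau: softmax_with_temperature(similarities, tau) for tau in (0.1, 1.0, 10.0)}
for tau, p in probs.items():
    print(tau, np.round(p, 3))
```

With the smallest temperature almost all probability mass falls on the most similar class, while the largest temperature yields a nearly uniform distribution.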

As shown in Table 7, our experiments on CIFAR-10, CIFAR-100, ImageNet, and Devign reveal a single-peaked trend in accuracy as $\tau$ increases. For datasets with many classes and higher complexity (e.g., CIFAR-100 and ImageNet), a moderate $\tau$ helps capture subtle inter-class differences without collapsing similar classes, while for simpler tasks a smaller $\tau$ accelerates convergence. Moreover, different network architectures exhibit varying sensitivity to $\tau$; for instance, ResNet performs well with a slightly lower $\tau$ due to its strong spatial priors, whereas ViT benefits from a moderate $\tau$ that balances contrastive separation and generalization.

Overall, we recommend using as an initial value, with further tuning based on dataset complexity and network architecture to achieve the optimal balance between training stability and generalization.

6.3. Impact of Class Representation Initialization

This section aims to investigate the effect of different initialization methods for the class representation vectors in the CGCE framework on model convergence speed and overall performance. According to the design of CGCE, the class representation vectors can be initialized in three ways: (1) in the absence of prior information, the vectors can be initialized using a random normal distribution to ensure generality and robustness; (2) when a pretrained model is available, the average feature vectors of samples from each class, as extracted by the pretrained model, can be used as the initial values to better align with the true feature distributions; and (3) when abundant semantic information is present, the natural language description of the labels can be fed into the pretrained feature extractor to obtain corresponding semantic embeddings as the initial class representations.
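The second strategy, class-average initialization, can be sketched as follows. The helper name `class_mean_init` and the zero-vector fallback for empty classes are assumptions; in practice the `features` array would hold embeddings extracted by the pretrained model.

```python
import numpy as np

def class_mean_init(features, labels, num_classes):
    """Initial class vectors as per-class means of pretrained features."""
    dim = features.shape[1]
    vectors = np.zeros((num_classes, dim))
    for k in range(num_classes):
        members = features[labels == k]
        if len(members) > 0:             # classes without samples keep a zero vector
            vectors[k] = members.mean(axis=0)
    return vectors

# Toy features standing in for pretrained embeddings.
features = np.array([[1.0, 0.0], [3.0, 0.0], [0.0, 2.0], [0.0, 4.0]])
labels = np.array([0, 0, 1, 1])
init_vectors = class_mean_init(features, labels, num_classes=2)
print(init_vectors)
```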

We conducted experiments on text classification tasks (using the SST-2 dataset with the RoBERTa-base model) and image classification tasks (using the CIFAR-100 and ImageNet datasets with the ViT model) to compare the effects of these three initialization methods on model performance.

As shown in Table 8, in image classification, using category average vectors for initialization modestly boosts final accuracy by better capturing true feature distributions and offering a more informative start. Although the performance gap with random initialization narrows over time, the category average method still provides a significant advantage when training time or resources are limited.

For text classification, initializing class representations with label text descriptions generally achieves the highest accuracy by directly embedding rich semantic information. However, the effectiveness of these descriptions can vary, and determining the optimal phrasing remains a challenging issue.

Overall, the choice of initialization strategy critically impacts both the training dynamics and final performance of the CGCE framework. Under limited training conditions, category average or label text description initialization can significantly improve accuracy, while with ample training, even random initialization can eventually yield comparable results. These findings underscore the robustness and flexibility of CGCE across different initialization methods, offering practical options for diverse applications.

7. Conclusions

This work proposes a novel classification framework—Contrastive Geometric Cross-Entropy (CGCE)—which explicitly enhances both intra-class compactness and inter-class separability in the feature space by introducing learnable class representation vectors, all without incurring additional computational or memory overhead. Theoretically, CGCE naturally forms clear and measurable decision boundaries as the loss converges, and empirical results indicate that it achieves excellent discriminative performance and rapid convergence under standard conditions, while also exhibiting strong robustness in challenging scenarios such as data scarcity, class imbalance, and label noise.

Nonetheless, CGCE does have certain limitations in practice. In large-scale image tasks, the computation of class averages introduces extra overhead, and in text tasks, reliance on semantic label descriptions may lead to additional computational cost or semantic deviations. Future work could explore integrating multimodal priors, adapting CGCE to incremental or online learning, and employing large-scale pretrained models to automatically generate label descriptions, thereby further enhancing its practicality and extensibility.

Overall, CGCE offers a simple and efficient alternative for multi-class classification by bridging geometric representation learning and probabilistic modeling. Its fast convergence, strong performance, and extensibility make it a promising direction for future research in both academic and practical domains.

Author Contributions

Conceptualization, Y.W.; methodology, Y.W.; software, Y.W.; supervision, L.X.; project administration, L.X.; writing—original draft preparation, Y.W.; writing—review and editing, X.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Fujian Province, grant number 2022J011238; the Xiamen Major Public Technology Service Platform, grant number 3502Z20231042; the Research on Key Technologies of Intelligent and Cloud Service Testing (2022 Central Government Guide Local Development Science and Technology Special Project), grant number 2022L3029; the Ship Scientific Research Project—Key Technology and Demonstration of Type 2030 Green and Intelligent Ship in Fujian Region, grant number CBG4N21-4-4; and the Fujian Province University–Industry Cooperation Project—Research and Application of Intelligent Marine Terminal Based on Multisource Data, grant number 2024H6020. The APC was funded by the authors.

Data Availability Statement

The code and scripts to reproduce the experiments are publicly available at https://github.com/wyffffff/CGCE (accessed on 22 September 2025). No new datasets were generated in this study; all datasets used are publicly available and cited in the manuscript.

Acknowledgments

The authors thank colleagues and collaborators for helpful discussions and support.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Zhang, C.; Bengio, S.; Hardt, M.; Recht, B.; Vinyals, O. Understanding Deep Learning Requires Rethinking Generalization. arXiv 2016, arXiv:1611.03530.

- Mao, A.; Mohri, M.; Zhong, Y. Cross-Entropy Loss Functions: Theoretical Analysis and Applications. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 23803–23828.

- Boudiaf, M.; Rony, J.; Ziko, I.M.; Granger, E.; Pedersoli, M.; Piantanida, P.; Ayed, I.B. A Unifying Mutual Information View of Metric Learning: Cross-Entropy vs. Pairwise Losses. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 548–564.

- Xie, Z.; Huang, Y.; Zhu, Y.; Jin, L.; Liu, Y.; Xie, L. Aggregation Cross-Entropy for Sequence Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6538–6547.

- Amos, B.; Yarats, D. The Differentiable Cross-Entropy Method. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 291–302.

- Zadeh, S.G.; Schmid, M. Bias in Cross-Entropy-Based Training of Deep Survival Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3126–3137.

- Huang, J.; Wu, B.; Duan, Q.; Dong, L.; Yu, S. A fast UAV trajectory planning framework in RIS-assisted communication systems with accelerated learning via multithreading and federating. IEEE Trans. Mob. Comput. 2025, 24, 6870–6885.

- Pan, D.; Wu, B.N.; Sun, Y.L.; Xu, Y.P. A fault-tolerant and energy-efficient design of a network switch based on a quantum-based nano-communication technique. Sustain. Comput. Inform. Syst. 2023, 37, 100827.

- Wu, B.; Huang, J.; Duan, Q. FedTD3: An Accelerated Learning Approach for UAV Trajectory Planning. In Proceedings of the International Conference on Wireless Artificial Intelligent Computing Systems and Applications, Tokyo, Japan, 24–26 June 2025; Springer: Singapore, 2025; pp. 13–24.

- Wu, B.; Huang, J.; Duan, Q. Real-time intelligent healthcare enabled by federated digital twins with AoI optimization. IEEE Netw. 2025.

- Wu, B.; Cai, Z.; Wu, W.; Yin, X. AoI-aware resource management for smart health via deep reinforcement learning. IEEE Access 2023, 11, 81180–81195.

- Wu, B.; Wu, W. Model-Free Cooperative Optimal Output Regulation for Linear Discrete-Time Multi-Agent Systems Using Reinforcement Learning. Math. Probl. Eng. 2023, 2023, 6350647.

- Wu, B.; Huang, J.; Duan, Q.; Dong, L.; Cai, Z. Enhancing Vehicular Platooning with Wireless Federated Learning: A Resource-Aware Control Framework. arXiv 2025, arXiv:2507.00856.

- Liu, W.; Wen, Y.; Yu, Z.; Li, M.; Raj, B.; Song, L. SphereFace: Deep Hypersphere Embedding for Face Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 212–220.

- Wang, H.; Wang, Y.; Zhou, Z.; Ji, X.; Gong, D.; Zhou, J.; Li, Z.; Liu, W. CosFace: Large Margin Cosine Loss for Deep Face Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5265–5274.

- Aljundi, R.; Patel, Y.; Sulc, M.; Chumerin, N.; Reino, D.O. Contrastive Classification and Representation Learning with Probabilistic Interpretation. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 6675–6683.

- Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4690–4699.

- Wang, F.; Xiang, X.; Cheng, J.; Yuille, A.L. NormFace: L2 Hypersphere Embedding for Face Verification. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1041–1049.

- Martins, A.; Astudillo, R. From Softmax to Sparsemax: A Sparse Model of Attention and Multi-label Classification. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1614–1623.

- Li, H.; Lu, W. Mixed Cross Entropy Loss for Neural Machine Translation. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 6425–6436.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Pang, T.; Xu, K.; Dong, Y.; Du, C.; Chen, N.; Zhu, J. Rethinking Softmax Cross-Entropy Loss for Adversarial Robustness. In Proceedings of the Eighth International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020. [Google Scholar]

- Feng, L.; Shu, S.; Lin, Z.; Lv, F.; Li, L.; An, B. Can Cross Entropy Loss be Robust to Label Noise? In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, Yokohama, Japan, 7–15 January 2021; pp. 2206–2212. [Google Scholar]

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 1597–1607. [Google Scholar]

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum Contrast for Unsupervised Visual Representation Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9729–9738. [Google Scholar]

- Ghosh, A.; Kumar, H.; Sastry, P.S. Robust Loss Functions under Label Noise for Deep Neural Networks. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 1919–1925. [Google Scholar]

- Liu, Y.; Guo, H. Peer Loss Functions: Learning from Noisy Labels without Knowing Noise Rates. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 6226–6236. [Google Scholar]

- Deng, J.; Guo, J.; Liu, T.; Gong, M.; Zafeiriou, S. Sub-center ArcFace: Boosting Face Recognition by Large-scale Noisy Web Faces. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 741–757. [Google Scholar]

- Zhu, D.; Ying, Y.; Yang, T. Label Distributionally Robust Losses for Multi-class Classification: Consistency, Robustness and Adaptivity. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 43289–43325. [Google Scholar]

- Wang, Y.; Ma, X.; Chen, Z.; Luo, Y.; Yi, J.; Bailey, J. Symmetric Cross Entropy for Robust Learning with Noisy Labels. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 322–330. [Google Scholar]

- Zhang, Z.; Sabuncu, M. Generalized Cross Entropy Loss for Training Deep Neural Networks with Noisy Labels. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 8792–8802. [Google Scholar]

- Zhai, X.; Oliver, A.; Kolesnikov, A.; Beyer, L. S4L: Self-supervised Semi-supervised Learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1476–1485. [Google Scholar]

- Li, J.; Zhou, P.; Xiong, C.; Hoi, S.C.H. Prototypical Contrastive Learning of Unsupervised Representations. In Proceedings of the International Conference on Learning Representations, Virtual, 3–7 May 2021. [Google Scholar]

- Khosla, P.; Teterwak, P.; Wang, C.; Sarna, A.; Tian, Y.; Isola, P.; Maschinot, A.; Liu, C.; Krishnan, D. Supervised Contrastive Learning. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; pp. 18661–18673. [Google Scholar]

- Tian, Y.; Sun, C.; Poole, B.; Krishnan, D.; Schmid, C.; Isola, P. What Makes for Good Views for Contrastive Learning? In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; pp. 6827–6839. [Google Scholar]

- Chuang, C.Y.; Robinson, J.; Lin, Y.C.; Torralba, A.; Jegelka, S. Debiased Contrastive Learning. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; pp. 8765–8775. [Google Scholar]

- Kalantidis, Y.; Sariyildiz, M.B.; Pion, N.; Weinzaepfel, P.; Larlus, D. Hard Negative Mixing for Contrastive Learning. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; pp. 21798–21809. [Google Scholar]

- Wang, H.; Guo, X.; Deng, Z.H.; Lu, Y. Rethinking Minimal Sufficient Representation in Contrastive Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16041–16050. [Google Scholar]

- De Boer, P.T.; Kroese, D.P.; Mannor, S.; Rubinstein, R.Y. A Tutorial on the Cross-Entropy Method. Ann. Oper. Res. 2005, 134, 19–67. [Google Scholar] [CrossRef]

- Mannor, S.; Peleg, D.; Rubinstein, R. The Cross Entropy Method for Classification. In Proceedings of the 22nd International Conference on Machine Learning, Bonn, Germany, 7–11 August 2005; pp. 561–568. [Google Scholar]

- Elsayed, G.; Krishnan, D.; Mobahi, H.; Regan, K.; Bengio, S. Large Margin Deep Networks for Classification. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 842–852. [Google Scholar]

- Zhou, X.; Liu, X.; Zhai, D.; Jiang, J.; Gao, X.; Ji, X. Learning Towards the Largest Margins. In Proceedings of the Tenth International Conference on Learning Representations, Virtual, 25–29 April 2022. [Google Scholar]

- Yu, B.; Tao, D. Deep Metric Learning with Tuplet Margin Loss. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6490–6499. [Google Scholar]

- Liu, B.; Cao, Y.; Lin, Y.; Li, Q.; Zhang, Z.; Long, M.; Hu, H. Negative Margin Matters: Understanding Margin in Few-Shot Classification. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 438–455. [Google Scholar]

- Le-Khac, P.H.; Healy, G.; Smeaton, A.F. Contrastive Representation Learning: A Framework and Review. IEEE Access 2020, 8, 193907–193934. [Google Scholar] [CrossRef]

- Zheng, M.; Wang, F.; You, S.; Qian, C.; Zhang, C.; Wang, X.; Xu, C. Weakly Supervised Contrastive Learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10042–10051. [Google Scholar]

- Ho, C.H.; Vasconcelos, N. Contrastive Learning with Adversarial Examples. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; pp. 17081–17093. [Google Scholar]

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; Technical Report; University of Toronto: Toronto, ON, Canada, 2009. [Google Scholar]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Virtual, 3–7 May 2021. [Google Scholar]

- Socher, R.; Perelygin, A.; Wu, J.; Chuang, J.; Manning, C.D.; Ng, A.Y.; Potts, C. Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; pp. 1631–1642. [Google Scholar]

- Maas, A.; Daly, R.E.; Pham, P.T.; Huang, D.; Ng, A.Y.; Potts, C. Learning Word Vectors for Sentiment Analysis. In Proceedings of the Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011; pp. 142–150. [Google Scholar]

- Zhang, X.; Zhao, J.; LeCun, Y. Character-Level Convolutional Networks for Text Classification. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 649–657. [Google Scholar]

- Zhou, Y.; Liu, S.; Siow, J.; Du, X.; Liu, Y. Devign: Effective Vulnerability Identification by Learning Comprehensive Program Semantics via Graph Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 10197–10207. [Google Scholar]

- Fan, J.; Li, Y.; Wang, S.; Nguyen, T.N. A C/C++ Code Vulnerability Dataset with Code Changes and CVE Summaries. In Proceedings of the International Conference on Mining Software Repositories, Seoul, Republic of Korea, 29–30 June 2020; pp. 508–512. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186. [Google Scholar]

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692. [Google Scholar]

- Feng, Z.; Guo, D.; Tang, D.; Duan, N.; Feng, X.; Gong, M.; Shou, L.; Qin, B.; Liu, T.; Jiang, D.; et al. CodeBERT: A Pre-Trained Model for Programming and Natural Languages. In Proceedings of the Findings of the Association for Computational Linguistics: Empirical Methods in Natural Language Processing, Online, 16–20 November 2020; pp. 1536–1547. [Google Scholar]

- Guo, D.; Lu, S.; Duan, N.; Wang, Y.; Zhou, M.; Yin, J. UniXcoder: Unified Cross-Modal Pre-training for Code Representation. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; pp. 7212–7225. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).