1. Introduction

In the modern world, people and processes increasingly depend on interconnected smart devices that offer a wide range of possible uses. In the Internet of Things (IoT), sensors, actuators, and applications can be connected to each other to implement concepts such as Industry 4.0 and Education 4.0 [1, 2]. Whenever these connections are direct and explicit, we refer to the connected devices and applications as ensembles. For instance, consider an interaction device connected to an application that in turn controls some of the output devices in the environment. However, it may also be useful to connect multiple ensembles of devices indirectly with each other (e.g., based on events). In this regard, service orchestration and choreography have already been identified as two relevant patterns for interlinking processes in terms of service coordination [3] (see also Section 3.3).

Imagine a smart learning environment with distributed learning stations that show digital learning applications. Each station has a user interface that presents graphical or auditory content and must be controlled in some way. Therefore, stations can be realized as ensembles that also include an ambient application (namely, an application that makes use of smart objects and runs within smart environments [4]). These digital learning applications can then be interconnected to provide a richer experience. For example, all applications wait until a prompted task is solved at each of the stations.

As rooms that can be used as learning spaces may offer different hardware, it is not always possible to determine in advance which device will display which application. Consequently, dynamic networking is necessary between individual devices and between ensembles. Manually connecting components within smart environments, such as diverse IoT devices, services, and interfaces, can certainly be challenging due to the technologies’ inherent complexity and variety. Additionally, the dynamic nature of these systems can obscure interactions between users and system components. More critically, this can hinder users’ overall understanding of the system and its functionality, ultimately leading to reduced usability of these interactive environments.

To address these issues, automatic composition has been explored, aiming to provide integrated and task-oriented services that align well with users’ needs and goals. However, conventional approaches to software composition are often unsuitable for smart environments due to their high level of dynamism and the fact that the involved devices are only identified shortly before runtime. One way of addressing this problem is the organic computing approach [5]. Additionally, to foster understanding of the environment, self-explainability has been proposed [6].

Yet, existing approaches and frameworks often fall short in providing thorough user guidance, fail to detail the necessary interactions, or lack support for orchestrating and choreographing ensembles within smart environments. While there is research that addresses each of these topics, none of the described approaches targets the combination of all three aspects (see Section 3).

Thus, this paper describes an approach to the independent coordination of loosely coupled, self-explaining ensembles of smart objects and ambient applications. To this end, we realized a software framework for creating distributed applications that users perceive as homogeneous and uniform. Our concept allows for the implementation of (a) service orchestration, which is typically known as a centralized approach to managing service coordination based on a single coordinator, and (b) choreography, which is generally understood as decentralized coordination in which each service independently follows a predefined protocol. It also allows for the generation of intelligible user instructions for smart environments that provide interaction details. In our approach, ensembles are typically self-organized, and their flow control is handled by the components themselves. The advantage is that independent management avoids a single point of failure or bottleneck: if any device fails, the other devices can maintain their connections and continue to coordinate with each other. However, the setup can also be specified using external rules and configuration, which also allows for centralized control.

Moreover, we describe a case study that illustrates the benefits of our approach. It covers an investigation of how users perceive a spatial system, namely an example ambient serious game. In the domain of smart spatial learning environments, there are already approaches that have been studied in terms of motivation or effectiveness. Examples include the mobile game LINA, which uses augmented reality to provide students with a game in their own classroom [7], and the approach of Stoldt et al., who equip a room with technical devices that allow students to test their knowledge playfully [8]. In these examples, however, the configurations are fixed and are not instantiated dynamically with the services available within an environment. This paper presents an approach that enables smart spatial learning games to be realized dynamically with the services available in the environment and to coordinate these services with each other.

Our main contributions presented here are as follows:

- A literature overview in the domains of service composition, service coordination, and self-explainability;
- A disambiguation of the terms service orchestration and service choreography;
- A novel concept for the orchestration and choreography of self-explaining ensembles within smart environments, extending the ambient reflection framework;
- A user study that includes a trial and a questionnaire to assess the implementation and the perception of an example learning application.

The research presented in this paper particularly addresses the following research questions:

1. How can self-explaining ensembles be coordinated decentrally using event-driven actions?
2. How can explanations of these event-driven actions be provided?
3. How do users perceive spatially orchestrated devices in the sense of a distributed application?

2. Exemplary Scenario

The following scenario depicts our vision of coordinated self-explaining ensembles consisting of smart objects and ambient applications.

Steven is in his fourth semester studying economics at the University of Lübeck. His course demands a great deal of time and requires him to acquire a lot of factual knowledge. Sometimes, he feels overwhelmed by all the reading material and looks for other ways to expand his knowledge. His university offers the opportunity to study in an ambient learning environment. It provides a setup of different screens and interaction devices that can be used for learning at digital stations or, as in our example, for ambient serious games (cf. [9]). The interaction devices are grouped into ensembles comprising input devices, output devices, and ambient apps. These ensembles are used as learning stations. Stations can depend on each other: a change in the content displayed at one station can be triggered by the states of the other stations.

Steven visits the learning environment and loads his course material from the learning management system into an escape room game. He enters the room and finds six different screens. One of the screens presents him with a puzzle. To solve it, he must know and apply parts of his learning material, which are presented to him in visual form on two of the other screens. The fourth screen, which is next to the exit, shows a PIN field. Steven is not sure how to proceed and taps on the help button next to the PIN field. The system tells him that he must first solve the puzzles at the other stations and that he has not yet solved any of them. Steven has already solved the first puzzle in his mind, but he does not know how to interact with the station. However, he finds a pictorial description of the interface at the bottom of the puzzle application screen and enters the solution using the depicted gestures. Next, a digit appears on the display. The help function now informs Steven that he has solved a puzzle but that there are still more stations to go. The fifth screen, which was previously black, now shows another puzzle for him to solve. After solving it, he receives a second digit. Steven follows the same procedure for the third station, which now also presents a puzzle. As soon as the third digit appears, the two displays showing the learning content turn black. Finally, Steven discovers the input buttons below the PIN field. After two attempts, he has completed the task and is satisfied with his learning success.

Note that interconnected ensembles are required to realize the described scenario. The ensembles for the individual stations must coordinate to proceed when a puzzle is solved at another station. Additionally, ensembles must provide information about this interconnection in order to inform Steven about the current state.

3. Related Work

Previous research has addressed service composition as well as explainability within smart environments, including service orchestration and choreography. However, integrating these domains presents distinct challenges. The following sections present related work in each of these areas and delimit our work from previous work, particularly regarding the integration of self-explainability and service coordination.

3.1. Self-Explainability

There is limited research in the literature on self-explainability within the domain of smart environments. Most existing research in this area focuses on reasoning about the context and causal relationships within the self-adaptation process that led to a system’s current state.

For example, self-explainability has been explored as a means to help users understand the control logic of systems in smart environments. A better understanding may allow them to respond more appropriately to system behavior. Towards this end, deriving causal models from experimental and observational data has been proposed, which can then be used to explain the behavior of adaptive systems [10].

A similar interpretation is that self-explainability is a system’s ability to answer questions about past decisions. For example, an architecture for recording temporal data has been described, which can subsequently be used to analyze and explain system behavior and adaptations [11, 12]. This history-awareness allows for assessing the impact of past events on decision-making processes and may serve as a basis for deciding on future actions.

Some approaches have a clearer focus on explaining the behavior of composed software modules and services in pervasive environments. In this regard, an architecture that generates user-oriented descriptions of such composed systems has been proposed [13]. These descriptions, based on component definitions and their interconnections, take the form of explanatory rules. While developers provide the component descriptions, the service composition determines the interconnections.

Other researchers emphasize the importance of incorporating self-explainability into intelligent environments, particularly in the context of intelligent assistance processes [6]. They argue that providing explanations or fostering inherently intuitive behavior can enhance user acceptance. This is especially critical for systems that perform actions which are difficult to anticipate and may defy user expectations.

Towards this end, a conceptual framework and a generic explanation pattern have been proposed that enable self-adaptive systems to become self-explaining [14]. The pattern has been instantiated on finite-state automata for formalization, which helps determine whether a given entity requires an explanation. These explanations may then guide further system actions.

Despite these efforts, current explanation approaches are often considered inadequate for most users because they offer flat, static, and algorithm-focused explanations [15]. Thus, the authors of that work emphasize the importance of user-centered, understandable explanations and present a framework for generating such explanations in smart environments. This framework uses two types of explanation constructs: algorithmic constructs, which describe the logic of system behavior, and contextual constructs, which provide related background information. The authors use cause-effect paths to trace the reasons for the current system state and generate user-specific explanations by selecting an appropriate level of detail.

Recently, the use of generative artificial intelligence has been proposed to provide explanations [16]. The author presents a framework for smart objects that possess self-knowledge. A formal model of smart object states underpins the synthesis of both actions and corresponding explanations. The method involves training small language models, suitable for edge devices, using a large language model as a teacher.

Most of the existing work focuses on explaining the behavior of software systems in smart environments, particularly emphasizing the actions that led to the current state. However, user-system interactions are generally treated as secondary. This is especially true for deliberate user inputs and the corresponding system responses. Consequently, interaction with dynamically formed ensembles of devices and applications is rarely addressed.

A notable exception is the ambient reflection framework, which supports self-explainability in adaptive systems within smart environments, including ambient applications [17]. Building on prior work [18], this approach emphasizes explaining component behavior from an interaction-centric perspective while decoupling inputs from outputs.

The self-explainability provided by the framework relies on self-descriptions of all involved components. These use a description language designed to be both human- and machine-readable. Importantly, applications are treated as black boxes: the descriptions focus on observable interactions (e.g., describing the menu controls of an application) rather than exposing the applications’ full internal logic.

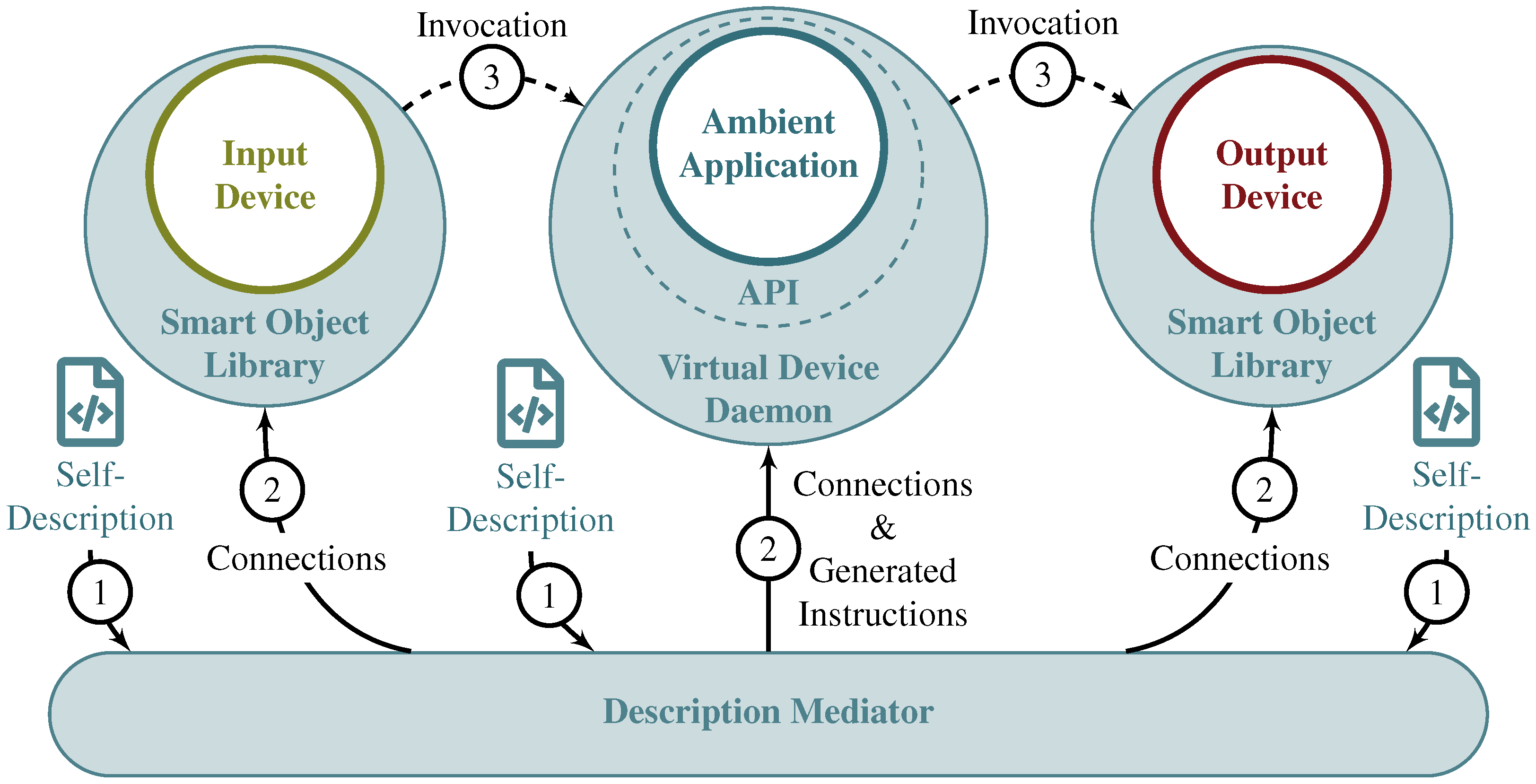

The framework comprises three core components: (1) The smart object library enables smart objects and ambient applications to explain themselves and integrates them into the framework. (2) The virtual device daemon (VDD) and the virtual device application programming interface (API) can be used to integrate components that cannot use the smart object library directly, such as web applications running in a browser; they communicate via a web socket connection. (3) The description mediator connects smart objects and ambient applications based on their functionality. It uses a probabilistic brute-force algorithm as a best-effort solution to the ensembling problem. The mediator also generates user instructions for the connected ensembles and notifies all components of both the connections and the composed descriptions. See Figure 1 for an overview of the framework and its components.
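The mediator's probabilistic brute-force algorithm is not detailed here. Purely as an illustration of the general idea, the following Python sketch samples random subsets of components and keeps the subset that covers the most required functions with the fewest members. The function name `random_ensemble`, the representation of components as sets of offered functions, and the scoring rule are hypothetical assumptions, not the framework's actual algorithm.

```python
import random
from typing import Dict, Set, Tuple


def random_ensemble(required: Set[str], components: Dict[str, Set[str]],
                    iterations: int = 1000, seed: int = 0) -> Tuple[Set[str], bool]:
    """Best-effort ensembling by random sampling (hypothetical sketch).

    Returns the best subset found and whether it covers all required functions.
    """
    rng = random.Random(seed)
    names = sorted(components)
    best: Set[str] = set()
    best_key = (-1, 0)  # (number of covered functions, -ensemble size)
    for _ in range(iterations):
        subset = set(rng.sample(names, rng.randint(1, len(names))))
        offered = set().union(*(components[n] for n in subset))
        key = (len(offered & required), -len(subset))
        if key > best_key:  # prefer more coverage, then fewer members
            best, best_key = subset, key
    return best, best_key[0] == len(required)


components = {
    "tv": {"display"},
    "tablet": {"display", "touch"},
    "speaker": {"audio"},
    "remote": {"select"},
}
ensemble, complete = random_ensemble({"display", "select"}, components)
print(sorted(ensemble), complete)
```

Because the search is randomized, it gives no optimality guarantee, which is in line with a best-effort solution.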

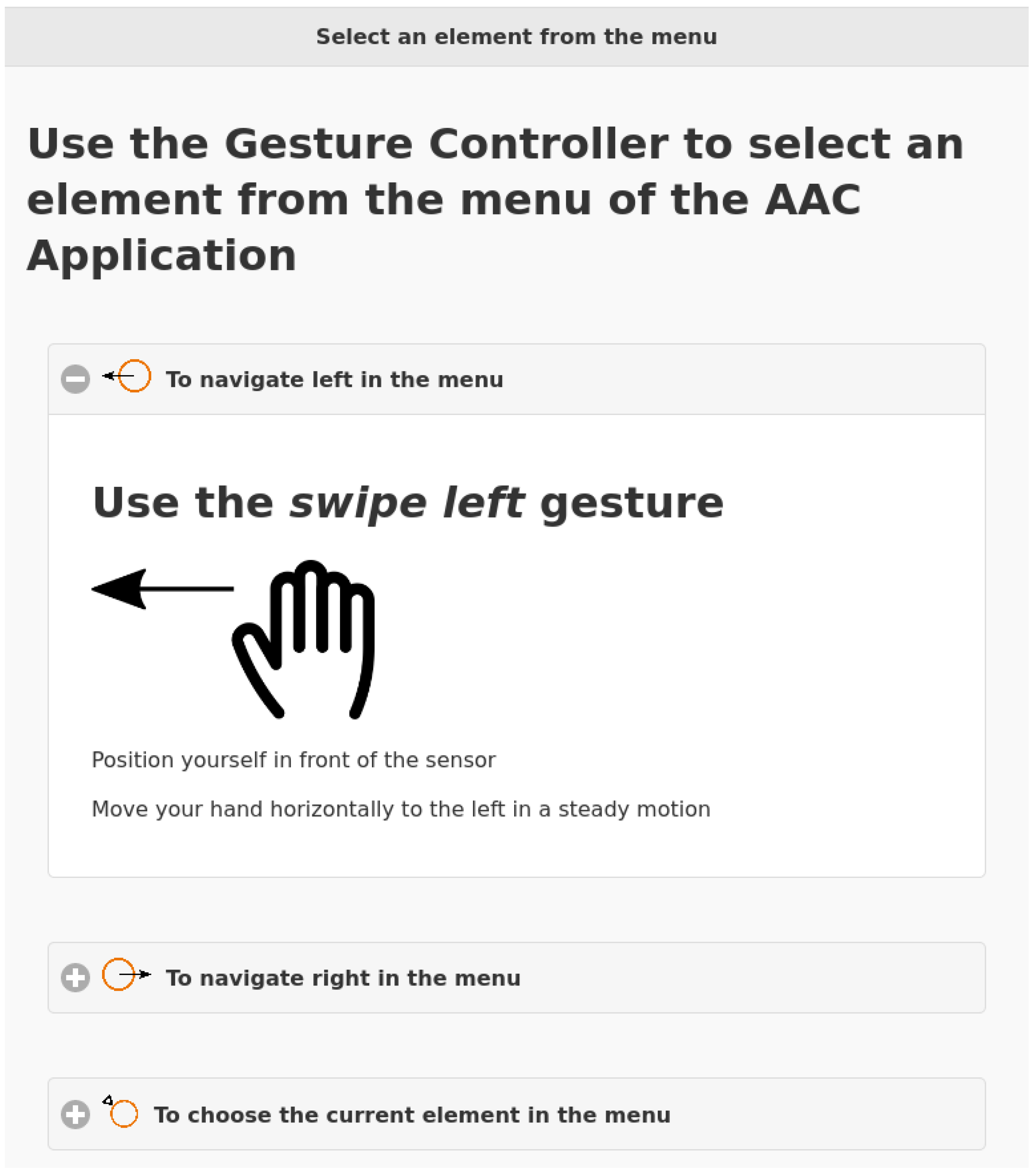

The framework can dynamically generate instructions in several display formats. Figure 2 shows a screenshot of an excerpt from the usage instructions displayed in a web browser. These instructions were produced by a rendering engine that generates Hypertext Markup Language (HTML) documents.

Notably, the framework does not address event-based message exchange between multiple ensembles operating within a smart environment. However, the described research may lay the groundwork for coordinating self-explaining, interconnected ensembles.

3.2. Service Composition

The objective of software composition is to promote the reuse of modular components, thereby reducing design complexity and facilitating the development of software systems. However, in the context of smart environments, the set of available components and devices is often only partially known prior to their actual utilization. This uncertainty renders reliable predictions about accessible hardware and services particularly challenging, necessitating alternative composition strategies distinct from those employed in more conventional domains.

An approach to service composition tailored for smart environments, as presented in the literature, is based on constraint satisfaction [19]. This framework can use either a brute-force approach or backtracking with a heuristic, which is realized by filter criteria. Although this method requires users to articulate their requirements, it does not demand an in-depth understanding of the system or its technical intricacies.
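A generic constraint-satisfaction composition of this kind can be sketched as a backtracking search. In the following Python sketch, each role an application needs is assigned to a device, a capability filter prunes the candidate lists, and the most constrained role is assigned first. The role and capability names, the `compose` function, and the chosen heuristic are illustrative assumptions and do not reproduce the implementation described in [19].

```python
from typing import Callable, Dict, Iterable, Optional, Set

Assignment = Dict[str, str]


def compose(roles: Dict[str, str], devices: Dict[str, Set[str]],
            constraints: Iterable[Callable[[Assignment], bool]] = ()) -> Optional[Assignment]:
    """Assign one device per role via backtracking; return None if impossible."""
    constraints = list(constraints)
    # Filter criterion: only devices offering the role's capability are candidates.
    candidates = {role: [d for d in devices if cap in devices[d]] for role, cap in roles.items()}
    # Heuristic: assign the most constrained role (fewest candidates) first.
    order = sorted(roles, key=lambda role: len(candidates[role]))

    def backtrack(i: int, assignment: Assignment) -> Optional[Assignment]:
        if i == len(order):
            return dict(assignment)
        role = order[i]
        for device in candidates[role]:
            if device in assignment.values():
                continue  # each device fills at most one role
            assignment[role] = device
            if all(check(assignment) for check in constraints):
                result = backtrack(i + 1, assignment)
                if result is not None:
                    return result
            del assignment[role]  # undo and try the next candidate
        return None

    return backtrack(0, {})


roles = {"output": "display", "input": "touch"}
devices = {"tv": {"display"}, "tablet": {"display", "touch"}, "speaker": {"audio"}}
print(compose(roles, devices))
```

Constraints are checked on partial assignments, so a violated constraint prunes the whole subtree instead of being detected only after a complete composition.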

Complementary to this, opportunistic software composition approaches based on machine learning have been introduced. For instance, a framework based on reinforcement learning for automatic and opportunistic service composition has been described [20]. This system incorporates an assembly engine that autonomously identifies available components and determines viable connections, eliminating the need for pre-established plans. Users are primarily involved in addressing conflict resolution and providing feedback during the adaptation process.

Furthermore, a similar solution for dynamic and context-aware software composition using reinforcement learning has been introduced [21]. The approach adapts to both the situational context and user preferences without requiring explicit input regarding user needs or predetermined assembly plans. Users retain the ability to intervene in the composition process by accepting, rejecting, or modifying proposed configurations prior to their deployment.

More recently, approaches to involve users in the machine learning process for developing self-adaptive smart environments have been presented [22]. This work includes guidelines for designing such systems. The authors emphasize the importance of clearly communicating the system capabilities, performance, and operational mechanisms. These insights are further integrated into their previous work on opportunistic software composition within a multi-agent system.

All in all, the aforementioned approaches address the broader challenge of software composition in smart environments, with a shared emphasis on involving users in the composition process. Nevertheless, none of these methods explicitly addresses the interaction between users and the composed systems. Furthermore, there is a lack of focus on explaining the human–computer interaction in detail, in particular the interaction techniques employed. This is relevant, as prior researchers have argued that a more explicit focus on interaction design could enhance the composition process, facilitate requirement specification, and improve overall system comprehensibility [17].

3.3. Service Orchestration and Choreography

While service composition allows complex user requests to be fulfilled by combining multiple services [23], the individual services need to interact to form a cohesive process. This interaction is called service coordination, for which the terms service orchestration and service choreography have been coined.

In service-oriented architectures, orchestration typically refers to the centralized control of service interactions by a single coordinator, while choreography describes decentralized coordination, where each service follows a specified interaction protocol [3, 24]. However, these terms are not always used consistently in the literature. For instance, Macker and Taylor explicitly describe the orchestration of decentralized workflows [25]. In addition, de Sousa et al. do not distinguish between the terms and cover both centralized and peer-to-peer approaches under the term orchestration [26]. In the following, the two terms are characterized on the basis of the literature.

A distinction between the terms can be seen in the control perspective. According to Peltz, an orchestration represents control from one party’s perspective, while a choreography is more collaborative, allowing each involved party to describe its role in the interaction [27]. The author explicitly states that orchestration refers to an executable process, while choreography focuses on tracking message sequences between parties and sources.

Another distinction can be made on the basis of the viewpoint [28]. Choreography describes the coordination among collaborating participants from a global or neutral perspective, emphasizing how participants coordinate collectively. In contrast, orchestration represents the interactions from the perspective of a single participant, highlighting its control over the process. These two perspectives reflect different levels of abstraction in modeling service coordination.

Moreover, according to Tahamtan and Eder, orchestration has been described as a composition that belongs to and is controlled by one partner [29]. It is an executable process that is run by its owner and is therefore visible only to that owner; other external partners have no view of or knowledge about it. The authors further describe a choreography as a non-executable, abstract process that defines the message exchange and collaboration among partners. The message exchange is visible to all participants but hidden from external parties. Thus, a choreography is a global perspective on a shared process definition.

Another way to interpret the definitions of the terms is to examine the hierarchies. Choreography can be seen as a superordinate model that describes the rules of interaction between the services, while orchestration is a specific execution within it. In these views, orchestration can also be implicitly seen as a centralized process, while choreography is organized decentrally. Furthermore, choreography is event-driven while orchestration uses a coordinator.

Chen and Englund explicitly emphasize that service orchestration uses a centralized composition, while choreography is described as a decentralized service composition method that relies on a loose specification of the participants and the interaction protocol [24]. According to the authors, a choreography is based on a global coordination logic that is distributed between services and executed locally.
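The contrast between a centrally controlled orchestration and an event-driven choreography can be illustrated with a small Python sketch. In the first variant, a coordinator owns the process and calls each service in turn; in the second, each participant only subscribes to the events it cares about and publishes its own result, so the overall process emerges from the shared protocol. The in-memory `EventBus` and the helper names are hypothetical stand-ins for a real messaging infrastructure.

```python
from collections import defaultdict
from typing import Callable, Dict, List


def orchestrate(services: Dict[str, Callable[[str], str]], steps: List[str], data: str) -> str:
    """Orchestration: a single coordinator invokes the services in a fixed order."""
    for name in steps:
        data = services[name](data)
    return data


class EventBus:
    """Minimal in-memory publish/subscribe channel (hypothetical stand-in)."""

    def __init__(self) -> None:
        self.handlers: Dict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[str], None]) -> None:
        self.handlers[topic].append(handler)

    def publish(self, topic: str, payload: str) -> None:
        for handler in list(self.handlers[topic]):
            handler(payload)


def join_choreography(bus: EventBus, listens_to: str, emits: str,
                      work: Callable[[str], str]) -> None:
    """Choreography: a participant reacts locally to an event and emits its own."""
    bus.subscribe(listens_to, lambda payload: bus.publish(emits, work(payload)))


services = {"upper": str.upper, "exclaim": lambda s: s + "!"}
print(orchestrate(services, ["upper", "exclaim"], "hello"))

bus = EventBus()
join_choreography(bus, "start", "uppercased", str.upper)
join_choreography(bus, "uppercased", "done", lambda s: s + "!")
results: List[str] = []
bus.subscribe("done", results.append)
bus.publish("start", "hello")
print(results)
```

Both variants compute the same result, but only the orchestrator knows the complete sequence of steps; in the choreography, the sequence is implied by which events each participant listens to.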

Notably, there are several languages targeting the coordination and composition of web services. The two most relevant languages are OWL-S, which is built on top of the Web Ontology Language (OWL), and the Web Services Business Process Execution Language (WS-BPEL). While OWL-S has more focus on automatic service composition, WS-BPEL is an orchestration language that is used to specify actions within business processes built upon web services. However, relevant information for interconnected systems in smart environments is generally not included.

Besides a broader integration in web services, service coordination has also been researched in the context of smart environments. For instance, various approaches for edge computing have already been reviewed [30].

More closely related, service orchestration in the IoT has been addressed by a platform capable of sharing and discovering Virtual Objects (virtual representations of physical objects) in the environment [31]. The authors developed an application store that can be used for the provision of Virtual Objects and associated micro-services. It also allows for service composition and the coordination of services, including those present in remote spaces.

Furthermore, a model for developing IoT applications has been presented that has a logically centralized, data-oriented architecture, allowing artificial intelligence to be incorporated into IoT systems [3]. Services are coordinated by a mediator that features storage, production, discovery, and notification of relevant data for client applications.

Recently, a multi-agent system has been described that orchestrates services across nodes in smart environments [32]. To ensure modular and efficient management, each agent corresponds to a physical or virtual node or a group of nodes with similar functionalities. Agents control the context of node-related services and selectively activate or deactivate them in response to real-time system demands. This approach may reduce the complexity of smart environments while enhancing overall security.

Moreover, there is a multitude of practical tools and modeling languages targeting service coordination in the IoT [33]. These include ThingML, a modeling language and code generation framework that transforms state machines into code that can be compiled and executed on edge devices [34]. Another notable tool is Node-RED, a flow-based visual programming tool that follows a simple modular building-block approach and has gained popularity in IoT and edge computing contexts. Furthermore, centralized platforms for trigger-action programming, such as IFTTT, are widely used.

In addition, there are several home automation platforms that enable the integration of smart objects and services as well as the provision of orchestrations. These include OpenHAB and HomeAssistant, which are noteworthy due to their open-source nature and interoperable approach. However, these platforms are centralized and contrast with the aspect of self-organization.

In general, the presented approaches lack a focus on human–computer interaction and do not provide self-explainability for service coordination. Hence, coordinating services with respect to self-explainability has not yet been specifically addressed.

4. A Description Language for Self-Explaining Coordinated Components

When explaining systems that are not inherently self-explanatory, it is necessary to provide additional information. Various aspects of user interaction need to be considered, such as how to perform specific actions and how the system may respond to them. Self-descriptions of the individual components can serve to convey this information. Furthermore, these descriptions can also support service composition. In our previous work, we introduced the smart object description language (SODL), a description language that is both human- and machine-readable, designed to describe components within smart environments [18].

However, the SODL does not yet take into account event-driven state changes that allow messages to be exchanged between ensembles. In principle, it would be possible to integrate relevant information of smart environments’ components into existing languages targeting the coordination and composition of web services, such as OWL-S or WS-BPEL. However, we argue that it is more practical to extend SODL to include the ability to coordinate; consequently, we have opted for this approach. Therefore, we present the modifications made to the language below. These modifications cover changes in state based on events sent by other network members, as well as mapping values from a range of the sending component to a range of the receiving component.

4.1. General Information, Components, and States

The general description section of SODL is used to convey general information about the device or the application. Devices and applications are modeled with their components and corresponding states that are organized in state groups. While devices consist of one or more physical components for input or output, applications may consist of logical components, like a control component to process inputs.

We introduced an attribute to state groups that denotes whether the states of that group can be changed based on received events or whether these states are disseminated to other members in the network. Disseminated state changes are sent as events to registered subscribers. These, in turn, may process the received events and may cause state changes for the respective state groups based on a rule set described below.
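The new state group attribute can be pictured with the following Python data model. It is a minimal sketch that assumes a group is either disseminating or receiving; the enum values, the class names, and the convention of returning the event to be sent are hypothetical and do not reflect the actual SODL syntax.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class Coordination(Enum):
    NONE = "none"                # local states only
    DISSEMINATE = "disseminate"  # state changes are sent as events to subscribers
    RECEIVE = "receive"          # states may be changed by received events


@dataclass
class StateGroup:
    name: str
    states: List[str]
    coordination: Coordination = Coordination.NONE
    current: Optional[str] = None

    def __post_init__(self) -> None:
        if self.current is None:
            self.current = self.states[0]

    def set_state(self, state: str) -> Optional[Tuple[str, str]]:
        """Change the state locally; return an event if it must be disseminated."""
        if state not in self.states:
            raise ValueError(f"unknown state {state!r} for group {self.name!r}")
        self.current = state
        if self.coordination is Coordination.DISSEMINATE:
            return (self.name, state)
        return None

    def apply_event(self, state: str) -> None:
        """Apply a state change caused by a received event."""
        if self.coordination is not Coordination.RECEIVE:
            raise PermissionError(f"group {self.name!r} does not accept events")
        self.set_state(state)


solved = StateGroup("solved", ["no", "yes"], Coordination.DISSEMINATE)
display = StateGroup("display", ["off", "on"], Coordination.RECEIVE)
print(solved.set_state("yes"))
display.apply_event("on")
print(display.current)
```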

4.2. Coordination

While SODL covers a section for task analysis that is used to describe the interaction in greater detail, other ways of reacting to events or messages that are not (directly) related to user interaction are not covered in this section. We modified the SODL so that it now also covers a new section that is intended to describe the reaction to received events disseminated by the device or application itself or other components in the smart environment. The transmitting and receiving components do not have to be in the same ensemble, but they can be.

Incoming events may cause defined state changes (e.g., always change to a fixed state) or toggle states (e.g., toggle on/off or switch to next state in a sequence of states).
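The two kinds of effects can be expressed as a small function. This Python sketch assumes that a toggle advances through an ordered sequence of states and wraps around at the end, which covers both on/off toggling and cycling through several states; the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class SetState:
    target: str  # always change to this fixed state


@dataclass(frozen=True)
class Toggle:
    sequence: Tuple[str, ...]  # switch to the next state, wrapping around


def apply_effect(current: str, effect: Union[SetState, Toggle]) -> str:
    """Return the new state of a state group after an incoming event."""
    if isinstance(effect, SetState):
        return effect.target
    # Raises ValueError if the current state is not part of the sequence.
    position = effect.sequence.index(current)
    return effect.sequence[(position + 1) % len(effect.sequence)]


print(apply_effect("on", SetState("off")))
print(apply_effect("off", Toggle(("off", "on"))))
print(apply_effect("green", Toggle(("red", "yellow", "green"))))
```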

Triggers specify the conditions for state changes; these conditions refer to state changes disseminated by another device or application or by the component itself. A trigger checks for a specific discrete state or compares the received state value (if it is a value from a range). To match the emitter, the involved device or application, the instance ID (a UUID that identifies the running device or application), the component, and the state group must be specified.
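The matching rule can be sketched in Python as follows. The identifying fields mirror the information listed above (device or application, instance ID, component, state group), whereas the comparison operators, the example identifiers, and the class names are hypothetical assumptions.

```python
import operator
from dataclasses import dataclass
from typing import Optional, Tuple, Union

_COMPARATORS = {"<": operator.lt, "<=": operator.le, "==": operator.eq,
                ">=": operator.ge, ">": operator.gt}


@dataclass(frozen=True)
class StateEvent:
    device: str
    instance_id: str  # UUID of the running device or application
    component: str
    state_group: str
    value: Union[str, float]


@dataclass(frozen=True)
class Trigger:
    device: str
    instance_id: str
    component: str
    state_group: str
    state: Optional[str] = None                  # discrete state to wait for
    compare: Optional[Tuple[str, float]] = None  # e.g. (">=", 50.0) for range values

    def matches(self, event: StateEvent) -> bool:
        emitter = (event.device, event.instance_id, event.component, event.state_group)
        if emitter != (self.device, self.instance_id, self.component, self.state_group):
            return False  # event comes from a different emitter
        if self.state is not None:
            return event.value == self.state
        if self.compare is not None:
            op, threshold = self.compare
            return isinstance(event.value, (int, float)) and _COMPARATORS[op](event.value, threshold)
        return True  # no condition given: any state change of the emitter matches


solved = Trigger("puzzle-app", "uuid-1", "control", "solved", state="yes")
tilt = Trigger("tablet", "uuid-2", "orientation", "angle", compare=(">=", 90.0))
print(solved.matches(StateEvent("puzzle-app", "uuid-1", "control", "solved", "yes")))
print(tilt.matches(StateEvent("tablet", "uuid-2", "orientation", "angle", 120.0)))
```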

Triggers can be combined in order to react to changes when all conditions are met (on all) or immediately after any trigger from a set is activated (on any).
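Expressed as set operations, the two combination modes reduce to a subset test and a non-empty intersection. The Python sketch below assumes that the IDs of activated triggers are collected across events (that bookkeeping is not shown); all names are hypothetical. In the scenario from Section 2, for instance, the PIN station would combine the triggers of the three puzzle stations with on all.

```python
from enum import Enum
from typing import AbstractSet


class Combination(Enum):
    ON_ALL = "on all"
    ON_ANY = "on any"


def is_triggered(mode: Combination, triggers: AbstractSet[str],
                 activated: AbstractSet[str]) -> bool:
    """Decide whether an orchestration fires, given its trigger IDs and those activated so far."""
    if mode is Combination.ON_ALL:
        return triggers <= activated       # every trigger has been activated
    return bool(triggers & activated)      # at least one trigger has been activated


stations = {"s1", "s2", "s3"}
print(is_triggered(Combination.ON_ALL, stations, {"s1", "s2"}))
print(is_triggered(Combination.ON_ANY, stations, {"s2"}))
```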

In addition, each orchestration specifies whether it can be triggered only once or repeatedly (we call the repeated runs cycles). If all specified triggers must be activated, cycles can be handled in different ways. For example, it is possible to ignore the repeated fulfillment of the same condition, so that the next run must wait for this condition to be fulfilled again. Alternatively, these events can be cached. In this case, a condition is already fulfilled by the cached event in the next run.
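The difference between ignoring and caching repeated events can be made concrete with a per-trigger counter. In this hypothetical Python sketch, the ignore policy keeps at most one pending activation per trigger, while the cache policy counts surplus activations and uses them in the next run; the `once` flag models orchestrations that may only be triggered a single time. All names are assumptions.

```python
from collections import Counter
from enum import Enum
from typing import Iterable


class CyclePolicy(Enum):
    IGNORE = "ignore"  # a repeated activation within a run is dropped
    CACHE = "cache"    # a repeated activation is kept for the next run


class CyclicOnAll:
    """'On all' orchestration that may fire once or in repeated cycles."""

    def __init__(self, triggers: Iterable[str], policy: CyclePolicy, once: bool = False) -> None:
        self.triggers = set(triggers)
        self.policy = policy
        self.once = once
        self.pending: Counter = Counter()  # trigger ID -> unused activations
        self.runs = 0

    def activate(self, trigger: str) -> bool:
        """Register an activated trigger; return True if this completes a run."""
        if trigger not in self.triggers or (self.once and self.runs > 0):
            return False
        if self.policy is CyclePolicy.IGNORE and self.pending[trigger] > 0:
            return False  # condition already fulfilled in the current run
        self.pending[trigger] += 1
        if all(self.pending[t] > 0 for t in self.triggers):
            for t in self.triggers:
                self.pending[t] -= 1  # consume one activation per trigger
            self.runs += 1
            return True
        return False


events = ["a", "a", "b", "b"]
for policy in CyclePolicy:
    orchestration = CyclicOnAll({"a", "b"}, policy)
    print(policy.value, [orchestration.activate(e) for e in events])
```

With the event sequence a, a, b, b, the ignore policy completes one run, whereas the cache policy completes two, because the second activation of a is carried over.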

In addition to changing or toggling defined states based on given conditions, received state values from a range can be mapped to a given range of values. For example, an angle of an orientation measurement in the range of 0 to 360 degrees could be converted to a percentage value of 0 to 100%. Furthermore, it is possible to specify that the received state values should be passed on unchanged for further processing by this component.
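Assuming a linear mapping (the description only states that values are mapped from one range to another), a value x from the sender range [s_min, s_max] is converted to the receiver range [t_min, t_max] as t_min + (x - s_min) * (t_max - t_min) / (s_max - s_min). The Python sketch below implements this formula, optionally clamps out-of-range inputs, and passes values on unchanged when no target range is given; the function name and the clamping behavior are hypothetical.

```python
from typing import Optional, Tuple

Range = Tuple[float, float]


def map_value(value: float, source: Range, target: Optional[Range],
              clamp: bool = True) -> float:
    """Map a received value linearly from the sender's range to the receiver's range.

    If target is None, the value is passed on unchanged.
    """
    if target is None:
        return value
    (s_min, s_max), (t_min, t_max) = source, target
    if s_max == s_min:
        raise ValueError("source range must not be empty")
    if clamp:  # keep out-of-range inputs within the source range
        low, high = min(s_min, s_max), max(s_min, s_max)
        value = min(max(value, low), high)
    return t_min + (value - s_min) * (t_max - t_min) / (s_max - s_min)


print(map_value(180, (0, 360), (0, 100)))  # orientation angle to percentage
print(map_value(42.5, (0, 360), None))     # passed on unchanged
```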

5. A Framework for Coordinating Self-Explaining Smart Environments

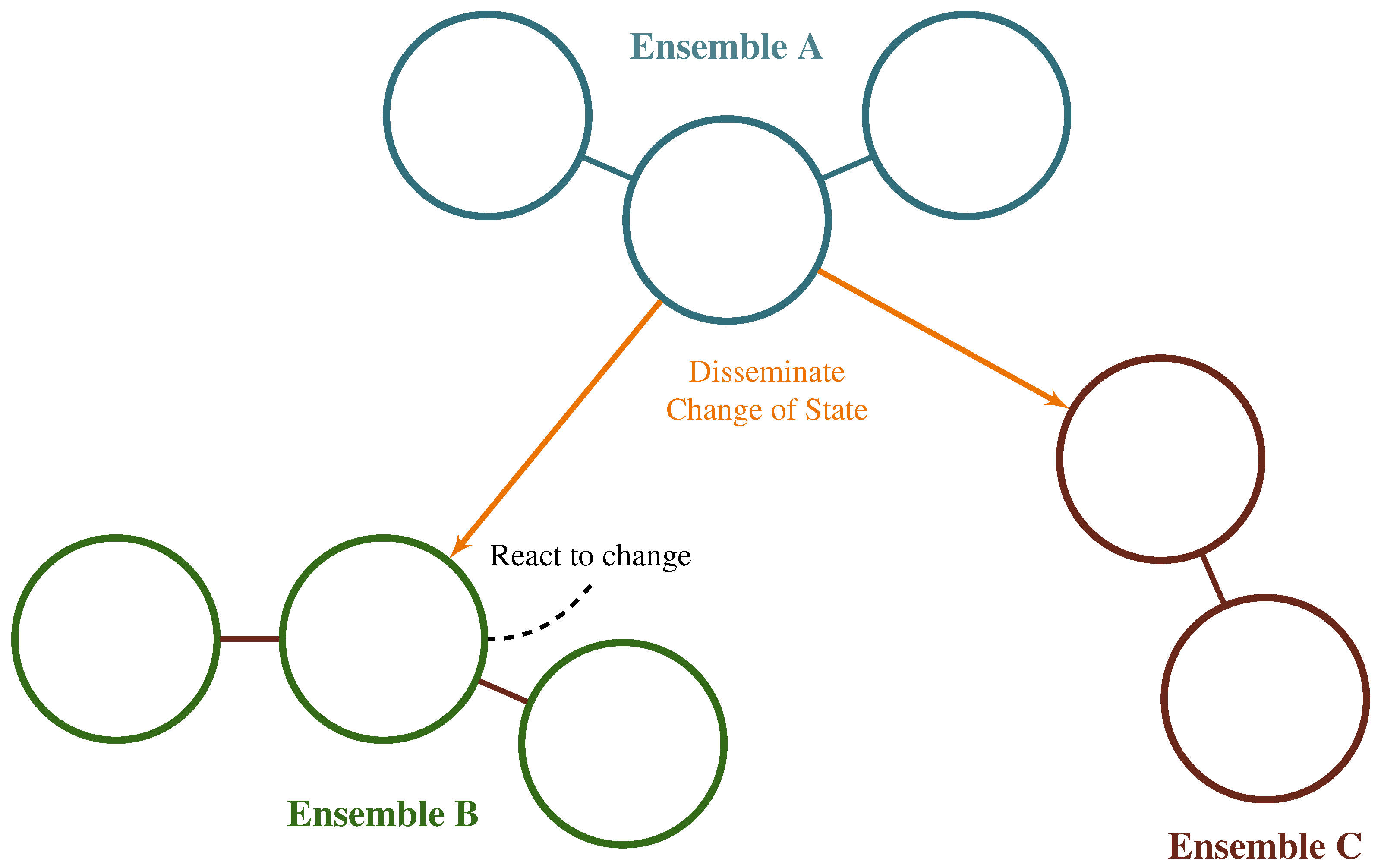

Our extension of the description language offers a comprehensive specification of ambient applications and smart objects, starting from high-level properties or related tasks and extending down to state groups that define their input and output options. It also covers orchestrating states based on events received from other network participants. This structure enables the identification of possible connections, supports the creation of usable ensembles, allows for the coordination of ensembles, and facilitates their self-explanation.

However, an implementation is required that takes care of the message exchange, evaluates the conditions for the coordination, and realizes the desired effects for the recipient (see Figure 3). For this purpose, we have extended the ambient reflection framework in order to realize orchestration and choreography. We present these modifications below.

5.1. Smart Object Library

The component primarily affected by these modifications is the smart object library, which enables smart objects and ambient applications to be self-explaining and to participate in the framework. An overview of the library structure can be found in Figure 4.

A central component of the described system is a service for state dissemination and event handling, which is based on subscription-based message exchange. On the side of potential recipients, the trigger conditions stored in the respective self-description are analyzed. This involves checking which devices or applications are capable of activating these triggers. The identifiers of related devices or applications are cached. As soon as these devices or applications are identified in the network during a discovery process, a subscription is automatically initiated to ensure the exchange of relevant events carrying the state information.
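The recipient-side logic can be sketched as follows: identifiers taken from the trigger conditions are cached, and a subscription is requested only when a matching emitter is discovered for the first time. In this Python sketch, the `SubscriptionManager` class, the representation of emitters as (device, instance ID) pairs, and the `subscribe` callback are hypothetical assumptions.

```python
from typing import Callable, Iterable, Set, Tuple

Emitter = Tuple[str, str]  # (device or application, instance ID)


class SubscriptionManager:
    """Recipient side: subscribe to emitters referenced by the own triggers once discovered."""

    def __init__(self, trigger_emitters: Iterable[Emitter],
                 subscribe: Callable[[Emitter], None]) -> None:
        # Identifiers extracted from the trigger conditions of the self-description.
        self.wanted: Set[Emitter] = set(trigger_emitters)
        self.subscribed: Set[Emitter] = set()
        self._subscribe = subscribe

    def on_discovered(self, emitter: Emitter) -> bool:
        """Called by the discovery process; return True if a subscription was initiated."""
        if emitter in self.wanted and emitter not in self.subscribed:
            self._subscribe(emitter)
            self.subscribed.add(emitter)
            return True
        return False


requests = []
manager = SubscriptionManager([("puzzle-app", "uuid-1"), ("puzzle-app", "uuid-2")], requests.append)
for found in [("tv", "uuid-7"), ("puzzle-app", "uuid-1"), ("puzzle-app", "uuid-1")]:
    manager.on_discovered(found)
print(requests)
```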

Components that disseminate states, i.e., act as potential transmitters, manage the associated subscriptions independently and, in the event of a status change, transmit corresponding event messages to all registered subscribers.
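The following in-process Python sketch illustrates the subscription-based dissemination pattern described above. It is a simplified stand-in rather than the framework's implementation: the names `Transmitter`, `Recipient`, and `StateEvent` are hypothetical, and the network transport and discovery process of the ambient reflection framework are replaced by direct method calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass
class StateEvent:
    source_id: str
    state: str
    value: object


class Transmitter:
    """Component that disseminates its state changes to subscribers."""

    def __init__(self, device_id: str) -> None:
        self.device_id = device_id
        self._states: Dict[str, object] = {}
        self._subscribers: List[Callable[[StateEvent], None]] = []

    def subscribe(self, callback: Callable[[StateEvent], None]) -> None:
        # The transmitter manages its own subscriber list
        if callback not in self._subscribers:
            self._subscribers.append(callback)

    def set_state(self, state: str, value: object) -> None:
        # Only an actual change is disseminated
        if state in self._states and self._states[state] == value:
            return
        self._states[state] = value
        event = StateEvent(self.device_id, state, value)
        for callback in list(self._subscribers):
            callback(event)


class Recipient:
    """Component whose self-description references other participants."""

    def __init__(self, trigger_sources: Set[str]) -> None:
        # Cached IDs of participants able to activate this recipient's triggers
        self.trigger_sources = set(trigger_sources)
        self.received: List[StateEvent] = []

    def on_discovered(self, transmitter: Transmitter) -> None:
        # Subscribe automatically once a relevant participant is discovered
        if transmitter.device_id in self.trigger_sources:
            transmitter.subscribe(self.on_event)

    def on_event(self, event: StateEvent) -> None:
        self.received.append(event)


station_a = Transmitter("station-A")
lamp = Transmitter("lamp-1")
game = Recipient({"station-A"})
game.on_discovered(station_a)
game.on_discovered(lamp)             # not referenced, so no subscription
station_a.set_state("solved", True)
station_a.set_state("solved", True)  # unchanged, not disseminated again
lamp.set_state("on", True)
print(game.received)  # [StateEvent(source_id='station-A', state='solved', value=True)]
```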

Recipients of state dissemination events must process and evaluate incoming messages. For this purpose, a dedicated service has been implemented to evaluate the events. As part of this process, each stored orchestration is checked to see whether the received event fulfills one of its trigger conditions. If multiple conditions must be met (linking type “on all”), a map is used to manage the trigger state, in which fulfilled conditions are temporarily stored. This ensures that further processing is initiated as soon as all required triggers have been activated. Mappings are also carried out as defined by the self-description.
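The trigger map for the linking types “on any” and “on all” can be sketched as follows. This is a hypothetical illustration, not the library's implementation: it assumes that conditions are (source, state, expected value) triples, that a later non-matching value clears a previously fulfilled condition, and that the map is reset after an “on all” orchestration fires.

```python
from typing import Dict, Hashable, List, Tuple

# A trigger condition: (source ID, state name, expected value)
Condition = Tuple[str, str, Hashable]


class Orchestration:
    def __init__(self, name: str, conditions: List[Condition],
                 linking: str = "on all") -> None:
        if linking not in ("on any", "on all"):
            raise ValueError("linking must be 'on any' or 'on all'")
        if not conditions:
            raise ValueError("at least one condition is required")
        self.name = name
        self.linking = linking
        self.conditions = list(conditions)
        self._reset()

    def _reset(self) -> None:
        # Trigger-state map: which conditions are currently fulfilled
        self.fulfilled: Dict[Condition, bool] = {c: False for c in self.conditions}

    def evaluate(self, source_id: str, state: str, value: Hashable) -> bool:
        """Process one received event; return True if the orchestration fires."""
        hit = False
        for cond in self.conditions:
            c_source, c_state, c_value = cond
            if (c_source, c_state) == (source_id, state):
                self.fulfilled[cond] = value == c_value
                hit = hit or self.fulfilled[cond]
        if self.linking == "on any":
            return hit
        if all(self.fulfilled.values()):
            self._reset()  # assumption: fire once per completed set
            return True
        return False


solved = Orchestration("continue_dialogue",
                       [("station-A", "solved", True), ("station-B", "solved", True)])
print(solved.evaluate("station-A", "solved", True))  # False: B still open
print(solved.evaluate("station-B", "solved", True))  # True: all conditions met
```

A dictionary keyed by condition keeps the check for “on all” to a single pass over the stored states, independent of the order in which events arrive.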

When a mapping is performed or an orchestration is triggered, the registered listeners, as well as methods designated for specific orchestrations by means of semantic annotations, are invoked. In this way, software components that use the smart object library can respond to triggered orchestrations and initiate further actions.
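Python has no Java-style annotations, so the sketch below uses a decorator as a rough analogue of the semantic annotation mechanism mentioned above. The names `on_orchestration`, `OrchestrationDispatcher`, and `StationApp` are hypothetical and only illustrate the idea of combining explicitly registered listeners with annotated methods.

```python
from typing import Callable, Dict, List


def on_orchestration(name: str) -> Callable:
    """Mark a method as handler for the orchestration `name`."""
    def mark(func: Callable) -> Callable:
        func._orchestration = name  # marker read by the dispatcher
        return func
    return mark


class OrchestrationDispatcher:
    def __init__(self) -> None:
        self._listeners: Dict[str, List[Callable[[], None]]] = {}

    def add_listener(self, name: str, callback: Callable[[], None]) -> None:
        self._listeners.setdefault(name, []).append(callback)

    def register_annotated(self, obj: object) -> None:
        # Scan the object for methods carrying the marker
        for attr in dir(obj):
            member = getattr(obj, attr)
            name = getattr(member, "_orchestration", None)
            if name is not None and callable(member):
                self.add_listener(name, member)

    def fire(self, name: str) -> int:
        """Invoke all listeners for `name`; return how many were called."""
        callbacks = self._listeners.get(name, [])
        for callback in callbacks:
            callback()
        return len(callbacks)


class StationApp:
    def __init__(self) -> None:
        self.log: List[str] = []

    @on_orchestration("all_tasks_solved")
    def show_dialogue(self) -> None:
        self.log.append("dialogue shown")


app = StationApp()
dispatcher = OrchestrationDispatcher()
dispatcher.register_annotated(app)
dispatcher.fire("all_tasks_solved")
print(app.log)  # ['dialogue shown']
```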

5.2. Virtual Device Daemon and API

The virtual device daemon uses the smart object library to serve as a proxy device, enabling components that are not integrated via Java or that run in a sandbox to participate in the ambient reflection framework. A connection to the daemon is established via WebSockets, and application programming interfaces (APIs) are already available for JavaScript, Python, and C#.

Since the smart object library is integrated, the basic functionality for processing orchestrations is already available. However, triggered orchestrations must be passed on to the client, which requires the modifications described below.

When the proxy starts, it first evaluates the supplied self-description and then registers a listener for each stored orchestration. When invoked, these listeners forward the relevant information to the connected client via the WebSocket connection.

The interface to the clients (implemented by the respective API) has been extended accordingly so that clients receive messages about triggered orchestrations. Furthermore, a client can initiate a state dissemination by sending a corresponding message to the proxy, which forwards this event to the subscribers via the ambient reflection framework.
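A client of the virtual device daemon essentially has to dispatch incoming messages by type and send dissemination requests. The daemon's protocol is not specified here, so the JSON message layout below (fields `type`, `name`, `state`, `value`) and the names `ProxyClient` and `dissemination_message` are purely assumptions; the WebSocket transport itself is omitted to keep the example self-contained.

```python
import json
from typing import Any, Callable, Dict


def dissemination_message(state: str, value: Any) -> str:
    # Hypothetical message a client sends to ask the proxy to disseminate a state
    return json.dumps({"type": "disseminate", "state": state, "value": value})


class ProxyClient:
    """Dispatches messages received from the daemon by their `type` field."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[dict], None]] = {}

    def on(self, msg_type: str, handler: Callable[[dict], None]) -> None:
        self._handlers[msg_type] = handler

    def handle_raw(self, raw: str) -> bool:
        """Parse one incoming text frame; return False if no handler exists."""
        message = json.loads(raw)
        handler = self._handlers.get(message.get("type"))
        if handler is None:
            return False
        handler(message)
        return True


triggered = []
client = ProxyClient()
client.on("orchestration", lambda msg: triggered.append(msg["name"]))
client.handle_raw('{"type": "orchestration", "name": "all_tasks_solved"}')
print(triggered)                               # ['all_tasks_solved']
print(dissemination_message("solved", True))   # {"type": "disseminate", "state": "solved", "value": true}
```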

In addition, the transfer of information about detected or lost devices and about missing or complete subscriptions for an orchestration was implemented so that the client has all relevant information. This allows a client, for example, to notify a user that not all required components have been detected or to respond to this situation in some other way.
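Tracking detected and lost devices per orchestration makes it possible to tell users which components are still missing. The following sketch, built around the hypothetical class `SubscriptionTracker`, assumes that each orchestration lists the identifiers of the participants whose events it requires.

```python
from typing import Dict, Iterable, Set


class SubscriptionTracker:
    def __init__(self, required: Dict[str, Iterable[str]]) -> None:
        # Orchestration name -> IDs of participants whose events it needs
        self.required: Dict[str, Set[str]] = {k: set(v) for k, v in required.items()}
        self.subscribed: Set[str] = set()

    def device_detected(self, device_id: str) -> None:
        # Only participants referenced by some orchestration are subscribed to
        if any(device_id in ids for ids in self.required.values()):
            self.subscribed.add(device_id)

    def device_lost(self, device_id: str) -> None:
        self.subscribed.discard(device_id)

    def missing(self, orchestration: str) -> Set[str]:
        return self.required[orchestration] - self.subscribed

    def is_complete(self, orchestration: str) -> bool:
        return not self.missing(orchestration)


tracker = SubscriptionTracker({"all_tasks_solved": ["station-A", "station-B"]})
tracker.device_detected("station-A")
tracker.device_detected("station-B")
tracker.device_lost("station-B")
if not tracker.is_complete("all_tasks_solved"):
    # e.g. a client could show this hint to the user
    print("Waiting for:", ", ".join(sorted(tracker.missing("all_tasks_solved"))))
```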

6. Case Study

The goal of our framework is the realization of distributed applications based on the coordination of several self-explaining ensembles, which are perceived by users as a uniform, homogeneous application. To evaluate this aspect and as a proof of concept, we conducted a study that examined system perception using the example of spatially distributed learning games, which we call ambient serious games (see [9]). Each game part functioned as an independent application that connected to input and output devices via the ambient reflection framework and communicated through it. These applications featured various states that changed in response to events. Both reactions to disseminated state changes and mappings of values were applied. The games implemented reactions to state changes using the linking types “on any” and “on all”. Each game was distributed across six spatially separated stations, each equipped with a display, a button board for input, and a lightbulb providing input feedback. In addition, background music and lighting in the room were provided by several smart objects. The user interface of each station included pictorial instructions to guide users through the interaction. The complete setup is shown in Figure 5.

A specially developed questionnaire captured participants’ perception of the technical setup. It focused on the following aspects: the perception of (a) the system as distributed or unified, (b) the response time to user input and the responsiveness of the system as a whole, (c) the system’s ability to direct user attention, (d) the system state, (e) the impact of movement on the game flow, (f) the delay between input and feedback, and (g) the participants’ mental model of the system. Participants rated their agreement on a scale between two opposing statements. All items had to be answered to complete the questionnaire. At the end, participants were asked to suggest further possible use cases for the system. The complete questionnaire can be found in the Supplementary Material.

The study aimed for a sample size of at least 30 participants. Given this sample size, the sampling distribution of the mean can be assumed to be approximately normal [35]. To mitigate potential bias due to the seemingly ordinal scale, the questionnaire used a ± scale instead of verbal labels, implying equidistant intervals between points.

Participant recruitment followed three channels: (a) the online forum of student representatives, (b) personal email invitations to students within the university network, and (c) word-of-mouth referrals from individuals already contacted. We distributed the study information specifically through these channels. Participants signed a consent form directly before taking part, after any questions had been addressed.

Eligibility was limited to individuals who were currently enrolled at the University of Lübeck or had previously studied there. This ensured that participants matched the target group of the games. Students of media informatics or psychology could optionally receive credit points as compensation. In total, 40 individuals took part in the study.

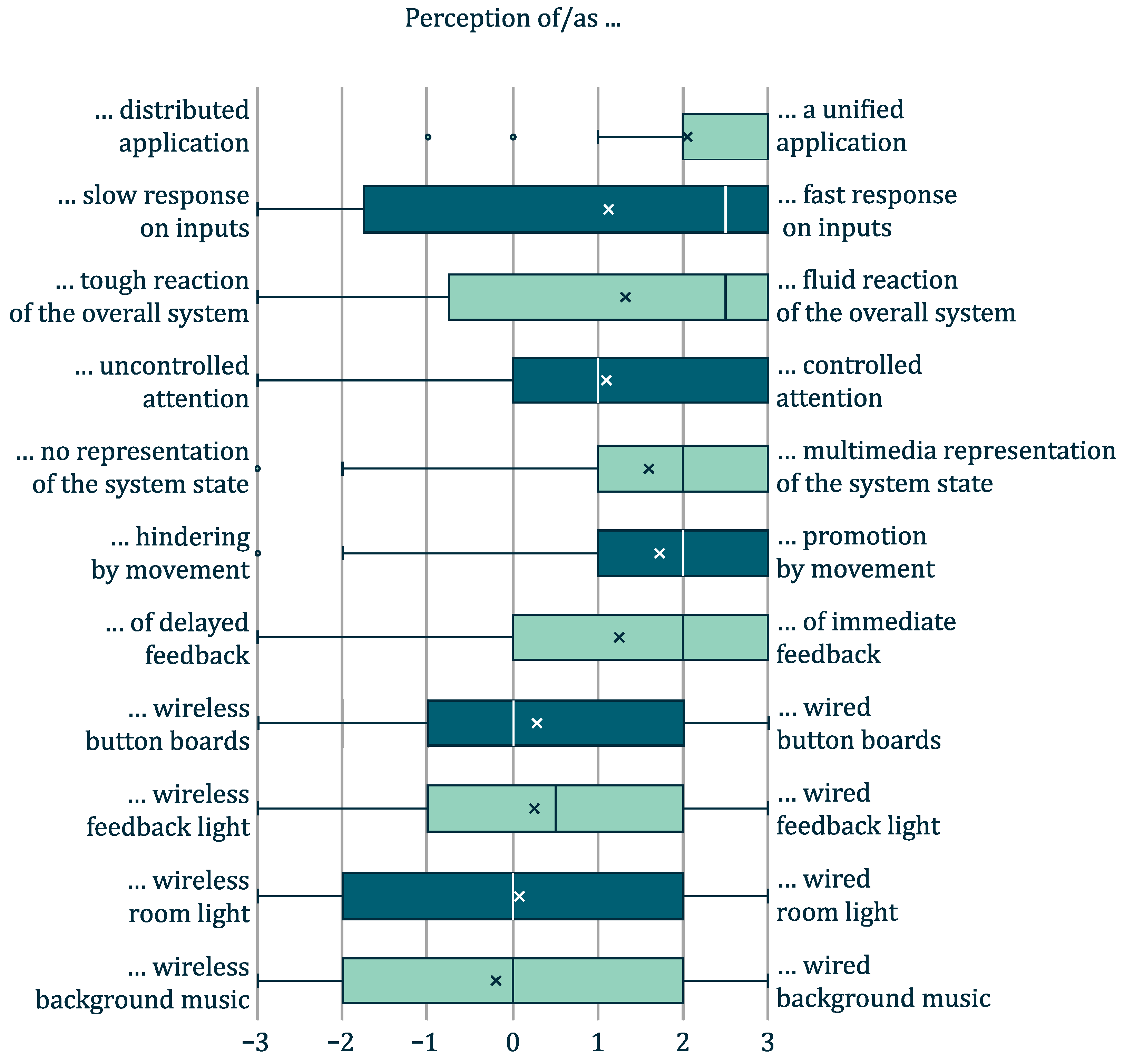

Evaluation of participants’ perception of the technical setup revealed that the components were largely perceived as a cohesive system and that system responsiveness was experienced as fluid. The following paragraphs report and interpret the responses to all evaluated items. To evaluate the answers on the ± scale quantitatively, the symbols were converted to numerical values in the range [−3, 3], where (−−−) corresponds to −3 and (+++) corresponds to 3. Mean and median are reported based on these values.
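The conversion of ± answers into numerical values and the reported statistics can be reproduced with Python's standard `statistics` module. The sketch assumes a seven-point scale with a neutral midpoint written as `0`, which the text does not confirm, and uses the sample variance; the `SCALE` table and `summarize` function are illustrative, and the answers are made up for demonstration and are not study data.

```python
import statistics
from typing import Dict, List

# Assumed seven-point scale; the neutral "0" midpoint is our assumption
SCALE: Dict[str, int] = {"---": -3, "--": -2, "-": -1, "0": 0,
                         "+": 1, "++": 2, "+++": 3}


def summarize(answers: List[str]) -> Dict[str, float]:
    values = [SCALE[a] for a in answers]
    q1, _, q3 = statistics.quantiles(values, n=4)  # requires Python 3.8+
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "variance": statistics.variance(values),  # sample variance (n - 1)
        "iqr": q3 - q1,                           # interquartile range
    }


# Made-up answers for illustration only, not data from the study
example = summarize(["+", "++", "+++", "0", "++", "-", "++"])
print(example)
```

Note that `statistics.quantiles` uses the "exclusive" interpolation method by default; other tools may compute slightly different quartiles for small samples.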

Regarding the perception of the system as either distributed or unified, participants generally reported experiencing the setup as a single, integrated system rather than as spatially distributed individual applications. The mean rating across all participants was , and the median was (see Figure 6). Since the maximum possible rating is 3, the data nonetheless suggest that parts of the system were perceived individually. This perception may be influenced by the spatial separation inherent in distributed systems. The clear physical distinction between device clusters likely made it easier to differentiate between components. To further strengthen the perception of a unified system, reducing the spatial distance between clusters or introducing bridging components that visually or functionally connect them might help. However, adding components that seem unnecessary could disrupt immersion. Likewise, reducing physical space might negatively impact gameplay, as participants reported that movement between device clusters contributed positively to game flow. The mean score for this item was , and the median was . Since movement can improve the game flow, any restrictions on freedom of movement should be avoided.

When analyzing response times, we observed wide interquartile ranges between the first (q1) and third (q3) quartiles (see Figure 6), indicating high variability in responses. The mean rating of the perceived response to inputs was , with a variance of . For the perception of the system as either sluggish or fluid, the mean score was , with a variance of . Both items had a median of . These results suggest that most participants perceived the system as responsive and smooth. However, the result allows for multiple interpretations. On the one hand, subjective understandings of terms such as fast, slow, smooth, and sluggish can lead to different expectations and reference points. On the other hand, differences in network communication may have led to varying response times.

Participants’ perceptions of how well the system directed their attention varied widely. The mean was , closely matching the median of . In this gaming context, players had some freedom to decide where to continue playing. Therefore, strong attention guidance was not always desirable. This result aligns well with expectations. In other application domains, such as visiting stations in a smart learning environment, implementing guiding components would be beneficial. Light-based cues, for example, could be used to direct users’ focus more clearly.

In gaming contexts, the system state plays a more critical role, as it helps players interpret feedback. The questionnaire addressed the perceived multimedia representation of system states (see Supplementary Material). Participants rated this aspect with a mean of and a median of . Figure 6 shows that participants used all available response options. Nevertheless, the ratings suggest that most users could perceive the system state and make sense of the feedback. Further improvements are possible, for example, by continuously visualizing the current game state and progress at one of the stations to make this information permanently accessible.

However, this measure does not address whether users perceive feedback as immediate. Participants rated the immediacy of the feedback with a mean of and a median of . Here, subjective expectations regarding latency play a significant role. Ideally, all participants would perceive the feedback as immediate. Figure 6 shows that the reference implementation did not fully meet this goal. If future measurements confirm noticeable delays, the network communication architecture should be optimized. Since a spot check during system setup did not reveal perceivable latency, high user expectations may have contributed to lower scores. Such expectations cannot be fully addressed through technical means. As most responses still fall in the “immediate” range, this issue may not require further intervention.

The questionnaire also investigated participants’ mental model of the system, focusing on perceptions of wired versus wireless connections. However, meaningful conclusions are difficult to draw due to high variances ( ). For three out of four connections, the median was , and for the connection between the interface and the feedback light, it was , leaning slightly toward a wired interpretation. The means align with these medians (see Figure 6). Most participants likely could not determine which connections were wired or wireless. This uncertainty may partly result from visible cables (e.g., between button boards and microcontrollers). Since participants could not clearly distinguish wireless from wired connections, the system’s dynamic networking via the ambient reflection framework appears to have worked effectively. This is a positive outcome, as it suggests that the communication quality of the overall system remained perceptually consistent regardless of the underlying connection type.

At the end of the evaluation, participants were asked to suggest possible use cases beyond the teaching scenario examined in the study. Two people clustered the free-text responses independently and inductively (cf. [36]). Clusters that did not match were discussed until a final clustering was agreed upon. Inter-rater reliability was not calculated. The resulting two levels of categories are presented in Table 1. The complete responses are available in Appendix A. Many responses focused on using the system to teach factual or procedural knowledge, whereas others suggested purely entertainment-oriented games or team-building activities as potential use cases.

In general, the system’s technical architecture can also be used in other domains, and the case study represents only one conceivable example. Beyond games, the framework may also be valuable in contexts that do not involve learning content and in which the structure of the involved ensembles differs.

7. Discussion

The results yield several important conclusions. Despite the distributed and coordinated nature of the ensembles, most participants perceived the application as a cohesive and unified system rather than a collection of spatially dispersed, disconnected components. Additionally, participants generally found the system to be responsive and smooth: even though it relied on wireless communication and was distributed throughout the room, it reacted quickly to user interactions. Overall, the survey on user perception of the technical setup revealed predominantly positive feedback. The participants viewed the system as consistent, with appropriate response and feedback times. Nonetheless, the considerable variance in some responses indicates areas for potential improvement, as outlined in the previous section.

All in all, the key finding is that our approach appears to be suitable for implementing coordinated applications in smart environments. The system responds quickly enough, and it is possible to create distributed applications that are perceived as a single application.

Furthermore, the spatial approach appears to be an effective way to facilitate the flow of play. This makes the use of spatially distributed applications particularly appealing in the context of university teaching.

However, it has not yet been defined who, besides the developers, should be authorized to specify service coordination and how this information can be integrated into devices or their self-descriptions. In particular, the question of how to take a human-in-the-loop approach and distribute responsibility for certain system states and processes between humans and the system remains unanswered. To ensure usability, a user interface that allows end users to define their own orchestrations should be provided, similar to the rule-based programming options of common, centralized smart home platforms. One solution would be to extend the existing REST interface of the description mediator to transmit the coordination configurations to the participating devices. Alternatively, an agent-based control or configuration concept could be considered, in which autonomous software agents negotiate and implement coordination.

Furthermore, the question of how to provide information about event-based coordination through instructions or explanations remains unanswered. To this end, causal chains could be evaluated to explain the behavior of ensembles within smart environments in a comprehensible manner. The basis for this can be approaches published in the relevant literature (see Section 3.1).

Numerous explanations or instructions are conceivable using the information provided by our approach. Explanations can be based solely on the self-descriptions, without knowledge of the current state of the ensembles. One conceivable formulation for the digital learning space could be: “This station will continue with a dialogue after you have solved all tasks at all other stations”. In addition, the states of the components within the involved ensembles could be considered. Explanations constructed from the processed states and the given self-descriptions could, for example, read: “This dialogue appears because you answered all the questions at the other stations correctly”. Similarly, information about missing actions may be provided: “You cannot proceed until you have correctly solved all tasks. The tasks at stations A and B are still unsolved”.

A suitable rendering engine could be implemented in the description mediator to provide these instructions. This engine would take into account the information needed for the chosen explanation format and style.
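Template-based rendering is one simple way such an engine could produce instructions like the examples above from an “on all” orchestration. The function `render_explanation` and its parameters are hypothetical; a real engine in the description mediator would derive the required stations and their states from the self-descriptions and disseminated events.

```python
from typing import Dict, List, Optional


def _join(names: List[str]) -> str:
    # "A" / "A and B" / "A, B and C"
    if len(names) == 1:
        return names[0]
    return ", ".join(names[:-1]) + " and " + names[-1]


def render_explanation(action: str, required: List[str],
                       solved: Optional[Dict[str, bool]] = None) -> str:
    """Render an instruction for an 'on all' orchestration over `required` stations."""
    if solved is None:
        # Self-description only: explain the coordination rule itself
        return (f"This station will {action} after you have solved "
                "all tasks at all other stations.")
    still_open = [s for s in required if not solved.get(s, False)]
    if not still_open:
        # State-aware: explain why the effect occurred
        return (f"This station can now {action} because you have solved "
                "all tasks at the other stations.")
    # State-aware: point out the missing actions
    subject, verb = ("Station", "is") if len(still_open) == 1 else ("Stations", "are")
    return ("You cannot proceed until you have solved all tasks. "
            f"{subject} {_join(still_open)} {verb} still unsolved.")


print(render_explanation("continue with a dialogue", ["A", "B", "C"], {"C": True}))
# You cannot proceed until you have solved all tasks. Stations A and B are still unsolved.
```

Fixed templates keep the output predictable; a richer engine could select among explanation formats and styles, as suggested above.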

Notably, the chosen study approach entails some limitations, which are detailed in the following.

The framework extension was used to implement learning games in university teaching, which resulted in a limited user group in the evaluation. The studied group consisted of students, who are not representative of the general population. As a result, the case study results cannot be generalized. Further studies with other applications are necessary to understand the technical perceptions of a more representative group of users. Since the instrument used in this evaluation was not validated, biases introduced by the instrument itself cannot be ruled out. The use of a standardized instrument would have increased confidence in the validity of the survey results. However, a literature search revealed no suitable instruments, and the specific focus and structure of the survey make it difficult to apply a general validated instrument. Pott et al. [37] address this shortcoming by presenting the Spatial Computing Evaluation Framework, which, however, currently does not provide a quantitative questionnaire.

Furthermore, in addition to the examined user group and application, there are limitations regarding the interaction devices. The framework allows for a dynamic integration of various input and output devices, and the smart objects and ambient applications used in the study were dynamically discovered and connected at runtime. Yet, a fixed setup of interaction devices was chosen for the study. Future studies should investigate how the dynamic coupling of additional interaction devices, in conjunction with orchestration, affects user experience and usability.

8. Conclusions

In this paper, we presented a framework for coordinating ensembles of smart objects and ambient applications. Note that we use the term ambient application for applications that run within a smart environment and make use of the devices present in it. Towards this end, we introduced an extension to the smart object description language (SODL) that allows coordination events to be recorded. In doing so, reactions to disseminated state changes of other components in the environment can be both described and processed. We further presented a framework that makes use of these self-descriptions to realize the required message exchange. It also provides developers with a tool that makes it simple to respond to disseminated state changes and thereby realize service coordination between the ensembles. Our approach allows for decentralized coordination, eliminating the single point of failure inherent in centralized approaches. Our system supports dynamic connectivity between smart objects and applications, enabling mutual control. Additionally, it can automatically generate user instructions for these interconnected devices and applications.

This addresses our first research question of how self-explaining ensembles could be coordinated in a decentralized manner. The recording of coordination events within the self-description further addresses the second research question of how explanations of these event-driven actions could be provided. We have also discussed possible instructions and corresponding approaches that could be generated based on the self-descriptions, which may also take into account the current state of the components.

Finally, we conducted a user study to answer our third research question of how users perceive spatially coordinated ensembles realized based on our approach within the scope of a digital learning environment. The findings suggest that our approach is well suited for implementing coordinated applications within smart environments. The system demonstrates sufficient responsiveness and successfully supports the development of distributed applications that are perceived by users as a unified, cohesive system. However, limitations of the study approach include the restricted diversity of participants, the unvalidated study instrument, the chosen use case, and the devices and application involved in our setup. These shortcomings leave room for future studies, which should ideally be underpinned by objective measurement data. An analysis of input lag could provide valuable information, particularly with respect to responsiveness and fluidity.

Our approach represents an important step towards coordinated self-explaining smart environments. However, so far, only the interaction between users and ensembles has been explained. No tutorials or instructions have been implemented that cover the coordination of several ensembles or the question of why the system has reached its current state, which is not always, or not solely, caused by interactions. In the future, additional extensions of the framework and further studies are required in this regard. The description mediator should be modified to process the provided coordination events. Components that display instructions could take into account the current state of the involved components to provide rich instructions about the smart environment. To this end, cause-effect chains could be analyzed. In particular, how and where instructions should be displayed in smart environments are still open research questions.

More specifically for the examined use case, the pictorial instructions used in our study depict a static user interface because the evaluated device networks consist of identical input devices. The framework, however, also allows for dynamic instruction generation, which can be integrated into application user interfaces. In that case, adapting the instructions to the game interfaces should be considered. Further investigation is required to determine the extent to which these instructions should be based on the actual games and the extent to which the interactions for operating the tutorial are suitable.

In general, our framework can be applied to various application domains. Based on our study, we have outlined suggestions for different application domains. In addition to the shown example of smart learning environments, it can be used, for example, in interactive exhibitions in museums or public buildings. The system’s ability to coordinate different self-explaining ensembles within a smart environment enables the imparting and querying of knowledge through interfaces designed similarly to the ones used in our case study. However, completely different applications beyond these approaches are also conceivable.

Furthermore, more research is needed to clarify the questions of who should be authorized to establish specific rules for service coordination and how this information can be communicated to the devices and applications involved.
