1. Introduction

Reliable network monitoring is important to fully understand the state of the network and infrastructure within any organisation for real-time performance analysis and cyber threat intelligence. This allows the easy and proactive identification of any issues in network devices, applications, and services. Without this ability, both the network operations centre (NOC) and security operations centre (SOC) would be unable to efficiently and effectively perform data analysis. As a result, network and cyber security professionals have to rely on outdated network information or implement new measures blindly, and thus, the proposed solution may not be appropriate. Therefore, network analysis tools are critical to business operations, cyber security, and network scalability [1].

The role of a network monitoring tool within an organisation is typically event or metric collection by employing either simple network management protocol (SNMP) polling or performing syslog collection using a large number of distributed devices. Older tools that are still in use today are increasingly showing scalability problems; for example, older IT infrastructure may have grown beyond what system monitoring teams can manually support. This ultimately creates issues where the monitored and actual network states are likely not to be the same [2,3].

This creates an environment where monitoring tools are no longer trusted to provide a correct overview of the network or security monitoring state, which causes, for example, significantly higher response times to system issues or, on many occasions, issues being missed completely. Organisations are increasingly responding to this issue by incorporating expensive SaaS tools instead of investigating modern local alternatives [2]. In this paper, a new network monitoring architecture is proposed that is as efficient as its SaaS counterparts, as well as fully scalable, secure, and resilient.

1.1. Motivations and Contribution

It has been realised that organisations of any scale need cost-effective solutions for network security, monitoring, and management. The reference platform discussed in this paper will provide an efficient and reliable alternative solution that can easily be adjusted to meet the specific digital needs of an organisation. The proposed method seeks to address previously experienced issues with unreliable and aging network monitoring tools and platforms. Developing a modern, scalable solution while maintaining the locality of the proposed solution will reduce the supply chain risk posed by placing a high level of trust in external monitoring vendors. The proposed method can benefit organisations that are frequently experiencing real-time network observation issues in cases where existing network monitoring platforms are no longer functioning properly due to the organisation’s growth and persistent reliance on obsolete solutions. This leads to a lack of trust in network monitoring and threat detection tools. Within the organisation, secondary stakeholders are teams leveraging the platform, such as network and security operations teams who use the platform to efficiently perform data analysis and issue triage, as well as DevOps/infrastructure teams who will be able to easily deploy the platform due to the incorporation of GitOps and Infrastructure as Code (IaC).

1.2. Scope and Structure

This paper aims to propose a novel syslog collection and analysis architecture and tools that are resilient, secure, and efficient, incorporating modern techniques that are easily repeatable and deployable, e.g., GitOps and IaC, such that the project can be easily redeployed on desired platforms. This will be implemented by creating a reference architecture that could be easily used as a template and adjusted as needed. This would enable the easy deployment of the reference design into an organisation, providing an alternative to existing expensive and aging solutions.

This would also decrease the response time and increase the network monitoring efficiency across the organisation, streamlining network and security operations processes, as discussed in [4]. This study also aims to enable the successful and timely delivery of a reference syslog platform incorporating only open-source tools. Furthermore, it also provides a complete evaluation of the proposed system model against state-of-the-art competitors and further steps on how the system could be improved.

With the infrastructure, platform, and application delivered entirely as code, the proposed system can be deployed automatically in a repeatable manner. Overall, the proposed architecture will provide a useful reference deployment for any organisation seeking to deploy or analyse these systems. The proposed infrastructure will be accessible to smaller organisations without the dedicated platform and monitoring knowledge needed to design their own platform from scratch, allowing the system’s deployment where such systems would not typically be deployed, providing an alternative to Software as a Service (SaaS).

The viability of the platform will be ensured through the robust evaluation and testing of the platform in cases of failure. We also evaluated the resiliency and network stress as parameters to define the performance of the proposed platform. This was coupled with a user evaluation of how data can be searched and visualized to ensure that it is fit for our purposes. These requirements will be determined through the analysis and investigation of the current syslog monitoring ecosystem and the merits of each platform.

2. Related Work

It is crucial to understand the ever-increasing scale of the Internet and the underlying infrastructure to realise the importance of scalable network monitoring and maintain real-time observation over a network. Currently, 5.3 billion people, which is about 66% of the world population, actively use Internet applications and services [5]. This puts a huge load on the global Internet infrastructure. Cloudflare reported an overall increase in Internet traffic of 23% across its content delivery network in 2022. This has also been observed in consumer access providers, with Openreach seeing an unprecedented increase across 2019 to 2022 of 192%, i.e., from 22,000 PB to 64,364 PB [6].
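As a quick check of the Openreach figure quoted above, the growth rate can be recomputed from the two traffic volumes. The helper name `percent_increase` is purely illustrative; the result is consistent with the quoted 192% once rounded down.

```python
def percent_increase(old, new):
    """Relative growth from old to new, expressed as a percentage."""
    return (new - old) / old * 100.0


# Openreach traffic volumes quoted in the text, in petabytes (PB).
growth = percent_increase(22_000, 64_364)
print(f"{growth:.1f}%")  # 192.6%
```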

The prevalence of remote work in education and industry has increased in recent years, driven largely by the COVID-19 pandemic among other factors [7]. This has been enabled by rapidly improving last-mile infrastructure, with Openreach now serving Gigabit FTTP to over 10 million UK premises [6]. This allows consumers to be served with higher quality content at faster rates, from content providers and content delivery networks (CDNs) such as Cloudflare.

With these traffic increases, network complexity and scale have increased substantially to compensate for the new digital information load. This has created new challenges in network monitoring where existing tools are unable to handle the rate of data ingress or polling, and either fail or run behind, resulting in diminished network state monitoring [8]. This diminished state of network observability can compound into a large disparity between the true state and the state of the network being observed through the monitoring tools. This can lead to a wide spectrum of issues ranging from reduced performance to full incidents causing service outages and ultimately financial losses to the organisation [2].

When faults occur in systems, e.g., network servers, end devices, or security firewalls, system administrators may struggle to understand the issue if their monitoring tools are inefficient or outdated. This leads to a delayed and less directed response from the network operations team and subsequently a longer time to resolution. As a result, the speed and accuracy of the response are critical in both a network operations and a security operations context, where every second matters in trying to understand the issue before a decision and response can be implemented [9].

2.1. Existing Frameworks

To solve the speed and accuracy issues in network monitoring, two major types of tools are used to maintain observability over the network. These can be categorized as either syslog collection/analysis tools or SNMP polling platforms. Each serves a different purpose, and they are typically used in tandem to provide both syslog event analysis and externally polled state using the SNMP method.

The collection of syslog to a central location for aggregation and analysis is extremely important. Without a centralised aggregator, logs would have to be analysed on-device, which leads to problems such as the logs having been tampered with or the logs being inaccessible if the event caused a device or network failure. When the syslog analysis platform is properly deployed, it provides the NOC team with the ability to search the logs quickly and efficiently with a range of queries.

The syslog format is described in Request for Comments (RFC) 5424 by the Internet Engineering Task Force (IETF), which defines the protocol and the payload, allowing the syslog collection platform to split and index the logs by field. For instance, the hostname and IP address could be split from the log, allowing them to be filtered. This enables the NOC team to quickly filter the log records and effectively find particular system or security faults amongst potentially millions of log entries [10].
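To illustrate how a collector might split an RFC 5424 message into indexable fields, the following minimal sketch parses the header with a regular expression. It is not the parser used by any particular platform; the function name `parse_rfc5424` is hypothetical, and the structured-data handling is deliberately simplified (escaped `]` characters inside parameter values are not supported).

```python
import re

# Header layout defined by RFC 5424:
# <PRI>VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID STRUCTURED-DATA [MSG]
RFC5424_LINE = re.compile(
    r"^<(?P<pri>\d{1,3})>(?P<version>\d{1,2}) "
    r"(?P<timestamp>\S+) (?P<hostname>\S+) (?P<app_name>\S+) "
    r"(?P<procid>\S+) (?P<msgid>\S+) "
    r"(?P<structured_data>-|(?:\[.*?\])+)"
    r"(?: (?P<msg>.*))?$",
    re.DOTALL,
)

HEADER_FIELDS = ("timestamp", "hostname", "app_name", "procid", "msgid",
                 "structured_data")


def parse_rfc5424(line):
    """Split one RFC 5424 syslog line into a dict of named fields."""
    match = RFC5424_LINE.match(line)
    if match is None:
        raise ValueError("not an RFC 5424 message")
    fields = match.groupdict()
    # "-" is the NILVALUE, meaning the sender left the field empty.
    for key in HEADER_FIELDS:
        if fields[key] == "-":
            fields[key] = None
    # PRI encodes facility and severity as facility * 8 + severity.
    fields["facility"], fields["severity"] = divmod(int(fields.pop("pri")), 8)
    fields["version"] = int(fields["version"])
    return fields


# Example message adapted from the examples in RFC 5424.
record = parse_rfc5424(
    "<34>1 2003-10-11T22:14:15.003Z mymachine.example.com su - ID47 - "
    "'su root' failed for lonvick on /dev/pts/8"
)
print(record["hostname"], record["facility"], record["severity"])
```

Once each record is a dictionary like this, filtering by hostname or severity becomes a simple field comparison rather than a text search over raw log lines.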

In general, these syslog collection solutions are designed and deployed in a monolithic architecture, where one or two syslog servers collect and parse all incoming syslog from the network. This creates log entry points that are frequently prone to issues such as delays, especially with commonly deployed older products such as the SolarWinds Kiwi Syslog Server. This has led to newer products adopting a distributed architecture in which syslog is sent to multiple endpoints, as Cisco platforms typically allow up to four syslog servers to be configured [11].

These syslog collection and analysis platforms are typically coupled with a metric collection platform, which is often SNMP-based. Unlike syslog, SNMP utilises a collector-based architecture in which the tool reaches out to the device to collect data from the objects defined in the device’s Management Information Base (MIB). These tools are typically used to gather metrics from devices, which can then trigger alerts to prompt an action. This is typically information that is important but not a syslog event, e.g., temperature or optical light level metrics [12]. The role of an externally observing tool is also important to monitor failure events that could prevent a syslog message from being generated, such as a device going offline [13].

In summary, both syslog and SNMP monitoring tools are used to observe both device-generated syslog events and externally polled metric collection. Both monitoring tools can be summarised into roughly three groups of architecture and deployment type. Initially, these tools were typically designed and deployed in a monolithic manner. This would involve the tool being deployed as an appliance or installed onto an operating system (OS) image. An example of an SNMP polling tool utilising this architecture is LibreNMS, which recommends deployment onto a Linux host [14]. On the other hand, with syslog collection tools, an example would be the SolarWinds Kiwi Syslog Server, installed similarly onto a single host [15]. While both are easy to deploy, the resulting monolithic monitoring stack lacks resilience and is prone to failure. They also commonly exhibit scalability problems due to their monolithic and agent-based nature.

A common example of a modern and widely deployed analysis platform is Cisco Splunk, which is often used by small- and medium-sized business enterprises (SMBEs) to provide scalable ingestion, indexing, and analysis. Splunk is commonly used for large-scale data analysis of a wide variety of datasets but is mostly used for network and security log analysis.

This has led to the creation of two modern categories of tools, SaaS and scalable open source stacks. Both present significant advantages and disadvantages. For example, SaaS tools can provide an excellent feature set, but most SaaS SNMP monitoring tools, such as LogicMonitor and Auvik, do not offer on-premise deployment, creating a reliance on their cloud services and an inability to use their product in an offline threat intelligence environment. This issue is shared by SaaS syslog collection platforms, several notable examples of which do not offer on-premise deployments. SaaS monitoring tools are also expensive and are charged per device or by volume of log ingestion, resulting in high licensing costs in large deployments/networks.

To combat this issue, some organisations choose to deploy a third category of tools that incorporate multiple components to deploy an in-house monitoring stack. Examples of this include the Elasticsearch, Logstash, Kibana (ELK) Stack used for log collection/analysis, and the Prometheus and Grafana [16] stack for SNMP/metric monitoring. These stacks provide a highly scalable and resilient monitoring stack at the cost of complexity, because these tools are best deployed on complicated platforms such as Kubernetes, which results in companies leaning toward SaaS options instead of an internal platform. When deployed and managed correctly, an in-house platform can provide a highly scalable and resilient monitoring stack incorporating modern principles like GitOps and IaC.

2.2. Contributions

Reflecting on the issues identified with the different types of monitoring stacks, it is clear that the barrier to entry to deploying scalable in-house monitoring stacks is too high, leading to the preference for SaaS products for many organisations.

This paper focuses on designing and implementing a syslog analysis stack incorporating modern principles such as IaC to define the entire stack in Git. Furthermore, IaC also brings other benefits such as repeatability and consistency of build and deployment, which will significantly reduce human errors and deployment time [9].

This platform-agnostic approach enables the core system to be used as a template, requiring only minor changes for deployment on any cloud and system platform.

The entire platform and application will be managed and deployed using the modern deployment principles identified, such as GitOps, enabling changes to be continuously integrated into the platform. This is typically realised with an agent configured onto the platform, such as ArgoCD or Flux, which deploys resources defined in the repo to the cluster/platform to avoid downtime and interruptions to the application [17].

The end users benefit from this by permitting the entire infrastructure, platform, and application to be managed centrally through a version-controlled approach in line with modern development. This enables easy management of the platform for NOC and SOC.

A strong emphasis on security is considered in the proposed architecture, ensuring the infrastructure, platform, and application are protected and secured in line with the best practices identified in state-of-the-art technologies.

The proposed architecture addresses the identified problems with monolithic and SaaS monitoring stacks so that organisations can easily understand and deploy an internal syslog collection stack onto an internal platform of their choice. This lowers the barrier of entry to these systems and provides a viable alternative to SaaS products/platforms.

3. System Model

A clear knowledge gap in the industry is evident through the careful analysis of the existing ecosystem of monolithic and SaaS platforms. This gap is mostly created through the complexity of deploying scalable local stacks. This will be addressed by the creation of a reference local stack addressing this gap in the market.

This section will analyse the use of the platform and the relevant technical requirements. We also identify and analyse the candidate tools for each layer of the proposed reference stack, which can be described in terms of Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS) [18].

Many organisations are transitioning from traditional monolithic solutions to newer monitoring systems in which SaaS is preferred due to several identified issues with monolithic solutions. SaaS solutions often have highly predictable and well-defined costs. For instance, in the case of both Splunk and the Elastic Stack, pricing is transparently defined without any hidden costs. The subscription-based pricing model makes usage costs easy to predict and allows them to be classified as an operating expense, rather than the capital expenditure that a local solution would incur.

Another major factor is the skills required for implementation. It requires a high level of knowledge and skills to create a modern infrastructure, platform, and application stack to run an in-house solution based on open source projects. This incurs additional costs, as expensive contracts or in-house staff would be required to design and deploy the solution. Notably, with the Elastic Stack, Elastic Co also offers its own managed and SaaS-hosted Elastic Stack solutions due to the complexity and knowledge required for deploying the Elastic Stack. This creates two main requirements. Firstly, we need to avoid high capital expenditure by designing the solution around high-value cloud services. Secondly, we need to keep the platform simple using existing tools and techniques to ensure it can be easily configured and deployed.

3.1. Infrastructure

The lowest-level component of the stack is the underlying infrastructure provider. This will provide the infrastructure on which the platform is configured, and multiple infrastructure resources will be required. This will include a computing resource, such as a virtual private server (VPS), which provides a virtualized Linux host on the cloud platform. Secondly, a private network resource is required that enables a private backend network between the computing nodes.

A load balancer is also required to distribute incoming transmission control protocol (TCP) traffic across the nodes. On top of this, all resources should be deployable through an application programming interface (API) or a provider for the chosen infrastructure as code tool. For this purpose, Hetzner is a suitable provider for the proposed network monitoring platform. It is important to note that while a specific IaaS provider is used for this implementation, the self-hosted nature of the architecture ensures it is not locked to a single vendor. The platform is designed to be portable and could equally be deployed on other IaaS providers, an on-premise private cloud like OpenStack, or local bare-metal hardware. Here, Hetzner provides a competitively priced cloud hosting platform that offers computing, load balancing, networking, and, most importantly, IaC integration.
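The load balancing requirement above can be pictured with a small selection sketch. This is only an illustration of round-robin distribution with health awareness, not a description of how the Hetzner load balancer is implemented; the class name and the node names are hypothetical, and no real sockets are opened.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out backend nodes in turn, skipping nodes marked unhealthy."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.healthy = set(self.nodes)
        self._ring = cycle(self.nodes)

    def mark_down(self, node):
        self.healthy.discard(node)

    def mark_up(self, node):
        if node in self.nodes:
            self.healthy.add(node)

    def pick(self):
        """Return the next healthy node for an incoming TCP connection."""
        if not self.healthy:
            raise RuntimeError("no healthy backend nodes")
        # One full turn of the ring is enough to reach any healthy node.
        for _ in range(len(self.nodes)):
            node = next(self._ring)
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy backend nodes")


balancer = RoundRobinBalancer(["node-1", "node-2", "node-3"])
before_failure = [balancer.pick() for _ in range(4)]
balancer.mark_down("node-2")  # simulate a failed health check
after_failure = [balancer.pick() for _ in range(3)]
print(before_failure, after_failure)
```

With one node marked down, incoming connections continue to be spread across the remaining nodes, which is the behaviour that lets syslog ingestion survive a single node failure.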

As Hetzner has a much smaller scope of products than larger services such as Amazon Web Services (AWS) and Google Cloud, pricing is much more competitive, as this is mainly just a computing resource provider [19]. Interestingly, Hetzner offers ARM-based server instances that provide exceptionally high value for capital investment and will be used in this framework [20].

3.2. Deployment Pipeline

A declarative language for defining infrastructure as code was required to define the infrastructure configured in this study. This means that resources and their provisioning configurations are defined as code. The definitions cover the required nodes/VPS, private network, and load balancer from Hetzner.

There are multiple options available for this layer. The only requirement is that it can run from GitHub Actions [21] and offers integration for the Hetzner Cloud. It also needs to offer an external state, which means the state of the infrastructure exists outside of the pipeline. If an external state is not used, the tool will have no knowledge of previous runs or deployments [22].
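The need for external state can be illustrated with a toy model. The sketch below is not how Terraform stores or compares state; it assumes a JSON file standing in for the external state store, and the resource names and function names are hypothetical. It shows that a fresh pipeline runner with access to the recorded state plans no changes, while a runner without it would try to create everything again.

```python
import json
import tempfile
from pathlib import Path


def load_state(path):
    """Return the set of resource names recorded by earlier runs."""
    if path.exists():
        return set(json.loads(path.read_text()))
    return set()


def plan(desired, state):
    """Compare the desired resources with the recorded state."""
    return {
        "create": sorted(desired - state),
        "destroy": sorted(state - desired),
    }


def apply(desired, path):
    """Plan against the external state, then record the new state."""
    changes = plan(set(desired), load_state(path))
    path.write_text(json.dumps(sorted(desired)))
    return changes


desired = {"node-1", "node-2", "private-network", "load-balancer"}
with tempfile.TemporaryDirectory() as tmp:
    external_state = Path(tmp) / "state.json"  # stand-in for a remote store
    first_run = apply(desired, external_state)
    second_run = apply(desired, external_state)  # new runner, same state
    stateless_run = plan(desired, set())  # new runner with no state at all

print(first_run, second_run, stateless_run, sep="\n")
```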

Research into available options for IaC definitions was required to deliver the goal of a simple, maintainable, declarative infrastructure. Terraform [23] was used due to the amount of documentation and its widespread use in the industry. Pulumi offers some interesting functionality, such as the use of Python 3.9 syntax instead of the HashiCorp Configuration Language, but the Hetzner module seems poorly maintained. Choosing Terraform also allows the usage of Terraform Cloud, which stores the infrastructure state externally and therefore allows Terraform to run in the disposable pipeline VMs used by GitHub Actions [24].

3.3. Configuration Management

Once the infrastructure has been deployed onto Hetzner by Terraform, a configuration tool is required. This serves a slightly different purpose from the IaC tool. Instead of specifying the infrastructure state, configuration as code tools are used to specify a desired configuration state [25]. For example, this can be used to define a list of users that we create on a Linux host. The methodology involved two phases. First, a configuration tool was used for initial setup purposes: deploying the base configuration, updating the VPS, and SSH hardening. Second, this established base was used to bootstrap the container deployment and subsequent tasks.
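The idea of a desired configuration state can be sketched with a small idempotent helper. This is an illustration of the principle rather than the behaviour of any specific configuration tool; the function name `ensure_option` and the sample SSH daemon settings are assumptions chosen for the example. Running it twice yields the same file, and the second run reports that nothing changed.

```python
def ensure_option(config_text, key, value):
    """Return (new_text, changed) so that exactly one 'key value' line exists."""
    wanted = f"{key} {value}"
    lines, seen = [], False
    for line in config_text.splitlines():
        tokens = line.split()
        if tokens and tokens[0] == key:
            if not seen:
                lines.append(wanted)  # rewrite the first definition in place
                seen = True
            continue  # drop any duplicate definitions of the same key
        lines.append(line)
    if not seen:
        lines.append(wanted)  # the option was missing, so append it
    new_text = "\n".join(lines) + "\n"
    return new_text, new_text != config_text


original = "Port 22\nPermitRootLogin yes\n"
hardened, changed_first = ensure_option(original, "PermitRootLogin", "no")
hardened_again, changed_second = ensure_option(hardened, "PermitRootLogin", "no")
print(changed_first, changed_second)  # True False
```

Describing the end state rather than the steps to reach it is what makes repeated pipeline runs safe: a host that already matches the definition is left untouched.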

There are quite a few options available for this on the market, notably Ansible [26], Chef, and Puppet. These vary slightly, with Ansible using YAML (YAML Ain’t Markup Language), a format often used for writing configuration files, together with an “agentless” approach in which the system is configured using SSH. This provides significant benefits over Chef and Puppet, which use Ruby and Puppet Language, respectively. The SSH-based configuration allows Ansible to interact with the host without installing an agent on it, unlike Chef and Puppet [25].

3.4. Container Orchestration

Once the base configuration and system hardening configuration have been deployed by Ansible, the application will be deployed as containers running on this platform. It needs to handle tasks such as deployment, scheduling, checking the health of the application containers, and providing distributed storage to the application. The main requirement here is a CI/CD agent. This means all of the above tasks can be specified in a GitHub repo, which will be continually deployed to the cluster. This allows the proposed framework to incorporate modern GitOps principles [17].

Due to the complexity of the task, only two major options are available, i.e., Kubernetes and Docker Swarm. Docker Swarm is an orchestration platform built around the Docker container runtime. However, this is not commonly used in larger-scale environments. Here, Kubernetes has become the obvious choice as this is the platform chosen by most of the industry and is used by most of the managed container services [27,28].

Kubernetes has proven its effectiveness in providing scalable and reliable network monitoring in unconventional and challenging environments, as shown by the development of a Kubernetes-based platform for large-scale IoT power infrastructure monitoring. This platform demonstrates excellent scalability in monitoring large-scale environments with low latency [29]. It has also demonstrated excellent capabilities in the context of network and security monitoring. Recent work integrating P4-based network telemetry with Kubernetes orchestration-based platforms in edge micro-data centres demonstrated significant improvements in real-time traffic monitoring and service-level agreement compliance through efficient latency detection and automated network reconfiguration [30].

Recent advancements in anomaly detection for Kubernetes infrastructures highlight the effectiveness of AI classification techniques for monitoring platforms. An implemented system utilising autoencoder models demonstrated significant potential in reducing manual network monitoring efforts against a time series dataset of distributed denial of service attack mitigation data [31].

On top of Kubernetes, a couple of CI/CD agents are available, e.g., ArgoCD and Flux [32]. Here, ArgoCD is a highly complicated tool that provides a large number of features, e.g., automated deployment, automated configuration, health status analysis, etc., of which only a few would be utilised in the proposed method. Following the goal of simplicity for the system model proposed in this paper, Flux was used. This agent checks for changes to resources in the GitHub repo, and then performs relevant rollouts to ensure these are deployed without downtime [17].
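The change detection performed by a GitOps agent can be approximated by a reconciliation function that compares what the repository declares with what is running. The sketch below is a simplified model and not Flux's actual implementation; the function name, the resource names, and the image tags are hypothetical.

```python
def reconcile(desired, live):
    """List the actions needed to make the live objects match the repo."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in live:
            actions.append(("create", name))
        elif live[name] != spec:
            actions.append(("update", name))  # drift or a new commit
    for name in sorted(set(live) - set(desired)):
        actions.append(("prune", name))  # removed from the repo
    return actions


# Manifests as declared in Git (hypothetical values).
desired = {
    "loki": {"image": "example/loki:v2", "replicas": 3},
    "grafana": {"image": "example/grafana:v1", "replicas": 1},
}
# Objects currently present on the cluster (hypothetical values).
live = {
    "loki": {"image": "example/loki:v1", "replicas": 3},
    "old-job": {"image": "example/job:v1", "replicas": 1},
}

actions = reconcile(desired, live)
print(actions)
# [('create', 'grafana'), ('update', 'loki'), ('prune', 'old-job')]
```

In a real cluster the resulting updates would be handed to the orchestrator, which replaces containers gradually so that the application stays available during the rollout.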

3.5. Syslog Collection

Once the platform is fully designed and the Flux CI/CD agent is configured, the application is deployed for further investigation. The application performs the functions of log ingestion, indexing, and visualization, which assist the analyst in quickly searching through logs to find artefacts of use. There are two main options here for scalable open source log analysis stacks, namely the ELK Stack and the newer Grafana Loki Stack [33]. Both have multiple components performing storage, indexing, and search, connected to a visualization/search frontend.

This is where the proposed architecture deviates from the existing industry framework, i.e., instead of choosing the commonly used ELK stack, the Loki stack will be adopted. Recently, Elastic changed the project’s license from Apache 2.0 to the Server Side Public License (SSPL), a concerning move that suggests the company is heavily pushing its SaaS solution instead of fostering the open source nature of the project. This has caused major issues for commercial users of Elastic such as managed service providers AWS, Google Cloud Platform (GCP), Logz, etc., and has put the future stability of open source use in jeopardy [34]. To address this shortcoming, the Grafana Loki platform will be used instead of ELK. This stack requires fewer resources than the full ELK Stack and has the benefit of integrating into Grafana, giving an analyst using the platform a single pane of glass showing both existing Prometheus SNMP metrics and Loki syslog collection. This will substantially improve observability because multiple tools do not have to be monitored [35].

3.6. Security of the Tool

The security requirements of the design should be considered in order for the solution to be fit for its purpose. A comprehensive security stance will be implemented in the proposed architecture to minimise points of failure. This will start with the infrastructure layer by implementing measures such as VPC networking to isolate the Kubernetes backend network. Inbound firewalls are also used to ensure access control for any privileged ports required, such as SSH. This will be combined with host-level security for the Kubernetes nodes, with guidelines followed to implement SSH security and automatic unattended security patching [36].

The security of the GitHub Actions platform will be ensured by utilising GitHub Actions Secrets to securely access any required API keys and secrets during the pipeline, thereby minimising the chance of accidental exposure in logs [37]. To further enhance security, the Kubernetes platform and application stack will implement safeguards against common Kubernetes issues and misconfigurations. These will include proper pod namespace limits and namespace separation, while minimising privilege with pod-specific security context being applied [38]. The software supply chain is also strongly considered, with scanned container images used to safely deploy the application stack. The application stack supports policies such as single sign-on and comprehensive audit logging and is accessed over transport layer security (TLS) encryption, with certificates provided by Cert-manager [39].
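The secret handling described above rests on two habits: reading secrets from the environment rather than from files in the repository, and masking their values before anything is written to a log. The following sketch illustrates both under stated assumptions; the variable name `EXAMPLE_API_TOKEN`, its value, and both helper functions are hypothetical and are not taken from the pipeline in this paper.

```python
import os


def load_secret(name, environ=os.environ):
    """Fetch a required secret from the environment, failing loudly if absent."""
    value = environ.get(name)
    if not value:
        raise KeyError(f"required secret {name} is not set")
    return value


def redact(message, secrets):
    """Replace every occurrence of a secret value before logging a message."""
    for secret in secrets:
        if secret:
            message = message.replace(secret, "***")
    return message


# A plain dict stands in for the environment populated by the pipeline.
fake_environ = {"EXAMPLE_API_TOKEN": "s3cr3t-token"}
token = load_secret("EXAMPLE_API_TOKEN", fake_environ)
log_line = redact(f"calling provider API with token {token}", [token])
print(log_line)  # calling provider API with token ***
```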

A threat mitigation matrix, Table 1, was developed to identify specific risks across all layers of the system, from infrastructure provisioning to log storage and CI/CD automation. This matrix outlines key threats, their impact, and corresponding mitigation strategies, ensuring that technical countermeasures are directly tied to the unique risks posed by each tool and platform component.

To further validate the security posture of the underlying host environment, a privilege escalation simulation was conducted. This test involved the creation of an unprivileged user account on a Kubernetes node, from which the LinPEAS automated scanner was executed to identify potential vulnerabilities and misconfigurations. The results of the scan did not identify any high-risk or easily exploitable vectors for privilege escalation. In addition to internal security validations, the external attack surface was tested using a Nessus vulnerability scanner. The Nessus scans revealed no major exploitable issues, supporting the robust external security posture of the platform and the security of the Grafana container. This could be further enhanced by putting platform access behind a Web Application Firewall (WAF). While the LinPEAS tool highlighted several “less probable” Common Vulnerabilities and Exposures (CVEs) and noted that security features such as Seccomp were disabled, the combined tests indicate that the baseline hardening measures effectively mitigate common privilege escalation paths.

Overall, this provides an opinionated and strong security stance, comprehensive across the entire platform, which allows safe and trustworthy usage in an enterprise context.

3.7. Prototype Development and Methodology

When all of the above stages are implemented, standard Git practices will be followed. This will ensure that changes are properly tracked, allowing for reproducible builds of the platform. The proposed framework will be split into two branches, i.e., a production branch and a staging/test branch. The production branch is treated as a live production environment, where a build of the production branch will be deployed. The main development work will take place in the staging branch, which will be merged into the production branch after the completion and validation of major functionalities and developments. This will ensure that both development and stable testing environments remain available throughout the project, mirroring real-world deployment of GitOps and CI/CD [40].

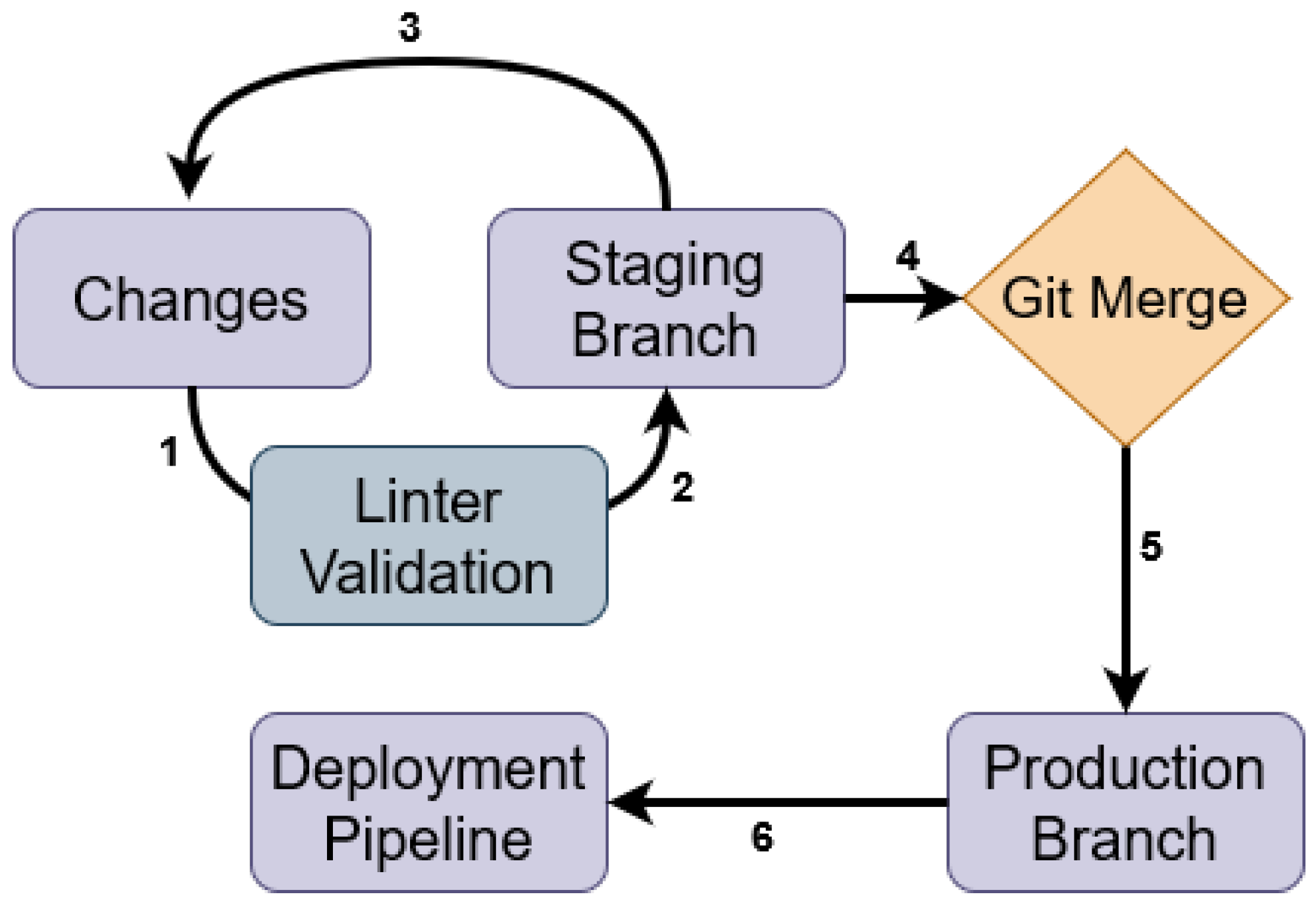

Figure 1 shows the deployment logic in which components 1, 2, and 3 represent the iterative Git workflow used in the project to change, lint, and push code. Once the staging branch is brought to the desired state, a Git merge is performed in component 4. Merging the staging branch to the production branch is completed in component 5. Once merged with the production branch, the deployment pipeline triggers the deployment of the desired infrastructure.

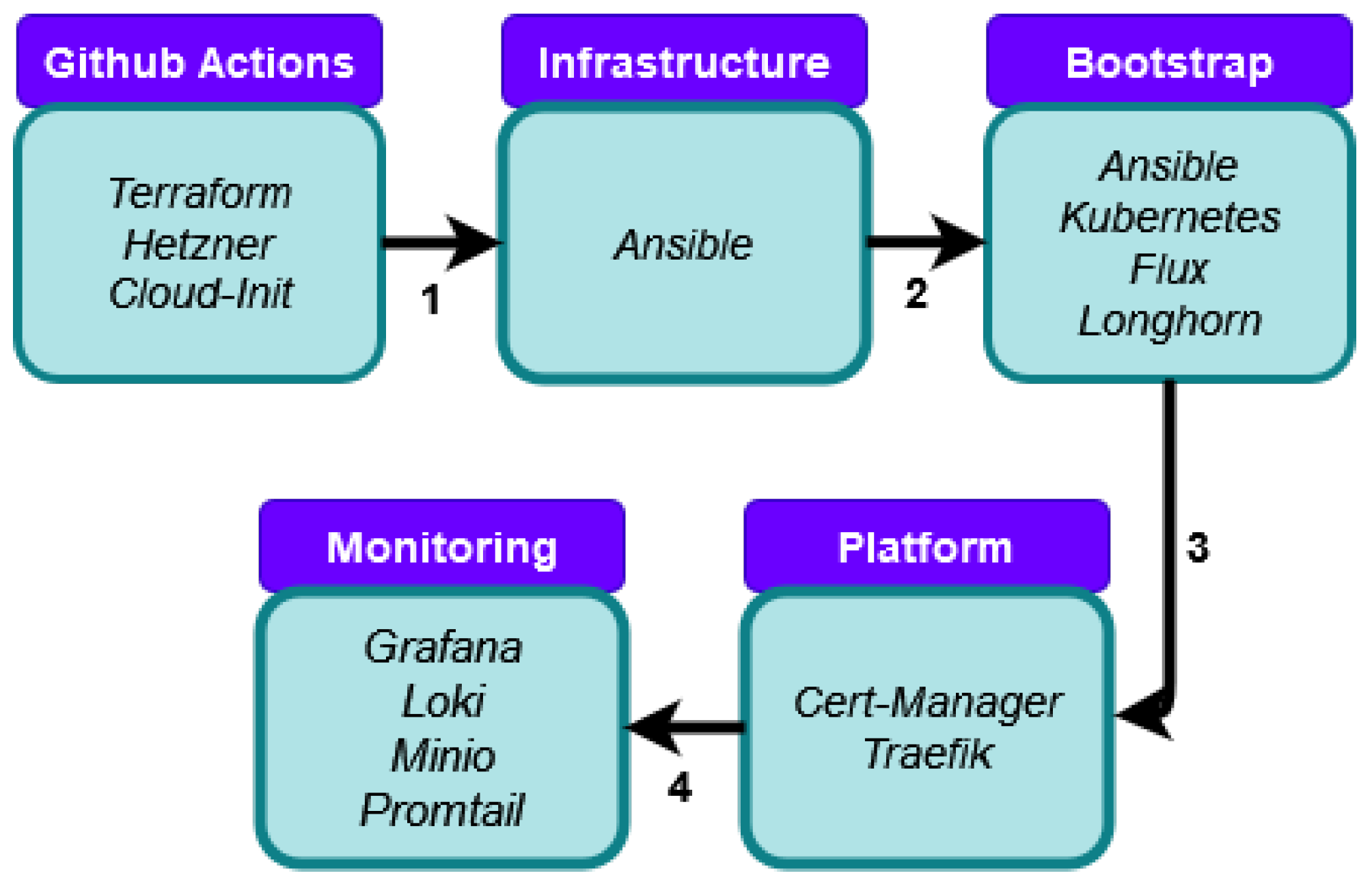

Figure 2 demonstrates the work process of the project, which highlights how the staging branch undergoes iterative development. This will then be Git-merged into the production branch, which will trigger the GitHub Action to deploy the infrastructure.

In conclusion, the full stack was carefully chosen to incorporate the goals of minimising vendor lock-in while ensuring alignment with popular projects and documentation. The platform utilises Hetzner, Terraform, Ansible, Kubernetes, and Grafana Loki, enabling the design and build of a scalable, high-availability network syslog aggregation stack.

4. Proposed System Architecture

In this section, we will cover the actual implementation and configuration of the tools discussed in the previous section. Therefore, we will cover the individual tool configurations and contextualise this with how they are utilised in the pipeline to bootstrap the infrastructure and platform and deploy the final log analysis application. Due to the high level of complexity of the proposed methods, this section will cover the implementation at a relatively high level of abstraction.

Figure 2 describes the high-level deployment process completed in the pipeline. First, Stage 1 deploys the Terraform definitions to Hetzner and provides the Cloud-Init seed configuration. Once Stage 1 is completed, Stage 2 uses Ansible to apply the base host configuration to the infrastructure. This is followed by the platform bootstrap in Stage 3, where Ansible deploys Kubernetes and the base CRDs. Following this, Stage 4 uses Flux to deploy the rest of the platform, including Cert-Manager [39] and Traefik [41]. Finally, the log application stack is deployed automatically by Flux in Stage 5.

4.1. Pipeline: GitHub Actions

The most important part of the project is the GitHub Actions pipeline, which creates the build environment from which the tools run. The action is configured to run when changes are merged into the master branch, following best practices to continuously integrate and deploy the master branch [40].

The pipeline configuration is specified in the actions.yaml file located in “.github/workflows”. Here, actions.yaml is a declarative configuration describing the build stages that deploy the project. The pipeline is configured to deploy from the master branch, ignore the Kubernetes resource file, and set the working folder to the Terraform directory. The first stage loads secrets from the GitHub Actions Secrets configured in the repository, which store the configuration and API keys and provide secure handling of secrets following the best security practices [37]. These secrets populate the deployment variables, such as the SSH keys, the API keys, and miscellaneous variables such as the domain. The SSH keys are loaded directly into the SSH agent, avoiding insecure handling. The pipeline then initialises Terraform Cloud using an API key, executes a Terraform plan, applies the configuration, and finally launches the Ansible playbook.
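The stage ordering described above can be sketched in Python. This is a minimal illustration rather than the project's actual workflow file: the secret names, the inventory path, and the playbook name are hypothetical placeholders, and the commands are only assembled as lists, not executed.

```python
import os

# Hypothetical secret names (assumption): the source only states that SSH keys,
# API keys, and the domain are stored as GitHub Actions Secrets.
REQUIRED_SECRETS = ("TF_API_TOKEN", "HCLOUD_TOKEN", "SSH_PRIVATE_KEY", "DOMAIN")


def load_secrets(env=None):
    """Return the required secrets, failing early if any is missing or empty."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_SECRETS if not env.get(name)]
    if missing:
        raise RuntimeError("missing secrets: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_SECRETS}


def pipeline_commands(inventory="inventory.ini", playbook="site.yml"):
    """Ordered commands mirroring the init, plan, apply, and Ansible sequence.

    The inventory and playbook file names are illustrative assumptions.
    """
    return [
        ["terraform", "init", "-input=false"],
        ["terraform", "plan", "-input=false"],
        ["terraform", "apply", "-input=false", "-auto-approve"],
        ["ansible-playbook", "-i", inventory, playbook],
    ]
```

Checking for missing secrets before any Terraform command runs means a misconfigured repository fails at the first stage instead of part-way through an apply.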

4.2. Infrastructure: Hetzner, Terraform, and Cloud-Init

When the pipeline triggers the Terraform stages, the Terraform planning stage occurs first. This loads the Terraform providers defined in providers.tf, which are comparable to libraries for interacting with services [42]. In this case, the Hetzner [43] and Cloudflare [44] providers are loaded, which allows interaction with both Hetzner and Cloudflare. The “remote” state is also configured by defining the use of Terraform Cloud, which stores the infrastructure state between runs. The providers are initialised using variables defined in variables.tf, which defines the node count variable, the relevant API keys, and the Cloudflare DNS zone IDs. Once completed, Terraform interprets main.tf, which contains resource definitions for deploying the base infrastructure. This is further discussed in Table 2.

These resource definitions are interpreted by Terraform, which then makes requests to Hetzner to bring the Terraform state in line with the defined Terraform resources.

When requesting the VPS Nodes, a Cloud-Init file is sent. This file contains an initial bootstrap configuration disabling password authentication and defining two user accounts with SSH keys.
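As a rough illustration of such a seed file, the sketch below renders a cloud-config document that disables SSH password authentication and defines user accounts with SSH keys. The user names and key strings are placeholders, not the project's values. The body is emitted as JSON, which is also valid YAML, so that the example needs only the standard library.

```python
import json


def render_cloud_config(users):
    """Render a cloud-config document from a {username: public_key} mapping."""
    config = {
        "ssh_pwauth": False,  # disable SSH password authentication
        "users": [
            {"name": name, "ssh_authorized_keys": [key]}
            for name, key in users.items()
        ],
    }
    # cloud-init expects the "#cloud-config" header on the first line.
    return "#cloud-config\n" + json.dumps(config, indent=2) + "\n"


if __name__ == "__main__":
    # Placeholder accounts and keys (assumptions for illustration only).
    print(render_cloud_config({
        "admin": "ssh-ed25519 AAAA-placeholder admin",
        "deploy": "ssh-ed25519 BBBB-placeholder deploy",
    }))
```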

4.3. Infrastructure: Ansible

In the final stage of the Terraform apply, an Ansible inventory is created. This contains the labelled hostnames of the created nodes, which are ready for configuration to be applied. Ansible is a play-based configuration tool, which means that it runs sequentially through the hosts and the playbook stages, rather than attempting to apply an entire state as Terraform does. The pipeline starts by applying the main Ansible playbook with the variables that select the SSH keys and the login user. The main playbook defines which roles to apply to which labelled hosts, i.e., different configurations can be selected and applied per host.
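The hand-off from Terraform to Ansible can be pictured as rendering a labelled inventory from the created hostnames. The sketch below is an assumption about the shape of that file: the group labels and hostnames are hypothetical, and the project may use a different inventory format.

```python
def render_inventory(groups):
    """Render an INI-style Ansible inventory from {group_label: [hostnames]}."""
    lines = []
    for group, hosts in groups.items():
        lines.append(f"[{group}]")  # group label that the playbook targets
        lines.extend(hosts)
        lines.append("")  # blank line between groups
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical labels and hostnames, for illustration only.
    print(render_inventory({
        "cluster": ["node-1", "node-2", "node-3"],
        "test": ["test-1"],
    }))
```

Grouping hosts by label is what lets the main playbook apply different roles to the cluster nodes and to the test host.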

The common and hardening Ansible roles are applied to all hosts. The common role applies a base configuration, such as updating and upgrading packages and enabling unattended upgrades. The hardening role implements standard SSH hardening techniques, such as disabling root login and removing insecure moduli, following NIST hardening guidance [36]. Once completed, the Ansible playbook starts the process of bootstrapping Kubernetes and Flux CI/CD.

4.4. Platform: Kubernetes, Flux, and Longhorn

To deploy the Kubernetes platform, an Ansible role performs the initial bootstrap of the chosen Kubernetes distribution. The role selects the first node in the inventory, runs cluster-init on it, and specifies the backend networking interface and the token used to join the cluster. In this process, each node is defined to be both a control plane and a worker node [45].

Once the initial node has been bootstrapped, the remaining nodes are joined to the cluster, completing the initial cluster bootstrap of a three-node Kubernetes cluster. An additional stage installs Longhorn onto the cluster. Longhorn [46] provides a replicated storage volume that permits configuration volumes to persist across the entire cluster, which will ultimately increase the resilience.
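The bootstrap order described above (initialise the first node, then join the rest) can be sketched as follows. This assumes K3s as the distribution and uses its documented server flags; the IP addresses, token, and interface name are placeholders, and the actual Ansible role may pass these options differently.

```python
def k3s_server_commands(node_ips, token, iface):
    """Map each node IP to the k3s server command it would run.

    The first node initialises the cluster; every other node joins it as an
    additional server, so all nodes act as control plane and worker.
    """
    if not node_ips:
        raise ValueError("at least one node is required")
    first, rest = node_ips[0], node_ips[1:]
    common = ["--token", token, "--flannel-iface", iface]
    commands = {first: ["k3s", "server", "--cluster-init", *common]}
    for ip in rest:
        commands[ip] = ["k3s", "server", "--server", f"https://{first}:6443", *common]
    return commands


if __name__ == "__main__":
    # Placeholder addresses, token, and interface (assumptions).
    for ip, cmd in k3s_server_commands(
        ["10.0.0.2", "10.0.0.3", "10.0.0.4"], "example-token", "eth1"
    ).items():
        print(ip, " ".join(cmd))
```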

After the cluster bootstrap and Longhorn installation have been completed, an additional role is applied to install Flux. The Flux role installs the Flux CI/CD agent onto the cluster with a configuration to deploy all application resources. This provides the continuous integration and deployment functionality of the proposed platform.

4.5. Platform Ingress: Cert-Manager and Traefik

For the application to be made securely available on the cluster, further configuration is required. Two components are needed for Kubernetes to route incoming web requests from the load balancer to an application container, i.e., an Ingress Controller and a Certificate Manager. An Ingress Controller is a Kubernetes-aware reverse proxy that routes incoming web requests from the load balancer to the application container. Traefik is used for this purpose because it is the default option in the K3s [47] Kubernetes distribution. A configuration override is applied to enable a permanent TLS redirect, forcing all ingress traffic to use TLS encryption.

This creates the requirement for issuing certificates for the domain. Therefore, Cert-Manager is deployed using a Helm Chart to handle certificate issuance and renewal. Additional configuration is deployed to allow Cert-Manager to use the Cloudflare API to complete DNS challenges, together with resources defining a wildcard certificate for the domain name.
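For illustration, a wildcard certificate resource of this kind could be built as shown below. This is a sketch using the cert-manager.io/v1 Certificate schema; the resource names, namespace, and issuer name are hypothetical, and the source does not state which issuer is used.

```python
import json


def wildcard_certificate(domain, issuer="dns-issuer", namespace="default"):
    """Build a Certificate manifest covering the domain and its wildcard.

    The issuer is assumed to be a ClusterIssuer with a Cloudflare DNS-01 solver.
    """
    return {
        "apiVersion": "cert-manager.io/v1",
        "kind": "Certificate",
        "metadata": {"name": "wildcard-cert", "namespace": namespace},
        "spec": {
            "secretName": "wildcard-cert-tls",  # Secret that receives the key pair
            "dnsNames": [domain, f"*.{domain}"],
            "issuerRef": {"name": issuer, "kind": "ClusterIssuer"},
        },
    }


if __name__ == "__main__":
    print(json.dumps(wildcard_certificate("example.com"), indent=2))
```

A DNS challenge is needed here because wildcard names cannot be validated through an HTTP challenge.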

4.6. Application: Grafana, Loki, Minio, and Promtail

Once Cert-Manager and Traefik have been deployed, the final application stack can be configured. This consists of Grafana [16], Loki [33], Promtail, and Minio. Here, Grafana provides the visualization and querying frontend. This is deployed from a Helm Chart, which also specifies the admin user and a bcrypt hash for the password. The configuration also adds Loki as a data source, utilises a Longhorn volume for persistent storage, and applies Traefik labels to enable Grafana access through the ingress controller.

Loki provides the data search and indexing backend to Grafana, similar to Elasticsearch, and is likewise deployed with a Helm Chart. The chart also specifies that the Minio child chart should be deployed, which provides the S3 bucket storage backend in which Loki stores the logs. Both Loki and the child Minio deployment have a specified replica count, i.e., they are highly available on all nodes.

To ingest syslog into Loki and make it searchable in Grafana, Promtail has to be integrated. Promtail is the Loki equivalent of Logstash and Filebeat. It is also deployed with a Helm Chart, which specifies the relevant Kubernetes and scrape configurations.

4.7. Log Shipping: Rsyslogd

To finalise the proposed platform, an external data source is required to provide live syslogs to the cluster. This is realised by creating a test VPS. An additional Ansible role is applied to this host, which installs rsyslogd and a configuration that sends syslogs of all levels via TCP to all cluster nodes, making them available in Grafana and Loki.
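A forwarding configuration of this kind can be sketched by generating one rsyslog rule per cluster node. In rsyslog's selector syntax, "*.*" matches all facilities and levels and "@@" selects TCP forwarding. The node addresses and the port below are assumptions, as the source does not state them.

```python
def rsyslog_forward_rules(node_ips, port=514):
    """Return one '*.* @@host:port' forwarding rule per cluster node."""
    return "\n".join(f"*.* @@{ip}:{port}" for ip in node_ips) + "\n"


if __name__ == "__main__":
    # Placeholder node addresses (assumption).
    print(rsyslog_forward_rules(["10.0.0.2", "10.0.0.3", "10.0.0.4"]), end="")
```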

5. Platform Source

The full source code of the platform is available on GitHub [48]. To preserve the authors’ privacy, references to identifiers have been redacted and replaced with placeholders where applicable.

6. Results and Analysis

6.1. Pipeline Testing

For the overall platform to meet the goal of incorporating GitOps and CI/CD, the build process that deploys the platform needs to be evaluated for consistency and repeatability. This is achieved by initiating multiple deployments of the platform and validating consistent timing and success. In this experiment, Run 3 was performed at a later date to ensure that there was no performance degradation due to changes made on Hetzner or GitHub.

The duration of each experiment run and the maximum deviation among the three runs were calculated. Considering the testing information in Table 3 and Table 4, the Flux deployment time, measured from making a GitHub commit that changes the replica count to the change being applied, was 47 s. This mainly involved waiting for Flux to detect the commit. Once the commit was detected, deploying the changes took just 1.07 s.
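The two calculations above can be made explicit with a short sketch. The Flux figures are taken from the text, whereas the run durations are placeholders included only to show the calculation and are not the measured values. The maximum deviation is assumed here to mean the difference between the slowest and fastest runs.

```python
def max_deviation(durations_s):
    """Largest difference between any two runs (slowest minus fastest)."""
    return max(durations_s) - min(durations_s)


# Figures reported in the text.
FLUX_TOTAL_S = 47.0   # commit to applied change
FLUX_APPLY_S = 1.07   # apply time once the commit is detected

# Time spent waiting for Flux to detect the commit.
detection_wait_s = round(FLUX_TOTAL_S - FLUX_APPLY_S, 2)

if __name__ == "__main__":
    # Placeholder run durations in seconds (NOT the paper's measurements).
    example_runs_s = [300.0, 312.0, 305.0]
    print("max deviation:", max_deviation(example_runs_s), "s")
    print("detection wait:", detection_wait_s, "s")
```

By this arithmetic, roughly 45.93 s of the 47 s is spent waiting for detection, so the reconciliation interval rather than the apply step dominates the deployment time.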

6.2. SSH Log Analysis

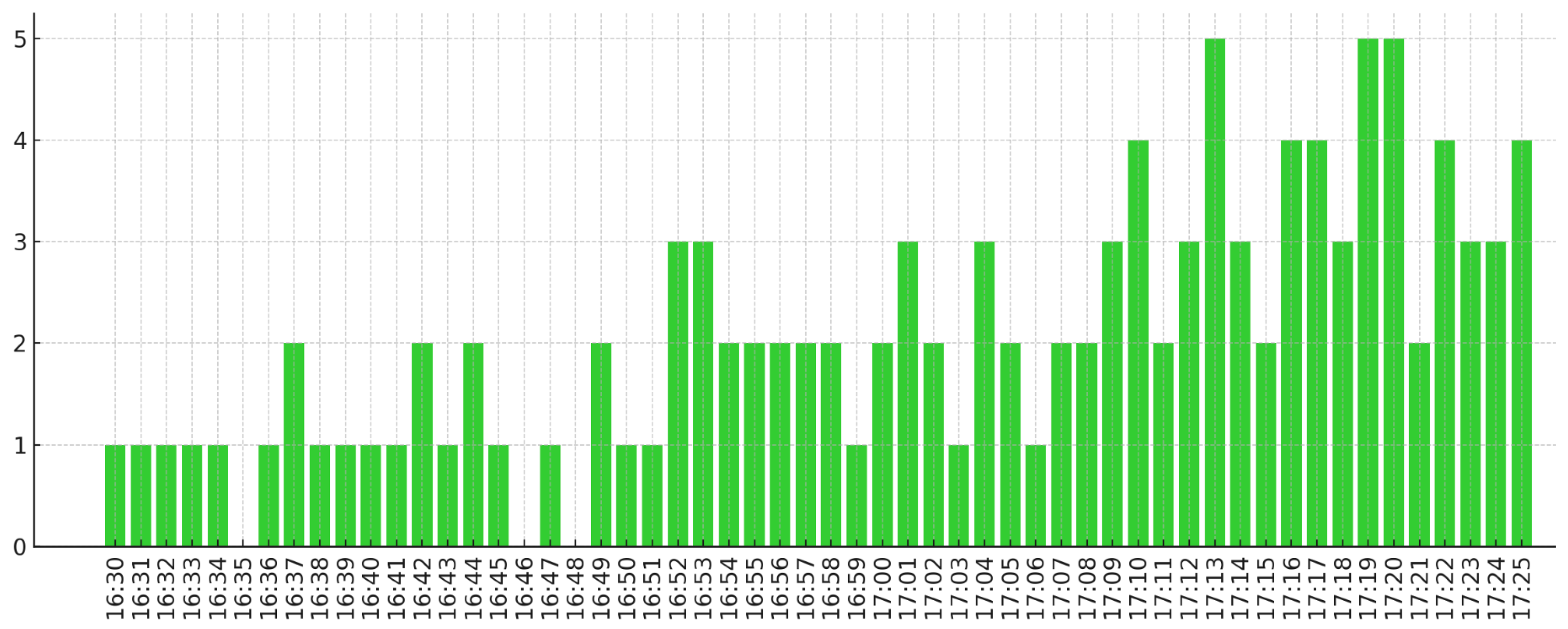

The LogQL query capabilities are demonstrated in Table 5, which shows failed SSH login attempts, whereas Table 6 demonstrates queries on Nextcloud Nginx logs. Additionally, Figure 3 provides a time series analysis of these failed login attempts to identify potential attack patterns.
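As an illustration of the two query styles, the sketch below builds a log-listing query and a time series query and encodes one of them for Loki's query_range HTTP endpoint. The stream selector, the "Failed password" filter text, and the base URL are assumptions. The queries actually used are those given in the tables.

```python
from urllib.parse import urlencode


def failed_ssh_queries(selector='{job="syslog"}', window="5m"):
    """Return LogQL for listing failed logins and for counting them over time."""
    line_filter = f'{selector} |= "Failed password"'
    return {
        "list": line_filter,
        "rate": f"sum(count_over_time({line_filter} [{window}]))",
    }


def query_range_url(base_url, query, start_ns, end_ns, limit=100):
    """Build a query_range URL; start and end are Unix times in nanoseconds."""
    params = urlencode(
        {"query": query, "start": start_ns, "end": end_ns, "limit": limit}
    )
    return f"{base_url}/loki/api/v1/query_range?{params}"


if __name__ == "__main__":
    queries = failed_ssh_queries()
    # Hypothetical Loki address (assumption).
    print(query_range_url("http://loki.example:3100", queries["rate"], 0, 10**9))
```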

6.3. NGINX Log Analysis

To ingest Nextcloud Nginx access logs into the platform, Promtail was configured on the test host to monitor the Nginx log path. The Promtail configuration file specified a static file target with appropriate labels to tag the log stream for identification. In this case, the Nextcloud Nginx logs were forwarded over TCP to the Loki service running on the cluster, making them visible and searchable within the Grafana interface. The logs were shortened for the table.

6.4. Failure Testing

An important use case is the ability to create visualisations of data in the proposed network analysis model. This allows an analyst to quickly check aggregated information to spot potential issues or areas requiring further investigation. Two experiments were performed to validate this: one to show a list of failed SSH login attempts and a second to show the frequency of the login attempts. The second, more complex visualisation shows the frequency of SSH logs containing an IP address over time, as an example of the more complex search queries allowed by LogQL.

Two extremely important factors in the design of a syslog collection platform are its resiliency and reliability under different node failure scenarios. The platform needs to be trusted even if it is running in a degraded state; otherwise, an analyst could potentially be operating under false assumptions when responding to system issues. For the high availability and resiliency of the platform to be validated, a platform node can be intentionally crashed to simulate a full node failure while using a script to send syslogs to the platform with an incrementing ID. This can then be checked to validate that the proposed platform received and indexed all log data correctly.

After intentionally inducing a kernel panic using the Linux sysrq-trigger, as shown in Table 7, all pods were rescheduled on the affected node after it panicked and rebooted. The syslogs transmitted during the test can be validated as received by running a LogQL query and comparing the count against the number transmitted, confirming that the platform functioned properly during the failure of one node out of N, where N is the total number of nodes, as shown in Table 8.
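The incrementing-ID check can be sketched as follows. This is a simplified stand-in for the test script and the LogQL count comparison: the message format and prefix are hypothetical, and the received lines are passed in directly rather than fetched from Loki.

```python
import re


def make_messages(count, prefix="failover-test"):
    """Generate test syslog payloads carrying an incrementing ID."""
    return [f"{prefix} id={i}" for i in range(1, count + 1)]


def missing_ids(sent_count, received_lines, prefix="failover-test"):
    """Return the sorted IDs that were sent but never seen in the received logs."""
    pattern = re.compile(rf"{re.escape(prefix)} id=(\d+)")
    seen = set()
    for line in received_lines:
        match = pattern.search(line)
        if match:
            seen.add(int(match.group(1)))
    return sorted(set(range(1, sent_count + 1)) - seen)


if __name__ == "__main__":
    sent = make_messages(5)
    received = [m for m in sent if not m.endswith("id=3")]  # simulate one loss
    print(missing_ids(5, received))
```

Comparing IDs rather than only counts also shows which messages were lost, which helps relate any gap to the moment of the node failure.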

The full node and pod recovery time can be calculated by repeating the test, echoing the date and time while performing the previous sysrq-trigger kernel panic, as shown in Table 7. After the node rebooted and recovered, Kubernetes can be seen rescheduling workload pods on node 2, as shown in Table 9. The platform fully recovered and passed all health checks within 146 s of the initial crash, as detailed in Table 10.

In conclusion, the platform successfully demonstrates resiliency to node failure in a three-node configuration. Due to the fully homogeneous and high-availability nature of the platform, higher levels of resiliency would be available proportional to node count. During the test, all syslog messages sent during this process were successfully received, which validates that the three-node platform was not affected by node failure.

6.5. Network Stress Testing

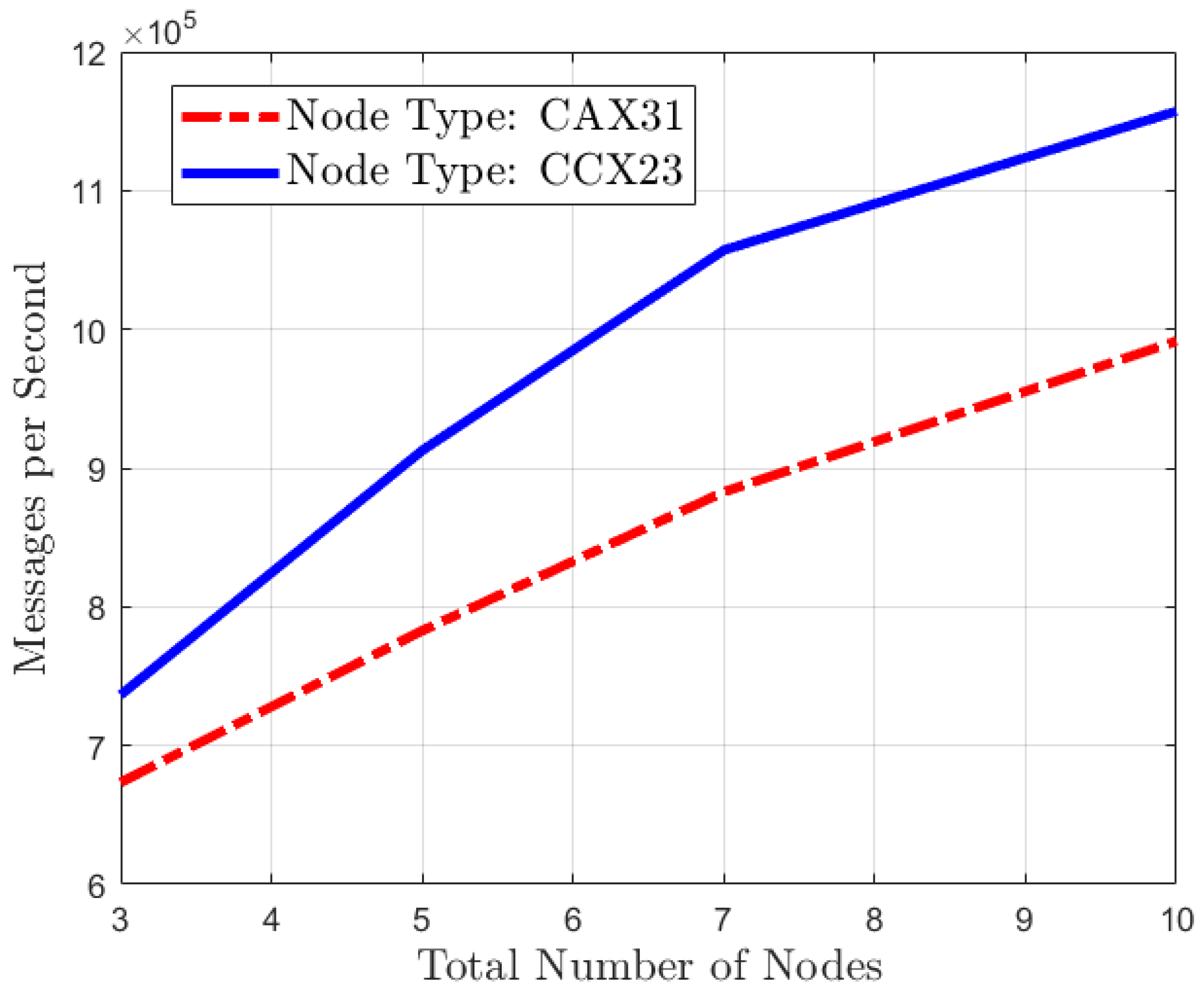

This test is necessary to validate that the proposed platform can operate under high levels of stress, such as syslog spam from a device. The “loggen” tool can be used to generate syslogs from the test host synthetically. The results are averaged across five runs of the command for consistency. Hetzner offers a multitude of tiers, including “CAX” shared-CPU ARM Ampere instances designed for better value for cost and horizontal scaling. Hetzner also offers “CCX” reserved-resource AMD EPYC instances for higher CPU performance, enabling vertical scaling. A comparative performance analysis was conducted between two configurations, i.e., a CAX31 instance with 8 vCPUs and 16 GB of RAM, and a CCX23 instance with 4 vCPUs and 16 GB of RAM.

The “CAX31” Ampere instance achieves single- and multi-core Geekbench benchmark scores of 1077 and 5706, respectively. The “CCX23” instance achieves a higher single-core score of 1535 but a lower multi-core score of 3536. The two instance types therefore trade single-core performance against multi-core performance at different core counts, which allows both vertical and horizontal scaling to be demonstrated.
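The trade-off between the two instance types can be quantified directly from the scores quoted above. The sketch below derives two illustrative ratios, which are not reported in the source: the multi-core to single-core ratio and the multi-core score per vCPU.

```python
# Geekbench scores and vCPU counts as quoted in the text.
SCORES = {
    "CAX31": {"vcpus": 8, "single": 1077, "multi": 5706},
    "CCX23": {"vcpus": 4, "single": 1535, "multi": 3536},
}


def scaling_summary(scores):
    """Derive per-instance ratios from the raw benchmark scores."""
    summary = {}
    for name, s in scores.items():
        summary[name] = {
            "multi_over_single": round(s["multi"] / s["single"], 2),
            "multi_per_vcpu": round(s["multi"] / s["vcpus"], 2),
        }
    return summary


if __name__ == "__main__":
    for name, ratios in scaling_summary(SCORES).items():
        print(name, ratios)
```

On these figures, the CCX23 delivers more multi-core score per vCPU (884.0 against 713.25), while the CAX31 reaches the higher total through its larger core count.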

On average, the proposed platform shows an excellent ability to absorb large amounts of syslog spam from devices, as shown in Figure 4. The performance does not scale linearly with node count: additional replicas add additional storage calculations, which reduces the gain, so doubling the node count does not double the performance.

In conclusion, the proposed network model successfully delivered a working syslog collection and analysis platform using a selection of state-of-the-art tools to demonstrate an effective implementation. This was accomplished using a modern application stack to provide an alternative to the Elastic platform, backed by a cutting-edge platform incorporating Kubernetes, Longhorn, and Minio. All components are deployed through GitHub Actions, which allows reproducible and automated builds while safely handling privileged secrets using GitHub Actions Secrets to minimise the chance of secrets being leaked in pipeline logs. The architecture effectively provides a highly resilient and scalable platform ready for further implementation, evaluation, and production deployment.

7. Comparison Against Existing Tools

Evaluating the end product against the main overall goal, i.e., to design a simple, easily deployable, and scalable in-house syslog collection platform, requires consideration in several areas. In Table 11, the proposed method is evaluated against a common monolithic product, e.g., the Kiwi Syslog Server, and a common SaaS product, e.g., Cisco Splunk. To evaluate its effectiveness as a free, local alternative, the proposed system was compared against the lower-tier pricing options of Kiwi and Splunk. This comparison is relevant as the proposed stack can run at no cost on local infrastructure or very cheaply on cloud services like Hetzner.

As shown in Table 11, the platform provides a wide range of benefits in comparison to legacy monolithic solutions. It also provides notable functionalities that make most of its technical features comparable to those of Splunk. However, it is limited in comparison to Splunk in areas such as support contracts and compliance, as it is a self-developed and self-deployed platform that is not provided by a major commercial vendor.

One of the major advantages of the proposed method is its flexibility and cost-effectiveness. Traditional monolithic platforms like the Kiwi Syslog Server often come with licensing fees and single-node hardware constraints that limit scalability. In comparison, the proposed Kubernetes-based solution offers preferable scalability by increasing the node and replica counts, making it a more viable option for organisations with future scaling requirements. This is particularly beneficial for startups or small- to medium-sized enterprises that need to manage costs efficiently while ensuring robust data collection and analysis.

The resiliency of the proposed method is another key differentiator. While legacy monolithic systems may suffer from single points of failure, the Kubernetes orchestration allows for automatic failover and high availability, ensuring continuous operation even in the face of node failures. This feature is crucial in mission-critical environments where downtime can lead to significant operational and financial losses.

The proposed solution, based on Grafana Loki, uses LogQL for querying, which offers more advanced and flexible search capabilities than the basic regex or text matching used in traditional systems. By offering functionality comparable to Elasticsearch with lower memory demands, this solution enables network and security teams to run more granular, complex queries. This enhances their ability to quickly detect anomalies and respond to incidents.

Visualization with Grafana provides proven capabilities for real-time monitoring and alert generation. This makes the platform not just a data collection tool, but a comprehensive monitoring solution that can actively contribute to maintaining the network’s security and performance. In comparison, legacy systems often lack these advanced visualization tools, requiring additional third-party software to achieve similar functionalities.

However, the proposed method has certain limitations due to being a self-developed solution; it lacks the commercial support and compliance certifications that come with vendor-provided solutions such as Splunk. Organisations considering this solution may need to invest in in-house expertise or third-party consultants for maintenance and support.

In conclusion, while the proposed scalable, in-house syslog collection platform offers several advantages over traditional and SaaS-based solutions, organisations need to weigh these benefits against the need for commercial support and compliance features. The choice will ultimately depend on the specific needs and resources of the organisation.

8. Conclusions

In this paper, the implementation of a scalable syslog collection platform incorporating modern techniques such as GitOps, CI/CD, Kubernetes, and Infrastructure as Code has been demonstrated. The experimental analysis of the deployment pipeline has shown that builds are fast and repeatable, which permits easy deployment of the tool. The evaluation of the deployment demonstrated that the overall stack is highly resilient to node failure and can handle a high level of syslog ingress, making it resilient to syslog spam up to 115 k logs per second. The proposed system provides both search and visualisation functionalities, shown through the analysis of SSH logs. The proposed platform also integrates Grafana as a unified syslog and SNMP solution, creating a single pane of glass for monitoring. This significantly improves the observation workflow through built-in aggregation tools. Through the analysis and cross-comparison of the proposed project against industry competitors, the platform provides a feature-rich and highly resilient alternative to monolithic stacks such as the Kiwi Syslog Server, while providing strong competition against Cisco Splunk and other increasingly expensive SaaS products. In conclusion, the proposed architecture successfully delivers a highly resilient syslog collection platform, enabling analysts to work efficiently on a platform that is more resilient than monolithic solutions and more cost-effective than modern SaaS platforms.