When Robust Isn’t Resilient: Quantifying Budget-Driven Trade-Offs in Connectivity Cascades with Concurrent Self-Healing

Abstract

1. Introduction

- Robustness is the ability of the system to withstand the initial disturbance; it is often quantified by the fraction of components that remain functional immediately after the shock [1].

- Resiliency (or recovery) is the ability to return to acceptable performance after the disturbance. It combines the depth of degradation with the speed and extent of restoration [2].

1.1. State of the Art: Cascading Failures and Recovery

1.2. Objectives and Contributions of This Work

- Concurrent cascade + self-healing model. We formulate two algorithms, one for link-breaking failure and one for distributed healing, that act within the same simulation step, controlled by a budget parameter B and a triggering threshold T.

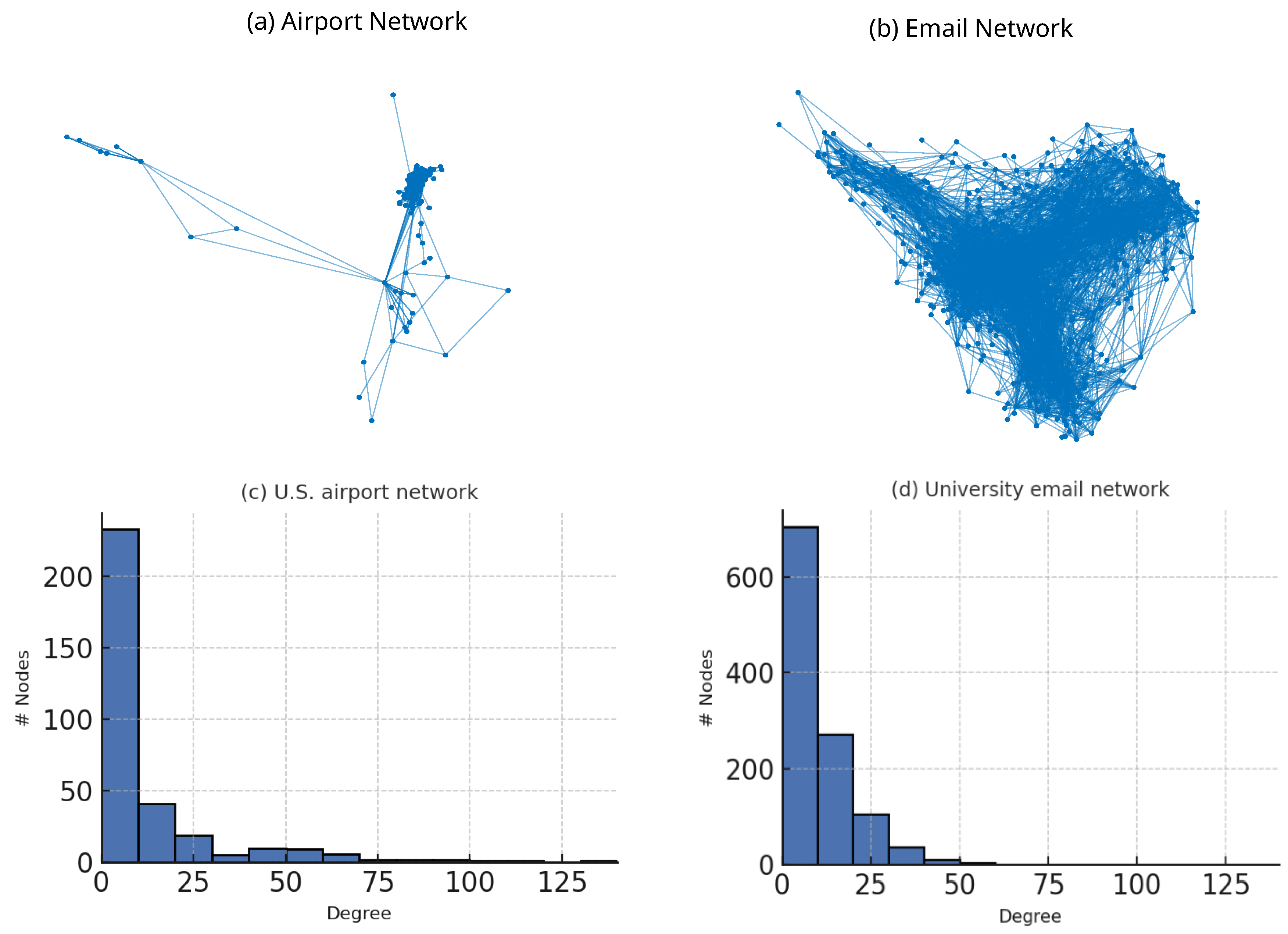

- Quantitative evaluation on real data. Using a U.S. airport network (332 nodes) [39] and a university e-mail network (1133 nodes), we measure robustness and two resiliency metrics: the 90% recovery time T90 and the cumulative average damage.

- Systematic exploration of parameter space. We vary the degree-loss threshold c, the attack mode (random vs. targeted), the healing budget B, and the trigger time, producing a comprehensive map of robustness and resiliency responses.

- First empirical correlation study. Scatter-plot analysis reveals that robustness and resiliency are only weakly correlated unless the trigger is early and the budget adequate; high robustness can coexist with slow or costly recovery and vice versa. We summarize the relationship with a simple multiplicative fit and discuss design implications.

1.3. Paper Organization

2. Description of Models and Methods

2.1. Connectivity-Based Failure and Healing

- Step 1: Decision (Algorithm 2). All inactive nodes that still have at least one active neighbor are identified. Each candidate node is assigned two scores:

- A primary impact: the number of active neighbors that would be saved if the node were reactivated (i.e., neighbors whose degree ratio would rise from below the threshold c back above it).

- A secondary impact: the average increase in degree ratio across those neighbors. Nodes are ranked first by primary impact and then, to break ties, by secondary impact.

- Step 2: Implementation (Algorithm 3). Up to B highest-ranked inactive nodes are reactivated, and their original edges to currently active neighbors are restored, all within the current step.

| Algorithm 1. Connectivity-Based CF |

| Input: G, attack, mode, c // network, attack size, attack mode, degree-loss threshold |
| Output: G_dmg // damaged graph after cascading failure |
| N ← all functional nodes in G |
| E ← all edges in G |
| active ← degree(G) // original degrees of every node |
| idx ← sort(active, descend) // high-degree first |
| IF mode = 0 THEN // random attack |
| rmodes ⊂ N ← randomsample(N, attack) |
| ELSEIF mode = 1 THEN // targeted (highest-degree) attack |
| rmodes ⊂ N ← idx(1:attack) |
| ENDIF |
| FOR each v ∈ rmodes DO // initial removals |
| remove v and all incident edges from G |
| ENDFOR |
| // cascading failures until no remaining node has ratio < c |
| REPEAT |
| fail ← degree(G) // current degrees |
| ratio ← fail ./ active // element-wise ratio (0 ≤ ratio ≤ 1) |
| needrmv ← (ratio < c) // Boolean vector |
| cand ← nodes(needrmv = true) // restricted to nodes still present in G |
| FOR each u ∈ cand DO // remove newly failed nodes |
| remove u and all incident edges from G |
| ENDFOR |
| UNTIL cand = ∅ // stop when no additional failures |
| G_dmg ← G |
| RETURN G_dmg |
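To make the cascade rule concrete, the following is a minimal Python sketch of Algorithm 1, not the implementation used for the reported results. The adjacency-dictionary representation, the function name `cascade`, the `seed` argument, and the handling of nodes with original degree zero are assumptions introduced here for illustration.

```python
import random


def cascade(adj, attack, mode, c, seed=None):
    """Connectivity-based cascade on an undirected graph.

    adj    : dict mapping node -> set of neighbours (original graph, not modified)
    attack : number of nodes removed initially
    mode   : 0 = random attack, 1 = targeted (highest original degree first)
    c      : degree-loss threshold; a node fails when current/original degree < c
    Returns the damaged adjacency dict containing only the surviving nodes.
    """
    orig_deg = {v: len(nbrs) for v, nbrs in adj.items()}
    g = {v: set(nbrs) for v, nbrs in adj.items()}  # working copy

    def remove(v):
        # Drop v and detach it from every neighbour that is still present.
        for u in g.pop(v):
            g[u].discard(v)

    if mode == 0:
        initial = random.Random(seed).sample(sorted(g), attack)
    else:
        initial = sorted(g, key=lambda v: orig_deg[v], reverse=True)[:attack]
    for v in initial:
        remove(v)

    while True:
        # Assumption: only nodes still present are tested, and nodes that were
        # isolated in the original graph (degree 0) never fail by this rule.
        failed = [v for v in g
                  if orig_deg[v] > 0 and len(g[v]) / orig_deg[v] < c]
        if not failed:
            return g
        for v in failed:
            remove(v)
```

For a triangle {0, 1, 2} with a pendant node 3 attached to node 0, a targeted attack of size 1 removes node 0. With c = 0.5 the pendant node fails and the pair {1, 2} survives, whereas with c = 0.6 the whole graph collapses.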

| Algorithm 2. Connectivity-Based SH-Decision |

| Input: G_dmg // current damaged graph |
| G_orig // original graph (before any failure) |
| c // degree-loss threshold |
| Output: inAtv_ranked // inactive nodes ordered by healing impact |
| inAtv ← all INACTIVE nodes in G_dmg |
| impact ← zeros(length(inAtv)) // primary-impact score for each inactive node |
| // Step 1: compute primary impact |
| FOR k = 1 to length(inAtv) DO |
| ii ← inAtv[k] |
| nbx ← active neighbors of ii in G_dmg |
| FOR each nb ∈ nbx DO |
| d_orig ← degree of nb in G_orig |
| d_dmg ← degree of nb in G_dmg |
| ratio ← d_dmg / d_orig |
| IF ratio ≤ c THEN // nb is currently endangered |
| drst ← d_dmg + 1 // edge (ii, nb) would be restored |
| ratio_new ← drst / d_orig // nb's ratio after healing ii |
| IF ratio_new > c THEN |
| impact[k] ← impact[k] + 1 // nb would be rescued |
| ENDIF |
| ENDIF |
| ENDFOR |
| ENDFOR |
| idx ← argsort(impact, 'descend') // indices in decreasing impact |
| inAtv1 ← inAtv[idx] // reordered list after primary sort |
| // Step 2: tie-break with average improvement |
| d ← −∞ · ones(length(inAtv)) // secondary score (only for ties) |
| FOR m = 1 to length(inAtv1) DO |
| ii ← inAtv1[m] |
| IF impact[idx[m]] = impact[idx[1]] THEN // compute only for nodes sharing the maximum impact |
| nbx ← active neighbors of ii in G_dmg |
| Δ_list ← ∅ |
| FOR each nb ∈ nbx DO |
| d_orig ← degree of nb in G_orig |
| d_dmg ← degree of nb in G_dmg |
| ratio ← d_dmg / d_orig |
| drst ← d_dmg + 1 |
| ratio_new ← drst / d_orig |
| Δ_list ← Δ_list ∪ {ratio_new − ratio} |
| ENDFOR |
| IF length(Δ_list) > 0 THEN |
| d[idx[m]] ← mean(Δ_list) // average improvement, stored at the node's position in inAtv |
| ENDIF |
| ENDIF |
| ENDFOR |
| idx2 ← lexicographic-sort(impact(desc), d(desc)) |
| inAtv_ranked ← inAtv[idx2] // final ranking (impact, then d) |
| RETURN inAtv_ranked |
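The ranking logic of Algorithm 2 can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions: graphs are adjacency dictionaries, the damaged graph contains only active nodes, and the name `rank_candidates` is hypothetical. As a simplification, the secondary score is computed for every candidate rather than only for those tied at the maximum primary impact, so the order among lower-ranked ties may differ from the pseudocode.

```python
def rank_candidates(g_dmg, g_orig, c):
    """Rank inactive nodes by (primary impact, secondary impact), best first.

    g_dmg  : dict node -> set of neighbours, containing active nodes only
    g_orig : dict node -> set of neighbours in the original graph
    c      : degree-loss threshold
    """
    scored = []
    for v in g_orig:
        if v in g_dmg:
            continue  # already active, nothing to heal
        nbrs = [u for u in g_orig[v] if u in g_dmg]  # active neighbours of v
        if not nbrs:
            continue  # candidates need at least one active neighbour
        rescued = 0
        gains = []
        for u in nbrs:
            d_orig = len(g_orig[u])
            before = len(g_dmg[u]) / d_orig
            after = (len(g_dmg[u]) + 1) / d_orig  # edge (v, u) restored
            if before <= c < after:
                rescued += 1  # u is endangered now and safe after healing v
            gains.append(after - before)
        scored.append((rescued, sum(gains) / len(gains), v))
    # Lexicographic order: primary impact first, then mean ratio gain.
    scored.sort(key=lambda s: (-s[0], -s[1]))
    return [v for _, _, v in scored]
```

Python's sort is stable, so candidates that tie on both scores keep their original iteration order; a different tie policy would need an explicit third key.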

| Algorithm 3. Connectivity-Based SH-Implement |

| Input: G_dmg // current damaged graph |
| G_orig // original graph |
| inAtv // inactive nodes ranked by Algorithm 2 |
| B // healing budget (number of nodes to reactivate) |
| Output: G_rec // graph after applying self-healing |
| healed ← 0 // number of nodes already reactivated |
| FOR k = 1 to length(inAtv) DO |
| IF healed = B THEN |
| BREAK // budget exhausted |
| ENDIF |
| ii ← inAtv[k] // next candidate to heal |
| IF ii is ACTIVE in G_dmg THEN |
| CONTINUE // node already healed by earlier iteration |
| ENDIF |
| nbx ← active neighbors of ii in G_dmg |
| IF nbx ≠ ∅ THEN |
| FOR each nb ∈ nbx DO |
| IF edge (ii, nb) ∈ E(G_orig) AND edge (ii, nb) ∉ E(G_dmg) THEN |
| add edge (ii, nb) to G_dmg |
| ENDIF |
| ENDFOR |
| mark ii as ACTIVE in G_dmg |
| healed ← healed + 1 |
| ENDIF // if nbx = ∅, skip without spending budget |
| ENDFOR |
| G_rec ← G_dmg |
| RETURN G_rec |
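The implementation step of Algorithm 3 can be illustrated with the short Python sketch below. It assumes the same adjacency-dictionary representation (active nodes only in the damaged graph); the function name `heal` is hypothetical, and the input graph is copied rather than modified in place, which is a design choice of this sketch and not something stated in the pseudocode.

```python
def heal(g_dmg, g_orig, ranked, budget):
    """Reactivate up to `budget` nodes from `ranked`, restoring each node's
    original edges to the neighbours that are active when it is healed."""
    g = {v: set(nbrs) for v, nbrs in g_dmg.items()}  # leave the input untouched
    healed = 0
    for v in ranked:
        if healed == budget:
            break  # budget exhausted
        if v in g:
            continue  # already active
        nbrs = {u for u in g_orig[v] if u in g}
        if not nbrs:
            continue  # no active neighbour: skip without spending budget
        g[v] = nbrs  # reactivate v with its restored edges
        for u in nbrs:
            g[u].add(v)
        healed += 1
    return g
```

Because the active set is updated inside the loop, a node healed early in the ranking counts as an active neighbor for candidates processed later in the same step.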

2.2. Robustness and Resiliency Metrics

- Average Damage over a predefined time window, computed from the fraction of nodes that are active at each time step in that window.

- 90% Recovery time, T90.
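As an illustration of how the two resiliency metrics could be evaluated from a simulated trajectory, the sketch below uses definitions that are assumptions made here, since the exact formulas are not reproduced in this text: average damage is taken as the mean of one minus the active fraction over the window, and T90 as the number of steps after the healing trigger until the active fraction first reaches 0.9. The function names and the `trigger_step` and `level` parameters are hypothetical.

```python
def average_damage(active_fraction):
    """Mean of (1 - active fraction) over the samples in the window.

    Assumed definition: equal weight for every time step in the window.
    """
    return sum(1.0 - f for f in active_fraction) / len(active_fraction)


def recovery_time(active_fraction, trigger_step=0, level=0.9):
    """Steps elapsed after `trigger_step` until the active fraction first
    reaches `level`; returns None if that never happens in the window.

    Assumed definition: `level` is a fraction of the original node count.
    """
    for t in range(trigger_step, len(active_fraction)):
        if active_fraction[t] >= level:
            return t - trigger_step
    return None
```

Returning `None` instead of the window length keeps "never recovered" distinguishable from "recovered at the last step", which matters when under-funded configurations are compared.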

2.3. Description of Data

3. Results and Discussion

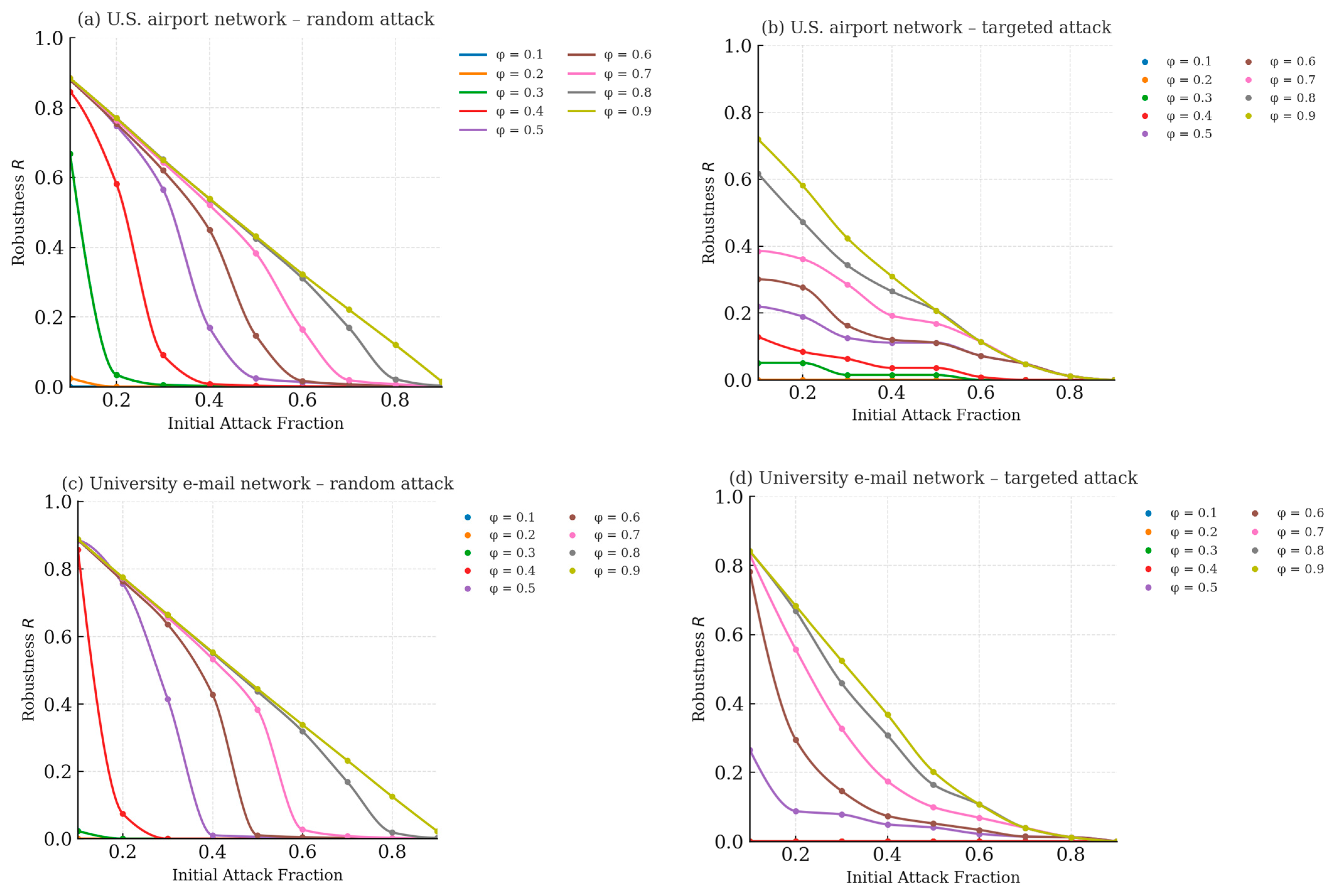

3.1. Robustness Patterns

- First, targeted removal of high-degree hubs produces a markedly steeper drop in robustness R than an equivalent random failure, as can be seen by comparing panels (b) and (d) with panels (a) and (c).

- Second, increasing the degree-loss threshold c invariably postpones secondary cascades: larger values of c preserve a greater fraction of nodes for every initial-attack fraction α, whereas low thresholds allow the network to collapse rapidly.

- Third, even under identical settings the U.S. airport graph retains a higher R than the university e-mail graph; this difference aligns with simple topological descriptors—most notably the airport network’s higher mean degree (12.8 versus 9.6) and its richer redundancy among hubs.

- Taken together, these results confirm that the connectivity-based model reproduces intuitive robustness dynamics and underscore the need for mitigation strategies that are tailored to a network’s specific connectivity profile.
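The mean-degree figures quoted above follow from the identity that the mean degree of an undirected simple graph is twice the edge count divided by the node count. The short check below uses the edge counts commonly reported for these two datasets (2126 and 5451); those counts are an assumption here, since only the node counts are stated in the text.

```python
def mean_degree(num_nodes, num_edges):
    """Mean degree of an undirected simple graph.

    Every edge contributes 2 to the total degree sum.
    """
    return 2 * num_edges / num_nodes


airport = mean_degree(332, 2126)   # edge count assumed, not stated in the text
email = mean_degree(1133, 5451)    # edge count assumed, not stated in the text
print(round(airport, 1), round(email, 1))  # prints: 12.8 9.6
```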

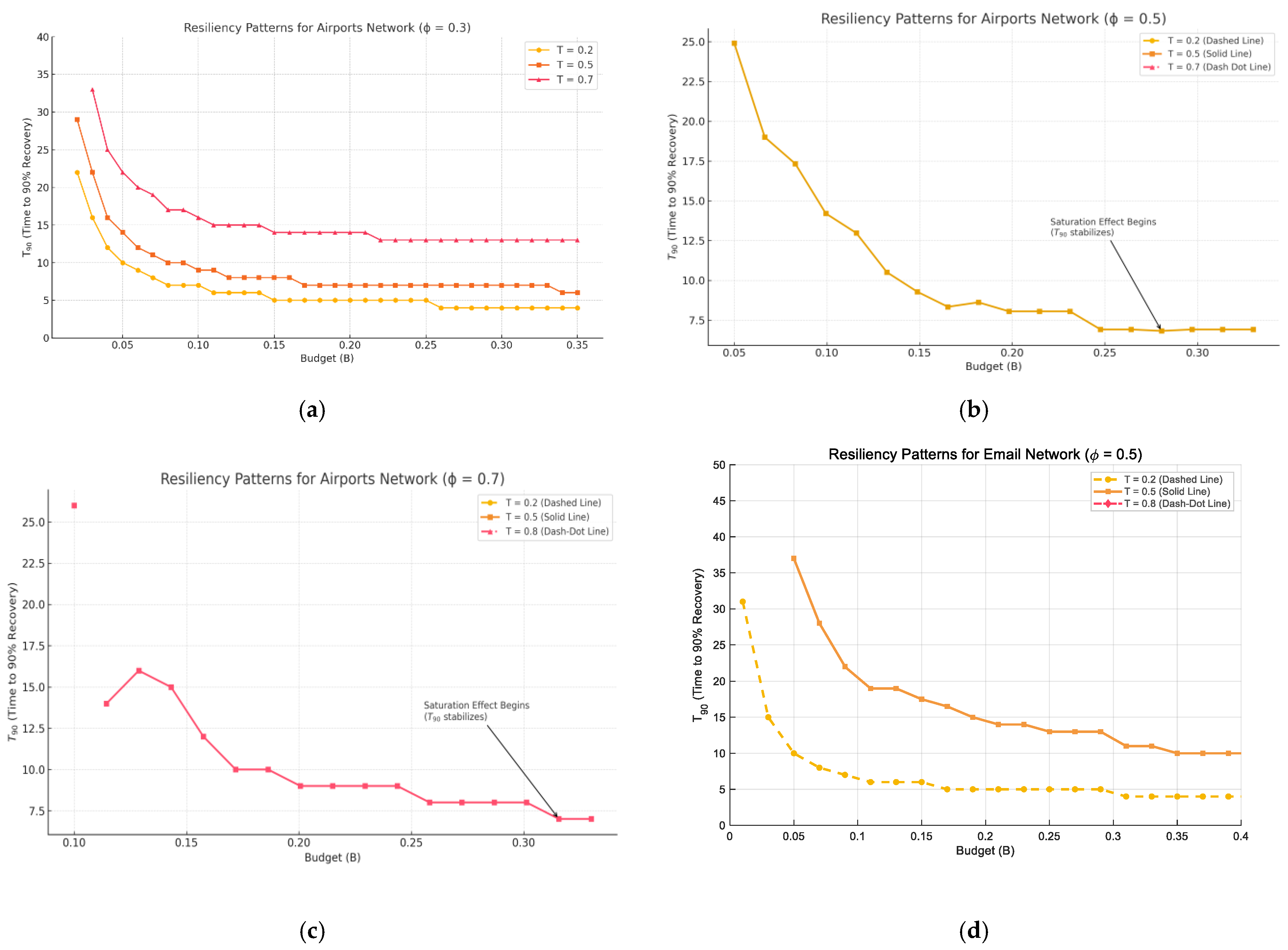

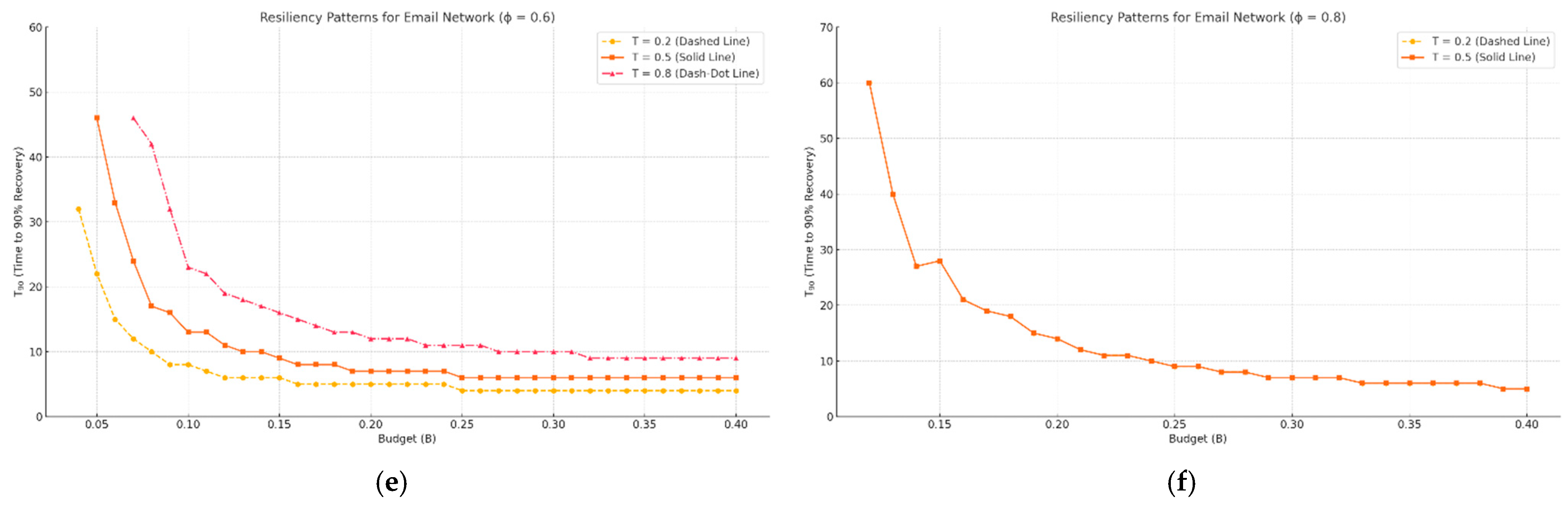

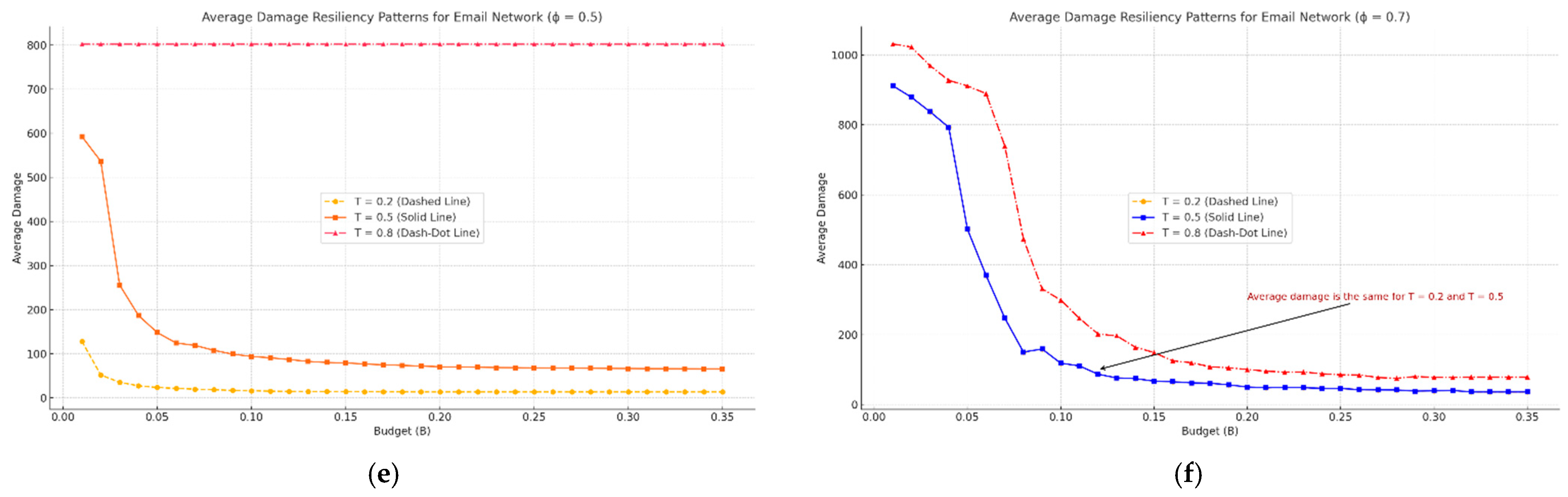

3.2. Resiliency Patterns

3.2.1. Budget Thresholds and Non-Linear Effects

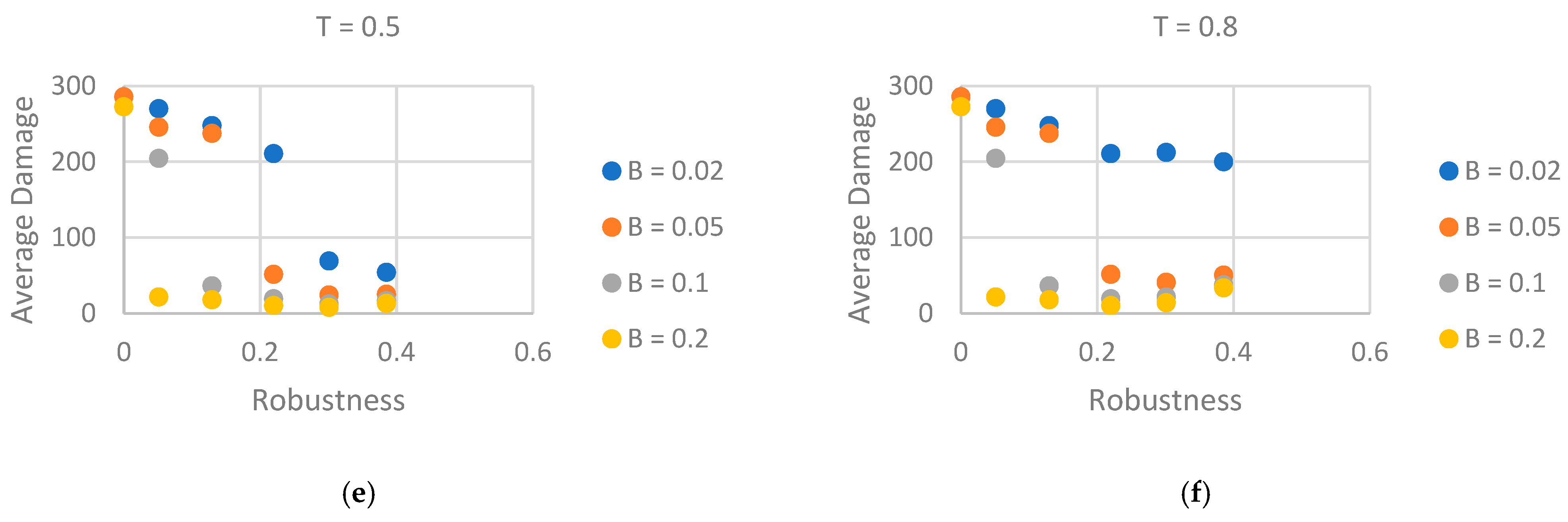

3.2.2. Interplay Between Robustness and Resiliency

3.2.3. Implications

3.2.4. Average Damage as an Alternative Resiliency Metric

3.3. Limitations and Alternative Heuristics

3.4. Correlation Between Robustness and Resiliency (Airport Network, 10% Initial Attack)

3.4.1. Robustness Versus T90 (Panels a–c)

3.4.2. Robustness Versus Average Damage (Panels d–f)

3.4.3. Interpretation and Design Implications

4. Conclusions

- Distinct robustness regimes. Robustness declines smoothly under random attack but collapses abruptly under targeted hub removal; increasing c mitigates both effects, although the airport network remains consistently more robust owing to its higher mean degree and redundant hub set.

- Budget-trigger trade-off in resiliency. Early activation with a modest budget outperforms late activation with a larger budget. A critical “saturation” budget exists—about 12% of nodes for the airport graph and 10% for the e-mail graph—beyond which additional resources yield only marginal gains in T90 and average damage.

- Weak correlation between robustness and resiliency. Scatter-plot analysis showed that configurations with high robustness can still recover slowly when under-funded, while low-robustness configurations can rebound rapidly if healing is timely and well resourced. A simple multiplicative fit summarizes this interaction.

4.1. Design Implications

4.2. Limitations and Future Work

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| G | Current graph |

| Gdmg | Graph after the cascading-failure phase |

| Grec | Graph returned after the healing phase |

| N | List (or count) of currently functional nodes |

| E | List of currently present edges |

| active | Vector of original degrees |

| fail | Vector of current degrees |

| c | Degree-loss threshold that triggers node failure |

| Degree ratio | Current degree divided by original degree for a node |

| needrmv | Boolean vector marking nodes whose degree ratio is below c |

| cand | Set of nodes that newly fail in the current sweep |

| idx | Indices of nodes sorted by descending original degree |

| rmodes | Initial attack set (random or targeted) |

| inAtv | List of inactive nodes that still have ≥1 active neighbor |

| impact | Primary-impact score: # of endangered neighbors rescued by healing a candidate node |

| d | Secondary-impact score: average improvement in degree ratio for neighbors rescued by that candidate |

| nbx | Set of active neighbors of a specific inactive node |

| d_orig | Original degree of a neighbor |

| d_dmg | Current degree of a neighbor |

| drst | Degree neighbor would have after edge restoration |

| Updated degree ratio | Degree ratio of a neighbor after hypothetical healing |

| idx2 | Permutation that ranks inAtv lexicographically by primary and secondary impact |

| inAtv_ranked | Final ranked list of inactive nodes to heal |

| B | Healing-budget cap: max # of nodes reactivated in a step |

| healed | Counter for how many nodes have been reactivated so far |

| nb | Individual active neighbor |

| T | Triggering level: fraction of inactive nodes that starts healing |

References

1. Carlson, J.M.; Doyle, J. Complexity and robustness. Proc. Natl. Acad. Sci. USA 2002, 99 (Suppl. S1), 2538–2545.

2. The Resilience of Networked Infrastructure Systems; Systems Research Series. Available online: https://www.worldscientific.com/worldscibooks/10.1142/8741 (accessed on 24 May 2025).

3. Huang, Z.; Wang, C.; Nayak, A.; Stojmenovic, I. Small Cluster in Cyber Physical Systems: Network Topology, Interdependence and Cascading Failures. IEEE Trans. Parallel Distrib. Syst. 2015, 26, 2340–2351.

4. Zhang, J.; Yeh, E.; Modiano, E. Robustness of Interdependent Random Geometric Networks. arXiv 2018, arXiv:1709.03032.

5. Sergiou, C.; Lestas, M.; Antoniou, P.; Liaskos, C.; Pitsillides, A. Complex Systems: A Communication Networks Perspective Towards 6G. IEEE Access 2020, 8, 89007–89030.

6. Pahwa, S.; Scoglio, C.; Scala, A. Abruptness of Cascade Failures in Power Grids. Sci. Rep. 2014, 4, 3694.

7. Schäfer, B.; Witthaut, D.; Timme, M.; Latora, V. Dynamically induced cascading failures in power grids. Nat. Commun. 2018, 9, 1975.

8. Wang, Y.; Chen, B.; Chen, X.; Gao, X. Cascading Failure Model for Command and Control Networks Based on an m-Order Adjacency Matrix. Mob. Inf. Syst. 2018, 2018, e6404136.

9. Aqqad, W.A.; Zhang, X. Modeling command and control systems in wildfire management: Characterization of and design for resiliency. In Proceedings of the 2021 IEEE International Symposium on Technologies for Homeland Security (HST), Boston, MA, USA, 8–9 November 2021; pp. 1–5.

10. Li, Y.; Duan, D.; Hu, G.; Lu, Z. Discovering Hidden Group in Financial Transaction Network Using Hidden Markov Model and Genetic Algorithm. In Proceedings of the 2009 Sixth International Conference on Fuzzy Systems and Knowledge Discovery, Tianjin, China, 14–16 August 2009; pp. 253–258.

11. Watts, D.J. A simple model of global cascades on random networks. Proc. Natl. Acad. Sci. USA 2002, 99, 5766–5771.

12. Stegehuis, C.; van der Hofstad, R.; van Leeuwaarden, J.S.H. Epidemic spreading on complex networks with community structures. Sci. Rep. 2016, 6, 29748.

13. Motter, A.E.; Lai, Y.-C. Cascade-based attacks on complex networks. Phys. Rev. E 2002, 66, 065102.

14. Huang, X.; Gao, J.; Buldyrev, S.V.; Havlin, S.; Stanley, H.E. Robustness of interdependent networks under targeted attack. Phys. Rev. E 2011, 83, 065101.

15. Huang, X.; Shao, S.; Wang, H.; Buldyrev, S.V.; Stanley, H.E.; Havlin, S. The robustness of interdependent clustered networks. EPL Europhys. Lett. 2013, 101, 18002.

16. Yuan, X.; Shao, S.; Stanley, H.E.; Havlin, S. How breadth of degree distribution influences network robustness: Comparing localized and random attacks. Phys. Rev. E 2015, 92, 032122.

17. Chattopadhyay, S.; Dai, H.; Eun, D.Y.; Hosseinalipour, S. Designing Optimal Interlink Patterns to Maximize Robustness of Interdependent Networks Against Cascading Failures. IEEE Trans. Commun. 2017, 65, 3847–3862.

18. Motter, A.E. Cascade control and defense in complex networks. Phys. Rev. Lett. 2004, 93, 098701.

19. Gallos, L.K.; Fefferman, N.H. Simple and efficient self-healing strategy for damaged complex networks. Phys. Rev. E 2015, 92, 052806.

20. Quattrociocchi, W.; Caldarelli, G.; Scala, A. Self-Healing Networks: Redundancy and Structure. PLOS ONE 2014, 9, e87986.

21. Wang, T.; Zhang, J.; Sun, X.; Wandelt, S. Network repair based on community structure. Europhys. Lett. 2017, 118, 68005.

22. Liu, C.; Li, D.; Fu, B.; Yang, S.; Wang, Y.; Lu, G. Modeling of self-healing against cascading overload failures in complex networks. Europhys. Lett. 2014, 107, 68003.

23. Tierney, K.; Bruneau, M. Conceptualizing and Measuring Resilience: A Key to Disaster Loss Reduction, TR News, No. 250, May 2007. Available online: https://trid.trb.org/View/813539 (accessed on 8 August 2025).

24. Mari, S.I.; Lee, Y.H.; Memon, M.S. Sustainable and Resilient Supply Chain Network Design under Disruption Risks. Sustainability 2014, 6, 6666–6686.

25. Pumpuni-Lenss, G.; Blackburn, T.; Garstenauer, A. Resilience in Complex Systems: An Agent-Based Approach. Syst. Eng. 2017, 20, 158–172.

26. Albert, R.; Jeong, H.; Barabasi, A.-L. Error and attack tolerance of complex networks. Nature 2000, 406, 378–382.

27. Cohen, R.; Erez, K.; Ben-Avraham, D.; Havlin, S. Resilience of the Internet to Random Breakdowns. Phys. Rev. Lett. 2000, 85, 4626–4628.

28. Shi, Q.; Li, F.; Dong, J.; Olama, M.; Wang, X.; Winstead, C.; Kuruganti, T. Co-optimization of repairs and dynamic network reconfiguration for improved distribution system resilience. Appl. Energy 2022, 318, 119245.

29. Zhang, L.; Yu, S.; Zhang, B.; Li, G.; Cai, Y.; Tang, W. Outage management of hybrid AC/DC distribution systems: Co-optimize service restoration with repair crew and mobile energy storage system dispatch. Appl. Energy 2023, 335, 120422.

30. Zhou, K.; Jin, Q.; Feng, B.; Wu, L. A bi-level mobile energy storage pre-positioning method for distribution network coupled with transportation network against typhoon disaster. IET Renew. Power Gener. 2024, 18, 3776–3787.

31. Jacob, R.A.; Paul, S.; Chowdhury, S.; Gel, Y.R.; Zhang, J. Real-time outage management in active distribution networks using reinforcement learning over graphs. Nat. Commun. 2024, 15, 4766.

32. Si, R.; Chen, S.; Zhang, J.; Xu, J.; Zhang, L. A multi-agent reinforcement learning method for distribution system restoration considering dynamic network reconfiguration. Appl. Energy 2024, 372, 123625.

33. Yao, Y.; Zhang, X.; Wang, J.; Ding, F. Multi-Agent Reinforcement Learning for Distribution System Critical Load Restoration. In Proceedings of the 2023 IEEE Power & Energy Society General Meeting (PESGM), Orlando, FL, USA, 16–20 July 2023; pp. 1–5.

34. Perez, I.A.; Ben Porath, D.; La Rocca, C.E.; Braunstein, L.A.; Havlin, S. Critical behavior of cascading failures in overloaded networks. Phys. Rev. E 2024, 109, 034302.

35. Zhao, Y.; Cai, B.; Cozzani, V.; Liu, Y. Failure dependence and cascading failures: A literature review and research opportunities. Reliab. Eng. Syst. Saf. 2025, 256, 110766.

36. Brunner, L.G.; Peer, R.A.M.; Zorn, C.; Paulik, R.; Logan, T.M. Understanding cascading risks through real-world interdependent urban infrastructure. Reliab. Eng. Syst. Saf. 2024, 241, 109653.

37. Crucitti, P.; Latora, V.; Marchiori, M. Model for cascading failures in complex networks. Phys. Rev. E 2004, 69, 045104.

38. Dobson, I.; Carreras, B.A.; Lynch, V.E.; Newman, D.E. Complex systems analysis of series of blackouts: Cascading failure, critical points, and self-organization. Chaos: Interdiscip. J. Nonlinear Sci. 2007, 17, 026103.

39. Rossi, R.A.; Ahmed, N.K. USAir97, Miscellaneous Networks, Network Repository. Available online: https://networkrepository.com/USAir97.php (accessed on 25 May 2025).

40. Biswas, S.; Cavdar, B.; Geunes, J. A Review on Response Strategies in Infrastructure Network Restoration. arXiv 2024, arXiv:2407.14510.

41. Ouyang, M. Review on modeling and simulation of interdependent critical infrastructure systems. Reliab. Eng. Syst. Saf. 2014, 121, 43–60.

42. Brandes, U. A faster algorithm for betweenness centrality. J. Math. Sociol. 2001, 25, 163–177.

43. Bergamini, E.; Meyerhenke, H. Approximating Betweenness Centrality in Fully-dynamic Networks. arXiv 2015, arXiv:1510.07971.

44. Cohen, R.; Erez, K.; Ben-Avraham, D.; Havlin, S. Breakdown of the Internet under Intentional Attack. Phys. Rev. Lett. 2001, 86, 3682–3685.

45. Valdez, L.D.; Shekhtman, L.; La Rocca, C.E.; Zhang, X.; Buldyrev, S.V.; Trunfio, P.A.; Braunstein, L.A.; Havlin, S. Cascading failures in complex networks. J. Complex. Netw. 2020, 8, cnaa013.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Al Aqqad, W. When Robust Isn’t Resilient: Quantifying Budget-Driven Trade-Offs in Connectivity Cascades with Concurrent Self-Healing. Network 2025, 5, 35. https://doi.org/10.3390/network5030035
