1. Introduction

The rapid growth in the domains of cloud computing, artificial intelligence, and edge devices has significantly increased the energy demand in data centres worldwide. Recent studies have reported that data centres account for up to 2% of global electricity use and CO2 emissions [1]. This figure is expected to increase exponentially unless efficient energy-saving strategies are implemented. The integration of renewable energy, smart power management of workloads, and energy-sensitive networking are promising approaches to address these challenges.

Network slicing is an effective technique to improve energy efficiency in modern cloud infrastructures. It is a key feature of network communications that enables the creation of multiple virtual networks on top of a shared physical infrastructure. Each “slice” in the infrastructure is designed to meet the specific requirements of different applications, services, or customers. The successful implementation of network slicing techniques is critical to ensure flexibility, isolation, and the dynamic prioritisation of traffic [2,3]. The concept of a network slice can be traced back to the radio access network (RAN or Fog RAN as shown in Figure 1), which consists of independent network topologies drawn by ring fencing techniques composed of logical ring fences to balance optimal resource allocation while reducing energy consumption [4].

However, the energy consumption in heterogeneous network slicing architectures has been reported to be significantly high due to poorly optimised resource allocation across virtual networks [5]. Numerous mission-critical applications demand low latency and high reliability and throughput, utilising access technologies such as nonorthogonal multiple access (NOMA) and orthogonal frequency division multiple access (OFDMA). Additional techniques such as the network slicing Aware User Grouping for NOMA and resourcE allocatioN in edge compuTing (AUGMENT) and the dynamic voltage scaling technique (DVS) have been promising for energy-aware computational offloading. The efficient integration of all these methods for effective optimisation of resource allocation in order to achieve lower energy consumption is still an open and complex challenge [6].

Figure 1. Centralised orchestration-based f-RAN network access slicing (from [7]).


Although NOMA clustering has been proposed for increased channel gain in network slices [5], along with numerous strategies enabling efficient computational offloading [6], the problems of long computation execution time and suboptimal resource allocation still persist for cloud-centric architectures. Although cloud topologies could potentially map resources more efficiently in high-quality network slices with improved ring fences, the efficiency is dependent on computational resources, logical ring fences, and the overall slice-to-ring-fence ratio. In this paper, we demonstrate how optimising the ratio between network slices and ring fencing can significantly reduce energy consumption in cloud data centres by minimising the network “churn” as much as possible.

The main contributions of this paper are listed as follows:

We propose an iterative heuristic energy-aware non-convex (IHEA-NC) algorithm based on the network slicing–ring fencing ratio for minimising energy consumption in cloud architectures.

We validate the proposed heuristic (IHEA-NC) through simulations in CloudSim and Amazon Web Services (AWS), achieving 91.46% energy savings compared to existing methods (HA-NC by Hossain and Ansari [5], PSO by Anajemba et al. [6]).

We demonstrate that the proposed heuristic is scalable and efficient, proving suitability for heterogeneous real-world cloud architectures.

These contributions lead to the design of a solution-specific uneven network slicing–ring fencing architecture starting from the “network slice”, which enables network operators to maintain a high level of interaction with clients seeking end-user services, balancing energy consumption and task execution time.

The remainder of this paper is structured as follows. Section 2 reviews the state-of-the-art in relation to this research. Section 3 describes the proposed equation and the respective environmental setups. Section 4 presents the algorithmic benchmark results and their implications in real-world cloud architectures. Section 5 concludes this paper and discusses future work that is useful for energy consumption in general, especially for data centres.

2. Related Work

Iterative energy-aware optimisation heuristics that take into account the total energy consumption of the cloud model are not new [5,8,9]. Our work follows the same concept. The following sections cover the major components of the proposed heuristic, called the iterative, energy-aware non-convex optimisation equation (IHEA-NC). The first subsection outlines the inconsistencies with resource-specific requirements and the poor flexibility with network slicing parameters. The next subsection addresses the desired parameters for non-convex optimisation that accounts for energy-aware scheduling in a near-optimal solution desiring speed in convergence with refined execution time. The last subsection discusses the literature relevant to the Heuristic AUGMENT Non-Convex (HA-NC, proposed by Hossain and Ansari in [5]) algorithm and the Priority Selection Offloading (PSO, proposed by Anajemba in [6]) algorithm that iteratively derives the refined total energy consumption equation.

2.1. Non-Convex Optimisation in Network Slicing

Issues with network slicing parameters: Transforming high-quality network slices from scalable network topology designs cannot closely optimise energy efficiency from conceptualisation as there are issues with heterogeneous service requirements. However, other authors have considered ultra-reliable low-latency communication (URLLC) traffic [10,11]. Their work influences inter-edge traffic without accounting for heterogeneous extreme service requirements and fault-delay tolerance through downstream service quality, further impacting overall performance.

Network slices utilising resource-intensive computational network resources in smaller modular architectures, as opposed to slices with distributed workloads, might lead to inconsistencies in balancing idealised energy consumption against poor computational offloading. Recently, some authors [9] also discussed the poor flexibility of end-to-end network slicing to jointly allocate parameters as network resources.

2.2. Energy-Aware Constraints and Speed in Convergence

Leveraging desired parameters for non-convex optimisation: Recasting the non-convex optimisation equation into a convex form by joint optimisation can be leveraged with certain parameters, such as energy-aware constraints to facilitate a near-optimal solution [5]. However, the present metrics, such as data rate or throughput, incorporated with communication techniques between network slices may be insufficient to cope with transmit power [12].

Refining speed in convergence: Modelling an energy consumption equation based on end-to-end latency constraints seems sufficient to meet QoS in an optimised solution that desires speed in convergence with refined execution time. However, high-compute computational network resources suffer from poor capacity to facilitate communication with increased aggregation and complexity of resource allocation. Computational network resources need to evaluate tasks through constant inter-virtual machine (VM) communication, which mitigates trade-offs in energy consumption.

2.3. The Heuristic AUGMENT Non-Convex Algorithm and Priority Selection Offloading Algorithm

The heuristic non-convex equation: To balance the associated requirements with efficient network slicing, end-to-end latency requirements ensure the required performance levels are met for cloud energy infrastructures. Incorporating the minimum and maximum latency in the non-convex equation ensures that baseline performance levels are set to a defined threshold and ring isolation efficiency is balanced for the non-convex heuristic energy consumption equation.

The IHEA-NC optimisation equation: The inducible delay with computational offloading between smart communication devices (SCDs) with computational network resources [6,13] can lead to a significant increase in energy consumption through subsequent iterative tests for the PSO algorithm. Incorporating dynamic voltage scaling (DVS) while solving multi-user offloading enables focusing solely on energy consumption through the IHEA-NC optimisation equation. In general, the IHEA-NC equation aims to address holistic energy-efficient cloud infrastructures.

3. Materials and Methods

This section elaborates on the following key elements of the methodology. Firstly, the proposed IHEA-NC optimisation equation is derived as a mathematical equation describing the associated components that aim to refine the network slicing-to-ring fencing ratio through a convergence criterion. The IHEA-NC equation is “non-convex”, but a gradient descent approach is able to escape local optima by incorporating relevant constraints. The inclusion of latency constraints due to contention factors in non-linear dependencies divides the components into discrete, non-overlapping feasible regions to facilitate the overall optimisation problem. DVS incorporates both continuous power levels and discrete decisions between the minimum and maximum latency thresholds.

Proposed comparisons: Once the derivation of IHEA-NC is explained, we will show how it compares with state-of-the-art heuristics, such as HA-NC and PSO. Each heuristic will be tested under three different scenarios (restricted search space, increased search space, and aggregated architectures). The experimental design covers the basic search strategies, with homogeneous and heterogeneous (aggregated) resources.

The non-linearity of IHEA-NC comes from the power consumption P of the central processing unit (CPU), which is typically modelled as the cube of dynamic computational speed. Decision variables such as bandwidth and power consumption increase the complexity with non-linear dependencies. The non-convex heuristic algorithm described in [5] is known as network slicing Aware User Grouping for non-orthogonal multiple access (NOMA) and resourcE allocatioN in edge compuTing (AUGMENT), which accounts for user grouping, computing resources, and wireless resource allocation to optimise energy efficiency. An improvement over the aforementioned algorithm has been discussed earlier in the literature by including a minimum latency and a maximum latency over NOMA, which can lead to abnormal cluster sizes with unfair resource allocation. Performance and scalability evaluations were simulated in CloudSim [14], and also implemented as a proof of concept in Amazon Web Services (AWS).

The preprocessing steps for extracting relevant computational network resource data from the GWA-T-12 Bitbrains dataset are described in the last section.

3.1. Proposed Iterative Energy-Aware Non-Convex Optimisation Heuristic

Total energy consumption of the network: The total energy consumption of the network can be expressed as the sum of the energy consumption in the central cloud model, the network slice, and the ring fence [15]. In addition, centralised cooling is considered for the core computational tasks.

Energy consumption of the central cloud: The energy consumption of the central cloud incorporates the central cloud computational power consumption and the cooling power consumption. It depends on the power efficiency of the central cloud, the total operation time T of all network slices, and the computational power and cooling power consumption of the cloud.

Energy consumption of a network slice: Next, the energy consumption of a network slice NS is modelled together with its ring fence RF.

Energy consumption of a ring fence within a network slice: To actualise the energy consumption, the energy consumption of a ring fence should be incorporated within that network slice. This term depends on the efficiency of the ring fence, its static power consumption, and the total operating time T. Static power consumption is independent of circuit power and cooling.

Incorporating parameters of network resources: Modelling the total network energy consumption taken from each slice according to [15], the equation should include the bandwidth, processing demands, and the energy consumption of network resources. Hence, the modified energy equation adds three kinds of terms: the energy consumption of each network resource u within each ring fence; the energy consumed due to the CPU processing of all network resources within each ring fence, considering the processing power consumption; and the energy consumption due to communication between different layers of the network, considering the bandwidth and the power consumption over time T. Next, this non-convex optimisation equation shall be incorporated with end-to-end constraints for the heuristic approach.
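As a rough illustration of how the components described above add up, the following Python sketch sums a central cloud term, a static and a CPU processing term per ring fence, and a communication term. The functional forms (power multiplied by time and divided by an efficiency assumed to lie in (0, 1]), the per-unit-bandwidth communication power, and all names (`RingFence`, `central_cloud_energy`, `ring_fence_energy`, `communication_energy`, `total_energy`) are assumptions made for this example; they are not the exact expressions of the model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RingFence:
    efficiency: float           # assumed to lie in (0, 1]
    static_power_w: float       # static power draw of the ring fence (W)
    proc_power_w: List[float]   # processing power of each network resource u (W)


def central_cloud_energy(efficiency: float, p_compute_w: float,
                         p_cool_w: float, t_s: float) -> float:
    """Assumed form: (compute + cooling power) * time / efficiency, in joules."""
    return (p_compute_w + p_cool_w) * t_s / efficiency


def ring_fence_energy(rf: RingFence, t_s: float) -> float:
    """Assumed form: static energy scaled by efficiency plus CPU processing energy."""
    static = rf.static_power_w * t_s / rf.efficiency
    processing = sum(rf.proc_power_w) * t_s
    return static + processing


def communication_energy(bandwidth_mbps: float, power_w_per_mbps: float,
                         t_s: float) -> float:
    """Assumed form: bandwidth * power per unit of bandwidth * time, in joules."""
    return bandwidth_mbps * power_w_per_mbps * t_s


def total_energy(cloud_j: float, slices: List[List[RingFence]],
                 comm_j: float, t_s: float) -> float:
    """Sum the central cloud, every ring fence of every slice, and communication."""
    fences_j = sum(ring_fence_energy(rf, t_s) for ns in slices for rf in ns)
    return cloud_j + fences_j + comm_j


if __name__ == "__main__":
    t = 3600.0  # one hour of operation (hypothetical)
    cloud = central_cloud_energy(0.8, 400.0, 100.0, t)
    slices = [[RingFence(0.9, 45.0, [20.0, 30.0])],
              [RingFence(0.9, 90.0, [50.0])]]
    comm = communication_energy(100.0, 0.05, t)
    print(f"{total_energy(cloud, slices, comm, t) / 1000:.1f} kJ")
```

Keeping each component in its own function makes it easy to swap in a different assumed form for one term without touching the others.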

3.2. Iterative Heuristic Energy-Aware Non-Convex Equation

To enhance QoS, minimum and maximum latency constraints are incorporated, where respective weights coupled with the minimum and maximum latencies are included to support performance-sensitive applications, as in [5].

To provide energy-aware scheduling for techniques such as dynamic adaptation and smart sleeping, a DVS term is included, as in [5]. To clarify the terms, the full list is shown below:

| Energy Component | Mathematical Expression |
| --- | --- |
| Central Cloud Energy | |
| Network CPU Processing Energy | |
| Network Resource and Ring Fence Energy | |
| Inter-Network Slice Communication Energy | |
| Latency Constraint | |
| DVS Energy | |

With these requirements in mind, the optimisation equation shall now be formulated as the sum of the aforementioned components:

Total Energy = ’Central Cloud Energy’ + ’Network CPU Processing Energy’ + ’Network Resource and Ring Fence Energy’ + ’Inter-Network Slice Communication Energy’ + ’Latency Constraint’ + ’DVS Energy’,

where the ’DVS Energy’ term incorporates the power consumption of the CPU according to the DVS technique. It depends on a coefficient subject to chip design, the computational speed S of the CPU, and the task execution time for each user. Thus, the IHEA-NC optimisation equation models a network slicing–ring fencing ratio for cloud infrastructure.
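The effect of the DVS term can be illustrated with a short Python sketch. It assumes the common cubic model in which CPU power equals a chip-dependent coefficient times the cube of the computational speed, and task energy equals that power times the execution time. The function names, the coefficient value, and the cycle count are hypothetical and chosen only for illustration.

```python
def dvs_power(kappa: float, speed_hz: float) -> float:
    """CPU power modelled as the cube of the computational speed (W)."""
    return kappa * speed_hz ** 3


def dvs_energy(kappa: float, speed_hz: float, exec_time_s: float) -> float:
    """Energy of one task: power at the chosen speed times its execution time (J)."""
    return dvs_power(kappa, speed_hz) * exec_time_s


def energy_for_cycles(kappa: float, speed_hz: float, cycles: float) -> float:
    """Energy for a fixed workload; execution time is cycles / speed."""
    return dvs_energy(kappa, speed_hz, cycles / speed_hz)


if __name__ == "__main__":
    kappa = 1e-27   # hypothetical chip coefficient
    cycles = 2e9    # hypothetical task size in CPU cycles
    fast = energy_for_cycles(kappa, 2e9, cycles)
    slow = energy_for_cycles(kappa, 1e9, cycles)
    print(fast, slow, fast / slow)
```

Under this assumed model, the energy for a fixed number of cycles scales with the square of the speed, so halving the speed cuts the energy to roughly a quarter while doubling the execution time. This is the trade-off between energy consumption and task execution time that the latency constraints are meant to bound.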

The total energy consumption defined in (5) is described as pseudocode that efficiently satisfies the degree of convergence. With the initialisation and initial calculation described in Algorithm 1, we can now proceed with the gradient descent optimisation part by evaluating each heuristic against the convergence criterion.

| Algorithm 1 Pseudocode of the proposed IHEA-NC algorithm (using CloudSim terminology) |

Step 1: Initialize parameters

Require: iterationStart, iterationEnd, zetaCC, pComputeCC, pCoolCC, pProcCC, zetaRF, pStaticRF,

commBandwidth, commPower, tau, taskExecutionTime, dynamicScalingFactor, alphaMax, betaMin

Step 2: Create datacenters and initialize datacenter broker

for each network slice ∈ network slices:

hosts(PEs, RAM, BW, Storage with hosts)

DatacenterCharacteristics ← (Architecture, OS, VMM,

hostList, cost, costPerMem, costPerStorage, costPerBw)

Datacenter ← (name, DatacenterCharacteristics,

VmAllocationPolicySimple(hostList), storageList,

schedulingInterval)

for each ring fence ∈ slice:

VMs (MIPS, RAM, BW, size, PEs, VMM);

broker ← submitVmList(vmList)

Step 3: Load Cloudlets from preprocessed dataset and submit

Corresponding VMs and cloudlets

Read fastStorage, rnd CSVs

for each row ∈ CSV:

row ← [CPU, RAM, Disk, BW]

Cloudlet ← (length, PEs, input, output);

cloudletList ← cloudletList ∪ newCloudlet

vmList → broker

cloudletList → broker

Step 4: Simulate inter-VM communication

for each (VM_i, VM_j) ∈ VM_Pairs:

simulateCommunicationBetweenVMs(VM_i, VM_j, BW);

CommEnergy ← (commPower × BW)

for each isolated VM:

IdleEnergy ← IdleEnergy

Step 5: Start CloudSim simulation and collect the simulation results

StartSimulation()

CloudletListProcessed ← Broker.getCloudletReceivedList()

StopSimulation()

for each Cloudlet ∈ CloudletListProcessed:

broker ← [taskExecutionTime, cloudlet.getExecStartTime(),

cloudlet.getFinishTime()]

Step 6: Calculate energy consumption

Compute + cooling:

Static:

Processing:

Communication:

Latency + DVS:

Cooling overhead:

Step 7: Log and write output results

TotalEnergy← calculateTotalEnergyWithLatencyAndDVS(CloudletListProcessed, VMList)

CoolingPower← calculateCoolingPower(TotalEnergy)

TotalEnergyWithCooling← TotalEnergy + CoolingPower

writeCloudletResultsToFile(CloudletListProcessed, OutputFile)← [TotalEnergy, CoolingPower, TotalEnergyWithCooling]

Terminate CloudSim environment |

3.3. Consistent Convergence Criterion

For each algorithm, a consistent convergence criterion ensured a fair comparison of effectiveness by assessing the performance of each algorithm with environmental conditions. This single convergence threshold value was initialised to 0.01, which in practical terms indicates that 1% of the initial total energy must be reduced iteratively. This value refines the sensitivity of each algorithmic step and can be tuned to accelerate convergence if required. The value was kept constant to be able to compare tests in a number of scenarios. The defined step ensured that the desired energy consumption stabilises over a certain threshold through gradient descent. More advanced optimisation strategies could be implemented but will likely take longer to calculate, impacting the resource allocation, which should run in real-time.

3.4. The Iterative Heuristic Energy-Aware Non-Convex (IHEA-NC) Equation

Define the initial threshold value, for instance $\epsilon = 0.01$.

Calculate the initial total energy consumption $E_{total}^{(0)}$ based on the initial parameters.

Calculate the convergence threshold value as $\delta = \epsilon \cdot E_{total}^{(0)}$.

Iteration 1: Update the parameters and calculate the new total energy consumption $E_{total}^{(1)}$.

Check convergence: Calculate the difference $\Delta E^{(1)} = |E_{total}^{(1)} - E_{total}^{(0)}|$. If $\Delta E^{(1)} < \delta$, the algorithm has converged. Otherwise, proceed to the next iteration.

For $k \geq 2$, repeat the following steps until convergence is achieved:

- Update parameters and calculate the total energy consumption: Update the parameters based on the current iteration. Calculate the total energy consumption $E_{total}^{(k)}$.

- Check convergence: Calculate the difference in a gradient descent way: $\Delta E^{(k)} = |E_{total}^{(k)} - E_{total}^{(k-1)}|$.

- If $\Delta E^{(k)} < \delta$, the algorithm has converged.
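The iteration described in the steps above can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation: the parameter update rule is not specified in this section, so it is passed in as a caller-supplied function, and the strict comparison against 1% of the initial energy is an assumption. The names `iterate_until_converged`, `energy`, and `update`, as well as the toy energy function and step size in the demo, are hypothetical.

```python
from typing import Callable, List, Tuple


def iterate_until_converged(
    energy: Callable[[List[float]], float],
    update: Callable[[List[float]], List[float]],
    params: List[float],
    epsilon: float = 0.01,
    max_iter: int = 100,
) -> Tuple[List[float], float, int]:
    """Repeat update/evaluate until the energy change drops below epsilon * E0."""
    previous = energy(params)
    threshold = epsilon * previous   # e.g. 1% of the initial total energy
    for k in range(1, max_iter + 1):
        params = update(params)
        current = energy(params)
        if abs(previous - current) < threshold:
            return params, current, k
        previous = current
    return params, previous, max_iter


if __name__ == "__main__":
    # Toy, convex stand-in for the total energy; the real model is non-convex.
    def toy_energy(p: List[float]) -> float:
        return 100.0 + (p[0] - 3.0) ** 2

    # One gradient descent step with a hypothetical step size of 0.4.
    def toy_update(p: List[float]) -> List[float]:
        return [p[0] - 0.4 * 2.0 * (p[0] - 3.0)]

    final_params, final_energy, iterations = iterate_until_converged(
        toy_energy, toy_update, [10.0]
    )
    print(final_params, final_energy, iterations)
```

Because the threshold is fixed once from the initial energy, every heuristic plugged into `update` is judged against the same stopping rule, which mirrors the idea of a consistent convergence criterion across the compared algorithms.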

The optimal point is calculated based on input parameters. IHEA-NC does not track parameter deviations. Therefore, the solution will become sub-optimal at some stage. In such cases, IHEA-NC can be recalculated upon changes in input parameters, such as changes in CPU, memory, disk I/O, or network bandwidth. It can refine the optimal point with gradient descent to reduce total energy consumption within a few iterations. When the cloud architecture or network resources change, a controller or threshold-based trigger (which is not covered in the present paper) could quickly recalculate IHEA-NC to maintain optimal energy consumption (minimising energy consumption and task completion time).

3.4.1. Simulations Using CloudSim

CloudSim is a flexible and extensible cloud simulation framework for cloud-based applications in heterogeneous real-world infrastructures. The key components of the CloudSim tool include datacenter characteristics, DatacenterBroker, NetworkTopology, virtual machines, and cloudlets. In the incorporated network slicing–ring fencing architecture, network slices, ring fences, and computational network resources are mapped to data centres, hosts within data centres, and virtual machines, respectively. The hosts define the performance metrics central to the simulation, including CPU, memory, disk, and network bandwidth. The virtual machines represent the CPU, memory, storage, and network bandwidth resources allocated to each network resource inside each ring fence. Finally, cloudlets represent the tasks performed inside the virtual machines of each network slice. A cooling constraint central to the energy model was indirectly simulated in the architecture to model initial energy constraints for each heuristic.
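The mapping described above (network slices to data centres, ring fences to hosts, computational network resources to virtual machines) can be pictured with a few plain Python data classes. This is only an illustrative sketch and does not use the CloudSim API. All class and field names are hypothetical, and the ratio computed here, the number of slices divided by the number of ring fences, is an assumed definition used for the example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class NetworkResource:
    """Plays the role of a virtual machine."""
    mips: int
    ram_mb: int
    bw_mbps: int


@dataclass
class RingFence:
    """Plays the role of a host inside a data centre."""
    pes: int
    resources: List[NetworkResource] = field(default_factory=list)


@dataclass
class NetworkSlice:
    """Plays the role of a data centre."""
    name: str
    ring_fences: List[RingFence] = field(default_factory=list)


def slice_to_ring_fence_ratio(slices: List[NetworkSlice]) -> float:
    """Assumed definition: number of slices divided by total number of ring fences."""
    fences = sum(len(ns.ring_fences) for ns in slices)
    if fences == 0:
        raise ValueError("at least one ring fence is required")
    return len(slices) / fences


if __name__ == "__main__":
    vm = NetworkResource(mips=1000, ram_mb=2048, bw_mbps=100)
    topology = [
        NetworkSlice("slice-a", [RingFence(4, [vm]), RingFence(4, [vm])]),
        NetworkSlice("slice-b", [RingFence(8, [vm, vm])]),
    ]
    print(slice_to_ring_fence_ratio(topology))
```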

3.4.2. GWA-T-12 Bitbrains Dataset

The GWA-T-12 Bitbrains Dataset (available at https://tinyurl.com/4wr29k4j) contains the network performance metrics of 1750 VMs from a distributed datacentre managed by Bitbrains. CPU, memory, disk, and network data from the fast storage network (fastStorage) and slower network attached storage (Rnd) devices are the parameters used to calculate the energy consumption. The following section describes the calculation for this specific dataset in more detail.

Understanding the data structure: Two dataset traces labelled fastStorage and Rnd, which consist of the network performance metrics of 1250 VMs connected to fast storage area network (SAN) devices and 500 VMs connected to slower network attached storage (NAS) devices, respectively, were loaded from a specified directory. With libraries like Pandas for data manipulation, the required parameters were loaded and used within the simulations.

Filtering the relevant network performance metrics: The total computational load of resources was aggregated for each relevant trace per hour per computational resource. Since the mean usage of resources can fluctuate significantly compared to the daily usage with energy consumption, CPU core values were rounded to 0 or 1, non-numeric values were filled in the “Timestamp” column, and relevant time-series data were extracted with normalisation and consistent scaling.

Loading and structuring the data for CloudSim: Through proper concatenation of the relevant dataframes, the pre-processed dataset from each network storage device is converted to a suitable format to be processed. With columns like timestamp, CPU, memory, disk, and network usage, the script saved the cleaned data set to CSV files for input to CloudSim.
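The per-hour aggregation step can be sketched with the Python standard library alone. The sketch below sums a CPU usage column per VM and per hour and replaces non-numeric cells with a default value. The column names `timestamp_s` and `cpu_usage_mhz`, the semicolon delimiter, the zero default, and the sample rows are assumptions for illustration and may differ from the actual trace files and from the preprocessing script used in this work.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, Iterable, Mapping, Tuple


def to_float(value, default: float = 0.0) -> float:
    """Parse a numeric cell, falling back to a default for non-numeric entries."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return default


def hourly_load(rows: Iterable[Mapping[str, str]],
                vm_id: str) -> Dict[Tuple[str, int], float]:
    """Sum CPU usage per (VM, hour) from an iterable of dict-like rows."""
    totals: Dict[Tuple[str, int], float] = defaultdict(float)
    for row in rows:
        hour = int(to_float(row.get("timestamp_s"))) // 3600
        totals[(vm_id, hour)] += to_float(row.get("cpu_usage_mhz"))
    return dict(totals)


if __name__ == "__main__":
    # Tiny in-memory stand-in for one VM trace file.
    sample = io.StringIO(
        "timestamp_s;cpu_usage_mhz\n"
        "0;100.0\n"
        "1800;50.0\n"
        "3600;n/a\n"
        "5400;25.0\n"
    )
    reader = csv.DictReader(sample, delimiter=";")
    print(hourly_load(reader, "vm-1"))
```

The same pattern extends to memory, disk, and network columns by adding one accumulator per metric before the rows are written out as CSV input for the simulator.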

4. Results

Three scenarios are presented to show how the novel network slicing–ring fencing ratio works. Each setup benchmarked speed in convergence with refined execution time and energy consumption. The purpose of these experimental tests was to demonstrate the efficacy of IHEA-NC in idealising the slicing-to-ring fencing ratio of the network.

As described in the previous section, the proposed IHEA-NC algorithm optimises energy consumption by balancing network bandwidth and processing power.

Latency constraints are implemented by maintaining a baseline performance level. Moreover, the proposed equation also incorporates energy-aware dynamic voltage scaling (DVS) while solving multi-user offloading in high-quality network slices. It has the key components of modern, scalable cloud model infrastructures.

4.1. Baseline Performance Simulation

The following experiments were performed on CloudSim 3.0.3. A convergence threshold can help idealise the approach of iterative calculation to convergence in energy consumption for the respective algorithm.

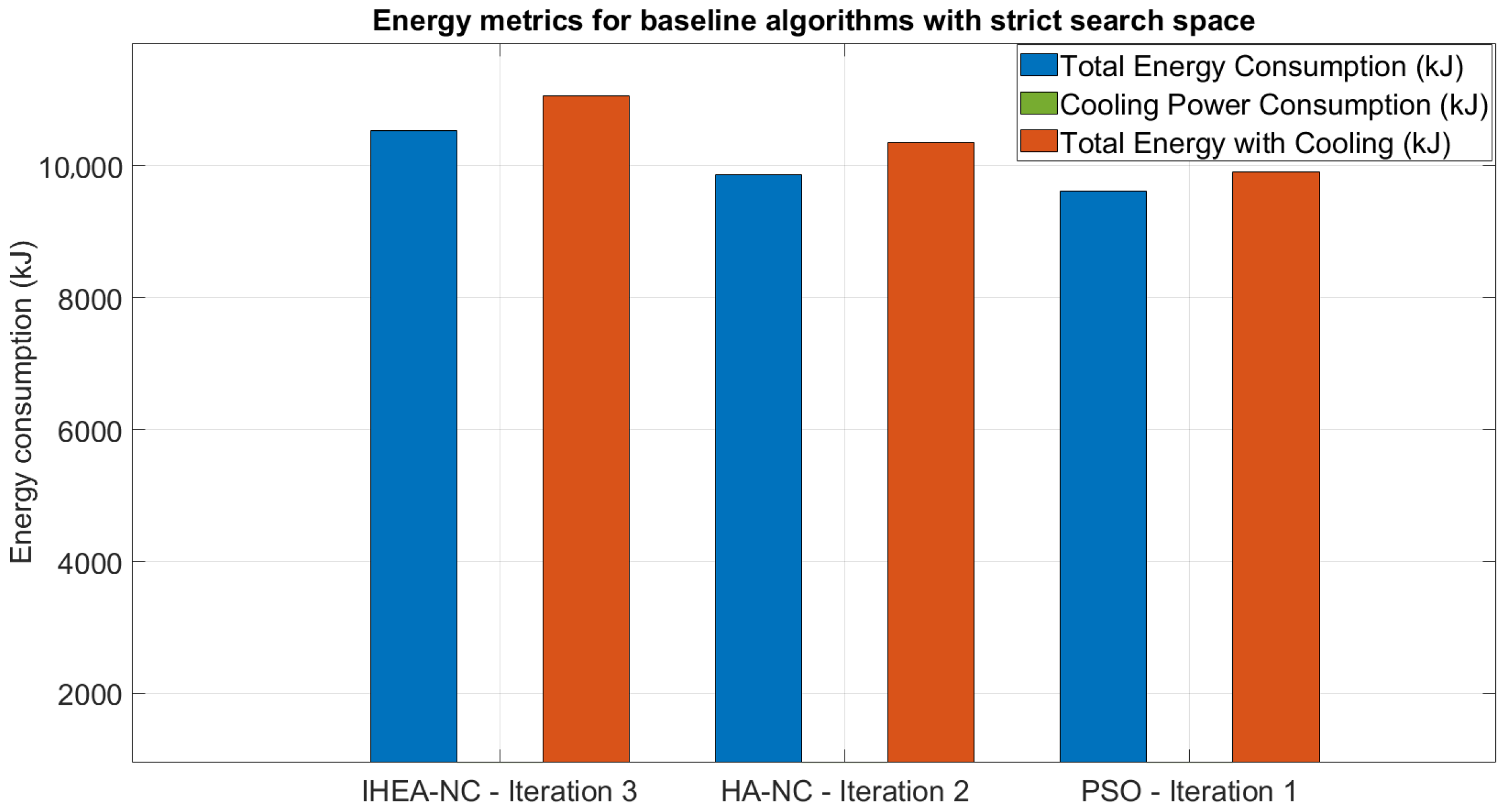

As shown in the baseline results in Figure 2, the HA-NC and PSO algorithms reported energy consumptions of 10,355.299 kJ and 9909.403 kJ, respectively.

The proposed IHEA-NC algorithm, as well as the other two baseline approaches, converged in three or fewer iterations. Notice that all baseline energy consumptions are within the same order of magnitude.

4.2. Increased Search Space

After reviewing the baseline calculations, we can proceed to show the benefits of the proposed method by increasing the search space in a way similar to [16], which used a larger search space and delay-specific penalties. It can be seen in Figure 3 that under similar system requirements, the HA-NC and PSO algorithms reported a total energy consumption of 2464.3 kJ and 10,738.07 kJ, respectively. See Table 1 for energy consumption details in all tests.

By comparing the baseline calculations with the improved search space, we can see a gain of 76.20% for HA-NC and a loss of 8.36% for PSO. Meanwhile, the negligible baseline difference of 3.38% between 11,054.202 kJ in Figure 2 and 10,680.219 kJ in Figure 3 for IHEA-NC ensured its consistency in total energy consumption within acceptable limits with just a couple of iterations (see Table 2 for more details on iterations).
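The percentages quoted above follow from the reported energy values as a relative change with respect to the baseline. The short Python sketch below reproduces that arithmetic; the helper name `relative_change` is hypothetical, and the input values are the ones reported for the baseline and the increased search space.

```python
def relative_change(before_kj: float, after_kj: float) -> float:
    """Percentage reduction from before to after (negative means an increase)."""
    return (before_kj - after_kj) / before_kj * 100.0


# (baseline, increased search space) total energy in kJ, as reported in the text
RESULTS = {
    "HA-NC": (10355.299, 2464.3),
    "PSO": (9909.403, 10738.07),
    "IHEA-NC": (11054.202, 10680.219),
}

if __name__ == "__main__":
    for name, (before, after) in RESULTS.items():
        print(f"{name}: {relative_change(before, after):+.2f}%")
```

A positive value is a gain (less energy than the baseline) and a negative value is a loss, which matches the 76.20% gain for HA-NC, the 8.36% loss for PSO, and the 3.38% difference for IHEA-NC.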

4.3. Completion Time

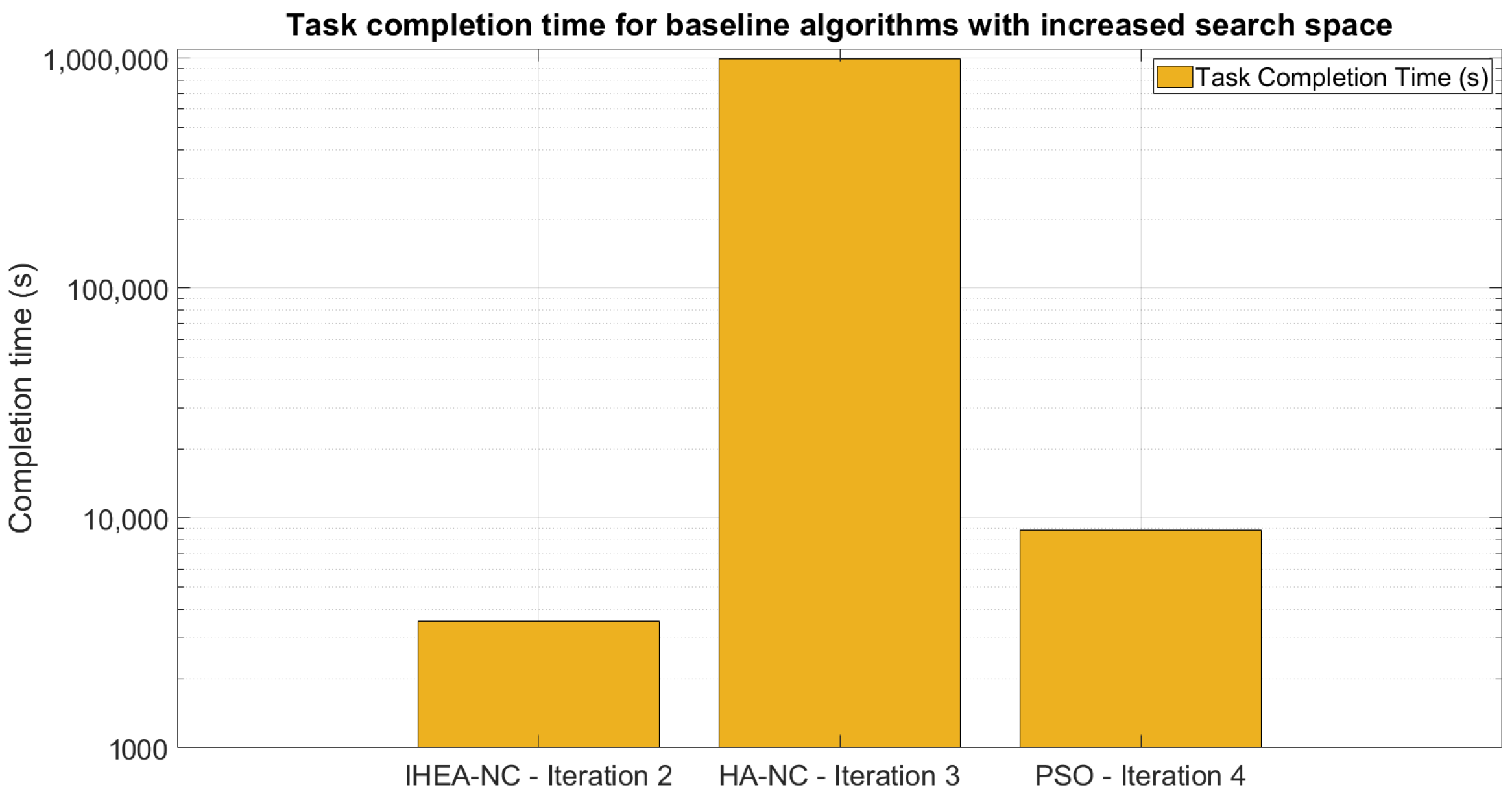

From Figure 3 we can see that the IHEA-NC algorithm had an energy consumption similar to that of PSO. Regarding task completion time, the HA-NC and PSO algorithms reported 990,102.83 s and 8888.34 s, respectively (Figure 4). The IHEA-NC algorithm takes about half the time of PSO, which still uses the most iterations for convergence.

Those large disparities in task completion time and completed iterations for the HA-NC and PSO algorithms, respectively, point to initial inadequacies in the desired optimisation ratio. However, the proposed IHEA-NC algorithm completes the simulation tasks in 3900 s, which is a shorter time than HA-NC and PSO. The optimised ratio in IHEA-NC facilitates efficient resource allocation. To further show the applicability of IHEA-NC, a proof-of-concept code was developed in AWS.

4.4. AWS Scenario

The differences in energy consumption with convergence and resource allocation of network slices can be further highlighted with uneven multi-scale architectures.

By designing dense, uneven “aggregated” architectures with high-quality network slices (ring fences with a larger number of hosts), network architects greatly benefit from the efficiency gain in scalability testing by seeking observations with differences in energy metrics. Clients seeking heterogeneous requirements such as virtual network function (VNF) placement [17,18,19,20] can benefit greatly from energy-efficient models spread across aggregated slices.

Various network slices and ring fences with their computational network resources were simulated in AWS through virtual components with VPCs, subnets, security groups, and elastic compute cloud instances. The IHEA-NC, HA-NC, and PSO algorithms were coded in AWS Lambda (serverless); the code is available at https://tinyurl.com/yeyku8d2 (accessed on 15 July 2025). Performance metrics were collected using AWS CloudWatch. The metrics were saved in AWS S3 buckets to examine their response performance and log files.

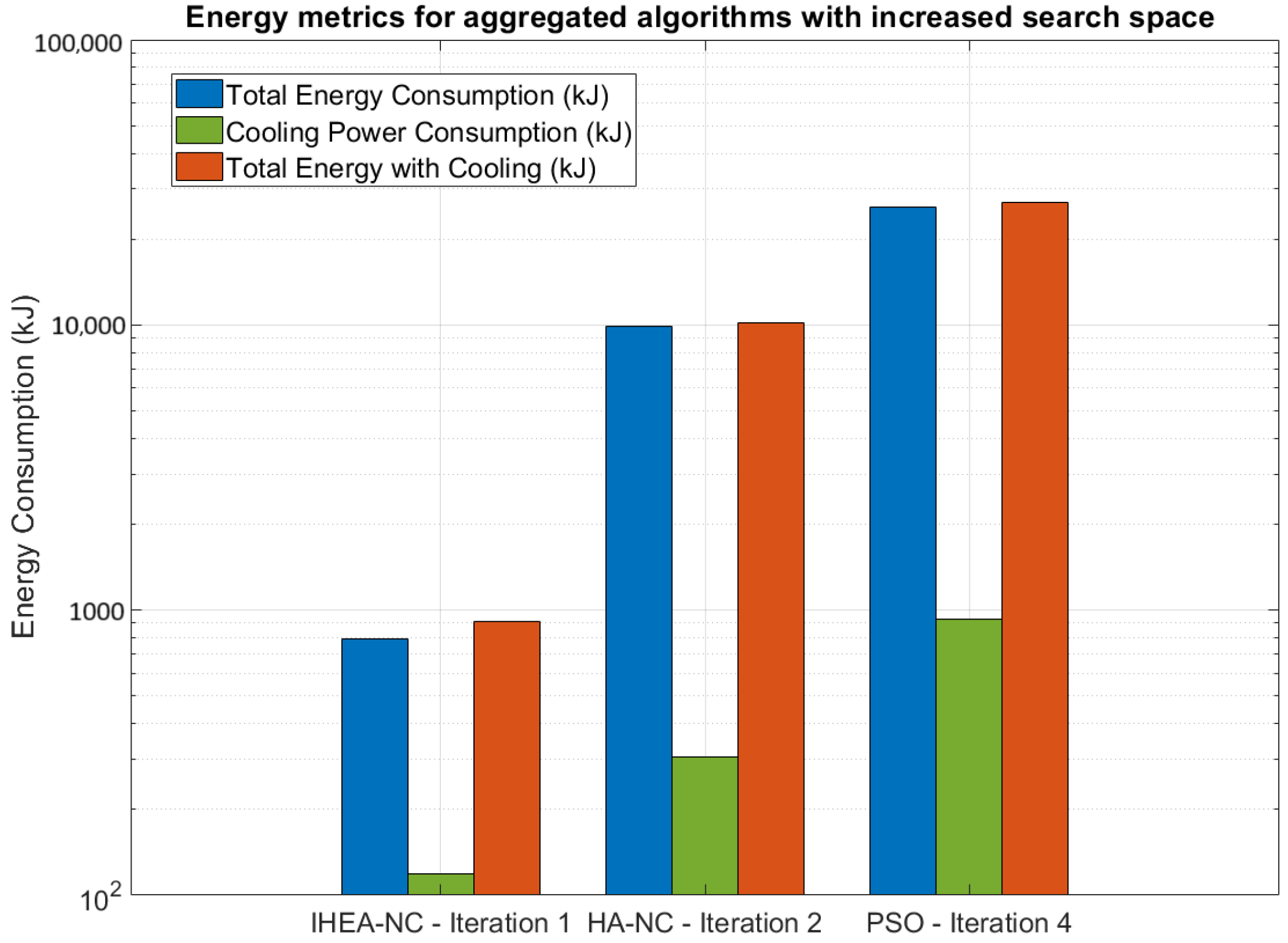

As can be seen in Figure 5, the simulation in AWS shows encouraging results. While IHEA-NC consumed 912.958 kJ, both the HA-NC and PSO algorithms were well beyond that point (10,168.261 kJ and 26,845.584 kJ, respectively, as seen in Table 1). In fact, the PSO algorithm reports the worst performance in terms of energy consumption (with and without factoring in cooling energy). It is also interesting to note that the simulation in AWS is qualitatively different from CloudSim (Figure 3) in which both the HA-NC and the PSO energy consumption were practically the same. In AWS, the energy consumption of the IHEA-NC algorithm was roughly an order of magnitude lower than that of HA-NC and PSO.

5. Discussion

The results in Figure 5 and Table 1 indicate that IHEA-NC had an overall efficiency gain of 91.46% for total energy consumption (912 kJ vs. 10,680 kJ). Similarly, IHEA-NC in Figure 4 reported the fastest task completion time evaluated against the other heuristics (3555 s vs. 990,102 s and 8888 s), which points to faster convergence times while maintaining accurate task completion. Table 1 and Table 2 summarise the energy consumption and the number of iterations. It can be seen that IHEA-NC outperforms the other heuristics, particularly in aggregated cloud models with varied system requirements.

The calculation involved in the network slicing–ring fencing ratio is simple and converges in just a few iterations (which amounts to milliseconds). Thus, it is favourable for energy minimisation in heterogeneous network slicing–ring fencing architectures. However, IHEA-NC lacks formal robustness against uncertainty in input parameters. Interesting future work could focus on modelling IHEA-NC using input probability distributions and stochastic approaches [21], or introducing adaptive control techniques [22,23].

With varied system requirements depending on the need of the client, discretisation for the compared algorithmic adaptations resulted in limitations with disparate results. For an increased search space, the iterative heuristic energy-aware non-convex optimisation equation ensures its suitability for heterogeneous architectures with resource-heavy workloads. Network architects can optimise their aggregated network topologies consisting of interconnected switches for network operators to adapt through client needs for resource-heavy workloads. The same consistency observed with AWS through virtualisation in this paper is expected to be observed in other cloud providers like Google Cloud Platform (GCP) and Microsoft Azure due to similar platform-specific virtual machine implementations.

We proposed a novel energy optimisation heuristic called IHEA-NC that iteratively refines a network slicing–ring fencing ratio for heterogeneous cloud infrastructures. Our results show that the proposed approach achieved an improvement of 91.46% compared to state-of-the-art approaches, such as the HA-NC and PSO algorithms [5,6].

Although IHEA-NC is calculated iteratively, it has been shown to quickly converge to improved energy consumption and computational execution time within a few iterations. The simplicity of its calculations makes it very competitive compared to alternative network slicing algorithms [3,4]. Switches, workload orchestrators, or cloud controllers are fast enough to calculate IHEA-NC to meet client service agreements [18,19,20], especially in business-critical workloads [24].

6. Conclusions

We have demonstrated how NOMA clustering based on the delay tolerance of network slices and inter-network resource communication modelled the HA-NC algorithm. The PSO algorithm offloads computational tasks between interconnected devices according to the offloading priority. Through the creation of simulation environments on each respective platform, the IHEA-NC algorithm was shown to be the most efficient, achieving an efficiency gain of 91.46% in total energy consumption together with the shortest computational execution time for the aforementioned experimental objectives. It therefore outperforms the HA-NC and PSO algorithms [5,6], which reported 312.74% (2464.300 kJ vs. 10,168.261 kJ) and 150% (10,738.07 kJ vs. 26,845.584 kJ) increases in total energy consumption, respectively. Similarly, it is more suited to aggregated workloads, with a lower computational execution time than the HA-NC and PSO algorithms (3555.565 s vs. 990,102.8350 s and 8888.3450 s), which results in higher throughput and overall lower per-task energy consumption in heterogeneous architectures. Owing to its shorter task completion time and faster convergence compared with HA-NC and PSO, IHEA-NC is an advantageous heuristic regardless of the cloud platform and resource allocation. When the underlying cloud architecture and settings change, the IHEA-NC heuristic must be recalculated to keep the energy profile in optimal conditions. Highly dynamic network architectures may rerun IHEA-NC frequently to improve robustness or trigger a recalculation step when a certain pre-set threshold is reached. Monitoring these changes to partially recalculate IHEA-NC is an interesting research direction.

The inclusion of feasible and non-overlapping regions for energy consumption can be further explored by incorporating integrity and confidentiality to prevent security breaches. The work could also be extended by looking at different types of workloads to assign proportional network slices with priority queues [20]. Finally, green cloud models also benefit from IHEA-NC by reducing their overall carbon footprint as a result of reduced power consumption. The heuristic shown here could also be extended to include offloading strategies that minimise delays and energy consumption in other domains, such as 6G, ubiquitous computing, and future networks.