1. Introduction

At the beginning of the previous decade, the advent of New Space drove a revolution in the space sector with the introduction of cutting-edge technologies [1]. Its main impact was the cost reduction of Low Earth Orbit (LEO)-based space solutions, enabled by groundbreaking milestones such as reusable launch vehicles [2] and the miniaturization of satellite designs [3]. This opened new technological frontiers and brought private companies into the market.

The ambition to enter the broadband services market led space companies to focus on LEO satellites, since their orbital characteristics allowed the companies to relax their link budget, maximizing operational throughput and reducing latency. Although these benefits were essential for broadband services, the high orbital speed of the satellites caused link disruptions, leading to service discontinuity. This issue was overcome with the emergence of mega-constellations, i.e., LEO constellation architectures that comprise hundreds or even thousands of satellites and rely on their sheer number to ensure continuity [4]. These constellations achieved a major milestone with the provision of global and ubiquitous services. Currently, only a few mega-constellation projects operate commercially.

The global and seamless service provision targeted by the future 6th Generation (6G) encouraged the 3rd Generation Partnership Project (3GPP) to integrate satellite systems into cellular networks, aiming to extend coverage to unserved or underserved geographical areas. This standardization started in Release 17 [5] with the definition of Non-Terrestrial Networks (NTN), which considered the use of satellites to provide broadband services.

The implementation of NTN raises new opportunities for 3GPP network architectures. The key factor that determines the addressable architectures is the type of satellite payload. While a transparent satellite acts as a repeater, or bent pipe, between ground network components, a regenerative satellite embarks extended capabilities that enable hosting network components on board [6]. Comparing both payload types opens a broad discussion, as each has its own advantages and disadvantages. For instance, transparent satellites involve simpler designs, reducing their in-orbit cost and time to market, at the expense of limited service provision [7]. Consequently, the choice between a transparent and a regenerative architecture may depend on the circumstances of the targeted scenario, such as the financial and technical resources of the satellite provider, the service requirements of the use case, or the project and service duration.

The intention of this work to experiment under real-world conditions has led us to investigate transparent architectures, since real regenerative satellites require the availability of dedicated infrastructure and involve more complex and time-consuming workflows. Regarding the recent standardization efforts of 3GPP in 5th Generation (5G) NTN [

8,

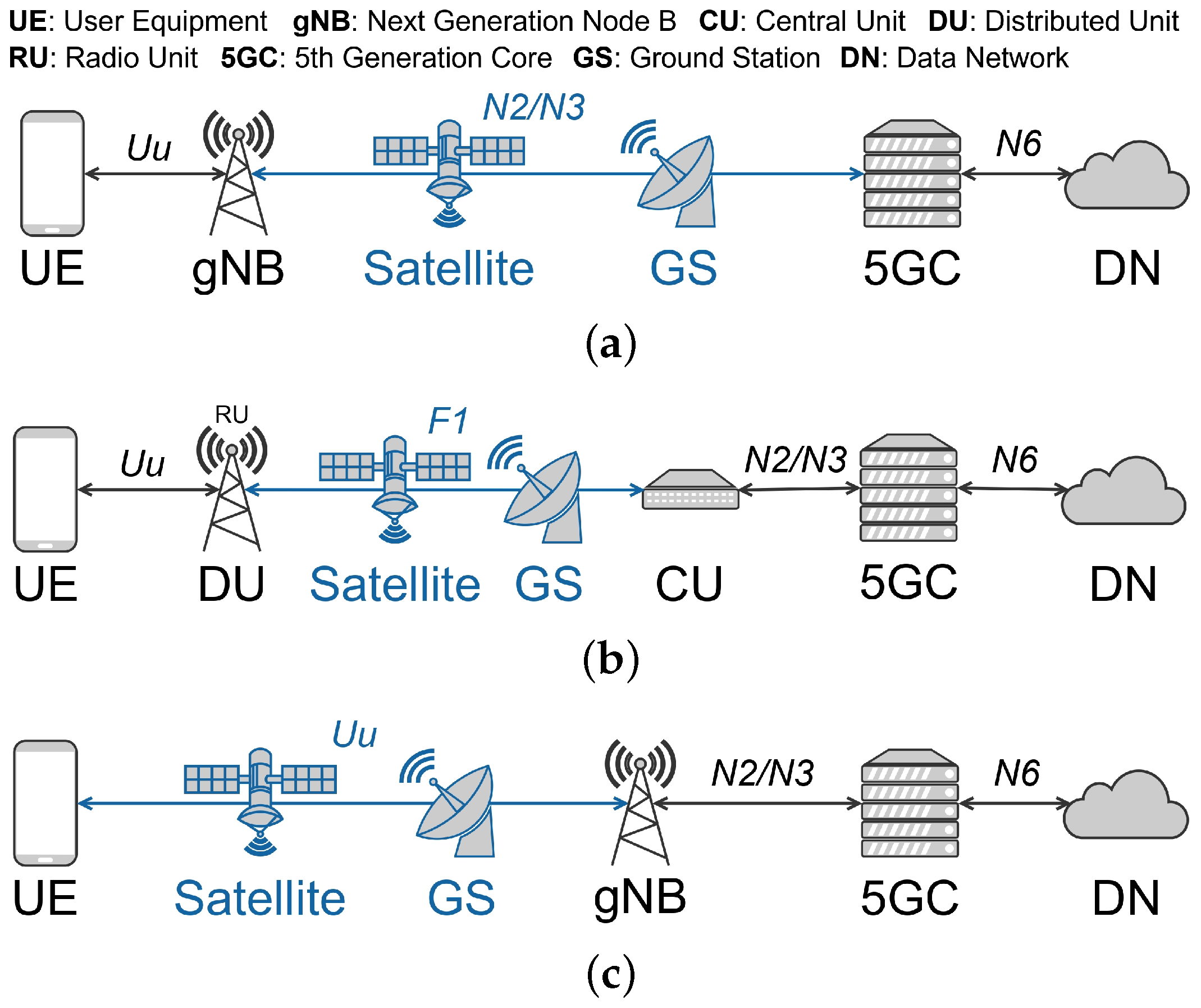

9], we propose the three architectures outlined in

Figure 1. Option (a) positions the satellite link over the backhaul, i.e., the connection between the Radio Access Network (RAN) and the 5G Core (5GC). This connection covers the N2 interface, which connects the Next Generation Node B (gNB) to the Access and Mobility Management Function (AMF), and the N3 interface, which connects the gNB to the User Plane Function (UPF). Option (b) exploits the functional split technology of 5G by which the gNB’s functionality is essentially separated in the Central Unit (CU) and Distributed Unit (DU) modules. This option places the satellite link over the midhaul, i.e., the connection between the CU and the DU. Particularly, the functional split implements split option 2, as it is the only one standardized by 3GPP. The CU and DU are connected via the F1 interface. Finally, option (c) places the satellite link between the User Equipment (UE) and the RAN, relaying the New Radio (NR) signal. The Uu interface connects the UE to the gNB. The affected interfaces in each architecture are subject to a distinct set of performance requirements and limitations, indicating that their relay through satellite links has an impact on their operation or even on their technical viability.
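The mapping between each option in Figure 1 and the interfaces carried over the satellite link can be summarized as a small lookup table. The following is a minimal illustrative sketch: the class and function names are hypothetical, and F1 is split into its control-plane (F1-C) and user-plane (F1-U) parts, which are mentioned later in this paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransparentOption:
    name: str
    relayed_segment: str          # network segment carried over the satellite link
    interfaces: tuple[str, ...]   # 3GPP interfaces that traverse that link


# Values transcribed from the description of Figure 1.
OPTIONS = {
    "a": TransparentOption("backhaul", "RAN <-> 5GC", ("N2", "N3")),
    "b": TransparentOption("midhaul", "DU <-> CU (split option 2)", ("F1-C", "F1-U")),
    "c": TransparentOption("NR relay", "UE <-> RAN", ("Uu",)),
}


def options_relaying(interface: str) -> list[str]:
    """Return the option labels whose satellite link carries the given interface."""
    return [label for label, opt in OPTIONS.items() if interface in opt.interfaces]


if __name__ == "__main__":
    for label, opt in OPTIONS.items():
        print(f"({label}) {opt.name}: {opt.relayed_segment} via {', '.join(opt.interfaces)}")
```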

This paper contributes to the exploration of 5G NTN transparent architectures by studying their implementation with mega-constellations, an approach that, to the best of our knowledge, has not been addressed before. We present a methodology to deploy a testbed, based on open-source software, that combines the required 5G functionality with real mega-constellation connectivity. With this testbed, we collect time metrics from each of the transparent architectures proposed in Figure 1, which we then use to evaluate their overall performance. The results provide valuable insight into the potential of mega-constellations as a viable option for transparent architectures, introducing the community to this kind of 5G NTN implementation. Nevertheless, integrating external commercial services into the testbed, as is the case with the mega-constellation, inevitably requires a number of assumptions throughout the work due to their black-box nature, and poses technological limitations because low-level aspects are abstracted away from end users.

This paper is organized as follows: Section 2 briefly provides additional context about the evolution of the space communications sector, the current mega-constellation industry, and the concept of the split gNB. Section 3 gathers related works and discusses their contributions. Section 4 presents a characterization of the Starlink service and then explains the development of the testbed. Section 5 describes the methodology followed to collect the metrics. Section 6 provides a technical analysis of the metrics obtained from the tests. Section 7 discusses the findings of this paper from a practical perspective. Finally, Section 8 concludes this paper.

2. Background

The space communications industry historically approached the provision of broadband services with Geosynchronous Equatorial Orbit (GEO) satellites, ensuring service availability to user terminals thanks to their high orbital altitude, which provides wide Earth visibility and a fixed position in the sky [10]. However, this also resulted in large latencies and difficulties in reaching polar regions, as their orbital plane lies on the equator. Despite the benefits of service availability, the one-way propagation latency of about 120 ms (obtained by dividing the orbital altitude, 35,786 km, by the speed of light) caused significant performance degradation for broadband services. At present, this industry remains active for specific use cases, such as backhaul connectivity (SES [11] and Hughes [12]) or limited Internet access (Viasat [13] and Hispasat [14]).
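The arithmetic behind the 120 ms figure can be reproduced directly. This sketch only considers the nadir path (altitude divided by the speed of light); slant paths at low elevation angles are longer, and a bent-pipe path (terminal to satellite to gateway) roughly doubles the delay. The 550 km LEO altitude is an illustrative assumption for comparison, not a value taken from this paper.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s


def one_way_delay_ms(altitude_km: float) -> float:
    """Propagation delay between the sub-satellite point and the satellite (nadir path)."""
    return 1000.0 * altitude_km / SPEED_OF_LIGHT_KM_S


geo_ms = one_way_delay_ms(35_786)  # about 119.4 ms, i.e., the "about 120 ms" above
leo_ms = one_way_delay_ms(550)     # about 1.8 ms for an illustrative LEO altitude
print(f"GEO: {geo_ms:.1f} ms, LEO (550 km): {leo_ms:.1f} ms")
```

The two orders of magnitude between both results illustrate why LEO systems became attractive for latency-sensitive broadband services.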

Over the years, the technological trend of the broadband industry has driven increasingly stringent service requirements in terms of latency and throughput [15]. New Space has played a key role in this scenario with the aforementioned push towards LEO solutions aimed at meeting these requirements. Specifically, the emergence of mega-constellations has been a major achievement, as it has brought a groundbreaking vision of Internet from space to the sector. To date, several mega-constellation projects have been formally proposed, but only two have reached commercialization: OneWeb by Eutelsat and Starlink by SpaceX.

Table 1 provides the main information related to some of these projects, including the satellite operator, a few orbital parameters such as their altitude and inclination range, the total number of satellites planned for the final phase of the project, and the number of satellites that have been launched so far. Note that these data are subject to frequent changes and can vary over short periods of time.

The disruptive rise of satellite broadband connectivity has also been reflected in 3GPP with the standardization of NTN. The potential of new vertical opportunities in the market has generated multiple research topics surrounding the implementation of NTN. One such topic is the integration of the 5G functional split, whose goal is the disaggregation of the gNB into the CU, DU, and Radio Unit (RU) [29]. These logical modules host the Uu protocol stack from the upper to the lower layers, respectively, and can be implemented on separate physical instances. In this way, the gNB can be spatially distributed, notably enhancing the flexibility of the network. This is an interesting approach for NTN to model the configuration of gNB deployment in both transparent and regenerative architectures [30].
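The disaggregation described above amounts to cutting an ordered layer stack at chosen boundaries. The sketch below applies split option 2 (the CU/DU cut between PDCP and RLC, carried by F1). The DU/RU cut below High-PHY is an assumed lower-layer split used purely for illustration, and the layer list is a simplification: RRC (control plane) and SDAP (user plane) both sit above PDCP and are listed side by side.

```python
# gNB layers ordered from upper to lower (RRC and SDAP are parallel planes above PDCP).
GNB_STACK = ["RRC", "SDAP", "PDCP", "RLC", "MAC", "High-PHY", "Low-PHY", "RF"]


def split(stack, boundaries):
    """Cut an ordered layer stack at the given boundary layers.

    `boundaries` lists the last layer kept by each upper module.
    Returns one list of layers per module, from upper to lower.
    """
    modules, start = [], 0
    for last in boundaries:
        end = stack.index(last) + 1
        modules.append(stack[start:end])
        start = end
    modules.append(stack[start:])
    return modules


# Split option 2: CU keeps everything down to PDCP; DU starts at RLC.
# The DU/RU boundary at High-PHY is an illustrative assumption.
cu, du, ru = split(GNB_STACK, ["PDCP", "High-PHY"])
print("CU:", cu, "DU:", du, "RU:", ru)
```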

3. Related Work

The literature contains multiple studies intended to address the experimental challenges encountered during the development of satellite-based technologies. This section gathers relevant works and discusses their contributions.

A few projects are specifically aimed at investigating transparent architectures in 5G. In particular, 5G-GOA [31] and 5G-LEO [32] focus on demonstrating 5G direct access in transparent satellite-based architectures, both adapting OpenAirInterface (OAI) to support the specific NTN conditions defined in Release 17 [33]. The former project focuses on GEO satellite systems. After preliminary in-lab validation with emulated satellite links [34,35], the authors demonstrate the potential of their OAI implementation by deploying the solution with a real GEO satellite [35]. The latter project focuses on LEO satellite systems. Unlike 5G-GOA, 5G-LEO only includes in-lab demonstrations with a channel emulator, where the solution's performance is assessed [36]. Similar to the demonstration performed in 5G-GOA, the authors in [37] also validate an OAI-based solution with a real transparent GEO satellite.

These works contribute OAI features that support the direct access architecture in GEO and LEO. However, their objectives do not encompass the exploration of alternative architectures or the integration of real LEO satellites into validations and tests.

Many studies also tackle the development of experimental testbeds. The authors in [38] present an OAI-based testbed that, employing Software-Defined Radio (SDR) modules, enables the deployment of direct access architectures for 5G NTN. It is capable of emulating transparent satellites in LEO, Medium Earth Orbit (MEO), GEO, and even the L2 orbit. Likewise, the testbed introduced in [39] is designed to integrate a real LEO satellite to test 5G NTN technologies. However, the work only describes the future plans for this infrastructure, since the satellite has not been launched yet.

Apart from testbed implementation, other studies also delve into indirect access architectures, i.e., satellites over the backhaul link. In [40], the authors describe their testbed and demonstrate the feasibility of implementing backhaul services with a real transparent GEO satellite. Similarly, the authors in [41] assess the performance of Starlink-based satellite backhaul connectivity by conducting a user-centric test campaign. This work is aligned with the present paper, but it is limited to the evaluation of the backhaul architecture.

The scopes of the studies above overlap with that of this paper. The current literature provides comprehensive insight into the feasibility of 5G NTN, mainly for direct access architectures in GEO. Nevertheless, the exploration of network architectures based on transparent LEO satellites has not been addressed yet. This paper goes a step further by evaluating these architectures from the perspective of end users, with mega-constellations as the focus.

4. Testbed Development

This section presents the testbed developed to evaluate the proposed NTN architectures. To provide a clear understanding of the system, we first characterize the performance of the selected mega-constellation connectivity, which helps to quantify and discuss the results obtained in this work. Then, we detail the technical implementation of the testbed, covering the hardware and software domains and offering a critical perspective on some technological limitations that must be considered during testing.

Regarding mega-constellation connectivity, the current market is dominated by Starlink and OneWeb, the only two initiatives that offer commercial services. After reviewing the end-user service accessibility and subscription plans of both options, we opted for Starlink due to its end-user-centric business model, which facilitated equipment acquisition and deployment.

The continuous evolution of the Starlink system (e.g., frequent satellite launches or user terminal upgrades) leads to constantly changing performance. This performance is also influenced by external factors, such as satellite congestion at a given time, geographical location, or weather conditions [42]. Although Starlink's service performance has been widely studied in the literature, conducting our own service characterization gives us a better understanding of our specific deployment under our particular conditions.

4.1. Characterization of Starlink

This section provides an overview of the space infrastructure, presents the service characterization, and briefly introduces the impact of satellite handovers on performance. This study provides additional context to better understand the rest of this paper.

4.1.1. Mega-Constellation Infrastructure

The constant evolution of Starlink’s space infrastructure is reflected in an uninterrupted growth in the number of active satellites and in the re-configuration of orbital parameters. This dynamism usually leads to outdated and inaccurate information regarding the mega-constellation’s configuration.

The Starlink project comprises various satellite versions, which are classified into the first generation and the second generation, officially known as Gen2 [43]. Each spacecraft design enhances operational capabilities and physical features, such as the implementation of laser Inter-Satellite Links (ISL) in v1.5 [44] or the introduction of dielectric mirror films in Gen2 to reduce satellite reflection [45]. The latest versions implement cutting-edge broadband technologies such as direct to cell, an NTN approach that allows unmodified 3GPP terminals to communicate directly with satellites. These advances show the continuous evolution of the mega-constellation and its adaptation to emerging industry demands.

The latest filings indicate that SpaceX has obtained approval for its mega-constellation proposal, which entails the launch of 41,943 satellites, as outlined in Table 1. The initial expectations regarding their orbital configuration have been dynamically readjusted throughout the development of the project. In particular, the near-term scope has recently been updated, leading to the configuration delineated in Table 2 [46,47]. These data represent the orbital shell configuration currently authorized by the corresponding regulatory entities. They describe neither the current state nor the final state, but rather an intermediate step of the development roadmap. To provide a visual perspective of this mega-constellation, Figure 2 shows a simulation of its present appearance [48]. Satellites are particularly concentrated over the most populated latitudes while extending more sparsely over the polar regions, enabling global coverage. Note that this visualization tool (https://satellitemap.space, accessed on 28 October 2024) highlights the position of the International Space Station (ISS) as a green dot and the areas in which a satellite is expected to perform a re-entry soon as red dots.

4.1.2. Service Performance

The setup for this work was deployed in the laboratories of our office building, located in the urban area of Barcelona, Spain (41°23′14.6″ N 2°06′43.1″ E). It consisted of the Standard Actuated dish with the Residential subscription plan [49] and two machines. One was connected to the Local Area Network (LAN) created by the Starlink terminal and the other to our laboratory's fiber-based LAN, both via 1 Gbps Ethernet cables. Both machines ran Ubuntu Server 22.04 with kernel v5.15.0.

The characterization methodology involved a series of iPerf3 tests [50] using version 3.18. As illustrated in Figure 3, the client ran on the machine behind the Starlink terminal and the server on the other one. These tests were intended to collect Round-Trip Time (RTT) and bitrate metrics in both uplink and downlink. They were conducted using the User Datagram Protocol (UDP), the transport protocol implemented on the N3 and F1-U interfaces, as well as the Transmission Control Protocol (TCP) with CUBIC congestion control, which, like the Stream Control Transmission Protocol (SCTP) implemented on the N2 and F1-C interfaces, is connection-oriented. UDP also enabled us to measure Starlink's maximum performance by saturating the total throughput of the service, whereas TCP captured the performance of connection-oriented links.

In particular, each iPerf3 test was executed for 12 h, with one measurement per second. These 43,200 measurements were sequentially divided into 432 batches of 100 measurements, from which the mean bitrate, mean RTT, and mean jitter were obtained. Note that RTT and jitter were only available in TCP tests, as UDP lacks the acknowledgments (ACKs) needed to calculate them. Specifically, we planned the following campaigns: (1) a UDP session to assess the potential performance of the user plane in a multi-UE NTN scenario, (2) a 1-stream TCP connection to assess the potential performance of the control plane in a single-UE NTN scenario, and (3) a 10-stream TCP connection to assess the potential performance of the control plane in a multi-UE NTN scenario. Since uplink and downlink had to be measured separately, each campaign involved two tests, resulting in a total of 3 days of measurements planned for May 2025. All tests were conducted under similar weather conditions, a clear sky with minimal cloud cover, to minimize the impact of external variables. Together with the unobstructed view of the sky from the terminal position, these conditions provided a favorable operating environment.
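The batching step can be sketched as follows. This is an illustrative reconstruction, not the authors' processing script: the function name is hypothetical, and the placeholder samples only demonstrate the shapes involved (12 h at one sample per second gives 43,200 samples, hence 432 batches of 100).

```python
from statistics import fmean


def batch_means(samples, batch_size=100):
    """Split per-second samples into consecutive batches and return each batch mean.

    A trailing partial batch, if any, is dropped.
    """
    n_full = len(samples) // batch_size
    return [fmean(samples[i * batch_size:(i + 1) * batch_size]) for i in range(n_full)]


# 12 h of one-per-second measurements -> 43,200 samples -> 432 batches of 100.
samples = [float(i % 100) for i in range(12 * 3600)]  # placeholder data, not measurements
means = batch_means(samples)
print(len(means))
```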

The following iPerf3 parameters were set for the tests. UDP tests used the -u flag. Both UDP and TCP tests targeted a maximum bitrate of 1 Gbps with the -b flag. Since the client was on the Starlink side, the default measurement mode matched the uplink direction; for downlink, the -R flag was necessary to enable reverse mode. The number of TCP streams was defined with the -P flag.
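For reproducibility, the six client invocations (three campaigns, each in uplink and downlink) can be generated programmatically. In this sketch, the server address is a hypothetical documentation address, and the -t 43200 (12 h) and -i 1 (one report per second) options are assumptions derived from the test duration and sampling rate described above, not flags reported by the authors.

```python
import shlex

SERVER = "203.0.113.10"  # hypothetical address of the server machine
DURATION_S = 12 * 3600   # 12 h per test
CAMPAIGNS = {            # campaign name -> campaign-specific flags
    "udp": ["-u"],
    "tcp-1": ["-P", "1"],
    "tcp-10": ["-P", "10"],
}


def iperf3_command(campaign, downlink):
    """Build the iperf3 client command for one test of a campaign."""
    cmd = ["iperf3", "-c", SERVER, "-t", str(DURATION_S), "-i", "1", "-b", "1G"]
    cmd += CAMPAIGNS[campaign]
    if downlink:
        cmd.append("-R")  # reverse mode: the server sends, i.e., downlink towards the Starlink side
    return cmd


for name in CAMPAIGNS:
    for downlink in (False, True):
        print(shlex.join(iperf3_command(name, downlink)))
```

Note that iperf3 interprets the -b value in bits per second, so "1G" corresponds to the 1 Gbps target.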

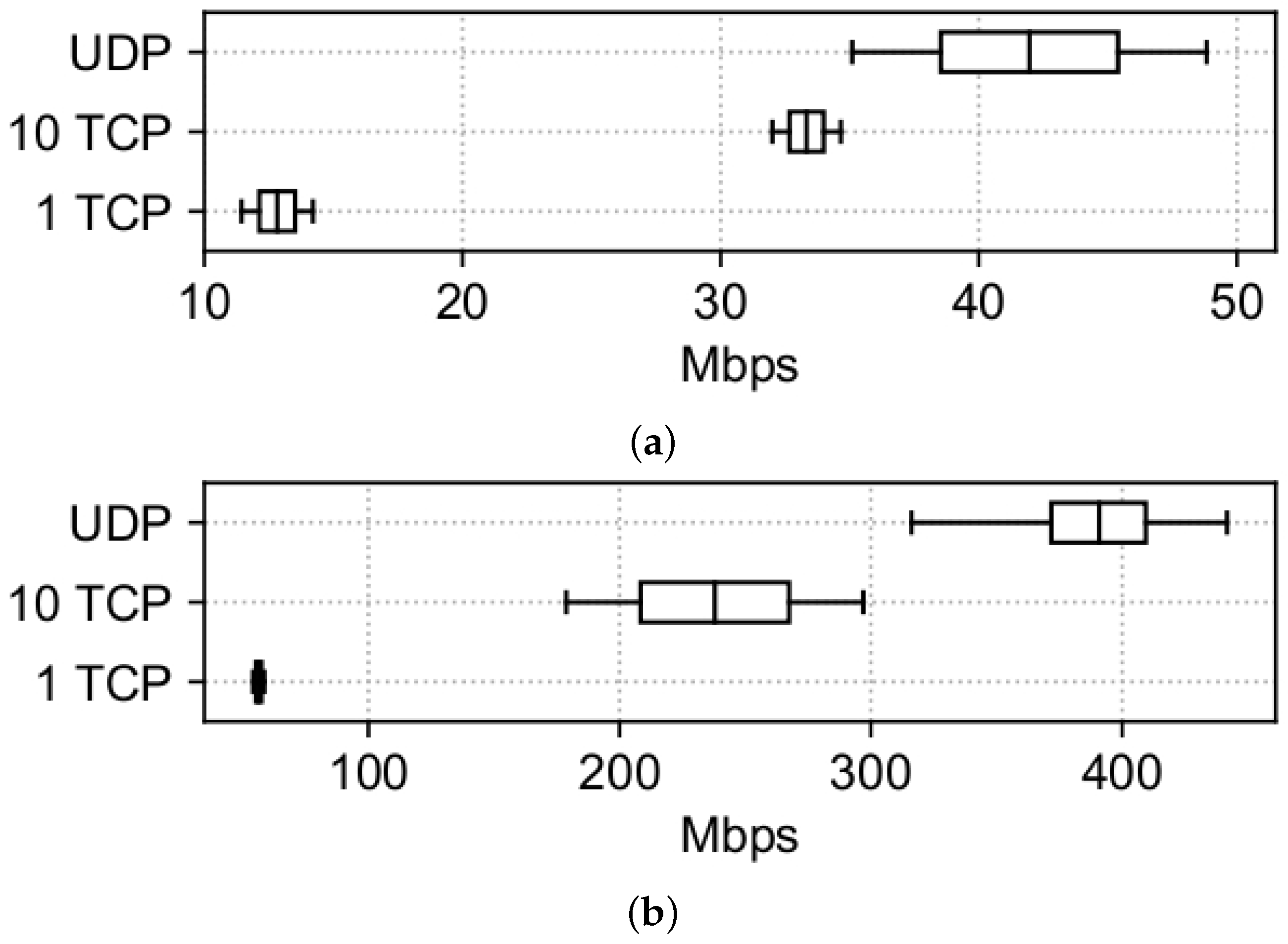

The results are presented in boxplots, which visually represent the data distribution through a five-number summary that includes the minimum, first quartile (Q1), median, third quartile (Q3), and maximum.
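The five-number summary behind each box can be computed with the Python standard library, as in the following sketch. The quartile method ("inclusive") is an assumption, since the interpolation used for the figures is not stated.

```python
from statistics import median, quantiles


def five_number_summary(values):
    """Return (minimum, Q1, median, Q3, maximum) of a sample."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return min(values), q1, median(values), q3, max(values)


print(five_number_summary([12.0, 11.5, 13.8, 12.9, 11.1]))  # e.g., batch-mean bitrates in Mbps
```

Plotting libraries often draw whiskers at 1.5 times the interquartile range by default; in matplotlib, passing whis=(0, 100) to boxplot makes the whiskers span the minimum and maximum, matching the summary above.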

Figure 4 shows the bitrate. We observe outstanding performance with UDP, with peaks reaching almost 50 Mbps in uplink and 440 Mbps in downlink. In uplink especially, the measurements are notably more dispersed than with TCP. The 1-stream TCP connection varies in the range of 11–14 Mbps in uplink and 54–58 Mbps in downlink. Under a simple linear-growth assumption, 10 streams should achieve 10 times these values, but the 10-stream connection only reaches 32–35 Mbps in uplink and 180–300 Mbps in downlink.
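The gap with respect to linear scaling can be quantified as the fraction of the ideal 10x bitrate actually achieved. This sketch uses the mean bitrates without VPN reported in Section 4.2.3 (13 and 33 Mbps in uplink, 56 and 237 Mbps in downlink); the function name is hypothetical.

```python
def scaling_efficiency(single_stream_mbps, multi_stream_mbps, streams):
    """Fraction of ideal linear scaling achieved by `streams` parallel TCP streams."""
    return multi_stream_mbps / (streams * single_stream_mbps)


uplink = scaling_efficiency(13, 33, 10)     # about 0.25
downlink = scaling_efficiency(56, 237, 10)  # about 0.42
print(f"uplink {uplink:.0%}, downlink {downlink:.0%}")
```

In other words, 10 streams deliver roughly a quarter of the ideal bitrate in uplink and less than half in downlink.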

Figure 5 shows the RTT and jitter. Both are higher with 10 streams due to the larger number of samples, as the 43,200 measurements gathered with 1 stream increase to 432,000 measurements with 10 streams. The same reason may explain the wider dispersion of both metrics with 10 streams. Even so, dispersions of 8 ms with 1 stream and 25 ms with 10 streams indicate stable performance, suggesting that Starlink properly manages the dynamism inherent to satellite links.

We conclude that Starlink offers reliable service performance, although it is heavily influenced by the adopted transport protocol. Moreover, the metrics above show performance fluctuations when plotted over time. These may be caused by satellite handovers, as explained in the following section.

4.1.3. Satellite Handover

After the previous tests, we noticed periodic sharp fluctuations when the metrics were plotted as time series. This pattern typically corresponds to satellite handovers. Simply put, a satellite handover is the process by which either the user terminal switches its service link from one satellite to another according to certain criteria (e.g., to maintain service continuity when the current satellite's contact window is ending) or the satellite switches its feeder link between gateways to ensure service availability. Handovers might be relevant for the targeted transparent architectures, so in the paragraphs below, we explain and discuss our related observations without entering into a detailed analysis.

Starlink terminals conduct periodic handovers to prevent connectivity disruptions and ensure performance [42]. Specifically, these handovers are managed by a global scheduler that, according to [51], assigns satellites to user terminals approximately every 15 s, thereby following a user-centric strategy. This also allows the satellites to maintain favorable radio link conditions, for instance, by keeping a high minimum elevation angle. Consequently, service performance remains stable during these short intervals until a handover causes a brief degradation.
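One simple way to check whether a per-second trace exhibits the roughly 15 s periodicity described above is to look for the dominant lag of its autocorrelation. This is a minimal sketch on a synthetic trace, not the method used in this paper (which relies on visual inspection); the function name, the tolerance rule, and the synthetic dip pattern are all assumptions.

```python
from statistics import fmean


def dominant_period(samples, min_lag=2, max_lag=60, tolerance=0.9):
    """Estimate the dominant period (in samples) of a time series via autocorrelation.

    Returns the smallest lag whose autocorrelation is within `tolerance` of the
    best one, so that multiples of the period (harmonics) are not chosen instead.
    """
    mean = fmean(samples)
    centered = [x - mean for x in samples]
    n = len(centered)
    scores = {
        lag: sum(centered[i] * centered[i + lag] for i in range(n - lag)) / (n - lag)
        for lag in range(min_lag, max_lag + 1)
    }
    best = max(scores.values())
    return min(lag for lag, score in scores.items() if score >= tolerance * best)


# Synthetic 1 Hz bitrate trace: 40 Mbps with a dip to 10 Mbps every 15 s.
trace = [10.0 if t % 15 == 0 else 40.0 for t in range(600)]
print(dominant_period(trace))
```

On real traces, noise and irregular degradation depths would blur the peaks, so the tolerance would likely need tuning.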

This fluctuation pattern is visible in Figure 6, which shows several metrics collected from the previous tests over time. The effect of satellite handovers is visually correlated across the TCP metrics, although the fluctuations are most clearly represented in the UDP bitrate. In TCP, the bitrate graph roughly shows these short intervals and the corresponding degradation peaks caused by handovers. The congestion window size oscillates accordingly, resulting in a shape similar to that of the bitrate. The RTT graph does not clearly depict handovers, but the intervals during which RTT is more consistent are also visible. The retransmission graph does present strong variations in response to significant performance drops. These metrics demonstrate the variability of service performance in satellite networks, as well as Starlink's ability to seamlessly overcome the inherent dynamism.

4.2. Testbed Architecture

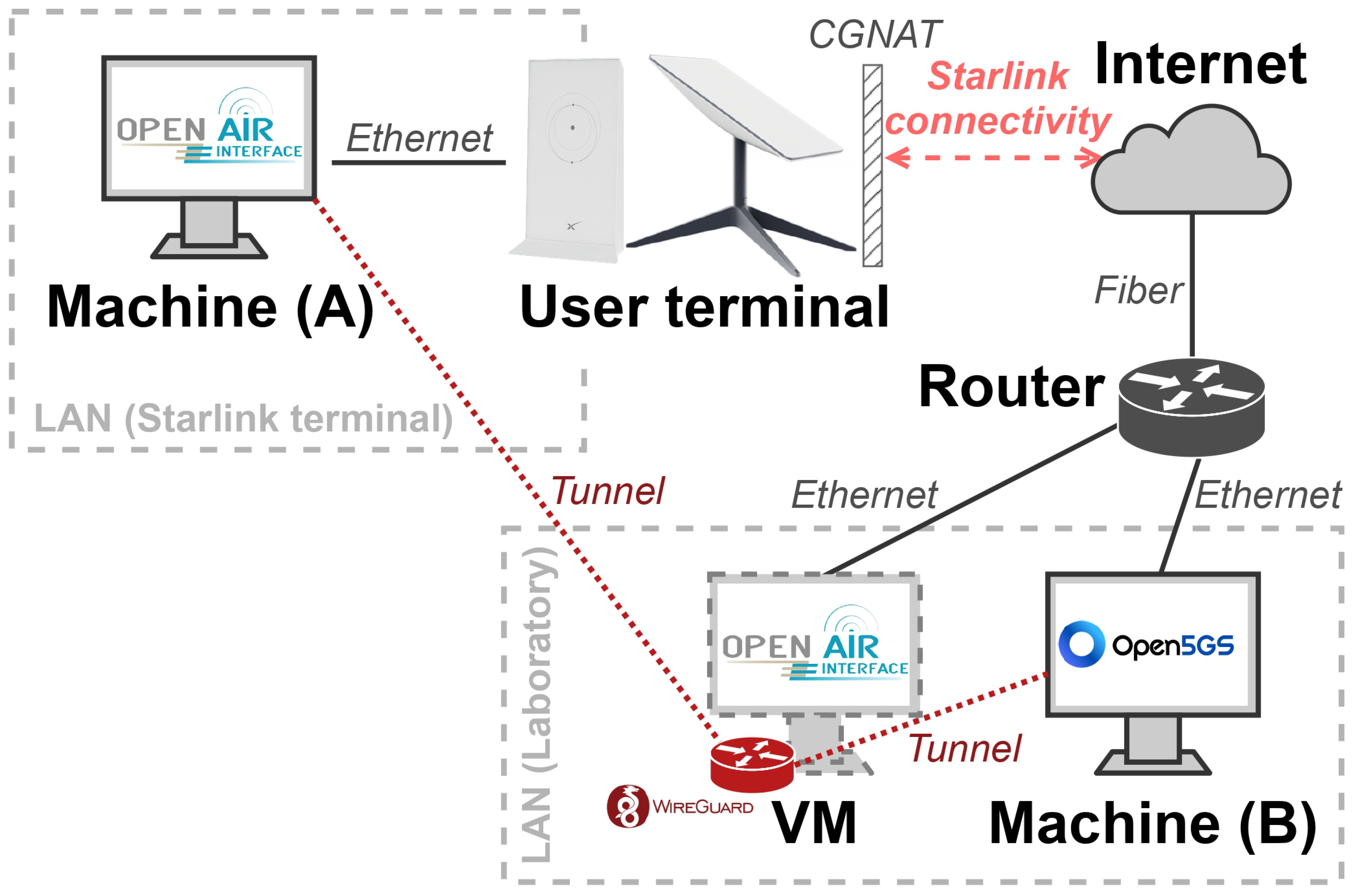

The testbed architecture is outlined in Figure 7. Machine (A) and Machine (B) are physical computers, whereas VM is a Virtual Machine (VM) instance. The physical nodes are Asus PN51 units equipped with 64 GB of memory and 1 TB of disk space. The VM has 1 virtual CPU, 8 GB of memory, and 20 GB of disk space. Each machine runs Ubuntu Server 22.04 with kernel v5.15.0.

The integration of the mega-constellation results in two distinct LANs. While VM and Machine (B) are hosted in the fiber-based LAN of our laboratory, Machine (A) is hosted in the LAN created by the Starlink user terminal. With this distribution, the nodes of both LANs communicate through the mega-constellation connectivity.

In all proposed architectures, the UE is kept on the Starlink side and the 5GC on the opposite side, so traffic is generated from the Starlink LAN. This allows us to simulate a scenario where the UE and the 5GC are geographically distant. To this end, we installed OAI [52] on Machine (A) and VM, and Open5GS [53] on Machine (B). We chose OAI because, unlike srsRAN, it offers an up-to-date implementation of the 5G UE, as well as the RFSimulator tool [54]. This tool encapsulates the I/Q samples, which would otherwise be sent to the radio front end, in TCP packets sent over an Internet Protocol (IP) connection, enabling the transmission of the sampled NR signal over Starlink. We installed the latest code versions available at that time, namely 2024.w22 of OAI (compliant with Release 15) and v2.6.2 of Open5GS. The resulting testbed distributes 5G components across the nodes to implement specific network architectures. In particular, we move the full or split gNB between Machine (A) and VM to implement the proposed architectures, as detailed in Section 5.
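Which interfaces end up crossing Starlink follows from where each component is placed, since only Machine (A) sits behind the Starlink terminal. The sketch below derives this from a placement map. The placements per architecture are hypothetical, inferred from the statement that the full or split gNB moves between Machine (A) and VM; the actual deployments are the ones described in Section 5. "5GC" stands for both the AMF (N2) and the UPF (N3).

```python
LAN_OF = {"Machine (A)": "starlink", "VM": "lab", "Machine (B)": "lab"}

# Hypothetical component placements per architecture (see Section 5 for the actual ones).
PLACEMENTS = {
    "a": {"UE": "Machine (A)", "gNB": "Machine (A)", "5GC": "Machine (B)"},
    "b": {"UE": "Machine (A)", "DU": "Machine (A)", "CU": "VM", "5GC": "Machine (B)"},
    "c": {"UE": "Machine (A)", "gNB": "VM", "5GC": "Machine (B)"},
}


def interfaces(placement):
    """Logical interfaces (name -> endpoints) implied by the components present."""
    ran_top = "CU" if "CU" in placement else "gNB"     # node terminating N2/N3
    ran_bottom = "DU" if "DU" in placement else "gNB"  # node terminating Uu
    links = {"Uu": ("UE", ran_bottom), "N2/N3": (ran_top, "5GC")}
    if "CU" in placement:
        links["F1"] = ("DU", "CU")
    return links


def over_starlink(placement):
    """Interfaces whose endpoints sit in different LANs, i.e., that cross the Starlink link."""
    return sorted(
        name for name, (x, y) in interfaces(placement).items()
        if LAN_OF[placement[x]] != LAN_OF[placement[y]]
    )


for label, placement in PLACEMENTS.items():
    print(label, over_starlink(placement))
```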

Nonetheless, the present testbed entails noteworthy considerations which may introduce technical limitations during experimentation. Some of them are unavoidable, but others are addressable with the introduction of auxiliary tools or the implementation of additional software features. Below, we explain them and, if possible, provide a solution.

4.2.1. Concealment of the Starlink Operation Details

The integration of Starlink introduces a third-party commercial solution that acts as a black box, so the internal processes and behaviors of the system are completely hidden from end users. This inevitably hides operational details from us (e.g., service perturbations caused by satellite overloads), and therefore, we have to treat service performance as stable and constant over time.

4.2.2. Encapsulation of the Uu Protocol Stack

The transmission of NR via Starlink using RFSimulator entails two relevant considerations. First, traffic encapsulation is part of Starlink's proprietary technology. Although this issue would not be present in real transparent architectures, it is not a major concern in the backhaul and midhaul, since 3GPP does not define the technology to be adopted at lower layers. However, it is of greater importance in the NR architecture, as unknown layers are added to the protocol stack of the Uu interface. Second, RFSimulator's encapsulation of the NR signal in TCP/IP appends even more layers to the protocol stack. This encapsulation also implies sampling the NR signal for subsequent transmission in TCP packets, which disrupts the inherent continuity of radio signals.
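To make the second consideration concrete, the following sketch shows how a block of sampled I/Q values can be framed for a TCP byte stream: the continuous signal becomes discrete, timestamped blocks with a header in front. The framing (a length field plus a sample timestamp, followed by little-endian int16 I/Q pairs) is a hypothetical illustration and is not RFSimulator's actual wire format.

```python
import struct

# Hypothetical frame header: payload length in bytes (uint32) and sample timestamp (uint64).
HEADER = struct.Struct("<IQ")


def pack_block(timestamp, iq_pairs):
    """Serialize int16 (I, Q) pairs into one length-prefixed frame for a TCP stream."""
    flat = [component for pair in iq_pairs for component in pair]
    payload = struct.pack(f"<{len(flat)}h", *flat)
    return HEADER.pack(len(payload), timestamp) + payload


def unpack_block(frame):
    """Inverse of pack_block; returns (timestamp, list of (I, Q) pairs)."""
    length, timestamp = HEADER.unpack_from(frame)
    flat = struct.unpack_from(f"<{length // 2}h", frame, HEADER.size)
    return timestamp, list(zip(flat[0::2], flat[1::2]))
```

Every such frame is in turn carried inside TCP, IP, and Starlink's own encapsulation, which is why the Uu stack grows by several layers in this architecture.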

4.2.3. Unknown IP Addresses Because of CGNAT

Starlink applies Carrier-Grade Network Address Translation (CGNAT) to efficiently manage public IP addresses [55]. This means that user terminals are not directly assigned a public IP address, which hides the internal LAN from the Internet. In our testbed, this technology presents an obstacle, as the gNB and the CU must bind their public IP address in the N2/N3 and F1 interfaces, respectively.

As CGNAT prevents this configuration, we decided to set up a WireGuard Virtual Private Network (VPN) centralized on VM. By doing so, we ensured that the IP address of Machine (A) is known in the testbed, enabling the correct configuration of the interfaces. WireGuard also enabled bidirectional access between Machine (A), VM, and Machine (B), regardless of the CGNAT. The use of WireGuard in the testbed depends on the specific transparent architecture being evaluated, as it is not always strictly required (as explained in Section 5).
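A hub-and-spoke WireGuard setup of this kind relies on the node behind CGNAT dialing out to the hub and keeping the NAT mapping alive. The sketch below renders a wg-quick style configuration for Machine (A); the keys are placeholders, and the tunnel subnet, addresses, and hub endpoint are hypothetical values, not the testbed's actual configuration. The hub (VM) would additionally need one [Peer] section per node and IP forwarding enabled to relay traffic between peers.

```python
def wireguard_peer_config(private_key, address, hub_public_key, hub_endpoint,
                          tunnel_subnet, keepalive_s=25):
    """Render a wg-quick config for a node behind CGNAT that dials out to the hub."""
    return "\n".join([
        "[Interface]",
        f"PrivateKey = {private_key}",
        f"Address = {address}",
        "",
        "[Peer]",
        f"PublicKey = {hub_public_key}",
        f"Endpoint = {hub_endpoint}",
        f"AllowedIPs = {tunnel_subnet}",
        # CGNAT mappings expire when idle; periodic keepalives hold them open so
        # that the hub can also initiate traffic towards this node.
        f"PersistentKeepalive = {keepalive_s}",
        "",
    ])


print(wireguard_peer_config("<machine-a-private-key>", "10.8.0.2/24",
                            "<vm-public-key>", "198.51.100.7:51820", "10.8.0.0/24"))
```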

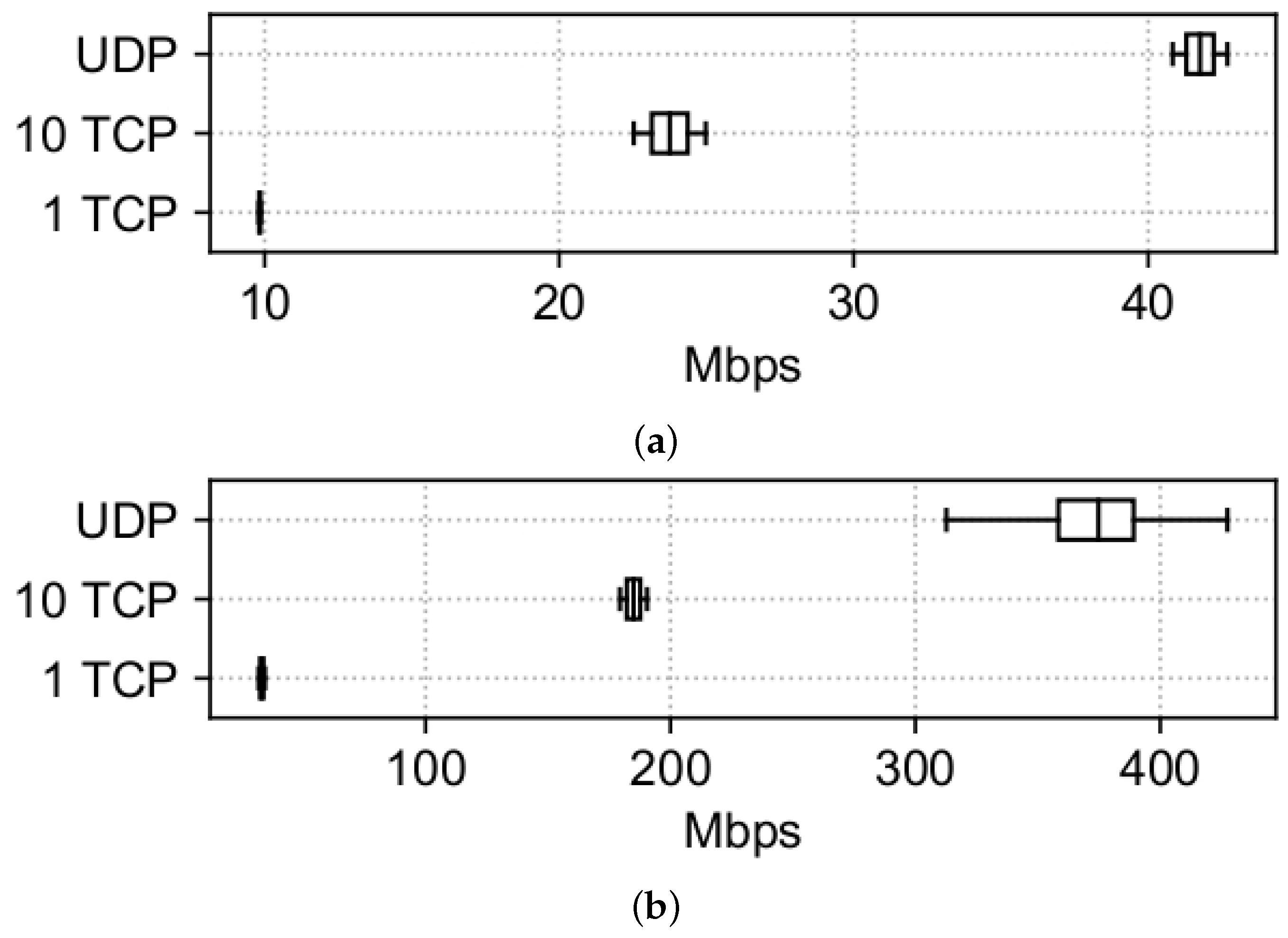

To measure the impact of the VPN, we conducted the same iPerf3 tests as for the characterization of Starlink's service performance, again in the same setup and under the same weather conditions as before. The results, depicted in Figure 8, show a decrease in performance compared to those obtained in Figure 4. In uplink, we observe lower dispersion, especially in UDP. While the mean bitrate remains similar in UDP, at around 42 Mbps, it is notably reduced in TCP: the performance of 10 streams decreases by 27% (from 33 Mbps to 24 Mbps) and that of 1 stream by 23% (from 13 Mbps to 10 Mbps). In downlink, there are also noticeable bitrate reductions, except for UDP, which only drops by 15 Mbps. The performance of 10 streams decreases by 22% (from 237 Mbps to 185 Mbps) and that of 1 stream by 41% (from 56 Mbps to 33 Mbps). These severe impacts on TCP may be caused by the specific technology of WireGuard, which uses UDP at the transport layer. Regardless of the cause, the results show that WireGuard considerably affected the bitrate performance of the testbed.
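The relative reductions quoted above follow from the mean bitrates without and with the VPN. This short check recomputes them from the values in the text.

```python
# Mean bitrates (Mbps) without and with the WireGuard VPN, as reported above.
MEASUREMENTS = {
    ("uplink", "TCP 10 streams"): (33, 24),
    ("uplink", "TCP 1 stream"): (13, 10),
    ("downlink", "TCP 10 streams"): (237, 185),
    ("downlink", "TCP 1 stream"): (56, 33),
}


def reduction_pct(before, after):
    """Relative bitrate reduction, in percent."""
    return 100.0 * (before - after) / before


for (direction, mode), (before, after) in MEASUREMENTS.items():
    print(f"{direction:8} {mode:15} -{reduction_pct(before, after):.0f}%")
```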

4.2.4. Non-Real-Time Implementation of RFSimulator

The implementation of software tools that simulate radio interfaces poses serious challenges, such as those related to timing. RFSimulator is declared as non-real-time, meaning that its notion of time does not coincide with that of the real world. The consequence is a different perception of latencies between RFSimulator and reality.

To illustrate the idea, consider the following example (with hypothetical values): a timer configured to 400 ms in the OAI code expires 50 ms after it starts. Even though, from our perspective, the timer should not have expired, the “internal clock” of RFSimulator has consumed the number of ticks equivalent to those 400 ms. Although we do not perceive the same passage of time as RFSimulator, the timing associated with 3GPP procedures is respected, as both the UE and the gNB share the same temporality: they are synchronously aligned with the “internal clock” of RFSimulator.
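The example can be mimicked with a clock that advances only as samples are processed, rather than with wall-clock time. In this sketch, the sample rate and the 8x speedup (8 ms of samples processed per wall-clock millisecond) are hypothetical values chosen to reproduce the 400 ms versus 50 ms example; the class name is illustrative and does not correspond to OAI code.

```python
class SampleClock:
    """Simulated clock that advances only as I/Q samples are processed."""

    def __init__(self, sample_rate_hz):
        self.sample_rate_hz = sample_rate_hz
        self.samples = 0

    def advance(self, n_samples):
        self.samples += n_samples

    def now_ms(self):
        return 1000.0 * self.samples / self.sample_rate_hz


clock = SampleClock(sample_rate_hz=30_720_000)  # hypothetical sample rate
samples_per_wall_ms = 8 * 30_720                # hypothetical: 8 ms of samples per wall-clock ms
wall_ms = 0
while clock.now_ms() < 400:                     # the 400 ms timer from the example
    clock.advance(samples_per_wall_ms)
    wall_ms += 1
print(wall_ms, clock.now_ms())
```

Because the UE and the gNB would both read this same sample-driven clock, 3GPP timers stay consistent with each other even though they disagree with the wall clock.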

The speed of RFSimulator depends on the CPU [54]. If there are sufficient CPU resources, RFSimulator runs faster than real time. This occurred in the present work, and since RFSimulator was always used in the testbed, we had to take it into account in all subsequent results. To quantify its impact, we deployed a baseline architecture that isolated RFSimulator as much as possible, as explained in Section 5. Specifically, we measured the performance of a conventional Terrestrial Network (TN) architecture deployed in our controlled laboratory LAN. This enabled the collection of results comparable to those obtained from the proposed NTN architectures, providing a better understanding of the relative performance degradation.

4.2.5. Traffic Compression of RFSimulator

OAI indicates that RFSimulator requires a high-speed connection to operate optimally due to the transmission of large volumes of I/Q samples. As a consequence of its non-real-time implementation, there is no scheduler to distribute these transmissions over time, so RFSimulator tends to maximize CPU and network bandwidth consumption. Thus, whichever of the two saturates first determines the operational bitrate of RFSimulator.

In light of the Starlink metrics obtained in Section 4.1, the uplink bitrate below 14 Mbps achieved with the 1-stream TCP connection poses a substantial bottleneck for RFSimulator. After analyzing the transmitted I/Q samples, we realized that most of them were null, which shows that I/Q samples are sent continuously even when the generated signal carries no data. To optimize this traffic, we applied the fast lossless compression algorithm Zstandard (ZSTD) [56] to the I/Q samples, which notably reduced the bitrate.
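The effect of compressing mostly-null I/Q buffers can be illustrated with a synthetic block. This sketch is not the authors' implementation, and where the compression hooks into RFSimulator is not shown here. It uses the third-party `zstandard` package when available and otherwise falls back to zlib (DEFLATE) from the standard library, which is a different algorithm used only as a stand-in. The block size (1 ms at an assumed 30.72 Msps) and the 5% active fraction are hypothetical.

```python
import random
import struct
import zlib

try:  # Zstandard via the third-party `zstandard` package, if installed
    import zstandard

    _compressor = zstandard.ZstdCompressor(level=1)
    _decompressor = zstandard.ZstdDecompressor()

    def compress(data: bytes) -> bytes:
        return _compressor.compress(data)

    def decompress(data: bytes) -> bytes:
        return _decompressor.decompress(data)
except ImportError:  # stand-in: zlib (DEFLATE), not ZSTD

    def compress(data: bytes) -> bytes:
        return zlib.compress(data, 1)

    def decompress(data: bytes) -> bytes:
        return zlib.decompress(data)


def iq_block(n_samples, active_fraction, seed=0):
    """int16 I/Q block in which only the first `active_fraction` of samples carry signal."""
    rng = random.Random(seed)
    n_active = int(n_samples * active_fraction)
    flat = []
    for k in range(n_samples):
        if k < n_active:
            flat += [rng.randint(-2048, 2047), rng.randint(-2048, 2047)]
        else:
            flat += [0, 0]  # null samples, sent even when no data is carried
    return struct.pack(f"<{len(flat)}h", *flat)


raw = iq_block(30_720, active_fraction=0.05)  # hypothetical: 1 ms at 30.72 Msps
packed = compress(raw)
assert decompress(packed) == raw  # lossless round trip
print(f"{len(raw)} -> {len(packed)} bytes ({len(raw) / len(packed):.1f}x)")
```

The ratio depends heavily on the share of null samples; with a fully loaded carrier, the gain would be much smaller.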

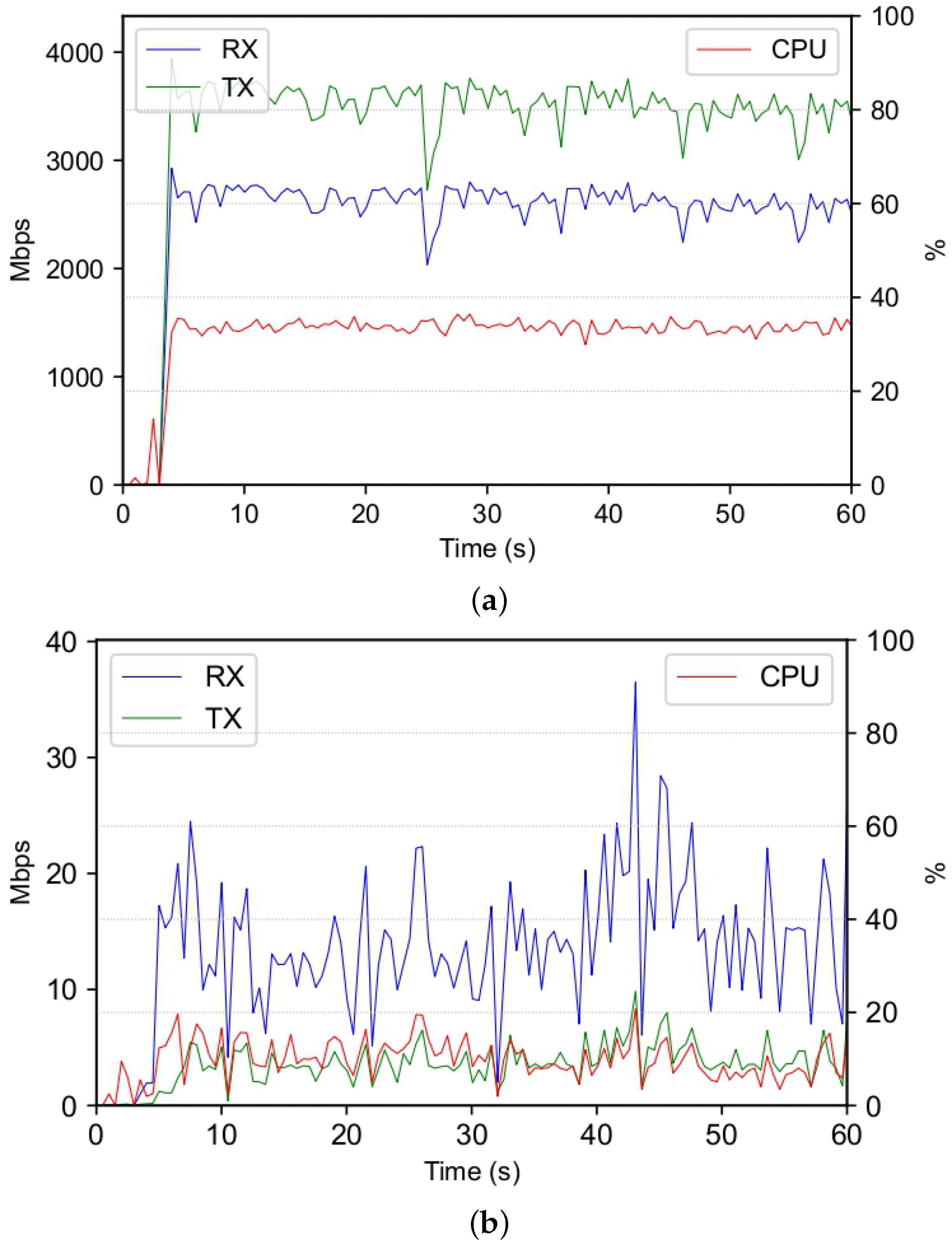

In order to quantify the compression, we utilized two VMs running on the same host to measure the bitrate and the CPU time consumed by RFSimulator in the UE. Each VM allocates 4 virtual CPU cores and has 4 Gbps of network bandwidth available. The results, depicted in

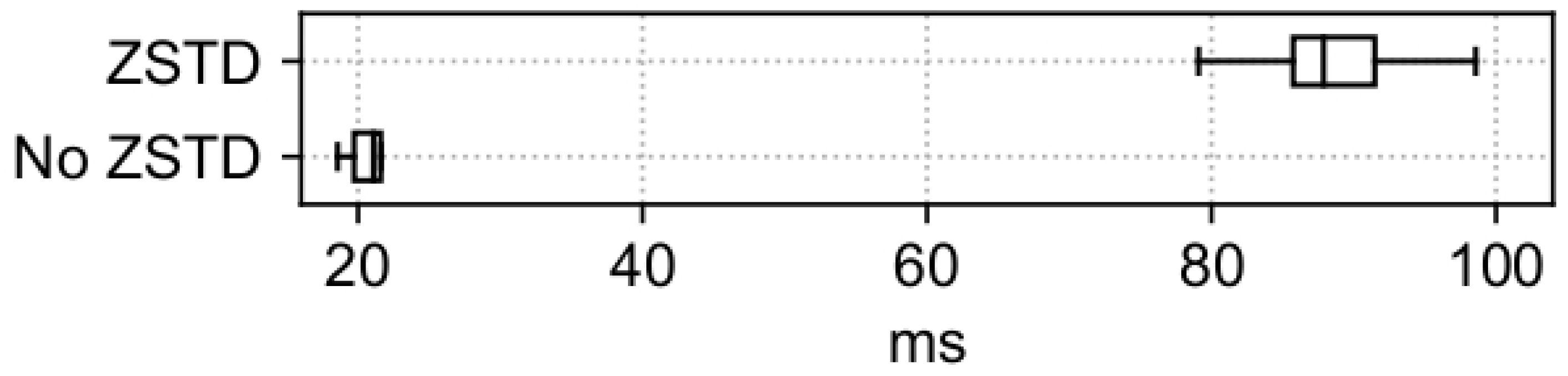

Figure 9, confirm that bitrate now meets the throughput limits of Starlink. Also note the reduction in CPU time, an unexpected behavior which points to the emergence of a new bottleneck in the implementation that prevents the exploitation of the CPU and the bandwidth. This hypothesis is reinforced by the service degradation observed in the UE, since the compression significantly increases RTT when performing a ping to the UPF, as shown in

Figure 10. The “hidden” bottleneck might be altering the nominal performance of RFSimulator.

This traffic compression is applied only to the third proposed architecture, which relays the NR signal. Despite the speed of ZSTD, the continuous compression and decompression of I/Q samples accumulates short delays. These eventually materialize as large RTTs at the application level, rising from about 20 ms to the range of 80–100 ms. This severe degradation strongly affects the subsequent results and must be taken into account when interpreting them and in future experiments. Nevertheless, compression was indispensable, since the uncompressed version of RFSimulator could not be used over Starlink.

4.2.6. Methodology of OAI Code

Advances in the OAI code depend mostly on external projects. These projects leverage the software to achieve their own goals and contribute new features back to OAI. However, this development model slows down the integration of NTN in OAI [33]. Until such developments are merged into the main branch of the repository, we regard them as preliminary and experimental. For this reason, this work is based on the most recent version of OAI available when our developments began, i.e., mid-2024.

5. Methodology

This section describes the methodology in detail. It covers the deployment of the proposed architectures and the execution of the tests used in the subsequent analysis.

In Section 4, we mentioned that deploying transparent architectures requires specific distributions of the 5G components across the nodes of the testbed. For the envisaged architectures, Table 3 lists the 5G components assigned to each node of Figure 7 and indicates whether WireGuard or ZSTD compression is required. The VPN is necessary when Machine (A) hosts the gNB or DU, due to interface configuration requirements. Note that the 5G network runs in standalone (SA) mode. RFSimulator operates in band 78 of Frequency Range 1 (FR1), corresponding to the default OAI gNB configuration for the USRP B210 in band 78 with 106 Physical Resource Blocks (PRBs) [57].
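For a rough sense of scale, the radio configuration above can be converted into a nominal real-time I/Q bitrate. The text does not state these values, so the sketch assumes 30 kHz subcarrier spacing, a sample rate of 61.44 Msps, and 16-bit I and Q components. The only fixed fact used is that an NR PRB spans 12 subcarriers. Because RFSimulator can run faster than real time, its actual bitrate may differ from this nominal figure.

```python
SUBCARRIERS_PER_PRB = 12  # an NR resource block spans 12 subcarriers


def occupied_bandwidth_hz(n_prb: int, scs_hz: float) -> float:
    """Bandwidth spanned by n_prb resource blocks."""
    return n_prb * SUBCARRIERS_PER_PRB * scs_hz


def raw_iq_bitrate_bps(sample_rate_sps: float, bits_per_component: int = 16) -> float:
    """Nominal real-time bitrate of complex samples (one I and one Q per sample)."""
    return sample_rate_sps * 2 * bits_per_component


if __name__ == "__main__":
    bw = occupied_bandwidth_hz(106, 30e3)   # assumed 30 kHz subcarrier spacing
    raw = raw_iq_bitrate_bps(61.44e6)       # assumed 61.44 Msps sample rate
    uplink_bps = 14e6                       # upper bound of the 1-stream TCP uplink
    print(f"Occupied bandwidth: {bw / 1e6:.2f} MHz")
    print(f"Raw I/Q bitrate: {raw / 1e9:.2f} Gbps")
    print(f"Reduction needed to fit the uplink: {raw / uplink_bps:.0f}x")
```

Under these assumptions, the uncompressed stream is about two orders of magnitude larger than the Starlink uplink can carry.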

The evaluation of the transparent architectures consists of 2000 ping measurements at 1 s intervals. These measurements were divided sequentially into 20 batches of 100, and the mean RTT and mean jitter were calculated for each batch. Both metrics are pivotal indicators of network performance and offer insight into the constraints imposed by each architecture. Specifically, ping tests were conducted between the UE and the UPF. The UPF is the 5GC component that acts as an intermediary between the 5G network and the Data Network (DN), which is typically the Internet. In essence, the UPF is a high-performance forwarding engine responsible for the efficient handling of user data traffic. Notably, traffic is routed through the Internet in these tests, which introduces an additional degree of uncertainty into the results. This routing is imposed because Starlink gateways always forward traffic via the Internet. That strategy is reasonable given Starlink's primary aim of providing global Internet connectivity to end users.
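The batching step can be sketched as follows. The text does not state how jitter is computed, so this sketch assumes it is the mean absolute difference between consecutive RTTs within a batch. `batch_metrics` is a hypothetical helper, not the authors' script.

```python
from statistics import mean


def batch_metrics(rtts_ms: list[float], n_batches: int = 20) -> list[tuple[float, float]]:
    """Split RTTs sequentially into equal batches; return (mean RTT, mean jitter) each.

    Jitter is assumed to be the absolute difference between consecutive RTTs
    inside a batch; the source does not specify its estimator.
    """
    if len(rtts_ms) % n_batches or len(rtts_ms) // n_batches < 2:
        raise ValueError("need an equal number (>= 2) of samples per batch")
    size = len(rtts_ms) // n_batches
    results = []
    for b in range(n_batches):
        batch = rtts_ms[b * size:(b + 1) * size]
        jitter = [abs(curr - prev) for prev, curr in zip(batch, batch[1:])]
        results.append((mean(batch), mean(jitter)))
    return results
```

With 2000 RTTs and the default 20 batches, each batch holds 100 consecutive pings, matching the procedure described above.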

6. Results

The present section analyzes the results obtained from the transparent architectures and provides a technical discussion. The objective is to compare the architectures in terms of RTT and jitter in order to understand their feasibility for diverse use cases.

As a baseline for evaluating the impact of the NTN transparent architectures, we first collected metrics from a 5G TN (i.e., without satellite links) deployed in our controlled laboratory LAN. The RTT and jitter metrics obtained for each architecture are shown in Table 4 and Table 5, respectively. These tables present the minimum, first quartile (Q1), median, third quartile (Q3), maximum, mean, and standard deviation values, providing a numerical summary equivalent to a boxplot. Likewise, Figure 11 illustrates the key elements of each deployment that influence the test outcomes, as explained below. The following section opens a discussion about these outcomes.
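A boxplot-style summary like the one in Tables 4 and 5 can be computed with Python's `statistics` module. The text does not specify the quartile method or the standard deviation estimator, so this sketch assumes the `inclusive` quartile method and the sample standard deviation. `boxplot_summary` is a hypothetical helper.

```python
import statistics


def boxplot_summary(values: list[float]) -> dict[str, float]:
    """Min, quartiles, max, mean and sample standard deviation of a series."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "min": min(values),
        "Q1": q1,
        "median": median,
        "Q3": q3,
        "max": max(values),
        "mean": statistics.mean(values),
        "std": statistics.stdev(values),
    }
```

It could be applied, for instance, to the series of per-batch means of one architecture to produce one row of such a table.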

The TN architecture exhibits stable values, with a mean RTT of approximately 15 ms and a mean jitter of 2 ms. This demonstrates that OAI can run a robust 5G network without major performance constraints. The backhaul architecture increases the mean RTT and the mean jitter to 52 ms and 7 ms, respectively, approaching the maximum values shown in Figure 5 for the 1-stream TCP connection. The midhaul architecture shows a slightly larger degradation than backhaul, with a mean RTT above 60 ms and a mean jitter of nearly 11 ms.

Unlike backhaul, midhaul is susceptible to delays, because the satellite link affects time-sensitive procedures during the initial steps of UE attachment. The procedure specifically affected is Random Access (RA), through which the UE obtains radio resources to communicate with the gNB.

Briefly, the RA procedure comprises a sequence of messages between the UE and the gNB, designated Msg1/2/3/4. Msg1 and Msg2 are handled by Medium Access Control (MAC), whereas Msg3 and Msg4 carry messages handled by Radio Resource Control (RRC). In the midhaul architecture, the satellite link sits between the DU and the CU, which host MAC and RRC, respectively. Consequently, the transmission of Msg3 and Msg4 is affected by satellite delays, as shown in Figure 11, potentially altering the timing of this procedure.

Timer configuration is crucial for controlling the message exchange involved in the RA procedure. Of particular relevance to this work is the RA Contention Resolution timer, as implemented in-house by OAI [58]. This timer is configured in the gNB to measure the interval between the transmission of Msg2 and the reception of the ACK for Msg4. In this way, the gNB can detect a failed RA on the UE side and release the allocated radio resources, preventing their waste on Msg4 retransmissions.

In the midhaul architecture, this timer expires regardless of its value, partly due to the non-real-time implementation of RFSimulator explained in Section 4. This occurs because the timer follows RFSimulator's timing, while the transmission of Msg3 and Msg4 is subject to Starlink's latency. As a result, the timer perceives Starlink's latency, which spans tens of milliseconds, as thousands of milliseconds, and inevitably exceeds its limit. Given these causes, we disabled this non-standard timer, which allowed the RA procedure to complete successfully. In our single-UE NTN scenario, disabling the timer has no adverse effects. In a multi-UE scenario, however, the gNB could have trouble managing radio resources if several UEs fail the RA procedure. In that case, the gNB would not be able to allocate resources, and new registration attempts would be rejected automatically.
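The following simplified model captures our reading of this mechanism. A timer driven by RFSimulator's simulated clock counts a real-world delay multiplied by the simulator's speed-up factor. The speed-up factors, the 25 ms midhaul crossing time and the timer value below are hypothetical. The functions do not correspond to OAI code.

```python
def perceived_delay_ms(real_delay_ms: float, speedup: float) -> float:
    """Delay as counted by a timer driven by the simulated radio clock.

    If the simulator advances `speedup` times faster than wall-clock time,
    a real-world delay spans `speedup` times as many simulated milliseconds.
    """
    return real_delay_ms * speedup


def timer_expires(real_delay_ms: float, speedup: float, timer_ms: float) -> bool:
    """True if the simulated-time view of the delay exceeds the timer."""
    return perceived_delay_ms(real_delay_ms, speedup) > timer_ms


if __name__ == "__main__":
    # Hypothetical: Msg3 and Msg4 each cross the satellite midhaul once (~25 ms each).
    real_ms = 2 * 25.0
    for speedup in (1.0, 10.0, 40.0):
        print(speedup, perceived_delay_ms(real_ms, speedup),
              timer_expires(real_ms, speedup, 1000.0))
```

In this model the perceived delay grows with the speed-up factor. Any finite timer value can therefore be exceeded, which is consistent with the timer expiring regardless of its configuration.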

Finally, the NR architecture performs the worst, with a mean RTT exceeding one second and a mean jitter of 366 ms. RFSimulator transmits the I/Q samples, which would normally be sent to the SDR front end, through a TCP socket. Although ZSTD compression significantly reduces the total traffic, a substantial number of samples must still be transmitted. We hypothesize that this large volume of traffic, together with the delay introduced by compression processing (depicted in Figure 10), causes most of the degradation. This would make RFSimulator the bottleneck that sets the NR relay results drastically apart from those of the other architectures.

In the NR architecture, the Internet Control Message Protocol (ICMP) packet of a ping measurement is progressively converted into several packets as it traverses the protocol stack down to the physical layer. There, these packets are translated into I/Q samples and encapsulated in TCP by RFSimulator. Consequently, a single ICMP packet triggers the transmission of multiple TCP packets over Starlink. This process amplifies the impact of the Starlink delay on the ping measurement, and the additional RTT introduced by ZSTD (shown in Figure 10) accentuates it further. For this reason, we conclude that RFSimulator offers a straightforward, easy-to-deploy solution that enables UE registration on the network, albeit with limited service performance.
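The packet amplification described above can be estimated roughly by counting the TCP segments needed to carry one slot of I/Q samples. Every parameter here is an assumption for illustration: 61.44 Msps, a 0.5 ms slot (30 kHz subcarrier spacing), 4 bytes per sample, a 1448-byte maximum segment size (typical of a 1500-byte MTU with TCP timestamps), and arbitrary compression ratios. RFSimulator's actual framing is not modeled.

```python
import math


def segments_per_slot(sample_rate_sps: float, slot_s: float,
                      bytes_per_sample: int, compression_ratio: float,
                      mss_bytes: int = 1448) -> int:
    """TCP segments needed to carry one slot of (optionally compressed) I/Q samples."""
    payload_bytes = sample_rate_sps * slot_s * bytes_per_sample / compression_ratio
    return math.ceil(payload_bytes / mss_bytes)


if __name__ == "__main__":
    for ratio in (1.0, 10.0):
        n = segments_per_slot(61.44e6, 0.5e-3, 4, ratio)
        print(f"compression {ratio:.0f}x: {n} segments per 0.5 ms slot")
```

Under these assumptions, a ping that spans even a few slots maps onto tens or hundreds of TCP segments, and each of them is exposed to the Starlink delay.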

The results presented in this section demonstrate the notable impact of NTN transparent architectures on user performance. Among the proposed architectures, NR suffers large delays exceeding one second in RTT, whereas backhaul and midhaul incur degradations of approximately 232% and 285% compared to the baseline TN, respectively.
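The text does not define the degradation percentages explicitly. We read them as the relative increase in mean RTT over the TN baseline, where the arch subscript denotes backhaul or midhaul:

```latex
\Delta_{\mathrm{arch}}
  = \frac{\overline{\mathrm{RTT}}_{\mathrm{arch}} - \overline{\mathrm{RTT}}_{\mathrm{TN}}}
         {\overline{\mathrm{RTT}}_{\mathrm{TN}}} \times 100\,\%
\quad\Longleftrightarrow\quad
\frac{\overline{\mathrm{RTT}}_{\mathrm{arch}}}{\overline{\mathrm{RTT}}_{\mathrm{TN}}}
  = 1 + \frac{\Delta_{\mathrm{arch}}}{100\,\%}
```

Under this reading, the backhaul mean RTT is about 3.3 times the TN mean, and the midhaul mean RTT is about 3.85 times it.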

7. Discussion

The previous results prove the feasibility of 5G NTN with transparent architectures based on mega-constellations. This paper not only demonstrates experimentally the integration of mega-constellations into 3GPP networks, but also provides timing metrics to assess the performance experienced by end users. These findings may encourage the community to continue exploring space solutions based on mega-constellations with transparent payloads, as well as their potential applications.

The architectures proposed in this work have various practical advantages and disadvantages. Relaying the backhaul enables the roll-out of reliable and robust terrestrial infrastructure in remote locations, eliminating concerns about wired connections to the 5GC. However, costs are considerable, because the entire RAN must be deployed in the target area. Relaying the midhaul lowers these costs by simplifying the remote terrestrial infrastructure, which comprises only the DU and the RU. This architecture keeps low-level procedures (e.g., synchronization and resource allocation) close to end users while externalizing all other functionalities. The trade-off is delay in internal gNB procedures driven by upper layers (e.g., RRC connection setup). Relaying the NR signal avoids the need for remote terrestrial infrastructure but, as demonstrated, heavily affects the radio interface between the UE and the gNB.

Operators should weigh the trade-offs of each architecture. In use cases where deploying remote infrastructure is not the primary concern, transparent architectures may be well suited. Thanks to simpler satellite technologies, they offer potential advantages such as lower service costs for end users and straightforward integration with external networks. As an example of their value, consider a terrestrial operator that plans to serve a remote, unserved town by deploying a number of gNBs locally. These base stations use transparent satellites to reach the operator's distant 5GC. In this way, the operator efficiently meets the town's service needs without a complex wired connection to its 5GC.

Relaying the NR signal may be particularly challenging, since the two-hop path (ground–space–ground) between the UE and the gNB notably increases the latency of critical transmissions. This leads to issues such as potential congestion of Hybrid Automatic Repeat Request (HARQ) processes due to packet losses or delays, or the expiration of certain timers in time-sensitive procedures. Other lower-layer problems can also arise, e.g., difficulties during UE-gNB synchronization. All these challenges lead us to propose regenerative architectures, which achieve a one-hop path (ground–space) between the UE and the gNB. This reduces latency and thus partially mitigates these challenges.

Had this work used regenerative instead of transparent architectures, it would have faced different challenges. Whatever methodology that would have required, the timing metrics would probably not have improved, since two hops would still be needed to reach the destination. However, some of the technological limitations described above would likely not have occurred. For instance, the latency reduction in the midhaul could have mitigated the difficulties caused by the non-real-time nature of RFSimulator. Nevertheless, confirming these benefits of regenerative architectures requires a dedicated study. Such a study would undoubtedly face significant challenges, such as direct radio communication between the UE and a gNB hosted on the satellite.

Regenerative architectures offer potential advantages, including reduced latency between the UE and the gNB, and enhanced management of radio resources. These improvements can mitigate several constraints associated with transparent payloads, particularly in time-sensitive scenarios. Nonetheless, they also introduce limitations, such as increased satellite complexity. While regenerative architectures can complement transparent ones in some use cases, their adoption strongly depends on mission constraints and system capabilities.

Another relevant point concerns ISLs. In Section 4, we mentioned that the latest version of the Starlink satellites includes ISL technologies. The impact of ISLs would depend on the specific scenario. If the satellite footprint covers both the terminal and the terrestrial gateway at the same time, ISLs would not be used, so performance metrics would not change. Otherwise, the processing time (decoding, routing, etc.) of each ISL hop would degrade performance. Because Starlink implements optical ISLs, the performance bottleneck should remain in the radio links, i.e., the service and feeder links. It would be interesting to characterize the processing time of ISL hops numerically to quantify how they would affect 5G NTN.
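A first-order model of the ISL effect adds propagation delay over every leg to a fixed processing time per ISL hop. The per-hop processing time is exactly the unknown the text proposes to characterize, so any value plugged in is hypothetical. The distances are also illustrative, and propagation at the vacuum speed of light is assumed for both radio and optical links.

```python
SPEED_OF_LIGHT_KM_PER_MS = 299_792.458 / 1000.0  # vacuum, ~299.79 km per ms


def one_way_latency_ms(service_km: float, feeder_km: float,
                       isl_hops: int, isl_km_per_hop: float,
                       processing_ms_per_hop: float) -> float:
    """Propagation over service, feeder and ISL legs plus per-hop ISL processing."""
    path_km = service_km + feeder_km + isl_hops * isl_km_per_hop
    return path_km / SPEED_OF_LIGHT_KM_PER_MS + isl_hops * processing_ms_per_hop


if __name__ == "__main__":
    # Hypothetical distances and processing time, for illustration only.
    direct = one_way_latency_ms(800.0, 800.0, 0, 0.0, 0.0)
    via_isl = one_way_latency_ms(800.0, 800.0, 2, 1500.0, 0.5)
    print(f"bent pipe: {direct:.2f} ms, two ISL hops: {via_isl:.2f} ms")
```

With zero hops the model reduces to the bent-pipe case, in which the footprint covers both terminal and gateway.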

8. Conclusions

This paper explored different transparent architectures for 5G NTN based on a mega-constellation. In particular, we studied the timing performance obtained when the backhaul, the midhaul, and the NR signal are relayed. To this end, we first characterized the performance of Starlink, obtaining a maximum UDP bitrate of almost 50 Mbps in the uplink and 440 Mbps in the downlink, as well as a mean RTT below 55 ms. We then developed a testbed that combined mega-constellation connectivity with open-source software, specifically OAI and Open5GS, to operate a 5G NTN. Finally, we carried out a testing phase to measure the RTT and jitter achieved by each of the proposed architectures.

The results showed that the backhaul and midhaul architectures achieve similar performance. In midhaul, we disabled a non-standard timer implemented by OAI for the RA procedure, because it expired due to the non-real-time implementation of RFSimulator. The NR architecture yielded the worst performance, since RFSimulator was designed for Ethernet connections, as the need for traffic compression over Starlink demonstrated. This technological limitation shows that RFSimulator is a straightforward solution that enables UE registration on the network, albeit with limited service performance.

From this work, we conclude that transparent architectures provide benefits for certain use cases, such as those primarily focused on backhaul and midhaul. We also validate the use of mega-constellations in 5G networks, highlighting their outstanding performance. Nevertheless, the Uu protocol stack involves time-sensitive procedures that pose significant challenges for transparent architectures, so the latency reduction between the UE and the gNB offered by regenerative architectures may be crucial. For now, we envision the coexistence of both types of architectures, since they address distinct use cases and offer complementary benefits.