1. Introduction

The success of cloud computing marks a significant evolution in information technology, especially in terms of providing new business opportunities. Cloud computing has accordingly received considerable attention in both research and industry [1,2]. End-users are able to obtain on-demand network access, machine resources (vCPU, memory), applications, or an entire infrastructure. As a result, our daily lives have become widely dependent on cloud computing for storing backups, writing and editing online documents, business collaboration and information sharing, and playing games online [3,4]. However, certain applications require efficient connectivity, low latency, and a fast response time, especially real-time IoT applications such as healthcare operations and vehicle traffic [5]. These requirements are hard to meet because the cloud, by its nature, resides at the far end of the network. To this end, edge computing was introduced as a supplemental paradigm for cloud computing, acting as an intermediate anchor between users and the cloud [6].

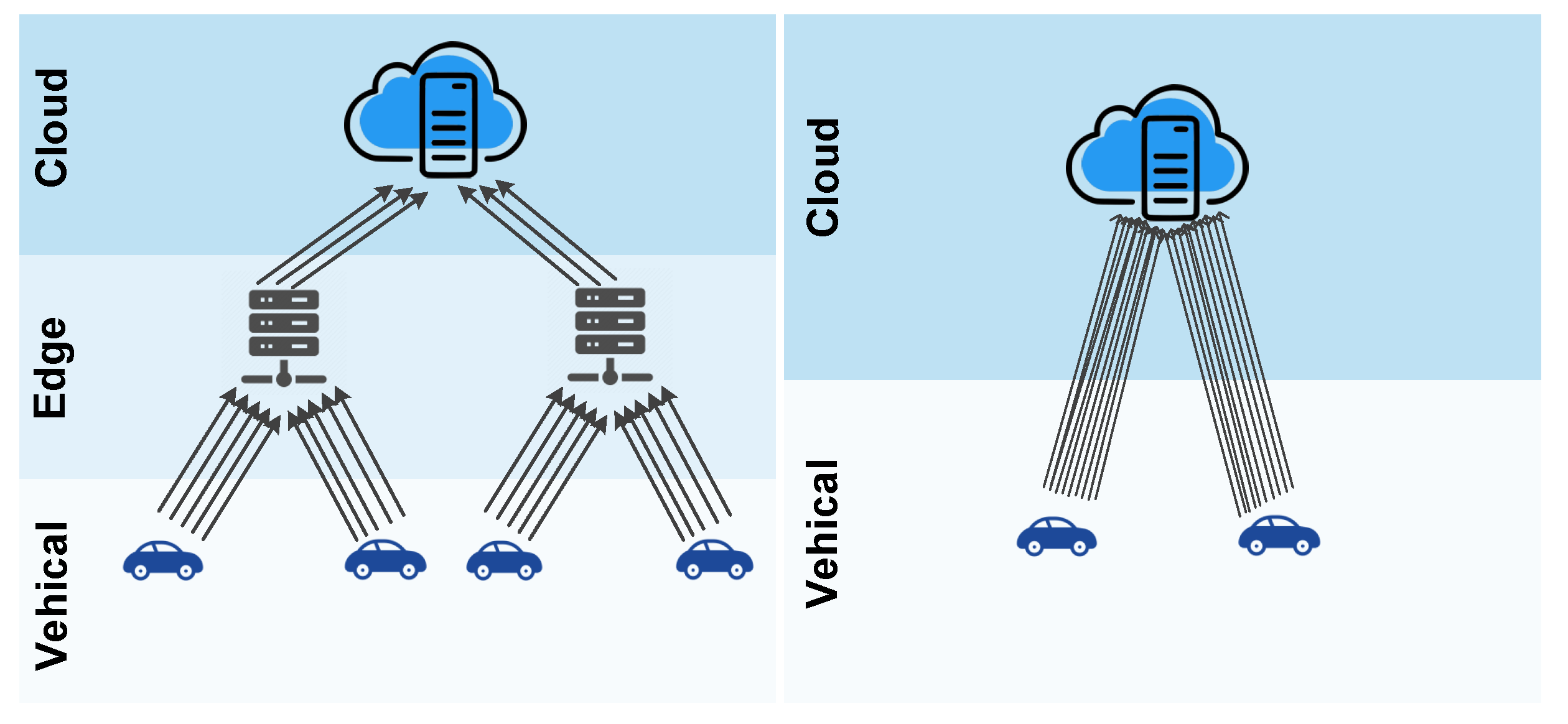

Edge computing extends cloud computing and shares most of its features [7,8]. On the one hand, cloud computing hosts heavy processing, in-depth analysis, and the power and energy demands of IoT devices. On the other hand, edge computing handles time-critical real-time data processing, such as decision-making and fast data retrieval. At the same time, edge servers periodically share and update their data with the cloud data center [9]. In other words, edge computing focuses on local, small-scale, real-time intelligent analysis. Thus, data is stored and processed regionally, and less data is uploaded to the cloud. The general benefit is a reduced network load, with better bandwidth efficiency, faster response times, and lower delay [10].

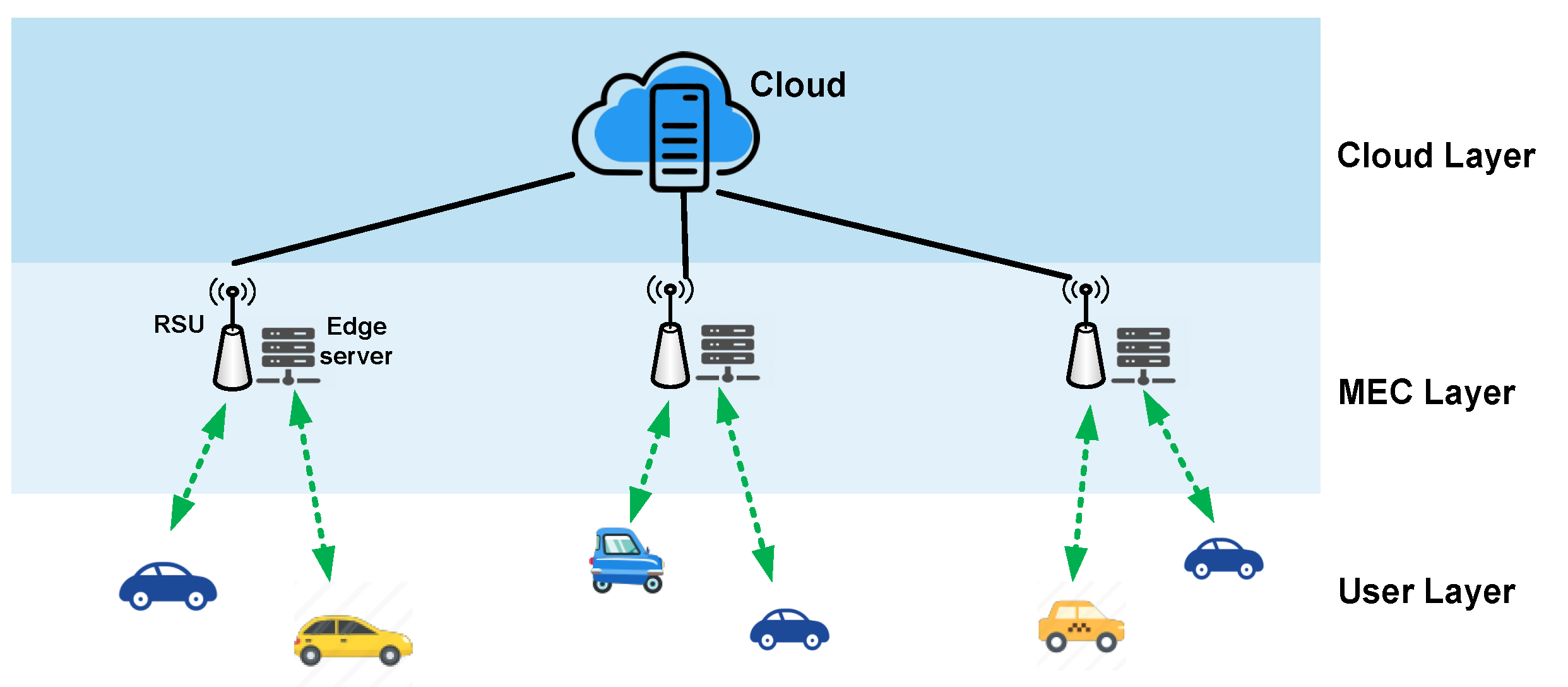

Edge computing is designed to be located as near to the data source of end-users as possible. As a result, the storage of used/retrieved data and part of the computing tasks are frequently carried out in the edge computing node, which in turn reduces intermediate data transmission and communication costs. In the end, the cloud service is pushed to the network edge, and the resources required for computation and storage are moved close to the end device, which reduces latency and conserves energy to a large extent. One important sector that benefits the most from the aforementioned edge computing features is the Internet of Vehicles (IoV). The IoV, by nature, requires high-speed, accurate, and scalable systems to deal with contemporary traffic challenges.

In IoV technology, the vehicle is presented as a smart object supported with sensing equipment, processes, and storage, that is connected to other nodes such as those of the edge, cloud, or other vehicle(s), situations which constitute the phenomenon which is called vehicle-to-everything communication (V2X) [

11]. Due to the V2X communication model, the IoV may use various technologies for connection, including wireless LAN (WLAN) networks and cellular networks such as 5G or Bluetooth. Several applications have been implemented in IoV with the support of passengers and traffic management managers, and in the urban traffic environment to provide network access for drivers [

12]. Annual reports indicate millions of injuries caused by traffic accidents, and that 90 percent of these accidents are caused by human error. Leveraging the ever-increasing development of IoT and mobile networks, the Internet of Vehicles (IoV) is expected to decrease traffic accident rates, and meet the needs of traffic efficiency and traveling convenience. However, this kind of service requires ultra-low latency (for, e.g., warnings of intersection collision dangers and merging arbitration) for communications, and fast processing, which is considered a big challenge for IoV applications [

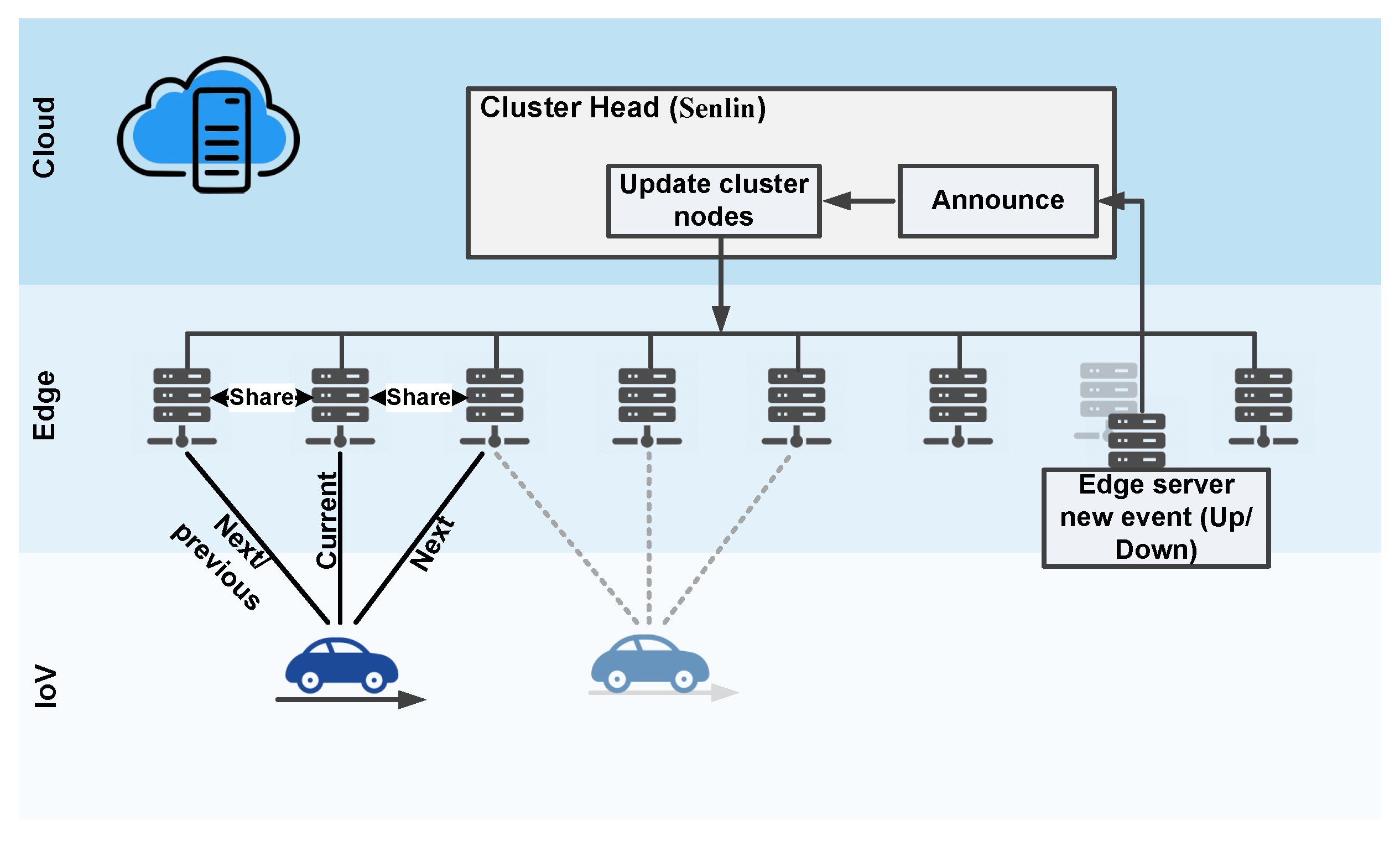

13]. In addition, issues remain unresolved in the traditional network, including the need to meet various pressing demands in heterogeneous and complex vehicular scenarios. This paper proposes a collaborative mobile edge computing framework that guarantees and enhances the connectivity between vehicles (IoV sensors) and cloud servers by utilizing the mobile edge computing paradigm in the middle. The edge server aims to continuously provide stable connections to vehicles moving between regions at high speed. The proposed framework seeks to ensure that the edge server will keep track of the vehicle’s movement and associate it with the correct/near-edge server by sharing the vehicle’s vital information. The proposed framework is implemented using an OpenStack cloud server in front of three edge servers. The evaluation is conducted through three parameters which are throughput, CPU, and latency, for two different scenarios. The main objective of this research is to guarantee and enhance connectivity with an acceptable speed of communication. Aligning with the aforementioned objectives, the paper contributes to (1) designing collaborative edge servers based on regional cluster management; and (2) implement the sector of edge computing servers using OpenStack cloud.

We shall begin by presenting the background and related works of edge computing in Section 2. The proposed framework is explained in Section 3. Section 4 shows the implementation part. Then, Section 5 presents the testing methodology. The results are discussed in Section 6. The conclusion is provided in Section 7.

3. Related Work

Cloud computing technologies have been studied and analyzed in several fields, and vehicular edge computing has been widely evaluated in the domain of network traffic systems. Several research activities have contributed ideas, designs, and implementations.

The authors in [23] divided the road area into several “lane section IDs” and assigned each one to a corresponding edge server. This strategy was implemented on top of a dynamic map system, and all vehicles are connected with edge servers by linking multiple edge servers. The main achievement of this work is reducing the effect of unstable radio signals so that vehicle data can be aggregated without disruption. The evaluation focused on the effectiveness of the load balancing and the scalability of the dynamic map system across multiple edge servers.

The authors in [18] proposed a collaborative VEC framework, called CVEC, to achieve a high level of collaboration among different edge computing anchors. In particular, CVEC aims to enhance the scalability of vehicular services and applications in two directions, through horizontal and vertical collaboration. In addition, the authors discussed the architecture, its possible use cases, and the technical enablers that support CVEC. The notable results of this work are a low execution delay and acceptable times for delivery, offloading to the cloud, and computation at the cloud.

In [24], the authors proposed a mobile healthcare framework utilizing an edge–fog–cloud collaborative network. Health monitoring was performed using the edge and fog, with the cloud at the far side used for further data analysis such as detecting abnormal status. Changes in the patient position during transportation are critical and potentially fatal in emergency cases. Because of low data delivery and connection interruptions in health-related applications, the authors recommended that the mobility information of the end-user be considered along with a pattern detection scheme inside the cloud. This helps direct users to nearby health centers. The theoretical analysis shows that the proposed framework minimizes the energy consumption and delay of IoT devices. In the experimental analysis, the proposed module was compared with existing models in terms of recall, precision, and time efficiency.

The work in [25] proposed an efficient IoT architecture with mobility-aware scheduling and allocation protocols for healthcare. The authors supported the smooth mobility of patients through an adaptive received signal strength (RSS) based hand-off technique. The proposed architecture dynamically distributes healthcare tasks among fog/cloud servers. The authors implemented the idea using a mobility-aware heuristic-based scheduling and allocation approach (MobMBAR). In particular, MobMBAR aims to balance the distribution of task execution based on the patients’ mobility and the spatial residual of sensed data. The reported results claim a reduction in the total scheduling time by accounting for most task features, including the critical level of individual tasks and the higher response time during the reallocation phase. The performance was validated by comparison with existing solutions using simulations.

The authors in [11] reviewed and addressed the gap between IoV and IoFV by presenting an in-depth analysis of the key differences between them. The paper focuses on the technological components, communication protocols, network infrastructure, data management, applications, objectives, challenges, and future open issues and trends related to both IoV and IoFV. It also analyzes machine learning, artificial intelligence, and blockchain. This work contributes to a deeper understanding of the implications and potential technologies in the context of transportation systems.

The paper in [12] presented an overview of the autonomous vehicle concept by discussing the major technologies in the 5G IoV environment. The authors also presented the security perspective by describing several attacks to which this environment is susceptible, along with several practices for meeting the needs of autonomous vehicle security. In particular, they reviewed major cyberattacks concerning information and communication security, and some solutions for addressing them.

Table 2 presents a brief summary of the related works.

5. Implementation

The framework was subjected to a real-time implementation with three real servers as a proof of concept of the idea claimed in this work. The CCVEC framework was designed and implemented using OpenStack [27] as the cloud and edge computing logic. OpenStack is an open source cloud operating system designed to manage and control the entire computing infrastructure, such as storage, resources, and network. OpenStack also provides services and platforms for end-users and enterprises. In this research, the Senlin clustering service is used and configured to create and manage the cluster nodes with a high degree of orchestration. Initially, the cluster profile was created at the basic level for all cluster nodes using $ openstack cluster profile create myserverProf. Next, using Senlin, the cluster was created from the predefined profile and name using $ senlin cluster-create -p myserverProf clusterName. Later, we set the required cluster size to three nodes using $ senlin cluster-resize --capacity 3 clusterName. It is worth mentioning that all the cluster nodes and the cloud run with a basic profile to avoid complexity and performance trade-offs. In other words, we kept all settings at their defaults in order to obtain a proof of concept of our claim.
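The three cluster-setup steps above can be collected in a small script. The following is a minimal sketch that only assembles the command lines and prints them for review; it does not contact an OpenStack deployment. The helper name `senlin_setup_commands` is hypothetical, the flag spellings (`-p`, `--capacity`) are taken from the commands quoted in the text, and any profile spec file that the profile-creation command may require is not shown in the text and is therefore left out.

```python
import shlex
from typing import List


def senlin_setup_commands(profile: str, cluster: str, capacity: int) -> List[List[str]]:
    """Return the three CLI invocations described in the text as argv lists.

    Nothing is executed here; the lists can be reviewed first and then passed
    to subprocess.run() on a host where the OpenStack/Senlin clients exist.
    """
    return [
        # Step 1: create the basic profile shared by all cluster nodes.
        ["openstack", "cluster", "profile", "create", profile],
        # Step 2: create the cluster from the predefined profile.
        ["senlin", "cluster-create", "-p", profile, cluster],
        # Step 3: resize the cluster to the requested number of nodes.
        ["senlin", "cluster-resize", "--capacity", str(capacity), cluster],
    ]


if __name__ == "__main__":
    for argv in senlin_setup_commands("myserverProf", "clusterName", 3):
        print(shlex.join(argv))
```

Keeping each command as an argv list rather than a single string avoids shell-quoting problems if the profile or cluster name ever contains spaces.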

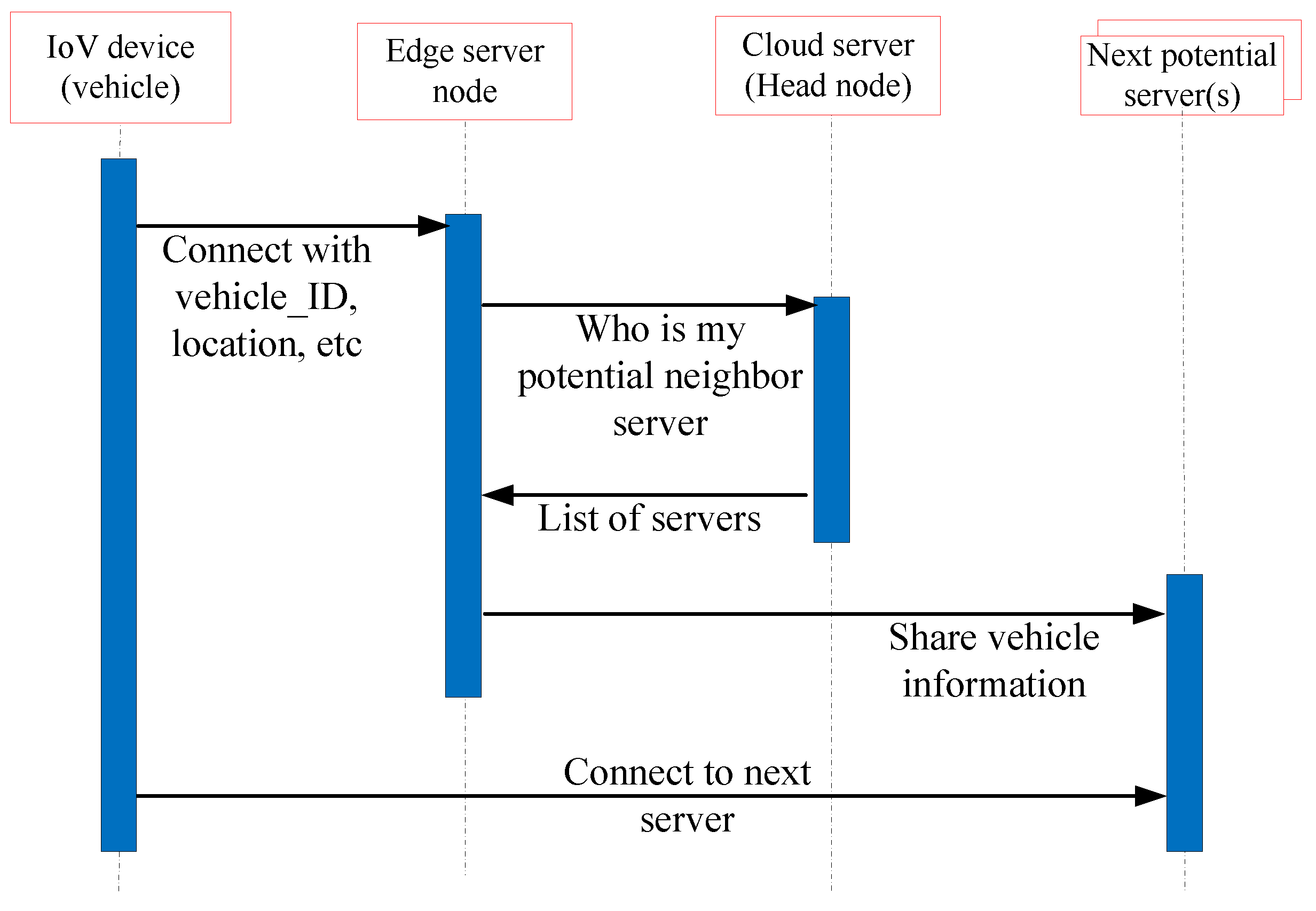

This work makes use of the receiver service in OpenStack, which captures the events of vehicles connecting to a cluster node. The API was used to share vehicles’ information (e.g., vehicle_ID) between the current and the next node. Figure 4 shows the message passing between network components.
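The sharing of vehicle information between the current and the next node can be pictured with a small in-memory model. This is an illustrative sketch only: the `EdgeNode` class, its methods, and the `last_region` field are hypothetical names introduced here, and the sketch does not call the OpenStack receiver API or reproduce the actual message format of the framework.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class EdgeNode:
    """Hypothetical stand-in for one edge cluster node."""
    name: str
    # Maps vehicle_ID to the information known about that vehicle.
    vehicles: Dict[str, dict] = field(default_factory=dict)

    def on_vehicle_connected(self, vehicle_id: str, info: Optional[dict] = None) -> dict:
        """Handle a 'vehicle connected' event.

        If a record was already shared by the previous node it is reused,
        otherwise a fresh record is created.
        """
        record = self.vehicles.setdefault(vehicle_id, {"vehicle_ID": vehicle_id})
        if info:
            record.update(info)
        return record

    def share_with(self, next_node: "EdgeNode", vehicle_id: str) -> None:
        """Copy this node's record for the vehicle to the next node."""
        next_node.vehicles[vehicle_id] = dict(self.vehicles[vehicle_id])


if __name__ == "__main__":
    current, upcoming = EdgeNode("edge-1"), EdgeNode("edge-2")
    current.on_vehicle_connected("V-001", {"last_region": "A"})
    current.share_with(upcoming, "V-001")
    # The next node already holds the record when the vehicle arrives.
    print(upcoming.on_vehicle_connected("V-001"))
```

The point of the sketch is the ordering: the record is pushed to the next node before the vehicle connects there, so the connection event finds existing data instead of starting from scratch.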

6. Evaluation Methodology

This section explains the testing methodology of the proposed framework, covering the environment setup, the scenario specifications, and the testing parameters used to obtain the results.

6.1. Testing Environment

The testing platform of the proposed framework is described in this section by presenting the testing methodology, traffic load, and evaluation parameters. All the devices (cloud, edges, and vehicles) involved in this test are connected to a wired local area network. It is worth noting at the outset that the evaluation does not concern native cloud functionalities such as creating/deleting VMs, reading/writing storage, and controlling VMs; the test simply sends a plain string, which the destination sinks. Instead, the evaluation examines the connection flow between cluster nodes in order to provide a proof of concept and validate the idea claimed in this work.

The test-bed in this research utilizes the VMTP [28] measurement tool, which is a data path performance evaluator specifically built for OpenStack clouds.

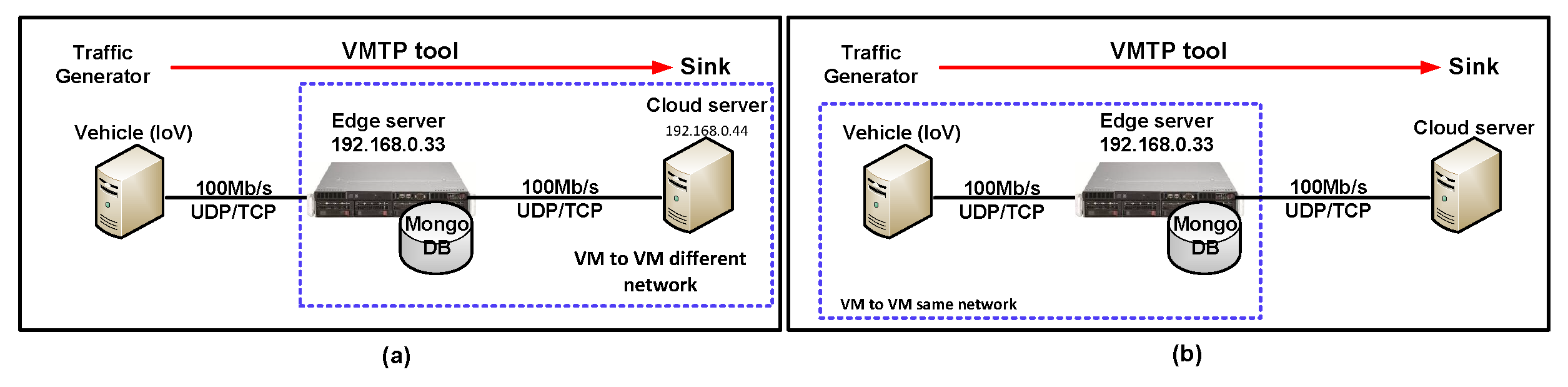

6.2. Testing Scenarios

Scenario 1: The default configuration file uses “admin-openrc.sh” as the rc file, which generates six standard sets of performance data. The configuration file was modified to collect a single case for scenario 1. Scenario 1 collects the results for east–west flows between two VMs on the same network with a private fixed IP (flow1), which evaluates the connection between the edge and cloud node, as they can be fixed to an IP. The network traffic was collected with ICMP and TCP protocol measurements. The configuration file and protocols were selected with the command “python vmtp.py -r admin-openrc.sh -p admin --protocols IT”, as can be seen in Figure 5a. In this scenario, MongoDB was used to store the information related to the scenarios’ configurations in “client_db” with the collection name “pns_web_entry”. The database is reached through the fields vmtp_mongod_port, vmtp_db, and vmtp_collection. The time period for this test is set to 120 s (instead of the default 10 s) for the throughput measurement between the edge and cloud server using the line “python vmtp.py --host localadmin@192.168.0.11 --host localadmin@192.168.0.33 --time 120”.

Scenario 2: In scenario 2, the configuration file was edited to generate one case out of the six standard sets of performance data. Scenario 2 was conducted to obtain results for east–west flows between two VMs in different networks with a fixed IP (flow2). This path evaluates the connection between the moving vehicle and the edge node.

The ICMP and TCP protocols were used to measure the connection between the moving vehicle and the edge server using the command “python vmtp2.py -r admin-openrc.sh -p admin --protocols IT”, as can be seen in Figure 5b. The time period for each test is set to 120 s to obtain an accurate throughput measurement between vehicles and the edge server using the line “python vmtp.py --host localadmin@192.168.0.44 --host localadmin@192.168.0.33 --time 120”.
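The host-to-host throughput runs of both scenarios differ only in the first host address, so they can be generated from one helper. The sketch below assembles the command strings without running VMTP. The function name `vmtp_host_command` and the scenario labels are hypothetical, and the flag spellings `--host` and `--time` are an assumption based on the commands quoted in the text.

```python
import shlex
from typing import List


def vmtp_host_command(hosts: List[str], seconds: int, script: str = "vmtp.py") -> str:
    """Build a VMTP host-to-host throughput command as a single string."""
    argv = ["python", script]
    for host in hosts:
        # One --host option per endpoint, in the order given in the text.
        argv += ["--host", host]
    argv += ["--time", str(seconds)]
    return shlex.join(argv)


# Endpoint pairs as quoted in the two scenario descriptions.
SCENARIO_HOSTS = {
    "scenario_1": ["localadmin@192.168.0.11", "localadmin@192.168.0.33"],
    "scenario_2": ["localadmin@192.168.0.44", "localadmin@192.168.0.33"],
}

if __name__ == "__main__":
    for label, hosts in SCENARIO_HOSTS.items():
        print(label, "->", vmtp_host_command(hosts, seconds=120))
```

Generating both commands from the same helper makes it harder for the two scenarios to drift apart in test duration or option order.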

It is important to note that the vehicle device may transmit any kind of data towards the edge computing node, regardless of the application in use. Scenario 2 is invoked once the vehicle moves from one edge server to the next, which is triggered manually, as vehicle mobility is outside the scope of this research.

6.3. Evaluation Parameters

The proposed mechanism is tested based on three parameters: round trip time (rtt) in ms to measure the latency, calculated by Equation (1); throughput (tp) in kB/s, calculated by Equation (2); and memory usage (RAM), which examines the storage footprint of each instance of data retrieval, calculated by Equation (3). The test was loaded with 500 concurrent connections as the desired load.

where RTT is the round trip time in s, s is the server, and c is the client. In throughput, I is inventory and T is time. In memory usage, mf = memory free, b = buffer, c = memory cache, and mv = max value.
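As a hedged illustration of how the three parameters could be computed from the symbols defined above, the sketch below uses one plausible reading of each definition. These formulas are assumptions and are not taken from Equations (1) to (3): throughput is read as inventory divided by time, memory usage as the share of the max value that is neither free, buffered, nor cached, and round trip time as the gap between sending a request at the client and receiving the server's reply. All function names are hypothetical.

```python
def round_trip_time_ms(sent_at_s: float, reply_at_s: float) -> float:
    """Assumed reading: time between the client's send and the server's reply, in ms."""
    return (reply_at_s - sent_at_s) * 1000.0


def throughput_kb_per_s(inventory_kb: float, time_s: float) -> float:
    """Assumed reading of tp: transferred data I divided by elapsed time T."""
    if time_s <= 0:
        raise ValueError("time_s must be positive")
    return inventory_kb / time_s


def memory_usage_percent(mv: float, mf: float, b: float, c: float) -> float:
    """Assumed reading: memory in use as a percentage of the max value mv.

    mf is free memory, b is buffer memory, and c is cached memory,
    all in the same unit as mv.
    """
    if mv <= 0:
        raise ValueError("mv must be positive")
    return 100.0 * (mv - (mf + b + c)) / mv


if __name__ == "__main__":
    # Arbitrary illustrative inputs, not measurements from the paper.
    print(round_trip_time_ms(1.000, 1.120))
    print(throughput_kb_per_s(1200.0, 120.0))
    print(memory_usage_percent(8000.0, 6000.0, 1000.0, 500.0))
```

The input values in the usage lines are arbitrary and serve only to show the units expected by each function.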

7. Results

This section discusses the most interesting and meaningful results of this research. The results were obtained from two scenarios, which correspond to the two legs of the framework’s connections. Scenario 1 tests the connection between the vehicle device and the edge computing server. Scenario 2 tests the connection between the edge and cloud computing servers in terms of cluster management.

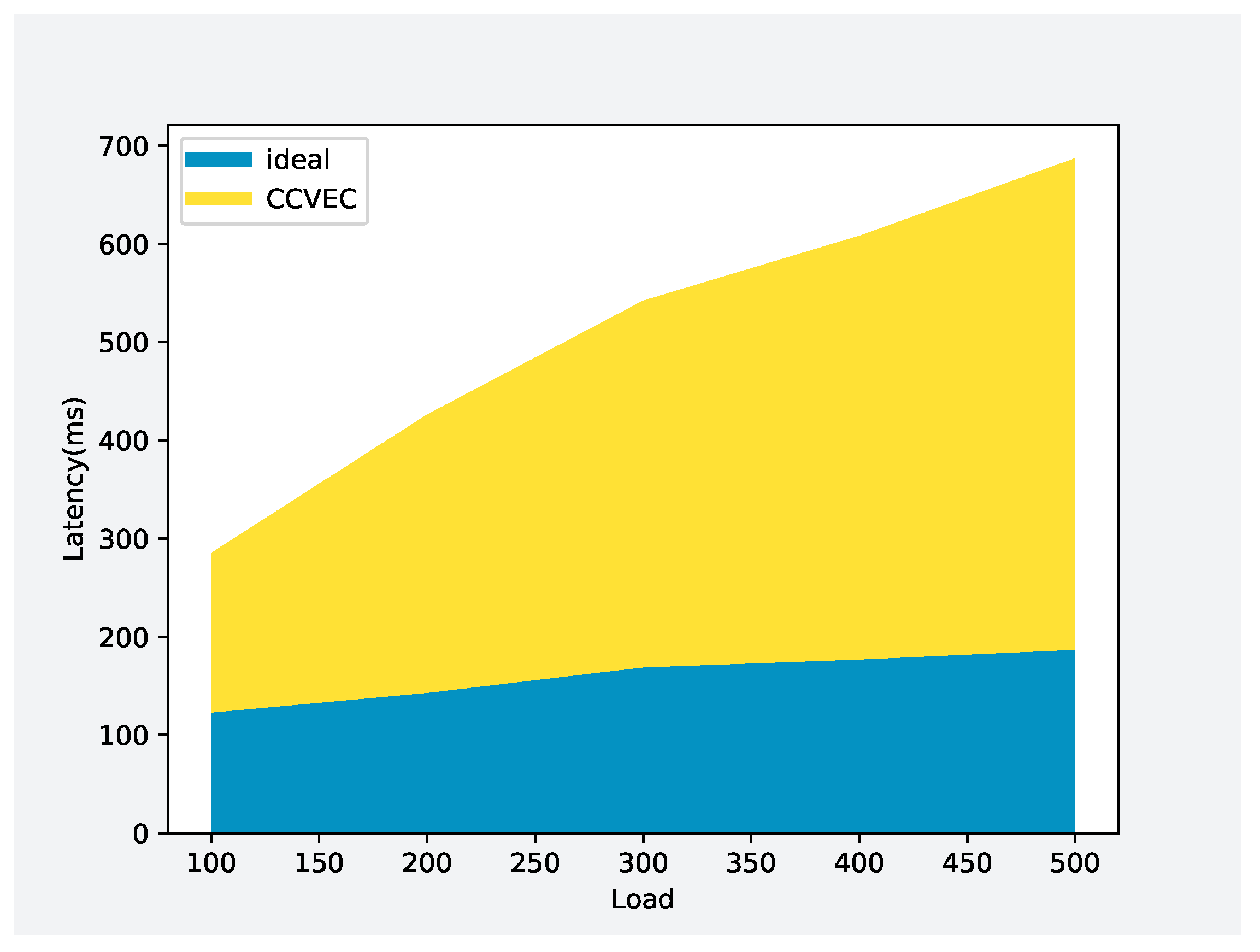

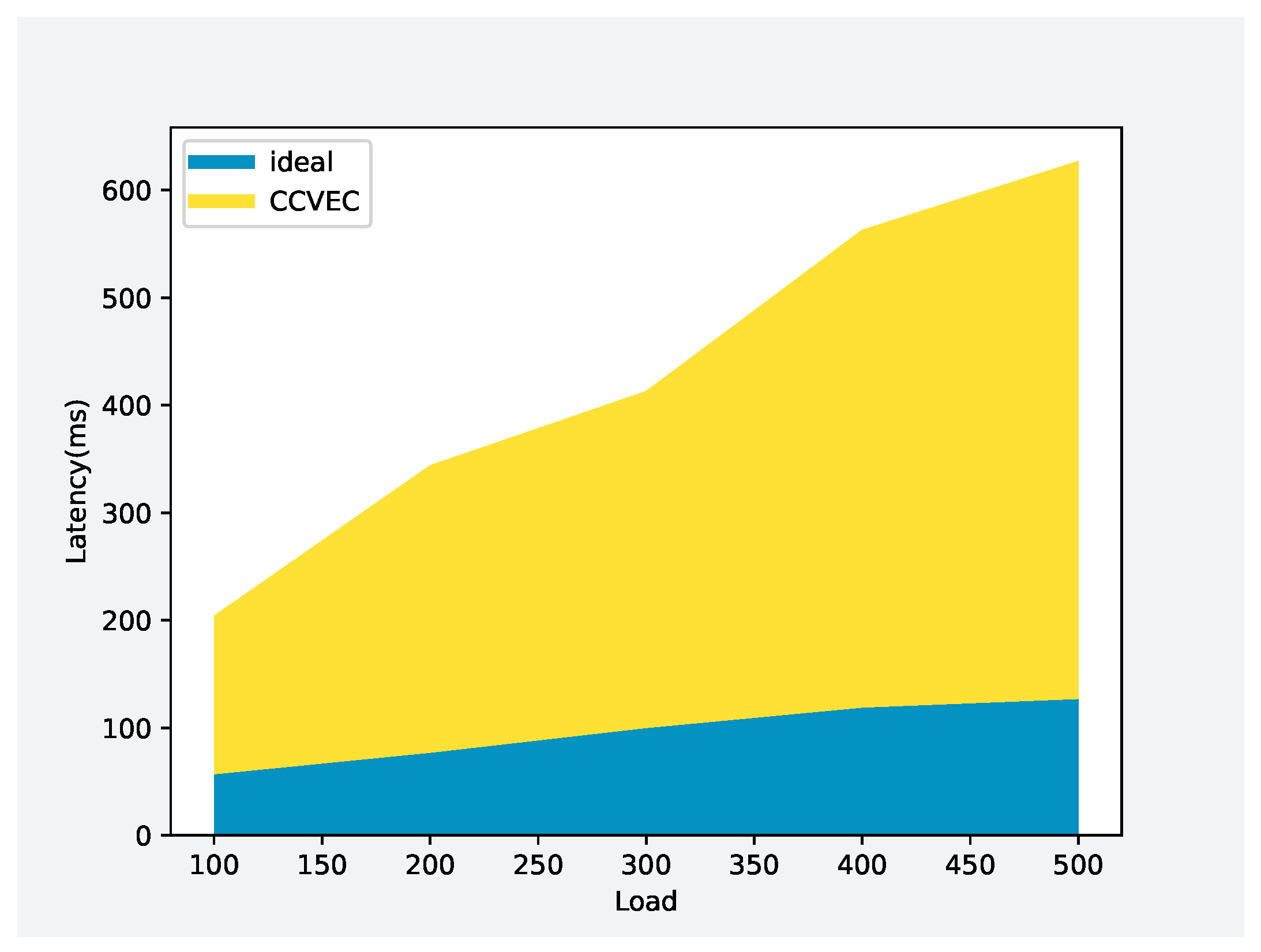

Scenario 1: Figure 6 shows the latency. In the ideal status, the vehicle keeps transferring data towards the edge server; the test transfers a simple string, which takes only 120 ms of latency, and the latency then increases by around 15%. We expected to see stability because few operations are involved in the ideal session. In contrast, the proposed CCVEC framework shows a clear difference: it spends 163 ms at 100 loads, and the latency grows with the load, reaching 283 and 373 ms at 200 and 300 loads, respectively. This latency is attributed to the fact that the edge server is occupied with other connections to the cloud and to neighboring cluster nodes. Another interesting finding is that the vehicle encounters extra latency when it moves to a new server, but it benefits from the pre-processed information that has already been shared by the previous server.

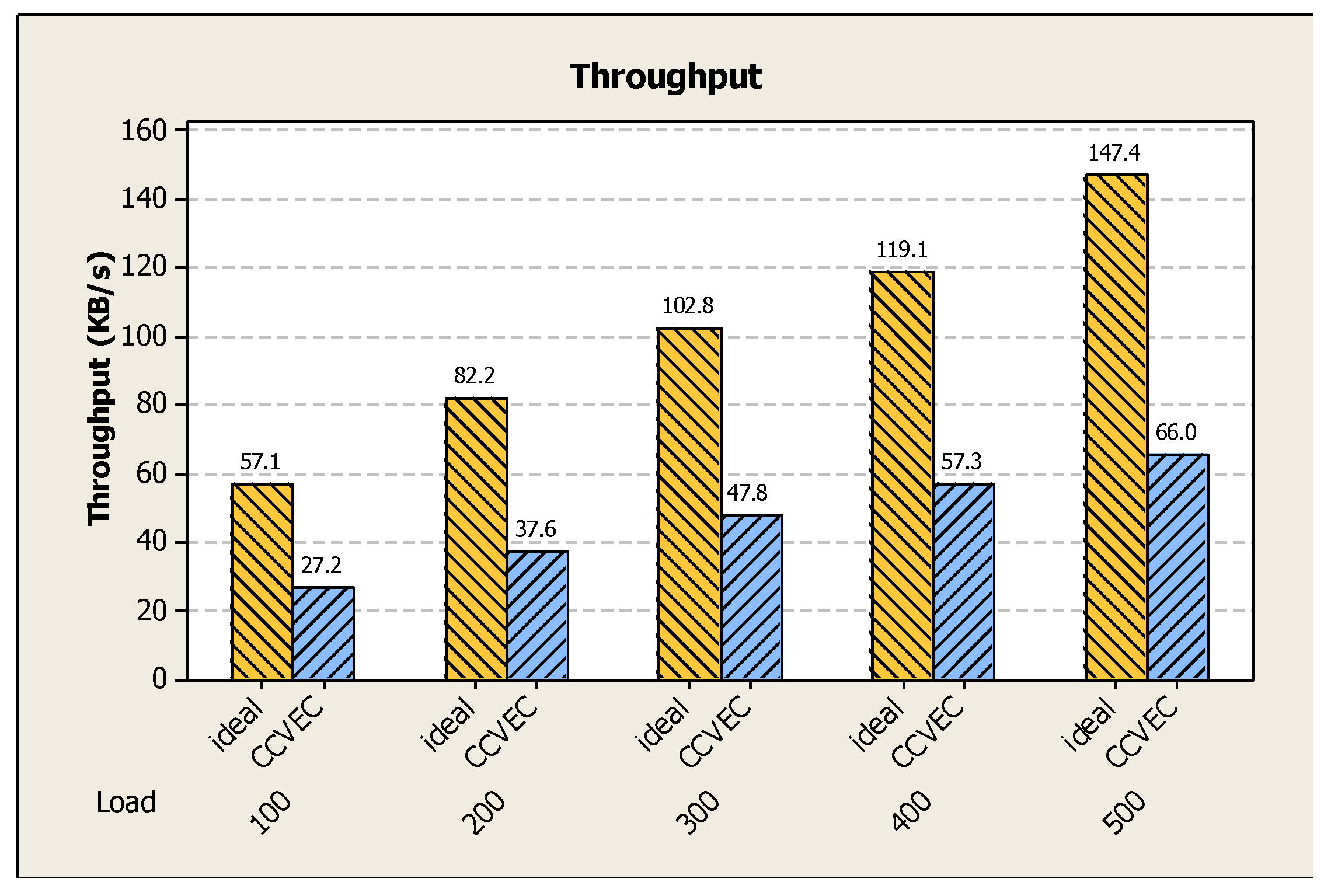

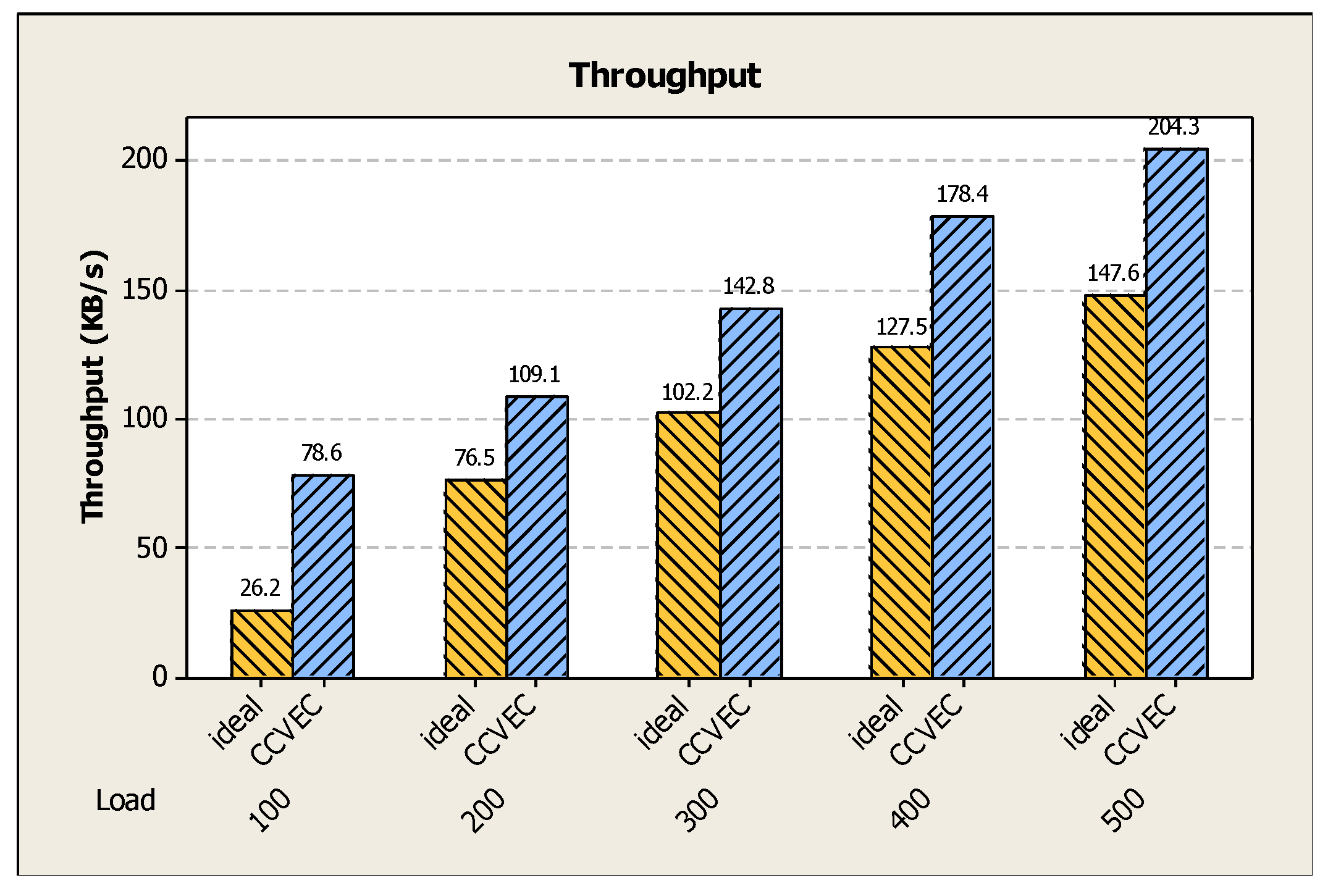

Figure 7 presents the throughput of the proposed mechanism. During the ideal session, the edge server receives sufficient throughput from the vehicle device, namely 57.1 kB/s at 100 loads, which is considered a high value. That is because the edge server in this case operates normally with the other cluster nodes. However, when the vehicle moves to the next edge server, the CCVEC framework is invoked and the throughput decreases to approximately 50% of its value in the ideal status. That is because the edge server is occupied with the cloud and the next/previous server.

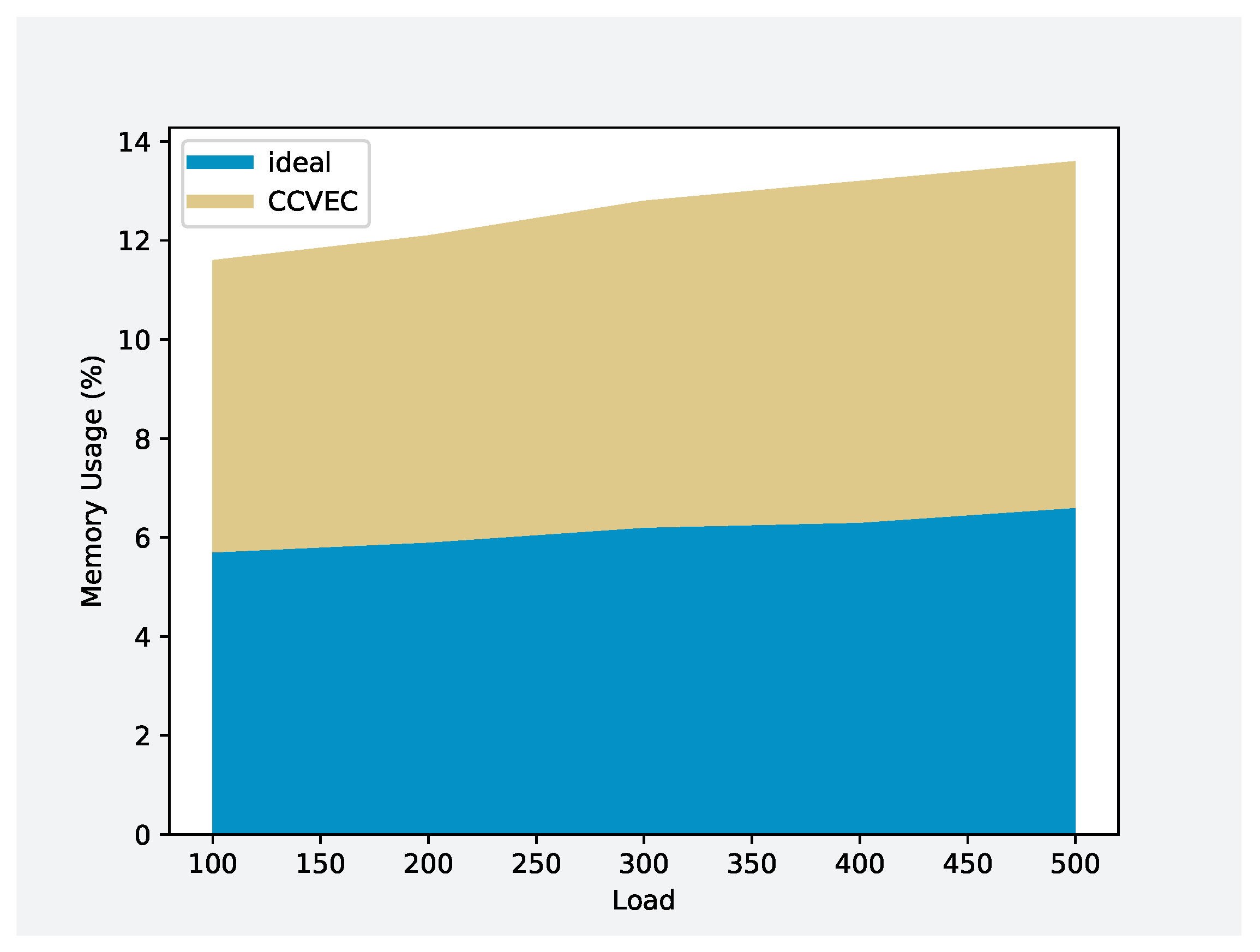

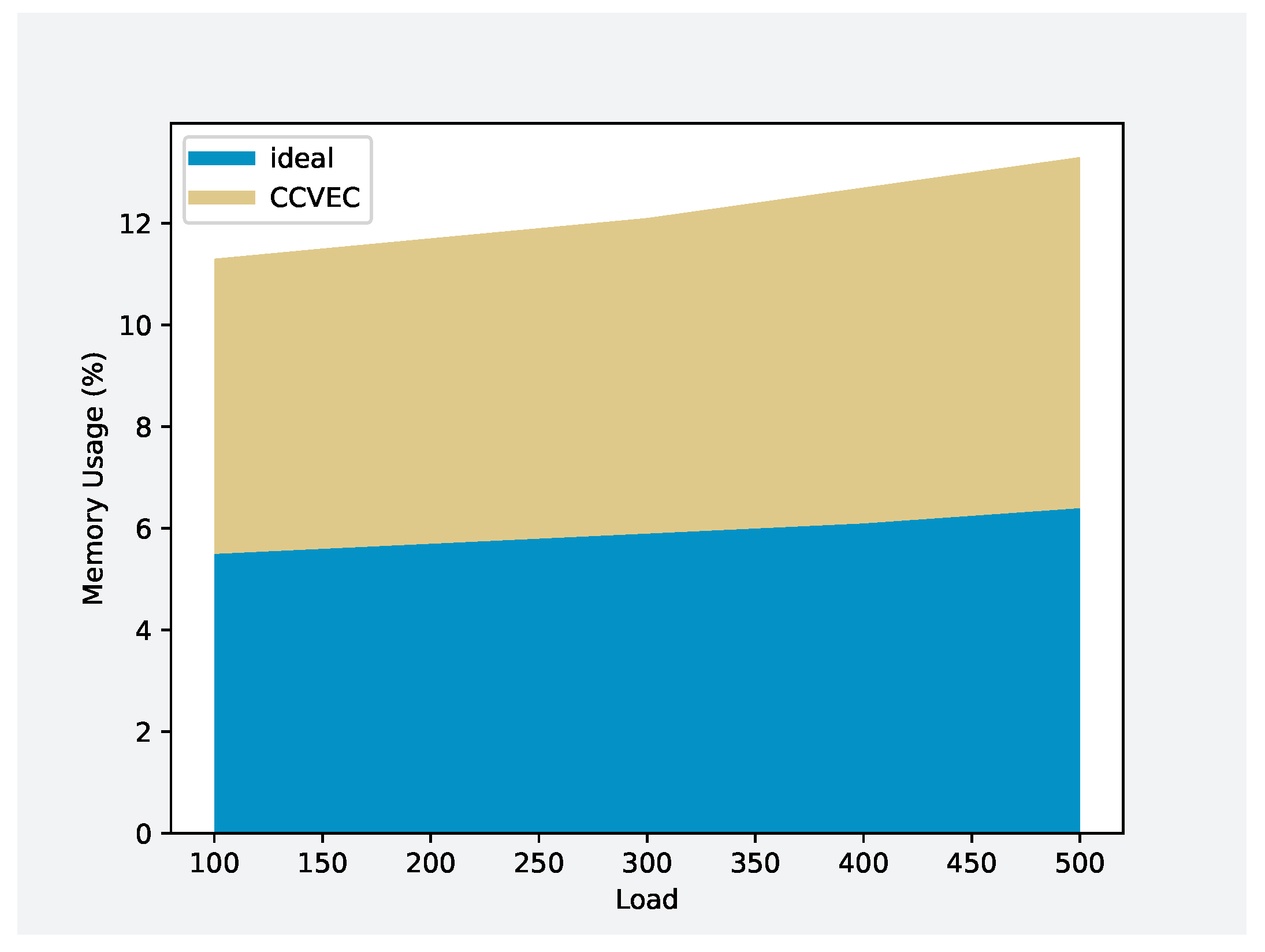

Figure 8 shows the impact of the CCVEC framework on edge servers when it performs read operations from the data center (cloud). The local database (edge) consumes less memory when it connects to the far-end cloud server, namely 5.9% of memory, which is due to the high integrity and ideal operations. However, once the CCVEC framework is invoked, it starts to read/write the vehicle’s information, such as the vehicle_ID and the high-resolution map, and the memory usage increases accordingly to 6.9% when a load of 400 is applied.

Scenario 2: This scenario presents an interesting part of the results because it measures the vital activities between the edge and the cloud server. Figure 9 describes the time taken to handle cluster nodes when the vehicle transfers to a new server. It spends 147 ms at 100 loads, which is higher than the ideal case by 91 ms. This variance is acceptable since it is less than 1 s. We attribute it to the connection with the cloud, the next edge server, and some database retrieval. In addition, it is worth mentioning that, when the load is 500, the CCVEC spends 501 ms, which is a larger difference from the ideal case because of the intersection session between the two neighboring clusters. The intersection might occur with the previous server as well.
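The figures quoted for 100 loads imply an ideal-case latency that the text does not state directly. The short sketch below works through that arithmetic and checks the quoted values against the 1 s acceptance threshold mentioned above. The function names are hypothetical, and the relative overhead is a derived quantity rather than a value reported in the paper.

```python
def ideal_latency_ms(measured_ms: float, overhead_ms: float) -> float:
    """Ideal-case latency implied by a measured value and its stated overhead."""
    return measured_ms - overhead_ms


def relative_overhead(measured_ms: float, overhead_ms: float) -> float:
    """Overhead expressed as a fraction of the implied ideal-case latency."""
    return overhead_ms / ideal_latency_ms(measured_ms, overhead_ms)


def within_threshold(measured_ms: float, threshold_ms: float = 1000.0) -> bool:
    """True when the latency stays below the acceptance threshold (1 s in the text)."""
    return measured_ms < threshold_ms


if __name__ == "__main__":
    # 147 ms measured at 100 loads, stated to be 91 ms above the ideal case.
    print(ideal_latency_ms(147, 91))
    print(relative_overhead(147, 91))
    # Both quoted CCVEC latencies (100 and 500 loads) against the 1 s threshold.
    print(within_threshold(147), within_threshold(501))
```

Under these figures the implied ideal latency at 100 loads is 56 ms, so the 91 ms overhead is larger than the baseline itself even though the total stays well under 1 s.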

Figure 10 presents the throughput of the proposed CCVEC framework while communicating with the cloud and neighbor servers. In the ideal case, little traffic is sent from the edge server towards the cloud, namely 26.2 kB/s at 100 loads, because no framework operations are active at this time. This amount of data (26.2 kB/s) is attributed to the other operations that regularly occur in the system, such as new events in the cluster change/update policy, or the cluster going up/down. At a load of 500, the results are promising, showing a 58 kB/s difference from the ideal status. This difference reflects the cost of all the activities created by the cluster, such as update, add, and remove. Moreover, the test is conducted in the same network with a fixed IP to avoid other network obstacles.

Memory consumption is calculated when the vehicle starts to read/write from the nearest edge server. The vehicle updates its track, loads the map, sends/receives its ID, and so on. Over the whole load range, the proposed framework showed its highest memory footprint (6.8%), which is still acceptable even when a load of 500 is applied, as indicated in Figure 11.

8. Conclusions

By seamlessly merging MEC and vehicular networks, VEC extends the functionalities of the cloud toward the edge of networks, allocating resources in close proximity to vehicles. Several IoV applications are connected to the edge of their network to fulfill the increasing requirements in terms of computation and storage resources. Technically, the data collected from these vehicles have to be transmitted somewhere else to be processed, such as in the cloud. In earlier designs, the cloud was considered to be too far from the vehicles. In addition, traffic services/applications require very fast and accurate real-time data processing near the vehicles. Edge computing is a solution that provides vehicles with substantial resources and data processing closer to the vehicle’s device. However, during fast mobility, vehicles encounter slow connections while traveling from one edge server realm to another. This research proposed a framework that guarantees and enhances the connection between the vehicle and edge computing servers. The edge server performs the role of continuously supporting a stable connection for traveling vehicles. The proposed CCVEC framework is implemented and evaluated using OpenStack cloud technology alongside three cluster servers. Results have been measured in terms of the throughput, latency, and memory of the entire framework in two different scenarios. We conclude that the proposed framework performs with acceptable results, spending 163 ms at 100 loads, with the latency growing as the load increases to reach 283 and 373 ms at 200 and 300 loads, respectively. These values remain within acceptable latency limits. Scenario 2 reflects the same perspective with 500 loads applied, where the throughput is only 60 kB/s from the ideal status. The general finding of this research is that the framework facilitates and smooths the transition of the vehicle from one realm to the next. Vehicle applications are also supported with wise decisions in terms of edge selection for the connection. Future work plans to extend the idea by involving new collaborations between vehicles that interchange vital information on the go, which is expected to improve the latency profile and be beneficial from a security perspective while sharing sensitive traffic data.