A Dynamic Service Placement Based on Deep Reinforcement Learning in Mobile Edge Computing

Abstract

1. Introduction

1.1. Motivation and Challenges

1.2. Contributions and Paper Organization

- We investigate the service placement problem in multi-user mobile edge computing, with the objective of minimizing the total delay of users subject to limits on physical resources and cost.

- We propose a decentralized dynamic service placement framework based on deep reinforcement learning (DSP-DRL), which introduces a migration conflict resolution mechanism into the learning process to maintain service performance for users. We formulate service placement under migration conflict as a mixed-integer linear programming (MILP) problem. We then propose a migration conflict resolution mechanism that avoids invalid states and approximates the policy in the decision module according to the migration feasibility factor.

- Extensive evaluations demonstrate that the proposed dynamic service placement framework outperforms baselines in terms of efficiency and overall latency.

2. Related Work

3. Model and Problem Formulation

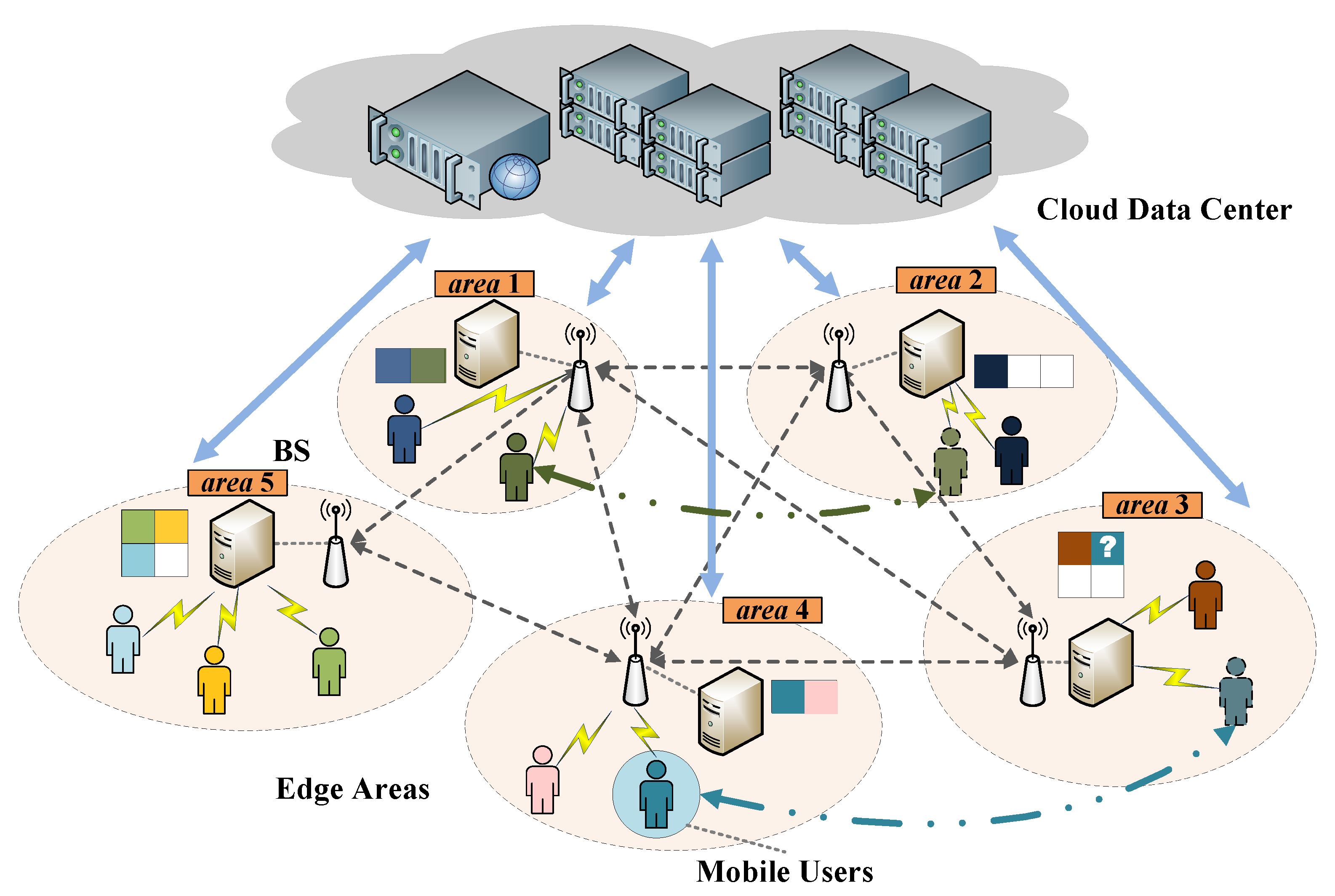

3.1. System Model

3.2. QoS Model

3.2.1. Computing Delay

3.2.2. Communication Delay

3.2.3. Updating Delay

3.3. Problem Formulation

4. Dynamic Service Placement Framework Based on Deep Reinforcement Learning

4.1. Deep Reinforcement Learning Formulation

4.2. Migration Conflicting Resolution Mechanism

4.2.1. Service Placement under Migration Conflict

4.2.2. Migration Conflict Resolution Mechanism

Algorithm 1: Migration conflict resolution method
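As a rough illustration of what a migration conflict resolution step can look like, the sketch below assumes that several services propose migrations in the same time slot, that a conflict arises when the proposals targeting one edge server exceed its free computing capacity, and that proposals are granted in descending order of a feasibility score. The `Proposal` type, its fields, and the ranking rule are hypothetical and are not taken from the paper's algorithm.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    service: str        # hypothetical service identifier
    current: str        # edge server hosting the service now
    target: str         # edge server the agent wants to migrate to
    demand: float       # computing resource the service requires
    feasibility: float  # hypothetical migration feasibility score


def resolve_conflicts(proposals, free_capacity):
    """Grant migrations in descending feasibility order (assumed rule).

    A proposal whose demand no longer fits on its target is rejected and the
    service stays where it is, so no target server is overcommitted.
    Capacity released by departing services is ignored for simplicity.
    """
    remaining = dict(free_capacity)  # do not mutate the caller's mapping
    placement = {}
    for p in sorted(proposals, key=lambda p: p.feasibility, reverse=True):
        if p.target == p.current:
            placement[p.service] = p.current  # nothing to migrate
        elif p.demand <= remaining.get(p.target, 0.0):
            remaining[p.target] -= p.demand
            placement[p.service] = p.target   # migration granted
        else:
            placement[p.service] = p.current  # conflict: keep old placement
    return placement


proposals = [
    Proposal("v1", "m1", "m2", demand=2.0, feasibility=0.9),
    Proposal("v2", "m3", "m2", demand=2.0, feasibility=0.4),
]
print(resolve_conflicts(proposals, {"m2": 3.0}))
```

Here both services target `m2`, which can only host one of them, so the proposal with the lower score is rejected and `v2` keeps its current server.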

4.3. Dynamic Service Placement Based on Deep Reinforcement Learning

Algorithm 2: Dynamic service placement based on DRL

5. Evaluations

5.1. Basic Setting

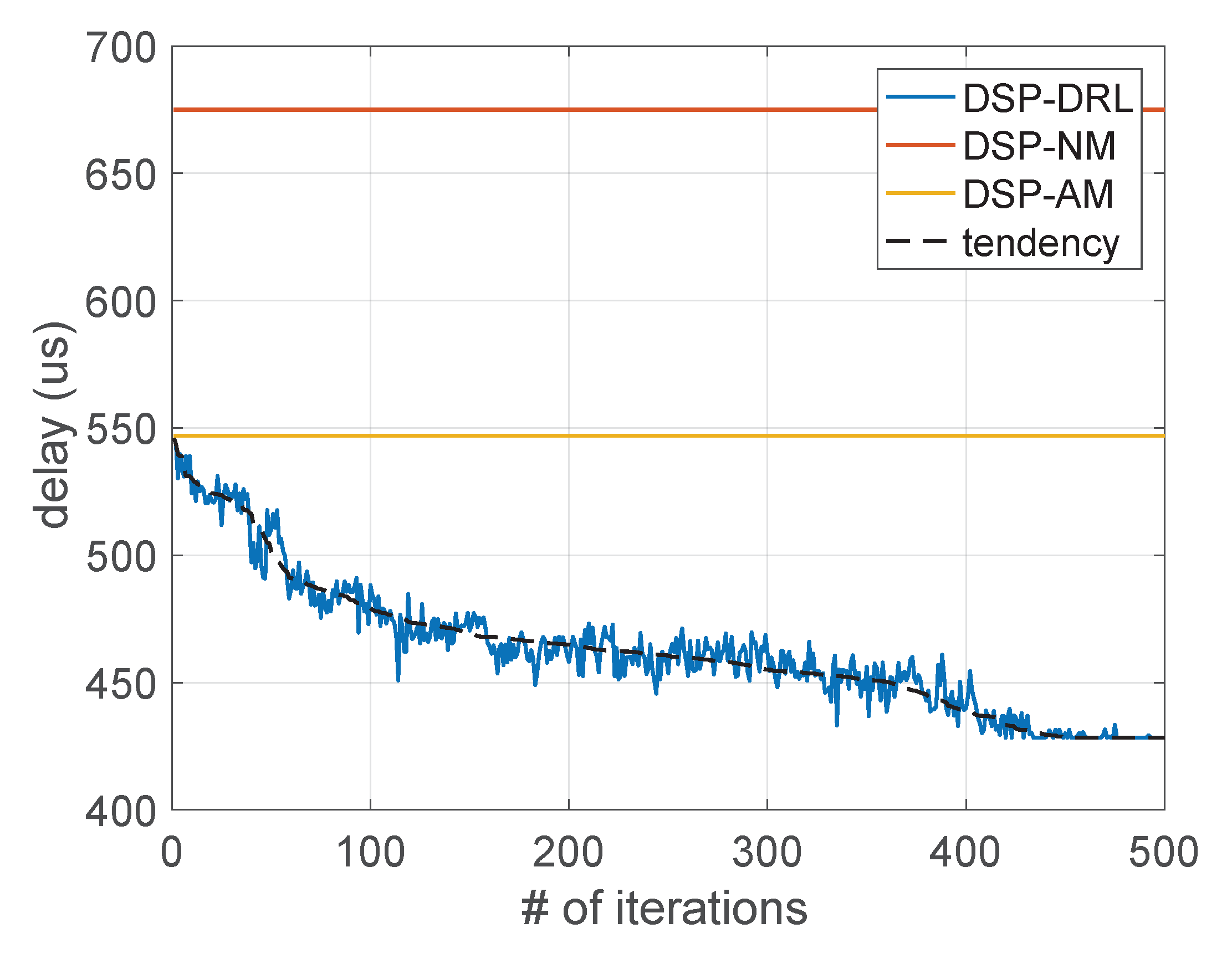

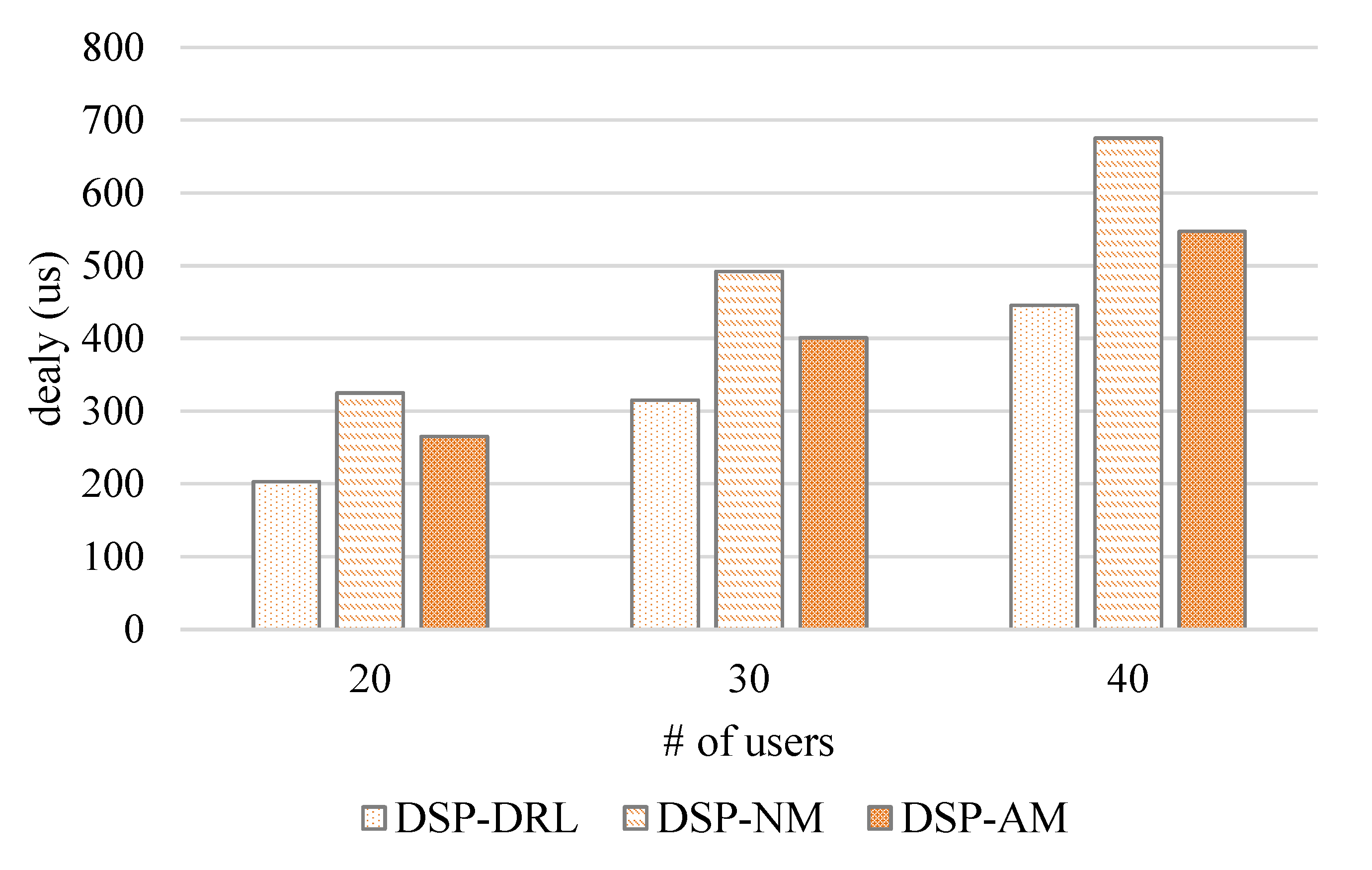

- DSP-NM: Services are placed on their initial edge servers, and no migration occurs over the timescale for any of the mobile users.

- DSP-AM: Services always migrate to follow the users’ dynamic trajectories over the timescale.
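The two baselines can be sketched for a single user as follows. This is a minimal illustration that assumes a trajectory is given as the identifier of the edge server closest to the user in each time slot; the function names and the trajectory encoding are hypothetical, not part of the paper.

```python
def placement_no_migration(initial_server, trajectory):
    """DSP-NM: keep the service on its initial edge server in every slot."""
    return [initial_server for _ in trajectory]


def placement_always_migrate(trajectory):
    """DSP-AM: move the service to the user's closest server in every slot."""
    return list(trajectory)


def count_migrations(placement):
    """Number of slot boundaries at which the hosting server changes."""
    return sum(1 for a, b in zip(placement, placement[1:]) if a != b)


# Assumed encoding: closest edge server per time slot for one user.
trajectory = ["m1", "m1", "m2", "m3", "m3"]

nm = placement_no_migration("m1", trajectory)
am = placement_always_migrate(trajectory)
print(nm, count_migrations(nm))
print(am, count_migrations(am))
```

DSP-NM never pays a migration (updating) delay but may serve the user from a distant server, while DSP-AM keeps the service close at the price of a migration on every handover. A learned policy sits between these two extremes.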

5.2. Experiment Results

5.2.1. Convergence

5.2.2. Total Delay

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Notation | Definition |
|---|---|
| M | Set of MEC nodes, where . |
| U | Set of users, where . |
| V | Set of services, where . |
| | Set of services placed on edge server . |
| | Set of users served by the services in set . |
| | A boolean variable that indicates serving on edge server at time slot t. |
| | The amount of required computing resource of at time slot t. |
| | The computing delay of . |
| | The communication delay of . |
| | Updating delay of during the dynamic migration. |
| | Maximum transmission rate between and . |
| | Channel bandwidth of link between and . |
| | Physical distance between and . |
| | The storage capacity of . |
| | The computing capacity of . |
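To show how the quantities in the notation table fit together, the sketch below assumes that a user's delay is the sum of its computing, communication, and updating delays, and that a placement is feasible on an edge server only if the summed resource demand does not exceed that server's capacity. Both function names and the additive form are assumptions made for illustration.

```python
def total_delay(computing, communication, updating):
    """Sum the three per-user delay components over all users (assumed form)."""
    return sum(c + m + u for c, m, u in zip(computing, communication, updating))


def within_capacity(demands, capacity):
    """True if the summed demand placed on one edge server fits its capacity."""
    return sum(demands) <= capacity


# Two users with illustrative delay values (arbitrary units).
print(total_delay([1.0, 2.0], [0.5, 0.5], [0.0, 1.0]))
print(within_capacity([2, 3], 5), within_capacity([2, 4], 5))
```

The same capacity check applies separately to storage and to computing resources, since the table lists a capacity of each kind per edge server.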

| Hyperparameter | Setting |
|---|---|
| learning rate for actor | 0.001 |
| learning rate for critic | 0.002 |
| reward decay | 0.9 |
| soft replacement | 0.01 |
| replay memory | 200 |
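The settings above can be collected in a small configuration object. The sketch below assumes an actor-critic learner with target networks (for example DDPG), reads "reward decay" as the discount factor, "soft replacement" as the target-network averaging coefficient, and "replay memory" as the buffer capacity. These readings, and all names in the code, are assumptions.

```python
import random
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class DDPGConfig:
    actor_lr: float = 0.001   # learning rate for actor
    critic_lr: float = 0.002  # learning rate for critic
    gamma: float = 0.9        # reward decay (assumed: discount factor)
    tau: float = 0.01         # soft replacement (assumed: Polyak coefficient)
    memory_size: int = 200    # replay memory capacity


def soft_update(target, online, tau):
    """Polyak averaging: target <- tau * online + (1 - tau) * target."""
    return [tau * w + (1.0 - tau) * t for t, w in zip(target, online)]


class ReplayMemory:
    """Fixed-size buffer that discards the oldest transition when full."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def store(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size):
        items = list(self.buffer)
        return random.sample(items, min(batch_size, len(items)))


cfg = DDPGConfig()
memory = ReplayMemory(cfg.memory_size)
for step in range(250):
    memory.store(step)
print(len(memory.buffer))  # capped at cfg.memory_size
print(soft_update([0.0, 1.0], [1.0, 1.0], cfg.tau))
```

With a coefficient of 0.01, the target parameters move only 1% of the way toward the online parameters per update, which is the usual reason for choosing a small value: it keeps the critic's learning targets slowly varying.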

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, S.; Wu, J.; Shi, J.; Lu, P.; Fang, J.; Liu, H. A Dynamic Service Placement Based on Deep Reinforcement Learning in Mobile Edge Computing. Network 2022, 2, 106-122. https://doi.org/10.3390/network2010008
