Abstract

This review explores the practical use of the (Observer)—Reporter—Interpreter—Manager—Educator ((O)RIME) model in the assessment of clinical reasoning skills and its potential to provide effective feedback in the clinical teaching of veterinary learners. For descriptive purposes, we use the examples of bovine left displaced abomasum and an apparently anestric cow. Bearing in mind that the primary purpose of effective clinical teaching is to prepare graduates for a successful career in clinical practice, every effort should be made to have veterinary learners achieve, at graduation, a minimum of Manager-level competency in clinical encounters. However, the literature concerning clinical teaching in veterinary medicine is relatively scant. There is even less literature on strategies and frameworks for assessment that can be used in the different settings to which veterinary learners are exposed during their education. The primary aim of this review is therefore to describe a teaching technique that is not currently used in the teaching of veterinary medicine but has the potential to be useful, and thereby to stimulate and facilitate discussion and research in this important area.

1. Introduction

The aim of clinical teaching in veterinary medicine is to prepare new entrants into the profession to meet all required day-1 veterinary graduate competencies. One of the cornerstones in the development of veterinary learners and their transition into practitioners is the exposure to practice. Exposure to practice (experiential learning or work-based learning) aims to assist veterinary learners to develop veterinary medical and professional attributes within the specific clinical context of the work [1]. One problem with exposure to practice is the assessment of progress in the clinical (reasoning) competencies of learners. This may be even more difficult with the increasing number of veterinary schools opting for a partial or entirely distributed model of exposure to practice, as instructors in the distributed institutions may be inexperienced in the assessment of the progression in clinical competencies of learners. In this review, we provide a description of one assessment framework that can be utilized for this purpose.

Development of clinical competencies in veterinary learners is heavily dependent on effective feedback [2,3,4,5,6,7]. To enhance the learner’s performance, feedback must be relevant, specific, timely, thorough, given in a ‘safe environment’, and offered in a constructive manner using descriptive rather than evaluative language. To deliver effective feedback well, instructors should receive formal training in its delivery [2,8,9,10,11,12].

1.1. Basics of Assessment of Clinical Competency of Veterinary Learners

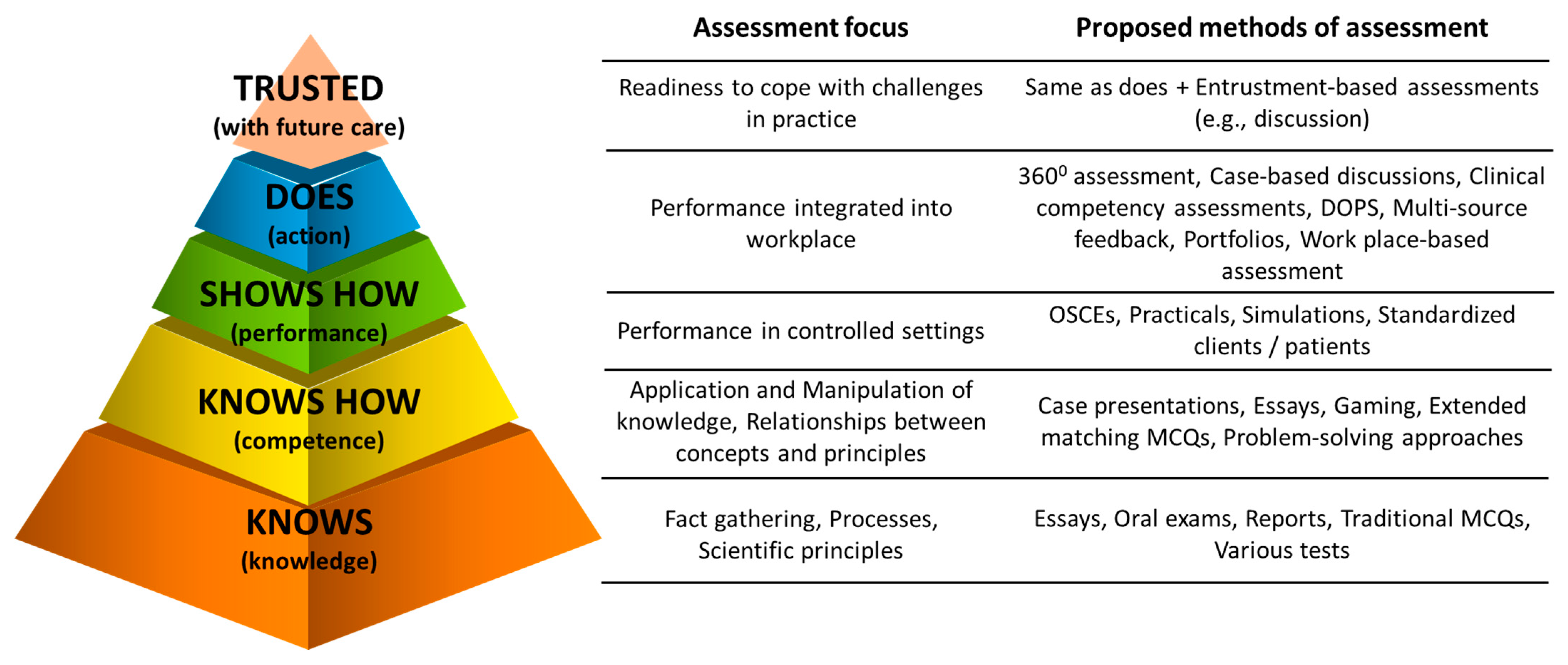

Assessment should be founded on the domains set out in Miller’s pyramid and its extension (Figure 1) [13,14,15]. Miller’s pyramid gave rise to competency-based medical education [13,14]. A variety of frameworks for assessment of clinical competencies are available to medical instructors [14,16]. Detailed discussion of all frameworks is beyond the scope of this article. Readers are referred to reviews or other appropriate articles on assessment of clinical competencies of learners in medical fields (e.g., [16,17]) and/or on educational psychology related to veterinary learners [18].

Figure 1. Extended Miller’s pyramid of clinical competence of learners, which can help veterinary instructors frame their thinking when assessing learners or planning learning and assessment activities (modified from [13,14]). DOPS—Direct Observation of Procedural Skills. MCQ—Multiple-Choice Question. OSCE—Objective Structured Clinical Examination.

Overall, the frameworks for assessment of clinical competencies of learners have been classified as (1) analytical, which deconstruct clinical competence into individual components (e.g., attitudes, knowledge and skills) and assess each of these separately (e.g., the ACGME framework—Accreditation Council for Graduate Medical Education); (2) synthetic, which attempt to view clinical competency comprehensively (as a synthesis of attitudes, knowledge and skills) by assessing clinical performance in real-world activities (e.g., the EPA—Entrustable Professional Activity or RIME frameworks); (3) developmental, which relate to milestones in the progression towards clinical competence (e.g., the ACGME or RIME frameworks and the novice-to-expert approach); or (4) hybrid, which incorporate a mixture of the above (e.g., the CanMEDS framework, derived from the old acronym of Canadian Medical Education for Specialists) [16,18,19,20].

Despite a significant proportion of veterinary medical education occurring in clinical settings, the literature describing approaches to assessment of the clinical competency of veterinary learners is limited [18]. Taking the One Health approach into consideration, methodologies used in human medicine should be applicable in veterinary medicine. The medical education literature contains a much larger body of evidence that some of these methods work in various fields of medicine, including in-patient and out-patient care.

1.2. Common Traps in Assessment of Clinical Competency in Veterinary Learners

Common traps include (1) assessing theoretical knowledge rather than clinical competencies [13,14]; (2) assessing learners against each other rather than against set standards [21]; (3) the belief that a pass/fail or similar grading system negatively affects the clinical competency of learners compared to a tiered grading system [22,23]; (4) applying different weightings to attitudes, knowledge and skills [24]; (5) leniency bias (‘fail-to-fail’ or ‘halo’) when using unstructured assessments of the clinical competency of learners [16,22,25]; and (6) occasional rather than continuous assessment of the learner’s performance [24]. A plethora of assessment methods that can objectively assess the clinical competency of the learner have emerged in the medical field in the past few decades [14,16,18], but very few have been adopted in veterinary medicine.

2. (O)RIME Framework for Assessment of Clinical Competency of Learners

One method of assessment that is in use and is transferable to veterinary medicine is the (Observer)—Reporter—Interpreter—Manager—Educator ((O)RIME) framework. The RIME framework was first described by Pangaro (1999) and has since been reported in a variety of settings with minimal need for adaptation [19,24,26]. In essence, the RIME framework is a tool used to formatively assess a student’s ability to synthesize knowledge, skills and attitude during a clinical encounter. It classifies learners along a continuum of four levels of professional development, starting as reporter, progressing through interpreter and manager, and arriving at mastery of clinical competency, namely educator. A major addition to this model, proposed by Battistini et al. (2002), was an initial step in the professional development of a learner, observer (ORIME) [21,27], which refers to the student’s ability to pay attention to, and perceive with open-mindedness, the events around them.

2.1. Levels of Clinical Competence of Learners Using the (O)RIME Model of Assessment

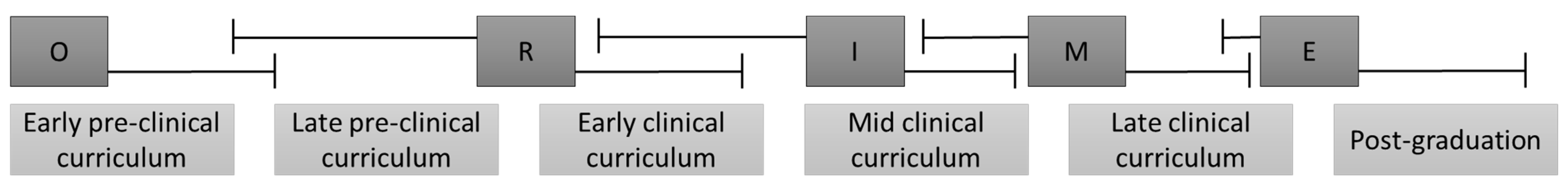

Learners show significant differences in their clinical competencies at the moment of reaching the various levels of the (O)RIME framework. We briefly present the typical expectations for the progression of veterinary learners through the (O)RIME framework (Figure 2) [24,27,28,29,30].

Figure 2. Typical expectations for the progression of veterinary learners through the (O)RIME framework. Significant overlap in the times at which a particular learner progresses from one level of the framework to the next is common. O—Observer. R—Reporter. I—Interpreter. M—Manager. E—Educator. Prepared using information from [24,27,28,29].

Learners at the observer level, typically representative of the very early part of the curriculum, lack skills to conduct a comprehensive health interview and/or present the clinical encounter at rounds or to peers or instructors. Learners at the observer level lack competencies that would contribute to the management of the particular case and patient care.

Learners at the reporter level, typically representative of the late part of the pre-clinical and early part of the clinical curriculum, should be capable of gathering reliable clinical information, preparing basic clinical notes, differentiating normal from abnormal, and presenting their findings to peers and/or instructors.

Learners at the interpreter level, typically representative of the early to mid-part of the clinical curriculum, should be capable of organizing gathered information in a logical way, preparing a prioritized list of differential diagnoses without prodding, and supporting their arguments for inclusion/exclusion of particular diagnoses/tests. They may or may not be able to propose a management plan for the clinical encounter.

Learners at the manager level, typically representative of the mid- to late-part of the clinical curriculum, should be capable of summarizing the gathered information in a logical way using veterinary medical language, preparing a prioritized list of differential diagnoses, supporting their arguments and proposing an appropriate management plan. At the manager level, the learner should consider the client’s circumstances, needs and preferences.

Finally, learners at the educator level, typically representative of the advanced part of the clinical curriculum, should be capable of doing all of the above, coupled with a critique of the encounter that includes important omissions and further research questions, and of presenting the case in a way that can educate others. Learners at this level are truly self-directed. In reality, some learners do not reach the level of educator by the time they graduate from veterinary school and may need 2–3 years post-graduation or a residency to reach it.

A significant overlap in the levels of achieved clinical competency is common [24,27,29]. This may, in part, result from the learner’s prior exposure to, and the complexity of, the clinical encounter they are dealing with. For example, a learner may work at Manager level in familiar or uncomplicated clinical encounters but operate at Interpreter level in complicated or unfamiliar ones. Indeed, a very complicated clinical encounter may even result in the learner operating at Reporter level.
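The ordering of the levels, and the way a learner's working level can drop as an encounter becomes more complicated or unfamiliar, can be sketched in a few lines of Python. This is an illustration only: the `Level` enumeration, the `working_level` and `demonstrated_levels` helpers, and the rule of dropping one level per step of added complexity are assumptions made for the example and are not part of the published framework.

```python
from enum import IntEnum


class Level(IntEnum):
    """(O)RIME levels, ordered from least to most advanced."""
    OBSERVER = 0
    REPORTER = 1
    INTERPRETER = 2
    MANAGER = 3
    EDUCATOR = 4


def working_level(usual: Level, complexity_steps: int) -> Level:
    """Hypothetical rule: drop one level per step of added complexity
    or unfamiliarity, never going below OBSERVER."""
    lowered = int(usual) - max(0, complexity_steps)
    return Level(max(int(Level.OBSERVER), lowered))


def demonstrated_levels(current: Level) -> list[Level]:
    """Levels are cumulative: a manager still observes, reports and interprets."""
    return [lvl for lvl in Level if lvl <= current]
```

Under these assumptions, `working_level(Level.MANAGER, 1)` gives `Level.INTERPRETER` and `working_level(Level.MANAGER, 2)` gives `Level.REPORTER`, mirroring the example of a learner who manages familiar cases but falls back to interpreting or reporting in harder ones.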

2.2. Advantages of the (O)RIME Framework

The major advantages of the (O)RIME framework include:

- A systematic structure of expectations that can be coupled with day one competencies to guide students through their education, and that allows faculty to assess and evaluate the introduction, implementation and assessment of these skills in the curriculum [30].

- Allows for early detection of at-risk learners [17,21,28,31]. These identified learners need immediate attention. The prompt addressing of poor performance has been stated as a priority for any medical, presumably including veterinary medical, education delivered in an outcome-based training mode [32].

- Allows for standardized assessment of clinical competencies of learners and, when implemented correctly, should prevent assessment of learners relative to each other [21].

- As the narrative associated with the (O)RIME framework is easy to understand, it benefits the learner both during feedback sessions and on receiving a report card [26,33].

- At each level, it assesses a synthesis of attitudes, knowledge and skills, rather than assessing them individually as done with many other frameworks of assessment.

- Can be used for assessment of a single clinical encounter, day, week or an entire course. Hence, the (O)RIME framework can be used as an assessment method for creating a regular record of in-training assessment (RITA) [34].

- Proven to possess good repeatability and reliability in a variety of human medical clinical settings [18,26,28].

- The ease of relating assessment to Bloom’s taxonomy of learning [35,36], as the five levels of competency represent a developmental framework of progressively higher cognitive skills achieved by the learner; that is, from data gathering to analysis, synthesis, and evaluation [28].

- The framework takes advantage of a clinician’s ability to draw conclusions from observations and data, and uses the same diagnostic approach in the assessment of students, whilst also addressing the emotional difficulty teachers have in “giving” a grade; e.g., “does what I see before me in this patient fit better with xxx” translates into “does what I see before me in this student fit better with reporter, interpreter or manager?” [37].

2.3. Limitations of the (O)RIME Framework

The (O)RIME framework has some limitations:

- The meaning of words and terminology is not always self-evident, e.g., “reporting” is not simply repeating the facts but is about the process of getting the facts [37].

- The (O)RIME framework is not suitable for assessing individual skills. It rather assesses the overall clinical competency of the learner [21], albeit potentially within a single clinical encounter. This is completely understandable as the framework is a synthetic method of assessment, not analytical.

- During a single clinical encounter a learner may demonstrate capacity from several domains of the (O)RIME framework [33]. This may occur in any of the assessments. Analytical methods of assessment may suffer less from this problem.

- The framework is designed for assessment of technical skills and competencies rather than non-technical ones [26,33]. Hence, Holmes et al. (2014) recommended the use of the (P)RIME framework, where P is for professionalism [33]. An alternative assessment method for soft skills is multi-source feedback [34]. However, this suggested limitation may not be entirely true, as some studies have shown the framework to be effective in assessing non-technical skills and competencies of learners [38]. Clearly, this ‘limitation’ requires further investigation.

- The framework is often not recommended as a sole method of assessment of the progression of learners [21], particularly at schools relying on a tier mark-associated grading system. Hence, some authors have recommended it to be used only as part of the toolbox of assessment of the progression of learners [21] coupled with methodologies such as direct observations.

- To fulfil the assessment requirements, the (O)RIME framework requires team involvement (observations by all team members) rather than grading by a single person. Hence, some organizational skills are required to ensure that ‘a round table discussion’ occurs before the level is discussed with the learner by a nominated person [39]. However, this limitation may not always hold, as some studies have shown good assessment characteristics when the learners’ progression was judged by single assessors [40].

- Educators may mistakenly treat the (O)RIME as a framework of discrete phases that students pass through and leave behind. It is not. When the student moves from ‘reporter’ to ‘interpreter’, they do not stop being a reporter. When they move to ‘manager’, they must continue to gather information and interpret it.

3. Examples of Bovine Clinical Encounters

We provide two examples of the use of the (O)RIME method both in assessment of clinical reasoning competencies and provision of effective feedback using two bovine clinical encounters. From the examples, readers should be able to extract the basics of the method and start using it in their day-to-day practice. The intent is that this review will facilitate discussion and/or research in the areas of assessment of clinical reasoning and effective feedback in clinical teaching of learners of veterinary medicine.

3.1. Example Clinical Encounter: Left Displaced Abomasum in a Dairy Cow

As the first example clinical encounter, we used a case of left displaced abomasum in a mature dairy cow. The ORIME model for the clinical encounter is presented in Table 1. Feedback must be effective and stimulate further development of deep learning and progression in clinical competencies of the learner [21].

Table 1. Example clinical encounter: Left displaced abomasum in a mature dairy cow.

3.2. Example Clinical Encounter: Apparently Anestric Dairy Cow

As the second example clinical encounter, we used a case of an apparently anestric mature dairy cow. The ORIME model for the clinical encounter is presented in Table 2.

Table 2. Example clinical encounter: Apparently anestric mature dairy cow.

4. Discussion

The primary aim of preparing this review was to describe a teaching technique used in other disciplines of the One Health initiative that is not currently used in the teaching of veterinary medicine but has been identified as useful based on its success in those disciplines. Assessment of the clinical competency of veterinary learners is a major and often challenging task for many veterinary schools. In fact, many of the assessment frameworks lack reliability. For example, reliable assessment using multi-source feedback (used by a few schools that we are aware of) requires a minimum of seven assessment forms. In reality, seven forms are rarely, if ever, obtained [24], which compromises the credibility of the assessment of the clinical competency of veterinary learners using that tool. Other assessment methods have good reliability [46] but are not easily implemented due to high workload, unwillingness to participate, or lack of feedback information to the learner (e.g., script concordance questions).

It is our view that the (O)RIME framework has the potential to be an attractive assessment method for measuring and reporting the progression of clinical competencies in veterinary learners; however, it is not suitable for assessment of individual skills, which are not the subject of this review (readers are urged to seek suitable literature on the assessment of individual skills). The framework has been tested and proven to be useful in a variety of settings and branches of human medical education [19,24,26,29,30,42,47,48,49,50]. Some studies have assessed its usefulness for assessment in multi-clerk medical educational facilities [29,42]. Interestingly, single-assessor descriptors of progression using the (O)RIME framework have correlated well with other final scoring systems assessing the performance of learners during their clinical rotations [40]. Additionally, ratings made with the framework have been shown to correlate well between untrained experienced and less experienced instructors and residents [40]. This is an important finding, as instructor experience and training are highly influential in the grading of many other assessment frameworks, but it should be interpreted cautiously as it is based on experience with in-patients at a single institution. Indeed, training in assessment using the framework resulted in further improvements in performance [40].

Although results from studies in the field of medical education provide some comfort, for a strong recommendation for use of the (O)RIME framework to be made, research in the field of veterinary medical education is required. The research should test the assessment characteristics of the framework but also its acceptability, educational effect (see below) and feasibility [24,25,51].

As a minimum, the framework needs to be tested for its reliability and validity. Reliability of an assessment method (some authors prefer the term generalizability) describes the extent to which a particular score of the measurement of the clinical competency of the learner would yield the same results on repeated assessment (i.e., how reproducible are the scores obtained by that particular method?) [51,52]. For the framework to be accepted as an attractive assessment method for measuring the clinical competence of veterinary learners, reliability should be at least 80% [24]. However, some studies in human medicine have found the framework performing at lower overall values (e.g., as low as ~75% [42]), in part due to the subspecialty studied and an insufficient number of assessments. Nevertheless, this is still higher than for other proposed assessment tasks, such as the IDEA (interpretive summary, differential diagnosis, explanation of reasoning, and alternatives), which overall had just over 50% reliability [37,53]. Validity of an assessment method describes the degree to which the inferences made about the clinical competence of the learner are correct (i.e., does the method measure what it is supposed to measure?) [51,52].

Implementation of the (O)RIME framework should not be based only on quantitative information regarding test performance. The test should be acceptable, educationally suitable and feasible for the institution (or clinical workplace) carrying out the assessment. Acceptability of an assessment method describes the extent to which stakeholders endorse the use of that particular method for assessing the clinical competency of a learner [51,52]. The framework has been reported as acceptable for institutions, instructors and learners [30]. Educational effect of an assessment method describes its effect on the learner’s motivation to improve their clinical competency [51,52]. Feasibility of an assessment method describes the degree to which the assessment method is affordable and efficient in testing the clinical competency of learners [24,51,52]. Introduction of any new assessment method may be seen as additional work, and willingness may be lacking in both learners and instructors [24]. Fortunately, the implementation of the (O)RIME framework has been judged positively by medical learners in a few fields [25,49] for its ease of understanding and the feedback it provides.
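To make the idea of reproducibility concrete, the sketch below compares the (O)RIME levels assigned by two assessors to the same set of encounters and checks the result against the 80% target mentioned above. It rests on several assumptions: simple percent agreement stands in for whatever reliability statistic a formal study would use (the cited studies may have used generalizability or other coefficients), and the function name `percent_agreement` and the ratings are hypothetical illustration data, not results from any study.

```python
def percent_agreement(first: list[str], second: list[str]) -> float:
    """Share of encounters on which two assessors assigned the same level."""
    if not first or len(first) != len(second):
        raise ValueError("both assessors must rate the same, non-empty set of encounters")
    matches = sum(a == b for a, b in zip(first, second))
    return matches / len(first)


# Target taken from the text: reliability of at least 80%.
TARGET = 0.80

# Hypothetical ratings of ten encounters (R, I, M, E levels); not real data.
assessor_a = ["R", "I", "I", "M", "M", "R", "I", "M", "E", "I"]
assessor_b = ["R", "I", "M", "M", "M", "R", "I", "I", "E", "I"]

agreement = percent_agreement(assessor_a, assessor_b)
meets_target = agreement >= TARGET
```

Percent agreement does not correct for agreement expected by chance, so a veterinary validation study would likely prefer a chance-corrected or generalizability-based statistic; the sketch only shows the shape of the comparison.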

Usefulness of (O)RIME for Veterinary Medical Education

Adjusting the level of clinical encounters to meet learners’ needs. Although this is not always possible, the (O)RIME framework provides an early indication of a learner’s level, so clinical encounters may (where possible) be adjusted to best suit the learner’s needs (e.g., less or more complicated cases). For example, clinical encounters involving multiple animals and multiple problems provide a level of complication that extends the learners’ clinical reasoning beyond the individual patient with a single problem: a simple case of left displaced abomasum (LDA) in a dairy herd may be very challenging for one learner but less challenging for another. Challenging the latter learner by asking them to investigate multiple LDAs occurring in a dairy herd due to dystocia adjusts the level of learning to suit their needs, whilst not negatively affecting the needs of the other learner.

Detection of at-risk learners. When a learner is detected to be lagging behind the expected standard (rather than behind their peers), immediate and clear feedback on the standards expected for that particular level of the curriculum (see Figure 2) should be given [24]. However, the feedback may need to be delayed if it is based on a single clinical encounter, particularly if the encounter was very complicated and/or unfamiliar to the learner. In that case, a note should be made to follow the particular learner and, if a similar performance is seen in another clinical encounter, a feedback session should be scheduled. For example, a final-year veterinary student performing at the level of Reporter (summarizes the information well but is unable to provide differential diagnoses) rather than Manager in multiple clinical encounters of varying degrees of difficulty and complication requires feedback to assist them in achieving the expected standard.

Documentation of deficiency in core expected outcomes. Regular use of the framework may indicate that a larger proportion of learners (e.g., all or a particular cohort) lack core expected outcomes. Provided this is detected early, remedial action may be taken for the existing cohort or, at least, for the subsequent cohorts of learners. For example, a student’s success at one “level” requires the right combination of knowledge, skills and attitude to address the clinical encounter. An inability to ‘shift’ to the next level that is identified in multiple learners may indicate a curriculum deficiency, which can be addressed before the end of the students’ formal education.
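The follow-up rule described above (note a single below-standard encounter, then schedule feedback once it recurs) can be sketched as a small check. The function name `needs_feedback_session`, the single-letter level codes and the default of two encounters are assumptions chosen for illustration; the text itself only says that feedback should follow when a similar performance is seen in another encounter.

```python
# (O)RIME levels in ascending order, using the abbreviations from Figure 2.
ORDER = ["O", "R", "I", "M", "E"]


def needs_feedback_session(expected: str, observed: list[str],
                           min_encounters: int = 2) -> bool:
    """Return True when the learner performed below the expected level
    in at least `min_encounters` encounters (hypothetical threshold)."""
    expected_rank = ORDER.index(expected)
    below = [lvl for lvl in observed if ORDER.index(lvl) < expected_rank]
    return len(below) >= min_encounters
```

For a final-year student expected to work at Manager level, `needs_feedback_session("M", ["R"])` returns `False` (a single encounter, so the learner is only noted for follow-up), whereas `needs_feedback_session("M", ["R", "M", "R"])` returns `True`.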
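The same records can be aggregated across a cohort to look for a possible curriculum deficiency. The sketch below is a minimal illustration under stated assumptions: the function name `share_below_expected`, the learner identifiers and the ratings are hypothetical, and the text does not specify what proportion of learners should trigger remedial action, so no threshold is built in.

```python
# (O)RIME levels in ascending order, using the abbreviations from Figure 2.
ORDER = ["O", "R", "I", "M", "E"]


def share_below_expected(expected: str, latest_levels: dict[str, str]) -> float:
    """Proportion of a cohort whose most recent level is below the expected one."""
    if not latest_levels:
        raise ValueError("cohort is empty")
    expected_rank = ORDER.index(expected)
    below = sum(ORDER.index(lvl) < expected_rank for lvl in latest_levels.values())
    return below / len(latest_levels)


# Hypothetical cohort of four learners expected to work at Manager level.
cohort = {"learner_1": "I", "learner_2": "M", "learner_3": "I", "learner_4": "R"}
proportion = share_below_expected("M", cohort)
```

Here three of the four hypothetical learners are below Manager level, so `proportion` is 0.75; whether that warrants a curriculum review is a judgment for the institution rather than something the sketch decides.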

Improving communication between faculty, institutions, instructors and learners. As regular feedback is a feature of the framework, this assessment method can be used to improve communication channels between the stakeholders [30].

5. Conclusions

Preparing graduates to meet day one veterinary graduate attributes requires effective clinical teaching techniques coupled with effective feedback. Consistent assessment of clinical reasoning is difficult across the variety of settings used to educate veterinary learners. The ORIME framework is a developmental approach that distinguishes between basic and advanced levels of performance. Each step represents a synthesis of skills, knowledge and attitude. The ORIME framework provides assessors with a clear, minimal descriptor of the performance required at each level of training rather than a numerical grading system. We expect this framework will be acceptable for ensuring that graduates meet essential day one competencies and should be suitable for accreditors of veterinary schools and for stakeholders involved in veterinary education and the veterinary profession. Within veterinary medical education, the use of frameworks like ORIME for assessment and feedback is lacking. Research into the effectiveness of ORIME in the medical profession is abundant, and it has been found to be a practical, useful assessment and feedback tool that is highly acceptable to learners and instructors. It is hoped that this review stimulates discussion and research in this important area.

6. Glossary of Terms

Clinical competency—ability to consistently select and carry out relevant clinical tasks pertaining to the particular clinical encounter in order to resolve the health or productivity problems for the client, industry and patient, in an economic, effective, efficient and humane manner, followed by self-reflection on the performance.

Clinical encounter—any physical or virtual contact with a veterinary patient and client (e.g., owner, employee of an enterprise) with a primary responsibility to carry out clinical assessment or activity.

Clinical instructor—in addition to the regular veterinary practitioner’s duties, a clinical instructor should fulfil the roles of assessor, facilitator, mentor, preceptor, role-model, supervisor, and teacher of veterinary learners in a clinical teaching environment. Clinical instructors may include apprentices/interns in the upper years, residents, veterinary educators/teachers, and veterinary practitioners.

Clinical reasoning—process during which a learner collects information, processes it, comes to an understanding of the problem presented during a clinical encounter, and prepares a management plan, followed by evaluation of the outcome and self-reflection. Common synonyms include clinical acumen, clinical critical thinking, clinical decision-making, clinical judgment, clinical problem-solving, and clinical rationale.

Clinical teaching—form of an interpersonal communication between a clinical instructor and a learner that involves a physical or virtual clinical encounter.

Deep learning—aiming for mastery of essential academic content; thinking critically and solving complex problems; working collaboratively and communicating effectively; having an academic mindset; and being empowered through self-directed learning.

Effective feedback—purposeful conversation between the learner and instructor within a context and a culture, with the aim of stimulating self-reflection as a powerful tool for deep learning.

Self-directed learning—learners take charge of their own learning process by identifying learning needs, goals, and strategies and evaluating learning performances and outcomes. Learner-centered approach to learning.

Work-based learning—educational method that immerses the learners in the workplace.

Author Contributions

All authors have contributed to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Carr, A.N.M.; Kirkwood, R.N.; Petrovski, K.R. Effective Veterinary Clinical Teaching in a Variety of Teaching Settings. Vet. Sci. 2022, 9, 17. [Google Scholar] [CrossRef] [PubMed]

- Modi, J.N.; Anshu; Gupta, P.; Singh, T. Teaching and assessing clinical reasoning skills. Indian Pediatrics 2015, 52, 787–794. [Google Scholar] [CrossRef] [PubMed]

- Lateef, F. Clinical reasoning: The core of medical education and practice. Int. J. Intern. Emerg. Med. 2018, 1, 1015. [Google Scholar]

- Linn, A.; Khaw, C.; Kildea, H.; Tonkin, A. Clinical reasoning: A guide to improving teaching and practice. Aust. Fam. Physician 2012, 41, 18–20. [Google Scholar] [PubMed]

- Dreifuerst, K.T. Using debriefing for meaningful learning to foster development of clinical reasoning in simulation. J. Nurs. Educ. 2012, 51, 326–333. [Google Scholar] [CrossRef]

- Carr, A.N.; Kirkwood, R.N.; Petrovski, K.R. Using the five-microskills method in veterinary medicine clinical teaching. Vet. Sci. 2021, 8, 89. [Google Scholar] [CrossRef]

- McKimm, J. Giving effective feedback. Br. J. Hosp. Med. 2009, 70, 158–161. [Google Scholar] [CrossRef]

- Steinert, Y.; Mann, K.V. Faculty Development: Principles and Practices. J. Vet. Med. Educ. 2006, 33, 317–324. [Google Scholar] [CrossRef]

- Houston, T.K.; Ferenchick, G.S.; Clark, J.M.; Bowen, J.L.; Branch, W.T.; Alguire, P.; Esham, R.H.; Clayton, C.P.; Kern, D.E. Faculty development needs. J. Gen. Intern. Med. 2004, 19, 375–379. [Google Scholar] [CrossRef][Green Version]

- Hashizume, C.T.; Hecker, K.G.; Myhre, D.L.; Bailey, J.V.; Lockyer, J.M. Supporting veterinary preceptors in a distributed model of education: A faculty development needs assessment. J. Vet. Med. Educ. 2016, 43, 104–110. [Google Scholar] [CrossRef]

- Lane, I.F.; Strand, E. Clinical veterinary education: Insights from faculty and strategies for professional development in clinical teaching. J. Vet. Med. Educ. 2008, 35, 397–406. [Google Scholar] [CrossRef] [PubMed]

- Wilkinson, S.T.; Couldry, R.; Phillips, H.; Buck, B. Preceptor development: Providing effective feedback. Hosp Pharm 2013, 48, 26–32. [Google Scholar] [CrossRef] [PubMed]

- Miller, G.E. The assessment of clinical skills/competence/performance. Acad. Med. 1990, 65, S63–S67. [Google Scholar] [CrossRef]

- ten Cate, O.; Carraccio, C.; Damodaran, A.; Gofton, W.; Hamstra, S.J.; Hart, D.; Richardson, D.; Ross, S.; Schultz, K.; Warm, E.; et al. Entrustment Decision Making: Extending Miller’s Pyramid. Acad. Med. 2020, 96, 199–204. [Google Scholar] [CrossRef]

- Al-Eraky, M.; Marei, H. A fresh look at Miller’s pyramid: Assessment at the ‘Is’ and ‘Do’ levels. Med. Educ. 2016, 50, 1253–1257. [Google Scholar] [CrossRef] [PubMed]

- Pangaro, L.; Ten Cate, O. Frameworks for learner assessment in medicine: AMEE Guide No. 78. Med. Teach. 2013, 35, e1197–e1210. [Google Scholar] [CrossRef]

- Hemmer, P.A.; Papp, K.K.; Mechaber, A.J.; Durning, S.J. Evaluation, grading, and use of the RIME vocabulary on internal medicine clerkships: Results of a national survey and comparison to other clinical clerkships. Teach. Learn. Med. 2008, 20, 118–126. [Google Scholar] [CrossRef]

- Smith, C.S. A developmental approach to evaluating competence in clinical reasoning. J. Vet. Med. Educ. 2008, 35, 375–381.

- Pangaro, L.N. A shared professional framework for anatomy and clinical clerkships. Clin. Anat. 2006, 19, 419–428.

- Papp, K.K.; Huang, G.C.; Clabo, L.M.L.; Delva, D.; Fischer, M.; Konopasek, L.; Schwartzstein, R.M.; Gusic, M. Milestones of critical thinking: A developmental model for medicine and nursing. Acad. Med. 2014, 89, 715–720.

- Sepdham, D.; Julka, M.; Hofmann, L.; Dobbie, A. Using the RIME model for learner assessment and feedback. Fam. Med. 2007, 39, 161.

- Fazio, S.B.; Torre, D.M.; DeFer, T.M. Grading Practices and Distributions Across Internal Medicine Clerkships. Teach. Learn. Med. 2016, 28, 286–292.

- Spring, L.; Robillard, D.; Gehlbach, L.; Moore Simas, T.A. Impact of pass/fail grading on medical students’ well-being and academic outcomes. Med. Educ. 2011, 45, 867–877.

- Pangaro, L. A new vocabulary and other innovations for improving descriptive in-training evaluations. Acad. Med. 1999, 74, 1203–1207.

- Battistone, M.J.; Pendelton, B.; Milne, C.; Battistone, M.L.; Sande, M.A.; Hemmer, P.A.; Shomaker, T.S. Global descriptive evaluations are more responsive than global numeric ratings in detecting students’ progress during the inpatient portion of an internal medicine clerkship. Acad. Med. 2001, 76, S105–S107.

- Ander, D.S.; Wallenstein, J.; Abramson, J.L.; Click, L.; Shayne, P. Reporter-Interpreter-Manager-Educator (RIME) Descriptive Ratings as an Evaluation Tool in an Emergency Medicine Clerkship. J. Emerg. Med. 2012, 43, 720–727.

- Tham, K.Y. Observer-Reporter-Interpreter-Manager-Educator (ORIME) Framework to Guide Formative Assessment of Medical Students. Ann. Acad. Med. Singap. 2013, 42, 603–607.

- Tolsgaard, M.G.; Arendrup, H.; Lindhardt, B.O.; Hillingso, J.G.; Stoltenberg, M.; Ringsted, C. Construct Validity of the Reporter-Interpreter-Manager-Educator Structure for Assessing Students’ Patient Encounter Skills. Acad. Med. 2012, 87, 799–806.

- Hauer, K.E.; Mazotti, L.; O’Brien, B.; Hemmer, P.A.; Tong, L. Faculty verbal evaluations reveal strategies used to promote medical student performance. Med. Educ. Online 2011, 16, 6354.

- Battistone, M.J.; Milne, C.; Sande, M.A.; Pangaro, L.N.; Hemmer, P.A.; Shomaker, T.S. The feasibility and acceptability of implementing formal evaluation sessions and using descriptive vocabulary to assess student performance on a clinical clerkship. Teach. Learn. Med. 2002, 14, 5–10.

- Hemmer, P.A.; Pangaro, L. The effectiveness of formal evaluation sessions during clinical clerkships in better identifying students with marginal funds of knowledge. Acad. Med. 1997, 72, 641–643.

- Irvine, D. The performance of doctors. II: Maintaining good practice, protecting patients from poor performance. BMJ 1997, 314, 1613.

- Holmes, A.V.; Peltier, C.B.; Hanson, J.L.; Lopreiato, J.O. Writing medical student and resident performance evaluations: Beyond “performed as expected”. Pediatrics 2014, 133, 766–768.

- Cook, R.; Pedley, D.; Thakore, S. A structured competency based training programme for junior trainees in emergency medicine: The “Dundee Model”. Emerg. Med. J. 2006, 23, 18–22.

- Krathwohl, D.R. A revision of Bloom’s taxonomy: An overview. Theory Into Pract. 2002, 41, 212–218.

- Bloom, B.S. Taxonomy of Educational Objectives: The Classification of Educational Goals: Cognitive Domain; David McKay Company: New York, NY, USA, 1956.

- Pangaro, L. Behind the Curtain with Louis Pangaro: How the RIME Framework Was Born. Available online: https://harvardmacy.org/index.php/hmi/behind-the-curtain-with-louis-pangaro-how-the-rime-framework-was-born (accessed on 18 October 2021).

- Hemmer, P.A.; Hawkins, R.; Jackson, J.L.; Pangaro, L.N. Assessing how well three evaluation methods detect deficiencies in medical students’ professionalism in two settings of an internal medicine clerkship. Acad. Med. 2000, 75, 167–173.

- Bloomfield, L.; Magney, A.; Segelov, E. Reasons to try ‘RIME’. Med. Educ. 2007, 41, 1104.

- Griffith, C.H.; Wilson, J.F. The association of student examination performance with faculty and resident ratings using a modified RIME process. J. Gen. Intern. Med. 2008, 23, 1020–1023.

- Bordage, G.; Daniels, V.; Wolpaw, T.M.; Yudkowsky, R. O–RI–M: Reporting to include data interpretation. Acad. Med. 2021, 96, 1079–1080.

- Ryan, M.S.; Lee, B.; Richards, A.; Perera, R.A.; Haley, K.; Rigby, F.B.; Park, Y.S.; Santen, S.A. Evaluating the Reliability and Validity Evidence of the RIME (Reporter-Interpreter-Manager-Educator) Framework for Summative Assessments Across Clerkships. Acad. Med. 2021, 96, 256–262.

- Neher, J.O.; Gordon, K.C.; Meyer, B.; Stevens, N. A five-step “microskills” model of clinical teaching. J. Am. Board Fam. Pract. 1992, 5, 419–424.

- Neher, J.O.; Stevens, N.G. The one-minute preceptor: Shaping the teaching conversation. Fam. Med. 2003, 35, 391–393.

- Swartz, M.K. Revisiting “The One-Minute Preceptor”. J. Pediatric Health Care 2016, 30, 95–96.

- Kelly, W.; Durning, S.; Denton, G. Comparing a script concordance examination to a multiple-choice examination on a core internal medicine clerkship. Teach. Learn. Med. 2012, 24, 187–193.

- Stephens, M.B.; Gimbel, R.W.; Pangaro, L. Commentary: The RIME/EMR scheme: An educational approach to clinical documentation in electronic medical records. Acad. Med. 2011, 86, 11–14.

- Klocko, D.J. Enhancing physician assistant student clinical rotation evaluations with the RIME scoring format: A retrospective 3-year analysis. J. Physician Assist. Educ. 2016, 27, 176–179.

- DeWitt, D.; Carline, J.; Paauw, D.; Pangaro, L. Pilot study of a ‘RIME’-based tool for giving feedback in a multi-specialty longitudinal clerkship. Med. Educ. 2008, 42, 1205–1209.

- Tolsgaard, M.G.; Jepsen, R.; Rasmussen, M.B.; Kayser, L.; Fors, U.; Laursen, L.C.; Svendsen, J.H.; Ringsted, C. The effect of constructing versus solving virtual patient cases on transfer of learning: A randomized trial. Perspect. Med. Educ. 2016, 5, 33–38.

- Van Der Vleuten, C.P.; Schuwirth, L.W. Assessing professional competence: From methods to programmes. Med. Educ. 2005, 39, 309–317.

- Norcini, J.J.; McKinley, D.W. Assessment methods in medical education. Teach. Teach. Educ. 2007, 23, 239–250.

- Baker, E.A.; Ledford, C.H.; Fogg, L.; Way, D.P.; Park, Y.S. The IDEA assessment tool: Assessing the reporting, diagnostic reasoning, and decision-making skills demonstrated in medical students’ hospital admission notes. Teach. Learn. Med. 2015, 27, 163–173.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).