Abstract

When confronted with a public health emergency, significant innovative treatment protocols can sometimes be discovered by medical doctors at the front lines based on repurposed medications. We propose a statistical framework for analyzing the case series of patients treated with such new protocols, that enables a comparison with our prior knowledge of expected outcomes, in the absence of treatment. The goal of the proposed methodology is not to provide a precise measurement of treatment efficacy, but to establish the existence of treatment efficacy, in order to facilitate the binary decision of whether the treatment protocol should be adopted on an emergency basis. The methodology consists of a frequentist component that compares a treatment group against the probability of an adverse outcome in the absence of treatment, and calculates an efficacy threshold that has to be exceeded by this probability, in order to control the corresponding p-value and reject the null hypothesis. The efficacy threshold is further adjusted with a Bayesian technique, in order to also control the false positive rate. A random selection bias threshold is then calculated from the efficacy threshold to control for random selection bias. Exceeding the efficacy threshold establishes the existence of treatment efficacy by the preponderance of evidence, and exceeding the more demanding random selection bias threshold establishes the existence of treatment efficacy by the clear and convincing evidentiary standard. The combined techniques are applied to case series of high-risk COVID-19 outpatients that were treated using the early Zelenko protocol and the more enhanced McCullough protocol.

1. Introduction

In medical research, the efficacy of new drugs or treatment protocols is established by controlled studies in which a treatment group is compared against a control group. A case series is one half of a controlled study consisting only of the treatment group. At the beginning of the COVID-19 pandemic, practicing medical doctors were confronted with having no treatment to offer to their patients that could prevent or minimize hospitalization and/or death. In response, some doctors were compelled to innovate and discover, on their own, treatment protocols using repurposed off-label medications. The most notable examples, amongst several others, include Didier Raoult [1] at the IHU Méditerranée Infection hospital in Marseille, France, Vladimir Zelenko [2] in upstate New York, George Fareed and Brian Tyson [3] in California, Shankara Chetty [4] in South Africa, Jackie Stone [5] in Zimbabwe, and Paul Marik’s group [6,7], which was initially based at the Eastern Virginia Medical School. Their efforts to treat patients generated case series of successfully treated patients that constitute real-world evidence [8].

The goal of this paper is to present a statistical framework for rapidly analyzing systematic case series data of early treatment protocols with binary endpoints (e.g., hospitalization or death), and comparing them against our prior knowledge of the likelihood of adverse outcomes in the absence of treatment. Although the development of the proposed statistical technique was originally motivated by the need to assess available case series [2,9,10,11,12,13] of multidrug treatment protocols [2,14,15,16] for COVID-19, it can also play a very important role in the public health response to future pandemics or epidemics with no established treatment protocols. Furthermore, the potential scope of our methodology is very broad and it can be used to compare any treatment group case series, with binary endpoints, against our prior knowledge of the probability of adverse outcomes based on population-level historical controls. A limitation of the methodology is that it should be used only for treatment protocols that are based on repurposed medications [17] with known acceptable safety. The main advantage of the technique is that it can be very good at rapidly validating and enabling the deployment of treatment protocols, based on repurposed medications, when there is a sufficiently strong signal of efficacy. When confronted with a mass casualty event, it is critically important to be able to rapidly leverage the direct clinical experience of medical doctors, towards formulating an evidence-based standard of care, while also being able to rigorously quantify the quality of the available evidence.

The closest concept to our approach is the idea of using a virtual control group [18], in which the outcomes observed in a treatment group case series are compared against the predicted outcomes for the same patient cohort without treatment, using a trained statistical model, based on data accumulated before the discovery of the treatment in question. The virtual control group method aims to not only establish the existence of efficacy, but to also measure the corresponding treatment efficacy. Our idea is to abandon any attempt to obtain an unbiased measure of the treatment efficacy, and to focus on establishing, with sufficient confidence, the existence of some positive treatment efficacy. We do this by comparing the treatment group case series with a probability lower-bound for the expected negative outcomes without treatment. Such lower bounds can be easily extracted from available data, and can be facilitated by applying risk-stratification on the treatment group case series, when necessary. Thus, our aim is to establish, with sufficient confidence, a positive lower bound for treatment efficacy, quickly and without expending substantial resources, using real-world evidence that has been accumulated from the efforts of practicing physicians. In turn, this can be sufficient for a positive recommendation to adopt the corresponding treatment protocol.

Because case series are susceptible to selection bias, we define two cross-over thresholds for making a positive recommendation: an efficacy threshold, corresponding to a preponderance of evidence standard, where we assume there is no selection bias, and a random selection bias threshold, corresponding to the clear and convincing evidentiary standard, which controls for random selection bias in the case series. Following the recommendation of the American Statistical Association statement on statistical significance and p-values [19], the proposed approach combines use of the p-value, which enables one to reject the null hypothesis, with a Bayesian factor analysis framework [20,21,22,23,24] for controlling the false positive rate [25] in the calculation of the efficacy threshold. Empirically, we have found that the frequentist p-value framework has done a pretty good job on its own, at least for the analysis of the case series data considered in this paper. However, complementing it with Bayesian factor analysis is a reasonable precaution, because it can help raise the red flag when dealing with small sample sizes and/or weak signals.

We apply the proposed framework to the processing of available case series data [2,9,10,11,12,13] that support proposed early outpatient treatment protocols for COVID-19 patients, such as the original Zelenko triple-drug protocol [2] and the more advanced McCullough protocol [14,15,16]. The original Zelenko protocol was first announced on 23 March 2020 [26]. The proposed approach was to risk stratify patients into two groups (low-risk vs. high-risk), provide supportive care to the low-risk group, and treat the high-risk group with a triple-drug protocol (hydroxychloroquine, azithromycin, zinc sulfate). Results were reported in a 28 April 2020 letter [9] and a 14 June 2020 letter [10], and the lab-confirmed subset of the April data was published in a formal case-control study [2]. Zelenko’s letters have been attached to our supplementary material document [27].

The rationale for the triple-drug therapy was based on the following mechanisms of action: hydroxychloroquine prevents the virus from binding with the cells, and also acts as a zinc ionophore that brings the zinc ions inside the cells, which in turn inhibit the RDRP (RNA dependent RNA polymerase) enzyme used by the virus to replicate [28,29]. Azithromycin’s role is to guard against a secondary infection, but we have since learned that it also has its own anti-viral properties [30,31,32], and a signal of the efficacy of adding azithromycin on top of hydroxychloroquine can be clearly discerned in a study of nursing home patients in Andorra [33].

It is interesting that chloroquine was shown in vitro to have antiviral properties against the previous SARS-CoV-1 virus [34], and that there is an anecdotal report from 1918 [35] about the successful use of quinine dihydrochloride injections as an early treatment of the Spanish flu. In hindsight, it is now known that influenza viruses also use the RDRP protein to replicate [36], which can be inhibited with intracellular zinc ions [28,29]. Consequently, there is a mechanism of action that can explain why we should anticipate the combination of zinc with a zinc ionophore (i.e., hydroxychloroquine, or quercetin [37], or EGCG (Epigallocatechin Gallate) [38]) to inhibit the replication of the influenza viruses. Other RNA viruses, including the respiratory syncytial virus (hereafter RSV) [39] and the highly pathogenic Marburg and Ebola viruses [40,41], are also using the RDRP protein to replicate, raising the question of whether the zinc/zinc ionophore concept could also play a useful role against them.

Zelenko’s protocol was soon extended into a sequenced multidrug approach, known as the McCullough protocol [14,15,16], which is based on the insight that COVID-19 is a tri-phasic illness that manifests in three phases: (1) an initial viral replication phase, in which the virus infects cells and uses them to replicate and make new viral particles, during which patients present with flu-like symptoms; (2) an inflammatory hyper-dysregulated immune-modulatory florid pneumonia, that presents with a cytokine storm, coughing, and shortness of breath, triggered by the toxicity of the spike protein [42], when it is released, as viral particles are destroyed by the immune system, triggering release of interleukin-6 and a wave of cytokines; (3) a thromboembolic phase, during which microscopic blood clots develop in the lungs and the vascular system, causing oxygen desaturation, and very damaging complications that can include embolic stroke, deep vein thrombosis, pulmonary embolism, myocardial injury, heart attacks, and damage to other organs.

The rationale of the original Zelenko protocol was that early intervention to stop the initial viral replication phase could prevent the disease from progressing to the second and third phase, and, in doing so, prevent hospitalizations or death. The McCullough protocol [14,15,16] extends the Zelenko protocol by using multiple drugs in combination sequentially to mitigate each of the three phases of the illness, depending on how they present for each individual patient. McCullough’s therapeutic recommendations for handling the cytokine injury phase and the thrombosis phase of the COVID-19 illness are, for the most part, standard on-label treatments for treating hyper-inflammation and preventing blood clots. The most noteworthy innovations to the antiviral part of the protocol are the addition of ivermectin [43,44,45,46,47,48], which has 20 known mechanisms of action against COVID-19 [49], to be used as an alternative to or in conjunction with hydroxychloroquine, the addition of a nutraceutical bundle [50,51,52] combined with a zinc ionophore (quercetin [37] or EGCG [38]) for both low-risk and high-risk patients, and lowering the age threshold for high-risk patients to 50 years. The MATH+ protocol [6,7], developed for hospitalized patients by Marik’s group, follows the same principles of a sequenced multidrug treatment. A similar treatment protocol, based on similar insights, was independently discovered and published in May 2020 by Chetty [4] in South Africa.

McCullough’s protocol [14,15,16] was adopted by some treatment centers throughout the United States and overseas, but has not been endorsed by the United States public health agencies, ostensibly due to lack of support of the entire sequenced treatment algorithm by an RCT (Randomized Controlled Trial). In spite of the urgent need for safe and effective early outpatient treatment protocols for COVID-19, there has been no attempt to conduct any such trials of any comprehensive multidrug outpatient treatment protocols throughout the pandemic. Instead, the prevailing approach has been to try to build treatment protocols, one drug at a time, after validating each drug with an RCT. Because COVID-19 is a multifaceted tri-phasic illness, there is no a priori reason to expect that a single drug alone will work for all three phases of the disease. Consequently, the first priority should be to validate the efficacy of treatment protocols that use multiple drugs in combination, since this is what is actually going to be used in practice to treat patients. To that end we have analyzed the case series by Zelenko [2,9,10], Procter [11,12], and Raoult [13], where such multidrug outpatient treatment protocols have been used by practicing physicians.

The broader context in which the proposed statistical methodology is situated is as follows. Shortly before COVID-19 was declared a pandemic by the World Health Organization, an article [53] was published on 23 February 2020 in the New England Journal of Medicine arguing that “the replacement of randomized trials with non-randomized observational analyses is a false solution to the serious problem of ensuring that patients receive treatments that are both safe and effective.” The opposing viewpoint was published earlier in 2017 by Frieden [54], highlighting the limitations of RCTs and the need to leverage and overcome the limitations of all available sources of evidence, including real-world evidence [8], in order to make lifesaving public health decisions. In particular, Frieden [54] stressed that the very high cost of RCTs and the long timelines needed for planning, recruiting patients, conducting the study, and publishing it, are limitations that “affect the use of randomized controlled trials for urgent health issues, such as infectious disease outbreaks for which public health decisions must be made quickly on the basis of limited and imperfect data.”

Deaton and Cartwright [55] presented the conceptual framework that underlies RCTs and highlighted several limitations. Among them, they have stressed that randomization requires very large samples on both arms of the trial, otherwise, an RCT should not be presumed to be methodologically superior to a corresponding observational study. For example, the randomized controlled trial study of hydroxychloroquine by Dubee et al. [56], was administratively stopped after recruiting 250 patients, with 124 in the treatment group and 123 in the control group. Although a two-fold mortality rate reduction was observed by day 28, the study failed to reach statistical significance, due to the small sample size. Even if statistical significance had been achieved via a stronger mortality rate reduction signal, the small sample size would have still prevented sufficient randomization. Consequently, although the study has gone through the motions of an RCT, it is not methodologically superior to a retrospective observational study. There are several other RCT studies of hydroxychloroquine with similar shortcomings [57].

Furthermore, although a properly conducted RCT has internal validity, in that the inferences are applicable to the specific group of patients that participated in the trial, the external validity of the RCT outcomes needs to be justified conceptually on the basis of prior knowledge, which is either observational, or based on a deeper understanding of the underlying mechanisms of action. Because COVID-19 mortality risk in the absence of early treatment can span three orders of magnitude (from 0.01% to more than 10%) [58,59,60,61,62,63,64], depending on age and comorbidities, trials using low-risk patient cohorts are not informative about expected outcomes on the high-risk patient cohorts and vice versa. Likewise, the timing of treatment and the medication dosage/duration of treatment will confound the results of an RCT. In general, better results are expected when treatment is initiated earlier rather than later, and negative results can be caused by inappropriate medication dosage (i.e., too much or too little). These are all relevant considerations for establishing the external validity of an RCT.

As was noted by Risch [65], when interpreting evidence from RCTs, and more broadly from any study, we should bear in mind that results of efficacy or toxicity of a treatment regimen on hospitalized patients cannot be extrapolated to outpatients and vice versa. Likewise, Risch [65] noted that evidence of efficacy or lack of efficacy of a single drug do not necessarily extrapolate to using several drugs in combination. This latter point is further amplified when there is an algorithmic overlay governing which drugs should be used and when, based on the individual patient’s medical history and ongoing response to treatment. Consequently, RCTs that compare a single drug monotherapy against supportive care are not always informative about whether the drug should be included in a multidrug protocol.

In addition to all that, we are also confronted with an ethical concern. If the available observational evidence is sufficiently convincing, then there is a crossover point where it is no longer ethical to justify randomly refusing treatment to a large number of patients in order to have a sufficiently large control group. The corresponding mathematical challenge is being able to quantify the quality of our observational evidence in order to determine whether or not we are already situated beyond this ethical crossover point.

Just as the quality of evidence provided by randomized controlled trials is fluid, with respect to successful randomization and external validity, the same is true about the quality of real-world evidence [8] that will inevitably become available from the initial response to an emerging new pandemic. We envision that a successful pandemic response, in the area of early outpatient treatment, will proceed as follows: the first element of pandemic response is to assess and monitor the situation by prospectively collecting data, needed to construct predictive models of the probability of hospitalization and death, in the absence of treatments that have yet to be discovered, as a function of the patient’s medical profile/history. These models do not necessarily need to be sophisticated at the early stages of pandemic response. It could be sufficient to be able to predict good lower bounds for the hospitalization or mortality probabilities, as opposed to more precise estimates. These early data can be used to identify the predictive factors for hospitalization or death and risk stratify the patients into low-risk and high-risk categories. They can also be used as a historical control group that establishes our prior knowledge of expected outcomes, in the absence of treatment, that has yet to be discovered.

In parallel with gathering and analyzing data, which is the primary duty and responsibility of our public health and academic institutions, medical doctors have an ethical responsibility to use the emerging scientific understanding of the new disease and its mechanisms of action to try to save the lives of as many patients as possible. Under article 37 of the 2013 Helsinki declaration [66], it is ethically appropriate for physicians to “use an unproven intervention, if in the physician’s judgment it offers hope of saving life, re-establishing health or alleviating suffering”, provided there is informed consent from the patient, and “where proven interventions do not exist, or other known interventions have been ineffective.”

When this effort leads to the discovery of a treatment protocol, with an empirical signal of benefit and acceptable safety, and using the treatment protocol results in a case series of treated patients, then the confluence of the following conditions makes it possible to statistically establish the existence of treatment efficacy: First, the proposed treatment protocols should use repurposed drugs [17] with known acceptable safety. When testing new drugs, we have no prior knowledge of the risks involved and a rigorous controlled study is required to determine the balance of risks and benefits. Second, we need data that give us prior knowledge of the probability risk of the relevant binary endpoints (i.e., hospitalization and/or death) in the absence of treatment, as a function of the relevant stratification parameters. Third, and most importantly, the case series corresponding to treated patients should exhibit a very strong signal of benefit, relative to our prior experience with untreated patients, prior to the discovery of the respective treatment protocol.

Under these conditions, the idea that is proposed in this paper works as follows. Our input is the number N of high-risk patients treated, the number a of patients with an adverse outcome (i.e., hospitalization or death), and the selection criteria for extracting the high-risk cohort under consideration, from which we can deduce, based on prior knowledge, that the unknown probability x of an adverse outcome without treatment is bounded by $p_1 < x < p_2$. We also choose the desired p-value upper bound $p_0$, which is typically $p_0 = 0.05$ (95% confidence), although we shall also consider other values of $p_0$. The output is an efficacy threshold $x_0$ that gives us the following rigorous mathematical statement: if $x > x_0$, then we have more than $1 - p_0$ confidence that the treatment is effective relative to the standard of care. This statement has to be paired with the subjective assessment of our prior knowledge, based on which we need to show that $p_1 > x_0$. The upper bound $p_2$ is used by the Bayesian factor technique as part of finalizing the calculation of the efficacy threshold $x_0$. Implicit in this argument is the assumption of no selection bias, allowing us to apply the probability x at the population level to our particular case series. From the sample size N and the finalized efficacy threshold $x_0$, we also calculate a random selection bias threshold $x_1$, higher than $x_0$, that quantifies how large the gap between $p_1$ and $x_0$ needs to be, in order to mitigate, with $1 - p_0$ confidence, any possible random selection bias in the case-series sample $(N, a)$.

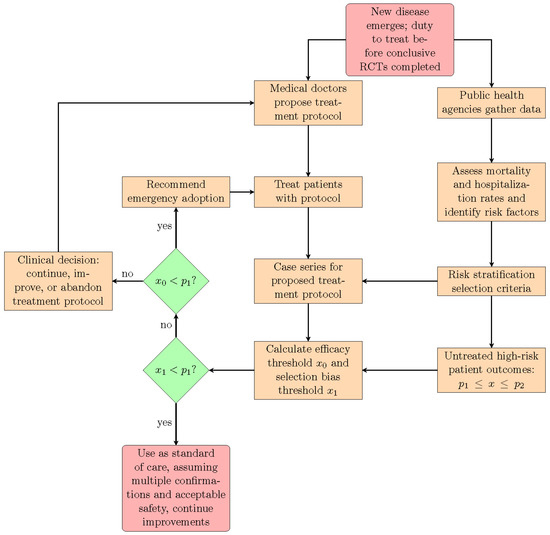

As a result, we can assert the existence of treatment efficacy using two distinct standards of evidence. If we can establish $p_1 > x_0$, i.e., that the prior lower bound $p_1$ for the probability of an adverse outcome without treatment exceeds the efficacy threshold $x_0$, then the preponderance of evidence is in favor of the existence of treatment efficacy, and this can justify its provisional adoption on an emergency basis, in order to gather more evidence. If we can establish that $p_1 > x_1$, where $x_1$ is the random selection bias threshold, then the evidence becomes clear and convincing, and if these results are replicated by multiple treatment centers, then it becomes ethically questionable to deny patients access to the treatment protocol, for the purpose of conducting an RCT, or simply due to therapeutic nihilism by public health authorities. In Figure 1, we show how the proposed statistical methodology can be integrated into an epidemic or pandemic response that leverages and deploys the direct experience of frontline medical doctors, resulting from their efforts to treat their patients. We stress again that this approach is appropriate only for treatment protocols using repurposed medications with known acceptable safety. When new medications, as opposed to repurposed drugs, are introduced into a pre-existing treatment protocol, then they should be rigorously tested both for safety and efficacy with prospective RCTs.
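The two evidentiary standards described above amount to a simple decision rule, which can be sketched as follows. This is only an illustration: the function name `evidence_standard` and the example numbers are hypothetical, and the arguments are assumed to be the prior lower bound for the probability of an adverse outcome without treatment (`p1`), the efficacy threshold (`x0`), and the random selection bias threshold (`x1`).

```python
def evidence_standard(p1: float, x0: float, x1: float) -> str:
    """Classify the strength of evidence for treatment efficacy.

    p1: prior lower bound for the adverse-outcome probability without treatment
    x0: efficacy threshold (assumes no selection bias)
    x1: random selection bias threshold (expected to be at least x0)
    """
    if x1 < x0:
        raise ValueError("the random selection bias threshold should not be below x0")
    if p1 > x1:
        return "clear and convincing"
    if p1 > x0:
        return "preponderance of evidence"
    return "not established"


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to exercise the three branches.
    for p1 in (0.02, 0.05, 0.08):
        print(p1, evidence_standard(p1, x0=0.038, x1=0.06))
```

The thresholds themselves have to come from the calculations of Section 2 and Section 3; the sketch only encodes the final comparison.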

Figure 1.

This flowchart shows the suggested interactions between medical doctors, public health agencies, and the proposed statistical methodology that are needed, in order to implement an emergency epidemic or pandemic response that leverages the direct experience of frontline medical doctors treating their patients.

The paper is organized as follows. In Section 2 we present the technique for calculating the efficacy threshold and the random selection bias threshold. We also explain the relationship of the proposed technique with the exact Fisher test and with the binomial proportion confidence interval problem. In Section 3, we present a Bayesian technique for adjusting the efficacy thresholds in order to also control the corresponding false positive rate. In Section 4, we illustrate an application of both techniques to the Zelenko case series [2,9,10] as well as the Procter [11,12] and Raoult [13] case series. Discussion and conclusions are given in Section 5. With the exception of Section 3, which is mainly relevant to a more careful analysis by biostatisticians, we have strived to make Section 2 and Section 4 of the paper relevant and accessible to both clinicians and biostatisticians by minimizing the mathematical details. Material that is relevant only to biostatisticians is relegated to the appendices. The computer code and the corresponding calculations are included in the supplementary data document [27].

2. Methods—Part I: Frequentist Methods for Case Series Analysis

In this section, we present the technique for comparing a treatment group case series of high-risk patients against the expected probability x of an adverse outcome without treatment, based on prior knowledge. Since our prior knowledge bounds the probability x inside an interval $p_1 < x < p_2$ but the precise value of x is unknown, we calculate the minimum value $x_0$ (efficacy threshold) that this probability has to exceed in order to be able to reject the null hypothesis, that the treatment has no efficacy. The proposed technique is equivalent to an exact Fisher test where we take the limit of an infinitely large control group with probability of an adverse outcome set equal to x. We also explain the relationship between the proposed approach and the binomial proportion confidence interval problem, and provide evidence that the corresponding coverage probability is conservative. The assumption of conservative coverage is used, in turn, to derive a random selection bias threshold that x should exceed in order to reject the possibility of a false positive result due to random selection bias.

2.1. Comparing Treatment Group against Expected Adverse Event Rate without Treatment

Suppose that we have a treatment group of high-risk patients in which N patients have received treatment and a patients have had an adverse outcome. Let us also assume that all N patients in the case series satisfy precise selection criteria, used to classify them as high-risk patients, from which we can infer, from our prior knowledge, that in the absence of treatment, the probability x of an adverse outcome for a similar population is bounded in the interval $p_1 < x < p_2$. To establish the existence of treatment efficacy, we assume the null hypothesis, that the treatment has no effect and that consequently, the probability of an adverse outcome in the treatment group is also equal to x. Under this null hypothesis the probability of observing a patients with an adverse outcome out of a total of N patients is given by

$$\mathrm{pr}(N, a \mid x) = \binom{N}{a}\, x^{a}\, (1-x)^{N-a}, \tag{1}$$

which corresponds to a binomial distribution. The first factor gives the number of combinations for choosing the a patients that have an adverse outcome out of all N patients. The second factor is the probability that the chosen a patients have an adverse outcome, under the assumption of the null hypothesis. The third factor is likewise the probability that the remaining $N - a$ patients will not have an adverse outcome. Consequently, the product of the three factors is the probability of seeing the event $(N, a)$ under the null hypothesis.

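The corresponding p-value is calculated by adding to the probability of the event $(N, a)$ the probability of all other events with smaller or equal probability, and it reads

$$p(N, a, x) = \sum_{n=0}^{N} \mathrm{pr}(N, n \mid x)\, H\big(\mathrm{pr}(N, a \mid x) - \mathrm{pr}(N, n \mid x)\big), \tag{2}$$

where H is the modified Heaviside function given by

$$H(t) = \begin{cases} 1, & t \geq 0, \\ 0, & t < 0. \end{cases} \tag{3}$$

The Heaviside function factor in Equation (2) selects the events that are no more probable than the observed event for inclusion in the probability sum, as per the formal definition of the p-value.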
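The p-value described above can be computed directly by summing binomial probabilities. The following is a minimal sketch using only the Python standard library, not the code from the supplementary material; the function names `binom_pmf` and `p_value` and the tie tolerance `rel_tol` are assumptions introduced for illustration. The tolerance guards against floating-point round-off excluding outcomes whose probability is mathematically equal to that of the observed outcome.

```python
from math import comb


def binom_pmf(N: int, n: int, x: float) -> float:
    """Probability of n adverse outcomes among N patients, each with probability x."""
    return comb(N, n) * x**n * (1.0 - x) ** (N - n)


def p_value(N: int, a: int, x: float, rel_tol: float = 1e-7) -> float:
    """Sum the probabilities of all outcomes n = 0..N that are no more
    probable than the observed outcome a (the observed outcome included)."""
    observed = binom_pmf(N, a, x)
    cutoff = observed * (1.0 + rel_tol)  # rel_tol: assumed safeguard for ties
    return sum(p for p in (binom_pmf(N, n, x) for n in range(N + 1)) if p <= cutoff)


if __name__ == "__main__":
    # Example from the Figure 2 caption: 141 treated patients, 1 death,
    # compared against a 3.8% mortality rate without treatment.
    print(round(p_value(141, 1, 0.038), 3))  # 0.047
```

Because both tails contribute, the sum includes outcomes with many more adverse events than observed, as long as they are no more probable than the observed outcome.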

In order to reject the null hypothesis, we need to construct a convincing argument that establishes that $p(N, a, x) < p_0$, with $p_0 = 0.05$ in order to achieve a 95% confidence. Such an argument, in effect, is a hypothesis test that compares the treatment group outcome against a fixed probability x for an adverse outcome in the absence of treatment. Our proposal for doing so is conceptually very simple. First we calculate an efficacy threshold $x_0$ such that

$$\forall x \in (x_0, 1): \quad p(N, a, x) < p_0. \tag{4}$$

In doing so, we are seeking the smallest possible value of $x_0$ that satisfies Equation (4). If our prior belief is that x satisfies $p_1 < x < p_2$, then showing that the lower bound $p_1$ of the probability x of an adverse outcome without treatment exceeds the efficacy threshold $x_0$ gives us a statistically significant signal of benefit in favor of the proposed treatment protocol. This is, in turn, sufficient to recommend to other physicians to consider using the treatment protocol, on an emergency basis, in order to save as many patients as possible, as soon as possible.
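One simple way to approximate the efficacy threshold numerically is to scan a grid of candidate probabilities downward from 1 and stop at the first grid point where the p-value is no longer below the chosen bound. The sketch below is an assumption-laden illustration rather than the authors' implementation: the function names, the grid spacing `step`, and the tie tolerance `rel_tol` are all hypothetical choices. A scan is used instead of bisection because the two-sided p-value is not guaranteed to be monotonic in x, and a grid can still miss violations narrower than its spacing.

```python
from math import comb


def p_value(N, a, x, coeffs, rel_tol=1e-7):
    """Two-sided binomial p-value of the case series (N, a) for a fixed x.

    coeffs holds the binomial coefficients C(N, 0..N), precomputed by the caller.
    """
    probs = [c * x**n * (1.0 - x) ** (N - n) for n, c in enumerate(coeffs)]
    cutoff = probs[a] * (1.0 + rel_tol)  # treat floating-point ties as equal
    return sum(p for p in probs if p <= cutoff)


def efficacy_threshold(N, a, p0=0.05, step=1e-4):
    """Approximate the smallest threshold such that the p-value stays below p0
    at every grid point above it. The result is accurate to about one step."""
    coeffs = [comb(N, n) for n in range(N + 1)]  # computed once, reused for every x
    grid_size = round(1.0 / step)
    # Scan downward from just below 1; the first failing grid point bounds the threshold.
    for i in range(grid_size - 1, 0, -1):
        if p_value(N, a, i * step, coeffs) >= p0:
            return (i + 1) * step
    return step  # no grid point failed: the threshold lies below the grid resolution


if __name__ == "__main__":
    # Case series from the Figure 2 caption: 141 treated patients, 1 death.
    print(efficacy_threshold(141, 1, p0=0.05))
```

A finer `step` tightens the approximation at the cost of more p-value evaluations; the Bayesian adjustment of the threshold described in Section 3 is not included here.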

We stress again that implicit in this reasoning is the assumption that all observed adverse events in the treatment group case series have been caused by the disease and not by the treatment. For this reason, this methodology has to be limited only to the evaluation of treatment protocols using repurposed medications [17] with previously known acceptable safety. Furthermore, in order to have a prior belief constraining the probability x of an adverse outcome for high-risk patients, in the absence of treatment, it is necessary for public health agencies and academic institutions to prospectively collect data on the predictive factors for hospitalizations or death, as soon as possible, at the beginning of an emerging new disease. These data can then be used both to define the selection criteria for identifying patients as high-risk and to constrain the corresponding probability x within the interval $p_1 < x < p_2$. Finally, the above argument is predicated on the assumption of no selection bias in the case series $(N, a)$, in order for the inequality $p_1 < x < p_2$ to be applicable to the case series. We extend the argument to account for selection bias in Section 2.3.

2.2. Comments on the Proposed Hypothesis Testing Technique

We now make the following comments about the above hypothesis testing technique. First, we note that the p-value $p(N, a, x)$, corresponding to a comparison of a case series of a treatment group against the probability x of an adverse outcome without treatment, as given by Equation (2), can be also obtained by running an exact Fisher test with an artificial control group of M patients with b adverse outcomes, with $b/M = x$, in the limit where the size of this artificial control group goes to infinity. In Appendix A, we give a mathematical proof of this claim and also explain the mathematically precise formulation of the statement. This convergence property is, in fact, a consequence of a known relationship [67,68,69] between the hypergeometric distribution, used in the calculation of the exact Fisher test p-value, and the binomial distribution used in the calculation of $p(N, a, x)$.

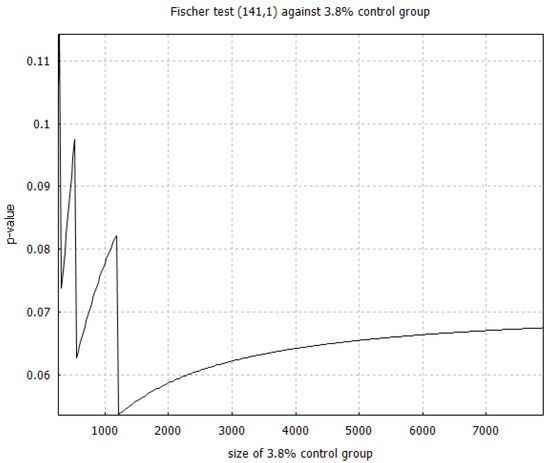

Paradoxically, as shown from the example in Figure 2, the convergence of the p-value is not monotonic with respect to the control group size. Intuitively, increasing the size of the control group should increase confidence in rejecting the null hypothesis, which should result in a monotonically decreasing p-value. Instead, we see that the p-value increases, as the control group size is increased, with intermittent downward jumps driving the convergence to the binomial p-value $p(N, a, x)$. We also see that the convergence is slower than we might expect. Nevertheless, the result of Appendix A assures us that, in the limit of an infinite control group, the p-value eventually does converge to $p(N, a, x)$.

Figure 2.

We plot the p-value calculated from an exact Fisher test that compares the treatment group from the DSZ study [2] (141 high-risk patients treated with 1 death) against an artificial control group with 3.8% mortality rate. Note that the exact p-value in the infinite control group limit should be 0.047, which is approached to three decimals when we get to control group size between 160,000 and 180,000.
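The limiting behavior shown in Figure 2 can be checked numerically. The following Python sketch assumes that Equation (2) is the two-sided binomial p-value that sums the probabilities of all outcomes no more likely than the observed one (the construction underlying the Sterne interval), and that the exact Fisher test uses the analogous sum over hypergeometric probabilities. The function names, the tie tolerance, and the rounding of b to the nearest integer are illustrative assumptions.

```python
import math

TIE_TOL = 1 + 1e-7  # assumption: relative tolerance for treating probabilities as tied


def log_comb(n, k):
    """Natural logarithm of the binomial coefficient C(n, k)."""
    return math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)


def binomial_p_value(N, a, x):
    """Two-sided p-value for a adverse outcomes among N treated patients when
    the adverse outcome probability without treatment is x (0 < x < 1)."""
    probs = [math.exp(log_comb(N, k) + k * math.log(x) + (N - k) * math.log1p(-x))
             for k in range(N + 1)]
    cutoff = probs[a] * TIE_TOL
    return min(1.0, sum(q for q in probs if q <= cutoff))


def fisher_p_value(N, a, M, b):
    """Two-sided exact Fisher p-value comparing a/N (treated) against b/M
    (artificial control group), using the same summation rule."""
    K = a + b                              # total adverse outcomes in both groups
    lo, hi = max(0, K - M), min(N, K)      # feasible counts in the treated group
    log_den = log_comb(N + M, K)
    probs = {k: math.exp(log_comb(N, k) + log_comb(M, K - k) - log_den)
             for k in range(lo, hi + 1)}
    cutoff = probs[a] * TIE_TOL
    return min(1.0, sum(q for q in probs.values() if q <= cutoff))


N, a, x = 141, 1, 0.038                    # DSZ case series and control rate of Figure 2
print("binomial limit:", binomial_p_value(N, a, x))
for M in (1_000, 10_000, 100_000, 200_000):
    b = round(x * M)                       # assumption: nearest integer to x * M
    print(M, fisher_p_value(N, a, M, b))
```

Printing the Fisher p-value over a finer grid of control group sizes is one way to reproduce the sawtooth pattern described above.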

Second, the proposed hypothesis testing technique is also mathematically related to the well-researched binomial proportion confidence interval problem [70]. Given the case series of a treatment group of N patients, with a patients having an adverse outcome, the challenge of the binomial proportion confidence interval problem is to identify a probability interval such that we can assert, with a desired level of confidence, that the probability of an adverse event with treatment is inside that interval.

If the null hypothesis is satisfied, then the probability of an adverse outcome with treatment is equal to the probability of an adverse event without treatment, and it follows that the intervals and have to intersect. The contrapositive of this deduction is that if the intervals and do not intersect, then the null hypothesis is false. This argument shows that the upper endpoint is the efficacy threshold that has to be exceeded by all probabilities in the interval in order to reject the null hypothesis and claim a signal of benefit. More specifically, the method proposed in the preceding section for calculating the efficacy threshold is equivalent to calculating the upper endpoint of the Sterne interval [71] for the corresponding binomial proportion confidence interval problem.

It is worth noting that although several alternative techniques have been proposed for solving the binomial proportion confidence interval problem, none of them has coverage consistent with the desired statistical confidence and most of them do not have conservative coverage [72]. This means that, given a case series for a treatment group, the obtained 95% confidence interval for the probability of an adverse event, with treatment, could be wider or narrower than it should be, depending on the sample size N and the unknown true value of that probability. Furthermore, it has already been proven that no solution to the binomial proportion confidence interval problem exists with perfect coverage [73]. For our purposes, a solution technique with conservative coverage that always overestimates the efficacy threshold is acceptable, and to be preferred over techniques that will sometimes overestimate and sometimes underestimate the efficacy threshold.

The coverage of a specific solution technique is quantified via the coverage probability , which is defined as the conditional probability of observing a case series , given a fixed sample size N, for which our solution technique will yield a confidence interval that includes the true probability of an adverse event, under the condition that this true probability is equal to x. Here, is the desired level of confidence. For very large sample sizes, is calculated using computer simulations, however for smaller samples, it can be calculated analytically [74] from the equation,

where is an indicator function, such that if and only if x is in the confidence interval obtained by the proposed solution technique for a given case series corresponding to confidence. Otherwise we set . Conservative coverage requires that our solution technique satisfy for all values of the probability x.
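For small samples, the coverage probability can be evaluated by direct summation over all possible case series outcomes. The sketch below assumes the standard form of this sum, in which the binomial probability of each outcome a is weighted by an indicator that equals 1 when x is not rejected for that outcome. As a stand-in for the Sterne interval [71], the indicator uses the acceptance set of the two-sided binomial test (outcomes whose p-value exceeds one minus the confidence level). The Sterne interval contains this set, so its coverage can only be equal or larger. Function names and the tie tolerance are illustrative assumptions.

```python
import math


def binomial_probs(N, x):
    """Binomial probabilities of 0..N adverse outcomes for probability x (0 < x < 1)."""
    lx, l1x = math.log(x), math.log1p(-x)
    return [math.exp(math.lgamma(N + 1) - math.lgamma(k + 1) - math.lgamma(N - k + 1)
                     + k * lx + (N - k) * l1x)
            for k in range(N + 1)]


def p_value_from_probs(probs, a):
    """Sum of the probabilities of all outcomes no more likely than outcome a."""
    cutoff = probs[a] * (1 + 1e-7)   # assumption: small tolerance for ties
    return min(1.0, sum(q for q in probs if q <= cutoff))


def coverage_probability(N, x, confidence=0.95):
    """Probability, under true value x, of observing an outcome a whose
    confidence set contains x."""
    alpha = 1.0 - confidence
    probs = binomial_probs(N, x)
    return sum(probs[a] for a in range(N + 1)
               if p_value_from_probs(probs, a) > alpha)


# Example: coverage on a coarse grid for a small sample
for x in (0.05, 0.10, 0.25, 0.50):
    print(x, coverage_probability(20, x))
```

Conservative coverage corresponds to every printed value being at least as large as the requested confidence level.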

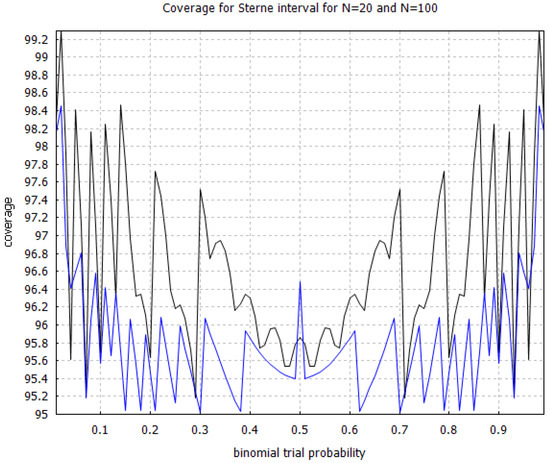

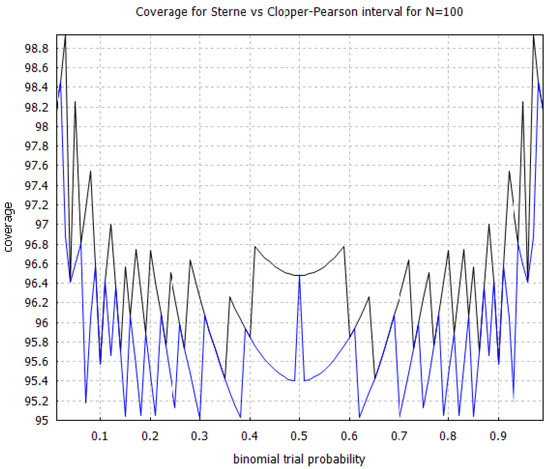

The Clopper–Pearson interval [75] is a very well-known solution technique to the binomial proportion confidence interval problem that is known to have conservative coverage. However, an efficacy threshold, defined as the upper limit of the Clopper–Pearson interval, is not equal to what we would have obtained from an exact Fisher test in the limit of an infinite control group, unlike with the Sterne interval [71]. In Figure 3, we show the coverage probability for the Sterne interval for sample sizes and and note that it also has conservative coverage, which is very desirable in the context of hypothesis testing. In Figure 4, we compare the coverage probability of the Clopper–Pearson interval against the coverage probability of the Sterne interval and note that although they are both conservative, the Sterne interval has less conservative coverage probability than the Clopper–Pearson interval, over the same sample size.

Figure 3.

Coverage probability for the Sterne interval [71] with sample sizes and . The black curve corresponds to and the blue curve, which is situated below the black curve, corresponds to . The coverage probabilities were calculated using increments on the horizontal axis.

Figure 4.

Comparison of the coverage probability for the Clopper–Pearson interval [75] versus the Sterne interval [71] with sample size . The black curve shows the coverage probability for the Clopper–Pearson interval, and the blue curve, which is situated below the black curve, shows the coverage probability for the Sterne interval. The coverage probabilities were calculated using increments on the horizontal axis.

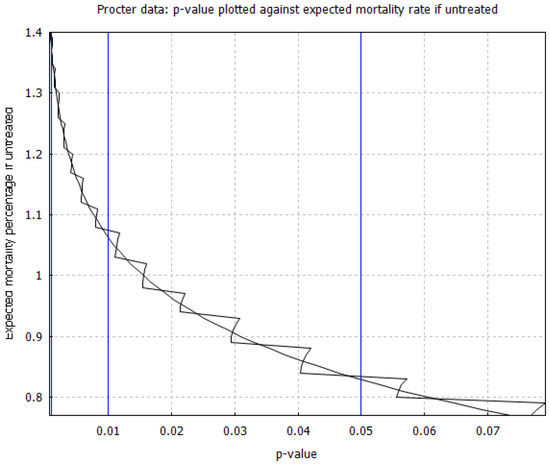

Our third comment concerns the numerical calculation of the efficacy thresholds from the p-value function of Equation (2). To illustrate this calculation with an example, in Figure 5, we plot the p-value against the expected mortality rate x without early outpatient treatment of COVID-19, based on Procter’s combined case series [12] of 869 high-risk patients that received an early treatment protocol with 2 reported deaths. The figure has vertical lines marking the crossover to 95%, 99%, and 99.9% confidence. The corresponding efficacy thresholds are located at the points where the zigzag graph of the p-value function intersects with the vertical lines. Finding the intersection points numerically with an efficient algorithm is challenging due to the zigzag shape of the graph. Such an efficient algorithm was discovered very recently [76], although we did not use it in our calculations [27].

Figure 5.

Relationship between p-value and expected mortality rate for high-risk patients without early treatment, based on the case series data from Procter’s dataset of 869 high-risk patients [12]. The zigzag curve follows given by Equation (2), whereas the smooth curve approximates the right tail terms in the p-value sum by replacing them with the left-tail terms on the horizontal axis.

The discontinuous behavior of the p-value may seem paradoxical, since we would have expected it to be monotonically decreasing with respect to x, but we have found that it is caused by some of the right-tail contributions to the p-value sum given by Equation (2). If we make an unwarranted approximation, replacing the right-tail sum with the left-tail sum, we obtain the smooth curve shown in Figure 5. The intersection points of the smooth curve with the vertical lines give the upper endpoint of the Clopper–Pearson interval [75]. Similar graphs for all case series considered in the study have been included in our supplementary data document [27]. We have found empirically, at least for the case series being studied here, that both the correct zigzag curve and the approximate smooth curve give almost the same values for all relevant efficacy thresholds.
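The two curves can be compared numerically with a short Python sketch. It assumes that the zigzag curve is the two-sided binomial p-value that sums all outcomes no more likely than the observed one, and that the smooth curve is twice the left-tail probability. The zigzag threshold is located by a plain downward grid scan (not the efficient algorithm of [76]), under the additional assumption that the p-value stays below the significance level for all x above the starting point of the scan. All names, the grid step, and the scan range are illustrative.

```python
import math


def log_coeffs(N):
    """Logarithms of the binomial coefficients C(N, 0..N), computed once."""
    return [math.lgamma(N + 1) - math.lgamma(k + 1) - math.lgamma(N - k + 1)
            for k in range(N + 1)]


def zigzag_p_value(N, a, x, logc):
    """Two-sided p-value: sum of probabilities not exceeding that of outcome a."""
    lx, l1x = math.log(x), math.log1p(-x)
    probs = [math.exp(logc[k] + k * lx + (N - k) * l1x) for k in range(N + 1)]
    cutoff = probs[a] * (1 + 1e-7)   # assumption: small tolerance for ties
    return min(1.0, sum(q for q in probs if q <= cutoff))


def left_tail(N, a, x, logc):
    """Probability of observing at most a adverse outcomes."""
    lx, l1x = math.log(x), math.log1p(-x)
    return sum(math.exp(logc[k] + k * lx + (N - k) * l1x) for k in range(a + 1))


def smooth_threshold(N, a, alpha, logc):
    """Bisection solution of 2 * P(X <= a) = alpha, i.e. the upper endpoint of
    the Clopper-Pearson interval. The left tail decreases as x increases."""
    lo, hi = 1e-12, 1.0 - 1e-12
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if 2.0 * left_tail(N, a, mid, logc) > alpha:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def zigzag_threshold(N, a, alpha, logc, x_hi, step):
    """Largest grid value x <= x_hi at which the zigzag p-value is still >= alpha."""
    for i in range(int(round(x_hi / step)), 0, -1):
        x = i * step
        if zigzag_p_value(N, a, x, logc) >= alpha:
            return x
    return None


# Procter's combined case series: 869 high-risk patients, 2 deaths, 95% confidence
logc = log_coeffs(869)
print("smooth curve:", smooth_threshold(869, 2, 0.05, logc))
print("zigzag curve:", zigzag_threshold(869, 2, 0.05, logc, x_hi=0.02, step=1e-5))
```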

2.3. Selection Bias Mitigation and Selection Bias Thresholds

The idea of hypothesis testing, in which we compare a case series of treated patients against the historical population level (or possibly a more limited) control group, is vulnerable to the criticism of possible selection bias in the treatment case series in favor of establishing treatment efficacy. Some of the selection bias could be systemic (i.e., there may be a tendency towards selecting healthier high-risk patients), but even in the absence of any systemic bias, some selection bias will inevitably occur randomly, as a consequence of using a small sample of patients for the treatment case series, randomly chosen out of the general population. We propose the following idea for mitigating random selection bias, and then we discuss the problem of selection bias more broadly.

Suppose that we have a case series of N treated patients with a adverse outcomes, and suppose that we have calculated the efficacy threshold for this case series. We can either use the efficacy threshold obtained from the p-value calculation as is, or further increase it, if necessary, using the Bayesian technique of Section 3. In either case, if we have a prior belief that the probability of an adverse event, without treatment, in the high-risk part of the general population, under the same high-risk patient selection criteria used to form the case series, is equal to x, then, when selecting a random sample of N high-risk patients out of the general population, we can be confident, at the chosen confidence level, that the true rate of adverse events, without treatment, for that particular sample, will lie within a discretized confidence interval. The endpoints of this interval are determined by the minimum and maximum number of adverse events that we expect to see in any one particular sample of N high-risk patients, in the absence of treatment, at that confidence level. The possible criticism of our approach is that, perhaps, for the specific sample of patients in our case series, the true adverse event rate, without treatment, could happen to be below the efficacy threshold, in spite of the corresponding population level adverse event rate x exceeding the efficacy threshold. This raises the question of how big the gap between x and the efficacy threshold needs to be, to ensure that the entire confidence interval of the sample adverse event rate lies above the efficacy threshold. Since the lower endpoint of this confidence interval is the minimum expected number of adverse events divided by N, the answer to this question defines a new higher threshold, which we shall call the random selection bias threshold, set equal to the minimum value of x that satisfies

In Appendix B, we prove that an upper bound of this random selection bias threshold can be calculated by choosing the smallest possible value of that satisfies the implication

Here, the notation represents rounding the number upwards towards the nearest integer. We note that the calculation of the confidence interval for , as given by Appendix B, uses the assumption that the Sterne interval solution [71] of the binomial proportion confidence interval problem has conservative coverage. Given the random selection bias threshold , and the prior knowledge that in a similarly high-risk cohort, at the population level, the probability x of an adverse outcome, in the absence of treatment, ranges between , establishing that with some gap between and can be used to rule out random selection bias, with confidence, as the sole cause of a signal of efficacy.
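A brute-force reading of this definition can be sketched in Python. The sketch assumes that the minimum expected number of adverse events is the smallest count not rejected by the two-sided binomial test at the chosen significance level, and it scans x upward on a grid until that count reaches the efficacy threshold rounded up to a whole number of patients. It does not implement the upper bound of Appendix B. The names, the grid step, and the tie tolerance are illustrative assumptions, and the efficacy threshold of 0.038 in the example is used only as a hypothetical input.

```python
import math


def binomial_probs(N, x):
    """Binomial probabilities of 0..N adverse outcomes for probability x (0 < x < 1)."""
    lx, l1x = math.log(x), math.log1p(-x)
    return [math.exp(math.lgamma(N + 1) - math.lgamma(k + 1) - math.lgamma(N - k + 1)
                     + k * lx + (N - k) * l1x)
            for k in range(N + 1)]


def min_expected_events(N, x, alpha):
    """Smallest count a whose two-sided p-value exceeds alpha, i.e. the lower
    end of the range of counts expected in an untreated sample of size N."""
    probs = binomial_probs(N, x)
    for a in range(N + 1):
        cutoff = probs[a] * (1 + 1e-7)   # assumption: small tolerance for ties
        if sum(q for q in probs if q <= cutoff) > alpha:
            return a
    return N


def random_selection_bias_threshold(N, x0, alpha=0.05, step=1e-4):
    """First grid value x >= x0 for which the minimum expected count is at
    least ceil(N * x0); returns None if no such value is found below 1."""
    target = math.ceil(N * x0)
    x = x0
    while x < 1.0:
        if min_expected_events(N, x, alpha) >= target:
            return x
        x += step
    return None


# Hypothetical example: N = 141 patients and an efficacy threshold of 0.038
print(random_selection_bias_threshold(141, 0.038, alpha=0.05))
```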

Generally speaking, it is more likely than not that a strong efficacy signal cannot be caused in a particular observation, solely as a result of random selection bias, as long as x, which is near the center of the confidence interval for , exceeds the efficacy threshold . Even if part of the confidence interval is below , more than half of the interval will be above . As a result, the efficacy threshold and the random selection bias threshold quantify two levels of evidence. Showing establishes the existence of treatment efficacy by the preponderance of evidence. Meeting this evidentiary standard should be sufficient for communicating the proposed treatment protocol to other physicians for emergency adoption, with a caveat that it is still investigational, and that more data are needed before making a definitive claim. Showing establishes the existence of treatment efficacy by the clear and convincing evidentiary standard. Our view is that exceeding the random selection bias threshold , for a treatment protocol with acceptable safety, is an objective milestone beyond which therapeutic nihilism, and even the denial of treatment for research purposes, becomes unethical.

With regards to the broader problem of systemic bias, there are multiple possibilities to consider: there may be some population-level geographic bias in the patients that live in the geographic area served by a particular treatment center; there may be reporting bias, in that we hear about case series because of the good outcomes, without these outcomes being representative of the actual outcomes at the national or international level; there may be bias in the patient demographics (ratio of low vs. high-risk patients), and with respect to the timing of treatment (early vs. late treatment). The latter concern can be addressed by stratifying the case series with respect to risk and/or timing of treatment. Geographic bias can be addressed by investigating case series across multiple geographic locations and/or by using localized population statistics for the historical control. Outcome reporting bias can be minimized, if we have consecutive case series from the same treatment center, where the initially reported outcomes are replicated by subsequent results.

Further mitigation of systemic selection bias is possible by establishing a large gap between the random selection bias threshold and the lower bound for adverse outcomes in the historical control statistics. To quantify the magnitude of systemic selection bias, consider the likelihood ratio of selecting unhealthy vs. healthy patients, if the selection is truly random, i.e., without any systemic bias. Here, we define unhealthy patients to be the high-risk patients that will have an adverse outcome without early treatment, if symptomatically infected, and we define healthy patients to be the patients that are not unhealthy patients. If there is systemic bias in favor of selecting healthy patients, then that could account for a false positive signal of efficacy. It would also reduce the corresponding likelihood ratio to , with a numerical factor measuring how much more likely it is to choose healthy patients due to systemic selection bias. In Appendix B, we have also shown that the systemic selection bias threshold , that x has to overcome in order to mitigate systemic selection bias with magnitude F, is related to the random selection bias threshold via the equation:

Given our prior belief that, at the population level, the probability x of an adverse outcome in high-risk patients without treatment satisfies , we can find the maximum amount of selection bias that can be tolerated, before the evidence quality falls back to the preponderance of evidence evidentiary standard, by solving the equation with respect to F. The corresponding solution is given by

This means that if the systemic bias tends to select healthy high-risk patients F times more likely than the likelihood corresponding to their proportion in the general population of high-risk patients, then implies that we can have at least confidence that the observed positive signal of efficacy cannot be explained solely as a consequence of systemic selection bias.
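One reading of the odds-based description above can be written out in a few lines of Python. The sketch assumes that a bias of magnitude F divides the odds x/(1-x) of selecting an unhealthy patient by F, and that the systemic selection bias threshold is the population-level probability whose biased sample rate equals the random selection bias threshold. The function names and the numerical inputs are hypothetical, and the closed forms are derived from this assumption rather than copied from Appendix B.

```python
def biased_rate(x, F):
    """Adverse outcome probability in a sample when healthy patients are F
    times more likely to be selected: the odds x / (1 - x) are divided by F."""
    odds = x / (1.0 - x) / F
    return odds / (1.0 + odds)


def systemic_threshold(x1, F):
    """Population-level probability whose biased sample rate equals x1."""
    odds = F * x1 / (1.0 - x1)
    return odds / (1.0 + odds)


def max_tolerated_bias(x1, x_low):
    """Largest F for which a population-level probability x_low still
    reaches the random selection bias threshold x1 after the bias."""
    return (x_low / (1.0 - x_low)) / (x1 / (1.0 - x1))


# Hypothetical inputs: random selection bias threshold 5%, prior lower bound 10%
x1, x_low = 0.05, 0.10
F_max = max_tolerated_bias(x1, x_low)
print("maximum tolerated F:", F_max)
print("check:", biased_rate(x_low, F_max))   # recovers x1 up to rounding
```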

Last, but not least, statistical quantitative evidence can be corroborated and amplified with more qualitative evidence based on the Bradford Hill criteria [77] for establishing a causal relationship between treatment and positive patient outcomes. Particularly relevant are the criteria of: (1) plausibility, i.e., the existence of a known biological mechanism of action that explains why the treatment protocol is expected to work; (2) consistency, i.e., observing the same effect in different treatment centers in different locations; (3) biological gradient, i.e., observing improved outcomes with increased medication dosage or length of treatment, additional medications, or by initiating treatment earlier, rather than later; (4) temporality, i.e., immediate improvement in symptoms, following the administration of the treatment protocol. The statistical evidence alone speaks in support of only the strength of association criterion, which is only one of the several criteria proposed by Bradford Hill [77]. If we can establish that these additional criteria are satisfied, then that constitutes additional evidence, on top of the statistical evidence, that treatment efficacy exists and that the signal of benefit cannot be explained away as solely the result of selection bias.

3. Methods—Part II: Bayesian Factor Analysis of Efficacy Thresholds

The methodology that we proposed in Section 2 is also vulnerable to the criticism that rejecting the null hypothesis, solely on the basis that the p-value satisfies is not sufficient for asserting that treatment efficacy is statistically significant. This is indeed the position of the recent American Statistical Association statement on statistical significance and p-values [19]. The problem is that p-values only measure how incompatible the data are with the null hypothesis. Consequently, a concern has been expressed that it is not self-evident that the p-value will always do a good job at controlling the probability of a false positive result [78]. To estimate the latter probability, we would have to formulate the appropriate alternate hypothesis and consider how much the data are compatible or incompatible with that alternate hypothesis. This has prompted recommendations to lower the p-value threshold down to or [78,79]. However, this is only a stopgap measure that does not fundamentally address the problem.

In this section, we supplement the p-value based analysis of Section 2 with a proposal for a Bayesian factor analysis [20,21,22,23,24]. The Bayesian factor compares the alternate hypothesis (treatment efficacy) against the null hypothesis, and can be used to calculate the probability of a false positive result [25]. We do not mean to suggest that the Bayesian factor should replace the p-value in hypothesis testing. Our view is that we need to use both. That is, use the p-value to reject the null hypothesis, and then use the Bayesian factor to assess the strength of the evidence in favor of the alternate hypothesis. This viewpoint is similar to earlier proposals for conditional frequentist testing [20].

In the following, we will briefly review the Bayesian factor framework, and outline our specific proposal for validating and adjusting, as needed, the efficacy threshold . We note that the calculation of the random selection bias threshold is independent of the technique used to calculate the efficacy threshold . In terms of procedure, one could initially calculate the efficacy threshold using only the technique of Section 2, and use that to calculate the corresponding random selection bias threshold . Alternatively, a more detailed analysis would involve: (a) calculating the efficacy threshold using the technique of Section 2; (b) adjusting the efficacy threshold using the technique presented in this section; (c) using the adjusted efficacy threshold to calculate the corresponding random selection bias threshold .

3.1. Bayesian Factor and the False Positive Rate

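Let $E_1$, $E_2$ be two arbitrary events in some probability space. From the definition of conditional probability, we obtain the Bayes rule, given by

$$P(E_1 \mid E_2) = \frac{P(E_2 \mid E_1)\,P(E_1)}{P(E_2)}.$$

Let D represent our data, $H_0$ represent the null hypothesis and $H_1$ represent the alternate hypothesis. In the Bayesian statistics framework, we assign probabilities $P(H_0)$ and $P(H_1)$ to the hypotheses, representing our prior belief about how likely each hypothesis is, and then calculate the updated probabilities $P(H_0 \mid D)$ and $P(H_1 \mid D)$ on the condition of observing the data D. In this way, Bayesian statistics is distinct from frequentist statistics where probabilities are not assigned to the hypotheses themselves. From the Bayes rule we have,

$$P(H_0 \mid D) = \frac{P(D \mid H_0)\,P(H_0)}{P(D)}, \qquad P(H_1 \mid D) = \frac{P(D \mid H_1)\,P(H_1)}{P(D)},$$

and dividing the two equations gives

$$\frac{P(H_1 \mid D)}{P(H_0 \mid D)} = \frac{P(D \mid H_1)}{P(D \mid H_0)} \cdot \frac{P(H_1)}{P(H_0)}.$$

The Bayes factor is defined to read

$$B = \frac{P(D \mid H_1)}{P(D \mid H_0)},$$

and it is the numerical factor that amplifies our prior belief about the odds ratio $P(H_1)/P(H_0)$ after seeing the data D. Here, $P(D \mid H_0)$ is the probability of seeing the data D if $H_0$ is true and $P(D \mid H_1)$ is likewise the probability of seeing the data D if $H_1$ is true.

To interpret the meaning of the Bayesian factor, the following argument is used to calculate the posterior probabilities $P(H_0 \mid D)$ and $P(H_1 \mid D)$ in terms of B and the prior likelihood ratio $P(H_1)/P(H_0)$. We assume that the hypotheses satisfy $P(H_0) + P(H_1) = 1$ and $P(H_0 \mid D) + P(H_1 \mid D) = 1$. Combining the second equation with Equations (11) and (12) gives the Bayes theorem

$$P(H_1 \mid D) = \frac{B\,[P(H_1)/P(H_0)]}{1 + B\,[P(H_1)/P(H_0)]},$$

and it follows that the probability of a false positive result is given by

$$P(H_0 \mid D) = \frac{1}{1 + B\,[P(H_1)/P(H_0)]}.$$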

We see that the false positive probability approximately scales as the inverse of the Bayes factor . On the other hand, the dependence of on the prior likelihood ratio , which measures our subjective belief about the odds ratio between and , before seeing the data D, is uncomfortable. There are three ways to cope with that: First, one can simply join the frequentist camp, consider probabilities based on beliefs as meaningless, and forget about the whole thing. Second, one can use an uninformed prior, meaning that we assume that both hypotheses and are equally probable, not having any prior knowledge that favors one over the other, and choose , which corresponds to . An interesting third way is to use the reverse Bayesian analysis technique proposed by Colquhoun [25], which is based on the equivalence

which relates an upper bound on the probability with a corresponding lower bound on the prior likelihood ratio , which is given by

with B being the value of the corresponding Bayesian factor. The meaning of Equation (21) is that, given a desired upper bound for the false positive rate and a threshold B for the Bayesian factor, the quantity defined by Equation (21) is the minimum prior likelihood ratio, expressing our prior knowledge of the extent to which the alternate hypothesis is favored over the null hypothesis, for which the Bayesian threshold B can control the false positive rate and keep it below that bound. As such, given our subjective choice for the prior likelihood ratio, one can calculate the threshold B for the Bayesian factor corresponding to the maximum tolerated false positive rate.

Since we wish to constrain the false positive rate to less than 5%, in order to claim 95% statistical significance, we choose an upper bound of 0.05. Kass and Raftery [24] and Jeffreys [80] both recommend that a Bayes factor threshold of 100 be used for a decisive acceptance of the alternate hypothesis over the null hypothesis. Using this threshold, we find that the minimum prior likelihood ratio is approximately 1/5. This means that if we associate the decisive threshold with 95% confidence, doing so is equivalent to a prior belief that the null hypothesis is 5 times more likely than the alternate hypothesis. In turn, this prior belief can be used to deduce Bayesian factor thresholds for higher levels of confidence, consistently with our choice to associate the decisive threshold with 95% confidence. This choice can be interpreted as defining the word “decisive” to mean 95% confidence, in the context of stating that a Bayes factor at this threshold is “decisive”. It could be critiqued as being an arbitrary choice, but the same can be said for the p-value threshold and the Bayesian factor threshold. Our particular approach has the advantage of being more transparent, in terms of an intuitive interpretation, than an arbitrary choice made in terms of the prior probabilities for the null and alternate hypotheses.
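The arithmetic of the reverse Bayesian argument can be illustrated with a minimal Python sketch. It assumes the standard two-hypothesis relation in which the false positive probability equals 1/(1 + B r), with r the prior likelihood ratio of the alternate to the null hypothesis, and it assumes that the decisive threshold referred to above is a Bayes factor of 100. The function names are hypothetical, and the thresholds printed for the higher confidence levels simply follow from this relation.

```python
def false_positive_probability(bayes_factor, prior_ratio):
    """Posterior probability of the null hypothesis, given the Bayes factor
    and the prior likelihood ratio of the alternate to the null hypothesis."""
    return 1.0 / (1.0 + bayes_factor * prior_ratio)


def min_prior_ratio(bayes_factor, p0):
    """Smallest prior likelihood ratio for which a Bayes factor at the given
    threshold keeps the false positive probability at or below p0."""
    return (1.0 - p0) / (bayes_factor * p0)


def bayes_factor_threshold(prior_ratio, p0):
    """Bayes factor needed to keep the false positive probability at or
    below p0 for a given prior likelihood ratio."""
    return (1.0 - p0) / (prior_ratio * p0)


# Assumed decisive threshold B = 100 at 95% confidence
ratio = min_prior_ratio(100.0, 0.05)
print("minimum prior ratio:", ratio)          # close to 1/5
for p0 in (0.05, 0.01, 0.001):
    print(p0, bayes_factor_threshold(ratio, p0))
```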

3.2. Application to Hypothesis Testing for Case Series

Now, let us consider how Bayesian factor analysis can be applied to a case series with a treatment group of N patients, where a patients have an adverse outcome. Let be the corresponding efficacy threshold, determined via the techniques of Section 2, and let x be the probability of an adverse outcome with treatment. We define a null hypothesis and an alternate hypothesis about the value of x such that

We use for the upper endpoint of the binomial proportion confidence interval corresponding to the observed data . Consequently, the null hypothesis has been defined to place x outside and above that interval, and the alternative hypothesis considers the remaining possible values for x.

Because both and are composite hypotheses, it is necessary to introduce prior probabilities and , corresponding to and . It may seem tempting to just use uninformed priors for both and , however, doing so would certainly not be appropriate for the null hypothesis in almost all situations, since with many illnesses, we can rule out the probability of an adverse outcome exceeding some upper bound . Instead, we can thus use an uninformed prior on the interval , given by

and perform an appropriate sensitivity analysis on the parameter . In general, increasing will tend to increase the Bayes factor, since doing so will tend to increase the contrast between the null and alternate hypotheses. So, we can explore how much can be decreased and still maintain a decisive Bayes factor. Likewise, for the alternate hypothesis , we will use an uninformed prior on the interval with given by

The reason for this choice is that we have found empirically that, in some cases, the Bayes factor may actually increase if, instead of an uninformed prior on , we use an uninformed prior on the shorter interval . From an intuitive standpoint, we surmise that if the data has a very strong efficacy signal, then the contrast between the null and alternate hypotheses is increased when one eliminates the relatively unlikely values of x between t and . For this reason, we shall use the maximum value of the Bayes factor taken over all values , on a decimal logarithmic scale which is given by

In Appendix C we prove that the function is initially increasing and then decreasing, with respect to t, with a maximum in the interval . If this maximum is located in the narrower interval , then the optimal Bayes factor is indeed obtained when we use a choice for the prior distribution of the alternate hypothesis . If the maximum is formally located at , then the optimal Bayes factor is obtained at . The resulting metric is still dependent on the parameter of the prior distribution of the null hypothesis .

To complete the metric definition by Equations (26) and (27), we now show the calculation of the Bayes factor between and as a function of and the data . We note that the probabilities for seeing the data , under the hypotheses and , are given by:

and

consequently, the corresponding Bayes factor is given by

The integrals can be calculated using exact algebra or numerically with the open source computer algebra software Maxima [81]. The exact algebra calculation takes longer to carry out, but we have confirmed that the numerical calculation using the function quad_qagr is just as accurate.
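As a cross-check that does not depend on Maxima, the same integrals can be approximated with a short Python sketch. It assumes, following the description above, that the null hypothesis places a uniform prior on the interval from the efficacy threshold up to an upper bound, that the alternate hypothesis places a uniform prior on the interval from 0 to t, and that the Bayes factor is the ratio of the corresponding average binomial likelihoods, so that the binomial coefficient cancels. Simpson's rule, the grid over t, the function names, and the example inputs (including the upper bound of 0.2) are illustrative assumptions.

```python
import math


def kernel(N, a, x):
    """Likelihood kernel x^a (1 - x)^(N - a); the binomial coefficient is
    omitted because it cancels in the Bayes factor."""
    return (x ** a) * ((1.0 - x) ** (N - a))


def average_likelihood(N, a, lo, hi, panels=2000):
    """Mean of the kernel over [lo, hi] (uniform prior), using the composite
    Simpson rule with an even number of panels."""
    h = (hi - lo) / panels
    total = 0.0
    for i in range(panels + 1):
        weight = 1 if i in (0, panels) else (4 if i % 2 else 2)
        total += weight * kernel(N, a, lo + i * h)
    return total * h / 3.0 / (hi - lo)


def log10_bayes_factor(N, a, x0, x_max, t):
    """Decimal logarithm of the Bayes factor of the alternate hypothesis
    (x uniform on [0, t]) against the null (x uniform on [x0, x_max])."""
    return math.log10(average_likelihood(N, a, 0.0, t)
                      / average_likelihood(N, a, x0, x_max))


def best_log10_bayes_factor(N, a, x0, x_max, grid=200):
    """Maximum of the log Bayes factor over a grid of t values in (0, x0],
    returned together with the maximizing t."""
    h0 = average_likelihood(N, a, x0, x_max)
    best = None
    for i in range(1, grid + 1):
        t = x0 * i / grid
        value = math.log10(average_likelihood(N, a, 0.0, t) / h0)
        if best is None or value > best[0]:
            best = (value, t)
    return best


# Hypothetical inputs: 141 patients, 1 death, threshold 0.038, upper bound 0.2
print(best_log10_bayes_factor(141, 1, 0.038, 0.2))
```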

In order to control for the false positive rate, we propose that the efficacy thresholds with should be increased, if necessary, by requiring that they also satisfy . Since the threshold used for a decisive Bayes factor with corresponds approximately to , it is reasonable to use the empirical formula

to adjust the efficacy thresholds for an arbitrary value of demanded confidence . For , Equation (36) gives . For , we find . Both Bayes factor thresholds also correspond to a prior likelihood ratio . Consequently, they are the thresholds that we recommend imposing on the Bayes factors for the purpose of adjusting the corresponding efficacy thresholds for the choices and .

4. Results

We shall now apply the proposed framework to the processing of available high-risk COVID-19 patient case series by Zelenko [2,9,10], Procter [11,12], and Raoult [13] that provide evidence for the original Zelenko triple-drug protocol [2] and the more advanced McCullough protocol [14,15,16], which are both based on safe repurposed medications. Section 4.1 reviews the case series under consideration. Section 4.2 summarizes the data and the calculation of the corresponding efficacy and random selection bias thresholds. These are used in Section 4.3 and Section 4.4 to assess the evidence in support of mortality rate reduction and hospitalization rate reduction correspondingly. Section 4.5 shows that the Bayesian factor analysis of the efficacy threshold has negligible impact for the specific case series under consideration.

4.1. Review of the Zelenko, Procter and Raoult Case Series

In the Zelenko April 2020 letter [9], Zelenko reported on his outcomes based on a total of 1450 patients that he treated for COVID-19 until 28 April 2020 in an Orthodox Jewish community in upstate New York. From this cohort, 405 patients were classified as high risk and treated with his triple-drug therapy (hydroxychloroquine, azithromycin, zinc sulfate). The reported outcomes were six hospitalizations and two deaths. From amongst the patients classified as low risk, who were given only supportive care, there were no hospitalizations or deaths. Zelenko’s criteria for risk stratification define three categories of high-risk patients: (1) every patient older than 60; (2) every patient younger than 60 but with comorbidities or obesity, as assessed by BMI (Body Mass Index); (3) patients younger than 60 and without comorbidities that presented with shortness of breath.

A subset of the 28 April 2020 case series was published in a case controlled study [2] that included only the patients seen with COVID-19 infection that was confirmed by a PCR (Polymerase Chain Reaction) test or an antibody IgG (Immunoglobulin G) test. The remaining patients were clinically diagnosed from symptomatic presentation and via ruling out a bacterial or influenza infection. This Derwand–Scholz–Zelenko study (hereafter DSZ study) [2] included 335 patients of which 141 patients were classified as high-risk patients and treated with the triple-drug protocol, with 4 hospitalizations and 1 death. Detailed demographic data are given for the high-risk patient treatment group, including a detailed breakdown in the three high-risk categories. The study also included a control group of 377 patients, who were seen by other treatment centers in the same community, that were only offered supportive care and no early outpatient treatment. From this untreated group, 13 patients died and 58 patients were hospitalized. The untreated group includes both low-risk and high-risk patients, so we expect that it underestimates both the hospitalization and mortality risk for high-risk patients. Unfortunately, demographic data were not available for the untreated group, so from a strictly methodological point of view, one cannot entirely rule out the theoretical possibility that the untreated group might have consisted of patients that are at higher risk, on average, than those of the high-risk treatment group. On the other hand, using a case series of untreated patients from Israel [61], with demographic data indicating a combination of low and high-risk patients, with 143 deaths reported out of 4179 untreated patients, gives the same mortality rate as in the DSZ control group, suggesting that the DSZ control group also consists of a mixed demographic of low- and high-risk patients.

The June 2020 Zelenko case series [10] is reported in a letter that Zelenko sent to the Israeli Health Minister at the time, Dr. Moshe Bar Siman-Tov, on 14 June 2020, which was later made publicly available. In the letter, Zelenko reported that a total of approximately 2200 patients were seen as of 14 June 2020, with 800 patients deemed high-risk under the same criteria, who were treated with the triple-drug therapy since the beginning of the pandemic. The reported cumulative outcomes are: 12 hospitalizations, 2 deaths, no serious side effects, and no cardiac arrhythmias.

During the April 2020–June 2020 interval, at the beginning of May 2020, Zelenko enhanced his triple-drug therapy protocol with oral dexamethasone and budesonide nebulizer. He introduced the blood thinner Eliquis towards the end of May 2020 and the beginning of June 2020. Ivermectin was not used by Zelenko until October 2020. Consequently, the DSZ study [2] and the Zelenko April 2020 case series [9] reflect the outcomes of the triple-drug therapy, when used by itself as an early outpatient treatment. The Zelenko June 2020 case series [10] includes the use of steroid medications and a blood thinner, so the underlying treatment protocol is closer to the McCullough protocol [14,15,16].

It is worth noting that both letters [9,10] were originally posted on Google Drive by Zelenko, and were censored by Google during 2021. The April 2020 letter [9] was cited by Risch [65], whose paper has also preserved the corresponding case series data. The June 2020 letter case series data [10] was independently reported in a subsequent publication by Risch [82], which included only the number of reported deaths, and not the number of hospitalizations. We have attached copies of all three Zelenko letters [9,10,26] to our supplementary material document [27].

The Procter case series were reported consecutively in two publications [11,12]. The first paper [11] reports on 922 patients that were seen between April 2020 and September 2020, of which 320 were risk stratified as high-risk patients and treated with the McCullough protocol [14,15,16]. The outcome was six hospitalizations and one death. The second paper [12] reports on an additional patient cohort seen between September 2020 and December 2020. Out of the total number of patients during that time period, 549 were risk stratified as high-risk and treated, with an outcome of 14 hospitalizations and one death. For both case series, the risk stratification criteria were similar to those used by Zelenko. However, the age threshold used to risk stratify patients as high-risk was lowered to 50 years. The medications used were customized for each patient in accordance with the McCullough protocol [14,15,16] and included hydroxychloroquine, ivermectin, zinc, azithromycin, doxycycline, budesonide, folate, thiamine, and IV fluids; for more severe cases, dexamethasone and ceftriaxone were also added. Demographic details for the cohorts were reported in the respective publications [11,12].

The final high-risk patient case series is extracted from a recent cohort study [13] of 10,429 patients that were seen between March 2020 and December 2020 by Raoult’s IHU Méditerranée Infection hospital in Marseille, France. From the entire cohort, 8315 patients were treated with hydroxychloroquine, azithromycin, and zinc. Of those patients, those older than 70 or with comorbidities were also treated with enoxaparin. Low-dose dexamethasone was given to patients that presented with inflammatory pneumonopathy or high viral loads, or otherwise on a case by case basis. This treatment protocol is consistent, to some extent, with the principles that underlie the McCullough protocol [14,15,16]. The remaining 2114 patients did not receive hydroxychloroquine or azithromycin or both due to contraindications or because the patients did not consent to using one or two of these medications. This cohort was used in the Raoult study [13] as a control group. The study risk-stratified the patients by age (see Table 1 of Ref. [13]), making it possible to extract a case series of high-risk patients under the restriction that patients are older than 60. In the treatment group, this results in 1495 high-risk patients with 5 deaths and 106 hospitalizations. In the control group, under the same constraint, there are 520 high-risk patients with 38 hospitalizations and 11 deaths. The authors note that no serious adverse events to the medications were reported, and that the reported deaths were not related to side effects of hydroxychloroquine or azithromycin. Furthermore, no deaths were reported for cohort in both the treatment group and control group.

4.2. Tabular Summaries of the Zelenko, Procter, and Raoult Case Series

Table 1 summarizes the aforementioned case series, including the treatment groups from the DSZ study [2], the Zelenko [2,9,10] and Procter [11,12] case series, and the treatment group from the Raoult study [13]. Note that the Zelenko June 2020 case series and the Procter II case series, as reported in Table 1, each combine the two respective consecutive case series. We also report in Table 1 the DSZ study’s control group [2], the alternative Israeli control group [61], and the high-risk part of the Raoult control group [13]. We emphasize that all reported treatment group case series consist of high-risk patients.

Table 1.

Case series list: The table lists the total number of patients, the subset of high-risk patients that were treated with a sequenced multidrug regimen, the number of patients that were hospitalized, and the number of deaths, for the following case series: Derwand–Scholz–Zelenko study treatment group [2], Zelenko’s complete April 2020 data set [9], Zelenko’s complete June 2020 data set [10], Procter’s observational studies [11,12], and Raoult’s high-risk (older than 60) treatment group [13]. The table also lists the same data for the control group in the DSZ study [2], the untreated group in the Israeli study [61], and the control group in the Raoult study [13].

From a cursory examination of Table 1, we see that the mortality rate is consistent across all treatment groups, which speaks to the consistency Bradford Hill criterion [77]. Hospitalization rates are also consistent between the Zelenko [2,9,10] and Procter case series [11,12], but there is a clear discrepancy with the hospitalization rates reported in the Raoult treatment case series [13]. We believe that the reason for the discrepancy is that both Zelenko and Procter explicitly aimed to prevent hospitalizations due to the poor outcomes of the inpatient treatment protocols used in the United States. In Marseille, France, Raoult had the option of using his IHU Méditerranée Infection hospital for short hospitalizations, in order to closely monitor his more concerning cases.
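
The consistency claim can be checked directly from the counts quoted in Section 4.1. The following is a minimal sketch, not the computation used for Table 1: the dictionary layout and the function name `crude_rates` are our own illustrative choices, and the Zelenko April 2020 series is left out because its counts are not restated in this section.

```python
# Counts quoted in Section 4.1:
# (high-risk treated patients, hospitalizations, deaths).
treatment_groups = {
    "DSZ treatment group [2]": (141, 4, 1),
    "Zelenko June 2020 [10]": (800, 12, 2),
    "Procter, first cohort [11]": (320, 6, 1),
    "Procter, second cohort [12]": (549, 14, 1),
    "Raoult, older than 60 [13]": (1495, 106, 5),
}

# Untreated comparison groups: (patients, deaths).
control_groups = {
    "DSZ control group [2]": (377, 13),
    "Israeli untreated group [61]": (4179, 143),
}


def crude_rates(n, hospitalized, deaths):
    """Return (hospitalization rate, mortality rate) as plain fractions."""
    return hospitalized / n, deaths / n


for name, counts in treatment_groups.items():
    hosp, mort = crude_rates(*counts)
    print(f"{name}: hospitalization {hosp:.2%}, mortality {mort:.2%}")

for name, (n, deaths) in control_groups.items():
    print(f"{name}: mortality {deaths / n:.2%}")
```

These are crude rates only; they carry no adjustment for demographic differences between the cohorts.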

In Table 2, we show the results of comparing the Zelenko April 2020 [9] and Zelenko June 2020 [10] case series against both the original DSZ control group [2] as well as the alternative control group from Israel [61]. The confidence intervals were calculated using Woolf’s formula [83,84]. Although in the original DSZ study [2] mortality rate reduction was not statistically significant, we have found that comparing either the Zelenko April 2020 case series [9] or the June 2020 case series [10] against either control group, gives more than 90% mortality rate reduction, which is also statistically significant in terms of both p-value and confidence interval. Likewise, we see at least 90% hospitalization rate reduction when the Zelenko April 2020 case series or Zelenko June 2020 case series is compared against the DSZ control group, which is statistically significant as well. Because the control groups consist of a combination of both low-risk and high-risk patients, whereas the treatment groups consist of only high-risk patients, the resulting comparisons are biased towards the null, and thus underestimate the actual efficacy of the respective treatment protocols. This comparison is compelling due to the consistency between the two control groups, as evidence in favor of showing the existence of treatment efficacy. However, from a methodological standpoint, it may not be convincing enough by itself in terms of measuring the extent of treatment efficacy.
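
To make this kind of comparison concrete, the sketch below computes a two-sided Fisher exact p-value and a Woolf (log odds ratio) confidence interval for the mortality counts quoted in Section 4.1. It is an illustration under stated assumptions, not a reproduction of Table 2: we assume the effect measure is the odds ratio, we assume the two-sided p-value sums all tables no more probable than the observed one, and the function names are our own.

```python
import math


def woolf_odds_ratio_ci(a, b, c, d, z=1.96):
    """Odds ratio with Woolf's log-based interval for the 2x2 table
    [[a, b], [c, d]] = [[treated deaths, treated survivors],
                        [control deaths, control survivors]].
    z = 1.96 corresponds to 95% confidence. All four cells must be nonzero."""
    odds_ratio = (a * d) / (b * c)
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    log_or = math.log(odds_ratio)
    return odds_ratio, math.exp(log_or - z * se), math.exp(log_or + z * se)


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value: total probability of all tables with
    the same margins that are no more probable than the observed table."""
    row1, row2, col1 = a + b, c + d, a + c
    total = math.comb(row1 + row2, col1)

    def pmf(k):
        # Hypergeometric probability of k deaths in the treated row.
        return math.comb(row1, k) * math.comb(row2, col1 - k) / total

    cutoff = pmf(a) * (1 + 1e-7)  # small tolerance for floating-point ties
    lo, hi = max(0, col1 - row2), min(row1, col1)
    return min(1.0, sum(pmf(k) for k in range(lo, hi + 1) if pmf(k) <= cutoff))


# Mortality: DSZ treatment group (1 of 141) vs. DSZ control group (13 of 377).
dsz = (1, 140, 13, 364)
# Mortality: Zelenko June 2020 (2 of 800) vs. DSZ control group (13 of 377).
june = (2, 798, 13, 364)

for label, table in (("DSZ", dsz), ("Zelenko June 2020", june)):
    odds_ratio, lower, upper = woolf_odds_ratio_ci(*table)
    p = fisher_exact_two_sided(*table)
    print(f"{label}: OR = {odds_ratio:.3f}, "
          f"95% CI = ({lower:.3f}, {upper:.3f}), p = {p:.2g}")
```

With these inputs, the interval for the DSZ treatment group includes 1, whereas the interval for the larger June 2020 series lies below 1, which matches the qualitative pattern described above.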

Table 2.

Fisher’s exact test comparing the mortality rate reduction and hospitalization rate reduction between the high-risk patient treated group of the DSZ study [2], Zelenko’s complete April 2020 data set [9], and Zelenko’s complete June 2020 data set [10] against the low-risk and high-risk patient control groups in the DSZ study [2] and the Israeli study [61]. The p-values where there is a failure to establish 95% confidence are highlighted with bold font.

We have also calculated the efficacy threshold for mortality rate reduction and hospitalization rate reduction corresponding to the case series by Zelenko [2,9,10], Procter [11,12], and Raoult [13]. The calculations are shown in the supplementary material document [27]. The results are tabulated in Table 3. We display the efficacy thresholds for 95%, 99%, and 99.9% confidence, which are calculated as the upper end points of the corresponding Sterne interval [71] and, in parentheses, we display the corresponding random selection bias thresholds. We use precision of 0.1% for most case series, except for the two largest ones, Procter II [12] and Raoult [13], where we use 0.01% precision.

Table 3.

Mortality and hospitalization rate reduction efficacy thresholds, defined as the upper end of the Sterne interval [71], corresponding to 95%, 99%, and 99.9% confidence, for the DSZ study treatment group [2], Zelenko’s complete April 2020 data set [9], Zelenko’s complete June 2020 data set [10], Procter’s observational studies [11,12], and Raoult’s high-risk (older than 60) treatment group [13]. In parentheses, we also display the corresponding higher random selection bias thresholds.

Each threshold corresponds to a mathematically rigorous conditional statement about rejecting the null hypothesis that the corresponding early outpatient treatment protocol is ineffective. For example, the 1.8% efficacy threshold corresponding to 95% confidence for rejecting the null hypothesis in the Zelenko April 2020 case series [9] corresponds to the following statement: If the expected mortality rate for an equivalent cohort without early outpatient treatment exceeds 1.8%, then the null hypothesis can be rejected with at least 95% confidence. Similar statements can be formulated for each efficacy threshold metric in Table 3. Likewise, the 4.0% random selection bias threshold for the Zelenko April 2020 case series [9] corresponds to the following statement: If the observed mortality rate, at the population level, for high-risk patients, classified as such using the same selection criteria as in the treatment case series, exceeds 4.0%, then we can be 95% confident that the observed signal of efficacy cannot be attributed solely to random selection bias, and we can also reject the null hypothesis with at least 95% confidence. Similar statements are implied by all of the other random selection bias thresholds reported in Table 3.
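
As a rough illustration of how an efficacy threshold of this kind can be obtained, the sketch below scans a grid of candidate untreated rates and keeps the largest one that the observed case series fails to reject. It rests on several assumptions of ours: the Sterne p-value is taken to be the total binomial probability of all outcomes no more likely than the observed one, the grid step stands in for the stated precision, and the function names are hypothetical. The actual calculations are in the supplementary material [27] and may differ in detail; the random selection bias threshold is not computed here.

```python
import math


def binom_pmf(k, n, p):
    """Binomial probability P(X = k), evaluated in log space so that large
    cohorts do not overflow. Requires 0 < p < 1."""
    log_pmf = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
               + k * math.log(p) + (n - k) * math.log1p(-p))
    return math.exp(log_pmf)


def sterne_p_value(x, n, p):
    """Total probability, under rate p, of every outcome that is no more
    likely than the observed count x (assumed definition, see lead-in)."""
    cutoff = binom_pmf(x, n, p) * (1 + 1e-7)  # tolerance for float ties
    probs = (binom_pmf(k, n, p) for k in range(n + 1))
    return min(1.0, sum(pk for pk in probs if pk <= cutoff))


def efficacy_threshold(x, n, confidence, step=0.001):
    """Largest grid value of p above x/n that is NOT rejected at the given
    confidence; approximates the upper end of the Sterne interval to within
    one grid step."""
    alpha = 1 - confidence
    upper = x / n
    for i in range(1, round(1 / step)):
        p = i * step
        if p > x / n and sterne_p_value(x, n, p) > alpha:
            upper = p
    return upper


# Example: mortality in the DSZ treatment group [2], 1 death in 141 patients.
for confidence in (0.95, 0.99, 0.999):
    threshold = efficacy_threshold(1, 141, confidence)
    print(f"{confidence:.1%} confidence: threshold near {threshold:.1%}")
```

The full scan, rather than stopping at the first rejected grid point, is deliberate: acceptance under this p-value need not be monotone in p, so the upper end is taken as the last accepted grid value.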

These statements are mathematical facts. However, to complete the inference argument, they need to be paired with an inevitably subjective statement that provides an estimate, or at least a lower bound, on the expected mortality or hospitalization rates of similar cohorts without early outpatient treatment. Secondarily, we need an inference about the intervals of mortality or hospitalization rates, in the absence of early outpatient treatment, in order to do the Bayesian adjustment of the efficacy thresholds.

4.3. Analysis of Mortality Rate Reduction Efficacy

To establish that early treatment protocols result in mortality rate reduction, when administered to high-risk patients, we recall that patients have been classified as high-risk based on the following three categories: (1) old age; (2) comorbidities or obesity (with ); (3) shortness of breath upon presentation. The age threshold for high-risk classification is 60 years for the Zelenko [2,9,10] and Raoult [13] case series, and 50 years for the Procter [11,12] case series. The high-risk treatment groups for the Zelenko [2,9,10] and Procter [11,12] case series include the demographic distribution of all three categories of high-risk patients, whereas in the Raoult [13] case series we have included only patients older than 60. Our approach, in the following, is to lower bound the mortality rate, in the absence of early outpatient treatment, separately for each of the three high-risk patient categories. Then, the common lower bound becomes applicable to any demographic distribution of the three categories. To establish the existence of treatment efficacy, it is sufficient for this lower bound to exceed the corresponding thresholds of Table 3. We now consider the mortality rate for each of the three high-risk patient categories separately.
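
The reason a common lower bound carries over to any demographic mix can be written out in one line. The notation here is ours and is introduced only for illustration: $w_i$ is the fraction of the cohort falling in high-risk category $i$, $q_i$ is the untreated mortality rate in that category, and $b$ is the common lower bound, under the simplifying assumption that each patient is counted in exactly one category.

```latex
q \;=\; \sum_{i=1}^{3} w_i\, q_i \;\ge\; \sum_{i=1}^{3} w_i\, b \;=\; b,
\qquad \text{where } w_i \ge 0,\quad \sum_{i=1}^{3} w_i = 1,\quad q_i \ge b .
```

Consequently, if $b$ exceeds a given threshold of Table 3, then so does the untreated mortality rate $q$ of the mixed cohort, whatever the weights $w_i$ happen to be.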

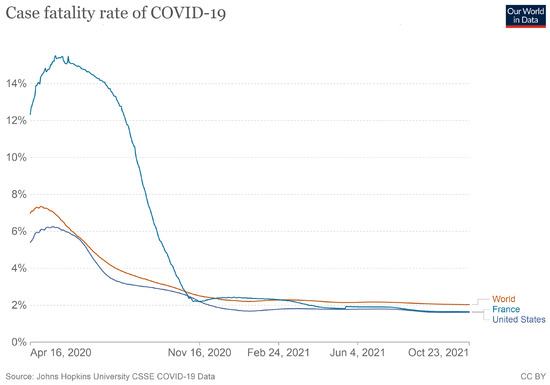

With regard to the first category of patients classified as high-risk due to old age, the earliest data from China [60], as of 11 February 2020, estimated a minimum of 3.6% mortality rate for patients older than 60 and a minimum of 1.3% mortality rate for patients older than 50 (see Table 4). These numbers are consistent with numbers from China [58] and Italy [59] as of 17 March 2020 (see Table 5). We can also estimate the mortality risk of the first category of high-risk patients ( or ) using adjusted estimates by the CDC (Centers for Disease Control and Prevention) [62,63,64] of COVID-19 deaths per symptomatic cases. The CDC report attempts to adjust for the differences in under-reporting of symptomatic illness, hospitalizations, and deaths, and it is based on reports ranging from February 2020 to September 2021. The raw data and a copy of the CDC report website are given in our supplementary material document [27]. From that, we calculate for the group a mortality rate of 2.26% (95% CI: 1.94% to 2.61%). We cannot deduce an mortality rate from the CDC report, but note that the mortality rate, according to the CDC, is 4.79% (95% CI: 4.11% to 5.52%). We observe that the stratification of mortality risk with respect to age is consistent between three distinct geographical regions.

Table 4.

Crude case fatality rate data, in the absence of early outpatient treatment, based on early data from China as of 11 February 2020, and published on 30 March 2020. [60]. We highlight with bold font the high-risk age brackets with .

Table 5.

Crude case fatality rate data, in the absence of early outpatient treatment, based on early data from China and Italy as of 17 March 2020 and published on 23 March 2020 [58,59]. We highlight with bold font the high-risk age brackets with .

The second category of high-risk patients consists of patients with comorbidities, regardless of age. In Table 6, we show case fatality rates with respect to comorbidities (i.e., cardiovascular disease, diabetes, respiratory disease, hypertension, cancer), based on data from China [58] in the period up to 11 February 2020, and additional data from Israel [61], with patients diagnosed in the period up to 16 April 2020 and deaths recorded up to 16 July 2020. There is variability in mortality rates from 5% to 15%. The Israeli data appear to show higher mortality rates than the data from China, and the reason for that could be that the Israeli study [61] accounted for the time lag between patient diagnosis and death. Nevertheless, with respect to using 5% as a lower bound mortality rate for high-risk patients with comorbidities, the available data from both locations are consistent.

Table 6.

Case fatality rate based on early-stage analysis of COVID-19 outbreak in China in the period up to 11 February 2020 [58] vs. similar statistics from Israel published on 7 September 2020 [61].

These studies do not account for the mortality risk from obesity, and do not account for the mortality risk corresponding to the third category of high-risk patients that present with shortness of breath. A collaborative study by Risch and a research group in Brazil [85] found, using multivariate regression analysis, that both obesity and dyspnea pose a higher mortality risk than heart disease (see Table 2 of Ref. [85]). We therefore expect that they both lie in the same 5% to 15% interval as patients with other comorbidities.