Abstract

This paper presents the design and implementation of a high-precision vehicle detection and classification system for toll stations on national highways in Colombia, leveraging LiDAR-based 3D point cloud processing and supervised machine learning. The system integrates a multi-sensor architecture, including a LiDAR scanner, high-resolution cameras, and Doppler radars, with an embedded computing platform for real-time processing and on-site inference. The methodology covers data preprocessing, feature extraction, descriptor encoding, and classification using Support Vector Machines. The system supports eight vehicular categories established by national regulations, which present significant challenges due to the need to differentiate categories by axle count, the presence of lifted axles, and vehicle usage. These distinctions affect toll fees and require a classification strategy beyond geometric profiling. The system achieves overall classification accuracy, including for light vehicles and for vehicles with three or more axles. It also incorporates license plate recognition for complete vehicle traceability. The system was deployed at an operational toll station and has run continuously under real traffic and environmental conditions for over eighteen months. This framework represents a robust, scalable, and strategic technological component within Intelligent Transportation Systems and contributes to data-driven decision-making for road management and toll operations.

1. Introduction

The increasing complexity of modern transportation networks has driven the development of Intelligent Transportation Systems (ITS), technological ecosystems that integrate sensing devices, data processing algorithms, and real-time communication to enhance the efficiency, safety, and sustainability of mobility [1,2]. ITS have proven to be key instruments for optimizing the operation of main infrastructure such as toll stations, where continuous and accurate monitoring of vehicle flow is essential for tariff management, road planning, and strategic decision-making [3]. The ability to automatically classify and count vehicles, without human intervention, reduces waiting times, minimizes operational errors, and enables differentiated pricing schemes and the collection of structured data for traffic analysis [4,5,6,7]. Such digital solutions are especially relevant where mobility demands are growing faster than the capacity to expand physical infrastructure, and a progressive transition toward more innovative, interoperable, and sustainable solutions is needed.

Traditionally, toll stations have used intrusive technologies for vehicle classification, including inductive loops, piezoelectric sensors, and axle-counting systems, which rely on physical barriers or pressure plates embedded in the road [8,9]. Intrusive sensors are technologies that require installation directly into the road infrastructure, typically involving saw-cut grooves, drilled holes, or subsurface channels in the pavement [10]. These methods offer a certain degree of accuracy but present several operational limitations. Their installation requires structural interventions on the road surface, often involving lane closures, and the sensors are subject to physical wear over time due to repeated traffic loads and adverse weather conditions. Although generally less sensitive to environmental visibility, their performance degrades as components deteriorate, leading to reduced reliability and increased maintenance demands. In response to these constraints, non-intrusive solutions based on computer vision have been explored, employing video cameras combined with pattern recognition algorithms and computational intelligence to infer the type of vehicles in motion [11,12,13]. However, this approach also has vulnerabilities, such as sensitivity to variations in natural lighting, partial occlusions, and weather conditions such as rain or fog [14,15]. As a result, there is growing interest in active sensors such as Light Detection and Ranging (LiDAR), which employ laser scanning to generate three-dimensional point clouds of the environment, are independent of lighting conditions, and are more robust to partial occlusions [16,17,18,19]. This technology, widely used in perception systems for autonomous vehicles and 3D mapping [20,21,22,23], offers a promising technical framework for vehicle classification applications in tolling environments. However, its adoption in real-world operational contexts still faces challenges in terms of cost, real-time processing, and compatibility with vehicle categorization schemes regulated by national standards.

The processing of point clouds from LiDAR sensors requires efficient computational architectures capable of extracting meaningful features from high-dimensional unstructured data. Unlike images with a regular matrix structure, 3D point clouds are irregular and pose unique challenges in representation, segmentation, and classification [24,25,26]. In this context, various approaches combine filtering, normalization, and ground plane removal techniques with the extraction of descriptors such as Fast Point Feature Histograms (FPFH), which capture local information about surface curvature and orientation [26,27], and which can be encoded using Bag-of-Words (BoW) models to facilitate their use in statistical classifiers [28,29,30]. These feature vectors have been successfully integrated with supervised learning algorithms such as Support Vector Machines (SVM) [31,32], neural networks [25,33,34], and Bayesian networks [35], among others. The combination of these methods has proven effective for 3D object classification, including vehicle recognition, in controlled or simulated environments; however, their implementation in dynamic settings such as real-world toll plazas requires additional adaptations to account for variations in vehicle geometry, movement, multiple lanes, and real-time inference requirements. These limitations underscore the need to design processing pipelines capable of operating autonomously in complex operational environments while complying with each jurisdiction’s regulatory and technical standards.
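To make the descriptor-encoding step concrete, the following minimal sketch shows how a variable-size set of local descriptors can be mapped to a fixed-length Bag-of-Words histogram that a statistical classifier can consume. It assumes a codebook that has already been learned (for example, by k-means clustering). The function names, the toy two-dimensional descriptors, and the codebook values are illustrative assumptions; FPFH descriptors as implemented in PCL have 33 dimensions.

```python
from typing import List, Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_codeword(descriptor: Sequence[float],
                     codebook: Sequence[Sequence[float]]) -> int:
    """Index of the codebook entry closest to the descriptor."""
    return min(range(len(codebook)),
               key=lambda i: squared_distance(descriptor, codebook[i]))


def bow_histogram(descriptors: Sequence[Sequence[float]],
                  codebook: Sequence[Sequence[float]]) -> List[float]:
    """Normalized histogram of codeword assignments (the BoW vector)."""
    counts = [0] * len(codebook)
    for descriptor in descriptors:
        counts[nearest_codeword(descriptor, codebook)] += 1
    total = sum(counts)
    if total == 0:
        return [0.0] * len(codebook)
    return [count / total for count in counts]


# Toy data (hypothetical): three codewords and four local descriptors.
codebook = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
descriptors = [(0.1, -0.1), (0.9, 1.2), (1.1, 0.8), (4.0, 6.0)]
histogram = bow_histogram(descriptors, codebook)
```

Because the histogram length equals the codebook size regardless of how many keypoints a vehicle produces, vehicles of very different sizes yield feature vectors of the same dimension, which is what allows an SVM to be trained on them.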

Despite the growing global interest in LiDAR technologies applied to vehicle monitoring, their adoption in developing countries remains limited due to budget constraints, deficiencies in technological infrastructure, and the absence of public policies promoting road system digital modernization. In the case of Colombia, the national highway network includes more than 140 toll stations operated by public agencies and private concessions, which apply tariff schemes defined by regulations issued by the Instituto Nacional de Vías (INVIAS) and the Agencia Nacional de Infraestructura (ANI) [36,37]. These regulations classify vehicles by vehicle type, axle count, gross vehicle weight, and specific usage, such as distinguishing between buses and trucks with identical axle configurations. This multifactor classification criterion adds notable complexity to the automation process, as it requires detection of lifted axles, evaluation of physical dimensions, and contextual interpretation of vehicle type.

Most Colombian tolls still rely on mechanical counting mechanisms or manual supervision, limiting scalability, traceability, and integration with modern traffic management systems. This situation highlights a persistent gap between technological advances and their implementation in real-world infrastructure, underscoring the need for systems that respond to local technical, regulatory, and operational conditions. This article contributes a replicable and field-tested architecture for automated vehicle counting and classification in toll plazas, tailored to the axle-based tolling model used in Colombia. The proposed system integrates 2D LiDAR sensors, video cameras, and Doppler radar with point cloud processing and supervised learning algorithms.

Unlike most academic proposals, the system was deployed and validated under long-term operational conditions, demonstrating its practical feasibility. It complies with current Colombian toll classification regulations and supports the development of robust, scalable, and non-intrusive ITS technologies capable of real-time operation. Moreover, the system produces structured mobility data that can inform oversight processes and serve as input to transport system models, laying the groundwork for data-driven digital transformation in toll collection and infrastructure planning.

The remainder of this paper is organized as follows: Section 2 reviews the state of the art in vehicle classification and LiDAR technologies; Section 3 describes the system architecture and the data processing methodology; Section 4 presents the experimental results obtained in real-world tests; and Section 5 outlines the conclusions and future lines of work.

2. Related Work

Automatic vehicle classification is essential in ITS, supporting toll collection, infrastructure management, and logistics planning. In recent years, LiDAR has emerged as a reliable and non-intrusive alternative to traditional sensors such as inductive loops, piezoelectric strips, and vision systems affected by lighting. Its ability to generate detailed 3D point clouds enables precise geometric profiling of moving vehicles, paving the way for advanced classification systems that combine 3D data processing with machine learning to perform robustly under real traffic conditions.

Several technologies have been developed for automated vehicle detection and classification, each with distinct strengths, limitations, and deployment requirements. Table 1 presents a comparative analysis of the most relevant approaches currently used in toll monitoring and ITS, including inductive loops, piezoelectric sensors, video-based methods, and LiDAR-based solutions. These technologies vary in terms of intrusiveness, resilience to environmental conditions, suitability for axle-based classification, and real-time performance.

Table 1.

Comparative analysis of vehicle detection and classification technologies.

Intrusive systems such as inductive loops and piezoelectric sensors are widely used in permanent installations due to their robustness and accuracy in detecting axle counts. However, they require interventions on the road surface, which leads to higher installation and maintenance costs, as well as limited scalability [10]. In contrast, camera-based systems combined with deep learning architectures like YOLO offer non-intrusive installation and good classification by vehicle type, but they suffer from reduced performance in low-light or adverse weather conditions and are not designed for axle-based classification [42].

LiDAR-based approaches strike a balance between non-intrusiveness and structural accuracy. The geometry-based method proposed in this work enables axle-level classification using LiDAR hardware and lightweight algorithms such as FPFH feature extraction and BoW-SVM classification. While deep learning architectures like PointNet or VoxelNet may achieve higher classification power in complex 3D data, they require large training datasets and substantial computational resources [44]. Our system offers a practical, real-time solution tested in operational toll environments, with modularity and scalability as key benefits. This comparison reinforces the suitability of LiDAR-based solutions for toll supervision scenarios, particularly in countries with axle-based tolling policies, including Colombia.

Furthermore, Table 2 summarizes the main methodological and operational characteristics of some studies on vehicle classification using LiDAR sensors and complementary technologies. It reveals an evolution from geometric descriptors to machine learning, alongside increasing LiDAR adoption. However, it also highlights ongoing challenges, including limited validation under real-world conditions, low class diversity, the absence of essential functionalities, such as axle counting or license plate recognition, and weak alignment with regulatory standards. The heterogeneity in sensor configurations further underscores the need for robust and modular solutions tailored to complex environments such as toll stations.

Table 2.

Summary of related works using LiDAR for vehicle classification or axle counting.

The “Sensors/Data source” and “Sensor position” columns reflect the diversity of data acquisition technologies and sensor configurations across the reviewed studies. While all approaches employ LiDAR sensors as the primary data source, their deployment varies widely, from single-channel overhead scanning setups (e.g., [16,17,32,46]) to lateral configurations (e.g., [47,48]), as well as hybrid architectures incorporating multiple viewpoints (e.g., [49,50]). Some studies, such as [47], also integrate camera-based visual data, leveraging sensor fusion techniques to enhance the feature space and compensate for modality-specific limitations. This variation in sensor configuration highlights the crucial role of geometric system design in vehicle classification. The performance of key operations, such as contour extraction, axle counting, and structural profiling, depends on factors such as sensor placement, scan angle, and field of view. Lateral setups often yield richer detail for axle detection, while overhead configurations support lane-based segmentation but may suffer from occlusions. These architectural choices directly affect data quality, algorithm accuracy, and system scalability in real-world deployments like toll stations.

Axle counting is another critical functionality, particularly in regulatory contexts where toll categories depend on the number of axles rather than just size or gross weight. Despite its importance, the “Axle counting” column shows that only a minority of studies explicitly implement this feature, notably [48,49]. These works deploy targeted geometric strategies and assignment algorithms to detect wheel positions and infer axle configurations. However, most studies focus solely on general vehicle classification, omitting axle-related attributes. This omission significantly reduces their applicability in operational tolling systems, especially in countries like Colombia, where axle count determines tariff class. Liftable or non-standard axle arrangements, for instance, require detailed structural analysis that basic morphological classification methods cannot resolve. These limitations highlight the need for classification architectures incorporating flexible, high-precision axle-counting modules.

The “Vehicle image/Plate detection” column reveals that only three studies, including the system proposed in this paper, report using vehicle images. However, only the present work integrates license plate detection as a system feature. For example, ref. [32] captures broad road segments for point cloud generation, and ref. [47] uses video for speed estimation, but neither employs image data for vehicle identification. Including license plate detection is a significant advantage, enabling hybrid validation mechanisms that enhance system traceability and regulatory compliance. Additionally, by linking classification results to license plates, the system supports user segmentation and enables differentiated commercial campaigns, such as targeted discounts and loyalty programs.

The “Classification” and “Categories” columns reveal substantial heterogeneity in the taxonomic schemes across the reviewed studies. While some works rely on simplified class structures with just 2 to 6 categories (e.g., [16,32,46,50]), others, such as [17,47], and the present study, implement more granular taxonomies with up to eight or nine classes. However, only the proposed system adheres explicitly to national regulatory standards. This alignment enables direct integration into toll enforcement frameworks, where tariff assignment depends on detailed vehicle categorization. The lack of standardization in many prior studies is a limitation, especially for deployment in regulatory environments. Generic taxonomies such as “car” or “truck” may be adequate in experimental contexts but are insufficient where vehicle type directly influences pricing, enforcement, or compliance. Using an eight-class model aligned with Colombian regulations in the current study ensures legal compatibility, supports traceability, and enables interoperability with national ITS infrastructure.

The “Classification accuracy” and “Classification algorithms” columns show that reported accuracy levels in the literature range from to . Studies using more advanced methods, such as deep neural networks or SVMs, generally achieve higher performance (e.g., [23,32,50]). The system proposed in this article reaches an overall classification accuracy of , with powerful results in the classification of heavy vehicles, achieving accuracy in categories involving vehicles with three or more axles. However, differences in classification schemes and dataset sizes across studies limit the possibility of direct comparisons.

The classification algorithms employed also reflect a methodological evolution. Early works relied on heuristic rules or basic linear classifiers, whereas recent studies incorporate statistical and machine learning techniques. This study’s use of SVM reflects this shift, effectively balancing classification accuracy, generalization capacity, and computational efficiency.

The “Feature extraction” column shows a similar transition. Early works relied on global geometric descriptors, such as length, height, or width [46,50]. In contrast, recent contributions incorporate more expressive 3D descriptors like VFH (Viewpoint Feature Histogram), SHOT (Signature of Histograms of Orientations), and FPFH [16,32,47]. These local descriptors capture fine-grained surface information and are more robust to occlusions and noise. The present study distinguishes itself by combining global geometric features (e.g., vehicle length, height) and local shape descriptors, enhancing the system’s ability to differentiate between visually similar classes.

The “Samples” column reveals significant variation in dataset sizes, ranging from as few as 65 labeled samples in [49] to over 44,000 objects in the present work. This disparity has profound implications for model training, especially regarding class imbalance and generalization capacity. Smaller datasets often fail to capture the diversity needed to train robust classifiers. In contrast, the large, empirically curated dataset used in the current study supports both statistical validity and operational reliability, making the resulting model more transferable to complex deployment environments such as toll plazas.

Despite notable advances, the state of the art in LiDAR-based vehicle classification still faces critical limitations that hinder its direct application in real-world operational settings. Many systems are validated under controlled conditions, limiting their reliability in high-demand environments like toll plazas, where real-time accuracy under variable conditions is essential. Widely used datasets, such as KITTI [51], nuScenes [20], and ZPVehicles [19], are not tailored to classification schemes based on axle count, usage, or gross weight, requirements standard in countries like Colombia, forcing reliance on narrow proprietary datasets. Deep learning models, though accurate, demand high computational resources, posing challenges for edge deployment in regions with limited infrastructure. Additionally, most systems rely on generic labels like “light” or “heavy” vehicles, which are insufficient for automated tolling or tariff enforcement tasks that require alignment with local classification standards.

In the Latin American context, the development of the SSICAV system in Colombia, documented in the thesis underlying this article, offers a comprehensive and robust solution tailored to the operational realities of the country’s road infrastructure. The multisensor architecture integrates 2D LiDAR, Doppler radars, and video cameras in a processing pipeline that includes distance and statistical filtering, RANSAC-based segmentation, angular correction, and the extraction of geometric attributes and FPFH descriptors. The resulting feature vectors feed into an SVM trained on real-world field data. The system enables automatic classification into eight vehicle categories aligned with official standards from Colombia’s INVIAS and ANI, and it is establishing itself as a pioneering model in the region and a potential reference for large-scale national deployment.
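As an illustration of the statistical filtering step named above, the sketch below removes points whose mean distance to their nearest neighbors is unusually large. It follows the general idea behind statistical outlier removal filters and is not the system's own code; the function names, the brute-force neighbor search, and the parameter values `k` and `std_mul` are assumptions chosen for readability.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def mean_knn_distance(points: Sequence[Point], index: int, k: int) -> float:
    """Mean distance from points[index] to its k nearest neighbors."""
    p = points[index]
    distances = sorted(
        math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))
        for j, q in enumerate(points) if j != index
    )
    nearest = distances[:k]
    return sum(nearest) / len(nearest)


def statistical_outlier_removal(points: Sequence[Point], k: int = 3,
                                std_mul: float = 1.0) -> List[Point]:
    """Keep points whose mean k-NN distance is at most mean + std_mul * std."""
    if len(points) <= k:
        return list(points)  # too few points to estimate statistics
    mean_dists = [mean_knn_distance(points, i, k) for i in range(len(points))]
    mu = sum(mean_dists) / len(mean_dists)
    sigma = math.sqrt(sum((d - mu) ** 2 for d in mean_dists) / len(mean_dists))
    threshold = mu + std_mul * sigma
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


# Toy cloud (hypothetical): a compact cluster plus one isolated point.
cluster = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0),
           (0.1, 0.1, 0.0), (0.05, 0.05, 0.0)]
stray = (10.0, 10.0, 10.0)
filtered = statistical_outlier_removal(cluster + [stray])
```

The brute-force search is quadratic in the number of points; a production pipeline would normally rely on a spatial index such as a k-d tree.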

In summary, the academic literature shows steady progress in using LiDAR sensors for vehicle classification, with a clear shift from rule-based models and geometric descriptors toward 3D neural architectures. However, researchers have yet to bridge the gap between experimental developments and practical deployment in real-world tolling environments. This review underscores the need for systems such as the one proposed in this study: solutions that combine geometric precision, computational efficiency, compliance with national standards, and empirical validation under real operational conditions.

3. Materials and Methods

This section presents a real-time non-intrusive system for vehicle counting and classification at toll stations on national highways. The solution integrates LiDAR-based 3D sensing, machine learning, and computer vision to classify vehicles into eight predefined categories (see Table 3), while performing license plate recognition, axle counting, lifted axle detection, and image recording. Designed for continuous 24/7 operation, the system enables flexible deployment and supports periodic retraining with newly labeled data to improve classification performance as more information becomes available.

Table 3.

Vehicle classification categories.

3.1. Hardware Description

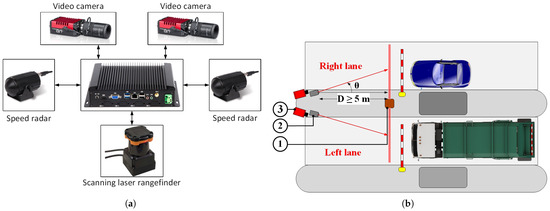

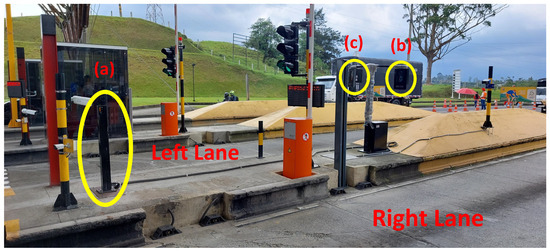

The system uses a robust physical platform composed of a Hokuyo UTM-30LX 2D LiDAR [52], two Stalker stationary speed radars [53], and two Prosilica GT1290C video cameras [54], all connected to a fanless industrial mini-PC housed in an aluminum case, as shown in Figure 1a. This setup enables simultaneous monitoring of two adjacent toll lanes. As illustrated in Figure 1b, each lane is equipped with a dedicated camera and speed radar, while a single LiDAR unit covers both lanes with its wide scanning angle.

Figure 1.

(a) Connection diagram of the vehicle counting and classification system. (b) Spatial arrangement of sensors at a toll station for two adjacent lanes. (1) Scanning laser rangefinder. (2) Speed radars. (3) Video cameras [55].

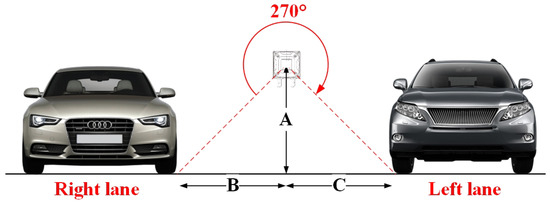

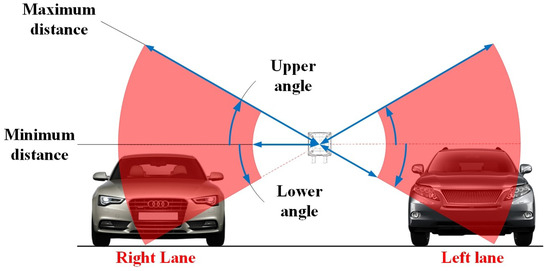

The laser sensor is positioned between two adjacent lanes, directing its beams toward the sides of passing vehicles to generate detailed 3D models of their lateral surfaces (see Figure 2). To ensure accurate data acquisition, the operator must keep distances B and C between m and m, ensuring that no objects obstruct the laser’s line of sight to either the vehicle or the ground. The installation height A should allow full coverage of the wheels. To maintain point cloud density, the system requires vehicle speeds to remain below 40 km/h; handling faster traffic conditions would require higher sampling-rate sensors. Continuous vehicle motion through the scanning zone is also essential to prevent data distortion from unexpected stops.
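The link between vehicle speed and point cloud density can be checked with simple arithmetic: each scan produces one vertical profile, so the longitudinal gap between consecutive profiles equals the distance the vehicle travels during one scan period. The sketch below uses the 40 scans per second stated for the scanner; the function names and the 4 m example vehicle are assumptions for illustration.

```python
def scan_line_spacing_m(speed_kmh: float, scans_per_second: float = 40.0) -> float:
    """Longitudinal distance between consecutive scan profiles, in meters."""
    if scans_per_second <= 0:
        raise ValueError("scans_per_second must be positive")
    return (speed_kmh / 3.6) / scans_per_second


def scan_lines_over_vehicle(length_m: float, speed_kmh: float,
                            scans_per_second: float = 40.0) -> int:
    """Approximate number of profiles that fall on a vehicle of given length."""
    if speed_kmh <= 0:
        raise ValueError("speed_kmh must be positive")
    spacing = scan_line_spacing_m(speed_kmh, scans_per_second)
    return int(length_m / spacing) + 1


spacing_at_limit = scan_line_spacing_m(40.0)            # about 0.28 m
profiles_small_car = scan_lines_over_vehicle(4.0, 40.0)  # 4 m vehicle at 40 km/h
```

At the 40 km/h limit, consecutive profiles are roughly 0.28 m apart, so a 4 m vehicle is covered by about 15 profiles. Doubling the speed would halve that count, which is consistent with the need for a faster sensor at higher speeds.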

Figure 2.

Spatial arrangement of the scanning laser rangefinder [55].

Speed radars should be installed 5 to 10 m from the laser sensor and aligned toward the lane center, ensuring their beams intersect the laser’s field of view in the center of the lane (see Figure 1b). Visible-spectrum cameras capture images as vehicles cross the laser beam. The cameras should be positioned as perpendicular as possible to the plate surface, and operators must ensure proper focus to enable reliable license plate recognition. All components must be housed in weather-protected enclosures to ensure long-term durability, consistent performance, and interference-free data acquisition.

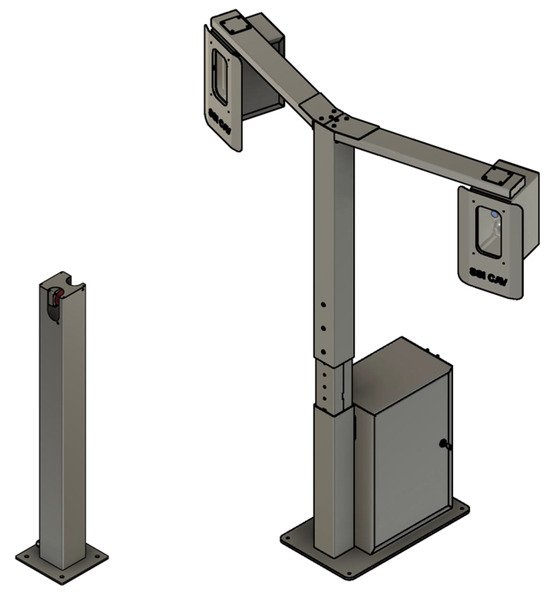

Design and Construction of the Physical Structure

The system’s physical structure ensures reliable performance under high-demand tolling conditions. Made from high-strength, corrosion-resistant steel, its modular design enables easy transport, installation, and reconfiguration. It includes a 1-meter galvanized pole for mounting the laser sensor, an IP65-rated industrial computer and electronics enclosure, and two upper arms with weatherproof housings for cameras and radars. Internal conduits and military-grade connectors protect wiring and ensure electrical stability. With cm adjustable height and angular alignment mechanisms, the CAD-modeled structure (prototyped in Figure 3) offers a durable and flexible platform for advanced vehicle detection and classification.

Figure 3.

Physical structure of the vehicle counting and classification system.

3.2. Software Description

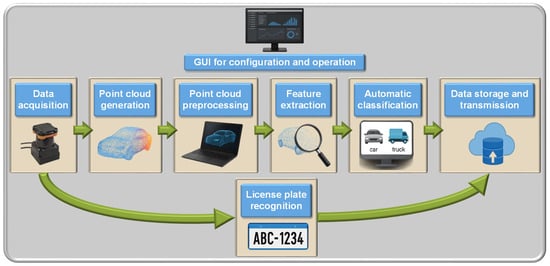

The system comprises interconnected software modules that process data from integrated sensors to generate 3D point clouds of passing vehicles. These are then analyzed and classified into predefined categories using advanced computer vision and pattern recognition techniques. It also includes a module for automatic license plate recognition to manage key vehicle information. Classification results and plate images are stored in structured formats and transmitted to the management system for efficient analysis and use. Figure 4 illustrates the system architecture, and Table 4 details the functional requirements. The software was developed in Python 3.6.9, using the Point Cloud Library (PCL) [56] v1.10.1 for point cloud processing. The following sections present the technical details of each module.

Figure 4.

General block diagram of the vehicle counting and classification system software.

Table 4.

Functional requirements of the vehicle counting and classification system software.

This section describes the architecture and functional modules of the proposed system, based on the structure presented in Figure 4. Each subsection from Section 3.2.1 to Section 3.2.7 corresponds to a specific component in the system’s workflow, including the graphical interface, data acquisition, preprocessing, vehicle segmentation, feature extraction, classification, and plate recognition.

It is worth noting that the Automatic License Plate Recognition (ALPR) module, described in Section 3.2.7, is part of the overall system but is operationally decoupled from the point cloud processing and classification pipeline. While the classification of vehicles is based on LiDAR data and trained with a dedicated dataset (detailed in Section 3.2.6), the ALPR module uses video imagery and a separate dataset, and its outputs are used primarily for record-keeping and traceability rather than influencing classification outcomes.

The organization of the subsections reflects both the logical flow of the system architecture and the functional independence between modules, facilitating clarity in presentation and alignment with the system’s real-world implementation.

3.2.1. Configuration and Processing Graphical Interface

The graphical user interface (GUI) facilitates intuitive and efficient interaction with the proposed system’s data acquisition and processing components. It functions as an integrated control panel for configuration and operational monitoring, allowing real-time management of system parameters according to the specific characteristics of each deployment scenario.

The GUI architecture consists of three main modules: “hardware configuration”, “software configuration”, and “operational control”. Both configuration modules must be completed before system activation so that the sensors are synchronized and the parallel processing threads are initialized correctly.

The “hardware configuration” module provides interfaces for assigning and validating serial communication ports for the LiDAR scanner, Doppler speed radars, and video cameras. It also allows users to associate sensors with specific toll lanes and define their operational ranges. Additional functionalities include real-time device status indicators, automatic hardware detection routines, and persistent error logging mechanisms.

The “software configuration” module allows fine-grained customization of algorithmic parameters. Key settings include the following: definition of the region of interest (ROI) through angular and distance thresholds for each lane (to enable valid sample selection and distance-based filtering); thresholds for statistical outlier removal; selection of radius parameters for surface normal estimation, keypoint detection, and FPFH computation; configuration of the clustering algorithm for BoW encoding; kernel selection for the support vector machine classifier; and activation of the automatic license plate recognition module. Furthermore, the user can specify storage paths for structured data logging and define host parameters for encrypted data transmission.
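The settings listed above could be grouped into a single configuration object, as in the following sketch. Every field name and default value here is a hypothetical placeholder, not a value taken from the deployed system, and the kernel names follow the convention used by common SVM libraries. The only check with a technical basis is the radius ordering: FPFH estimation is normally run with a larger search radius than the one used for surface normals.

```python
from typing import List, NamedTuple, Tuple


class LaneROI(NamedTuple):
    """Angular and distance thresholds that bound one lane's region of interest."""
    angle_min_deg: float
    angle_max_deg: float
    range_min_m: float
    range_max_m: float


class SoftwareConfig(NamedTuple):
    """Hypothetical container for the algorithmic settings (placeholder values)."""
    roi_by_lane: Tuple[LaneROI, LaneROI]
    outlier_mean_k: int = 20
    outlier_std_mul: float = 1.0
    normal_radius_m: float = 0.10
    keypoint_radius_m: float = 0.15
    fpfh_radius_m: float = 0.25
    bow_clusters: int = 100
    svm_kernel: str = "rbf"
    alpr_enabled: bool = True
    storage_path: str = "./records"
    host: str = "localhost"


def validate(config: SoftwareConfig) -> List[str]:
    """Return a list of human-readable problems; empty if the config is usable."""
    problems = []
    for lane, roi in enumerate(config.roi_by_lane):
        if roi.angle_min_deg >= roi.angle_max_deg:
            problems.append("lane %d: angular limits are inverted" % lane)
        if not 0 <= roi.range_min_m < roi.range_max_m:
            problems.append("lane %d: distance limits are inverted" % lane)
    if config.fpfh_radius_m <= config.normal_radius_m:
        problems.append("FPFH radius should exceed the normal-estimation radius")
    if config.svm_kernel not in ("linear", "poly", "rbf", "sigmoid"):
        problems.append("unknown SVM kernel: %s" % config.svm_kernel)
    return problems


config = SoftwareConfig(roi_by_lane=(LaneROI(-40.0, 80.0, 0.5, 4.0),
                                     LaneROI(100.0, 220.0, 0.5, 4.0)))
bad_config = config._replace(fpfh_radius_m=0.05, svm_kernel="cubic")
```

Validating the configuration before activation mirrors the requirement that both configuration modules be completed before the system starts.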

All GUI modules operate under a multithreaded execution model, in which each component runs in its own independent thread. This design ensures non-blocking user interactions and parallel data processing, optimizing computational performance and ensuring real-time system responsiveness, even under conditions of high traffic density.
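A common way to obtain this kind of non-blocking behavior is to connect the stages with thread-safe queues, as sketched below. This is a generic producer-consumer pattern offered under assumptions, not the system's actual code; the worker names and the dictionary layout of a data block are hypothetical.

```python
import queue
import threading
from typing import Dict, List, Tuple


def acquisition_worker(raw_blocks: List[Dict], out_queue: "queue.Queue") -> None:
    """Producer: push each acquired data block, then a sentinel to signal the end."""
    for block in raw_blocks:
        out_queue.put(block)
    out_queue.put(None)


def processing_worker(in_queue: "queue.Queue",
                      results: List[Tuple[str, int]]) -> None:
    """Consumer: process blocks as they arrive, without blocking the producer."""
    while True:
        block = in_queue.get()
        if block is None:
            break
        results.append((block["lane"], len(block["scans"])))


def run_pipeline(raw_blocks: List[Dict]) -> List[Tuple[str, int]]:
    """Run acquisition and processing in separate threads and collect results."""
    blocks_queue: "queue.Queue" = queue.Queue()
    results: List[Tuple[str, int]] = []
    producer = threading.Thread(target=acquisition_worker,
                                args=(raw_blocks, blocks_queue))
    consumer = threading.Thread(target=processing_worker,
                                args=(blocks_queue, results))
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return results


# Hypothetical data blocks: one per vehicle, tagged with its lane.
summary = run_pipeline([
    {"lane": "right", "scans": [1, 2, 3]},
    {"lane": "left", "scans": [1, 2]},
])
```

With a single producer and a single consumer, the queue preserves arrival order, so results come out in the order in which vehicles were acquired.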

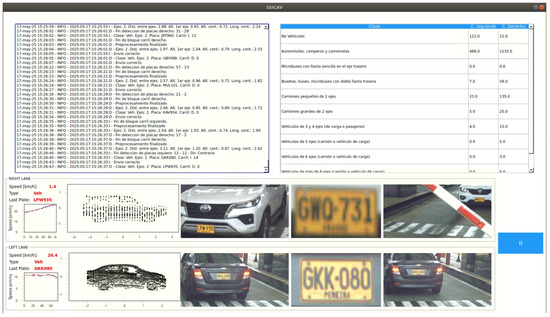

Figure 5 illustrates the GUI operational screen, enabling real-time system performance supervision. Key metrics, such as the number of vehicles processed, classification results, estimated vehicle speed, detected license plate, and the point cloud and photographic record of the latest vehicle for each lane, are updated dynamically. This comprehensive display provides high situational awareness and supports rapid decision-making in the field.

Figure 5.

Configuration and processing interface: operation screen.

The GUI fully complies with the functional requirements outlined in Table 4. It enables in situ system customization and robust interaction with all sensing and processing modules, contributing to its overall usability, adaptability, and scalability across heterogeneous operational environments.

3.2.2. Data Acquisition

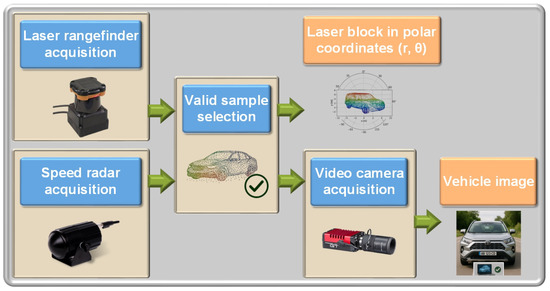

In the data acquisition stage, the system performs sensor configuration and data capture and determines whether the acquired data contains valid information corresponding to a vehicle object. Figure 6 shows the block diagram of the data acquisition module. The laser rangefinder interface uses the HokuyoAIST library [57,58], while communication with the speed sensors relies on the pySerial module, version [59]. Similarly, the system establishes communication with the cameras through the Vimba API provided by the manufacturer, Prosilica [60].

Figure 6.

Block diagram of the data acquisition module.

Valid Sample Selection

This process detects when a vehicle enters the laser sensor’s field of view and initiates a new laser data block that captures all beam measurements during the vehicle’s passage, excluding those associated with zero velocity. For each lane, the system defines an ROI as a conical area in polar coordinates, bounded by specific radial and angular limits (see Figure 7). The ROI excludes irrelevant surfaces such as the ground and nearby obstacles. Once the ROI is defined, the system continuously monitors objects within it and validates their movement using speed data. It initiates a new data block upon detecting motion and triggers image capture for license plate recognition. Point cloud generation begins once the object exits the ROI and the system has accumulated sufficient valid laser measurements.
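The selection logic described above can be sketched as a small loop over incoming scans. The ROI limits, the minimum number of beams (`min_hits`), and the minimum block size (`min_scans`) are hypothetical parameters introduced for illustration; the sketch only mirrors the stated behavior of opening a block on motion inside the ROI, skipping zero-velocity scans, and releasing the block once the object leaves.

```python
from typing import List, NamedTuple, Sequence, Tuple

Beam = Tuple[float, float]  # (angle in degrees, range in meters)


class PolarROI(NamedTuple):
    angle_min_deg: float
    angle_max_deg: float
    range_min_m: float
    range_max_m: float


def beams_in_roi(scan: Sequence[Beam], roi: PolarROI) -> int:
    """Count beams whose angle and range both fall inside the ROI."""
    return sum(
        1 for angle, rng in scan
        if roi.angle_min_deg <= angle <= roi.angle_max_deg
        and roi.range_min_m <= rng <= roi.range_max_m
    )


def collect_blocks(scans: Sequence[Sequence[Beam]], speeds_kmh: Sequence[float],
                   roi: PolarROI, min_hits: int = 3,
                   min_scans: int = 2) -> List[List[Sequence[Beam]]]:
    """Group consecutive scans of a moving object into data blocks."""
    blocks = []
    current = []
    for scan, speed in zip(scans, speeds_kmh):
        occupied = beams_in_roi(scan, roi) >= min_hits
        if occupied:
            if speed > 0:          # scans taken at zero velocity are excluded
                current.append(scan)
        elif current:              # object has just left the ROI
            if len(current) >= min_scans:
                blocks.append(current)
            current = []
    return blocks                  # a block still open at the end is not released


roi = PolarROI(-20.0, 60.0, 1.0, 4.0)
empty = [(10.0, 8.0)]
vehicle = [(0.0, 2.0), (5.0, 2.0), (10.0, 2.1)]
scans = [empty, vehicle, vehicle, vehicle, empty, vehicle, empty]
speeds = [0.0, 10.0, 0.0, 12.0, 0.0, 5.0, 0.0]
blocks = collect_blocks(scans, speeds, roi)
```

In this toy sequence, the first passage yields a block of two scans because the stationary scan is dropped, while the second passage is discarded for having too few valid scans.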

Figure 7.

Region of interest (ROI) of valid sample selection [55].
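The valid sample selection logic can be illustrated with a minimal sketch. The function names, the ROI tuple layout, and the `min_hits` threshold are hypothetical and are not taken from the deployed system; the sketch only shows the idea of testing polar measurements against radial and angular limits and gating the recording on a nonzero speed reading.

```python
def in_roi(r, theta_deg, roi):
    """Return True when a polar measurement lies inside the lane's ROI.

    roi = (r_min, r_max, theta_min_deg, theta_max_deg); layout is an assumption.
    """
    r_min, r_max, th_min, th_max = roi
    return r_min <= r <= r_max and th_min <= theta_deg <= th_max


def should_record(scan, speed, roi, min_hits=5):
    """Decide whether the current scan belongs to a moving vehicle.

    scan: iterable of (range_m, angle_deg) beam measurements.
    speed: speed reported by the speed sensor for this lane.
    min_hits: hypothetical number of in-ROI beams needed to accept an object.
    """
    hits = sum(1 for r, th in scan if in_roi(r, th, roi))
    # Measurements taken at zero velocity are excluded from the data block.
    return hits >= min_hits and abs(speed) > 0.0
```

A scan with enough beams inside the ROI is accepted only while the speed sensor reports motion, which mirrors the exclusion of zero-velocity measurements described above.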

3.2.3. Creation of the Point Cloud

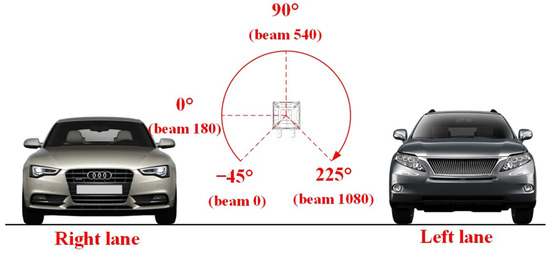

This stage processes the laser data block acquired in the previous step to generate a point cloud representation of the vehicle. The laser scanner operates on a polar coordinate plane in which is oriented horizontally toward the right lane (see Figure 8). It captures measurements across an angular range from to , covering both lanes. The scanner operates at 40 samples per second (SPS), completing a full rotation every 25 milliseconds. With an angular resolution of , each scan yields 1081 data points: 541 corresponding to the right lane and 540 to the left.

Figure 8.

Angular range of the laser rangefinder [55].

After receiving the laser data and the corresponding velocity vector, the system generates a 3D scene representation using a Cartesian coordinate system: Z represents height, X represents length, and Y represents width. Scan data in polar coordinates are converted to Cartesian coordinates using the Equation (1). Angular values for the right lane range from to , and for the left lane, from to .
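As an illustration of the polar-to-Cartesian step, the following sketch converts one beam measurement into the Y (width) and Z (height) coordinates of the scan plane. The sign and axis conventions used here (Y along the zero-angle direction, Z along the 90-degree direction, both in the sensor frame) are assumptions and may differ from those of Equation (1).

```python
import math


def polar_to_yz(r, theta_deg):
    """Convert a range/angle pair into (Y, Z) in the scan plane.

    Assumed convention: Y = r*cos(theta), Z = r*sin(theta), sensor at the origin.
    """
    theta = math.radians(theta_deg)
    return r * math.cos(theta), r * math.sin(theta)


def scan_to_yz(ranges, start_deg, step_deg):
    """Convert a full scan, given its first beam angle and angular step."""
    return [polar_to_yz(r, start_deg + i * step_deg) for i, r in enumerate(ranges)]
```

The X coordinate is not produced here because it depends on the vehicle speed rather than on the beam geometry.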

To compute the X coordinate of each point in the cloud, the system uses the vehicle’s speed vector and processes it to reduce abrupt variations caused by real-time measurement noise. This preprocessing applies a first-order Kalman filter and smoother [61,62,63,64], assuming constant velocity in a linear motion model.

Equation (2) defines the state transition equation shared by the Kalman filter and the smoother, together with the state observation equation. The state vector at time k comprises the vehicle’s position p and velocity v. The remaining terms are the state transition matrix, the process noise vector, the laser sampling interval, the observation vector at time k (the measured vehicle velocity), the observation (output) matrix, and the measurement noise vector.

The covariance matrices are defined according to the methodology described in [64], as shown in Equation (3), which specifies the process noise covariance matrix, the measurement noise covariance matrix, the initial state covariance matrix, and the model’s initial state vector, together with the vehicle’s velocity at the first sample.

Kalman filtering is a forward iterative prediction process that begins with the first sample of the speed vector. Equation (4) describes the filter in terms of the predicted state vector at time k, the predicted state covariance matrix, the estimated state covariance matrix, and the Kalman gain matrix. The estimated state vector includes the filtered vehicle speed.

The Kalman smoother then refines the filtered velocity through a backward iterative update that starts from the last estimated state vector and the last state covariance matrix computed by the Kalman filter. Equation (5) describes the smoother in terms of its gain, the smoothed state vector, and the smoothed state covariance matrix. The smoothed state vector includes the smoothed speed value.

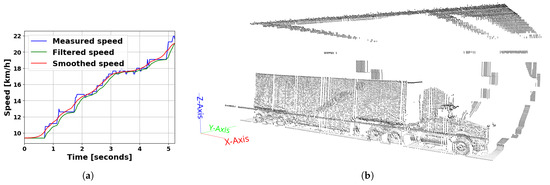

Figure 9a shows an example of the resulting smoothed speed profile. Equation (6) computes the longitudinal X coordinate of each scan from the previous X coordinate (initialized to 0) and the current smoothed speed.

Figure 9.

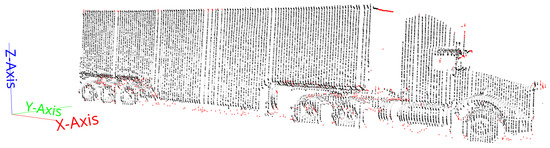

(a) Speed smoothing using Kalman filtering and smoothing. (b) Three-dimensional image of a raw point cloud [55].
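The speed smoothing and the longitudinal coordinate computation can be sketched as follows. This is a simplified scalar version, not the two-state model of Equations (2) to (5): because only the velocity is observed, the sketch filters the velocity component alone. The noise values `q`, `r`, and `p0` are hypothetical placeholders, and the backward pass uses the standard Rauch-Tung-Striebel form.

```python
def kalman_filter(z, q, r, p0):
    """Forward pass: scalar constant-velocity Kalman filter over speed samples z."""
    x_pred, p_pred, x_est, p_est = [], [], [], []
    x, p = z[0], p0  # initial state taken from the first speed sample
    for k, zk in enumerate(z):
        # Prediction: the speed is assumed constant; uncertainty grows by q.
        xp, pp = (x, p) if k == 0 else (x, p + q)
        gain = pp / (pp + r)
        x = xp + gain * (zk - xp)
        p = (1.0 - gain) * pp
        x_pred.append(xp)
        p_pred.append(pp)
        x_est.append(x)
        p_est.append(p)
    return x_pred, p_pred, x_est, p_est


def rts_smoother(x_pred, p_pred, x_est, p_est):
    """Backward pass: starts from the last filtered estimate."""
    x_s, p_s = x_est[:], p_est[:]
    for k in range(len(x_est) - 2, -1, -1):
        c = p_est[k] / p_pred[k + 1]  # smoother gain
        x_s[k] = x_est[k] + c * (x_s[k + 1] - x_pred[k + 1])
        p_s[k] = p_est[k] + c * c * (p_s[k + 1] - p_pred[k + 1])
    return x_s, p_s


def smooth_speed(z, q=0.01, r=1.0, p0=1.0):
    """Filter and smooth a speed vector (q, r, p0 are illustrative values)."""
    return rts_smoother(*kalman_filter(z, q, r, p0))[0]


def integrate_x(speeds, dt):
    """Accumulate X from the previous coordinate (initialized to 0) and the speed."""
    xs, x = [], 0.0
    for v in speeds:
        x += v * dt
        xs.append(x)
    return xs
```

With a 40 Hz scanner, `integrate_x(smooth_speed(raw_speeds), 0.025)` would assign one X value per scan, with speeds expressed in meters per second.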

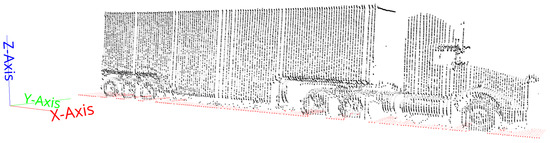

These transformations generate a 3D matrix that defines the vehicle’s point cloud. Figure 9b illustrates an example of a 3D point cloud. However, for vehicles exceeding 25 km/h, the fixed sampling rate of the LiDAR can generate low-resolution point clouds, with intervals between scans greater than 18 cm. To address this, linear interpolation is applied between successive scans when the average speed exceeds 25 km/h, which improves the point density for speeds up to 40 km/h. The following processing stages receive the generated point clouds.
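The relationship between speed and scan spacing follows directly from the fixed 40 Hz scan rate, and a short sketch makes the arithmetic explicit. The helper names are hypothetical, and inserting a single midpoint scan between consecutive scans is an assumption about how the linear interpolation is applied; it also assumes both scans list their beams in the same order.

```python
SCAN_RATE_HZ = 40.0  # scans per second, as stated for the laser scanner


def scan_spacing_cm(speed_kmh, scan_rate_hz=SCAN_RATE_HZ):
    """Distance travelled by the vehicle between two consecutive scans, in cm."""
    return speed_kmh / 3.6 / scan_rate_hz * 100.0


def interpolate_scans(scan_a, scan_b):
    """Midpoint scan between two consecutive scans of (x, y, z) points."""
    return [
        tuple((a + b) / 2.0 for a, b in zip(pa, pb))
        for pa, pb in zip(scan_a, scan_b)
    ]
```

Under these assumptions the spacing is roughly 17 cm at 25 km/h, the same order as the 18 cm figure quoted above, and about 28 cm at 40 km/h, which one interpolated scan would halve to about 14 cm.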

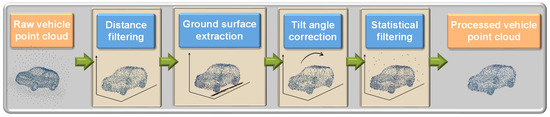

3.2.4. Point Cloud Preprocessing

The point cloud preprocessing stage aims to generate a refined vehicle representation by removing background elements, ground surface data, and noise, and correcting tilt distortions. Figure 10 shows the general block diagram of this process. The following subsections describe in detail the four stages illustrated in the figure.

Figure 10.

Block diagram of the point cloud preprocessing module.

Distance Filtering

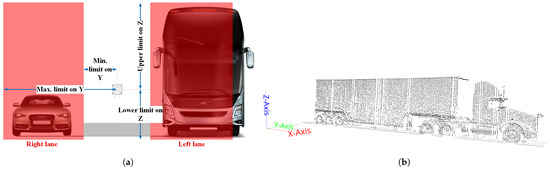

Distance-based filtering removes irrelevant data, such as pedestrians, background structures, other roadside equipment, or vehicles in adjacent lanes. The filter applies thresholds along height (Z-axis) and depth (Y-axis). As shown in Figure 11a, the red area represents the region of interest defined by user-configurable Z and Y boundaries.

Figure 11.

(a) Distance filtering region [55]. (b) Point cloud with distance filtering [55].

The user adjusts the threshold values according to the specific installation parameters of the laser sensor, using lane width and maximum vehicle height as the main criteria. Figure 11b shows the point cloud from Figure 9b after applying distance filtering. The resulting data isolates the target vehicle and the road surface within the corresponding lane, effectively removing background elements.

Ground Surface Extraction

A standard method for segmenting point clouds involves estimating parametric models, such as planes, to isolate structural elements. This stage applies a plane extraction algorithm based on RANSAC to identify and remove the road surface, following the implementation described in [26,27]. The ground is assumed to be the dominant plane, perpendicular to the Z axis and defined by Equation (7). The algorithm selects the plane with the highest number of inlier points within a predefined distance threshold (5 cm) and angular deviation () from the model, then removes those points from the point cloud. With a maximum of 5000 iterations, the method proved effective, as illustrated in Figure 12, where the extracted ground (in red) is separated from the vehicle (in black). Reference [55] describes the details of the implemented algorithm.

Figure 12.

Point cloud with ground surface extraction [55].

Tilt Angle Correction

The next step estimates the tilt angles between the ground plane and the XY plane, which result from any inclination of the laser sensor during installation. These angles are computed using Equation (8) from the coefficients (a, b, and c) of the ground plane model in Equation (7), since these coefficients represent the plane’s normal vector. One angle denotes the inclination around the X-axis and the other the inclination around the Y-axis.

This stage then rotates each point in the point cloud using the rotation matrices about the X and Y axes, as defined in Equation (9).
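A minimal sketch of the tilt correction is given below. The angle definitions and rotation order are assumptions chosen so that the ground normal (a, b, c) ends up aligned with the Z axis; the sign conventions of Equations (8) and (9) may differ.

```python
import math


def tilt_angles(a, b, c):
    """Angles (radians) about X and Y that align the normal (a, b, c) with +Z."""
    alpha = math.atan2(b, c)                  # inclination around the X-axis
    beta = math.atan2(-a, math.hypot(b, c))   # inclination around the Y-axis
    return alpha, beta


def rotate_point(p, alpha, beta):
    """Rotate a point about X by alpha, then about Y by beta."""
    x, y, z = p
    y, z = (y * math.cos(alpha) - z * math.sin(alpha),
            y * math.sin(alpha) + z * math.cos(alpha))
    x, z = (x * math.cos(beta) + z * math.sin(beta),
            -x * math.sin(beta) + z * math.cos(beta))
    return x, y, z


def correct_tilt(points, plane):
    """Apply the correction to every point; plane = (a, b, c) of the ground model."""
    alpha, beta = tilt_angles(*plane)
    return [rotate_point(p, alpha, beta) for p in points]
```

Applying `rotate_point` to the normal vector itself returns a vector along Z, which is a quick way to check that the two rotations cancel the estimated tilt.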

Statistical Filtering

Statistical filtering removes noise from the point cloud, such as isolated points caused by ambient dust or measurement uncertainty. The filter implementation uses the algorithms described in [26,65]. The method calculates the average distance from each point to its k nearest neighbors and retains only the points whose average distance lies within a range set by the global mean and standard deviation of all average distances, with the standard deviation scaled by a filtering factor. Reference [55] describes the details of the filtering algorithm implemented in this work. Equation (10) defines the resulting filtered cloud.

After several experiments with different point clouds, the nearest neighbor value and a filtering factor produced satisfactory results, effectively removing numerous outlier points in each cloud tested. Figure 13 shows the results with the filtered cloud in black and the removed points in red, highlighting the improvement in surface homogeneity and the removal of scattered noise.

Figure 13.

Point cloud with statistical filtering [55].
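The statistical filter described above can be sketched with a brute-force neighbor search. The parameter values are hypothetical, and the sketch keeps points whose mean neighbor distance does not exceed the global mean plus the scaled standard deviation, which is the one-sided form used in common implementations; a symmetric band would be a small change.

```python
import math
import statistics


def statistical_filter(points, k=8, alpha=1.0):
    """Remove points whose mean distance to their k nearest neighbors is too large.

    k and alpha are illustrative values; the cloud must hold more than k points.
    """
    mean_dist = []
    for i, p in enumerate(points):
        # Brute-force search; a k-d tree would be used for real point clouds.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dist.append(sum(dists[:k]) / k)
    mu = statistics.fmean(mean_dist)
    sigma = statistics.pstdev(mean_dist)
    threshold = mu + alpha * sigma
    return [p for p, d in zip(points, mean_dist) if d <= threshold]
```

The quadratic cost of the neighbor search is acceptable only for small examples such as this one.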

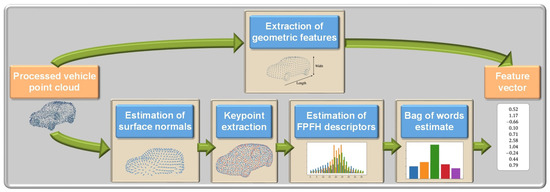

3.2.5. Feature Extraction

The next step involves extracting relevant features from the point cloud, which serve as input to the classifier described in Section 3.2.6, whose objective is to determine the vehicle type automatically. Figure 14 shows a general diagram of the feature extraction process. This stage has two main goals: to reduce the volume of data processed in subsequent stages and to enhance classification efficiency. The Cartesian coordinates of thousands of points offer limited discriminative power and are computationally expensive to process. The feature types extracted to address this are as follows: (1) global geometric properties of the vehicle (e.g., height, length); (2) a BoW representation derived from FPFH, a local 3D descriptor computed using surface normals and keypoints from the point cloud. The following subsections describe the extraction process for each feature type in detail.

Figure 14.

Block diagram of the feature extraction module.

Estimation of Surface Normals

Three-dimensional point clouds from range sensors capture sampled surfaces of real-world objects but lack information about surface orientation and curvature. To recover this geometric context for subsequent analysis, surface normal estimation is required. Extracting surface normals is a computationally efficient and robust technique, particularly effective against discontinuities in raw range data [66,67,68].

The surface normal extraction follows the algorithms described in [26], which employ Principal Component Analysis (PCA) to estimate surface normals by fitting a tangent plane to the local surface around each point of interest. This yields a least-squares approximation of a plane in the neighborhood of the query point and reduces the normal estimation problem to the eigenvalue and eigenvector analysis of the covariance matrix computed from the point’s local neighborhood.

Equation (11) shows the covariance matrix computation for a query point, where k is the number of neighboring points considered within a defined radius r, and the 3D centroid of this neighborhood serves as the reference. The covariance matrix’s eigenvalues and eigenvectors represent the local geometric structure: with the eigenvalues sorted in ascending order, the surface normal is the eigenvector associated with the smallest eigenvalue.

However, this method does not determine the sign of the normal, so its orientation remains undefined. The normals must comply with Equation (12) to ensure that they point consistently toward the viewpoint, i.e., the laser position. Here, the viewpoint is defined as a central point on the XZ plane of the vehicle’s point cloud, located at a distance equal to or greater than that of the LiDAR sensor.

As noted in [26,69], choosing an appropriate neighborhood scale, set by the radius r or the neighbor count k, is essential for accurate surface normal estimation and feature extraction in 3D point clouds. The optimal choice depends on task requirements and data characteristics, and may involve techniques such as automatic selection, sensitivity analysis, or empirical testing [24,70,71].

The neighborhood scale factor depends on the required level of geometric detail. Smaller values are needed to capture fine features like edge curvature, while larger values are suitable for less detailed applications. However, small scales must align with the point cloud resolution. For instance, in vehicle point clouds captured at 25 km/h, where point spacing along the X-axis averages 18 cm, the radius r should be greater than that spacing.

Based on this, the neighborhood estimation used a radius of 36 cm, and the viewpoint was placed 5 meters away from the point cloud, perpendicular to the XZ plane and centered with respect to the scene.

Keypoint Extraction

The next step is to identify keypoints: stable, distinctive, and well-defined points within the point cloud. Selecting these reduces the data volume for classification and, when combined with local 3D descriptors, enables a compact and informative representation of the original point cloud [72].

Standard keypoint detectors include voxel sampling, uniform sampling [73], Harris3D [74], SURF 3D [75], NARF [76], and ISS [77]. This work adopts the uniform sampling method, known for its efficiency, repeatability, and low computational cost [73]. The approach subdivides the point cloud into 3D voxels of a predefined radius and selects the point closest to each voxel’s center as a keypoint.

Since wheels are key features for vehicle classification and typically exceed 60 cm in diameter, a voxel radius of 10 cm was chosen for keypoint extraction. This value preserves essential structural details while reducing the number of points by over .
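The uniform sampling step can be sketched with a dictionary keyed by voxel index. Treating the 10 cm "voxel radius" as the voxel edge length is an assumption of this sketch, as is the function name.

```python
import math


def uniform_sampling(points, leaf=0.10):
    """Keep, for each occupied voxel, the point closest to the voxel center.

    leaf: voxel edge length in meters (assumed interpretation of the radius).
    """
    best = {}
    for p in points:
        idx = tuple(math.floor(c / leaf) for c in p)
        center = tuple((i + 0.5) * leaf for i in idx)
        d = math.dist(p, center)
        if idx not in best or d < best[idx][0]:
            best[idx] = (d, p)
    return [p for _, p in best.values()]
```

Because each occupied voxel contributes exactly one keypoint, the reduction ratio depends on how many raw points fall into each 10 cm cell.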

Estimation of FPFH Descriptors

The original format of a point cloud provides Cartesian coordinates relative to the sensor’s origin. However, this raw representation can lead to high ambiguity in applications such as automatic vehicle counting and classification, where a system must compare multiple point sets. To address this, the system uses local descriptors to capture the geometric structure around keypoints, enabling more reliable feature comparison. These descriptors must provide a compact, robust, and invariant representation, resilient to noise and occlusions [78].

Under this definition, the surface normals estimated for the keypoints, both obtained as described earlier in Section 3.2.5, already qualify as local descriptors, as they capture geometric information from the neighborhood of each point of interest. However, the geometric detail they provide is limited for the level of classification required. To address this, a more advanced local descriptor called FPFH [78] was selected, which builds upon the information provided by surface normals.

The feature extraction method used for the FPFH descriptor, presented in [78], is based on a simplified version of the original Point Feature Histogram (PFH) algorithm [79,80]. This approach significantly reduces the original method’s computational complexity, making it suitable for real-time applications while preserving most of PFH’s descriptive power. The method calculates the FPFH descriptor by analyzing the angular relationships between surface normals at a set of keypoints and their neighbors [26].

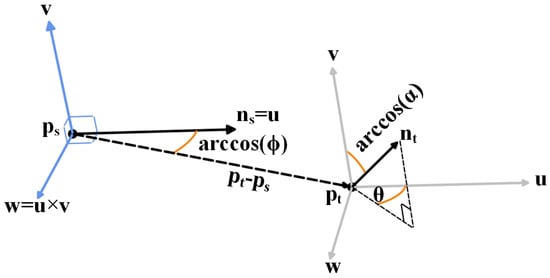

The process begins by estimating angular features between a keypoint and its k nearest neighbors within a specified radius r. For each pair formed by a keypoint and its j-th neighbor, the method defines a Darboux reference frame at one of the two points, as shown in Figure 15, and computes a tuple of three angular features in that frame. These angular tuples represent the relative geometry between pairs of surface points and reduce the feature space from 12 dimensions (i.e., the x, y, and z coordinates and normals of the keypoint and its j-th neighbor) to 3.

Figure 15.

Graphical representation of the Darboux reference frame.
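The angular tuple computed in the Darboux frame can be sketched as follows, using the formulation commonly given for PFH and FPFH: the frame is anchored at the source point with u equal to its normal. The function names are hypothetical, normals are assumed to be unit vectors, and the degenerate case where the normal is parallel to the line joining the points is rejected rather than handled.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def pair_features(p_s, n_s, p_t, n_t):
    """Return (alpha, phi, theta) for a source/target pair with unit normals."""
    d = _sub(p_t, p_s)
    dist = math.sqrt(_dot(d, d))
    d = tuple(c / dist for c in d)
    u = n_s
    v = _cross(u, d)
    norm_v = math.sqrt(_dot(v, v))
    if norm_v == 0.0:
        raise ValueError("normal is parallel to the line joining the points")
    v = tuple(c / norm_v for c in v)
    w = _cross(u, v)
    alpha = _dot(v, n_t)
    phi = _dot(u, d)
    theta = math.atan2(_dot(w, n_t), _dot(u, n_t))
    return alpha, phi, theta


def bin_index(value, lo, hi, bins=11):
    """Map a feature value in [lo, hi] to one of the histogram bins."""
    i = int((value - lo) / (hi - lo) * bins)
    return min(max(i, 0), bins - 1)
```

Two points on the same flat surface with parallel normals yield (0, 0, 0), which is why keypoints on planar regions produce similar histograms.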

Unlike PFH, which calculates all possible point-pair interactions in the neighborhood, FPFH limits computations to keypoint-neighbor pairs, offering a substantial speedup. These angular values are then grouped into histograms with 11 bins per dimension, producing a Simplified PFH (SPFH) for each keypoint. In a final step, the method weights the SPFH values of the neighboring points and aggregates them to form the complete FPFH descriptor, as expressed in Equation (13), where each neighbor’s contribution is weighted according to its distance from the query point.

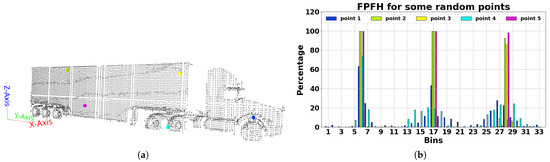

The final output of this stage is a set of 33 normalized values (ranging from 0 to 1, or 0% to 100%) for each keypoint in the point cloud. Figure 16 displays the histograms of five randomly selected keypoints from a six-axle truck point cloud. The figure shows a clear similarity between points 2 (green), 3 (yellow), and 5 (magenta), all located on the flat cargo surface parallel to the XZ plane. In contrast, points 1 (blue) and 4 (cyan), located respectively on the fender and a tire, exhibit distinct histogram shapes.

Figure 16.

(a) Point cloud with five random points highlighted to illustrate the FPFH. (b) FPFH for the five random points in the point cloud.

To determine the radius r used in the computation of the FPFH descriptor, the system adopts the hierarchical neighborhood strategy proposed by Rusu [26], which relies on a dual-ring approach. This method defines two distinct radii, the second larger than the first, to compute two separate layers of feature representations for each keypoint. The first layer captures surface normals using the smaller radius, as described in Section 3.2.5, while the second computes the FPFH features using the larger radius. In this implementation, the system sets the surface normal radius to 36 cm and the FPFH radius to 43 cm (a factor of roughly 1.2) to ensure that feature extraction remains within the scale of relevant vehicle structures such as wheels.

Bag-of-Words Estimate

Three-dimensional local descriptors capture distinctive geometric features within point clouds, offering robustness and adaptability for object analysis and recognition tasks [28]. However, their quantity varies with point cloud size, often ranging from hundreds to thousands, which introduces inconsistency across different vehicles and complicates direct comparison and classification [81]. Consequently, a BoW model is used to standardize the representation [28,29,30]. This approach clusters similar local descriptors into “visual words” or “bags,” yielding a compact, fixed-length vector suitable for classification. The method proposed in [82,83,84,85,86,87] proceeds as follows:

- A visual dictionary is first constructed by clustering FPFH descriptors extracted from a training dataset using methods like k-means.

- Each descriptor is assigned to its nearest visual word based on similarity to cluster centroids.

- Finally, for each point cloud, the frequency of occurrence of each visual word is counted, producing a BoW histogram that summarizes the distribution of local geometric features.
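The assignment and counting steps listed above can be sketched as follows, assuming the visual dictionary (the cluster centroids) has already been learned from the training descriptors. The function name is hypothetical, and normalizing the counts by the number of descriptors is an assumption made so that the histogram values fall between 0 and 1.

```python
import math
from collections import Counter


def bow_histogram(descriptors, vocabulary):
    """Build a normalized bag-of-words histogram for one point cloud.

    descriptors: list of local descriptors (e.g., 33-value FPFH vectors).
    vocabulary: list of cluster centroids with the same dimensionality.
    """
    words = range(len(vocabulary))
    counts = Counter(
        min(words, key=lambda i: math.dist(d, vocabulary[i]))
        for d in descriptors
    )
    total = len(descriptors)
    return [counts[i] / total for i in words]
```

The output length equals the dictionary size regardless of how many keypoints the vehicle produced, which is what makes vehicles of different sizes directly comparable.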

The number of visual words (or clusters) depends on the application and the dataset’s characteristics [81]. This value is fixed and must be large enough to capture meaningful variations while avoiding overfitting to noise [88]. The optimal number is selected experimentally to balance the representation’s discriminative power and generalization capacity [84]. Section 4 (Results) evaluates different visual dictionary sizes to analyze their impact on classification performance.

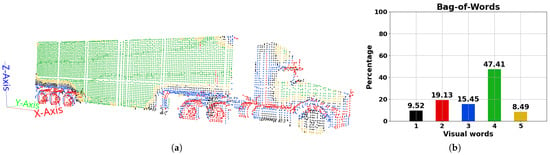

The final output of this stage is a set of N values ranging from 0 to 1 (0% to 100%) for each point cloud, where N represents the number of visual words. Figure 17 shows a histogram (right) generated using five visual words for a single point cloud, and a visualization (left) of the spatial distribution of keypoints assigned to each word across the entire vehicle point cloud.

Figure 17.

(a) Point cloud with the keypoints assigned to each visual word differentiated with colors. (b) BoW for the point cloud.

The system constructs the visual vocabulary using the Mini-Batch K-means algorithm, which improves computational efficiency by processing small random subsets in each iteration [89,90]. Although this method may slightly reduce clustering accuracy, it performs reliably in large-scale settings. Two initialization strategies were tested: K-means++, which improves convergence via probabilistic seeding, and random selection of initial centroids.

Extraction of Geometric Features

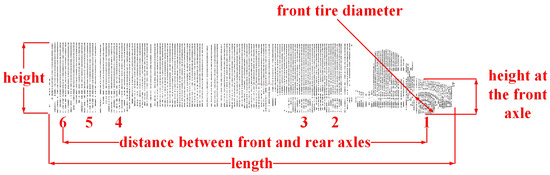

The system classifies vehicles into eight distinct categories, as shown in Table 3, based primarily on the number of wheels in contact with the ground and overall vehicle dimensions. The system uses a geometric feature extraction method to improve classification efficiency, following approaches similar to those in [46,91]. The geometric features extracted from the point cloud are illustrated in Figure 18 and include the following:

Figure 18.

Geometric features of the vehicle in the point cloud [55].

- Number of keypoints: The total number of extracted keypoints indicates the size of the point cloud.

- Vehicle length: Measured as the distance between the farthest points along the X-axis.

- Vehicle height: Defined as the vertical span of the point cloud along the Z-axis.

- Number of axles with tires on the ground: The method excludes lifted axles of freight vehicles with three or more axles.

- Distance between front and rear axles: Calculated as the distance along the X-axis between the centers of the front and rear wheels.

- Vehicle height at the front axle: The vertical distance along the Z-axis at the front axle’s location.

- Front tire diameter: Considering that most vehicles have tires of uniform size.

The output of this stage is a set of seven values that describe the geometric properties of each point cloud. Combined with the N visual words obtained in the previous section, they form the complete feature set used in the following classification stage.

However, due to the significant variance in the magnitudes of geometric features, feature scaling is necessary to ensure equal contribution to the classification model and improve performance [92]. Scaling enhances the convergence of optimization algorithms, reduces classification errors in scale-sensitive models like SVM and KNN, and improves consistency and interpretability, especially when dealing with heterogeneous real-world data.

Section 4 (Results) evaluates four scaling techniques using the Scikit-learn library [90] to assess their impact on classification performance. The Standard scaler removes the mean and scales the data to unit variance by dividing by the standard deviation. The MaxAbs scaler divides each value by the feature’s maximum absolute value, preserving sparsity. The MinMax scaler normalizes features to a fixed range (e.g., [0, 1]). The Robust scaler subtracts the median and scales by the interquartile range (IQR), making it resilient to outliers.
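The four scaling rules can be written out for a single feature column to make their differences concrete. These helpers are standard-library stand-ins for illustration only; the paper uses the Scikit-learn scalers, and the function names here are hypothetical. A constant feature would cause a division by zero in this sketch.

```python
import statistics


def standard_scale(values):
    """Subtract the mean and divide by the (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


def maxabs_scale(values):
    """Divide by the largest absolute value, so zeros stay at zero."""
    peak = max(abs(v) for v in values)
    return [v / peak for v in values]


def minmax_scale(values, lo=0.0, hi=1.0):
    """Map the observed minimum and maximum onto [lo, hi]."""
    v_min, v_max = min(values), max(values)
    return [lo + (v - v_min) * (hi - lo) / (v_max - v_min) for v in values]


def robust_scale(values):
    """Subtract the median and divide by the interquartile range."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return [(v - median) / (q3 - q1) for v in values]
```

For a column such as vehicle length, a single very long outlier stretches the MinMax and MaxAbs outputs of all other samples toward zero, whereas the Robust scaler is largely unaffected because the median and IQR ignore the extremes.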

Axle Detection and Counting

As shown in Figure 18, the system can directly calculate the vehicle’s length and height as the difference between the minimum and maximum coordinates along the X and Z axes, respectively. Estimating the remaining geometric features requires identifying the positions of the axles.

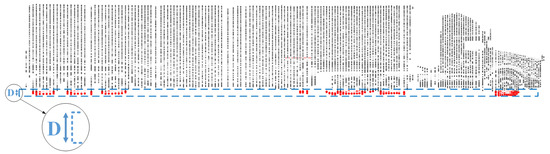

To achieve this, a horizontal slice of the point cloud is extracted at a height D above the ground, capturing only the lower section of the wheels while excluding the vehicle body (see Figure 19). Euclidean clustering is then applied to segment candidate axle regions, using parameters such as the minimum and maximum number of points per cluster and a search radius.

Figure 19.

Region of the tires in contact with the ground.

The method increases D when it detects three or more axles or when the vehicle height exceeds a height threshold; conversely, it reduces D if it detects fewer than two axles, repeating the process accordingly. The method validates clusters as axles only if they meet the following conditions:

- The height of the cluster must be at least of D; otherwise, the cluster may represent noise or another part of the vehicle.

- The cluster length (interpreted as wheel diameter) must exceed a minimum-length threshold to exclude small or fragmented clusters.

- The cluster’s minimum Z coordinate must lie within a tolerance of the ground level (i.e., the minimum Z of the entire cloud), ensuring that the axle is in contact with the road and not lifted.

Once the algorithm identifies valid axles, it determines how many are in contact with the ground, locates the positions of the first and last axles, and calculates derived characteristics such as vehicle height at the front axle, inter-axle distance, and wheel diameter.

Extensive testing led to selecting the following parameters: cm, points, points, cm, m, cm, and cm. Figure 20 shows an example of identified axle clusters.

Figure 20.

Clusters identified as vehicle axles.
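The axle detection idea can be sketched in a simplified form. This sketch replaces Euclidean clustering with a one-dimensional gap-based grouping along the X axis, omits the adaptive adjustment of D and the cluster-height check, and uses hypothetical parameter values throughout; it is meant only to show how a low slice, clustering, and the ground-contact test combine to exclude lifted axles.

```python
def detect_axles(points, slice_height=0.15, gap=0.10,
                 min_points=5, min_length=0.30, ground_tol=0.05):
    """Return the X positions of axles in contact with the ground.

    points: list of (x, y, z) in meters. All thresholds are illustrative.
    """
    ground = min(p[2] for p in points)  # ground level: minimum Z of the cloud
    low = sorted((p for p in points if p[2] - ground <= slice_height),
                 key=lambda p: p[0])

    # Group slice points whose X gap to the previous point is small.
    clusters, current = [], []
    for p in low:
        if current and p[0] - current[-1][0] > gap:
            clusters.append(current)
            current = []
        current.append(p)
    if current:
        clusters.append(current)

    axles = []
    for c in clusters:
        length = c[-1][0] - c[0][0]          # interpreted as wheel extent
        z_min = min(p[2] for p in c)
        touches_ground = z_min - ground <= ground_tol
        if len(c) >= min_points and length >= min_length and touches_ground:
            axles.append((c[0][0] + c[-1][0]) / 2.0)
    return axles
```

The number of returned positions gives the axle count on the ground, and the difference between the first and last positions gives the distance between the front and rear axles.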

3.2.6. Automatic Classifier

The automatic classification stage uses the histogram of the N visual words and the extracted geometric descriptors as input. Based on this information, the classifier determines the corresponding vehicle category. Researchers have applied various methods in point cloud classification, including neural networks [25,33,34], Bayesian networks [35], k-nearest neighbors (KNN) [93], and SVM [31,32]. This work adopts and trains an SVM classifier because it effectively handles high-dimensional and complex data from point clouds. SVMs are well-suited for classification tasks even when the data is not linearly separable, offering robust performance under data variability [31,32].

While SVMs are inherently binary classifiers that determine the optimal hyperplane separating two classes, this implementation extends their functionality to multiclass problems using the One-Versus-Rest (OVR) strategy [94,95,96]. This approach decomposes a multiclass problem with K classes into K binary classification tasks, each distinguishing one class from all others.

For each class, a binary SVM model is trained to separate the samples of that class (labeled as positive) from the rest (labeled as negative), solving an individual optimization problem under the maximum margin principle. The predicted class for each sample corresponds to the classifier with the highest decision function value.
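The One-Versus-Rest decision rule can be illustrated with linear decision functions. The weights, biases, and class codes below are invented for the example, and real models would come from SVM training with the chosen kernel; only the argmax rule over per-class decision values reflects the strategy described above.

```python
def decision_value(weights, bias, x):
    """Linear decision function f(x) = w . x + b for one binary classifier."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict_ovr(models, x):
    """Pick the class whose binary classifier gives the highest decision value.

    models: dict mapping class label -> (weights, bias); values are illustrative.
    """
    return max(models, key=lambda label: decision_value(*models[label], x))
```

For example, with three hypothetical classifiers scoring 0.2, 0.9, and -0.6 for a sample, the sample is assigned to the class of the second classifier.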

A key strength of SVM classifiers lies in kernel functions, which allow nonlinear relationships to be modeled by implicitly mapping the input data to higher-dimensional spaces without explicitly computing the transformation. Section 4 (Results) evaluates three commonly used kernel functions: (i) the linear kernel, suitable for linearly separable data; (ii) the polynomial kernel, which captures nonlinearities through adjustable degree and bias terms; (iii) the Gaussian Radial Basis Function (RBF) kernel, which maps the data to an infinite-dimensional space to capture complex nonlinearly separable patterns.

Finally, the classification model follows a structured pipeline composed of the following stages:

- Dataset construction: This process begins by selecting a representative subset of point clouds from a larger dataset as the basis for model development.

- Class labeling: The annotation process assigns each point cloud a class label corresponding to its vehicle category. A Label Encoding scheme then converts these categorical labels into unique integer identifiers to enable compatibility with the classification algorithms used in subsequent stages.

- Data partitioning and class balancing: The labeled subset is partitioned into training and validation sets using a stratified approach to preserve class distribution. Additionally, class balancing techniques are applied when necessary to mitigate the effects of class imbalance and ensure fair model training.

- Visual vocabulary generation: The process constructs a visual dictionary using the Mini-Batch K-means algorithm for FPFH descriptors extracted from the training samples. Each point cloud is then represented as a histogram of visual word occurrences, capturing local geometric patterns in a compact, fixed-length format.

- Geometric feature extraction and normalization: The system extracts seven global geometric descriptors from each point cloud and normalizes them using different scaling strategies to reduce the effects of scale variability and ensure balanced feature influence.

- Feature vector construction: The normalized geometric features are concatenated with the corresponding BoW histogram to form a unified high-dimensional feature vector. This representation integrates global structure and local surface descriptors, enriching the input space for classification.

- Model training and evaluation: The training phase applies SVMs with linear, polynomial, and Gaussian RBF kernels, allowing the model to capture linear and nonlinear class boundaries.

The classifier initially groups vehicles into the first four classes in Table 3, plus a fifth class corresponding to vehicles with three or more axles. Vehicles assigned to Class 5 are subsequently reclassified according to the number of axles, resulting in the eight vehicle categories.

Dataset Construction

The dataset creation process ensures data quality and representativeness by collecting range data, images, and speed records during a pilot deployment at a toll station. LiDAR and speed sensors generate the range data for constructing 3D point clouds, while high-resolution cameras capture synchronized images of each vehicle to support classification and manual verification.

The final dataset comprises over 360,000 vehicle samples, hierarchically organized by date and time to streamline access during processing. Each record includes a text file containing range data (with a .out extension) and a synchronized PNG image captured during vehicle passage. Reference [55] provides more details about the dataset construction.

Class Labeling

The dataset’s labeling process is key to ensuring accurate vehicle classification. It involves assigning class labels to each object in the point clouds using automated and manual tools developed specifically for this task.

Labeling begins by reviewing point clouds to discard samples with excessive noise, incomplete captures, or distortions, and labeling them as “unidentified” or “distorted.” Camera images are inspected for clarity and used to verify key vehicle features, while speed data are compared to expected values to detect anomalies.

The labeling process assigns initial classes to point clouds based on geometric features and axle configuration, with visual confirmation provided by the corresponding camera images. Most objects in the final labeled dataset are light vehicles. Table 5 reports the sample distribution across classes, including three auxiliary categories:

- Non-vehicles: pedestrians, bicycles, motorcycles, or objects not subject to tolls under Colombian regulations. They are labeled as Class 0 to help the classifier ignore non-chargeable detections.

- Unidentified: point clouds with ambiguous features (e.g., it is unclear whether a minibus has single or dual rear tires (Class 1 vs. 2), or whether a two-axle truck fits Class 1, 3, or 4), which makes it impossible to assign a reliable label. These cases are excluded from training and evaluation.

- Distorted: abnormally long point clouds produced when a vehicle stops in the laser beam of the reversible lane and the radar falsely detects movement. We are evaluating sensor upgrades and algorithmic improvements to address this issue.

Table 5.

Labeled dataset quantities.

Unidentified samples included vehicles with unclear axle configurations, while distorted data resulted from laser beam obstructions, often due to congestion in a central reversible lane. The manual labeling process, being highly time-consuming and labor-intensive, limited the annotated portion of the dataset to ; nevertheless, the resulting subset proved reliable and representative.

Data Partitioning and Class Balancing

Due to the dataset’s significant class imbalance, particularly the predominance of Class 1 vehicles, a balanced subset was created to avoid bias and ensure reliable classification across all vehicle categories. The procedure excluded unidentified and distorted samples. Table 6 presents this balanced dataset, including the numeric codes assigned to each class. The process split the subset into for training and for validation.

Table 6.

Training and validation dataset for the classifier.

3.2.7. Automatic License Plate Recognition

The Automatic License Plate Recognition (ALPR) module has three main stages. The first stage is plate detection, in which the system analyzes the input image to locate candidate regions likely to contain a license plate. The second stage is character recognition, where the characters within the detected regions are extracted and classified. The final stage is plate identification, consolidating the recognized characters into a validated license plate string.

License Plate Detection

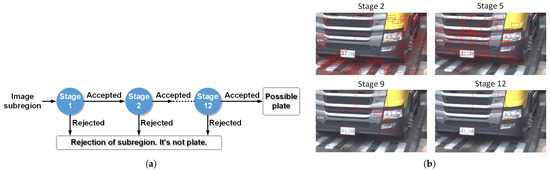

The implementation adopts the method proposed in [97,98] for license plate detection. It combines Local Binary Pattern (LBP) descriptors with a cascade classifier trained using Adaptive Boosting (AdaBoost). The algorithm applies a multi-scale sliding window strategy to grayscale images and sequentially evaluates the candidate regions using weak classifiers.

LBP descriptors encode local texture by comparing each pixel to its eight neighbors, producing binary patterns converted into decimal values [99]. This process results in a compact and discriminative texture representation, facilitating further image analysis tasks. Figure 21 shows an example of the final LBP output.

Figure 21.

Example of visualization of the LBP matrix.
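As an illustration of the descriptor, the basic 3×3 LBP operator can be sketched in a few lines. The neighbor ordering (clockwise from the top-left pixel) and the use of `>=` in the comparison are common conventions assumed here, not details taken from the implementation in this work; `lbp_image` is a hypothetical name.

```python
def lbp_image(gray):
    """Compute basic 3x3 LBP codes for the interior pixels of an image.

    gray: list of rows, each a list of integer intensities.
    Returns a (h-2) x (w-2) list of codes in the range 0..255.
    """
    # Clockwise neighbor offsets starting at the top-left pixel (assumed order).
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    h, w = len(gray), len(gray[0])
    codes = [[0] * (w - 2) for _ in range(h - 2)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            center = gray[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                # A neighbor at least as bright as the center contributes a 1.
                if gray[r + dr][c + dc] >= center:
                    code |= 1 << (7 - bit)
            codes[r - 1][c - 1] = code
    return codes
```

Because each code depends only on the sign of intensity differences, the representation is unaffected by monotonic illumination changes, which is one reason it suits outdoor plate detection.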

To ensure robustness against variations in plate size and position, the detection process is performed at multiple image scales using sliding windows. The method evaluates each candidate region through a 12-stage cascade of weak classifiers. Figure 22a shows the overall structure of the cascade classifier. This design enables early rejection of false positives, significantly reducing computational cost [100,101]. The candidate regions that pass all stages are forwarded to the character recognition module. Figure 22b shows the output of selected classifier stages; four candidate regions, including the correct license plate, ultimately remain.

Figure 22.

(a) Cascade classifier structure. (b) Results of some stages of the cascade classifier.
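The early-rejection behavior of a cascade can be illustrated with a simplified sketch. The stage representation below (weighted threshold tests on a feature vector, summed and compared against a stage threshold) is an assumption made for illustration; it is not the trained detector, and `cascade_accepts` is a hypothetical name.

```python
def cascade_accepts(window_features, stages):
    """Evaluate one candidate window against a cascade of stages.

    window_features: list of feature values for the window.
    stages: list of (weak_classifiers, stage_threshold), where each weak
            classifier is a (feature_index, threshold, weight) tuple.
    Returns (accepted, stages_evaluated).
    """
    for stage_number, (weak_classifiers, stage_threshold) in enumerate(stages, 1):
        # Each weak classifier votes with its weight when its test passes.
        score = sum(weight
                    for index, threshold, weight in weak_classifiers
                    if window_features[index] >= threshold)
        if score < stage_threshold:
            # Early rejection: later stages are never computed.
            return False, stage_number
    return True, len(stages)
```

Most sliding windows contain background and fail in the first stages, so the average cost per window stays far below the cost of evaluating all stages.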

Character Recognition

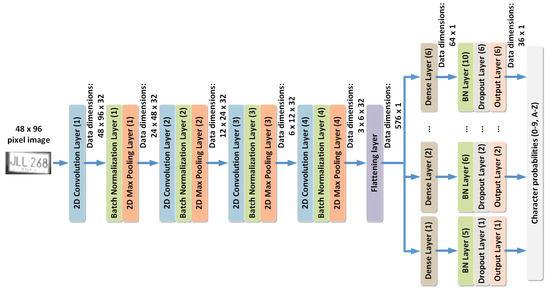

The character recognition module employs a deep learning architecture based on convolutional neural networks (CNN), following recent approaches reported in the literature [102,103,104,105,106,107]. The architecture illustrated in Figure 23 processes -pixel grayscale images and extracts hierarchical features through multiple convolutional, pooling, and normalization layers. It includes six dense layers, one for each character, followed by SoftMax outputs over 36 classes (0 to 9, A to Z). Dropout regularization and batch normalization are applied throughout to prevent overfitting and stabilize training.

Figure 23.

CNN model architecture for license plate character recognition.

The classifier generates a probability distribution over the 36 possible classes for each of the six characters. The method constructs the final license plate by selecting the most probable class at each position and calculates an overall confidence index by multiplying the six selected probabilities. If this index exceeds a threshold of 0.3, the process accepts the image as a valid license plate. This value was determined experimentally, considering that a higher threshold can discard valid plates and a lower one can generate false positives [108]. Figure 24 illustrates four initial candidates, of which only one is accepted as correct because of its high confidence index.

Figure 24.

Character recognition results.
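The decoding rule can be sketched as follows, assuming the network output is available as six probability vectors of length 36 ordered as digits followed by letters. That ordering and the name `decode_plate` are assumptions for illustration; the 0.3 threshold is the value reported above.

```python
import string

# Assumed class order: 0-9 followed by A-Z (36 classes).
ALPHABET = string.digits + string.ascii_uppercase


def decode_plate(position_probs, threshold=0.3):
    """Pick the most probable character per position and score the plate.

    position_probs: six lists of 36 probabilities (one list per character).
    Returns (plate_string, confidence_index, accepted).
    """
    chars = []
    confidence = 1.0
    for probs in position_probs:
        best = max(range(len(probs)), key=probs.__getitem__)
        chars.append(ALPHABET[best])
        confidence *= probs[best]   # product of the selected probabilities
    return "".join(chars), confidence, confidence > threshold
```

Because the index is a product of six terms, it penalizes a single uncertain character heavily: six characters at 0.9 each give about 0.53, while six at 0.8 give about 0.26 and fall below the threshold.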

License Plate Identification

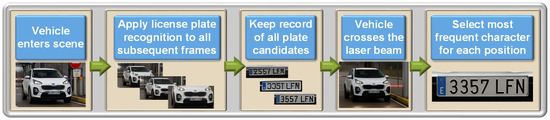

A single image captured per vehicle often results in high recognition error rates, mainly due to occlusions, poor lighting conditions, and imperfect synchronization between image acquisition and the vehicle crossing the laser beam. To address this, the system implements a multi-frame processing strategy to reduce recognition errors (see Figure 25).

Figure 25.

Block diagram of the multi-frame license plate recognition process.

Each video frame is processed independently for license plate detection and character recognition. Once a vehicle enters the scene, the system accumulates detected plate candidates across all subsequent frames until the vehicle crosses the laser beam. Due to noise and variability, some of these detections may contain incorrect characters.

A majority voting scheme is applied to assign a reliable license plate to each vehicle. For each of the six character positions, the method selects the character detected most frequently across all valid frames. This voting mechanism increases robustness by compensating for isolated misclassifications, enabling the system to reconstruct a more accurate license plate even with partial occlusions or recognition noise.
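A minimal sketch of per-position majority voting is given below. It assumes each valid frame yields a six-character string and that ties are resolved in favor of the character seen first; the tie-breaking rule and the name `vote_plate` are assumptions, since the source does not specify them.

```python
from collections import Counter


def vote_plate(candidates, length=6):
    """Combine per-frame plate readings by per-position majority vote.

    candidates: list of plate strings, one per valid frame.
    Readings with an unexpected length are ignored.
    Returns the voted plate, or None when no valid reading exists.
    """
    valid = [plate for plate in candidates if len(plate) == length]
    if not valid:
        return None
    # zip(*valid) groups the characters observed at each position.
    return "".join(Counter(chars).most_common(1)[0][0]
                   for chars in zip(*valid))
```

For example, the readings `ABC123`, `ABC128`, `A8C123`, and `ABC123` vote to `ABC123`, even though two of the four frames contain an error.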

Training the ALPR Classifiers

The license plate recognition system was trained and assessed using over 5000 high-resolution visible-spectrum vehicle images, in which the license plate regions and their corresponding characters were labeled manually. The 12-stage cascade classifier was trained for plate detection using 2500 plate images and 4750 non-plate samples, and the remaining 2500 plate images were reserved for validation.

Detection performance was measured using the Jaccard coefficient, which compares predicted and ground-truth bounding boxes. The system considers a correct detection when . The model achieved accuracy in plate detection, with 200 false positives.
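For axis-aligned bounding boxes, the Jaccard coefficient is the ratio of the intersection area to the union area. The sketch below assumes boxes are given as `(x_min, y_min, x_max, y_max)`; the function names are hypothetical, and the acceptance threshold is passed as a required argument rather than fixed here.

```python
def jaccard(box_a, box_b):
    """Jaccard coefficient (intersection over union) of two boxes.

    Boxes are (x_min, y_min, x_max, y_max) tuples (assumed format).
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union else 0.0


def is_correct_detection(predicted, ground_truth, min_jaccard):
    """A detection counts as correct when the overlap reaches `min_jaccard`."""
    return jaccard(predicted, ground_truth) >= min_jaccard
```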

The character recognition process used 10,000 labeled character samples (A to Z, 0 to 9) and 4750 non-character images. The validation process evaluated 155 plates and accepted a result only when the system identified all the characters correctly. The system reached a accuracy, with 13 false positives. The training process used high-quality images captured under favorable conditions.

4. Results

This work’s main contribution is the development of the Vehicle Counting and Classification System, SSICAV. Designed as a comprehensive technological solution, SSICAV aims to optimize vehicle management at toll stations by integrating advanced sensing technologies and data processing capabilities.

4.1. Axle Detection and Counting Results

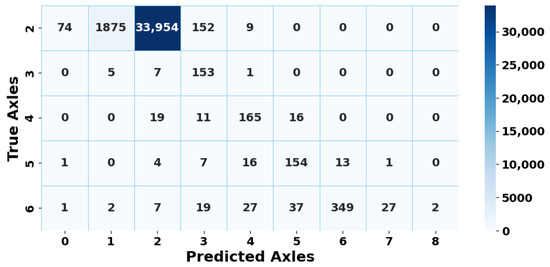

The evaluation treated the axle detection and counting module as a multiclass classification task, where each class corresponds to a vehicle with a specific number of axles, ranging from 2 to 6. Although the classifier could predict classes from 0 to 8, labels outside the valid range (i.e., 0, 1, 7, and 8) represent misclassifications. The analysis excluded vehicles with more than six axles due to their low representativeness in the dataset.

The system evaluation used a test set comprising 37,108 vehicle samples collected under real operating conditions. The overall accuracy reached , suggesting the system reliably estimates axle counts in most cases. However, performance varied across classes, partly due to the inherent class imbalance, as shown in Table 7. Two-axle vehicles accounted for more than of the dataset.

Table 7.

Vehicle distribution by number of axles.

The confusion matrix in Figure 26 highlights the classifier’s ability to detect 2-axle vehicles correctly and reliably. However, the results reveal a systematic tendency to underestimate axle counts, particularly in higher axle configurations (e.g., 3 to 6 axles), which the model frequently misclassified.

Figure 26.

Confusion matrix for axle classification (absolute values).

The metrics indicate that the axle classification model is highly accurate under operational conditions. A global accuracy of and a weighted F1-score of reflect strong performance, especially for dominant classes like 2-axle vehicles. However, macro-averaged metrics (precision , recall , F1-score ) reveal significant variability across classes. This result is mainly due to reduced precision in underrepresented categories; for example, the 3-axle class achieved high recall () but low precision (), indicating a substantial number of false positives.
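The gap between weighted and macro averages follows directly from how each is computed. The sketch below derives both from a confusion matrix whose rows are true classes and whose columns are predicted classes; the function names are hypothetical and the two-class matrix in the usage note is invented for illustration, not taken from Figure 26.

```python
def per_class_metrics(cm):
    """Return a list of (precision, recall, f1, support), one per class.

    cm[i][j] counts samples of true class i predicted as class j.
    """
    n = len(cm)
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        support = sum(cm[k])                        # true instances of class k
        predicted = sum(cm[r][k] for r in range(n)) # predictions of class k
        precision = tp / predicted if predicted else 0.0
        recall = tp / support if support else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics.append((precision, recall, f1, support))
    return metrics


def summarize(cm):
    """Accuracy plus macro- and support-weighted F1 for a confusion matrix."""
    metrics = per_class_metrics(cm)
    total = sum(support for _, _, _, support in metrics)
    accuracy = sum(cm[k][k] for k in range(len(cm))) / total
    macro_f1 = sum(f1 for _, _, f1, _ in metrics) / len(metrics)
    weighted_f1 = sum(f1 * support for _, _, f1, support in metrics) / total
    return {"accuracy": accuracy, "macro_f1": macro_f1, "weighted_f1": weighted_f1}
```

With the invented matrix `[[90, 10], [2, 8]]`, the minority class has a recall of 0.80 but a precision of about 0.44, because the ten errors from the dominant class outnumber its eight true positives. The weighted F1 stays near 0.90 while the macro F1 drops to about 0.75, the same qualitative pattern described for the 3-axle class.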

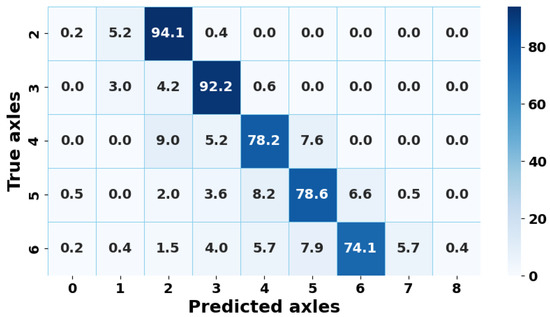

The row-normalized confusion matrix is shown in Figure 27 to facilitate class-wise performance analysis. The model achieved the highest recall for 2-axle vehicles (), which dominate the dataset. Interestingly, despite being a minority class, 3-axle vehicles exhibited a recall of , indicating that the model correctly recognized most true instances of this class. However, their precision was substantially lower (), revealing frequent false positives, primarily due to confusion with 2-axle vehicles. This imbalance suggests that while the model is sensitive to identifying 3-axle configurations, it struggles to discriminate them clearly from more common classes.

Figure 27.

Normalized confusion matrix for axle classification (percentage).
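Row normalization divides each entry by the number of true instances of its class, so the diagonal of the normalized matrix equals the per-class recall. A minimal sketch follows, with `row_normalize_percent` as a hypothetical name and rows taken as true classes.

```python
def row_normalize_percent(cm):
    """Convert absolute confusion-matrix counts to row percentages.

    cm[i][j] counts samples of true class i predicted as class j.
    Rows with no samples are returned as zeros.
    """
    normalized = []
    for row in cm:
        total = sum(row)
        normalized.append([100.0 * value / total if total else 0.0
                           for value in row])
    return normalized
```

Precision is not visible in this view, since it depends on column totals; this is why a class can show a high diagonal value here and still have low precision.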

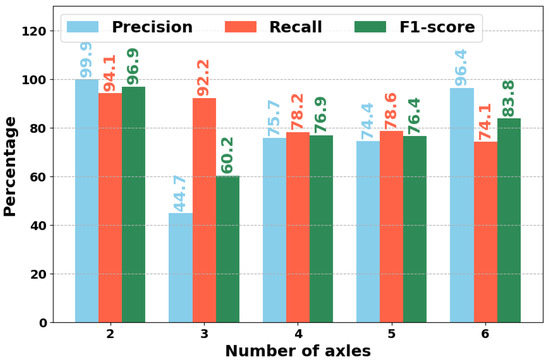

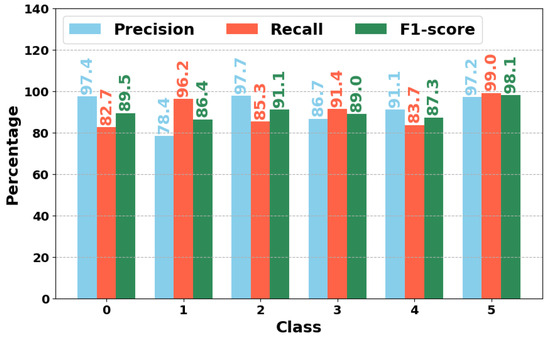

Figure 28 presents the complete set of per-class metrics. The 2-axle category achieved an F1-score of , reflecting consistently high precision and recall. In contrast, the 3-axle class obtained an F1-score of only , reflecting its low precision. The model exhibited more balanced behavior for classes with 4 to 6 axles, achieving F1-scores between and , although recall progressively declined as axle count increased. These patterns highlight the increased classification complexity in higher axle configurations, often compounded by partial occlusions and low data representativeness.

Figure 28.

Precision, recall, and F1-score by axle class.

The analysis identified the sensor’s inability to fully capture some vehicles’ wheels, primarily due to traffic traveling close to the platform where the laser scanner was installed, as one of the main limitations in axle counting. This issue was more evident in long or multi-axle vehicles, and it worsened in reversible lanes due to irregular alignment and frequent stops near the sensor. These conditions led to systematic underestimation in complex axle configurations.

In summary, despite sensor visibility and class imbalance limitations, the model demonstrated high accuracy in detecting 2-axle vehicles and acceptable performance for configurations involving 3 to 6 axles. These results confirm the system’s applicability in toll plaza environments and point to potential improvements. The proposed approach addresses current limitations by combining infrastructure adjustments and sensing enhancements. It includes modifying the toll plaza divider to enable unobstructed laser beam passage and integrating complementary sensors, such as infrared or optical devices, to improve detection under low visibility and complex vehicle configurations.

4.2. License Plate Recognition Results

The annotation process excluded 10,938 images from the evaluation because insufficient resolution prevented visual identification of license plates in those cases. These images came from a dataset of 37,108 labeled samples corresponding to Class 1 through Class 5 vehicles. In the remaining 26,170 images, where the license plate region was sufficiently clear for manual labeling, the recognition performance was assessed based on the number of correctly identified characters per plate. This metric reflects the system’s effectiveness in accurately extracting alphanumeric sequences under real-world operating conditions.

On this subset, the system achieved a character-level accuracy of , measured as the proportion of individual characters correctly recognized across all plate positions. Full-plate recognition, defined as correctly identifying all six alphanumeric characters, was achieved in of the cases. The average normalized Levenshtein distance between the predicted and ground-truth strings was , indicating that fewer than two character-level edits were required to correct a prediction.
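The three plate-level measures can be computed as sketched below. The normalization of the Levenshtein distance by the length of the longer string is an assumption, since the source does not state which normalization was used, and the function names are hypothetical.

```python
def levenshtein(a, b):
    """Minimum number of insertions, deletions, and substitutions."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, 1):
        current = [i]
        for j, char_b in enumerate(b, 1):
            current.append(min(previous[j] + 1,                 # deletion
                               current[j - 1] + 1,              # insertion
                               previous[j - 1] + (char_a != char_b)))  # substitution
        previous = current
    return previous[-1]


def normalized_levenshtein(predicted, truth):
    """Edit distance divided by the longer string length (assumed normalization)."""
    longest = max(len(predicted), len(truth))
    return levenshtein(predicted, truth) / longest if longest else 0.0


def evaluate_plates(pairs):
    """pairs: non-empty list of (predicted, ground_truth) plate strings."""
    char_hits = sum(p == t for pred, truth in pairs for p, t in zip(pred, truth))
    char_total = sum(len(truth) for _, truth in pairs)
    full_hits = sum(pred == truth for pred, truth in pairs)
    mean_distance = sum(normalized_levenshtein(p, t) for p, t in pairs) / len(pairs)
    return {"char_accuracy": char_hits / char_total,
            "full_plate_accuracy": full_hits / len(pairs),
            "mean_normalized_distance": mean_distance}
```

Character-level accuracy compares characters position by position, so a single dropped character shifts every later position; the edit distance is more tolerant of such insertions and deletions, which is why the two measures are reported together.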

Table 8 presents the distribution of prediction accuracy by the number of correct characters. In total, of all correctly segmented plates contained at least five valid characters. Conversely, complete failures occurred in only of the evaluated cases, suggesting a low probability of total misrecognition when the plate is successfully detected.

Table 8.

Distribution of correct characters per plate.

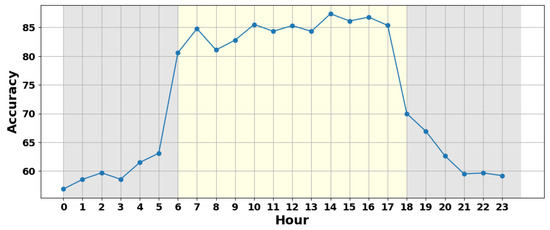

The analysis used the hour of image recording to evaluate the impact of operating conditions on system performance. As illustrated in Figure 29, recognition accuracy exhibits a marked dependence on time of day. Between 07:00 and 18:00, full-plate accuracy consistently exceeds , peaking at at 14:00. Outside these hours, accuracy drops significantly, reaching values below during late-night hours.

Figure 29.

Full-plate recognition accuracy per hour.

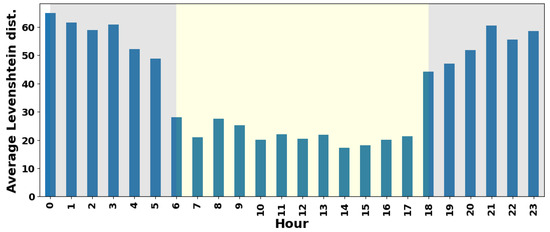

Figure 30 presents a complementary analysis based on Levenshtein distance. This figure shows that the average distance remains below during daylight hours while increasing sharply during nighttime periods. The highest average distance occurs between 18:00 and 05:00, when ambient lighting is lowest, indicating a strong correlation between visibility and recognition fidelity.

Figure 30.

Average normalized Levenshtein distance by hour.

The license plate recognition module demonstrated robust performance under favorable imaging conditions. Recognition quality was notably affected by environmental factors such as lighting and vehicle distance, particularly during low-light periods. These findings suggest that performance could be improved through sensor-level enhancements (e.g., infrared or auxiliary lighting) and adaptive preprocessing techniques to mitigate nighttime degradation.

4.3. Classifier Results

The classifier was trained using SVMs with three kernel types: linear, RBF, and polynomial. These kernels were selected for their capacity to manage high-dimensional and nonlinear data, as typically found in processed point clouds. In addition, the geometric features were scaled to maximize model performance, using four normalization techniques: min-max, max-abs, robust, and standard scaling. Standardizing the variable ranges in this way reduced the model’s sensitivity to outliers and improved training stability.
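The four scaling strategies differ in which statistics they use, and therefore in how they react to outliers. The sketch below gives textbook definitions for a single feature column using only the standard library; the function names are hypothetical, and the source does not state which library the implementation relied on.

```python
import statistics


def minmax_scale(values):
    """Map values linearly to [0, 1] using the observed minimum and maximum."""
    low, high = min(values), max(values)
    span = high - low
    return [(v - low) / span if span else 0.0 for v in values]


def maxabs_scale(values):
    """Divide by the largest absolute value, preserving sign and zero."""
    largest = max(abs(v) for v in values)
    return [v / largest if largest else 0.0 for v in values]


def standard_scale(values):
    """Subtract the mean and divide by the population standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std if std else 0.0 for v in values]


def robust_scale(values):
    """Subtract the median and divide by the interquartile range."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return [(v - median) / iqr if iqr else 0.0 for v in values]
```

For the column `[1, 2, 3, 4, 100]`, min-max scaling compresses the first four values into roughly the bottom 3% of the range because the extremes define the mapping, whereas robust scaling maps them to `-1.0, -0.5, 0.0, 0.5` regardless of the outlier.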

The evaluation tested the model with different BoW configurations by varying the number of generated visual words (5, 25, and 125) and the centroid initialization method (random selection or K-means++). The implementation relied on the Mini-Batch K-means clustering algorithm. Multiple configurations were evaluated to assess their impact on the classifier’s generalization ability.
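Once a vocabulary of visual words has been learned, encoding a point cloud reduces to counting how many of its local descriptors fall nearest to each word. The sketch below assumes the vocabulary (cluster centroids) is already available and that the BoW histogram is concatenated with the geometric features; both the concatenation and the function names are assumptions made for illustration.

```python
import math


def nearest_word(descriptor, vocabulary):
    """Index of the centroid closest to the descriptor (Euclidean distance)."""
    return min(range(len(vocabulary)),
               key=lambda k: math.dist(descriptor, vocabulary[k]))


def bow_histogram(descriptors, vocabulary):
    """Normalized histogram of visual-word occurrences for one object."""
    counts = [0] * len(vocabulary)
    for descriptor in descriptors:
        counts[nearest_word(descriptor, vocabulary)] += 1
    total = sum(counts)
    return [count / total if total else 0.0 for count in counts]


def combined_feature_vector(geometric_features, descriptors, vocabulary):
    """Concatenate geometric features with the BoW histogram (assumed fusion)."""
    return list(geometric_features) + bow_histogram(descriptors, vocabulary)
```

The histogram length equals the vocabulary size, so the 5-, 25-, and 125-word configurations produce feature vectors of different dimensionality for the same object.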

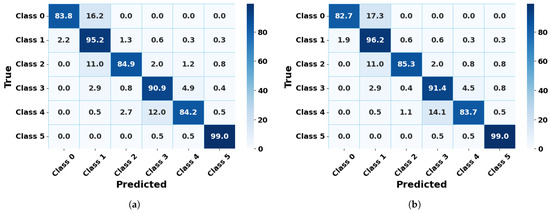

Classifier performance was evaluated with standard metrics, namely the macro F1-score and confusion matrices. Table 9 shows that the best-performing configuration combines the RBF kernel with the standard scaler. This configuration yielded overall macro F1-scores exceeding .

Table 9.

Classifier Results. Macro F1-score by configuration.

Varying the number of visual words and the centroid initialization method did not significantly affect the classifier’s overall performance. Moreover, comparative analysis revealed a substantial improvement in accuracy when geometric features and BoW representations were combined. Compared with the GFO (Geometric Features Only) and BWO (BoW Only) columns, the integration of both feature types enhanced the classifier’s precision and robustness, reinforcing its effectiveness for real-world deployment.