Comprehensive Assessment of CNN Sensitivity in Automated Microorganism Classification: Effects of Compression, Non-Uniform Scaling, and Data Augmentation

Abstract

1. Introduction

- Image compression: reducing the amount of data needed to represent a digital image is widely used for data storage and communication. However, the influence of compression type (lossy or lossless) on CNN accuracy remains unexplored in microorganism classification.

- Non-uniform scaling: resizing images to fit the CNN input layer often scales the two image axes by different factors, which may affect classification accuracy. However, studies investigating this effect are scarce.

- Dataset size: while CNNs excel with large datasets, the impact of smaller datasets on classification accuracy, particularly in biological images, requires further exploration.

- Data augmentation techniques: the influence of common data augmentation operations (e.g., rotation, mirroring, cropping) on CNN learning remains inadequately studied in microorganism classification.

- A practical demonstration of how dataset size critically affects classification accuracy, reinforcing the importance of data volume in deep learning applications for microorganism recognition;

- A detailed analysis of the effects of image compression and non-uniform scaling—procedures often used to adapt images to CNN input layers—on classification outcomes;

- A systematic evaluation of data augmentation techniques, such as mirroring, rotation, and noise addition, identifying conditions under which they enhance or hinder model performance.

2. Related Work

3. Materials and Methods

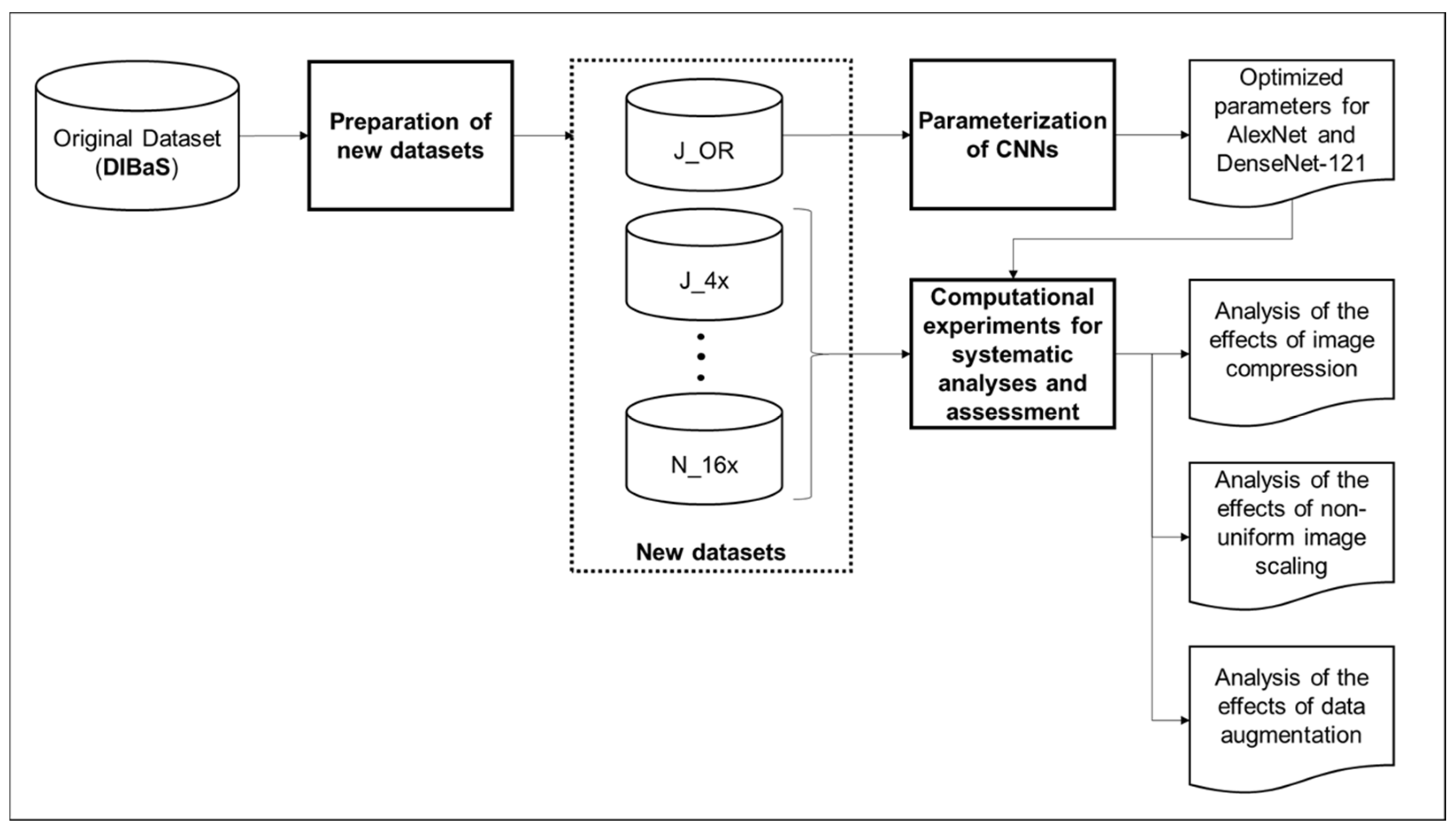

- Preparation of new datasets: To conduct the computational experiments, new datasets were created based on the original dataset. This involved:

- Creation of datasets for each experiment: Tailored datasets were created to analyze different factors influencing CNN performance.

- Dataset splitting: The created datasets were divided into two parts: 80% for training and 20% for validation.

- Parameterization of CNNs: The CNN models, AlexNet and DenseNet-121, were parameterized using the original dataset. This involved tuning three hyperparameters (batch size, learning rate, and number of epochs) to improve the performance of both architectures.

- Experiments for analyses and assessment: Each experiment consisted of training and validating the CNNs on the created datasets with the optimized hyperparameters. Training iteratively updated the model’s internal parameters over multiple epochs until convergence, while validation evaluated the model’s performance on unseen data. The experiments included:

- Analysis of image compression: Evaluation of the impact of image compression (lossy and lossless) on CNN performance using datasets converted to different file formats (JPG and PNG).

- Analysis of non-uniform image scaling: Investigation of the influence of non-uniform image scaling on CNN performance using datasets resized to different dimensions.

- Analysis of data augmentation operations: Evaluation of the effects of mirroring, rotation, and noise addition on CNN performance using datasets augmented with these techniques.
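The dataset preparation steps listed above can be illustrated with a minimal sketch. It is not the authors' pipeline: it uses plain Python lists as a stand-in for image arrays, assumes Gaussian noise (the noise model is not stated here), and the helper names (`mirrored_set`, `rotated_set`, `split_80_20`) and the `sigma` value are hypothetical. It assumes the original image is kept next to its three mirrored or rotated variants, which would be consistent with the fourfold image counts of the M_4x and R_4x datasets.

```python
import random

def mirror_x(img):
    """Flip left-right by reversing every row."""
    return [row[::-1] for row in img]

def mirror_y(img):
    """Flip top-bottom by reversing the row order."""
    return [row[:] for row in img[::-1]]

def rotate_90(img):
    """Rotate 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]

def add_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise (an assumption) and clip to the 8-bit range."""
    return [[min(255, max(0, round(p + rng.gauss(0, sigma)))) for p in row]
            for row in img]

def mirrored_set(img):
    """Original plus mirrors in x, in y, and in both x and y."""
    return [img, mirror_x(img), mirror_y(img), mirror_x(mirror_y(img))]

def rotated_set(img):
    """Original plus rotations by 90, 180 and 270 degrees."""
    out = [img]
    for _ in range(3):
        out.append(rotate_90(out[-1]))
    return out

def split_80_20(items, rng):
    """Shuffle, then use the first 80% for training and the rest for validation."""
    shuffled = items[:]
    rng.shuffle(shuffled)
    cut = int(0.8 * len(shuffled))
    return shuffled[:cut], shuffled[cut:]

rng = random.Random(0)                      # fixed seed for repeatability
img = [[0, 50, 100], [150, 200, 250]]       # tiny 2 x 3 stand-in for a micrograph
mirrored = mirrored_set(img)
rotated = rotated_set(img)
noisy = add_noise(img, sigma=10.0, rng=rng)  # sigma is a hypothetical value
train, val = split_80_20(list(range(689)), rng)
print(len(mirrored), len(rotated), len(train), len(val))
```

In practice an array library would replace the list operations. One point the sketch makes visible is that mirroring in both x and y produces the same image as a 180 degree rotation, so the mirrored and rotated sets share one variant.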

3.1. Rationale for Experimental Design

3.2. Image Dataset

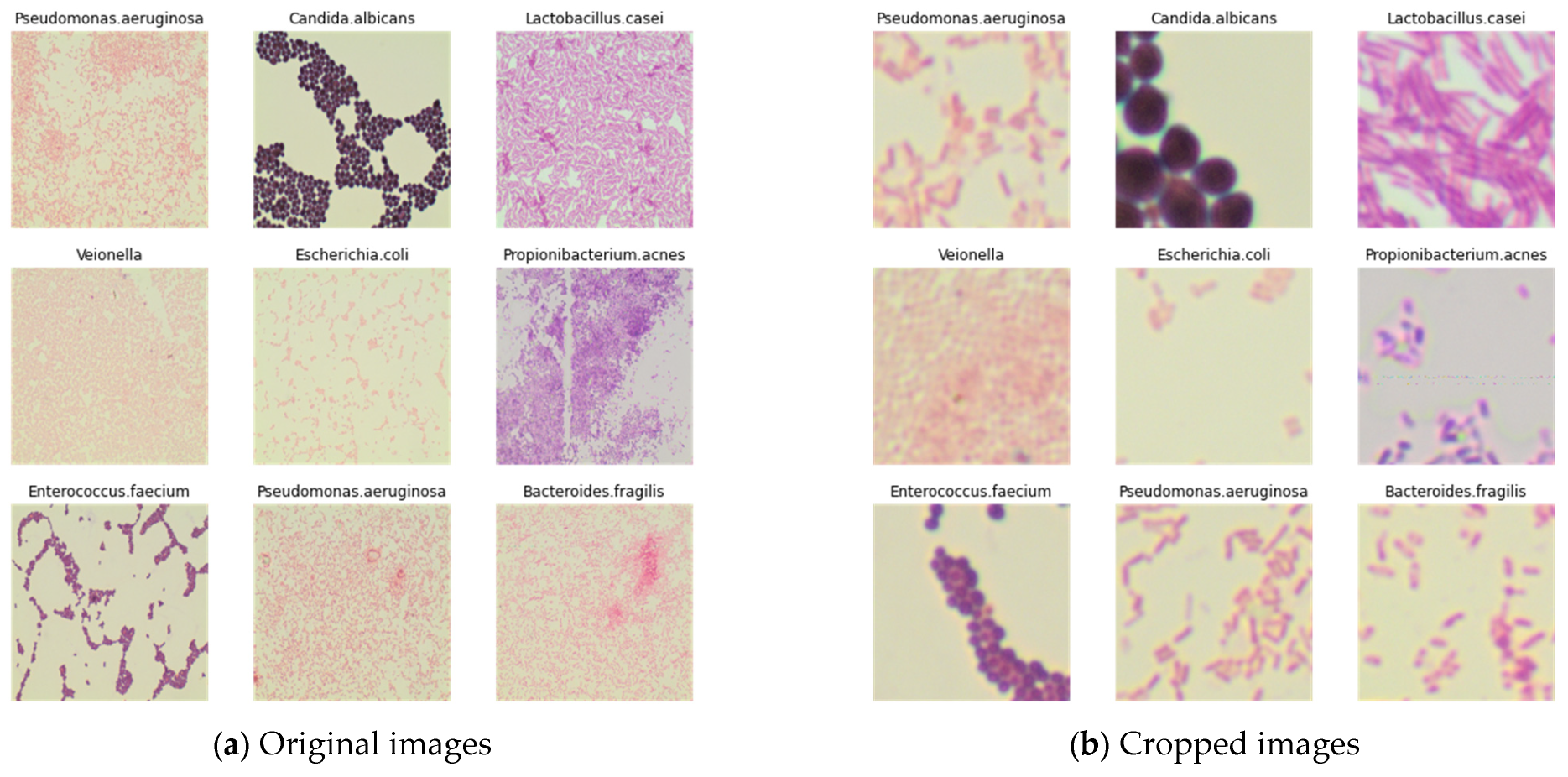

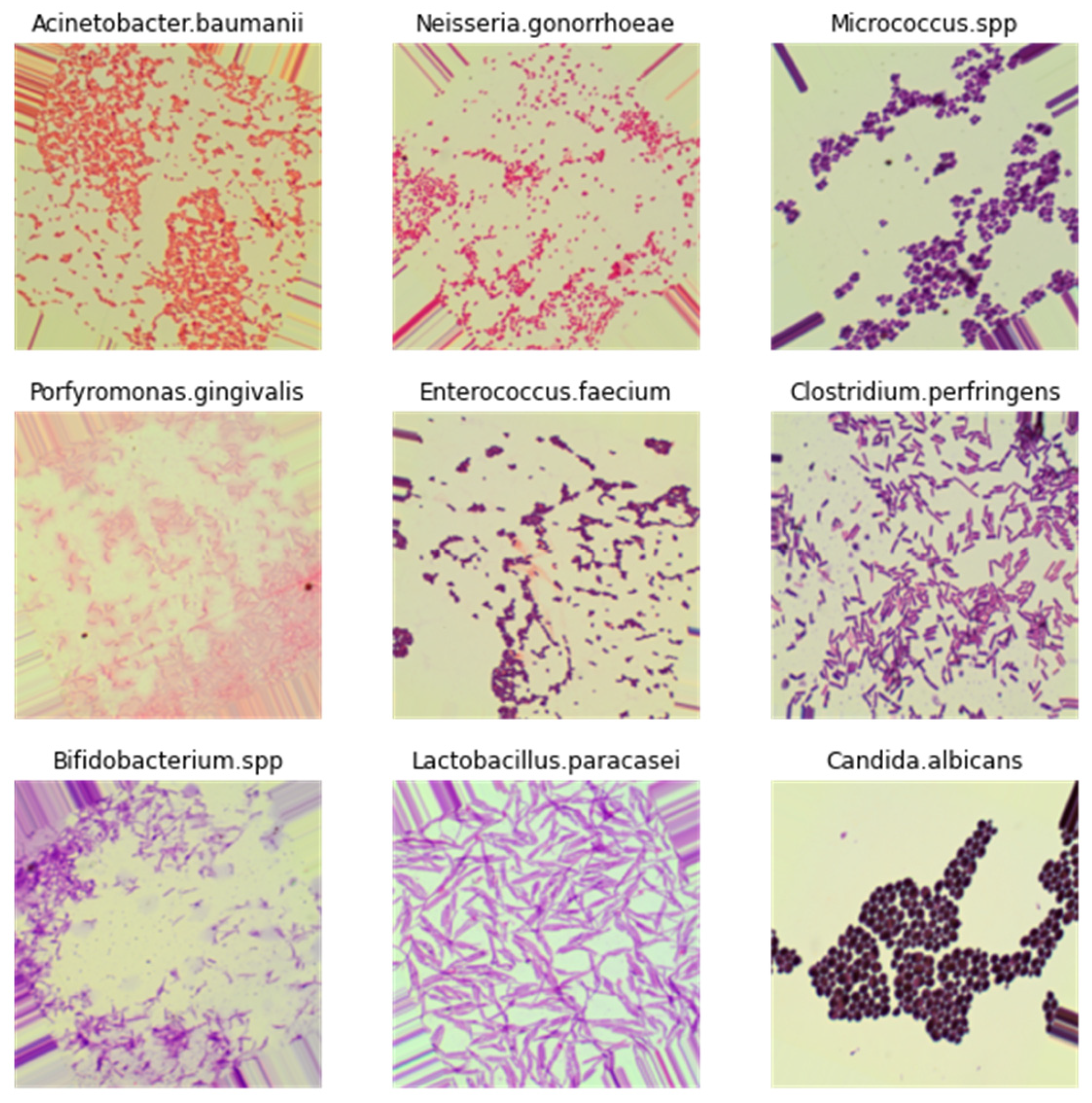

3.3. Preparation of New Datasets

3.4. Parameterization of CNNs

3.5. Performance Metrics

3.6. Computational Resources

4. Results and Discussion

4.1. Parameterization of CNNs

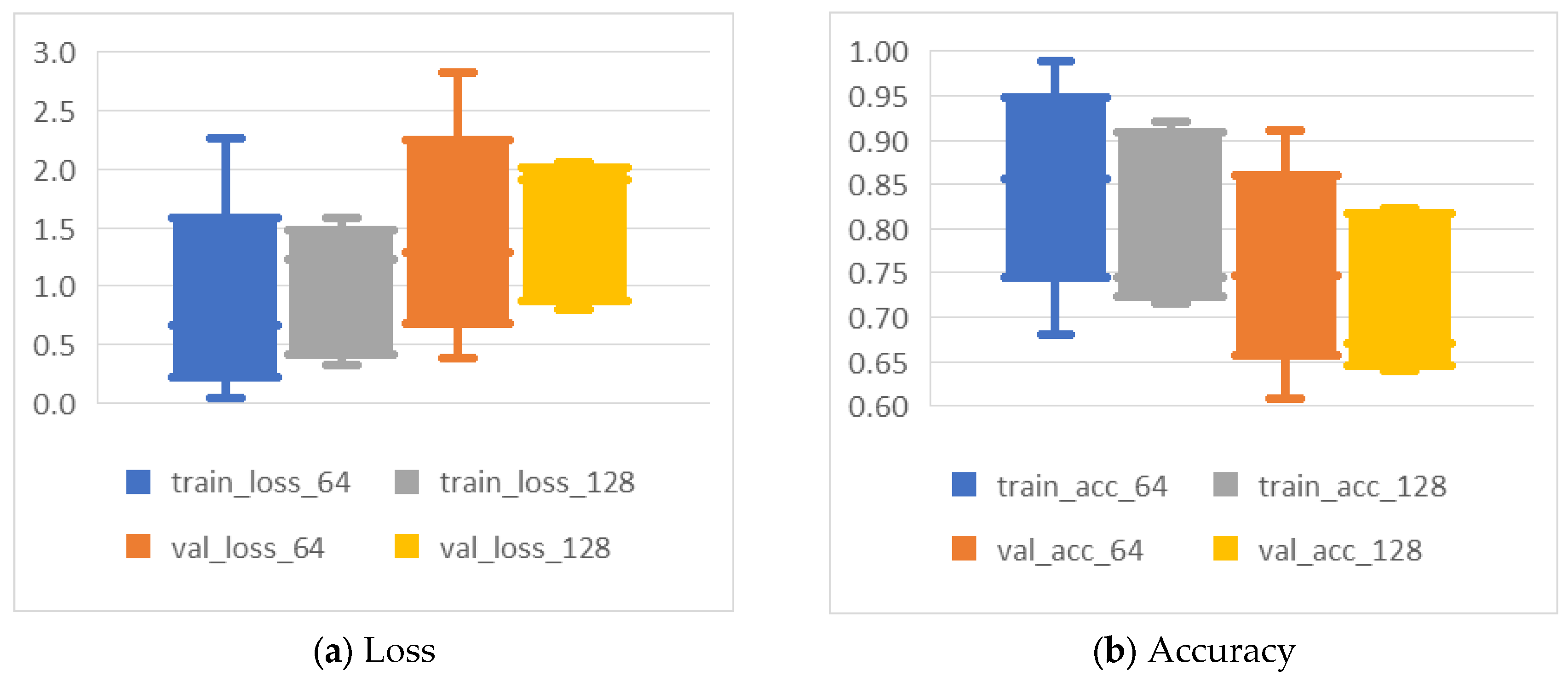

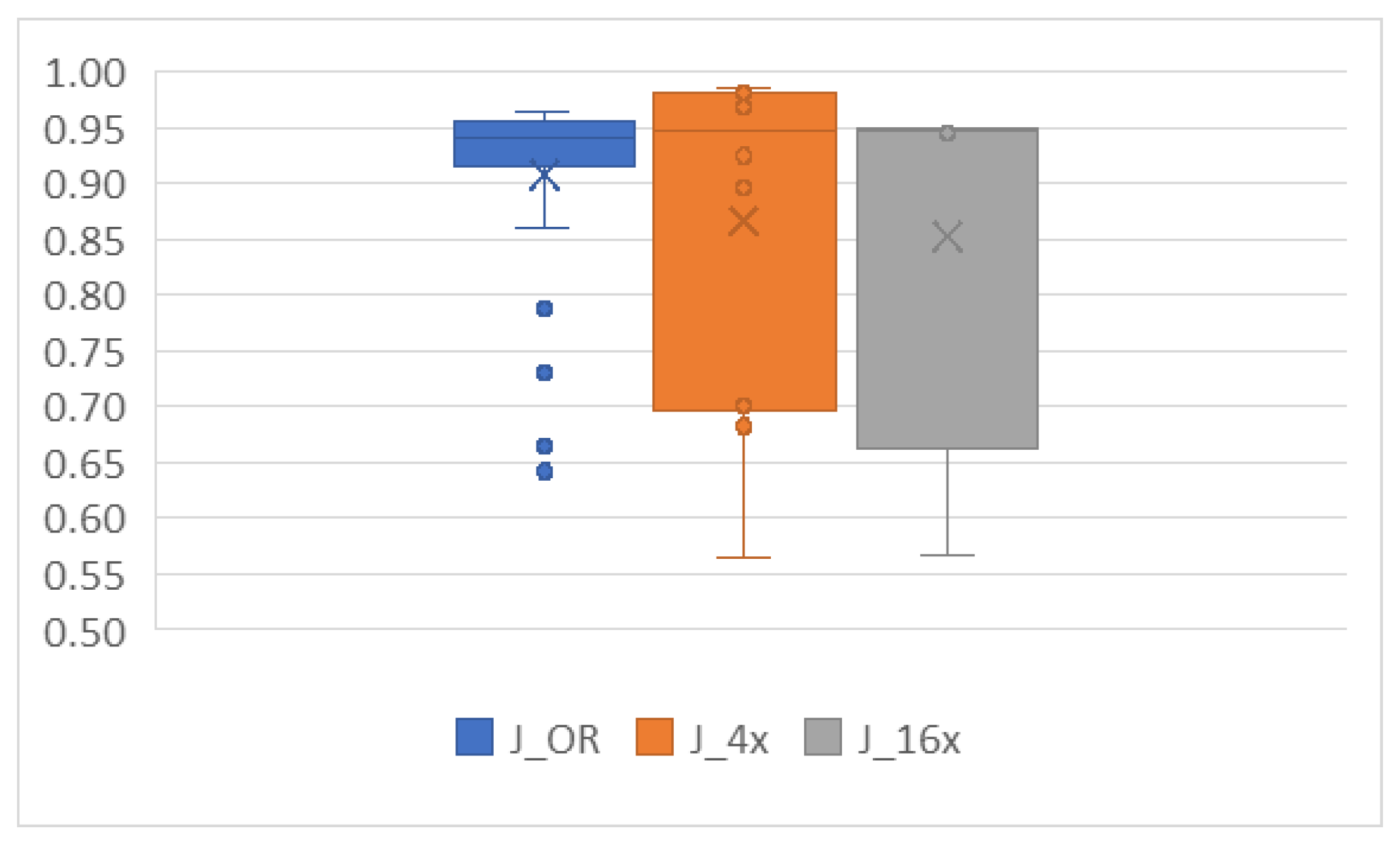

- J_OR—batch size 64 performed best, as expected due to the relatively small number of images in this dataset.

- J_4x—both batch sizes showed satisfactory results.

- J_16x—batch size 128 yielded the best results.

- Batch size differences: For AlexNet, the smaller batch size (64) was optimal for J_OR, likely because of the limited number of images, which matches findings from other studies where small datasets perform better with smaller batch sizes. In contrast, J_16x benefited from a larger batch size (128), possibly due to the larger number of training instances generated from cropping, allowing for more stable gradient updates.

- Epochs and dataset size: Larger datasets, such as J_4x and J_16x, achieved optimal results with fewer epochs compared to J_OR. Figure 5 provides insight into the loss progression, where training loss for J_OR decreased steadily after epoch 50, while validation loss fluctuated more but stabilized after epoch 50 as well. This indicates that smaller datasets require more epochs for the network to learn effectively, while larger datasets can converge more quickly. This aligns with standard DL practices, where smaller datasets often require more epochs to compensate for the lack of diversity in the training data.

- Learning rate: Learning rates of 0.0015 for AlexNet and 0.001 for DenseNet-121 consistently produced the best results. This suggests that the slightly lower learning rate helps prevent overshooting during optimization, which is particularly useful when training deeper models such as DenseNet-121.

- Model comparison: DenseNet-121 outperformed AlexNet in both training and validation accuracy across all datasets, particularly on J_4x and J_16x, with validation accuracies reaching as high as 98.5%. This is expected, given DenseNet-121’s more advanced architecture, which promotes efficient gradient flow through the network, leading to better generalization.
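The relation between dataset size, batch size, and epochs discussed above can be made concrete with a short calculation of how many gradient updates each configuration receives. This is a sketch under stated assumptions: the 80% training share is rounded down, a partial last batch still counts as one update, and the (batch size, epochs) pairs are those reported for the best J_OR, J_4x, and J_16x runs with batch sizes 64, 64, and 128. The function name `update_budget` is hypothetical.

```python
import math

def update_budget(n_images, batch_size, epochs, train_fraction=0.8):
    """Return (training images, steps per epoch, total gradient updates)."""
    n_train = int(train_fraction * n_images)           # assumed: floor of the 80% share
    steps_per_epoch = math.ceil(n_train / batch_size)  # assumed: partial last batch is kept
    return n_train, steps_per_epoch, steps_per_epoch * epochs

# dataset name -> (number of images, batch size, epochs)
runs = {
    "J_OR": (689, 64, 75),
    "J_4x": (2756, 64, 75),
    "J_16x": (11024, 128, 50),
}
budgets = {name: update_budget(*cfg) for name, cfg in runs.items()}
for name, (n_train, steps, total) in budgets.items():
    print(f"{name}: {n_train} training images, {steps} steps/epoch, {total} updates")
```

Under these assumptions J_OR receives 675 updates over 75 epochs, while J_16x receives 3450 updates over only 50 epochs. A larger dataset therefore delivers several times more parameter updates even with fewer epochs and a larger batch, which is one plausible reading of why it needs fewer epochs.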

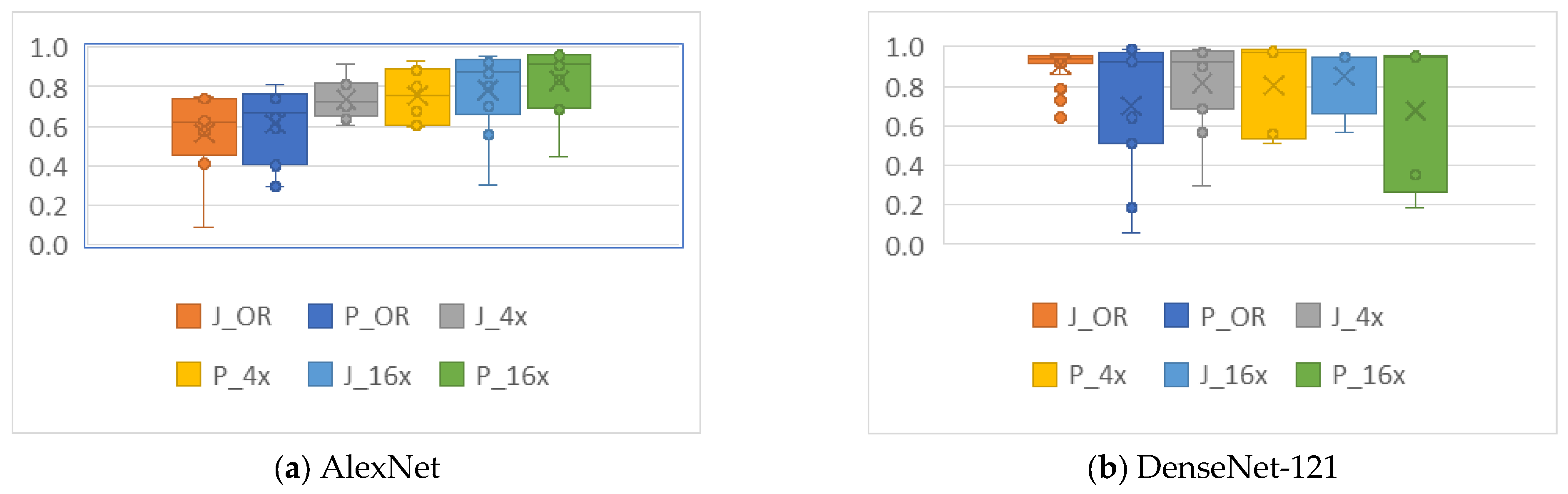

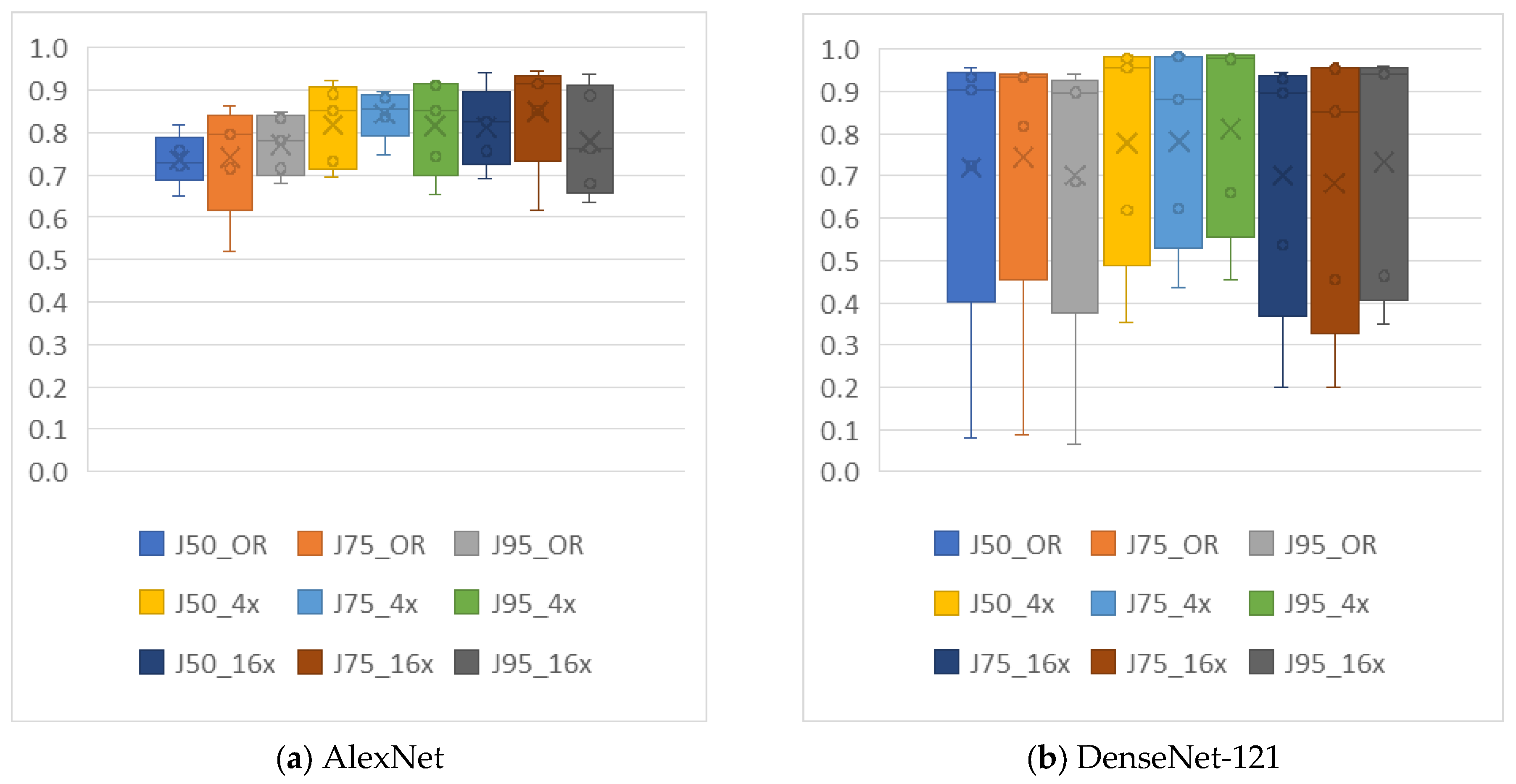

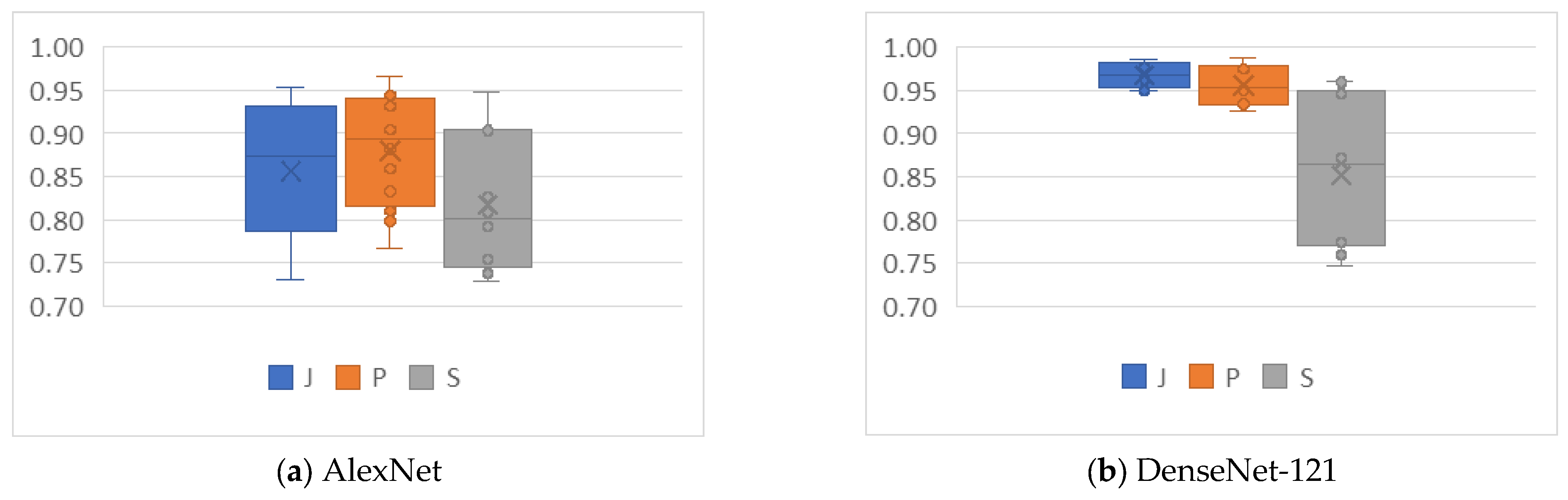

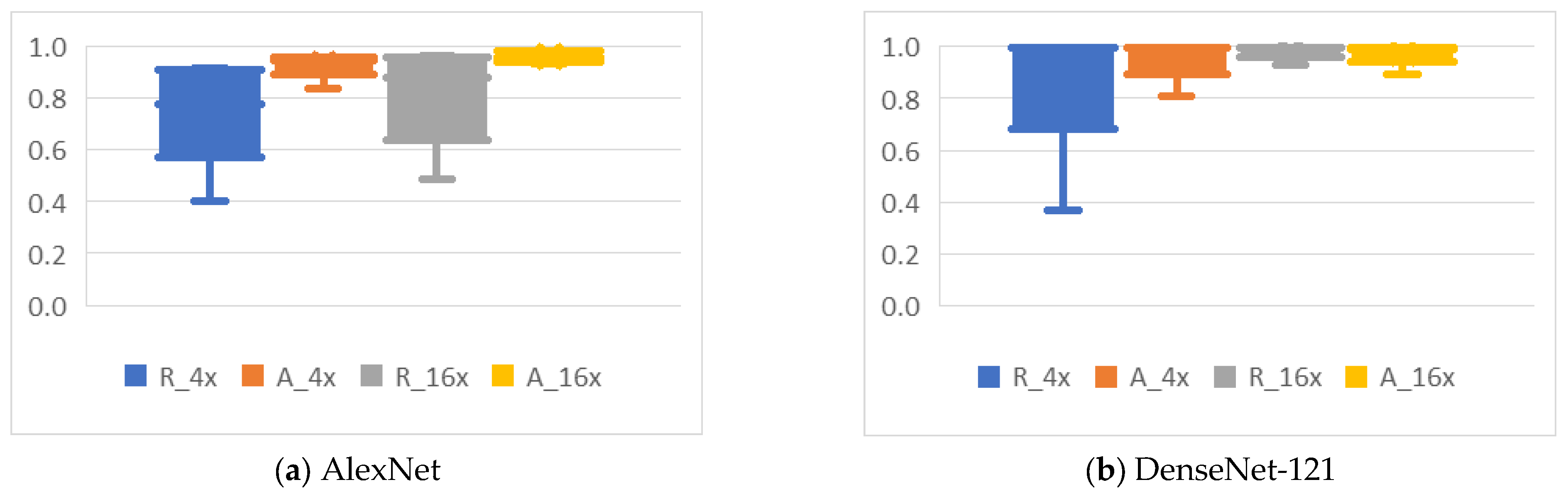

4.2. Analysis of the Effects of Image Compression

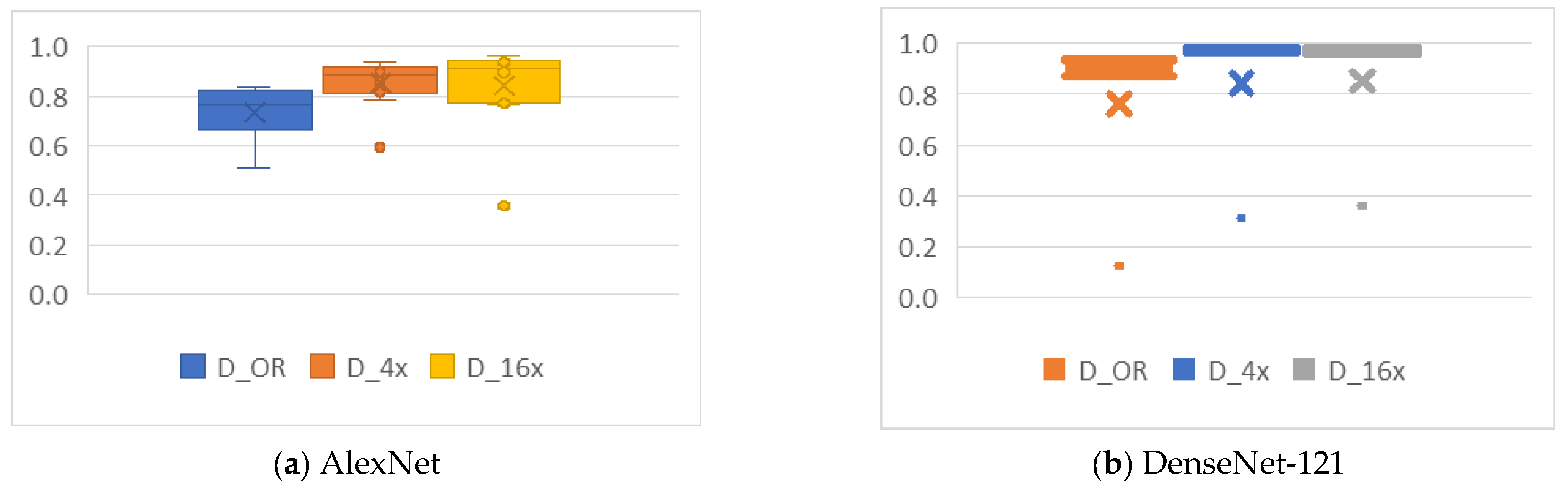

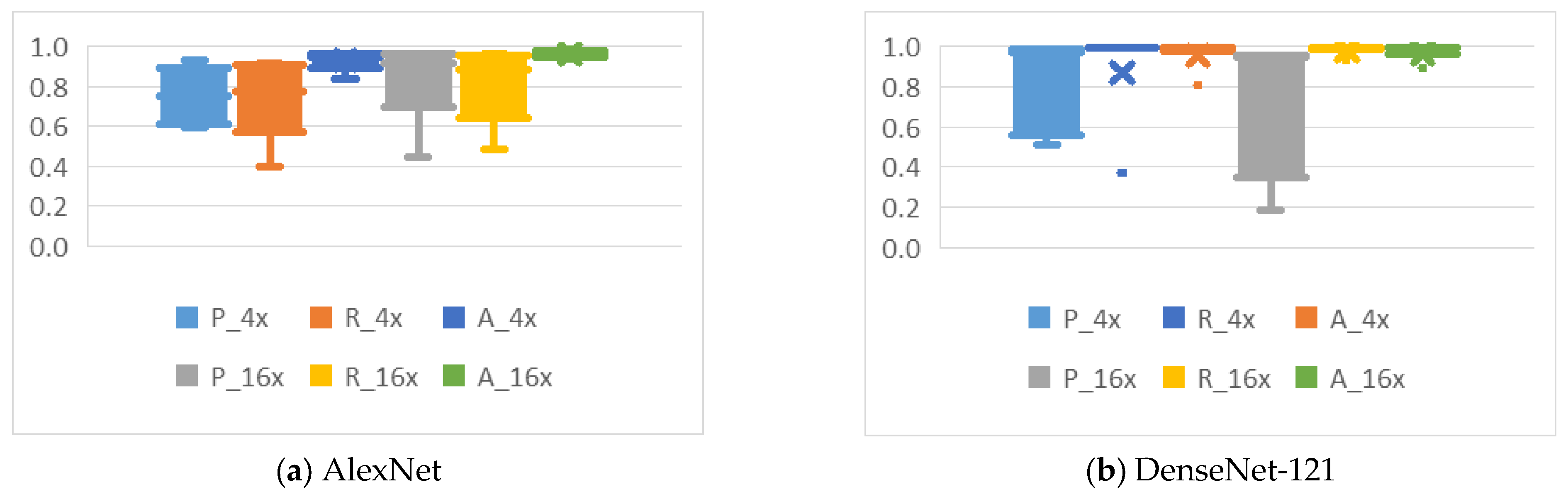

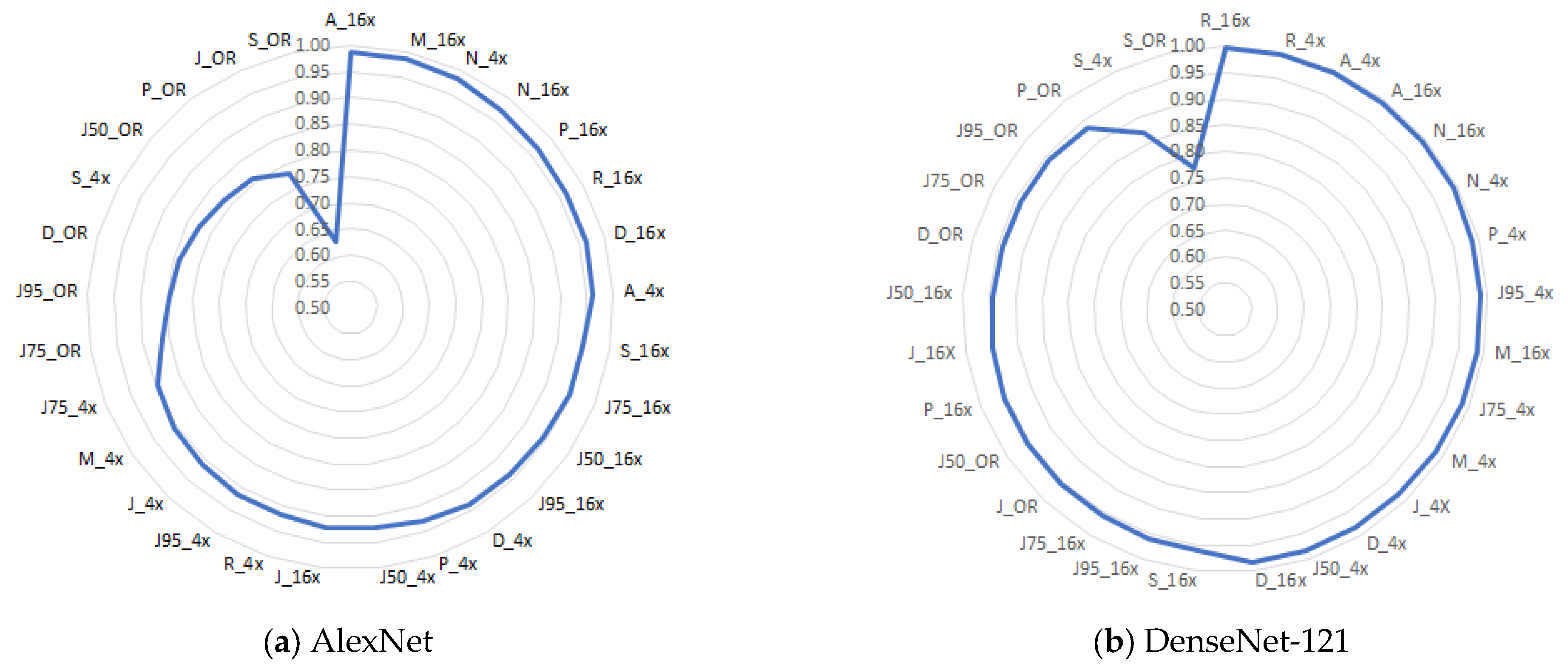

4.3. Analysis of the Effects of Non-Uniform Scaling

4.4. Analysis of the Effects of Data Augmentation

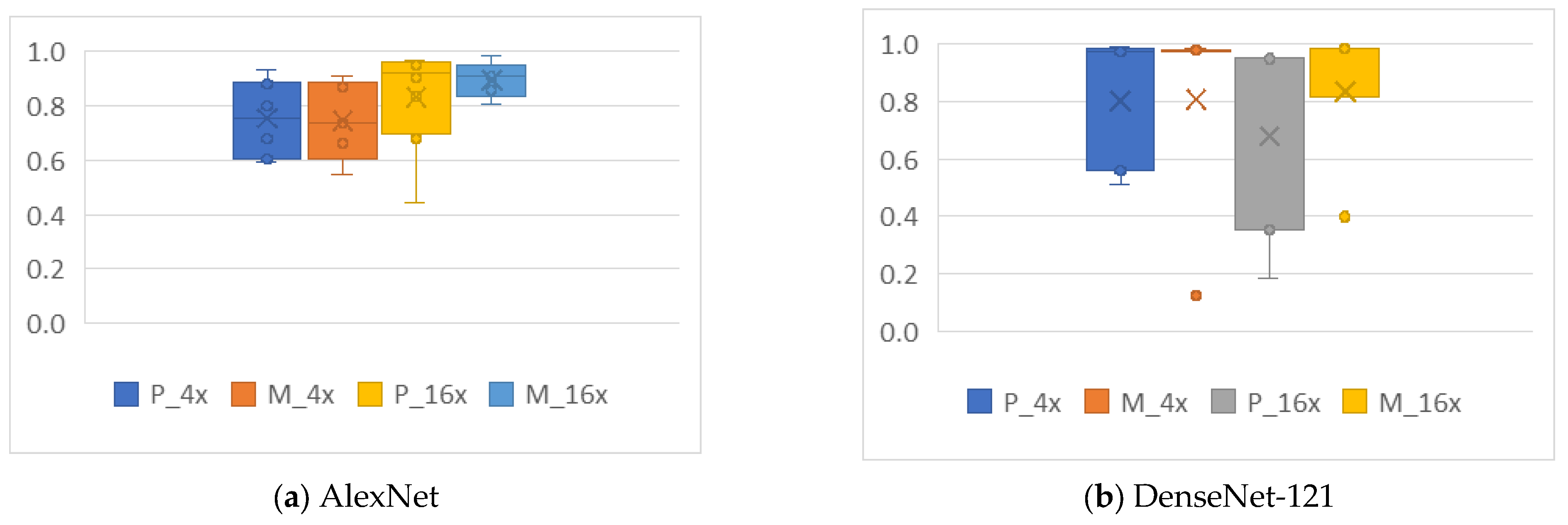

4.4.1. Cropping

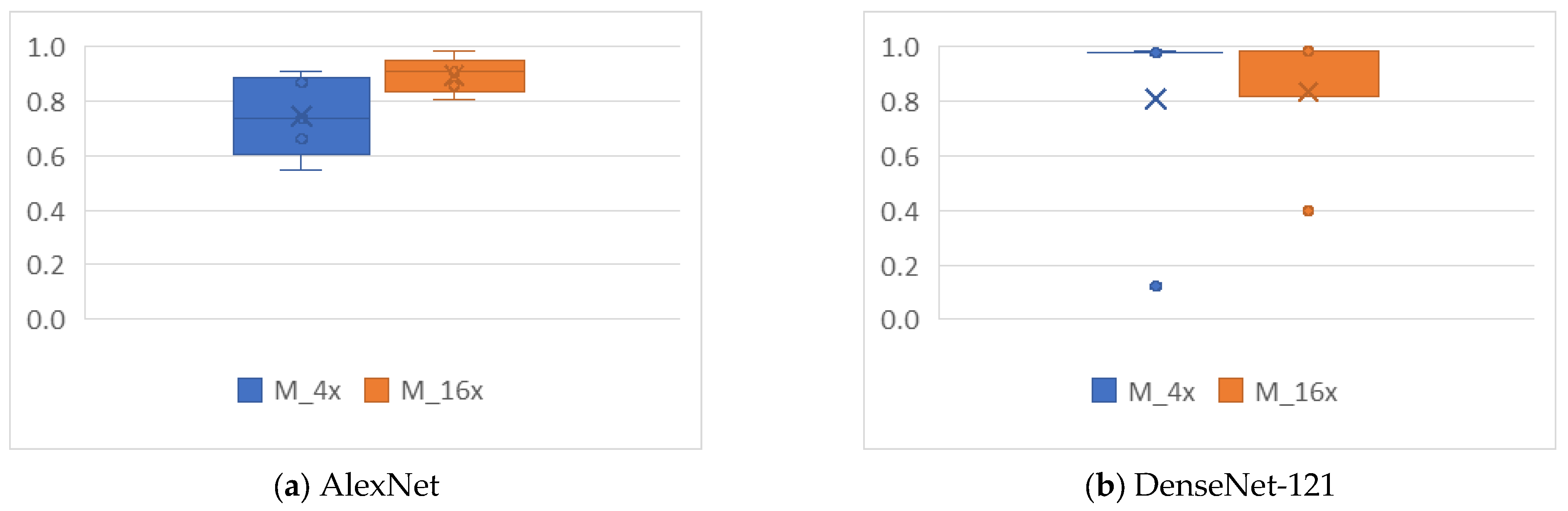

4.4.2. Mirroring

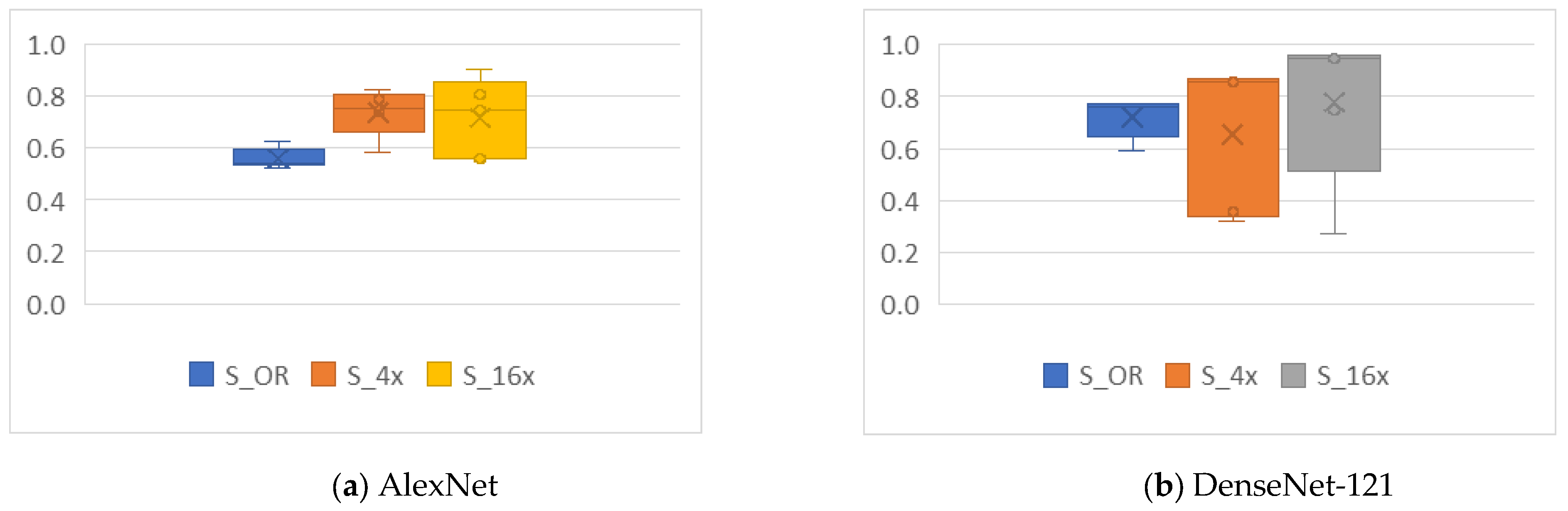

4.4.3. Rotation

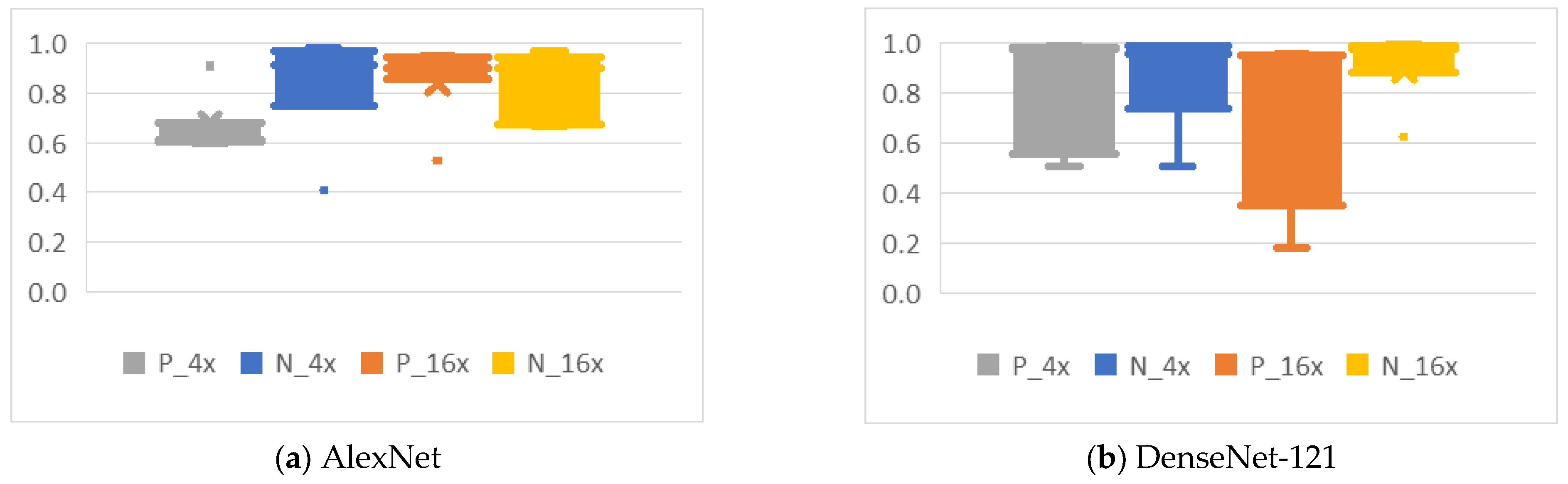

4.4.4. Noise

4.5. Summary of Results, Limitations, Practical Implications, and Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

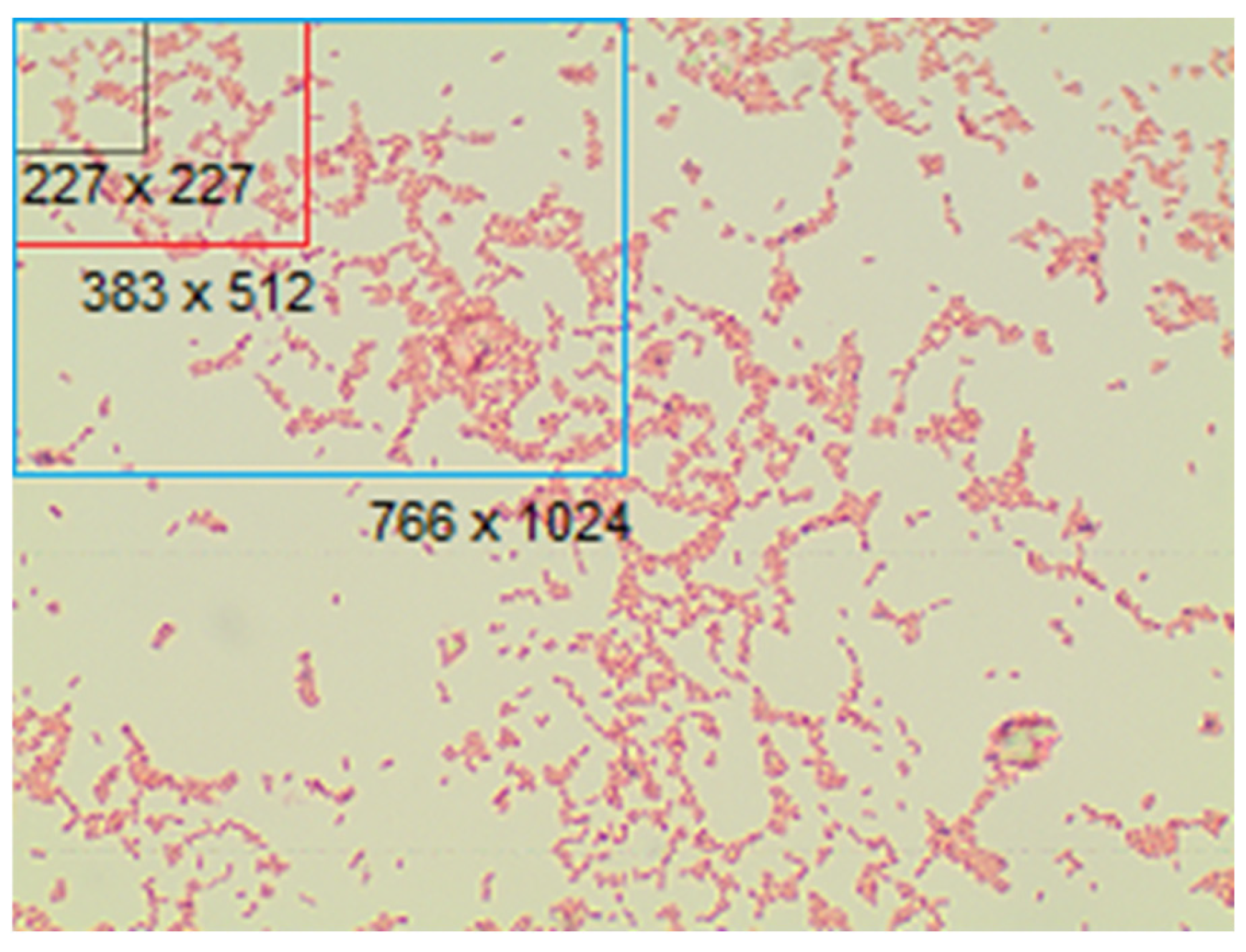

| Sub-Dataset | Description | Resolution (in Pixels) | Number of Images |
|---|---|---|---|
| J_OR | OR converted to JPG (lossy compression) | 1532 × 2048 | 689 |
| J_4x | J_OR cropped in 4 parts | 766 × 1024 | 2756 |
| J_16x | J_OR cropped in 16 parts | 383 × 512 | 11,024 |
| J_227 | J_OR cropped in 227 × 227 pixels images (AlexNet) | 227 × 227 | 37,206 |
| J_224 | J_OR cropped in 224 × 224 pixels images (DenseNet-121) | 224 × 224 | 37,206 |
| J50_OR | OR converted to JPG (50% lossy compression) | 1532 × 2048 | 689 |
| J50_4x | J50_OR cropped in 4 parts | 766 × 1024 | 2756 |
| J50_16x | J50_OR cropped in 16 parts | 383 × 512 | 11,024 |
| J75_OR | OR converted to JPG (75% lossy compression) | 1532 × 2048 | 689 |
| J75_4x | J75_OR cropped in 4 parts | 766 × 1024 | 2756 |
| J75_16x | J75_OR cropped in 16 parts | 383 × 512 | 11,024 |
| J95_OR | OR converted to JPG (95% lossy compression) | 1532 × 2048 | 689 |
| J95_4x | J95_OR cropped in 4 parts | 766 × 1024 | 2756 |
| J95_16x | J95_OR cropped in 16 parts | 383 × 512 | 11,024 |
| P_OR | OR converted to PNG (lossless compression) | 1532 × 2048 | 689 |
| P_4x | P_OR cropped in 4 parts | 766 × 1024 | 2756 |
| P_16x | P_OR cropped in 16 parts | 383 × 512 | 11,024 |
| P_227 | P_OR cropped images with 227 × 227 pixels (AlexNet) | 227 × 227 | 37,206 |
| P_224 | P_OR cropped images with 224 × 224 pixels (DenseNet-121) | 224 × 224 | 37,206 |
| S_OR | Random images from P_227 or P_224 | 1532 × 2048 | 689 |
| S_4x | Random images from P_227 or P_224 | 766 × 1024 | 2756 |
| S_16x | Random images from P_227 or P_224 | 383 × 512 | 11,024 |
| D_OR | P_OR cropped (using the largest possible dimension) | 1532 × 1532 | 689 |
| D_4x | D_OR cropped in 4 parts | 766 × 766 | 2756 |
| D_16x | D_OR cropped in 16 parts | 383 × 383 | 11,024 |
| M_4x | P_OR mirrored in x, in y, and in x and y | 1532 × 1532 | 2756 |
| M_16x | M_4x cropped in 4 parts | 766 × 766 | 11,024 |
| R_4x | P_OR rotated by 90, 180 and 270 degrees | 1532 × 2048 | 2756 |
| R_16x | R_4x cropped in 4 parts | 766 × 1024 | 11,024 |
| A_4x | P_OR rotated randomly from 0 to 90 degrees | 1532 × 2048 | 2756 |
| A_16x | A_4x cropped in 4 parts | 766 × 1024 | 11,024 |
| N_4x | P_OR noise added | 1532 × 2048 | 2756 |
| N_16x | N_4x cropped in 4 parts | 766 × 1024 | 11,024 |
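The resolutions and image counts in the table above can be cross-checked with simple arithmetic. The sketch below assumes non-overlapping tiles and discards any remainder at the image border. The tiling scheme is not stated here, but this assumption reproduces the reported counts. It also computes the ratio between the two axis scale factors when an image is resized to a square network input, which is one way to quantify non-uniform scaling. The function names are hypothetical.

```python
def grid_tile_size(height, width, parts_per_side):
    """Tile size when an image is cut into parts_per_side x parts_per_side tiles."""
    return height // parts_per_side, width // parts_per_side

def fixed_tile_count(height, width, tile):
    """Number of non-overlapping tile x tile crops (assumed: border remainder dropped)."""
    return (height // tile) * (width // tile)

def axis_scale_ratio(height, width, target):
    """Vertical scale factor divided by horizontal scale factor for a square resize."""
    return (target / height) / (target / width)

N_IMAGES = 689           # images in the original dataset
H, W = 1532, 2048        # original resolution in pixels

print(grid_tile_size(H, W, 2), N_IMAGES * 4)      # 4 parts per image
print(grid_tile_size(H, W, 4), N_IMAGES * 16)     # 16 parts per image
print(fixed_tile_count(H, W, 227) * N_IMAGES)     # 227 x 227 crops (AlexNet input)
print(fixed_tile_count(H, W, 224) * N_IMAGES)     # 224 x 224 crops (DenseNet-121 input)
print(round(axis_scale_ratio(H, W, 227), 3))      # rectangular source, non-uniform
print(axis_scale_ratio(1532, 1532, 227))          # square D_OR source, uniform
```

Under these assumptions each original image yields 54 fixed-size crops for both input sizes, giving 37,206 images. The rectangular datasets are stretched about 1.34 times more along one axis than the other when resized to a square input, whereas the square D_* datasets are scaled uniformly.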


| Bacteria | Number of Images | Bacteria | Number of Images |
|---|---|---|---|
| Acinetobacter baumanii | 20 | Lactobacillus plantarum | 20 |
| Actinomyces israeli | 23 | Lactobacillus reuteri | 20 |
| Bacteroides fragilis | 23 | Lactobacillus rhamnosus | 20 |
| Bifidobacterium spp. | 23 | Lactobacillus salivarius | 20 |
| Candida albicans | 20 | Listeria monocytogenes | 22 |
| Clostridium perfringens | 23 | Micrococcus spp | 21 |
| Enterococcus faecalis | 20 | Neisseria gonorrhoeae | 23 |
| Enterococcus faecium | 20 | Porfyromonas gingivalis | 23 |
| Escherichia coli | 20 | Propionibacterium acnes | 23 |
| Fusobacterium | 23 | Proteus | 20 |
| Lactobacillus casei | 20 | Pseudomonas aeruginosa | 20 |
| Lactobacillus crispatus | 20 | Staphylococcus aureus | 20 |
| Lactobacillus delbrueckii | 20 | Staphylococcus epidermidis | 20 |
| Lactobacillus gasseri | 20 | Staphylococcus saprophiticus | 20 |
| Lactobacillus jehnsenii | 20 | Streptococcus agalactiae | 20 |
| Lactobacillus johnsonii | 20 | Veionella | 22 |
| Lactobacillus paracasei | 20 |  |  |

| Resource | GPU with Standard RAM | GPU with High RAM |
|---|---|---|
| GPU | A100-SXM4-40 GB | Tesla P100-PCIE-16 GB |
| RAM | 12.68 GB | 25.45 GB |
| Hard drive | 166.77 GB | 166.77 GB |
| CPU | 2 Intel® Xeon® 2.20 GHz | 4 Intel® Xeon® 2.20 GHz |

| Dataset | Epochs | Learning Rate | Batch Size | Training Accuracy | Validation Accuracy |
|---|---|---|---|---|---|
| J_16x | 50 | 0.0015 | 128 | 0.9901 | 0.9533 |
| J_16x | 50 | 0.0015 | 128 | 0.9732 | 0.9369 |
| J_16x | 50 | 0.0015 | 128 | 0.9776 | 0.9351 |
| J_4x | 75 | 0.0015 | 64 | 0.9891 | 0.9111 |
| J_4x | 75 | 0.0015 | 128 | 0.9202 | 0.8221 |
| J_4x | 75 | 0.0015 | 64 | 0.9052 | 0.8094 |
| J_OR | 75 | 0.0015 | 64 | 0.9475 | 0.7810 |
| J_OR | 75 | 0.001 | 64 | 0.9837 | 0.7737 |
| J_OR | 75 | 0.0015 | 64 | 0.9475 | 0.7445 |

| Dataset | Epochs | Learning Rate | Batch Size | Training Accuracy | Validation Accuracy |
|---|---|---|---|---|---|
| J_OR | 50 | 0.0015 | 64 | 0.9909 | 0.9635 |
| J_OR | 50 | 0.001 | 64 | 0.9946 | 0.9562 |
| J_OR | 50 | 0.0001 | 64 | 0.9909 | 0.9562 |
| J_4x | 25 | 0.001 | 64 | 0.9935 | 0.9855 |
| J_4x | 25 | 0.001 | 64 | 0.9917 | 0.9823 |
| J_4x | 25 | 0.001 | 64 | 0.9905 | 0.9809 |
| J_16x | 25 | 0.001 | 64 | 0.9821 | 0.9492 |
| J_16x | 25 | 0.001 | 64 | 0.9812 | 0.9474 |
| J_16x | 25 | 0.001 | 64 | 0.9781 | 0.9456 |

| AlexNet dataset | AlexNet val_acc | DenseNet-121 dataset | DenseNet-121 val_acc | AlexNet dataset (cont.) | AlexNet val_acc (cont.) | DenseNet-121 dataset (cont.) | DenseNet-121 val_acc (cont.) |
|---|---|---|---|---|---|---|---|
| A_16x | 0.9861 | R_16x | 0.9982 | J_16x | 0.9211 | S_16x | 0.9605 |
| M_16x | 0.9855 | R_4x | 0.9964 | R_4x | 0.9165 | J95_16x | 0.9596 |
| N_4x | 0.9819 | A_4x | 0.9948 | J95_4x | 0.9165 | J75_16x | 0.9564 |
| N_16x | 0.9732 | A_16x | 0.9946 | J_4x | 0.9111 | J_OR | 0.9562 |
| P_16x | 0.9660 | N_16x | 0.9936 | M_4x | 0.9074 | J50_OR | 0.9562 |
| R_16x | 0.9651 | N_4x | 0.9909 | J75_4x | 0.8966 | P_16x | 0.9551 |
| D_16x | 0.9646 | P_4x | 0.9882 | J75_OR | 0.8613 | J_16x | 0.9492 |
| A_4x | 0.9619 | J95_4x | 0.9868 | J95_OR | 0.8467 | J50_16x | 0.9437 |
| S_16x | 0.9477 | M_16x | 0.9859 | D_OR | 0.8394 | D_OR | 0.9416 |
| J75_16x | 0.9469 | J75_4x | 0.9850 | S_4x | 0.8271 | J75_OR | 0.9416 |
| J50_16x | 0.9428 | M_4x | 0.9837 | J50_OR | 0.8175 | J95_OR | 0.9416 |
| J95_16x | 0.9374 | J_4x | 0.9823 | P_OR | 0.8102 | P_OR | 0.9343 |
| D_4x | 0.9365 | D_4x | 0.9819 | J_OR | 0.7810 | S_4x | 0.8711 |
| P_4x | 0.9310 | J50_4x | 0.9819 | S_OR | 0.6277 | S_OR | 0.7737 |
| J50_4x | 0.9238 | D_16x | 0.9814 |  |  |  |  |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Boukouvalas, D.T.; Bissaco, M.A.S.; Dellê, H.; Deana, A.M.; Belan, P.A.; Araújo, S.A.d. Comprehensive Assessment of CNN Sensitivity in Automated Microorganism Classification: Effects of Compression, Non-Uniform Scaling, and Data Augmentation. BioMedInformatics 2025, 5, 61. https://doi.org/10.3390/biomedinformatics5040061
