Advancing Emotion Recognition: EEG Analysis and Machine Learning for Biomedical Human–Machine Interaction

Abstract

1. Introduction

1.1. Background

1.2. A Physiological Perspective

- The insular cortex and the hypothalamus: both are crucially involved in generating the autonomic components of emotions [9]. The human insular cortex lies deep within the lateral (Sylvian) fissure and is connected to the amygdala and to various limbic and association cortical areas [11]. The hypothalamus is a small, centrally located structure beneath the thalamus [12].

1.3. Electroencephalography (EEG) and Its Assessment

1.4. Problem

1.5. Supporting Studies

1.6. Objectives

2. Materials and Methods

2.1. Input Data

- Display of a 2 s information screen showing the number of the current trial, to keep participants informed of their progress through the experiment.

- Display of a fixation cross during a 5 s baseline recording.

- Display of the selected music video, lasting 1 min.

- Self-assessment of arousal, valence, liking and dominance.
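Once a sampling rate is fixed, the trial timeline above can be converted into sample indices. The sketch below is a minimal illustration under several assumptions, none of which is stated in the source: a hypothetical 128 Hz sampling rate, a trial array of shape (channels, samples) that starts at the information screen with no gaps between phases, and per-channel mean subtraction as one common form of baseline correction. The helper names `split_trial` and `baseline_correct` are illustrative.

```python
import numpy as np

# Phase durations in seconds, taken from the trial protocol above.
INFO_S, BASELINE_S, VIDEO_S = 2, 5, 60


def split_trial(trial, fs):
    """Return the (baseline, video) segments of one trial.

    trial: array of shape (n_channels, n_samples) that starts at the information
    screen; the phases are assumed to follow each other without gaps
    (a hypothetical layout, not stated in the source).
    fs: sampling rate in Hz, as an integer.
    """
    b_start = INFO_S * fs                  # baseline begins after the info screen
    v_start = b_start + BASELINE_S * fs    # video begins after the baseline
    v_end = v_start + VIDEO_S * fs
    if trial.shape[1] < v_end:
        raise ValueError("trial is shorter than the expected protocol")
    return trial[:, b_start:v_start], trial[:, v_start:v_end]


def baseline_correct(baseline, video):
    """Subtract each channel's mean baseline value from its video segment."""
    return video - baseline.mean(axis=1, keepdims=True)


# Usage with random data standing in for a 32-channel recording.
fs = 128  # hypothetical sampling rate in Hz
trial = np.random.default_rng(0).normal(size=(32, (INFO_S + BASELINE_S + VIDEO_S) * fs))
baseline, video = split_trial(trial, fs)
video_corrected = baseline_correct(baseline, video)
```

The self-assessment phase is left out because it follows the video and, in this sketch, is assumed not to be needed for feature extraction.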

2.2. Methodology

2.2.1. Data Preparation and Analysis

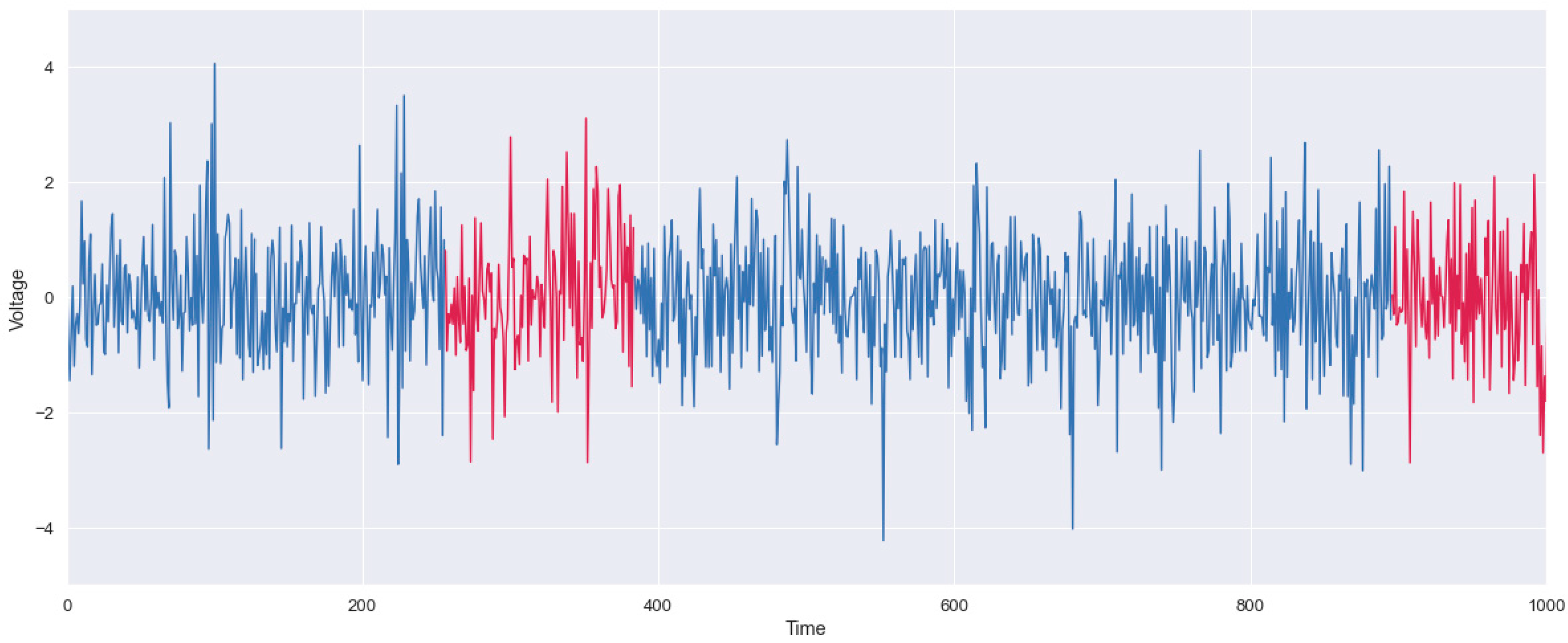

2.2.2. Irregularity Detection

2.2.3. Feature Extraction

2.2.4. Emotion Classification Task

2.2.5. Statistical Analysis

3. Results

Statistical Analysis

4. Discussion

5. Conclusions

5.1. Main Contributions

5.2. Comparative Study

5.3. Limitations and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Study | Data | Stimuli | Methods/Algorithms |
|---|---|---|---|
| EEG-based emotion classification using deep belief networks [26] | Obtained from 6 individuals (3 men and 3 women), each recorded in two sessions held at least one week apart, using an ESI NeuroScan system with a 62-channel electrode cap. | 12 emotional film clips (6 positive and 6 negative), each 4 min long. | DBN-HMM (Deep Belief Network-Hidden Markov Model), DBN (Deep Belief Network), GELM (Graph-regularised Extreme Learning Machine), SVM (Support Vector Machine) and KNN (K-Nearest Neighbors) |
| Human Emotion Detection Via Brain Waves Study by Using Electroencephalogram (EEG) [27] | Obtained from an unspecified group of individuals; recorded with a CONTEC KT88-3200 32-channel electroencephalograph connected to an electrode cap. | Four 2-min videos corresponding to anger, sadness, joy and surprise. | The extracted features were classified using artificial intelligence techniques for emotional faces. |
| Automated Feature Extraction on AsMap for Emotion Classification Using EEG [28] | SEED and DEAP datasets, used in classification problems with different numbers of classes. | 15 excerpts from Chinese films, each about 4 min long. | AsMap + CNN (Asymmetric Map + Convolutional Neural Network), DE (Differential Entropy), DASM (Differential Asymmetry), RASM (Relative Asymmetry) and DCAU (Differential Caudality) |
| Emotion Classification from EEG Signals in Convolutional Neural Networks [29] | Acquired from 10 women aged 24 to 33; recorded with the NeuroSky MindWave Mobile headset. | A specially edited 224 s video containing scenes of joy/fun, sadness and fear. | CNN (Convolutional Neural Network) |
| Emotion Classification Using EEG Signals [30] | DEAP dataset | 40 one-minute music videos | Naïve Bayes, SVM |
| Emotion Recognition Based on DEAP Database using EEG Time-Frequency Features and Machine Learning Methods [31] | DEAP dataset | 40 one-minute music videos | Random Forest, SVM, k-NN and Weighted k-NN |

References

- Nonweiler, J.; Vives, J.; Barrantes-Vidal, N.; Ballespí, S. Emotional self-knowledge profiles and relationships with mental health indicators support value in ‘knowing thyself’. Sci. Rep. 2024, 14, 7900.

- Aballay, L.N.; Collazos, C.A.; Aciar, S.V.; Torres, A.A. Analysis of Emotion Recognition Methods: A Systematic Mapping of the Literature. In International Congress of Telematics and Computing; Springer Nature: Cham, Switzerland, 2024; pp. 298–313.

- Misselhorn, C.; Poljanšek, T.; Störzinger, T.; Klein, M. (Eds.) Emotional Machines; Springer Fachmedien: Wiesbaden, Germany, 2023.

- Spezialetti, M.; Placidi, G.; Rossi, S. Emotion Recognition for Human-Robot Interaction: Recent Advances and Future Perspectives. Front. Robot. AI 2020, 7, 532279.

- Hattingh, C.J.; Ipser, J.; Tromp, S.A.; Syal, S.; Lochner, C.; Brooks, S.J.; Stein, D.J. Functional magnetic resonance imaging during emotion recognition in social anxiety disorder: An activation likelihood meta-analysis. Front. Hum. Neurosci. 2013, 6, 347.

- Coelho, L. Speech as an Emotional Load Biomarker in Clinical Applications. Med. Interna 2024, 31, 7–13.

- Suhaimi, N.S.; Mountstephens, J.; Teo, J. EEG-Based Emotion Recognition: A State-of-the-Art Review of Current Trends and Opportunities. Comput. Intell. Neurosci. 2020, 2020, 8875426.

- Li, M.; Lu, B.-L. Emotion classification based on gamma-band EEG. In Proceedings of the 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 3–6 September 2009; pp. 1223–1226.

- Gainotti, G. ENS TEACHING REVIEW Disorders of emotional behaviour. J. Neurol. 2001, 248, 743–749.

- Bellani, M.; Baiano, M.; Brambilla, P. Brain anatomy of major depression II. Focus on amygdala. Epidemiol. Psychiatr. Sci. 2011, 20, 33–36.

- Nieuwenhuys, R. The insular cortex: A review. Prog. Brain Res. 2012, 195, 123–163.

- Baloyannis, S.; Gordeladze, J. (Eds.) Hypothalamus in Health and Diseases; BoD–Books on Demand: London, UK, 2018.

- Park, Y.-S.; Sammartino, F.; Young, N.A.; Corrigan, J.; Krishna, V.; Rezai, A.R. Anatomic Review of the Ventral Capsule/Ventral Striatum and the Nucleus Accumbens to Guide Target Selection for Deep Brain Stimulation for Obsessive-Compulsive Disorder. World Neurosurg. 2019, 126, 1–10.

- Bhanji, J.; Smith, D.; Delgado, M. A Brief Anatomical Sketch of Human Ventromedial Prefrontal Cortex. PsyArXiv 2019.

- Bericat, E. The sociology of emotions: Four decades of progress. Curr. Sociol. 2016, 64, 491–513.

- Chafale, D.; Pimpalkar, A. Review on developing corpora for sentiment analysis using plutchik’s wheel of emotions with fuzzy logic. Int. J. Comput. Sci. Eng. 2014, 2, 14–18.

- Liu, Y.; Sourina, O.; Nguyen, M.K. Real-Time EEG-Based Emotion Recognition and Its Applications. In Transactions on Computational Science XII: Special Issue on Cyberworlds; Springer: Berlin/Heidelberg, Germany, 2011; pp. 256–277.

- Liu, H.; Zhang, Y.; Li, Y.; Kong, X. Review on Emotion Recognition Based on Electroencephalography. Front. Comput. Neurosci. 2021, 15, 758212.

- Müller-Putz, G.R. Electroencephalography. Handb. Clin. Neurol. 2020, 168, 249–262.

- Subha, D.P.; Joseph, P.K.; Acharya, U.R.; Lim, C.M. EEG signal analysis: A survey. J. Med. Syst. 2010, 34, 195–212.

- Crabbe, J.B.; Dishman, R.K. Brain electrocortical activity during and after exercise: A quantitative synthesis. Psychophysiology 2004, 41, 563–574.

- Groppe, D.M.; Bickel, S.; Keller, C.J.; Jain, S.K.; Hwang, S.T.; Harden, C.; Mehta, A.D. Dominant frequencies of resting human brain activity as measured by the electrocorticogram. Neuroimage 2013, 79, 223–233.

- Adriano, T.; Arriaga, P. Exaustão emocional e reconhecimento de emoções na face e voz em médicos. Psicol. Saúde Doenças 2016, 17, 97–104.

- Santos, P.A.R.D. Rastreamento Virtual da Face: Um Sistema Para Espelhar Emoções. Master’s Thesis, Universidade do Minho, Braga, Portugal, 2019.

- Zheng, W.-L.; Zhu, J.-Y.; Peng, Y.; Lu, B.-L. EEG-based emotion classification using deep belief networks. In Proceedings of the 2014 IEEE International Conference on Multimedia and Expo (ICME), Chengdu, China, 14–18 July 2014; pp. 1–6.

- Ismail, W.O.A.S.W.; Hanif, M.; Mohamed, S.B.; Hamzah, N.; Rizman, Z.I. Human Emotion Detection via Brain Waves Study by Using Electroencephalogram (EEG). Int. J. Adv. Sci. Eng. Inf. Technol. 2016, 6, 1005.

- Ahmed, M.Z.I.; Sinha, N.; Phadikar, S.; Ghaderpour, E. Automated Feature Extraction on AsMap for Emotion Classification Using EEG. Sensors 2022, 22, 2346.

- Donmez, H.; Ozkurt, N. Emotion Classification from EEG Signals in Convolutional Neural Networks. In Proceedings of the 2019 Innovations in Intelligent Systems and Applications Conference (ASYU), Izmir, Turkey, 31 October–2 November 2019; pp. 1–6.

- Dabas, H.; Sethi, C.; Dua, C.; Dalawat, M.; Sethia, D. Emotion Classification Using EEG Signals. In Proceedings of the 2018 2nd International Conference on Computer Science and Artificial Intelligence, Shenzhen, China, 8–10 December 2018; ACM: New York, NY, USA, 2018; pp. 380–384.

- Kusumaningrum, T.D.; Faqih, A.; Kusumoputro, B. Emotion Recognition Based on DEAP Database using EEG Time-Frequency Features and Machine Learning Methods. J. Phys. Conf. Ser. 2020, 1501, 012020.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis; Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

- Li, J.W.; Lin, D.; Che, Y.; Lv, J.J.; Chen, R.J.; Wang, L.J.; Zeng, X.X.; Ren, J.C.; Zhao, H.M.; Lu, X. An innovative EEG-based emotion recognition using a single channel-specific feature from the brain rhythm code method. Front. Neurosci. 2023, 17, 1221512.

- Paulauskas, N.; Baskys, A. Application of Histogram-Based Outlier Scores to Detect Computer Network Anomalies. Electronics 2019, 8, 1251.

- Wang, R.; Wang, J.; Yu, H.; Wei, X.; Yang, C.; Deng, B. Power spectral density and coherence analysis of Alzheimer’s EEG. Cogn. Neurodyn. 2015, 9, 291–304.

- Gramfort, A. MEG and EEG data analysis with MNE-Python. Front. Neurosci. 2013, 7, 267.

- Jakkula, V. Tutorial on support vector machine (svm). Sch. EECS Wash. State Univ. 2006, 37, 3.

- Desai, M.; Shah, M. An anatomization on breast cancer detection and diagnosis employing multi-layer perceptron neural network (MLP) and Convolutional neural network (CNN). Clin. Ehealth 2021, 4, 1–11.

- Kulkarni, V.Y.; Sinha, P.K. Random forest classifiers: A survey and future research directions. Int. J. Adv. Comput. 2013, 36, 1144–1153.

- McKnight, P.E.; Najab, J. Mann-Whitney U Test. In The Corsini Encyclopedia of Psychology; Wiley: Hoboken, NJ, USA, 2010; p. 1.

- Cunha, J.P.Z. Um Estudo Comparativo das Técnicas de Validação Cruzada Aplicadas a Modelos Mistos. Master’s Thesis, Universidade de São Paulo, São Paulo, Brazil, 2019.

- Mangabeira, J.D.C.; de Azevedo, E.C.; Lamparelli, R.A.C. Avaliação do Levantamento do Uso das Terras por Imagens de Satélite de Alta e Média Resolução Espacial; Technical Report; SIDALC: Campinas, Brazil, 2003.

- Baptista, I.A.C.S. Desenvolvimento de um Jogo Controlado Através de Potenciais EEG Estacionários Evocados Visualmente. Master’s Thesis, Universidade de Coimbra, Coimbra, Portugal, 2015.

- Junior, G.D.B.V.; Lima, B.N.; de Almeida Pereira, A.; Rodrigues, M.F.; de Oliveira, J.R.L.; Silio, L.F.; dos Santos Carvalho, A.; Ferreira, H.R.; Passos, R.P. Determinação das métricas usuais a partir da matriz de confusão de classificadores multiclasses em algoritmos inteligentes nas ciências do movimento humano. Cent. Pesqui. Avançadas Qual. Vida 2022, 14, 1.

- Craig, A.; Tran, Y.; Wijesuriya, N.; Nguyen, H. Regional brain wave activity changes associated with fatigue. Psychophysiology 2012, 49, 574–582.

| Valence and Arousal Combinations | Emotional State |
|---|---|
| High arousal and high valence (HAHV) | Excited and happy |
| Low arousal and high valence (LAHV) | Calm and relaxed |
| High arousal and low valence (HALV) | Angry and nervous |
| Low arousal and low valence (LALV) | Sad and bored |

| | HAHV | LAHV | HALV | LALV |
|---|---|---|---|---|
| Average valence ± Standard deviation | 7.12 ± 1.04 | 6.67 ± 1.09 | 3.19 ± 1.23 | 3.57 ± 1.12 |
| Average arousal ± Standard deviation | 6.81 ± 0.84 | 3.87 ± 1.09 | 6.79 ± 0.96 | 3.47 ± 1.19 |
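The two tables above relate self-assessment ratings to valence-arousal quadrants and summarise each quadrant by its mean and standard deviation. The following is a minimal sketch of how such a labelling and summary could be computed. It assumes ratings on a 1–9 scale split at a threshold of 5, a frequently used split point that is assumed here rather than confirmed by the source; a rating equal to the threshold counts as "low", and the sample standard deviation is reported. The helpers `quadrant` and `summarize` are hypothetical.

```python
from statistics import mean, stdev


def quadrant(valence, arousal, threshold=5.0):
    """Map one (valence, arousal) rating pair to HAHV, LAHV, HALV or LALV.

    Ratings strictly above the (assumed) threshold are treated as 'high'.
    """
    a = "HA" if arousal > threshold else "LA"
    v = "HV" if valence > threshold else "LV"
    return a + v


def summarize(ratings, threshold=5.0):
    """Group (valence, arousal) pairs by quadrant and return mean and sample SD."""
    groups = {}
    for v, a in ratings:
        groups.setdefault(quadrant(v, a, threshold), []).append((v, a))
    summary = {}
    for label, pairs in groups.items():
        vals = [v for v, _ in pairs]
        aros = [a for _, a in pairs]
        summary[label] = {
            # stdev needs at least two values; report 0.0 for a single rating
            "valence": (mean(vals), stdev(vals) if len(vals) > 1 else 0.0),
            "arousal": (mean(aros), stdev(aros) if len(aros) > 1 else 0.0),
        }
    return summary


# Usage with made-up ratings (not DEAP data).
ratings = [(7.5, 7.0), (6.8, 3.2), (2.9, 7.4), (3.1, 2.5), (8.0, 6.5)]
for label, stats in summarize(ratings).items():
    print(label, stats)
```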

| Region | Band | HALV: Alpha | HALV: Beta | HALV: Gamma | HAHV: Alpha | HAHV: Beta | HAHV: Gamma | LALV: Alpha | LALV: Beta | LALV: Gamma | LAHV: Alpha | LAHV: Beta | LAHV: Gamma |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Frontal | Theta | <0.001 | 0.009 | <0.001 | <0.001 | 0.014 | <0.001 | 0.885 | 0.006 | 0.010 | 0.371 | 0.751 | 0.707 |
| Frontal | Alpha | – | <0.001 | <0.001 | – | <0.001 | 0.001 | – | <0.001 | <0.001 | – | 0.312 | 0.583 |
| Frontal | Beta | – | – | <0.001 | – | – | <0.001 | – | – | <0.001 | – | – | 0.665 |
| Central | Theta | 0.180 | 0.394 | 0.485 | 0.093 | 0.937 | 0.065 | 0.240 | 0.310 | 1.000 | 0.026 | 0.589 | 0.310 |
| Central | Alpha | – | 0.180 | 0.394 | – | 0.009 | 0.589 | – | 1.000 | 0.240 | – | 0.310 | 0.180 |
| Central | Beta | – | – | 0.818 | – | – | 0.009 | – | – | 0.394 | – | – | 0.699 |
| Temporal | Theta | 0.667 | 1.000 | 0.667 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.333 | 1.000 | 0.333 |
| Temporal | Alpha | – | 0.667 | 1.000 | – | 0.667 | 1.000 | – | 1.000 | 0.667 | – | 0.333 | 1.000 |
| Temporal | Beta | – | – | 0.667 | – | – | 0.667 | – | – | 1.000 | – | – | 0.333 |
| Parietal | Theta | 0.026 | 0.240 | 0.132 | 0.002 | 0.132 | 0.002 | 1.000 | 0.394 | 0.589 | 0.002 | 0.132 | 0.065 |
| Parietal | Alpha | – | 0.065 | 0.132 | – | 0.002 | 0.009 | – | 0.589 | 0.180 | – | 0.394 | 0.065 |
| Parietal | Beta | – | – | 0.394 | – | – | 0.002 | – | – | 0.240 | – | – | 1.000 |
| Occipital | Theta | 0.333 | 0.333 | 0.333 | 0.333 | 0.333 | 0.333 | 0.667 | 0.667 | 0.667 | 0.333 | 0.667 | 0.333 |
| Occipital | Alpha | – | 0.333 | 0.333 | – | 0.333 | 0.333 | – | 0.667 | 0.667 | – | 0.333 | 0.667 |
| Occipital | Beta | – | – | 0.333 | – | – | 0.333 | – | – | 0.667 | – | – | 0.667 |
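The p-value table compares pairs of frequency bands within each region and quadrant. One way to produce such an upper-triangular set of comparisons is to run a two-sided Mann–Whitney U test for every band pair with SciPy's `scipy.stats.mannwhitneyu`, as sketched below. What each sample contains (for example, one band-power value per channel of the region) is an assumption, as are the helper name `pairwise_band_tests` and the example values.

```python
from itertools import combinations

from scipy.stats import mannwhitneyu

# Band order follows the rows/columns of the p-value table above.
BANDS = ("theta", "alpha", "beta", "gamma")


def pairwise_band_tests(powers):
    """Two-sided Mann-Whitney U test for every pair of frequency bands.

    powers: {band: sequence of band-power values} for ONE region and ONE quadrant.
    Returns {(band_a, band_b): p_value} for the upper triangle only, matching the
    Theta/Alpha/Beta row layout of the table.
    """
    results = {}
    for band_a, band_b in combinations(BANDS, 2):
        test = mannwhitneyu(powers[band_a], powers[band_b], alternative="two-sided")
        results[(band_a, band_b)] = float(test.pvalue)
    return results


# Hypothetical band-power values for six channels of one region in one quadrant.
example = {
    "theta": [2.1, 2.4, 1.9, 2.6, 2.2, 2.0],
    "alpha": [1.5, 1.7, 1.4, 1.8, 1.6, 1.3],
    "beta": [0.9, 1.1, 0.8, 1.0, 1.2, 0.7],
    "gamma": [0.4, 0.5, 0.3, 0.6, 0.45, 0.35],
}
for (a, b), p in pairwise_band_tests(example).items():
    print(f"{a} vs {b}: p = {p:.3f}")
```

With very small groups, the exact test can produce only a few distinct p-values. With two values per group, for example, the only possible two-sided results are 0.333, 0.667 and 1.000.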

| Arousal | SVM | MLP | RF |
|---|---|---|---|
| Average accuracy ± Standard deviation | 0.5931 ± 0.0364 | 0.5972 ± 0.0321 | 0.5772 ± 0.0273 |
| Average F1 score ± Standard deviation | 0.6458 ± 0.0388 | 0.6354 ± 0.0435 | 0.5899 ± 0.0398 |

| Valence | SVM | MLP | RF |
|---|---|---|---|
| Average accuracy ± Standard deviation | 0.5775 ± 0.0367 | 0.5989 ± 0.0399 | 0.6270 ± 0.0324 |
| Average F1 score ± Standard deviation | 0.5852 ± 0.0360 | 0.6278 ± 0.0359 | 0.6514 ± 0.0354 |
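For reference, accuracy and F1 score have the following standard binary definitions, written in terms of true positives (TP), true negatives (TN), false positives (FP) and false negatives (FN). The source does not state which class (high or low) was treated as positive, so the formulas below apply to whichever class is chosen.

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
\text{Precision} = \frac{TP}{TP + FP}, \qquad
\text{Recall} = \frac{TP}{TP + FN}
$$

$$
F_1 = 2\,\frac{\text{Precision}\cdot\text{Recall}}{\text{Precision} + \text{Recall}} = \frac{2\,TP}{2\,TP + FP + FN}
$$

Because F1 ignores true negatives, it can differ noticeably from accuracy when the two classes are unbalanced, which is why both metrics are reported for each classifier.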

| | Left | Frontal | Right | Central | Parietal | Occipital |
|---|---|---|---|---|---|---|
| Theta | 51.22 | 51.22 | 60.16 | 60.98 | 52.03 | 52.03 |
| Alpha | 59.35 | 56.91 | 54.47 | 59.35 | 55.28 | 60.16 |
| Beta | 63.41 | 58.54 | 55.28 | 59.35 | 63.41 | 57.72 |
| Gamma | 56.10 | 65.85 | 56.10 | 60.98 | 62.60 | 60.16 |

| | Left | Frontal | Right | Central | Parietal | Occipital |
|---|---|---|---|---|---|---|
| Theta | 43.09 | 53.66 | 50.41 | 56.10 | 56.10 | 57.72 |
| Alpha | 51.22 | 53.66 | 53.66 | 55.28 | 54.47 | 47.97 |
| Beta | 52.85 | 50.41 | 52.03 | 47.97 | 52.85 | 51.22 |
| Gamma | 60.98 | 54.47 | 47.97 | 59.35 | 55.28 | 56.10 |

| | Left | Frontal | Right | Central | Parietal | Occipital |
|---|---|---|---|---|---|---|
| Theta | 58.82 | 58.06 | 59.02 | 63.38 | 60.00 | 50.82 |
| Alpha | 55.71 | 62.60 | 63.70 | 54.84 | 50.77 | 56.72 |
| Beta | 63.16 | 55.17 | 55.74 | 49.18 | 51.97 | 46.28 |
| Gamma | 68.25 | 66.67 | 58.91 | 66.07 | 67.69 | 65.12 |

| | Left | Frontal | Right | Central | Parietal | Occipital |
|---|---|---|---|---|---|---|
| Theta | 69.41 | 62.65 | 69.46 | 69.36 | 67.90 | 67.03 |
| Alpha | 64.24 | 56.44 | 71.51 | 65.12 | 67.95 | 69.27 |
| Beta | 26.37 | 67.07 | 67.05 | 64.74 | 61.73 | 65.52 |
| Gamma | 25.29 | 60.82 | 63.22 | 65.54 | 20.00 | 66.29 |
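The tables above report results for each combination of cortical region and frequency band. This implies one feature per region and band. A common way to obtain such features is to estimate each channel's power spectral density with Welch's method (`scipy.signal.welch`) and then average the band power over the channels of a region. The sketch below follows that approach under explicit assumptions: the band edges, the 2 s Welch window, the channel-to-region mapping and the function names are all hypothetical and are not taken from the study.

```python
import numpy as np
from scipy.signal import welch

# Assumed band edges in Hz (hypothetical; not listed in this part of the source).
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 45)}


def band_powers(signal, fs):
    """Mean power spectral density in each band for a 1-D signal (Welch's method)."""
    freqs, psd = welch(signal, fs=fs, nperseg=int(2 * fs))  # 2 s segments
    powers = {}
    for name, (lo, hi) in BANDS.items():
        mask = (freqs >= lo) & (freqs < hi)
        powers[name] = float(psd[mask].mean())
    return powers


def region_features(data, channel_names, regions, fs):
    """Average band powers over the channels that belong to each region.

    data: array of shape (n_channels, n_samples).
    regions: {region_name: [channel names]}, a hypothetical mapping.
    Returns {"<region>_<band>": value}.
    """
    index = {name: i for i, name in enumerate(channel_names)}
    features = {}
    for region, chans in regions.items():
        per_channel = [band_powers(data[index[c]], fs) for c in chans]
        for band in BANDS:
            features[f"{region}_{band}"] = float(np.mean([p[band] for p in per_channel]))
    return features
```

As a quick check, a pure 10 Hz sine should put almost all of its power in the alpha band, and a 20 Hz sine should put it in the beta band.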

| Study | Classifiers | Parameters | Training/Testing Conditions | Average Accuracy (%) |
|---|---|---|---|---|
| Ahmed et al. (2022) [28] | AsMap + CNN (Asymmetric Map + Convolutional Neural Network), DE (Differential Entropy), DASM (Differential Asymmetry), RASM (Relative Asymmetry) and DCAU (Differential Caudality) | For AsMap + CNN: 3 × 3 kernel, ReLU activation. | Not specified | 97.10% (SEED); 93.41% (DEAP) |
| Donmez et al. (2019) [29] | CNN (Convolutional Neural Network) | Not specified | 80/20% training/testing split (392/98 images); trained for 20 epochs with 26 iterations per epoch. | 84.69% |
| Dabas et al. (2018) [30] | Naïve Bayes, SVM | Not specified | Not specified | 78.06% (Naïve Bayes); 58.90% (SVM) |
| Kusumaningrum et al. (2020) [31] | Random Forest, SVM, k-NN and Weighted k-NN | For RF: 100 trees. For SVM: linear kernel. For k-NN and Weighted k-NN: k = 7. | 5-fold cross-validation | 62.58% with Random Forest (the highest among the methods tested) |
| This study | RF | 100 trees, ‘gini’ splitting criterion. | 70/30% training/testing split | Arousal: 57.72%; Valence: 62.70% |
| This study | SVM | Linear kernel. | 70/30% training/testing split | Arousal: 59.31%; Valence: 57.75% |
| This study | MLP | ‘tanh’ activation, alpha = 0.3, 400 iterations. | 70/30% training/testing split | Arousal: 59.72%; Valence: 59.89% |
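The hyperparameters listed for this study (100 trees with the 'gini' criterion, a linear-kernel SVM, and an MLP with 'tanh' activation, alpha = 0.3 and 400 iterations) map directly onto scikit-learn estimators, although the source does not name the library used. The sketch below assumes scikit-learn and makes several further assumptions: it adds feature standardisation for the SVM and MLP, repeats a stratified 70/30 split over several seeds to obtain a mean ± standard deviation, and runs on synthetic data from `make_classification` rather than on DEAP features. The function names `build_models` and `evaluate` are hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def build_models(seed):
    """Classifiers configured with the hyperparameters listed in the table."""
    return {
        # Standardisation is an assumption added here, not stated in the source.
        "SVM": make_pipeline(StandardScaler(), SVC(kernel="linear")),
        "MLP": make_pipeline(
            StandardScaler(),
            MLPClassifier(activation="tanh", alpha=0.3, max_iter=400, random_state=seed),
        ),
        "RF": RandomForestClassifier(n_estimators=100, criterion="gini", random_state=seed),
    }


def evaluate(X, y, n_repeats=5):
    """Repeat a stratified 70/30 split; return {model: {metric: (mean, population SD)}}."""
    scores = {}
    for seed in range(n_repeats):
        X_tr, X_te, y_tr, y_te = train_test_split(
            X, y, test_size=0.3, stratify=y, random_state=seed
        )
        for name, model in build_models(seed).items():
            y_pred = model.fit(X_tr, y_tr).predict(X_te)
            entry = scores.setdefault(name, {"acc": [], "f1": []})
            entry["acc"].append(accuracy_score(y_te, y_pred))
            entry["f1"].append(f1_score(y_te, y_pred))  # binary F1, positive class = 1
    return {
        name: {metric: (float(np.mean(v)), float(np.std(v))) for metric, v in s.items()}
        for name, s in scores.items()
    }


if __name__ == "__main__":
    # Synthetic stand-in for a (trials x band-power features) matrix with binary
    # high/low labels; it is NOT the DEAP data used in the study.
    X, y = make_classification(n_samples=400, n_features=24, random_state=0)
    for name, res in evaluate(X, y).items():
        (acc, acc_sd), (f1, f1_sd) = res["acc"], res["f1"]
        print(f"{name}: accuracy {acc:.4f} ± {acc_sd:.4f}, F1 {f1:.4f} ± {f1_sd:.4f}")
```

Repeating the split over several seeds is one way to obtain the ± standard deviation figures reported in the result tables. A single 70/30 split gives only one accuracy value per classifier.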

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Reis, S.; Pinto-Coelho, L.; Sousa, M.; Neto, M.; Silva, M. Advancing Emotion Recognition: EEG Analysis and Machine Learning for Biomedical Human–Machine Interaction. BioMedInformatics 2025, 5, 5. https://doi.org/10.3390/biomedinformatics5010005

Reis S, Pinto-Coelho L, Sousa M, Neto M, Silva M. Advancing Emotion Recognition: EEG Analysis and Machine Learning for Biomedical Human–Machine Interaction. BioMedInformatics. 2025; 5(1):5. https://doi.org/10.3390/biomedinformatics5010005

Chicago/Turabian Style: Reis, Sara, Luís Pinto-Coelho, Maria Sousa, Mariana Neto, and Marta Silva. 2025. "Advancing Emotion Recognition: EEG Analysis and Machine Learning for Biomedical Human–Machine Interaction" BioMedInformatics 5, no. 1: 5. https://doi.org/10.3390/biomedinformatics5010005

APA Style: Reis, S., Pinto-Coelho, L., Sousa, M., Neto, M., & Silva, M. (2025). Advancing Emotion Recognition: EEG Analysis and Machine Learning for Biomedical Human–Machine Interaction. BioMedInformatics, 5(1), 5. https://doi.org/10.3390/biomedinformatics5010005