1. Introduction

Bathymetry, which measures the depth of the benthic floor in aquatic environments relative to the water level, defines the underwater topography of oceans, estuaries, and lakes [1]. It has applications in a wide range of fields, including navigation, benthic habitat mapping [2,3,4], the identification of marine objects in shallow waters such as seagrass and algae [5,6], sustainable management [7], hydrological modeling [8], monitoring sea-level rise due to climate change, and sediment removal [9,10,11]. These diverse use cases highlight the critical need for accurate and efficient bathymetric mapping. Historically, bathymetry was measured using a heavy rope, and creating a bathymetric map for a large region required significant manpower, equipment, time, and extensive in situ field observations. Although modern approaches such as airborne Light Detection and Ranging (LiDAR) [12] and Sound Navigation and Ranging (Sonar) systems [13,14] have been developed, each method still faces significant limitations. For example, Sonar systems are restricted to ship-based operations, which are limited by the logistics and time required to cover an area and by the need to correct for changing tidal states during data collection. LiDAR is highly sensitive to water clarity and is associated with very high operational costs.

Recent technical advances have made remote mapping of bathymetry possible, and these technologies can be divided into two types: active and passive remote sensing [15]. Active sensors such as LiDAR have been widely employed for bathymetric mapping. LiDAR is capable of producing high-accuracy results in clear waters but is constrained by limited spatial coverage and high operational costs [7]. In contrast, passive remote sensing techniques utilize reflected sunlight across multiple spectral bands to estimate water depth. Commonly used platforms and sensors include SPOT [16], IRS-1C/1D and LISS-III [17], IKONOS [18], Landsat-8 [19], QuickBird [20], Sentinel-1 [21], Sentinel-2 [22], and WorldView-2 (WV-2) [23], as well as hyperspectral imaging [24]. These methods offer extended depth detection capabilities compared to active methods, enabling bathymetric mapping in deeper waters, particularly in clear-water conditions. However, the accuracy of passive methods can be considerably affected by environmental factors, such as water clarity, turbidity, bottom reflectance characteristics, and atmospheric conditions, which collectively pose challenges to achieving reliable depth measurements [15,24].

Multi-spectral image-based methods assume that the depth of the ocean is inversely proportional to the atmospherically corrected reflected energy from the water surface [15]. These methods can be categorized into two groups: (1) traditional physics-based approaches, and (2) machine learning-based methods. For physics-based approaches, radiative transfer [17,23], linear transform [18], ratio transform [18,19], log-band ratio [20], optimal band ratio analysis (OBRA) [20], fast Fourier transform (FFT)-based depth inversion [21], and downscaled coastal aerosol band [22] methods have been reported in the literature. With the growth of data availability and computational resources, machine learning models have gained increasing attention as powerful alternatives to physics-based approaches. Machine learning-based approaches can be categorized into three groups: (1) traditional models, such as linear regression (LR) [25,26,27], support vector machine (SVM) [28,29,30], and clustering [31]; (2) ensemble-based methods, including Bagging, least squares boosting, gradient boosting (GB) [32], and random forest (RF) [29,33,34]; and (3) neural network and fuzzy logic systems, such as artificial neural networks (ANN) [35], adaptive neuro-fuzzy inference systems [36], deep convolutional neural networks (DCNN) [37], deep variational autoencoders [38], and self-attention mechanisms [39].
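As a hedged illustration of why logarithmic and ratio transforms recur among the physics-based methods above, consider a simplified optically shallow water model that is widely used in the literature. It is an assumption for illustration, not a formula taken from this paper, and all symbols are introduced here. The reflectance $R$ observed over a bottom at depth $z$ is modeled as

$$
R(z) = R_\infty + (R_b - R_\infty)\, e^{-g z},
$$

where $R_\infty$ is the reflectance of optically deep water, $R_b$ is the bottom reflectance, and $g$ is an effective two-way attenuation coefficient. Solving for depth gives

$$
z = \frac{1}{g}\left[\ln(R_b - R_\infty) - \ln(R - R_\infty)\right],
$$

so, for a fixed bottom type and fixed water properties, depth is linear in $\ln(R - R_\infty)$. Ratio-type methods instead combine two bands $i$ and $j$, for example in the form

$$
z = m_1 \frac{\ln(n R_i)}{\ln(n R_j)} - m_0,
$$

where $m_0$ and $m_1$ are calibrated against in situ depths and $n$ is a fixed constant chosen so that both logarithms stay positive. Because $R_b$, $R_\infty$, and $g$ all vary with bottom type and water quality, the fitted coefficients are site-dependent.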

Physics-based models often assume a straightforward linear relationship between surface reflectance and water depth, which fails to capture the intrinsic non-linearity of real-world aquatic environments [7]. This limits their ability to adapt to different conditions. Additionally, such models can be affected by factors like water clarity, bottom reflectance, and atmospheric conditions, leading to a decline in performance when faced with more complex environmental variables [15]. On the other hand, data-driven machine learning approaches are effective at capturing these non-linear relationships, but they have their own set of limitations. SVM is sensitive to feature selection and kernel parameters and often experiences decreased performance when dealing with high-dimensional remote sensing data [40]. RF models are robust but have limited generalization abilities when dealing with data with high noise levels or spatial heterogeneity, especially when sample sizes are small [41]. Deep learning methods like ANN and DCNN perform well with large datasets but usually require extensive labeled data for training [42]. Additionally, spatial heterogeneity often limits how well these models generalize across regions in bathymetric mapping [43].

In the past few years, ensemble learning methods based on decision trees, especially gradient boosting, have significantly improved the accuracy of bathymetric estimation through stepwise optimization of weak learners [32]. Gradient boosting sequentially trains a set of weak decision tree learners by minimizing a differentiable loss function through gradient descent optimization. However, these boosting algorithms are prone to overfitting, especially with small or noisy datasets, because the gradients are typically computed on the same data used to fit the model, so the model is never exposed to independent data during training [44]. To address these challenges, the Categorical Boosting (CatBoost) algorithm has been developed and applied for remote sensing bathymetric estimation [45]. The study in [46], which used multi-temporal Sentinel-2 imagery, showed that CatBoost significantly outperformed traditional LR models for bathymetry change estimation. Additionally, the results in [47] indicated that CatBoost could more accurately capture non-linear relationships compared to quasi-empirical models. However, both studies focused solely on Sentinel-2 images and lacked a comprehensive comparison with other machine learning methods. In this study, we explored the applicability of WV-2 images for bathymetry estimation under complex environmental conditions. We optimized the CatBoost model and compared its performance to state-of-the-art machine learning algorithms using datasets collected from three locations in Florida. In addition, we analyzed the contribution of individual spectral bands and found that utilizing the full spectral range likely offers the best solution for handling complex water quality conditions, particularly when organic matter is present in the water.
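The sequential training of weak learners described above can be sketched in a few lines. The following is a minimal illustration of plain gradient boosting with squared-error loss and one-split decision stumps on a single feature. It is not CatBoost and not the model used in this study; every function name and the toy data are hypothetical.

```python
# Minimal gradient boosting sketch (squared-error loss, decision stumps).
# Illustrative assumption only: names and data are hypothetical.

def fit_stump(x, residuals):
    """Fit a one-split regression stump to the residuals.

    Requires at least two distinct feature values in x.
    """
    best = None
    for threshold in sorted(set(x))[:-1]:
        left = [r for xi, r in zip(x, residuals) if xi <= threshold]
        right = [r for xi, r in zip(x, residuals) if xi > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]  # (threshold, left_mean, right_mean)


def predict_stump(stump, xi):
    threshold, left_mean, right_mean = stump
    return left_mean if xi <= threshold else right_mean


def fit_gradient_boosting(x, y, n_rounds=50, learning_rate=0.1):
    """Start from the mean, then repeatedly fit a stump to the residuals."""
    base = sum(y) / len(y)
    predictions = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        # For the loss 0.5 * (y - f)^2 the negative gradient is the residual y - f.
        residuals = [yi - pi for yi, pi in zip(y, predictions)]
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        predictions = [pi + learning_rate * predict_stump(stump, xi)
                       for xi, pi in zip(x, predictions)]
    return {"base": base, "learning_rate": learning_rate, "stumps": stumps}


def predict(model, xi):
    boost = sum(predict_stump(s, xi) for s in model["stumps"])
    return model["base"] + model["learning_rate"] * boost


def training_mse(model, x, y):
    return sum((predict(model, xi) - yi) ** 2 for xi, yi in zip(x, y)) / len(y)


# Toy data: a reflectance-like feature and depths in metres (made up).
x = [0.02 * i for i in range(1, 11)]
y = [5.0, 4.1, 3.4, 2.8, 2.3, 1.9, 1.5, 1.2, 0.9, 0.7]
model = fit_gradient_boosting(x, y, n_rounds=100)
```

Note that the residuals in each round are computed on the same samples used to fit the stumps, which is the source of the overfitting risk discussed above.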

3. Experiment Setup

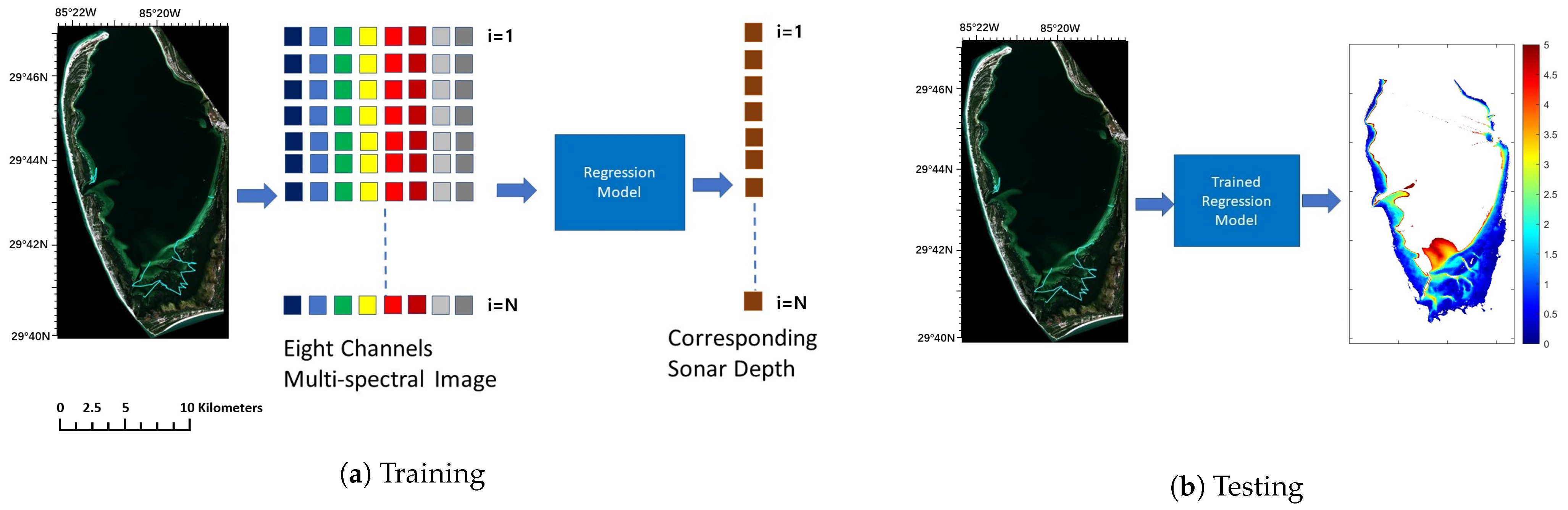

We conducted four experiments in this study to investigate the effectiveness of using multi-spectral images combined with the CatBoostOpt model for estimating bathymetry in shallow sea areas.

Experiment 1: Hyperparameter determination. The bathymetry estimation models have many hyperparameters; some relate to the machine learning model itself, while others relate to how we organize the data or train the models, e.g., the size of the image patches extracted from the multi-spectral images. The CatBoost model has seven hyperparameters, as listed in Section 2.3. We tuned these hyperparameters by trial and error using a grid search and selected the set that best fitted our bathymetry datasets.
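A grid search of this kind can be sketched as an exhaustive loop over every combination of candidate values. The example below is a minimal sketch under stated assumptions: the grid entries, their values, and the `toy_cv_rmse` scoring function are hypothetical placeholders and do not reproduce the seven hyperparameters of Section 2.3 or any result of this study.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Evaluate every combination in param_grid; lower scores are better."""
    names = sorted(param_grid)
    best_params, best_score = None, float("inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical grid: the names resemble common boosting hyperparameters,
# but the candidate values are placeholders.
param_grid = {
    "depth": [4, 6, 8],
    "learning_rate": [0.03, 0.1, 0.3],
    "iterations": [200, 500],
}


def toy_cv_rmse(params):
    # Stand-in for a cross-validated RMSE. A real run would train and
    # validate a model with these parameters here.
    return ((params["depth"] - 6) ** 2
            + abs(params["learning_rate"] - 0.1)
            + 1.0 / params["iterations"])


best_params, best_score = grid_search(param_grid, toy_cv_rmse)
```

The cost grows with the product of the list lengths (18 evaluations here), which is why the number of candidate values per hyperparameter is usually kept small.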

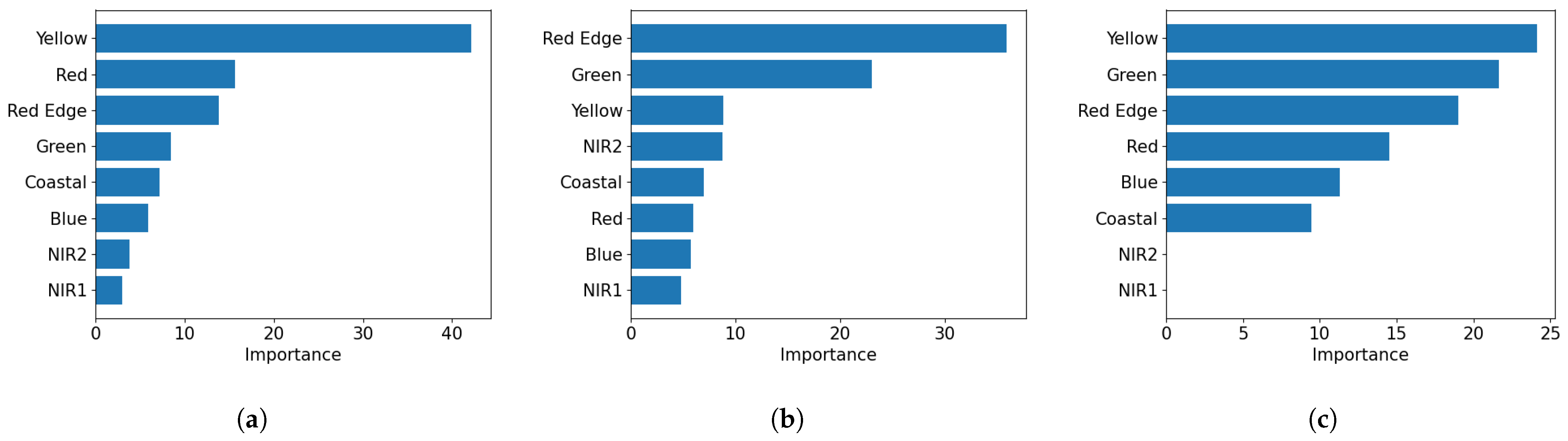

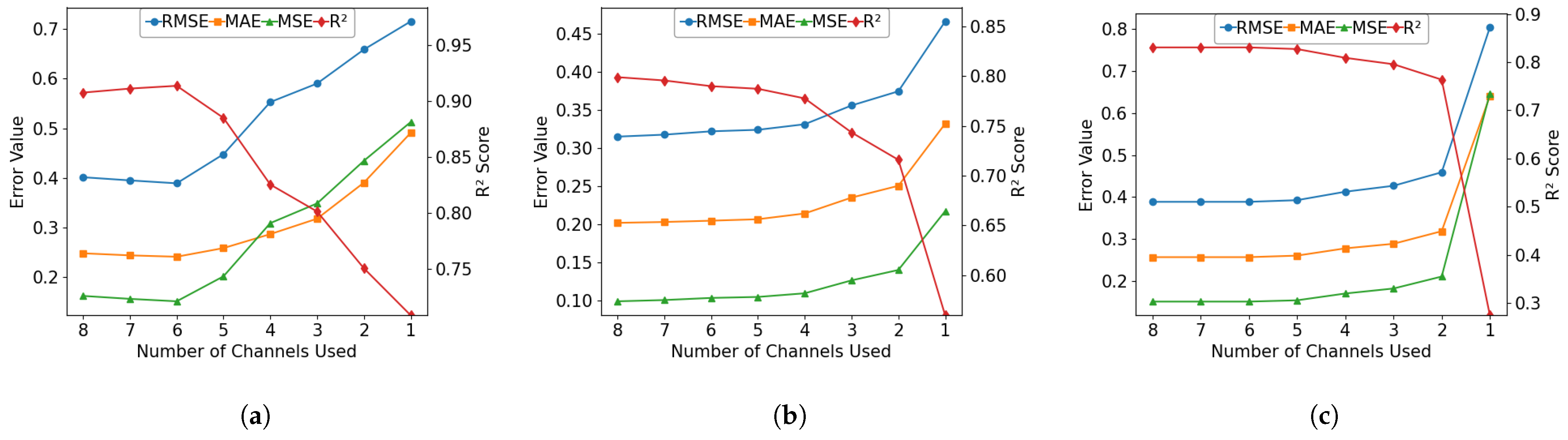

Experiment 2: Band importance investigation. To evaluate the impact of each image band on model performance, we conducted a series of experiments to quantify the contribution of each spectral band and to compare the effectiveness of different band combination strategies across three locations using the CatBoostOpt model.
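One way to organize a band elimination study is greedy backward elimination: at each step, drop the band whose removal degrades the error least, and record the error of the remaining subset. The sketch below assumes this procedure for illustration and may differ from the one used in this study. The band names are the eight WV-2 bands, but the weights in `toy_error` are arbitrary placeholders, not measured importances.

```python
def backward_band_elimination(bands, score_subset, min_bands=1):
    """Greedily drop one band at a time; return [(subset, error), ...]."""
    current = list(bands)
    history = [(tuple(current), score_subset(current))]
    while len(current) > min_bands:
        candidates = []
        for band in current:
            reduced = [b for b in current if b != band]
            candidates.append((score_subset(reduced), band, reduced))
        # Keep the reduced subset with the lowest error.
        error, dropped, current = min(candidates, key=lambda c: c[0])
        history.append((tuple(current), error))
    return history


WV2_BANDS = ["Coastal", "Blue", "Green", "Yellow",
             "Red", "RedEdge", "NIR1", "NIR2"]

# Arbitrary placeholder weights, NOT results from the paper.
TOY_WEIGHTS = {"Coastal": 0.10, "Blue": 0.20, "Green": 0.25, "Yellow": 0.08,
               "Red": 0.12, "RedEdge": 0.05, "NIR1": 0.03, "NIR2": 0.02}


def toy_error(subset):
    # Stand-in for a cross-validated RMSE of a model trained on `subset`.
    return 1.0 - sum(TOY_WEIGHTS[b] for b in subset)


history = backward_band_elimination(WV2_BANDS, toy_error)
```

In practice `score_subset` would retrain and cross-validate the model on each candidate subset, so the procedure needs on the order of the square of the number of bands in model fits.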

Experiment 3: Comparison study for bathymetry estimation. We compared the CatBoostOpt model with other machine learning approaches for bathymetry estimation through 3-fold cross-validation (CV) on the collected Sonar measurements and the corresponding multi-spectral images, as described below:

We extracted image patches ($\mathbf{x}$) from the multi-spectral images at the locations where Sonar measurements ($y$) were taken, and created a dataset $D = \{(\mathbf{x}_i, y_i)\}$, where $\mathbf{x}$ and $y$ represent an image patch and a Sonar measurement, respectively.

We performed 3-fold CV on the dataset and evaluated the performance of each model in terms of MAE, RMSE, MSE, RMSE(%), MAPE(%), and $R^2$.

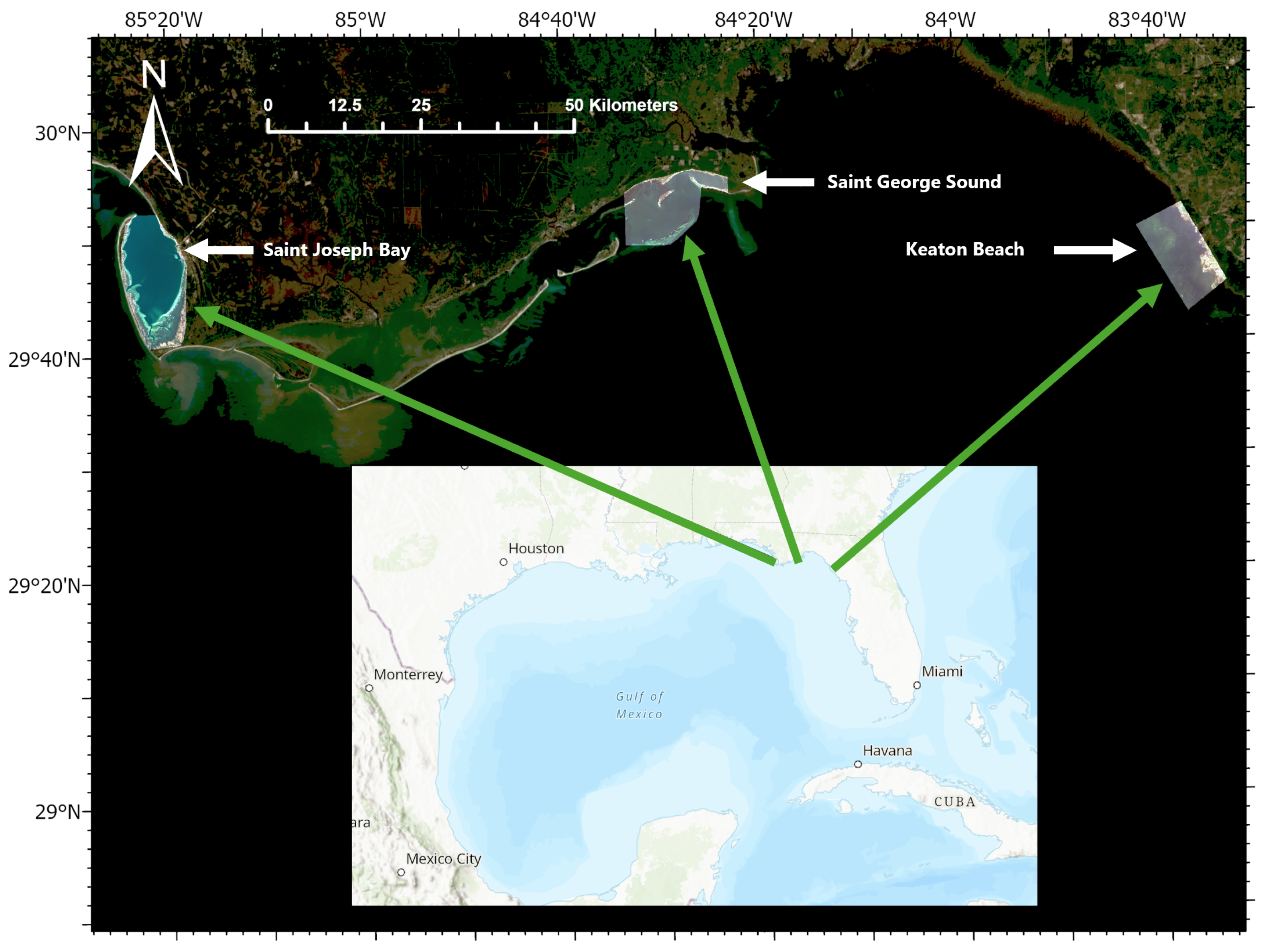

We repeated the above steps at all three locations including SJB, KB, and SGS.
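The evaluation steps above can be sketched as follows. This is a minimal sketch, not the authors' code: the function names are hypothetical, the folds are contiguous (shuffle the samples beforehand if their order is meaningful), and RMSE(%) is assumed here to be the RMSE normalized by the mean observed depth, which may differ from the definition used in the paper.

```python
import math


def k_fold_indices(n_samples, k=3):
    """Yield (train_idx, test_idx) pairs for k contiguous folds."""
    indices = list(range(n_samples))
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


def regression_metrics(y_true, y_pred):
    """Compute the six metrics; y_true must be non-zero and non-constant."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return {
        "MAE": mae,
        "MSE": mse,
        "RMSE": rmse,
        # Assumption: RMSE expressed as a percentage of the mean observed depth.
        "RMSE(%)": 100.0 * rmse / mean_true,
        "MAPE(%)": 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n,
        "R2": 1.0 - sum(e * e for e in errors) / ss_tot,
    }


# Toy depths in metres (made up) to show the call pattern.
metrics = regression_metrics([1.0, 2.0, 4.0], [1.5, 2.0, 3.0])
folds = list(k_fold_indices(7, k=3))
```

In a full run, a model would be fitted on `train_idx` and scored on `test_idx` for each fold, with the per-fold metrics averaged per site.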

Experiment 4: Case study for bathymetry estimation. We trained all the competing models with all the Sonar measurements and applied the trained model to multi-spectral images collected at the three sites to estimate bathymetry. The estimated bathymetry maps were then compared with the historical bathymetry data collected by the NOAA.

5. Discussion

Different multi-spectral remote sensing imagery and machine learning algorithms have been applied to estimate bathymetry in the literature. However, it is difficult to make a fair comparison due to the fact that each study utilized different image modalities, algorithms, and/or different locations. For example, Tonion et al. [

29] achieved a mean average error of 0.158 m using a random forest algorithm with combined image modalities of Planetscope, Sentinel 2, and Landsat 8 satellite images in Cesenatico, Italy. Misra et al. [

28] achieved an RMSE of 0.35 m using SVM with Landsat 7 ETM+ and Landsat 8 OLI image modalities in Ameland Inlet, Netherlands. Eugenio et al. [

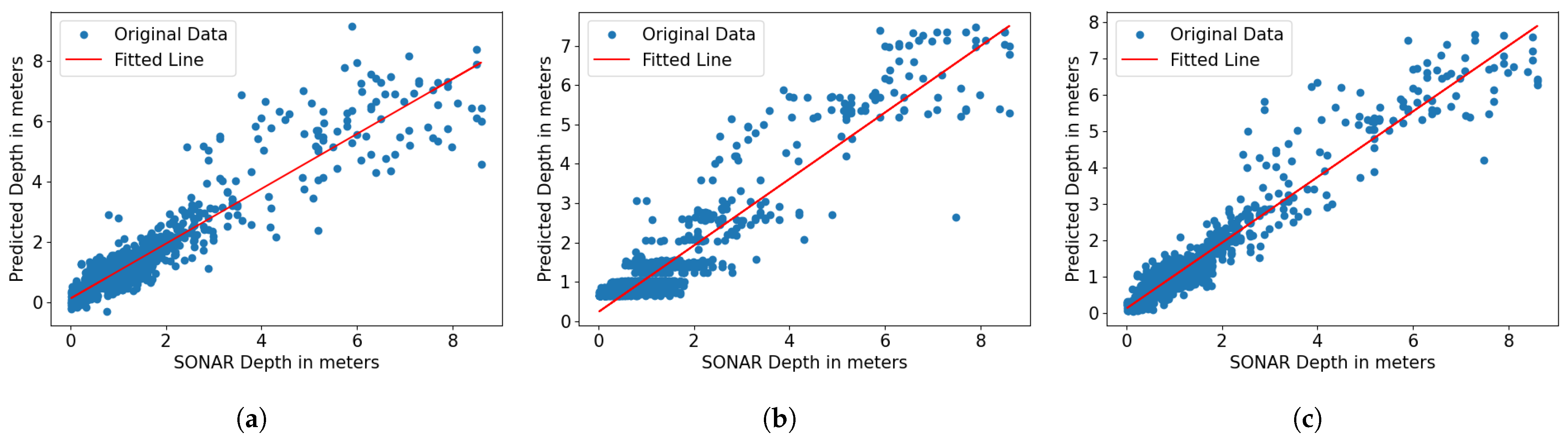

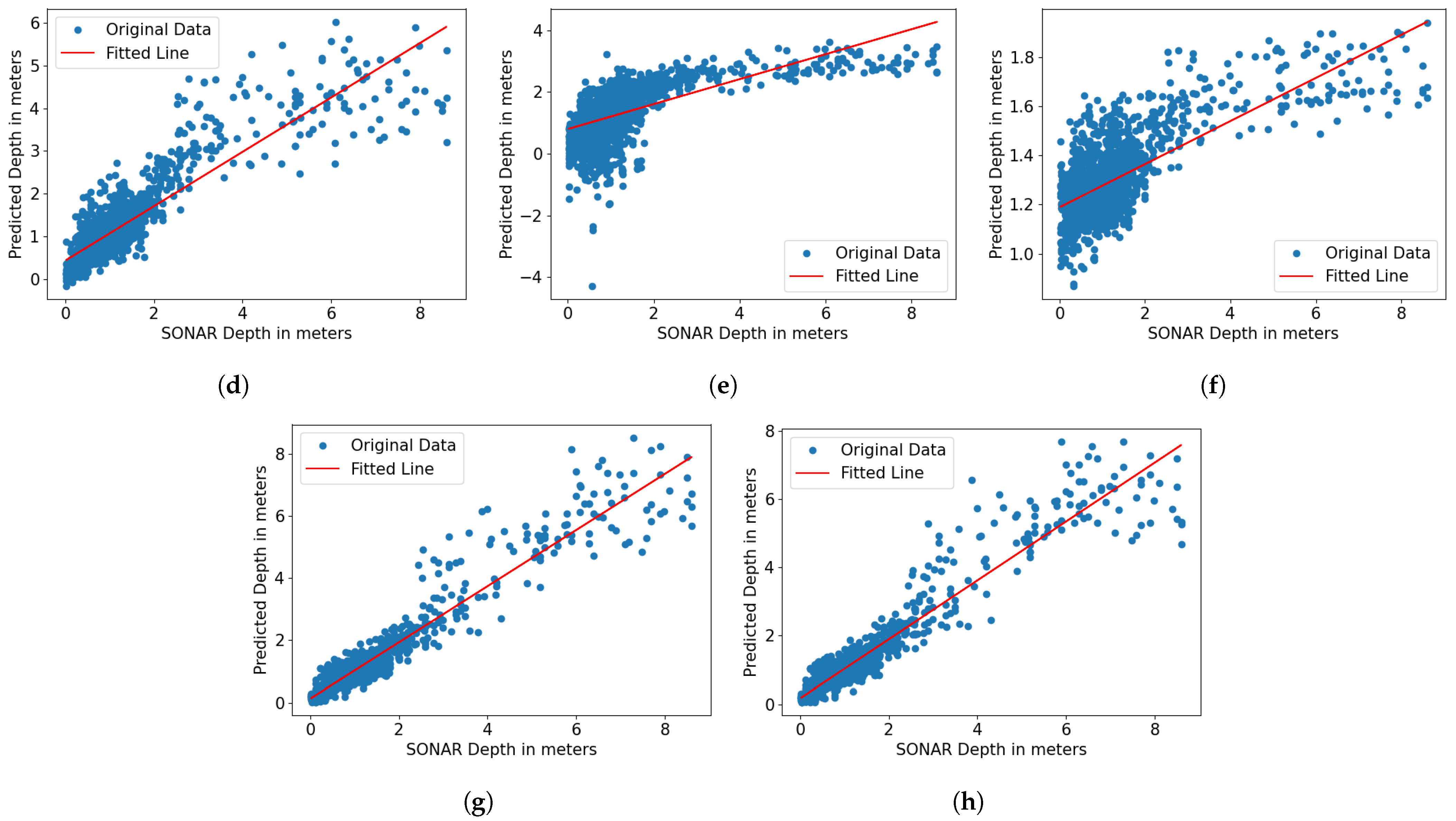

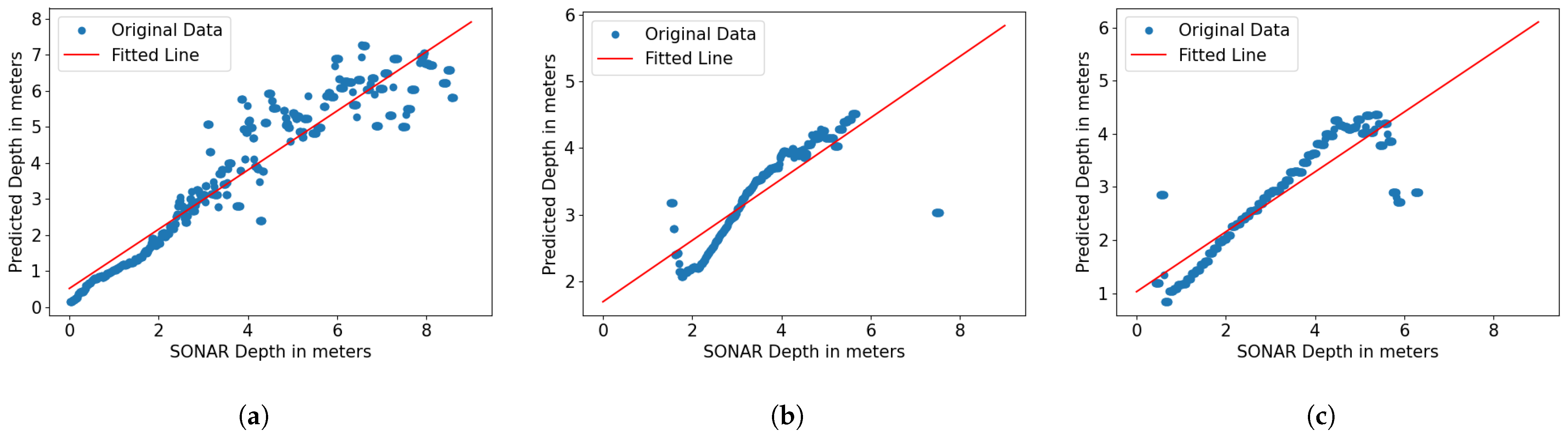

23] achieved RMSEs of 1.2 m and 1.94 m in the Granadilla and Corralejo areas, respectively, with Worldview-2 images. Our study provided a fair comparison platform for different machine learning algorithms in bathymetry estimation since all algorithms used the same dataset. Our results showed that the recent machine learning algorithm, CatBoost, when optimized, outperformed all competing machine learning algorithms including linear models, SVM, convolutional neural networks, and other boosting algorithm families. The optimized CatBoost algorithm, combined with the log-ratio transformation of WV-2 images, achieved RMSEs of 0.33 m, 0.29 m, and 0.37 m at SJB, KB, and SGS, respectively, a significant improvement compared to other algorithms.

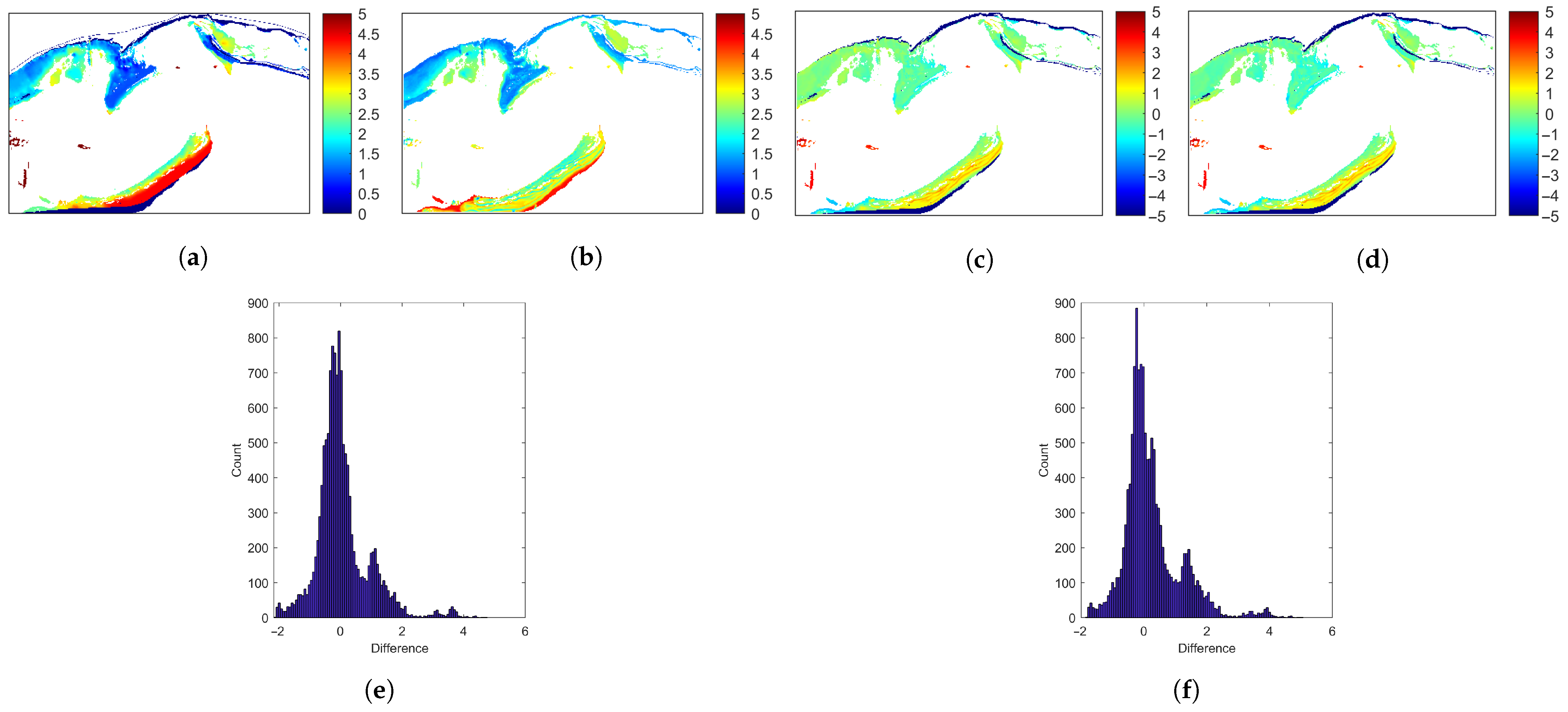

Though WV-2 images are limited to retrieving depths of approximately 5 m, their high spatial resolution and rich multi-spectral information provide significant advantages for accurately estimating bathymetry in complex shallow-water environments, where detailed spatial variation is critical. An important consideration in our study is the emerging capability of satellite-based bathymetry, which has demonstrated the ability to capture smaller-scale details in bathymetry, such as sand ripples in regions like Saint George Sound. This enhanced spatial resolution surpasses that of traditional NOAA methods, providing a more detailed view of the seafloor. These finer-scale depth variations are particularly significant when mapping seagrass density and predicting seagrass presence or absence using depth-driven models. The ability to resolve these small-scale features can lead to more accurate and effective conservation and management strategies for seagrass habitats.

Gradient boosting utilizes a greedy algorithm to sequentially train an ensemble of decision trees to fit the data. It outperformed all non-boosting algorithms by large margins, as shown in Table 5, Table 6, and Table 7. However, there remains a risk of overfitting, especially when dealing with small and noisy datasets. One of the major improvements of CatBoost over traditional gradient boosting is its use of separate, unseen data to compute the gradients of the loss function during training, which reduces the risk of overfitting when the available data are noisy or limited [45]. To further improve the CatBoost model, we optimized the model’s hyperparameters and compared the performance of the original CatBoost model with that of the optimized version, CatBoostOpt. The results obtained by CatBoostOpt showed significant improvement, indicating that hyperparameter tuning was essential for enhancing model performance. Without optimization, the model tended to underfit, particularly in complex coastal environments, thereby limiting its effectiveness for bathymetric estimation.

In bathymetry estimation, raw, log-linear, and log-ratio are commonly used reflectance transformations. The raw reflectance maintains the physical meaning of the measurements but often exhibits skewed distributions due to large magnitude differences and non-linear relationships among the measurements [31]. The log-linear transformation reduces the influence of large values and enhances sensitivity to small values, which is particularly important for shallow water bathymetry. However, the transformed values lose their direct physical interpretation [59]. The log-ratio transformation calculates the ratio between different bands to enhance depth-related information, effectively normalizing for water turbidity and bottom reflectance and mitigating the influence of varying environmental conditions [18]. In our study, the raw and log-linear transformed reflectance performed similarly, as shown in Table 5 and Table 6, while the log-ratio transformed reflectance achieved the best performance at all three locations, as shown in Table 7, demonstrating the superior performance of the log-ratio transformed reflectance for bathymetry.
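To make the three transformations concrete, the sketch below computes each feature set for a single pixel. The exact definitions used in this study are not restated in this section, so the forms here are assumptions: log-linear is taken as the natural logarithm of each band, and log-ratio as the ratio of scaled log reflectances for every band pair, with a hypothetical scaling constant `n` that keeps the logarithms positive.

```python
import math
from itertools import combinations


def raw_features(reflectance):
    """Raw reflectance: one feature per band, unchanged."""
    return list(reflectance)


def log_linear_features(reflectance):
    """Natural log of each band; values must be strictly positive."""
    return [math.log(r) for r in reflectance]


def log_ratio_features(reflectance, n=1000.0):
    """ln(n * R_i) / ln(n * R_j) for every band pair i < j.

    Assumption: n is chosen so that n * R > 1 for every band, which keeps
    all logarithms positive and the denominators non-zero.
    """
    logs = [math.log(n * r) for r in reflectance]
    return [logs[i] / logs[j] for i, j in combinations(range(len(logs)), 2)]


# Made-up reflectances for the eight WV-2 bands of one pixel.
pixel = [0.06, 0.07, 0.08, 0.05, 0.03, 0.02, 0.01, 0.01]
features = {
    "raw": raw_features(pixel),
    "log_linear": log_linear_features(pixel),
    "log_ratio": log_ratio_features(pixel),
}
```

With eight bands, the raw and log-linear sets have eight features each, while the pairwise log-ratio set has 28, so the transformation also changes the dimensionality seen by the model.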

Bathymetry estimation typically utilizes short-wavelength bands, such as Coastal, Blue, and Green, and sometimes also includes Yellow, since these wavelengths can penetrate water more deeply [68]. However, the study sites examined in this paper are influenced by CDOM-rich, oligotrophic discharge from terrestrial water sources [50], along with other diffuse sources along this section of the coastline. The presence of CDOM significantly alters the optical properties of the water, and our band importance analysis revealed that the contribution of each band to bathymetry estimation is not consistent with the basic physics discussed in [68], due to the influence of CDOM. Our band elimination study further demonstrated that using all eight bands available in the WV-2 sensor provided no substantial performance difference compared to using the top six bands. Therefore, we utilized all eight bands in our study to better mitigate the CDOM effects across different locations.

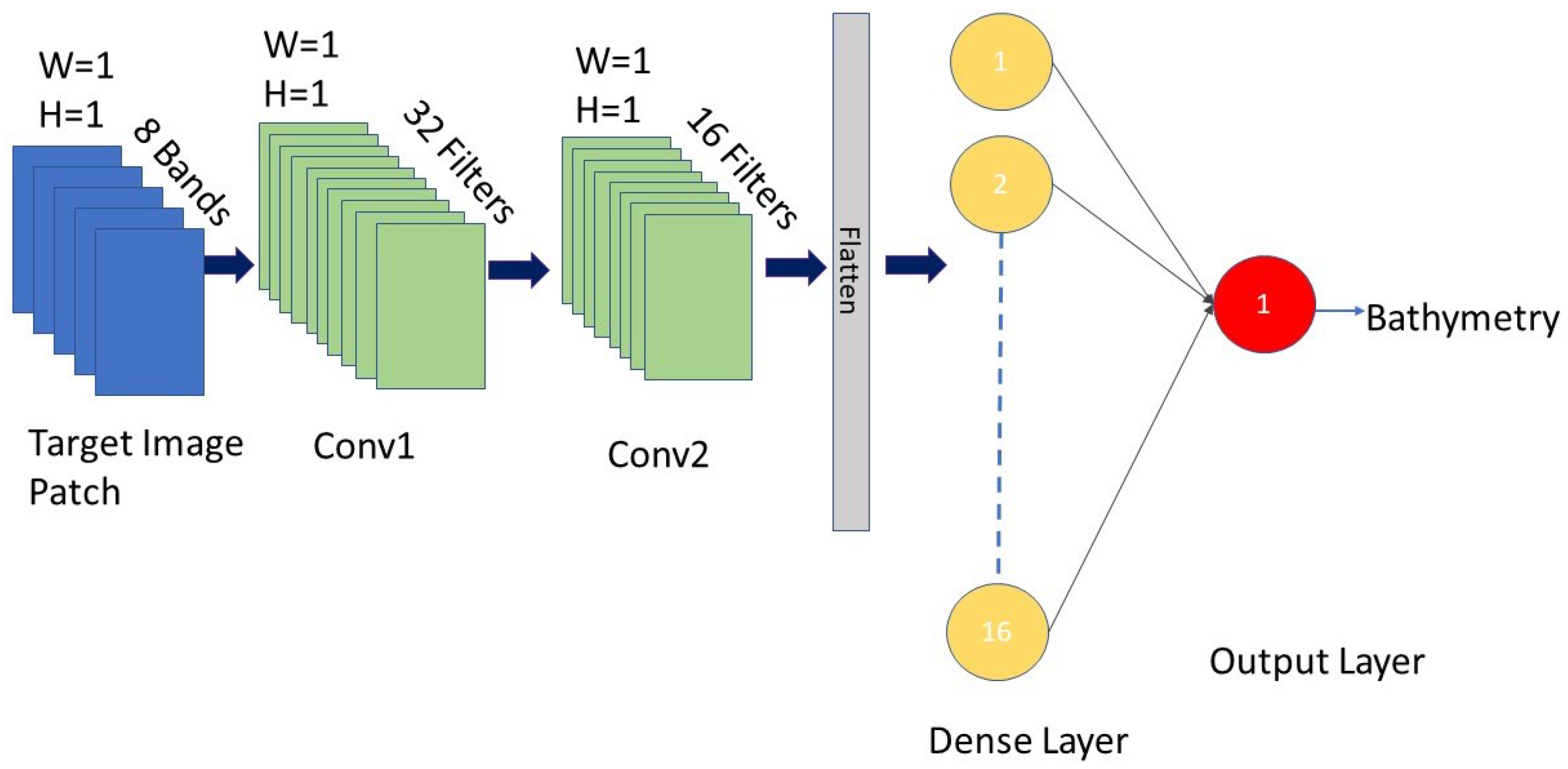

The DCNN consistently underperformed, regardless of the reflectance transformation method employed. This underperformance may stem from the limited spatial structure in the input data, as only pixel-wise spectral features were available, which hampers the convolutional layers’ ability to capture meaningful spatial patterns. Additionally, DCNNs typically require large datasets for effective training, and the relatively small dataset used in this study may have led to overfitting and reduced generalization capability.

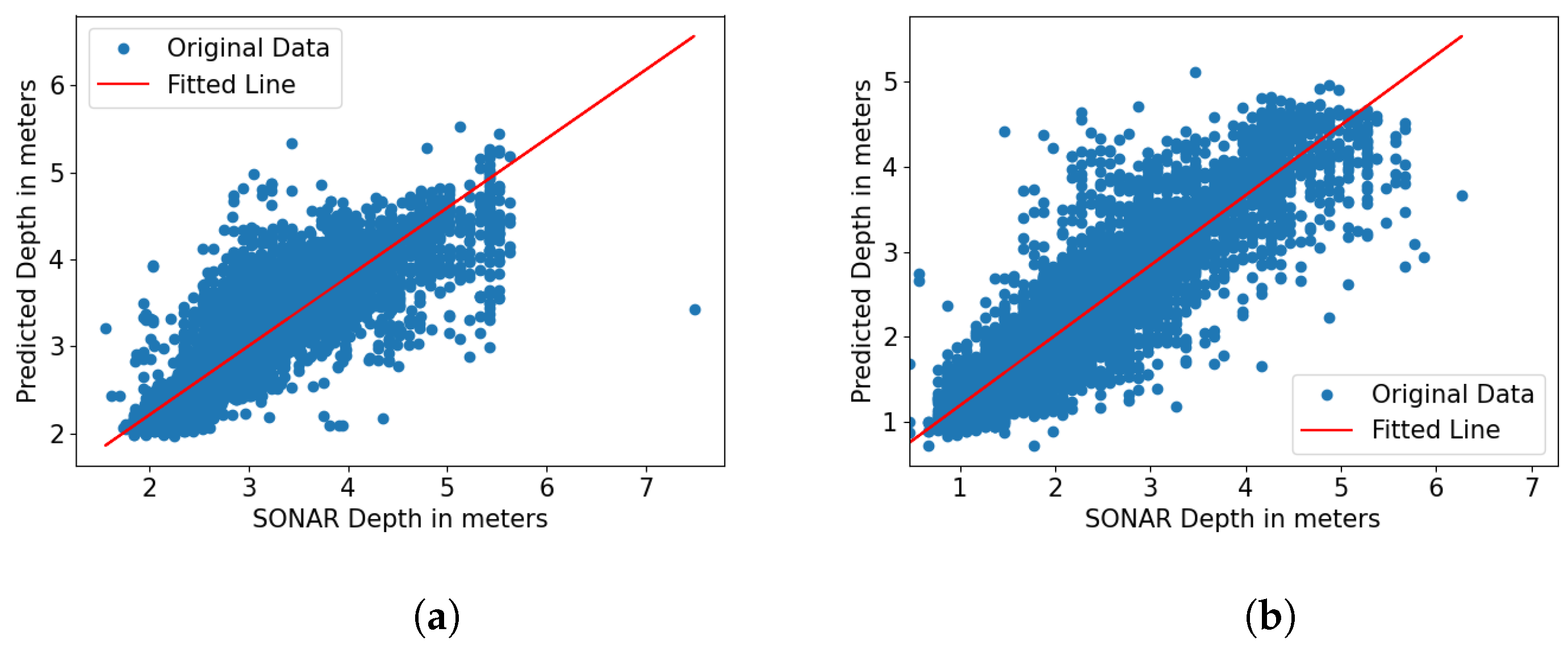

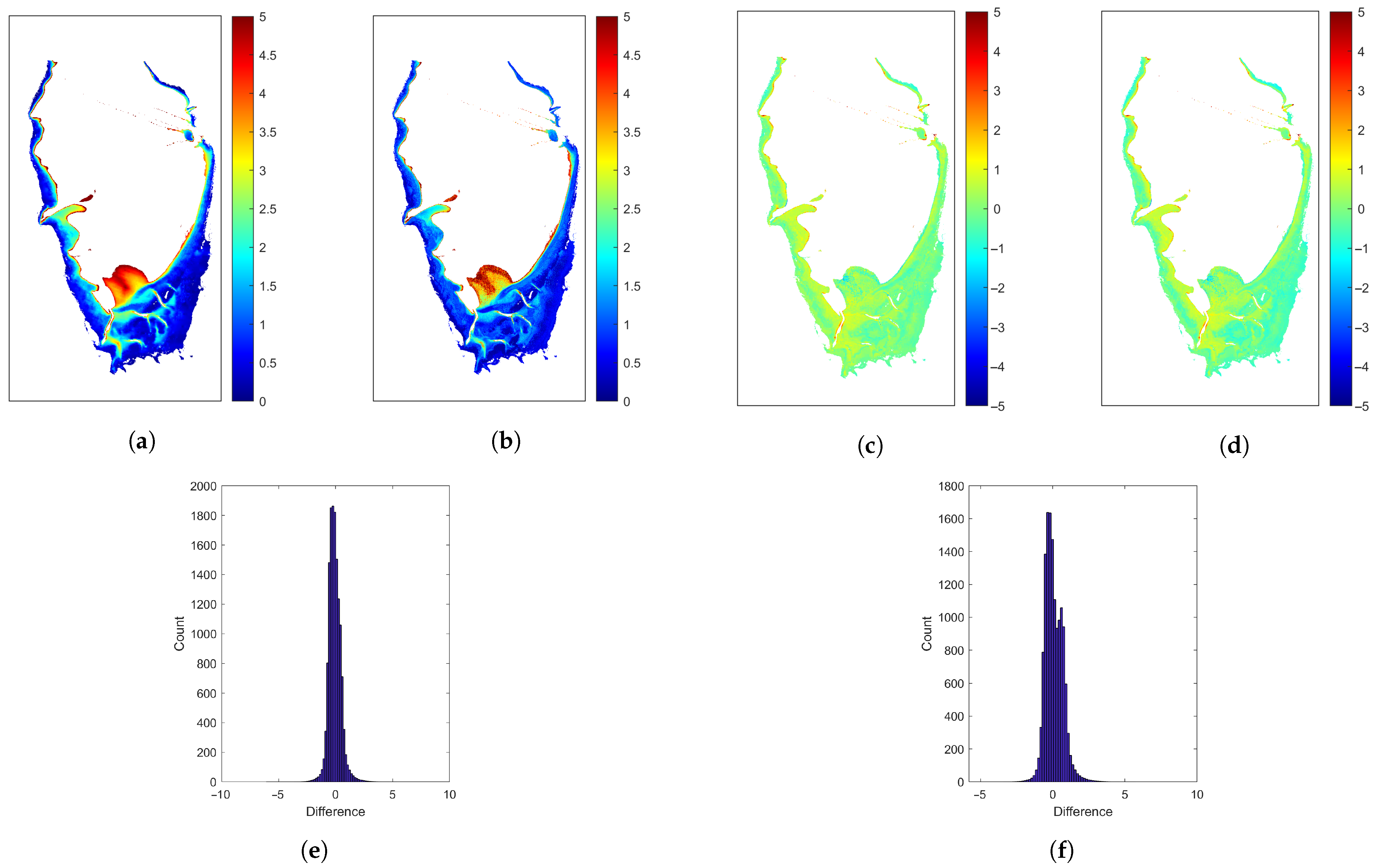

The bathymetry correction step in the seagrass area did not always reduce the overall error. The RMSE between the NOAA bathymetry and the model-predicted bathymetry with and without the correction is shown in Table 8. The correction step reduced the RMSE at the KB location but increased it at SJB and SGS. The best RMSE values were obtained without seagrass correction at SJB and SGS and with the correction at KB, as listed in Table 8. The varying effects of the seagrass correction across different sites may be attributed to differences in seagrass density, species composition, and local water clarity. Additionally, the correction algorithm assumes that seagrass has a uniform influence across all study sites. However, this may not accurately represent the spatial variability of the submerged vegetation.

Our study advances bathymetry estimation by improving upon the methodologies employed in previous research [69]. The CatBoost model achieved $R^2$ values between 0.84 and 0.92 with RMSE below 0.5 m for depths shallower than 7 m, while CatBoostOpt further improved accuracy, achieving RMSE values of 0.36 m, 0.30 m, and 0.38 m at SJB, KB, and SGS, respectively. Unlike previous studies, we evaluated different reflectance transformations, demonstrating that log-ratio transformation significantly enhances depth estimation accuracy. Additionally, our study validated the model across multiple coastal locations in Florida, demonstrating its robustness under diverse environmental conditions. Overall, our findings confirm that hyperparameter tuning and proper reflectance transformations are critical for improving satellite-based bathymetric mapping, providing an accurate and scalable solution for coastal monitoring.

In this study, we still need to train a separate model for bathymetry estimation at each location because the multi-spectral images collected at different locations are typically affected by the local water quality, making pixel values in the images location-dependent. We assumed that the depth of the ocean is inversely proportional to the atmospherically corrected reflected energy from the water surface. However, we did not consider the effects of water quality, which means that the same pixel values may represent different ocean depths at different locations if the water quality varies. Potential solutions include training separate models for different locations if data are available, or adapting models trained at other locations to a specific location by taking water quality into account. In the machine learning community, domain adaptation techniques [70] are also possible solutions for this challenge.