Temporal Autocorrelation of Sentinel-1 SAR Imagery for Detecting Settlement Expansion

Abstract

1. Introduction

2. Materials and Methods

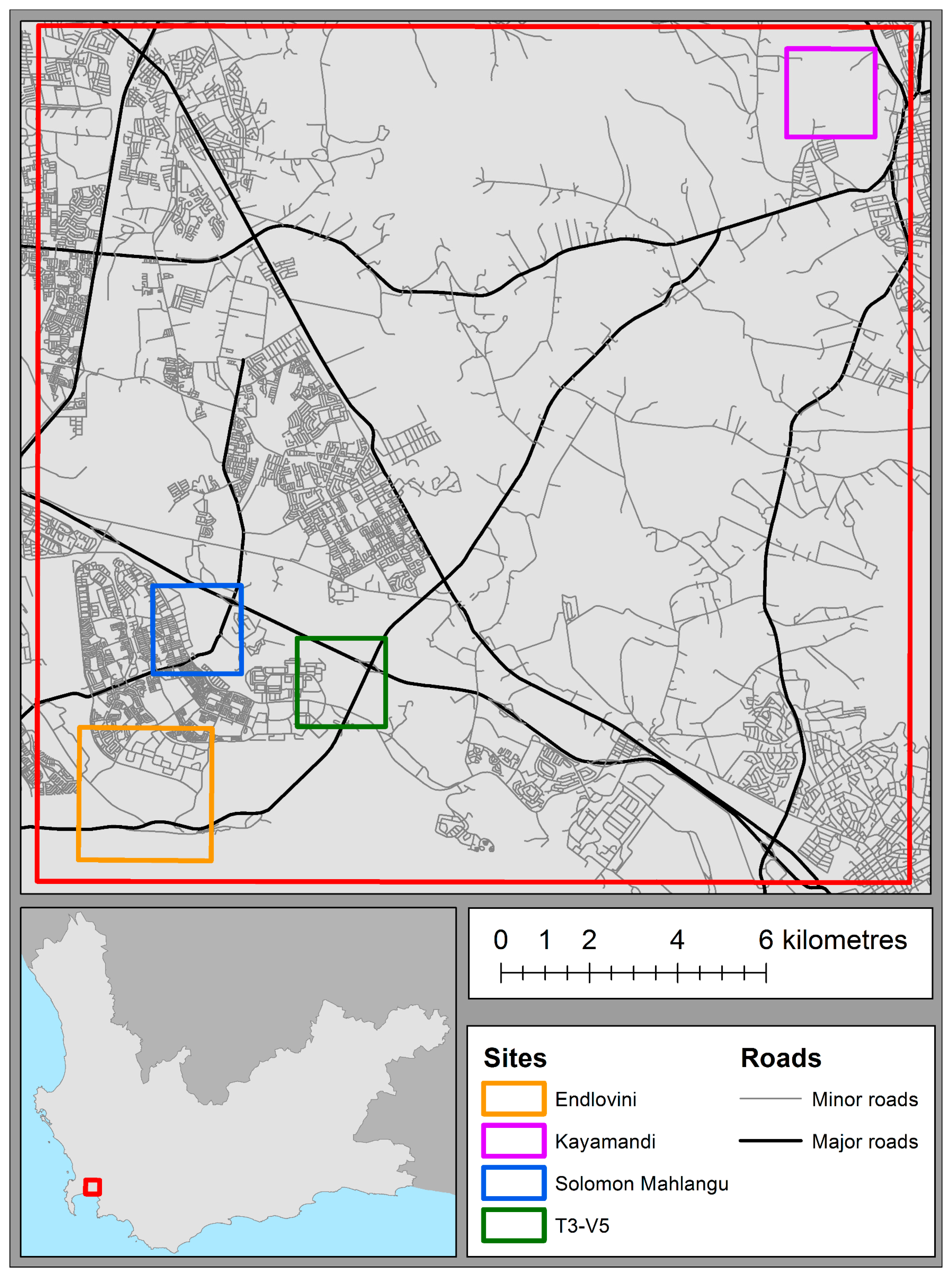

2.1. Study Area

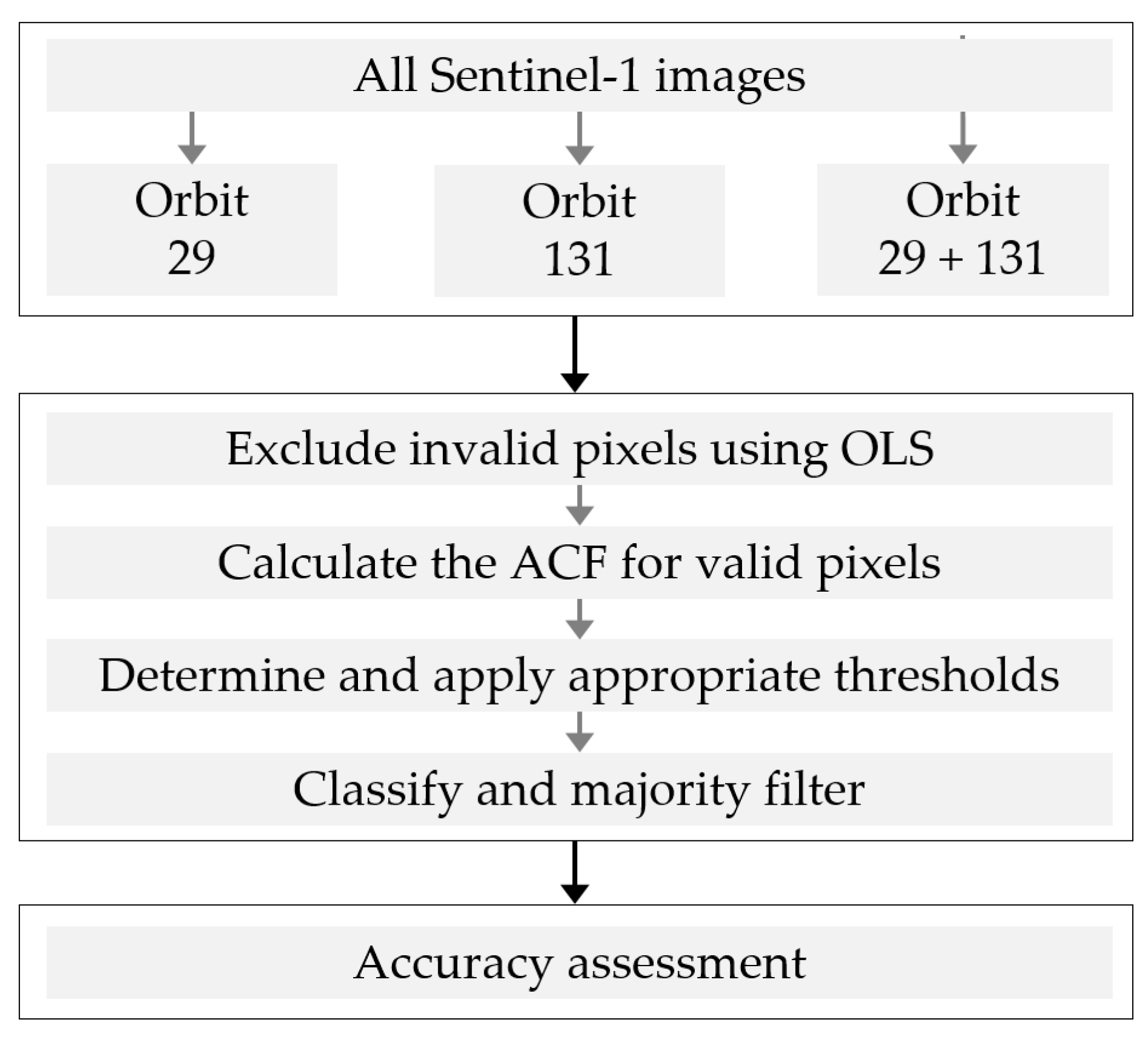

2.2. Experimental Design

- Acquisition and stacking of Sentinel-1 GRD scenes into three stacks;

- Determination of building orientation;

- Exclusion of invalid pixels;

- Calculation of temporal autocorrelation;

- Thresholding, classification, and filtering of changing pixels;

- Accuracy assessment.
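The autocorrelation step in the list above can be illustrated with a minimal sketch. It assumes the standard biased sample autocorrelation estimator applied to a single pixel's backscatter time series. The function name `sample_acf`, the synthetic series, and the noise level are illustrative assumptions, not the implementation used in this study.

```python
import numpy as np


def sample_acf(series, max_lag):
    """Biased sample autocorrelation for lags 0..max_lag (hypothetical helper)."""
    x = np.asarray(series, dtype=float)
    if max_lag >= x.size:
        raise ValueError("max_lag must be smaller than the series length")
    dev = x - x.mean()              # deviations from the temporal mean
    denom = np.sum(dev ** 2)        # lag-0 sum of squares
    if denom == 0:
        raise ValueError("autocorrelation is undefined for a constant series")
    return np.array(
        [np.sum(dev[: x.size - lag] * dev[lag:]) / denom for lag in range(max_lag + 1)]
    )


# Synthetic example (assumed values): 90 acquisitions with 1 dB of noise.
rng = np.random.default_rng(0)
noise = rng.normal(0.0, 1.0, size=90)
stable = -12.0 + noise                          # no change: noise around a fixed mean
changed = np.where(np.arange(90) < 45, -12.0, -6.0) + noise  # step change mid-series

acf_stable = sample_acf(stable, max_lag=10)
acf_changed = sample_acf(changed, max_lag=10)
```

In this sketch the step-change series keeps a high autocorrelation over many lags because its mean is non-stationary, whereas the stable series decorrelates almost immediately. That contrast is the property a threshold on the ACF can exploit.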

2.3. Data Collection and Preparation

2.3.1. Sentinel-1 Imagery

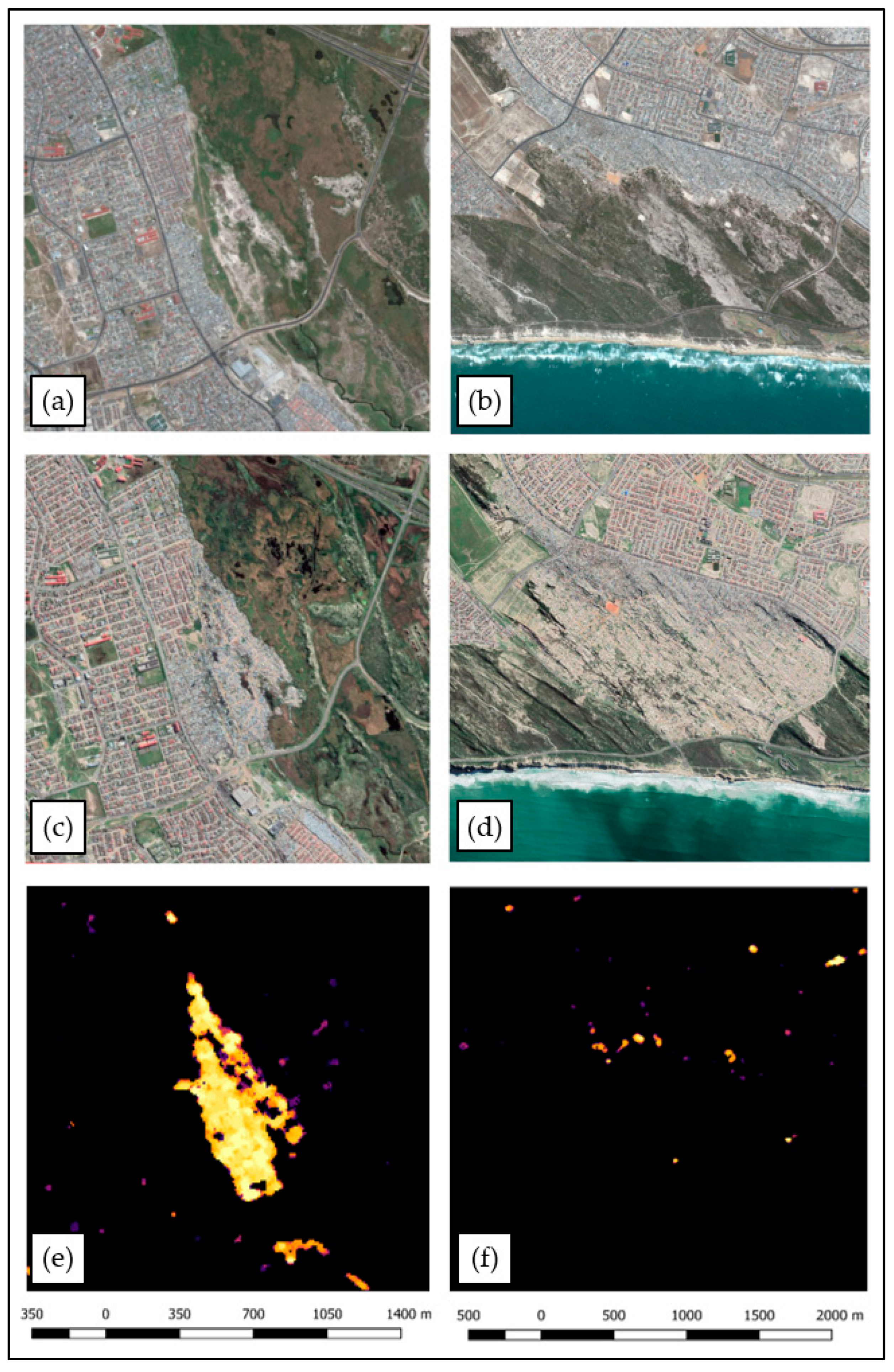

2.3.2. Google Earth Reference Imagery

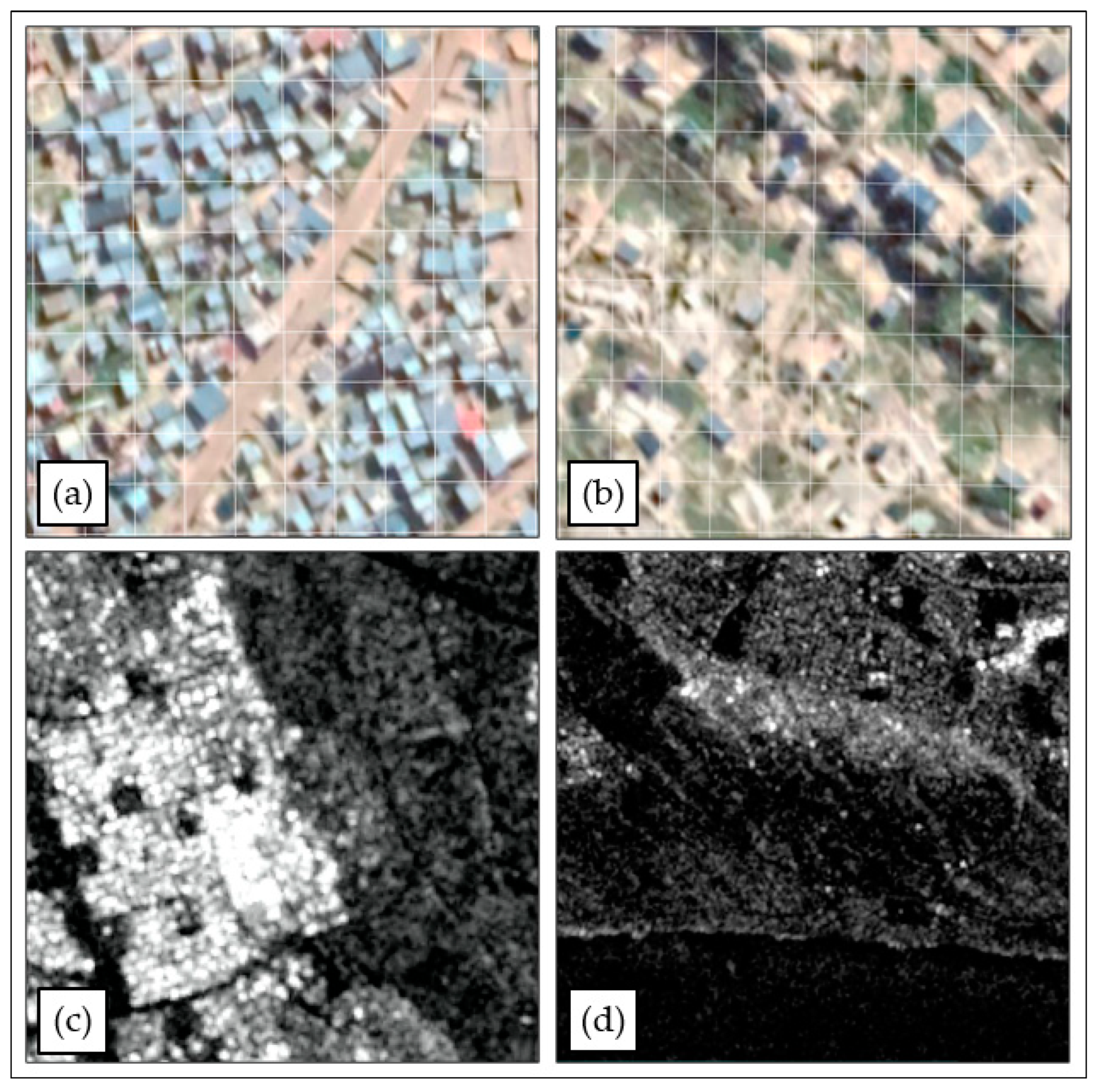

2.4. Estimation of Building Orientation

| Site | Building Orientation Angle (α) (°) | Orbit 29 (Look Direction = 72°): Incidence Angle (ϕ) (°) | Orbit 29: POA (θ) (°) | Orbit 131 (Look Direction = 73°): Incidence Angle (ϕ) (°) | Orbit 131: POA (θ) (°) |
|---|---|---|---|---|---|
| 1 | −5 | 43.23 | 6.85 | 32.21 | 7.11 |
| 2 | 6 | 43.38 | −8.23 | 32.39 | −10.59 |
| 3 | −37 | 43.11 | 45.91 | 32.05 | 43.09 |
| 4 | −28 | 44.15 | 36.54 | 33.33 | −57.67 |
| Mean | | 43.47 | | 32.50 | |
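As a hedged illustration of how the polarization orientation angle (POA) relates to the other columns, the sketch below assumes a relation of the form tan θ = −tan α / cos ϕ. The function name `poa_from_orientation` and the sign convention are assumptions. The relation reproduces the Orbit 29 POA values in the table to two decimals, but it does not reproduce the Orbit 131 column from the tabulated α, so it should be read as illustrative only.

```python
import math


def poa_from_orientation(alpha_deg, incidence_deg):
    """POA θ in degrees from orientation α and incidence angle ϕ (both in degrees).

    Assumed relation (not confirmed by the source): tan(θ) = -tan(α) / cos(ϕ).
    """
    alpha = math.radians(alpha_deg)
    phi = math.radians(incidence_deg)
    return math.degrees(math.atan(-math.tan(alpha) / math.cos(phi)))


# (α, ϕ, tabulated θ) for Orbit 29, copied from the table above.
orbit29 = [
    (-5, 43.23, 6.85),
    (6, 43.38, -8.23),
    (-37, 43.11, 45.91),
    (-28, 44.15, 36.54),
]

for alpha, phi, tabulated in orbit29:
    computed = poa_from_orientation(alpha, phi)
    print(f"alpha={alpha:>4}  phi={phi}  computed={computed:.2f}  tabulated={tabulated}")
```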

2.5. Pixel Exclusion Based on Ordinary Least Squares

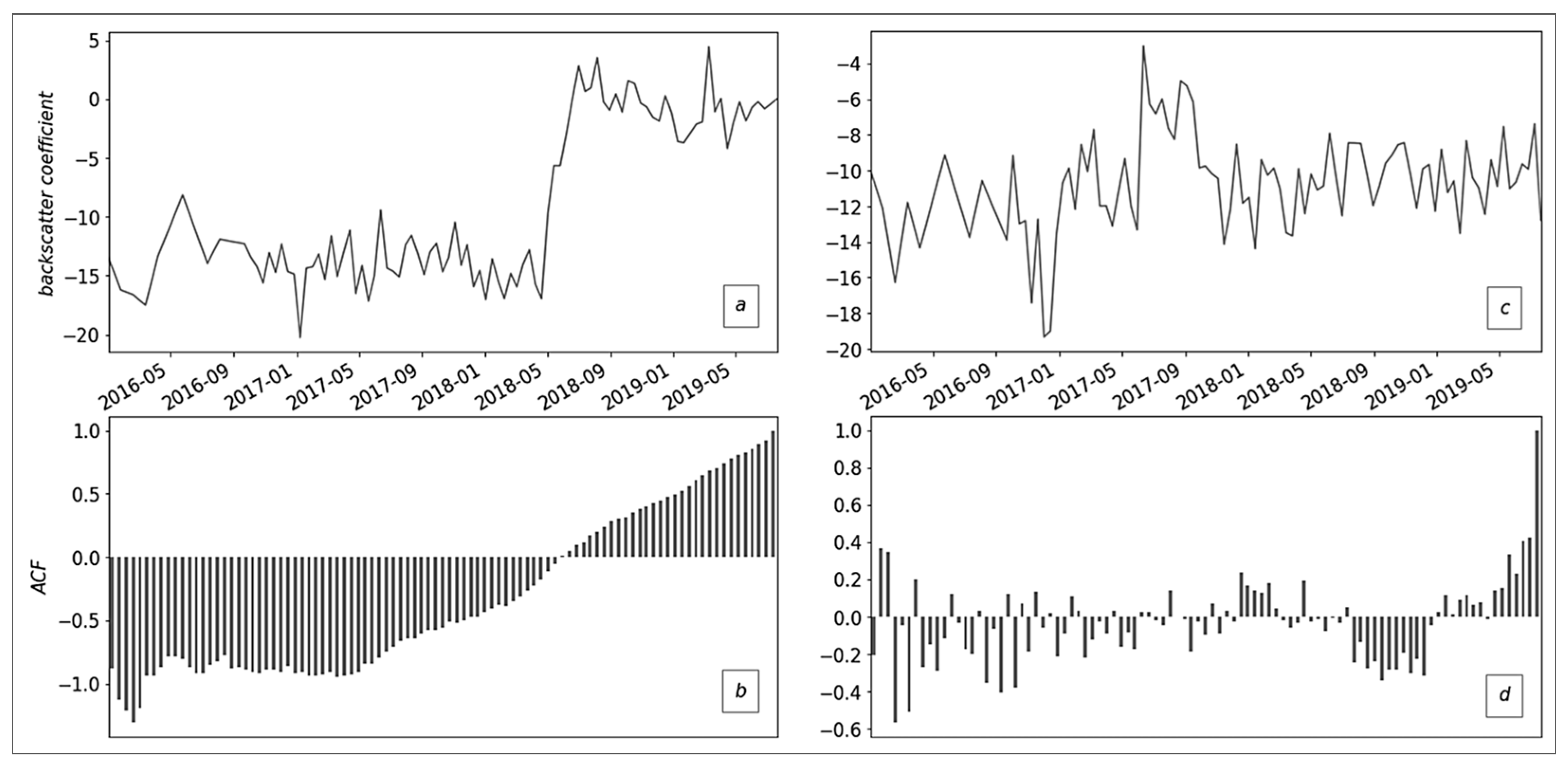

2.6. Autocorrelation Function Calculation

2.7. Thresholding and Classification

2.8. Accuracy Assessment

2.8.1. Sampling Scheme

2.8.2. Accuracy Metrics

3. Results

4. Discussion

4.1. Robustness of ACF-Based Classification

4.2. Effect of Building Properties

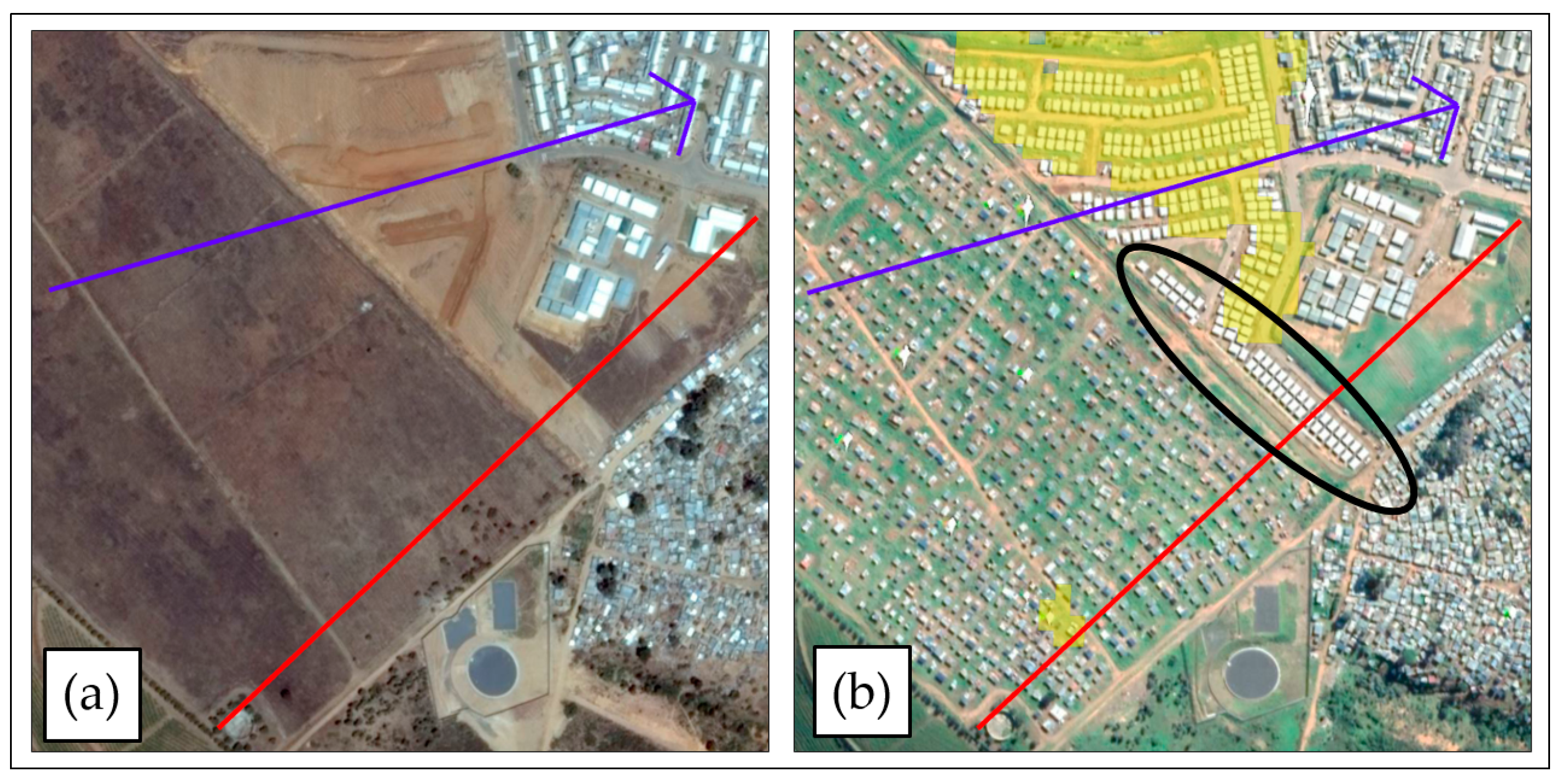

4.2.1. Building Density

- Infancy: settlements are built on open land in a dispersed layout, with approximately 50% of the land converted into houses;

- Booming: rapid expansion until most of the vacant land has been converted into settlements, peaking when approximately 80% of the land has been converted;

- Saturation: vertical expansion once all the vacant land has been converted.

4.2.2. Building Orientation

4.3. Comparison to Other Methods

4.4. Limitations and Future Recommendations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Year | Orbit 29 | Orbit 131 | Orbit 29 + 131 |
|---|---|---|---|
| 2016 | 16 | 17 | 33 |
| 2017 | 29 | 30 | 59 |
| 2018 | 30 | 31 | 61 |
| 2019 | 18 | 17 (16) | 35 (34) |
| Total | 93 | 95 (94) | 188 (187) |

| Intercept | Slope > 1 | Slope ≤ 1 |
|---|---|---|
| > −6 dB | Urban (increase) | Urban (stable/decrease) |
| ≤ −6 dB | Other to urban | Other (stable/decrease) |
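The decision table above can be expressed as a small function. This is a minimal sketch: it reads the second intercept row as ≤ −6 dB (the complement of the first row), and the function name `classify_pixel` and its parameter names are hypothetical.

```python
def classify_pixel(slope, intercept_db, slope_threshold=1.0, intercept_threshold_db=-6.0):
    """Map an OLS slope and intercept (dB) to one of the four table classes.

    Thresholds default to the table values; the -6 dB lower bound is an
    assumed reading of the second intercept row.
    """
    high_slope = slope > slope_threshold
    high_intercept = intercept_db > intercept_threshold_db
    if high_intercept:
        return "Urban (increase)" if high_slope else "Urban (stable/decrease)"
    return "Other to urban" if high_slope else "Other (stable/decrease)"


# Example with made-up values: a low intercept combined with a slope above 1.
print(classify_pixel(slope=1.4, intercept_db=-11.0))
```

Only the "Other to urban" class corresponds to new settlement, so a pixel-exclusion step built on this table would presumably keep that class and discard the rest.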

| Area | Change (Reference) (60%) | Change (Classified) (15%) | No Change (25%) | Total (100%) |
|---|---|---|---|---|
| Site 3 (3 × 3 km) | 144 (md = 60 m) | 36 (md = 40 m) | 60 (md = 80 m) | 240 |
| Sites 1, 2, and 4 (2 × 2 km) | 132 (md = 30 m) | 33 (md = 20 m) | 55 (md = 60 m) | 220 |
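The per-stratum sample counts in the table follow directly from the stated percentages. The short sketch below reproduces that arithmetic; the names `STRATA_PERCENT` and `allocate_samples` are illustrative, and the `md` spacing constraint is not modelled.

```python
# Stratum shares in percent, taken from the table header.
STRATA_PERCENT = {
    "change_reference": 60,
    "change_classified": 15,
    "no_change": 25,
}


def allocate_samples(total):
    """Split a total sample count across strata using integer arithmetic.

    Integer division is exact for the totals in the table (240 and 220);
    other totals could leave a remainder that this sketch does not redistribute.
    """
    return {name: total * pct // 100 for name, pct in STRATA_PERCENT.items()}


print(allocate_samples(240))  # Site 3
print(allocate_samples(220))  # Sites 1, 2, and 4
```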

| Actual class | Predicted P | Predicted N | Instances |
|---|---|---|---|
| P | True Positive (TP) | False Negative (FN) | mp |
| N | False Positive (FP) | True Negative (TN) | mn |
| Estimations | ep | en | m |

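| Metric | Abbr. | Equation |
|---|---|---|
| Sensitivity | SNS | $\mathrm{SNS} = \frac{TP}{TP + FN}$ |
| Specificity | SPC | $\mathrm{SPC} = \frac{TN}{TN + FP}$ |
| Bookmaker Informedness | BM | $\mathrm{BM} = \mathrm{SNS} + \mathrm{SPC} - 1$ |
| Matthews Correlation Coefficient | MCC | $\mathrm{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$ |
| Overall Accuracy | OA | $\mathrm{OA} = \frac{TP + TN}{TP + FN + FP + TN}$ |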
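For reference, the listed metrics can be computed from the four confusion-matrix counts using their standard definitions. The sketch below is illustrative: the function name `binary_metrics` and the example counts are made up and are not results from this study.

```python
import math


def binary_metrics(tp, fn, fp, tn):
    """Standard binary metrics from confusion-matrix counts.

    Assumes both actual classes are present (tp + fn > 0 and tn + fp > 0).
    """
    sns = tp / (tp + fn)                 # sensitivity (true positive rate)
    spc = tn / (tn + fp)                 # specificity (true negative rate)
    bm = sns + spc - 1                   # bookmaker informedness
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    oa = (tp + tn) / (tp + fn + fp + tn)  # overall accuracy
    return {"SNS": sns, "SPC": spc, "BM": bm, "MCC": mcc, "OA": oa}


# Hypothetical counts, for illustration only.
metrics = binary_metrics(tp=40, fn=10, fp=5, tn=45)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Unlike OA, BM and MCC use all four cells of the matrix, which is why they are commonly preferred when the change and no-change classes are imbalanced.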

| | Site 1 (δ = 0.13) | | | Site 2 (δ = 0.12) | | | Site 3 (δ = 0.54) | | | Site 4 (δ = 0.38) | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Orbit | 131 | 29 | 29 + 131 | 131 | 29 | 29 + 131 | 131 | 29 | 29 + 131 | 131 | 29 | 29 + 131 |
| Threshold | 60 | 45 | 95 | 49 | 47 | 95 | 35 | 44 | 76 | 38 | 41 | 77 |
| Sensitivity | 0.604 | 0.854 | 0.802 | 0.732 | 0.814 | 0.825 | 0.182 | 0.145 | 0.145 | 0.265 | 0.309 | 0.279 |
| Specificity | 0.911 | 0.782 | 0.766 | 0.821 | 0.724 | 0.732 | 0.914 | 0.984 | 0.984 | 0.901 | 0.954 | 0.947 |
| MCCn | 0.775 | 0.816 | 0.782 | 0.778 | 0.767 | 0.776 | 0.564 | 0.630 | 0.630 | 0.607 | 0.682 | 0.660 |
| BMn | 0.758 | 0.818 | 0.784 | 0.777 | 0.769 | 0.778 | 0.548 | 0.565 | 0.565 | 0.583 | 0.631 | 0.613 |
| MM | 0.762 | 0.818 | 0.784 | 0.777 | 0.769 | 0.778 | 0.552 | 0.581 | 0.581 | 0.589 | 0.644 | 0.625 |
| OA | 0.777 | 0.814 | 0.782 | 0.782 | 0.764 | 0.773 | 0.746 | 0.792 | 0.792 | 0.705 | 0.755 | 0.741 |
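The BMn row can be cross-checked against the sensitivity and specificity rows. The sketch below assumes that BMn denotes Bookmaker Informedness rescaled from [−1, 1] to [0, 1], so that BMn = (BM + 1)/2 = (SNS + SPC)/2. That reading is an assumption, although the tabulated values agree with it to within rounding.

```python
# Rows copied from the results table, in column order
# (Sites 1-4, each with Orbit 131, 29, and 29 + 131).
sensitivity = [0.604, 0.854, 0.802, 0.732, 0.814, 0.825,
               0.182, 0.145, 0.145, 0.265, 0.309, 0.279]
specificity = [0.911, 0.782, 0.766, 0.821, 0.724, 0.732,
               0.914, 0.984, 0.984, 0.901, 0.954, 0.947]
bmn_table = [0.758, 0.818, 0.784, 0.777, 0.769, 0.778,
             0.548, 0.565, 0.565, 0.583, 0.631, 0.613]

# Assumed normalisation: BMn = (SNS + SPC - 1 + 1) / 2 = (SNS + SPC) / 2.
bmn_computed = [(sns + spc) / 2 for sns, spc in zip(sensitivity, specificity)]

max_deviation = max(abs(c - t) for c, t in zip(bmn_computed, bmn_table))
print(f"largest deviation from the tabulated BMn: {max_deviation:.4f}")
```

Under the same normalisation, a BMn of 0.5 corresponds to chance-level performance (BM = 0), which helps in reading the low-sensitivity Site 3 and Site 4 columns.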

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kapp, J.; Kemp, J. Temporal Autocorrelation of Sentinel-1 SAR Imagery for Detecting Settlement Expansion. Geomatics 2023, 3, 427-446. https://doi.org/10.3390/geomatics3030023
