Three Dimensional Change Detection Using Point Clouds: A Review

Abstract

1. Introduction

- Challenges related to the use of point clouds in change detection (CD), and a survey of 3D CD methods;

- Comprehensive review of the popular point cloud datasets used as 3D CD benchmarks;

- Detailed description of evaluation metrics used to quantify change detection performance;

- List of the remaining challenges and future research directions that could advance CD using 3D point clouds.

2. 3D Change Detection Using 3D Point Clouds

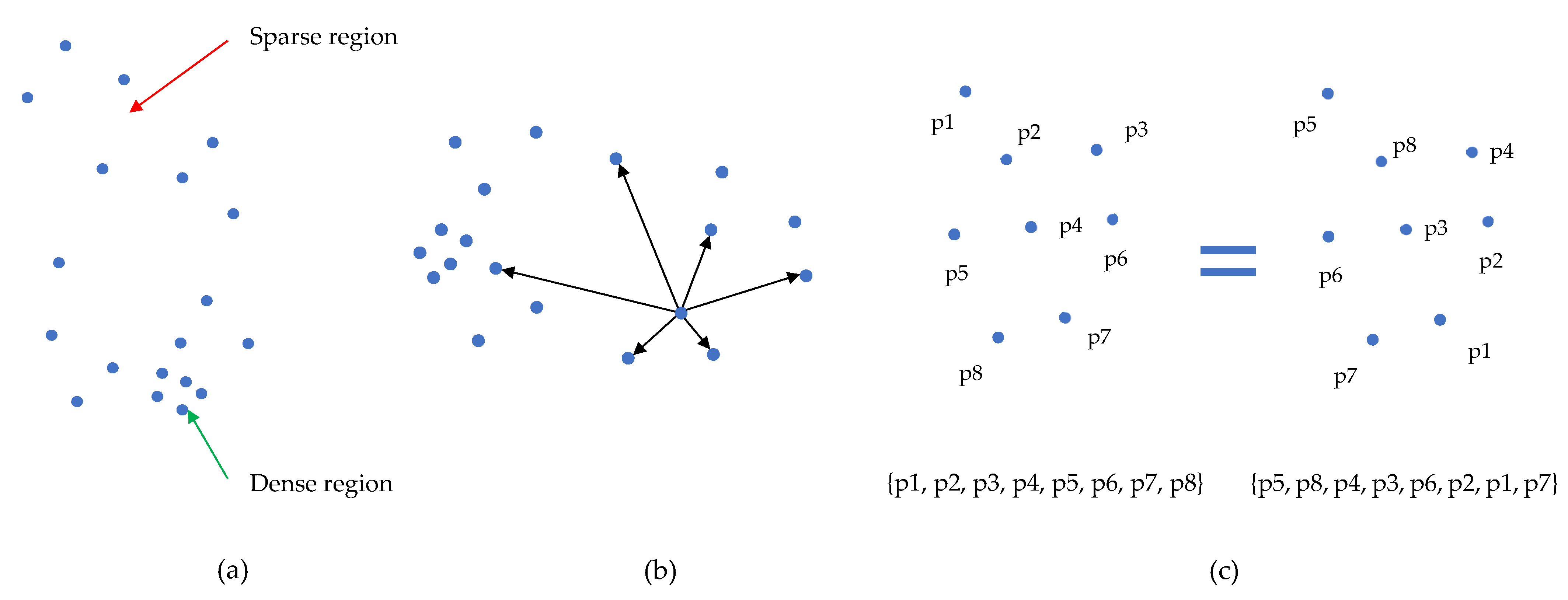

2.1. Challenges and Specificities

2.1.1. Acquisition Challenges

2.1.2. Three Dimensional Point Clouds Specificities

2.2. Data Preprocessing

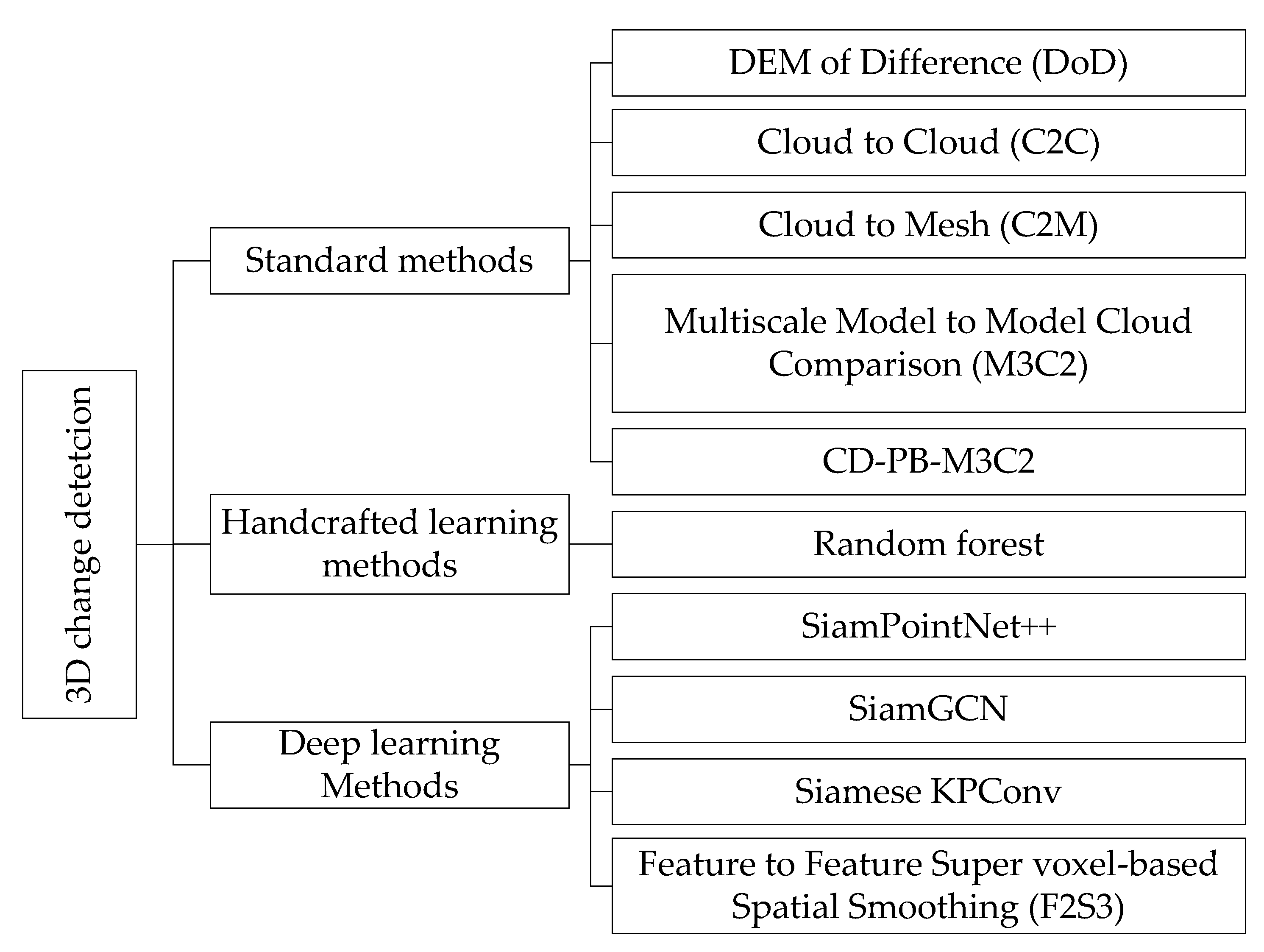

2.3. Three Dimensional Change Detection Methods

- Change unit. This categorization depends on the basic unit used in the CD process: points, voxels, objects, or rays;

- Order of classification and change detection. This categorization distinguishes methods that detect changes first and then classify them (pre-classification methods), methods that classify first and then detect changes (post-classification methods), and methods that integrate the two steps into one (integrated methods);

- Technique used. Methods are grouped by their underlying technique, for example distance-based or learning-based;

- Target. This depends on the application domain: urban, forestry, maritime, etc.
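To make the "change unit" and "technique used" categories concrete, the following minimal sketch contrasts a point-based, distance-based comparison with a voxel-based occupancy comparison on two toy epochs. It is an illustration under stated assumptions rather than any specific method from the surveyed literature: the function names, the toy coordinates, the distance threshold, and the voxel size are all hypothetical, and the brute-force nearest-neighbour search is only practical for very small clouds.

```python
import math

def nearest_distance(point, cloud):
    """Euclidean distance from `point` to its nearest neighbour in `cloud` (brute force)."""
    return min(math.dist(point, q) for q in cloud)

def point_based_changes(reference, target, threshold):
    """Point unit, distance technique: flag each target point whose nearest
    reference point is farther away than `threshold` (hypothetical parameter)."""
    return [nearest_distance(p, reference) > threshold for p in target]

def voxel_index(point, size):
    """Integer index of the cubic voxel of edge length `size` that contains `point`."""
    return tuple(math.floor(c / size) for c in point)

def voxel_based_changes(reference, target, size):
    """Voxel unit: compare the sets of occupied voxels of the two epochs.
    Returns (appeared, disappeared) voxel index sets."""
    occupied_ref = {voxel_index(p, size) for p in reference}
    occupied_tgt = {voxel_index(p, size) for p in target}
    return occupied_tgt - occupied_ref, occupied_ref - occupied_tgt

# Toy data (assumed): a flat patch at epoch 1, plus one new elevated point at epoch 2.
epoch_1 = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
epoch_2 = epoch_1 + [(0.5, 0.5, 2.0)]

changed_flags = point_based_changes(epoch_1, epoch_2, threshold=0.5)
appeared, disappeared = voxel_based_changes(epoch_1, epoch_2, size=1.0)
```

In this toy case only the added point is flagged by the point-based comparison, and the voxel-based comparison reports a single newly occupied voxel. Real pipelines would typically replace the brute-force search with a spatial index and would need the two epochs to be registered beforehand.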

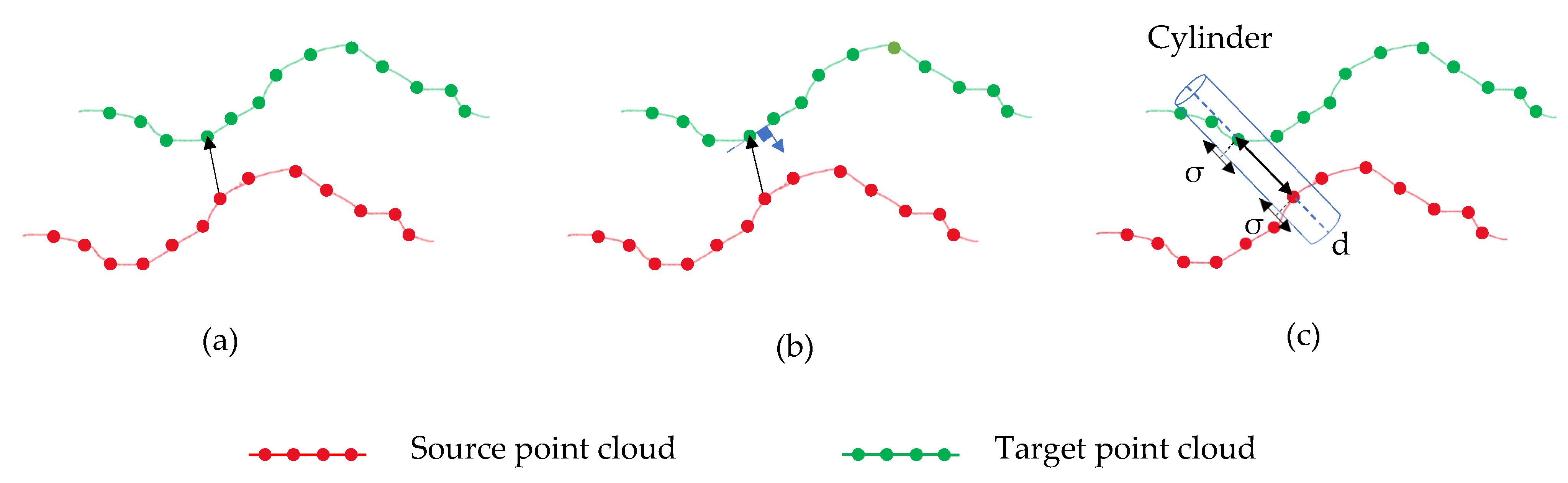

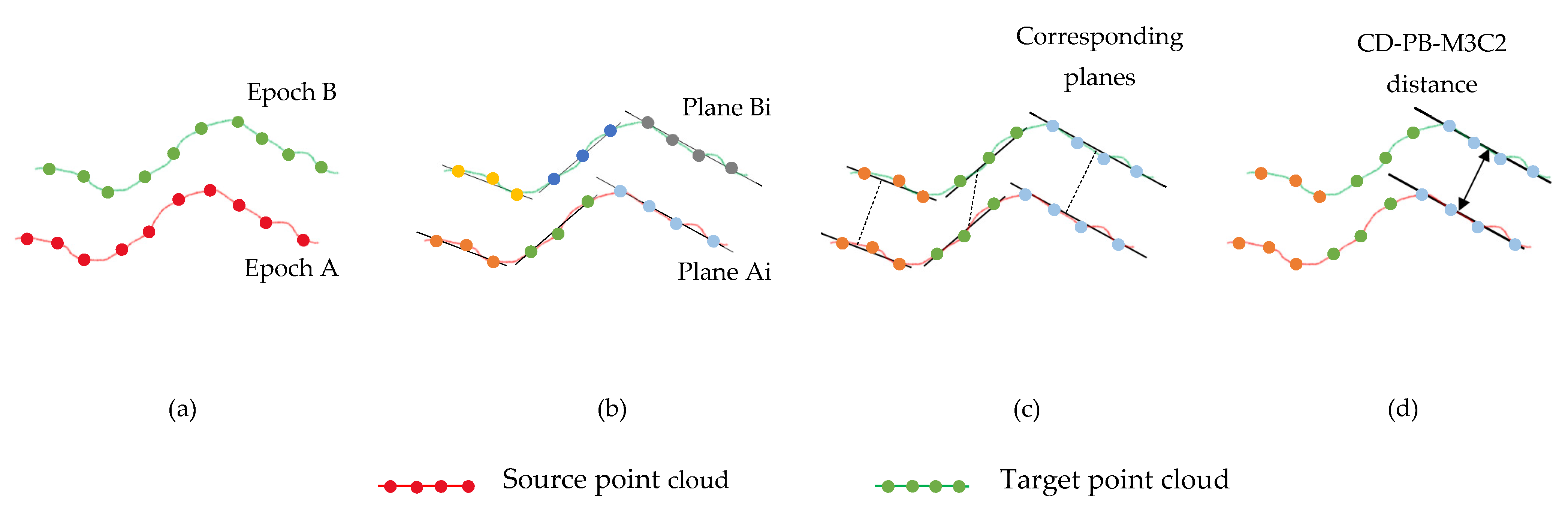

2.3.1. Standard Methods

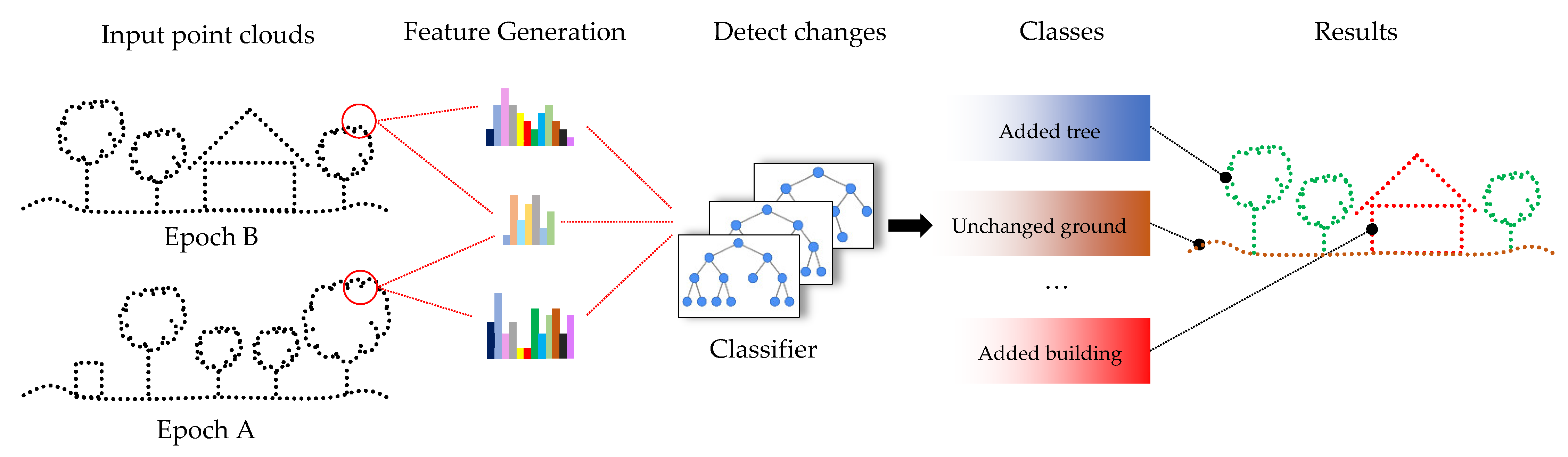

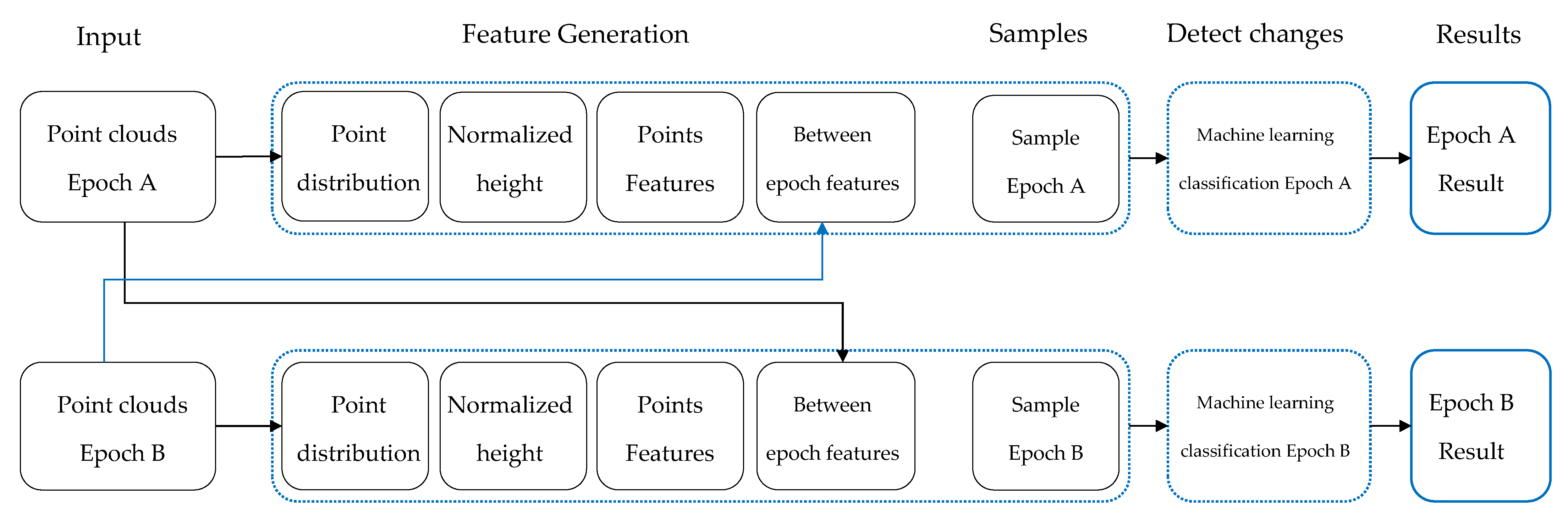

2.3.2. Machine Learning with Handcrafted Features

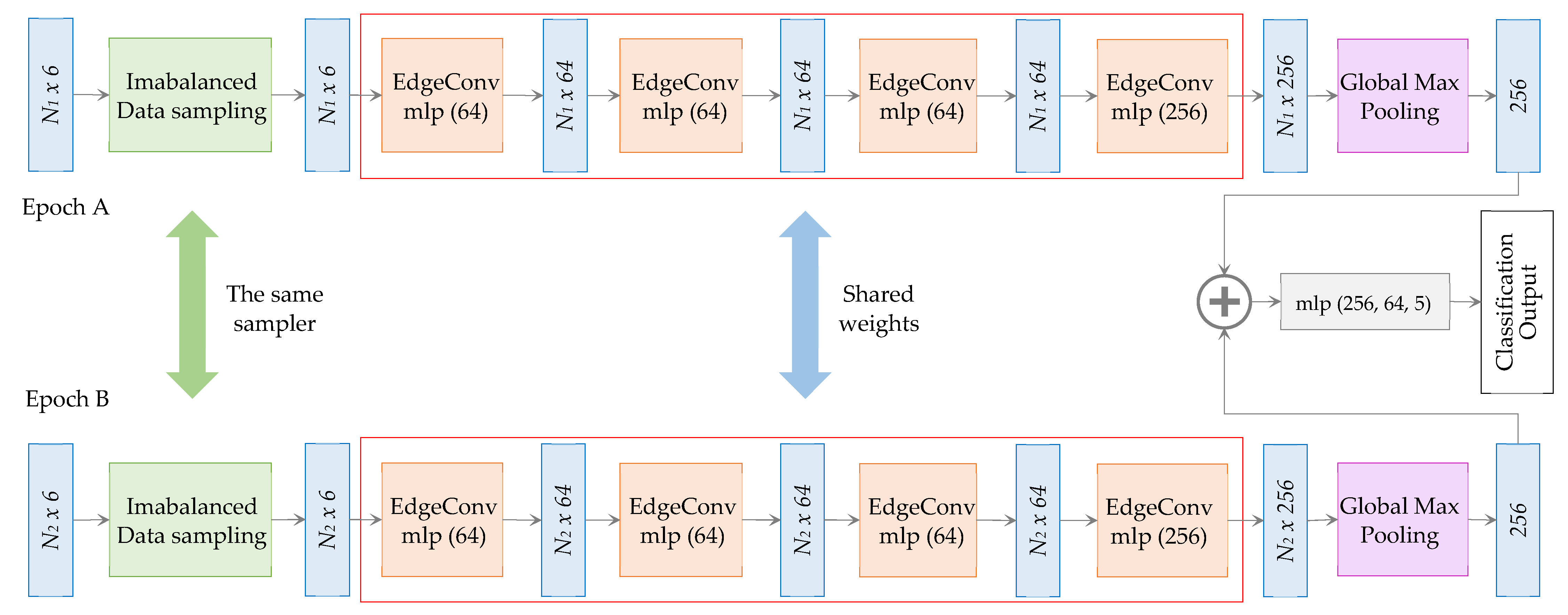

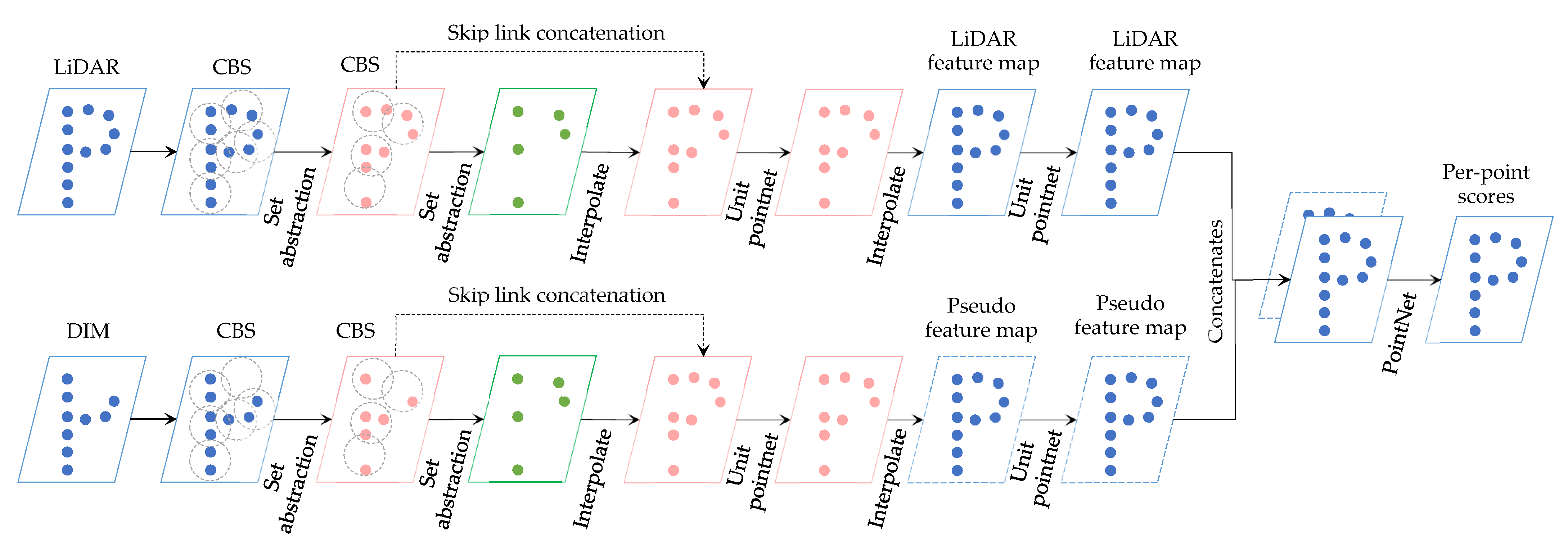

2.3.3. Deep Learning Methods

3. Benchmarks

3.1. Datasets for 3D Change Detection

3.2. Evaluation Metrics
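As a hedged illustration of how change detection output can be scored, the sketch below computes precision, recall, F1 score, and intersection over union (IoU) for the "changed" class from per-point binary labels. The choice of these four metrics is an assumption based on common practice in binary classification and segmentation; the function name and the toy labels are hypothetical.

```python
def change_metrics(truth, predicted):
    """Score binary change labels (1 = changed, 0 = unchanged) for the changed class.

    Returns a dict with precision, recall, F1, and IoU. A metric whose
    denominator is zero is reported as 0.0 by convention in this sketch.
    """
    if len(truth) != len(predicted):
        raise ValueError("label sequences must have the same length")

    tp = sum(1 for t, p in zip(truth, predicted) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(truth, predicted) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(truth, predicted) if t == 1 and p == 0)

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}

# Toy labels (assumed): five points, three truly changed.
truth = [1, 1, 0, 0, 1]
predicted = [1, 0, 0, 1, 1]
scores = change_metrics(truth, predicted)
```

Here two changed points are found, one is missed, and one unchanged point is falsely flagged, so precision and recall are both 2/3 and IoU is 0.5. For multi-class change labels, the same quantities would usually be computed per class and then averaged.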

4. Discussion and Perspectives

- The use of heterogeneous and multi-modal data (acquired by photogrammetry, laser scanner or other acquisition techniques).

- The use of multi-resolution data (acquired by sensors with different specifications).

- The availability of benchmark data for 3D CD.

- The exploitation of progress in 3D semantic segmentation to integrate semantic information into the 3D CD process.

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Virtanen, J.P.; Kukko, A.; Kaartinen, H.; Jaakkola, A.; Turppa, T.; Hyyppä, H.; Hyyppä, J. Nationwide point cloud-The future topographic core data. ISPRS Int. J. Geo-Inf. 2017, 6, 243. [Google Scholar] [CrossRef]

- Théau, J. Change Detection. In Encyclopedia of GIS; Shekhar, S., Xiong, H., Eds.; Springer: Boston, MA, USA, 2008; pp. 77–84. ISBN 978-0-387-35973-1. [Google Scholar]

- Si Salah, H.; Ait-Aoudia, S.; Rezgui, A.; Goldin, S.E. Change detection in urban areas from remote sensing data: A multidimensional classification scheme. Int. J. Remote Sens. 2019, 40, 6635–6679. [Google Scholar] [CrossRef]

- Shi, W.; Zhang, M.; Zhang, R.; Chen, S.; Zhan, Z. Change detection based on artificial intelligence: State-of-the-art and challenges. Remote Sens. 2020, 12, 1688. [Google Scholar] [CrossRef]

- Seo, J.; Park, W.; Kim, T. Feature-Based Approach to Change Detection of Small Objects from High-Resolution Satellite Images. Remote Sens. 2022, 14, 462. [Google Scholar] [CrossRef]

- Han, T.; Tang, Y.; Yang, X.; Lin, Z.; Zou, B.; Feng, H. Change detection for heterogeneous remote sensing images with improved training of hierarchical extreme learning machine (Helm). Remote Sens. 2021, 13, 4918. [Google Scholar] [CrossRef]

- You, Y.; Cao, J.; Zhou, W. A survey of change detection methods based on remote sensing images for multi-source and multi-objective scenarios. Remote Sens. 2020, 12, 2460. [Google Scholar] [CrossRef]

- Ghaderpour, E.; Vujadinovic, T. Change detection within remotely sensed satellite image time series via spectral analysis. Remote Sens. 2020, 12, 4001. [Google Scholar] [CrossRef]

- Afaq, Y.; Manocha, A. Analysis on change detection techniques for remote sensing applications: A review. Ecol. Inform. 2021, 63, 101310. [Google Scholar] [CrossRef]

- Goswami, A.; Sharma, D.; Mathuku, H.; Gangadharan, S.M.P.; Yadav, C.S.; Sahu, S.K.; Pradhan, M.K.; Singh, J.; Imran, H. Change Detection in Remote Sensing Image Data Comparing Algebraic and Machine Learning Methods. Electronics 2022, 11, 431. [Google Scholar] [CrossRef]

- Asokan, A.; Anitha, J. Change detection techniques for remote sensing applications: A survey. Earth Sci. Inform. 2019, 12, 143–160. [Google Scholar] [CrossRef]

- Kiran, B.R.; Roldão, L.; Irastorza, B.; Verastegui, R.; Süss, S.; Yogamani, S.; Talpaert, V.; Lepoutre, A.; Trehard, G. Real-time dynamic object detection for autonomous driving using prior 3D-maps. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2019; Volume 11133, pp. 567–582. [Google Scholar] [CrossRef]

- Khatab, E.; Onsy, A.; Varley, M.; Abouelfarag, A. Vulnerable objects detection for autonomous driving: A review. Integration 2021, 78, 36–48. [Google Scholar] [CrossRef]

- Qin, R.; Tian, J.; Reinartz, P. 3D change detection–Approaches and applications. ISPRS J. Photogramm. Remote Sens. 2016, 122, 41–56. [Google Scholar] [CrossRef]

- Shuai, W.; Jiang, F.; Zheng, H.; Li, J. MSGATN: A Superpixel-Based Multi-Scale Siamese Graph Attention Network for Change Detection in Remote Sensing Images. Appl. Sci. 2022, 12, 5158. [Google Scholar] [CrossRef]

- Lv, Z.Y.; Liu, T.F.; Benediktsson, J.A.; Lei, T.; Wan, Y.L. Multi-scale object histogram distance for LCCD using bi-temporal very-high-resolution remote sensing images. Remote Sens. 2018, 10, 1809. [Google Scholar] [CrossRef]

- De Gélis, I.; Lefèvre, S.; Corpetti, T. Change detection in urban point clouds: An experimental comparison with simulated 3d datasets. Remote Sens. 2021, 13, 2629. [Google Scholar] [CrossRef]

- Zięba-Kulawik, K.; Skoczylas, K.; Wężyk, P.; Teller, J.; Mustafa, A.; Omrani, H. Monitoring of urban forests using 3D spatial indices based on LiDAR point clouds and voxel approach. Urban For. Urban Green 2021, 65, 127324. [Google Scholar] [CrossRef]

- De Gelis, I.; Lefevre, S.; Corpetti, T.; Ristorcelli, T.; Thenoz, C.; Lassalle, P. Benchmarking Change Detection in Urban 3D Point Clouds. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 3352–3355. [Google Scholar] [CrossRef]

- Tompalski, P.; Coops, N.C.; White, J.C.; Goodbody, T.R.H.; Hennigar, C.R.; Wulder, M.A.; Socha, J.; Woods, M.E. Estimating Changes in Forest Attributes and Enhancing Growth Projections: A Review of Existing Approaches and Future Directions Using Airborne 3D Point Cloud Data. Curr. For. Rep. 2021, 7, 25–30. [Google Scholar] [CrossRef]

- Duncanson, L.; Dubayah, R. Monitoring individual tree-based change with airborne lidar. Ecol. Evol. 2018, 8, 5079–5089. [Google Scholar] [CrossRef]

- Yrttimaa, T.; Luoma, V.; Saarinen, N.; Kankare, V.; Junttila, S.; Holopainen, M.; Hyyppä, J.; Vastaranta, M. Structural changes in Boreal forests can be quantified using terrestrial laser scanning. Remote Sens. 2020, 12, 2672. [Google Scholar] [CrossRef]

- Gstaiger, V.; Tian, J.; Kiefl, R.; Kurz, F. 2D vs. 3D change detection using aerial imagery to support crisis management of large-scale events. Remote Sens. 2018, 10, 2054. [Google Scholar] [CrossRef]

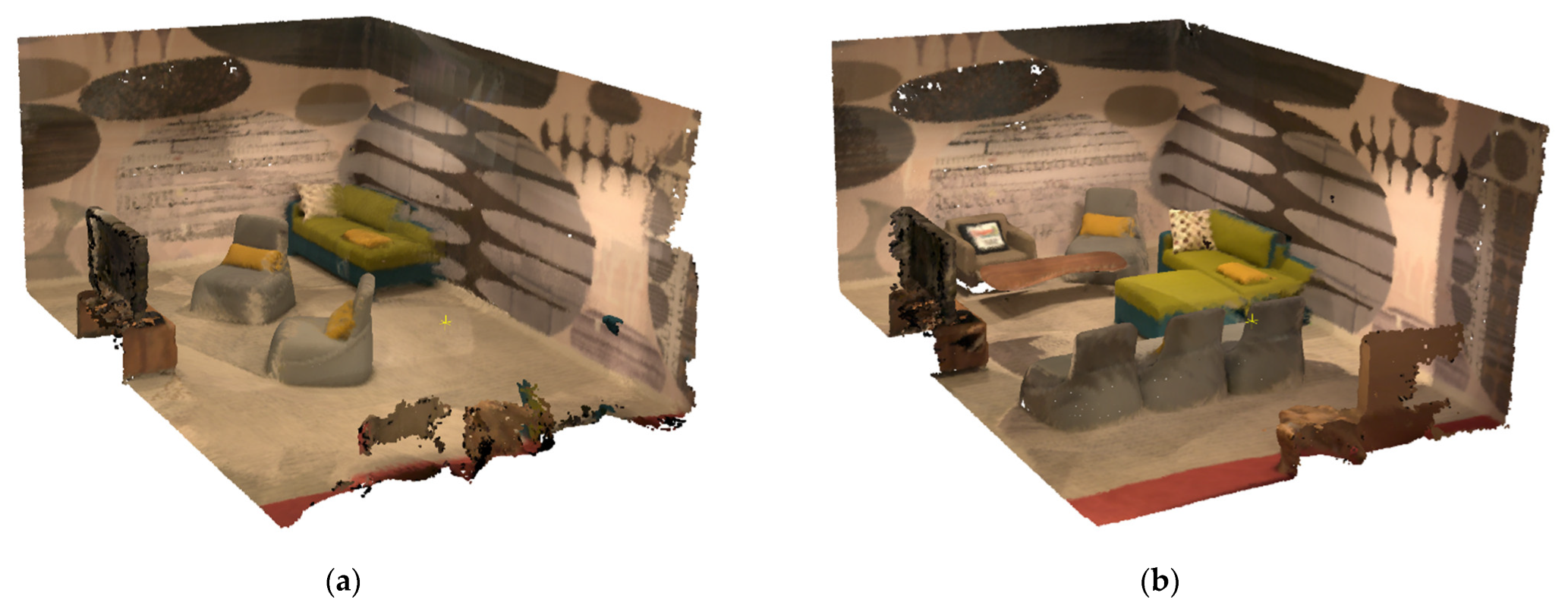

- Koeva, M.; Nikoohemat, S.; Elberink, S.O.; Morales, J.; Lemmen, C.; Zevenbergen, J. Towards 3D indoor cadastre based on change detection from point clouds. Remote Sens. 2019, 11, 1972. [Google Scholar] [CrossRef]

- Awrangjeb, M.; Gilani, S.A.N.; Siddiqui, F.U. An effective data-driven method for 3-D building roof reconstruction and robust change detection. Remote Sens. 2018, 10, 1512. [Google Scholar] [CrossRef]

- Awrangjeb, M. Effective generation and update of a building map database through automatic building change detection from LiDAR point cloud data. Remote Sens. 2015, 7, 14119–14150. [Google Scholar] [CrossRef]

- Mayr, A.; Rutzinger, M.; Geitner, C. Object-based point cloud analysis for landslide and erosion monitoring. Photogramm. Eng. Remote Sens. 2019, 85, 455–462. [Google Scholar] [CrossRef]

- Kromer, R.A.; Abellán, A.; Hutchinson, D.J.; Lato, M.; Chanut, M.A.; Dubois, L.; Jaboyedoff, M. Automated terrestrial laser scanning with near-real-time change detection-Monitoring of the Séchilienne landslide. Earth Surf. Dyn. 2017, 5, 293–310. [Google Scholar] [CrossRef]

- Bessin, Z.; Letortu, P.; Jaud, M.; Delacourt, C.; Costa, S.; Maquaire, O.; Davidson, R.; Corpetti, T. Cliff change detection using siamese kpconv deep network on 3d point clouds. In Proceedings of the ISPRS Congress, Nice, France, June 2022; Volume 53, pp. 457–476. [Google Scholar]

- Gojcic, Z.; Schmid, L.; Wieser, A. Dense 3D displacement vector fields for point cloud-based landslide monitoring. Landslides 2021, 18, 3821–3832. [Google Scholar] [CrossRef]

- Mayr, A.; Rutzinger, M.; Bremer, M.; Oude Elberink, S.; Stumpf, F.; Geitner, C. Object-based classification of terrestrial laser scanning point clouds for landslide monitoring. Photogramm. Rec. 2017, 32, 377–397. [Google Scholar] [CrossRef]

- Meyer, T.; Brunn, A.; Stilla, U. Automation in Construction Change detection for indoor construction progress monitoring based on BIM, point clouds and uncertainties. Autom. Constr. 2022, 141, 104442. [Google Scholar] [CrossRef]

- Okyay, U.; Telling, J.; Glennie, C.L.; Dietrich, W.E. Airborne lidar change detection: An overview of Earth sciences applications. Earth-Sci. Rev. 2019, 198, 102929. [Google Scholar] [CrossRef]

- Anders, K.; Marx, S.; Boike, J.; Herfort, B.; Wilcox, E.J.; Langer, M.; Marsh, P.; Höfle, B. Multitemporal terrestrial laser scanning point clouds for thaw subsidence observation at Arctic permafrost monitoring sites. Earth Surf. Process Landf. 2020, 45, 1589–1600. [Google Scholar] [CrossRef]

- Girardeau-Montaut, D.; Roux, M.; Marc, R.; Thibault, G. Change detection on point cloud data acquired with a ground laser scanner. In Proceedings of the ISPRS WG III/3, III/4, V/3 Workshop Laser Scanning 2005, Enschede, The Netherlands, 12–14 September 2005; Volume 36. [Google Scholar]

- Dai, C.; Zhang, Z.; Lin, D. An object-based bidirectional method for integrated building extraction and change detection between multimodal point clouds. Remote Sens. 2020, 12, 1680. [Google Scholar] [CrossRef]

- Schachtschneider, J.; Schlichting, A.; Brenner, C. Assessing temporal behavior in lidar point clouds of urban environments. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.-ISPRS Arch. 2017, 42, 543–550. [Google Scholar] [CrossRef]

- Aijazi, A.K.; Checchin, P.; Trassoudaine, L. Detecting and Updating Changes in Lidar Point Clouds for Automatic 3D Urban Cartography. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 2, 7–12. [Google Scholar] [CrossRef]

- Gehrung, J.; Hebel, M.; Arens, M.; Stilla, U. A fast voxel-based indicator for change detection using low resolution octrees. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 4, 357–364. [Google Scholar] [CrossRef]

- Gehrung, J.; Hebel, M.; Arens, M.; Stilla, U. A voxel-based metadata structure for change detection in point clouds of large-scale urban areas. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 4, 97–104. [Google Scholar] [CrossRef]

- Harith, A.; Laefer, D.F.; Dolores, C.; Manuel, V. Voxel Change: Big Data–Based Change Detection for Aerial Urban LiDAR of Unequal Densities. J. Surv. Eng. 2021, 147, 4021023. [Google Scholar] [CrossRef]

- Moravec, H.P. Sensor Fusion in Certainty Grids for Mobile Robots. Sens. Devices Syst. Robot. 1989, 9, 253–276. [Google Scholar] [CrossRef]

- Elfes, A. Occupancy Grids: A Probabilistic Framework for Robot Perception and Navigation; Carnegie Mellon University: Pittsburgh, PA, USA, 1989. [Google Scholar]

- Nguyen, A.; Le, B. 3D point cloud segmentation: A survey. In Proceedings of the 2013 6th IEEE Conference on Robotics, Automation and Mechatronics (RAM), Manila, Philippines, 12–15 November 2013; pp. 225–230. [Google Scholar]

- Liu, W.; Sun, J.; Li, W.; Hu, T.; Wang, P. Deep learning on point clouds and its application: A survey. Sensors 2019, 19, 4188. [Google Scholar] [CrossRef]

- Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep learning for 3D point clouds: A survey. arXiv 2019, arXiv:1912.12033. [Google Scholar] [CrossRef] [PubMed]

- Xie, Y.; Tian, J.; Zhu, X.X. Linking Points with Labels in 3D: A Review of Point Cloud Semantic Segmentation. IEEE Geosci. Remote Sens. Mag. 2020, 8, 38–59. [Google Scholar] [CrossRef]

- Ouyang, B.; Raviv, D. Occlusion guided scene flow estimation on 3D point clouds. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, TN, USA, 19–25 June 2021; pp. 2799–2808. [Google Scholar] [CrossRef]

- Jund, P.; Sweeney, C.; Abdo, N.; Chen, Z.; Shlens, J. Scalable Scene Flow from Point Clouds in the Real World; IEEE: Piscatway, NJ, USA, 2021. [Google Scholar]

- Vedula, S.; Baker, S.; Rander, P.; Collins, R.; Kanade, T. Three-dimensional scene flow. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 475–480. [Google Scholar] [CrossRef] [PubMed]

- Wang, S.; Sun, Y.; Liu, C.; Liu, M. PointTrackNet: An End-to-End Network for 3-D Object Detection and Tracking from Point Clouds. IEEE Robot. Autom. Lett. 2020, 5, 3206–3212. [Google Scholar] [CrossRef]

- Girão, P.; Asvadi, A.; Peixoto, P.; Nunes, U. 3D object tracking in driving environment: A short review and a benchmark dataset. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 7–12. [Google Scholar] [CrossRef]

- Zeibak, R.; Filin, S. Change detection via terrestrial laser scanning. In Proceedings of the ISPRS Workshop on Laser Scanning, ISPRS Workshop on Laser Scanning 2007 and SilviLaser 2007, Espoo, Finland, 12–14 September 2007; pp. 430–435. [Google Scholar]

- Jiang, H.; Peng, M.; Zhong, Y.; Xie, H.; Hao, Z.; Lin, J.; Ma, X.; Hu, X. A Survey on Deep Learning-Based Change Detection from High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 1552. [Google Scholar] [CrossRef]

- Hemati, M.; Hasanlou, M.; Mahdianpari, M.; Mohammadimanesh, F. A systematic review of landsat data for change detection applications: 50 years of monitoring the earth. Remote Sens. 2021, 13, 2869. [Google Scholar] [CrossRef]

- Pang, S.; Hu, X.; Wang, Z.; Lu, Y. Object-based analysis of airborne LiDAR data for building change detection. Remote Sens. 2014, 6, 10733–10749. [Google Scholar] [CrossRef]

- Xu, S.; Vosselman, G.; Elberink, S.O. Detection and classification of changes in buildings from airborne laser scanning data. Remote Sens. 2015, 7, 17051–17076. [Google Scholar] [CrossRef]

- Chen, J.; Yi, J.S.K.; Kahoush, M.; Cho, E.S.; Cho, Y.K. Point cloud scene completion of obstructed building facades with generative adversarial inpainting. Sensors 2020, 20, 5029. [Google Scholar] [CrossRef]

- Dai, A.; Ritchie, D.; Bokeloh, M.; Reed, S.; Sturm, J.; Niebner, M. ScanComplete: Large-Scale Scene Completion and Semantic Segmentation for 3D Scans. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4578–4587. [Google Scholar] [CrossRef]

- Yang, X.; Zou, H.; Kong, X.; Huang, T.; Liu, Y.; Li, W.; Wen, F.; Zhang, H. Semantic Segmentation-assisted Scene Completion for LiDAR Point Clouds. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 3555–3562. [Google Scholar] [CrossRef]

- Roldao, L.; de Charette, R.; Verroust-Blondet, A. 3D Semantic Scene Completion: A Survey. Int. J. Comput. Vis. 2022, 130, 1978–2005. [Google Scholar] [CrossRef]

- Zhang, Y.; Liu, Z.; Li, X.; Zang, Y. Data-driven point cloud objects completion. Sensors 2019, 19, 1514. [Google Scholar] [CrossRef]

- Czerniawski, T.; Ma, J.W.; Leite, F. Automated building change detection with amodal completion of point clouds. Autom. Constr. 2021, 124, 103568. [Google Scholar] [CrossRef]

- Singer, N.; Asari, V.K. View-Agnostic Point Cloud Generation for Occlusion Reduction in Aerial Lidar. Remote Sens. 2022, 14, 2955. [Google Scholar] [CrossRef]

- Poux, F.; Billen, R. Voxel-Based 3D Point Cloud Semantic Segmentation : Unsupervised Geometric and Relationship Featuring vs Deep Learning Methods. ISPRS Int. J. Geo-Inf. 2019, 8, 213. [Google Scholar] [CrossRef]

- Wang, J.; Gao, F.; Dong, J.; Zhang, S.; Du, Q. Change Detection From Synthetic Aperture Radar Images via Graph-Based Knowledge Supplement Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1823–1836. [Google Scholar] [CrossRef]

- Khelifi, L.; Mignotte, M. Deep Learning for Change Detection in Remote Sensing Images: Comprehensive Review and Meta-Analysis. IEEE Access 2020, 8, 126385–126400. [Google Scholar] [CrossRef]

- Shafique, A.; Cao, G.; Khan, Z.; Asad, M.; Aslam, M. Deep Learning-Based Change Detection in Remote Sensing Images: A Review. Remote Sens. 2022, 14, 871. [Google Scholar] [CrossRef]

- Chen, H.; Qi, Z.; Shi, Z. Remote Sensing Image Change Detection with Transformers. In IEEE Transactions on Geoscience and Remote Sensing; IEEE: Piscatway, NJ, USA, 2022; Volume 60. [Google Scholar] [CrossRef]

- Shen, L.; Lu, Y.; Chen, H.; Wei, H.; Xie, D.; Yue, J.; Chen, R.; Lv, S.; Jiang, B. S2looking: A satellite side-looking dataset for building change detection. Remote Sens. 2021, 13, 5094. [Google Scholar] [CrossRef]

- Tian, S.; Ma, A.; Zheng, Z.; Zhong, Y. Hi-UCD: A Large-scale Dataset for Urban Semantic Change Detection in Remote Sensing Imagery. ML4D Workshop at Conference on Neural Information Processing Systems (NeurIPS 2020), Vancouver, Canada. arXiv 2020, arXiv:2011.03247. [Google Scholar]

- Yang, K.; Xia, G.-S.; Liu, Z.; Du, B.; Yang, W.; Pelillo, M.; Zhang, L. Semantic Change Detection with Asymmetric Siamese Networks. arXiv 2020, arXiv:2010.05687. [Google Scholar]

- Peng, D.; Bruzzone, L.; Zhang, Y.; Guan, H.; DIng, H.; Huang, X. SemiCDNet: A Semisupervised Convolutional Neural Network for Change Detection in High Resolution Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5891–5906. [Google Scholar] [CrossRef]

- Chen, H.; Shi, Z. A spatial-temporal attention-based method and a new dataset for remote sensing image change detection. Remote Sens. 2020, 12, 1662. [Google Scholar] [CrossRef]

- Caye Daudt, R.; Le Saux, B.; Boulch, A.; Gousseau, Y. HRSCD-High Resolution Semantic Change Detection Dataset. Available online: https://ieee-dataport.org/open-access/hrscd-high-resolution-semantic-change-detection-dataset (accessed on 27 August 2022). [CrossRef]

- Růžička, V.; D’Aronco, S.; Wegner, J.D.; Schindler, K. Deep active learning in remote sensing for data efficient change detection. arXiv 2020, arXiv:2008.11201. [Google Scholar]

- Nafisa, M.; Babikir, M. Change Detection Techniques using Optical Remote Sensing: A Survey. Am. Sci. Res. J. Eng. Technol. Sci. 2016, 17, 42–51. [Google Scholar]

- Zhu, Z. Change detection using landsat time series: A review of frequencies, preprocessing, algorithms, and applications. ISPRS J. Photogramm. Remote Sens. 2017, 130, 370–384. [Google Scholar] [CrossRef]

- Carrilho, A.C.; Galo, M.; Dos Santos, R.C. Statistical outlier detection method for airborne LiDAR data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.-ISPRS Arch. 2018, 42, 87–92. [Google Scholar] [CrossRef]

- Hu, C.; Pan, Z.; Li, P. A 3D point cloud filtering method for leaves based on manifold distance and normal estimation. Remote Sens. 2019, 11, 198. [Google Scholar] [CrossRef]

- Gao, R.; Li, M.; Yang, S.J.; Cho, K. Reflective Noise Filtering of Large-Scale Point Cloud Using Transformer. Remote Sens. 2022, 14, 577. [Google Scholar] [CrossRef]

- Cai, S.; Zhang, W.; Liang, X.; Wan, P.; Qi, J.; Yu, S. Filtering Airborne LiDAR Data Through Complementary Cloth Simulation and Progressive TIN Densification Filters. Remote Sens. 2019, 11, 1037. [Google Scholar] [CrossRef]

- Li, Y.; Wu, H.; Xu, H.; An, R.; Xu, J.; He, Q. A gradient-constrained morphological filtering algorithm for airborne LiDAR. Opt. Laser Technol. 2013, 54, 288–296. [Google Scholar] [CrossRef]

- Chen, Q.; Gong, P.; Baldocchi, D.; Xie, G. Filtering airborne laser scanning data with morphological methods. Photogramm. Eng. Remote Sens. 2007, 73, 175–185. [Google Scholar] [CrossRef]

- Hui, Z.; Hu, Y.; Yevenyo, Y.Z.; Yu, X. An improved morphological algorithm for filtering airborne LiDAR point cloud based on multi-level kriging interpolation. Remote Sens. 2016, 8, 35. [Google Scholar] [CrossRef]

- Pingel, T.J.; Clarke, K.C.; McBride, W.A. An improved simple morphological filter for the terrain classification of airborne LIDAR data. ISPRS J. Photogramm. Remote Sens. 2013, 77, 21–30. [Google Scholar] [CrossRef]

- Hu, H.; Ding, Y.; Zhu, Q.; Wu, B.; Lin, H.; Du, Z.; Zhang, Y.; Zhang, Y. An adaptive surface filter for airborne laser scanning point clouds by means of regularization and bending energy. ISPRS J. Photogramm. Remote Sens. 2014, 92, 98–111. [Google Scholar] [CrossRef]

- Chen, Q.; Wang, H.; Zhang, H.; Sun, M.; Liu, X. A point cloud filtering approach to generating DTMs for steep mountainous areas and adjacent residential areas. Remote Sens. 2016, 8, 71. [Google Scholar] [CrossRef]

- Mongus, D.; Žalik, B. Parameter-free ground filtering of LiDAR data for automatic DTM generation. ISPRS J. Photogramm. Remote Sens. 2012, 67, 1–12. [Google Scholar] [CrossRef]

- Vosselman, G. Slope based filtering of laser altimetry data. Int. Arch. Photogramm. Remote Sens. 2000, 33, 678–684. [Google Scholar] [CrossRef]

- Meng, X.; Currit, N.; Zhao, K. Ground filtering algorithms for airborne LiDAR data: A review of critical issues. Remote Sens. 2010, 2, 833–860. [Google Scholar] [CrossRef]

- Lin, X.; Zhang, J. Segmentation-based filtering of airborne LiDAR point clouds by progressive densification of terrain segments. Remote Sens. 2014, 6, 1294–1326. [Google Scholar] [CrossRef]

- Richter, R.; Behrens, M.; Döllner, J. Object class segmentation of massive 3D point clouds of urban areas using point cloud topology. Int. J. Remote Sens. 2013, 34, 8408–8424. [Google Scholar] [CrossRef]

- Yan, M.; Blaschke, T.; Liu, Y.; Wu, L. An object-based analysis filtering algorithm for airborne laser scanning. Int. J. Remote Sens. 2012, 33, 7099–7116. [Google Scholar] [CrossRef]

- Sithole, G.; Vosselman, G. Filtering of airborne laser scanner data based on segmented point clouds. Geoinf. Sci. 2005, 36, 66–71. [Google Scholar]

- Bellekens, B.; Spruyt, V.; Weyn, M. A Survey of Rigid 3D Pointcloud Registration Algorithms. In Proceedings of the AMBIENT 2014: The Fourth International Conference on Ambient Computing, Applications, Services and Technologies, Rome, Italy, 24–28 August 2014; pp. 8–13. [Google Scholar]

- Zhang, Z.; Dai, Y.; Sun, J. Deep learning based point cloud registration: An overview. Virtual Real. Intell. Hardw. 2020, 2, 222–246. [Google Scholar] [CrossRef]

- Huang, X.; Mei, G.; Zhang, J.; Abbas, R. A comprehensive survey on point cloud registration. arXiv 2021, arXiv:2103.02690. [Google Scholar]

- Axelsson, P. DEM Generation from Laser Scanner Data Using Adaptive TIN Models. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2000, 33, 110–117. [Google Scholar]

- Matikainen, L.; Hyyppä, J.; Kaartinen, H. Automatic detection of changes from laser scanner and aerial image data for updating building maps. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.-ISPRS Arch. 2004, 35, 434–439. [Google Scholar]

- Vu, T.T.; Matsuoka, M.; Yamazaki, F. LIDAR-based change detection of buildings in dense urban areas. Int. Geosci. Remote Sens. Symp. 2004, 5, 3413–3416. [Google Scholar] [CrossRef]

- Vosselman, G.; Sithole, G.; Systems, S. CHANGE DETECTION FOR UPDATING MEDIUM SCALE MAPS USING LASER. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 34, 207–212. [Google Scholar]

- Choi, K.; Lee, I.; Kim, S. A Feature Based Approach to Automatic Change Detection from Lidar Data in Urban Areas. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2009, 18, 259–264. [Google Scholar]

- Matikainen, L.; Hyyppä, J.; Ahokas, E.; Markelin, L.; Kaartinen, H. Automatic detection of buildings and changes in buildings for updating of maps. Remote Sens. 2010, 2, 1217–1248. [Google Scholar] [CrossRef]

- Stal, C.; Tack, F.; de Maeyer, P.; de Wulf, A.; Goossens, R. Airborne photogrammetry and lidar for DSM extraction and 3D change detection over an urban area-a comparative study. Int. J. Remote Sens. 2013, 34, 1087–1110. [Google Scholar] [CrossRef]

- Malpica, J.A.; Alonso, M.C.; Papí, F.; Arozarena, A.; De Agirrea, A.M. Change detection of buildings from satellite imagery and lidar data. Int. J. Remote Sens. 2013, 34, 1652–1675. [Google Scholar] [CrossRef]

- Teo, T.A.; Shih, T.Y. Lidar-based change detection and change-type determination in urban areas. Int. J. Remote Sens. 2013, 34, 968–981. [Google Scholar] [CrossRef]

- Zhang, X.; Glennie, C. Change detection from differential airborne LiDAR using a weighted anisotropic iterative closest point algorithm. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; IEEE: Piscatway, NJ, USA, 2014; pp. 2162–2165. [Google Scholar]

- Tang, F.; Xiang, Z.; Teng, D.; Hu, B.; Bai, Y. A multilevel change detection method for buildings using laser scanning data and GIS data. In Proceedings of the 2015 IEEE International Conference on Digital Signal Processing (DSP), Singapore, 21–24 July 2015; pp. 1011–1015. [Google Scholar]

- Xu, S.; Vosselman, G.; Oude Elberink, S. Detection and Classification of Changes in Buildings from Airborne Laser Scanning Data. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 2, 343–348. [Google Scholar] [CrossRef]

- Xu, H.; Cheng, L.; Li, M.; Chen, Y.; Zhong, L. Using octrees to detect changes to buildings and trees in the urban environment from airborne liDAR data. Remote Sens. 2015, 7, 9682–9704. [Google Scholar] [CrossRef]

- Du, S.; Zhang, Y.; Qin, R.; Yang, Z.; Zou, Z.; Tang, Y.; Fan, C. Building change detection using old aerial images and new LiDAR data. Remote Sens. 2016, 8, 1030. [Google Scholar] [CrossRef]

- Matikainen, L.; Hyyppä, J.; Litkey, P. Multispectral airborne laser scanning for automated map updating. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences-ISPRS Archives, Prague, Czech Republic, 12–19 July 2016; Volume 41, pp. 323–330. [Google Scholar]

- Matikainen, L.; Karila, K.; Hyyppä, J.; Litkey, P.; Puttonen, E.; Ahokas, E. Object-based analysis of multispectral airborne laser scanner data for land cover classification and map updating. ISPRS J. Photogramm. Remote Sens. 2017, 128, 298–313. [Google Scholar] [CrossRef]

- Zhao, K.; Suarez, J.C.; Garcia, M.; Hu, T.; Wang, C.; Londo, A. Utility of multitemporal lidar for forest and carbon monitoring: Tree growth, biomass dynamics, and carbon flux. Remote Sens. Environ. 2018, 204, 883–897. [Google Scholar] [CrossRef]

- Marinelli, D.; Paris, C.; Bruzzone, L. A Novel Approach to 3-D Change Detection in Multitemporal LiDAR Data Acquired in Forest Areas. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3030–3046. [Google Scholar] [CrossRef]

- Zhang, Z.; Vosselman, G.; Gerke, M.; Persello, C.; Tuia, D.; Yang, M.Y. Change detection between digital surface models from airborne laser scanning and dense image matching using convolutional neural networks. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 4, 453–460. [Google Scholar] [CrossRef]

- Zhang, Z.; Vosselman, G.; Gerke, M.; Persello, C.; Tuia, D.; Yang, M.Y. Detecting building changes between airborne laser scanning and photogrammetric data. Remote Sens. 2019, 11, 2417. [Google Scholar] [CrossRef]

- Fekete, A.; Cserep, M. Tree segmentation and change detection of large urban areas based on airborne LiDAR. Comput. Geosci. 2021, 156, 104900. [Google Scholar] [CrossRef]

- Huang, R.; Xu, Y.; Hoegner, L.; Stilla, U. Semantics-aided 3D change detection on construction sites using UAV-based photogrammetric point clouds. Autom. Constr. 2021, 134, 104057. [Google Scholar] [CrossRef]

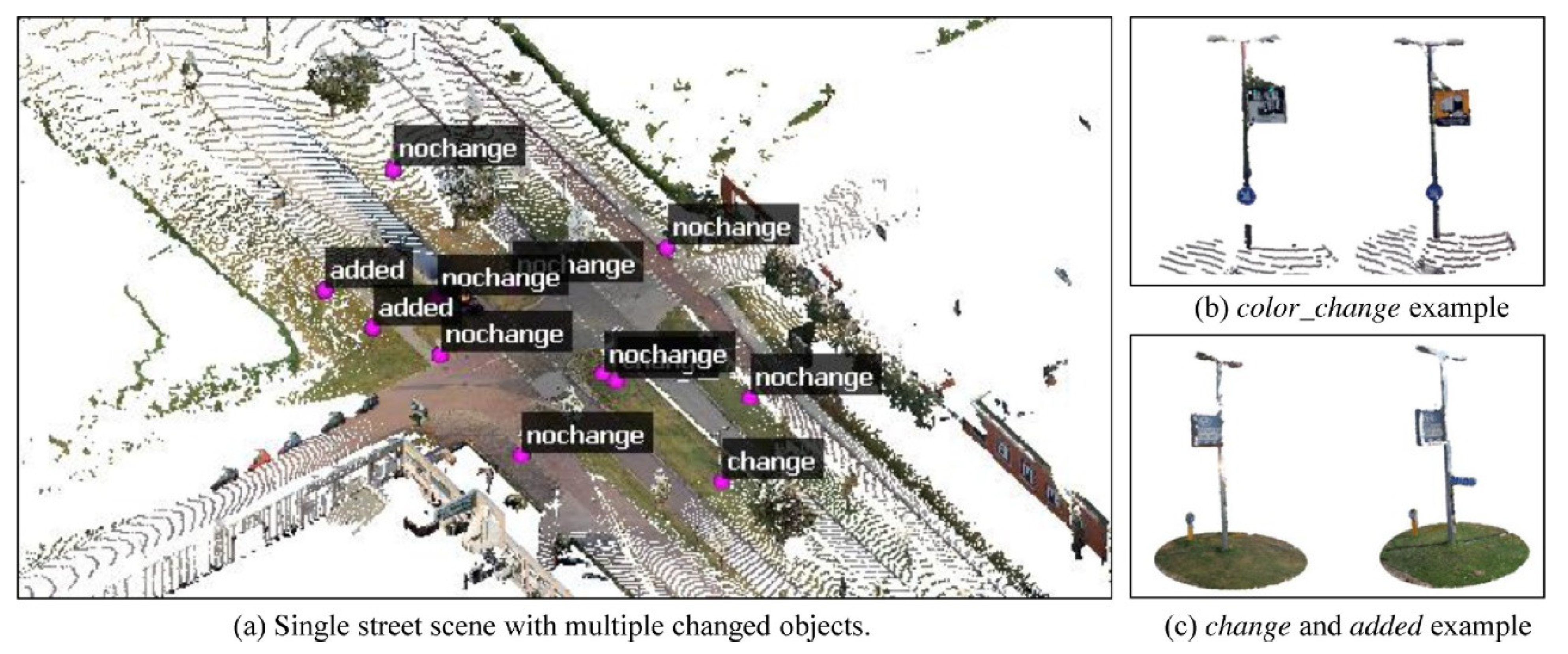

- Ku, T.; Galanakis, S.; Boom, B.; Veltkamp, R.C.; Bangera, D.; Gangisetty, S.; Stagakis, N.; Arvanitis, G.; Moustakas, K. SHREC 2021: 3D Point Cloud Change Detection for Street Scenes; Elsevier: Amsterdam, The Netherlands, 2021; Volume 99. [Google Scholar]

- De Gelis, I.; Lefèvre, S.; Corpetti, T. Détection de changements urbains 3D par un réseau Siamois sur nuage de points. In Proceedings of the ORASIS 2021, Saint Ferréol, France, 13–17 September 2021. [Google Scholar]

- Tran, T.H.G.; Ressl, C.; Pfeifer, N. Integrated change detection and classification in urban areas based on airborne laser scanning point clouds. Sensors (Switzerland) 2018, 18, 448. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Z. Photogrammetric Point Clouds: Quality Assessment, Filtering, and Change Detection. Ph.D. Thesis, University of Twente, Faculty of Geo-Information Science and Earth Observation (ITC), 2022; 170p. [Google Scholar] [CrossRef]

- Williams, R.D. DEMs of Difference. Geomorphol. Technol. 2012, 2, 1–17. [Google Scholar]

- Washington-allen, R. Las2DoD: Change Detection Based on Digital Elevation Models Derived from Dense Point Clouds with Spatially Varied Uncertainty. Remote Sens. 2022, 14, 1537. [Google Scholar]

- Scott, C.P.; Beckley, M.; Phan, M.; Zawacki, E.; Crosby, C.; Nandigam, V.; Arrowsmith, R. Statewide USGS 3DEP Lidar Topographic Differencing Applied to Indiana, USA. Remote Sens. 2022, 14, 847. [Google Scholar] [CrossRef]

- Cignoni, P.; Rocchini, C.; Scopigno, R. Metro: Measuring Error on Simplified Surfaces. Comput. Graph. Forum 1998, 17, 167–174. [Google Scholar] [CrossRef]

- Barnhart, T.B.; Crosby, B.T. Comparing two methods of surface change detection on an evolving thermokarst using high-temporal-frequency terrestrial laser scanning, Selawik River, Alaska. Remote Sens. 2013, 5, 2813–2837. [Google Scholar] [CrossRef]

- Lague, D.; Brodu, N.; Leroux, J. Accurate 3D comparison of complex topography with terrestrial laser scanner: Application to the Rangitikei canyon (N-Z). ISPRS J. Photogramm. Remote Sens. 2013, 82, 10–26. [Google Scholar] [CrossRef]

- Zahs, V.; Winiwarter, L.; Anders, K.; Williams, J.G.; Rutzinger, M.; Höfle, B. Correspondence-driven plane-based M3C2 for lower uncertainty in 3D topographic change quantification. ISPRS J. Photogramm. Remote Sens. 2022, 183, 541–559. [Google Scholar] [CrossRef]

- Wagner, A. A new approach for geo-monitoring using modern total stations and RGB + D images. Meas. J. Int. Meas. Confed. 2016, 82, 64–74. [Google Scholar] [CrossRef]

- Gojcic, Z.; Zhou, C.; Wieser, A. F2S3: Robustified determination of 3D displacement vector fields using deep learning. J. Appl. Geod. 2020, 14, 177–189. [Google Scholar] [CrossRef]

- Gojcic, Z.; Zhou, C.; Wieser, A. Robust point correspondences for point cloud based deformation monitoring of natural structures. In Proceedings of the 4 th Joint International Symposium on Deformation Monitoring (JISDM), Athens, Greece, 15–17 May 2019; pp. 15–17. [Google Scholar]

- Poiesi, F.; Boscaini, D. Distinctive 3D local deep descriptors. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 5720–5727.

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic Graph CNN for Learning on Point Clouds. ACM Trans. Graph. 2019, 38, 146.

- Thomas, H.; Qi, C.R.; Deschaud, J.E.; Marcotegui, B.; Goulette, F.; Guibas, L. KPConv: Flexible and deformable convolution for point clouds. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 6410–6419.

- Krishnan, S.; Crosby, C.; Nandigam, V.; Phan, M.; Cowart, C.; Baru, C.; Arrowsmith, R. OpenTopography: A Services Oriented Architecture for community access to LIDAR topography. In Proceedings of COM.Geo 2011, the 2nd International Conference on Computing for Geospatial Research and Applications, Washington, DC, USA, 23 May 2011.

- Crosby, C.J.; Arrowsmith, R.J.; Nandigam, V.; Baru, C. Online access and processing of LiDAR topography data. In Geoinformatics; Keller, G.R., Baru, C., Eds.; Cambridge University Press: Cambridge, UK, 2011; pp. 251–265. ISBN 9780511976308.

- Scott, C.; Phan, M.; Nandigam, V.; Crosby, C.; Arrowsmith, J.R. Measuring change at Earth’s surface: On-demand vertical and three-dimensional topographic differencing implemented in OpenTopography. Geosphere 2021, 17, 1318–1332.

- Cemellini, B.; van Opstal, W.; Wang, C.-K.; Xenakis, D. Chronocity: Towards an Open Point Cloud Map Supporting on-the-Fly Change Detection. Master’s Thesis, Delft University of Technology, Delft, The Netherlands, 2017. Available online: http://resolver.tudelft.nl/uuid:4088debb-bc22-48d3-9085-a847aa3d3c92 (accessed on 27 August 2022).

- Hebel, M.; Arens, M.; Stilla, U. Change detection in urban areas by object-based analysis and on-the-fly comparison of multi-view ALS data. ISPRS J. Photogramm. Remote Sens. 2013, 86, 52–64.

- Vos, S.; Lindenbergh, R.; de Vries, S. CoastScan: Continuous Monitoring of Coastal Change Using Terrestrial Laser Scanning. Coast. Dyn. 2017, 2, 1518–1528.

- Anders, K.; Winiwarter, L.; Mara, H.; Lindenbergh, R.; Vos, S.E.; Höfle, B. Fully automatic spatiotemporal segmentation of 3D LiDAR time series for the extraction of natural surface changes. ISPRS J. Photogramm. Remote Sens. 2021, 173, 297–308.

- Fehr, M.; Furrer, F.; Dryanovski, I.; Sturm, J.; Gilitschenski, I.; Siegwart, R.; Cadena, C. TSDF-based change detection for consistent long-term dense reconstruction and dynamic object discovery. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 5237–5244.

- Vos, S.; Anders, K.; Kuschnerus, M.; Lindenbergh, R.; Höfle, B.; Aarninkhof, S.; De Vries, S. A high-resolution 4D terrestrial laser scan dataset of the Kijkduin. Sci. Data 2022, 9, 1–11.

- Zahs, V.; Winiwarter, L.; Anders, K.; Williams, J.G.; Rutzinger, M.; Bremer, M.; Höfle, B. Correspondence-Driven Plane-Based M3C2 for Quantification of 3D Topographic Change with Lower Uncertainty [Data and Source Code]; heiDATA: Heidelberg, Germany, 2021.

- Anders, K.; Winiwarter, L.; Höfle, B. Improving Change Analysis from Near-Continuous 3D Time Series by Considering Full Temporal Information. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Gehrung, J.; Hebel, M.; Arens, M.; Stilla, U. An approach to extract moving objects from MLS data using a volumetric background representation. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 107–114.

- Zhu, J.; Gehrung, J.; Huang, R.; Borgmann, B.; Sun, Z.; Hoegner, L.; Hebel, M.; Xu, Y.; Stilla, U. TUM-MLS-2016: An annotated mobile LiDAR dataset of the TUM city campus for semantic point cloud interpretation in urban areas. Remote Sens. 2020, 12, 1875.

- De Gélis, I.; Lefèvre, S.; Corpetti, T. Urb3DCD: Urban Point Clouds Simulated Dataset for 3D Change Detection. Remote Sens. 2021, 13, 2629.

- Palazzolo, E. Fast Image-Based Geometric Change Detection in a 3D Model. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 6308–6315.

- Winiwarter, L.; Anders, K.; Höfle, B. M3C2-EP: Pushing the limits of 3D topographic point cloud change detection by error propagation. ISPRS J. Photogramm. Remote Sens. 2021, 178, 240–258.

- Mayr, A.; Bremer, M.; Rutzinger, M. 3D point errors and change detection accuracy of unmanned aerial vehicle laser scanning data. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, V, 765–772.

- Winiwarter, L.; Anders, K.; Wujanz, D.; Höfle, B. Influence of Ranging Uncertainty of Terrestrial Laser Scanning on Change Detection in Topographic 3D Point Clouds. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 5, 789–796.

- Tan, C.; Sun, F.; Kong, T.; Zhang, W.; Yang, C.; Liu, C. A survey on deep transfer learning. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2018; Volume 11141, pp. 270–279.

- Chen, Y.; Hu, V.T.; Gavves, E.; Mensink, T.; Mettes, P.; Yang, P.; Snoek, C.G.M. PointMixup: Augmentation for Point Clouds. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2020; Volume 12348, pp. 330–345.

- Šebek, P.; Pokorný, Š.; Vacek, P.; Svoboda, T. Real3D-Aug: Point Cloud Augmentation by Placing Real Objects with Occlusion Handling for 3D Detection and Segmentation. arXiv 2022, arXiv:2206.07634.

- Nagy, B.; Kovacs, L.; Benedek, C. ChangeGAN: A Deep Network for Change Detection in Coarsely Registered Point Clouds. IEEE Robot. Autom. Lett. 2021, 6, 8277–8284.

- Li, C.L.; Zaheer, M.; Zhang, Y.; Póczos, B.; Salakhutdinov, R. Point cloud GAN. arXiv 2019, arXiv:1810.05795.

- Yuan, W.; Yuan, X.; Fan, Z.; Guo, Z.; Shi, X.; Gong, J.; Shibasaki, R. Graph neural network based multi-feature fusion for building change detection. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.-ISPRS Arch. 2021, 43, 377–382.

- Wu, J.; Li, B.; Qin, Y.; Ni, W.; Zhang, H.; Fu, R.; Sun, Y. A multiscale graph convolutional network for change detection in homogeneous and heterogeneous remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102615.

| | 2D CD | 3D CD |
|---|---|---|
| Data source | Optical images, multispectral images, RADAR images [66], digital surface, terrain, and canopy models, and 2D vector data. | Point clouds, InSAR (Interferometric SAR), digital surface models, stereo and multi-view images, 3D models, building information models, and RGB-D images. |
| Advantages | Well investigated [7,67,68,69], available datasets [70,71,72,73,74,75], available implementations [68,74,76]. | Includes the height component, robust to illumination differences, free of perspective effects, and provides volumetric differences. |
| Disadvantages | Strongly affected by illumination and atmospheric conditions. Limited by viewpoint and perspective distortions. | Unreliable 3D information may result in artifacts. Limited data availability. Expensive processing. |

| Authors | Year | Input Data: LiDAR | Input Data: Image | Input Data: Maps | Change Detection Approach | Change Detection Class |
|---|---|---|---|---|---|---|
| Matikainen et al. [100] | 2004 | X | Ortho | X | Post-classification | Building |
| Vu et al. [101] | 2004 | X | | | Pre-classification, DSM-based | Building |
| Vosselman et al. [102] | 2004 | X | | X | Post-classification | Building |
| Choi et al. [103] | 2009 | X | | | Post-classification | Ground, vegetation, building |
| Matikainen et al. [104] | 2010 | X | Ortho | X | Post-classification | Building |
| Stal et al. [105] | 2013 | X | Ortho | | Post-classification | Building |
| Malpica et al. [106] | 2013 | X | Original | | Post-classification | Building |
| Teo et al. [107] | 2013 | X | | | Post-classification, DSM-based | Building |
| Pang et al. [56] | 2014 | X | | | Pre-classification, DSM-based | Building |
| Zhang et al. [108] | 2014 | X | | | Pre-classification | Ground |
| Tang et al. [109] | 2015 | X | | X | Post-classification | Building |
| Awrangjeb et al. [26] | 2015 | X | | X | Post-classification | Building |
| Xu et al. [57,110] | 2013, 2015 | X | | | Post-classification | Building |
| Xu et al. [57,111] | 2015 | X | | | Pre-classification | Building, tree |
| Du et al. [112] | 2016 | X | Original | | Pre-classification | Building |
| Matikainen et al. [113] | 2016 | X | Ortho | X | Post-classification | Building |
| Matikainen et al. [114] | 2017 | X | Ortho | X | Post-classification | Building, roads |
| Kaiguang et al. [115] | 2018 | X | | | Post-classification | Forest |
| Marinelli et al. [116] | 2018 | X | | | Post-classification | Forest |
| Zhang et al. [117] | 2019 | X | Ortho | | Integrated | Building |
| Zhang et al. [118] | 2019 | X | Ortho | | Integrated | Building |
| Yrttimaa et al. [22] | 2020 | X | | | Post-classification | Forest |
| Fekete et al. [119] | 2021 | X | | | Post-classification, DSM-based | Tree |
| Huang et al. [120] | 2021 | X | Original | | Post-classification | Building |
| Ku et al. [121] | 2021 | X | | | Integrated | Building, street, tree |
| De Gélis et al. [122] | 2021 | X | | | Integrated | Building |
| Tran et al. [123] | 2021 | X | | | Integrated | Ground, vegetation, building |
| Zhang [124] | 2022 | X | | | Integrated | Building |
| Dai et al. [36] | 2022 | X | | | Integrated | Building |

| Dataset | Class Label | Change Label | Years | Reference |

|---|---|---|---|---|

| OpenTopography | | | Multiple years | [138,139] |

| AHN1, AHN2, AHN3, AHN4 | X | Multiple years | [141] | |

| Abenberg—ALS test dataset | X | 2008–2009 | [142] | |

| 4D objects by changes | X | 2017 | [143,144] | |

| ICRA 2017—Change Detection Datasets | | | 2017 | [145] |

| PLS dataset of Kijkduin beach-dune | | | 2016–2017 | [146] |

| CG-PB-M3C2 | | | 2019 | [147] |

| Near-continuous 3D time series | | | | [148] |

| Change3D Benchmark | X | 2016–2020 | [121] | |

| TUM City Campus—MLS test dataset | X | 2009–2016–2018 | [150] | |

| Urb3DCD | X | X | | [122] |

| The 2017 Change Detection Dataset | X | 2017 | [152] |

| Detected \ Reference | Changed | Not changed |
|---|---|---|
| Changed | TP | FP |
| Not changed | FN | TN |
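
As an illustration of how the four cells of the confusion matrix can be filled, the following minimal sketch counts TP, FP, FN and TN from two per-unit binary label sequences (for example one flag per point or per voxel). The function name `confusion_counts` and the toy labels are assumptions made for this example; they do not come from any of the reviewed methods or datasets.

```python
from typing import Sequence, Tuple


def confusion_counts(
    detected: Sequence[bool], reference: Sequence[bool]
) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) for binary change labels.

    detected[i]  is True when unit i was detected as changed.
    reference[i] is True when unit i is changed in the reference data.
    """
    if len(detected) != len(reference):
        raise ValueError("detected and reference must have the same length")

    tp = fp = fn = tn = 0
    for d, r in zip(detected, reference):
        if d and r:
            tp += 1  # detected changed, reference changed
        elif d and not r:
            fp += 1  # detected changed, reference not changed
        elif not d and r:
            fn += 1  # detected not changed, reference changed
        else:
            tn += 1  # detected not changed, reference not changed
    return tp, fp, fn, tn


# Hypothetical toy labels for six units.
detected = [True, True, False, False, True, False]
reference = [True, False, True, False, True, False]
counts = confusion_counts(detected, reference)
print(counts)
```

For multi-class change labels, the same counting would be repeated per class in a one-versus-rest fashion.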

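| Metric | Description | Equation |
|---|---|---|
| Overall accuracy | Fraction of all units (changed and unchanged) that are labeled correctly. | $OA = \frac{TP + TN}{TP + TN + FP + FN}$ |
| Precision | Fraction of detected changes that are changed in the reference. | $Precision = \frac{TP}{TP + FP}$ |
| Recall | Fraction of reference changes that are correctly detected. | $Recall = \frac{TP}{TP + FN}$ |
| F1 score | Harmonic mean of precision and recall. | $F1 = \frac{2 \cdot Precision \cdot Recall}{Precision + Recall}$ |
| Intersection over union | Also called the Jaccard index: overlap between detected and reference changes divided by their union. | $IoU = \frac{TP}{TP + FP + FN}$ |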
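
The metrics in the table above can be computed directly from the confusion matrix counts. The sketch below uses the standard definitions of these metrics. The function name `change_detection_metrics`, the choice to return 0.0 when a denominator is zero, and the example counts are assumptions made for illustration, not values reported in the reviewed literature.

```python
from typing import Dict


def _safe_div(numerator: float, denominator: float) -> float:
    """Divide, returning 0.0 for an empty denominator (an assumed convention)."""
    return numerator / denominator if denominator else 0.0


def change_detection_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Compute the usual binary change detection metrics from TP, FP, FN, TN."""
    precision = _safe_div(tp, tp + fp)
    recall = _safe_div(tp, tp + fn)
    return {
        "overall_accuracy": _safe_div(tp + tn, tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        # Harmonic mean of precision and recall.
        "f1": _safe_div(2 * precision * recall, precision + recall),
        # Jaccard index of the "changed" class.
        "iou": _safe_div(tp, tp + fp + fn),
    }


# Hypothetical counts: 1000 units, 90 of them changed in the reference.
m = change_detection_metrics(tp=80, fp=20, fn=10, tn=890)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

With these hypothetical counts, the overall accuracy is high mainly because unchanged units dominate, while precision, recall, F1 and IoU describe the changed class only. This is why the class-specific metrics are often more informative when changes are rare.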

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kharroubi, A.; Poux, F.; Ballouch, Z.; Hajji, R.; Billen, R. Three Dimensional Change Detection Using Point Clouds: A Review. Geomatics 2022, 2, 457-485. https://doi.org/10.3390/geomatics2040025
