Effective Automated Procedures for Hydrographic Data Review

Abstract

1. Introduction

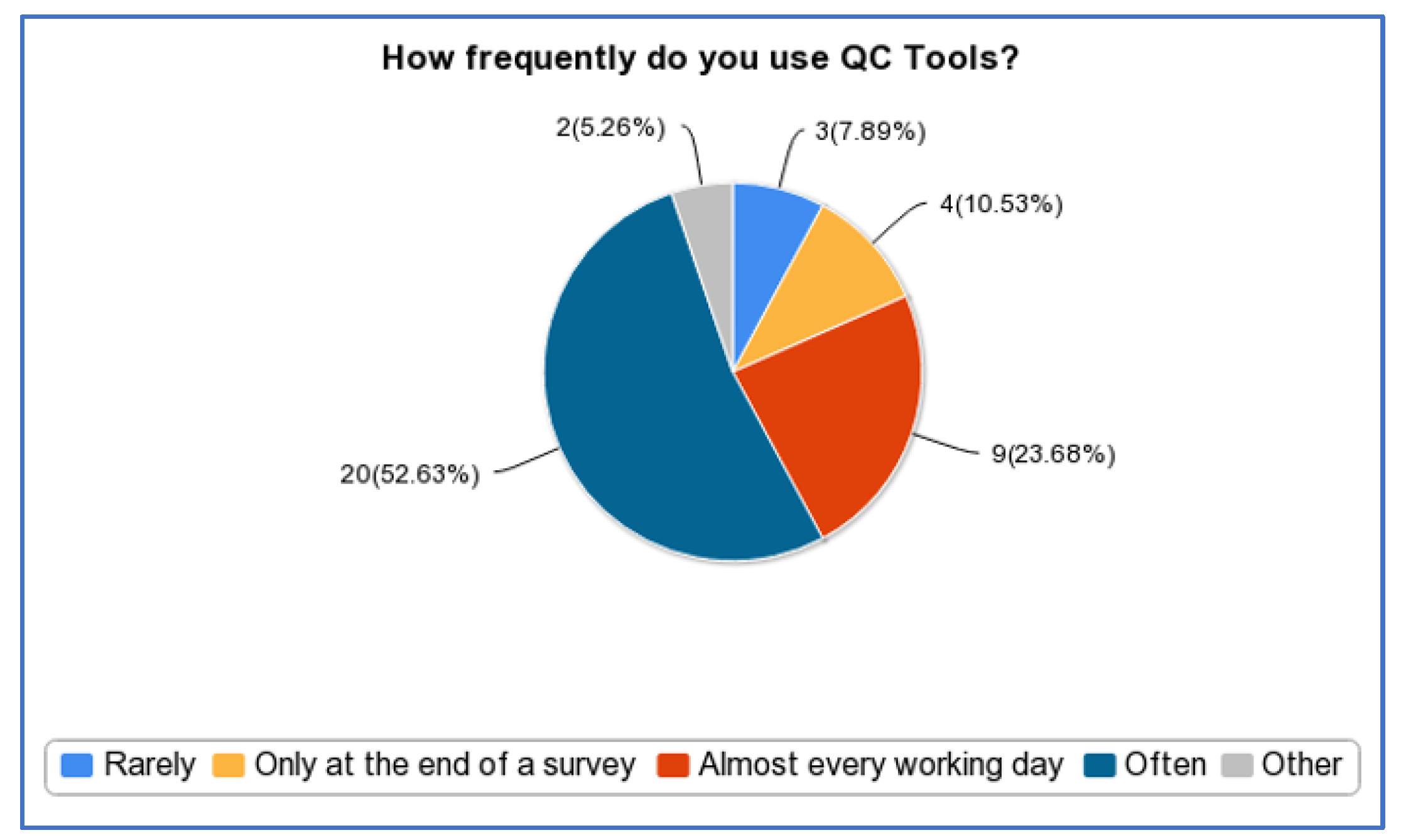

2. Rationale and Design Principles

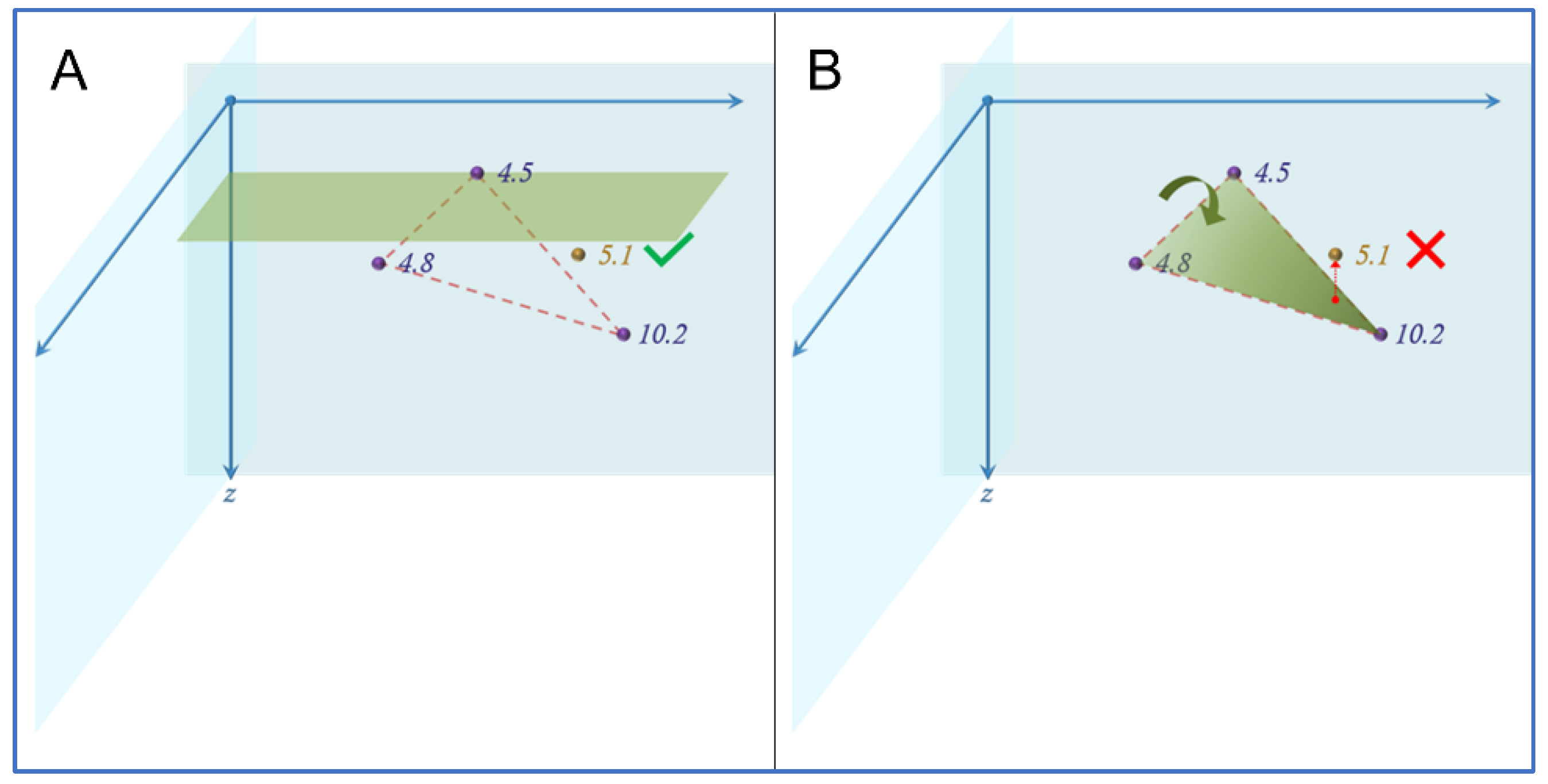

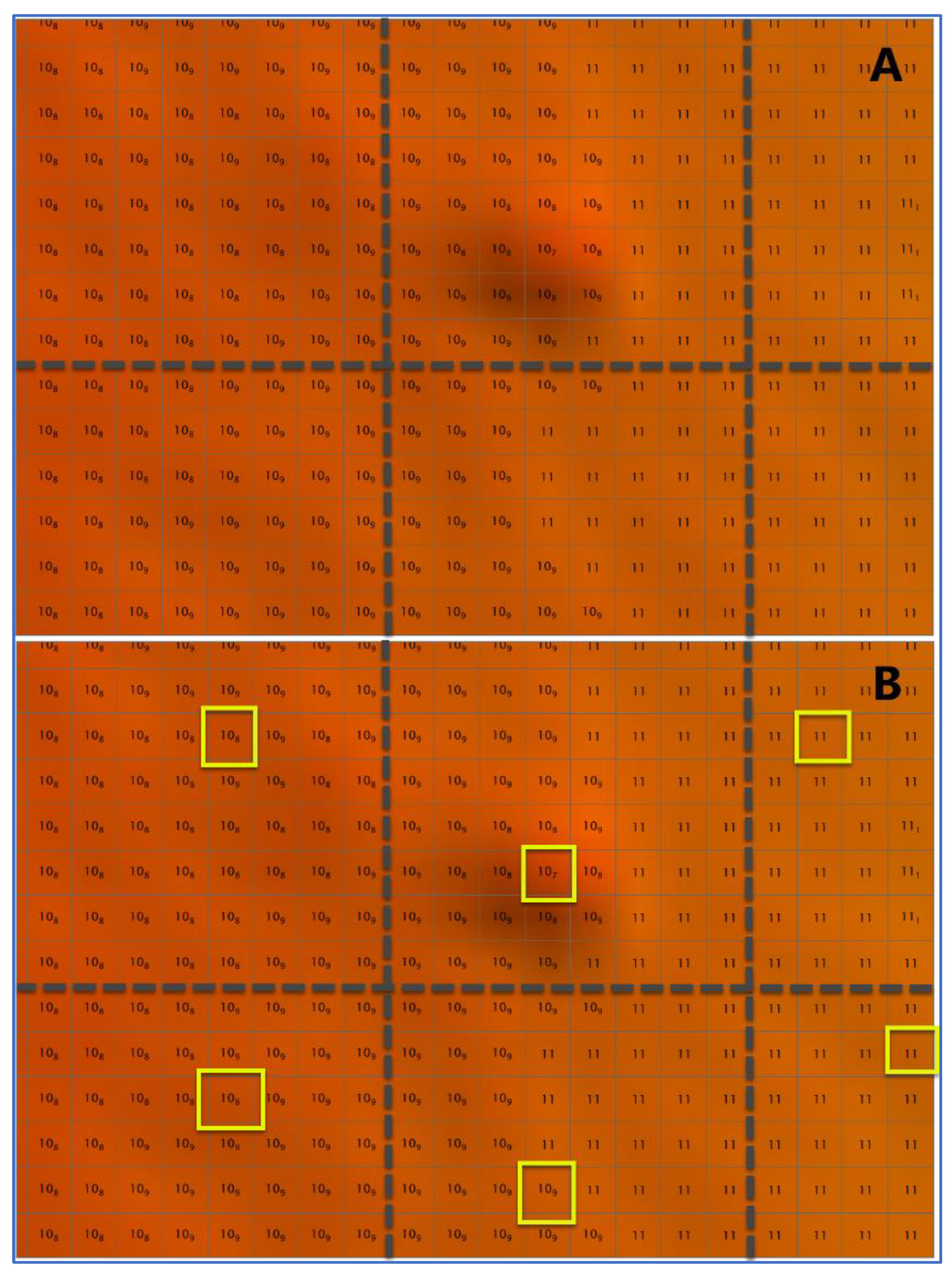

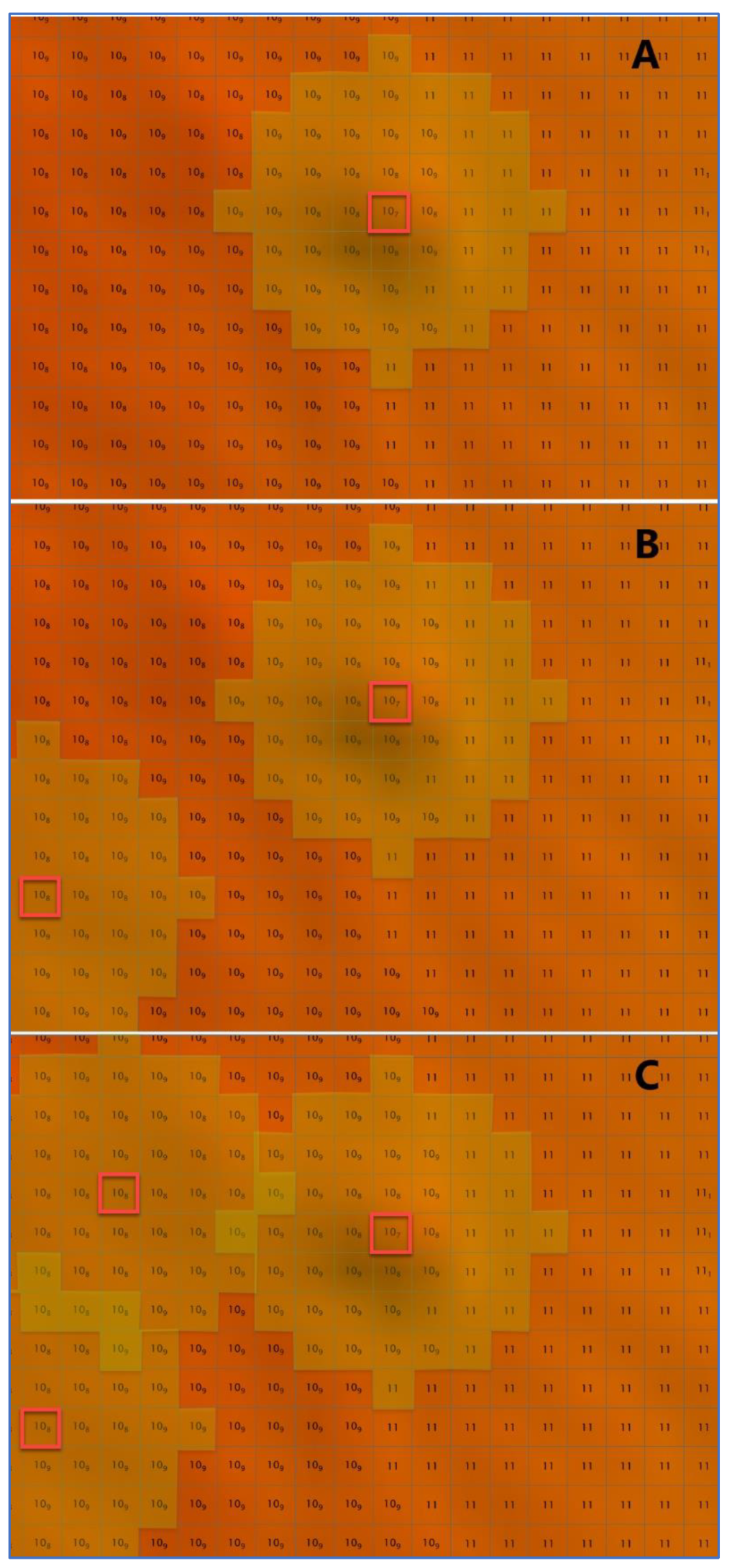

2.1. Grid Quality Control

2.2. Significant Features Validation

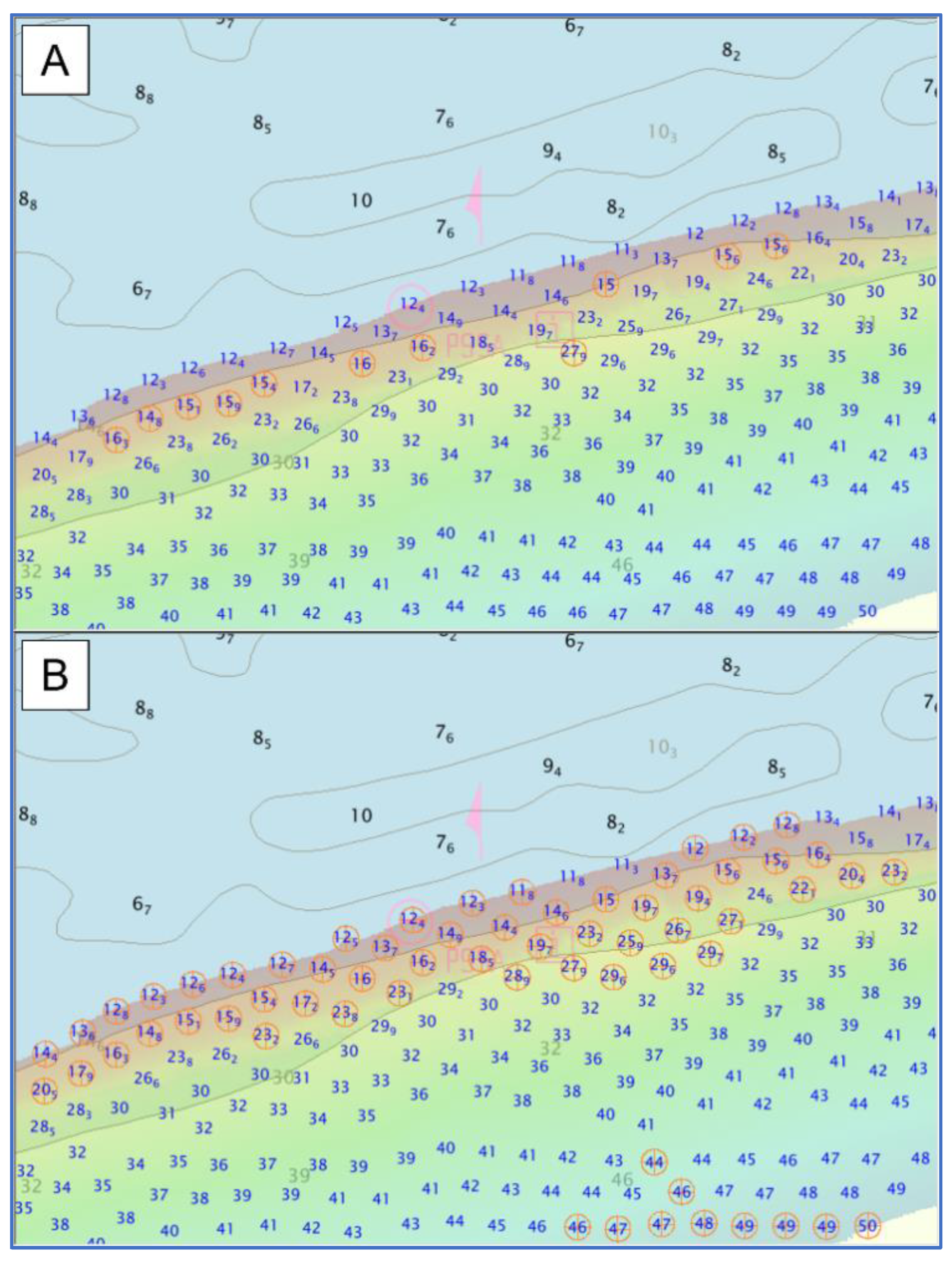

2.3. Survey Soundings and Chart Adequacy

3. Implementation and Results

- Several scripts that can be used as a foundation to create new, custom algorithms.

- A command line interface useful to integrate some of the algorithms in the processing pipeline.

- An application with a graphical user interface (the app).

3.1. QC Tools

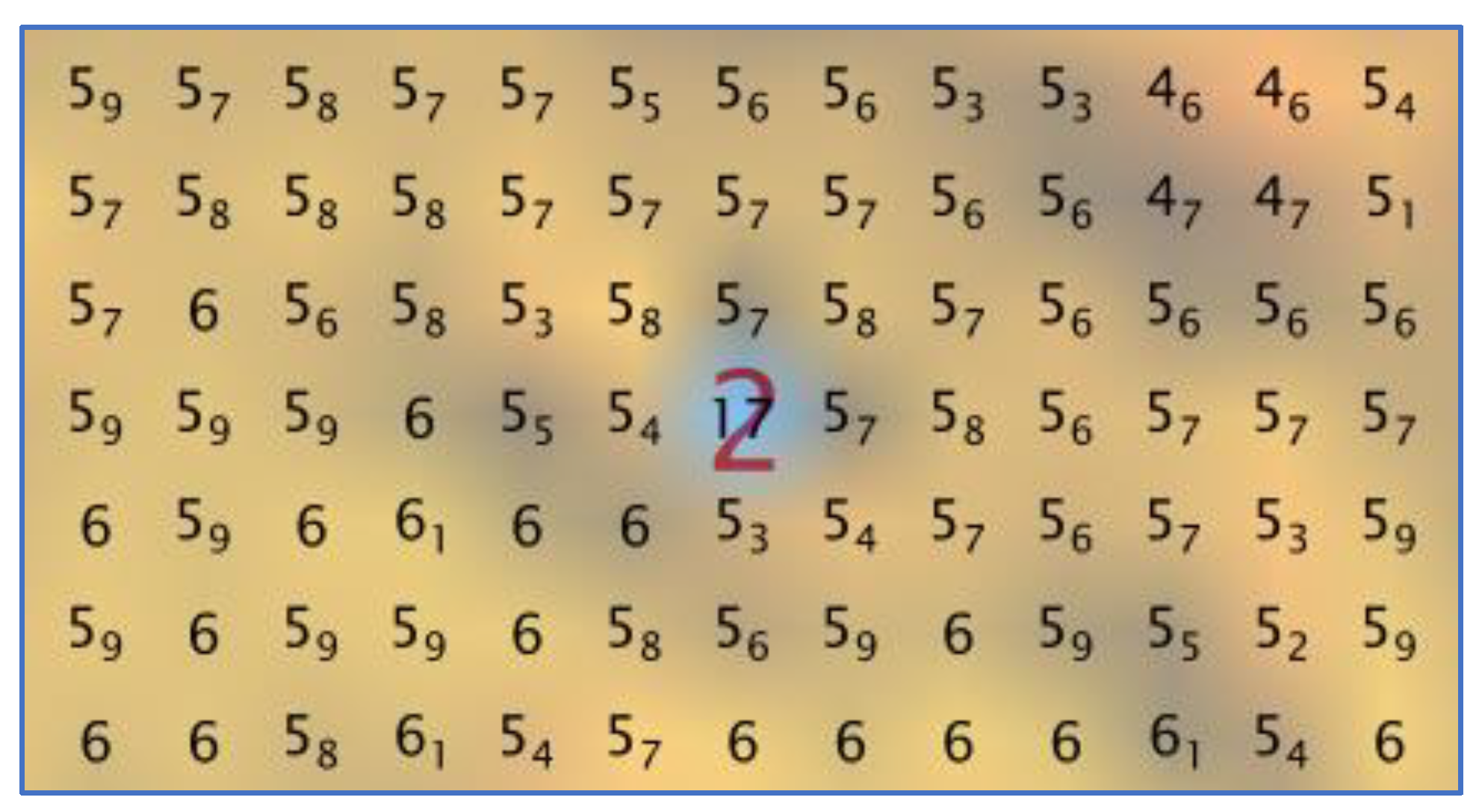

- Detect candidate fliers and significant holidays in gridded bathymetry.

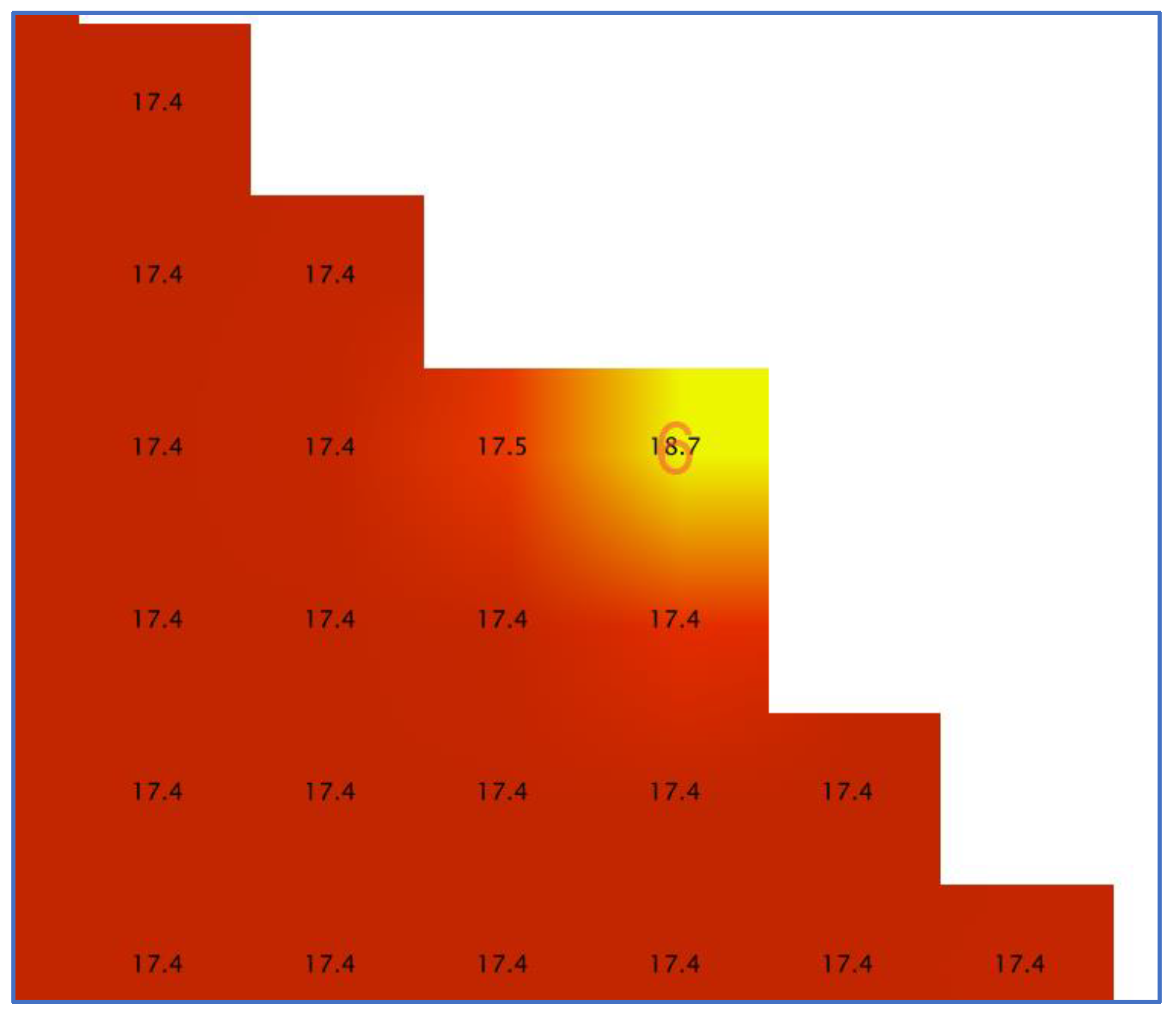

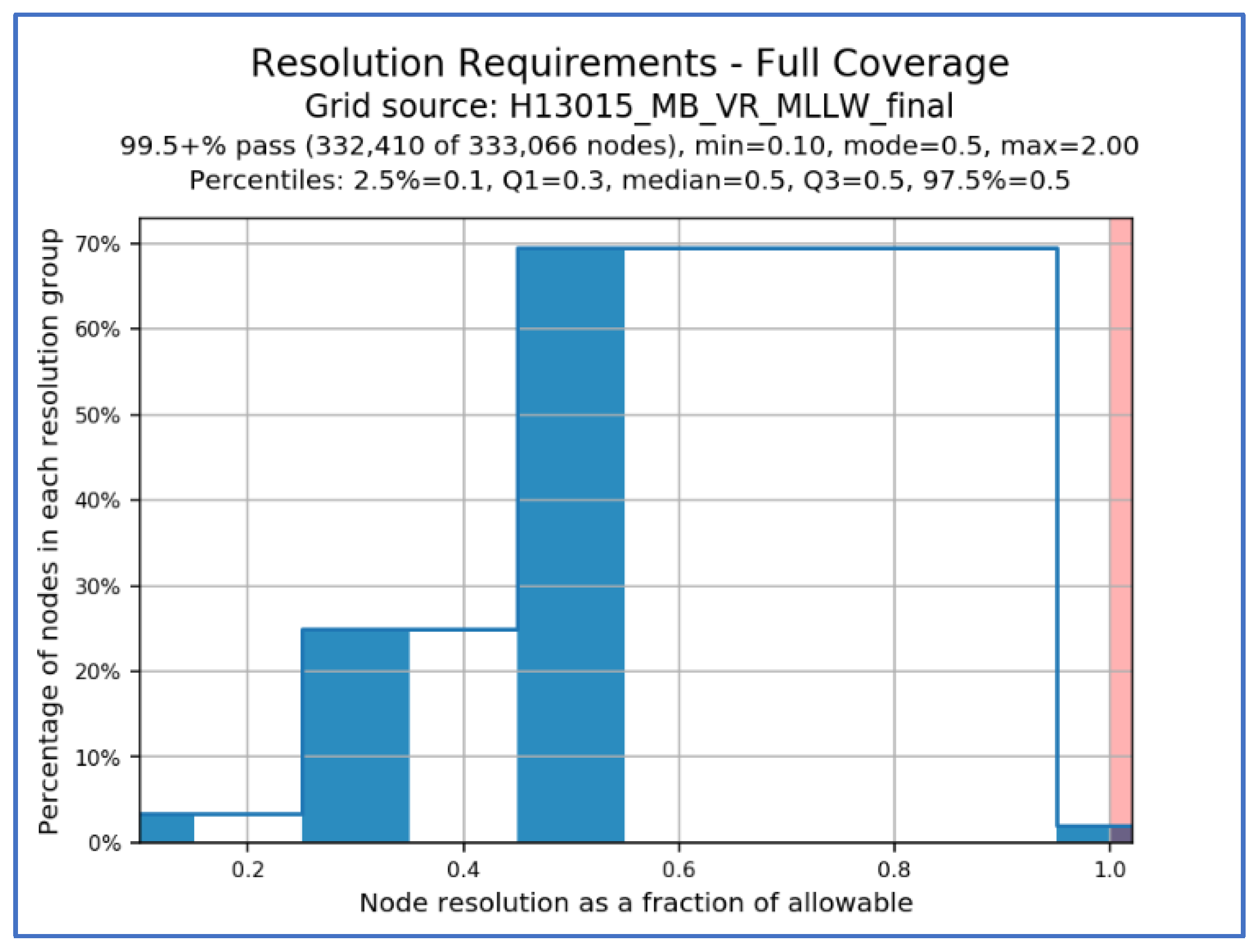

- Ensure that gridded bathymetry fulfills statistical requirements (e.g., sounding density and uncertainty).

- Check the validity of BAG files containing gridded bathymetry.

- Scan selected designated soundings to ensure their significance.

- Validate the attributes of significant features.

- Ensure consistency between grids and significant features.

- Extract seabed area characteristics for public distribution.

- Analyze the folder structure of a survey dataset for proper archival.
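As an illustration of the statistical check listed above (sounding density), the following minimal sketch computes the fraction of populated grid nodes that contain at least a minimum number of soundings, and compares it with a target fraction. The function names, the in-memory grid layout (`None` for empty nodes), and the default thresholds (5 soundings per node, 95% of nodes) are assumptions made for this example; they are not taken from the QC Tools code.

```python
def density_pass_rate(density_grid, min_soundings=5):
    """Fraction of populated nodes holding at least `min_soundings` soundings.

    `density_grid` is a list of rows; each node is a sounding count,
    or None when the node is empty (hypothetical layout for this sketch).
    """
    counts = [n for row in density_grid for n in row if n is not None]
    if not counts:
        return 0.0  # no populated nodes: nothing can pass
    passing = sum(1 for n in counts if n >= min_soundings)
    return passing / len(counts)


def meets_density_requirement(density_grid, min_soundings=5, min_fraction=0.95):
    """True when enough populated nodes reach the assumed density threshold."""
    return density_pass_rate(density_grid, min_soundings) >= min_fraction


# Small synthetic density layer: 7 populated nodes, 6 of them with >= 5 soundings.
density = [
    [6, 7, None],
    [5, 3, 9],
    [None, 8, 12],
]
rate = density_pass_rate(density)
ok = meets_density_requirement(density)
```

In this synthetic case one populated node out of seven falls below the assumed minimum, so the grid would not reach a 95% target. An uncertainty check could follow the same pattern, with a per-node allowable uncertainty in place of the sounding count.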

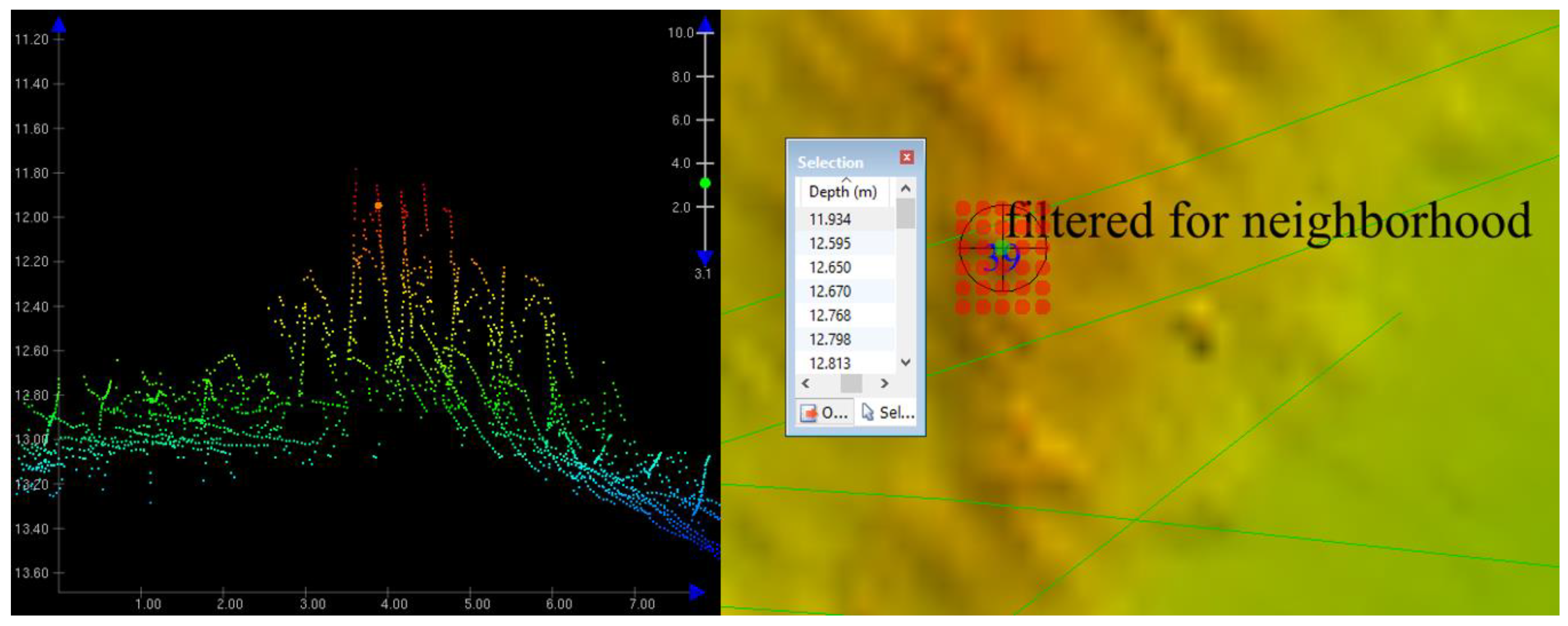

3.1.1. Grid Quality Controls

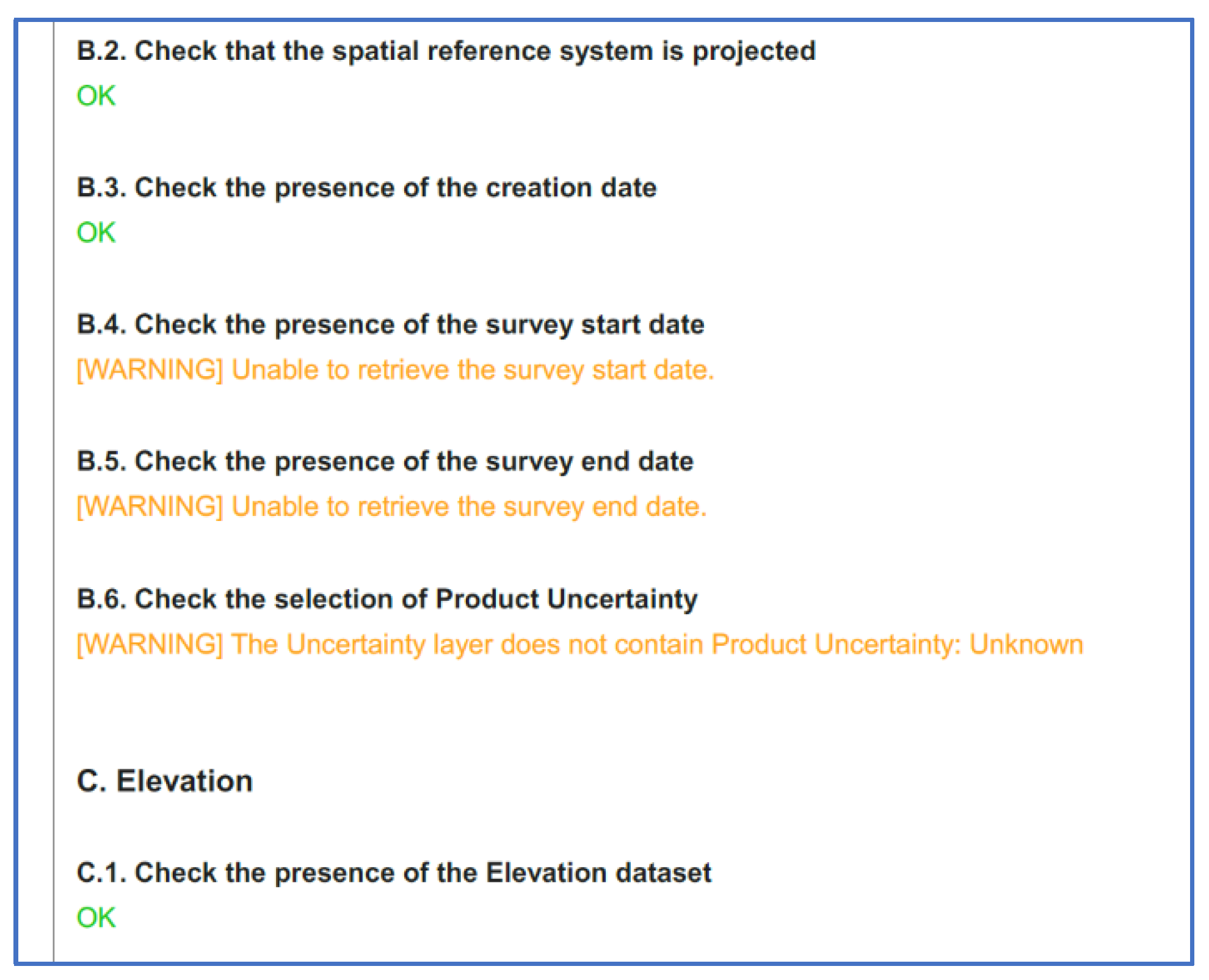

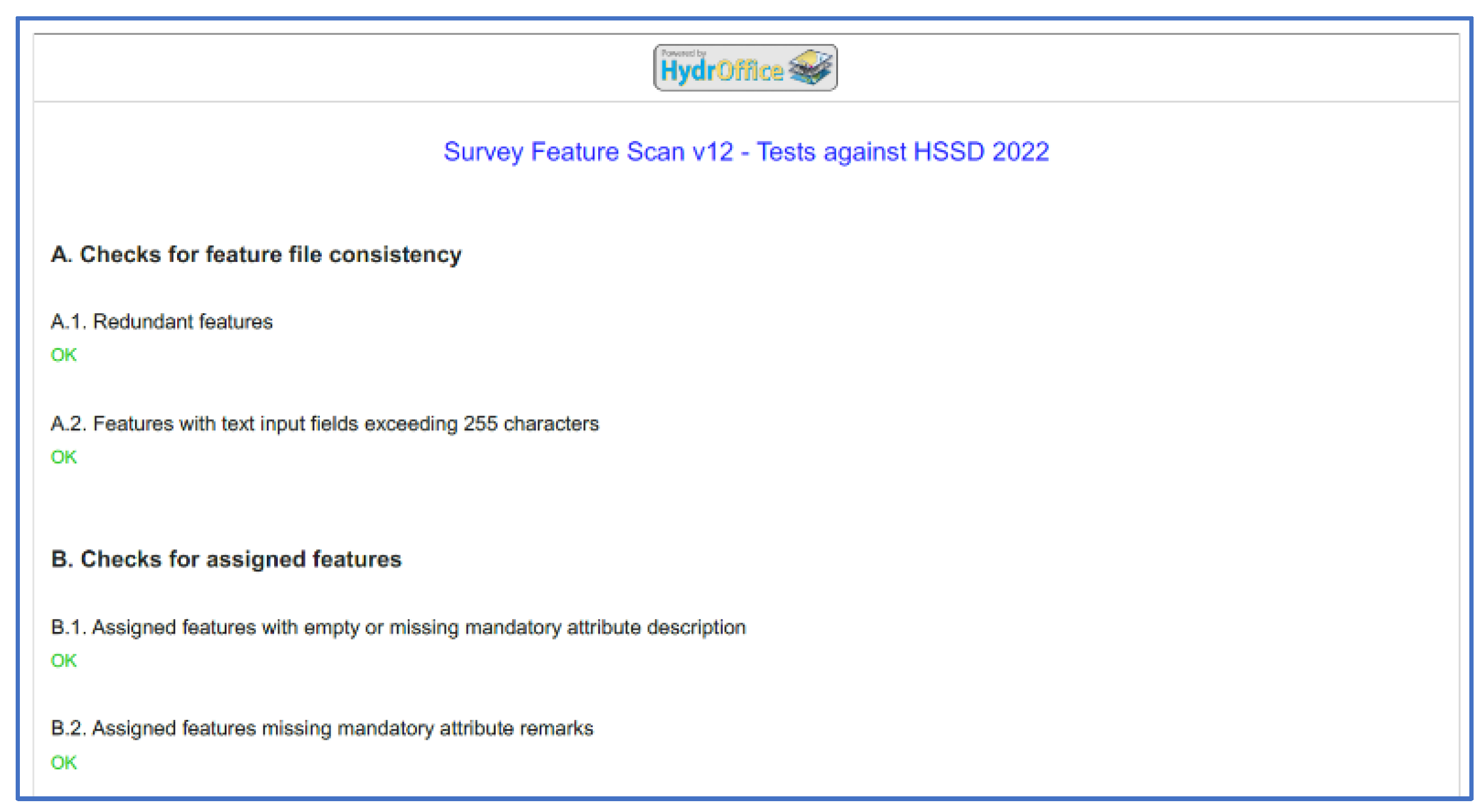

3.1.2. Significant Features Validation

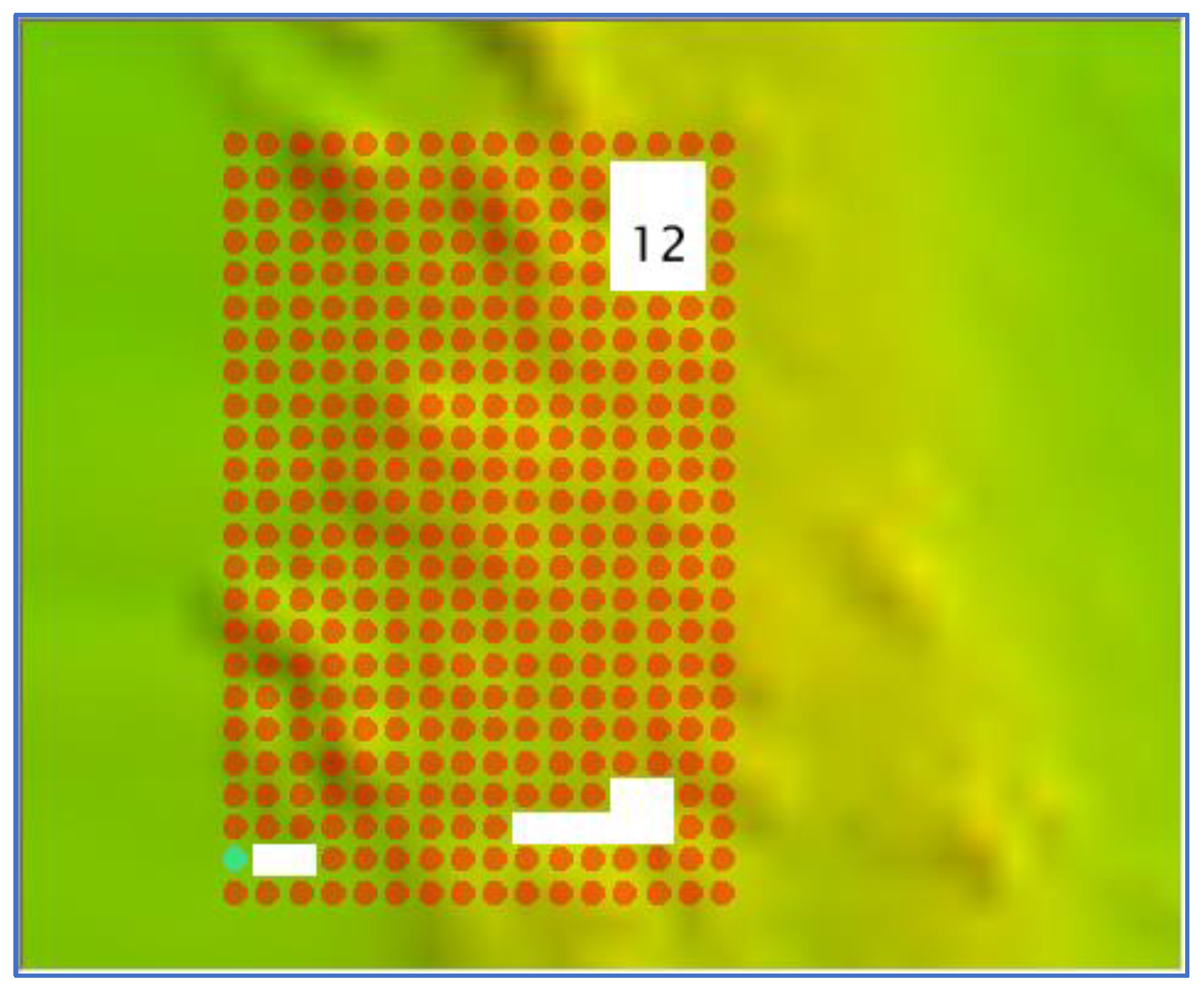

3.2. CA Tools

- Identify chart discrepancies for a bathymetric grid or a set of survey soundings.

- Select a significant set of soundings from a bathymetric grid.
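To make the idea of selecting a significant set of soundings more concrete, the sketch below applies a generic shoal-biased, radius-based thinning: soundings are visited from shoalest to deepest, and each one is kept only if no previously kept sounding lies within a given radius. This is a common textbook-style approach shown under stated assumptions; it is not presented as the selection algorithm used by CA Tools, and the function name, the `(x, y, depth)` tuple layout, and the radius value are hypothetical.

```python
import math


def shoal_biased_selection(soundings, radius):
    """Thin soundings so that kept points are at least `radius` apart.

    `soundings` is a list of (x, y, depth) tuples, with depth positive down.
    Shoaler soundings are visited first, so they win over deeper neighbors.
    """
    selected = []
    for x, y, depth in sorted(soundings, key=lambda s: s[2]):
        far_enough = all(
            math.hypot(x - sx, y - sy) >= radius for sx, sy, _ in selected
        )
        if far_enough:
            selected.append((x, y, depth))
    return selected


# Synthetic soundings in projected coordinates (meters).
soundings = [
    (0.0, 0.0, 12.0),
    (1.0, 0.0, 9.5),
    (10.0, 0.0, 15.0),
    (10.5, 0.5, 14.0),
    (20.0, 0.0, 11.0),
]
selection = shoal_biased_selection(soundings, radius=5.0)
```

With these inputs, the two deeper soundings that sit next to a shoaler neighbor are dropped, which reflects the safety-of-navigation bias toward the shoalest depth in each neighborhood.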

4. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Detect Fliers’ Algorithm | Search Height Required |
|---|---|
| Laplacian Operator | Yes |
| Gaussian Curvature | No |
| Adjacent Cells | Yes |
| Edge Slivers | Yes |
| Isolated Nodes | No |
| Noisy Edges | No |
| Noisy Margins | No |
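The table indicates that the Laplacian Operator check depends on a search height. A minimal sketch of how such a dependency could work is given below: a discrete 4-neighbor Laplacian is computed at each interior node, and the node is flagged when its magnitude exceeds a multiple of the search height. The factor of 4, the function name, the use of `nan` for empty nodes, and the skipping of border nodes are all assumptions made for illustration; the source does not specify the actual criterion.

```python
import math


def laplacian_fliers(grid, search_height, factor=4.0):
    """Return (row, col) of interior nodes flagged as candidate fliers.

    `grid` is a list of rows of depths in meters; empty nodes are math.nan.
    A node is flagged when |sum(4 neighbors) - 4 * center| exceeds
    factor * search_height (assumed criterion, not taken from the source).
    """
    flagged = []
    rows, cols = len(grid), len(grid[0])
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            center = grid[r][c]
            neighbors = [grid[r - 1][c], grid[r + 1][c],
                         grid[r][c - 1], grid[r][c + 1]]
            # Skip nodes whose stencil touches an empty node.
            if math.isnan(center) or any(math.isnan(n) for n in neighbors):
                continue
            laplacian = sum(neighbors) - 4.0 * center
            if abs(laplacian) > factor * search_height:
                flagged.append((r, c))
    return flagged


# Flat 10 m seafloor with a single 6 m spike at node (2, 2).
depths = [[10.0] * 5 for _ in range(5)]
depths[2][2] = 4.0

candidates = laplacian_fliers(depths, search_height=2.0)
```

In this synthetic grid, a search height of 2 m isolates the spike itself, while a smaller value such as 1 m would also flag its four direct neighbors, since the spike contaminates their Laplacian. This sensitivity is one reason a user-facing search height parameter is useful for this kind of check.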

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Masetti, G.; Faulkes, T.; Wilson, M.; Wallace, J. Effective Automated Procedures for Hydrographic Data Review. Geomatics 2022, 2, 338-354. https://doi.org/10.3390/geomatics2030019
