Multigrid/Multiresolution Interpolation: Reducing Oversmoothing and Other Sampling Effects

Abstract

1. Introduction

- Deterministic interpolation methods include nearest (natural) neighbour (NN) [25], inverse distance weighting (IDW) [26], and trend surface mapping (TS) [27]. These methods often work better with homogeneous distributions of data points. There are also models, such as ANUDEM (a.k.a. ArcGIS TOPO2GRID) [28], that are designed to interpolate data along curves (e.g., isolines or river basins).

- Geostatistical interpolation is commonly known as kriging, which estimates elevation using the best linear unbiased predictor under certain stationarity assumptions [29,30]. There are many variants that overcome some limitations of those statistical assumptions (such as indicator kriging) or improve prediction based on co-variables (co-kriging).

- Machine learning interpolation methods apply interpolation/classification methods to group similar measurements, thus improving their efficiency by reusing previous results. Despite the widespread use of machine learning, its application to spatial data is still a field of research. Dealing with spatial heterogeneity and the problem of scale are areas in which these techniques can excel (see [31,32]). These methods are also showing great potential when dealing with multi-source, multi-quality data [33].

2. Method

2.1. Top-Down Multigrid/Multiresolution Algorithm

- Start with a partition of I in intervals of the form with and . Thus the side length of each is equal to , the side length of I being equal to 1.

- Choose those such that for some k there is some observation point . Let us call the number of those observation points inside and estimate the average value of f in as in Equation (1). This means that our estimation of f in is the most likely one (maximum likelihood), given by the arithmetic mean of the measured points inside .

- Let us now focus on some such that there is no . Let us consider its neighbor intervals, of the form , such that the value of could be computed in them; let us denote that set of neighbor intervals . Then, we will interpolate as in Equation (2), where the are weights assigned to intervals . The simplest weight assignment would be the number of points inside B, that is , meaning that we take as a part of the larger set and then estimate as the average of f over the points measured in that enlarged set . Under this assumption, we can also interpolate the number of expected measurement points in (e.g., after a new statistically independent measurement of the function) as in Equation (3), equating in subindices notation.

  Remark 1. For a partition of I with , the expression “such that the value of could be computed in them” will also include the rough estimation of (and of , ) from the previous partition given by (4) below.

- Now, we will refine the partition of I by defining, for each , four subintervals (quadtree structure), with . If our partition of I was made in intervals, then this one will be in intervals of the form , and we assign to each of these subintervals the values of and given by Equation (4) (until a better approximation is made).

- At this point, we have for the partition of I in intervals a rough estimation of , in each of its subintervals. Then, we can relabel those subintervals applying the substitution and go back to step 2 to calculate an improved interpolation on a new partition in new updated intervals of side-length .
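The five steps above can be sketched in code. The following is a minimal Python sketch under stated assumptions, not the authors' implementation: it assumes that I is the unit square, that a refined cell inherits its parent's mean and one quarter of its parent's (expected) point count, that the neighborhood is the eight surrounding cells weighted by their point counts, and that the expected count of an empty cell is the plain average of its neighbors' counts. The names `multires_interpolate` and `_neighbour_sum` are hypothetical.

```python
import numpy as np


def _neighbour_sum(a):
    """Sum over the (up to) eight neighbours of every cell; zero outside the grid."""
    rows, cols = a.shape
    padded = np.pad(a, 1)  # constant zero padding around the grid
    total = np.zeros_like(a)
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            if di or dj:  # skip the cell itself
                total += padded[1 + di:1 + di + rows, 1 + dj:1 + dj + cols]
    return total


def multires_interpolate(x, y, f, levels):
    """Top-down multiresolution interpolation on the unit square.

    x, y, f: observation coordinates in [0, 1] and observed values.
    levels:  number of refinements; the final grid has 2**levels cells per side.
    Returns (mean, count): cell means and observed or expected point counts.
    """
    x, y, f = (np.asarray(a, dtype=float) for a in (x, y, f))
    if f.size == 0:
        raise ValueError("at least one observation is required")
    mean = count = None
    for n in range(levels + 1):
        size = 2 ** n
        # Steps 1-2: bin the observations and average them cell by cell.
        i = np.minimum((x * size).astype(int), size - 1)
        j = np.minimum((y * size).astype(int), size - 1)
        obs_sum = np.zeros((size, size))
        obs_cnt = np.zeros((size, size))
        np.add.at(obs_sum, (i, j), f)
        np.add.at(obs_cnt, (i, j), 1.0)
        has_obs = obs_cnt > 0

        if n == 0:
            est_mean = np.zeros((1, 1))
            est_cnt = np.zeros((1, 1))
        else:
            # Step 4 (assumed rule): children inherit the parent's mean
            # and a quarter of the parent's point count.
            est_mean = np.kron(mean, np.ones((2, 2)))
            est_cnt = np.kron(count, np.ones((2, 2))) / 4.0
        est_mean[has_obs] = obs_sum[has_obs] / obs_cnt[has_obs]
        est_cnt[has_obs] = obs_cnt[has_obs]

        # Step 3: cells without observations take the count-weighted
        # average of their neighbours' current estimates.
        w_sum = _neighbour_sum(est_cnt)
        wf_sum = _neighbour_sum(est_cnt * est_mean)
        n_nbrs = _neighbour_sum(np.ones((size, size)))
        fill = ~has_obs & (w_sum > 0)
        mean, count = est_mean.copy(), est_cnt.copy()
        mean[fill] = wf_sum[fill] / w_sum[fill]
        count[fill] = w_sum[fill] / n_nbrs[fill]  # assumed: plain neighbour average
    return mean, count


mean, count = multires_interpolate([0.1, 0.9], [0.1, 0.9], [1.0, 3.0], levels=2)
```

With two observations and `levels=2`, the result is a 4 × 4 grid in which the two cells containing a single observation keep that observation's value, while every other cell holds a weighted average of its surroundings.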

2.2. Some Properties of the Algorithm

- Exactness: The method is an exact interpolator, meaning that, for any partition of I in subintervals, the interpolated is the mean of observed values of f at points within , in particular for containing one single point (which is the usual meaning of an exact interpolation method).

- Smoothing: Smoothing of the surface is done during the down-scaling process, applying the nearest-neighbor weighted averaging of Equations (2) and (3). The neighborhood can be restricted to first neighbors, extended to second neighbors, or weighted unevenly (e.g., assigning weight to second neighbors, assuming octagonal symmetry). To get smoother surfaces, the application of Equation (1) can be stopped at some resolution , applying from there on only the generalization operation; the method will then not be exact at the highest resolution (i.e., pointwise).

- Statistical expectation: At every resolution level n, pixels containing data points are assigned the average value of elevation, which is an unbiased estimator of the mean. However, pixels not containing data points are estimated from their surrounding pixels either at that resolution, n, if they contain data points, or at the previous resolution, , if they do not. Equations (2) and (3), when used to estimate and using as the known up to that level, operate as unbiased estimators acting on unbiased estimations, and then will provide the unbiased expected value of when averaged over all possible data samplings. As for the case of ordinary kriging, the underlying hypothesis is that f is “locally constant”, hence the neighborhood averaging.

- Sensitivity to outliers: Because the method is based on data averages (or estimated averages), outliers will affect the results. They cannot be safely removed unless strong statistical assumptions (for instance, based on the asymptotic standard error of the mean) are made scale-wide, because the same error correction should be applied at all scales. This will be assessed using K-fold cross-validation (see Section 2.4 below).
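The K-fold cross-validation mentioned in the last item can be illustrated generically. The sketch below is an assumption-laden illustration, not the validation code used in the paper: the function names `kfold_errors` and `nearest_value`, the choice of error statistics (bias, MAE, RMSE), and the stand-in nearest-observation predictor are all hypothetical. Any interpolator with the same call signature could be passed in place of `nearest_value`.

```python
import numpy as np


def kfold_errors(xy, f, predict, k=5, seed=0):
    """K-fold cross-validation of an interpolator.

    xy:      (N, 2) array of observation coordinates.
    f:       (N,) array of observed values.
    predict: callable(train_xy, train_f, test_xy) -> predicted values.
    Returns a dict with the bias, MAE and RMSE of the held-out predictions.
    """
    xy = np.asarray(xy, dtype=float)
    f = np.asarray(f, dtype=float)
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(f)), k)
    errors = np.empty(len(f))
    for test_idx in folds:
        train = np.ones(len(f), dtype=bool)
        train[test_idx] = False  # hold this fold out
        predicted = predict(xy[train], f[train], xy[test_idx])
        errors[test_idx] = predicted - f[test_idx]
    return {
        "bias": errors.mean(),
        "mae": np.abs(errors).mean(),
        "rmse": np.sqrt((errors ** 2).mean()),
    }


def nearest_value(train_xy, train_f, test_xy):
    """Stand-in predictor: value of the closest training observation."""
    d2 = ((test_xy[:, None, :] - train_xy[None, :, :]) ** 2).sum(axis=2)
    return train_f[d2.argmin(axis=1)]


rng = np.random.default_rng(1)
points = rng.random((40, 2))
stats = kfold_errors(points, points[:, 0], nearest_value, k=4)
```

Because every observation is held out exactly once, a single outlier contributes to the error statistics of exactly one fold, which makes its influence visible in the per-point errors.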

2.3. Fractal Extrapolation

- Globally: from the global mean roughness at the smallest scale (one pixel of the final interpolated map), computed from neighbor height differences between intervals containing observation points. If there are K such pairs of neighboring intervals, then . The value of H is estimated from the previous resolution roughness, which is already known: . Going global maximizes the number K and thus improves the estimation; however, local roughness may vary across the domain.

- Locally: in this strategy a value is estimated for in each interval, using only the neighbor height differences of observation points within that n-th resolution interval (of size L). However, whenever there are no pairs of neighboring points within that interval, is estimated from the previous resolution (of size ) by the same interpolation method used to estimate . This implies that not only has to be interpolated, but also using the same algorithm.
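The global strategy can be illustrated with a short sketch. It rests on two assumptions that are introduced here for illustration and are not taken from the paper: roughness is measured as the root-mean-square height difference between adjacent cells that both contain observations, and it follows the self-affine scaling σ(L) ∝ L^H, so that halving the cell size gives H = log2(σ_coarse / σ_fine). The function names are hypothetical.

```python
import numpy as np


def rms_neighbour_difference(values, observed):
    """RMS height difference over pairs of adjacent cells that both hold data.

    values:   2-D array of cell heights.
    observed: boolean 2-D array, True where the cell contains observations.
    Returns NaN when there is no pair of adjacent observed cells.
    """
    dv = values[1:, :] - values[:-1, :]
    mv = observed[1:, :] & observed[:-1, :]
    dh = values[:, 1:] - values[:, :-1]
    mh = observed[:, 1:] & observed[:, :-1]
    squared = np.concatenate([dv[mv] ** 2, dh[mh] ** 2])
    return np.sqrt(squared.mean()) if squared.size else np.nan


def hurst_exponent(sigma_coarse, sigma_fine):
    """Exponent H under the assumed scaling sigma(L) ~ L**H with L halved."""
    return np.log2(sigma_coarse / sigma_fine)


# A tilted plane sampled on an 8 x 8 grid and block-averaged to 4 x 4.
fine = np.arange(8.0)[:, None] * np.ones((1, 8))
coarse = fine.reshape(4, 2, 4, 2).mean(axis=(1, 3))
sigma_fine = rms_neighbour_difference(fine, np.ones_like(fine, dtype=bool))
sigma_coarse = rms_neighbour_difference(coarse, np.ones_like(coarse, dtype=bool))
H = hurst_exponent(sigma_coarse, sigma_fine)
```

For the tilted plane, height differences double when the cell size doubles, so the estimated exponent is that of a smooth surface; rougher surfaces give smaller values. The local strategy would apply the same two functions to the cells inside each interval and fall back to the coarser level whenever `rms_neighbour_difference` returns NaN.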

2.4. Surface Validation and Error Estimation

3. Case Studies

3.1. SRTM Digital Elevation Model Sample Reconstruction

- random point subsampling;

- transect subsampling with 25 km long straight parallel transects.

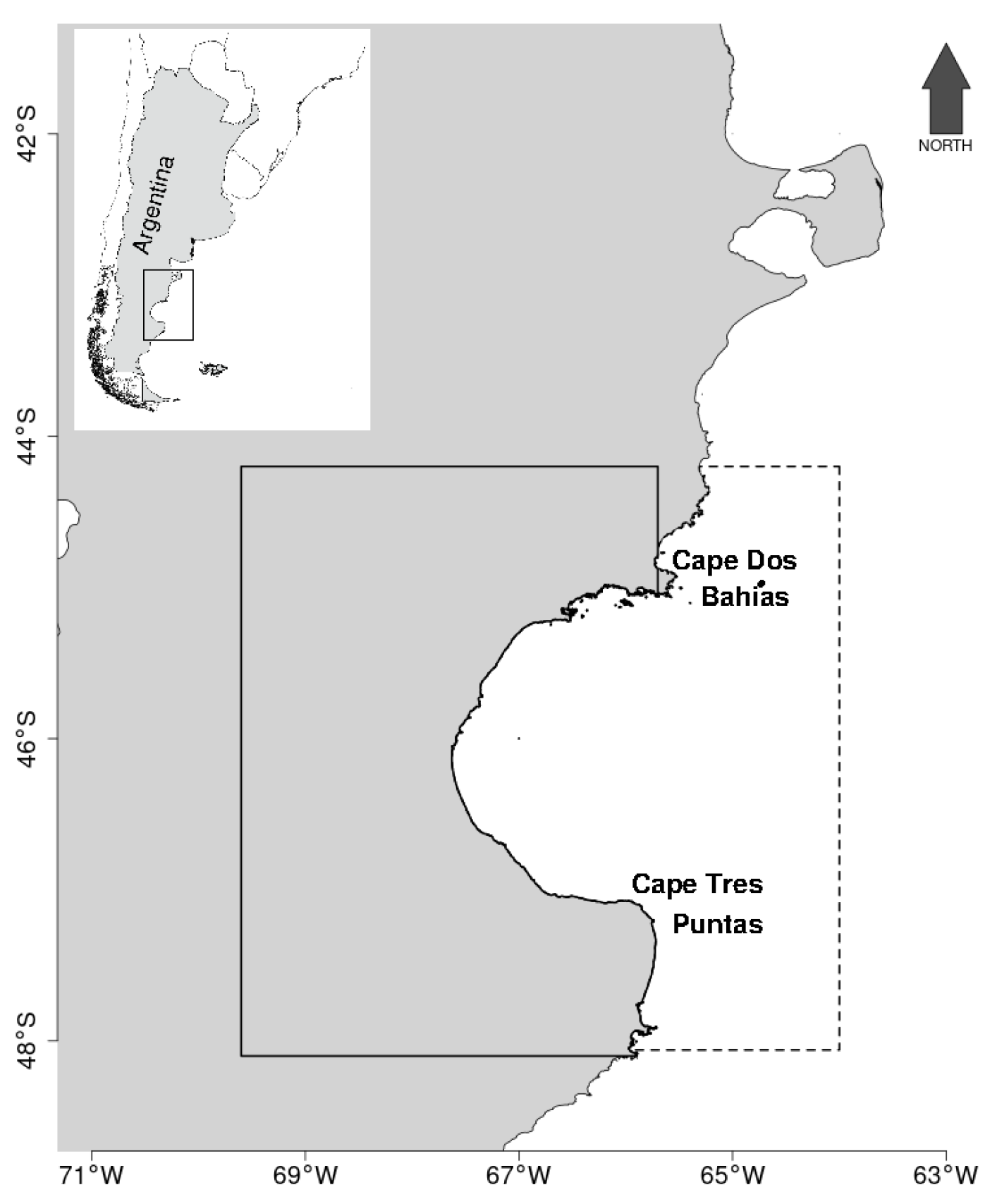

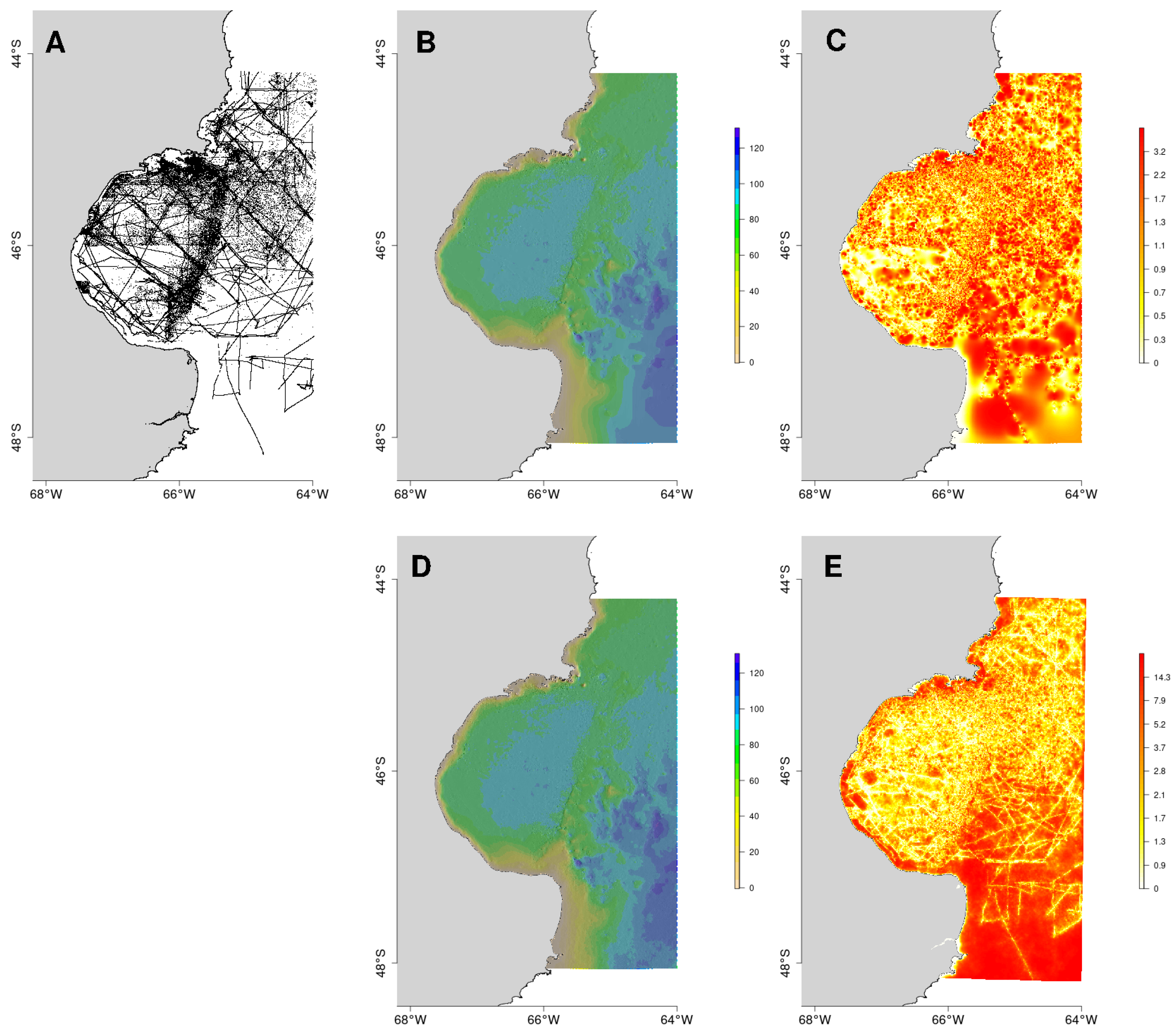

3.2. Gulf of San Jorge Bathymetry Interpolation

- Acoustic data from single and split-beam echosounders (SBES): This type of data is distributed in transects, within which there is a very high density of sounding points (depending on the vessel speed and the ping rate, but not greater than one sounding point every ten meters). In addition, the vertical resolution, although dependent on the working frequency, is usually less than . In our study case we have several sources of this bathymetric information:

- The bathymetric data repository published by the National Institute for Fisheries Research and Development (INIDEP) of Argentina, which regularly conducts stock assessment surveys. This repository has a horizontal resolution of one sounding point every (see details in [68]). In our study area, there were 85,085 sounding points, with depths between and . These data are distributed in transects located mainly in the northern and southern areas of the GSJ, with less density in the central area.

- Data from oceanographic campaigns collected in the framework of research project PICT 2016-0218, from the analysis of oceanographic and fishing campaigns carried out by different Argentine institutions. This database consisted of 147,755 bathymetric points, with depths between and . These data are distributed throughout the study area in transects with a mostly NW-SE orientation.

- Data from coastal campaigns. There were 4281 bathymetric points, with depth values between (negative means above low-tide level, that is, the intertidal area) and , all of them acquired with portable echosounders from small vessels. These data are in areas very close to the coast, in the north of the GSJ.

Considering the tidal amplitude ranges in the GSJ, in order to refer all measured depths to a reference low-tide level, a tide correction was applied using the open OSU Tide Prediction Software (OTPS, available from https://www.tpxo.net/otps; access date 17 June 2022) [69].

- Acoustic data from Multibeam (MBES) and Interferometric Sidescan Sonar (ISSS), which are acoustic sounders that, unlike SBES, provide wide swath coverage, at very high vertical and horizontal resolutions (up to a few centimeters). For our study area, these data come from three acoustic surveys in coastal areas (north of the GSJ), two with MBES and one with ISSS. For this work, the bathymetric surfaces were subsampled onto a grid. In total, 11,305 bathymetric points were included, with depth values between and .

- Data from nautical charts: nautical charts are always the basic source of bathymetric information, in this case developed and maintained by the Naval Hydrography Service (Servicio de Hidrografía Naval) of Argentina. For our study area, data from six nautical charts were used; one of these charts covers the entire area, while the other five cover smaller coastal areas, located to the north and west of the gulf, with higher detail. In total, 3522 bathymetric points were used, with depths between and deep.

- Data from the citizen-science project “Observadores a bordo” (on-board observers, POBCh). Most of the GSJ waters are under the jurisdiction of the province of Chubut, whose Fisheries Secretariat ran the POBCh program for years to control fisheries. In this program, along with fishing data, depth data were taken at those places where fishing sets were made (together with the date and time). After cleaning this database, we used 38,249 bathymetric points in our study area, with depths between and , distributed throughout the entire GSJ except for the SW quadrant, which is under the jurisdiction of another province. Depth data were also corrected using OTPS based on the observers' annotated coordinates and local time.

- Coastline. The isoline of the SRTM30 model was used as the union limit between the emerged and submerged areas. Points were generated along this line, that also includes islands, separated by 20–30 m (a second of arc, corresponding to the SRTM resolution) and with a depth value of . For the study area, 59,128 points were included from Santa Elena Bay, to the north, to Punta Buque. Coastline is used as a boundary condition and thus not included in the cross-validation process (i.e., it is always included in the interpolation) [36].

Outlier Detection

4. Discussion

4.1. Assessment of the Interpolations

4.2. Assessment of the Method

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Badura, J.; Przybylski, B. Application of digital elevation models to geological and geomorphological studies-some examples. Przegląd Geol. 2005, 53, 977–983.

- Ogania, J.; Puno, G.; Alivio, M.; Taylaran, J. Effect of digital elevation model’s resolution in producing flood hazard maps. Glob. J. Environ. Sci. Manag. 2019, 5, 95–106.

- Bove, G.; Becker, A.; Sweeney, B.; Vousdoukas, M.; Kulp, S. A method for regional estimation of climate change exposure of coastal infrastructure: Case of USVI and the influence of digital elevation models on assessments. Sci. Total Environ. 2020, 710, 136162.

- Green, J.; Pugh, D.T. Bardsey—An island in a strong tidal stream: Underestimating coastal tides due to unresolved topography. Ocean. Sci. 2020, 16, 1337–1345.

- Kalbermatten, M.; Van De Ville, D.; Turberg, P.; Tuia, D.; Joost, S. Multiscale analysis of geomorphological and geological features in high resolution digital elevation models using the wavelet transform. Geomorphology 2012, 138, 352–363.

- Sofia, G. Combining geomorphometry, feature extraction techniques and Earth-surface processes research: The way forward. Geomorphology 2020, 355, 107055.

- Lecours, V.; Dolan, M.F.; Micallef, A.; Lucieer, V.L. A review of marine geomorphometry, the quantitative study of the seafloor. Hydrol. Earth Syst. Sci. 2016, 20, 3207–3244.

- Marceau, D.J.; Hay, G.J. Remote sensing contributions to the scale issue. Can. J. Remote Sens. 1999, 25, 357–366.

- Newman, D.R.; Cockburn, J.M.; Draguţ, L.; Lindsay, J.B. Evaluating Scaling Frameworks for Multiscale Geomorphometric Analysis. Geomatics 2022, 2, 36–51.

- Alcaras, E.; Amoroso, P.P.; Parente, C. The Influence of Interpolated Point Location and Density on 3D Bathymetric Models Generated by Kriging Methods: An Application on the Giglio Island Seabed (Italy). Geosciences 2022, 12, 62.

- Hengl, T. Finding the right pixel size. Comput. Geosci. 2006, 32, 1283–1298.

- Habib, M.; Alzubi, Y.; Malkawi, A.; Awwad, M. Impact of interpolation techniques on the accuracy of large-scale digital elevation model. Open Geosci. 2020, 12, 190–202.

- Farr, T.G.; Rosen, P.A.; Caro, E.; Crippen, R.; Duren, R.; Hensley, S.; Kobrick, M.; Paller, M.; Rodriguez, E.; Roth, L.; et al. The shuttle radar topography mission. Rev. Geophys. 2007, 45, RG2004.

- Abrams, M.; Crippen, R.; Fujisada, H. ASTER global digital elevation model (GDEM) and ASTER global water body dataset (ASTWBD). Remote Sens. 2020, 12, 1156.

- Tachikawa, T.; Hato, M.; Kaku, M.; Iwasaki, A. Characteristics of ASTER GDEM version 2. In Proceedings of the 2011 IEEE International Geoscience and Remote Sensing Symposium, Vancouver, BC, Canada, 24–29 July 2011; pp. 3657–3660.

- Tadono, T.; Ishida, H.; Oda, F.; Naito, S.; Minakawa, K.; Iwamoto, H. Precise Global DEM Generation by ALOS PRISM. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, II-4, 71–76.

- Abshire, J.B.; Sun, X.; Riris, H.; Sirota, J.M.; McGarry, J.F.; Palm, S.; Yi, D.; Liiva, P. Geoscience Laser Altimeter System (GLAS) on the ICESat Mission: On-orbit measurement performance. Geophys. Res. Lett. 2005, 32, L21S02.

- Shuman, C.A.; Zwally, H.J.; Schutz, B.E.; Brenner, A.C.; DiMarzio, J.P.; Suchdeo, V.P.; Fricker, H.A. ICESat Antarctic elevation data: Preliminary precision and accuracy assessment. Geophys. Res. Lett. 2006, 33, L07501.

- Hall, J. GEBCO Centennial Special Issue—Charting the secret world of the ocean floor: The GEBCO project 1903–2003. Mar. Geophys. Res. 2006, 27, 1–5.

- Weatherall, P.; Marks, K.M.; Jakobsson, M.; Schmitt, T.; Tani, S.; Arndt, J.E.; Rovere, M.; Chayes, D.; Ferrini, V.; Wigley, R. A new digital bathymetric model of the world’s oceans. Earth Space Sci. 2015, 2, 331–345.

- Novaczek, E.; Devillers, R.; Edinger, E. Generating higher resolution regional seafloor maps from crowd-sourced bathymetry. PLoS ONE 2019, 14, e0216792.

- Li, J.; Heap, A.D. A Review of Spatial Interpolation Methods for Environmental Scientists; Record 2008/23; Geoscience Australia: Canberra, Australia, 2008; p. 137. Available online: http://www.ga.gov.au/servlet/BigObjFileManager?bigobjid=GA12526 (accessed on 17 June 2022).

- Li, J.; Heap, A.D. Spatial interpolation methods applied in the environmental sciences: A review. Environ. Model. Softw. 2014, 53, 173–189.

- Jiang, Z. A survey on spatial prediction methods. IEEE Trans. Knowl. Data Eng. 2018, 31, 1645–1664.

- Yanalak, M. Sibson (natural neighbour) and non-Sibsonian interpolation for digital elevation model (DEM). Surv. Rev. 2004, 37, 360–376.

- Shepard, D. A two-dimensional interpolation function for irregularly-spaced data. In Proceedings of the 1968 23rd ACM National Conference, New York, NY, USA, 27–29 August 1968; pp. 517–524.

- Chorley, R.J.; Haggett, P. Trend-surface mapping in geographical research. Trans. Inst. Br. Geogr. 1965, 37, 47–67.

- Hutchinson, M.F. A new procedure for gridding elevation and stream line data with automatic removal of spurious pits. J. Hydrol. 1989, 106, 211–232.

- Van der Meer, F. Remote-sensing image analysis and geostatistics. Int. J. Remote Sens. 2012, 33, 5644–5676.

- Maroufpoor, S.; Bozorg-Haddad, O.; Chu, X. Chapter 9—Geostatistics: Principles and methods. In Handbook of Probabilistic Models; Samui, P., Tien Bui, D., Chakraborty, S., Deo, R.C., Eds.; Butterworth-Heinemann: Cambridge, MA, USA, 2020; pp. 229–242.

- Kopczewska, K. Spatial machine learning: New opportunities for regional science. Ann. Reg. Sci. 2022, 68, 713–755.

- Nikparvar, B.; Thill, J.C. Machine learning of spatial data. ISPRS Int. J. Geo-Inf. 2021, 10, 600.

- Kamolov, A.A.; Park, S. Prediction of Depth of Seawater Using Fuzzy C-Means Clustering Algorithm of Crowdsourced SONAR Data. Sustainability 2021, 13, 5823.

- Rezaee, H.; Asghari, O.; Yamamoto, J. On the reduction of the ordinary kriging smoothing effect. J. Min. Environ. 2011, 2, 102–117.

- Wang, Q.; Xiao, H.; Wu, W.; Su, F.; Zuo, X.; Yao, G.; Zheng, G. Reconstructing High-Precision Coral Reef Geomorphology from Active Remote Sensing Datasets: A Robust Spatial Variability Modified Ordinary Kriging Method. Remote Sens. 2022, 14, 253.

- Sánchez-Carnero, N.; Aceña, S.; Rodríguez-Pérez, D.; Couñago, E.; Fraile, P.; Freire, J. Fast and low-cost method for VBES bathymetry generation in coastal areas. Estuar. Coast. Shelf Sci. 2012, 114, 175–182.

- Li, Y.; Rendas, M.J. Tuning interpolation methods for environmental uni-dimensional (transect) surveys. In Proceedings of the OCEANS 2015-MTS/IEEE, Washington, DC, USA, 19–22 October 2015; pp. 1–8.

- Wang, J.; Zhao, M.W.; Jiang, L.; Yang, C.C.; Huang, X.L.; Xu, Y.; Lu, J. A new strategy combined HASM and classical interpolation methods for DEM construction in areas without sufficient terrain data. J. Mt. Sci. 2021, 18, 2761–2775.

- Sánchez-Carnero, N.; Rodríguez-Pérez, D. A sea bottom classification of the Robredo area in the Northern San Jorge Gulf (Argentina). Geo-Mar. Lett. 2021, 41, 1–14.

- Ibrahim, P.O.; Sternberg, H. Bathymetric Survey for Enhancing the Volumetric Capacity of Tagwai Dam in Nigeria via Leapfrogging Approach. Geomatics 2021, 1, 246–257.

- Perivolioti, T.M.; Mouratidis, A.; Terzopoulos, D.; Kalaitzis, P.; Ampatzidis, D.; Tušer, M.; Frouzova, J.; Bobori, D. Production, Validation and Morphometric Analysis of a Digital Terrain Model for Lake Trichonis Using Geospatial Technologies and Hydroacoustics. ISPRS Int. J. Geo-Inf. 2021, 10, 91.

- Liu, K.; Song, C. Modeling lake bathymetry and water storage from DEM data constrained by limited underwater surveys. J. Hydrol. 2022, 604, 127260.

- Tran, T.T. Improving variogram reproduction on dense simulation grids. Comput. Geosci. 1994, 20, 1161–1168.

- Yaou, M.H.; Chang, W.T. Fast surface interpolation using multiresolution wavelet transform. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 673–688.

- Yutani, T.; Yono, O.; Kuwatani, T.; Matsuoka, D.; Kaneko, J.; Hidaka, M.; Kasaya, T.; Kido, Y.; Ishikawa, Y.; Ueki, T.; et al. Super-Resolution and Feature Extraction for Ocean Bathymetric Maps Using Sparse Coding. Sensors 2022, 22, 3198.

- Zhang, Y.; Yu, W. Comparison of DEM Super-Resolution Methods Based on Interpolation and Neural Networks. Sensors 2022, 22, 745.

- Shekhar, P.; Patra, A.; Stefanescu, E.R. Multilevel methods for sparse representation of topographical data. Procedia Comput. Sci. 2016, 80, 887–896.

- Rasera, L.G.; Gravey, M.; Lane, S.N.; Mariethoz, G. Downscaling images with trends using multiple-point statistics simulation: An application to digital elevation models. Math. Geosci. 2020, 52, 145–187.

- Zakeri, F.; Mariethoz, G. A review of geostatistical simulation models applied to satellite remote sensing: Methods and applications. Remote Sens. Environ. 2021, 259, 112381.

- Blondel, P. Quantitative Analyses of Morphological Data; Springer Geology; Springer: Cham, Switzerland, 2018; pp. 63–74.

- Gagnon, J.S.; Lovejoy, S.; Schertzer, D. Multifractal earth topography. Nonlinear Process. Geophys. 2006, 13, 541–570.

- Henrico, I. Optimal interpolation method to predict the bathymetry of Saldanha Bay. Trans. GIS 2021, 25, 1991–2009.

- Herzfeld, U.C.; Overbeck, C. Analysis and simulation of scale-dependent fractal surfaces with application to seafloor morphology. Comput. Geosci. 1999, 25, 979–1007.

- McClean, C.J.; Evans, I.S. Apparent fractal dimensions from continental scale digital elevation models using variogram methods. Trans. GIS 2000, 4, 361–378.

- Saupe, D. Algorithms for random fractals. In The Science of Fractal Images; Springer: Berlin, Germany, 1988; pp. 71–136.

- Ebert, D.S.; Musgrave, F.K.; Peachey, D.; Perlin, K.; Worley, S. Texturing & Modeling: A Procedural Approach; Morgan Kaufmann: San Francisco, CA, USA, 2003.

- Wadoux, A.M.C.; Heuvelink, G.B.; de Bruin, S.; Brus, D.J. Spatial cross-validation is not the right way to evaluate map accuracy. Ecol. Model. 2021, 457, 109692.

- Fernández, M.; Roux, A.; Fernández, E.; Caló, J.; Marcos, A.; Aldacur, H. Grain-size analysis of surficial sediments from Golfo San Jorge, Argentina. J. Mar. Biol. Assoc. U. K. 2003, 83, 1193–1197.

- Desiage, P.A.; Montero-Serrano, J.C.; St-Onge, G.; Crespi-Abril, A.C.; Giarratano, E.; Gil, M.N.; Haller, M.J. Quantifying sources and transport pathways of surface sediments in the Gulf of San Jorge, central Patagonia (Argentina). Oceanography 2018, 31, 92–103.

- Isla, F.I.; Iantanos, N.; Estrada, E. Playas reflectivas y disipativas macromareales del Golfo San Jorge, Chubut. Rev. Asoc. Argent. Sedimentol. 2002, 9, 155–164.

- Carbajal, J.C.; Rivas, A.L.; Chavanne, C. High-frequency frontal displacements south of San Jorge Gulf during a tidal cycle near spring and neap phases: Biological implications between tidal states. Oceanography 2018, 31, 60–69.

- Sylwan, C.A. Geology of the Golfo San Jorge Basin, Argentina. Geología de la Cuenca del Golfo San Jorge, Argentina. J. Iber. Geol. 2001, 27, 123–158.

- Cuitiño, J.I.; Scasso, R.A.; Ventura Santos, R.; Mancini, L.H. Sr ages for the Chenque Formation in the Comodoro Rivadavia región (Golfo San Jorge basin, Argentina): Stratigraphic implications. Lat. Am. J. Sedimentol. Basin Anal. 2015, 22, 13–28.

- Martinez, O.A.; Kutschker, A. The ‘Rodados Patagónicos’ (Patagonian shingle formation) of eastern Patagonia: Environmental conditions of gravel sedimentation. Biol. J. Linn. Soc. 2011, 103, 336–345.

- St-Onge, G.; Ferreyra, G.A. Introduction to the Special Issue on the Gulf of San Jorge (Patagonia, Argentina). Oceanography 2018, 31, 14–15.

- Góngora, M.E.; González-Zevallos, D.; Pettovello, A.; Mendía, L. Caracterización de las principales pesquerías del golfo San Jorge Patagonia, Argentina. Lat. Am. J. Aquat. Res. 2012, 40, 1–11.

- De la Garza, J.; Moriondo Danovaro, P.; Fernández, M.; Ravalli, C.; Souto, V.; Waessle, J. An Overview of the Argentine Red Shrimp (Pleoticus muelleri, Decapoda, Solenoceridae) Fishery in Argentina: Biology, Fishing, Management and Ecological Interactions. 2017. Available online: http://hdl.handle.net/1834/15133 (accessed on 17 June 2022).

- Sonvico, P.; Cascallares, G.; Madirolas, A.; Cabreira, A.; Menna, B.V. Repositorio de Líneas Batimétricas de Las Campañas de Investigación del INIDEP; INIDEP Report ASES 053; INIDEP: Mar del Plata, Argentina, 2021.

- Egbert, G.D.; Erofeeva, S.Y. Efficient inverse modeling of barotropic ocean tides. J. Atmos. Ocean. Technol. 2002, 19, 183–204.

- Tukey, J.W. Exploratory Data Analysis; Addison-Wesley Publishing Company: Reading, MA, USA, 1977; Volume 2.

- Hackbusch, W. Multi-Grid Methods and Applications; Springer Science & Business Media: Berlin, Germany, 2013; Volume 4.

- Adelson, E.; Anderson, C.; Bergen, J.; Burt, P.; Ogden, J. Pyramid Methods in Image Processing. RCA Eng. 1983, 29.

- Sekulić, A.; Kilibarda, M.; Heuvelink, G.; Nikolić, M.; Bajat, B. Random forest spatial interpolation. Remote Sens. 2020, 12, 1687.

- Liu, P.; Jin, S.; Wu, Z. Assessment of the Seafloor Topography Accuracy in the Emperor Seamount Chain by Ship-Based Water Depth Data and Satellite-Based Gravity Data. Sensors 2022, 22, 3189.

- Manssen, M.; Weigel, M.; Hartmann, A.K. Random number generators for massively parallel simulations on GPU. Eur. Phys. J. Spec. Top. 2012, 210, 53–71.

- Malinverno, A. Segmentation of topographic profiles of the seafloor based on a self-affine model. IEEE J. Ocean. Eng. 1989, 14, 348–359.

- Wilson, M.F.J.; O’Connell, B.; Brown, C.; Guinan, J.C.; Grehan, A.J. Multiscale Terrain Analysis of Multibeam Bathymetry Data for Habitat Mapping on the Continental Slope. Mar. Geod. 2007, 30, 3–35.

| Simple Interpolation | | | | | Fractal Extrapolation | | | | | SRTM90 |
|---|---|---|---|---|---|---|---|---|---|---|
| 308.6 | 308.7 | 308.7 | 308.6 | 308.6 | 308.6 | 308.7 | 308.6 | 308.6 | 308.6 | 308.4 |
| 184.2 | 185.1 | 185.6 | 185.9 | 186.2 | 184.2 | 185.1 | 185.7 | 186.0 | 186.2 | 187.2 |
| 12.55 | 10.15 | 8.23 | 6.65 | 5.40 | 19.33 | 16.47 | 15.18 | 14.58 | 15.75 | 2.50 |
| 0.044 | 0.152 | 0.087 | 0.045 | 0.065 | 0.052 | 0.182 | 0.115 | 0.023 | 0.088 | −0.007 |
| 20.23 | 16.22 | 13.11 | 10.54 | 8.48 | 20.85 | 16.80 | 13.78 | 11.44 | 9.90 | 0.85 |
| 8.00 | 6.53 | 5.10 | 4.01 | 3.13 | 12.72 | 10.34 | 8.58 | 7.32 | 7.02 | 1.25 |
| 26.45 | 21.28 | 17.24 | 13.86 | 11.14 | 37.88 | 32.33 | 28.95 | 26.51 | 27.35 | 5.10 |
| 0.9945 | 0.9965 | 0.9975 | 0.9985 | 0.9990 | 0.9944 | 0.9964 | 0.9973 | 0.9984 | 0.9989 | 1.000 |

| Simple Interpolation | | | | | Fractal Extrapolation | | | | | SRTM90 |
|---|---|---|---|---|---|---|---|---|---|---|
| 298.5 | 311.0 | 310.9 | 307.9 | 307.8 | 299.4 | 311.3 | 310.9 | 307.9 | 307.8 | 308.4 |
| 140.0 | 170.6 | 180.7 | 178.4 | 182.6 | 139.7 | 170.2 | 180.8 | 178.2 | 182.5 | 187.2 |
| 73.33 | 53.39 | 52.70 | 31.49 | 23.98 | 136.55 | 109.22 | 88.23 | 61.90 | 44.72 | 2.50 |
| −9.992 | 2.467 | 2.355 | −0.616 | −0.698 | −9.168 | 2.775 | 2.403 | −0.684 | −0.708 | −0.007 |
| 105.56 | 79.76 | 55.92 | 40.38 | 28.05 | 111.59 | 85.59 | 60.45 | 43.97 | 30.68 | 0.85 |
| 49.02 | 42.17 | 32.34 | 20.42 | 14.99 | 89.00 | 78.49 | 66.26 | 44.98 | 31.60 | 1.25 |
| 153.37 | 108.89 | 106.08 | 66.91 | 50.70 | 247.59 | 196.91 | 172.97 | 126.35 | 92.25 | 5.10 |
| 0.8430 | 0.9113 | 0.9589 | 0.9787 | 0.9899 | 0.8398 | 0.9082 | 0.9582 | 0.9780 | 0.9895 | 1.000 |

| Simple Interpolation | Fractal Extrapolation |
|---|---|
| 81.32 | 81.24 |
| 24.06 | 24.20 |
| 2.02 | 9.65 |
| 1.28 | 4.77 |
| 4.08 | 22.87 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rodriguez-Perez, D.; Sanchez-Carnero, N. Multigrid/Multiresolution Interpolation: Reducing Oversmoothing and Other Sampling Effects. Geomatics 2022, 2, 236-253. https://doi.org/10.3390/geomatics2030014
