Crack Detection, Classification, and Segmentation on Road Pavement Material Using Multi-Scale Feature Aggregation and Transformer-Based Attention Mechanisms

Abstract

1. Introduction

- Multi-Scale Feature Aggregation for Comprehensive Detection: We introduce a multi-scale feature aggregation approach that enables the model to effectively detect cracks of varying sizes, from fine hairline fissures to large structural cracks. By integrating features from multiple scales, the model achieves a higher level of detail and accuracy in crack detection.

- Transformer-Based Attention Mechanisms for Enhanced Focus: We incorporate transformer-based attention mechanisms into the crack detection model, allowing it to dynamically focus on the most relevant regions of the pavement images. This reduces the likelihood of false positives and improves the model’s ability to detect cracks in complex environments.

- Unified Model for Detection, Classification, and Segmentation: The proposed methodology integrates detection, classification, and segmentation into a single, unified model. This holistic approach not only identifies the presence of cracks but also categorizes them by type and provides precise segmentation maps, which are essential for targeted maintenance actions.

2. Related Work

2.1. Overview of Existing Methods in Crack Detection, Classification, and Segmentation

- i. Image-Processing Techniques

- ii. Machine Learning Approaches

- iii. Deep Learning Techniques

2.2. Limitations of Current Approaches in Handling Multi-Scale Features and Focusing on Relevant Image Regions

- Multi-Scale Feature Handling

- Focus on Relevant Image Regions

3. Materials and Methods

3.1. Data Preprocessing

- RCD-IIUM dataset: The RCD-IIUM dataset is a collection of high-resolution images of pavement surfaces from different roads in Malaysia. The images have been labeled to identify three main types of cracks: alligator, longitudinal, and transverse. The labeling was conducted carefully to make sure the data were accurate and useful for training the model.

- Publicly available data: To add variety to the training data and improve the model’s ability to generalize, we also included images from publicly available datasets. These images come from different locations and cover a wide range of pavement surfaces and conditions. The additional data are intended to make the model more adaptable to different lighting conditions, surface textures, and crack appearances.

- Rotation and scaling: Images are randomly rotated and scaled to simulate different perspectives and distances, so that the model learns to recognize cracks at various angles and sizes. Images containing longitudinal and transverse cracks need special care: these classes are defined by crack orientation, so a careless rotation can change the apparent orientation and leave the image mislabeled during training. For such images, a controlled rotation angle is applied so that longitudinal and transverse cracks remain correctly classified. If $I(x, y)$ is the pixel intensity at coordinates $(x, y)$, the rotated image $I'$ is given by Equation (1):

  $$I'(x, y) = I(x\cos\theta + y\sin\theta,\; -x\sin\theta + y\cos\theta) \tag{1}$$

  where $\theta$ is the rotation angle.

- Random cropping: Random subregions of the image are selected so that the model focuses on specific areas of the pavement, which makes it more sensitive to cracks of different sizes and shapes. If $I(x, y)$ is the original image, the crop is the submatrix $I' = I[x_1{:}x_2,\; y_1{:}y_2]$, where $x_1$, $x_2$, $y_1$, and $y_2$ are the cropping boundaries.

- Noise injection: Gaussian noise is added to the images to increase the model’s robustness against real-world imperfections, such as dirt or shadows. If $I(x, y)$ is the original image and $N(x, y)$ is Gaussian noise with mean $\mu$ and variance $\sigma^2$, the noisy image $I'(x, y)$ is given by Equation (2):

  $$I'(x, y) = I(x, y) + N(x, y), \qquad N(x, y) \sim \mathcal{N}(\mu, \sigma^2) \tag{2}$$

- Contrast adjustment: The contrast of the images is modified so that the model can handle various lighting conditions. The adjustment is given by Equation (3):

  $$I'(x, y) = \alpha\,\bigl(I(x, y) - 128\bigr) + 128 \tag{3}$$

  where $\alpha$ is the contrast factor.
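
The four augmentations above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' pipeline: the function names, the nearest-neighbour sampling, the clipping to the 8-bit range, and the per-class angle limits in `MAX_ANGLE` (±10° for longitudinal and transverse cracks) are all assumptions chosen for the example.

```python
import numpy as np

def rotate(image, theta_deg):
    """Rotate a 2-D image about its centre by inverse mapping, as in Equation (1).
    Nearest-neighbour sampling; pixels that map outside the image are set to 0."""
    h, w = image.shape
    theta = np.deg2rad(theta_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    x, y = xs - cx, ys - cy
    # Source coordinates: (x cos(theta) + y sin(theta), -x sin(theta) + y cos(theta))
    src_x = np.rint(np.cos(theta) * x + np.sin(theta) * y + cx).astype(int)
    src_y = np.rint(-np.sin(theta) * x + np.cos(theta) * y + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(image)
    out[valid] = image[src_y[valid], src_x[valid]]
    return out

def random_crop(image, crop_h, crop_w, rng):
    """Select a random crop_h x crop_w submatrix of the image."""
    h, w = image.shape
    y1 = rng.integers(0, h - crop_h + 1)
    x1 = rng.integers(0, w - crop_w + 1)
    return image[y1:y1 + crop_h, x1:x1 + crop_w]

def add_gaussian_noise(image, mu, sigma, rng):
    """Equation (2): add N(mu, sigma^2) noise, then clip to [0, 255] (assumption)."""
    noisy = image.astype(np.float64) + rng.normal(mu, sigma, image.shape)
    return np.clip(noisy, 0, 255)

def adjust_contrast(image, alpha):
    """Equation (3): scale intensities about 128, then clip to [0, 255] (assumption)."""
    return np.clip(alpha * (image.astype(np.float64) - 128.0) + 128.0, 0, 255)

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64)).astype(np.float64)

# Hypothetical policy: orientation-defined classes only receive small rotations.
MAX_ANGLE = {"alligator": 180.0, "longitudinal": 10.0, "transverse": 10.0}
label = "longitudinal"
theta = rng.uniform(-MAX_ANGLE[label], MAX_ANGLE[label])

augmented = rotate(image, theta)
augmented = random_crop(augmented, 48, 48, rng)
augmented = add_gaussian_noise(augmented, mu=0.0, sigma=5.0, rng=rng)
augmented = adjust_contrast(augmented, alpha=1.2)
print(augmented.shape)
```

Restricting the angle per class is one simple way to keep a longitudinal crack from being rotated into something that looks transverse; an alternative would be to rotate freely and relabel, which the text does not describe.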

3.2. Multi-Scale Feature Aggregation

- CNN architecture: We use a deep Convolutional Neural Network (CNN) as the backbone of our model. Networks like ResNet or EfficientNet are employed for their ability to extract rich, hierarchical features from images. The input image $I(x, y)$ is processed through multiple convolutional layers, where each layer $l$ applies a filter $f_l$ to produce feature maps $F_l(x, y)$, as in Equation (4):

  $$F_l(x, y) = (f_l * F_{l-1})(x, y), \qquad F_0 = I \tag{4}$$

  where $*$ denotes convolution.

- Feature Pyramid Network (FPN): The FPN is integrated into the CNN architecture to aggregate features from different layers, which enhances the model’s ability to detect both fine details and broader patterns. If $F_l$ is the feature map from the $l$-th layer, the FPN combines these maps as in Equation (5):

  $$F_{\mathrm{FPN}} = \sum_{l} \alpha_l F_l \tag{5}$$

  where $\alpha_l$ is the learnable weight that determines the contribution of each layer’s features.

- Feature fusion strategy: The features extracted by the FPN are fused using a weighted averaging technique. This strategy combines features across scales, ensuring a comprehensive representation of the pavement surface. The final fused feature map can be expressed as Equation (6):

  $$F_{\mathrm{fused}} = \sum_{s} w_s F_s \tag{6}$$

  where $w_s$ is the weight assigned to the features $F_s$ at scale $s$.
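
To make the weighted fusion across scales concrete, the sketch below upsamples three feature maps to a common resolution and combines them with normalized weights. It is an illustration under stated assumptions, not the paper's implementation: the map sizes, the nearest-neighbour upsampling, and the softmax normalization of the weights are choices made for the example, and a real FPN additionally uses lateral 1×1 convolutions and a top-down pathway.

```python
import numpy as np

def upsample_nearest(fmap, factor):
    """Nearest-neighbour upsampling of an (H, W, C) feature map."""
    return fmap.repeat(factor, axis=0).repeat(factor, axis=1)

def fuse_scales(feature_maps, logits):
    """Weighted average of same-shape feature maps.
    A softmax over `logits` keeps the weights positive and summing to 1
    (an assumption; the text only says the weights are learnable)."""
    w = np.exp(logits - np.max(logits))
    w = w / w.sum()
    stacked = np.stack(feature_maps, axis=0)      # (S, H, W, C)
    return np.tensordot(w, stacked, axes=1), w    # (H, W, C), (S,)

rng = np.random.default_rng(0)
c3 = rng.normal(size=(8, 8, 4))   # fine scale (hypothetical sizes)
c4 = rng.normal(size=(4, 4, 4))   # medium scale
c5 = rng.normal(size=(2, 2, 4))   # coarse scale

aligned = [c3, upsample_nearest(c4, 2), upsample_nearest(c5, 4)]
logits = np.zeros(3)              # equal weights before any training
fused, weights = fuse_scales(aligned, logits)
print(fused.shape, weights)
```

With equal logits the result is the plain mean of the three aligned maps; training would move the weights toward whichever scales are most informative for the cracks in the data.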

3.3. Transformer-Based Attention Mechanisms

- Integration with aggregated features: The aggregated multi-scale features from the FPN are passed through the Vision Transformer. The transformer treats the image as a sequence of non-overlapping patches $P_i$, where each patch is embedded into a vector space. The embedding for each patch is given by Equation (7):

  $$E_i = W_e P_i + b_e \tag{7}$$

  where $W_e$ is the embedding matrix and $b_e$ is the bias. The transformer applies self-attention across these patches to capture long-range dependencies.

- Self-attention mechanisms: The self-attention mechanism computes the relevance of each patch with respect to all others. For each patch $i$, its attention score with patch $j$ is computed as Equation (8):

  $$A_{ij} = \operatorname{softmax}_j\!\left(\frac{Q_i K_j^{\top}}{\sqrt{d_k}}\right) \tag{8}$$

  where $Q_i$ and $K_j$ are the query and key vectors for patches $i$ and $j$, and $d_k$ is the dimension of the key vectors. The final output for each patch is given by Equation (9):

  $$O_i = \sum_{j} A_{ij} V_j \tag{9}$$

  where $V_j$ is the value vector for patch $j$. The output $O_i$ is then passed through the remaining layers of the transformer to produce the final attention-enhanced feature map.
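
The patch embedding and self-attention steps described above can be sketched as follows. The example assumes that the attention is standard single-head scaled dot-product attention; the projection matrices `W_q`, `W_k`, `W_v`, the patch size, and all dimensions are hypothetical, and the code uses a row-vector convention (`P @ W_e`) for the embedding.

```python
import numpy as np

def split_into_patches(fmap, patch):
    """Split an (H, W, C) map into non-overlapping patch x patch tiles,
    each flattened to a vector. H and W must be multiples of `patch`."""
    h, w, c = fmap.shape
    tiles = fmap.reshape(h // patch, patch, w // patch, patch, c)
    return tiles.transpose(0, 2, 1, 3, 4).reshape(-1, patch * patch * c)

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(P, W_e, b_e, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over patch vectors P."""
    E = P @ W_e + b_e                      # patch embeddings, Equation (7)
    Q, K, V = E @ W_q, E @ W_k, E @ W_v    # queries, keys, values
    d_k = K.shape[-1]
    A = softmax(Q @ K.T / np.sqrt(d_k))    # attention scores, one row per patch
    return A @ V, A                        # attention-weighted sum of values

rng = np.random.default_rng(0)
fmap = rng.normal(size=(8, 8, 3))          # hypothetical fused feature map
P = split_into_patches(fmap, patch=4)      # 4 patches of 4*4*3 = 48 values

d_model = 16
W_e = rng.normal(size=(48, d_model)) * 0.1
b_e = np.zeros(d_model)
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(3))

O, A = self_attention(P, W_e, b_e, W_q, W_k, W_v)
print(P.shape, A.shape, O.shape)
```

Each row of `A` sums to 1, so every output vector is a convex combination of the value vectors; this is what lets a patch containing part of a crack draw on distant patches along the same crack.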

3.4. Unified Model Architecture

- (a) Detection Branch: RetinaNet

- Backbone: ResNet-50 is used as the backbone network within RetinaNet for feature extraction. This provides a deep representation of the input images, capturing both low-level and high-level features.

- Focal loss: RetinaNet uses focal loss to address the issue of class imbalance by down-weighting the loss assigned to well-classified examples. The focal loss is defined as Equation (10):

  $$\mathrm{FL}(p_t) = -\alpha_t\,(1 - p_t)^{\gamma}\,\log(p_t) \tag{10}$$

  where $p_t$ is the predicted probability for the correct class, $\alpha_t$ is a balancing factor, and $\gamma$ is a focusing parameter, typically set to 2.0.

- Anchor boxes: The model uses a predefined set of anchor boxes that are adapted to the typical sizes and aspect ratios of pavement cracks, ensuring that the detection network is well-tuned to identify cracks of varying shapes and sizes.
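
The down-weighting effect of focal loss is easy to see numerically. The sketch below implements the standard binary focal loss, $-\alpha_t (1 - p_t)^{\gamma} \log p_t$, in NumPy; $\gamma = 2.0$ follows the text, while $\alpha = 0.25$ and the sample probabilities are assumptions made for the example.

```python
import numpy as np

def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-7):
    """Element-wise binary focal loss.
    p: predicted foreground probability; y: 1 for crack, 0 for background."""
    p = np.clip(p, eps, 1.0 - eps)
    p_t = np.where(y == 1, p, 1.0 - p)               # probability of the true class
    alpha_t = np.where(y == 1, alpha, 1.0 - alpha)   # class balancing factor
    return -alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)

p = np.array([0.95, 0.60, 0.10])   # confident, uncertain, and wrong predictions
y = np.array([1, 1, 1])            # all three are true crack anchors

with_focusing = focal_loss(p, y, alpha=1.0, gamma=2.0)
plain_ce = focal_loss(p, y, alpha=1.0, gamma=0.0)    # gamma = 0 gives cross-entropy
print(with_focusing / plain_ce)    # per-example modulating factor (1 - p_t)^gamma
```

The printed ratio is the modulating factor $(1 - p_t)^{\gamma}$: it is close to zero for the confident prediction and close to one for the wrong one, so training is dominated by hard examples rather than by the many easy background anchors.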

- (b) Classification Branch: ResNet

- Feature extraction: ResNet-34, a shallower member of the ResNet family, is chosen for its balance between computational efficiency and feature extraction capability. The network extracts hierarchical features from the input images, which are then used to classify the cracks into different categories.

- Cross-entropy loss: The classification branch employs cross-entropy loss, which is effective for multi-class classification tasks and is given by Equation (11):

  $$L_{\mathrm{CE}} = -\sum_{c=1}^{C} y_c \log(\hat{y}_c) \tag{11}$$

  where $C$ is the number of classes, $y_c$ is the true label, and $\hat{y}_c$ is the predicted probability for class $c$.
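
As a small worked example of the multi-class cross-entropy $-\sum_c y_c \log \hat{y}_c$, the sketch below evaluates it for the three crack types. The logits are made-up values for illustration, and the softmax used to turn them into probabilities is the usual choice rather than something the text specifies.

```python
import numpy as np

CLASSES = ["alligator", "longitudinal", "transverse"]

def cross_entropy(logits, label):
    """Cross-entropy for one sample with a one-hot true label.
    Uses a numerically stable log-softmax."""
    z = logits - logits.max()
    log_probs = z - np.log(np.exp(z).sum())
    return -log_probs[label]

logits = np.array([0.5, 2.0, -1.0])     # hypothetical classifier outputs
true_label = CLASSES.index("longitudinal")

loss_correct = cross_entropy(logits, true_label)
loss_wrong = cross_entropy(logits, CLASSES.index("transverse"))
loss_uniform = cross_entropy(np.zeros(3), true_label)
print(loss_correct, loss_wrong, loss_uniform)
```

A classifier that assigns equal probability to all three classes has a loss of $\log 3 \approx 1.10$, which is a useful reference point when reading training curves for this branch.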

- (c) Segmentation Branch: U-Net

- Encoder–decoder structure: U-Net’s encoder–decoder structure allows for capturing context on multiple scales, making it ideal for segmenting cracks of various sizes and shapes. The encoder is based on a modified ResNet architecture, while the decoder up-samples the features to match the input resolution.

- Skip connections: U-Net employs skip connections that directly connect corresponding layers in the encoder and decoder, preserving spatial information that is crucial for accurate segmentation.

- Dice loss: The segmentation branch uses Dice loss, which is particularly effective for imbalanced segmentation tasks where the target (cracks) occupies only a small portion of the image. It is given by Equation (12):

  $$L_{\mathrm{Dice}} = 1 - \frac{2\,|P \cap G|}{|P| + |G|} \tag{12}$$

  where $P$ represents the predicted segmentation and $G$ represents the ground truth segmentation.
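
The Dice loss, $1 - 2|P \cap G| / (|P| + |G|)$, can be computed directly on soft predictions. The sketch below is a minimal NumPy version; the smoothing term `eps` is a common numerical safeguard added here as an assumption, and the tiny masks are illustrative only.

```python
import numpy as np

def dice_loss(pred, target, eps=1e-6):
    """Soft Dice loss. pred holds probabilities in [0, 1], target holds 0/1 labels.
    eps avoids division by zero when both masks are empty (assumption)."""
    intersection = (pred * target).sum()
    return 1.0 - (2.0 * intersection + eps) / (pred.sum() + target.sum() + eps)

target = np.array([[0, 1, 1, 0]], dtype=float)       # thin crack, two pixels
perfect = target.copy()
half = np.array([[1, 1, 0, 0]], dtype=float)         # one of two pixels overlaps
disjoint = np.array([[1, 0, 0, 1]], dtype=float)     # no overlap

print(dice_loss(perfect, target), dice_loss(half, target), dice_loss(disjoint, target))
```

Because the loss depends only on the overlap relative to the sizes of the two masks, the large number of correctly predicted background pixels does not dilute it, which is why it suits thin crack masks.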

- (d) Joint Optimization

4. Results

4.1. Experimental Setup

- Training environment: The training process for the model was conducted in a high-performance computing environment tailored to handle the computational intensity of deep learning tasks. The hardware and software setup used is detailed in Table 4.

- Hyperparameters: The model’s hyperparameters were selected based on prior research and iterative experimentation. These hyperparameters were fine-tuned to optimize the model’s performance while ensuring stability during training. The key hyperparameters used are presented in Table 5.

- Evaluation metrics: To comprehensively evaluate the performance of the model across detection, classification, and segmentation tasks, the following metrics were employed, as shown in Table 6.
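
For reference, the count-based metrics used for detection and classification can be computed as in the sketch below. The helper names and the `(x1, y1, x2, y2)` box format are assumptions for the example; the formulas are the standard definitions of precision, recall, F1-score, and box IoU.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from true-positive, false-positive,
    and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = f1_from_pr(precision, recall)
    return precision, recall, f1

def f1_from_pr(precision, recall):
    """F1 is the harmonic mean of precision and recall."""
    total = precision + recall
    return 2.0 * precision * recall / total if total else 0.0

def box_iou(a, b):
    """Intersection over Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union else 0.0

p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
iou = box_iou((0, 0, 2, 2), (1, 1, 3, 3))
f1_alligator = f1_from_pr(88.4, 86.7)   # precision and recall reported for alligator cracks
print(p, r, f1, iou, round(f1_alligator, 1))
```

The last line checks the harmonic-mean relation against the reported detection figures for alligator cracks (88.4% precision, 86.7% recall), which gives an F1-score of about 87.5%, in agreement with the reported value.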

4.2. Detection Performance

- Precision and recall: The model achieved high precision and recall scores across all crack types, indicating its effectiveness in correctly identifying and localizing cracks with minimal false positives and false negatives.

- F1-score: The F1-scores, which balance precision and recall, were consistently strong across all crack types, confirming the robustness of the detection process.

- IoU and mAP: The Intersection over Union (IoU) and mean average precision (mAP) metrics further demonstrate the model’s ability to accurately delineate crack boundaries, with longitudinal cracks showing the best overall performance.

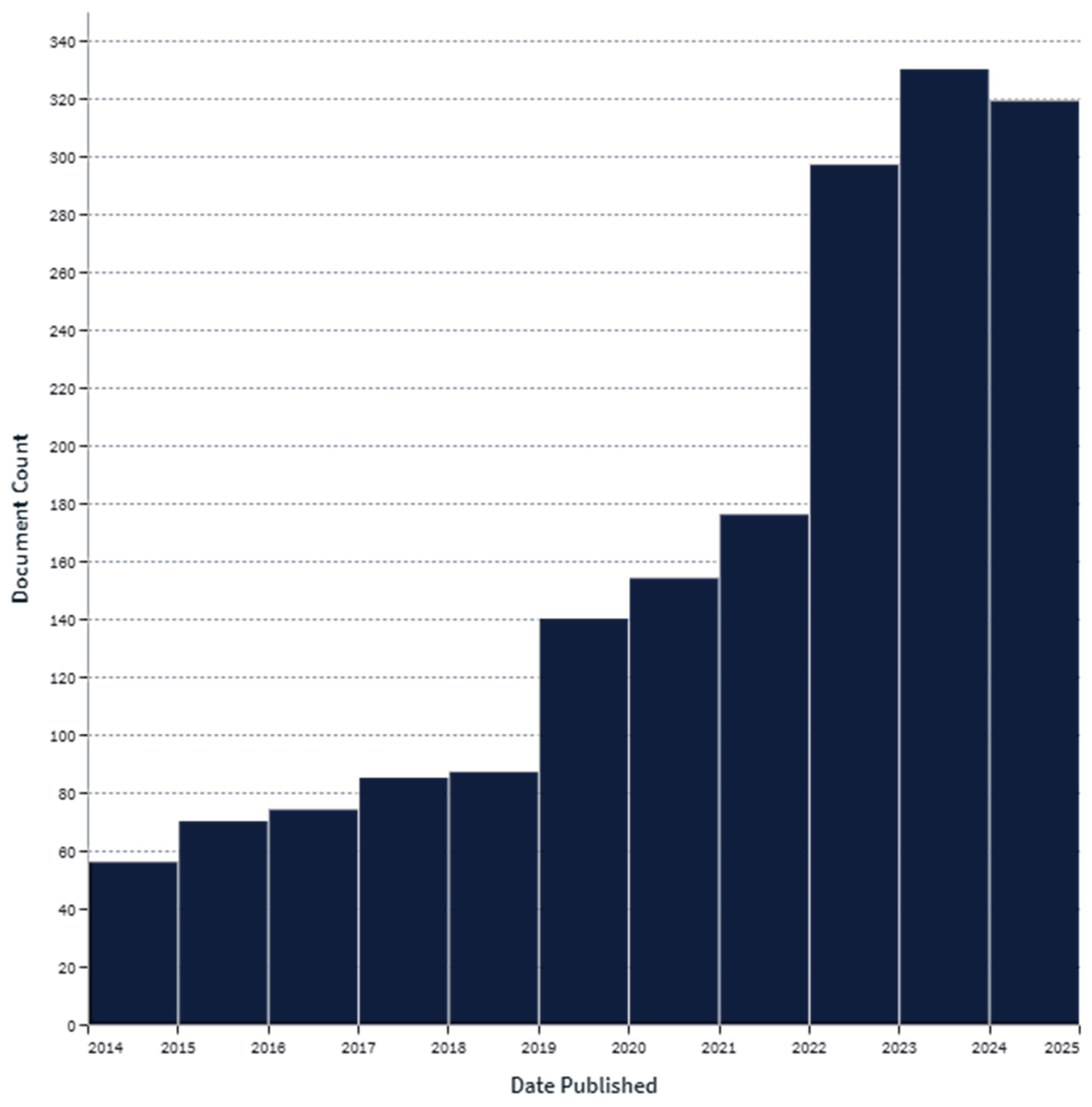

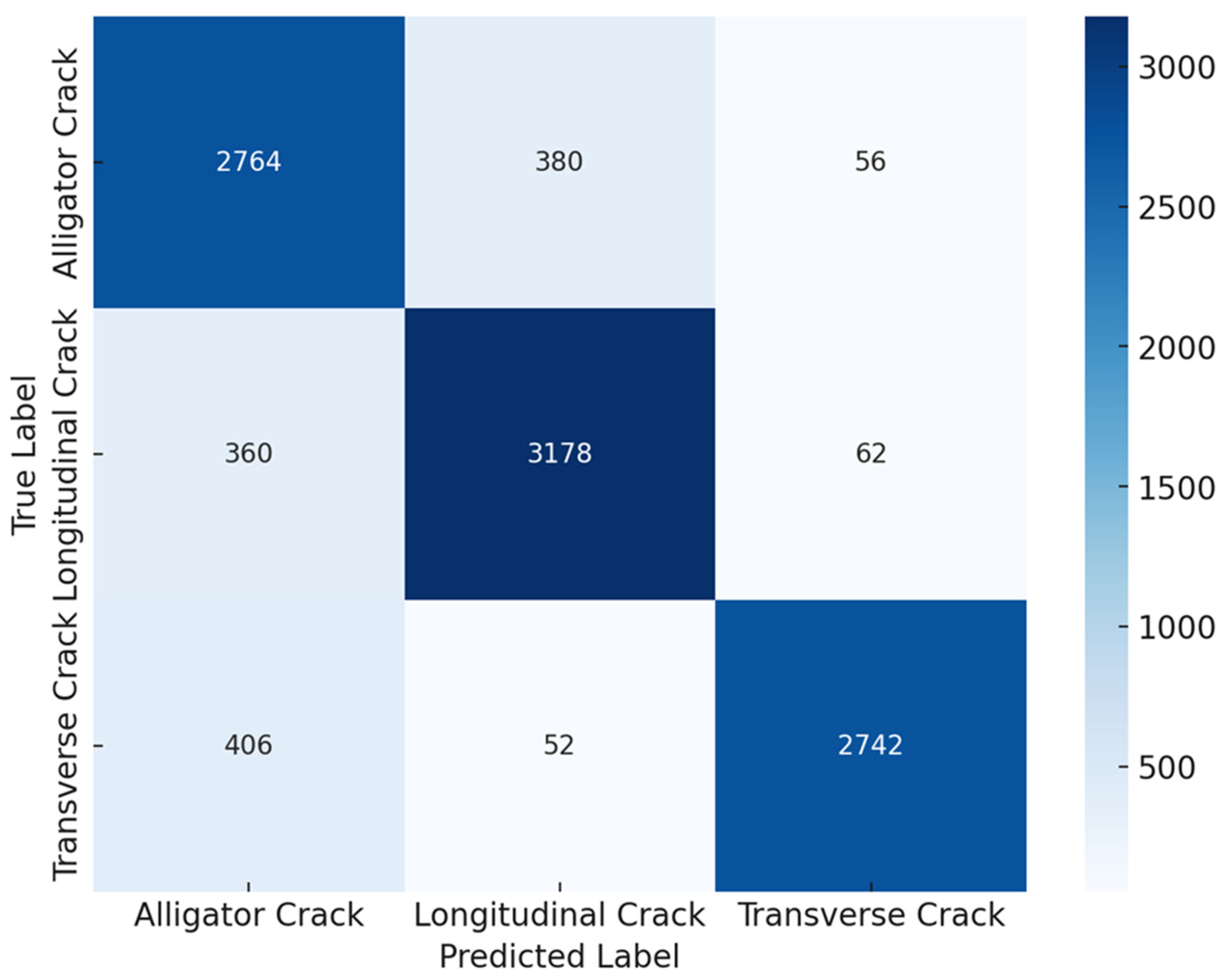

4.3. Classification Performance

- Classification accuracy: The model demonstrated high accuracy across all crack types, with the highest performance observed for longitudinal cracks. This indicates the model’s strong ability to distinguish between different types of cracks.

- Precision and recall: The close alignment of precision and recall metrics across all crack types suggests that the model is consistent in correctly identifying and categorizing cracks without overfitting to any particular class.

- F1-Score: The F1-scores confirm that the model maintains a good balance between precision and recall, ensuring reliable classification performance.

4.4. Segmentation Performance

- Dice Coefficient and IoU: The model’s ability to accurately segment cracks is reflected in the Dice Coefficient and IoU metrics, which measure the overlap between the predicted and actual crack boundaries. Longitudinal cracks achieved the highest segmentation accuracy, while transverse cracks, with more irregular shapes, were more challenging to segment.

- Pixel accuracy: The pixel accuracy values indicate that the model correctly classified the majority of pixels in the images, further confirming its effectiveness in crack segmentation.

- Precision and recall: The segmentation precision and recall metrics highlight the model’s capability to not only identify the correct pixels but also ensure that most of the relevant pixels are captured, minimizing false negatives.
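
The segmentation metrics behave quite differently on thin structures such as cracks, which the following sketch illustrates on synthetic masks. The mask sizes and the two example predictions are invented for the illustration; the metric definitions are the standard ones for binary masks.

```python
import numpy as np

def mask_metrics(pred, target):
    """IoU, Dice coefficient, and pixel accuracy for two binary masks."""
    pred, target = pred.astype(bool), target.astype(bool)
    inter = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    total = pred.sum() + target.sum()
    return {
        "iou": float(inter / union) if union else 1.0,
        "dice": float(2.0 * inter / total) if total else 1.0,
        "pixel_accuracy": float((pred == target).mean()),
    }

# A 20 x 20 image with a one-pixel-wide crack: 20 of 400 pixels (5%) are crack.
target = np.zeros((20, 20), dtype=int)
target[10, :] = 1

all_background = np.zeros_like(target)   # predicts no crack at all
partial = np.zeros_like(target)
partial[10, 5:] = 1                      # finds 15 of the 20 crack pixels

print(mask_metrics(all_background, target))
print(mask_metrics(partial, target))
```

A prediction that misses the crack entirely still reaches 95% pixel accuracy here, while its IoU and Dice coefficient are zero. This is why pixel accuracy is best read alongside the overlap metrics rather than on its own.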

4.5. Comparison of Model Performance before and after Transformer-Based Attention Mechanisms

- Impact on detection: Adding the attention mechanisms raised average detection precision from 88.7% to 94.3% and recall from 87.0% to 95.1%, resulting in more accurate localization of cracks. Detection IoU rose from 78.8% to 93.2%, indicating a closer alignment of detected crack regions with the ground truth.

- Classification gains: Classification precision, recall, and F1-score rose from 88.3%, 86.8%, and 87.5% to 94.8%, 94.2%, and 94.5%, respectively, reflecting the model’s improved ability to differentiate between crack types after applying attention mechanisms.

- Segmentation enhancement: The largest improvements were observed in the segmentation task, where IoU rose from 74.2% to 92.3% and the Dice Coefficient from 80.3% to 94.7%. This suggests that attention mechanisms allowed the model to better focus on the critical areas of the image, resulting in more accurate crack boundary delineation.

4.6. Time Efficiency Metrics

- Inference time increase: Adding the attention mechanisms increased the average inference time from 110 ms to 135 ms (+25 ms), which is expected given the added computational complexity.

- Efficiency trade-offs: While there is a slight increase in processing time, the significant improvements in detection, classification, and segmentation accuracy make this trade-off worthwhile, especially in applications where precision is critical.
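
The size of this trade-off can be put in relative terms with a short calculation on the reported average inference times (110 ms without and 135 ms with the transformer). The throughput figures assume images are processed one at a time with no batching, which is an assumption of this sketch rather than something stated in the experiments.

```python
t_without_ms = 110.0   # average inference time without attention
t_with_ms = 135.0      # average inference time with attention

overhead_ms = t_with_ms - t_without_ms
overhead_pct = 100.0 * overhead_ms / t_without_ms

# Sequential throughput in images per second (assumes no batching).
fps_without = 1000.0 / t_without_ms
fps_with = 1000.0 / t_with_ms

print(f"overhead: {overhead_ms:.0f} ms ({overhead_pct:.1f}%)")
print(f"throughput: {fps_without:.2f} -> {fps_with:.2f} images/s")
```

Under that assumption, the attention mechanisms add roughly 23% to the per-image latency, lowering sequential throughput from about 9.1 to about 7.4 images per second.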

4.7. Overall Model Performance Summary

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Ashraf, A.; Sophian, A.; Shafie, A.A.; Gunawan, T.S.; Ismail, N.N.; Bawono, A.A. Efficient Pavement Crack Detection and Classification Using Custom YOLOv7 Model. Indones. J. Electr. Eng. Inform. 2023, 11, 119–132.
2. Zhang, Y.; Chen, B.; Wang, J.; Li, J.; Sun, X. APLCNet: Automatic Pixel-Level Crack Detection Network Based on Instance Segmentation. IEEE Access 2020, 8, 199159–199170.
3. Mandal, V.; Uong, L.; Adu-Gyamfi, Y. Automated Road Crack Detection Using Deep Convolutional Neural Networks. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 5212–5215.
4. Ashraf, A.; Sophian, A.; Shafie, A.A.; Gunawan, T.S.; Ismail, N.N. Machine Learning-Based Pavement Crack Detection, Classification, and Characterization: A Review. Bull. Electr. Eng. Inform. 2023, 12, 3601–3619.
5. Hassan, S.A.; Han, S.H.; Shin, S.Y. Real-Time Road Cracks Detection Based on Improved Deep Convolutional Neural Network. In Proceedings of the 2020 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), London, ON, Canada, 30 August–2 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–4.
6. Jing, P.; Yu, H.; Hua, Z.; Xie, S.; Song, C. Road Crack Detection Using Deep Neural Network Based on Attention Mechanism and Residual Structure. IEEE Access 2023, 11, 919–929.
7. Liu, H.; Yang, C.; Li, A.; Huang, S.; Feng, X.; Ruan, Z.; Ge, Y. Deep Domain Adaptation for Pavement Crack Detection. IEEE Trans. Intell. Transp. Syst. 2022, 24, 1669–1681.
8. Ashraf, A.; Sophian, A.; Shafie, A.A.; Gunawan, T.S.; Ismail, N.N.; Bawono, A.A. Detection of Road Cracks Using Convolutional Neural Networks and Threshold Segmentation. J. Integr. Adv. Eng. 2022, 2, 123–134.
9. Gehri, N.; Mata-Falcón, J.; Kaufmann, W. Automated Crack Detection and Measurement Based on Digital Image Correlation. Constr. Build. Mater. 2020, 256, 119383.
10. Lins, R.G.; Givigi, S.N. Automatic Crack Detection and Measurement Based on Image Analysis. IEEE Trans. Instrum. Meas. 2016, 65, 583–590.
11. Fan, Z.; Wu, Y.; Lu, J.; Li, W. Automatic Pavement Crack Detection Based on Structured Prediction with the Convolutional Neural Network. arXiv 2018, arXiv:1802.02208.
12. Dung, C.V.; Anh, L.D. Autonomous Concrete Crack Detection Using Deep Fully Convolutional Neural Network. Autom. Constr. 2019, 99, 52–58.
13. THE LENS. Available online: https://www.lens.org/ (accessed on 8 September 2024).
14. Naik, S.K.; Murthy, C.A. Hue-Preserving Color Image Enhancement without Gamut Problem. IEEE Trans. Image Process. 2003, 12, 1591–1598.
15. Boubenna, H.; Lee, D. Image-Based Emotion Recognition Using Evolutionary Algorithms. Biol. Inspired Cogn. Archit. 2018, 24, 70–76.
16. Zhou, D.; Dong, W.; Shen, X. Image Zooming Using Directional Cubic Convolution Interpolation. IET Image Process. 2012, 6, 627–634.
17. Yang, X.; Li, H.; Yu, Y.; Luo, X.; Huang, T.; Yang, X. Automatic Pixel-Level Crack Detection and Measurement Using Fully Convolutional Network. Comput. Aided Civ. Infrastruct. Eng. 2018, 33, 1090–1109.
18. Zou, Q.; Zhang, Z.; Li, Q.; Qi, X.; Wang, Q.; Wang, S. DeepCrack: Learning Hierarchical Convolutional Features for Crack Detection. IEEE Trans. Image Process. 2019, 28, 1498–1512.
19. Jo, Y.; Ryu, S. Pothole Detection System Using a Black-Box Camera. Sensors 2015, 15, 29316–29331.
20. Decker, D.S. Best Practices for Crack Treatments in Asphalt Pavements. In Proceedings of the 6th Eurasphalt & Eurobitume Congress, Prague, Czech Republic, 1–3 June 2016; Czech Technical University in Prague: Prague, Czech Republic, 2016.
21. Ayenu-Prah, A.; Attoh-Okine, N. Evaluating Pavement Cracks with Bidimensional Empirical Mode Decomposition. EURASIP J. Adv. Signal Process. 2008, 2008, 861701.
22. Zhang, L.; Yang, F.; Zhang, Y.D.; Zhu, Y.J. Road Crack Detection Using Deep Convolutional Neural Network. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 3708–3712.
23. Maeda, H.; Sekimoto, Y.; Seto, T.; Kashiyama, T.; Omata, H. Road Damage Detection Using Deep Neural Networks with Images Captured Through a Smartphone. arXiv 2018, arXiv:1801.09454.
24. Chen, H.; Su, Y.; He, W. Automatic Crack Segmentation Using Deep High-Resolution Representation Learning. Appl. Opt. 2021, 60, 6080.
25. Feng, X.; Xiao, L.; Li, W.; Pei, L.; Sun, Z.; Ma, Z.; Shen, H.; Ju, H. Pavement Crack Detection and Segmentation Method Based on Improved Deep Learning Fusion Model. Math. Probl. Eng. 2020, 2020, 1–22.
26. Yang, F.; Zhang, L.; Yu, S.; Prokhorov, D.; Mei, X.; Ling, H. Feature Pyramid and Hierarchical Boosting Network for Pavement Crack Detection. IEEE Trans. Intell. Transp. Syst. 2020, 21, 1525–1535.
27. Wang, X.; Zhaozheng, H.; Li, N.; Qin, L. Pavement Crack Analysis by Referring to Historical Crack Data Based on Multi-Scale Localization. PLoS ONE 2020, 15, e0235171.
28. Li, L.; Liu, R.; Ali, R.; Chen, B.; Lin, H.; Li, Y.; Zhang, H. DFP-Net: A Crack Segmentation Method Based on a Feature Pyramid Network. Appl. Sci. 2024, 14, 651.
29. Ranyal, E.; Sadhu, A.; Jain, K. Enhancing Pavement Health Assessment: An Attention-Based Approach for Accurate Crack Detection, Measurement, and Mapping. Expert Syst. Appl. 2024, 247, 123314.
30. Ashraf, A.; Sophian, A.; Akramin Shafie, A.; Surya Gunawan, T.; Ismail, N.N.; Aryo Bawono, A. RCD-IIUM: A Comprehensive Malaysian Road Crack Dataset for Infrastructure Analysis. In Proceedings of the 2024 9th International Conference on Mechatronics Engineering (ICOM), Kuala Lumpur, Malaysia, 13–14 August 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 200–206.
31. Arya, D.; Maeda, H.; Ghosh, S.K.; Toshniwal, D.; Sekimoto, Y. RDD2020: An Annotated Image Dataset for Automatic Road Damage Detection Using Deep Learning. Data Brief 2021, 36, 107133.
32. Bawono, A.A. State of the Art: Functional Performance of Pavement. In Engineered Cementitious Composites for Electrified Roadway in Megacities: A Comprehensive Study on Functional Performance; Springer International Publishing: Berlin/Heidelberg, Germany, 2022; pp. 37–63.
33. Rosso, M.M.; Aloisio, A.; Randazzo, V.; Tanzi, L.; Cirrincione, G.; Marano, G.C. Comparative deep learning studies for indirect tunnel monitoring with and without Fourier pre-processing. Integr. Comput.-Aided Eng. 2024, 31, 213–232.
34. Zhu, G.; Liu, J.; Fan, Z.; Yuan, D.; Ma, P.; Wang, M.; Sheng, W.; Wang, K.C. A lightweight encoder–decoder network for automatic pavement crack detection. Comput.-Aided Civ. Infrastruct. Eng. 2024, 39, 1743–1765.

| Dataset Name | Total Number of Images | Number of Images Used | Citation |
|---|---|---|---|
| RCD-IIUM | 2210 | 2210 | [30] |
| RDD2020: An Image Dataset for Smartphone-based Road Damage Detection and Classification | 26,336 | 2000 | [31] |
| CRACK500 | 500 | 500 | [26] |

| Crack Type | Number of Images | Percentage of Total |
|---|---|---|
| Alligator Crack | 3200 | 32% |
| Longitudinal Crack | 3600 | 36% |
| Transverse Crack | 3200 | 32% |
| Total | 10,000 | 100% |

| Step | Description | Code Segment |
|---|---|---|
| 1 | Input Data: Load the input images from the RCD-IIUM dataset or publicly available datasets. Apply data augmentation techniques such as rotation, cropping, noise injection, and contrast adjustment to enhance dataset diversity. | `images = load_images(dataset_path); augmented_images = apply_augmentations(images)` |
| 2 | Feature Extraction (ResNet-50 Backbone): Pass the input images through a shared feature extraction backbone (ResNet-50) to capture multi-scale hierarchical features. | `features = ResNet50(images)` |
| 3 | Detection (RetinaNet): Utilize RetinaNet with anchor boxes to detect cracks. The detection branch uses focal loss to handle class imbalance. The output is a set of bounding boxes marking the cracks’ locations. | `detection_boxes = RetinaNet(features, anchor_boxes); loss_detection = focal_loss(detection_boxes, ground_truth_boxes)` |
| 4 | Classification (ResNet-34): Use the shared feature maps in the classification branch (ResNet-34) to classify detected cracks into specific types (alligator, longitudinal, transverse) using cross-entropy loss. | `crack_classes = ResNet34(features); loss_classification = cross_entropy_loss(crack_classes, ground_truth_classes)` |
| 5 | Segmentation (U-Net): Apply U-Net’s encoder–decoder structure on shared features to perform pixel-level segmentation for crack boundaries. Optimize segmentation using Dice loss to handle imbalanced datasets. | `crack_masks = UNet(features); loss_segmentation = dice_loss(crack_masks, ground_truth_masks)` |
| 6 | Attention Mechanisms: Use Vision Transformers to apply self-attention mechanisms after feature extraction. This splits the input into non-overlapping patches and prioritizes relevant image regions, focusing on cracks. | `patches = split_into_patches(features); attention_scores = self_attention(patches); enhanced_features = apply_attention(attention_scores)` |
| 7 | Joint Optimization: Combine the loss functions from detection, classification, and segmentation branches and perform joint optimization to ensure shared features are learned effectively for all tasks. | `total_loss = λ1 * loss_detection + λ2 * loss_classification + λ3 * loss_segmentation; optimizer.minimize(total_loss)` |
| 8 | Output: Generate the final outputs: bounding boxes for crack detection, crack type classification, and segmentation maps that precisely delineate the cracks’ boundaries. | `final_output = generate_output(detection_boxes, crack_classes, crack_masks)` |
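
The joint objective in step 7 is a weighted sum of the three branch losses. The sketch below shows that combination only; it is not the training loop. The segmentation and classification weights reuse the Dice and cross-entropy loss weights listed among the hyperparameters (0.7 and 0.3), while the detection weight of 1.0 and the three loss values are placeholders assumed for the example.

```python
def joint_loss(loss_detection, loss_classification, loss_segmentation,
               lambda_det=1.0, lambda_cls=0.3, lambda_seg=0.7):
    """Weighted sum of the detection, classification, and segmentation losses.
    lambda_det = 1.0 is an assumed value; the source does not state it."""
    return (lambda_det * loss_detection
            + lambda_cls * loss_classification
            + lambda_seg * loss_segmentation)

# Placeholder per-batch losses, for illustration only.
total_loss = joint_loss(loss_detection=0.40,
                        loss_classification=0.25,
                        loss_segmentation=0.30)
print(round(total_loss, 3))
```

Because all three terms are back-propagated through the shared backbone, the relative weights control how strongly each task shapes the shared features; raising the segmentation weight, for instance, biases the backbone toward fine boundary detail.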

| Component | Specification & Description |
|---|---|
| GPU | NVIDIA GeForce RTX 3090 (24 GB): Excellent balance of processing power and memory capacity, suitable for deep learning workloads involving large datasets. |
| CPU | Intel Core i9-11900K: A powerful CPU that complements the GPU by efficiently managing system operations and preprocessing tasks. |
| Memory | 64 GB DDR4 RAM: Adequate memory to handle large batch sizes and ensure smooth operation during model training. |
| Storage | 2 TB NVMe SSD: High-speed storage used to manage large datasets and ensure quick data retrieval during training. |
| Operating System | Windows 11 Pro: A modern OS providing robust support for development and deep learning frameworks. |
| Deep Learning Framework | PyTorch 1.9: Chosen for its flexibility and extensive support for custom model architecture development. |
| CUDA Version | 11.2: Optimized for NVIDIA GPUs, ensuring efficient execution of parallel computations. |
| Python Version | 3.8: Used for scripting and running the deep learning models, offering compatibility with the latest libraries and frameworks. |

| Hyperparameter | Value |
|---|---|
| Learning Rate | 0.001 |
| Batch Size | 16 |
| Optimizer | Adam |
| Epochs | 100 |
| Learning Rate Decay | 0.0001 |
| Weight Decay | 0.0005 |
| Focal Loss Gamma | 2.0 |
| Dice Loss Weight | 0.7 |
| Cross-Entropy Loss Weight | 0.3 |

| Metric | Detection | Classification | Segmentation |
|---|---|---|---|
| Precision (%) | ✓ | ✓ | ✓ |
| Recall (%) | ✓ | ✓ | ✓ |
| F1-Score (%) | ✓ | ✓ | |
| Intersection over Union (IoU) | ✓ | | ✓ |
| Accuracy (%) | ✓ | ✓ | |
| Dice Coefficient (%) | | | ✓ |
| Pixel Accuracy (%) | | | ✓ |
| Mean Average Precision (mAP) | ✓ | | |

| Metric | Alligator Cracks | Longitudinal Cracks | Transverse Cracks | Average (All Cracks) |
|---|---|---|---|---|
| Precision (%) | 88.4 | 90.2 | 87.6 | 88.7 |
| Recall (%) | 86.7 | 88.5 | 85.9 | 87.0 |
| F1-Score (%) | 87.5 | 89.3 | 86.7 | 87.8 |
| Intersection over Union (IoU) (%) | 78.8 | 80.5 | 77.1 | 78.8 |
| Mean Average Precision (mAP) | 0.88 | 0.90 | 0.87 | 0.88 |

| Metric | Alligator Cracks | Longitudinal Cracks | Transverse Cracks | Average (All Cracks) |
|---|---|---|---|---|
| Classification Accuracy (%) | 88.4 | 90.2 | 87.6 | 88.7 |
| Precision (%) | 87.9 | 89.8 | 87.1 | 88.3 |
| Recall (%) | 86.4 | 88.3 | 85.7 | 86.8 |
| F1-Score (%) | 87.1 | 89.0 | 86.4 | 87.5 |

| Metric | Alligator Cracks | Longitudinal Cracks | Transverse Cracks | Average (All Cracks) |
|---|---|---|---|---|
| Dice Coefficient (%) | 80.5 | 82.0 | 78.5 | 80.3 |
| Intersection over Union (IoU) (%) | 74.2 | 76.0 | 72.5 | 74.2 |
| Pixel Accuracy (%) | 87.0 | 88.2 | 85.1 | 86.8 |
| Precision (%) | 84.5 | 85.7 | 82.6 | 84.3 |
| Recall (%) | 83.0 | 84.3 | 81.2 | 82.8 |

| Metric | Without Transformer | With Transformer | Improvement (percentage points) |
|---|---|---|---|
| Precision (Detection) (%) | 88.7 | 94.3 | +5.6 |
| Recall (Detection) (%) | 87.0 | 95.1 | +8.1 |
| F1-Score (Detection) (%) | 87.8 | 94.4 | +6.6 |
| IoU (Detection) (%) | 78.8 | 93.2 | +14.4 |
| Precision (Classification) (%) | 88.3 | 94.8 | +6.5 |
| Recall (Classification) (%) | 86.8 | 94.2 | +7.4 |
| F1-Score (Classification) (%) | 87.5 | 94.5 | +7.0 |
| IoU (Segmentation) (%) | 74.2 | 92.3 | +18.1 |
| Dice Coefficient (Segmentation) (%) | 80.3 | 94.7 | +14.4 |
| Precision (Segmentation) (%) | 84.5 | 91.5 | +7.0 |
| Recall (Segmentation) (%) | 82.8 | 90.7 | +7.9 |

| Metric | Without Transformer | With Transformer | Difference (ms) |
|---|---|---|---|
| Average Inference Time (ms) | 110 | 135 | +25 |
| Detection Time (ms) | 45 | 58 | +13 |
| Classification Time (ms) | 35 | 43 | +8 |
| Segmentation Time (ms) | 30 | 34 | +4 |

| Task | Precision (%) | Recall (%) | F1-Score (%) | IoU (%) | Dice Coefficient (%) |
|---|---|---|---|---|---|
| Detection | 94.3 | 95.1 | 94.4 | 93.2 | - |
| Classification | 94.8 | 94.2 | 94.5 | - | - |
| Segmentation | 91.5 | 90.7 | - | 92.3 | 94.7 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ashraf, A.; Sophian, A.; Bawono, A.A. Crack Detection, Classification, and Segmentation on Road Pavement Material Using Multi-Scale Feature Aggregation and Transformer-Based Attention Mechanisms. Constr. Mater. 2024, 4, 655-675. https://doi.org/10.3390/constrmater4040036