Investigating Bounding Box, Landmark, and Segmentation Approaches for Automatic Human Barefoot Print Classification on Soil Substrates Using Deep Learning

Abstract

1. Introduction

2. Related Work

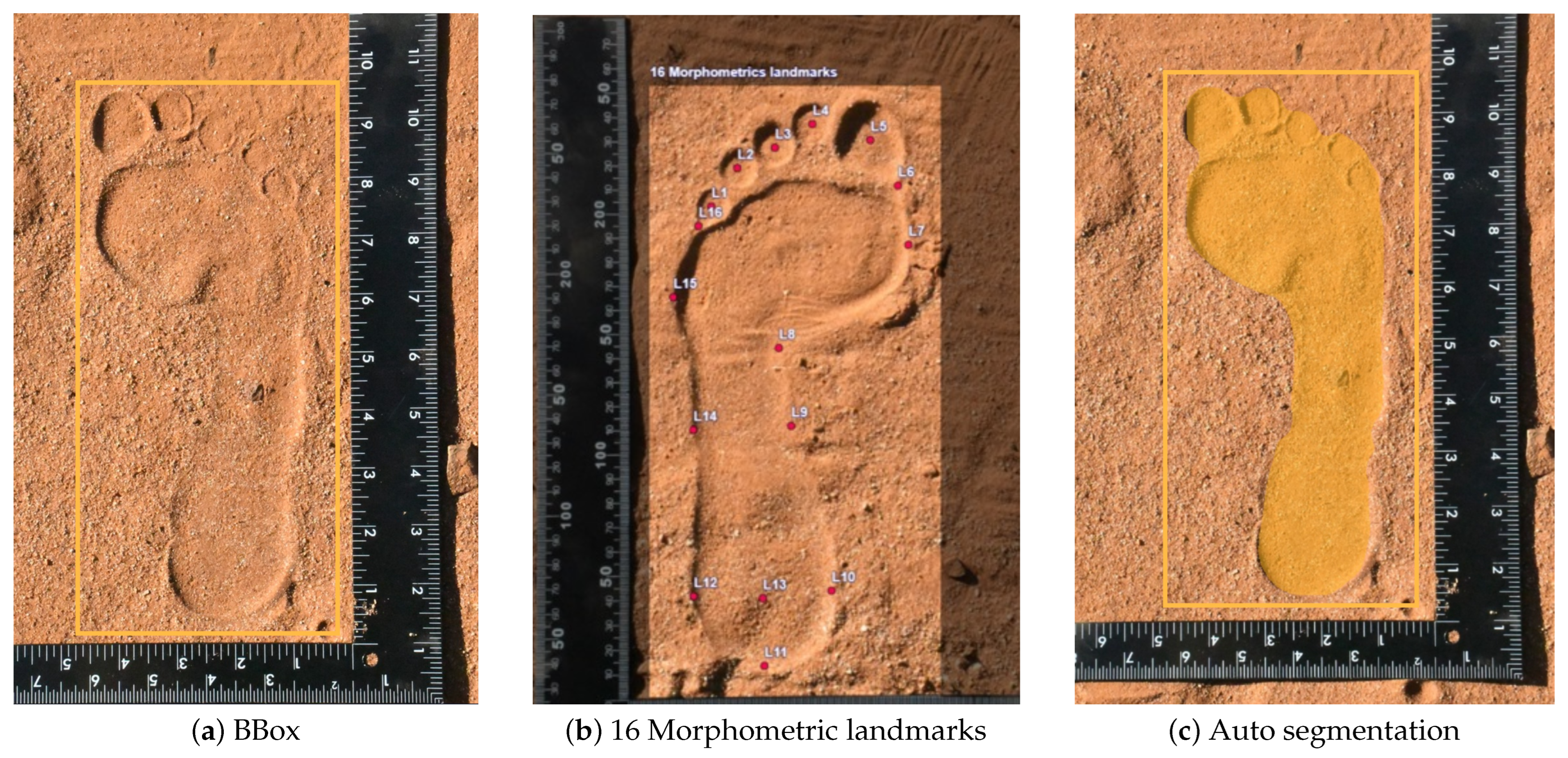

- This study is the first to investigate barefoot print classification on soil substrates through deep learning using three representations: a bounding box (BBox), anatomical landmarks, and automatically segmented outlines.

- We developed a method that automates the identification of 16 anatomical landmarks by integrating manual annotation with automatic identification using a DeepFIT network.

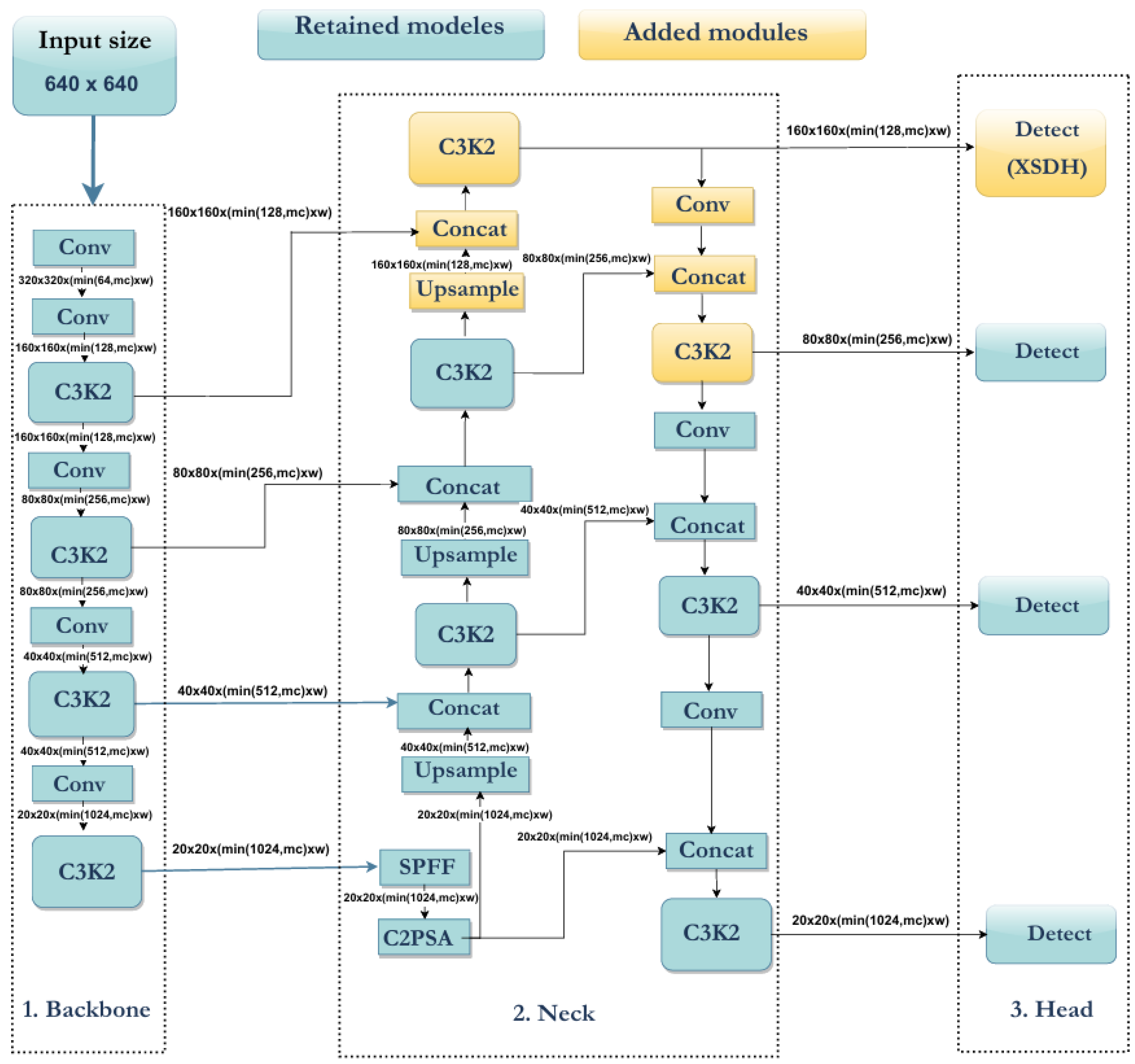

- We introduce a DeepFIT architecture with an extra small detection head (XSDH) that improves the model’s ability to capture the fine details needed for precise morphometric analysis and for differentiating between individuals.

- This paper presents a novel application of the Segment Anything model, utilizing a BBox as a prompt to automatically extract precise footprint outlines, ensuring consistency and reproducibility across large datasets.

- This is the only study that automatically identifies a set of footprints (left and right) on the soil substrate by correlating them based on similar morphometric features and labeling them as belonging to one individual.

3. Materials and Methods

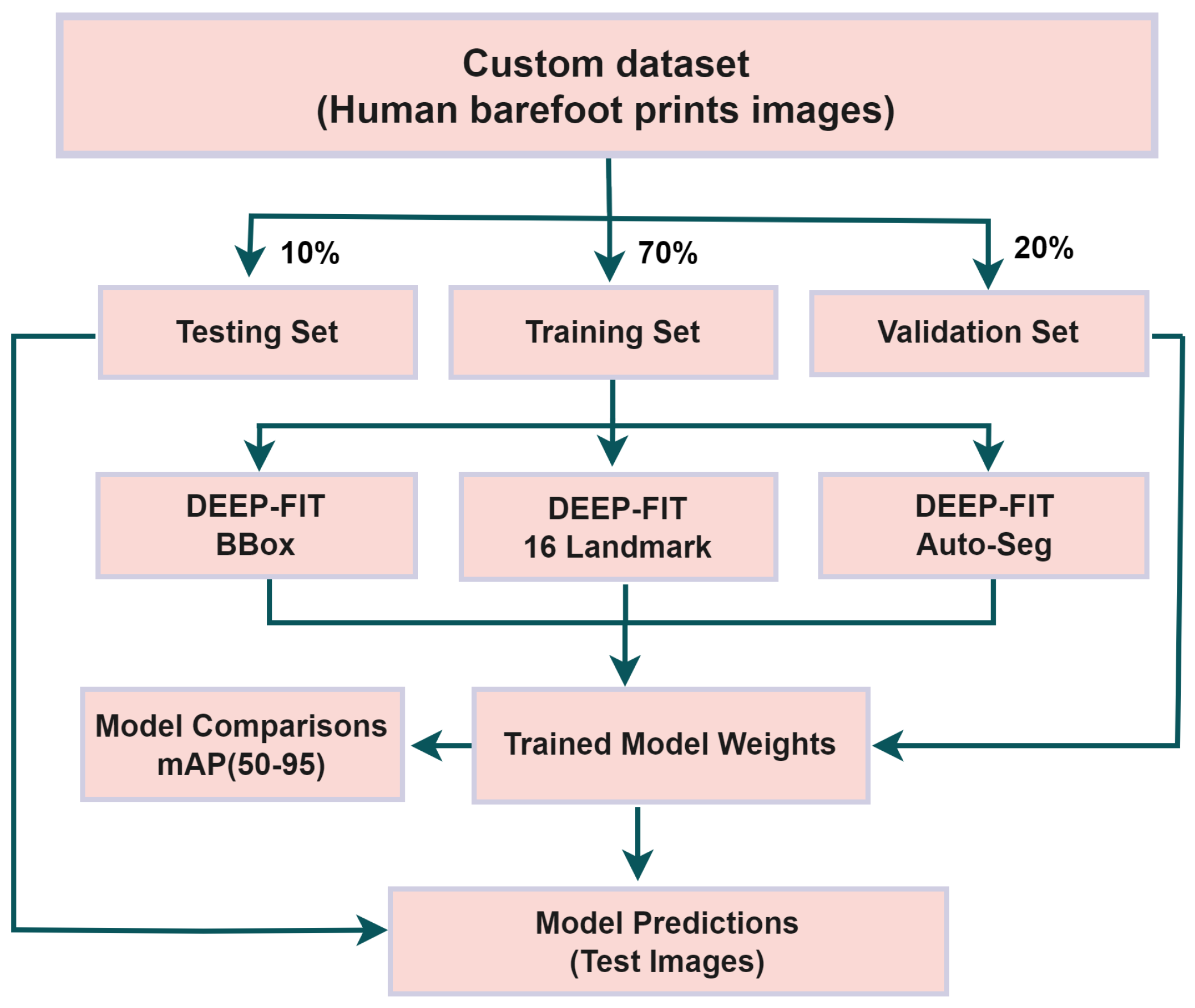

3.1. Methodology Description

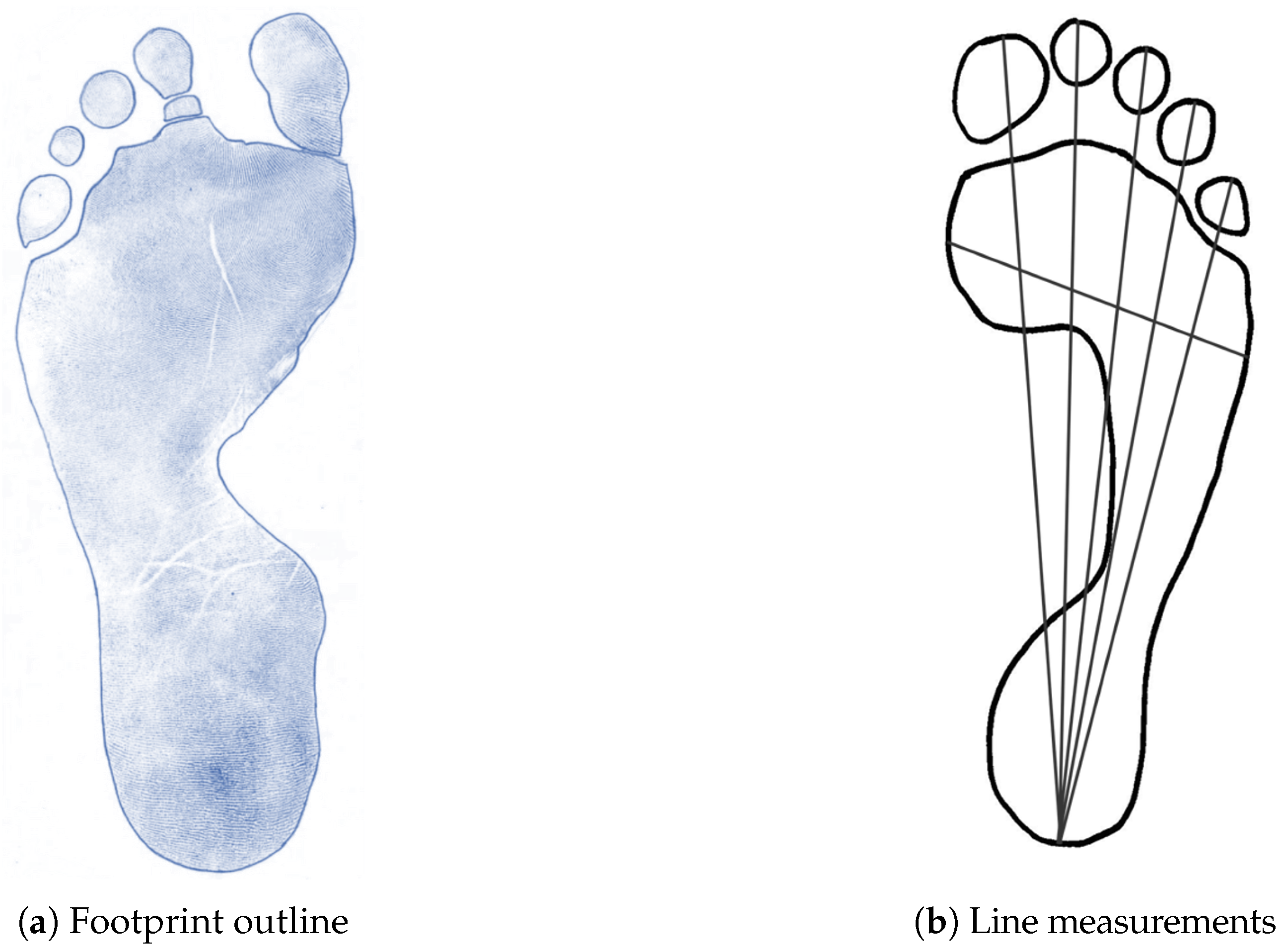

3.2. DeepFIT Morphometric Landmark Method

- $x_l$ and $y_l$ are the true coordinates of landmark $l$,

- $\hat{x}_l$ and $\hat{y}_l$ are the predicted coordinates,

- $p_l$ is the true probability of correct identification,

- $\hat{p}_l$ is the predicted probability.
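The definitions above describe a loss that compares true and predicted landmark coordinates together with true and predicted identification probabilities. The following is a minimal sketch of one such loss, assuming a mean squared coordinate error plus a binary cross-entropy term; the function name `landmark_loss`, the weights `coord_weight` and `prob_weight`, and the way the two terms are combined are illustrative assumptions, not the formulation used by DeepFIT.

```python
import numpy as np


def landmark_loss(xy_true, xy_pred, p_true, p_pred,
                  coord_weight=1.0, prob_weight=1.0, eps=1e-7):
    """Hypothetical landmark loss: coordinate error plus probability error.

    xy_true, xy_pred: arrays of shape (L, 2) with (x, y) per landmark.
    p_true, p_pred:   arrays of shape (L,) with probabilities in [0, 1].
    The weights are assumed values, not taken from the paper.
    """
    xy_true = np.asarray(xy_true, dtype=float)
    xy_pred = np.asarray(xy_pred, dtype=float)
    p_true = np.asarray(p_true, dtype=float)
    # Clip predictions so the logarithms below stay finite.
    p_pred = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0 - eps)

    # Mean squared Euclidean distance between true and predicted landmarks.
    coord_term = np.mean(np.sum((xy_true - xy_pred) ** 2, axis=1))

    # Binary cross-entropy between true and predicted probabilities.
    prob_term = -np.mean(
        p_true * np.log(p_pred) + (1.0 - p_true) * np.log(1.0 - p_pred)
    )
    return coord_weight * coord_term + prob_weight * prob_term
```

With 16 landmarks per footprint, `xy_true` and `xy_pred` would each have shape `(16, 2)`. In practice the coordinates are usually normalised by image size so that the two terms are on comparable scales.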

3.3. DeepFIT Auto-Segmentation Based Method

- $S$ represents the segmentation mask produced by the Segment Anything Model from image $I$,

- $C$ represents the set of coordinates that define the contour of the footprint, derived from segmentation $S$.
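To illustrate how a set of contour coordinates can be derived from a segmentation mask, the sketch below assumes the mask is already available as a 2D binary array (the call to the Segment Anything Model with a BBox prompt is not shown). The function name `mask_to_contour` and the 4-neighbour boundary rule are assumptions made for illustration; the contour extraction actually used in the study may differ.

```python
import numpy as np


def mask_to_contour(mask):
    """Return (x, y) coordinates of boundary pixels of a binary mask.

    A foreground pixel is treated as a boundary pixel when at least one of
    its four direct neighbours is background (or lies outside the image).
    """
    mask = np.asarray(mask, dtype=bool)
    # Pad with background so pixels on the image border count as boundary.
    padded = np.pad(mask, 1, constant_values=False)

    # A pixel is interior when all four neighbours are foreground.
    interior = (
        padded[:-2, 1:-1]    # neighbour above
        & padded[2:, 1:-1]   # neighbour below
        & padded[1:-1, :-2]  # neighbour to the left
        & padded[1:-1, 2:]   # neighbour to the right
    )
    boundary = mask & ~interior

    ys, xs = np.nonzero(boundary)
    return np.stack([xs, ys], axis=1)
```

The result is an unordered set of points. If an ordered outline is needed, for example to resample the contour at equal arc-length intervals, a contour-tracing routine would be required instead.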

3.4. DeepFIT Network Structure

3.5. Extra Small Detection Head (XSDH)

- $L_{\text{box}}$: GIoU loss for BBox regression

- $L_{\text{obj}}$: Binary cross-entropy for objectness prediction

- $L_{\text{cls}}$: Binary cross-entropy for class prediction
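As a worked illustration of the BBox regression term listed above, the sketch below computes the generalized IoU (GIoU) between two axis-aligned boxes and the corresponding loss, 1 − GIoU. The function names and the `(x1, y1, x2, y2)` box format are assumptions for this example, and both boxes are assumed to have positive area.

```python
def giou(box_a, box_b):
    """Generalized IoU of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Intersection area (zero when the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    iou = inter / union

    # Area of the smallest axis-aligned box enclosing both inputs.
    enclose_w = max(ax2, bx2) - min(ax1, bx1)
    enclose_h = max(ay2, by2) - min(ay1, by1)
    enclose = enclose_w * enclose_h

    # Penalise the part of the enclosing box not covered by the union.
    return iou - (enclose - union) / enclose


def giou_loss(box_pred, box_true):
    """GIoU loss: 0 for identical boxes, approaching 2 for distant boxes."""
    return 1.0 - giou(box_pred, box_true)
```

Unlike plain IoU, GIoU still gives a useful gradient signal when the predicted and true boxes do not overlap, because the enclosing-box penalty grows with the distance between them.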

4. Experimental Process

4.1. Dataset

4.2. Hyperparameters and Training Environment

4.3. Performance Metrics

5. Experimental Analysis

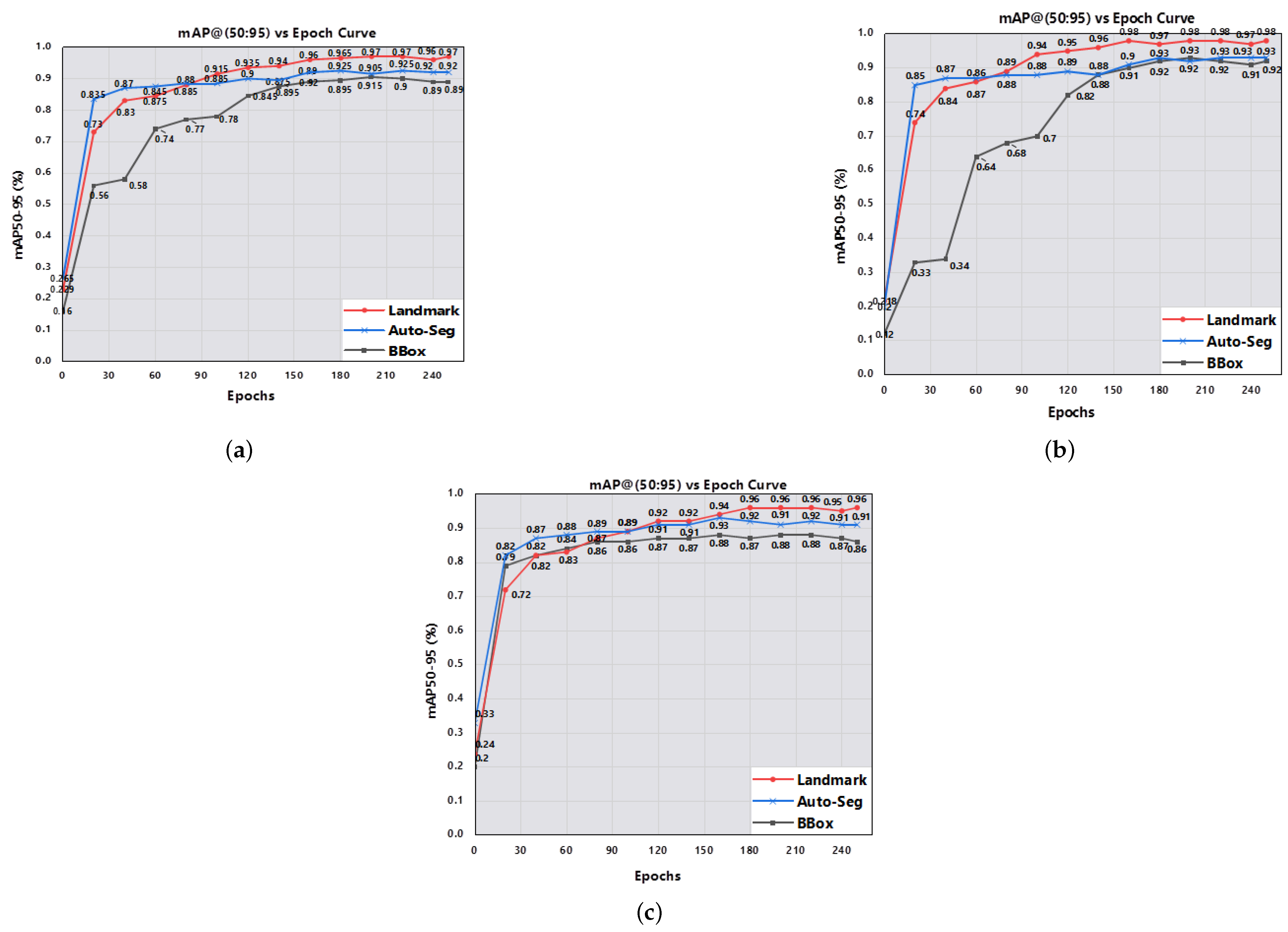

5.1. Model Training Analysis

5.2. Ablation Experimental Results

5.3. Statistical Analysis

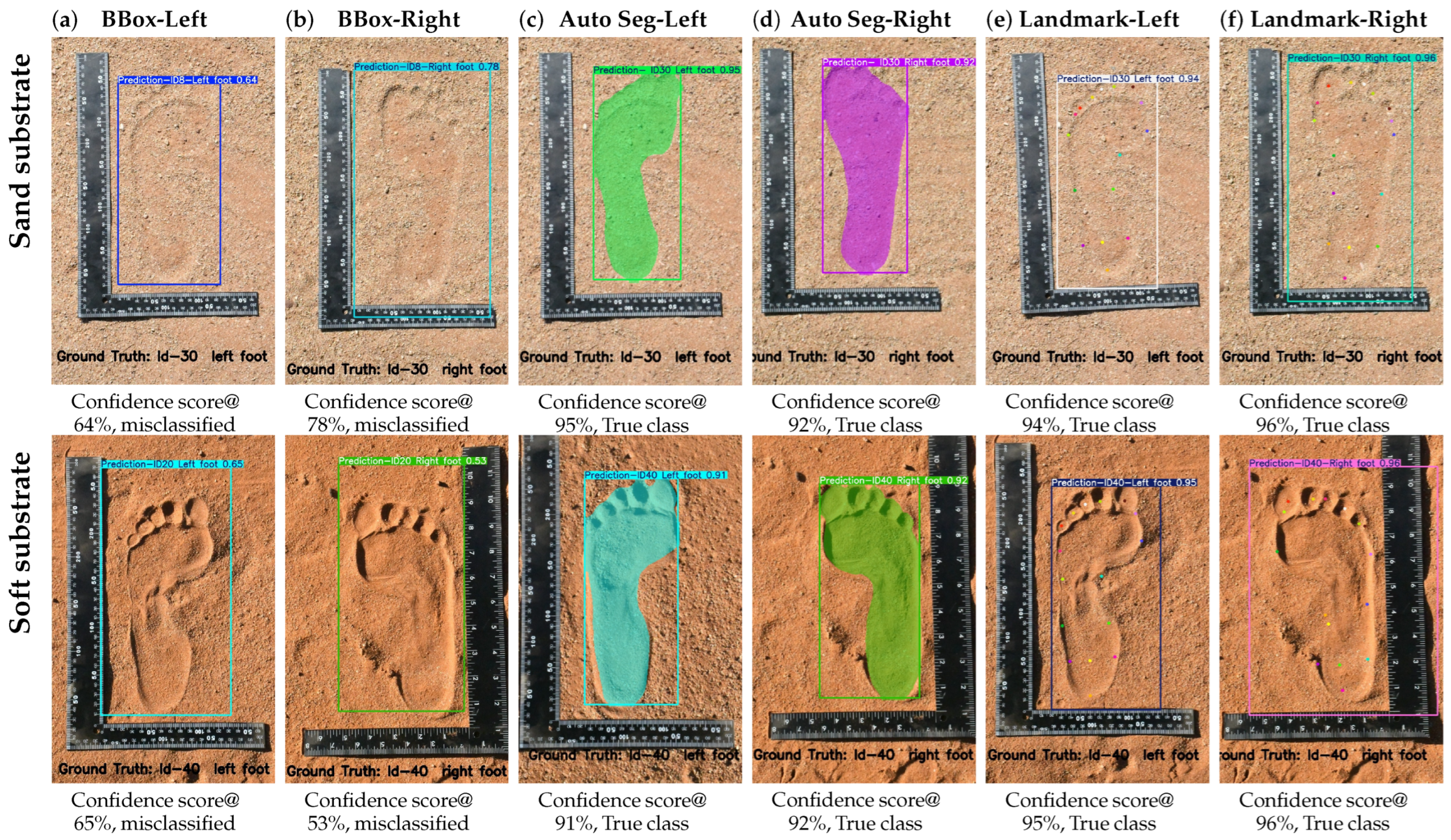

5.4. Sample Visualization of Experimental Results for Two Target Groups

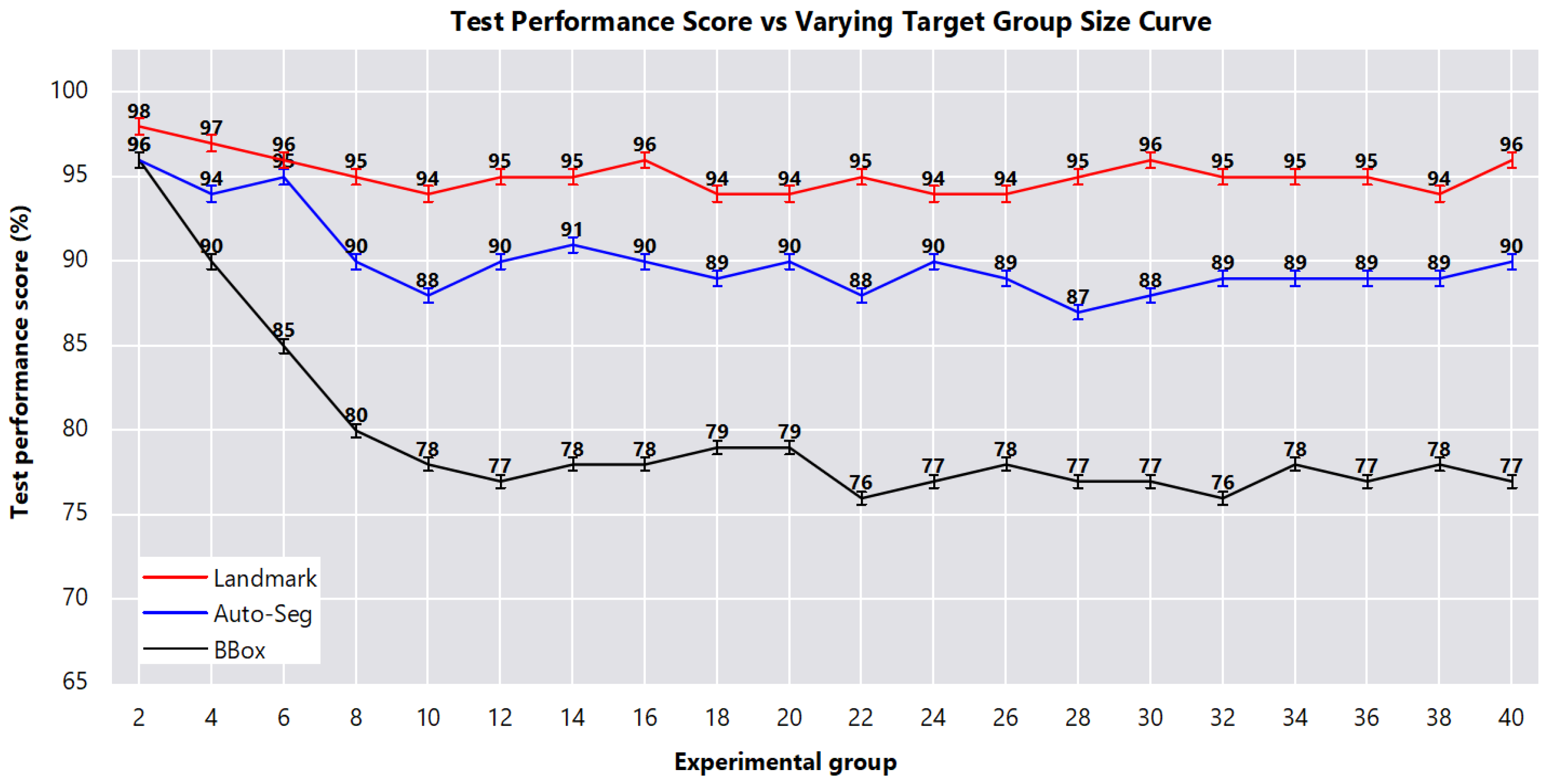

5.4.1. Performance Analysis on Small Target Groups (2–10 Individuals)

5.4.2. Performance Analysis on Large Target Groups (11–40 Individuals)

6. Discussion

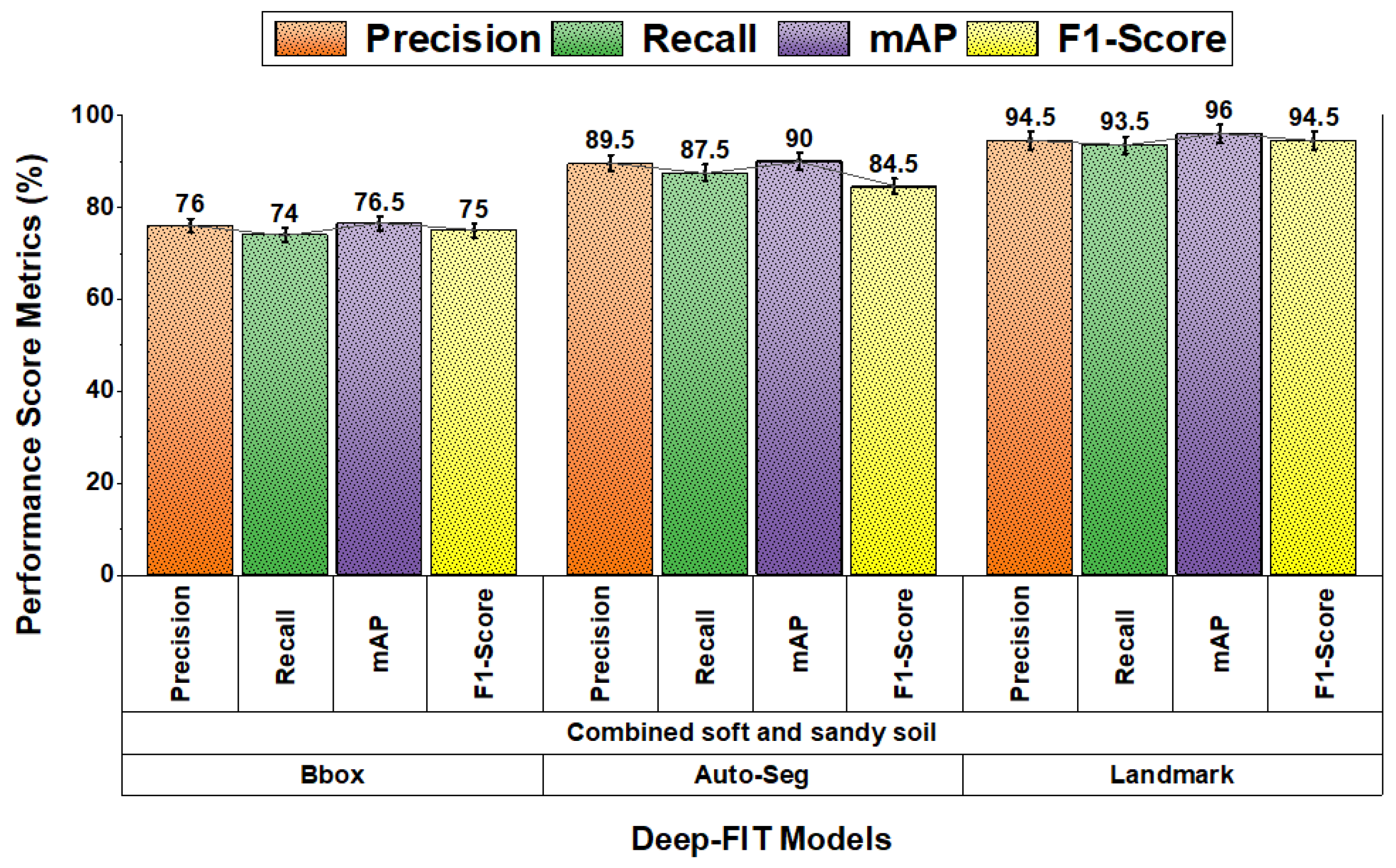

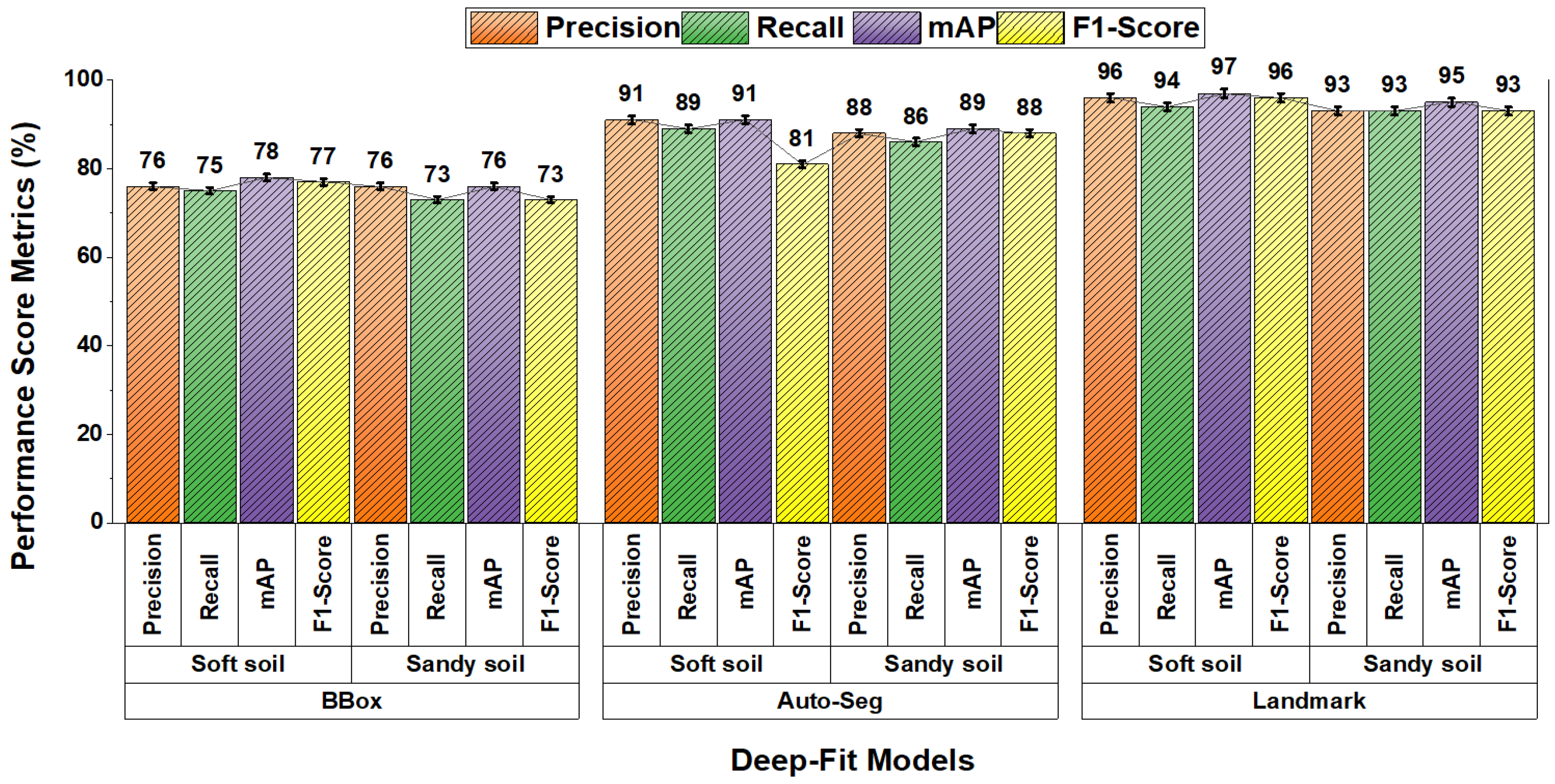

6.1. Performance Analysis of the DeepFIT BBox Baseline Method

6.2. Performance Analysis of the DeepFIT Auto-Seg Method

6.3. Performance Analysis of the DeepFIT Landmark Method

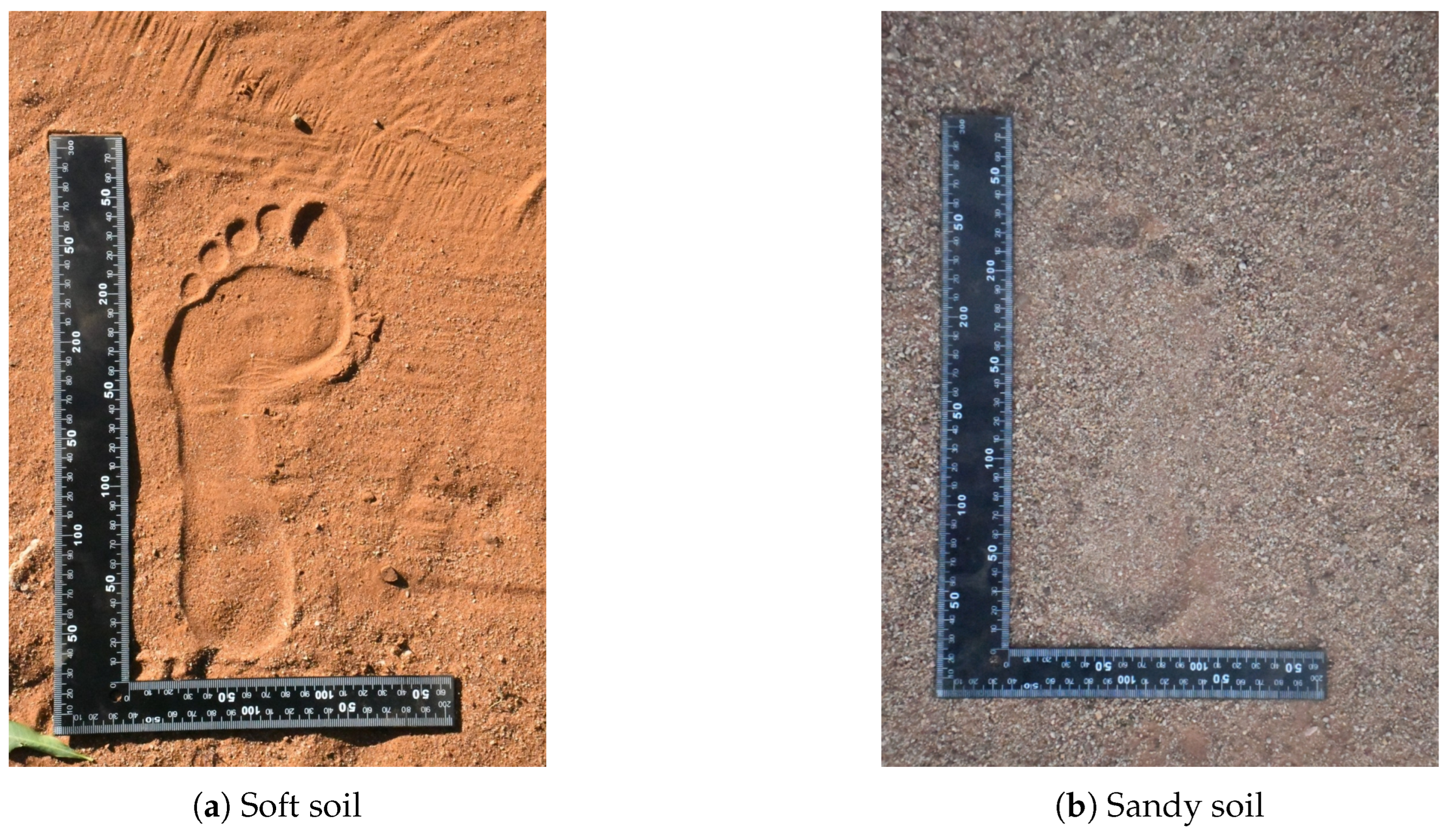

6.4. Effect of Soil Substrates and Other Factors on the Classification of Barefoot Prints

7. Study Limitations

8. Conclusions and Future Direction

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DeepFIT | Deep Learning Footprint Identification Technology |
| XSDH | Extra Small Detection Head |
| C3k2 | Cross-Stage Partial Structure with Convolution 3 and kernel size 2 |
| SPPF | Spatial Pyramid Pooling Fast |
| AutoSeg | Automatic Segmentation |
| CNN | Convolutional Neural Network |

References

1. Khokher, R.; Singh, R.C. Footprint-Based Personal Recognition Using Scanning Technique. Indian J. Sci. Technol. 2016, 9, 1–10.
2. Osisanwo, F.; Adetunmbi, A.O.; Álese, B.K. Barefoot Morphology: A Person Unique Feature for Forensic Identification. In Proceedings of the 9th International Conference on Internet Technology and Secured Transactions (ICITST), London, UK, 8–10 December 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 356–359.
3. Atamturk, D.; Duyar, I. Age-related factors in the relationship between foot measurements and living stature and body weight. J. Forensic Sci. 2008, 53, 1296–1300.
4. Abledu, J.K.; Abledu, G.K.; Offei, E.B.; Antwi, E.M. Determination of sex from footprint dimensions in a Ghanaian population. PLoS ONE 2015, 10, e0139891.
5. Reel, S.; Rouse, S.; Obe, W.V.; Doherty, P. Estimation of stature from static and dynamic footprints. Forensic Sci. Int. 2012, 219, 283-e1.
6. Khan, H.B.M.A.; Moorthy, T.N. Stature Estimation from the Anthropometric Measurements of Foot Outline in Adult Indigenous Melanau Ethnics of East Malaysia by Regression Analysis. Sri Lanka J. Forensic Med. Sci. Law 2014, 4, 2.
7. D’Août, K.; Meert, L.; Van Gheluwe, B.; De Clercq, D.; Aerts, P. Experimentally Generated Footprints in Sand: Analysis and Consequences for the Interpretation of Fossil and Forensic Footprints. Am. J. Phys. Anthropol. 2010, 141, 515–525.
8. Kelemework, A.; Abebayehu, A.T.; Amberbir, T.A.; Agedew, G.; Asmamaw, A.A.; Deribe, K.D.; Davey, G. “Why Should I Worry, Since I Have Healthy Feet?” A Qualitative Study Exploring Barriers to Use of Footwear among Rural Community Members in Northern Ethiopia. BMJ Open 2016, 6, e010354.
9. Main, M. African Adventurer’s Guide: Botswana; Penguin Random House South Africa: Cape Town, South Africa, 2012.
10. Palla, S.; Shivajirao, A. Anthropometric examination of footprints in South Indian population for sex estimation. Forensic Sci. Int. Rep. 2024, 9, 100354.
11. Mukhra, R.; Krishan, K.; Kanchan, T. Bare footprint metric analysis methods for comparison and identification in forensic examinations: A review of literature. J. Forensic Leg. Med. 2018, 58, 101–112.
12. Tucker, J.M.; King, C.; Lekivetz, R.; Murdoch, R.; Jewell, Z.C.; Alibhai, S.K. Development of a Non-Invasive Method for Species and Sex Identification of Rare Forest Carnivores Using Footprint Identification Technology. Ecol. Inform. 2024, 79, 102431.
13. Kistner, F.; Tulowietzki, J.; Slaney, L.; Alibhai, S.; Jewell, Z.; Ramosaj, B.; Pauly, M. Enhancing Endangered Species Monitoring by Lowering Data Entry Requirements with Imputation Techniques as a Preprocessing Step for the Footprint Identification Technology (FIT). Ecol. Inform. 2024, 82, 102676.
14. Krishna, S.T.; Kalluri, H.K. Deep Learning and Transfer Learning Approaches for Image Classification. Int. J. Recent Technol. Eng. 2019, 7, 427–432.
15. Montalbo, F.J.P. A Computer-Aided Diagnosis of Brain Tumors Using a Fine-Tuned YOLO-Based Model with Transfer Learning. KSII Trans. Internet Inf. Syst. 2020, 14, 4816–4834.
16. Situ, Z.; Teng, S.; Liao, X.; Chen, G.; Zhou, Q. Real-Time Sewer Defect Detection Based on YOLO Network, Transfer Learning, and Channel Pruning Algorithm. J. Civ. Struct. Health Monit. 2024, 14, 41–57.
17. Petso, T.; Jamisola, R.S., Jr.; Mpoeleng, D.; Bennitt, E.; Mmereki, W. Automatic Animal Identification from Drone Camera Based on Point Pattern Analysis of Herd Behaviour. Ecol. Inform. 2021, 66, 101485.
18. Yamashita, A.B. Forensic Barefoot Morphology Comparison. Can. J. Criminol. Crim. Justice 2007, 49, 647–656.
19. Burrow, J.G.; Kelly, H.D.; Francis, B.E. Forensic Podiatry—An Overview. J. Forensic Sci. Crim. Investig. 2017, 5, 1–8.
20. Vernon, W.; Reel, S.; Howsam, N. Examination and Interpretation of Barefoot Prints in Forensic Investigations. Res. Rep. Forensic Med. Sci. 2020, 1–14.
21. Kennedy, R.B.; Yamashita, A.B. Barefoot Morphology Comparisons: A Summary. J. Forensic Ident. 2007, 57, 383.
22. Gunn, N. Old and New Methods of Evaluating Footprint Impressions by a Forensic Podiatrist. Br. J. Podiatr. Med. Surg. 1991, 3, 8–11.
23. Robbins, L.M.; Gantt, R. Footprints: Collection, Analysis, and Interpretation; Charles C Thomas Publisher: Springfield, IL, USA, 1985.
24. Kennedy, R.B.; Pressman, I.S.; Chen, S.; Petersen, P.H.; Pressman, A.E. Statistical Analysis of Barefoot Impressions. J. Forensic Sci. 2003, 48, JFS2001337.
25. Smerecki, C.J.; Lovejoy, C.O. Identification via Pedal Morphology. Int. Crim. Police Rev. 1985, 40, 186–190.
26. Domjanic, J.; Fieder, M.; Seidler, H.; Mitteroecker, P. Geometric Morphometric Footprint Analysis of Young Women. J. Foot Ankle Res. 2013, 6, 27.
27. Reel, S.M.L. Development and Evaluation of a Valid and Reliable Footprint Measurement Approach in Forensic Identification. Ph.D. Thesis, University of Leeds, Leeds, UK, 2012.
28. Liau, A.P.B.-Y.; Jan, Y.-K.; Tsai, J.-Y.; Akhyar, F.; Lin, C.-Y.; Subiakto, R.B.R.; Lung, C.-W. Deep Learning in Left and Right Footprint Image Detection Based on Plantar Pressure. Appl. Sci. 2022, 12, 8885.
29. Chen, L.; Jin, L.; Li, Y.; Liu, M.; Liao, B.; Yi, C.; Sun, Z. Triple Generalized-Inverse Neural Network for Diagnosis of Flat Foot. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 8594–8599.
30. Keatsamarn, T.; Pintavirooj, C. Footprint Identification Using Deep Learning. In Proceedings of the 2018 11th Biomedical Engineering International Conference (BMEiCON), Chiang Mai, Thailand, 21–24 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–4.
31. Budka, M.; Bennett, M.R.; Reynolds, S.C.; Barefoot, S.; Reel, S.; Reidy, S.; Walker, J. Sexing White 2D Footprints Using Convolutional Neural Networks. PLoS ONE 2021, 16, e0255630.
32. BEng, Y.Y.; Tang, Y.; MEng, J.C.; MEng, X.Z. Score-based likelihood ratios for barefootprint evidence using deep learning features. J. Forensic Sci. 2025, 70, 98–116.
33. Shen, Y.; Jiang, X.; Zhao, Y.; Xie, W. Barefoot Footprint Detection Algorithm Based on YOLOv8-StarNet. Sensors 2025, 25, 4578.
34. İbrahimoğlu, N.; Osmani, A.; Ghaffari, A.; Günay, F.B.; Çavdar, T.; Yıldız, F. FootprintNet: A Siamese network method for biometric identification using footprints. J. Supercomput. 2025, 81, 714.
35. Jin, Y. Algorithm of personal recognition based on multi-scale features from barefoot footprint image. Forensic Sci. Technol. 2022, 47, 587–592.
36. Cunningham, P.; Cord, M.; Delany, S.J. Supervised Learning. In Machine Learning Techniques for Multimedia: Case Studies on Organization and Retrieval; Springer: Berlin/Heidelberg, Germany, 2008; pp. 21–49.
37. Vandaele, R.; Aceto, J.; Muller, M.; Péronnet, F.; Debat, V.; Wang, C.W.; Huang, C.T.; Jodogne, S.; Martinive, P.; Geurts, P.; et al. Landmark Detection in 2D Bioimages for Geometric Morphometrics: A Multi-Resolution Approach. Sci. Rep. 2018, 8, 538.
38. Jiang, C.; Mu, X.; Zhang, B.; Xie, M.; Liang, C. YOLO-Based Missile Pose Estimation under Uncalibrated Conditions. IEEE Access 2024, 12, 112462–112469.
39. Chen, D.; Chen, Y.; Ma, J.; Cheng, C.; Xi, X.; Zhu, R.; Cui, Z. An Ensemble Deep Neural Network for Footprint Image Retrieval Based on Transfer Learning. J. Sens. 2021, 2021, 6631029.
40. Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186.
41. Zou, X.; Yang, J.; Zhang, H.; Li, F.; Li, L.; Wang, J.; Wang, L.; Gao, J.; Lee, Y.J. Segment Everything Everywhere All at Once. Adv. Neural Inf. Process. Syst. 2023, 36, 19769–19782.
42. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 4015–4026.
43. Moorthy, T.N.; Sulaiman, S.F.B. Individualizing Characteristics of Footprints in Malaysian Malays for Person Identification from a Forensic Perspective. Egypt. J. Forensic Sci. 2015, 5, 13–22.
44. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.
45. Ali, M.L.; Zhang, Z. The YOLO Framework: A Comprehensive Review of Evolution, Applications, and Benchmarks in Object Detection. Computers 2024, 13, 336.
46. Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.
47. Gong, S.; Yu, W.; Chen, J.; Qi, D.; Lu, J. SN-YOLO: A Super Neck Algorithm Based on YOLO11 for Traffic Object Detection. In Proceedings of the International Conference on Computer Vision, Robotics, and Automation Engineering (CRAE 2025), Shanghai, China, 27–29 June 2025; SPIE: Bellingham, WA, USA, 2025; Volume 13790, pp. 95–101.
48. Misbah, M.; Khan, M.U.; Kaleem, Z.; Muqaibel, A.; Alam, M.Z.; Liu, R.; Yuen, C. MSF-GhostNet: Computationally-Efficient YOLO for Detecting Drones in Low-Light Conditions. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 18, 3840–3851.
49. Liu, C.; Tao, Y.; Liang, J.; Li, K.; Chen, Y. Object Detection Based on YOLO Network. In Proceedings of the 2018 IEEE 4th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 14–16 December 2018; pp. 799–803.
50. Kukartsev, V.V.; Ageev, R.A.; Borodulin, A.S.; Gantimurov, A.P.; Kleshko, I.I. Deep Learning for Object Detection in Images: Development and Evaluation of the YOLOv8 Model Using Ultralytics and Roboflow Libraries. In Proceedings of the Computer Science On-Line Conference; Springer Nature: Cham, Switzerland, 2024; pp. 629–637.
51. Alhussainan, N.F.; Ben Youssef, B.; Ben Ismail, M.M. A Deep Learning Approach for Brain Tumor Firmness Detection Based on Five Different YOLO Versions: YOLOv3–YOLOv7. Computation 2024, 12, 44.
52. Ryu, S.E.; Chung, K.Y. Detection Model of Occluded Object Based on YOLO Using Hard-Example Mining and Augmentation Policy Optimization. Appl. Sci. 2021, 11, 7093.
53. DiMaggio, J.A.; Vernon, W. Forensic Podiatry Principles and Human Identification. In Forensic Podiatry: Principles and Methods; Humana Press: Totowa, NJ, USA, 2011; pp. 13–24.
54. Chan, S.; Zheng, J.; Wang, L.; Wang, T.; Zhou, X.; Xu, Y.; Fang, K. Rotating Object Detection in Remote-Sensing Environment. Soft Comput. 2022, 26, 8037–8045.
55. Nakajima, K.; Mizukami, Y.; Tanaka, K.; Tamura, T. Footprint-Based Personal Recognition. IEEE Trans. Biomed. Eng. 2000, 47, 1534–1537.
56. Liu, H.; Ding, N.; Lin, S.; Lv, H.; Liu, X. Research on the Measurement Method of Barefoot Footprint Similarity. In Proceedings of the International Conference on Statistics, Data Science, and Computational Intelligence (CSDSCI 2022), Qingdao, China, 19–21 August 2022; SPIE: Bellingham, WA, USA, 2023; Volume 12510, pp. 112–118.
57. Howsam, N.; Bridgen, A. A comparative study of standing fleshed foot and walking and jumping barefootprint measurements. Sci. Justice 2018, 58, 346–354.
58. Bennett, M.R.; Morse, S.A. Human Footprints: Fossilised Locomotion? Springer: Dordrecht, The Netherlands, 2014; Volume 216.
59. Wiseman, A.L.A.; De Groote, I. One Size Fits All? Stature Estimation from Footprints and the Effect of Substrate and Speed on Footprint Creation. Anat. Rec. 2022, 305, 1692–1700.
60. Marty, D.; Strasser, A.; Meyer, C.A. Formation and Taphonomy of Human Footprints in Microbial Mats of Present-Day Tidal-Flat Environments: Implications for the Study of Fossil Footprints. Ichnos 2009, 16, 127–142.
61. Bates, K.T.; Savage, R.; Pataky, T.C.; Morse, S.A.; Webster, E.; Falkingham, P.L.; Ren, L.; Qian, Z.; Collins, D.; Bennett, M.R.; et al. Does footprint depth correlate with foot motion and pressure? J. R. Soc. Interface 2013, 10, 20130009.
62. Hatala, K.G.; Wunderlich, R.E.; Dingwall, H.L.; Richmond, B.G. Interpreting Locomotor Biomechanics from the Morphology of Human Footprints. J. Hum. Evol. 2016, 90, 38–48.

| Study | Method | Medium | Features Used | Dataset Size | Accuracy | Remarks |
|---|---|---|---|---|---|---|
| [28] | YOLOv4 | Pressure scanner | Plantar pressure | 974 images | 99% | Barefoot pressure images collected from cerebral palsy patients |
| [29] | TGINN | Smart insole | Flat foot | 835 images | 82% | Flat foot dataset collected using a smart insole |
| [30] | CNN | Optical sensor | Foot pressure | 13 indiv. | 92.69% | Footprint images from an optical sensor system, with information generated by foot pressure |
| [31] | CNN | Inkless pad | Friction ridges | 2800 images | 90% | Standing footprints collected using an inkless pad system for sex classification |
| [32] | Likelihood ratios | 2D inkless scan system | Grayscale barefoot prints | 3000 indiv. & 54,118 footprints | 98.4% | Barefoot prints collected using a 2D inkless scan system as evidence for court use |
| [33] | YOLOv8-StarNet | White sheet | RGB footprints | 300 indiv. & 2400 images | 73% | Barefoot prints collected using a digital camera for recognition |
| [34] | FootprintNet | Capture of inked prints | Intensity spectral variation | 220 indiv. & 2200 images | 99% | Barefoot prints captured using a scanner for biometric recognition |
| [35] | ResNet50 | Ink and scanned images | Shape contour features | 10,000 indiv. & 16 images each | 96.2% | Barefoot prints inked and scanned for personal recognition |
| Our study | DeepFIT auto segmentation | Soft and sandy soil substrate | Segmented outlines | 40 indiv. & 22,000 images | 90% | Barefoot print images collected on soil substrate using a camera for identification |
| Our study | DeepFIT landmark | Soft and sandy soil substrate | 16 landmark points | 40 indiv. & 22,000 images | 96% | Barefoot print images collected on soil substrate using a camera for identification |

| Landmark | Description of Landmark |
|---|---|
| L1 | Centre of 1st toe |
| L2 | Centre of 2nd toe |
| L3 | Centre of 3rd toe |
| L4 | Centre of 4th toe |
| L5 | Centre of 5th toe |
| L6 | Head of 1st metatarsal |
| L7 | Right ball width landmark |
| L8 | Right instep curvature landmark |
| L9 | Right instep width landmark |
| L10 | Right heel width landmark |
| L11 | The most backward and prominent point of the heel |
| L12 | Left heel width landmark |
| L13 | Centre of the heel landmark |
| L14 | Left instep width landmark |
| L15 | Left ball width landmark |
| L16 | Head of 5th metatarsal |
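As an illustration of how the landmarks in the table above could feed a morphometric analysis, the sketch below computes three widths as Euclidean distances between the paired left and right landmarks. The pairings (L7 with L15 for ball width, L9 with L14 for instep width, L10 with L12 for heel width) are inferred from the landmark names only, and the function name `morphometric_widths` is hypothetical; the measurements actually used in the study are not specified here.

```python
import math


def morphometric_widths(landmarks):
    """Compute width measurements from a dict of landmark coordinates.

    landmarks maps labels such as "L7" to (x, y) tuples in a common unit
    (pixels or millimetres). The landmark pairings are assumptions based
    on the landmark descriptions.
    """
    def dist(a, b):
        (x1, y1), (x2, y2) = landmarks[a], landmarks[b]
        return math.hypot(x2 - x1, y2 - y1)

    return {
        "ball_width": dist("L7", "L15"),
        "instep_width": dist("L9", "L14"),
        "heel_width": dist("L10", "L12"),
    }
```

Distances in pixels depend on camera height and image resolution, so ratios between measurements (for example heel width divided by ball width) are often more comparable across images than raw values.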

| Hyperparameter | Value |
|---|---|
| Learning rate | |
| Batch size | 16 |
| Momentum | 0.99 |
| Weight decay | 0.0005 |
| Epochs | 240 |

| Experiment ID | Model Variant | BBox | Landmarks | Segmentation | XSDH | mAP50-95 (%) |
|---|---|---|---|---|---|---|
| 1 | Baseline BBox | ✓ | – | – | – | 76.3 |
| 2 | Baseline BBox + XSDH | ✓ | – | – | ✓ | 77 |
| 3 | Baseline BBox + Segmentation | ✓ | – | ✓ | – | 88 |
| 4 | Baseline BBox + Segmentation & XSDH | ✓ | – | ✓ | ✓ | 90 |
| 5 | Baseline BBox + Landmark | ✓ | ✓ | – | – | 89 |
| 6 | Baseline BBox + Landmark & XSDH | ✓ | ✓ | – | ✓ | 96 |

| Substrate | Method | Precision (%) | Recall (%) | mAP (%) | F1-Score (%) |
|---|---|---|---|---|---|
| Soft soil | BBox | 76 | 75 | 78 | 77 |
| Soft soil | Auto-Seg | 91 | 89 | 91 | 81 |
| Soft soil | Landmark | 96 | 94 | 97 | 96 |
| Sandy soil | BBox | 76 | 73 | 76 | 73 |
| Sandy soil | Auto-Seg | 88 | 86 | 89 | 88 |
| Sandy soil | Landmark | 93 | 93 | 95 | 93 |

| Method | Mean AP | Std Dev | Comparison | t-Value | p-Value | Significant |
|---|---|---|---|---|---|---|
| BBox | 76.8 | 5.41 | BBox vs. Auto-Seg | −16.94 | | Yes |
| | | | BBox vs. Landmark | −14.04 | | Yes |
| Auto-Seg | 90.1 | 2.29 | Auto-Seg vs. Landmark | −9.18 | | Yes |
| Landmark | 96.3 | 1.49 | (already shown above) | | | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mmereki, W.; Jamisola, R.S., Jr.; Jewell, Z.C.; Petso, T.; Matsebe, O.; Alibhai, S.K. Investigating Bounding Box, Landmark, and Segmentation Approaches for Automatic Human Barefoot Print Classification on Soil Substrates Using Deep Learning. Forensic Sci. 2025, 5, 56. https://doi.org/10.3390/forensicsci5040056
