1. Introduction

Spoken language is a fundamental medium of communication, but it excludes individuals with hearing impairments who rely on sign language. For deaf and hard-of-hearing people, sign languages are the primary means of communication. These languages combine hand signs, facial expressions, and body movements. In Morocco, many deaf people communicate in Moroccan Sign Language (MSL). Because most hearing people do not know it, deaf people face barriers to education, healthcare, and participation in society [1].

To address these barriers, researchers are developing technologies that translate sign language gestures into written or spoken forms. These solutions aim to improve communication and promote social inclusion for deaf individuals [2,3]. Thanks to advances in machine learning and embedded systems, it is now feasible to create recognition tools that function efficiently on compact, low-power devices [4,5].

One effective method involves sensor gloves equipped with motion detectors such as accelerometers and gyroscopes. These gloves capture precise hand movements, which are then processed by artificial neural networks to accurately identify gestures. The recognized signs can be converted into text or voice outputs and delivered through user-friendly platforms like smartphone applications [6,7,8].

While many sign language recognition systems focus on languages like Arabic Sign Language or American Sign Language, the lessons learned from those efforts are valuable for developing MSL-specific tools. Moroccan Sign Language is unique and plays a crucial role in the cultural identity of the deaf community but remains largely unknown to the wider population, which sustains communication barriers and social isolation [1].

Accordingly, we propose a glove-based embedded system tailored for MSL gesture recognition. Tailoring solutions to the local language and cultural context ensures better adoption and effectiveness compared to adapting systems designed for other sign languages [3].

The solution proposed here translates MSL alphabet gestures into text using a glove fitted with MPU6050 sensors, which combine accelerometers and gyroscopes to measure hand movement dynamics. A Raspberry Pi Pico microcontroller, selected for its compactness, low power needs, and sufficient computing power, processes the sensor data.

Through the acquisition of motion data (see Figure 1), a specialized dataset is constructed to train an artificial neural network capable of recognizing individual letters in Moroccan Sign Language (MSL) [5]. Upon detection of a gesture, the corresponding character is instantly identified and processed, enabling real-time interpretation. Furthermore, an integrated audio sensor captures ambient sounds, enhancing the interaction and paving the way for potential bidirectional communication in future developments.

The remainder of this paper is organized as follows.

Section 2 presents related work, highlighting previous research on sign language recognition systems that use different sensing technologies and machine learning models, and positions the current contribution within this context.

Section 3 describes the system design and methodology: the hardware architecture, in which the Raspberry Pi Pico is interfaced with the MPU6050 motion sensors, the sensor placement and motion data acquisition, the embedded machine learning implementation, and the construction of the dataset.

Section 4 covers model training, the evaluation metrics, the experimental setup, and the performance evaluation.

Section 5 presents and discusses the results, including recognition accuracy, limitations and challenges, real-time application, and a comparative analysis.

Section 6 outlines future work and improvements.

Section 7 concludes the paper by summarizing the primary findings, identifying current system limitations, and proposing future improvements aimed at strengthening communication support for the deaf and hard-of-hearing community in Morocco.

2. Related Work

Recent studies have explored smart glove-based systems for sign language recognition using various sensing technologies and machine learning models. For instance, Wong et al. [9] developed a multi-feature capacitive sensor system achieving high gesture classification accuracy using traditional ML algorithms. Ravan et al. [10] proposed an inductive sensor-based glove integrated with neural networks for dynamic gesture recognition. Yudhana et al. [11] employed both flex and IMU sensors for real-time translation of sign language into text. However, few studies have focused specifically on Moroccan Sign Language (MSL), and most existing works rely on vision-based methods [4,5,6], which require high computational resources and are unsuitable for low-cost, portable devices. Our work distinguishes itself by developing an embedded, low-power glove-based system for MSL recognition, integrating IMU sensors, real-time processing on a Raspberry Pi Pico, and a custom dataset exceeding 7000 samples.

3. System Design and Methodology

3.1. Hardware Architecture

The proposed system includes a smart glove equipped with five MPU6050 inertial sensors, each carefully positioned on a finger to capture precise motion data such as acceleration and angular velocity. Because the sensors share the same I2C address, a TCA9548A I2C multiplexer is incorporated, allowing the central controller to communicate sequentially with each sensor without conflict [12].
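The sequential polling described above can be sketched in a few lines. This is only an illustration, not the authors' firmware: it assumes the multiplexer sits at its default address 0x70, that each MPU6050 answers at 0x68 behind channels 0 to 4, and that the bus object offers `writeto` and `readfrom_mem` methods in the style of MicroPython's `machine.I2C`. The `FakeI2C` class is a hypothetical stand-in so the sketch runs on a desktop interpreter.

```python
import struct

TCA9548A_ADDR = 0x70   # default multiplexer address (assumed wiring)
MPU6050_ADDR = 0x68    # default MPU6050 address, shared by all five sensors
ACCEL_XOUT_H = 0x3B    # first of 14 data registers: accel, temperature, gyro


def channel_mask(channel):
    """Control byte that enables exactly one of the 8 multiplexer channels."""
    if not 0 <= channel <= 7:
        raise ValueError("TCA9548A has channels 0..7")
    return 1 << channel


def read_sensor(i2c, channel):
    """Select one channel, then read 6 motion values from the sensor behind it."""
    i2c.writeto(TCA9548A_ADDR, bytes([channel_mask(channel)]))
    raw = i2c.readfrom_mem(MPU6050_ADDR, ACCEL_XOUT_H, 14)
    # Seven big-endian signed 16-bit words; the temperature word is discarded.
    ax, ay, az, _temp, gx, gy, gz = struct.unpack(">7h", raw)
    return [ax, ay, az, gx, gy, gz]


def read_glove(i2c, n_sensors=5):
    """Poll the sensors one after another and build the 30-value feature vector."""
    features = []
    for channel in range(n_sensors):
        features.extend(read_sensor(i2c, channel))
    return features


class FakeI2C:
    """Hypothetical stand-in for a real bus; returns the channel index as data."""

    def __init__(self):
        self.selected = 0

    def writeto(self, addr, buf):
        if addr == TCA9548A_ADDR:
            self.selected = buf[0]

    def readfrom_mem(self, addr, memaddr, nbytes):
        ch = self.selected.bit_length() - 1
        return struct.pack(">7h", ch, ch, ch, 0, -ch, -ch, -ch)


vector = read_glove(FakeI2C())
print(len(vector))
```

On real hardware the fake bus would be replaced by an actual I2C object, and each sensor would first need to be woken from sleep mode before its data registers are read.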

At the heart of the system is the Raspberry Pi Pico microcontroller [13], which manages data collection, filtering, and real-time classification of gestures via an embedded Artificial Neural Network (ANN). This ANN, trained in advance on a specialized dataset of Moroccan Sign Language (MSL) gestures, interprets the sensor data to identify the corresponding Arabic alphabet character.

When a gesture is recognized, the detected letter is immediately transmitted to a voice output module. This module utilizes either a digital-to-analog converter (DAC) or pre-recorded audio clips to produce the spoken equivalent of the recognized letter in Arabic, offering auditory feedback for hearing users.
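As a rough illustration of this hand-off, the sketch below maps the network's output probabilities to a letter and to the name of a pre-recorded clip. Several details are assumptions made for the example: the class order (standard alphabetical order), the confidence threshold of 0.5, and the hypothetical file naming scheme `letter_NN.mp3`.

```python
# Assumed class order: the 28 letters in standard alphabetical order.
ARABIC_LETTERS = [
    "ا", "ب", "ت", "ث", "ج", "ح", "خ", "د", "ذ", "ر", "ز", "س", "ش", "ص",
    "ض", "ط", "ظ", "ع", "غ", "ف", "ق", "ك", "ل", "م", "ن", "ه", "و", "ي",
]


def decode_prediction(probabilities, threshold=0.5):
    """Return (letter, clip_name) for the most probable class, or None if unsure.

    The threshold is an illustrative assumption, not a value from the paper.
    """
    if len(probabilities) != len(ARABIC_LETTERS):
        raise ValueError("expected one probability per letter")
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    if probabilities[best] < threshold:
        return None
    # Hypothetical naming scheme for the pre-recorded audio clips.
    return ARABIC_LETTERS[best], "letter_{:02d}.mp3".format(best)


example = [0.0] * 28
example[12] = 0.9
print(decode_prediction(example))
```

Rejecting low-confidence predictions is one possible way to avoid speaking a letter while the hand is still moving between signs.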

An ambient microphone sensor is also included to capture environmental sounds, enhancing the system’s interactive features and enabling potential two-way communication in future updates.

The Raspberry Pi Pico is built around the dual-core RP2040 microcontroller, which is based on the ARM Cortex-M0+ architecture designed by ARM Ltd. (Cambridge, UK), and supports real-time data acquisition and preprocessing. Its multiple I2C buses and general-purpose input/output (GPIO) pins enable seamless interfacing with the multiplexer and various sensors. This architecture allows physical gestures to be converted into digital signals with minimal latency, making the board well suited to embedded intelligent systems.

The integration of these components results in a responsive system capable of capturing and interpreting gestures with high precision, forming the foundation for translating sign language into understandable visual and auditory formats, thereby improving communication accessibility for deaf users in Moroccan society.

3.2. Sensor Placement and Motion Data Acquisition

The effectiveness of the gesture recognition process largely depends on the precise placement of the motion sensors on the glove. In this system, each MPU6050 sensor is carefully positioned on a finger to ensure optimal capture of both linear acceleration and angular velocity across three axes. This arrangement enables the system to detect even subtle variations in finger movement, which is crucial for distinguishing between signs with similar trajectories or shapes. The sensor layout was designed to align with the natural articulation of the hand, providing reliable and consistent data acquisition during sign execution.

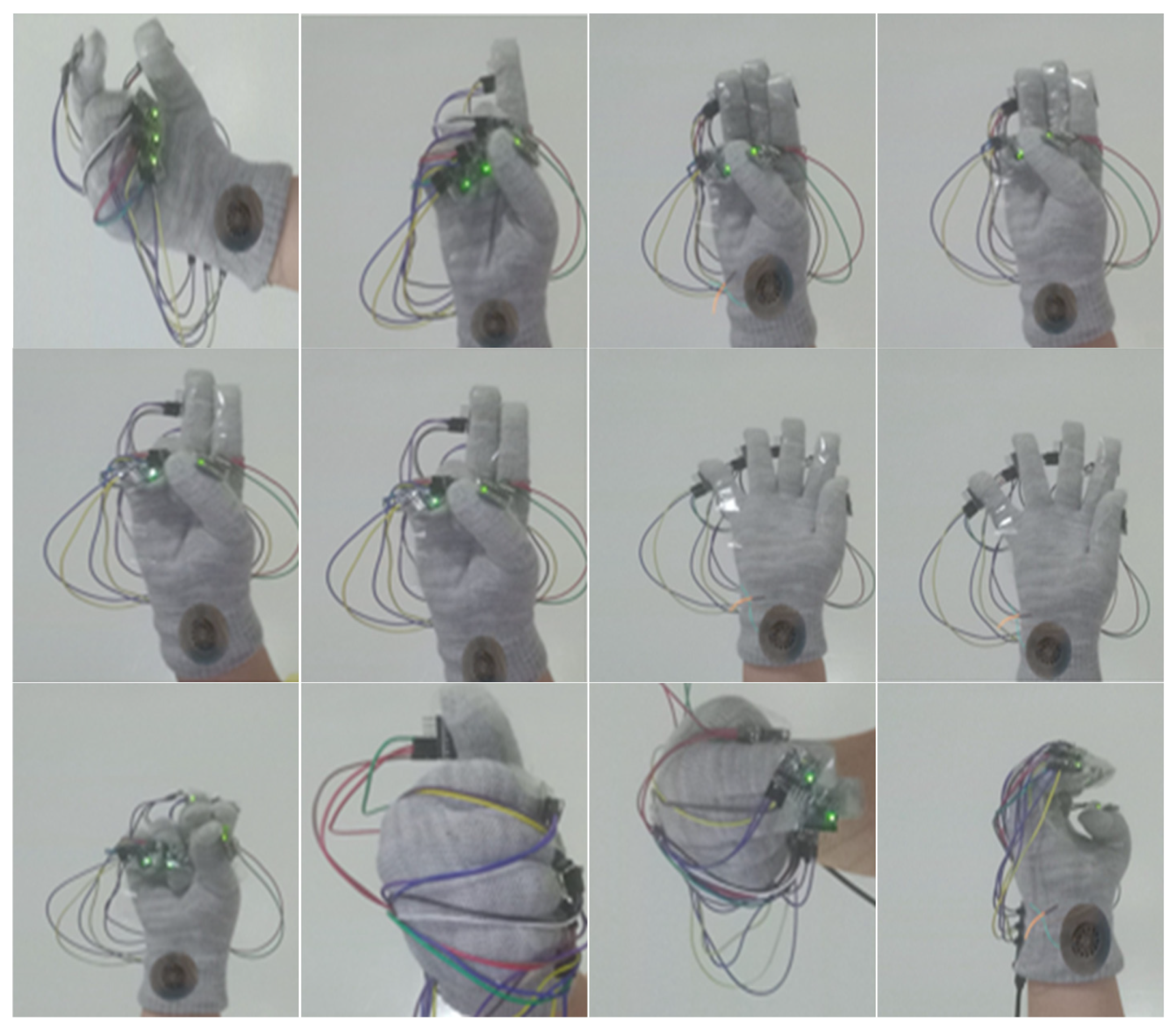

Although Figure 2 illustrates the theoretical placement of the sensors, it does not convey the physical assembly and integration of components. To complement this schematic, Figure 3 displays real photographs of the glove prototype from various angles, highlighting the wiring structure, sensor alignment, and overall ergonomic configuration of the wearable system.

The glove integrates five MPU6050 motion sensors—one per finger—connected via individual colored wires. The various angles show the placement of the sensors, the wiring layout, and the overall flexibility of the glove during gesture performance.

The MPU6050, manufactured by TDK InvenSense (San Jose, CA, USA), is an integrated 6-axis motion tracking device combining a 3-axis gyroscope and a 3-axis accelerometer. It allows precise measurement of angular velocity and linear acceleration, which is essential for real-time gesture recognition in embedded systems.

An additional OLED display (SSD1306, 0.96-inch) was integrated into the glove, enabling real-time visualization of recognized Moroccan Sign Language (MSL) letters. This ensures immediate textual feedback in addition to audio output.

The SSD1306 OLED display is a 0.96-inch monochrome screen commonly used in embedded systems. It communicates via the I2C interface and is sourced from manufacturers in China, such as Jiangxi Wisevision Optronics Co., Ltd., or OpenELAB Technology Ltd. The display provides clear real-time feedback, which is essential for interactive gesture recognition.

To improve the glove’s durability and user safety, all sensor wires were protected with flexible silicone sleeves to prevent accidental disconnections. A detachable connector interface was implemented between the MPU6050 sensors and the Raspberry Pi Pico to facilitate maintenance. In future iterations, the glove design will transition to a flexible PCB-based layout to enhance robustness, comfort, and long-term usability.

3.3. Embedded Machine Learning Implementation

The implementation of Embedded Machine Learning (EML) for sign language recognition was achieved by designing and training an Artificial Neural Network (ANN) model using Python 3.11 and TensorFlow 2.14 on Google Colab. The trained model was then quantized and deployed to a Raspberry Pi Pico microcontroller. The ANN architecture is composed of an input layer of 30 neurons, corresponding to six features (3-axis accelerometer and 3-axis gyroscope) from each of the five MPU6050 sensors. This is followed by a hidden layer with 20 neurons, and an output layer with 28 neurons, one for each recognized gesture class.

The hidden layer utilizes the ReLU (Rectified Linear Unit) activation function [14]:

$$f(x) = \max(0, x)$$

The ReLU function is widely used in embedded machine learning because it is cheap to compute and helps mitigate vanishing gradients during training [15].

The output layer applies the Softmax function [16]:

$$\sigma(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \quad i = 1, \dots, K$$

where $z_i$ is the raw output score of class $i$ and $K = 28$ is the number of gesture classes. This function converts the raw output scores into a probability distribution over the 28 gesture classes, so that the highest-probability output corresponds to the predicted letter in Moroccan Sign Language.
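The two activation functions named above can be written out directly. The following is a minimal pure-Python sketch for illustration only; subtracting the maximum score before exponentiating is a common numerical-stability step and does not change the result.

```python
import math


def relu(x):
    """Rectified Linear Unit: passes positive values, clamps negatives to zero."""
    return max(0.0, x)


def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    peak = max(scores)  # shift for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probabilities = softmax([2.0, 1.0, 0.1])
predicted_class = probabilities.index(max(probabilities))
print(predicted_class)
```

Because softmax preserves the ordering of the scores, the predicted class is simply the index of the largest raw output.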

The ANN was trained using the Adam optimizer and categorical cross-entropy loss for multi-class classification, achieving over 97% accuracy on the test set. After training, the model was converted to TensorFlow Lite Micro (TFLM) format using post-training quantization, reducing both size and inference latency [17]. This lightweight model runs directly on the Raspberry Pi Pico, with an inference time of less than 30 ms, making it suitable for real-time gesture recognition.
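To give an idea of what post-training quantization does to the weights, the sketch below applies an affine 8-bit mapping of the form real = scale × (q − zero_point). The text does not state which quantization scheme was used, so the int8 range here is an assumption, and the helper names are illustrative.

```python
def quant_params(values, qmin=-128, qmax=127):
    """Pick scale and zero point so that [min, max] (including 0) fits in int8."""
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale for all-zero input
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to clamped 8-bit integers."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(quantized, scale, zero_point):
    """Recover approximate float values from the integers."""
    return [scale * (q - zero_point) for q in quantized]


weights = [-0.5, 0.0, 0.25, 1.0]
scale, zero_point = quant_params(weights)
restored = dequantize(quantize(weights, scale, zero_point), scale, zero_point)
print(max(abs(w - r) for w, r in zip(weights, restored)))
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of about half a quantization step.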

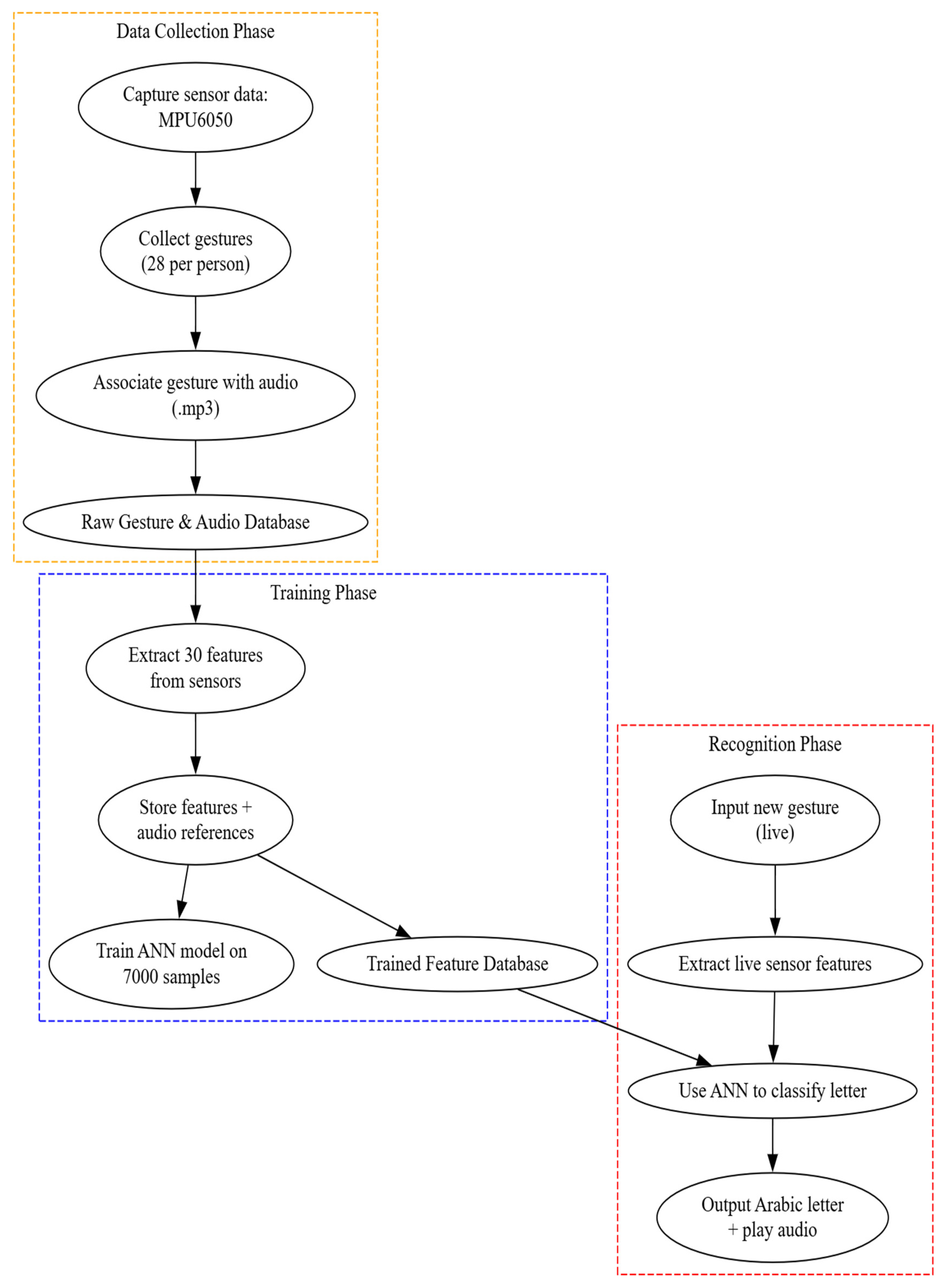

Our gesture recognition system is divided into three essential stages: data collection, training, and recognition (shown in Figure 4). In the first phase, five MPU6050 sensors, each placed on a different finger, record motion and orientation data across three axes, producing 30 numeric features per gesture. A group of 50 participants performed 28 unique hand gestures, each representing a letter of the Arabic alphabet, with multiple repetitions per gesture, leading to a dataset of over 7000 labeled samples. For each gesture, an audio file (.mp3) is also linked, enabling auditory feedback. Once gathered, the sensor data is cleaned and normalized, then stored in a structured database along with audio references. In the training phase, these processed features are used to train an Artificial Neural Network (ANN) capable of accurately distinguishing between gestures. Finally, in the recognition phase, the system captures a new gesture in real time, extracts its features, identifies the corresponding Arabic letter using the trained ANN, and outputs both the letter and its audio.
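The normalization step is not specified further in the text. One common choice, shown here purely as an assumption, is per-feature standardization (zero mean, unit variance), with the statistics computed once on the training data and reused at inference time.

```python
import math


def fit_scaler(samples):
    """Compute the per-feature mean and standard deviation of a list of vectors."""
    n = len(samples)
    dims = len(samples[0])
    means = [sum(s[d] for s in samples) / n for d in range(dims)]
    stds = []
    for d in range(dims):
        variance = sum((s[d] - means[d]) ** 2 for s in samples) / n
        stds.append(math.sqrt(variance) or 1.0)  # constant features keep scale 1
    return means, stds


def normalize(sample, means, stds):
    """Standardize one feature vector with previously fitted statistics."""
    return [(x - m) / s for x, m, s in zip(sample, means, stds)]


# Toy data with 2 features instead of 30, to keep the example short.
training = [[1.0, 10.0], [3.0, 10.0]]
means, stds = fit_scaler(training)
print(normalize([3.0, 10.0], means, stds))
```

The same means and standard deviations would have to be stored on the microcontroller so that live sensor readings are scaled exactly as the training data were.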

3.4. Dataset Construction

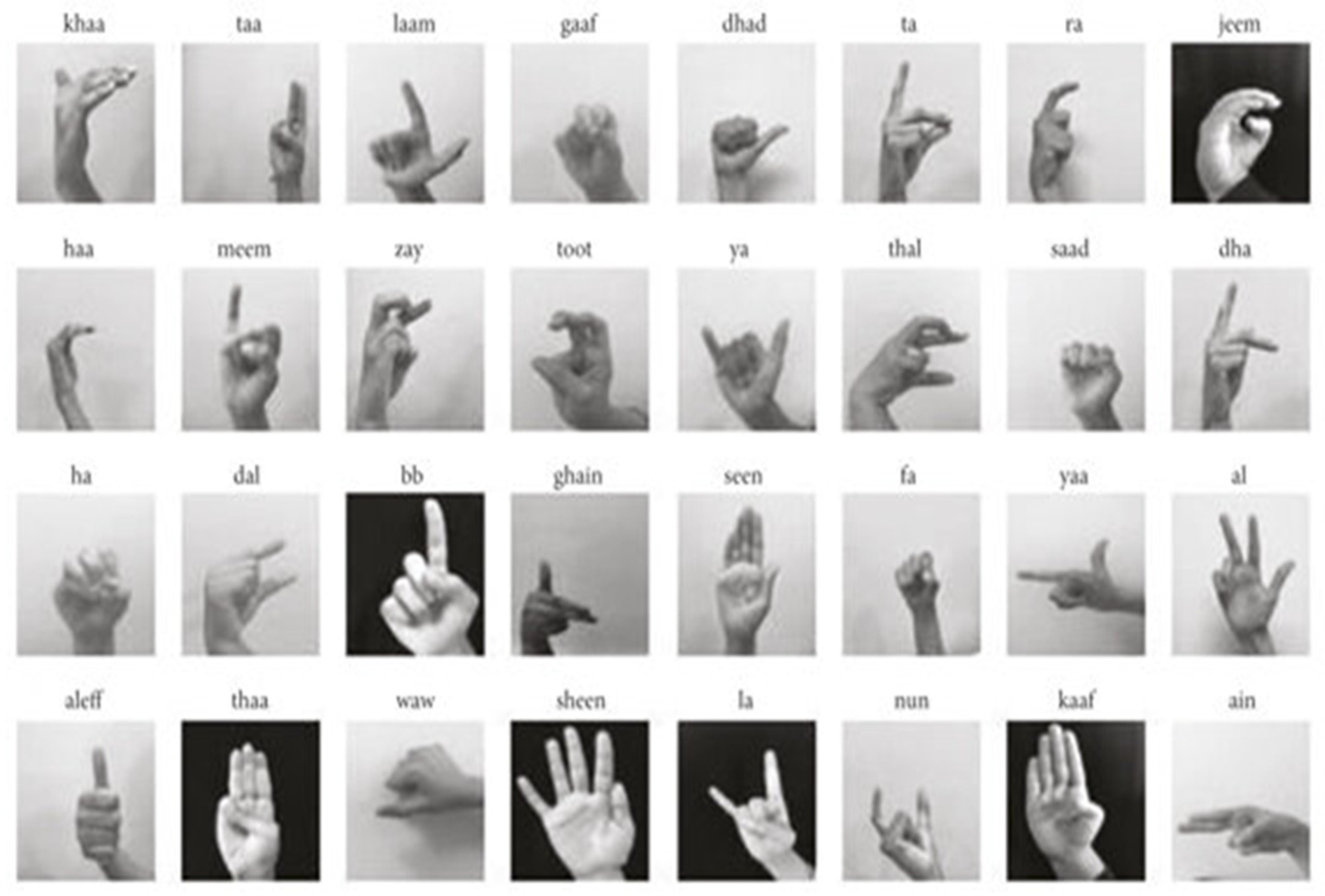

To ensure the development of a robust and realistic gesture recognition system, we constructed a dedicated dataset specifically tailored to Moroccan Sign Language (MSL), covering the 28 letters of the Arabic alphabet (ا to ي).

The data collection involved 50 voluntary participants, most of whom were non-signers, each wearing the smart glove equipped with five MPU6050 motion sensors—one per finger. Each participant performed multiple repetitions for each of the 28 signs, leading to a wide diversity of gesture executions in terms of hand orientation, gesture dynamics, speed, and personal variability.

In total, over 7000 labeled gesture samples were recorded. Each sample consists of:

- A 30-dimensional feature vector ((3-axis accelerometer + 3-axis gyroscope) × 5 sensors);
- A corresponding Arabic letter label; and
- An associated audio file (.mp3) used for potential voice feedback.

The dataset was divided into training (70%), validation (15%), and test (15%) subsets to guarantee robust evaluation and reproducibility.
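A 70/15/15 split of this kind can be sketched as follows. The shuffling strategy and the fixed seed are assumptions made for reproducibility of the example; the text does not say whether samples were split randomly or per participant.

```python
import random


def split_dataset(samples, seed=0):
    """Shuffle the samples and cut them into 70% train, 15% validation, 15% test."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = n * 70 // 100
    n_val = n * 15 // 100
    train = shuffled[:n_train]
    validation = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, validation, test


train, validation, test = split_dataset(range(7000))
print(len(train), len(validation), len(test))
```

With exactly 7000 samples this yields 4900 training, 1050 validation, and 1050 test samples. A split by participant, where all samples from a given person fall into a single subset, would give a stricter estimate of how well the model generalizes to new users.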

To ensure gesture quality and repeatability, the data collection phase was conducted under controlled conditions and extended over a period of more than three months. The recorded data were systematically filtered, normalized, and organized in a Raw Gesture & Audio Database, which will serve as the foundation for training and evaluating machine learning models in subsequent stages. An overview of the dataset used in this study is presented in Table 1.

Table 1. Dataset Overview.

| Sign Gesture | Repetitions per Participant | Number of Participants | Total Samples |
|---|---|---|---|
| Arabic Alphabet [5] | Multiple | 50 | >7000 |

Figure 4. Block diagram of the system.


4. Training and Evaluation

4.1. Model Training

The artificial neural network (ANN) developed for gesture classification was designed to balance performance and computational efficiency, ensuring suitability for deployment on a resource-constrained embedded device such as the Raspberry Pi Pico.

Training was conducted using Python and TensorFlow on Google Colab, allowing access to GPU acceleration and efficient experimentation. The input consists of 30 motion features derived from five MPU6050 sensors, each delivering six-axis data (3-axis accelerometer and 3-axis gyroscope). This implementation was guided by official deployment examples provided by the Raspberry Pi Foundation for TensorFlow Lite on the RP2040 platform [11].

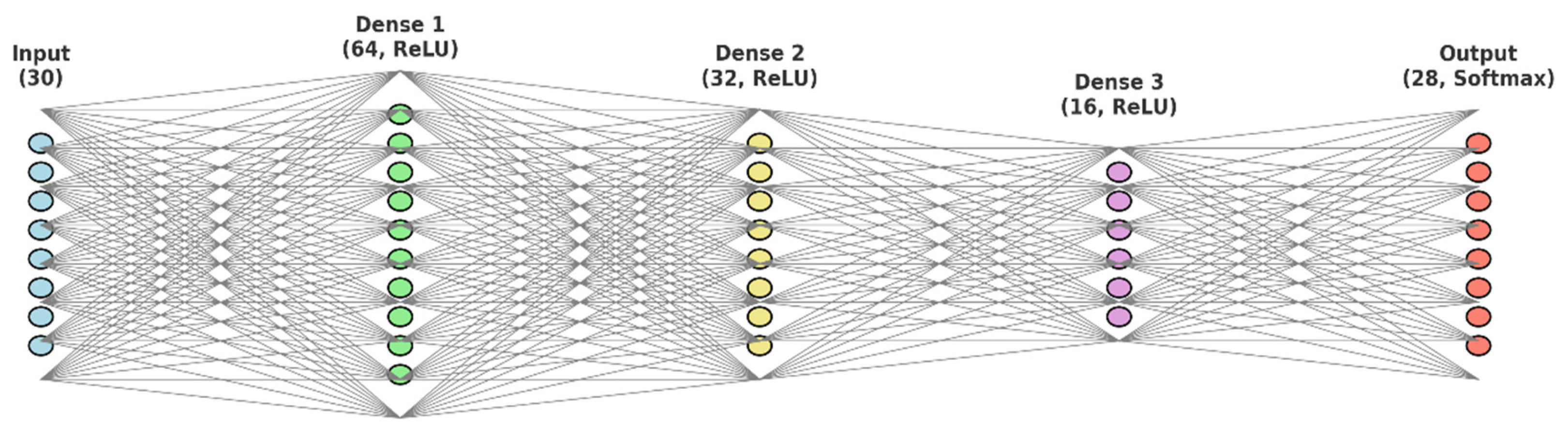

The proposed ANN architecture is composed of the following layers:

- Input Layer: 30 neurons, corresponding to the real-time motion features from all fingers.
- Dense Layer 1: 64 neurons with ReLU activation, enabling the network to learn complex nonlinear relationships efficiently.
- Dense Layer 2: 32 neurons with ReLU activation, refining feature extraction and intermediate representation.
- Dense Layer 3: 16 neurons with ReLU activation, adding depth while remaining lightweight enough for microcontroller inference.
- Output Layer: 28 neurons with Softmax activation, each neuron representing one Arabic letter from Moroccan Sign Language (MSL). The Softmax function ensures that the output forms a valid probability distribution over the gesture classes.

Figure 5 illustrates a simplified schematic of the proposed artificial neural network (ANN) architecture used for Moroccan Sign Language gesture classification. While the number of neurons per layer is reduced for clarity, the structure reflects the actual sequence and function of the model’s layers as implemented on the Raspberry Pi Pico.

The network consists of an input layer (30 features), three hidden layers (64, 32, and 16 neurons, respectively, with ReLU activation), and an output layer with 28 neurons using Softmax activation.
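The size of this architecture can be checked with a short calculation. The sketch below counts the trainable parameters of a fully connected network from its layer widths: each dense layer contributes one weight per input-output pair plus one bias per output.

```python
def count_parameters(layer_sizes):
    """Weights plus biases for a stack of fully connected layers."""
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


LAYERS = [30, 64, 32, 16, 28]  # input, three hidden layers, output
total = count_parameters(LAYERS)
print(total)  # 1984 + 2080 + 528 + 476 = 5068
```

Assuming 8-bit weights after quantization, about 5000 parameters occupy roughly 5 KB, which is consistent with a model that fits comfortably in the memory of a small microcontroller.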

The model was compiled with the following settings:

- Optimizer: Adam, chosen for its adaptive learning rate and fast convergence.
- Loss function: Categorical cross-entropy, suitable for multi-class classification.
- Metrics: Accuracy, tracked during training and validation.
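For reference, the categorical cross-entropy of a single sample is the negative log of the probability assigned to the correct class. The sketch below is a plain-Python illustration of that definition, with a small clipping constant (an implementation detail assumed here) to avoid taking the logarithm of zero.

```python
import math


def categorical_cross_entropy(one_hot, probabilities, eps=1e-12):
    """Loss for one sample: -sum(t_i * log(p_i)) over all classes."""
    return -sum(
        t * math.log(max(p, eps))
        for t, p in zip(one_hot, probabilities)
    )


# The true class is index 1, and the model assigns it probability 0.8.
loss = categorical_cross_entropy([0, 1, 0], [0.1, 0.8, 0.1])
print(round(loss, 4))
```

A confident correct prediction gives a loss near zero, while a confident wrong prediction is penalized heavily, which is why this loss pairs naturally with a Softmax output layer.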

After training on the constructed dataset (see Section 3.4), the model achieved strong classification performance and was subsequently quantized using TensorFlow Lite Micro (TFLM). The final quantized model had a memory footprint under 150 KB, and inference latency remained below 30 ms on the Raspberry Pi Pico, confirming its ability to support real-time gesture recognition with minimal delay.

4.2. Evaluation Metrics

To assess the performance and generalization capability of the trained artificial neural network (ANN), a range of evaluation metrics were monitored throughout the training process. These include:

- Training accuracy: Measures how well the model fits the training data.
- Validation accuracy: Evaluates performance on unseen samples during training.
- Categorical cross-entropy loss: Indicates the model’s prediction error for multi-class classification.
- Test accuracy: Computed on a held-out dataset to estimate real-world performance.
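Accuracy, as used in the metrics above, is the fraction of samples whose predicted label matches the true label. The sketch below also lists the most frequent confusions, which is a simple way to spot pairs of letters that the model mixes up. The toy labels are invented for the example and are not results from the study.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def most_confused(y_true, y_pred, top=3):
    """Most frequent (true, predicted) pairs among the misclassified samples."""
    errors = Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)
    return errors.most_common(top)


# Invented toy labels, for illustration only.
y_true = ["س", "س", "س", "ش", "ح"]
y_pred = ["ش", "ش", "س", "ش", "خ"]
print(accuracy(y_true, y_pred))
print(most_confused(y_true, y_pred, top=1))
```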

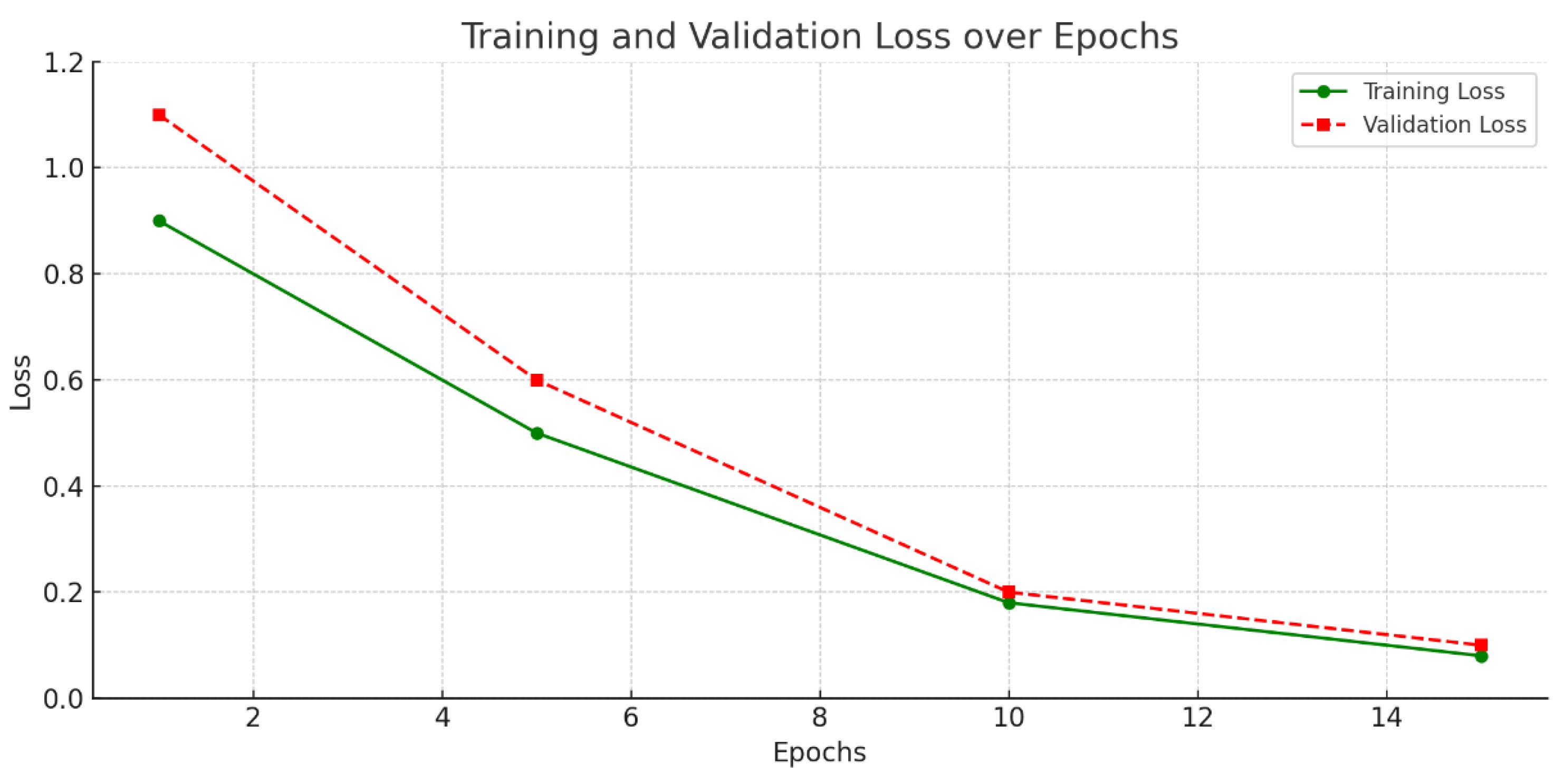

To better visualize the learning dynamics of the ANN, the evolution of training and validation accuracy, as well as the loss function, was plotted over 15 epochs. As shown in Figure 6 and Figure 7, the model improves consistently and converges. The training and validation accuracy curves increase steadily and remain closely aligned, reaching 98% and 96%, respectively, which indicates strong generalization and stable learning. Likewise, the training and validation losses decrease smoothly and converge below 0.1, confirming effective optimization and the absence of significant overfitting.

These results highlight the suitability of the proposed ANN architecture and dataset for accurate and efficient gesture classification.

4.3. Experimental Setup

To evaluate the gesture recognition system in real-world conditions, the final trained and quantized ANN model was deployed onto a Raspberry Pi Pico microcontroller. The experimental setup includes the following components:

- A wearable glove equipped with five MPU6050 inertial sensors, each attached to a finger to capture 6-axis motion data (accelerometer and gyroscope).
- A TCA9548A I2C multiplexer to resolve address conflicts and enable sequential sensor data acquisition.
- An OLED display module for visual output of recognized letters. It enhances usability by providing silent, visual feedback, which is particularly beneficial in noisy environments or when audio playback is not desired.
- A voice output module (based on pre-recorded Arabic audio clips) connected to the Pico for auditory feedback.
- A microphone sensor for potential environmental sound capture and future interaction extensions.

Sensor data were sampled at 50 Hz and passed through low-pass filtering to reduce noise. Real-time inference was performed directly on the Raspberry Pi Pico using the quantized TFLite Micro version of the trained model. Upon recognizing a gesture, the corresponding Arabic letter was displayed on the OLED screen and vocalized via the speaker module.
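The type of low-pass filter is not specified in the text. As one plausible example, the sketch below implements a first-order (exponential smoothing) low-pass filter for a single sensor channel sampled at 50 Hz; the 5 Hz cutoff frequency is an assumption chosen only for illustration.

```python
import math


class LowPassFilter:
    """First-order low-pass filter for one sensor channel."""

    def __init__(self, cutoff_hz, sample_rate_hz=50.0):
        dt = 1.0 / sample_rate_hz
        rc = 1.0 / (2.0 * math.pi * cutoff_hz)
        self.alpha = dt / (rc + dt)  # smoothing factor between 0 and 1
        self.state = None

    def update(self, value):
        """Feed one new sample and return the filtered value."""
        if self.state is None:
            self.state = value  # start from the first sample
        else:
            self.state += self.alpha * (value - self.state)
        return self.state


channel_filter = LowPassFilter(cutoff_hz=5.0)  # assumed cutoff frequency
smoothed = [channel_filter.update(v) for v in [0.0, 1.0, 1.0, 1.0]]
print(smoothed)
```

In a glove with 30 channels, one such filter instance per channel would be needed. A lower cutoff gives smoother signals but makes the system slower to react to fast finger movements.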

This hardware configuration proved to be compact, responsive, and suitable for embedded gesture recognition, with an average inference time of less than 30 ms per gesture.

4.4. Performance Evaluation

To validate these findings, the system was tested by multiple users performing each gesture in a variety of conditions, including different lighting environments and hand positions.

The deployed system was tested across various real-world scenarios to assess its responsiveness, reliability, and robustness under practical constraints. The evaluation focused on key performance indicators such as inference latency, accuracy consistency, and environmental robustness.

The model achieved an average inference time below 30 ms per gesture on the Raspberry Pi Pico, ensuring real-time interaction without noticeable delay. During testing, the system consistently maintained high recognition accuracy across different users, lighting conditions, and gesture execution styles.

In addition to accuracy metrics, the system’s stability was evaluated by running extended sessions in diverse indoor environments. No significant performance degradation or sensor failure was observed, confirming the viability of the hardware-software integration for portable and embedded use.

Overall, the glove-based system demonstrated reliable real-time performance, strong classification precision, and operational stability, making it a suitable assistive technology for daily use by deaf and hard-of-hearing individuals.

The 98% training accuracy refers to the best-performing model on the training set, while the 96% test accuracy represents performance on unseen data. Misclassification of certain letters is due to the similarity of the corresponding gestures, as discussed in Section 5.2.

5. Results and Discussion

5.1. Recognition Accuracy

The proposed ANN-based system achieved a peak test accuracy of 96%, with training accuracy reaching 98%, demonstrating the model’s ability to generalize well to unseen data. This high recognition rate is attributed to the well-structured dataset, precise sensor placement, and optimized network architecture. The real-time inference on the Raspberry Pi Pico further validated the system’s responsiveness and deployment readiness.

5.2. Limitations and Challenges

Despite strong overall performance, several limitations were observed:

- Sensor drift over prolonged use can affect calibration and recognition reliability.
- Variations in glove fit across users may alter motion dynamics and introduce noise.
- Some signs involving subtle finger differences (e.g., س vs. ش, ح vs. خ) were more prone to misclassification due to gesture similarity.

Despite these isolated misclassifications, their overall impact on system accuracy was minor. The model maintained an average test accuracy of 96%, as the confused gestures represented less than 2% of the total dataset. This confirms the robustness of the proposed ANN model and the consistency of its generalization performance.

Addressing these issues may require more advanced preprocessing, adaptive calibration, or incorporating additional sensor modalities (e.g., flex or pressure sensors).

5.3. Real-Time Application

The system is compact, cost-effective (estimated under $25), and provides instantaneous visual and auditory feedback. This makes it particularly suitable for use in educational settings, healthcare, or public services to assist deaf individuals in daily communication scenarios such as transportation, shopping, or classroom interactions.

5.4. Comparative Analysis

To further contextualize the performance of the proposed system, a comparison was conducted with other relevant sign language recognition solutions. Table 2 summarizes the performance of our system compared to existing sensor-based and vision-based approaches for sign language recognition.

According to Table 2, the proposed glove demonstrates high recognition accuracy while remaining low-cost, portable, and entirely embedded. Other approaches, such as inductive, capacitive, or flex sensor gloves, also show good performance but often require a more complex design, come at a higher cost, or offer limited durability.

Vision-based solutions generally achieve strong accuracy as well, but they depend on heavy computation and image preprocessing, which restricts their use in real-time wearable systems. These comparisons underline the strength of our design, which balances accuracy with simplicity, affordability, and suitability for embedded deployment.

6. Future Work and Improvements

Future work will explore advanced sequence models such as Long Short-Term Memory (LSTM) networks and lightweight Transformer encoders. These architectures are better suited for sequential sign language data and will be trained on our custom MSL dataset to assess performance improvements over the current ANN-based approach.

Extending recognition from isolated letters to continuous words and sentences will be a key priority in future research. Sequence modeling techniques will be applied to enable dynamic recognition for more natural and practical communication.

While the proposed system demonstrates strong performance in recognizing isolated letters from Moroccan Sign Language (MSL), several directions remain for future enhancement:

- Alphabet-to-Word and Sentence Translation: Extending the system to recognize complete words or sentences will require sequence modeling techniques, such as Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, or Transformer-based architectures.
- Gesture Intensity and Duration Analysis: Incorporating analog signal processing or pressure/flex sensors could help distinguish gestures with subtle temporal or force-related variations, enhancing recognition of expressive content or emotion.
- User Adaptation and Personalization: Implementing adaptive calibration routines would allow the glove to automatically adjust to different hand sizes and user movement profiles, improving long-term robustness.
- Multimodal Fusion and Context Awareness: Integrating additional sensors (e.g., microphones, cameras, EMG) could support multimodal learning, enabling the system to better handle ambiguous or context-dependent gestures.
- Analog Artificial Neural Networks (AANNs): Investigating neuromorphic computing and transistor-based analog circuits may enable ultra-low-power inference suitable for continuous use in wearable applications.

These future developments aim to move beyond isolated gesture recognition toward a fully autonomous, context-aware interface that better supports deaf and hard-of-hearing individuals.

7. Conclusions

This study presented a low-cost, portable, and real-time system for recognizing Moroccan Sign Language (MSL) alphabet gestures using a wearable glove equipped with motion sensors and an embedded artificial neural network (ANN). The system leverages five MPU6050 inertial sensors and a Raspberry Pi Pico microcontroller to perform gesture recognition directly on-device, eliminating the need for external computing resources. Through a carefully constructed dataset of over 7000 labeled samples collected from 50 participants, the ANN model was trained and optimized to achieve high accuracy and fast inference times. The system reached 98% training accuracy and 96% validation accuracy, with inference latency below 30 ms, validating its suitability for real-world deployment. Beyond its technical achievements, this system represents a meaningful step toward more inclusive communication tools for the deaf and hard-of-hearing population in Morocco, who rely on sign language. Future work will focus on extending the system to full-word and sentence recognition, improving sensor integration, and exploring neuromorphic alternatives for ultra-low-power operation. This research provides a promising foundation for the development of intelligent, context-aware sign language recognition solutions that are both accessible and impactful.