Physics Guided Neural Networks with Knowledge Graph

Abstract

1. Introduction

- A hybrid system is constructed by combining physics-based model knowledge with neural networks.

- Scientific knowledge enters the neural network’s learning objective as a physics-based loss function.

- Model training optimizes empirical loss and physical consistency together.

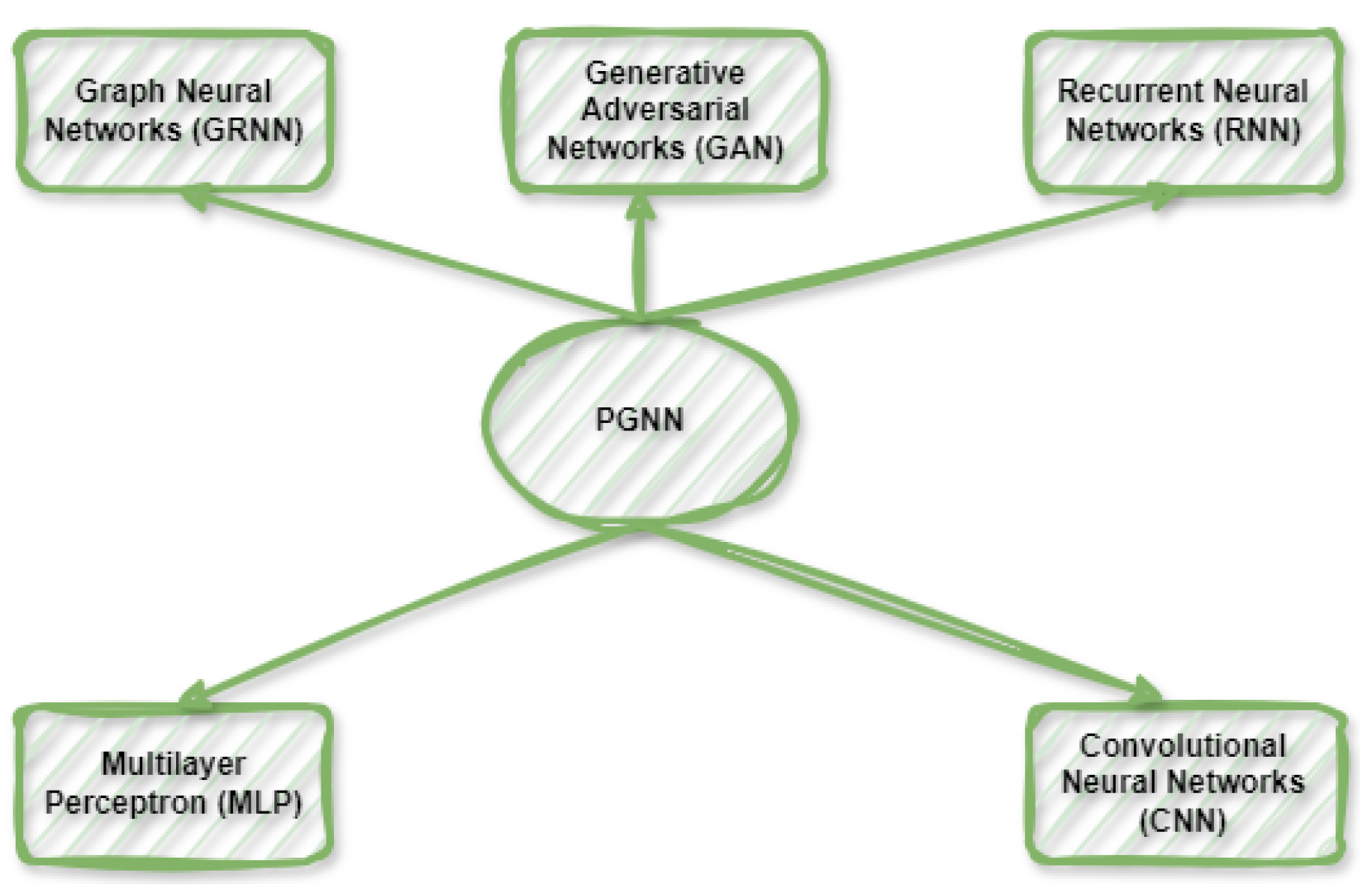

2. Types of PGNN

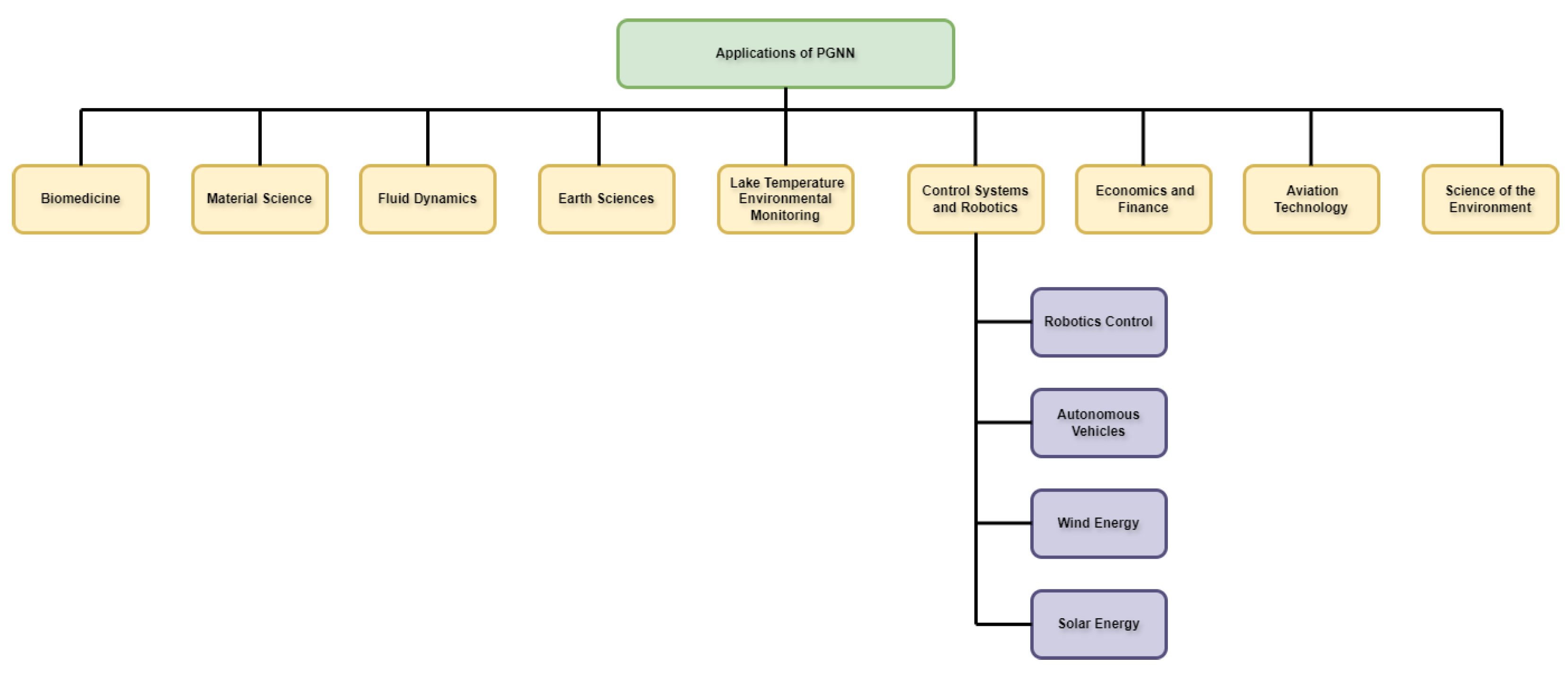

3. Applications of PGNN

3.1. Biomedicine

- Drug discovery using predictive modeling.

- Using medical data to forecast patient outcomes.

- Medical diagnostics and image analysis [3].

3.2. Material Science

- Forecasting characteristics and actions of materials.

- Increasing the rate of material discovery.

- Enhancing the methods for synthesizing materials.

3.3. Fluid Dynamics

- Fluid flow pattern modeling and prediction [9].

- Heat transfer and turbulence simulation.

- Creating more aerodynamically efficient systems.

3.4. Earth Sciences

- Forecasting the effects of climate change.

- Examining and simulating geological mechanisms.

- Examining how environments impact ecosystems.

3.5. Lake Temperature Environmental Monitoring

- Monitoring and predicting the temperature of lakes [4].

- Comprehending the effects of global warming on aquatic environments.

3.6. Control Systems and Robotics

- Robotics control: To improve motion planning and control tactics, PGNNs may be used in robotic control systems.

- Autonomous vehicles: By taking into account environmental influences and physical limitations, PGNNs help forecast and optimize the behavior of autonomous vehicles [10].

- Wind energy: PGNNs help maximize energy efficiency in wind turbine installation and design.

- Solar energy: By predicting solar panel performance in response to environmental conditions, PGNNs may help maximize the harvesting of solar energy [11].

3.7. Economics and Finance

- Stock market prediction: By combining market dynamics and economic concepts, PGNNs are utilized to model and forecast stock market movements.

- Financial risk management: By taking into account the influence of several economic aspects, PGNNs help improve risk assessment models in finance.

- Image reconstruction: By combining physical limitations, PGNNs used in computer vision may enhance image reconstruction, producing sharper and more accurate pictures.

- 3D object identification: By using physical characteristics and limitations throughout the learning process, PGNNs help with 3D object identification.

3.8. Aviation Technology

- Structural health monitoring: To guarantee dependability and safety, PGNNs can forecast and track the structural health of aircraft and spacecraft components [12].

- Flight control systems: By taking system dynamics and aerodynamics into account, PGNNs help to optimize flight control systems [12].

3.9. Environmental Science

- Air quality prediction: PGNNs are used to model and forecast air quality from variables such as pollutant emissions, weather patterns, and geographic characteristics.

- Ecological modeling: By forecasting how changes in the environment would affect biodiversity, PGNNs help model ecological systems.

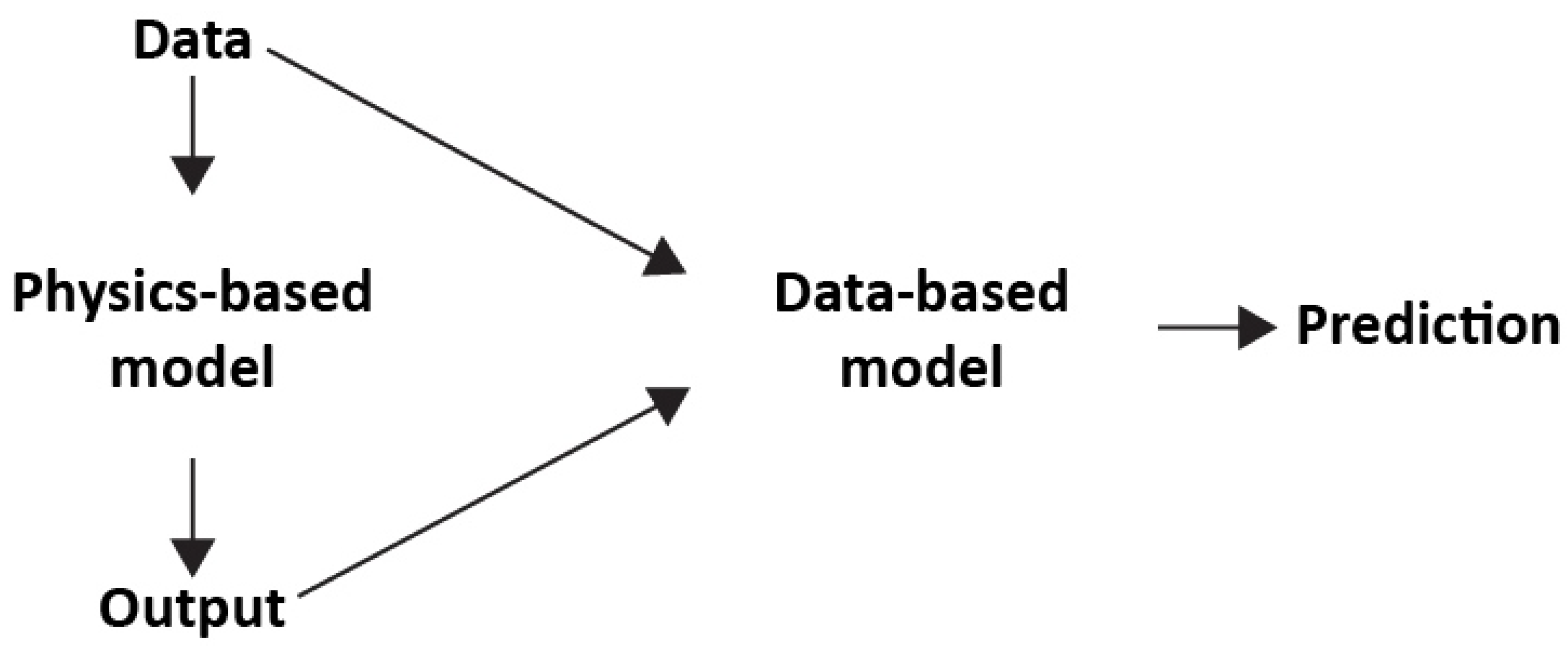

4. Constructing Hybrid Physics–Data Models

5. Enhancing Model Training with Physics-Based Loss Functions

- The empirical loss term, typically a mean squared error or cross-entropy, measures how closely the model predictions match the actual target Y from the data.

- The regularization term controls model complexity, preventing overfitting.

- The physics hyper-parameter determines the relative importance of reducing physical inconsistency compared with minimizing empirical loss and model complexity.
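The three terms described above can be combined as in the following minimal sketch. It assumes a mean squared error for the empirical loss and an L2 penalty for the regularization term. The names `pgnn_loss`, `lam`, `lam_phy`, and the non-negativity rule are hypothetical and chosen only for illustration; they are not taken from the article.

```python
from typing import Callable, Sequence


def mse(y_pred: Sequence[float], y_true: Sequence[float]) -> float:
    """Empirical loss: mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(y_pred, y_true)) / len(y_true)


def l2_penalty(weights: Sequence[float]) -> float:
    """Regularization term: sum of squared weights (one common choice)."""
    return sum(w * w for w in weights)


def non_negativity_violation(y_pred: Sequence[float]) -> float:
    """Hypothetical physics rule: the predicted quantity must not be negative."""
    return sum(max(0.0, -p) for p in y_pred) / len(y_pred)


def pgnn_loss(
    y_pred: Sequence[float],
    y_true: Sequence[float],
    weights: Sequence[float],
    physics_penalty: Callable[[Sequence[float]], float],
    lam: float = 0.01,      # weight of the regularization term (assumed value)
    lam_phy: float = 1.0,   # weight of the physical-inconsistency term (assumed value)
) -> float:
    """Empirical loss + lam * regularization + lam_phy * physics inconsistency."""
    return (
        mse(y_pred, y_true)
        + lam * l2_penalty(weights)
        + lam_phy * physics_penalty(y_pred)
    )


y_true = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.0, -1.0]   # the last prediction violates the physics rule
weights = [0.5, -0.5]

loss_weak = pgnn_loss(y_pred, y_true, weights, non_negativity_violation, lam_phy=0.0)
loss_strong = pgnn_loss(y_pred, y_true, weights, non_negativity_violation, lam_phy=10.0)
```

Raising `lam_phy` increases the cost of the same physically inconsistent prediction, which is the trade-off the physics hyper-parameter controls.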

6. How PGNNs Work

6.1. Components

- Physics-based models: These simulations of physical processes, such as heat transport, fluid dynamics, or quantum mechanics, incorporate domain-specific information.

- Neural networks: Neural networks are universal function approximators that are capable of learning complex mappings between input characteristics and predicted outputs.

6.2. Hybrid Setup

- Observational features and physics-based simulations: PGNNs use both empirical data and results from physics-based simulations, making the most of each knowledge source.

- Neural network architecture: A customized neural network architecture is built around the combined input of simulated outputs and observational features. This allows the model to provide predictions that combine theoretical understanding with empirical observations.

- Physics-based loss functions: These loss functions are incorporated into the neural network’s learning objective. By encoding well-known physical principles, they steer training toward predictions that comply with accepted physical laws, improving accuracy and consistency.
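The hybrid setup can be illustrated with a short sketch in which the output of a physics simulator is appended to the observational features before they reach the network. Everything here is assumed for illustration: the two features (depth and air temperature), the linear `physics_model`, and the untrained two-layer network are hypothetical stand-ins, not the models used in the reviewed studies.

```python
import math
import random


def physics_model(features):
    """Hypothetical simplified simulator: a crude linear temperature estimate."""
    depth, air_temp = features
    return air_temp - 0.5 * depth


def hybrid_input(features):
    """Concatenate observational features with the simulator's output."""
    return list(features) + [physics_model(features)]


def init_mlp(n_in, n_hidden, seed=0):
    """Randomly initialize a one-hidden-layer network (untrained)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    b2 = 0.0
    return w1, b1, w2, b2


def forward(params, x):
    """Forward pass: tanh hidden layer followed by a linear output."""
    w1, b1, w2, b2 = params
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(w1, b1)
    ]
    return sum(w * h for w, h in zip(w2, hidden)) + b2


observation = (2.0, 20.0)          # (depth in m, air temperature in deg C), made-up values
x = hybrid_input(observation)      # observational features plus simulated output
params = init_mlp(n_in=len(x), n_hidden=4)
prediction = forward(params, x)
```

Because the simulated value is just another input, the network can learn to correct the simulator where it is biased while still benefiting from it where it is accurate.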

7. Comparison with Other Approaches


8. Review Method

8.1. Research Question

8.2. Keyword Selection

- Physics Guided Neural Network,

- PGNN,

- Physics Informed Neural Network,

- PINN,

- PHynet,

- Semi-Supervised Graph Neural Network,

- PGDL, and

- PeNN.

8.3. Collection of Documents and Filtering (Inclusion/Exclusion Criteria)

8.4. Source Material and Search Strategy

8.5. Analysis Data Collection and Database Selection

8.6. Publication and Citation Frequency

8.7. Bibliography Analysis Using Knowledge Graph

Algorithm 1. Constructing a knowledge graph from abstracts using BERT embeddings.

Require: Abstracts

Ensure: Knowledge graph G
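One possible reading of this input/output contract is sketched below: each abstract is embedded, pairwise cosine similarities are computed, and sufficiently similar abstracts are linked. To stay self-contained, the sketch swaps the BERT embedding for a bag-of-words count vector, which is only a stand-in. The function names, the `threshold` value, and the sample abstracts are all hypothetical.

```python
import math
import re
from itertools import combinations


def tokenize(text):
    """Lowercase word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def embed(text, vocab):
    """Stand-in for a BERT embedding: a bag-of-words count vector (assumption)."""
    tokens = tokenize(text)
    return [tokens.count(word) for word in vocab]


def cosine(u, v):
    """Cosine similarity between two vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def build_knowledge_graph(abstracts, threshold=0.5):
    """Return an adjacency dict: nodes are abstract ids, edges link similar abstracts."""
    vocab = sorted({w for text in abstracts.values() for w in tokenize(text)})
    vectors = {key: embed(text, vocab) for key, text in abstracts.items()}
    graph = {key: {} for key in abstracts}
    for a, b in combinations(abstracts, 2):
        sim = cosine(vectors[a], vectors[b])
        if sim >= threshold:
            graph[a][b] = sim   # undirected edge weighted by similarity
            graph[b][a] = sim
    return graph


abstracts = {
    "paper_a": "physics guided neural network for lake temperature",
    "paper_b": "physics guided neural network for fluid flow",
    "paper_c": "graph contrastive learning for recommendation",
}
G = build_knowledge_graph(abstracts)
```

In this toy input, `paper_a` and `paper_b` share most of their vocabulary and become neighbors, while `paper_c` stays isolated. Replacing `embed` with a sentence-level BERT encoder would keep the rest of the pipeline unchanged.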

8.8. Document Analysis

9. PGNN Equations

- h represents the time step .

- is the neural network prediction at time step .

- denotes any additional physics-based inputs.

10. Description, Benefits, and Applications of Key Concepts

11. Distinctive Characteristics and Advantages of PGNNs

- Hybridization: Physics-guided neural networks (PGNNs) integrate neural networks with physics-based information, resulting in a potent fusion. This integration enables PGNNs to harness the advantages of both methodologies, leading to models that are more resilient and easier to comprehend.

- Enhanced generalizability: By integrating physical constraints into the learning process, PGNNs exhibit improved capacity to apply learned knowledge to new contexts, particularly when dealing with limited data. This feature allows PGNNs to achieve high performance on data that has not been previously seen and to make accurate predictions beyond the data used for training.

- Application in multiscale multi-physics phenomena: PGNNs are very effective in speeding up the numerical simulation of intricate systems that exhibit both multiscale and multi-physics phenomena. Their capacity to accurately represent the complex interplay between many physical phenomena makes them indispensable in modeling and forecasting the behavior of such systems.

- Future research opportunities: PGNNs open several directions for further investigation, including the examination of causal links, the enhancement of algorithms to achieve better performance, and the integration of deep learning solvers with scientific models. These research areas show potential for extending the capabilities and uses of PGNNs in many sectors.

11.1. Limitations of PGNN

- Statistics-based training: The main limitation of PgNNs is that their training process is solely based on statistical correlations in data. As a result, their outputs may not fully adhere to underlying physical laws and could violate them in certain cases [33].

- Sparse training data: PgNNs struggle when the training dataset is sparse, which is often the case in scientific fields. Sparse data leads to failure in extrapolating predictions outside the scope of the training data, making the models less effective in real-world applications [34].

- Interpolation issues: Even for inputs within the sparse training datasets, PgNN predictions may be inaccurate, especially in complex, non-linear problems. PgNNs have difficulty interpolating across a wide range of physical parameters, such as different Reynolds numbers in fluid dynamics [19].

- Boundary and initial condition problems: PgNNs may not fully satisfy the boundary and initial conditions under which the training data were generated. As these conditions vary from problem to problem, the training process becomes prohibitively costly, especially for inverse problems [35].

- Resolution invariance: PgNN-based models are not resolution-invariant by design, meaning that models trained at one resolution cannot be easily applied to problems at different resolutions [36].

- Averaging effects: During training, PgNNs may treat minor variations in the functional dependencies between input and output as noise, which can result in averaged solutions. While the model performs optimally over the entire dataset, individual case predictions may be suboptimal [37].

- Complex dataset handling: PgNNs struggle when the training dataset is diverse, i.e., when there are drastically different interdependencies between input and output pairs. To address this, increasing the model size may help, but it requires more data and makes training costlier and, in some cases, impractical [35].

- Scaling to larger systems: As systems grow more complex, scaling PGNNs becomes computationally expensive, making it difficult to apply them to large-scale problems [38].

- Data quality and quantity: PGNNs rely heavily on high-quality, complete datasets. Incomplete or noisy data can lead to poor performance, and obtaining clean data is often costly and challenging [35].

- Balancing physics constraints and flexibility: It is difficult to find the right balance between adhering to physical laws and allowing flexibility in data-driven learning. Too much focus on physics constraints can limit the learning process, while too much flexibility can lead to physically inaccurate results [39].

- Slower performance: PGNNs can be computationally slower than traditional methods due to the complex optimization process needed to balance physics constraints with data-driven learning [40].

11.2. Challenges of PGNN

- Challenge 1: Integration of Multiple Laws of Physics. The incorporation of numerous physics principles into physics-guided neural networks (PGNNs) offers potential advantages, but it also poses some difficulties. To maximize PGNN performance, open challenges must be solved, such as comparing various integration techniques, giving heuristics for their application, and defining the combinability and order of physics laws [25,41,42,43,44].

- Challenge 2: Guidelines for Creating Efficient PGNN Models. It is crucial to establish standards for creating effective PGNNs. Although multiple PGNN designs can tackle different challenges, their efficacy may differ. Systematic methodologies and guidelines are required to develop PGNN models, providing a comprehensive, step-by-step approach suitable for both experienced practitioners and novices. The current understanding of good PGNN design is often both complementary and conflicting, suggesting a lack of defined principles [45,46,47,48,49].

- Challenge 3: Balancing Physics-Based Constraints with Data-Driven Flexibility. A key challenge in PGNNs is maintaining a balance between applying physics rules and allowing flexibility for data-driven learning. If too much focus is placed on the physics constraints, the model may struggle to learn effectively from data. On the other hand, if the model is too flexible, it may break important physical laws. A recent study [39] proposes a balanced approach for vapor compression systems using two methods:

  - (a) Modular model implementation: Data-driven models for individual components are built separately and integrated, allowing flexibility and reuse across different systems.

  - (b) Physical conservation enforcement: Physical laws like mass and energy conservation are enforced, ensuring accuracy while maintaining the efficiency of data-driven techniques.
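As a hedged illustration of conservation enforcement in general (not the specific method of [39]), the sketch below treats mass balance at a junction in two ways. The hard version projects predicted flows onto the constraint that they sum to zero. The soft version returns a penalty that could be added to a training loss. The sign convention (inflows positive, outflows negative) and all names and values are assumptions.

```python
def enforce_mass_balance(flows):
    """Hard constraint: project flows onto sum(flows) == 0 with minimal L2 change."""
    imbalance = sum(flows) / len(flows)
    return [f - imbalance for f in flows]


def conservation_penalty(flows):
    """Soft constraint: squared mass-balance residual, usable as a loss term."""
    return sum(flows) ** 2


# Hypothetical network outputs for one inflow and two outflows (kg/s).
raw = [1.20, -0.70, -0.35]
corrected = enforce_mass_balance(raw)
```

The projection spreads the residual equally over all flows, which is the smallest correction in the least-squares sense. A domain-specific scheme might instead weight the correction by how uncertain each predicted flow is.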

11.3. Discussion of Future Research Directions for PGNN

12. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Robinson, H.; Pawar, S.; Rasheed, A.; San, O. Physics guided neural networks for modelling of non-linear dynamics. Neural Netw. 2022, 154, 333–345.

- [2] Robinson, H.; Lundby, E.; Rasheed, A.; Gravdahl, J.T. Deep learning assisted physics-based modeling of aluminum extraction process. Eng. Appl. Artif. Intell. 2023, 125, 106623.

- [3] Zhang, Y.; Liu, Y.; Li, X.; Jiang, S.; Dixit, K.; Zhang, X.; Ji, X. Pgnn: Physics-guided neural network for fourier ptychographic microscopy. arXiv 2019, arXiv:1909.08869.

- [4] Daw, A.; Karpatne, A.; Watkins, W.D.; Read, J.S.; Kumar, V. Physics-guided neural networks (pgnn): An application in lake temperature modeling. In Knowledge Guided Machine Learning; Chapman and Hall/CRC: Boca Raton, FL, USA, 2022; pp. 353–372.

- [5] Vaida, M.; Patil, P. Semi-Supervised Graph Neural Network with Probabilistic Modeling to Mitigate Uncertainty. In Proceedings of the 2020 the 4th International Conference on Information System and Data Mining, Hawaii, HI, USA, 15–17 May 2020; pp. 152–156.

- [6] Blakseth, S.S.; Rasheed, A.; Kvamsdal, T.; San, O. Combining physics-based and data-driven techniques for reliable hybrid analysis and modeling using the corrective source term approach. Appl. Soft Comput. 2022, 128, 109533.

- [7] Faroughi, S.A.; Pawar, N.; Fernandes, C.; Raissi, M.; Das, S.; Kalantari, N.K.; Mahjour, S.K. Physics-guided, physics-informed, and physics-encoded neural networks in scientific computing. arXiv 2022, arXiv:2211.07377.

- [8] Khademi, M.; Schulte, O. Deep generative probabilistic graph neural networks for scene graph generation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11237–11245.

- [9] Kumar, A.; Ridha, S.; Narahari, M.; Ilyas, S.U. Physics-guided deep neural network to characterize non-Newtonian fluid flow for optimal use of energy resources. Expert Syst. Appl. 2021, 183, 115409.

- [10] Fan, D. Physics-Guided Neural Networks for Inversion-Based Feedforward Control of a Hybrid Stepper Motor. Master’s Thesis, Eindhoven University of Technology, Eindhoven, The Netherlands, 2022.

- [11] Bento, M.E. Physics-guided neural network for load margin assessment of power systems. IEEE Trans. Power Syst. 2023, 39, 564–575.

- [12] Li, H.; Gou, L.; Li, H.; Liu, Z. Physics-guided neural network model for aeroengine control system sensor fault diagnosis under dynamic conditions. Aerospace 2023, 10, 644.

- [13] Tadesse, Z.; Patel, K.; Chaudhary, S.; Nagpal, A. Neural networks for prediction of deflection in composite bridges. J. Constr. Steel Res. 2012, 68, 138–149.

- [14] Hung, T.V.; Viet, V.Q.; Van Thuat, D. A deep learning-based procedure for estimation of ultimate load carrying of steel trusses using advanced analysis. J. Sci. Technol. Civ. Eng. JSTCE-HUCE 2019, 13, 113–123.

- [15] Cheng, C.; Zhang, G.T. Deep learning method based on physics informed neural network with resnet block for solving fluid flow problems. Water 2021, 13, 423.

- [16] Lou, Q.; Meng, X.; Karniadakis, G.E. Physics-informed neural networks for solving forward and inverse flow problems via the Boltzmann-BGK formulation. J. Comput. Phys. 2021, 447, 110676.

- [17] You, H.; Zhang, Q.; Ross, C.J.; Lee, C.H.; Yu, Y. Learning deep implicit Fourier neural operators (IFNOs) with applications to heterogeneous material modeling. Comput. Methods Appl. Mech. Eng. 2022, 398, 115296.

- [18] Dulny, A.; Hotho, A.; Krause, A. NeuralPDE: Modelling dynamical systems from data. In Advances in Artificial Intelligence; Springer: Cham, Switzerland, 2022; pp. 75–89.

- [19] Faroughi, S.A.; Roriz, A.I.; Fernandes, C. A meta-model to predict the drag coefficient of a particle translating in viscoelastic fluids: A machine learning approach. Polymers 2022, 14, 430.

- [20] Maddu, S.; Sturm, D.; Cheeseman, B.L.; Müller, C.L.; Sbalzarini, I.F. STENCIL-NET: Data-driven solution-adaptive discretization of partial differential equations. arXiv 2021, arXiv:2101.06182.

- [21] Banga, S.; Gehani, H.; Bhilare, S.; Patel, S.; Kara, L.B. 3D Topology Optimization using Convolutional Neural Networks. arXiv 2018, arXiv:1808.07440.

- [22] Alawieh, M.B.; Lin, Y.; Zhang, Z.; Li, M.; Huang, Q.; Pan, D.Z. GAN-SRAF: Sub-Resolution Assist Feature Generation Using Conditional Generative Adversarial Networks. In Proceedings of the 56th Annual Design Automation Conference 2019, DAC ’19, Las Vegas, NV, USA, 2–6 June 2019; ACM: New York, NY, USA, 2019; p. 149.

- [23] Huang, C.; Liu, H.; Wu, S.; Jiang, X.; Zhou, L.; Hu, J. Physics-guided neural network for channeled spectropolarimeter spectral reconstruction. Opt. Express 2023, 31, 24387–24403.

- [24] Wu, P.; Dai, H.; Li, Y.; He, Y.; Zhong, R.; He, J. A physics-informed machine learning model for surface roughness prediction in milling operations. Int. J. Adv. Manuf. Technol. 2022, 123, 4065–4076.

- [25] Muralidhar, N.; Bu, J.; Cao, Z.; He, L.; Ramakrishnan, N.; Tafti, D.; Karpatne, A. Phynet: Physics guided neural networks for particle drag force prediction in assembly. In Proceedings of the 2020 SIAM International Conference on Data Mining, Cincinnati, OH, USA, 7–9 May 2020; SIAM: Philadelphia, PA, USA, 2020; pp. 559–567.

- [26] Cai, X.; Huang, C.; Xia, L.; Ren, X. LightGCL: Simple yet effective graph contrastive learning for recommendation. arXiv 2023, arXiv:2302.08191.

- [27] He, W.; Sun, G.; Lu, J.; Fang, X.S. Candidate-aware Graph Contrastive Learning for Recommendation. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval, Taipei, Taiwan, 23–27 July 2023; pp. 1670–1679.

- [28] Luo, X.; Yuan, J.; Huang, Z.; Jiang, H.; Qin, Y.; Ju, W.; Zhang, M.; Sun, Y. Hope: High-order graph ode for modeling interacting dynamics. In Proceedings of the International Conference on Machine Learning. PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 23124–23139.

- [29] Yang, J.; Xu, H.; Mirzoyan, S.; Chen, T.; Liu, Z.; Ju, W.; Liu, L.; Zhang, M.; Wang, S. Poisoning scientific knowledge using large language models. bioRxiv 2023.

- [30] Wang, Y.; Song, Y.; Li, S.; Cheng, C.; Ju, W.; Zhang, M.; Wang, S. Disencite: Graph-based disentangled representation learning for context-specific citation generation. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Association for the Advancement of Artificial Intelligence: Washington, DC, USA, 2022; Volume 36, pp. 11449–11458.

- [31] Ju, W.; Wang, Y.; Qin, Y.; Mao, Z.; Xiao, Z.; Luo, J.; Yang, J.; Gu, Y.; Wang, D.; Long, Q.; et al. Towards Graph Contrastive Learning: A Survey and Beyond. arXiv 2024, arXiv:2405.11868.

- [32] Ju, W.; Yi, S.; Wang, Y.; Xiao, Z.; Mao, Z.; Li, H.; Gu, Y.; Qin, Y.; Yin, N.; Wang, S.; et al. A survey of graph neural networks in real world: Imbalance, noise, privacy and ood challenges. arXiv 2024, arXiv:2403.04468.

- [33] LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- [34] Li, Z.; Zheng, H.; Kovachki, N.; Jin, D.; Chen, H.; Liu, B.; Azizzadenesheli, K.; Anandkumar, A. Physics-informed neural operator for learning partial differential equations. ACM/IMS J. Data Sci. 2024, 1, 1–27.

- [35] Biegler, L.; Biros, G.; Ghattas, O.; Heinkenschloss, M.; Keyes, D.; Mallick, B.; Marzouk, Y.; Tenorio, L.; van Bloemen Waanders, B.; Willcox, K. Large-Scale Inverse Problems and Quantification of Uncertainty; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2011.

- [36] Li, Z.; Kovachki, N.; Azizzadenesheli, K.; Liu, B.; Bhattacharya, K.; Stuart, A.; Anandkumar, A. Fourier neural operator for parametric partial differential equations. arXiv 2020, arXiv:2010.08895.

- [37] Karniadakis, G.E.; Kevrekidis, I.G.; Lu, L.; Perdikaris, P.; Wang, S.; Yang, L. Physics-informed machine learning. Nat. Rev. Phys. 2021, 3, 422–440.

- [38] Wang, R.; Yu, R. Physics-guided deep learning for dynamical systems: A survey. arXiv 2021, arXiv:2107.01272.

- [39] Ma, J.; Qiao, H.; Laughman, C.R. A Physics-Constrained Data-Driven Modeling Approach for Vapor Compression Systems; Mitsubishi Electric Research Laboratories: Cambridge, MA, USA, 2024.

- [40] Bu, J. Achieving More with Less: Learning Generalizable Neural Networks With Less Labeled Data and Computational Overheads; Virginia Tech: Blacksburg, VA, USA, 2023.

- [41] Elhamod, M.; Bu, J.; Singh, C.; Redell, M.; Ghosh, A.; Podolskiy, V.; Lee, W.C.; Karpatne, A. CoPhy-PGNN: Learning physics-guided neural networks with competing loss functions for solving eigenvalue problems. ACM Trans. Intell. Syst. Technol. 2022, 13, 1–23.

- [42] Jin, W.; Chen, L.; Lamichhane, S.; Kavousi, M.; Tan, S.X.D. HierPINN-EM: Fast Learning-Based Electromigration Analysis for Multi-Segment Interconnects Using Hierarchical Physics-informed Neural Network. In Proceedings of the 41st IEEE/ACM International Conference on Computer-Aided Design, San Diego, CA, USA, 29 October–3 November 2022; pp. 1–9.

- [43] Tognan, A.; Patanè, A.; Laurenti, L.; Salvati, E. A Bayesian defect-based physics-guided neural network model for probabilistic fatigue endurance limit evaluation. Comput. Methods Appl. Mech. Eng. 2024, 418, 116521.

- [44] Lian, X.; Chen, L. Probabilistic group nearest neighbor queries in uncertain databases. IEEE Trans. Knowl. Data Eng. 2008, 20, 809–824.

- [45] Bolderman, M.; Lazar, M.; Butler, H. On feedforward control using physics-guided neural networks: Training cost regularization and optimized initialization. In Proceedings of the 2022 European Control Conference (ECC), London, UK, 12–15 July 2022; pp. 1403–1408.

- [46] He, Y.; Wang, Z.; Xiang, H.; Jiang, X.; Tang, D. An artificial viscosity augmented physics-informed neural network for incompressible flow. Appl. Math. Mech. 2023, 44, 1101–1110.

- [47] García-Cervera, C.J.; Kessler, M.; Periago, F. Control of partial differential equations via physics-informed neural networks. J. Optim. Theory Appl. 2023, 196, 391–414.

- [48] Zhao, Y.; Guo, L.; Wong, P.P.L. Application of physics-informed neural network in the analysis of hydrodynamic lubrication. Friction 2023, 11, 1253–1264.

- [49] Demirel, O.B.; Yaman, B.; Shenoy, C.; Moeller, S.; Weingärtner, S.; Akçakaya, M. Signal intensity informed multi-coil encoding operator for physics-guided deep learning reconstruction of highly accelerated myocardial perfusion CMR. Magn. Reson. Med. 2023, 89, 308–321.

- [50] Zhang, Z.; Li, Y.; Zhou, W.; Chen, X.; Yao, W.; Zhao, Y. TONR: An exploration for a novel way combining neural network with topology optimization. Comput. Methods Appl. Mech. Eng. 2021, 386, 114083.

- [51] Wang, S.; Sankaran, S.; Perdikaris, P. Respecting causality is all you need for training physics-informed neural networks. arXiv 2022, arXiv:2203.07404.

- [52] Cai, S.; Mao, Z.; Wang, Z.; Yin, M.; Karniadakis, G.E. Physics-informed neural networks (PINNs) for fluid mechanics: A review. Acta Mech. Sin. 2021, 37, 1727–1738.

- [53] Jagtap, A.D.; Kharazmi, E.; Karniadakis, G.E. Conservative physics-informed neural networks on discrete domains for conservation laws: Applications to forward and inverse problems. Comput. Methods Appl. Mech. Eng. 2020, 365, 113028.

| Aspect | PGNN | PiNN | PENN |
|---|---|---|---|
| Definition | Neural networks that incorporate physical principles into their training to model complex phenomena. | Designed to solve PDEs by incorporating physical laws into the training process. | Combines the strengths of PgNN and PiNN, allowing hard-encoded prior knowledge while being adaptable to physical scenarios. |
| Applications | Structural analysis, topology optimization, health condition assessment, fluid mechanics, solid mechanics, etc. | Fluid mechanics, fluid dynamics, neural particle methods. Advanced variants include PhySRNet, PDDO-PiNN, PiELM, DPiNN, and PiNN-FEM for computational mechanics. | Effective in modeling complex material responses and damage, as well as in extrapolation tasks. |
| Advantages | Can effectively utilize sparse data and incorporate physical constraints to model complex phenomena. | Can deduce governing equations and unknown boundary conditions, improving predictive capabilities. | Demonstrates superior performance in accuracy and computational efficiency compared with PgNN and PiNN. |
| Limitations | Statistics-based training, sparse datasets, interpolation issues, boundary condition challenges, resolution-dependent, average solutions, diversity struggles. | Training complexity, convergence issues, generalization limitations, high computational cost, inverse problem difficulty. | Geometry restrictions, overfitting, training complexity, implementation difficulty, memory cost, initial setup. |
| Experimental Case Study | Tadesse et al. [13] predicted mid-span deflections in composite bridges using ANN with a maximum RMSE of 3.79%. Hung et al. [14] applied ANN to predict the ultimate load factor in a non-linear steel truss with high accuracy. | Cheng and Zhang [15] developed Res-PiNN for fluid simulation with superior results over traditional PiNN. Lou et al. [16] applied PiNN to inverse multiscale flow modeling. | You et al. [17] introduced IFNO for material response modeling, outperforming FNO in hyperelastic and brittle materials. Dulny et al. [18] combined NeuralODE with the Method of Lines for PDE problems but faced limitations with elliptical second-order PDEs. |
| Model Flexibility | Limited flexibility, mainly for graph-structured problems. | High flexibility, effective across multiple physics domains. | Specialized for implicit modeling of complex material behavior. |
| Performance and Accuracy | Stable and accurate in graph-related domains but less effective in continuous fields. | Enhanced PiNN variants (e.g., Res-PiNN) show improved accuracy in handling complex phenomena. | IFNO outperforms traditional methods in material modeling but struggles with elliptical PDEs. |
| Capabilities | | | |
| Speed Improvement | ✓ | ✓ | ✓ |
| Easy Network Training | ✓ | × | × |
| Training Without Labeled Data | × | ✓ | × |
| Physics-Based Loss Function | × | ✓ | ✓ |
| Continuous Solutions | × | ✓ | ✓ |
| Spatiotemporal Interpolation | × | ✓ | ✓ |
| Physics Encoding | × | × | ✓ |
| Operator Learning | × | × | ✓ |
| Continuous-Depth Models | × | × | ✓ |
| Spatiotemporal Extrapolation | × | × | ✓ |
| Solution Transferability | × | × | ✓ |

| Search Engine | Primary Studies |
|---|---|
| ACM Digital Library | 7 |
| Elsevier ScienceDirect | 11 |
| IEEEXplore Digital Library | 4 |
| Springer Online Library | 35 |
| Wiley InterScience | 13 |
| Google Scholar | 11 |
| Total | 81 |

| Search Engine | Search Queries | Results | Primary Studies |
|---|---|---|---|
| ACM Digital Library | acmdlTitle, recordAbstract, author keyword: “physics guided neural network” OR “physics Informed neural network” | 130,654 | 7 |
| Elsevier ScienceDirect | pub-date > 2021 and pub-date < 2024 and TITLE-ABSTR-KEY(“physics guided neural network”) or TITLE-ABSTR-KEY(“PGNN”) or TITLE-ABSTR-KEY(“PINN”)[All Sources(Computer Science)] | 35 | 11 |
| IEEEXplore Digital Library | ((((“Document Title”:“PGNN”) OR “Abstract”:“PGNN”) OR “Author Keywords”:“PGNN”) OR ((“Document Title”:“physics guided neural network”) OR “Abstract”:“physics guided neural network”) OR “Author Keywords”:“physics guided neural network”) OR ((“Document Title”:“physics informed neural network”) OR “Abstract”:“physics informed neural network”) OR “Author Keywords”:“physics informed neural network”)) and refined by Year: 2021–2024 | 251 | 4 |
| Springer Online Library | “PGNN” OR “physics guided neural network” OR “physics informed neural network” within 2021–2024 | 161 | 35 |
| Wiley InterScience | “PGNN” in Article Titles OR “physics guided neural network” in Abstract OR “physics informed neural network” in Keywords between years 2021–2024 | 1477 | 13 |
| Google Scholar | “PGNN” “physics guided neural network” “physics informed neural network”, None of the words: “Physics” “Physics guided Deep Learning”, “PHynet”, “Semi-Supervised Graph Neural Network”, “PGDL”, Date filter: 2021–2024 | 2280 | 11 |

| Keyword | Description | Benefits | Applications |
|---|---|---|---|
| PGNN | A physics-guided neural network (PGNN) is a neural network architecture that incorporates ideas from physics into its design and training process. The purpose of this integration is to enhance the model’s performance, interpretability, and generalization by using the underlying physical laws, restrictions, or relationships present in the data. PGNNs are especially valuable in scientific and technical fields where comprehending fundamental physical events is essential for precise forecasting and decision-making. Some examples of these fields include fluid dynamics, material science, and structural mechanics. | Enhanced efficiency and comprehension by using ideas derived from physics | |
| Physics-Informed Neural Network | A physics-informed neural network (PINN) is a kind of neural network model that incorporates the understanding of physics principles directly into its structure or training process. These networks use physics-based constraints or equations to direct their learning process, allowing them to more effectively capture fundamental physical correlations and enhance their ability to make accurate predictions, particularly in situations where data are scarce or unreliable. PINNs have diverse applications in computational physics, medical imaging, and environmental modeling. | | |
| Physics-Guided Deep Learning | Physics-guided deep learning augments or guides deep learning algorithms using physics-derived concepts. Thanks to this integration, deep learning models can make use of established physical laws, restrictions, or correlations to enhance their robustness, interpretability, and performance. Adding knowledge of physics can make these models more broadly applicable, particularly in fields where physical principles control the underlying events. Applications for physics-guided deep learning may be found in astronomy, geophysics, and biophysics, among other scientific fields. | | |
| Semi-Supervised Graph Neural Network | A Semi-Supervised Graph Neural Network (SSGNN) is a neural network architecture especially tailored for tackling semi-supervised learning tasks on data organized as graphs. Graph neural networks (GNNs) expand conventional neural network designs to process data formatted as graphs, enabling them to capture relational information and connections among data points in a graph. SSGNNs, in the context of semi-supervised learning, use both labeled and unlabeled data to enhance model performance and generalization. They are used in many fields, such as social network analysis, recommendation systems, and biological network analysis. | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gupta, K.D.; Siddique, S.; George, R.; Kamal, M.; Rifat, R.H.; Haque, M.A. Physics Guided Neural Networks with Knowledge Graph. Digital 2024, 4, 846-865. https://doi.org/10.3390/digital4040042