Simple Summary

This study examines whether fractal dimension and lacunarity, two texture measures that describe image complexity, provide additional information beyond standard radiomic features. Using simulated images, we compared these measures to Image Biomarker Standardisation Initiative (IBSI) descriptors with and without wavelet filtering. We found that fractal dimension captures fine texture variation, while lacunarity describes larger structural patterns. Together they provide complementary information, suggesting that both can enhance radiomic analyses focused on multiscale heterogeneity.

Abstract

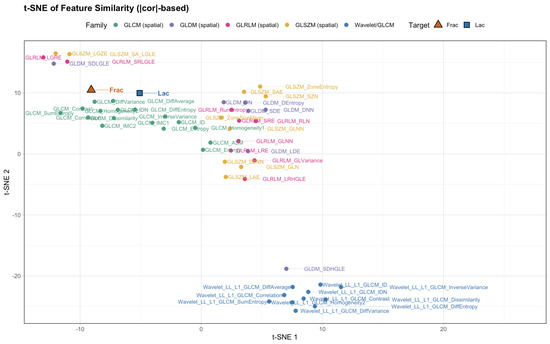

Fractal dimension (Frac) and lacunarity (Lac) are frequently proposed as biomarkers of multiscale image complexity, but their incremental value over standardized radiomics remains uncertain. We position both measures within the Image Biomarker Standardisation Initiative (IBSI) feature space through a fully reproducible comparison in two settings. In a baseline experiment, we analyze simulated textured ROIs discretized to a fixed number of gray levels, computing 92 IBSI descriptors together with Frac (box counting) and Lac (gliding box), for 94 features per ROI. In a wavelet-augmented experiment, we add level-1 wavelet descriptors by recomputing first-order and GLCM features in each sub-band (LL, LH, HL, and HH), which contributes 152 additional features and yields 246 features per ROI. Feature similarity is summarized by a consensus score that averages z-scored absolute Pearson and Spearman correlations, distance correlation, maximal information coefficient, and cosine similarity, and is visualized with clustered heatmaps, dendrograms, sparse networks, PCA loadings, and UMAP and t-SNE embeddings. Across both settings, a stable two-block organization emerges. Frac co-locates with contrast, difference, and short-run statistics that capture high-frequency variation; when wavelets are included, detail-band terms from LH, HL, and HH join this group. Lac co-locates with measures of large, coherent structure (GLSZM zone size, GLRLM long-run, and high-gray-level emphases) and with GLCM homogeneity and correlation; LL (approximation) wavelet features align with this block. Pairwise associations are modest in the baseline but become very strong with wavelets, for example Frac versus GLCM difference entropy, which summarizes the randomness of gray-level differences, and Lac versus GLCM inverse difference normalized (IDN), a homogeneity measure that weights small intensity differences more heavily.
The multimetric consensus and geometric embeddings consistently place Frac and Lac in overlapping yet separable neighborhoods, indicating related but non-duplicative information. Practically, Frac and Lac are most useful when multiscale heterogeneity is central and they add a measurable signal beyond strong IBSI baselines (with or without wavelets); otherwise, closely related variance can be absorbed by standard texture families.

Keywords:

radiomics; fractal dimension; lacunarity; wavelets; GLCM; GLRLM; GLSZM; GLDM; NGTDM; IBSI; texture; heterogeneity

1. Introduction

Radiomics converts medical images into large panels of quantitative descriptors that summarize intensity distributions, texture, shape, and heterogeneity for modeling and decision support across clinical domains [1,2,3,4]. To enable reproducibility and comparability, the Image Biomarker Standardisation Initiative (IBSI) has codified definitions and implementation choices, such as gray-level discretization, neighborhood topology, and angular or distance aggregation, for a core set of matrix-based texture families: the gray-level co-occurrence matrix (GLCM), gray-level run-length matrix (GLRLM), gray-level size-zone matrix (GLSZM), gray-level dependence matrix (GLDM; also called NGLDM), and the neighborhood gray-tone difference matrix (NGTDM). These specifications are widely adopted in open-source toolkits including PyRadiomics [4,5,6,7,8,9,10].

Alongside these standardized families, fractal descriptors, particularly fractal dimension (Frac) and lacunarity (Lac), remain attractive because they target explicitly multiscale properties: Frac quantifies boundary or texture roughness across scales, whereas Lac characterizes the heterogeneity of gaps or voids as a function of scale [11,12,13]. Applications spanning oncology and neuroimaging report encouraging, though heterogeneous, associations with grade, phenotype, and outcome [14,15,16].

These observations motivate two practical questions that matter for clinical translation. First, to what extent do Frac and Lac provide information that is complementary to IBSI-standardized texture families that already encode high-frequency contrast or large-structure emphasis? Strong inter-feature correlations and redundancy are well documented in radiomics, especially among texture families and under different discretization choices [17,18,19,20,21]. Second, how sensitive are fractal estimators to preprocessing and acquisition factors known to affect radiomic stability, including voxel size, gray-level discretization, reconstruction, and segmentation variability [4,22,23,24]? Addressing redundancy and sensitivity is essential to avoid optimistic estimates of model performance, clarify interpretation, and support reproducible pipelines [1,2].

In this work we (i) synthesize the relevant literature on fractal and lacunarity analysis in medical imaging; (ii) provide explicit mathematical definitions for all features considered to ensure unambiguous reproducibility; and (iii) run a two-part controlled simulation in which textured, heterogeneous ROIs are generated and Frac and Lac are compared against standardized radiomics in two configurations: first without wavelets and then with wavelets. In the baseline setting, Frac and Lac are evaluated alongside 92 IBSI features, yielding 94 features per ROI. In the wavelet-augmented setting, we recompute first-order and GLCM descriptors in each of the four wavelet sub-bands (LL, LH, HL, and HH), adding 152 wavelet features for a total of 246 per ROI. Similarities are quantified with five complementary measures (Pearson correlation, Spearman correlation, distance correlation, the maximal information coefficient, and cosine similarity) and summarized with heatmaps, dendrograms, correlation networks, principal component loadings, and two-dimensional embeddings based on UMAP and t-SNE. Our goal is not to advocate for or against fractal biomarkers as such, but to delineate when they add a genuinely complementary signal and when they largely recapitulate standardized descriptors derived from the spatial domain or from wavelets.

Relation to Existing Benchmarks

Our study is positioned as a benchmark comparison that complements established reproducibility and harmonization frameworks in radiomics [4,21,22]. Previous benchmark efforts have mainly quantified how acquisition variability, voxel size, reconstruction, and gray-level discretization influence standardized features across scanners and cohorts, often recommending harmonization methods such as ComBat and rigorous reporting of preprocessing choices [4,23,24]. In contrast, the present study focuses on methodological redundancy and complementarity under controlled simulation. By generating synthetic regions of interest with tunable spatial correlation and optional heterogeneity, we are able to attribute observed associations to feature definitions rather than to protocol confounders.

Within this controlled environment, we evaluate whether fractal descriptors—fractal dimension (Frac) and lacunarity (Lac)—contribute incremental information beyond standardized IBSI texture families and wavelet-based extensions. To achieve this, we keep discretization, angular aggregation, and neighborhood topology fixed according to IBSI recommendations and vary only the underlying texture process. We then quantify feature relatedness using five complementary dependence measures (Pearson, Spearman, distance correlation, maximal information coefficient, and cosine similarity), form a consensus z-score for ranking, and visualize the overall geometry using clustered heatmaps, principal component loadings, and low-dimensional embeddings obtained from UMAP and t-SNE. This multi-view evaluation extends earlier benchmarks that typically emphasize repeatability or single-metric stability by identifying where Frac and Lac reside relative to co-occurrence, run-length, size-zone, dependence, and gray-tone difference features, both with and without wavelet augmentation.

Empirically, a stable two-block organization emerges that aligns with the intended behavior of established feature families. Frac is closely associated with contrast, difference, and short-run statistics that describe high-frequency texture variation, while Lac aligns with large-structure, homogeneity, and long-run statistics. The addition of wavelet features sharpens this distinction, as detail-band components correspond more strongly with Frac, and approximation-band components correspond with Lac. Collectively, these findings indicate that fractal measures are overlapping yet non-duplicative; they follow known axes of variation already represented in IBSI features but retain interpretable multiscale summaries that can be particularly useful when heterogeneity across scales is an essential characteristic.

Finally, this simulation-grounded benchmark complements empirical clinical studies of fractal descriptors [14,15,16] by disentangling intrinsic feature associations from acquisition effects. It provides a transparent framework for understanding when fractal measures are likely to be redundant, such as in models already rich in contrast, short-run, or homogeneity descriptors, and when they can add measurable information. In this way, the study extends existing benchmarks beyond the question of whether features are stable to the more informative question of whether fractal descriptors contribute additional, non-redundant information beyond strong IBSI baselines under conditions where causal attribution is clearly defined.

2. Background and Related Work

2.1. Standardized Radiomic Features

The Image Biomarker Standardisation Initiative (IBSI) specifies preprocessing steps and feature definitions for widely used texture families, and recommends transparent reporting of discretization, neighborhood definitions, and aggregation rules [4]. In brief, the gray-level co-occurrence matrix (GLCM) summarizes how often pairs of discretized gray levels co-occur at fixed offsets and angles [5]; the gray-level run-length matrix (GLRLM) counts contiguous runs of equal gray level [6]; the gray-level size-zone matrix (GLSZM) measures the sizes of connected same-gray zones irrespective of direction [7]; the gray-level dependence matrix (GLDM, also called NGLDM) captures counts of neighboring voxels within a gray-level tolerance [8]; and the neighborhood gray-tone difference matrix (NGTDM) characterizes deviations from the local neighborhood mean [9]. These standardized definitions underpin open-source implementations such as PyRadiomics [10]. Empirically, features from these families can be highly collinear, so clustering or redundancy reduction prior to modeling is commonly recommended [17,18,19]. Repeatability and reproducibility also vary across features and pipelines, motivating harmonization (for example, ComBat), robust cross-validation, and sensitivity analyses to voxel size and discretization [21,22,23,24].

2.2. Fractal Dimension and Lacunarity

Fractal measures target complementary aspects of structure. The box-counting fractal dimension describes a global scaling law: how occupancy grows as resolution is refined, whereas lacunarity captures scale-specific heterogeneity: how unevenly occupancy is distributed at a given window size [11,12,13]. Two textures can share the same fractal dimension yet display distinct lacunarity curves, and the converse can also occur; the measures are therefore not interchangeable. In medical imaging, both have been linked to tumor grade and outcome, although reported associations can be sensitive to the definition of the region of interest, the gray-level binning scheme, and the selection of scales [14,15,16]. Recent algorithmic advances, such as efficient gliding-box lacunarity via integral images, reduce computational burden and encourage systematic comparisons with standardized radiomics [25]. Conceptually, fractal dimension tends to increase with fine-scale roughness or rapid gray-level changes, behavior that also elevates contrast-oriented descriptors derived from co-occurrence and run-length representations. By contrast, lacunarity often rises with broad, coherent zones and heterogeneous voids, echoing GLSZM large-area and GLRLM long-run emphases as well as homogeneity and inverse-difference measures in the GLCM family [5,6,7,9]. Because standardized families already approximate these tendencies, it is important to determine whether fractal descriptors contribute genuinely new information or largely re-encode signals captured by classical features. The redundancy and protocol sensitivity observed across radiomics [17,18,19,21,22,23,24] motivate the controlled evaluation undertaken here.

2.3. Clinical Applications of Radiomics and the Role of Fractal Measures

Radiomics has been applied widely for diagnosis, phenotyping, risk stratification, and outcome prediction in oncology and neurology [1,2,3]. In cancer imaging, quantitative signatures extracted from CT, MR, or PET have been associated with tumor biology and prognosis and incorporated into decision-support workflows. Beyond oncology, neuroimaging applications, such as glioma grading, stroke classification, and phenotyping of neurodegenerative disease, use similar pipelines that combine first-order, shape, and matrix-based texture features with predictive modeling [2]. Within this landscape, fractal descriptors offer an explicitly multiscale perspective. Studies report that three-dimensional fractal dimension and lacunarity can aid grading or subtype discrimination when added to conventional MR features in neuro-oncology [14], and broader reviews document encouraging but heterogeneous evidence across applications [15,16]. The key open question is incremental value: given that IBSI-standardized features already capture high-frequency contrast (for example, GLCM contrast or dissimilarity and short-run GLRLM) and large-structure or homogeneity effects (for example, GLSZM large-area or zone-size statistics; long-run and high-gray-level GLRLM; GLCM inverse-difference and homogeneity), under what conditions do fractal dimension and lacunarity add a non-redundant signal? Answering this calls for transparent pipelines, harmonization where appropriate, and robust validation to account for protocol sensitivity and feature collinearity [4,21,22,23,24].

Moreover, a closer look at recent methodological work in adjacent domains provides additional context. For example, Ilmi & Khalaf's graph–temporal fusion for yoga pose recognition [26] shows the power of combining spatial graphs with temporal dynamics, but its non-medical dataset and the absence of standardized texture extraction limit its direct relevance to radiomics. Similarly, Jumadi & Md Akbar's hybrid GRU-KAN model for energy consumption prediction [27] demonstrates effective hybrid modeling of time series, but it addresses a non-imaging domain and involves no imaging preprocessing or harmonization. A recent review of Mask R-CNN for instance segmentation [28] emphasizes the importance of robust segmentation, which is directly relevant to reproducible radiomic and fractal extraction, but it does not explicitly address feature redundancy or multiscale heterogeneity. Finally, a stacked ensemble for cervical cancer prediction from tabular data [29] reinforces the role of ensemble and regularization strategies in handling collinearity and class imbalance, issues that are equally relevant in radiomic pipelines assessing incremental features such as fractal dimension and lacunarity. Taken together, these works highlight emerging best practices (graph–temporal modeling, hybrid architectures, segmentation robustness, ensemble calibration) as well as their limitations (domain mismatch, lack of imaging standardization, no explicit multiscale heterogeneity descriptors), which reinforce the need for a simulation-grounded, IBSI-aligned evaluation of the incremental value of fractal measures computed within a multiscale feature pipeline.

3. Materials and Methods

3.1. Radiomic Feature Set: Definitions and Computation

We consider a two-dimensional region of interest (ROI) represented by a real-valued image $X$ with $H$ pixel rows (height) and $W$ pixel columns (width), defined on the grid $\Omega = \{1,\dots,H\} \times \{1,\dots,W\}$, so that $X : \Omega \to \mathbb{R}$. Let $Q = \mathcal{D}(X)$ denote the discretized (gray-level binned) version of $X$ obtained by an operator $\mathcal{D}$; unless otherwise stated, we use equal-frequency (quantile) binning into $N_g$ gray levels, in line with common IBSI-style configurations [4]. Two feature configurations are examined. In the baseline setting, we extract 92 IBSI-standardized descriptors (19 first-order, 19 GLCM, 16 GLRLM, 16 GLSZM, 14 GLDM, 5 NGTDM, and 3 two-dimensional shape proxies) together with two fractal descriptors (Frac and Lac), for 94 features per ROI. In the wavelet-augmented setting, we add level-1 wavelet features by recomputing first-order and GLCM descriptors on each of the four sub-bands (LL, LH, HL, HH), which contributes $4 \times (19 + 19) = 152$ additional features. The wavelet-augmented total is therefore $94 + 152 = 246$ features.
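The discretization operator $\mathcal{D}$ can be sketched as follows. This is a minimal NumPy illustration, assuming that bin edges are the ROI's own empirical quantiles and that gray levels are numbered from 1; the function name `quantile_discretize` is hypothetical, and the number of levels is left as a parameter because its value is fixed elsewhere in the study.

```python
import numpy as np


def quantile_discretize(x, n_levels):
    """Equal-frequency (quantile) binning of ROI intensities into levels 1..n_levels.

    Assumption: bin edges are the empirical quantiles of this ROI only, so each
    level receives (approximately) the same number of pixels; ties can unbalance bins.
    """
    values = np.asarray(x, dtype=float)
    # Interior cut points at the 1/n, 2/n, ..., (n-1)/n quantiles.
    cuts = np.quantile(values, np.linspace(0.0, 1.0, n_levels + 1)[1:-1])
    # np.digitize maps values to 0..n_levels-1 for these cuts; shift to 1-based levels.
    return np.digitize(values, cuts) + 1
```

Because the edges adapt to each ROI, the resulting gray-level histogram is close to uniform by construction; spatial arrangement, not the marginal histogram, then carries most of the texture information.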

3.1.1. First-Order (Intensity) Features

First-order features summarize the distribution of voxel intensities in an ROI without reference to spatial arrangement [1,2,4]. They provide baseline information about central tendency, spread, tail behavior, and histogram shape. We follow a nineteen-feature set that aligns with IBSI-style implementations and widely used toolkits [3,4,10]: (i) power measures (Energy, Total Energy, RMS); (ii) dispersion (Variance, Standard deviation, Range, IQR); (iii) shape (Skewness, Kurtosis); (iv) histogram complexity (Entropy, Uniformity); and (v) location and robust summaries (Mean, Median, P10, P90, MAD, rMAD, as well as Min and Max). Definitions and formulas, together with brief interpretations, appear in Appendix A Table A1 [4,10].

Several practical points aid interpretation. Energy, Total Energy, RMS, Variance, and Standard deviation are scale dependent: linear rescaling of intensities changes their values, which is appropriate when physical units are meaningful but can confound cross-scanner comparisons if not harmonized [21,23]. Entropy and Uniformity depend on gray-level discretization (the number of gray levels $N_g$ and the binning scheme), so consistent preprocessing is important for reproducibility [4,22,23]. Median, IQR, MAD, and rMAD are more robust to noise and outliers than mean and variance and often stabilize modeling under heterogeneous acquisition conditions [2,4]. Although some measures are correlated by construction (for example, Energy and RMS), retaining both can be useful when regularization is used or when reporting adheres to radiomic standards; otherwise, redundancy reduction (e.g., correlation filtering) can simplify models with little loss of information [4,18,19].
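The following sketch computes a subset of the first-order features listed above from raw intensities `x` and their discretized levels `q`. It is an illustration under stated assumptions rather than the study's code: it uses population moments, reports non-excess kurtosis (IBSI defines kurtosis with 3 subtracted), omits Total Energy (which needs the voxel volume), and the function name `first_order_subset` is hypothetical.

```python
import numpy as np


def first_order_subset(x, q):
    """Selected first-order features (hypothetical helper).

    x: raw ROI intensities; q: the same pixels after gray-level discretization.
    Uses population moments; kurtosis is NOT excess kurtosis (IBSI subtracts 3).
    """
    x = np.asarray(x, dtype=float).ravel()
    q = np.asarray(q).ravel()
    dev = x - x.mean()
    m2 = np.mean(dev ** 2)
    p10, p90 = np.percentile(x, [10, 90])
    band = x[(x >= p10) & (x <= p90)]          # values used by the robust MAD
    _, counts = np.unique(q, return_counts=True)
    p = counts / counts.sum()                  # gray-level histogram probabilities
    return {
        "Energy": float(np.sum(x ** 2)),
        "RMS": float(np.sqrt(np.mean(x ** 2))),
        "Variance": float(m2),
        "Skewness": float(np.mean(dev ** 3) / m2 ** 1.5),
        "Kurtosis": float(np.mean(dev ** 4) / m2 ** 2),
        "Entropy": float(-np.sum(p * np.log2(p))),
        "Uniformity": float(np.sum(p ** 2)),
        "Median": float(np.median(x)),
        "IQR": float(np.subtract(*np.percentile(x, [75, 25]))),
        "MAD": float(np.mean(np.abs(dev))),
        "rMAD": float(np.mean(np.abs(band - band.mean()))),
    }
```

Rescaling `x` by a constant changes Energy, RMS, and Variance but leaves Entropy and Uniformity untouched as long as `q` is unchanged, which mirrors the scale-dependence remarks above.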

3.1.2. GLCM Features

The gray-level co-occurrence matrix (GLCM) encodes how often pairs of discretized gray levels co-occur at a fixed spatial offset. In this study the matrix is computed at distance 1, aggregated over four angles $\theta \in \{0^\circ, 45^\circ, 90^\circ, 135^\circ\}$, symmetrized, and normalized so that $\sum_{i=1}^{N_g} \sum_{j=1}^{N_g} p(i,j) = 1$ [4,5].

Intuitively, mass far from the diagonal signals frequent high-contrast transitions, whereas mass concentrated near the diagonal reflects locally smoother textures [1,2]. We report nineteen descriptors: classical contrast and entropy terms; two homogeneity variants; two inverse-difference variants; inverse variance; correlation; maximum probability; three sum/difference statistics; and both information measures of correlation (IMC1/IMC2) [4,10]. Exact formulas are given in Appendix A Table A2.
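A minimal NumPy sketch of the matrix just described (distance 1, four angles, symmetrized, normalized) and of four of its descriptors follows. The aggregation choice is an assumption: here the four angular matrices are summed before normalization, whereas IBSI also describes averaging feature values computed per angle. Function names are hypothetical, and gray levels are assumed to run from 1 to `n_levels`.

```python
import numpy as np


def glcm_sym_norm(q, n_levels):
    """Symmetric, normalized GLCM at distance 1 over 0/45/90/135 degrees (hypothetical helper).

    Assumption: the four angular matrices are summed before normalization.
    q must contain integer gray levels 1..n_levels.
    """
    q = np.asarray(q) - 1                       # 0-based indices
    H, W = q.shape
    P = np.zeros((n_levels, n_levels))
    # Offsets (dy, dx) for 0, 45, 90, 135 degrees (row index grows downwards).
    for dy, dx in [(0, 1), (-1, 1), (-1, 0), (-1, -1)]:
        # Pair pixel (i, j) with neighbour (i + dy, j + dx) wherever both lie in the ROI.
        r0, r1 = max(0, -dy), H - max(0, dy)
        c0, c1 = max(0, -dx), W - max(0, dx)
        a = q[r0:r1, c0:c1]
        b = q[r0 + dy:r1 + dy, c0 + dx:c1 + dx]
        np.add.at(P, (a.ravel(), b.ravel()), 1)
    P = P + P.T                                 # symmetrize
    return P / P.sum()


def glcm_features(P):
    """Four of the nineteen GLCM descriptors (log base 2 for entropies)."""
    n = P.shape[0]
    i, j = np.indices(P.shape) + 1
    diff = np.abs(i - j)
    nz = P > 0
    p_diff = np.bincount(diff.ravel(), weights=P.ravel())   # p_{x-y}(k)
    p_diff = p_diff[p_diff > 0]
    return {
        "Contrast": float(np.sum(diff ** 2 * P)),
        "JointEntropy": float(-np.sum(P[nz] * np.log2(P[nz]))),
        "IDN": float(np.sum(P / (1.0 + diff / n))),
        "DifferenceEntropy": float(-np.sum(p_diff * np.log2(p_diff))),
    }
```

For a constant ROI all mass sits on the diagonal, so contrast and both entropies vanish and IDN equals 1; any off-diagonal mass raises contrast and difference entropy while lowering IDN.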

3.1.3. GLRLM Features

The gray-level run-length matrix (GLRLM) counts how often contiguous runs of an identical gray level occur and how long those runs persist across the image [4,6]. After discretization, a run is the maximal sequence of adjacent pixels with the same gray level observed along a given direction. Short runs reflect a fine, rapidly varying texture, whereas long runs reflect a coarse, more uniform structure. By cross-tabulating run length with gray level, GLRLM features quantify, within one family, both scale (short and long) and tone (low and high gray) emphases. We compute GLRLMs at distance 1, aggregate over four angles to improve directional robustness, and normalize to probabilities [4]. Formulas for all sixteen descriptors appear in Appendix A Table A3 [4,7,10].
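The run-length counting can be sketched as below, assuming gray levels 1..`n_levels`, runs collected along rows, columns, and both diagonals and summed into a single matrix, and short/long run emphases computed from the normalized matrix. Function names are hypothetical, and the sketch favors clarity over speed.

```python
import numpy as np


def _lines(q):
    """Yield 1-D pixel sequences along rows, columns and both diagonals."""
    H, W = q.shape
    yield from q                                # horizontal
    yield from q.T                              # vertical
    flipped = q[:, ::-1]
    for k in range(-H + 1, W):
        yield np.diagonal(q, offset=k)          # top-left to bottom-right
        yield np.diagonal(flipped, offset=k)    # top-right to bottom-left


def glrlm(q, n_levels):
    """R[i-1, l-1] = number of runs of gray level i with length l, summed over four directions."""
    q = np.asarray(q)
    R = np.zeros((n_levels, max(q.shape)))
    for line in _lines(q):
        start = 0
        for k in range(1, len(line) + 1):
            # A run ends at the end of the line or where the gray level changes.
            if k == len(line) or line[k] != line[start]:
                R[line[start] - 1, k - start - 1] += 1
                start = k
    return R


def run_emphases(R):
    """Short- and long-run emphasis from the normalized matrix."""
    p = R / R.sum()
    lengths = np.arange(1, R.shape[1] + 1)
    return {"SRE": float(np.sum(p / lengths ** 2)), "LRE": float(np.sum(p * lengths ** 2))}
```

A useful sanity check is that every pixel is covered exactly once per direction, so $\sum_{i,l} l \, R(i,l)$ equals four times the number of pixels.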

3.1.4. GLSZM Features

The gray-level size-zone matrix (GLSZM) summarizes the distribution of connected zones of equal gray level within an ROI, irrespective of direction [4,7]. A zone is the maximal set of pixels that share the same discretized gray level and are connected under an 8-neighborhood in two dimensions [4,10]. Textures dominated by small zones correspond to fine, fragmented patterns, whereas textures dominated by large zones reflect coarse, homogeneous structure [2]. By cross-tabulating gray level and zone size, GLSZM features quantify how coarseness interacts with tone [4,7]. We use 8-connectivity, aggregate over the region, and normalize to probabilities [4,10]. Formulas for all sixteen descriptors appear in Appendix A Table A4 [4,10].
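Zones can be extracted with connected-component labeling. The sketch below assumes SciPy's `ndimage.label` with a 3×3 structuring element (8-connectivity), applied one gray level at a time; the function names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def glszm(q, n_levels):
    """Z[i-1, s-1] = number of 8-connected zones of gray level i containing s pixels."""
    q = np.asarray(q)
    eight = np.ones((3, 3), dtype=int)          # 8-connectivity structuring element
    Z = np.zeros((n_levels, q.size))
    for level in range(1, n_levels + 1):
        labels, n_zones = ndimage.label(q == level, structure=eight)
        if n_zones == 0:
            continue
        sizes = np.bincount(labels.ravel())[1:]   # drop background label 0
        np.add.at(Z[level - 1], sizes - 1, 1)
    return Z


def zone_emphases(Z):
    """Small- and large-zone emphasis from the normalized matrix."""
    p = Z / Z.sum()
    sizes = np.arange(1, Z.shape[1] + 1)
    return {"SZE": float(np.sum(p / sizes ** 2)), "LZE": float(np.sum(p * sizes ** 2))}
```

Connectivity matters: on a 4×4 checkerboard, each color forms a single zone of eight pixels under 8-connectivity, whereas 4-connectivity would split it into eight single-pixel zones.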

3.1.5. GLDM Features

The gray-level dependence matrix (GLDM, also called NGLDM) quantifies, for each discretized gray level i, how many pixels in a fixed neighborhood are dependent on the center pixel [4,8,10]. Two pixels are considered dependent if the absolute difference of their gray levels does not exceed a tolerance $\alpha$. In this work $\alpha = 0$, meaning only exactly equal gray levels (after discretization) count as dependent [4,10]. Using a Chebyshev-1 neighborhood in two dimensions provides up to eight surrounding pixels; when the center is included in the count, the dependence size d ranges from 1 to 9 [4]. Tallying, for each gray level i, how often each dependence size d occurs within this neighborhood captures the prevalence of isolated pixels versus coherent tone-consistent patches and how these patterns vary with gray level [4,8]. Formulas for all fourteen descriptors appear in Appendix A Table A5 [4,10].
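With $\alpha = 0$ and a Chebyshev-1 neighborhood, the dependence matrix can be sketched as follows. Pixels outside the ROI are treated as non-dependent (an assumption about border handling), the center pixel is counted so that $d \in \{1, \dots, 9\}$, and the function names are hypothetical.

```python
import numpy as np


def gldm(q, n_levels, alpha=0):
    """G[i-1, d-1] = number of pixels of gray level i with dependence size d (centre included).

    Chebyshev-1 neighbourhood; neighbours outside the ROI never count as dependent
    (border-handling assumption). With alpha = 0 only identical levels are dependent.
    """
    q = np.asarray(q)
    H, W = q.shape
    padded = np.pad(q, 1)                                 # padding values are masked below
    inside = np.pad(np.ones((H, W), dtype=bool), 1)       # False on the padding ring
    dep = np.ones((H, W), dtype=int)                      # the centre counts itself
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy == 0 and dx == 0:
                continue
            neigh = padded[1 + dy:1 + dy + H, 1 + dx:1 + dx + W]
            valid = inside[1 + dy:1 + dy + H, 1 + dx:1 + dx + W]
            dep += valid & (np.abs(neigh - q) <= alpha)
    G = np.zeros((n_levels, 9))
    np.add.at(G, (q.ravel() - 1, dep.ravel() - 1), 1)
    return G


def dependence_emphases(G):
    """Small- and large-dependence emphasis from the normalized matrix."""
    p = G / G.sum()
    d = np.arange(1, 10)
    return {"SDE": float(np.sum(p / d ** 2)), "LDE": float(np.sum(p * d ** 2))}
```

On a constant 3×3 ROI, for instance, the center has $d = 9$, edge pixels $d = 6$, and corners $d = 4$, showing how the ROI border alone lowers dependence sizes.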

3.1.6. NGTDM Features

The neighborhood gray-tone difference matrix (NGTDM) summarizes how each discretized gray level deviates, on average, from its local neighborhood [4,9]. Given a quantized image $Q$, we evaluate each interior pixel using a $3 \times 3$ window (Chebyshev radius 1) and define the neighborhood mean as the average of the eight surrounding pixels, excluding the center [4,10]. To avoid edge artifacts, only pixels with a full neighborhood (that is, interior pixels) contribute to the statistics [4]. Formulas for all five descriptors appear in Appendix A Table A6 [4,10].
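The NGTDM accumulation over interior pixels can be sketched as below, returning the per-level pixel counts $n_i$ and summed absolute deviations $s_i$, with coarseness as one example descriptor. Names are hypothetical; for a perfectly homogeneous region coarseness is undefined, and this sketch returns infinity, whereas some toolkits substitute a large constant.

```python
import numpy as np


def ngtdm(q, n_levels):
    """Counts n_i and summed |i - neighbourhood mean| s_i over interior pixels only."""
    q = np.asarray(q, dtype=float)
    H, W = q.shape
    core = q[1:-1, 1:-1]
    # Sum of each 3x3 window; subtracting the centre leaves the eight neighbours.
    window_sum = sum(q[1 + dy:H - 1 + dy, 1 + dx:W - 1 + dx]
                     for dy in (-1, 0, 1) for dx in (-1, 0, 1))
    nbr_mean = (window_sum - core) / 8.0
    levels = core.astype(int).ravel() - 1
    n = np.bincount(levels, minlength=n_levels).astype(float)
    s = np.bincount(levels, weights=np.abs(core - nbr_mean).ravel(), minlength=n_levels)
    return n, s


def coarseness(n, s):
    """1 / sum_i p_i s_i; returns inf for a perfectly homogeneous region."""
    p = n / n.sum()
    denom = float(np.sum(p * s))
    return np.inf if denom == 0.0 else 1.0 / denom
```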

3.1.7. Shape-2D Proxies

For this 2D simulation we summarize gross morphology with four compact descriptors computed on a binary ROI mask: area, a 4-neighbor (Manhattan) perimeter, and two in-plane anisotropy measures derived from the principal variances of the foreground coordinates. This deliberately lightweight set mirrors the intent of the standardized 3D shape features recommended by IBSI while remaining appropriate for single-slice analyses [4,10]. Formulas for all four descriptors appear in Appendix A Table A7 [4,10].
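A sketch of the four shape proxies follows. Area and the 4-neighbor perimeter follow the description above; the two anisotropy measures are hypothetical stand-ins (a square-root eigenvalue ratio and an eccentricity-style quantity), because their exact definitions are given in Table A7 and are not reproduced here. The mask is assumed to contain at least two distinct foreground pixels.

```python
import numpy as np


def shape2d(mask):
    """Area, 4-neighbour perimeter and two HYPOTHETICAL anisotropy measures of a binary mask."""
    m = np.asarray(mask, dtype=bool)
    padded = np.pad(m, 1)
    # Manhattan perimeter: count foreground/background transitions between 4-neighbours.
    perimeter = int(np.sum(padded[1:, :] != padded[:-1, :]) +
                    np.sum(padded[:, 1:] != padded[:, :-1]))
    coords = np.argwhere(m).astype(float)
    # Principal variances of the foreground coordinates, ascending.
    lam = np.linalg.eigvalsh(np.cov(coords, rowvar=False))
    ratio = max(lam[0], 0.0) / lam[1]
    return {
        "Area": int(m.sum()),
        "Perimeter4": perimeter,
        "Elongation": float(np.sqrt(ratio)),                    # assumed definition
        "Eccentricity": float(np.sqrt(max(0.0, 1.0 - ratio))),  # assumed definition
    }
```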

3.2. Wavelet Features

We complement the spatial-domain descriptors with a stationary (undecimated) level-1 two-dimensional wavelet transform so that all filtered images retain the original ROI size [30,31]. We begin by specifying notation. Let $X \in \mathbb{R}^{H \times W}$ be indexed as $X[i,j]$, where $i$ is the row (vertical, $y$) index and $j$ is the column (horizontal, $x$) index. Let

$$h = \big(h[-m_h], \dots, h[m_h]\big), \qquad g = \big(g[-m_g], \dots, g[m_g]\big)$$

be the one-dimensional low- and high-pass analysis filters with finite (odd) lengths

$$\ell_h = 2m_h + 1, \qquad \ell_g = 2m_g + 1,$$

so that $m_h$ (respectively, $m_g$) is the half-support; that is, the number of taps on one side of the filter center. Throughout we use symmetric (mirror) padding at the borders and no downsampling (the transform is undecimated) [31,32]. Unless noted otherwise, the wavelet family is Coiflet-1 for its near-symmetry and compact support [33].

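Next we define one-dimensional discrete convolutions along the column ($x$) and row ($y$) axes and a two-dimensional discrete convolution $*$; we denote 1-D convolution along columns by $*_x$ and along rows by $*_y$. Convolution along $x$ keeps rows fixed and sums across columns,

$$(f *_x X)[i,j] = \sum_{u=-m_f}^{m_f} f[u]\, X[i,\, j-u],$$

while convolution along $y$ keeps columns fixed and sums across rows,

$$(f *_y X)[i,j] = \sum_{v=-m_f}^{m_f} f[v]\, X[i-v,\, j],$$

where $f \in \{h, g\}$ denotes the low- or high-pass filter and $m_f$ its half-support. If an index falls outside the valid image range $1 \le i \le H$, $1 \le j \le W$, it is reflected back into the interval (symmetric padding) before sampling $X$ [4,10].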

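We then obtain four stationary level-1 sub-bands by low-pass ($h$) and high-pass ($g$) filtering along $x$ and $y$ without downsampling:

$$X_{LL} = h *_y (h *_x X), \qquad X_{LH} = g *_y (h *_x X), \qquad X_{HL} = h *_y (g *_x X), \qquad X_{HH} = g *_y (g *_x X).$$

Equivalently, with separable two-dimensional kernels $K_{LL}$, $K_{LH}$, $K_{HL}$, and $K_{HH}$ and 2D convolution $*$,

$$X_{ab} = K_{ab} * X, \qquad K_{ab}[v,u] = f_b[v]\, f_a[u], \qquad f_L = h,\; f_H = g,\; a, b \in \{L, H\},$$

where $K_{ab}$ is the outer product of the one-dimensional filters (a separable two-dimensional kernel), and

$$(K * X)[i,j] = \sum_{v} \sum_{u} K[v,u]\, X[i-v,\, j-u]$$

is the two-dimensional discrete convolution of kernel $K$ with image $X$. Here, $u$ and $v$ are integer offsets that index the kernel support horizontally and vertically, respectively; the double sum is taken over the finite support where $K[v,u] \neq 0$. The LL band collects coarse (approximation) content, whereas LH, HL, and HH collect horizontal, vertical, and diagonal detail.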

Each sub-band is re-standardized (per sub-band, per ROI) to zero mean and unit variance prior to discretization to mitigate scale differences across sub-bands and ROIs [21]. (For predictive modeling, standardization parameters should be estimated on the training set only to avoid leakage.) Discretization then follows the base analysis: quantile binning into $N_g$ equiprobable gray levels, applied independently to each sub-band [4,23]. On every discretized sub-band we compute first-order (19) and GLCM (19) statistics, yielding $4 \times (19 + 19) = 152$ wavelet features per ROI. This mirrors widely used radiomic configurations and captures frequency- and orientation-specific cues [17,34,35] while controlling multiplicity and collinearity [19,22]. Other matrix families (GLRLM, GLSZM, GLDM, NGTDM) can be added analogously, but here we restrict the wavelet set to first-order and GLCM for parsimony [19,22].
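The undecimated level-1 decomposition and per-sub-band re-standardization can be sketched with NumPy alone. Assumptions: Haar filters stand in for Coiflet-1 so the example needs no extra dependency (Coiflet-1 coefficients could instead be taken from PyWavelets, e.g. `pywt.Wavelet("coif1").dec_lo` and `.dec_hi`); even-length filters are aligned with an asymmetric pad, which shifts the output by half a sample; and the sub-band labels assume the first letter gives the filter along $x$ and the second the filter along $y$, so that LH carries horizontal detail as stated above. Function names are hypothetical.

```python
import numpy as np

# Assumption: Haar filters stand in for Coiflet-1 so the sketch needs only NumPy.
HAAR_LO = np.array([1.0, 1.0]) / np.sqrt(2.0)
HAAR_HI = np.array([1.0, -1.0]) / np.sqrt(2.0)


def conv_axis(x, f, axis):
    """Same-size 1-D convolution along `axis` with symmetric (mirror) border padding."""
    f = np.asarray(f, dtype=float)
    left = (len(f) - 1) // 2                    # centres odd-length filters
    pad = [(0, 0)] * x.ndim
    pad[axis] = (left, len(f) - 1 - left)
    xp = np.pad(x, pad, mode="symmetric")
    return np.apply_along_axis(lambda v: np.convolve(v, f, mode="valid"), axis, xp)


def swt_level1(x, lo, hi):
    """Undecimated level-1 sub-bands; first letter = filter along x (columns), second = along y (rows)."""
    x = np.asarray(x, dtype=float)
    lo_x, hi_x = conv_axis(x, lo, axis=1), conv_axis(x, hi, axis=1)
    return {
        "LL": conv_axis(lo_x, lo, axis=0),      # approximation
        "LH": conv_axis(lo_x, hi, axis=0),      # horizontal detail (high-pass along y)
        "HL": conv_axis(hi_x, lo, axis=0),      # vertical detail (high-pass along x)
        "HH": conv_axis(hi_x, hi, axis=0),      # diagonal detail
    }


def standardize(band):
    """Per-sub-band, per-ROI z-scoring applied before quantile discretization."""
    sd = band.std()
    return band - band.mean() if sd == 0 else (band - band.mean()) / sd


N_WAVELET_FEATURES = 4 * (19 + 19)              # first-order + GLCM per sub-band = 152
```

Every sub-band keeps the ROI size, so the same discretization and feature code can be reused unchanged on each standardized band.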

Finally, we record the transform type (undecimated), the wavelet family and level (Coiflet-1, one level), the boundary handling (symmetric reflection), and that discretization is performed per sub-band, in line with IBSI reporting guidance [4]. Wavelet filtering separates coarse structure (LL) from horizontal, vertical, and diagonal detail (LH, HL, HH), often revealing texture that single-scale statistics may miss [1,2]. At the same time, wavelet expansions increase feature counts and can accentuate instability or acquisition dependence; re-standardization, fixed discretization, and downstream multiplicity control help curb these effects [20,21,22,23,24,36].

3.3. Fractal Dimension and Lacunarity

Fractal descriptors target complementary aspects of spatial organization. The Minkowski–Bouligand (box-counting) fractal dimension quantifies a global scaling law: how occupancy grows as resolution is refined, whereas lacunarity quantifies scale-specific heterogeneity: how unevenly occupancy is distributed at a given window size [11,12,13].

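We begin with formal definitions. Let $X \in \mathbb{R}^{H \times W}$ be a gray-scale ROI and let $B \subseteq \Omega$ be a binary support extracted from $X$. Overlay a lattice of square boxes of side $\varepsilon$ and let $N(\varepsilon)$ be the number of boxes that intersect $B$. The box-counting fractal dimension is

$$D = \lim_{\varepsilon \to 0} \frac{\log N(\varepsilon)}{\log(1/\varepsilon)},$$

so that, asymptotically, $N(\varepsilon) \propto \varepsilon^{-D}$ [11]. For a binary subset of the plane, $0 \le D \le 2$.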
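Two limiting cases make the definition concrete and anchor the bounds $0 \le D \le 2$. The worked example below assumes a completely filled $64 \times 64$ pixel support and, for comparison, a single full-width row of 64 pixels, both tiled by boxes of side $\varepsilon \in \{2, 4, 8, 16\}$ pixels:

```latex
\begin{aligned}
\text{filled } 64\times64 \text{ support:}\quad
  & N(\varepsilon) = \left(\tfrac{64}{\varepsilon}\right)^{2} = 1024,\ 256,\ 64,\ 16
  \;\Rightarrow\; \log N(\varepsilon) = 2\log 64 + 2\log(1/\varepsilon)
  \;\Rightarrow\; D = 2,\\
\text{single row of 64 pixels:}\quad
  & N(\varepsilon) = \tfrac{64}{\varepsilon} = 32,\ 16,\ 8,\ 4
  \;\Rightarrow\; \log N(\varepsilon) = \log 64 + \log(1/\varepsilon)
  \;\Rightarrow\; D = 1 .
\end{aligned}
```

In both cases the slope of $\log N(\varepsilon)$ against $\log(1/\varepsilon)$ recovers $D$ exactly; binarized textures fall between these extremes.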

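Next, consider lacunarity at observation scale $r$. For a binary image $B \in \{0,1\}^{H \times W}$ (with 1 indicating occupancy), slide an $r \times r$ window over all admissible top-left anchors $(a,b) \in \mathcal{A}_r$, where

$$\mathcal{A}_r = \{1, \dots, H - r + 1\} \times \{1, \dots, W - r + 1\}.$$

Define the window mass

$$M_r(a,b) = \sum_{i=a}^{a+r-1} \sum_{j=b}^{b+r-1} B[i,j],$$

and let $\mu_r = |\mathcal{A}_r|^{-1} \sum_{(a,b) \in \mathcal{A}_r} M_r(a,b)$ and $\sigma_r^2 = |\mathcal{A}_r|^{-1} \sum_{(a,b) \in \mathcal{A}_r} \big(M_r(a,b) - \mu_r\big)^2$. The gliding-box lacunarity is

$$\Lambda(r) = \frac{\sigma_r^2 + \mu_r^2}{\mu_r^2} = 1 + \frac{\sigma_r^2}{\mu_r^2}, \tag{7}$$

which satisfies $\Lambda(r) \ge 1$. Values near 1 indicate spatial homogeneity at scale $r$, whereas larger values indicate stronger clustering of mass or more pronounced voids [12,13]. Information resides in the curve $r \mapsto \Lambda(r)$ across scales.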
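A small worked example shows how Equation (7) separates textures with equal occupancy. It assumes a $4 \times 4$ binary image whose left half is occupied and a window size $r = 2$; writing $M_2$ for the masses of the nine admissible $2 \times 2$ windows and $\mu_2$, $\sigma_2^2$ for their mean and variance:

```latex
\begin{aligned}
&B=\begin{pmatrix}1&1&0&0\\1&1&0&0\\1&1&0&0\\1&1&0&0\end{pmatrix},\qquad r=2,\qquad |\mathcal{A}_2| = 3\cdot 3 = 9,\\
&\{M_2(a,b)\} = \{4,4,4,\;2,2,2,\;0,0,0\},\\
&\mu_2 = \tfrac{18}{9} = 2,\qquad \sigma_2^2 = \tfrac{3\cdot 16 + 3\cdot 4}{9} - 2^2 = \tfrac{8}{3},\\
&\Lambda(2) = 1 + \frac{\sigma_2^2}{\mu_2^2} = 1 + \frac{8/3}{4} = \frac{5}{3} \approx 1.67 .
\end{aligned}
```

A $4 \times 4$ checkerboard has the same occupancy (one half), but every $2 \times 2$ window contains exactly two occupied pixels, so $\sigma_2^2 = 0$ and $\Lambda(2) = 1$: the half-occupied image is more lacunar at this scale.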

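We now detail the estimators and settings used here. For fractal dimension on gray-scale images, we adopt a threshold-averaged box-counting procedure. For each gray-level quantile $q \in \mathcal{Q}$, we binarize

$$B_q[i,j] = \mathbb{1}\{X[i,j] > \tau_q\},$$

where $\tau_q$ is the $q$-th quantile of the ROI intensities, tile $B_q$ with box sizes $\varepsilon \in \mathcal{E}$ pixels, count non-empty boxes $N_q(\varepsilon)$, and fit a linear regression of $\log N_q(\varepsilon)$ on $\log(1/\varepsilon)$. The estimated fractal dimension is the average slope across thresholds:

$$\widehat{D} = \frac{1}{|\mathcal{Q}|} \sum_{q \in \mathcal{Q}} \widehat{\beta}_q,$$

where $\widehat{\beta}_q$ is the fitted slope for threshold $q$. For lacunarity, we threshold once at $\tau_{q_0}$ to obtain $B$, compute $\Lambda(r)$ from Equation (7) for window sizes $r \in \mathcal{R}$, and summarize using a scale average:

$$\overline{\Lambda} = \frac{1}{|\mathcal{R}|} \sum_{r \in \mathcal{R}} \Lambda(r).$$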

Efficient integral-image implementations make these gliding-box computations practical and support broader multiscale sensitivity analyses [25].
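The two estimators can be sketched as follows, with lacunarity computed through an integral image (summed-area table) so that every window mass costs four lookups. Assumptions: thresholds use a strict inequality, boxes tile from the top-left corner with zero padding at the far borders, and the quantile levels, box sizes, and window sizes are passed in as parameters because their values are specified elsewhere; all function and parameter names are hypothetical.

```python
import numpy as np


def box_count_dimension(b, box_sizes):
    """Slope of log N(eps) against log(1/eps) for a non-empty binary image b."""
    H, W = b.shape
    counts = []
    for eps in box_sizes:
        # Zero-pad to a multiple of eps so partial boxes at the far borders are still counted.
        bp = np.pad(b, ((0, -H % eps), (0, -W % eps)))
        blocks = bp.reshape(bp.shape[0] // eps, eps, bp.shape[1] // eps, eps)
        counts.append(np.count_nonzero(blocks.any(axis=(1, 3))))
    x = np.log(1.0 / np.asarray(box_sizes, dtype=float))
    slope, _ = np.polyfit(x, np.log(counts), 1)
    return float(slope)


def gliding_box_lacunarity(b, r):
    """Lambda(r) = <M^2> / <M>^2 over all r x r windows, using an integral image."""
    S = np.pad(b.astype(float).cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    # Each window mass costs four lookups in the summed-area table.
    M = S[r:, r:] - S[:-r, r:] - S[r:, :-r] + S[:-r, :-r]
    return float(np.mean(M ** 2) / np.mean(M) ** 2)


def fractal_features(x, quantiles, box_sizes, lac_quantile, windows):
    """Threshold-averaged D-hat and scale-averaged Lambda-bar (hypothetical parameter names)."""
    x = np.asarray(x, dtype=float)
    slopes = [box_count_dimension(x > np.quantile(x, q), box_sizes) for q in quantiles]
    b = x > np.quantile(x, lac_quantile)
    lams = [gliding_box_lacunarity(b, r) for r in windows]
    return float(np.mean(slopes)), float(np.mean(lams))
```

The integral image makes the cost of each $\Lambda(r)$ linear in the number of pixels regardless of $r$, which is what makes scanning many window sizes affordable.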

Finally, we relate these measures to standardized radiomics. Larger D tends to co-vary with contrast and short-run behavior (fine-scale roughness and rapid gray-level changes) captured by descriptors such as GLCM contrast and dissimilarity and by short-run GLRLM measures [5,6]. In contrast, larger lacunarity tends to align with large, coherent structure (broader zones, pronounced voids, and greater homogeneity), summarized by GLSZM large-area and zone-size statistics, GLRLM long-run and high-gray-level emphases, and GLCM homogeneity and inverse-difference measures [7,9]. Because these tendencies are related but not identical, the two fractal descriptors offer complementary views of multiscale heterogeneity and are most informative when interpreted alongside IBSI-standardized features.

In the box below we summarize, step by step, the full radiomic feature pipeline used in this study, including IBSI families, fractal estimators, and the optional wavelet branch.

Radiomic Feature Computation: Step-by-Step Summary

3.4. Similarity Metrics and Embeddings (Definitions)

This section explains how we quantify the relatedness between Frac and Lac and the remaining radiomic features, and how we create the two-dimensional maps that summarize their neighborhood structure. We begin by defining the pairwise similarity measures used to rank nearest neighbors, then describe the consensus score that combines them, and finally outline the low-dimensional embeddings used for visualization.

3.4.1. Pairwise Association (Similarity) Measures

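Let $(x_k, y_k)$, $k = 1, \dots, n$, be paired measurements across $n$ ROIs (for example, $x$ is Frac or Lac and $y$ is a radiomics descriptor). We first use Pearson's correlation, the centered and variance-normalized covariance,

$$r = \frac{\sum_{k=1}^{n} (x_k - \bar{x})(y_k - \bar{y})}{\sqrt{\sum_{k=1}^{n} (x_k - \bar{x})^2}\,\sqrt{\sum_{k=1}^{n} (y_k - \bar{y})^2}}, \tag{10}$$

which measures linear association on $[-1, 1]$ [37].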

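We next use Spearman's rank correlation, which applies Pearson's formula to the midranks $R_k = \operatorname{rank}(x_k)$ and $S_k = \operatorname{rank}(y_k)$:

$$\rho_s = \frac{\sum_{k=1}^{n} (R_k - \bar{R})(S_k - \bar{S})}{\sqrt{\sum_{k=1}^{n} (R_k - \bar{R})^2}\,\sqrt{\sum_{k=1}^{n} (S_k - \bar{S})^2}},$$

thereby capturing monotone (not necessarily linear) association and also lying in $[-1, 1]$ [38]. In the no-tie case, $\rho_s = 1 - \dfrac{6 \sum_{k=1}^{n} (R_k - S_k)^2}{n(n^2 - 1)}$.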

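We then include distance correlation (dCor), whose population value equals 0 if and only if the variables are independent [39]. Using pairwise Euclidean distance matrices $a_{kl} = |x_k - x_l|$ and $b_{kl} = |y_k - y_l|$, define their double-centered versions $A_{kl} = a_{kl} - \bar{a}_{k\cdot} - \bar{a}_{\cdot l} + \bar{a}_{\cdot\cdot}$ and $B_{kl} = b_{kl} - \bar{b}_{k\cdot} - \bar{b}_{\cdot l} + \bar{b}_{\cdot\cdot}$, where a dot denotes averaging over the corresponding index. The sample quantities are

$$\mathrm{dCov}_n^2(x,y) = \frac{1}{n^2} \sum_{k,l=1}^{n} A_{kl} B_{kl}, \qquad \mathrm{dVar}_n^2(x) = \frac{1}{n^2} \sum_{k,l=1}^{n} A_{kl}^2, \qquad \mathrm{dVar}_n^2(y) = \frac{1}{n^2} \sum_{k,l=1}^{n} B_{kl}^2,$$

and the resulting correlation is

$$\mathrm{dCor}_n(x,y) = \frac{\mathrm{dCov}_n(x,y)}{\sqrt{\mathrm{dVar}_n(x)\,\mathrm{dVar}_n(y)}},$$

with $\mathrm{dCor}_n(x,y) = 0$ when the denominator vanishes.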
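The sample distance correlation can be computed directly from the double-centered distance matrices. The following is a minimal NumPy sketch for one-dimensional features with $O(n^2)$ memory; the function name `distance_correlation` is hypothetical.

```python
import numpy as np


def distance_correlation(x, y):
    """Sample distance correlation for two 1-D samples of equal length."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    a = np.abs(x[:, None] - x[None, :])        # pairwise distances a_kl
    b = np.abs(y[:, None] - y[None, :])        # pairwise distances b_kl
    # Double-centre: subtract row and column means, add back the grand mean.
    A = a - a.mean(axis=0, keepdims=True) - a.mean(axis=1, keepdims=True) + a.mean()
    B = b - b.mean(axis=0, keepdims=True) - b.mean(axis=1, keepdims=True) + b.mean()
    dcov2 = np.mean(A * B)
    dvar2_x, dvar2_y = np.mean(A * A), np.mean(B * B)
    if dvar2_x == 0 or dvar2_y == 0:
        return 0.0
    # dCor = dCov / sqrt(dVar_x * dVar_y), written with the squared quantities.
    return float(np.sqrt(max(dcov2, 0.0) / np.sqrt(dvar2_x * dvar2_y)))
```

For example, for $y = x^2$ evaluated on a grid symmetric about zero, Pearson's $r$ is exactly zero while dCor is clearly positive, which is why the two are used side by side.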

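To broaden beyond purely linear or monotone trends, we also use the Maximal Information Coefficient (MIC), a grid-based information measure that scores a wide family of functional relationships on a common scale [40]:

$$\mathrm{MIC}(x,y) = \max_{a\,b\, <\, \mathcal{B}(n)} \frac{I^{*}_{a,b}(x,y)}{\log_2 \min(a,b)},$$

where $\mathcal{B}(n)$ is the search budget and $I^{*}_{a,b}(x,y)$ is the largest empirical mutual information achievable on an $a \times b$ grid partition.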

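Finally, we include cosine similarity. For vectors $x, y \in \mathbb{R}^n$,

$$\cos(x,y) = \frac{\sum_{k=1}^{n} x_k y_k}{\sqrt{\sum_{k=1}^{n} x_k^2}\,\sqrt{\sum_{k=1}^{n} y_k^2}}. \tag{14}$$

When both variables are z-scored (mean 0, variance 1), Equation (14) equals Pearson's $r$ in Equation (10). Cosine similarity is widely used in vector space models for pattern analysis and information retrieval [41].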

Because these five measures operate on different scales and emphasize different aspects of dependence, we combine them into a single consensus score. For a fixed target (either Frac or Lac), we compute $|r|$, $|\rho|$, dCor, MIC, and cosine similarity against every other feature, z-score each metric across those comparisons, and average the five z-scores to obtain the composite similarity used for ranking. For $r$ and $\rho$, we report two-sided p-values with Benjamini–Hochberg false discovery rate (FDR) correction over all comparisons for that target [36]. When a distance between features $j$ and $k$ is required for clustering or embeddings, we use

$$d_{jk} = 1 - |r_{jk}|, \tag{15}$$

so that strongly associated pairs (in magnitude) are close.
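For one target, the consensus score, the Benjamini–Hochberg adjustment, and the clustering distance can be sketched as follows. The input layout (one row per metric, one column per comparison feature) and the helper names are assumptions, and the distance helper assumes that Equation (15) has the form $d = 1 - |r|$.

```python
import numpy as np


def composite_score(metric_rows):
    """Average of per-metric z-scores; rows are metrics, columns are comparison features."""
    M = np.asarray(metric_rows, dtype=float)
    Z = (M - M.mean(axis=1, keepdims=True)) / M.std(axis=1, keepdims=True)
    return Z.mean(axis=0)


def benjamini_hochberg(pvals):
    """Benjamini-Hochberg adjusted p-values (step-up FDR control)."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    scaled = p[order] * m / np.arange(1, m + 1)
    # Running minimum from the largest p-value downwards keeps adjusted values monotone.
    adjusted_sorted = np.minimum.accumulate(scaled[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.minimum(adjusted_sorted, 1.0)
    return adjusted


def correlation_distance(R):
    """Strongly correlated or anti-correlated features end up close to each other."""
    return 1.0 - np.abs(np.asarray(R, dtype=float))


print(benjamini_hochberg([0.01, 0.04, 0.03, 0.2]))
```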

3.4.2. Low-Dimensional Embeddings of Feature Geometry

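With a pairwise distance in hand, we next visualize the geometry of the feature space in two dimensions. We use two complementary methods. The first is t-SNE, which builds a high-dimensional neighbor distribution over the $N$ embedded features using Gaussian kernels with per-point bandwidths $\sigma_i$ chosen so that the perplexity $2^{H(P_i)}$ of each row matches a user-set value:

$$p_{j\mid i} = \frac{\exp\!\left(-d_{ij}^{2}/2\sigma_i^{2}\right)}{\sum_{k\neq i}\exp\!\left(-d_{ik}^{2}/2\sigma_i^{2}\right)}, \qquad p_{ij} = \frac{p_{j\mid i} + p_{i\mid j}}{2N},$$

where $d_{ij}$ is the distance of Equation (15) between features $i$ and $j$. It then fits a two-dimensional map $\{\mathbf{u}_i\}$ with a heavy-tailed Student-t kernel,

$$q_{ij} = \frac{\left(1 + \lVert\mathbf{u}_i - \mathbf{u}_j\rVert^{2}\right)^{-1}}{\sum_{k\neq l}\left(1 + \lVert\mathbf{u}_k - \mathbf{u}_l\rVert^{2}\right)^{-1}},$$

by minimizing $\mathrm{KL}(P\,\Vert\,Q) = \sum_{i\neq j} p_{ij}\log\left(p_{ij}/q_{ij}\right)$ via gradient descent [42]. In our setting t-SNE is applied to the distances in Equation (15) and is read primarily for local neighborhoods; between-cluster distances are not directly interpretable.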
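The t-SNE affinities above can be written out in a few lines. This sketch (hypothetical names) tunes each $\sigma_i$ by bisection on the row entropy, symmetrizes to $p_{ij}$, and evaluates $q_{ij}$ and the KL objective for a given layout; the gradient-descent optimizer itself is left to a library implementation, and the perplexity value is only an example.

```python
import numpy as np


def conditional_affinities(D, perplexity=5.0, tol=1e-5, max_iter=200):
    """Row-wise Gaussian affinities p_{j|i}; each bandwidth is tuned by bisection."""
    N = D.shape[0]
    P = np.zeros((N, N))
    target = np.log(perplexity)  # entropy target in nats (perplexity = exp(H_nats) = 2**H_bits)
    for i in range(N):
        d2 = np.delete(D[i], i) ** 2
        beta, lo, hi = 1.0, 0.0, np.inf  # beta = 1 / (2 * sigma_i**2)
        for _ in range(max_iter):
            w = np.exp(-(d2 - d2.min()) * beta)  # shifting by the minimum leaves p unchanged
            p = w / w.sum()
            H = -np.sum(p[p > 0] * np.log(p[p > 0]))
            if abs(H - target) < tol:
                break
            if H > target:  # distribution too flat: narrow the kernel
                lo = beta
                beta = beta * 2.0 if np.isinf(hi) else (beta + hi) / 2.0
            else:  # distribution too peaked: widen the kernel
                hi = beta
                beta = (beta + lo) / 2.0
        P[i, np.arange(N) != i] = p
    return P


def joint_affinities(P_cond):
    """Symmetrized p_ij = (p_{j|i} + p_{i|j}) / (2N)."""
    return (P_cond + P_cond.T) / (2.0 * P_cond.shape[0])


def student_t_affinities(U):
    """Low-dimensional q_ij with a Student-t (one degree of freedom) kernel."""
    d2 = np.sum((U[:, None, :] - U[None, :, :]) ** 2, axis=-1)
    W = 1.0 / (1.0 + d2)
    np.fill_diagonal(W, 0.0)
    return W / W.sum()


def kl_divergence(P, Q):
    """KL(P || Q) over pairs with p_ij > 0."""
    mask = P > 0
    return float(np.sum(P[mask] * np.log(P[mask] / Q[mask])))
```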

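The second method is UMAP, which starts from a k-nearest-neighbor graph built from Equation (15), converts it into a fuzzy simplicial set with edge weights $w_{ij} \in [0, 1]$, and then learns a low-dimensional representation $\{\mathbf{u}_i\}$ by minimizing the fuzzy-set cross-entropy

$$\mathcal{L} = \sum_{i\neq j}\left[ w_{ij}\log\frac{w_{ij}}{v_{ij}} + \left(1 - w_{ij}\right)\log\frac{1 - w_{ij}}{1 - v_{ij}} \right], \qquad v_{ij} = \left(1 + a\,\lVert\mathbf{u}_i - \mathbf{u}_j\rVert^{2b}\right)^{-1},$$

where $a$ and $b$ control how quickly similarity decays with distance [43]. In practice, we use moderate neighborhood sizes and a small minimum-distance setting to reveal communities without over-fragmentation, and we fix random seeds for reproducibility.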
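Once the graph weights $w_{ij}$ are available, the objective above can be evaluated directly. The sketch (hypothetical names) computes $v_{ij}$ and the cross-entropy for fixed weights; it does not build the k-nearest-neighbor graph or run the optimizer. The values $a = b = 1$ are illustrative; UMAP implementations typically fit $a$ and $b$ from the minimum-distance setting.

```python
import numpy as np


def low_dim_similarity(U, a=1.0, b=1.0):
    """v_ij = 1 / (1 + a * ||u_i - u_j||^(2b))."""
    dist = np.sqrt(np.sum((U[:, None, :] - U[None, :, :]) ** 2, axis=-1))
    return 1.0 / (1.0 + a * dist ** (2.0 * b))


def fuzzy_cross_entropy(W, V, eps=1e-12):
    """Sum over i != j of the Bernoulli cross-entropy terms between w_ij and v_ij."""
    off = ~np.eye(W.shape[0], dtype=bool)
    w = W[off]
    v = np.clip(V[off], eps, 1.0 - eps)
    attract = np.where(w > 0, w * np.log(np.maximum(w, eps) / v), 0.0)
    repel = np.where(w < 1, (1 - w) * np.log(np.maximum(1 - w, eps) / (1 - v)), 0.0)
    return float(np.sum(attract + repel))
```

Each term is a Kullback-Leibler divergence between two Bernoulli distributions, so the loss is non-negative and vanishes only when the layout reproduces every graph weight.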

Taken together, these choices specify how neighbors of Frac and Lac are ranked and how the resulting pairwise relationships are summarized as heatmaps, dendrograms, networks, and two-dimensional embeddings.

3.5. Simulation Design

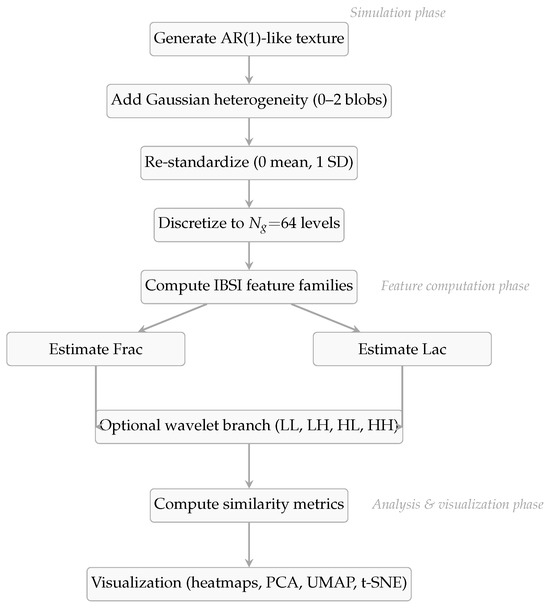

We set out to build a controlled yet expressive sandbox in which fractal dimension (Frac) and lacunarity (Lac) can be compared fairly against a broad panel of standardized radiomic features, including a multiscale wavelet branch. Each synthetic sample is a region of interest (ROI) that exhibits tunable spatial correlation and optional focal heterogeneity; all samples are then discretized to $N_g$ gray levels. Features are computed directly from the mathematical definitions given earlier, and similarity between Frac/Lac and each descriptor is quantified with the complementary dependence measures defined in Section 3.4. The workflow mirrors common radiomics practice (textures ranging from fine to coarse, with or without a lesion-like structure) while preserving enough control to make causal attribution transparent (see Table 1 and Table 2 for concise summaries). Figure 1 provides an overview of the simulation workflow.

Figure 1.

Overview of the simulation workflow. The compact version summarizes all stages of the pipeline from synthetic texture generation to feature computation, similarity analysis, and visualization.

To generate background texture we adopt a two-dimensional autoregressive recursion that is AR(1)-like along both axes,

drawing the correlation parameter and the innovations independently for each ROI. This Markov construction yields approximately isotropic correlation, exposes a single parameter that smoothly tunes spatial frequency (small values give rough, high-frequency patterns; large values give smooth, low-frequency patterns), and is numerically stable and fast on ROI-sized grids [44,45]. Because clinical images rarely look perfectly stationary, we superimpose 0–2 Gaussian blobs with randomly sampled amplitudes and radii of 6–12 pixels, then re-standardize $X$ to zero mean and unit variance. These localized additions emulate lesion-like structure without overwhelming the global correlation, creating cases where Frac can respond to fine-scale roughness while Lac responds to gap structure and region size within the same sample.
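Because the recursion is only referenced here, the sketch below assumes one common AR(1)-like form, the separable recursion $X_{i,j} = \phi X_{i-1,j} + \phi X_{i,j-1} - \phi^{2} X_{i-1,j-1} + \varepsilon_{i,j}$ with standard normal innovations and a zero initial boundary. The blob amplitude range and the use of the radius as the Gaussian width are hypothetical; only the 0–2 blob count, the 6–12 pixel radii, and the final re-standardization come from the text.

```python
import numpy as np


def ar_texture(size, phi, rng):
    """Assumed separable AR(1)-like field: X[i,j] = phi*up + phi*left - phi**2*diag + noise."""
    eps = rng.standard_normal((size, size))
    X = np.zeros((size, size))
    for i in range(size):
        for j in range(size):
            up = X[i - 1, j] if i > 0 else 0.0
            left = X[i, j - 1] if j > 0 else 0.0
            diag = X[i - 1, j - 1] if i > 0 and j > 0 else 0.0
            X[i, j] = phi * up + phi * left - phi ** 2 * diag + eps[i, j]
    return X


def add_gaussian_blobs(X, rng, max_blobs=2, radius_range=(6.0, 12.0), amp_range=(1.0, 3.0)):
    """Superimpose 0..max_blobs Gaussian bumps; amp_range is a hypothetical choice."""
    size_y, size_x = X.shape
    yy, xx = np.mgrid[0:size_y, 0:size_x]
    out = X.copy()
    for _ in range(rng.integers(0, max_blobs + 1)):
        cy, cx = rng.uniform(0, size_y), rng.uniform(0, size_x)
        radius = rng.uniform(*radius_range)  # used as the Gaussian width (assumption)
        amp = rng.uniform(*amp_range)
        out += amp * np.exp(-((yy - cy) ** 2 + (xx - cx) ** 2) / (2.0 * radius ** 2))
    return out


def standardize(X):
    """Re-standardize to zero mean and unit variance."""
    return (X - X.mean()) / X.std()


rng = np.random.default_rng(42)
rough = standardize(add_gaussian_blobs(ar_texture(64, 0.2, rng), rng))
smooth = standardize(add_gaussian_blobs(ar_texture(64, 0.8, rng), rng))
```

Under this assumed form the horizontal lag-1 correlation of the background field is close to $\phi$, which is what makes a single parameter enough to move between the rough and smooth regimes.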

After simulation, the continuous field is converted to discrete gray levels by quantile binning into $N_g$ equiprobable bins, producing a discretized image with levels $1, \ldots, N_g$. Quantile discretization mitigates arbitrary intensity scaling, stabilizes the probability estimates that enter the co-occurrence, run-length, and size-zone matrices, and aligns with IBSI recommendations; the choice of $N_g$ balances information content against matrix sparsity for this ROI footprint [4,22,23,24]. Using equiprobable bins also keeps histogram-based first-order features well behaved and makes the gray levels insensitive to monotone intensity rescaling. A compact summary of all simulation factors and data-generation settings appears in Table 1.
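Equiprobable binning can be sketched with ranks. Whether the authors' quantile routine breaks ties or places edges exactly this way is an assumption (edge-based implementations can differ by one pixel per bin), but the key property is visible: only the ordering of intensities matters, so a strictly increasing rescaling leaves the gray levels unchanged. The helper name is hypothetical.

```python
import numpy as np


def quantile_discretize(X, n_levels):
    """Equiprobable (rank-based) binning of an ROI into gray levels 1..n_levels."""
    X = np.asarray(X, dtype=float)
    ranks = np.argsort(np.argsort(X.ravel(), kind="stable"), kind="stable")
    levels = (ranks * n_levels) // X.size + 1  # each level receives about X.size / n_levels pixels
    return levels.reshape(X.shape)


rng = np.random.default_rng(0)
roi = rng.standard_normal((64, 64))
levels = quantile_discretize(roi, 32)
print(np.bincount(levels.ravel())[1:])  # 128 pixels per level for a 64 x 64 ROI
```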

Feature computation proceeds as in the mathematical section: 19 first-order descriptors; 19 GLCM statistics constructed at distance 1 and aggregated over the four in-plane directions (0°, 45°, 90°, 135°); 16 GLRLM descriptors with the same angular aggregation; 16 GLSZM descriptors using 8-connectivity; 14 GLDM descriptors with a fixed gray-level tolerance and Chebyshev radius 1; 5 NGTDM descriptors computed on full neighborhoods; and 4 simple two-dimensional shape proxies [4,5,6,7,8,9]. Frac is estimated by box counting across four box sizes $s$ and three intensity thresholds, averaging the slopes of the $\log N(s)$ versus $\log(1/s)$ regressions over thresholds, where $N(s)$ is the number of occupied boxes of side $s$, to stabilize against single-cut artifacts [11]. Lac is estimated by gliding-box lacunarity over a set of window sizes $r$ after thresholding at the median, then averaging over $r$ to obtain a single summary; integral-image implementations avoid partial-window bias and keep computation tractable [12,13,25].
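The two fractal estimators can be sketched in NumPy as follows. The box sizes (2, 4, 8, 16), the quartile thresholds, the window sizes (2, 4, 8), and the convention that foreground means strictly above the threshold are placeholders, because the exact sets are not reproduced here; the helper names are hypothetical. The lacunarity helper uses an integral image so that every window lies fully inside the ROI.

```python
import numpy as np


def box_count(mask, s):
    """Number of s-by-s boxes containing at least one foreground pixel."""
    h, w = (mask.shape[0] // s) * s, (mask.shape[1] // s) * s  # crop to a multiple of s
    blocks = mask[:h, :w].reshape(h // s, s, w // s, s)
    return int(blocks.any(axis=(1, 3)).sum())


def box_counting_dimension(img, box_sizes=(2, 4, 8, 16), thresholds=None):
    """Slope of log N(s) versus log(1/s), averaged over intensity thresholds."""
    if thresholds is None:
        thresholds = np.quantile(img, [0.25, 0.5, 0.75])  # placeholder thresholds
    sizes = np.asarray(box_sizes, dtype=float)
    slopes = []
    for t in thresholds:
        mask = img > t
        counts = [box_count(mask, int(s)) for s in sizes]
        slope, _ = np.polyfit(np.log(1.0 / sizes), np.log(counts), 1)
        slopes.append(slope)
    return float(np.mean(slopes))


def gliding_box_lacunarity(mask, r):
    """Lambda(r) = E[M^2] / E[M]^2 over all fully contained r-by-r windows."""
    m = mask.astype(float)
    S = np.zeros((m.shape[0] + 1, m.shape[1] + 1))
    S[1:, 1:] = m.cumsum(axis=0).cumsum(axis=1)  # integral image
    M = S[r:, r:] - S[:-r, r:] - S[r:, :-r] + S[:-r, :-r]  # box masses
    return float(np.mean(M ** 2) / np.mean(M) ** 2)


def mean_lacunarity(img, window_sizes=(2, 4, 8)):
    """Threshold at the median, then average lacunarity over window sizes."""
    mask = img > np.median(img)
    return float(np.mean([gliding_box_lacunarity(mask, r) for r in window_sizes]))
```

For a fully occupied square the box-counting slope is exactly 2 and the lacunarity is exactly 1, which makes both useful sanity checks.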

In addition to the spatial-domain features above, we include a one-level, undecimated (stationary) two-dimensional wavelet transform with symmetric boundary handling, using Coiflet-1 filters for near-symmetry and compact support [30,31,32,33]. This yields four sub-bands (LL, LH, HL, HH) at the original resolution. Each sub-band is re-standardized to zero mean and unit variance and discretized independently into equiprobable gray levels. On every sub-band we compute first-order (19) and GLCM (19) statistics, adding $4 \times (19 + 19) = 152$ wavelet features per ROI. These choices follow IBSI/PyRadiomics conventions for wavelet-filtered images and limit multiplicity by restricting the transform domain to first-order and GLCM features [4,10].
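To make the per-sub-band step concrete, the sketch below substitutes a one-level undecimated Haar transform with periodic boundaries for the Coiflet-1, symmetric-boundary transform described above (a deliberate simplification; a wavelet library would normally supply the filters). It then computes three of the GLCM statistics named in the Results (contrast, difference entropy, and IDN) at distance 1. Averaging each feature over the four directions is one of several IBSI aggregation options and is assumed here, as are the sub-band labelling convention, the default number of levels, and all helper names.

```python
import numpy as np

OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]  # 0, 45, 90, 135 degrees at distance 1


def haar_swt_level1(X):
    """One-level undecimated 2D Haar transform (periodic boundaries); bands keep X's shape."""
    def low(A, axis):
        return (A + np.roll(A, -1, axis=axis)) / np.sqrt(2.0)

    def high(A, axis):
        return (A - np.roll(A, -1, axis=axis)) / np.sqrt(2.0)

    L, H = low(X, 1), high(X, 1)  # filter along rows (horizontal direction) first
    return {"LL": low(L, 0), "LH": high(L, 0), "HL": low(H, 0), "HH": high(H, 0)}


def quantile_levels(X, n_levels):
    """Rank-based equiprobable discretization to levels 1..n_levels."""
    ranks = np.argsort(np.argsort(X.ravel(), kind="stable"), kind="stable")
    return ((ranks * n_levels) // X.size + 1).reshape(X.shape)


def glcm(levels, n_levels, offset):
    """Symmetric, normalized co-occurrence matrix for one pixel offset."""
    dy, dx = offset
    g = levels - 1
    H, W = g.shape
    r0, r1 = max(0, -dy), H - max(0, dy)
    c0, c1 = max(0, -dx), W - max(0, dx)
    a = g[r0:r1, c0:c1]
    b = g[r0 + dy:r1 + dy, c0 + dx:c1 + dx]  # neighbor at (row + dy, col + dx)
    P = np.zeros((n_levels, n_levels))
    np.add.at(P, (a.ravel(), b.ravel()), 1.0)
    P = P + P.T
    return P / P.sum()


def glcm_features(P):
    """Contrast, difference entropy (bits), and inverse difference normalized."""
    n = P.shape[0]
    i, j = np.indices(P.shape)
    k = np.abs(i - j)
    p_diff = np.bincount(k.ravel(), weights=P.ravel(), minlength=n)  # p_{x-y}(k)
    nz = p_diff > 0
    return {
        "contrast": float(np.sum(k ** 2 * P)),
        "difference_entropy": float(-np.sum(p_diff[nz] * np.log2(p_diff[nz]))),
        "idn": float(np.sum(P / (1.0 + k / n))),
    }


def direction_averaged(levels, n_levels):
    """Compute features per direction, then average them."""
    per_dir = [glcm_features(glcm(levels, n_levels, off)) for off in OFFSETS]
    return {name: float(np.mean([f[name] for f in per_dir])) for name in per_dir[0]}


def wavelet_glcm_features(X, n_levels=16):
    """Standardize and discretize each sub-band independently, then compute GLCM features."""
    out = {}
    for band, coeffs in haar_swt_level1(X).items():
        z = (coeffs - coeffs.mean()) / coeffs.std()
        out[band] = direction_averaged(quantile_levels(z, n_levels), n_levels)
    return out
```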

Similarity between Frac and Lac and each classical or wavelet-domain feature is evaluated with five measures that emphasize different types of dependence, as defined in Section 3.4: Pearson’s $r$ (10), Spearman’s $\rho$ (11), distance correlation (dCor) (12), MIC (13), and cosine similarity (14). For $r$ and $\rho$ we adjust p-values across all pairwise tests (classical plus wavelet features) using Benjamini–Hochberg false discovery rate (FDR) control [36]. Because these metrics live on different scales, we standardize them by z-scoring across the full set of comparisons and then average the five z-scores to obtain a composite similarity (see Section 3.4 for the composite definition). For visualization, we form the union of the top-$k$ neighbors around Frac and Lac (with the same $k$ for each), compute the Pearson correlation matrix on that set, and display a clustered heatmap. We also embed the same features with t-SNE and UMAP using the distances of Equation (15) (definitions in Section 3.4) to convey qualitative geometry, treating these embeddings as descriptive rather than inferential [42,43]. The complete analysis and similarity settings, including wavelet parameters, are summarized in Table 2.
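The neighborhood-selection step can be sketched as below. The helper names and feature names are hypothetical, including the two targets in the heatmap set is an assumption, and the network threshold in the example is a placeholder because the value used for the figures is not reproduced here.

```python
import numpy as np


def top_k_union(names, scores_frac, scores_lac, k, targets=("Frac", "Lac")):
    """Union of the k best-scoring neighbors of each target, preceded by the targets."""
    best_f = {names[i] for i in np.argsort(-np.asarray(scores_frac))[:k]}
    best_l = {names[i] for i in np.argsort(-np.asarray(scores_lac))[:k]}
    keep = best_f | best_l
    return list(targets) + [n for n in names if n in keep and n not in targets]


def correlation_submatrix(table, columns, selected):
    """Pearson correlation matrix (features x features) for the selected columns."""
    idx = [columns.index(c) for c in selected]
    return np.corrcoef(table[:, idx], rowvar=False)


def network_edges(R, selected, threshold):
    """Sparse network: keep feature pairs whose |r| reaches the threshold."""
    return [(selected[i], selected[j], float(R[i, j]))
            for i in range(len(selected)) for j in range(i + 1, len(selected))
            if abs(R[i, j]) >= threshold]


rng = np.random.default_rng(6)
frac = rng.standard_normal(100)
table = np.column_stack([frac, rng.standard_normal(100), 2.0 * frac + 0.01 * rng.standard_normal(100)])
columns = ["Frac", "Lac", "glcm_contrast"]  # hypothetical feature names
R = correlation_submatrix(table, columns, columns)
print(network_edges(R, columns, threshold=0.9))  # placeholder threshold
```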

With $n$ independent ROIs (the default $n$ is configurable), each producing 246 features, the final table contains $n \times 246$ measurements. The inventory by family is summarized in Table 3. Random seeds are fixed at the start of each full simulation to ensure deterministic regeneration of data and figures; all hyperparameters (scale sets, thresholds, simulation parameter ranges, discretization and neighborhood choices, and wavelet settings) are declared in the repository and mirrored in the manuscript so that readers can audit or modify them and regenerate the entire analysis.

Table 3.

Feature counts per family used in the simulation.

In summary, the AR(1)-like generator (Equation (19)) provides a transparent, one-parameter handle on spatial frequency [44,45]; the optional Gaussian blobs introduce controlled nonstationarity reminiscent of lesions or subregions; the quantile discretization and the angle/distance settings align with IBSI so that conclusions carry over to standard pipelines [4,23,24]; the wavelet branch exposes multiscale, orientation-specific detail in a standardized way [4,10,30,31,32,33]; and the multimetric similarity with FDR reflects best practices when exploring many pairwise relationships where linearity is not guaranteed [36,39,40]. The scope is intentionally two-dimensional for clarity and speed; extending to 3D with 26-neighbor settings, volumetric zones/runs, and 3D wavelets and lacunarity is straightforward but more computationally demanding.

4. Results

4.1. Results Without Wavelet Features

We analyzed simulated two-dimensional ROIs discretized to $N_g$ gray levels. Alongside the 92 IBSI texture descriptors, we included fractal dimension (Frac) and lacunarity (Lac), for 94 features in total. Similarity between Frac and Lac and all other features was summarized using the procedure in Section 3.4; in brief, we averaged z-scored absolute Pearson $|r|$, absolute Spearman $|\rho|$, distance correlation, MIC, and cosine similarity to obtain a composite score.

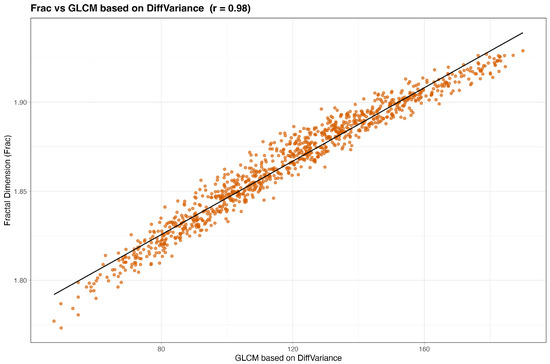

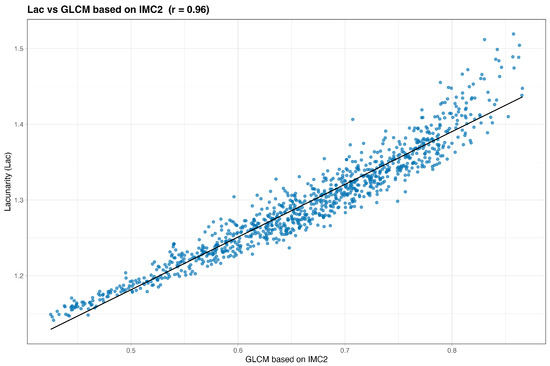

We begin by examining simple pairwise associations. Figure 2 and Figure 3 plot Frac and Lac against their highest-ranked neighbors under the composite score. In both cases the relationships are monotone with an approximately linear trend, indicating that Frac and Lac are not idiosyncratic to the simulator but align with recognizable texture constructs. Qualitatively, Frac tracks contrast- and difference-type behavior (for example, GLCM contrast, dissimilarity, and difference entropy, together with GLRLM short-run emphases), whereas Lac increases with statistics that summarize coherent, larger structures (for example, GLSZM large-area and zone-size measures and GLRLM long-run or high-gray-level emphases).

Figure 2.

Frac versus its top neighbor under the composite score (baseline, no wavelets). The association is monotone with a shallow linear trend.

Figure 3.

Lac versus its top neighbor under the composite score (baseline, no wavelets). The association is monotone with a shallow linear trend.

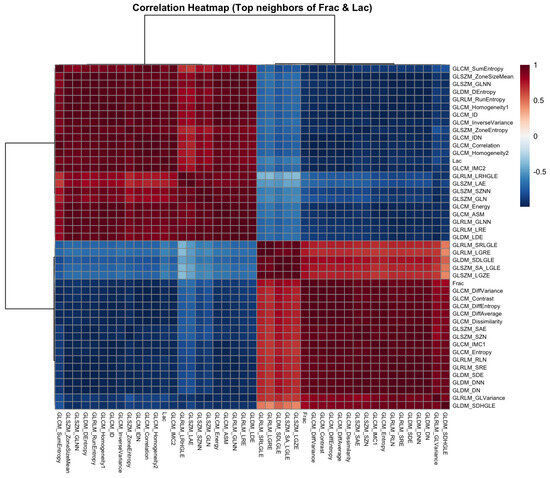

Next, to place these strongest pairwise relationships in context, we formed the union of the top-$k$ neighbors of Frac and Lac (the same $k$ for each) and computed the Pearson correlation matrix for this set. The clustered heatmap in Figure 4 exhibits a clear bipartite organization: one block is rich in contrast-oriented statistics and co-locates with Frac (GLCM contrast, dissimilarity, and difference entropy; GLRLM short-run emphases; NGTDM busyness and contrast; selected GLDM dependence-entropy terms), and the other block emphasizes large-scale structure and co-locates with Lac (GLSZM large-area and zone-size statistics; GLRLM long-run and high-gray-level emphases). Homogeneity measures (for example, GLCM homogeneity and ID/IDN) lie opposite the contrast block and associate more closely with Lac.

Figure 4.

Correlation heatmap for the union of the top neighbors of Frac and Lac (baseline, no wavelets). Red and blue denote positive and negative correlations. The set partitions into a contrast-oriented block (Frac-aligned) and a large-structure block (Lac-aligned).

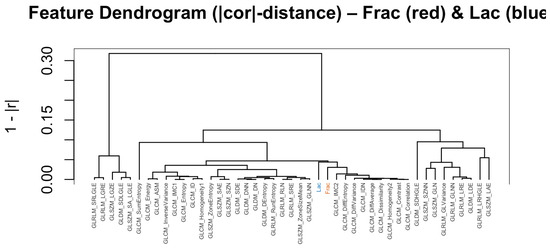

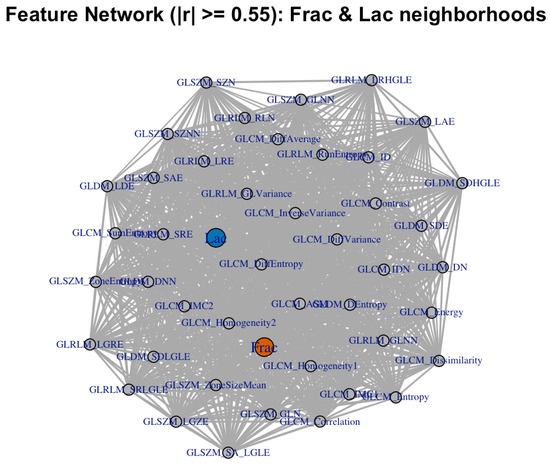

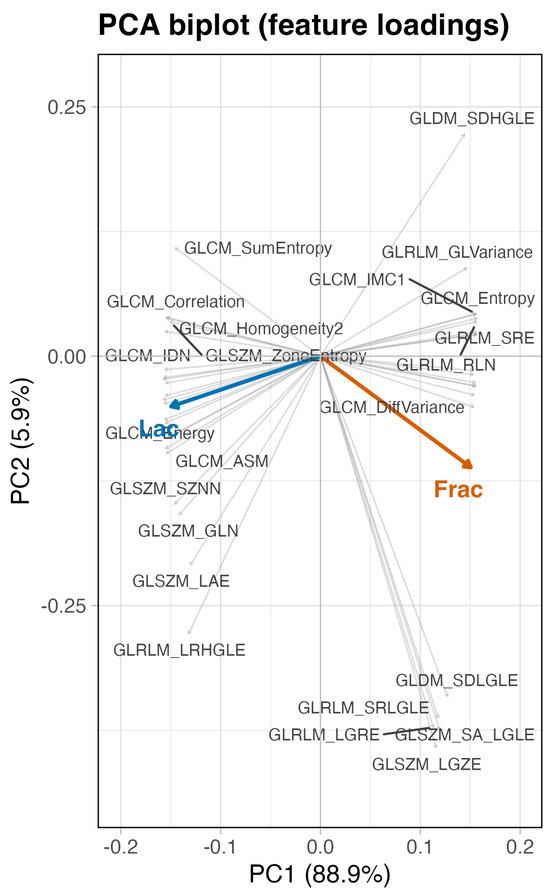

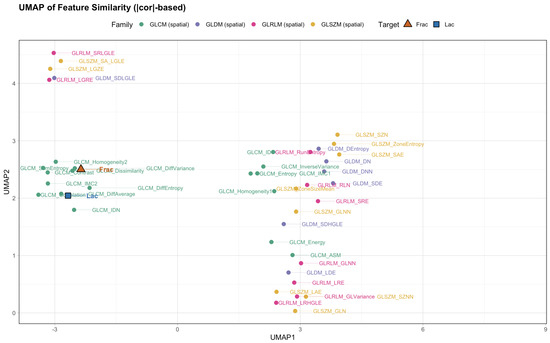

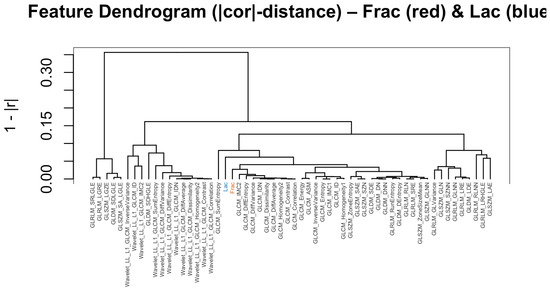

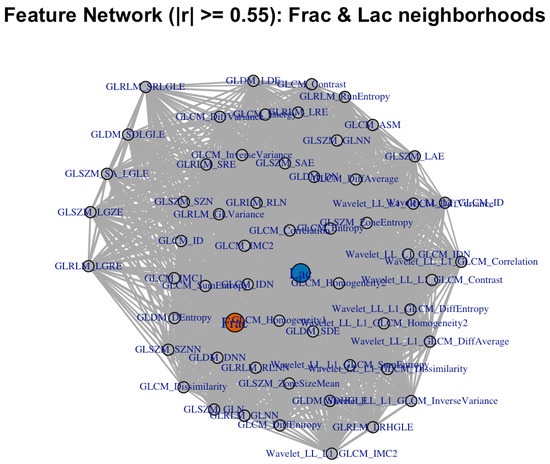

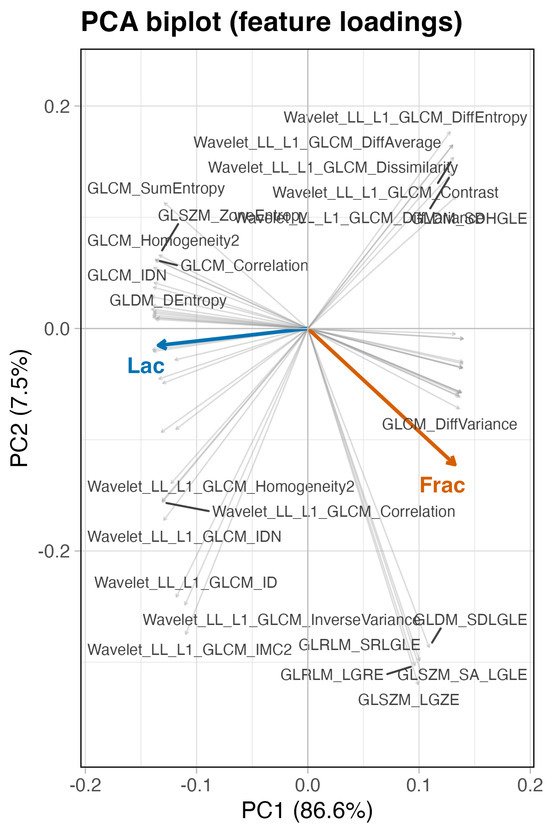

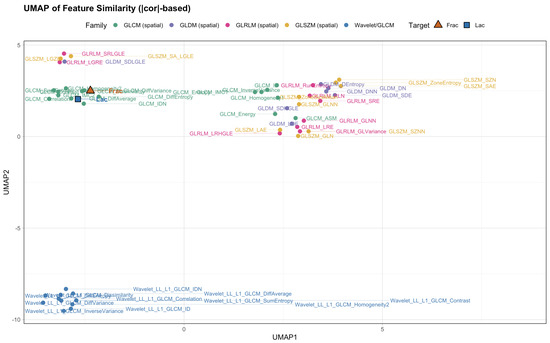

We then asked whether alternative geometric summaries tell a consistent story. Hierarchical clustering on the distances of Equation (15) (Figure 5), a sparse correlation network that keeps edges whose $|r|$ exceeds a fixed threshold (Figure 6), a PCA loading biplot (Figure 7), and low-dimensional UMAP and t-SNE embeddings (Figure 8 and Figure 9) all corroborate the same organization. Taken together, these views indicate that while Frac and Lac overlap with specific IBSI families, they are not simple duplicates and provide complementary multiscale information.

Figure 5.

Feature dendrogram based on the distances of Equation (15) (baseline, no wavelets). Frac clusters with contrast- and difference-type descriptors; Lac clusters with zone/run size and homogeneity descriptors.

Figure 6.

Correlation network for the same neighborhood set (edges above a fixed $|r|$ threshold; baseline, no wavelets). Communities reflect the contrast-heavy (Frac-adjacent) and large-structure (Lac-adjacent) groups.

Figure 7.

PCA biplot of correlation-based loadings (baseline, no wavelets). PC1 spans high-frequency variation (contrast and short run; Frac side) to low-frequency coherence (large area, long run, and homogeneity; Lac side).

Figure 8.

UMAP of feature similarity (Equation (15) distances; baseline, no wavelets). Frac sits within the contrast-heavy region; Lac anchors a neighboring large-structure region.

Figure 9.

t-SNE of feature similarity (Equation (15) distances; baseline, no wavelets). The contrast-versus-structure separation mirrors the heatmap and PCA.
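One standard construction of correlation-based loadings for a biplot such as Figure 7 is an eigendecomposition of the feature correlation matrix, with each loading equal to an eigenvector entry scaled by the square root of its eigenvalue. The sketch assumes this construction (the text does not specify the variant) and uses hypothetical names; component signs are arbitrary.

```python
import numpy as np


def pca_loadings(F):
    """Feature-by-component loadings and explained-variance ratios from the correlation matrix."""
    R = np.corrcoef(F, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(R)  # ascending order for symmetric matrices
    order = np.argsort(eigvals)[::-1]
    eigvals = np.clip(eigvals[order], 0.0, None)  # guard against tiny negative round-off
    eigvecs = eigvecs[:, order]
    loadings = eigvecs * np.sqrt(eigvals)
    return loadings, eigvals / eigvals.sum()


rng = np.random.default_rng(7)
factor_a, factor_b = rng.standard_normal(300), rng.standard_normal(300)
F = np.column_stack([factor_a + 0.3 * rng.standard_normal(300) for _ in range(3)]
                    + [factor_b + 0.3 * rng.standard_normal(300) for _ in range(3)])
loadings, explained = pca_loadings(F)
print(np.round(loadings[:, :2], 2), np.round(explained[:2], 2))
```

Two latent factors yield two blocks of features that load on separate components, which is the kind of pattern the biplot is read for.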

Finally, we turn to the ranked lists. Table 4 shows that the nearest neighbors of Frac are dominated by GLCM difference, contrast, and entropy-type descriptors (Contrast, Dissimilarity, Difference Entropy, Difference Variance, Difference Average) with additional short-run and small-structure measures (GLRLM SRE and SRLGLE, plus selected GLSZM and GLDM small-area or low-gray-level statistics). Agreement is uniformly high across Pearson, Spearman, distance correlation, MIC, and cosine similarity, indicating that the monotone association is consistent across criteria. Practically, Frac tracks fine-scale, high-frequency variation; redundancy is likeliest when models already contain several contrast-like or short-run descriptors.

Table 4.

Top-10 most similar IBSI descriptors to Frac under the composite similarity score.

Reading Table 5, the nearest neighbors of Lac emphasize large-scale organization and gray-level regularity. Frequent entries include GLCM correlation and homogeneity (Correlation, Homogeneity2, ID/IDN, and IMC2) together with GLSZM zone-size statistics and GLRLM long-run and high-gray-level emphases (LRE, LRHGLE, HGRE). Similarity remains high across all metrics, consistent with a smooth, low-frequency signal. Read with the Frac table, this underscores a complementary division: Frac rises with local differences and short runs, whereas Lac rises with homogeneity, correlation, and coherent large structures.

Table 5.

Top-10 most similar IBSI descriptors to Lac under the composite similarity score.

4.2. Results with Wavelet Features

We repeated the analysis on simulated ROIs, augmenting the feature set with level-1 wavelet descriptors from the four sub-bands (LH, HL, HH, LL) for 246 features per ROI. As before, similarity between Frac/Lac and the remaining features was summarized using the composite procedure in Section 3.4.

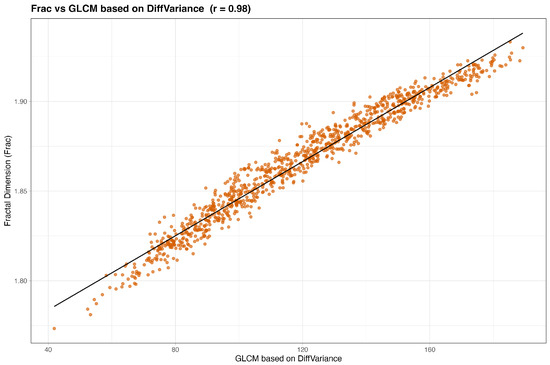

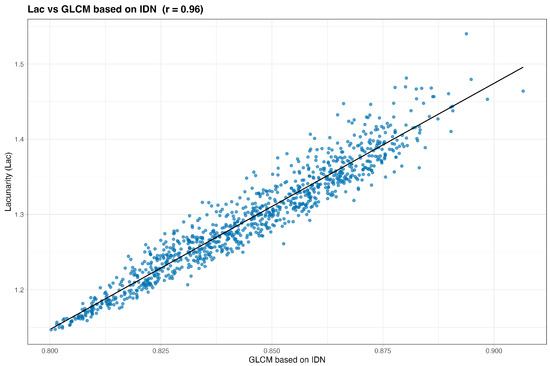

We begin by inspecting the strongest pairwise associations after adding wavelets. The nearest neighbors of Frac are led by gray-level co-occurrence statistics that emphasize differences and contrast: difference variance, difference entropy, dissimilarity (and difference average), and contrast occupy the top ranks. For Lac, homogeneity and inverse-difference measures dominate: inverse difference normalized (IDN), information measure of correlation 2 (IMC2), correlation, homogeneity (both variants), inverse variance, and inverse difference (ID) appear consistently, with a representative wavelet contribution, LL (level-1) GLCM sum entropy, also entering the top ten. The best pairs follow very strong, nearly linear trends: Frac against GLCM difference entropy (Figure 10) and Lac against GLCM IDN (Figure 11).

Figure 10.

Frac versus its composite top neighbor (GLCM difference entropy); tight, nearly linear trend.

Figure 11.

Lac versus its composite top neighbor (GLCM inverse difference normalized, IDN); strong monotone trend.

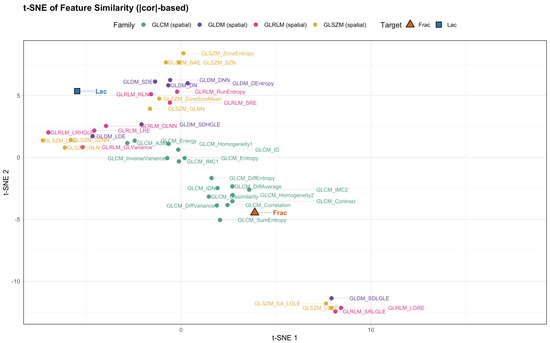

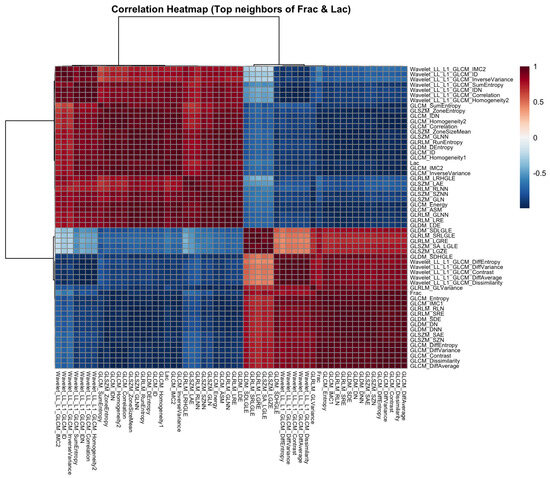

Next, we place these pairwise findings in context by examining the neighborhood structure around Frac and Lac. Using the union of the top-$k$ neighbors for each target (the same $k$ for both), the correlation heatmap retains a clear two-block organization (Figure 12). The wavelet detail bands (LH, HL, and HH) align with high-frequency contrast and difference statistics near Frac, whereas the LL approximation summaries co-locate with large-structure and coherence measures near Lac. Alternative geometric summaries tell the same story: the dendrogram based on the distances of Equation (15) (Figure 13), the sparse correlation network with edges above a fixed $|r|$ threshold (Figure 14), the PCA loading biplot (Figure 15), and the UMAP and t-SNE embeddings (Figure 16 and Figure 17) all reproduce this separation.

Figure 12.

Correlation heatmap for the union of top neighbors (with wavelets). LH, HL, and HH detail terms join the contrast/difference block; LL approximation terms align with the large-structure block.

Figure 13.

Dendrogram on the distances of Equation (15) (with wavelets). Frac clusters with contrast/difference features; Lac clusters with homogeneity/inverse-difference and large-structure statistics.

Figure 14.

Correlation network (edges above a fixed $|r|$ threshold; with wavelets). Communities echo the contrast versus large-structure split.

Figure 15.

PCA loading biplot (with wavelets). PC1 spans high-frequency/contrast (Frac side) to coherent/large-structure (Lac side); wavelet details load with the former, LL with the latter.

Figure 16.

UMAP on the distances of Equation (15) (with wavelets). Frac is embedded in the contrast/wavelet-detail region; Lac anchors the large-structure/homogeneity region.

Figure 17.

t-SNE on the distances of Equation (15) (with wavelets). The two blocks remain visible after adding wavelet features.

Finally, we turn to the ranked lists. The top neighbors of Frac (Table 6) are almost entirely difference- and contrast-oriented GLCM descriptors, with agreement across all five similarity criteria (distance correlation, MIC, $|r|$, $|\rho|$, and cosine similarity). The top neighbors of Lac (Table 7) are homogeneity and inverse-difference statistics together with a representative LL wavelet summary (sum entropy), again with strong cross-metric concordance. Bringing together the heatmaps, dendrograms, networks, PCA, and embeddings, we conclude that wavelet features do not dissolve the original contrast-versus-structure separation; rather, they sharpen its interpretation. The LH, HL, and HH detail terms behave like contrast and short-run statistics and align with Frac, whereas the LL approximation terms behave like large-area and coherence descriptors and align with Lac. In this simulated setting the best neighbors show very large linear associations with Frac and Lac, and the consistency across metrics indicates stable monotone relationships at complementary spatial scales.

Table 6.

Top-10 most similar IBSI descriptors to Frac under the composite similarity score, with wavelet features included.

Table 7.

Top-10 most similar IBSI descriptors to Lac under the composite similarity score, with wavelet features included.

5. Conclusions

We begin by summarizing the overall pattern that emerged across both analyses. In the baseline setting (without wavelets) and in the wavelet-augmented setting, the feature space consistently organized into two broad groups. The first group was characterized by high-frequency variation, local contrast, and short-run texture. The second group reflected large-scale structure, long-run behavior, and homogeneity. Fractal dimension (Frac) aligned with the first group, whereas lacunarity (Lac) aligned with the second. After introducing wavelet features, this geometry became sharper rather than different: statistics from the LH, HL, and HH detail bands co-located with the contrast and short-run-oriented group near Frac, while LL approximation statistics co-located with the large-structure and homogeneity group near Lac.

Next, we compare pairwise associations. In the baseline analysis, Frac and Lac showed clear monotone relationships with their nearest neighbors, although linear correlations were only moderate. When wavelet descriptors were added, these associations strengthened markedly: the top pairs were strongly and nearly linearly related, with very high best absolute Pearson correlations for both Frac and Lac. Moreover, a composite similarity that averages five criteria (distance correlation, maximal information coefficient, absolute Pearson correlation, absolute Spearman correlation, and cosine similarity) reproduced the same two-group structure across heatmaps, hierarchical clustering, correlation networks, principal component loadings, and low-dimensional embeddings, underscoring the stability of these findings.

We then consider practical implications. Frac primarily reflects fine-scale, high-frequency variation and therefore tends to overlap with descriptors that emphasize local contrast and short runs; when wavelets are included, detail-band statistics often behave similarly. Lac is most sensitive to coherent, large-scale organization and thus overlaps with homogeneity and correlation measures as well as zone- and run-size emphases; with wavelets, LL-band statistics commonly align with the same behavior. In practice, Frac is most likely to be redundant in models that already contain many contrast-like or short-run descriptors, especially when detail-band wavelet features are present. Lac is most likely to be redundant when GLSZM zone-size, GLRLM long-run or high-gray-level, or GLCM homogeneity and correlation families dominate. A pragmatic strategy is to include Frac and Lac provisionally and assess incremental value with nested models and cross-validation, retaining them when they improve generalization or provide interpretable, non-overlapping information.
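The nested-model check can be sketched with ordinary least squares and K-fold cross-validation. Everything here is hypothetical scaffolding: the simulation has no clinical endpoint, so `y` stands for whatever outcome a study models, and the linear model, five folds, and helper names are illustrative choices rather than a prescribed protocol.

```python
import numpy as np


def cv_r2(X, y, n_folds=5, seed=0):
    """Out-of-fold R^2 of an ordinary least-squares model with intercept."""
    X = np.asarray(X, dtype=float).reshape(len(y), -1)
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(y))
    preds = np.empty(len(y))
    for test in np.array_split(idx, n_folds):
        train = np.setdiff1d(idx, test)
        A = np.column_stack([np.ones(len(train)), X[train]])
        coef, *_ = np.linalg.lstsq(A, y[train], rcond=None)
        preds[test] = np.column_stack([np.ones(len(test)), X[test]]) @ coef
    return float(1.0 - np.sum((y - preds) ** 2) / np.sum((y - y.mean()) ** 2))


def incremental_r2(X_base, x_candidate, y, **kwargs):
    """Gain in cross-validated R^2 from adding one candidate (e.g. Frac) to a base model."""
    full = np.column_stack([X_base, x_candidate])
    return cv_r2(full, y, **kwargs) - cv_r2(X_base, y, **kwargs)
```

Using the same folds for both models keeps the comparison paired; a candidate that only duplicates existing descriptors yields a gain near zero.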

Finally, we outline reporting considerations and limitations. Because discretization and scale choices influence effect sizes and rankings, studies should report the gray-level discretization ($N_g$), thresholding strategy, wavelet family and levels (when applicable), and the specific scale sets used for box counting and lacunarity. Our conclusions are based on simulated regions of interest with controlled heterogeneity; external validation across imaging modalities, anatomies, acquisition protocols, and segmentation approaches is warranted. Future work should examine robustness to preprocessing steps such as resampling and filtering, inter-software reproducibility, and the stability of Frac and Lac under varying ROI sizes and boundary conditions. Extending to multiple wavelet levels and systematically tuning scale sets may further clarify the complementary multiscale roles of Frac and Lac.

6. Simple Summary

Fractal descriptors such as fractal dimension (Frac) and lacunarity (Lac) are often proposed to capture multiscale texture complexity, but their added value over standardized radiomic features is uncertain. Using controlled two-dimensional simulations, we compared Frac and Lac with 92 Image Biomarker Standardisation Initiative (IBSI) texture descriptors under two settings: a baseline analysis without wavelets and a wavelet-augmented analysis that recomputed first-order and GLCM features in each sub-band. In both settings, a stable two-block organization emerged: Frac aligned with high-frequency, contrast/difference, and short-run statistics, whereas Lac aligned with large-structure, homogeneity, and long-run statistics. With wavelets included, the strongest pairwise associations became very large, indicating that under homogeneous conditions fractal metrics can be partly redundant with established features. Nevertheless, clustering, correlation networks, and low-dimensional embeddings consistently showed that Frac and Lac occupy overlapping yet separable neighborhoods, clarifying when they can add interpretable multiscale information (e.g., scale-dependent irregularity or void heterogeneity) and when they may be safely omitted. Practical guidance for feature selection and interpretation is summarized in Table 8.

Table 8.

Compact guidance for using fractal descriptors with IBSI radiomics.

7. Discussion

7.1. Interpretation and Practical Guidance

The very strong correlations observed in the wavelet-augmented setting underscore that fractal dimension (Frac) and lacunarity (Lac) are not universally independent of standardized radiomic features. Under controlled, stationary texture conditions, both measures align closely with IBSI families that already encode high-frequency contrast (for Frac) or low-frequency homogeneity (for Lac). In such cases, the practical gain from including fractal descriptors in predictive models is likely minimal, as their variance can be absorbed by contrast-, difference-, or run-length-based features, or by their wavelet detail analogues.

However, these high correlations also have a clear interpretation: they reveal that fractal metrics quantify intensity organization at scales comparable to those of certain standardized descriptors, providing a confirmatory rather than contradictory view. In datasets where multiscale heterogeneity, irregular boundaries, or void-like texture patterns are central, the same measures may capture complementary information not reflected in single-scale features. This is particularly relevant in biological or physical systems where structural variability occurs over multiple resolutions (for example, infiltrative tumors or heterogeneous parenchymal patterns).

From a modeling standpoint, we recommend three practical steps. First, examine pairwise and multimetric similarity (for example, Pearson correlation and distance correlation) before including fractal descriptors, and remove them when redundancy exceeds a prespecified threshold. Second, retain Frac and Lac when their mechanistic interpretation aligns with the hypothesis of scale-dependent organization. Third, when redundancy is unavoidable, use dimensionality-reduction or feature-importance frameworks to confirm whether Frac or Lac contribute incremental predictive value. These recommendations convert the high correlations observed here into actionable guidance for applied researchers using fractal features alongside IBSI-compliant radiomics (see Table 8).
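The first step can be sketched as a simple screen on absolute Pearson correlation. The cutoff used in the text is not reproduced here, so `threshold` is a required argument and the 0.9 in the example is purely hypothetical; a multimetric screen would add dCor or the composite score in the same way. Names are illustrative.

```python
import numpy as np


def redundancy_screen(candidate, existing, threshold):
    """Return (keep, max_abs_r): the candidate is flagged redundant when max |r| exceeds threshold."""
    candidate = np.asarray(candidate, dtype=float)
    existing = np.asarray(existing, dtype=float)
    abs_r = [abs(np.corrcoef(candidate, existing[:, j])[0, 1]) for j in range(existing.shape[1])]
    max_abs_r = float(max(abs_r))
    return max_abs_r <= threshold, max_abs_r


rng = np.random.default_rng(9)
ibsi_like = rng.standard_normal((150, 4))  # stand-in for existing descriptors
frac_like = 2.0 * ibsi_like[:, 1] + 0.1 * rng.standard_normal(150)
print(redundancy_screen(frac_like, ibsi_like, threshold=0.9))  # hypothetical cutoff
```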

7.2. Limitations, Sensitivity, and External Validation

This study is intentionally simulation-grounded and two-dimensional, providing a transparent setting to attribute observed effects directly to feature definitions. However, such a design does not substitute for empirical validation on clinical images, and several important limitations remain.

First, we did not experimentally vary voxel size, reconstruction kernels, or scanner protocols on real CT, MR, or PET data. Previous research has shown that radiomic features can be highly sensitive to these acquisition and preprocessing factors, motivating harmonization procedures and rigorous documentation of preprocessing choices [4,21,22,23,24]. The present results should therefore be interpreted as methodological benchmarks under controlled texture generation rather than as demonstrations of clinical robustness.

Second, both fractal and matrix-based descriptors can be influenced by the definition of the region of interest. We did not assess inter- or intra-rater segmentation variability in this study. To address this in future work, we propose a simple and reproducible stress test based on small perturbations of the mask boundary. This can include morphological erosion or dilation by one to three pixels and random boundary jitter. Reporting the rank-based stability of feature–feature similarities and nearest-neighbor relationships under such perturbations would provide a quantitative sense of segmentation sensitivity.
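The proposed stress test can be sketched without external dependencies: erosion and dilation with a 3×3 structuring element (repeated one to three times) and random flips restricted to a one-pixel band around the boundary. The flip probability, the structuring element, and the helper names are hypothetical; `scipy.ndimage.binary_erosion` and `binary_dilation` provide equivalent morphology if SciPy is available.

```python
import numpy as np


def shift(mask, dy, dx):
    """Shift a boolean mask by (dy, dx), filling vacated pixels with False."""
    out = np.zeros_like(mask)
    H, W = mask.shape
    src = mask[max(0, -dy):H - max(0, dy), max(0, -dx):W - max(0, dx)]
    out[max(0, dy):H - max(0, -dy), max(0, dx):W - max(0, -dx)] = src
    return out


def dilate(mask, iterations=1):
    """Binary dilation with a 3x3 square structuring element."""
    out = mask.copy()
    for _ in range(iterations):
        acc = out.copy()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                acc |= shift(out, dy, dx)
        out = acc
    return out


def erode(mask, iterations=1):
    """Binary erosion with a 3x3 square structuring element (outside counts as background)."""
    out = mask.copy()
    for _ in range(iterations):
        acc = out.copy()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                acc &= shift(out, dy, dx)
        out = acc
    return out


def jitter_boundary(mask, rng, flip_prob=0.3):
    """Randomly flip pixels in a one-pixel band on either side of the mask boundary."""
    band = dilate(mask) & ~erode(mask)
    flips = band & (rng.random(mask.shape) < flip_prob)
    return mask ^ flips
```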

Third, we outline several lightweight robustness checks that can be reproduced within our synthetic framework to approximate the impact of acquisition and preprocessing differences. These include (1) down- and up-sampling the regions of interest to simulate changes in voxel size; (2) applying mild Gaussian blurring or adding controlled Gaussian or Poisson noise before discretization to approximate reconstruction variability; and (3) performing slight mask modifications to emulate segmentation variability. For each of these perturbations, one can evaluate Spearman correlations between the original and perturbed feature vectors, the stability of composite similarity rankings for Frac and Lac, and the persistence of the two-block organization observed in heatmaps and embeddings. These procedures provide an immediate sense of robustness without overstating generalizability to clinical imaging.
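The acquisition-style perturbations can be sketched in NumPy as well. The blur width, down-sampling factor, and noise level are placeholders, only additive Gaussian noise is shown, and the helper names are hypothetical; `rank_correlation` assumes tie-free inputs and would be applied to one feature's values across ROIs before and after a perturbation.

```python
import numpy as np


def gaussian_blur(X, sigma):
    """Separable Gaussian blur with reflective padding (kernel truncated at 3 sigma)."""
    radius = int(np.ceil(3 * sigma))
    t = np.arange(-radius, radius + 1)
    kernel = np.exp(-t ** 2 / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(X, radius, mode="reflect")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def down_up_sample(X, factor=2):
    """Block-average down-sampling, then nearest-neighbor up-sampling (voxel-size change)."""
    H, W = (X.shape[0] // factor) * factor, (X.shape[1] // factor) * factor
    small = X[:H, :W].reshape(H // factor, factor, W // factor, factor).mean(axis=(1, 3))
    return np.repeat(np.repeat(small, factor, axis=0), factor, axis=1)


def add_noise(X, sd, rng):
    """Additive Gaussian noise applied before discretization."""
    return X + rng.normal(0.0, sd, size=X.shape)


def rank_correlation(a, b):
    """Spearman correlation for tie-free data (Pearson on ranks)."""
    ra = np.argsort(np.argsort(a))
    rb = np.argsort(np.argsort(b))
    return float(np.corrcoef(ra, rb)[0, 1])
```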

Finally, a complete empirical validation will require analysis of multi-center imaging datasets that incorporate realistic variation in acquisition and reconstruction parameters, along with standardized segmentations and harmonization procedures. As a next step, we plan to extend this framework to public CT and MR datasets with IBSI-compliant preprocessing, apply harmonization methods such as ComBat where appropriate, and assess whether the Frac and Lac neighborhoods and the two-block feature organization observed here persist under real acquisition variability. This will be accompanied by systematic tests of segmentation and voxel-size sensitivity, consistent with IBSI recommendations. Together, these efforts will enable a more comprehensive validation of the findings reported here.

7.3. Stability and Sensitivity of Similarity Inferences

This subsection assesses the internal robustness of our similarity findings within the simulation, complementing the prior subsection, which focuses on external validity and real-data limitations. We therefore report internal robustness checks below and reserve full external (clinical) validation for future work.

Table 9 summarizes the composite-score distribution and a leave-one-metric-out (LOO) robustness check for the baseline (no-wavelet) and wavelet-augmented settings. Dispersion (SD ≈ 0.81–0.82; IQR ≈ 1.29–1.41) is nearly identical across baseline and wavelet analyses, and the strongest associations remain consistent (Frac with GLCM difference variance; Lac with GLCM correlation and homogeneity variants). Importantly, the LOO sensitivity shows that the top-30 neighbor sets are highly stable to how the composite is formed, with minimum overlaps between 0.70 and 0.93. Although the global rank correlation between the full lists can drop when a metric is removed (reflecting minor reshuffling among mid-ranked, near-tied features), the neighborhoods that drive our conclusions and the visual block structure remain stable. These results indicate that the multimetric consensus and the two-block organization (contrast and difference versus structure and homogeneity) are robust to reasonable perturbations of the similarity recipe.

Table 9.

Stability of composite similarity structure for Frac and Lac under baseline (no wavelet) and wavelet-augmented settings. The composite is the z-average of $|r|$, $|\rho|$, distance correlation (dCor), maximal information coefficient (MIC), and cosine similarity. Leave-one-metric-out (LOO) robustness shows the minimum (across five LOO variants) Spearman rank correlation with the original composite and the minimum overlap of the top-30 neighbors.
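The leave-one-metric-out check summarized in Table 9 can be sketched as follows. The input layout (metrics as rows, comparison features as columns) and the helper names are assumptions, and Spearman correlation is computed on tie-free ranks; the two returned numbers correspond to the minimum rank correlation and minimum top-30 overlap described in the caption.

```python
import numpy as np


def composite(M):
    """Average of per-metric z-scores (rows: metrics, columns: comparison features)."""
    Z = (M - M.mean(axis=1, keepdims=True)) / M.std(axis=1, keepdims=True)
    return Z.mean(axis=0)


def ranks(v):
    """0-based ranks for tie-free data."""
    return np.argsort(np.argsort(v))


def loo_robustness(M, top=30):
    """Minimum Spearman correlation and minimum top-`top` overlap over leave-one-metric-out composites."""
    M = np.asarray(M, dtype=float)
    full = composite(M)
    full_top = set(np.argsort(-full)[:top].tolist())
    rhos, overlaps = [], []
    for m in range(M.shape[0]):
        reduced = composite(np.delete(M, m, axis=0))
        rhos.append(np.corrcoef(ranks(full), ranks(reduced))[0, 1])
        overlaps.append(len(full_top & set(np.argsort(-reduced)[:top].tolist())) / top)
    return float(min(rhos)), float(min(overlaps))
```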

7.4. Extension to 3D and Neighborhood Topology

Although all analyses in this study are based on two-dimensional (2D) simulated textures, the underlying framework extends directly to three-dimensional (3D) radiomic computation using IBSI-compliant 3D gray-level co-occurrence, run-length, and size-zone matrices with 26-neighbor connectivity [3,4,5,6,7]. The computational scaling and structural correlation between 2D and 3D metrics were examined on pilot 3D synthetic volumes generated under the same stochastic parameters as the 2D simulation. Results are summarized in Table 10.

Table 10.

Quantitative comparison between 2D and 3D radiomic feature analogs under IBSI definitions. Pilot tests used synthetic volumes with isotropic spacing. Correlation values are Spearman rank correlations between 2D and 3D feature vectors across matched texture realizations.

Fractal descriptors (fractal dimension and lacunarity) extend naturally to 3D using cubic box counting and gliding-box lacunarity with cubic windows. Both definitions are scale- and dimension-agnostic, so their relative behavior with respect to standardized texture families is expected to remain qualitatively consistent. Empirical correlations (Table 10) suggest that 3D feature analogs preserve the same bipartite organization observed in 2D (contrast/difference versus structure/homogeneity) at a modestly higher computational cost. These pilot results support the feasibility of a 3D extension that is structurally consistent with the 2D benchmark.
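Because box counting only needs to tile the domain, a dimension-agnostic version covers both the 2D analysis and the 3D extension with cubic boxes. The sketch below is illustrative rather than the pilot code behind Table 10; the box sizes and helper names are placeholders.

```python
import numpy as np


def box_count_nd(mask, s):
    """Occupied s-sized (hyper)cubic boxes in a boolean array of any dimension."""
    d = mask.ndim
    crop = tuple(slice(0, (n // s) * s) for n in mask.shape)  # crop each axis to a multiple of s
    m = mask[crop]
    blocks = m.reshape(sum(((n // s, s) for n in m.shape), ()))  # (n1/s, s, n2/s, s, ...)
    return int(blocks.any(axis=tuple(range(1, 2 * d, 2))).sum())


def box_counting_dimension_nd(mask, box_sizes=(1, 2, 4, 8)):
    """Slope of log N(s) versus log(1/s)."""
    sizes = np.asarray(box_sizes, dtype=float)
    counts = [box_count_nd(mask, int(s)) for s in sizes]
    slope, _ = np.polyfit(np.log(1.0 / sizes), np.log(counts), 1)
    return float(slope)
```

For a filled cube the estimate is 3, for a single plane 2, and for a line 1, which gives a quick check of the reshape-based tiling.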

8. Future Work

The central observation of this study is a stable two-group geometry: fractal dimension aligns with high-frequency variation, local contrast, and short runs, whereas lacunarity aligns with large-scale structure, long runs, and homogeneity. Building on this, several directions naturally follow.

First, external validation and harmonization deserve priority. Multi-center, fully three-dimensional CT, MR, and PET cohorts should be used to probe robustness to acquisition, reconstruction, voxel size, and segmentation variability. The extent of harmonization required (intensity standardization, resampling, and batch-effect mitigation) should be quantified with prospective protocols and phantoms, comparing methods under identical folds (for example, histogram standardization and ComBat-type approaches) [4,20,21,22,23,24]. Alternative estimators of fractal dimension (box counting, spectral or slope-based methods, variograms, and Higuchi-type approaches) should be assessed under the same preprocessing, and single-number lacunarity summaries should be replaced by full lacunarity curves evaluated over multiple scales and thresholds, reported with principled functionals and uncertainty intervals.
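A full lacunarity curve over scales and thresholds can be computed with the gliding-box estimator $\Lambda(r) = \mathrm{E}[M^2] / \mathrm{E}[M]^2$, where $M$ is the foreground mass in an $r \times r$ box. The sketch below is illustrative only: it assumes a 2D grayscale image given as nested lists, defines foreground as value ≥ threshold, slides the box with unit step, and uses placeholder threshold and scale grids; the function names are hypothetical.

```python
def gliding_box_lacunarity(binary, r):
    """Lambda(r) = E[M^2] / E[M]^2 over all r x r boxes slid one pixel at a time."""
    rows, cols = len(binary), len(binary[0])
    masses = [
        sum(binary[i + a][j + b] for a in range(r) for b in range(r))
        for i in range(rows - r + 1)
        for j in range(cols - r + 1)
    ]
    mean = sum(masses) / len(masses)
    if mean == 0:
        return float("nan")  # undefined when no box contains foreground
    second = sum(m * m for m in masses) / len(masses)
    return second / (mean * mean)


def lacunarity_curves(image, thresholds, scales):
    """One lacunarity curve (over box sizes) per binarization threshold."""
    curves = {}
    for t in thresholds:
        binary = [[1 if v >= t else 0 for v in row] for row in image]
        curves[t] = [gliding_box_lacunarity(binary, r) for r in scales]
    return curves


# A 4x4 checkerboard: gappy at the pixel scale, perfectly regular at r = 2.
board = [[(i + j) % 2 for j in range(4)] for i in range(4)]
print(lacunarity_curves(board, thresholds=[1], scales=[1, 2]))  # {1: [2.0, 1.0]}
```

Reporting the whole curve, rather than a single value at one box size, keeps the scale at which gappiness appears or vanishes visible, as the checkerboard example shows.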

Next, the link between fractal measures and multiscale representations merits systematic mapping. Wavelet levels and orientations (for example, levels 1–3 and beyond) and alternative filter banks (such as Gabor and steerable filters) should be compared to isolate which sub-bands drive the behavior of each measure. Ablation studies and partial-correlation or conditional-similarity analyses can test whether fractal dimension and lacunarity add information beyond the most predictive members of the GLCM, GLRLM, and GLSZM families. Design sweeps over gray-level discretization (number of gray levels and binning scheme), thresholds, scale grids, boundary conditions, and mask perturbations should be paired with bootstrap and permutation procedures, as well as stability assessments (subsampling and perturb–retrain), to obtain uncertainty intervals for similarity rankings and selection frequencies.
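The partial-correlation idea can be made concrete with the first-order formula $r_{xy\cdot z} = (r_{xy} - r_{xz} r_{yz}) / \sqrt{(1 - r_{xz}^2)(1 - r_{yz}^2)}$: does a fractal feature $x$ remain associated with a target $y$ once a single IBSI covariate $z$ is accounted for? The sketch assumes one covariate only (several covariates would require regression residualization), and the variable names and toy data are hypothetical.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / den


def partial_corr(x, y, z):
    """First-order partial correlation of x and y controlling for z."""
    rxy, rxz, ryz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz ** 2) * (1 - ryz ** 2))


# Toy data: frac and target share only the covariate (e.g. a GLCM contrast proxy).
contrast = [1, 2, 3, 4]
frac = [2, 1, 2, 5]    # contrast + noise orthogonal to contrast
target = [0, 5, 0, 5]  # contrast + a different orthogonal noise
print(pearson(frac, target))                 # about 0.333
print(partial_corr(frac, target, contrast))  # about 0.0
```

In this toy case the raw association of 1/3 is entirely explained by the shared covariate, which is the pattern that would indicate redundancy rather than incremental information.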

Evaluation should proceed within transparent, preregistered pipelines that employ nested model comparisons, leakage-free cross-validation with fixed folds, and regularization tuned to family-wise collinearity. Model effects are best communicated using SHAP values (SHapley Additive exPlanations, which attribute each feature’s contribution to individual predictions) [46], alongside partial-dependence profiles (PDPs) and accumulated local effects (ALEs). Calibration metrics and generalization gaps should be reported together with discrimination. Critically, incremental value should be tested over strong baselines: for fractal dimension, baselines rich in contrast and short-run descriptors (and, when applicable, wavelet-detail statistics); for lacunarity, baselines rich in homogeneity and correlation measures, as well as zone- and run-size emphases. Multiple-testing adjustments (e.g., Benjamini–Hochberg FDR) should be applied consistently across feature families and sub-bands [36]. These steps will help determine when fractal dimension and lacunarity provide non-redundant multiscale information beyond standard IBSI families, and will clarify which acquisition and preprocessing choices most strongly shape their behavior in practice.
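For reference, the Benjamini–Hochberg step-up adjustment can be written compactly: sort the $m$ p-values, scale the $k$th smallest by $m/k$, and enforce monotonicity from the largest rank downward. The sketch below is a generic illustration (the function name is hypothetical) and returns adjusted values in the input order.

```python
def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0  # adjusted values are capped at 1
    # Walk from the largest p-value down so adjusted values never decrease with rank.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, pvalues[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


# Example: four feature-level tests pooled across families or sub-bands.
print(benjamini_hochberg([0.01, 0.04, 0.03, 0.005]))
```

Applying one adjustment over the pooled set of tests, rather than separately per feature family, is what makes the correction consistent across families and sub-bands.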

Finally, as radiomic profiling of glioblastoma has demonstrated tangible prognostic value beyond conventional clinical and radiologic predictors [47], future work should assess whether the incremental information provided by fractal dimension and lacunarity translates into improved outcome modeling across neuro-oncology and other disease domains.

Author Contributions

Conceptualization, M.Z.; methodology, M.Z.; software, M.Z. and M.S.; validation, M.Z. and M.S.; formal analysis, M.Z.; investigation, M.Z.; resources, M.Z.; data curation, M.S.; writing—original draft preparation, M.Z.; writing—review and editing, M.S. and M.Z.; visualization, M.Z.; supervision, M.Z.; project administration, M.Z.; funding acquisition, M.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was funded by the College of Arts and Sciences and East Tennessee State University.

Institutional Review Board Statement

This study did not involve humans or animals and was based exclusively on simulated images.

Informed Consent Statement

This study did not involve human participants.

Data Availability Statement

The data presented in this study were generated entirely by simulation; no human or clinical imaging data were used.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A. Feature Definitions and Interpretations

Appendix A.1. Feature Definitions and Interpretations

Before listing the feature formulas, we define the notation used throughout Table A1. Let $\mathbf{x} = (x_1, \ldots, x_{N_p})$ be the vectorized intensities of X, $N_p$ the number of voxels, $\bar{x} = \frac{1}{N_p}\sum_{k=1}^{N_p} x_k$ the mean intensity, and $P_p$ the pth percentile of $\mathbf{x}$. For the binned histogram over $N_g$ gray levels, let $p(g)$ be the probability of bin g ($g = 1, \ldots, N_g$). Define $\mathbf{x}_{10\text{–}90} = \{x_k : P_{10} \le x_k \le P_{90}\}$ and its mean $\bar{x}_{10\text{–}90}$. Unless specified, logarithms in information measures use base 2 (bits). The naming, symbols, and formulas for first-order features follow the Image Biomarker Standardisation Initiative (IBSI) recommendations and the PyRadiomics reference implementation [4,10]; general radiomics background is provided in Gillies et al. [1] and Mayerhoefer et al. [2].

Table A1.

First-order intensity features (19) with formulas and interpretation. $N_p$ is the number of voxels; $p(g)$ denotes histogram probabilities. Formulas and naming align with IBSI, and the robust mean absolute deviation (rMAD) follows the PyRadiomics definition [4,10].


| Feature | Formula/Symbol | What It Captures (Interpretation) |
|---|---|---|
| Energy | | Overall signal power (scale-dependent); larger when intensities have large magnitude. |
| Total Energy | | Energy with physical units (in 2D, voxel area often set to 1). |
| RMS | | Root-mean-square; average magnitude. |
| Variance | | Dispersion about the mean; sensitive to outliers. |
| Standard Deviation | | Square root of variance; same interpretation on original scale. |
| Skewness | | Asymmetry of the distribution. |
| Kurtosis | | Tail heaviness/peakedness (non-excess); equals 3 for Gaussian. |
| Entropy | | Histogram unpredictability (bits). |
| Uniformity | | Histogram concentration (also “histogram energy”). |
| Mean | | Central tendency (average intensity). |
| Median | | Robust central tendency. |
| P10 | | Lower-tail intensity (10th percentile). |
| P90 | | Upper-tail intensity (90th percentile). |
| Minimum | | Absolute lowest observed intensity. |
| Maximum | | Absolute highest observed intensity. |
| IQR | | Middle spread; robust scale. |
| Range | | Full dynamic range of intensities. |
| MAD | | Mean absolute deviation. |
| rMAD | | Trimmed absolute deviation within the 10–90% band. |
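A few of the first-order features in Table A1 can be sketched directly from their interpretations. This is a minimal illustration, assuming a flat list of already-discretized intensities, histogram bins equal to the distinct gray levels, base-2 entropy, non-excess kurtosis, linear-interpolation percentiles, and rMAD computed on values between $P_{10}$ and $P_{90}$ inclusive; PyRadiomics' optional intensity shift for Energy is omitted, and the function names are hypothetical.

```python
import math
from collections import Counter


def percentile(values, p):
    """Linear-interpolation percentile (NumPy's default convention)."""
    s = sorted(values)
    pos = (len(s) - 1) * p / 100
    lo = math.floor(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def first_order(x):
    """A subset of first-order features; x must not be constant (kurtosis divides by m2)."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    probs = [c / n for c in Counter(x).values()]  # histogram over observed gray levels
    p10, p90 = percentile(x, 10), percentile(x, 90)
    trimmed = [v for v in x if p10 <= v <= p90]
    t_mean = sum(trimmed) / len(trimmed)
    return {
        "energy": sum(v * v for v in x),
        "entropy_bits": -sum(p * math.log2(p) for p in probs),
        "uniformity": sum(p * p for p in probs),
        "kurtosis": m4 / m2 ** 2,  # non-excess: equals 3 for a Gaussian
        "mad": sum(abs(v - mean) for v in x) / n,
        "rmad": sum(abs(v - t_mean) for v in trimmed) / len(trimmed),
    }


print(first_order([1, 2, 3, 4]))
```

For four equally frequent gray levels, entropy is 2 bits and uniformity is 0.25, which matches the intuition that the two measures move in opposite directions.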

Appendix A.2. GLCM Definitions and Feature Formulas

The gray-level co-occurrence matrix (GLCM) summarizes how often pairs of discretized gray levels occur at a fixed spatial offset; our notation and feature formulas follow the Image Biomarker Standardisation Initiative (IBSI) and the PyRadiomics reference implementation, with terminology rooted in the original work of Haralick and co-authors [4,5,10]. In our setup P is built at distance 1, aggregated over four angles (0°, 45°, 90°, 135°), symmetrized, and normalized so that $\sum_{i,j} P(i,j) = 1$ (angle aggregation and normalization as recommended by IBSI [4]).

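Formally, let $P(i,j)$, $i, j = 1, \ldots, N_g$, be the symmetric, normalized GLCM. Define the one-dimensional marginals $p_x(i) = \sum_j P(i,j)$ and $p_y(j) = \sum_i P(i,j)$; the means $\mu_x = \sum_i i\,p_x(i)$ and $\mu_y = \sum_j j\,p_y(j)$; the standard deviations $\sigma_x = \sqrt{\sum_i (i - \mu_x)^2 p_x(i)}$ and $\sigma_y = \sqrt{\sum_j (j - \mu_y)^2 p_y(j)}$; the gray-level sum distribution $p_{x+y}(k) = \sum_{i+j=k} P(i,j)$ for $k = 2, \ldots, 2N_g$; and the difference distribution $p_{x-y}(k) = \sum_{|i-j|=k} P(i,j)$ for $k = 0, \ldots, N_g - 1$. Entropy terms use the natural logarithm (as in IBSI) unless otherwise noted [4]: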

Table A2.

GLCM features (19) with formulas and interpretation. $P(i,j)$ is the symmetric, normalized co-occurrence for gray levels $i, j \in \{1, \ldots, N_g\}$; $p_x$, $p_y$ are marginals; $p_{x+y}$ and $p_{x-y}$ are sum and difference distributions. Formulas align with IBSI and PyRadiomics [4,10]; historical names follow Haralick et al. [5].


| Feature | Formula/Symbol | Interpretation |
|---|---|---|
| Contrast | | Off-diagonal emphasis; higher with strong edges. |
| Dissimilarity | | Linear-penalty version of contrast. |
| ASM | | Angular Second Moment; texture uniformity. |
| Energy | | Monotone with ASM (legacy definition). |
| Entropy | | Co-occurrence disorder. |
| Homogeneity (1) | | Rewards near-diagonal mass. |
| Homogeneity (2) | | Scale-normalized variant. |
| ID | | Inverse Difference; favors small gaps. |
| IDN | | Normalized ID. |
| Inv. Variance | | Strongly favors smooth textures. |
| Correlation | | Marginal association strength. |
| Max Prob. | | Dominant co-occurrence pair. |
| Sum Avg. | | Mean of sum distribution. |
| Sum Entropy | | Disorder of sum distribution. |
| Diff. Avg. | | Mean absolute difference. |
| Diff. Var. | | Spread of difference distribution. |
| Diff. Entropy | | Disorder of difference distribution. |
| IMC1 | | Entropy gap vs. marginals. |
| IMC2 | | Bounded [0, 1]; strength of dependence. |

All sums run over $i, j = 1, \ldots, N_g$. Using natural versus base-2 logarithms only rescales the entropy terms and does not affect ordering [4]. A symmetric, normalized, angle-aggregated P generally improves stability and comparability across directions [4]. If either $\sigma_x$ or $\sigma_y$ equals zero, the correlation is undefined and should be reported as NA, in keeping with reproducibility guidelines [4,10].
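The GLCM construction described above can be sketched as follows. The sketch assumes an image already discretized to integers $1, \ldots, N_g$, no ROI mask, and that counts from the four offsets are merged into one matrix before symmetrization and normalization (one of the IBSI aggregation options, consistent with the "aggregated over four angles" description); only four of the 19 features are shown, and the function names are hypothetical.

```python
import math

# (row, col) offsets for 0, 45, 90 and 135 degrees at distance 1.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]


def glcm(img, levels):
    """Angle-aggregated, symmetric, normalized GLCM for gray levels 1..levels."""
    P = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    for dr, dc in OFFSETS:
        for r in range(rows):
            for c in range(cols):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    i, j = img[r][c] - 1, img[rr][cc] - 1
                    P[i][j] += 1  # count the pair and its mirror (symmetrization)
                    P[j][i] += 1
    total = sum(map(sum, P))
    return [[v / total for v in row] for row in P]


def glcm_features(P):
    """Contrast, IDN, difference entropy (natural log) and correlation (None if sigma = 0)."""
    n = len(P)
    cells = [(i, j, P[i][j]) for i in range(n) for j in range(n)]  # 0-based indices
    px = [sum(row) for row in P]  # p_x equals p_y for a symmetric P
    mu = sum((i + 1) * p for i, p in enumerate(px))
    sigma = math.sqrt(sum((i + 1 - mu) ** 2 * p for i, p in enumerate(px)))
    diff = [0.0] * n  # difference distribution p_{x-y}(k), k = |i - j|
    for i, j, p in cells:
        diff[abs(i - j)] += p
    corr = None
    if sigma > 0:
        corr = sum((i + 1 - mu) * (j + 1 - mu) * p for i, j, p in cells) / sigma ** 2
    return {
        "contrast": sum((i - j) ** 2 * p for i, j, p in cells),
        "idn": sum(p / (1 + abs(i - j) / n) for i, j, p in cells),
        "diff_entropy": -sum(p * math.log(p) for p in diff if p > 0),
        "correlation": corr,
    }


print(glcm_features(glcm([[1, 2], [1, 2]], levels=2)))
```

A constant image yields zero contrast and an undefined correlation, which the sketch returns as None, mirroring the NA convention described above.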

Appendix A.3. GLRLM Definitions and Feature Formulas

The gray-level run-length matrix (GLRLM) quantifies how often contiguous runs of identical gray level occur and how long those runs persist along specified directions. Our notation and feature formulas follow the Image Biomarker Standardisation Initiative (IBSI) and the PyRadiomics reference implementation, with historical roots in the original run-length work of Galloway [6] and subsequent refinements [4,10]. In our setup the GLRLM is computed at pixel distance 1, aggregated over four angles (0°, 45°, 90°, 135°), and then normalized to a probability distribution (angle aggregation and normalization as recommended by IBSI [4]).

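Formally, let $R(i,r)$ be the (angle-aggregated) GLRLM, where $R(i,r)$ counts runs of length r at gray level i. Let $N_r = \sum_{i,r} R(i,r)$ denote the total number of runs. Define the normalized joint distribution $p(i,r) = R(i,r)/N_r$ with marginals $p_g(i) = \sum_r p(i,r)$ and $p_r(r) = \sum_i p(i,r)$.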

Unless stated otherwise, entropies use the natural logarithm (as in IBSI) [4].

Table A3.

GLRLM features (16) with formulas and interpretation. $p(i,r)$ is the normalized GLRLM; $p_g$, $p_r$ are marginals; $N_r = \sum_{i,r} R(i,r)$. Formulas align with IBSI/PyRadiomics [4,10]; names follow Galloway [6].


| Feature | Formula/Symbol | Interpretation |
|---|---|---|
| SRE | | Short-run emphasis; larger for fine, rapidly varying texture. |
| LRE | | Long-run emphasis; larger for coarse, extended uniform regions. |
| GLN (counts) | | Run mass concentrated in few gray levels (tone nonuniformity). |
| GLNN | | Scale-normalized GLN; reduces dependence on run count. |
| RLN (counts) | | Run mass concentrated in few lengths (scale nonuniformity). |
| RLNN | | Scale-normalized RLN; reduces dependence on run count. |
| RP (IBSI) | | Run density per pixel; higher when runs are more numerous. |
| LGRE | | Emphasizes runs at low gray levels (darker tones). |
| HGRE | | Emphasizes runs at high gray levels (brighter tones). |
| SRLGLE | | Short runs at low gray levels (fine, dark texture). |
| SRHGLE | | Short runs at high gray levels (fine, bright texture). |
| LRLGLE | | Long runs at low gray levels (coarse, dark texture). |
| LRHGLE | | Long runs at high gray levels (coarse, bright texture). |
| Run Entropy | | Disorder of the joint run distribution. |
| RLV (over $r$) | | Spread of run lengths; multiple scales ⇒ large. |
| GLV (over $i$) | | Spread across gray levels among runs. |

Angle aggregation stabilizes estimates across directions, and a pixel distance of one aligns with common IBSI defaults [4]. If entropies are reported in bits, replace $\ln$ with $\log_2$; this only rescales values and does not affect rankings. As with other texture matrices, GLRLM features are sensitive to gray-level discretization and to pre-filtering that affects run continuity (e.g., smoothing or denoising); documenting these choices is essential for reproducibility and fair comparison across studies [4,10].
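Run extraction and the two emphasis features SRE and LRE can be sketched as below. The sketch assumes an image already discretized to integer gray levels, no ROI mask, and merging of the four directions by summing their run counts into one matrix; the remaining GLRLM features follow the same pattern over $p(i,r)$. The function names are hypothetical.

```python
from collections import Counter

# (row step, col step) for the four run directions: 0, 90, 45 and 135 degrees.
DIRECTIONS = [(0, 1), (1, 0), (1, 1), (1, -1)]


def glrlm(img):
    """Angle-aggregated run counts R[(gray level, run length)]."""
    rows, cols = len(img), len(img[0])

    def inside(r, c):
        return 0 <= r < rows and 0 <= c < cols

    R = Counter()
    for dr, dc in DIRECTIONS:
        for r in range(rows):
            for c in range(cols):
                g = img[r][c]
                if inside(r - dr, c - dc) and img[r - dr][c - dc] == g:
                    continue  # this pixel continues a run counted earlier
                length, nr, nc = 1, r + dr, c + dc
                while inside(nr, nc) and img[nr][nc] == g:
                    length, nr, nc = length + 1, nr + dr, nc + dc
                R[(g, length)] += 1
    return R


def sre_lre(R):
    """Short- and long-run emphasis from the normalized run distribution."""
    n_runs = sum(R.values())
    sre = sum(count / length ** 2 for (_, length), count in R.items()) / n_runs
    lre = sum(count * length ** 2 for (_, length), count in R.items()) / n_runs
    return sre, lre


print(sre_lre(glrlm([[1, 1, 2, 2]])))
```

An image with no two adjacent equal pixels in any direction has only runs of length 1, so both SRE and LRE equal 1; longer runs push LRE up and SRE down.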

Appendix A.4. GLSZM Definitions and Feature Formulas

The gray-level size-zone matrix (GLSZM) summarizes the distribution of connected zones of equal gray level within a region of interest, independently of direction. It was introduced for image texture analysis by Thibault et al. [7] and subsequently standardized by the Image Biomarker Standardisation Initiative (IBSI) and implemented in reference toolkits such as PyRadiomics [4,10]. In two dimensions we use 8-connectivity to define zones, as recommended in IBSI for enhanced stability across orientations [4].

Formally, let $S(i,s)$ be the matrix where $S(i,s)$ counts the number of 8-connected zones of size s observed at gray level i in the discretized image. Let $N_z = \sum_{i,s} S(i,s)$ denote the total number of zones, and let $N_p$ be the number of pixels (voxels in 3D) in the ROI. Define the normalized joint distribution $p(i,s) = S(i,s)/N_z$ with marginals $p_g(i) = \sum_s p(i,s)$ and $p_s(s) = \sum_i p(i,s)$.

Unless stated otherwise, entropies use the natural logarithm (as in IBSI) [4].
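Zone extraction with 8-connectivity can be sketched with a breadth-first flood fill. This is an illustration only, assuming an integer-discretized 2D image without an ROI mask; the 4-connectivity option is included purely to show how the neighborhood choice changes the zone count, and small-area emphasis (SAE), large-area emphasis (LAE), and zone percentage stand in for the full feature set. The function names are hypothetical.

```python
from collections import Counter, deque


def glszm(img, connectivity=8):
    """Size-zone counts S[(gray level, zone size)] via BFS flood fill."""
    rows, cols = len(img), len(img[0])
    if connectivity == 8:
        steps = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]
    else:
        steps = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    seen = [[False] * cols for _ in range(rows)]
    S = Counter()
    for r in range(rows):
        for c in range(cols):
            if seen[r][c]:
                continue
            g, size = img[r][c], 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                cr, cc = queue.popleft()
                size += 1
                for dr, dc in steps:
                    nr, nc = cr + dr, cc + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and not seen[nr][nc] and img[nr][nc] == g):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            S[(g, size)] += 1
    return S


def zone_summary(S, n_pixels):
    """Small-area emphasis, large-area emphasis and zone percentage."""
    n_zones = sum(S.values())
    return {
        "sae": sum(count / s ** 2 for (_, s), count in S.items()) / n_zones,
        "lae": sum(count * s ** 2 for (_, s), count in S.items()) / n_zones,
        "zone_percentage": n_zones / n_pixels,
    }


img = [[1, 1, 2], [1, 2, 2], [3, 3, 3]]
print(zone_summary(glszm(img), n_pixels=9))
```

On a 2 × 2 checkerboard, 8-connectivity merges diagonal pixels into two zones while 4-connectivity yields four, which is why the connectivity rule must be reported alongside GLSZM features.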

Table A4.

GLSZM features (16) with formulas and interpretation. $p(i,s)$ is the normalized GLSZM; $p_g$, $p_s$ are marginals; $N_z$ is the number of zones; $N_p$ is the pixel count. Formulas align with IBSI/PyRadiomics [4,7,10].

Table A4.