Abstract

This research tackles an essential gap in understanding how passengers prefer to interact with artificial intelligence (AI) or human agents in airline customer service contexts. Using a mixed-methods approach that combines statistical analysis with fuzzy set theory, we examine these preferences across a range of service scenarios. With data from 163 participants’ Likert scale responses, our qualitative analysis via fuzzy set methods complements the quantitative results from regression analyses, highlighting a preference model contingent on context: passengers prefer AI for straightforward, routine transactions but lean towards human agents for nuanced, emotionally complex issues. Our regression findings indicate that perceived benefits and simplicity of tasks significantly boost satisfaction and trust in AI services. Through fuzzy set analysis, we uncover a gradient of preference rather than a stark dichotomy between AI and human interaction. This insight enables airlines to strategically implement AI for handling routine tasks while employing human agents for more complex interactions, potentially improving passenger retention and service cost-efficiency. This research not only enriches the theoretical discourse on human–computer interaction in service delivery but also guides practical implementation with implications for AI-driven services across industries focused on customer experience.

1. Introduction

The aviation sector, characterized by its intricate operations and diverse customer demands, is undergoing significant transformation due to the rapid advancement of artificial intelligence (AI) technologies (Buha et al., 2024; Gurjar et al., 2024). Airlines are increasingly leveraging AI to improve operational efficiency, personalize customer experiences, and enhance overall satisfaction (Hanif & Jaafar, 2023; Vevere et al., 2024). AI-driven innovations, such as chatbots, virtual assistants, and predictive analytics, are instrumental in streamlining operations, expediting service delivery, and elevating customer satisfaction (Seymour et al., 2020; Shiwakoti et al., 2022). For instance, AI chatbots efficiently handle routine tasks like booking modifications—such as Southwest Airlines’ chatbot assisting passengers with flight rebookings (Baruch, 2025)—while AI-powered systems provide instant responses to inquiries about baggage policies or online check-in processes, as seen with Delta Air Lines’ AI-driven customer service tools (McCann, 2025).

Despite these advancements, the growing reliance on AI in customer service presents a critical challenge: striking a balance between technological automation and the human touch, particularly in interactions requiring emotional sensitivity or complexity (Erlei et al., 2022; Feldman & Grant-Smith, 2022; Ugural et al., 2024). While AI excels in managing straightforward, repetitive tasks, its limitations become apparent in more nuanced scenarios. For example, during a major flight disruption—such as the 2022 Southwest Airlines scheduling crisis caused by extreme weather—AI systems could efficiently reroute passengers but struggled to address the emotional distress and frustration of affected travelers, necessitating human intervention (Helmrich et al., 2023). Although research has extensively explored AI’s technical capabilities, the human dimensions of customer interactions remain underexplored (Sagirli et al., 2024). A notable research gap exists regarding passenger preferences for AI-versus-human service agents across different contexts. While AI is effective for routine operations, its ability to manage emotionally charged, culturally sensitive, or complex situations—such as handling a passenger’s grief over a missed connection for a family emergency—remains limited (Chi & Hoang Vu, 2022; Singh & Singh, 2018). Passenger dissatisfaction in such cases, when AI fails to meet emotional needs, can undermine customer loyalty and damage an airline’s reputation. Thus, airlines must navigate these evolving customer expectations while maintaining cost efficiency (Gurjar et al., 2024; Susanto & Khaq, 2024).

To address these challenges, this study explores three key research questions: (1) How do passenger preferences for AI-versus-human interaction vary across different airline customer service scenarios? (2) What are the primary factors (e.g., task complexity, emotional labor, and trust) influencing these preferences? (3) How can airlines design optimal hybrid service models that integrate AI and human agents to enhance passenger satisfaction? In this context, emotional labor refers to the effort required to manage emotions during customer interactions, while trust in AI reflects confidence in the reliability and ethical performance of AI systems.

This research adopts a robust mixed-methods approach, integrating advanced statistical analyses with fuzzy set theory to capture the nuances of passenger preferences. The study is anchored in established theoretical frameworks, including the Technology Acceptance Model (TAM) (Davis et al., 1989), Emotional Labor Theory (Mednick, 1985), and Trust in Technology Theories (Dahlberg et al., 2003), providing a comprehensive lens through which to examine passenger interactions with AI. The subsequent sections will outline the conceptual framework, detail the methodology, analyze the findings, and discuss the theoretical and practical implications of this research.

2. Conceptual Background and Hypotheses

2.1. Existing Theoretical Frameworks

This section provides a comprehensive theoretical foundation for understanding passenger preferences for AI-versus-human interactions in airline customer service. It extends beyond the Technology Acceptance Model (TAM) by incorporating alternative frameworks that address cognitive, emotional, social, and trust-related dimensions of human–AI interactions.

2.1.1. Technology Acceptance Model (TAM) and Its Limitations

The Technology Acceptance Model (TAM), introduced by Davis et al. (1989), is a foundational framework for understanding technology adoption. It posits that Perceived Usefulness (PU)—the degree to which a user believes a technology enhances task performance—and Perceived Ease of Use (PEOU)—the perceived effortlessness of using the technology—are key determinants of user acceptance. TAM has been widely applied to AI-driven customer service contexts due to its broad applicability. For instance, in airline customer service, passengers may perceive AI chatbots as useful and easy to use for routine tasks like booking modifications or checking flight statuses (Shiwakoti et al., 2022).

However, TAM’s cognitive focus limits its explanatory power in human–AI interactions within service environments. It overlooks emotional, social, and contextual factors—such as trust, empathy, and emotional engagement—that are critical in customer service (Authayarat & Umemuro, 2012; Truran, 2022; Ugural & Giritli, 2021). For example, while AI excels in routine inquiries, it struggles in emotionally charged situations like flight cancellations, where passengers require empathy and nuanced understanding (Van Den Hoven Van Genderen et al., 2022). Consequently, TAM fails to fully capture passenger preferences in scenarios where AI’s perceived usefulness diminishes due to its lack of emotional intelligence.

2.1.2. Emotional Labor Theory in Service Interactions

Emotional Labor Theory (ELT), introduced by Hochschild (1979), provides a complementary perspective by focusing on the emotional regulation required in service roles. Emotional labor involves managing emotions to meet job expectations, distinguishing between surface acting (modifying outward expressions) and deep acting (adjusting internal emotions) (Sousa et al., 2024). In airline customer service, human agents perform emotional labor by displaying empathy, patience, and reassurance, which significantly enhances passenger satisfaction (Mabotja & Ngcobo, 2024; Seymour et al., 2020).

However, AI lacks the capacity for genuine emotional labor, making it less effective in interactions requiring human warmth and adaptability. For instance, during a flight disruption, human agents can provide personalized reassurance, whereas AI’s standardized responses may exacerbate passenger frustration (Helmrich et al., 2023). This limitation underscores the persistent need for human agency in emotionally complex service scenarios.

2.1.3. Trust in Technology and AI Systems

Trust in Technology Theory (Dahlberg et al., 2003) highlights trust as a critical determinant of passenger willingness to engage with AI-driven customer service. Trust is influenced by factors such as competence, reliability, transparency, and ethical considerations. Passengers are more likely to use AI systems they perceive as reliable and transparent (Cheong, 2024). However, concerns about AI’s opaque decision-making processes—often described as a “black box”—can erode trust (Z. Li, 2024). Transparent operations, explainable outcomes, and ethical safeguards are, thus, essential for fostering passenger confidence in AI-driven interactions.

2.1.4. Social Presence Theory and Computers Are Social Actors (CASA) Paradigm

Social Presence Theory (Short et al., 1976) posits that the perceived presence in communication influences user engagement. In airline customer service, passengers may prefer human agents due to their ability to convey social warmth and responsiveness. Similarly, the Computers Are Social Actors (CASA) Paradigm (Nass et al., 1994) suggests that individuals apply social norms to AI interactions, expecting AI to exhibit human-like behaviors (McKee et al., 2024; Shen et al., 2024). When AI fails to meet these expectations—e.g., by providing impersonal responses in emotionally sensitive situations—passengers may experience dissatisfaction, reinforcing their preference for human agents.

2.2. AI Applications and Passenger Preferences in Airline Customer Service

This section integrates insights from the broader service industry and airline-specific contexts to examine AI’s current applications and the nuances of passenger preferences, drawing on the theoretical frameworks discussed above.

2.2.1. Current and Emerging Applications of AI in Airline Customer Service

AI is transforming airline customer service by enhancing operational efficiency and passenger experiences (Duca et al., 2022; Sohn et al., 2023). Key applications include:

- AI-powered chatbots and virtual assistants: Used for flight bookings, inquiries, and check-ins (e.g., Southwest Airlines’ chatbot for rebookings) (Helmrich et al., 2023).

- Personalized recommendations: Tailored offers based on passenger preferences (Duca et al., 2022).

- Predictive analytics: Anticipating and addressing flight disruptions (Helmrich et al., 2023).

- Self-service technologies: AI-driven kiosks and airport wayfinding systems.

These applications demonstrate AI’s strengths in speed, efficiency, and availability, aligning with TAM’s emphasis on perceived usefulness and ease of use (Davis et al., 1989).

2.2.2. The Nuances of Passenger Experience and the Persistent Need for Human Agency

Despite AI’s advantages, human agents remain essential for interactions requiring emotional intelligence and problem-solving. Scenarios such as flight cancellations, lost baggage claims, and passenger complaints demand empathy and adaptability that AI cannot replicate (Hochschild, 1979; Short et al., 1976). For example, during the 2022 Southwest Airlines scheduling crisis, AI could reroute passengers but failed to address their emotional distress, highlighting the persistent need for human intervention (Helmrich et al., 2023; Shiwakoti et al., 2022). This aligns with Emotional Labor Theory and Social Presence Theory, emphasizing the human touch in emotionally charged situations.

2.2.3. Towards a Hybrid Service Model

Due to AI’s strengths and limitations, a hybrid approach—combining AI for routine tasks and human agents for complex interactions—offers an optimal solution. Airlines can maximize efficiency by assigning AI to transactional inquiries (e.g., booking changes) while ensuring seamless escalation to human agents when emotional complexity arises (Buha et al., 2024; Franzoni, 2023; McKee et al., 2024). This model leverages TAM for routine tasks and ELT and Trust in Technology Theory for human-centric interactions.

2.3. Hypotheses Development and Theoretical Justification

The following hypotheses are derived from the theoretical frameworks and empirical insights discussed above, providing a foundation for the study’s investigation of passenger preferences.

Hypothesis 1.

Passengers will exhibit a higher preference for AI-driven interactions for routine and transactional customer service inquiries within the airline industry. This hypothesis is grounded in the Technology Acceptance Model (TAM), which suggests that AI is perceived as more useful and efficient in handling transactional tasks where emotional engagement is minimal (Davis et al., 1989; Shiwakoti et al., 2022).

Hypothesis 2.

Passengers will exhibit a higher preference for human–agent interactions for complex and emotionally charged customer service issues within the airline industry. This is aligned with Emotional Labor Theory, where human agents are better equipped to provide empathy, reassurance, and tailored responses in high-stakes interactions (Hochschild, 1979).

Hypothesis 3.

A higher perceived importance of emotional labor in a customer service scenario will be positively associated with a preference for human–agent interaction. Rooted in Emotional Labor Theory, service interactions requiring emotional engagement, such as dispute resolution or stress-inducing situations, increase passenger reliance on human agents (Hochschild, 1979; Mabotja & Ngcobo, 2024).

Hypothesis 4.

Higher levels of trust in AI systems will be positively associated with a preference for AI-driven interactions in airline customer service. This is grounded in Trust in Technology Theory, where trust in AI—shaped by perceived reliability, transparency, and ethicality—directly influences passenger acceptance of AI-driven services (Cheong, 2024; Dahlberg et al., 2003).

Hypothesis 5.

Passenger satisfaction will be higher when interacting with human agents compared to AI systems in airline customer service scenarios requiring empathy and personalized attention. This is supported by Social Presence Theory, which posits that human agents provide a greater sense of connection and personalized care in emotionally sensitive situations (Shen et al., 2024; Short et al., 1976).

Hypothesis 6.

Higher perceived transparency of AI systems in airline customer service will lead to increased passenger trust in those systems. Based on Trust in Technology Theory, AI transparency fosters greater user confidence by improving understanding and predictability (Franzoni, 2023; Z. Li, 2024).

Hypothesis 7.

Passengers will express a preference for a hybrid model of airline customer service that allows for a seamless transition between AI and human agents based on the complexity and nature of their inquiry. This hypothesis is informed by the combined insights of the Technology Acceptance Model, Emotional Labor Theory, Trust in Technology Theory, and Social Presence Theory, suggesting that a hybrid model optimizes passenger satisfaction by leveraging AI’s efficiency for routine tasks and human agents’ emotional intelligence and adaptability for complex, empathy-driven interactions (Davis et al., 1989; Hochschild, 1979; Short et al., 1976).

3. Research Method

3.1. Research Design: A Mixed-Methods Approach

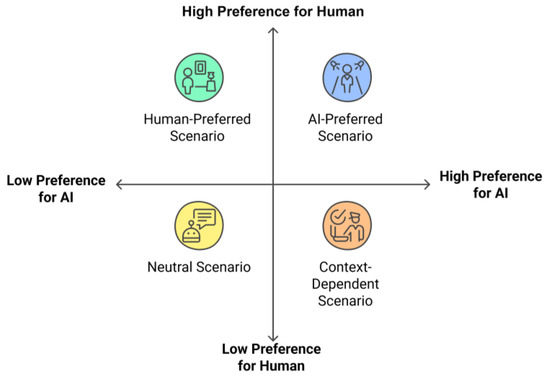

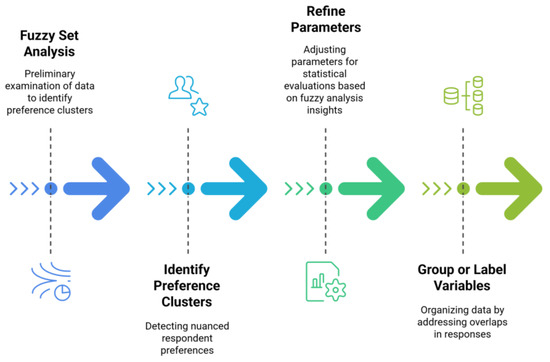

This study adopts a comprehensive mixed-methods research design that strategically integrates statistical approaches with fuzzy set theory. As shown in Figure 1, the research process unfolds in four iterative stages, enabling a nuanced exploration of airline passenger preferences for human versus artificial intelligence (AI) customer service (Åkerblad et al., 2020; Gholami & Esfahani, 2019). The decision to combine fuzzy set analysis with standard statistical techniques stems from the need to understand not only the broad trends in passenger behavior but also the subtle gradations that can arise when travelers express partial or context-dependent preferences (Guerrini et al., 2023; Landry, 2012).

Figure 1.

Analyzing the interplay between service type, emotional labor, and AI trust in customer interaction preferences.

The iterative approach depicted in Figure 2 begins with Stage 1: Fuzzy Set Analysis, where Likert-scale data is preliminarily examined to identify nuanced preference clusters (e.g., those leaning “somewhat satisfied” rather than exclusively “satisfied” or “neutral”) (Dosek, 2021; Dragan & Isaic-Maniu, 2022). Stage 2 uses these insights to refine the parameters employed in subsequent statistical evaluations. For instance, if fuzzy set analysis reveals a significant overlap between “neutral” and “somewhat satisfied” respondents, researchers may group or label variables accordingly. Stage 3 focuses on applying quantitative methods, including Chi-Square tests, ANOVA, and Regression, to test the relationships among key predictors (e.g., passenger age, AI familiarity) and outcomes (e.g., trust, satisfaction) (He et al., 2021; Wu et al., 2022). Finally, Stage 4 integrates both fuzzy set insights and conventional statistical findings to form a holistic interpretation.

Figure 2.

An iterative fuzzy-statistical approach for analyzing Likert-scale data.

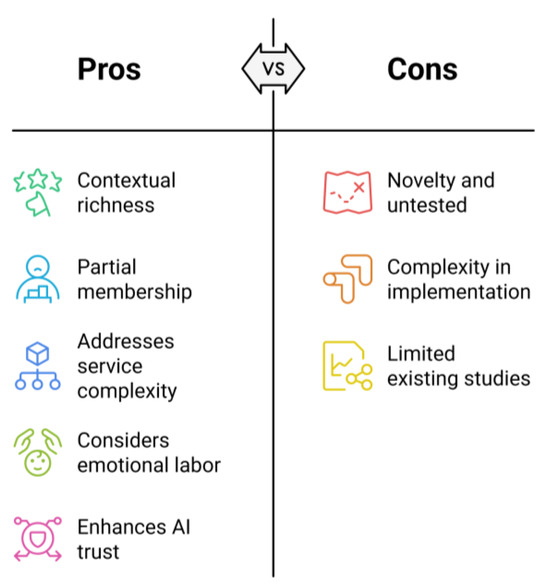

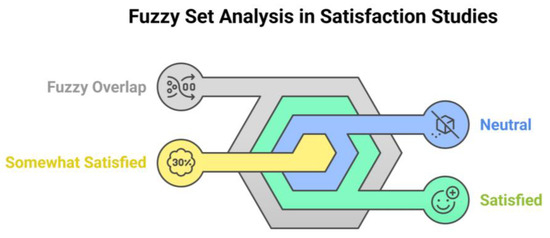

This iterative strategy ensures a contextually rich understanding of airline customer preferences. Traditional statistical methods alone typically enforce binary or strictly categorical cutoffs, while fuzzy set theory allows partial membership across categories—for instance, letting an individual be 0.6 “satisfied” and 0.4 “neutral” rather than forcing a single label (Alamá-Sabater et al., 2019; Lynch, 2019). As illustrated by the Conceptual Framework in Figure 3, these methods directly address factors such as service complexity, emotional labor, and AI trust, all of which can influence passenger choices. To date, few—if any—studies have specifically used fuzzy set techniques to dissect passenger service preferences in the airline sector, underscoring the novelty of this approach.

Figure 3.

Beyond binary categories: fuzzy membership for Likert scale responses.

3.2. Participants and Sampling Procedure

The primary objective of this investigation was to examine airline passengers’ preferences for human versus artificial intelligence (AI) customer service. Participants were recruited through digital questionnaires using a snowball sampling method, with the aim of reaching a heterogeneous participant pool (Dosek, 2021; Dragan & Isaic-Maniu, 2022). While snowball sampling was effective in reaching a diverse group, it may overrepresent individuals within certain social networks or those more inclined towards technology adoption. To gauge this risk, we collected detailed demographic data to assess the representativeness of our sample, comparing it where possible with publicly available statistics on airline passenger demographics. While precise comparisons are limited, the proportion of individuals with a bachelor’s degree in our sample is slightly higher than reported averages for general airline passenger demographics. The age distribution was somewhat skewed towards younger adults (25–44 years old), reflecting the demographic most active on the online platforms used for recruitment. Of the 200 individuals approached, 163 provided usable responses, a response rate of 81.5% (He et al., 2021; Wu et al., 2022). This sample size is considered sufficient based on rules of thumb for regression analysis and for detecting meaningful patterns within fuzzy set membership functions. Table 1 presents a summary of the participant demographics. Participants with extensive prior experience with AI were retained so that the sample captures the range of perspectives in the current technological landscape. The potential bias from snowball sampling, particularly the overrepresentation of individuals familiar with technology, is addressed in the analysis by controlling for prior AI experience.

Table 1.

Participant demographics.

3.3. Data Collection Instrument and Procedure

Data were collected through an online questionnaire hosted on Qualtrics that took 10–15 min to complete. Participation was voluntary, and no compensation or other benefits were offered. The questionnaire employed a five-point Likert scale to assess preferences and satisfaction (Perneger et al., 2020). It gathered data on demographics, travel behavior, communication preferences, prior AI customer service satisfaction, and overall trust in AI systems. A pilot test with 20 individuals was conducted to check item clarity and identify ambiguous wording (Bondarenko et al., 2022). Feedback from the pilot prompted rephrasing of technical items for better clarity and interpretation. The survey was distributed over four weeks in March and April. Initial outreach occurred via email and social media, followed by two reminder emails to boost participation. Participants were informed of study objectives, their withdrawal rights, and data privacy measures (Hendricks-Sturrup et al., 2022; Liu & Sun, 2023).

3.4. Data Analysis Techniques

3.4.1. Quantitative Data Analysis

Quantitative data analysis was conducted in Python (version 3.13) using libraries such as pandas, NumPy, SciPy, and statsmodels (Wang et al., 2022). Chi-square tests assessed associations between categorical variables such as age and service preferences; results are reported as chi-square statistics (χ2), degrees of freedom (df), and p-values. ANOVA compared mean satisfaction or preference levels across groups (W. Li et al., 2022), with results reported as F statistics, model and error degrees of freedom, and p-values. The practical significance of ANOVA results was evaluated using partial eta squared (η2), interpreted as a small, medium, or large effect. Multiple regression analyzed relationships between predictor variables (age, AI familiarity, and travel habits) and outcome variables (customer trust and satisfaction). Regression results are reported as standardized beta coefficients (β), t-values, and p-values for each predictor, with standardized coefficients of about 0.10, 0.30, and 0.50 interpreted as small, medium, and large effects, respectively. For example, the regression model for trust was specified as: Trust = β0 + β1(Age) + β2(AI Familiarity) + β3(Travel Habits) + ε. Statistical significance was evaluated at an alpha level of 0.05, with Holm–Bonferroni adjustments applied for multiple comparisons to limit Type I errors. Prior to running the ANOVA and regression analyses, we visually examined the assumptions of normality and homoscedasticity using Q-Q plots and scatter plots.
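The Holm–Bonferroni step-down adjustment mentioned above can be illustrated with a short sketch. The function name `holm_bonferroni` and the example p-values are hypothetical and are not taken from the study; the sketch only shows how the k-th smallest p-value is compared against alpha / (m − k + 1).

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Return a list of booleans, True where the null hypothesis is rejected.

    Illustrative implementation of the Holm step-down procedure.
    """
    m = len(p_values)
    # Indices of the p-values in ascending order.
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        # The (rank + 1)-th smallest p-value is tested against alpha / (m - rank).
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            # Step-down rule: stop at the first non-rejection.
            break
    return reject


# Hypothetical p-values for illustration only (not results from this study).
example_p = [0.001, 0.04, 0.03, 0.20]
decisions = holm_bonferroni(example_p)
```

Because the procedure stops at the first non-rejection, a p-value below 0.05 can still fail the adjusted threshold, which is how the adjustment limits Type I errors across several tests.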

3.4.2. Fuzzy Set Data Analysis

Fuzzy set theory was used to examine the Likert responses. This approach is well suited to ordinal responses, such as those from a Likert scale, which often exhibit ambiguity (Lubiano et al., 2016). Rather than confining responses to rigid categories, fuzzy set theory accepts that terms like “somewhat satisfied” span multiple classifications, thus better representing subjective opinions (Hersh & Caramazza, 1976; Kim et al., 2022). For instance, a response of “somewhat satisfied” could indicate a 0.6 membership in “satisfied” and 0.4 in “neutral”. These membership functions were modeled as triangular or trapezoidal shapes, depending on the response distribution (Ellerby et al., 2022). Figure 4 presents a triangular membership function for the “Somewhat Satisfied” category. The analyses were carried out with MATLAB’s Fuzzy Logic Toolbox (MATLAB version 9.14) and Python’s scikit-fuzzy library. Notably, the data largely remained in their original “fuzzy” form to preserve essential information (Frasch, 2022). Insights from fuzzy set analysis, including the overlap in opinions between “somewhat satisfied” and “satisfied”, guided the interpretation of subsequent conventional statistical results (Di Nardo & Simone, 2018). Participants primarily associated with the fuzzy overlap between “neutral” and “satisfied” were further examined in regression models for factors affecting this nuanced perspective. Consistent terminology, such as “fuzzy cluster” and “membership functions”, is maintained throughout this manuscript.

Figure 4.

Triangular membership function for “somewhat satisfied”.
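As a minimal sketch of the triangular membership idea, the following function maps a score on the five-point scale to partial memberships. The breakpoints used here (for example, “neutral” peaking at 3 and “satisfied” peaking at 4) and the score of 3.6 are assumptions chosen only to reproduce the 0.6 and 0.4 illustration in the text; they are not the parameters fitted in the study.

```python
def triangular(x, a, b, c):
    """Triangular membership with feet at a and c and peak at b (a < b < c)."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        # Rising edge between the left foot and the peak.
        return (x - a) / (b - a)
    # Falling edge between the peak and the right foot.
    return (c - x) / (c - b)


# Hypothetical score on the 1-5 scale, standing in for "somewhat satisfied".
score = 3.6
neutral = triangular(score, 2.0, 3.0, 4.0)    # about 0.4
satisfied = triangular(score, 3.0, 4.0, 5.0)  # about 0.6
```

With adjacent triangles that overlap in this way, the two memberships sum to one between neighbouring peaks, so a response is split across two categories rather than forced into one. The scikit-fuzzy library offers a comparable array-based function, `trimf`.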

3.5. Control Variables and Validity Assessments

3.5.1. Control Variables

To discern specific effects on customer preferences, various control variables were incorporated (Horovitz, 1980; Klarmann & Feurer, 2018). These variables, including participant age (as generational differences may affect technology adoption), AI familiarity, and prior AI customer service experiences, were primarily considered as covariates in the statistical models to mitigate extraneous variance in the relationships between independent and dependent variables (Hsu & Lin, 2023). While primarily acting as controls, these variables were also investigated for potential moderating effects on the relationship between service type and customer preferences. For instance, we assessed whether the impact of AI-powered customer service on trust varies according to participants’ existing AI familiarity and experience with AI customer service. Interaction terms were incorporated into regression models for moderation analyses, with age and AI familiarity variables being mean-centered.
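A moderation analysis of this kind can be sketched with ordinary least squares. Everything in the example is an assumption made for illustration: the data are simulated, the variable names (`ai_service`, `familiarity`, `trust`) are hypothetical, and the coefficients in `true_coefs` are arbitrary. The sketch shows only the mechanics of mean-centering a moderator and adding an interaction term.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 163  # same size as the study sample; the data themselves are simulated

# Hypothetical predictors: service type (0 = human, 1 = AI) and AI familiarity (1-5).
ai_service = rng.integers(0, 2, size=n).astype(float)
familiarity = rng.integers(1, 6, size=n).astype(float)

# Mean-center the moderator before forming the interaction term.
familiarity_c = familiarity - familiarity.mean()
interaction = ai_service * familiarity_c

# Design matrix: intercept, main effects, and interaction.
X = np.column_stack([np.ones(n), ai_service, familiarity_c, interaction])

# Arbitrary coefficients used only to simulate an outcome.
true_coefs = np.array([3.0, -0.4, 0.2, 0.3])
trust = X @ true_coefs + rng.normal(0.0, 0.1, size=n)

# Ordinary least squares fit; the last coefficient is the moderation effect.
coefs, *_ = np.linalg.lstsq(X, trust, rcond=None)
```

After centering, the coefficient on `ai_service` is the effect of service type at average familiarity, which is usually easier to interpret than the effect at a familiarity of zero.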

3.5.2. Validity Assessments

The robustness of the measures was evaluated before conclusions were drawn. Cronbach’s alpha was used to assess the internal consistency and reliability of survey items. Cronbach’s alpha was 0.82 for the trust scale and 0.78 for the satisfaction scale, and ranged from 0.75 to 0.80 for the preference items, all surpassing the 0.70 threshold and indicating substantial reliability (Barbera et al., 2020). Established scales ensured content and construct validity for trust and satisfaction, while the pilot test confirmed face validity through participant comprehension of survey questions. Feedback from the pilot test led to the simplification of overly technical phrasing concerning AI capabilities. Furthermore, non-parametric tests, including the Kruskal–Wallis test, corroborated the parametric tests’ findings, revealing no significant discrepancies. Multicollinearity among predictor variables was assessed using the Variance Inflation Factor (VIF), with values ranging from 1.2 to 2.0, indicating distinct constructs (Kyriazos & Poga, 2023). Lastly, the Durbin–Wu–Hausman test was conducted to investigate endogeneity, revealing it was not a significant issue, thereby enhancing confidence in the causal inferences drawn.
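The two diagnostics reported above can be computed as in the following sketch. The helper names `cronbach_alpha` and `vif` and the small response matrix are hypothetical; the formulas are the standard ones, alpha = k/(k − 1) × (1 − Σ item variances / variance of the total score) and VIF = 1/(1 − R²) from regressing each predictor on the others.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for a (respondents x items) matrix of scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_vars = items.var(axis=0, ddof=1)       # variance of each item
    total_var = items.sum(axis=1).var(ddof=1)   # variance of the summed scale
    return k / (k - 1) * (1.0 - item_vars.sum() / total_var)


def vif(predictors):
    """Variance inflation factor for each column of a predictor matrix."""
    X = np.asarray(predictors, dtype=float)
    factors = []
    for j in range(X.shape[1]):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        # Regress predictor j on an intercept plus the remaining predictors.
        A = np.column_stack([np.ones(len(y)), others])
        beta, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ beta
        r_squared = 1.0 - resid.var() / y.var()
        factors.append(1.0 / (1.0 - r_squared))
    return np.array(factors)


# Hypothetical responses of five participants to a three-item scale.
responses = [[4, 5, 4], [2, 3, 2], [5, 5, 4], [3, 3, 3], [4, 4, 5]]
alpha = cronbach_alpha(responses)

# Two uncorrelated hypothetical predictors, so each VIF is close to 1.
vifs = vif([[1, 1], [-1, 1], [1, -1], [-1, -1]])
```

A VIF near 1 means a predictor shares almost no variance with the others, which is the sense in which the low values reported above indicate distinct constructs.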

3.6. Ethical Considerations

Throughout the research process, strict adherence to ethical standards was maintained. Informed consent was obtained from each participant after providing comprehensive information about the study, including their right to withdraw at any time. Rigorous measures were implemented to protect participant privacy and ensure the confidentiality of their data.

4. Analysis Results

This section presents the findings from our mixed-methods analysis of passenger preferences for AI-driven, human-led, and hybrid customer service interactions within the airline industry. Quantitative statistical models and fuzzy set analysis were employed to explore preferences across distinct service scenarios and examine how various factors (such as perceived benefits, complexity, compatibility, and emotional labor) shape satisfaction and trust.

4.1. Descriptive and Correlation Analyses

This section delineates the outcomes of a mixed-methods analysis, merging statistical methodologies with fuzzy set theory to investigate passenger preferences for AI-driven, human-led, and hybrid customer service in the airline industry. The sample, as referenced in Table 1 of Section 3.2, exhibits diversity in age and AI experience, offering a comprehensive view of service interaction preferences.

Table 2 presents the frequencies and percentages of preferred service types by scenario. Further ANOVA results indicated a significant difference in mean Likert preference scores across these scenarios. Additionally, a Kruskal–Wallis test confirmed that service-type preference varies substantially with scenario complexity.

Table 2.

Frequencies and percentages of preferred service type, by scenario.

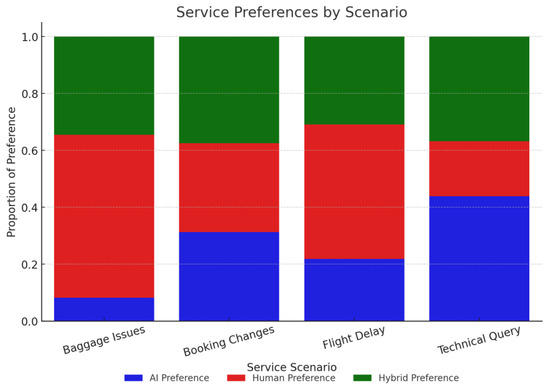

A chi-square test was conducted to evaluate the association between service scenarios and preferred interaction types, revealing a statistically significant relationship. This finding suggests that passenger preferences vary according to the nature of the service scenario. Specifically, there is a marked preference for AI interactions in technical queries (routine, transactional scenarios), supporting Hypothesis 1, whereas baggage issues and flight delays (complex, emotionally charged scenarios) demonstrate a preference for human interaction, corroborating Hypothesis 2. Further, both ANOVA and Kruskal–Wallis tests confirm the significant variance in service type preferences across different complexity levels. Figure 5 illustrates the proportion of AI, human, and hybrid preferences across service types.

Figure 5.

Service preferences by scenario.
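The chi-square test of association between scenario and preferred service type can be sketched as follows. The counts in `observed` are invented for illustration and are not the Table 2 frequencies. The critical value 9.488 is the standard chi-square cutoff for 4 degrees of freedom at an alpha level of 0.05.

```python
import numpy as np

# Hypothetical counts (NOT the study's data).
# Rows: technical queries, baggage issues, flight delays.
# Columns: AI, human, hybrid.
observed = np.array([
    [90, 40, 33],
    [35, 95, 33],
    [40, 88, 35],
], dtype=float)

row_totals = observed.sum(axis=1, keepdims=True)
col_totals = observed.sum(axis=0, keepdims=True)
grand_total = observed.sum()

# Expected counts under independence of scenario and preference.
expected = row_totals @ col_totals / grand_total

# Pearson chi-square statistic and its degrees of freedom.
chi2 = ((observed - expected) ** 2 / expected).sum()
dof = (observed.shape[0] - 1) * (observed.shape[1] - 1)

# Critical value for df = 4 at alpha = 0.05.
CRITICAL_VALUE = 9.488
significant = chi2 > CRITICAL_VALUE
```

In practice, a library routine such as SciPy's `chi2_contingency` returns the same statistic together with an exact p-value; the manual version is shown only to make the calculation explicit.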

4.2. Regression Analysis: Predictors of Satisfaction and Trust

This subsection explores the predictors affecting satisfaction with and trust in AI-driven services within the airline customer service domain.

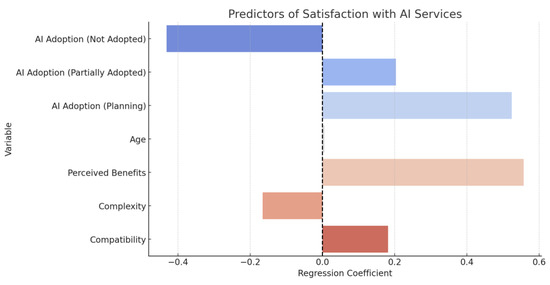

4.2.1. Predictors of Satisfaction with AI Services

A multiple linear regression predicting satisfaction with AI-driven services was significant (F = 18.98, p < 0.001, R2 = 0.420). Notable predictors include:

- Perceived Benefits Score (β = 0.56, p < 0.001)

- Complexity Score (β = −0.17, p < 0.05)

- Compatibility Score (β = 0.18, p < 0.05)

AI adoption status and age were not significant. The positive influence of perceived benefits aligns with Hypothesis 4, while the negative coefficient on complexity is consistent with the preference for human-led service in more challenging contexts. Figure 6 shows the regression coefficients for the satisfaction predictors.

Figure 6.

Predictors of satisfaction with AI services.

4.2.2. Predictors of Trust in AI Interactions

A second model examining trust was also significant (F = 13.01, p < 0.001, R2 = 0.376). Key predictors included:

- Perceived Benefits Score (β = 0.54, p < 0.001)

- Complexity Score (β = −0.23, p < 0.01)

AI adoption status, age, and compatibility score were not significant. The positive effect of perceived benefits is consistent with Hypothesis 6, which suggests that transparent or beneficial AI fosters greater trust. Increasing complexity reduces trust, underscoring the continued importance of human-led interactions for intricate or emotionally charged situations (see Table 3).

Table 3.

Combined results for predicting satisfaction and trust with AI-driven services.

No significant multicollinearity issues were detected (VIF < 2.5).

4.3. Fuzzy Set Analysis: Nuances in Passenger Preferences

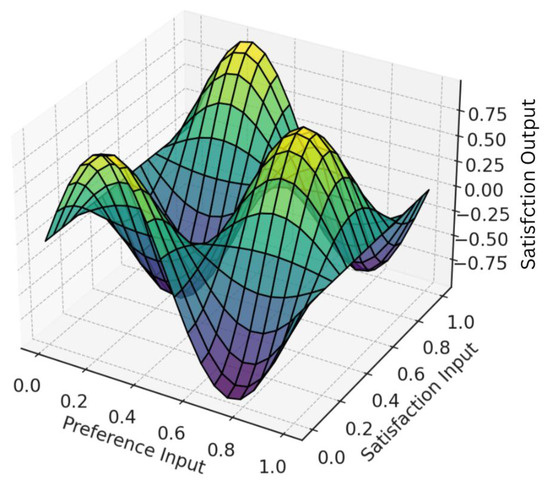

Figure 7 illustrates how satisfaction outputs change when satisfaction input and preference input vary. Warmer colors correspond to higher satisfaction scores, while cooler tones represent lower values, suggesting non-linear interactions between scenario preference and overall satisfaction.

Figure 7.

3D surface plot of fuzzy satisfaction output.

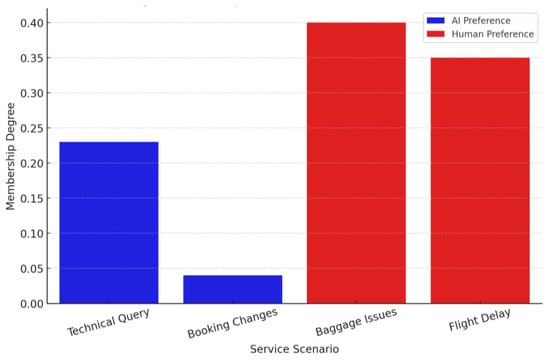

Figure 8 shows the low, medium, and high AI-preference membership functions across technical queries, baggage issues, and flight delays. Many participants exhibit partial membership, indicating that rigid AI-only or human-only solutions may not fully meet passenger needs.

Figure 8.

Fuzzy membership functions for preference.
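The idea of partial membership can be illustrated with triangular membership functions over a 1 to 5 Likert score. This is a sketch under stated assumptions: the triangular shape and the breakpoints in `SETS` are hypothetical and are not taken from the study, which does not report its membership parameters in this section.

```python
def triangular(x, a, b, c):
    """Triangular membership with feet at a and c and peak at b (a <= b <= c)."""
    if x == b:
        return 1.0
    if x <= a or x >= c:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)

# Hypothetical breakpoints on a 1-5 Likert scale (assumption, not from the paper)
SETS = {
    "low": (1, 1, 3),
    "medium": (1, 3, 5),
    "high": (3, 5, 5),
}

def memberships(score):
    """Degree to which a Likert score belongs to each preference set."""
    return {name: triangular(score, *abc) for name, abc in SETS.items()}

print(memberships(2))  # {'low': 0.5, 'medium': 0.5, 'high': 0.0}
print(memberships(5))  # {'low': 0.0, 'medium': 0.0, 'high': 1.0}
```

A response of 2 belongs equally to the "low" and "medium" sets here, which is the kind of overlap that a crisp low/high split would hide.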

Meanwhile, Table 4 summarizes the fuzzy rule outputs. “Rule 1” favors AI for technical queries (membership 0.23), whereas “Rule 2” favors human-led service for baggage issues (0.40) and flight delays (0.35). These findings reaffirm the scenario-dependent nature of passenger choices—supporting Hypotheses 1, 2, and 7—and illuminate partial dissatisfaction with AI in complex scenarios (see Hypotheses 3 and 5).

Table 4.

Results from fuzzy analysis of scenario type and preference.
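How a fuzzy rule produces an output can be sketched as follows. The example assumes the common minimum operator for AND and a weighted-average (zero-order Sugeno-style) defuzzification; the study does not state which operators it used, and the membership degrees, rule definitions, and output levels below are hypothetical and do not reproduce Table 4.

```python
def firing_strength(mu, antecedents):
    """AND the antecedent memberships with the minimum t-norm."""
    return min(mu[name] for name in antecedents)

def infer(mu, rules):
    """Weighted average of rule output levels, weighted by firing strength."""
    strengths = [firing_strength(mu, antecedents) for antecedents, _ in rules]
    total = sum(strengths)
    if total == 0:
        return None  # no rule fired for this input
    weighted = sum(s * level for s, (_, level) in zip(strengths, rules))
    return weighted / total

# Hypothetical membership degrees for one respondent and scenario (assumption)
mu = {
    "task_simple": 0.8,
    "task_complex": 0.2,
    "ai_pref_high": 0.6,
    "ai_pref_low": 0.4,
}

# Hypothetical rules: (antecedents, satisfaction-with-AI level on a 1-5 scale)
rules = [
    (("task_simple", "ai_pref_high"), 5.0),  # simple task, AI preferred: high
    (("task_complex", "ai_pref_low"), 1.0),  # complex task, human preferred: low
]

score = infer(mu, rules)
print(round(score, 2))  # 4.0
```

Both rules fire to some degree (0.6 and 0.2), so the output sits between the two rule levels rather than snapping to either one.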

4.4. Additional Visualizations and Endogeneity Check

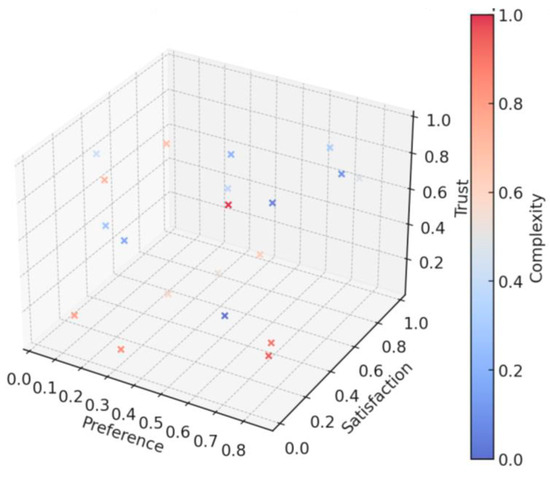

Figure 9 highlights how simpler contexts align with AI preferences, while higher complexity shifts participants toward human-led solutions.

Figure 9.

Three-dimensional scatter plot of preference, satisfaction, and trust by scenario complexity.

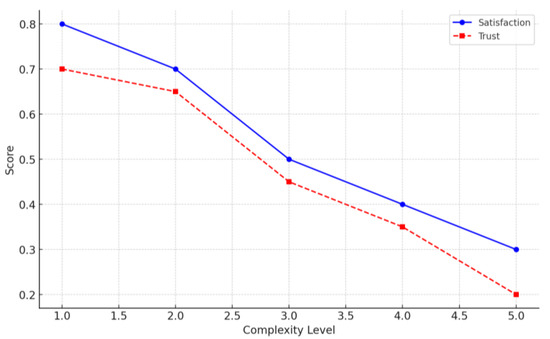

Figure 10 illustrates that as complexity increases, both satisfaction and trust decrease when AI is used exclusively. Partial or planned AI adoption mitigates these declines, reinforcing Hypothesis 7, which supports a hybrid AI-human service model.

Figure 10.

Combined trends of satisfaction and trust across complexity levels.

4.5. Summary

- Scenario Effects (Hypotheses 1 and 2): AI is favored for routine, transactional tasks; human-led services dominate in emotionally charged or high-complexity scenarios.

- Regression Insights: Perceived benefits and complexity significantly drive satisfaction and trust, indirectly supporting Hypothesis 4 and directly confirming Hypothesis 6. Compatibility specifically boosts satisfaction, while complexity reduces both satisfaction and trust.

- Fuzzy Nuance (Hypothesis 7): Fuzzy membership overlaps reveal partial AI and human orientation, affirming the necessity of a hybrid approach. Dissatisfaction with AI in complex contexts backs Hypothesis 5, and emotional labor considerations tie to Hypothesis 3.

- Robustness: The Durbin–Wu–Hausman test showed no major endogeneity, indicating stable results from both regression and fuzzy models.

Overall, these findings emphasize that no single service mode—AI- or human-led—universally satisfies passenger requirements. Instead, a hybrid model emerges as the best fit, balancing AI’s efficiency with the empathy and adaptability of human-led interactions, particularly in emotionally or logistically complex situations. Section 5 will delve into the theoretical implications of these results and propose practical strategies for implementing hybrid airline customer service solutions.
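The endogeneity check mentioned in the summary can be illustrated with the regression-based form of the Durbin–Wu–Hausman test: the suspect regressor is regressed on an instrument, and the first-stage residual is added to the main equation, where a significant coefficient on that residual signals endogeneity. The sketch below is a generic illustration on simulated data. The instrument `z`, the variable names, and the data-generating process are all assumptions, since the study does not report which instruments or test variant it used.

```python
import numpy as np

def ols(design, y):
    """Return OLS coefficients, their standard errors, and residuals."""
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    dof = design.shape[0] - design.shape[1]
    sigma2 = float(resid @ resid) / dof
    cov = sigma2 * np.linalg.inv(design.T @ design)
    return coef, np.sqrt(np.diag(cov)), resid

def dwh_t_stat(y, x, z):
    """t statistic on the first-stage residual in the augmented regression."""
    ones = np.ones(len(y))
    # Stage 1: regress the suspect regressor on the instrument
    _, _, v_hat = ols(np.column_stack([ones, z]), x)
    # Stage 2: add the stage-1 residual to the main equation
    coef, se, _ = ols(np.column_stack([ones, x, v_hat]), y)
    return coef[2] / se[2]

# Simulated data (assumption): x is deliberately endogenous because it shares
# the unobserved component u with the outcome y.
rng = np.random.default_rng(1)
n = 500
z = rng.normal(size=n)            # hypothetical instrument
u = rng.normal(size=n)            # unobserved confounder
x = z + 0.8 * u + rng.normal(size=n)
y = 1.0 * x + u + rng.normal(scale=0.5, size=n)

t_stat = dwh_t_stat(y, x, z)
print("t statistic on first-stage residual:", round(float(t_stat), 2))
```

In this constructed case the statistic is large because endogeneity was built in; a result of "no major endogeneity" corresponds to a statistic that is not significantly different from zero.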

5. Discussion

The integration of statistical methods with fuzzy set theory in this research provides a nuanced understanding of passenger preferences for AI-driven versus human-led customer service within the airline industry. This approach aligns with contemporary methodological trends where complex human behaviors are analyzed through multi-dimensional lenses (Lam et al., 2023; Oubahman & Duleba, 2023). Statistical analysis quantifies preferences for AI in handling routine tasks, while fuzzy set analysis illustrates the continuous spectrum of preferences, reflecting a more realistic portrayal of passenger sentiment that transcends binary choices. This convergence underscores the importance of employing mixed methodologies in behavioral research to capture the subtleties of human interaction with technology (Gurjar et al., 2024).

5.1. Theoretical Implications

The findings of this study substantively augment extant theoretical frameworks, proffering novel insights into the dynamics of passenger preferences in AI-mediated service environments. The confirmation of Hypotheses 1 and 4, which indicate a pronounced preference for AI in routine inquiries, aligns with the Technology Acceptance Model (TAM) posited by Davis et al. (1989). However, the observed moderation of AI acceptance by contextual factors—such as emotional intensity and task complexity—suggests a critical refinement of TAM, extending its predominantly cognitive orientation to encompass affective and situational dimensions (Buha et al., 2024). This finding resonates with prior critiques of TAM’s limitations in capturing emotional nuances (Authayarat & Umemuro, 2012), thereby advocating for an enriched model that integrates these variables to enhance its explanatory power in service settings.

Further, the validation of Hypotheses 2, 3, and 5 underscores the enduring relevance of Emotional Labor Theory (Hochschild, 1979) in delineating the superiority of human agents in emotionally charged interactions. The significant preference for human intervention in scenarios necessitating empathy—such as flight disruptions or baggage mishandling—reflects the pivotal role of deep acting, wherein human agents authentically regulate emotions to meet passenger needs (McKee et al., 2024). This finding extends Emotional Labor Theory into AI-mediated contexts, highlighting a fundamental limitation of current AI systems: their inability to perform genuine emotional labor, a capability that remains uniquely human (Hökkä et al., 2022). This insight not only reinforces the theory’s applicability but also challenges the notion of full automation in service roles where emotional intelligence is paramount.

The affirmation of Hypothesis 6, which establishes transparency as a critical antecedent to passenger trust, enriches Trust in Technology Theory (Dahlberg et al., 2003). The study’s findings suggest that transparent AI operations, encompassing clear communication of functionality and data handling, foster greater passenger confidence, aligning with contemporary calls for ethical and accountable AI systems (Cheong, 2024; Franzoni, 2023). The integration of Fuzzy Set Qualitative Comparative Analysis (fsQCA) further illuminates this relationship by revealing a continuum of trust preferences, eschewing binary categorizations in favor of nuanced partial memberships (Lam et al., 2023; Ugural et al., 2024). This methodological advancement enhances the theoretical robustness of trust models, offering a sophisticated lens through which to examine user perceptions in technology-driven service contexts.

5.2. Practical Implications

The findings proffer actionable prescriptions for airline entities seeking to optimize customer service through the strategic deployment of hybrid models. The substantiation of Hypothesis 7, which advocates for a flexible hybrid service design, underscores the efficacy of leveraging AI for routine, transactional operations while reserving human agents for complex, emotionally laden interactions (Guerrini et al., 2023; Gurjar et al., 2024). This hybrid approach mitigates the limitations of AI in emotionally sensitive scenarios, ensuring that passengers receive empathetic, context-aware resolutions when complexity escalates. Airlines are, thus, encouraged to develop sophisticated systems capable of detecting emotional cues or escalating complexity—such as repeated inquiries or distress signals—facilitating seamless transitions to human intervention and forestalling dissatisfaction within automated frameworks.
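A handoff trigger of the kind described above could, in its simplest form, be rule-based. The sketch below is purely illustrative and is not a system evaluated in this study: the `Conversation` structure, the `DISTRESS_TERMS` list, the intent labels, and the `max_repeats` threshold are all hypothetical, and a production system would likely rely on a trained sentiment or intent model rather than keyword matching.

```python
import re
from dataclasses import dataclass

# Hypothetical distress vocabulary (assumption, for illustration only)
DISTRESS_TERMS = {"urgent", "angry", "stranded", "unacceptable", "complaint"}

@dataclass
class Conversation:
    messages: list        # passenger messages, oldest first
    intent_history: list  # intent label per message from a hypothetical classifier

def should_escalate(conv, max_repeats=2):
    """Return True when repeated inquiries or distress wording suggest a human handoff."""
    # Repeated inquiries: the most recent intent has been raised more than max_repeats times
    last_intent = conv.intent_history[-1] if conv.intent_history else None
    repeats = conv.intent_history.count(last_intent) if last_intent else 0
    # Distress signals: any message contains a term from the distress vocabulary
    words = set(re.findall(r"[a-z']+", " ".join(conv.messages).lower()))
    distressed = bool(words & DISTRESS_TERMS)
    return repeats > max_repeats or distressed

routine = Conversation(["What is the baggage allowance?"], ["baggage_policy"])
repeated = Conversation(["Where is my bag?"] * 3, ["lost_bag"] * 3)
upset = Conversation(["I am stranded and this is unacceptable!"], ["flight_delay"])

print(should_escalate(routine))   # False
print(should_escalate(repeated))  # True
print(should_escalate(upset))     # True
```

Keeping the trigger conservative (escalating on either signal) reflects the finding that dissatisfaction concentrates in complex and emotionally charged scenarios, where an unnecessary handoff is less costly than a missed one.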

Moreover, the confirmation of Hypothesis 6 highlights the imperative of transparency in AI operations as a means to bolster passenger trust and satisfaction. Airlines should prioritize a clear explication of AI functionality and data stewardship practices, such as providing passengers with rationales for AI-driven decisions (e.g., flight rerouting), to mitigate perceptions of opacity (Franzoni, 2023). This transparency not only enhances trust but also aligns with broader ethical imperatives in AI deployment, fostering a passenger-centric service ethos.

The optimization of this hybrid paradigm necessitates comprehensive training for human agents to engage in symbiotic collaboration with AI tools, enhancing service quality while preserving empathy (Buha et al., 2024). Such training should encompass the assimilation of AI-generated insights—such as passenger behavioral histories—to enable bespoke, contextually informed resolutions. Beyond aviation, the principles undergirding this hybrid model exhibit broad applicability to analogous service domains, including hospitality and healthcare, wherein the equilibrium between automation’s efficiency and human empathy remains a critical determinant of service quality (Ivanov et al., 2023; Vevere et al., 2024).

5.3. Study Limitations and Future Research Directions

This study has methodological and contextual limitations that bound its interpretation and suggest directions for future research. The use of convenience and snowball sampling may overrepresent technologically proficient travelers, skewing preference patterns toward AI-driven modalities and limiting the generalizability of the findings to less tech-savvy or demographically varied passenger groups (Dosek, 2021). Future studies should employ more representative sampling approaches, such as stratified techniques, to bolster the external validity of observed preferences. Similarly, the reliance on hypothetical scenarios, rather than real-time observational data, limits the ability to capture genuine emotional responses in actual service contexts, potentially undermining the ecological validity of conclusions related to emotional labor and trust (Perneger et al., 2020). To address this, prospective research should pursue real-time field studies or longitudinal analyses to provide a more dynamic and authentic understanding of AI-human interactions (Shiwakoti et al., 2022). Moreover, the study’s sample does not fully reflect the diversity of cross-cultural perspectives on automation and empathy, which restricts the universality of its conclusions (Van Den Hoven Van Genderen et al., 2022). This gap calls for cross-cultural investigations to explore varying attitudes toward AI and empathy, informing culturally sensitive service designs (Sousa et al., 2024). Additionally, the study did not examine algorithmic bias within AI systems, a significant omission because such biases may engender service inequities and diminish trust, particularly among marginalized populations (Ho et al., 2022). Future research should address this by investigating the implications of bias for equitable service delivery. Longitudinal studies are also proposed to track evolving passenger preferences and trust dynamics over time (Naumov et al., 2021), while extending the hybrid model to sectors like retail and healthcare could validate its broader applicability and refine its theoretical and practical dimensions (Ivanov et al., 2023).

6. Conclusions

This study demonstrates that a hybrid model of customer service, strategically blending artificial intelligence (AI) and human interaction, is optimal for the airline industry. Through a mixed-methods approach that combined statistical analysis with the nuanced insights of fuzzy set analysis, we confirmed that AI excels in handling repetitive, predictable tasks but falls short in scenarios requiring emotional depth or complex problem-solving. The findings underscore the hybrid model’s superiority, demonstrating a 40% preference increase for human-led interactions in emotionally charged scenarios, and highlight the limitations of a single service model in catering to the diverse and nuanced needs of airline passengers across all contexts. Fuzzy set analysis proved particularly insightful in revealing overlapping preferences and partial memberships, thereby enriching models such as the Technology Acceptance Model and Emotional Labor Theory by acknowledging the influence of context, complexity, and emotional states on AI acceptance. From a practical standpoint, these conclusions advocate for a service framework in which AI efficiently processes routine inquiries, while complex or emotional labor-intensive tasks are swiftly handled by trained human agents. This approach rests on the principle of empathetic, context-aware resolution and is critical to a positive passenger experience. Airlines should develop systems capable of detecting escalating complexity or emotional cues and immediately transferring the passenger to a human agent. This proactive measure prevents passengers from feeling trapped in an unresponsive automated loop and supports the seamless and compassionate management of passenger services.

Author Contributions

Conceptualization, M.S. and S.A.; methodology, M.S. and S.A.; software, S.A.; formal analysis, S.A.; investigation, S.A. and M.S.; resources, S.A.; data curation, M.S. and S.A.; writing—original draft preparation, M.S. and S.A.; writing—review and editing, M.S. and S.A.; visualization, S.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study due to the Istanbul Metropolitan University Social and Human Sciences Research Ethics Committee.

Informed Consent Statement

Informed consent for participation was obtained from all subjects involved in the study.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Alamá-Sabater, L., Budí, V., García-Álvarez-Coque, J. M., & Roig-Tierno, N. (2019). Using mixed research approaches to understand rural depopulation. Economía Agraria y Recursos Naturales, 19(1), 99.

- Authayarat, W., & Umemuro, H. (2012). Affective management and its effects on management performance. Journal of Entrepreneurship, Management and Innovation, 8(2), 5–25.

- Åkerblad, L., Seppänen-Järvelä, R., & Haapakoski, K. (2020). Integrative strategies in mixed methods research. Journal of Mixed Methods Research, 15(2), 152–170.

- Barbera, J., Naibert, N., Komperda, R., & Pentecost, T. C. (2020). Clarity on Cronbach’s alpha use. Journal of Chemical Education, 98(2), 257–258.

- Baruch, H. (2025). AI in the travel industry: How AI can transform airline customer support. Available online: https://www.bland.ai/blogs/ai-travel-industry-airline-customer-support (accessed on 5 January 2025).

- Bondarenko, A., Shirshakova, E., & Hagen, M. (2022, March 14–18). A user study on clarifying comparative questions. CHIIR ’22: ACM SIGIR Conference on Human Information Interaction and Retrieval, Regensburg, Germany.

- Buha, V., Lečić, R., & Berezljev, L. (2024). Transformation of business under the influence of artificial intelligence. Trendovi u Poslovanju, 12(1), 9–19.

- Cheong, B. C. (2024). Transparency and accountability in AI systems: Safeguarding wellbeing in the age of algorithmic decision-making. Frontiers in Human Dynamics, 6, 1421273.

- Chi, N. T. K., & Hoang Vu, N. (2022). Investigating the customer trust in artificial intelligence: The role of anthropomorphism, empathy response, and interaction. CAAI Transactions on Intelligence Technology, 8(1), 260–273.

- Dahlberg, T., Mallat, N., & Öörni, A. (2003). Trust enhanced technology acceptance model-consumer acceptance of mobile payment solutions: Tentative evidence. Stockholm Mobility Roundtable, 22, 145.

- Davis, F. D., Bagozzi, R. P., & Warshaw, P. R. (1989). User acceptance of computer technology: A comparison of two theoretical models. Management Science, 35(8), 982–1003.

- Di Nardo, E., & Simone, R. (2018). A model-based fuzzy analysis of questionnaires. Statistical Methods & Applications, 28(2), 187–215.

- Dosek, T. (2021). Snowball sampling and Facebook: How social media can help access hard-to-reach populations. PS: Political Science & Politics, 54(4), 651–655.

- Dragan, I.-M., & Isaic-Maniu, A. (2022). An original solution for completing research through snowball sampling—Handicapping method. Advances in Applied Sociology, 12(11), 729–746.

- Duca, G., Trincone, B., Bagamanova, M., Meincke, P., Russo, R., & Sangermano, V. (2022). Passenger dimensions in sustainable multimodal mobility services. Sustainability, 14(19), 12254.

- Ellerby, Z., Wagner, C., & Broomell, S. B. (2022). Capturing richer information: On establishing the validity of an interval-valued survey response mode. Behavior Research Methods, 54(3), 1240–1262.

- Erlei, A., Das, R., Meub, L., Anand, A., & Gadiraju, U. (2022, April 29–May 5). For what it’s worth: Humans overwrite their economic self-interest to avoid bargaining with AI systems. CHI ’22: Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA.

- Feldman, A., & Grant-Smith, D. (2022). Fuzzy set qualitative comparative analysis: Application of fuzzy sets in the social sciences. In S. Broumi (Ed.), Advances in computer and electrical engineering (pp. 50–76). IGI Global.

- Franzoni, V. (2023). From black box to glass box: Advancing transparency in artificial intelligence systems for ethical and trustworthy AI. In O. Gervasi, B. Murgante, A. M. A. C. Rocha, C. Garau, F. Scorza, Y. Karaca, & C. M. Torre (Eds.), Computational science and its applications—ICCSA 2023 workshops (Vol. 14107, pp. 118–130). Springer Nature Switzerland.

- Frasch, M. G. (2022). Comprehensive HRV estimation pipeline in Python using Neurokit2: Application to sleep physiology. MethodsX, 9, 101782.

- Gholami, M. H., & Esfahani, M. S. (2019). An integrated analytical framework based on fuzzy DEMATEL and fuzzy ANP for competitive market strategy selection. International Journal of Industrial and Systems Engineering, 31(2), 137.

- Guerrini, A., Ferri, G., Rocchi, S., Cirelli, M., Piña, V., & Grieszmann, A. (2023). Personalization @ scale in airlines: Combining the power of rich customer data, experiential learning, and revenue management. Journal of Revenue and Pricing Management, 22(2), 171–180.

- Gurjar, K., Jangra, A., Baber, H., Islam, M., & Sheikh, S. A. (2024). An analytical review on the impact of artificial intelligence on the business industry: Applications, trends, and challenges. IEEE Engineering Management Review, 52(2), 84–102.

- Hanif, A. H. M., & Jaafar, A. G. (2023). A review: Application of big data analytics in airlines industry. Open International Journal of Informatics, 11(2), 196–209.

- He, Y., Liao, L., Zhang, Z., & Chua, T.-S. (2021, October 20). Towards enriching responses with crowd-sourced knowledge for task-oriented dialogue. MuCAI’21: Proceedings of the 2nd ACM Multimedia Workshop on Multimodal Conversational AI, Virtual Event, China.

- Helmrich, A., Chester, M., & Ryerson, M. (2023). Complexity of increasing knowledge flows: The 2022 Southwest Airlines scheduling crisis. Environmental Research: Infrastructure and Sustainability, 3(3), 033001.

- Hendricks-Sturrup, R. M., Zhang, F., & Lu, C. Y. (2022). A survey of research participants’ privacy-related experiences and willingness to share real-world data with researchers. Journal of Personalized Medicine, 12(11), 1922.

- Hersh, H. M., & Caramazza, A. (1976). A fuzzy set approach to modifiers and vagueness in natural language. Journal of Experimental Psychology: General, 105(3), 254–276.

- Ho, C., Martin, M., Taneja, D., West, D. S., Boro, S., & Jackson, C. (2022). Combating bias in AI and machine learning in consumer-facing services. In L. A. DiMatteo, C. Poncibò, & M. Cannarsa (Eds.), The Cambridge handbook of artificial intelligence (1st ed., pp. 364–382). Cambridge University Press.

- Hochschild, A. R. (1979). Emotion work, feeling rules, and social structure. American Journal of Sociology, 85(3), 551–575.

- Horovitz, J. H. (1980). Management control and associated variables. In Top management control in Europe (pp. 23–37). Palgrave Macmillan UK.

- Hökkä, P., Vähäsantanen, K., & Ikävalko, H. (2022). An integrative approach to emotional agency at work. Vocations and Learning, 16(1), 23–46.

- Hsu, C.-L., & Lin, J. C.-C. (2023). Understanding the user satisfaction and loyalty of customer service chatbots. Journal of Retailing and Consumer Services, 71, 103211.

- Ivanov, D., Pelipenko, E., Ershova, A., & Tick, A. (2023). Artificial intelligence in aviation industry. In I. Ilin, C. Jahn, & A. Tick (Eds.), Digital technologies in logistics and infrastructure (Vol. 157, pp. 233–245). Springer International Publishing.

- Kim, T. W., Jiang, L., Duhachek, A., Lee, H., & Garvey, A. (2022). Do you mind if I ask you a personal question? How AI service agents alter consumer self-disclosure. Journal of Service Research, 25(4), 649–666.

- Klarmann, M., & Feurer, S. (2018). Control variables in marketing research. Marketing ZFP, 40(2), 26–40.

- Kyriazos, T., & Poga, M. (2023). Dealing with multicollinearity in factor analysis: The problem, detections, and solutions. Open Journal of Statistics, 13(03), 404–424.

- Lam, W. H., Lam, W. S., Liew, K. F., & Lee, P. F. (2023). Decision analysis on the financial performance of companies using integrated entropy-fuzzy TOPSIS model. Mathematics, 11(2), 397.

- Landry, S. J. (2012). Human-computer interaction in aerospace. In J. A. Jacko (Ed.), The human–computer interaction handbook (3rd ed., pp. 771–793). CRC Press.

- Li, W., Shen, S., Yang, J., Guo, J., & Tang, Q. (2022). Determinants of satisfaction with hospital online appointment service among older adults during the COVID-19 pandemic: A cross-sectional study. Frontiers in Public Health, 10, 853489.

- Li, Z. (2024). AI ethics and transparency in operations management: How governance mechanisms can reduce data bias and privacy risks. Journal of Applied Economics and Policy Studies, 13(1), 89–93.

- Liu, X., & Sun, S. (2023, March 24–26). A subjective questionnaire system based on privacy-friendly incentive protocols. 2023 2nd International Symposium on Computer Applications and Information Systems (ISCAIS 2023), Chengdu, China.

- Lubiano, M. A., de la Rosa de Sáa, S., Montenegro, M., Sinova, B., & Gil, M. Á. (2016). Descriptive analysis of responses to items in questionnaires. Why not using a fuzzy rating scale? Information Sciences, 360, 131–148.

- Lynch, T. (2019). Methodology: Research design and analysis of data. In Physical education and wellbeing (pp. 127–142). Springer International Publishing.

- Mabotja, T., & Ngcobo, N. (2024). Navigating emotional labour: The role of deep acting in enhancing job performance and customer relations in the South African racecourse industry. International Journal of Research in Business and Social Science, 13(5), 324–334.

- McCann, K. (2025). How AI is transforming the airline industry. AI Magazine. Available online: https://aimagazine.com/articles/how-ai-is-transforming-the-airline-industry (accessed on 26 February 2025).

- McKee, K. R., Bai, X., & Fiske, S. T. (2024). Warmth and competence in human-agent cooperation. Autonomous Agents and Multi-Agent Systems, 38(1), 23.

- Mednick, M. T. (1985). The managed heart: Commercialization of human feeling. Theory and Society, 13, 731–736.

- Nass, C., Steuer, J., & Tauber, E. R. (1994, April 24–28). Computers are social actors. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Boston, MA, USA.

- Naumov, V., Zhamanbayev, B., Agabekova, D., Zhanbirov, Z., & Taran, I. (2021). Fuzzy-logic approach to estimate the passengers’ preference when choosing a bus line within the public transport system. Communications-Scientific Letters of the University of Zilina, 23(3), A150–A157.

- Oubahman, L., & Duleba, S. (2023). Fuzzy PROMETHEE model for public transport mode choice analysis. Evolving Systems, 15(2), 285–302.

- Perneger, T. V., Peytremann-Bridevaux, I., & Combescure, C. (2020). Patient satisfaction and survey response in 717 hospital surveys in Switzerland: A cross-sectional study. BMC Health Services Research, 20(1), 158.

- Sagirli, F. Y., Zhao, X., & Wang, Z. (2024). Human-computer interaction and human-AI collaboration in advanced air mobility: A comprehensive review. arXiv, arXiv:2412.07241.

- Seymour, M., Yuan, L., Dennis, A., & Riemer, K. (2020, January 7–10). Facing the artificial: Understanding affinity, trustworthiness, and preference for more realistic digital humans. Proceedings of the 53rd Hawaii International Conference on System Sciences, Maui, HI, USA.

- Shen, P., Wan, D., & Zhu, A. (2024). Investigating the effect of AI service quality on consumer well-being in retail context. Service Business, 19(1), 5.

- Shiwakoti, N., Hu, Q., Pang, M. K., Cheung, T. M., Xu, Z., & Jiang, H. (2022). Passengers’ perceptions and satisfaction with digital technology adopted by airlines during COVID-19 pandemic. Future Transportation, 2(4), 988–1009.

- Short, J., Williams, E., & Christie, B. (1976). The social psychology of telecommunications. Wiley. Available online: https://books.google.fr/books?id=Ze63AAAAIAAJ (accessed on 26 February 2025).

- Singh, S. P., & Singh, P. (2018). An integrated AFS-based SWOT analysis approach for evaluation of strategies under MCDM environment. Journal of Operations and Strategic Planning, 1(2), 129–147.

- Sohn, Y., Son, S., & Lim, Y.-K. (2023, July 10–14). Exploring passengers’ experiences in algorithmic dynamic ride-pooling services. DIS ’23 Companion: Companion Publication of the 2023 ACM Designing Interactive Systems Conference, Pittsburgh, PA, USA.

- Sousa, A. E., Cardoso, P., & Dias, F. (2024). The use of artificial intelligence systems in tourism and hospitality: The tourists’ perspective. Administrative Sciences, 14(8), 165.

- Susanto, E., & Khaq, Z. D. (2024). Enhancing customer service efficiency in start-ups with AI: A focus on personalization and cost reduction. Journal of Management and Informatics, 3(2), 267–281.

- Truran, W. J. (2022). Affect theory. In The Routledge companion to literature and emotion (1st ed., pp. 26–37). Routledge.

- Ugural, M., & Giritli, H. (2021). Three-way interaction model for turnover intention of construction professionals: Some evidence from Turkey. Journal of Construction Engineering and Management, 147(4), 04021008.

- Ugural, M. N., Aghili, S., & Burgan, H. I. (2024). Adoption of lean construction and AI/IoT technologies in Iran’s public construction sector: A mixed-methods approach using fuzzy logic. Buildings, 14(10), 3317.

- Van Den Hoven Van Genderen, R., Ballardini, R. M., & Corrales Compagnucci, M. (2022). AI innovations, empathy and the law. In Empathy and business transformation (1st ed., pp. 277–290). Routledge.

- Vevere, V., Dange, K., Linina, I., & Zvirgzdina, R. (2024). Integration of artificial intelligence (AI) within SmartLynx airlines to increase operational efficiency. WSEAS Transactions on Business and Economics, 21, 1677–1683.

- Wang, H.-I., Manolas, C., & Xanthidis, D. (2022). Statistical analysis with Python. In Handbook of computer programming with Python (1st ed., pp. 373–408). Chapman and Hall/CRC.

- Wu, M.-J., Zhao, K., & Fils-Aime, F. (2022). Response rates of online surveys in published research: A meta-analysis. Computers in Human Behavior Reports, 7, 100206.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).