AI-Driven Privacy Trade-Offs in Digital News Content: Consumer Perception of Personalized Advertising and Dynamic Paywall

Abstract

1. Introduction

2. Research Objective

2.1. Research Questions

- RQ1. How does propensity to value privacy affect perceived AI privacy risk, control, and concern?

- RQ2. How do perceived risk and perceived control affect privacy concern?

- RQ3. How do privacy concerns affect consumers’ intention to disclose personal information?

- RQ4. How does perceived benefit influence consumers’ intention to disclose personal information?

2.2. Hypotheses Development

3. Methods

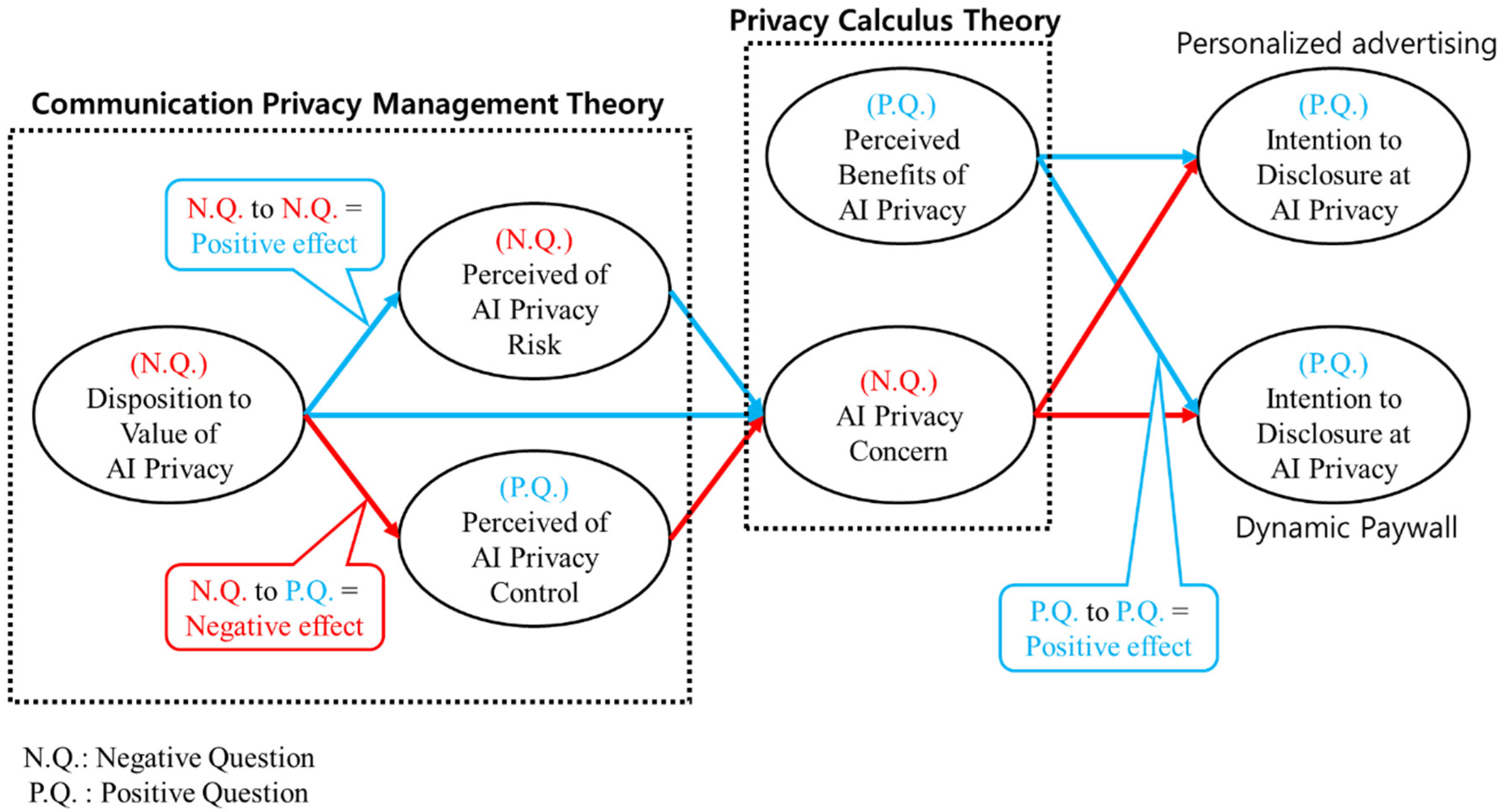

3.1. Theoretical Frameworks

3.2. Research Model

3.3. Sampling

3.4. Survey Development

3.5. Validation of Survey Responses

4. Analyses and Results

4.1. Description: Prefer to Personalized Advertising

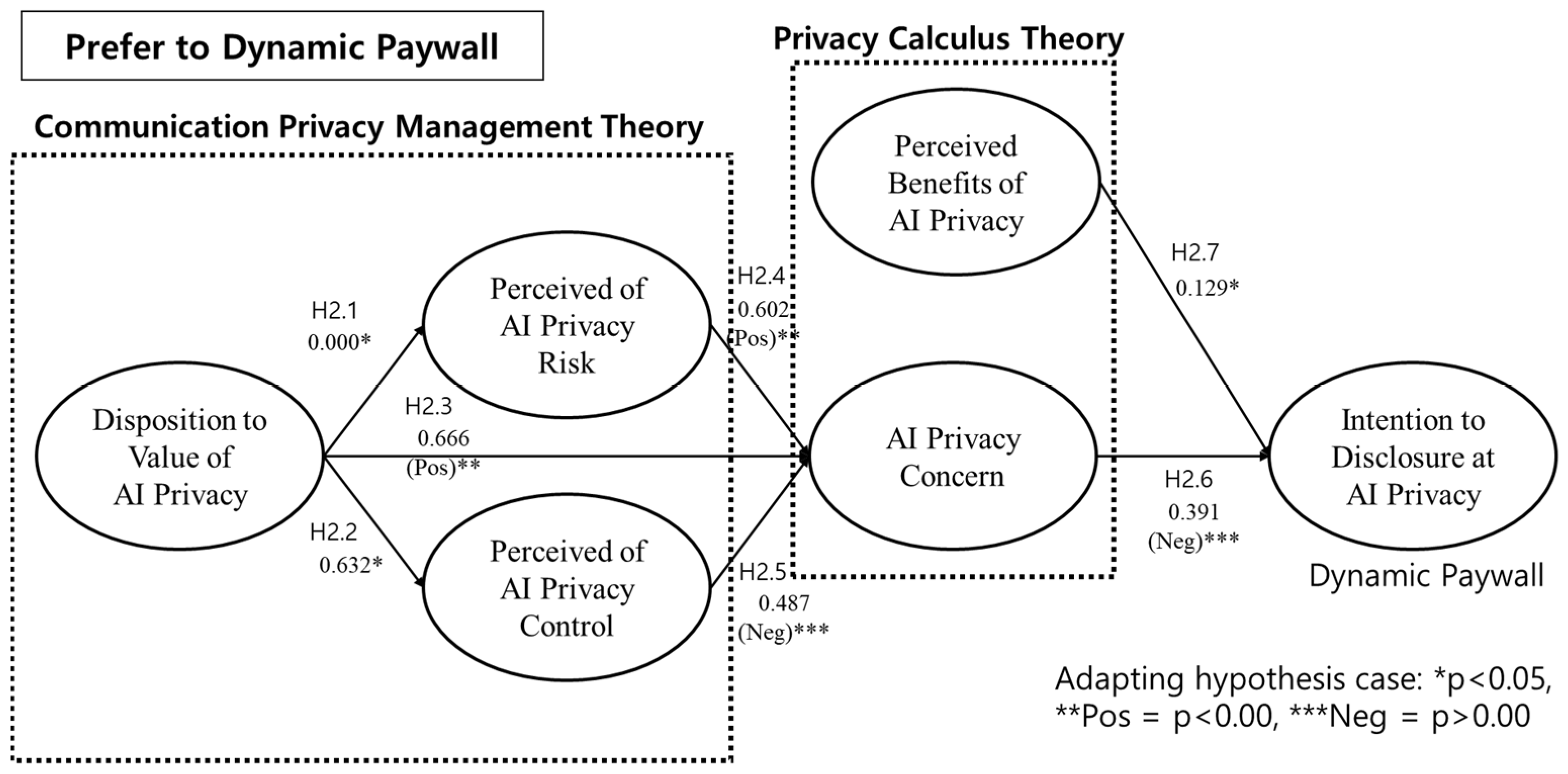

4.2. Description: Prefer to Dynamic Paywall

4.3. Result: Prefer to Personalized Advertising (Study 1)

- H1.1 (Disposition to Value Privacy → Perceived AI Privacy Risk)

- H1.2 (Disposition to Value Privacy → Perceived AI Privacy Control)

- H1.3 (Disposition to Value Privacy → AI Privacy Concerns)

- H1.4 (Perceived AI Privacy Risk → AI Privacy Concerns)

- H1.5 (Perceived AI Privacy Control → AI Privacy Concerns)

- H1.6 (AI Privacy Concerns → Intention to Disclose Information to AI)

- H1.7 (Perceived Benefits → Intention to Disclose Information to AI)

4.4. Result: Prefer to Dynamic Paywall (Study 2)

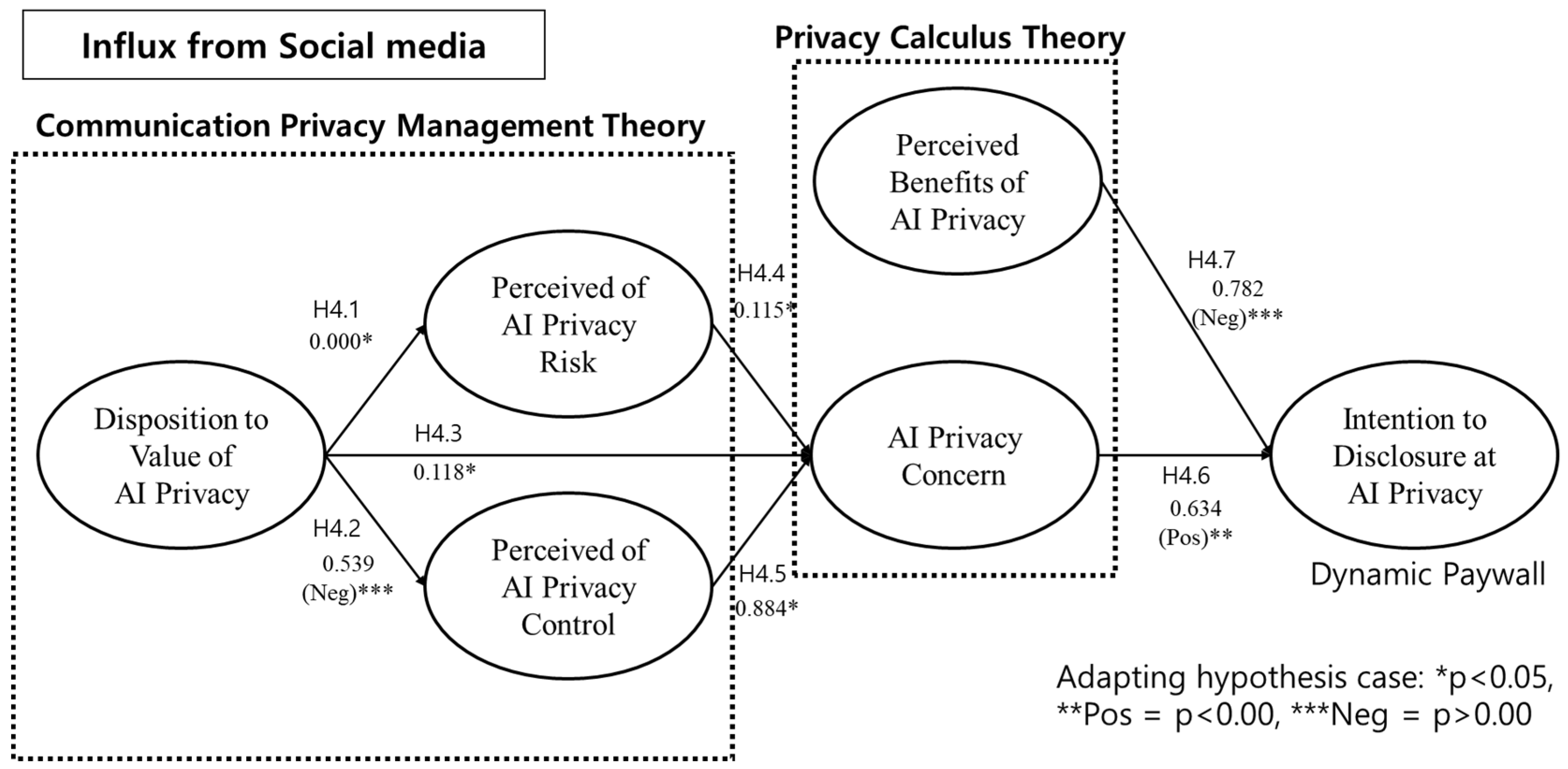

4.5. User Influx from Portal Service & Social Media

Analysis of Key Variables and Their Effects

- H3.1 (Disposition to Value Privacy → Perceived AI Privacy Risk)

- H3.2 (Disposition to Value Privacy → Perceived AI Privacy Control)

- H3.3 (Disposition to Value Privacy → AI Privacy Concerns)

- H3.6 (AI Privacy Concerns → Intention to Disclose Information to AI)

- H4.1 (Disposition to Value Privacy → Perceived AI Privacy Risk)

- H4.2 (Disposition to Value Privacy → Perceived AI Privacy Control)

- H4.6 (AI Privacy Concerns → Intention to Disclose Information to AI)

5. Discussion and Conclusions

5.1. Conclusions

5.1.1. Prefer to Personalized Advertising

5.1.2. Prefer to Dynamic Paywall

5.2. Academic and Theoretical Implications of the Results

5.3. Managerial Implications of Results

5.4. Limitations and Future Studies

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Acquisti, A., Brandimarte, L., & Loewenstein, G. (2015). Privacy and human behavior in the age of information. Science, 347(6221), 509–514.

- Awad, N. F., & Krishnan, M. S. (2006). The personalization privacy paradox: An empirical evaluation of information transparency and the willingness to be profiled online for personalization. MIS Quarterly, 30(1), 13–28.

- Belanche, D., Casaló, L. V., Flavián, C., & Pérez-Rueda, A. (2021). The role of customers in the gig economy: How perceptions of working conditions and service quality influence the use and recommendation of food delivery services. Service Business, 15(1), 45–75.

- Binns, R., Van Kleek, M., Veale, M., Lyngs, U., Zhao, J., & Shadbolt, N. (2018, April 21–26). ‘It’s reducing a human being to a percentage’: Perceptions of justice in algorithmic decisions. 2018 CHI Conference on Human Factors in Computing Systems (pp. 1–14), Montreal, QC, Canada.

- Chae, I., Ha, J., & Schweidel, D. A. (2022). Paywall suspensions and digital news subscriptions. Marketing Science, 42(4), 729–745.

- Criado, N., & Such, J. M. (2015). Implicit contextual integrity in online social networks. Information Sciences, 325, 48–69.

- Davoudi, H., An, A., Zihayat, M., & Edall, G. (2018, August 19–23). Adaptive paywall mechanism for digital news media. 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (pp. 205–214), London, UK.

- Dinev, T., & Hart, P. (2006). An extended privacy calculus model for E-commerce transactions. Information Systems Research, 17(1), 61–80.

- Eslami, M., Krishna, K., Sandvig, C., & Karahalios, K. (2018, April 21–26). Communicating algorithmic process in online behavioral advertising. 2018 CHI Conference on Human Factors in Computing Systems (pp. 1–13), Montreal, QC, Canada.

- Evans, D. S. (2009). The online advertising industry: Economics, evolution, and privacy. Journal of Economic Perspectives, 23(3), 37–60.

- Haynes, D., & Robinson, L. (2023). Delphi study of risk to individuals who disclose personal information online. Journal of Information Science, 49(1), 93–106.

- Hong, N., Lee, S., & Kim, S. (2023). Generational conflict in the digital journalism environment: Focusing on journalist interviews. Journal of Communication Science, 23(2), 109–155.

- Jaber, F., & Abbad, M. A. (2023). A realistic evaluation of the dark side of data in the digital ecosystem. Journal of Information Science, 51(3), 667–683.

- Kim, J., & Kim, J. (2017). A study on the internet user’s economic behavior of provision of personal information: Focused on the privacy calculus, CPM theory. The Journal of Information Systems, 26(1), 93–123.

- Lee, H. P., Yang, Y. J., Von Davier, T. S., Forlizzi, J., & Das, S. (2024, May 11–16). Deepfakes, phrenology, surveillance, and more! A taxonomy of AI privacy risks. CHI Conference on Human Factors in Computing Systems (pp. 1–19), Honolulu, HI, USA.

- Li, Y. (2012). Theories in online information privacy research: A critical review and an integrated framework. Decision Support Systems, 54(1), 471–481.

- Martin, K. D., & Murphy, P. E. (2017). The role of data privacy in marketing. Journal of the Academy of Marketing Science, 45(2), 135–155.

- Masur, P. K., & Ranzini, G. (2025). Privacy calculus, privacy paradox, and context collapse: A replication of three key studies in communication privacy research. Journal of Communication, jqaf007.

- Medoff, N. (2001). Just a click away: Advertising on the internet. Allyn and Bacon.

- Mittelstadt, B. D., Allo, P., Taddeo, M., Wachter, S., & Floridi, L. (2016). The ethics of algorithms: Mapping the debate. Big Data & Society, 3(2), 1–21.

- Morel, V., Karegar, F., & Santos, C. (2025). “I will never pay for this” Perception of fairness and factors affecting behaviour on ‘pay-or-ok’ models. arXiv, arXiv:2505.12892.

- Möller, J. E. (2024). Situational privacy: Theorizing privacy as communication and media practice. Communication Theory, 34(3), 130–142.

- Nemitz, P. (2018). Constitutional democracy and technology in the age of artificial intelligence. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 376(2133), 20180089.

- Petronio, S. (2002). Boundaries of privacy: Dialectics of disclosure. State University of New York Press.

- Richardson, V. J., Smith, R. E., & Watson, M. W. (2019). Much ado about nothing: The (lack of) economic impact of data privacy breaches. Journal of Information Systems, 33(3), 227–265.

- Sandvig, C., Hamilton, K., Karahalios, K., & Langbort, C. (2014). Auditing algorithms: Research methods for detecting discrimination on internet platforms. Data and Discrimination: Converting Critical Concerns into Productive Inquiry, 22, 4349–4357.

- Shin, D. (2021). The effects of explainability and causability on perception, trust, and acceptance: Implications for explainable AI. International Journal of Human-Computer Studies, 146, 102551.

- Sutanto, J., Palme, E., Tan, C. H., & Phang, C. W. (2013). Addressing the personalization-privacy paradox: An empirical assessment from a field experiment on smartphone users. MIS Quarterly, 37(4), 1141–1164.

- Taddicken, M. (2014). The ‘privacy paradox’ in the social web: The impact of privacy concerns, individual characteristics, and the perceived social relevance on different forms of self-disclosure. Journal of Computer-Mediated Communication, 19(2), 248–273.

- Trepte, S., Scharkow, M., & Dienlin, T. (2020). The privacy calculus contextualized: The influence of affordances. Computers in Human Behavior, 104, 106115.

- Wang, C., Zhou, B., & Joshi, Y. V. (2023). Endogenous consumption and metered paywalls. Marketing Science, 43(1), 158–177.

- Xu, Z., Thurman, N., Berhami, J., Strasser Ceballos, C., & Fehling, O. (2025). Converting online news visitors to subscribers: Exploring the effectiveness of paywall strategies using behavioural data. Journalism Studies, 26(4), 464–484.

- ZareRavasan, A. (2023). Boosting innovation performance through big data analytics: An empirical investigation on the role of firm agility. Journal of Information Science, 49(5), 1293–1308.

- Zuboff, S. (2019). The age of surveillance capitalism: The fight for a human future at the new frontier of power. PublicAffairs.

| Variables | Personalized Advertising | Dynamic Paywall |
|---|---|---|
| Common | Collection of personal information through AI | Collection of personal information through AI |
| Difference: Purpose | AI-driven collection of personal information to improve the delivery of advertising content that consumers prefer on the news homepage | AI-driven collection of personal information to improve the delivery of news content that consumers prefer |
| Difference: Types of Personal Information Collected by AI | Broad digital activities (free news content): personal identification data and external digital activities (e.g., shopping website visit records, location data for customized advertisements) | Specific digital activities (news content for paid subscriptions): personal identification data and internal digital activities (e.g., which types of news the consumer frequently clicks on and spends a long time reading) |

| Variables | Definition | References |
|---|---|---|
| Disposition to Value Privacy | A person’s tendency to value privacy and to place a high value on protecting his or her personal information | Li (2012); Xu et al. (2025) |
| Perception of AI Privacy Risk | The degree to which a person recognizes the risks he or she may face from providing personal information when using AI | Awad and Krishnan (2006); Lee et al. (2024) |
| Perception of AI Privacy Control | Users’ conviction that they can control and manage their information in AI-based data use | Belanche et al. (2021); Kim and Kim (2017) |
| AI Privacy Concern | The degree to which a person is concerned about AI systems collecting, analyzing, and utilizing personal information | Taddicken (2014); Acquisti et al. (2015) |
| Perceived Benefits of AI Privacy | Practical benefits expected from AI-based customized content and advertising | Shin (2021); Sutanto et al. (2013) |
| Intention to Disclose | Intention to provide one’s personal information to the news platform | Awad and Krishnan (2006); Martin and Murphy (2017) |

| Variables | Measurement Instruments |
|---|---|
| Disposition to Value Privacy (Negative) | (H1.1) I tend to be more concerned about AI-related privacy than others. (H1.2) Compared to others, I am more interested in privacy being protected by AI. (H1.3) I think it is the most important thing for me that privacy is protected from AI. |
| Perception of AI Privacy Risk (Negative) | (H2.1) I think there is a risk that providing data to AI may compromise privacy. (H2.2) I think my privacy can be used unfairly by AI. (H2.3) I think providing privacy to AI involves a number of unexpected problems. |
| Perception of AI Privacy Control (Positive) | (H3.1) I think I can control who has access to the privacy collected by AI. (H3.2) I think I can decide the range of personal information to disclose to AI. (H3.3) I believe that I can control how AI can utilize the personal information. |
| AI Privacy Concern (Negative) | (H4.1) I am concerned that the privacy provided to AI can be misused or abused. (H4.2) I am concerned about providing privacy to AI because privacy can be used for other purposes. (H4.3) I am concerned about providing personal information to AI because privacy can be used in unexpected ways. |
| Perceived Benefits of AI Privacy (Positive) | (H5.1) I think I will receive sophisticated services by providing privacy to AI. (H5.2) I think I will receive various services by providing privacy to AI. (H5.3) I think I will receive services of interest by providing privacy to AI. |
| Intention to Disclose Personal Information to AI for Online News Content & Ad Services (Positive) | (H6.1) I think that by providing privacy to AI, I will receive sophisticated online news contents & ads services. (H6.2) I think that by providing privacy to AI, I will receive a variety of online news contents & ads services. (H6.3) I believe that by providing privacy to AI, I will receive online news contents & ads services of interest. |

| Variable | Category | Frequency (N = 336) | Percentage (%) |
|---|---|---|---|
| Gender | Male | 153 | 45.5% |
| | Female | 183 | 54.5% |
| Age | Teens | 14 | 4.2% |
| | 20s | 74 | 22.0% |
| | 30s | 112 | 33.3% |
| | 40s+ | 136 | 40.5% |
| Occupation | Office worker | 264 | 78.6% |
| | Student | 34 | 10.1% |
| | Self-employed | 32 | 9.5% |
| | Unemployed | 6 | 1.8% |
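
The percentages in the sample table follow directly from the frequencies and N = 336. The short sketch below recomputes them as a consistency check; the dictionary layout and the `percentages` helper are illustrative names, not part of the original study.

```python
# Frequencies copied from the sample-characteristics table (N = 336).
N = 336
frequencies = {
    "Gender": {"Male": 153, "Female": 183},
    "Age": {"Teens": 14, "20s": 74, "30s": 112, "40s+": 136},
    "Occupation": {
        "Office worker": 264,
        "Student": 34,
        "Self-employed": 32,
        "Unemployed": 6,
    },
}


def percentages(counts, total):
    """Return each category's share of `total` in percent, rounded to one decimal."""
    return {category: round(100 * count / total, 1) for category, count in counts.items()}


for variable, counts in frequencies.items():
    # Each variable should account for all N respondents.
    assert sum(counts.values()) == N
    print(variable, percentages(counts, N))
```

Each variable's categories sum to 336, and the recomputed shares agree with the tabulated percentages to one decimal place.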

| Item | N | Min. | Max. | Mean | Standard Deviation | Skewness | Kurtosis |
|---|---|---|---|---|---|---|---|
| H1.1 | 168 | 1 | 5 | 3.50 | 1.16 | −0.42 | −0.81 |
| H1.2 | | | | 3.38 | 1.18 | −0.16 | −0.98 |
| H1.3 | | | | 3.71 | 1.20 | −0.51 | −1.03 |
| H2.1 | | | | 4.05 | 1.07 | −1.28 | 1.24 |
| H2.2 | | | | 4.24 | 0.84 | −0.96 | 0.32 |
| H2.3 | | | | 4.24 | 0.78 | −0.75 | −0.07 |
| H3.1 | | | | 3.12 | 1.24 | −0.08 | −1.15 |
| H3.2 | | | | 3.05 | 1.16 | −0.09 | −0.95 |
| H3.3 | | | | 2.95 | 1.26 | −0.06 | −1.22 |
| H4.1 | | | | 3.48 | 0.91 | −0.70 | 0.02 |
| H4.2 | | | | 3.55 | 0.88 | −0.25 | −0.64 |
| H4.3 | | | | 3.50 | 0.83 | −0.39 | −0.52 |
| H5.1 | | | | 4.05 | 0.95 | −0.77 | −0.32 |
| H5.2 | | | | 4.05 | 1.03 | −1.04 | 0.54 |
| H5.3 | | | | 3.98 | 0.99 | −1.00 | 0.68 |
| H6.1 | | | | 3.36 | 0.95 | −0.43 | −0.51 |
| H6.2 | | | | 3.45 | 0.96 | −0.69 | 0.33 |
| H6.3 | | | | 3.57 | 0.85 | −0.93 | 0.84 |
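
The descriptive table reports skewness and kurtosis for each item. As a rough illustration of what those columns measure, the sketch below computes moment-based skewness and excess kurtosis for a list of Likert responses. The source does not say which estimator was used (statistical packages often apply a small-sample correction, which gives slightly different values), and the `responses` list is hypothetical, so this is only a minimal sketch under those assumptions.

```python
def skewness_and_excess_kurtosis(values):
    """Moment-based skewness (g1) and excess kurtosis (g2) of a sample.

    Uses population central moments without small-sample correction,
    which is an assumption; the estimator used in the study is not stated.
    """
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n  # second central moment
    if m2 == 0:
        raise ValueError("skewness and kurtosis are undefined for constant data")
    m3 = sum((v - mean) ** 3 for v in values) / n  # third central moment
    m4 = sum((v - mean) ** 4 for v in values) / n  # fourth central moment
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


# Hypothetical 5-point Likert responses (not data from the study).
responses = [1, 2, 3, 4, 5]
skew, kurt = skewness_and_excess_kurtosis(responses)
print(f"skewness = {skew:.2f}, excess kurtosis = {kurt:.2f}")
```

A negative skewness, as in every row of the table, indicates responses concentrated toward the upper end of the 1 to 5 scale with a tail toward lower scores.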

| Item | H5 | H2 | H6 | H3 | H1 | H4 | Cronbach’s Alpha |
|---|---|---|---|---|---|---|---|
| H5.2 | 0.92 | −0.02 | 0.06 | −0.04 | −0.00 | 0.18 | 0.83 |
| H5.1 | 0.91 | −0.00 | −0.14 | 0.10 | 0.01 | 0.06 | |
| H5.3 | 0.90 | 0.00 | −0.07 | 0.06 | 0.12 | −0.15 | |
| H2.2 | −0.04 | 0.89 | 0.07 | 0.02 | 0.18 | −0.05 | |
| H2.1 | −0.13 | 0.80 | 0.14 | −0.08 | 0.28 | −0.10 | |
| H2.3 | 0.29 | 0.76 | −0.06 | −0.08 | 0.39 | −0.01 | |
| H6.2 | 0.22 | 0.02 | 0.89 | 0.13 | −0.23 | 0.14 | |
| H6.1 | −0.12 | 0.06 | 0.88 | −0.02 | 0.13 | 0.29 | |
| H6.3 | −0.12 | 0.06 | 0.86 | 0.12 | 0.12 | 0.14 | |
| H3.2 | −0.07 | −0.09 | 0.04 | 0.94 | 0.02 | 0.11 | |
| H3.3 | 0.19 | −0.09 | 0.02 | 0.92 | 0.06 | 0.05 | |
| H3.1 | −0.04 | 0.00 | 0.20 | 0.86 | 0.17 | −0.11 | |
| H1.2 | −0.12 | 0.17 | −0.05 | 0.31 | 0.83 | −0.12 | |
| H1.1 | 0.09 | 0.55 | 0.08 | −0.04 | 0.70 | −0.04 | |
| H1.3 | 0.49 | 0.48 | −0.01 | 0.15 | 0.62 | −0.08 | |
| H4.1 | −0.28 | 0.07 | 0.32 | −0.17 | 0.08 | 0.85 | |
| H4.2 | 0.27 | −0.05 | 0.37 | 0.25 | −0.29 | 0.71 | |
| H4.3 | 0.20 | −0.08 | 0.56 | 0.16 | −0.29 | 0.61 | |

Kaiser-Meyer-Olkin Measure of Sampling Adequacy = 0.67. Bartlett’s Test of Sphericity: χ² = 2851.90 (df = 153, p < 0.001) **
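
The factor-loading table reports a Cronbach’s alpha of 0.83 as its reliability measure. For readers unfamiliar with the statistic, the sketch below implements the standard formula, alpha = k/(k − 1) × (1 − Σ item variances / variance of summed scores), using only the Python standard library. The function name and the sample responses are hypothetical, and the raw survey data are not part of this document, so the sketch does not reproduce the reported value.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    `items` is a list of k lists, one per survey item, each holding the
    scores of the same respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("at least two items are required")
    # Sample variance of each item across respondents.
    item_variances = [variance(scores) for scores in items]
    # Summed scale score per respondent.
    totals = [sum(respondent) for respondent in zip(*items)]
    return k / (k - 1) * (1 - sum(item_variances) / variance(totals))


# Hypothetical responses of five people to a three-item scale
# (illustrative only, not data from the study).
scale = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 1],
]
print(round(cronbach_alpha(scale), 2))
```

Values closer to 1 indicate that the items of a scale vary together; a common rule of thumb treats values of about 0.7 or higher as acceptable.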

| | H1 | H2 | H3 | H4 | H5 | H6 |
|---|---|---|---|---|---|---|
| H1 | 1.00 | | | | | |
| H2 | 0.63 | 1.00 | | | | |
| H3 | 0.20 | −0.07 | 1.00 | | | |
| H4 | −0.21 | −0.09 | 0.14 | 1.00 | | |
| H5 | 0.50 | 0.80 | −0.11 | −0.01 | 1.00 | |
| H6 | 0.03 | 0.08 | 0.18 | 0.64 | 0.06 | 1.00 |

| Group | Hypothesis | Path | Standard Error | t-Value | Regression Coefficient (Beta) | p-Value | f² | Hypothesis Adopted |
|---|---|---|---|---|---|---|---|---|
| Prefer to Personalized Advertising (n = 120) | H1.1 | Des → Ris | 0.05 | 7.85 | 0.59 (Pos) ** | 0.000 * | 0.22 | Supported |
| | H1.2 | Des → Cont | 0.09 | 3.80 | 0.33 (Neg) *** | 0.000 * | 0.10 | Not supported |
| | H1.3 | Des → Conc | 0.07 | −0.28 | −0.03 (Pos) ** | 0.777 * | 0.01 | Not supported |
| | H1.4 | Ris → Conc | 0.09 | 2.34 | 0.21 (Pos) ** | 0.021 * | 0.08 | Supported |
| | H1.5 | Cont → Conc | 0.06 | −0.24 | −0.02 (Neg) *** | 0.810 * | 0.00 | Not supported |
| | H1.6 | Conc → Int | 0.07 | 11.80 | 0.74 (Neg) *** | 0.000 * | 0.40 | Not supported |
| | H1.7 | Bne → Int | 0.09 | 2.00 | 0.18 (Pos) ** | 0.048 * | 0.05 | Supported |
| Prefer to Dynamic Paywall (n = 48) | H2.1 | Des → Ris | 0.10 | 7.27 | 0.73 (Pos) ** | 0.000 * | 0.27 | Supported |
| | H2.2 | Des → Cont | 0.18 | −0.48 | −0.07 (Neg) *** | 0.632 * | 0.01 | Not supported |
| | H2.3 | Des → Conc | 0.08 | −6.06 | −0.67 (Pos) ** | 0.000 * | 0.20 | Not supported |
| | H2.4 | Ris → Conc | 0.08 | −5.11 | −0.60 (Pos) ** | 0.000 * | 0.18 | Not supported |
| | H2.5 | Cont → Conc | 0.08 | 3.79 | 0.49 (Neg) *** | 0.000 * | 0.15 | Not supported |
| | H2.6 | Conc → Int | 0.14 | 2.88 | 0.39 (Neg) *** | 0.006 * | 0.12 | Not supported |
| | H2.7 | Bne → Int | 0.11 | −1.55 | −0.22 (Pos) ** | 0.129 * | 0.02 | Not supported |
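
The "Supported" and "Not supported" outcomes in the hypothesis table are consistent with a simple decision rule: a path counts as supported only when it is significant at the 5% level and its estimated coefficient has the hypothesized sign. This rule is inferred from the tabulated values, assuming that "(Pos)" and "(Neg)" mark the hypothesized direction; the source does not state it explicitly. The sketch below applies the rule to the Study 1 rows. The class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class PathResult:
    hypothesis: str
    path: str
    beta: float         # estimated standardized coefficient
    p_value: float
    expected_sign: int  # +1 for "(Pos)", -1 for "(Neg)"; interpretation assumed


def is_supported(result, alpha=0.05):
    """Assumed rule: significant at `alpha` AND in the hypothesized direction."""
    significant = result.p_value < alpha
    same_direction = result.beta * result.expected_sign > 0
    return significant and same_direction


# Study 1 (Prefer to Personalized Advertising, n = 120), values from the table.
study1 = [
    PathResult("H1.1", "Des → Ris", 0.59, 0.000, +1),
    PathResult("H1.2", "Des → Cont", 0.33, 0.000, -1),
    PathResult("H1.3", "Des → Conc", -0.03, 0.777, +1),
    PathResult("H1.4", "Ris → Conc", 0.21, 0.021, +1),
    PathResult("H1.5", "Cont → Conc", -0.02, 0.810, -1),
    PathResult("H1.6", "Conc → Int", 0.74, 0.000, -1),
    PathResult("H1.7", "Bne → Int", 0.18, 0.048, +1),
]

for result in study1:
    verdict = "Supported" if is_supported(result) else "Not supported"
    print(result.hypothesis, result.path, verdict)
```

Under this rule H1.6 is not supported despite its large, significant coefficient, because the estimated effect of concern on disclosure intention is positive while the hypothesis predicted a negative one.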

| Group | Hypothesis | Path | Standard Error | t-Value | Regression Coefficient (Beta) | p-Value | f² | Hypothesis Adopted |
|---|---|---|---|---|---|---|---|---|
| Portal (n = 120) | H3.1 | Des → Ris | 0.06 | 9.61 | 0.66 (Pos) ** | 0.000 * | 0.25 | Supported |
| | H3.2 | Des → Cont | 0.09 | 1.42 | 0.13 (Neg) *** | 0.157 | 0.02 | Not supported |
| | H3.3 | Des → Conc | 0.06 | −2.19 | −0.20 (Pos) ** | 0.031 * | 0.06 | Not supported |
| | H3.4 | Ris → Conc | 0.09 | −0.63 | −0.06 (Pos) ** | 0.529 | 0.00 | Not supported |
| | H3.5 | Cont → Conc | 0.07 | 1.95 | 0.18 (Neg) *** | 0.053 | 0.03 | Not supported |
| | H3.6 | Conc → Int | 0.08 | 8.78 | 0.63 (Neg) *** | 0.000 * | 0.35 | Not supported |
| | H3.7 | Bne → Int | 0.08 | 1.56 | 0.14 (Pos) ** | 0.122 | 0.02 | Not supported |
| Social media (n = 48) | H4.1 | Des → Ris | 0.12 | 5.02 | 0.68 (Pos) ** | 0.000 * | 0.23 | Supported |
| | H4.2 | Des → Cont | 0.35 | −1.16 | 0.54 (Neg) *** | 0.000 * | 0.05 | Not supported |
| | H4.3 | Des → Conc | 0.22 | −1.61 | −0.28 (Pos) ** | 0.118 | 0.04 | Not supported |
| | H4.4 | Ris → Conc | 0.23 | −1.62 | −0.28 (Pos) ** | 0.115 | 0.04 | Not supported |
| | H4.5 | Cont → Conc | 0.10 | −0.03 | −0.027 (Neg) *** | 0.884 | 0.00 | Not supported |
| | H4.6 | Conc → Int | 0.14 | 6.88 | 0.78 (Neg) *** | 0.000 * | 0.38 | Not supported |
| | H4.7 | Bne → Int | 0.22 | −4.50 | −0.63 (Pos) ** | 0.000 * | 0.22 | Not supported |
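
The f² column in the hypothesis tables reports effect sizes. One common way to read them is with Cohen’s conventional benchmarks of 0.02 (small), 0.15 (medium), and 0.35 (large). The source does not say which thresholds, if any, the author applied, so the labels below are an assumption used only for illustration. The sketch classifies the Conc → Int path in each of the four groups using the tabulated f² values.

```python
def effect_size_label(f2):
    """Label an f² value using Cohen's conventional benchmarks (assumed here)."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


# f² for the Conc → Int path in each group, copied from the hypothesis tables.
conc_to_int = {
    "H1.6 (Personalized advertising)": 0.40,
    "H2.6 (Dynamic paywall)": 0.12,
    "H3.6 (Portal)": 0.35,
    "H4.6 (Social media)": 0.38,
}

for label, f2 in conc_to_int.items():
    print(f"{label}: f² = {f2:.2f} ({effect_size_label(f2)})")
```

Read this way, the link between privacy concern and disclosure intention is large in three of the four groups and small in the dynamic paywall group.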

| Prefer to Personalized Advertising | Prefer to Dynamic Paywall |
|---|---|
| | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shin, J.W. AI-Driven Privacy Trade-Offs in Digital News Content: Consumer Perception of Personalized Advertising and Dynamic Paywall. Journal. Media 2025, 6, 170. https://doi.org/10.3390/journalmedia6040170
