This editorial introduces the Special Issue “Journalism, Media, and Artificial Intelligence: Let Us Define the Journey,” which explores the evolving relationship between journalism and artificial intelligence (AI). This issue collates fifteen peer-reviewed contributions from 36 authors spanning 12 countries, collectively examining AI’s role in content production, investigative reporting, ethical governance, audience perception, and scholarly reflection. A parameter-based framework is used to classify each contribution under one of five core themes so as to organize this diverse collection of work, while also recognizing their cross-cutting relevance. This editorial synthesizes key insights, identifies emerging trends, and proposes a conceptual framework to guide future research. It highlights the challenges and opportunities posed by AI, particularly in relation to trust, transparency, accountability, and professional responsibility. This collection of research aims to support a more informed, inclusive, and critically grounded understanding of AI’s role in shaping the future of journalism.

1. Introduction

We live in an information age, yet, ironically, achieving the core function of journalism, namely providing people with access to unbiased, accurate, and trustworthy information, has never been more difficult. The late 20th-century critique by Herman and Chomsky, articulated in their propaganda model (Herman & Chomsky, 1988), remains alarmingly relevant: the structural inequalities of wealth and power continue to shape what news is produced, how it is framed, and what ultimately reaches the public. These dynamics are exacerbated by contemporary phenomena such as partisanship, misinformation, platform-driven content cycles, and algorithmic opacity.

As UN Secretary-General António Guterres noted, “at a time when disinformation and mistrust of the news media are growing, a free press is essential for peace, justice, sustainable development, and human rights” (UN News, 2019). Yet, despite its foundational role in democratic societies, journalism today struggles to meet these expectations, challenged by financial pressures, weakened editorial independence, and increasing technological disruptions.

This Special Issue, titled “Journalism, Media, and Artificial Intelligence: Let Us Define the Journey”, was created in response to these deep-rooted concerns. Through it, we asked the following questions: What role can artificial intelligence (AI) play in building the next generation of journalism? How can AI be used, responsibly and effectively, across the journalism lifecycle, from news gathering and production to editorial curation and audience engagement?

In the call for papers for this Special Issue, we emphasized the transformative potential of AI-powered and data-driven methods (Beckett, 2019; Canavilhas, 2022), such as those under the umbrella of deep journalism (Ahmad et al., 2022; Mehmood, 2022). These approaches promise not only scalable information discovery but also multi-perspective, cross-sectional, and impartial reporting that challenges bias and makes rigorous insights accessible to all.

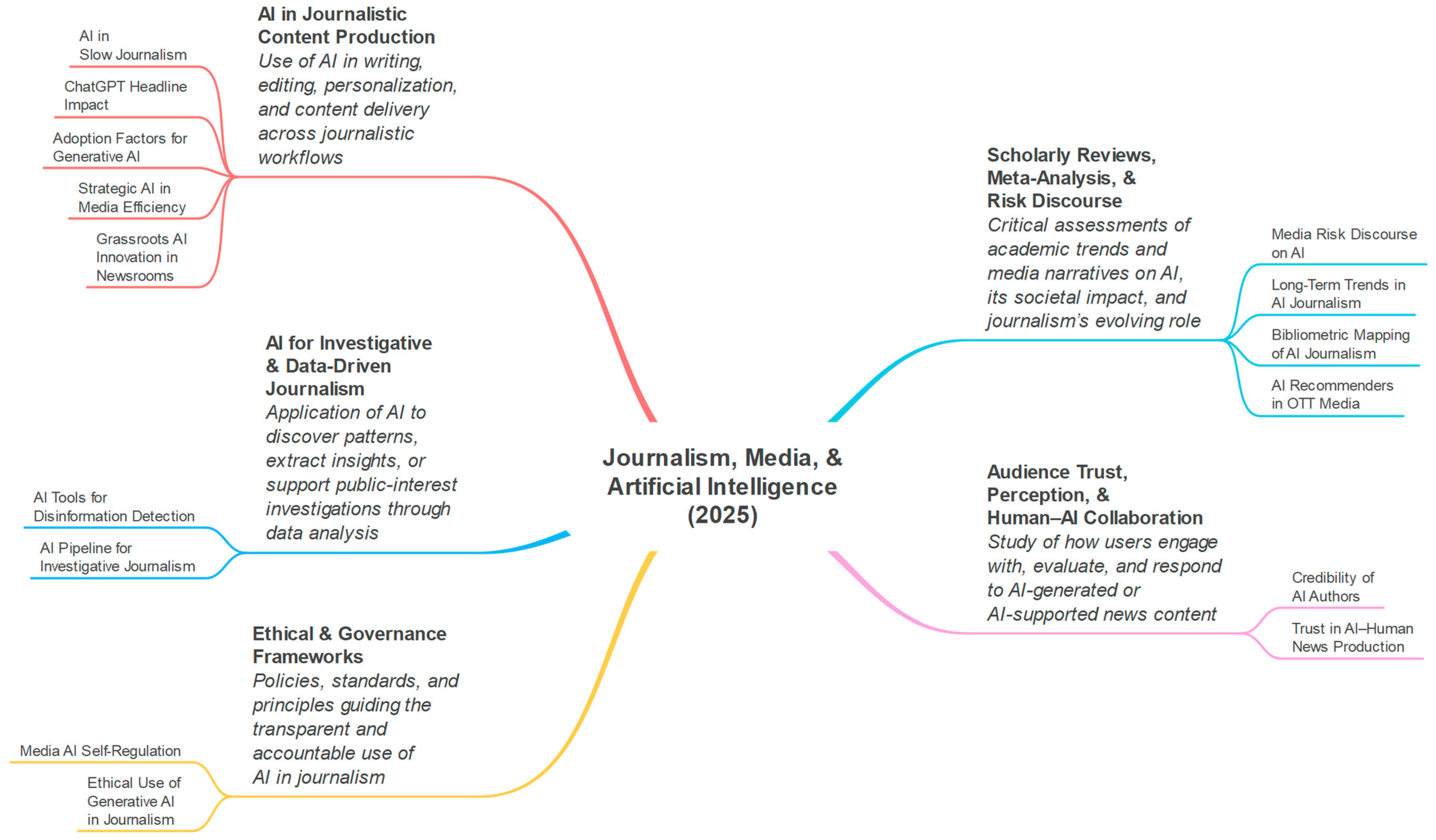

We received 29 submissions to this Special Issue, of which 15 were accepted for publication following peer review. These contributions represent 36 authors from 12 countries, reflecting the global and interdisciplinary nature of this Special Issue. The papers cover a rich diversity of geographies, disciplines, and research methods, encompassing both empirical studies and critical reviews. To meaningfully analyze these contributions and reflect on the collective journey they chart, we have organized them into the following five core thematic parameters:

AI in Journalistic Content Production;

AI for Investigative and Data-Driven Journalism;

Ethical and Governance Frameworks;

Audience Trust, Perception, and Human–AI Collaboration;

Scholarly Reviews, Meta-Analysis, and Risk Discourse.

We use the term parameter to refer to a thematically cohesive construct that synthesizes fragmented research around a shared conceptual or functional concern. These parameters serve not only to organize the discussion but also to act as design elements for identifying, aligning, and evolving the key dimensions of AI integration into journalism. Each parameter reflects a dynamic area of transformation, enabling us to trace the applications, challenges, and directions across both research and practice.

Each paper is assigned to one primary parameter, where it is discussed in detail. Some papers also engage with other parameters. These are identified as secondary papers and will be briefly noted within the relevant sections to highlight their cross-cutting relevance; however, they are only fully discussed in the section focused on the parameter to which they hold primary membership. This two-tiered classification allows us to balance thematic clarity with intellectual depth and avoid duplication, while also recognizing the interdisciplinary nature of this field. Together, the fifteen papers in this Special Issue form a timely and insightful body of work that helps us to define and interrogate the evolving relationship between journalism, media, and artificial intelligence.
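The two-tiered classification described above can be sketched as a small data structure. This is only an illustrative sketch and not a tool used in preparing this editorial: the names `Parameter`, `Paper`, and `section_view` are hypothetical, and the entries "Paper A" to "Paper C" are placeholders rather than actual assignments of the papers in this Special Issue.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, List, Tuple


class Parameter(Enum):
    """The five core thematic parameters named in this editorial."""
    CONTENT_PRODUCTION = "AI in Journalistic Content Production"
    INVESTIGATIVE = "AI for Investigative and Data-Driven Journalism"
    ETHICS_GOVERNANCE = "Ethical and Governance Frameworks"
    AUDIENCE_TRUST = "Audience Trust, Perception, and Human–AI Collaboration"
    SCHOLARLY_REVIEWS = "Scholarly Reviews, Meta-Analysis, and Risk Discourse"


@dataclass(frozen=True)
class Paper:
    """Hypothetical record: exactly one primary parameter, zero or more secondary ones."""
    label: str
    primary: Parameter
    secondary: FrozenSet[Parameter] = frozenset()

    def __post_init__(self) -> None:
        # A paper is fully discussed only under its primary parameter,
        # so listing that parameter again as secondary would be a duplicate.
        if self.primary in self.secondary:
            raise ValueError(f"{self.label}: primary parameter repeated as secondary")


def section_view(papers: List[Paper], parameter: Parameter) -> Tuple[List[str], List[str]]:
    """Return (papers discussed in full, papers briefly noted) for one section."""
    discussed_in_full = [p.label for p in papers if p.primary is parameter]
    briefly_noted = [p.label for p in papers if parameter in p.secondary]
    return discussed_in_full, briefly_noted


# Placeholder entries for illustration only (not real assignments).
papers = [
    Paper("Paper A", Parameter.CONTENT_PRODUCTION, frozenset({Parameter.AUDIENCE_TRUST})),
    Paper("Paper B", Parameter.ETHICS_GOVERNANCE),
    Paper("Paper C", Parameter.AUDIENCE_TRUST, frozenset({Parameter.ETHICS_GOVERNANCE})),
]

full, noted = section_view(papers, Parameter.AUDIENCE_TRUST)
print(full, noted)
```

Under these assumptions, each section lists its primary papers for full discussion and its secondary papers for a brief note, which mirrors how the scheme avoids discussing any paper in detail more than once.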

To provide a structured view of this Special Issue’s contributions, we have developed a taxonomy that maps all fifteen papers to the five core parameters (see Figure 1). Each paper is placed under its primary thematic focus using short, general titles that aid comprehension and highlight the article’s central contribution. These titles are deliberately broad to point to the wider domains where future research and development can evolve, while still remaining specific enough to reflect the content of each paper. This visual structure offers readers a clear overview of the Special Issue’s scope and thematic distribution, and the diversity of the methodological and conceptual insights presented.

In the sections that follow, we examine each of these thematic parameters, highlighting how they individually and collectively shape the evolving relationship between journalism and artificial intelligence. This is followed by a synthesis of their cross-cutting insights, the introduction of a conceptual framework for future research, and concluding reflections on the journey defined by this Special Issue.

7. Synthesis and Reflections

The fifteen papers in this Special Issue collectively map the broad and evolving landscape at the intersection of journalism, media, and artificial intelligence. They show that AI is no longer a marginal or speculative addition to journalism, but an active agent reshaping editorial workflows, audience engagement, investigative capacity, and institutional norms. Across the five thematic parameters, several cross-cutting insights emerge.

First, ethical and governance concerns are not confined to dedicated policy discussions. Even in studies primarily focused on content production, audience behaviour, or technical innovation, questions of transparency, authorship, and editorial control repeatedly surface. This is evident in the analyses of institutional self-regulation (Sánchez-García et al., 2025), generative AI ethics (Shi & Sun, 2024), AI authorship perceptions (Lermann Henestrosa & Kimmerle, 2024), and even headline design (Gherheș et al., 2024). These works reflect an underlying consensus that innovation in journalism must remain anchored to professional values, even as tools evolve.

Second, trust and audience perceptions are central to the uptake and success of AI in journalism. The findings from multiple studies indicate that readers react differently based on how AI involvement is disclosed (Lermann Henestrosa & Kimmerle, 2024), and that the perceived credibility of content changes when the authorship is attributed to machines (Heim & Chan-Olmsted, 2023). Whether trust is reduced by the label of machine authorship (Lermann Henestrosa & Kimmerle, 2024), or maintained when it is known that humans are in control (Heim & Chan-Olmsted, 2023), the social contract between journalists and audiences is being renegotiated in real time. These responses underscore the need for transparency, meaningful human oversight, and shared ethical frameworks (Shi & Sun, 2024).

Third, the adoption of AI appears to occur through a variety of pathways. In some cases, innovation is led by major media organizations formalizing internal policies and workflows (Sánchez-García et al., 2025). In others, grassroots or small-scale initiatives use open-source tools to experiment with AI-driven reporting (Pinto & Barbosa, 2024). The divergence in adoption patterns is also visible in the regional studies presented from the Arab Gulf (Ali et al., 2024), Brazil (Pinto & Barbosa, 2024), and Spain (Albizu-Rivas et al., 2024); these cases highlight the importance of local context and institutional capacity in shaping how AI is used and what kinds of journalism it enables or displaces.

Fourth, there is a growing effort to critically assess the state of the field of AI in journalism itself. Bibliometric reviews (Ioscote et al., 2024; Sonni et al., 2024), discourse studies (González-Arias & López-García, 2024), and systematic reflections (Vicente & Burnay, 2024) contribute to a more grounded understanding of the academic landscape. These studies suggest that while research on AI in journalism has expanded significantly, there remain gaps in education, interdisciplinary collaboration, and geographic representation.

Finally, the use of AI for investigative purposes points to a promising future for deep journalism. By automating aspects of the discovery process and enabling new forms of data analysis, AI can help to highlight issues that were previously difficult to investigate (Alaql et al., 2023; Santos, 2023). However, realizing this potential will depend on the availability of ethical, transparent, and user-friendly tools that serve public-interest objectives rather than institutional or commercial agendas.

This Special Issue does not claim to offer a complete roadmap. Instead, it presents a set of grounded, situated explorations that help to define the journey ahead. What emerges is not a single trajectory, but rather a pluralistic and dynamic field where questions of ethics, utility, trust, and responsibility must be addressed at every stage of technological integration.

8. A Framework for Future Research

Building on the five parameters and the cross-cutting themes identified in this Special Issue, we outline a conceptual framework that can guide future research at the intersection of journalism, media, and artificial intelligence (see Figure 2). The diversity of the papers published herein reveals not only multiple areas of inquiry, but also a set of shared concerns that demand a coherent and responsive research agenda. Rather than proposing a fixed model or linear roadmap, we suggest thinking in terms of a dynamic cycle that reflects how journalism and AI interact across different layers of media practice and responsibility.

At the heart of this framework is the recognition that AI is now embedded across the journalism lifecycle. The papers included in this issue have shown how AI is used in the creation of content through writing tools, in personalization engines, and in workflow automation (Albizu-Rivas et al., 2024; Ali et al., 2024; Binlibdah, 2024; Gherheș et al., 2024; Pinto & Barbosa, 2024). These studies emphasize that the focus of content production is not only output, but also editorial judgement, communicative intent, and audience reception. As such, future research must address both the technological performance of AI systems and the epistemological foundations of the content they help to produce.

Equally important is the role of AI in discovery and investigation. As demonstrated by the studies on disinformation detection and labour market analysis (Alaql et al., 2023; Santos, 2023), AI has the capacity to highlight patterns, classify themes, and support journalistic inquiry into socially relevant issues. Future research in this area should continue to explore how machine learning and natural language processing can enhance the depth and breadth of journalistic investigations, particularly in domains where public data is underused or inaccessible. The challenge lies not only in developing AI’s technical capacity, but in ensuring that such tools are usable, interpretable, and aligned with the goals of public-interest journalism.

Ethical and governance dimensions must also remain central in future studies. Papers in this issue have shown that media organizations are beginning to formalize internal policies on AI use (Sánchez-García et al., 2025), and that scholars are articulating normative frameworks for responsible innovation (Shi & Sun, 2024). These efforts should be extended to include comparative studies of regulatory regimes, institutional accountability mechanisms, and the newsroom-level implementation of ethical AI practices. As the influence of generative and algorithmic systems grows, so too does the urgency of embedding ethics and governance into everyday journalistic practices.

Audience trust and perception have emerged as critical variables mediating how AI-generated or AI-assisted content is received (Heim & Chan-Olmsted, 2023; Lermann Henestrosa & Kimmerle, 2024). Several contributions show that users evaluate content differently depending on its presentation, authorship disclosure, and perceived authenticity (Ali et al., 2024; Gherheș et al., 2024; Shi & Sun, 2024). Future research must investigate how trust is built, lost, and potentially restored in environments where automation plays an increasingly visible role. This includes attention to transparency practices, co-authorship models, and audience expectations across different cultures and media systems.

Finally, continued investment in meta-reflection and scholarly synthesis is required. The bibliometric and discursive studies included in this issue (González-Arias & López-García, 2024; Ioscote et al., 2024; Sonni et al., 2024; Vicente & Burnay, 2024) not only chart the past progress of this field, but also reveal areas of underdevelopment, such as media education, regional disparities, and methodological diversity. Future work should not only track academic output, but also critically evaluate the assumptions, vocabularies, and frameworks used to structure research on AI and journalism.

This emerging framework proposes that future research engage with AI in journalism not only as a set of tools or topics, but as a system of responsibilities. These responsibilities span the technical, editorial, institutional, and civic dimensions of journalistic work. A sustainable research agenda must therefore be interdisciplinary, reflexive, and explicitly committed to preserving journalism’s democratic and public-serving functions in an increasingly automated world.

9. Conclusions

This Special Issue began with a call to critically examine and proactively shape the evolving relationship between journalism and artificial intelligence. At its core, the call emphasized the urgent need to improve public access to unbiased information in a media landscape marked by structural inequalities, rapid technological shifts, and growing public distrust. Drawing inspiration from the propaganda model articulated by Herman and Chomsky, and reaffirmed by international voices such as UN Secretary-General António Guterres, we asked how journalism could reclaim its public-serving mission in an increasingly algorithmic environment. The proposed answer lay in exploring AI not simply as a disruptive force, but as a possible enabler of next-generation journalism, a form of journalism that is rigorous, inclusive, data-informed, and transparent.

The fifteen papers included in this issue offer rich, multidimensional responses to this challenge. Across five thematic parameters (AI in content production, AI for investigative journalism, ethical and governance frameworks, audience trust and perception, and scholarly reviews and risk discourse), these contributions collectively chart a field in motion. They show that AI is used to write headlines, streamline workflows, detect disinformation, map labour market trends, and personalize content delivery. At the same time, they reveal that journalists, audiences, and institutions are grappling with deep questions about authorship, credibility, professional responsibility, and public trust.

In this editorial, the contributions to the Special Issue are organized using a two-level classification system. Each paper has been assigned to one primary parameter, where it is discussed in detail, and may also be referenced as a secondary contribution to another parameter where thematically relevant. This structure reflects the inherently interdisciplinary nature of the field, while maintaining clarity and coherence in thematic analysis.

In reflecting on the insights generated across these parameters, we have proposed a conceptual framework for future research, the Journalism–AI Responsibility Cycle, which emphasizes the interdependence of technological innovation, ethical governance, audience engagement, and institutional accountability. This model invites researchers to move beyond narrow application studies and engage with AI in journalism as a system of responsibilities that span creation, investigation, trust, and critical reflection.

The title of this Special Issue, “Let Us Define the Journey”, is not rhetorical. It is a call for a collaborative agenda-setting process, one that recognizes the urgency of the current moment and the opportunity that AI offers to transform journalism not only technologically, but institutionally and ethically. The journey defined in these pages is still unfolding, but the contributions presented herein offer strong foundations and critical guideposts for the path ahead.