Is AI Stirring Innovation or Chaos? Psychological Determinants of AI Fake News Exposure (AI-FNE) and Its Effects on Young Adults

Abstract

1. Introduction

2. Literature Review

2.1. Artificial Intelligence and Fake News Exposure

2.2. Psychological Determinants of AI Fake News

2.3. AI Fake News, Media Trust, and Antisocial Behaviour

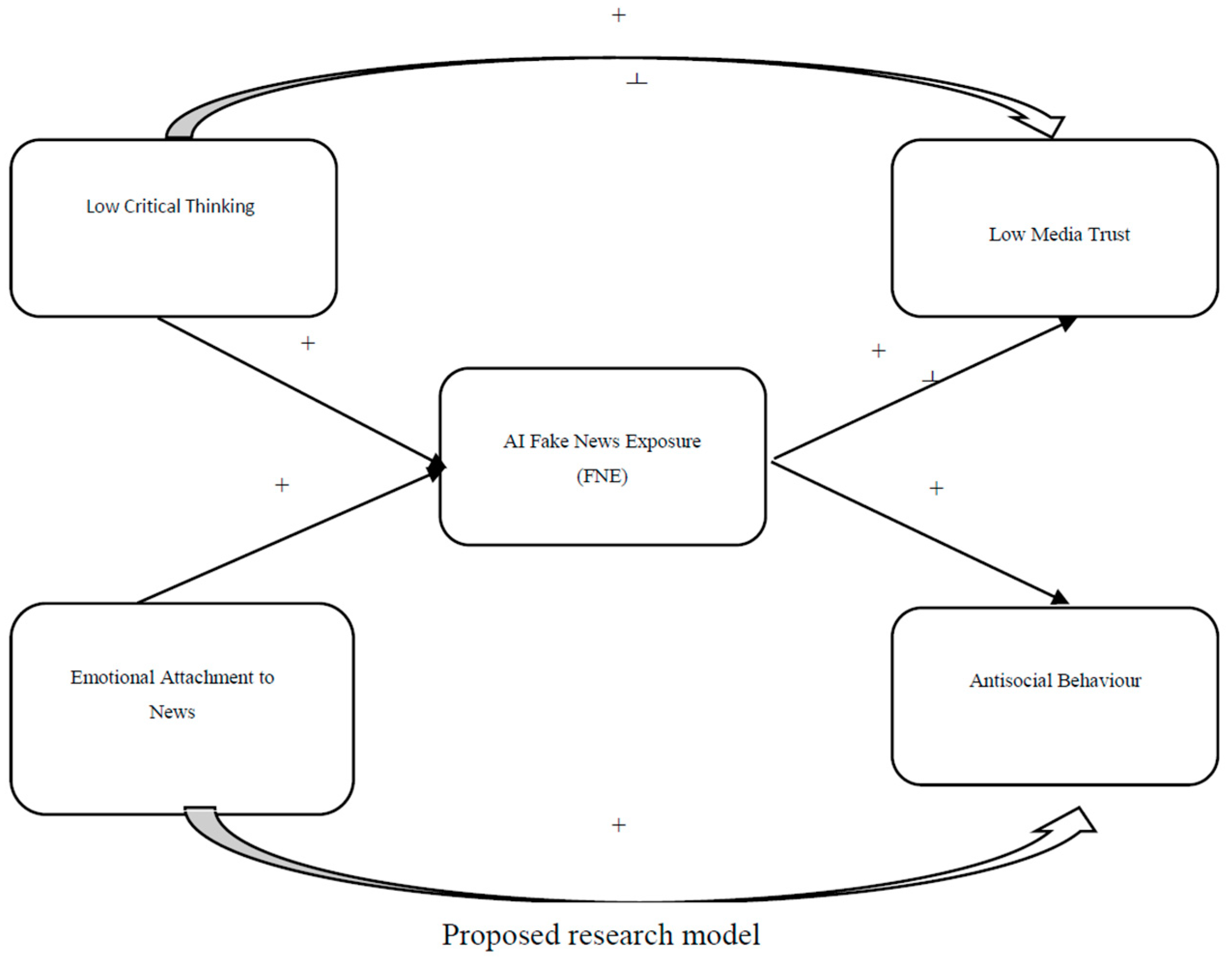

2.4. The Present Study

3. Methods

3.1. Measurement

3.1.1. Exposure to Fake News

3.1.2. Low Critical Thinking

3.1.3. Media Trust

3.1.4. Emotional Attachment to News

3.1.5. Antisocial Behaviour

4. Results

4.1. Statistical Analysis and Results

4.2. Structural Equation Modelling

5. Discussion

6. Conclusions and Recommendations

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ahmed, K. A. (2018). In Bangladesh: Direct control of media trumps fake news. The Journal of Asian Studies, 77(4), 909–922.

- Akhtar, P., Ghouri, A., Khan, H., Amin ul Haq, M., Awan, U., Zahoor, N., & Ashraf, A. (2023). Detecting fake news and disinformation using artificial intelligence and machine learning to avoid supply chain disruptions. Annals of Operations Research, 327(2), 633–657.

- Ali, K., & Zain-ul-abdin, K. (2021). Post-truth propaganda: Heuristic processing of political fake news on Facebook during the 2016 US presidential election. Journal of Applied Communication Research, 49(1), 109–128.

- Anderson, J. C., & Gerbing, D. W. (1988). Structural equation modeling in practice: A review and recommended two-step approach. Psychological Bulletin, 103(3), 411–423.

- Aoun Barakat, K., & Dabbous, A. (2021). An empirical approach to understanding users’ fake news identification on social media. Online Information Review, 45(6), 1080–1096.

- Arikewuyo, A., Ozad, B., & Lasisi, T. T. (2019). Erotic use of social media pornography in gratifying romantic relationship desires. The Spanish Journal of Psychology, 22, E61.

- Arikewuyo, A. O., Lasisi, T. T., Abdulbaqi, S. S., Omoloso, A. I., & Arikewuyo, H. O. (2022). Evaluating the use of social media in escalating conflicts in romantic relationships. Journal of Public Affairs, 22(1), e2331.

- Bagozzi, R. P., & Yi, Y. (1988). On the evaluation of structural equation models. Journal of the Academy of Marketing Science, 16(1), 74–94.

- Balakrishnan, V., Abdul Rahman, L. H., Tan, J. K., & Lee, Y. S. (2023). COVID-19 fake news among the general population: Motives, sociodemographic, attitude/behavior and impacts–a systematic review. Online Information Review, 47(5), 944–973.

- Bazrkar, A., Moradzad, M., & Shayegan, S. (2024). The use of artificial intelligence in employee recruitment in the furniture industry of Iran according to the role of contextual factors. Studia Universitatis “Vasile Goldis” Arad–Economics Series, 34(2), 86–109.

- Bendixen, M., Endresen, I. M., & Olweus, D. (2003). Variety and frequency scales of antisocial involvement: Which one is better? Legal and Criminological Psychology, 8(2), 135–150.

- Bendixen, M., & Olweus, D. (1999). Measurement of antisocial behaviour in early adolescence and adolescence: Psychometric properties and substantive findings. Criminal Behaviour and Mental Health, 9, 323–354.

- Berger, J., & Milkman, K. L. (2012). Emotion and virality: What makes online content go viral? Journal of Marketing Research, 49(2), 192–205.

- Berrondo-Otermin, M., & Sarasa-Cabezuelo, A. (2023). Application of artificial intelligence techniques to detect fake news: A review. Electronics, 12(24), 5041.

- Bielby, W. T., & Hauser, R. M. (1977). Structural equation models. Annual Review of Sociology, 3, 137–161.

- Bushman, B. J., & Anderson, C. A. (2009). The impact of negative news on aggression and antisocial behavior. Psychological Science, 20(5), 531–536.

- Byrne, B. M. (2013). Structural equation modeling with Mplus: Basic concepts, applications, and programming. Routledge.

- Cardoso, M., Ares, E., Ferreira, L. P., & Pelaez, G. (2023). Using index function and artificial intelligence to assess sustainability: A bibliometric analysis. International Journal of Industrial Engineering and Management, 14(4), 311–325.

- Chan, M. (2024). News literacy, fake news recognition, and authentication behaviors after exposure to fake news on social media. New Media & Society, 26(8), 4669–4688.

- Chen, Z. F., & Cheng, Y. (2020). Consumer response to fake news about brands on social media: The effects of self-efficacy, media trust, and persuasion knowledge on brand trust. Journal of Product & Brand Management, 29(2), 188–198.

- Ciortea-Neamţiu, Ş. (2020). Critical thinking in a world of fake news. Teaching the public to make good choices. Studia Universitatis Babes-Bolyai-Ephemerides, 65(2), 21–39.

- Commisso, C. (2017). The post-truth archive: Considerations for archiving context in fake news repositories. Preservation, Digital Technology & Culture, 46(3), 99–102.

- Dabbous, A., & Aoun Barakat, K. (2022). Fake news detection and social media trust: A cross-cultural perspective. Behaviour & Information Technology, 41(14), 2953–2972.

- De Leeuw, E. D. (2012). Choosing the method of data collection. In International handbook of survey methodology (pp. 113–135). Routledge.

- Divya, D. T. (2024). Fake news detection stay informed: How to spot fake news effectively. International Journal of Trend in Scientific Research and Development, 8(5), 753–759.

- Elías, C. (2020). Coronavirus in Spain: Fear of ‘official’ fake news boosts WhatsApp and alternative sources. Media and Communication, 8(2), 462–466.

- Escolà-Gascón, Á. D. (2021). Critical thinking predicts reductions in Spanish physicians’ stress levels and promotes fake news detection. Thinking Skills and Creativity, 42, 100934.

- Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50.

- Gallagher, D., Ting, L., & Palmer, A. (2008). A journey into the unknown; taking the fear out of structural equation modeling with AMOS for the first-time user. The Marketing Review, 8(3), 255–275.

- Gaozhao, D. (2021). Flagging fake news on social media: An experimental study of media consumers’ identification of fake news. Government Information Quarterly, 38(3), 101591.

- Garg, S., & Sharma, D. K. (2022). Linguistic features based framework for automatic fake news detection. Computers & Industrial Engineering, 172, 108432.

- Gonzalez, F. J. (2019). This session is fake news: The impact of fake news and political polarization on media and attitude change, and strategies for societal intervention. Advances in Consumer Research, 47, 105–110.

- Greene, C. M., & Murphy, G. (2021). Quantifying the effects of fake news on behavior: Evidence from a study of COVID-19 misinformation. Journal of Experimental Psychology: Applied, 27(4), 773.

- Gregory, R. W., Henfridsson, O., Kaganer, E., & Kyriakou, S. H. (2021). The role of artificial intelligence and data network effects for creating user value. Academy of Management Review, 46(3), 534–551.

- Horn, S., & Veermans, K. (2019). Critical thinking efficacy and transfer skills defend against ‘fake news’ at an international school in Finland. Journal of Research in International Education, 18(1), 23–41.

- Iqbal, A., Shahzad, K., & Khan, S. A. (2023). The relationship of artificial intelligence (AI) with fake news detection (FND): A systematic literature review. Global Knowledge, Memory and Communication. (ahead-of-print).

- Islam, N., Shaikh, A., Qaiser, A., Asiri, Y., Almakdi, S., Sulaiman, A., Moazzam, V., & Babar, S. A. (2021). Ternion: An autonomous model for fake news detection. Applied Sciences, 11(19), 9292.

- Islas-Carmona, J. O.-C.-U. (2024). Disinformation and political propaganda: An exploration of the risks of artificial intelligence. Explorations in Media Ecology, 23(2), 105–120.

- Jahng, M. R. (2021). Is fake news the new social media crisis? Examining the public evaluation of crisis management for corporate organizations targeted in fake news. International Journal of Strategic Communication, 15(1), 18–36.

- Karadayi-Usta, S. (2024). Role of artificial intelligence and augmented reality in fashion industry from consumer perspective: Sustainability through waste and return mitigation. Engineering Applications of Artificial Intelligence, 133, 108114.

- Kietzmann, J., Lee, L. W., McCarthy, I. P., & Kietzmann, T. C. (2020). Deepfakes: Trick or treat? Business Horizons, 63(2), 135–146.

- Kim, S. K., Huh, J. H., & Kim, B. G. (2024). Artificial intelligence blockchain based fake news discrimination. IEEE Access, 12, 53838–53854.

- Kline, R. B. (2023). Principles and practice of structural equation modeling. Guilford Publications.

- Krstić, L., Aleksić, V., & Krstić, M. (2022). Artificial intelligence in education: A review. In Proceedings of the 9th international scientific conference technics and informatics in education—TIE 2022 (pp. 223–228). University of Kragujevac, Faculty of Technical Sciences.

- Kumari, R., Gupta, V., Ashok, N., Ghosal, T., & Ekbal, A. (2024). Emotion aided multi-task framework for video embedded misinformation detection. Multimedia Tools and Applications, 83(12), 37161–37185.

- Landon-Murray, M., & Mujkic, E. (2019). Disinformation in contemporary US foreign policy: Impacts and ethics in an era of fake news, social media, and artificial intelligence. Public Integrity, 21(5), 512–522.

- Larraz, I., Salaverría, R., & Serrano-Puche, J. (2024). Combating repeated lies: The impact of fact-checking on persistent falsehoods by politicians. Media and Communication, 12.

- Lecheler, S., & Bos, L. (2015). The mediating role of emotions: News framing effects on opinions about immigration. Journalism & Mass Communication Quarterly, 92(4), 812–838.

- Lida, T., Song, J., & Estrada, J. L. (2024). Fake news and its electoral consequences: A survey experiment on Mexico. AI & Society, 39(3), 1065–1078.

- Lim, W. M. (2023). Fact or fake? The search for truth in an infodemic of disinformation, misinformation, and malinformation with deepfake and fake news. Journal of Strategic Marketing, 1–37.

- Liu, J. L. (2023). Exploring rumor behavior during the COVID-19 pandemic through an information processing perspective: The moderating role of critical thinking. Computers in Human Behavior, 147, 107842.

- Lutzke, L., Drummond, C., Slovic, P., & Árvai, J. (2019). Priming critical thinking: Simple interventions limit the influence of fake news about climate change on Facebook. Global Environmental Change, 58, 101964.

- Machete, P., & Turpin, M. (2020). The use of critical thinking to identify fake news: A systematic literature review. In Responsible design, implementation and use of information and communication technology: 19th IFIP WG 6.11 conference on e-business, e-services, and e-society, I3E 2020, Skukuza, South Africa, April 6–8, 2020, Proceedings, Part II 19 (pp. 235–246). Springer International Publishing.

- Malär, L., Krohmer, H., Hoyer, W. D., & Nyffenegger, B. (2011). Emotional brand attachment and brand personality: The relative importance of the actual and the ideal self. Journal of Marketing, 75(4), 35–52.

- Martel, C., Pennycook, G., & Rand, D. G. (2020). Reliance on emotion promotes belief in fake news. Cognitive Research: Principles and Implications, 5, 1–20.

- McDougall, J. (2019). Media literacy versus fake news: Critical thinking, resilience and civic engagement. Media Studies, 10(19), 29–45.

- Mihailidis, P., & Viotty, S. (2021). Critical thinking and media trust: Implications for democracy. Journal of Democracy, 32(3), 95–109.

- Mills, A. J. (2020). Brand management in the era of fake news: Narrative response as a strategy to insulate brand value. Journal of Product & Brand Management, 29(2), 159–167.

- Mohammed, A., Elega, A. A., Ahmad, M. B., & Oloyede, F. (2024). Friends or foes? Exploring the framing of artificial intelligence innovations in Africa-focused journalism. Journalism and Media, 5(4), 1749–1770.

- Mukherjee, A. M. (2023). Artificial intelligence and its relevance in fake news and deepfakes: A perspective. Globsyn Management Journal, 17(1/2), 93–95.

- Nabi, R. L., & Sullivan, J. L. (2001). Emotional engagement with news and its impact on hostility and aggression. Journal of Communication, 51(3), 475–499.

- Nazar, S. (2020). Artificial intelligence and new level of fake news. In IOP conference series: Materials science and engineering (Vol. 879, No. 1, p. 012006). IOP Publishing.

- Nazari, Z., & Oruji, M. (2022). News consumption and behavior of young adults and the issue of fake news. Journal of Information Science Theory and Practice, 10(2), 1–16.

- Obada, D. R. (2022). In flow! Why do users share fake news about environmentally friendly brands on social media? International Journal of Environmental Research and Public Health, 19(8), 4861.

- Olweus, D. (1989). Prevalence and incidence in the study of antisocial behavior: Definitions and measurements. In Cross-national research in self-reported crime and delinquency (pp. 187–201). Springer Netherlands.

- Orhan, A. (2023). Fake news detection on social media: The predictive role of university students’ critical thinking dispositions and new media literacy. Smart Learning Environments, 10(1), 29.

- Ozbay, F. A. (2020). Fake news detection within online social media using supervised artificial intelligence algorithms. Physica A: Statistical Mechanics and Its Applications, 540, 123174.

- Palos-Sánchez, P. R., Baena-Luna, P., Badicu, A., & Infante-Moro, J. C. (2022). Artificial intelligence and human resources management: A bibliometric analysis. Applied Artificial Intelligence, 36(1), 2145631.

- Paschen, J. (2020). Investigating the emotional appeal of fake news using artificial intelligence and human contributions. Journal of Product & Brand Management, 29(2), 223–233.

- Pennycook, G., Cannon, T. D., & Rand, D. G. (2018). Prior exposure increases perceived accuracy of fake news. Journal of Experimental Psychology: General, 147(12), 1865–1880.

- Pérez-Escoda, A., Pedrero-Esteban, L. M., Rubio-Romero, J., & Jiménez-Narros, C. (2021). Fake news reaching young people on social networks: Distrust challenging media literacy. Publications, 9(2), 24.

- Puig, B., Blanco-Anaya, P., & Pérez-Maceira, J. J. (2021). “Fake news” or real science? Critical thinking to assess information on COVID-19. In Frontiers in education (Vol. 6, p. 646909). Frontiers Media SA.

- Raman, R. N., Nedungadi, P., Sahu, A. K., Kowalski, R., & Ramanathan, S. (2024). Fake news research trends, linkages to generative artificial intelligence and sustainable development goals. Heliyon, 10(3), e24727.

- Roozenbeek, J., & van der Linden, S. (2022). The relationship between critical thinking and trust in media during the COVID-19 pandemic. Health Communication, 37(10), 1213–1222.

- Ross, A. S., & Rivers, D. J. (2018). Discursive deflection: Accusation of “fake news” and the spread of mis- and disinformation in the tweets of President Trump. Social Media + Society, 4(2), 2056305118776010.

- Sardinha, T. B. (2024). AI-generated vs human-authored texts: A multidimensional comparison. Applied Corpus Linguistics, 4(1), 10008.

- Schlemitz, A., & Mezhuyev, V. (2024). Approaches for data collection and process standardization in smart manufacturing: Systematic literature review. Journal of Industrial Information Integration, 38, 100578.

- Schulz, A., Fletcher, R., & Nielsen, R. K. (2024). The role of news media knowledge for how people use social media for news in five countries. New Media & Society, 26(7), 4056–4077.

- Setiawan, R. P., Sengan, S., Anam, M., Subbiah, C., Phasinam, K., & Vairaven, M. (2022). Certain investigation of fake news detection from Facebook and Twitter using artificial intelligence approach. Wireless Personal Communications, 127, 1737–1762.

- Shephard, M. P., Robertson, D. J., Huhe, N., & Anderson, A. (2023). Everyday non-partisan fake news: Sharing behavior, platform specificity, and detection. Frontiers in Psychology, 14, 1118407.

- Slater, M. D., & Rouner, D. (2002). The role of emotional attachment to news in predicting antisocial behavior. Media Psychology, 4(3), 291–311.

- Sonni, A. F., Hafied, H., Irwanto, I., & Latuheru, R. (2024). Digital newsroom transformation: A systematic review of the impact of artificial intelligence on journalistic practices, news narratives, and ethical challenges. Journalism and Media, 5(4), 1554–1570.

- Strömbäck, J., Tsfati, Y., Boomgaarden, H., Damstra, A., Lindgren, E., Vliegenthart, R., & Lindholm, T. (2020). News media trust and its impact on media use: Toward a framework for future research. Annals of the International Communication Association, 44(2), 139–156.

- Stupavský, I., & Dakić, P. (2023). The impact of fake news on traveling and antisocial behavior in online communities: Overview. Applied Sciences, 13(21), 11719.

- Tavakoli, S. S., Mozaffari, A., Danaei, A., & Rashidi, E. (2023). Explaining the effect of artificial intelligence on the technology acceptance model in media: A cloud computing approach. The Electronic Library, 41(1), 1–29.

- Tayie, S. S., & Calvo, S. T. (2023). Alfabetización periodística entre jóvenes egipcios y españoles: Noticias falsas, discurso de odio y confianza en medios [News literacy among Egyptian and Spanish youth: Fake news, hate speech and trust in media]. Comunicar: Revista Científica de Comunicación y Educación, 74, 73–87.

- Thakkar, J. J. (2020). Structural equation modelling: Application for research and practice. Springer.

- Thomson, M., MacInnis, D. J., & Whan Park, C. (2005). The ties that bind: Measuring the strength of consumers’ emotional attachments to brands. Journal of Consumer Psychology, 15(1), 77–91.

- Túñez-López, J. M., Fieiras-Ceide, C., & Vaz-Álvarez, M. (2021). Impact of artificial intelligence on journalism: Transformations in the company, products, contents and professional profile. Communication & Society, 34(1), 177–193.

- Usman, B., Eric Msughter, A., & Olaitan Ridwanullah, A. (2022). Social media literacy: Fake news consumption and perception of COVID-19 in Nigeria. Cogent Arts & Humanities, 9(1), 2138011.

- Veinberg, S. (2018). Unfamiliar concepts as an obstacle for critical thinking in public discussions regarding women’s rights issues in Latvia. Reflective thinking in the ‘fake news’ era. ESSACHESS-Journal for Communication Studies, 22(2), 31–49.

- Verma, N., & Fleischmann, K. R. (2017). Human values and trust in scientific journals, the mainstream media and fake news. Proceedings of the Association for Information Science and Technology, 54, 426–435.

- Verma, N., Fleischmann, K. R., & Koltai, K. S. (2018). Demographic factors and trust in different news sources. Proceedings of the Association for Information Science and Technology, 55(1), 524–533.

- Verma, P. K., Agrawal, P., Madaan, V., & Prodan, R. (2023). MCred: Multi-modal message credibility for fake news detection using BERT and CNN. Journal of Ambient Intelligence and Humanized Computing, 14(8), 10617–10629.

- Vosoughi, S., Roy, D., & Aral, S. (2018). The role of emotion in the spread of misinformation. Science, 359(6380), 1146–1151.

- Wan, M., Zhong, Y., Gao, X., & Lee, S. Y. (2023). Fake news, real emotions: Emotion analysis of COVID-19 infodemic in Weibo. IEEE Transactions on Affective Computing, 15, 815–827.

- Węcel, K., Sawiński, M., Stróżyna, M., Lewoniewski, W., Księżniak, E., & Stolarski, P. (2023). Artificial intelligence—Friend or foe in fake news campaigns. Economics and Business Review, 9(2), 41–70.

- Weiner, J. M. (2011). Is there a difference between critical thinking and information literacy? A systematic review 2000–2009. Journal of Information Literacy, 5(2), 1600.

- Weiss, A. P., Alwan, A., & Garcia, E. P. (2020). Surveying fake news: Assessing university faculty’s fragmented definition of fake news and its impact on teaching critical thinking. International Journal for Educational Integrity, 16, 1–30.

- Wijayati, D. T. (2022). A study of artificial intelligence on employee performance and work engagement: The moderating role of change leadership. International Journal of Manpower, 43(2), 486–512.

- Xie, B. (2023). Detecting fake news by RNN-based gatekeeping behavior model on social networks. Expert Systems with Applications, 231, 120716.

- Zhang, C., Gupta, A., Qin, X., & Zhou, Y. (2023). A computational approach for real-time detection of fake news. Expert Systems with Applications, 221, 119656.

| Characteristics | N | % |
|---|---|---|
| Gender | | |
| Female | 140 | 63.3 |
| Male | 241 | 36.7 |
| Age | | |
| 18–23 | 164 | 43.0 |
| 24–29 | 124 | 32.5 |
| 30–35 | 61 | 16.0 |
| 36–40 | 32 | 8.4 |
| Level of Education | | |
| Leaving School Certificate | 38 | 10.0 |
| Diploma/OND | 36 | 9.4 |
| Undergraduate | 229 | 60.1 |
| Masters | 67 | 17.6 |
| PhD | 11 | 2.9 |
| Main Sources of News | | |
| TV | 12 | 3.1 |
| Radio | 8 | 2.1 |
| Internet Newspaper | 59 | 15.5 |
| Social Media | 293 | 76.9 |
| Online Radio/TV | 9 | 2.4 |
| Time Spent on News | | |
| Less than 30 min | 162 | 42.5 |
| 30 min–2 h | 167 | 43.8 |
| 2 h–4 h | 52 | 13.7 |
| News Activities | | |
| Share and Discuss | 184 | 48.3 |
| Read and Share | 82 | 21.5 |
| Read and Discuss | 115 | 30.2 |

| Construct | Item | SE | CR | AVE |
|---|---|---|---|---|
| AI-FNE (α = 0.75, M = 3.62, SD = 0.83) | | | 0.87 | 0.739 |
| | AI-FNE_1 | 0.73 | | |
| | AI-FNE_2 | 0.71 | | |
| LCT (α = 0.89, M = 2.61, SD = 1.10) | | | 0.93 | 0.748 |
| | LCT_1 | 0.79 | | |
| | LCT_2 | 0.89 | | |
| | LCT_3 | 0.76 | | |
| | LCT_4 | 0.85 | | |
| | LCT_5 | 0.78 | | |
| | LCT_6 | 0.72 | | |
| | LCT_7 | 0.86 | | |
| EA (α = 0.85, M = 3.11, SD = 0.74) | | | 0.88 | 0.777 |
| | EA_1 | 0.71 | | |
| | EA_2 | 0.84 | | |
| | EA_3 | 0.77 | | |
| | EA_4 | 0.79 | | |
| | EA_5 | 0.75 | | |
| | EA_6 | 0.88 | | |
| | EA_7 | 0.78 | | |
| LMT (α = 0.75, M = 3.02, SD = 0.78) | | | 0.734 | |
| | LMT_1 | 0.88 | | |
| | LMT_2 | 0.85 | | |
| | LMT_3 | 0.78 | | |
| | LMT_4 | 0.70 | | |
| AsB (α = 0.86, M = 2.76, SD = 1.12) | | | 0.865 | |
| | NBI_1 | 0.83 | | |
| | NBI_2 | 0.74 | | |
| | NBI_3 | 0.86 | | |
| | NBI_4 | 0.75 | | |
| | NBI_5 | 0.84 | | |
| | NBI_6 | 0.73 | | |
| | NBI_7 | 0.77 | | |
| | NBI_8 | 0.84 | | |
| | NBI_9 | 0.88 | | |
| | NBI_10 | 0.89 | | |
| | NBI_11 | 0.87 | | |
| | NBI_12 | 0.72 | | |
| | NBI_13 | 0.79 | | |
| | NBI_14 | 0.84 | | |
| | NBI_15 | 0.77 | | |
| | NBI_16 | 0.73 | | |
| | NBI_17 | 0.71 | | |
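
The CR and AVE columns are conventionally derived from standardized item loadings (Fornell & Larcker, 1981). The Python below is a minimal sketch of those conventional formulas. It assumes standardized loadings, so each item's error variance is taken as 1 − λ². The function names are illustrative. The article does not state which software or exact computation produced its values, so figures obtained this way may not match the table.

```python
from typing import Sequence


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """AVE: mean of the squared standardized loadings (Fornell & Larcker, 1981)."""
    if not loadings:
        raise ValueError("at least one loading is required")
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings: Sequence[float]) -> float:
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardized loadings, so each item's error variance is 1 - loading^2.
    """
    if not loadings:
        raise ValueError("at least one loading is required")
    total = sum(loadings)
    error_variance = sum(1.0 - l * l for l in loadings)
    return total * total / (total * total + error_variance)


if __name__ == "__main__":
    # Hypothetical loadings for illustration only; not taken from the table above.
    example = [0.8, 0.8, 0.8, 0.8]
    print(f"CR  = {composite_reliability(example):.3f}")
    print(f"AVE = {average_variance_extracted(example):.3f}")
```

With four hypothetical loadings of 0.8, the script prints CR = 0.877 and AVE = 0.640. These loadings are invented for illustration and are not drawn from this study.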

| | Variable | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| 1 | News Activities | 1 | 0.422 * | 0.277 | 0.145 * | 0.208 * | 0.192 | 0.152 * |
| 2 | News Seeking Motivations | | 1 | 0.385 * | 0.140 | 0.198 * | 0.226 * | 0.159 * |
| 3 | AI Fake News Exposure | | | 1 | 0.493 * | 0.501 * | 0.592 * | 0.411 * |
| 4 | Low Critical Thinking | | | | 1 | 0.393 * | 0.503 * | 0.427 * |
| 5 | Emotional Attachment to News Content | | | | | 1 | 0.543 * | 0.398 * |
| 6 | Low Media Trust | | | | | | 1 | 0.523 * |
| 7 | Antisocial Behaviour | | | | | | | 1 |
| M | | | | 3.62 | 2.61 | 3.11 | 3.02 | 2.76 |
| SD | | | | 0.83 | 1.10 | 0.74 | 0.78 | 1.12 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


MDPI and ACS Style: Arikewuyo, A.O. Is AI Stirring Innovation or Chaos? Psychological Determinants of AI Fake News Exposure (AI-FNE) and Its Effects on Young Adults. Journal. Media 2025, 6, 53. https://doi.org/10.3390/journalmedia6020053

AMA Style: Arikewuyo AO. Is AI Stirring Innovation or Chaos? Psychological Determinants of AI Fake News Exposure (AI-FNE) and Its Effects on Young Adults. Journalism and Media. 2025; 6(2):53. https://doi.org/10.3390/journalmedia6020053

Chicago/Turabian Style: Arikewuyo, Abdulgaffar Olawale. 2025. "Is AI Stirring Innovation or Chaos? Psychological Determinants of AI Fake News Exposure (AI-FNE) and Its Effects on Young Adults" Journalism and Media 6, no. 2: 53. https://doi.org/10.3390/journalmedia6020053

APA Style: Arikewuyo, A. O. (2025). Is AI Stirring Innovation or Chaos? Psychological Determinants of AI Fake News Exposure (AI-FNE) and Its Effects on Young Adults. Journalism and Media, 6(2), 53. https://doi.org/10.3390/journalmedia6020053