Mobile Selective Exposure: Confirmation Bias and Impact of Social Cues during Mobile News Consumption in the United States

Abstract

1. Introduction

1.1. Confirmation Bias

Confirmation Bias on Smartphones

1.2. Impact of Social Cues

Impact of Social Cues on Smartphones

1.3. Confirmation Bias and Impact of Social Cues

2. Study 1

2.1. Overview

2.2. Method

2.2.1. Participants

2.2.2. Procedure

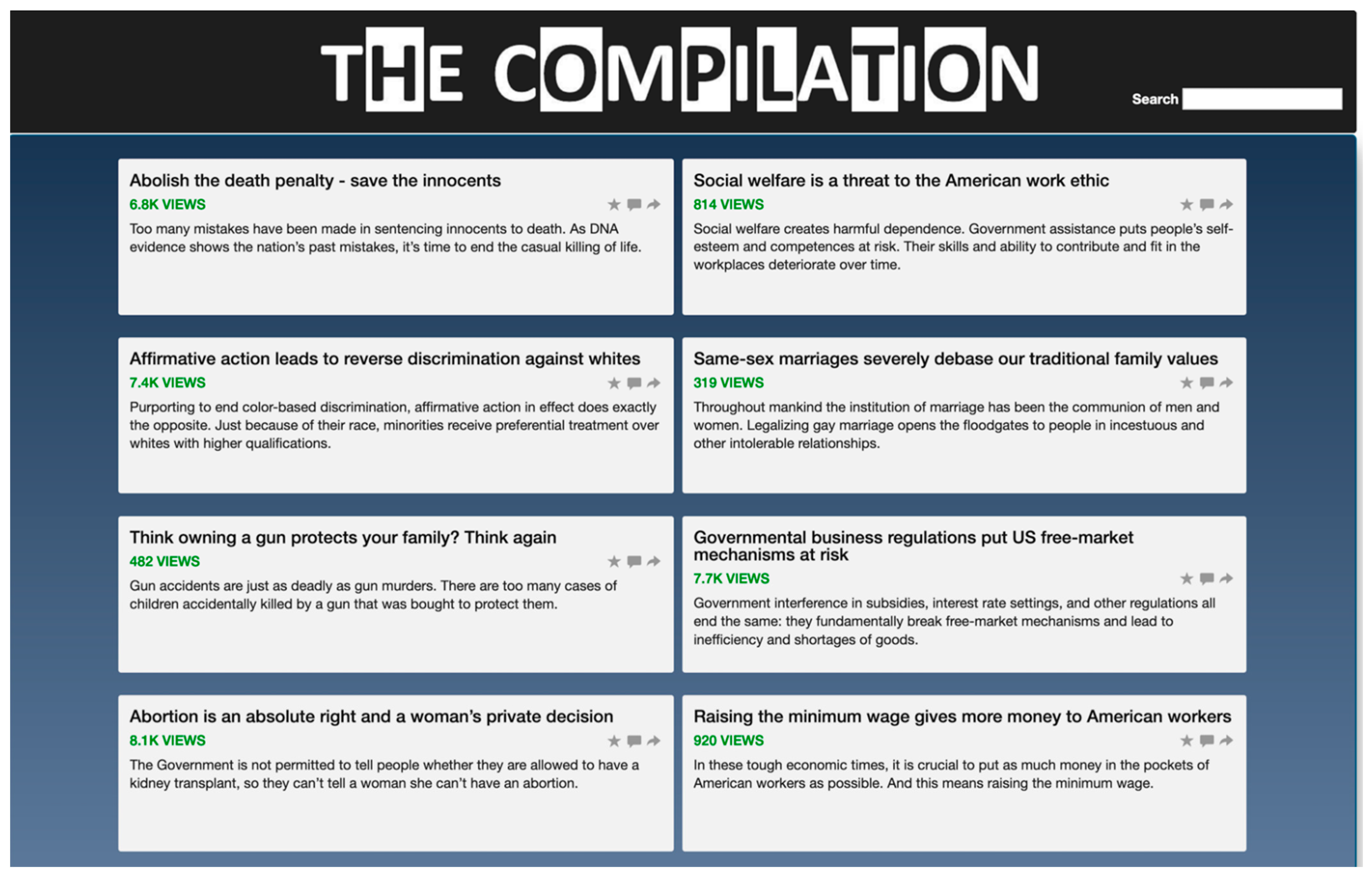

2.2.3. Stimuli and Stimuli Pretest

2.2.4. Measures

2.3. Results

2.3.1. Confirmation Bias

2.3.2. Impact of Social Cues

2.3.3. Confirmation Bias and Impact of Social Cues

2.4. Summary

3. Study 2

3.1. Overview

3.2. Method

3.2.1. Participants

3.2.2. Procedure

3.3. Results

3.3.1. Confirmation Bias

3.3.2. Impact of Social Cues

3.3.3. Confirmation Bias and Impact of Social Cues

3.4. Summary

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

1. Both studies were preregistered. However, the predictions in this manuscript depart from these preregistrations because of a subsequent literature review and the goal of streamlining predictions across the two studies. Because of these departures, we treat all analyses as exploratory. We nevertheless share our preregistrations for transparency.

2. Notably, to make random assignment possible, we excluded participants who reported lacking access to either a smartphone or a computer.

3. See note 2 above.
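Note 2 implies a two-step procedure: screen participants for access to both devices, then randomly assign each remaining participant to a device condition. The sketch below illustrates that logic only under stated assumptions. The `Participant` record, its field names, and the shuffle-and-alternate scheme (which keeps the two groups within one participant of each other) are hypothetical and are not taken from the study's materials.

```python
import random
from dataclasses import dataclass


@dataclass
class Participant:
    # Hypothetical record; field names are illustrative, not from the study.
    pid: str
    has_smartphone: bool
    has_computer: bool


def eligible(participants):
    """Keep only participants who report access to both a smartphone and a computer."""
    return [p for p in participants if p.has_smartphone and p.has_computer]


def assign_devices(participants, seed=None):
    """Randomly assign each eligible participant to 'smartphone' or 'computer'.

    Assumed scheme: shuffle the eligible pool, then alternate conditions,
    so group sizes differ by at most one. Ineligible participants are dropped.
    """
    rng = random.Random(seed)
    pool = eligible(participants)  # new list, so the caller's list is not reordered
    rng.shuffle(pool)
    conditions = ("smartphone", "computer")
    return {p.pid: conditions[i % 2] for i, p in enumerate(pool)}
```

Simple randomization (an independent draw per participant, e.g. `rng.choice(conditions)`) would also satisfy random assignment; alternation after shuffling is shown only because it yields balanced groups.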


| | Proportion of Articles Selected: Consistent | Proportion of Articles Selected: Discrepant | Average Time Spent per Article (s): Consistent | Average Time Spent per Article (s): Discrepant |
|---|---|---|---|---|
| Overall | 0.44 (0.39) a | 0.37 (0.38) c | 14.98 (21.57) a | 11.63 (20.06) c |
| Smartphone | 0.41 (0.38) a | 0.34 (0.37) b | 16.04 (21.87) a | 8.95 (16.36) c |
| Computer | 0.47 (0.40) | 0.40 (0.39) | 14.06 (21.32) | 13.91 (22.54) |
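The table above reports two dependent measures per article type and device; the cells appear to be means with standard deviations in parentheses. As a hedged sketch of how such cells could be computed, the code below assumes a hypothetical per-participant log of (article type, selected, seconds) tuples, and it assumes that unselected articles contribute 0 s to the time average. Both the log format and that averaging rule are illustrative assumptions; the study's own operationalization may differ.

```python
from statistics import mean, stdev


def participant_measures(log, article_type):
    """Return (proportion selected, average seconds per article) for one article type.

    `log` is a hypothetical list of (article_type, selected, seconds) tuples for a
    single participant. Averaging time over all available articles of that type,
    with unselected articles contributing 0 s, is an assumption.
    """
    items = [(selected, seconds) for kind, selected, seconds in log if kind == article_type]
    if not items:
        raise ValueError(f"no articles of type {article_type!r} in log")
    n = len(items)
    proportion = sum(1 for selected, _ in items if selected) / n
    avg_time = sum(seconds if selected else 0.0 for selected, seconds in items) / n
    return proportion, avg_time


def describe(values):
    """Format one table cell as 'M (SD)', using the sample standard deviation."""
    return f"{mean(values):.2f} ({stdev(values):.2f})"


# Two hypothetical participants; the values are illustrative, not study data.
logs = [
    [("consistent", True, 30.0), ("consistent", False, 0.0),
     ("discrepant", True, 10.0), ("discrepant", False, 0.0)],
    [("consistent", True, 20.0), ("consistent", True, 10.0),
     ("discrepant", False, 0.0), ("discrepant", False, 0.0)],
]

for article_type in ("consistent", "discrepant"):
    measures = [participant_measures(log, article_type) for log in logs]
    proportions = [p for p, _ in measures]
    times = [t for _, t in measures]
    print(article_type, describe(proportions), describe(times))
```

Computing each measure per participant first and then summarizing across participants keeps the unit of analysis at the participant level, which is one common choice for within-subject comparisons such as consistent versus discrepant articles.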

| | Proportion of Articles Selected: High | Proportion of Articles Selected: Low | Average Time Spent per Article (s): High | Average Time Spent per Article (s): Low |
|---|---|---|---|---|
| Overall | 0.44 (0.39) a | 0.37 (0.38) b | 14.40 (22.46) | 12.25 (19.16) |
| Smartphone | 0.41 (0.39) | 0.34 (0.35) | 13.80 (21.15) | 11.29 (18.00) |
| Computer | 0.46 (0.40) | 0.40 (0.40) | 14.91 (23.59) | 13.07 (20.12) |

| | Proportion of Articles Selected: High, Consistent | Proportion of Articles Selected: High, Discrepant | Proportion of Articles Selected: Low, Consistent | Proportion of Articles Selected: Low, Discrepant | Average Time Spent per Article (s): High, Consistent | Average Time Spent per Article (s): High, Discrepant | Average Time Spent per Article (s): Low, Consistent | Average Time Spent per Article (s): Low, Discrepant |
|---|---|---|---|---|---|---|---|---|
| Overall | 0.47 (0.41) | 0.40 (0.38) | 0.42 (0.37) a | 0.33 (0.38) b | 16.29 (24.02) | 12.45 (20.64) | 13.66 (18.77) | 10.82 (19.50) |
| Smartphone | 0.43 (0.41) | 0.38 (0.37) | 0.39 (0.34) a | 0.29 (0.36) b | 16.27 (21.51) | 11.30 (20.62) | 15.81 (22.37) a | 6.57 (10.01) c |
| Computer | 0.50 (0.41) | 0.42 (0.38) | 0.44 (0.39) | 0.37 (0.33) | 16.30 (26.10) | 13.46 (20.74) | 11.76 (14.75) | 14.34 (24.27) |

| | Proportion of Articles Selected: Consistent | Proportion of Articles Selected: Discrepant | Average Time Spent per Article (s): Consistent | Average Time Spent per Article (s): Discrepant |
|---|---|---|---|---|
| Overall | 0.45 (0.39) a | 0.35 (0.41) c | 14.30 (23.14) a | 8.13 (14.76) c |
| Smartphone | 0.41 (0.42) a | 0.33 (0.41) c | 14.31 (23.84) a | 7.76 (15.00) c |
| Computer | 0.48 (0.41) a | 0.37 (0.41) c | 14.29 (22.44) a | 8.52 (14.52) c |

| | Proportion of Articles Selected: High | Proportion of Articles Selected: Low | Average Time Spent per Article (s): High | Average Time Spent per Article (s): Low |
|---|---|---|---|---|
| Overall | 0.39 (0.42) | 0.41 (0.42) | 10.39 (18.72) | 11.97 (20.43) |
| Smartphone | 0.35 (0.41) | 0.40 (0.42) | 10.14 (21.27) | 11.77 (18.82) |
| Computer | 0.43 (0.42) | 0.42 (0.41) | 10.65 (15.71) | 12.18 (22.00) |

| | Proportion of Articles Selected: High, Consistent | Proportion of Articles Selected: High, Discrepant | Proportion of Articles Selected: Low, Consistent | Proportion of Articles Selected: Low, Discrepant | Average Time Spent per Article (s): High, Consistent | Average Time Spent per Article (s): High, Discrepant | Average Time Spent per Article (s): Low, Consistent | Average Time Spent per Article (s): Low, Discrepant |
|---|---|---|---|---|---|---|---|---|
| Overall | 0.45 (0.42) a | 0.33 (0.40) c | 0.45 (0.41) a | 0.37 (0.42) c | 13.64 (22.92) a | 7.30 (12.84) c | 14.95 (23.37) a | 8.98 (16.48) c |
| Smartphone | 0.40 (0.42) a | 0.29 (0.40) c | 0.43 (0.42) | 0.37 (0.43) | 14.17 (27.34) a | 6.31 (12.01) c | 14.45 (19.90) a | 9.19 (17.40) c |
| Computer | 0.50 (0.43) a | 0.37 (0.41) c | 0.46 (0.40) a | 0.37 (0.42) c | 13.10 (17.36) a | 8.30 (13.61) c | 15.44 (26.43) a | 8.75 (15.47) c |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ross, M.Q.; Crum, J.; Wang, S.; Knobloch-Westerwick, S. Mobile Selective Exposure: Confirmation Bias and Impact of Social Cues during Mobile News Consumption in the United States. Journal. Media 2023, 4, 146-161. https://doi.org/10.3390/journalmedia4010011
